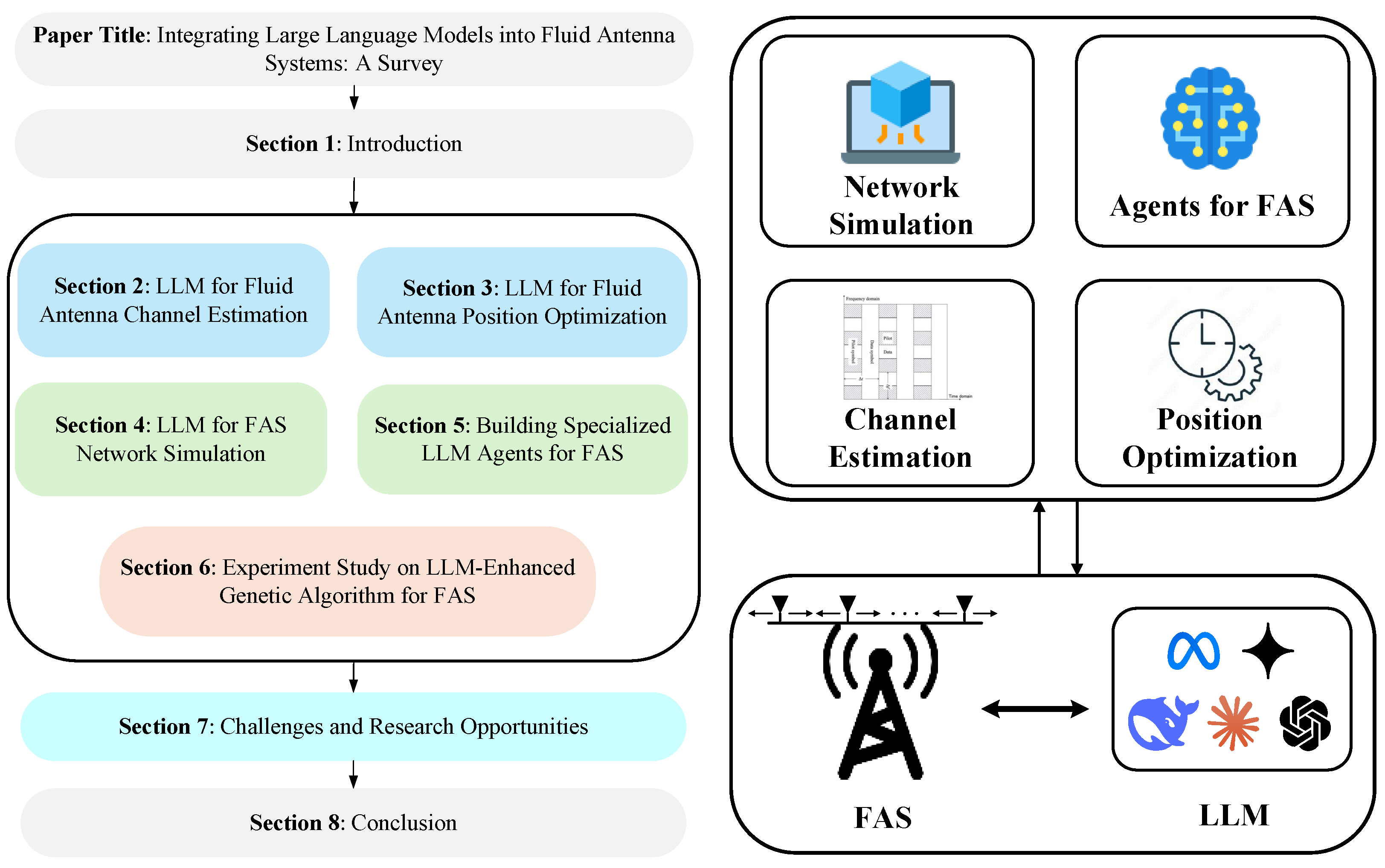

Integrating Large Language Models into Fluid Antenna Systems: A Survey

Abstract

1. Introduction

2. LLM for Fluid Antenna Channel Estimation

3. LLM for Fluid Antenna Position Optimization

3.1. LLM as a Black-Box Optimization Search Model

- No convergence guarantee. LLM-based black-box optimization offers no theoretical guarantee of convergence, and its solution quality depends critically on prompt engineering and parameter tuning.

- Susceptibility to local optima. The iterative generation process often converges to suboptimal solutions and is unstable when optimizing continuous variables.

- High computational cost. Each optimization iteration requires a full LLM inference call, making the process substantially less efficient than conventional optimization algorithms.
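
The search loop behind these limitations can be made concrete with a short Python sketch in the general style of optimization by prompting [38]. The best solutions found so far are written into a meta-prompt together with their scores. The LLM then proposes a new candidate, which is evaluated and appended to the history. This is a minimal illustration under stated assumptions, not the method of any cited work. The two-dimensional antenna position, the toy objective, and `mock_llm` are all hypothetical; `mock_llm` only perturbs the best listed position instead of querying a real model.

```python
import random
from typing import Callable, List, Tuple

Position = Tuple[float, float]          # hypothetical 2-D antenna position (x, y)
History = List[Tuple[Position, float]]  # (candidate, score) pairs seen so far


def build_meta_prompt(history: History, top_k: int = 5) -> str:
    """Write the top-k scored solutions into a prompt, best one listed last."""
    best = sorted(history, key=lambda item: item[1])[-top_k:]
    lines = [f"position=({x:.3f}, {y:.3f}) score={s:.4f}" for (x, y), s in best]
    return ("Below are antenna positions and their scores (higher is better).\n"
            + "\n".join(lines)
            + "\nPropose a new position in [-1, 1] x [-1, 1] with a higher score.")


def _clip(v: float) -> float:
    return max(-1.0, min(1.0, v))


def mock_llm(prompt: str, rng: random.Random) -> Position:
    """Hypothetical stand-in for an LLM call. A real system would send `prompt`
    to a model and parse its reply; here we just perturb the best listed position."""
    best_line = [ln for ln in prompt.splitlines() if ln.startswith("position=")][-1]
    x_str, y_str = best_line[len("position=("):best_line.index(")")].split(", ")
    return (_clip(float(x_str) + rng.gauss(0.0, 0.2)),
            _clip(float(y_str) + rng.gauss(0.0, 0.2)))


def llm_black_box_search(objective: Callable[[Position], float],
                         llm: Callable[[str, random.Random], Position],
                         iterations: int = 50, seed: int = 0) -> Tuple[Position, float]:
    rng = random.Random(seed)
    start = (rng.uniform(-1, 1), rng.uniform(-1, 1))
    history: History = [(start, objective(start))]
    for _ in range(iterations):
        candidate = llm(build_meta_prompt(history), rng)  # one LLM call per iteration
        history.append((candidate, objective(candidate)))
    return max(history, key=lambda item: item[1])


def toy_objective(p: Position) -> float:
    """Hypothetical objective with its maximum at (0.3, -0.2)."""
    x, y = p
    return -((x - 0.3) ** 2 + (y + 0.2) ** 2)


best, score = llm_black_box_search(toy_objective, mock_llm)
print(best, score)
```

Every iteration costs one LLM call, and nothing in the proposal step guarantees improvement. Both of the limitations listed above follow directly from this structure.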

3.2. LLM-Guided Deep Reinforcement Learning

- Low sample efficiency. DRL requires extensive interaction with the environment, which leads to high training costs and makes training on real-world physical systems difficult.

- Difficulty in learning under sparse rewards. When informative rewards are rare, the agent receives little feedback to guide exploration, so learning is slow and inefficient.

- High sensitivity to hyperparameters. Tuning requires extensive experimentation, and unsuitable hyperparameters can make training unstable.

- Limited generalization in highly dynamic environments. DRL generalization performance may drop sharply when environmental conditions change significantly.

- LLM-generated data for enhancing sample efficiency. LLMs can supply prior knowledge by generating reasonable initial policies or sub-goals, thereby reducing random exploration and accelerating DRL convergence. LLMs can also generate synthetic data that imitates expert trajectories. For example, Wang et al. [39] use an LLM to generate construction plans while DRL manages execution, substantially reducing training time. Similarly, Zhu et al. [40] proposed LAMARL (LLM-aided multi-agent reinforcement learning), which uses LLMs to generate prior policies and achieves an average 185.9% improvement in sample efficiency. More recently, Du et al. [41] introduced RLLI, which integrates RL with LLM interaction. It uses LLM-generated feedback as RL rewards to improve convergence, and it uses LLM-assisted optimization to improve sample efficiency by reducing redundant computation.

- LLM for handling sparse rewards. LLMs can automatically generate dense reward signals by designing intermediate rewards from task descriptions to guide the learning process. For example, Ma et al. [42] proposed Eureka, which uses LLMs to write and iteratively refine reward-function code for DRL tasks.

- LLM-based hyperparameter optimization. LLMs can automate hyperparameter tuning for DRL and efficiently identify well-performing configurations. For example, Liu et al. [43] proposed AgentHPO, a framework in which LLM agents automate the hyperparameter optimization process.

- LLM-enhanced generalization. LLM-guided DRL improves generalization by decomposing tasks and facilitating transfer learning. Breaking complex tasks into familiar subtasks enables policy reuse and zero-shot or few-shot adaptation through natural language understanding. For example, Ahn et al. [44] proposed SayCan, a framework that uses LLMs to interpret high-level instructions and decompose them into executable subtasks, which DRL agents then execute sequentially.
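
Eureka [42] has the LLM write reward code directly. The following Python sketch suggests what such generated code might look like for a hypothetical antenna-positioning task. A sparse success reward is augmented with a dense progress term. The shaping rule is classical potential-based reward shaping, F = γΦ(s′) − Φ(s), which is known to leave the optimal policy unchanged. We swap it in for illustration and do not claim it is the form used by any cited work. The SNR target, the potential Φ, and γ are assumed values.

```python
GAMMA = 0.99        # discount factor of the DRL agent (assumed)
TARGET_SNR_DB = 20  # hypothetical task: reach an SNR of 20 dB


def sparse_reward(next_snr_db: float, target_db: float = TARGET_SNR_DB) -> float:
    """Original sparse signal: 1 only when the SNR target is reached."""
    return 1.0 if next_snr_db >= target_db else 0.0


def potential(snr_db: float, target_db: float = TARGET_SNR_DB) -> float:
    """Hypothetical LLM-written progress measure: 0 at or above the target,
    increasingly negative the further the SNR falls short of it."""
    return -max(0.0, target_db - snr_db) / target_db


def shaped_reward(snr_db: float, next_snr_db: float,
                  target_db: float = TARGET_SNR_DB, gamma: float = GAMMA) -> float:
    """Sparse reward plus the potential-based term gamma * phi(s') - phi(s)."""
    shaping = gamma * potential(next_snr_db, target_db) - potential(snr_db, target_db)
    return sparse_reward(next_snr_db, target_db) + shaping


print(shaped_reward(10.0, 15.0))  # progress toward the target: positive (about 0.2525)
print(shaped_reward(15.0, 10.0))  # moving away from the target: negative (about -0.245)
```

Over an episode, the discounted sum of the shaping terms telescopes to γ^T Φ(s_T) − Φ(s_0). This property underlies the policy-invariance result.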

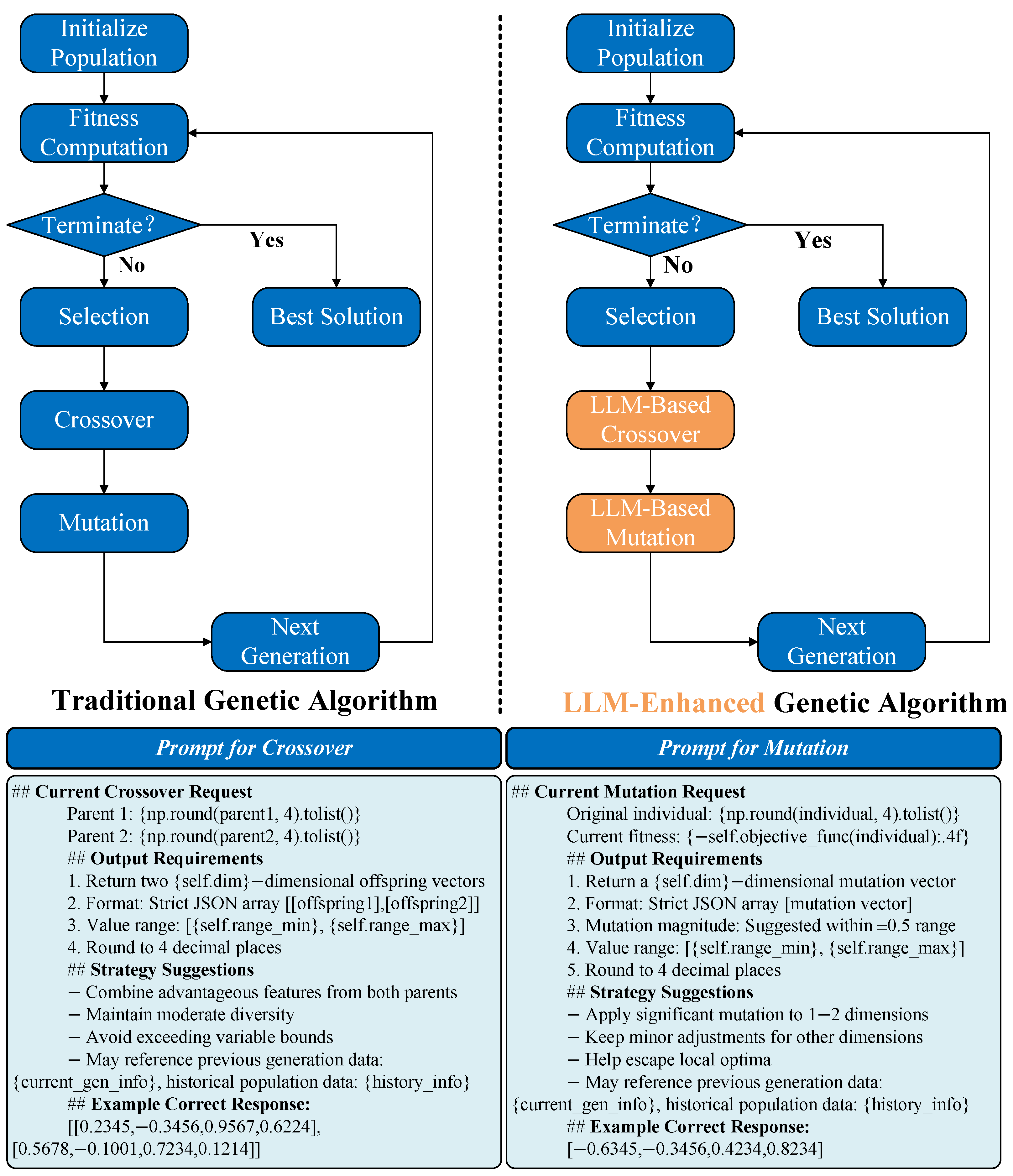

3.3. LLM-Guided Evolutionary Algorithms

- Premature convergence to local optima. Traditional evolutionary algorithms, such as genetic algorithms, often become trapped in suboptimal solutions because of limited population diversity or deceptive fitness landscapes.

- Curse of dimensionality. Search efficiency declines exponentially as problem dimensionality increases, so problems with thousands of dimensions or more require specialized handling. Some optimization processes require tens of thousands of fitness evaluations, leading to high computational cost.

- Constraint handling difficulties. Formalizing expert knowledge as constraints in the fitness function is challenging.

- LLM-aided escape from local optima. By delegating crossover, mutation, and other exploration operators to LLMs, LLM-enhanced evolutionary algorithms are better able to escape local optima. For example, as reported in [14], DeepMind developed Mind Evolution, which adapts a genetic algorithm to natural language problems by using an LLM to perform crossover and mutation on text-based solutions.

- LLM-powered dimensionality reduction. LLMs can describe complex individuals in natural language. This maps high-dimensional search spaces to low-dimensional semantic representations and overcomes the limitations of traditional numerical encodings. Introducing LLMs can also relieve the efficiency bottleneck of hyperparameter optimization in evolutionary algorithms. For example, Romera-Paredes et al. [45] proposed FunSearch, which combines an LLM with an evolutionary algorithm to compress the search space and achieved significant breakthroughs on problems such as the cap set problem. Similarly, Hameed et al. [46] used ChatGPT-3.5 and Llama3 to generate suggested particle positions and velocities that replace underperforming particles in particle swarm optimization (PSO). This reduced model evaluation calls by 20–60% and significantly accelerated convergence.

- LLM-based semantic constraint processing. LLMs can automatically transform fuzzy, expert-described constraints into computable mathematical expressions. They can also adjust constraint weights dynamically and integrate multimodal constraints, eliminating the manual constraint design required by traditional evolutionary algorithms. For example, Shinohara et al. [47] proposed LMPSO, which lets users specify constraints directly in natural language. The LLM then adheres to these constraints when generating solutions. In addition, a meta-prompt mechanism supports dynamic adjustment of constraints or heuristic rules during optimization.
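
The particle-replacement idea attributed to Hameed et al. [46] can be sketched as follows. This Python sketch runs a standard global-best PSO. Every few iterations, it replaces the worst-performing particles with positions proposed by a suggestion function. The suggester `mock_llm_suggest`, the replacement schedule, and all coefficients are hypothetical choices for illustration. In a real system, the suggester would prompt an LLM with the swarm's state and parse the positions it returns.

```python
import random
from typing import Callable, List, Tuple

Vector = List[float]
Suggester = Callable[[Vector, int, random.Random], List[Vector]]


def mock_llm_suggest(gbest: Vector, k: int, rng: random.Random) -> List[Vector]:
    """Hypothetical stand-in for an LLM prompted with the swarm's best position:
    it simply samples k points close to that position."""
    return [[g + rng.gauss(0.0, 0.05) for g in gbest] for _ in range(k)]


def pso_with_llm_replacement(objective: Callable[[Vector], float], dim: int,
                             suggest: Suggester, n_particles: int = 20,
                             iters: int = 100, replace_every: int = 10,
                             replace_frac: float = 0.2,
                             bounds: Tuple[float, float] = (-1.0, 1.0),
                             w: float = 0.7, c1: float = 1.5, c2: float = 1.5,
                             seed: int = 0) -> Tuple[Vector, float, int]:
    """Minimise `objective` with global-best PSO. Every `replace_every` iterations,
    the worst particles (by personal best) are replaced with suggested positions.
    Returns (best position, best value, number of objective evaluations)."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    evals = n_particles
    i0 = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[i0][:], pbest_val[i0]

    def score_particle(i: int) -> None:
        """Evaluate particle i and update its personal best and the global best."""
        nonlocal gbest, gbest_val, evals
        val = objective(pos[i])
        evals += 1
        if val < pbest_val[i]:
            pbest[i], pbest_val[i] = pos[i][:], val
        if val < gbest_val:
            gbest, gbest_val = pos[i][:], val

    for t in range(1, iters + 1):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            score_particle(i)
        if t % replace_every == 0:  # the LLM-assisted step
            k = max(1, int(replace_frac * n_particles))
            worst = sorted(range(n_particles), key=lambda i: pbest_val[i])[-k:]
            for i, suggestion in zip(worst, suggest(gbest, k, rng)):
                pos[i] = [min(hi, max(lo, v)) for v in suggestion]
                vel[i] = [0.0] * dim
                pbest_val[i] = float("inf")  # forget the poor personal best
                score_particle(i)            # one evaluation per suggestion
    return gbest, gbest_val, evals


def sphere(v: Vector) -> float:
    return sum(x * x for x in v)


best, value, n_evals = pso_with_llm_replacement(sphere, dim=2, suggest=mock_llm_suggest)
print(n_evals)  # 2060
```

The returned evaluation counter makes it easy to compare the total number of objective calls with and without the replacement step.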

3.4. AlphaEvolve-like Approach: LLM as Solution Program Generator and Critic

- Iterative solution refinement. The solution evolves over many iterations, which mitigates early-stage error accumulation and outperforms single-pass self-optimization in closed-loop systems.

- Feedback learning mechanism. The AlphaEvolve-like approach learns effectively from performance feedback: the evaluation scores of earlier candidate programs guide the generation of subsequent ones.

- Creativity–rigor synergy. The approach combines the creative generation of candidate solutions by the LLM with rigorous, automated evaluation.
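
The AlphaEvolve-style loop [48] can be outlined in a few lines. In this Python sketch, a database stores candidate programs together with their evaluator scores. A prompt is assembled from the highest-scoring programs, an LLM writes a new program, and an automated evaluator executes and scores it. Several elements are hypothetical: the task (a function `place()` that returns an antenna position), the hidden target, and `mock_llm_write_program`. Where a real LLM would rewrite code, `mock_llm_write_program` only perturbs numeric constants. AlphaEvolve itself uses far richer prompts and a more elaborate program database.

```python
import random
import re
from typing import List, Tuple

Database = List[Tuple[str, float]]  # (program source, score)
TARGET = (0.4, -0.1)                # hypothetical optimum, known only to the evaluator


def evaluate(program: str) -> float:
    """Automated evaluator: run the candidate and score the position it returns.
    Real systems isolate this step; exec() here is for illustration only."""
    try:
        namespace: dict = {}
        exec(program, namespace)            # candidate must define place() -> (x, y)
        x, y = namespace["place"]()
        if not (-1.0 <= x <= 1.0 and -1.0 <= y <= 1.0):
            return float("-inf")            # infeasible position
        return -((x - TARGET[0]) ** 2 + (y - TARGET[1]) ** 2)
    except Exception:
        return float("-inf")                # crashing or malformed programs score worst


def build_prompt(database: Database, k: int = 3) -> str:
    """Show the top-k programs with their scores, best first."""
    top = sorted(database, key=lambda item: item[1], reverse=True)[:k]
    return "Improve on these programs:\n\n" + "\n\n".join(
        f"# score: {s:.4f}\n{p}" for p, s in top)


def mock_llm_write_program(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for the LLM: edits the constants of the best program."""
    match = re.search(r"return \(([-\d.]+), ([-\d.]+)\)", prompt)
    x, y = (float(v) for v in match.groups())
    x, y = x + rng.gauss(0.0, 0.1), y + rng.gauss(0.0, 0.1)
    return f"def place():\n    return ({x:.4f}, {y:.4f})"


def evolve(generations: int = 40, seed: int = 1) -> Tuple[str, float]:
    rng = random.Random(seed)
    seed_program = "def place():\n    return (0.0, 0.0)"
    database: Database = [(seed_program, evaluate(seed_program))]
    for _ in range(generations):
        child = mock_llm_write_program(build_prompt(database), rng)
        database.append((child, evaluate(child)))  # score feeds the next prompt
    return max(database, key=lambda item: item[1])


best_program, best_score = evolve()
print(best_program, best_score)
```

The separation between a generator that may produce anything and an evaluator that scores it rigorously is the creativity–rigor synergy described above.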

3.5. Comparison and Discussion

4. LLM for FAS Network Simulation

- Human-like behavior modeling. LLMs are capable of simulating human behaviors in a highly realistic manner, thereby generating network traffic patterns that closely resemble real-world conditions. Human interactions with networks are typically personalized and diverse, involving variations in access time, usage habits, application types, and traffic fluctuations. In complex environments, such behaviors are multi-modal and context-dependent rather than uniform. LLMs can capture such dynamics, learn behavioral diversity and patterns from real-world data, and generate adaptive, anthropomorphic traffic that evolves over time and context.

- Strong adaptivity to new scenarios. With LLMs integrated, FAS simulations can adapt in real time to changing network conditions, such as congestion or signal degradation, by modifying the behavior of simulated users, for example by lowering video quality or pausing downloads. LLM inference incurs some latency, but pre-trained models can still respond to shifts in network conditions quickly enough to simulate dynamic environments. Achieving true real-time adaptability would require specialized hardware or further optimization, such as model compression, to improve inference speed and efficiency.

- Controllable and interpretable traffic generation. Unlike traditional black-box models, LLMs allow simulation parameters, such as quality-of-service (QoS) requirements and hardware limitations, to be controlled explicitly through prompt engineering. This improves the transparency and repeatability of traffic generation.

- Reduced complexity and improved simulation efficiency. By converting complex simulation tasks into high-level natural language descriptions, LLMs streamline the simulation process, reducing computational burden and improving the speed of generating large-scale simulations.
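
The controllability argument can be illustrated with a small sketch. Simulation controls are written into the prompt as explicit values. The LLM's structured reply is then checked against the same values before it enters the simulator. The `TrafficSpec` fields, the JSON event schema, and the hard-coded reply standing in for an LLM response are all hypothetical.

```python
import json
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TrafficSpec:
    """Hypothetical simulation controls, stated explicitly in the prompt."""
    n_users: int
    duration_s: int
    min_rate_mbps: float  # e.g. a QoS floor
    max_rate_mbps: float  # e.g. a hardware or link cap


def build_prompt(spec: TrafficSpec) -> str:
    return (f"Simulate traffic from {spec.n_users} mobile users over {spec.duration_s} s. "
            f"Every event's rate must lie in [{spec.min_rate_mbps}, {spec.max_rate_mbps}] Mbps. "
            'Reply only with a JSON list of {"user": int, "t": int, "app": str, "rate_mbps": float}.')


def parse_and_validate(reply: str, spec: TrafficSpec) -> List[Dict]:
    """Keep only events that satisfy the stated controls, so violations are visible."""
    valid = []
    for event in json.loads(reply):
        if (0 <= event["user"] < spec.n_users
                and 0 <= event["t"] < spec.duration_s
                and spec.min_rate_mbps <= event["rate_mbps"] <= spec.max_rate_mbps):
            valid.append(event)
    return valid


spec = TrafficSpec(n_users=3, duration_s=60, min_rate_mbps=1.0, max_rate_mbps=25.0)
prompt = build_prompt(spec)
# Hypothetical LLM reply; the last event violates the 25 Mbps cap and is rejected.
reply = ('[{"user": 0, "t": 5, "app": "video", "rate_mbps": 8.0},'
         ' {"user": 2, "t": 12, "app": "web", "rate_mbps": 1.5},'
         ' {"user": 1, "t": 30, "app": "download", "rate_mbps": 40.0}]')
events = parse_and_validate(reply, spec)
print(len(events))  # 2
```

Because every control is a named value in both the prompt and the validator, a simulation run can be reproduced and audited from its `TrafficSpec` alone.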

5. Building Specialized LLM Agents for FAS

- Tool use and planning pattern. The agent uses multi-task coordination and strategic decomposition to break objectives into sequentially executed subtasks, dynamically reprioritizing tasks based on real-time feedback. By calling external tools, it mitigates hallucination and outdated knowledge, enabling efficient handling of complex problems. For example, the LLM-Planner proposed by Song et al. [54] combines hierarchical planning with dynamic re-planning, significantly enhancing agent decision-making in complex scenarios. Experimental results on the ALFRED benchmark demonstrate the system’s strong generalization and adaptation to dynamic environments under few-shot conditions.

- ReAct pattern. The ReAct pattern unifies reasoning and acting through a “reason–plan–act–optimize” loop, yielding self-correcting behavior through dynamic task processing. Rather than generating output in a single pass, it achieves “knowledge–action unity” through interaction with the environment and continuous optimization. For example, Wang et al. [55] proposed an LLM-based agent framework that generates hyperparameter optimization strategies through multi-path reasoning and dynamically integrates environmental feedback to adapt its strategy. The framework evaluates hyperparameters with an enhanced WS-PSO-CM algorithm, forming an advanced ReAct pattern. Compared with conventional manual heuristic methods, the framework achieves a 54.34% performance improvement on hyperparameter optimization tasks.

- Multi-agent pattern. The multi-agent pattern tackles complex tasks through collaboration among multiple agents. Single-agent systems execute tasks sequentially, whereas multi-agent systems can execute them in parallel, improving efficiency and reducing latency. For example, Lowe et al. [56] proposed the MADDPG algorithm, which introduces a centralized training with decentralized execution (CTDE) paradigm. During training, each agent’s critic network receives the concatenated observations and actions of all agents as input. This enables it to learn global coordination patterns while each policy remains independent. During execution, agents rely solely on local observations to make autonomous decisions, achieving full decentralization. Experimental results demonstrate the framework’s ability to address the non-stationarity inherent in multi-agent reinforcement learning (MARL) environments.
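
The interleaving of reasoning, tool calls, and observations in the ReAct pattern can be sketched as a short loop. In this Python sketch, the model's output is parsed for an `Action: tool[argument]` line and the named tool is executed. The tool's result is appended to the transcript as an `Observation:` for the next step. Several elements are hypothetical: the tool `evaluate_snr_db` (a toy SNR formula, not a physical FAS channel), the action syntax, and `scripted_llm`, which replays a fixed trace instead of calling a model.

```python
import re
from typing import Callable, Dict, Optional, Tuple

ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*?)\]")


def evaluate_snr_db(args: str) -> str:
    """Hypothetical tool: toy SNR in dB for an antenna at position "x, y"."""
    x, y = (float(v) for v in args.split(","))
    return f"{20.0 - 10.0 * (x * x + y * y):.2f}"


TOOLS: Dict[str, Callable[[str], str]] = {"evaluate_snr_db": evaluate_snr_db}


def scripted_llm(transcript: str) -> str:
    """Stand-in for an LLM: replays a fixed trace, one step per observation so far."""
    script = [
        "Thought: Start from an off-centre position.\nAction: evaluate_snr_db[0.5, 0.5]",
        "Thought: 15 dB is below the 19 dB target; move toward the centre.\n"
        "Action: evaluate_snr_db[0.0, 0.1]",
        "Thought: 19.90 dB meets the target.\nAction: finish[0.0, 0.1]",
    ]
    return script[min(transcript.count("Observation:"), len(script) - 1)]


def react(task: str, llm: Callable[[str], str], tools: Dict[str, Callable[[str], str]],
          max_steps: int = 5) -> Tuple[Optional[str], str]:
    transcript = f"Task: {task}"
    for _ in range(max_steps):
        step = llm(transcript)                     # reason and choose an action
        transcript += "\n" + step
        match = ACTION_RE.search(step)
        if match is None:
            return None, transcript                # malformed output: stop
        name, arg = match.groups()
        if name == "finish":
            return arg, transcript
        tool = tools.get(name)
        observation = tool(arg) if tool else f"unknown tool '{name}'"
        transcript += f"\nObservation: {observation}"  # act, then observe
    return None, transcript


answer, trace = react("Find an antenna position with SNR >= 19 dB.", scripted_llm, TOOLS)
print(answer)  # 0.0, 0.1
```

Capping the loop with `max_steps` and stopping on malformed output are simple safeguards. Without them, a self-correcting loop could run indefinitely.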

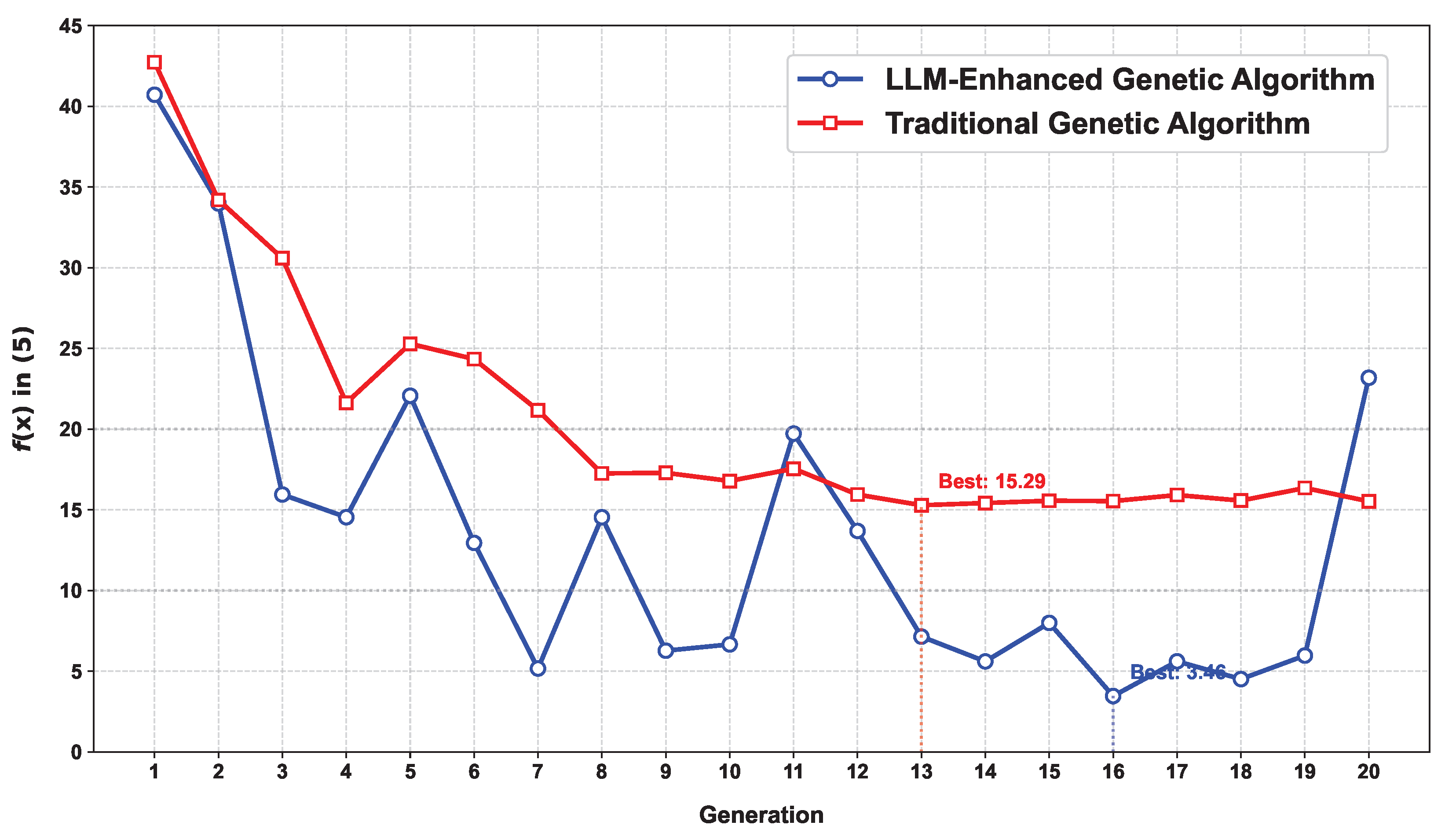

6. Experimental Study on an LLM-Enhanced Genetic Algorithm for FAS

6.1. System Model and Problem Formulation

6.2. Traditional Genetic Algorithm

6.3. LLM-Enhanced Genetic Algorithm

6.4. Experimental Study

7. Challenges and Research Opportunities

- Fusion and alignment of multi-modal data. FAS environments involve a variety of heterogeneous information sources, such as antenna configurations, CSI, environmental semantics, and user behavior patterns. However, most LLMs are primarily trained on text data, making it difficult to align and integrate multimodal inputs (e.g., numerical, visual, and semantic data). Developing unified frameworks capable of processing and aligning these diverse data sources remains a significant technical challenge [59]. Bridging this gap will require the development of sophisticated multimodal learning systems that can handle both structured data (e.g., CSI) and unstructured data (e.g., user behavior) seamlessly.

- Low-latency inference and computational efficiency. FASs often operate in edge environments with limited computational resources and stringent latency requirements. Current LLMs are computationally expensive and exhibit high inference latency, limiting their real-time adaptability in dynamic FAS scenarios. Efficiency techniques have shown promise. Low-rank adaptation (LoRA) reduces the cost of fine-tuning, and sparse mixture-of-experts (MoE) models activate only a subset of their parameters for each token. However, further research is required to optimize such models for low-latency inference in resource-constrained environments [60]. Efficient model architectures that deliver high-performance inference with minimal computational overhead will be crucial for enabling real-time FAS applications.

- Security and interpretability. While LLM-driven optimization in FAS offers greater flexibility and autonomy, the black-box nature of these models presents significant risks, especially in mission-critical communication scenarios [61]. Unpredictable behaviors could arise if LLMs are left uncontrolled, leading to potential instability in system performance. Therefore, building interpretable, constraint-aware LLM controllers that allow users to understand and predict model decisions is essential for ensuring safe and trustworthy deployment. Research focused on enhancing the transparency and robustness of LLMs will play a key role in addressing these concerns.

- Simulation fidelity and generalization. Generative traffic models such as TrafficLLM and ChatSim have succeeded in controlled environments. However, their performance in complex, real-world FAS networks with bursty interference or rare user scenarios remains limited. These models often struggle to generalize beyond their training datasets, particularly under unforeseen network conditions. Expanding and diversifying datasets, and building practical testbeds that closely match real-world FAS scenarios, are essential for improving their robustness and generalization.

- Standardization and evaluation of LLM-based agents. Current LLM agent designs for FAS are still fragmented, with significant variation in architectural choices, interaction protocols, and tool integrations across different use cases. There is a pressing need for a unified framework that allows for the benchmarking, evaluation, and development of reusable, FAS-specific intelligent agents [62]. Establishing standardized evaluation criteria and fostering collaboration within the research community will help streamline progress in this area and enable fair comparisons across different approaches.

- Hybrid optimization framework. Integrating LLMs with black-box optimization search models, reinforcement learning, evolutionary algorithms, and AlphaEvolve-like approaches can yield hybrid intelligence frameworks that improve both the adaptability and efficiency of FASs in dynamic environments.

- Self-optimizing, continuously evolving autonomous agents. Autonomous agents for FAS, such as Auto-Agent for FAS, that optimize themselves and adapt in real time to feedback from the network environment will be crucial for building intelligent systems that can optimize FAS operations without human intervention.

- Generalizable simulation libraries and benchmark environments. Constructing generalizable simulation libraries and benchmark environments specifically designed for FAS, 6G, and ISAC scenarios will provide a strong foundation for evaluating and comparing various traffic simulation models. These platforms will be critical for validating new techniques and ensuring their effectiveness in real-world deployments.

- LLM-empowered FAS-ISAC. Leveraging LLMs to enhance joint sensing and communication systems within FAS-ISAC networks offers significant potential for intelligent environmental perception, adaptive waveform optimization, and real-time data fusion. Such integrations will enable next-generation integrated networks that are more efficient, intelligent, and adaptable to the ever-changing network environment.
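
The efficiency argument for LoRA, mentioned among the challenges above, can be made concrete with a Python sketch of the standard low-rank update. A frozen weight matrix W is augmented by (α/r)BA, where the rank r is much smaller than the layer dimensions. Only r(d_in + d_out) parameters are therefore trained. After fine-tuning, the update can be merged into W, so inference costs the same as the original layer. The dimensions, rank, α, and random weights are arbitrary illustrative values, not a configuration evaluated in any cited work.

```python
import random
from typing import List

Matrix = List[List[float]]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def matvec(A: Matrix, x: List[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: List[float],
                 alpha: float, r: int) -> List[float]:
    """Unmerged LoRA layer: frozen W x plus the scaled low-rank path (alpha/r) B (A x)."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / r) * u for b, u in zip(base, update)]


def merge_lora(W: Matrix, A: Matrix, B: Matrix, alpha: float, r: int) -> Matrix:
    """Fold the adapter into the weights: W' = W + (alpha/r) B A.
    After merging, inference is a single matrix-vector product, as without LoRA."""
    delta = matmul(B, A)
    return [[w + (alpha / r) * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]


def trainable_params(d_out: int, d_in: int, r: int):
    """Trainable parameters for one d_out x d_in layer: full fine-tuning vs LoRA."""
    return d_out * d_in, r * (d_in + d_out)


rng = random.Random(0)
d_out, d_in, r, alpha = 6, 8, 2, 4.0
W = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]  # frozen
A = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(r)]      # r x d_in
B = [[rng.gauss(0, 1) for _ in range(r)] for _ in range(d_out)]     # d_out x r (zero at init in practice)
x = [rng.gauss(0, 1) for _ in range(d_in)]

merged = matvec(merge_lora(W, A, B, alpha, r), x)
unmerged = lora_forward(W, A, B, x, alpha, r)
assert all(abs(m - u) < 1e-9 for m, u in zip(merged, unmerged))
print(trainable_params(4096, 4096, 8))  # (16777216, 65536)
```

Merging removes the adapter's extra latency but not the cost of the base model. LoRA by itself therefore mainly reduces fine-tuning cost rather than inference latency.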

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Correction Statement

References

1. Wong, K.K.; Shojaeifard, A.; Tong, K.F.; Zhang, Y. Fluid Antenna Systems. IEEE Trans. Wirel. Commun. 2021, 20, 1950–1962.

2. Wong, K.K.; Tong, K.F. Fluid Antenna Multiple Access. IEEE Trans. Wirel. Commun. 2022, 21, 4801–4815.

3. Wong, K.K.; Shojaeifard, A.; Tong, K.F.; Zhang, Y. Performance Limits of Fluid Antenna Systems. IEEE Commun. Lett. 2020, 24, 2469–2472.

4. Wong, K.K.; Chae, C.B.; Tong, K.F. Compact Ultra Massive Antenna Array: A Simple Open-Loop Massive Connectivity Scheme. IEEE Trans. Wirel. Commun. 2024, 23, 6279–6294.

5. Xu, H.; Wong, K.K.; New, W.K.; Ghadi, F.R.; Zhou, G.; Murch, R.; Chae, C.B.; Zhu, Y.; Jin, S. Capacity Maximization for FAS-assisted Multiple Access Channels. IEEE Trans. Commun. 2024, 73, 4713–4731.

6. Zhang, Q.; Shao, M.; Zhang, T.; Chen, G.; Liu, J.; Ching, P.C. An Efficient Sum-Rate Maximization Algorithm for Fluid Antenna-Assisted ISAC System. IEEE Commun. Lett. 2025, 29, 200–204.

7. Xu, Z.; Zheng, T.; Dai, L. LLM-Empowered Near-Field Communications for Low-Altitude Economy. IEEE Trans. Commun. 2025, 1.

8. Liu, Q.; Mu, J.; Chen, D.; Zhang, R.; Liu, Y.; Hong, T. LLM Enhanced Reconfigurable Intelligent Surface for Energy-Efficient and Reliable 6G IoV. IEEE Trans. Veh. Technol. 2025, 74, 1830–1838.

9. Li, H.; Xiao, M.; Wang, K.; Kim, D.I.; Debbah, M. Large Language Model Based Multi-Objective Optimization for Integrated Sensing and Communications in UAV Networks. IEEE Wirel. Commun. Lett. 2025, 14, 979–983.

10. Quan, H.; Ni, W.; Zhang, T.; Ye, X.; Xie, Z.; Wang, S.; Liu, Y.; Song, H. Large Language Model Agents for Radio Map Generation and Wireless Network Planning. IEEE Netw. Lett. 2025, 1.

11. Wu, D.; Han, W.; Liu, Y.; Wang, T.; Xu, C.Z.; Zhang, X.; Shen, J. Language prompt for autonomous driving. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 8359–8367.

12. Kou, W.B.; Zhu, G.; Ye, R.; Wang, S.; Tang, M.; Wu, Y.C. Label Anything: An Interpretable, High-Fidelity and Prompt-Free Annotator. arXiv 2025, arXiv:2502.02972.

13. Kou, W.B.; Lin, Q.; Tang, M.; Lei, J.; Wang, S.; Ye, R.; Zhu, G.; Wu, Y.C. Enhancing Large Vision Model in Street Scene Semantic Understanding through Leveraging Posterior Optimization Trajectory. arXiv 2025, arXiv:2501.01710.

14. Lee, K.H.; Fischer, I.; Wu, Y.H.; Marwood, D.; Baluja, S.; Schuurmans, D.; Chen, X. Evolving Deeper LLM Thinking. arXiv 2025, arXiv:2501.09891.

15. Skouroumounis, C.; Krikidis, I. Skip-Enabled LMMSE-Based Channel Estimation for Large-Scale Fluid Antenna-Enabled Cellular Networks. In Proceedings of the ICC 2023–IEEE International Conference on Communications, Rome, Italy, 28 May–1 June 2023; pp. 2779–2784.

16. Ma, W.; Zhu, L.; Zhang, R. Compressed Sensing Based Channel Estimation for Movable Antenna Communications. IEEE Commun. Lett. 2023, 27, 2747–2751.

17. Zhang, Z.; Zhu, J.; Dai, L.; Heath, R.W. Successive Bayesian Reconstructor for Channel Estimation in Fluid Antenna Systems. IEEE Trans. Wirel. Commun. 2025, 24, 1992–2006.

18. Xu, B.; Chen, Y.; Cui, Q.; Tao, X.; Wong, K.K. Sparse Bayesian Learning-Based Channel Estimation for Fluid Antenna Systems. IEEE Wirel. Commun. Lett. 2025, 14, 325–329.

19. Xiao, Z.; Cao, S.; Zhu, L.; Liu, Y.; Ning, B.; Xia, X.G.; Zhang, R. Channel Estimation for Movable Antenna Communication Systems: A Framework Based on Compressed Sensing. IEEE Trans. Wirel. Commun. 2024, 23, 11814–11830.

20. Ji, S.; Psomas, C.; Thompson, J. Correlation-Based Machine Learning Techniques for Channel Estimation with Fluid Antennas. In Proceedings of the ICASSP 2024–2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 8891–8895.

21. Zhang, H.; Wang, J.; Wang, C.; Wang, C.C.; Wong, K.K.; Wang, B.; Chae, C.B. Learning-Induced Channel Extrapolation for Fluid Antenna Systems Using Asymmetric Graph Masked Autoencoder. IEEE Wirel. Commun. Lett. 2024, 13, 1665–1669.

22. Eskandari, M.; Burr, A.G.; Cumanan, K.; Wong, K.K. cGAN-Based Slow Fluid Antenna Multiple Access. IEEE Wirel. Commun. Lett. 2024, 13, 2907–2911.

23. Tang, E.; Guo, W.; He, H.; Song, S.; Zhang, J.; Letaief, K.B. Accurate and Fast Channel Estimation for Fluid Antenna Systems with Diffusion Models. arXiv 2025, arXiv:2505.04930.

24. Liu, B.; Liu, X.; Gao, S.; Cheng, X.; Yang, L. LLM4CP: Adapting Large Language Models for Channel Prediction. J. Commun. Inf. Netw. 2024, 9, 113–125.

25. Yang, H.; Lambotharan, S.; Derakhshani, M. FAS-LLM: Large Language Model-Based Channel Prediction for OTFS-Enabled Satellite-FAS Links. arXiv 2025, arXiv:2505.09751.

26. Li, Z.; Yang, Q.; Xiong, Z.; Shi, Z.; Quek, T.Q.S. Bridging the Modality Gap: Enhancing Channel Prediction with Semantically Aligned LLMs and Knowledge Distillation. arXiv 2025, arXiv:2505.12729.

27. Cheng, Z.; Li, N.; Zhu, J.; She, X.; Ouyang, C.; Chen, P. Sum-Rate Maximization for Fluid Antenna Enabled Multiuser Communications. IEEE Commun. Lett. 2024, 28, 1206–1210.

28. Chen, Y.; Chen, M.; Xu, H.; Yang, Z.; Wong, K.K.; Zhang, Z. Joint Beamforming and Antenna Design for Near-Field Fluid Antenna System. IEEE Wirel. Commun. Lett. 2025, 14, 415–419.

29. Qin, H.; Chen, W.; Li, Z.; Wu, Q.; Cheng, N.; Chen, F. Antenna Positioning and Beamforming Design for Fluid Antenna-Assisted Multi-User Downlink Communications. IEEE Wirel. Commun. Lett. 2024, 13, 1073–1077.

30. Yao, J.; Xin, L.; Wu, T.; Jin, M.; Wong, K.K.; Yuen, C.; Shin, H. FAS for Secure and Covert Communications. IEEE Internet Things J. 2025, 12, 18414–18418.

31. Xiao, Z.; Pi, X.; Zhu, L.; Xia, X.G.; Zhang, R. Multiuser Communications with Movable-Antenna Base Station: Joint Antenna Positioning, Receive Combining, and Power Control. IEEE Trans. Wirel. Commun. 2024, 23, 19744–19759.

32. Ding, J.; Zhou, Z.; Jiao, B. Movable Antenna-Aided Secure Full-Duplex Multi-User Communications. IEEE Trans. Wirel. Commun. 2025, 24, 2389–2403.

33. Guan, J.; Lyu, B.; Liu, Y.; Tian, F. Secure Transmission for Movable Antennas Empowered Cell-Free Symbiotic Radio Communications. In Proceedings of the 2024 16th International Conference on Wireless Communications and Signal Processing (WCSP), Hefei, China, 24–26 October 2024; pp. 578–584.

34. Wang, C.; Li, G.; Zhang, H.; Wong, K.K.; Li, Z.; Ng, D.W.K.; Chae, C.B. Fluid Antenna System Liberating Multiuser MIMO for ISAC via Deep Reinforcement Learning. IEEE Trans. Wirel. Commun. 2024, 23, 10879–10894.

35. Waqar, N.; Wong, K.K.; Chae, C.B.; Murch, R.; Jin, S.; Sharples, A. Opportunistic Fluid Antenna Multiple Access via Team-Inspired Reinforcement Learning. IEEE Trans. Wirel. Commun. 2024, 23, 12068–12083.

36. Weng, C.; Chen, Y.; Zhu, L.; Wang, Y. Learning-Based Joint Beamforming and Antenna Movement Design for Movable Antenna Systems. IEEE Wirel. Commun. Lett. 2024, 13, 2120–2124.

37. Huang, S.; Yang, K.; Qi, S.; Wang, R. When large language model meets optimization. Swarm Evol. Comput. 2024, 90, 101663.

38. Yang, C.; Wang, X.; Lu, Y.; Liu, H.; Le, Q.V.; Zhou, D.; Chen, X. Large language models as optimizers. arXiv 2023, arXiv:2309.03409.

39. Wang, G.; Xie, Y.; Jiang, Y.; Mandlekar, A.; Xiao, C.; Zhu, Y.; Fan, L.; Anandkumar, A. Voyager: An open-ended embodied agent with large language models. arXiv 2023, arXiv:2305.16291.

40. Zhu, G.; Zhou, R.; Ji, W.; Zhao, S. LAMARL: LLM-Aided Multi-Agent Reinforcement Learning for Cooperative Policy Generation. IEEE Robot. Autom. Lett. 2025, 10, 7476–7483.

41. Du, H.; Zhang, R.; Niyato, D.; Kang, J.; Xiong, Z.; Cui, S.; Shen, X.; Kim, D.I. Reinforcement Learning with LLMs Interaction for Distributed Diffusion Model Services. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 1–18.

42. Ma, Y.J.; Liang, W.; Wang, G.; Huang, D.A.; Bastani, O.; Jayaraman, D.; Zhu, Y.; Fan, L.; Anandkumar, A. Eureka: Human-level reward design via coding large language models. arXiv 2023, arXiv:2310.12931.

43. Liu, S.; Gao, C.; Li, Y. AgentHPO: Large language model agent for hyper-parameter optimization. In Proceedings of the Second Conference on Parsimony and Learning (Proceedings Track), Stanford, CA, USA, 24 March 2025.

44. Ahn, M.; Brohan, A.; Brown, N.; Chebotar, Y.; Cortes, O.; David, B.; Finn, C.; Fu, C.; Gopalakrishnan, K.; Hausman, K.; et al. Do As I Can, Not As I Say: Grounding language in robotic affordances. arXiv 2022, arXiv:2204.01691.

45. Romera-Paredes, B.; Barekatain, M.; Novikov, A.; Balog, M.; Kumar, M.P.; Dupont, E.; Ruiz, F.J.; Ellenberg, J.S.; Wang, P.; Fawzi, O.; et al. Mathematical discoveries from program search with large language models. Nature 2024, 625, 468–475.

46. Hameed, S.; Qolomany, B.; Belhaouari, S.B.; Abdallah, M.; Qadir, J.; Al-Fuqaha, A. Large Language Model Enhanced Particle Swarm Optimization for Hyperparameter Tuning for Deep Learning Models. IEEE Open J. Comput. Soc. 2025, 6, 574–585.

47. Shinohara, Y.; Xu, J.; Li, T.; Iba, H. Large language models as particle swarm optimizers. arXiv 2025, arXiv:2504.09247.

48. Novikov, A.; Vũ, N.; Eisenberger, M.; Dupont, E.; Huang, P.S.; Wagner, A.Z.; Shirobokov, S.; Kozlovskii, B.; Ruiz, F.J.; Mehrabian, A.; et al. AlphaEvolve: A coding agent for scientific and algorithmic discovery. arXiv 2025, arXiv:2506.13131.

49. Wang, C.; Wong, K.K.; Li, Z.; Jin, L.; Chae, C.B. Large Language Model Empowered Design of Fluid Antenna Systems: Challenges, Frameworks, and Case Studies for 6G. arXiv 2025, arXiv:2506.14288.

50. Pollacia, L.F. A survey of discrete event simulation and state-of-the-art discrete event languages. ACM SIGSIM Simul. Dig. 1989, 20, 8–25.

51. Zhou, H.; Huang, X.; Deng, L. Enhancing Network Traffic Classification with Large Language Models. In Proceedings of the 2024 IEEE International Conference on Big Data, Washington, DC, USA, 15–18 December 2024; pp. 7282–7291.

52. Cui, T.; Lin, X.; Li, S.; Chen, M.; Yin, Q.; Li, Q.; Xu, K. TrafficLLM: Enhancing Large Language Models for Network Traffic Analysis with Generic Traffic Representation. arXiv 2025, arXiv:2504.04222.

53. Wei, Y.; Wang, Z.; Lu, Y.; Xu, C.; Liu, C.; Zhao, H.; Chen, S.; Wang, Y. Editable Scene Simulation for Autonomous Driving via Collaborative LLM-Agents. arXiv 2024, arXiv:2402.05746.

54. Song, C.H.; Wu, J.; Washington, C.; Sadler, B.M.; Chao, W.L.; Su, Y. LLM-Planner: Few-shot grounded planning for embodied agents with large language models. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 2998–3009.

55. Wang, W.; Peng, J.; Hu, M.; Zhong, W.; Zhang, T.; Wang, S.; Zhang, Y.; Shao, M.; Ni, W. LLM Agent for Hyper-Parameter Optimization. arXiv 2025, arXiv:2506.15167.

56. Lowe, R.; Wu, Y.; Tamar, A.; Harb, J.; Abbeel, P.; Mordatch, I. Multi-agent actor-critic for mixed cooperative-competitive environments. Adv. Neural Inf. Process. Syst. 2017, 30.

57. Zhu, L.; Ma, W.; Ning, B.; Zhang, R. Movable-Antenna Enhanced Multiuser Communication via Antenna Position Optimization. IEEE Trans. Wirel. Commun. 2024, 23, 7214–7229.

58. Sampson, J.R. Adaptation in Natural and Artificial Systems; MIT Press: Cambridge, MA, USA, 1976.

59. Tian, Y.; Zhao, Q.; el abidine Kherroubi, Z.; Boukhalfa, F.; Wu, K.; Bader, F. Multimodal Transformers for Wireless Communications: A Case Study in Beam Prediction. arXiv 2023, arXiv:2309.11811.

60. Zoph, B.; Bello, I.; Kumar, S.; Du, N.; Huang, Y.; Dean, J.; Shazeer, N.; Fedus, W. ST-MoE: Designing Stable and Transferable Sparse Expert Models. arXiv 2022, arXiv:2202.08906.

61. Shi, D.; Shen, T.; Huang, Y.; Li, Z.; Leng, Y.; Jin, R.; Liu, C.; Wu, X.; Guo, Z.; Yu, L.; et al. Large Language Model Safety: A Holistic Survey. arXiv 2024, arXiv:2412.17686.

62. Wang, S.; Long, Z.; Fan, Z.; Huang, X.; Wei, Z. Benchmark Self-Evolving: A Multi-Agent Framework for Dynamic LLM Evaluation. In Proceedings of the 31st International Conference on Computational Linguistics, Abu Dhabi, United Arab Emirates, 19–24 January 2025; pp. 3310–3328.

63. Wang, W.; Chen, W.; Luo, Y.; Long, Y.; Lin, Z.; Zhang, L.; Lin, B.; Cai, D.; He, X. Model Compression and Efficient Inference for Large Language Models: A Survey. arXiv 2024, arXiv:2402.09748.

64. Ke, Z.; Ming, Y.; Joty, S. NAACL 2025 Tutorial: Adaptation of Large Language Models. arXiv 2025, arXiv:2504.03931.

| | LLM-BB | LLM-DRL | LLM-EA | LLM-Alpha |
|---|---|---|---|---|
| Design difficulty | ✩ | ✩✩ | ✩✩ | ✩✩✩ |
| Solution quality | ✩ | ✩✩✩✩ | ✩✩✩ | ✩✩✩✩ |
| FLOP requirement | ✩ | ✩✩✩ | ✩✩ | ✩✩✩✩ |
| Memory requirement | ✩ | ✩✩ | ✩✩ | ✩✩✩ |
| Real time | ✩✩ | ✩✩✩✩ | ✩✩ | ✩ |

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Path 1 Rx pitch angle | | Path 1 Rx azimuth angle | |
| Path 1 Tx pitch angle | | Path 1 Tx azimuth angle | |
| Path 2 Rx pitch angle | | Path 2 Rx azimuth angle | |
| Path 2 Tx pitch angle | | Path 2 Tx azimuth angle | |
| Path 3 Rx pitch angle | | Path 3 Rx azimuth angle | |
| Path 3 Tx pitch angle | | Path 3 Tx azimuth angle | |
| Movable range | [−1,1] × [−1,1] | Path loss coefficient | 2.5 |
| Path 1 gain | 0.634 | Path 2 gain | 0.1768 |
| Path 3 gain | 0.1768 | Frequency, f | 60 GHz |
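
The following Python sketch is a hedged illustration of how the simulation parameters in the table above could enter a position-optimization objective. It evaluates a multipath channel for a receive antenna at position (x, y) using the far-field field-response model commonly adopted for movable antennas (e.g., [57]). It then searches the movable region exhaustively. Only the three path gains and the 60 GHz carrier are taken from the table. Everything else is an assumption: the trigonometric convention for the pitch and azimuth angles, the interpretation of the movable range in wavelengths, a fixed transmit antenna at the origin, and the path angles, which the table does not list and are therefore hypothetical.

```python
import cmath
import math
from typing import List, Tuple

SPEED_OF_LIGHT = 299_792_458.0         # m/s
FREQ_HZ = 60e9                          # carrier frequency from the table
WAVELENGTH = SPEED_OF_LIGHT / FREQ_HZ   # about 5 mm

# (gain, rx_pitch, rx_azimuth, tx_pitch, tx_azimuth) per path, angles in radians.
Path = Tuple[float, float, float, float, float]


def phase_offset(x: float, y: float, pitch: float, azimuth: float) -> float:
    """Assumed far-field convention: rho = x cos(pitch) sin(azimuth) + y sin(pitch)."""
    return x * math.cos(pitch) * math.sin(azimuth) + y * math.sin(pitch)


def channel(rx_pos: Tuple[float, float], paths: List[Path],
            tx_pos: Tuple[float, float] = (0.0, 0.0),
            wavelength: float = WAVELENGTH) -> complex:
    """h = sum_l g_l exp(j k (rho_t,l(t) - rho_r,l(r))), with k = 2 pi / wavelength."""
    k = 2.0 * math.pi / wavelength
    h = 0j
    for gain, rx_pitch, rx_az, tx_pitch, tx_az in paths:
        phase = k * (phase_offset(*tx_pos, tx_pitch, tx_az)
                     - phase_offset(*rx_pos, rx_pitch, rx_az))
        h += gain * cmath.exp(1j * phase)
    return h


def best_rx_position(paths: List[Path], grid: int = 41, span_wavelengths: float = 1.0):
    """Exhaustive search of |h|^2 over [-span, span]^2 (in wavelengths, assumed)."""
    best = (float("-inf"), (0.0, 0.0))
    for i in range(grid):
        for j in range(grid):
            x = (-1.0 + 2.0 * i / (grid - 1)) * span_wavelengths * WAVELENGTH
            y = (-1.0 + 2.0 * j / (grid - 1)) * span_wavelengths * WAVELENGTH
            best = max(best, (abs(channel((x, y), paths)) ** 2, (x, y)))
    return best


# Path gains from the table; the angles are NOT given there and are hypothetical.
paths = [
    (0.634, 0.3, 0.8, 0.2, -0.5),
    (0.1768, -0.6, 1.2, 0.4, 0.9),
    (0.1768, 1.0, -0.7, -0.3, 0.1),
]
gain, pos = best_rx_position(paths)
print(gain, pos)
```

For a fixed link distance, the path loss coefficient (2.5) scales all paths by a common factor. It therefore does not move the optimal position and is omitted here. By the triangle inequality, |h|² can never exceed (0.634 + 0.1768 + 0.1768)², whatever the angles.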

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Deng, T.; Gao, Y.; Zhang, T.; Shao, M.; Ni, W.; Xu, H. Integrating Large Language Models into Fluid Antenna Systems: A Survey. Sensors 2025, 25, 5177. https://doi.org/10.3390/s25165177
