Smart City Infrastructure Monitoring with a Hybrid Vision Transformer for Micro-Crack Detection

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

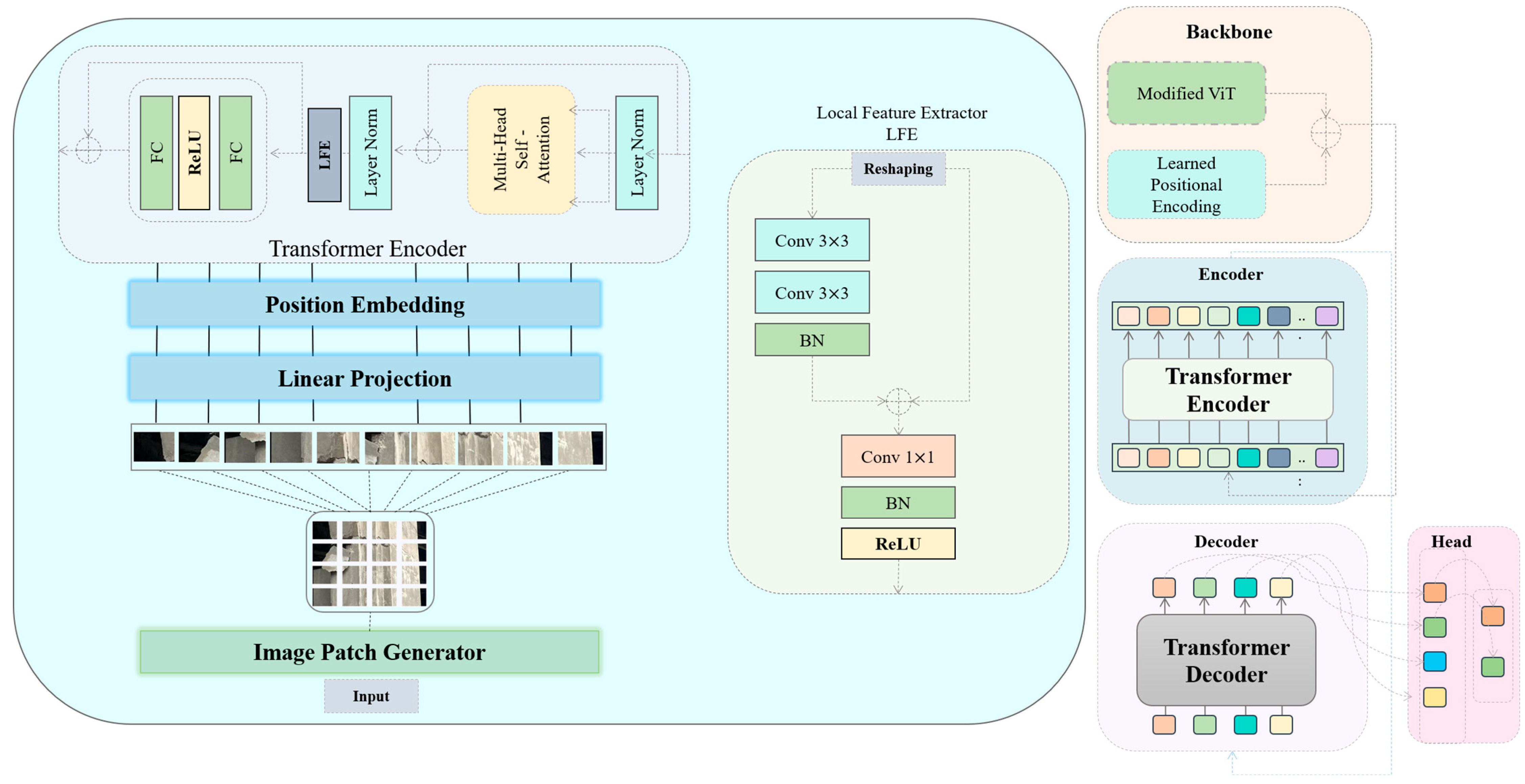

The Proposed Model

4. Experiments and Results

4.1. Dataset

4.2. Comparison with Baseline Models

4.3. Comparison with SOTA Models

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Nicolini, E. Urban Safety, Socio-Technical Solutions for Urban Infrastructure: Case Studies. Buildings 2024, 14, 1754.

2. Wang, M.; Yin, X. Construction and maintenance of urban underground infrastructure with digital technologies. Autom. Constr. 2022, 141, 104464.

3. Brunelli, M.; Ditta, C.C.; Postorino, M.N. New infrastructures for Urban Air Mobility systems: A systematic review on vertiport location and capacity. J. Air Transp. Manag. 2023, 112, 102460.

4. Manivasakan, H.; Kalra, R.; O’Hern, S.; Fang, Y.; Xi, Y.; Zheng, N. Infrastructure requirement for autonomous vehicle integration for future urban and suburban roads–Current practice and a case study of Melbourne, Australia. Transp. Res. Part A Policy Pract. 2021, 152, 36–53.

5. Abdusalomov, A.; Umirzakova, S.; Kutlimuratov, A.; Mirzaev, D.; Dauletov, A.; Botirov, T.; Zakirova, M.; Mukhiddinov, M.; Cho, Y.I. Lightweight UAV-Based System for Early Fire-Risk Identification in Wild Forests. Fire 2025, 8, 288.

6. Oh, B.K.; Park, H.S. Urban safety network for long-term structural health monitoring of buildings using convolutional neural network. Autom. Constr. 2022, 137, 104225.

7. Oulahyane, A.; Kodad, M.; Bouazza, A.; Oulahyane, K. Assessing the Impact of Deep Learning on Grey Urban Infrastructure Systems: A Comprehensive Review. In Proceedings of the 2024 International Conference on Decision Aid Sciences and Applications (DASA), Manama, Bahrain, 11–12 December 2024; pp. 1–9.

8. Rane, N. Transformers in Intelligent Architecture, Engineering, and Construction (AEC) Industry: Applications, Challenges, and Future Scope. SSRN Electron. J. 2023, 13.

9. Guo, Y.; Wang, C.; Yu, S.X.; McKenna, F.; Law, K.H. Adaln: A vision transformer for multidomain learning and predisaster building information extraction from images. J. Comput. Civ. Eng. 2022, 36, 04022024.

10. Ashayeri, M.; Abbasabadi, N. Unraveling energy justice in NYC urban buildings through social media sentiment analysis and transformer deep learning. Energy Build. 2024, 306, 113914.

11. Abdusalomov, A.; Umirzakova, S.; Shukhratovich, M.B.; Kakhorov, A.; Cho, Y.-I. Breaking New Ground in Monocular Depth Estimation with Dynamic Iterative Refinement and Scale Consistency. Appl. Sci. 2025, 15, 674.

12. Kosova, F.; Altay, Ö.; Ünver, H.Ö. Structural health monitoring in aviation: A comprehensive review and future directions for machine learning. Nondestruct. Test. Eval. 2025, 40, 1–60.

13. Mohebbifar, M.R.; Omarmeli, K. Defect detection by combination of threshold and multistep watershed techniques. Russ. J. Nondestruct. Test. 2020, 56, 80–91.

14. Jahanshahi, M.R.; Kelly, J.S.; Masri, S.F.; Sukhatme, G.S. A survey and evaluation of promising approaches for automatic image-based defect detection of bridge structures. Struct. Infrastruct. Eng. 2009, 5, 455–486.

15. Saleh, A.K.; Sakka, Z.; Almuhanna, H. The application of two-dimensional continuous wavelet transform based on active infrared thermography for subsurface defect detection in concrete structures. Buildings 2022, 12, 1967.

16. Lin, Y.Z.; Nie, Z.H.; Ma, H.W. Structural damage detection with automatic feature-extraction through deep learning. Comput.-Aided Civ. Infrastruct. Eng. 2017, 32, 1025–1046.

17. Zhu, H.; Wei, G.; Ma, D.; Yu, X.; Dong, C. Research on the detection and identification method of internal cracks in semi-rigid base asphalt pavement based on three-dimensional ground penetrating radar. Measurement 2025, 244, 116486.

18. Karimi, N.; Mishra, M.; Lourenço, P.B. Automated surface crack detection in historical constructions with various materials using deep learning-based YOLO network. Int. J. Archit. Herit. 2025, 19, 581–597.

19. Krishnan, S.S.R.; Karuppan, M.N.; Khadidos, A.O.; Khadidos, A.O.; Selvarajan, S.; Tandon, S.; Balusamy, B. Comparative analysis of deep learning models for crack detection in buildings. Sci. Rep. 2025, 15, 2125.

20. Kim, B.; Yuvaraj, N.; Sri Preethaa, K.R.; Arun Pandian, R. Surface crack detection using deep learning with shallow CNN architecture for enhanced computation. Neural Comput. Appl. 2021, 33, 9289–9305.

21. Abbas, Y.M.; Alghamdi, H. Semantic segmentation and deep CNN learning vision-based crack recognition system for concrete surfaces: Development and implementation. Signal Image Video Process. 2025, 19, 339.

22. Song, F.; Wang, D.; Dai, L.; Yang, X. Concrete bridge crack semantic segmentation method based on improved DeepLabV3+. In Proceedings of the 2024 IEEE 13th Data Driven Control and Learning Systems Conference (DDCLS), Kaifeng, China, 17–19 May 2024; pp. 1293–1298.

23. Liu, Y. DeepLabV3+ Based Mask R-CNN for Crack Detection and Segmentation in Concrete Structures. Int. J. Adv. Comput. Sci. Appl. 2025, 16, 142–149.

24. Shahin, M.; Chen, F.F.; Maghanaki, M.; Hosseinzadeh, A.; Zand, N.; Khodadadi Koodiani, H. Improving the concrete crack detection process via a hybrid visual transformer algorithm. Sensors 2024, 24, 3247.

25. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In European Conference on Computer Vision; Springer International Publishing: Cham, Switzerland, 2020; pp. 213–229.

26. Qingyi, W.; Bo, C. A novel transfer learning model for the real-time concrete crack detection. Knowl.-Based Syst. 2024, 301, 112313.

27. Lin, X.; Meng, Y.; Sun, L.; Yang, X.; Leng, C.; Li, Y.; Niu, Z.; Gong, W.; Xiao, X. Building Surface Defect Detection Based on Improved YOLOv8. Buildings 2025, 15, 1865.

28. Yadav, D.P.; Sharma, B.; Chauhan, S.; Dhaou, I.B. Bridging Convolutional Neural Networks and Transformers for Efficient Crack Detection in Concrete Building Structures. Sensors 2024, 24, 4257.

29. Quan, J.; Ge, B.; Wang, M. CrackViT: A unified CNN-transformer model for pixel-level crack extraction. Neural Comput. Appl. 2023, 35, 10957–10973.

30. Ma, M.; Yang, L.; Liu, Y.; Yu, H. A transformer-based network with feature complementary fusion for crack defect detection. IEEE Trans. Intell. Transp. Syst. 2024, 25, 16989–17006.

31. Tan, C.; Liu, J.; Zhao, Z.; Liu, R.; Tan, P.; Yao, A.; Pan, S.; Dong, J. ETAFHrNet: A Transformer-Based Multi-Scale Network for Asymmetric Pavement Crack Segmentation. Appl. Sci. 2025, 15, 6183.

| Layer Name | Type | Kernel Size | Activation |
|---|---|---|---|
| Input | Feature Map | - | - |
| Conv | Convolution | 3 × 3 | - |
| Conv | Convolution | 3 × 3 | - |
| BN | Batch Normalization | - | - |
| Connection | Residual Connection | - | - |
| Conv | Convolution | 1 × 1 | ReLU |
| BN | Batch Normalization | - | ReLU |
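The layer table above reads as a single residual block. The NumPy sketch below is one possible reading of it, under assumptions that this extract does not state: the block is taken to be the LFE component named in the ablation and comparison tables; both 3 × 3 convolutions use same padding and keep the channel count so that the residual sum is shape-compatible; the skip connection adds the block input to the output of the first batch-normalization layer; and batch normalization uses batch statistics without a learned scale and shift. The names `conv2d`, `batch_norm`, `lfe_block` and `make_params` are hypothetical, and the random weights only stand in for trained ones.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv2d(x, w, b):
    """Same-padded 2-D convolution (cross-correlation, as in DL frameworks).

    x: (N, C_in, H, W), w: (C_out, C_in, k, k) with odd k, b: (C_out,).
    """
    k = w.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (0, 0), (p, p), (p, p)))
    # Sliding k x k windows over the spatial axes: (N, C_in, H, W, k, k)
    windows = sliding_window_view(xp, (k, k), axis=(2, 3))
    y = np.einsum("nchwij,ocij->nohw", windows, w)
    return y + b[None, :, None, None]


def batch_norm(x, eps=1e-5):
    """Batch normalization with batch statistics; learned scale/shift omitted."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def relu(x):
    return np.maximum(x, 0.0)


def lfe_block(x, params):
    """Hypothetical reading of the layer table (assumed to be the LFE block)."""
    y = conv2d(x, params["w1"], params["b1"])        # Conv 3x3, no activation
    y = conv2d(y, params["w2"], params["b2"])        # Conv 3x3
    y = batch_norm(y)                                # BN
    y = y + x                                        # residual connection (assumed skip from input)
    y = relu(conv2d(y, params["w3"], params["b3"]))  # Conv 1x1 + ReLU
    return relu(batch_norm(y))                       # BN + ReLU


def make_params(c_in, c_out, rng, scale=0.1):
    """Hypothetical random weights standing in for trained ones."""
    return {
        "w1": scale * rng.standard_normal((c_in, c_in, 3, 3)), "b1": np.zeros(c_in),
        "w2": scale * rng.standard_normal((c_in, c_in, 3, 3)), "b2": np.zeros(c_in),
        "w3": scale * rng.standard_normal((c_out, c_in, 1, 1)), "b3": np.zeros(c_out),
    }


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((2, 4, 16, 16))  # batch of 2, 4 channels, 16x16 map
    y = lfe_block(x, make_params(c_in=4, c_out=8, rng=rng))
    print(y.shape)                           # (2, 8, 16, 16)
```

In this reading the 1 × 1 convolution comes after the residual sum, so the block can change the channel width (4 → 8 in the example) without breaking the skip connection. A framework implementation would normally use learned batch-normalization parameters and running statistics at inference time.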

| Configuration | Accuracy (%) | F1-Score | Notes |
|---|---|---|---|
| DETR (baseline) | 91.05 | 0.89 | Without ViT |
| ViT + DETR | 93.11 | 0.90 | With ViT, without LFE |
| ViT + LFE + DETR (ours) | 95.0 | 0.93 | With ViT and LFE |
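The ablation figures can be decomposed into per-component gains. The short Python sketch below takes the accuracy values from the ablation table and reports, for each added component, the gain in percentage points and the relative reduction in error rate (100 − accuracy). The function name `incremental_gains` is hypothetical; the computation is plain arithmetic on the reported numbers.

```python
# Accuracy (%) per configuration, copied from the ablation table above.
ABLATION = [
    ("DETR baseline", 91.05),
    ("+ ViT", 93.11),
    ("+ ViT + LFE", 95.0),
]


def incremental_gains(rows):
    """For each row after the first, return
    (name, accuracy gain in percentage points, relative error-rate reduction)."""
    out = []
    for (_, prev_acc), (name, acc) in zip(rows, rows[1:]):
        prev_err, err = 100.0 - prev_acc, 100.0 - acc  # error rates in %
        out.append((name, round(acc - prev_acc, 2), round((prev_err - err) / prev_err, 3)))
    return out


for name, gain_pp, rel in incremental_gains(ABLATION):
    print(f"{name:12s} +{gain_pp:.2f} pp accuracy, {rel:.1%} fewer errors than the previous row")
```

For the three reported rows this gives +2.06 pp from adding the ViT (about 23% fewer errors than the DETR baseline) and a further +1.89 pp from adding the LFE (about 27% fewer of the remaining errors).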

| Model | Accuracy (%) | Precision | Recall | F1-Score | mAP@0.5 |
|---|---|---|---|---|---|
| DETR (baseline) | 91.05 | 0.89 | 0.88 | 0.89 | 0.82 |
| ViT-DETR | 93.11 | 0.91 | 0.90 | 0.90 | 0.85 |
| YOLOv5m | 91.12 | 0.86 | 0.85 | 0.86 | 0.81 |
| YOLOv6m | 91.67 | 0.89 | 0.88 | 0.88 | 0.82 |
| YOLOv7m | 92.78 | 0.91 | 0.90 | 0.90 | 0.85 |
| YOLOv8m | 93.89 | 0.92 | 0.91 | 0.92 | 0.86 |
| YOLOv9m | 94.16 | 0.93 | 0.92 | 0.92 | 0.86 |
| Ours (ViT + LFE + DETR) | 95.0 | 0.94 | 0.93 | 0.93 | 0.89 |
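Because F1 is the harmonic mean of precision and recall, F1 = 2PR / (P + R), the F1 column can be cross-checked against the precision and recall columns. The sketch below does this for the rows of the baseline comparison table; since all three quantities are rounded to two decimals, it allows a tolerance of 0.01. The helper names `f1` and `check_f1` are hypothetical.

```python
# (precision, recall, reported F1) copied from the baseline comparison table above.
ROWS = {
    "DETR (baseline)": (0.89, 0.88, 0.89),
    "ViT-DETR": (0.91, 0.90, 0.90),
    "YOLOv5m": (0.86, 0.85, 0.86),
    "YOLOv6m": (0.89, 0.88, 0.88),
    "YOLOv7m": (0.91, 0.90, 0.90),
    "YOLOv8m": (0.92, 0.91, 0.92),
    "YOLOv9m": (0.93, 0.92, 0.92),
    "Ours": (0.94, 0.93, 0.93),
}


def f1(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def check_f1(rows, tol=0.01):
    """Return the names of rows whose reported F1 differs from 2PR/(P+R) by more than tol.

    tol = 0.01 absorbs the two-decimal rounding of P, R and F1 in the table.
    """
    return [name for name, (p, r, reported) in rows.items() if abs(f1(p, r) - reported) > tol]


print(check_f1(ROWS))  # prints [] : every row is consistent up to rounding
```

The largest discrepancy is about 0.005 (e.g. YOLOv5m: 2 × 0.86 × 0.85 / 1.71 ≈ 0.855 against a reported 0.86), which is exactly what two-decimal rounding produces.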

| Model | Accuracy (%) | Precision | Recall | F1-Score | mAP@0.5 | Category | Reference |
|---|---|---|---|---|---|---|---|
| Canny + Sobel [14] | 72.3 | 0.65 | 0.60 | 0.62 | – | Traditional | Jahanshahi et al. |
| Wavelet + IR [15] | 76.4 | 0.69 | 0.65 | 0.67 | – | Traditional | Saleh et al. |
| Shallow CNN [20] | 84.1 | 0.81 | 0.79 | 0.80 | 0.74 | CNN | Kim et al. |
| DeepLabV3+ [22] | 88.9 | 0.87 | 0.85 | 0.86 | 0.78 | CNN | Song et al. |
| YOLOv5m [27] | 91.12 | 0.86 | 0.85 | 0.86 | 0.81 | CNN (YOLO) | Lin et al. |
| YOLOv9m [27] | 94.16 | 0.93 | 0.92 | 0.92 | 0.86 | CNN (YOLO) | Lin et al. |
| DETR [25] | 91.05 | 0.89 | 0.88 | 0.89 | 0.82 | Transformer | Carion et al. |
| Adaln [9] | 91.5 | 0.90 | 0.89 | 0.89 | 0.83 | Transformer | Guo et al. |
| ViT-DETR [24] | 93.11 | 0.91 | 0.90 | 0.90 | 0.85 | Transformer | Shahin et al. |
| CrackViT [29] | 93.8 | 0.92 | 0.91 | 0.91 | 0.86 | Hybrid CNN + Transformer | Quan et al. |
| FCFNet [30] | 93.9 | 0.92 | 0.91 | 0.91 | 0.86 | Hybrid CNN + Transformer | Ma et al. |
| ETAFHrNet [31] | 94.2 | 0.93 | 0.92 | 0.92 | 0.86 | Hybrid CNN + Transformer | Tan et al. |
| ViLFD (Ours) | 95.0 | 0.94 | 0.93 | 0.93 | 0.89 | Hybrid ViT + LFE | This Work |
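Both comparison tables report mAP@0.5: average precision computed with a detection counted as a true positive only when its intersection-over-union (IoU) with a not-yet-matched ground-truth box is at least 0.5, then averaged over classes. The evaluation protocol is not given in this extract, so the single-class sketch below rests on assumptions: greedy matching in descending score order and all-point interpolation of the precision-recall curve, as in the PASCAL VOC evaluation from 2010 onward. With a single crack class, mAP equals this AP. The function names `iou` and `average_precision` are hypothetical.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Detection = Tuple[str, float, Box]       # (image_id, score, box)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets: List[Detection], gts: Dict[str, List[Box]],
                      iou_thr: float = 0.5) -> float:
    """Single-class AP at a fixed IoU threshold (all-point interpolation)."""
    n_gt = sum(len(boxes) for boxes in gts.values())
    if n_gt == 0:
        return 0.0
    used = {img: [False] * len(boxes) for img, boxes in gts.items()}
    tp = []
    # Greedy matching: highest-scoring detections claim ground truths first.
    for img, _score, box in sorted(dets, key=lambda d: d[1], reverse=True):
        best, best_j = 0.0, -1
        for j, g in enumerate(gts.get(img, [])):
            overlap = iou(box, g)
            if overlap > best:
                best, best_j = overlap, j
        if best >= iou_thr and not used[img][best_j]:
            used[img][best_j] = True
            tp.append(1)
        else:
            tp.append(0)  # low IoU or duplicate detection -> false positive
    precision, recall, hits = [], [], 0
    for k, t in enumerate(tp, start=1):
        hits += t
        precision.append(hits / k)
        recall.append(hits / n_gt)
    # Precision envelope: make precision non-increasing as recall grows.
    for k in range(len(precision) - 2, -1, -1):
        precision[k] = max(precision[k], precision[k + 1])
    # Area under the interpolated precision-recall curve.
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precision, recall):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


if __name__ == "__main__":
    gts = {"img1": [(0, 0, 10, 10)], "img2": [(0, 0, 10, 10)]}
    dets = [("img1", 0.9, (0, 0, 10, 10)),    # IoU 1.0  -> TP
            ("img2", 0.8, (20, 20, 30, 30)),  # IoU 0.0  -> FP
            ("img2", 0.7, (1, 1, 10, 10))]    # IoU 0.81 -> TP
    print(f"{average_precision(dets, gts):.3f}")  # prints 0.833
```

In the toy example the false positive ranked second lowers precision at full recall to 2/3, giving AP = 0.5 × 1 + 0.5 × 2/3 = 5/6. COCO-style evaluation would instead sample precision at 101 recall points, so reported mAP@0.5 values are only comparable across models evaluated under the same protocol.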

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nasimov, R.; Cho, Y.I. Smart City Infrastructure Monitoring with a Hybrid Vision Transformer for Micro-Crack Detection. Sensors 2025, 25, 5079. https://doi.org/10.3390/s25165079
