Abstract

With the deep integration of edge computing and Internet of Things (IoT) technologies, the computational capabilities of intelligent edge cameras continue to advance, providing new opportunities for the local deployment of video understanding algorithms. However, existing video captioning models have high computational complexity and large parameter counts, which makes it difficult for them to meet the real-time processing requirements of resource-constrained IoT edge devices. In this work, we propose EdgeVidCap, a lightweight video captioning model designed specifically for IoT edge cameras. We design a hybrid module termed Synergetic Attention State Mamba (SASM) that incorporates channel attention to enhance feature selection and leverages State Space Models (SSMs) to efficiently capture long-range spatial dependencies, achieving efficient spatiotemporal modeling of multimodal video features. In the caption generation stage, we propose an adaptive attention-guided LSTM decoder that dynamically adjusts feature weights according to video content and auto-regressively generates semantically rich and accurate textual descriptions. We evaluate EdgeVidCap comprehensively on mainstream datasets, including MSR-VTT and MSVD. Experimental results demonstrate that our system achieves higher accuracy than existing approaches, and that our streamlined frame filtering mechanism improves processing efficiency while producing more reliable descriptions after frame selection.

1. Introduction

With the flourishing development of short video platforms and the explosive growth of multimedia content, automatic video captioning technology has demonstrated broad application prospects in domains such as content retrieval, accessibility, and intelligent media processing [1,2]. Video captioning aims to automatically transform video content into accurate and fluent natural language descriptions. This task requires simultaneous understanding of visual content, temporal relationships, and semantic information, making it a significant research topic at the intersection of computer vision and natural language processing [3]. In recent years, with the rapid advancement of edge computing technology and significant improvements in deep neural network performance, intelligent edge devices have been widely deployed in video processing. These intelligent cameras equipped with edge computing capabilities (hereinafter referred to as edge cameras) possess more powerful local computational resources and can achieve real-time video analysis and processing, providing new technological pathways for the practical application of video captioning technology [4,5].

Video captioning technology has evolved from template-based approaches and statistical learning methods to deep learning paradigms. Early studies primarily relied on predefined templates and rules, where objects, actions, and scenes detected in videos were used to populate language templates [6]. Subsequently, statistical machine learning methods introduced more flexible language models, but these approaches struggled to handle complex visual-language associations [7]. In the deep learning era, the research paradigm for video captioning has undergone a fundamental transformation. The CNN-RNN architecture pioneered end-to-end caption generation [8], where Convolutional Neural Networks (CNNs) extract visual features and Recurrent Neural Networks (RNNs) perform sequence decoding. However, such methods often overlook the long-term temporal dependencies within videos. To better model temporal information, researchers have proposed various improvements: the introduction of attention mechanisms enables models to dynamically focus on essential parts of a video [9]; temporal attention networks further enhance the modeling of action and event evolution [10,11]; and hierarchical attention structures facilitate multi-scale feature extraction from frame-level to segment-level [12,13]. With the breakthrough progress of Transformers across various domains [14,15], video captioning methods based on self-attention mechanisms have gradually emerged as a research hotspot. Although these approaches demonstrate superior performance in modeling long-range dependencies, Vision Transformers (ViTs) are constrained by the quadratic computational complexity of their self-attention mechanisms, rendering them difficult to deploy in real-world scenarios with limited computational resources. 
Existing methodologies still suffer from the following critical issues: (1) redundant video information, high model computational complexity, and lack of targeted keyframe selection strategies; (2) insufficient multimodal feature fusion, suboptimal intermodal alignment, and excessive model parameters.

To address these challenges, we propose EdgeVidCap, a channel-spatial dual-branch lightweight video captioning model for IoT edge cameras. It incorporates cross-attention into the keyframe selection process, enabling efficient extraction of salient information from video sequences. For feature representation, the model integrates miniGPT-based visual object features, deep audio features, and 3D optical flow motion features to construct comprehensive multimodal representations. In the feature fusion stage, a hybrid module termed Synergetic Attention State Mamba (SASM) introduces channel attention to enhance feature selection and incorporates State Space Models (SSMs) to efficiently capture long-range spatial dependencies, achieving efficient spatiotemporal modeling of multimodal video features. In the caption generation phase, an adaptive attention-guided sentence decoder dynamically adjusts feature weights according to contextual information to generate more accurate and natural descriptive text.

Our contributions are as follows.

- We propose a keyframe guidance mechanism that precisely locates and extracts critical information in video sequences through cross-attention computation.

- We design a hybrid module called Synergetic Attention State Mamba (SASM), which incorporates channel attention to enhance feature selection while leveraging State Space Models (SSMs) to efficiently capture long-range spatial dependencies, enabling efficient spatiotemporal modeling of multimodal video features.

- We introduce an adaptive attention mechanism into a sentence decoder that selectively focuses on the fused features by dynamically adjusting feature weights at each time step.

2. Related Work

The research on video captioning began with the encoder–decoder framework. Venugopalan et al. [6] first proposed an end-to-end sequence-to-sequence learning model (S2VT), which utilized CNNs to extract video frame features and employed LSTMs for sequence decoding. Building on this, Yao et al. [16] introduced 3D convolutional networks to enhance the representation of spatiotemporal features. Pan et al. [17] designed a hierarchical LSTM network to separately model the temporal structure of videos and the language generation process. Although these methods established the basic paradigm for video captioning, they often faced challenges such as difficulties in modeling long-term dependencies and insufficient utilization of features.

To address these limitations, Krishna et al. [18] proposed a dense video captioning framework that improved the description of long videos through segment-wise processing. Wang et al. [19] designed a bidirectional LSTM structure to better leverage contextual information for guiding caption generation. The introduction of attention mechanisms significantly boosted the performance of video captioning. Early works primarily focused on temporal attention, such as the hierarchical temporal attention network proposed by Yu et al. [20], which adaptively focused on essential time segments in videos. Spatial attention later received increasing interest as well: Zhang et al. [21] developed a spatiotemporal dual-attention mechanism to achieve fine-grained visual feature extraction.

With the successful application of the Transformer architecture in computer vision, recent research has increasingly focused on transferring the capabilities of large language models to video captioning tasks. Dai et al. [22] proposed a temporal-aware Transformer structure based on visual encoders, which improved the modeling of video dynamics while maintaining efficient inference. Guo et al. [23] proposed a semantic guidance network for video captioning, which effectively improved description accuracy and generalization ability by implementing a novel scene frame sampling strategy to address visual information redundancy and scene information omission. Yang et al. [24] introduced the VideoChat model, which significantly enhanced the naturalness and accuracy of captions by fine-tuning ChatGPT with video understanding instructions. Wang et al. [25] designed the Video-LLaMA framework, injecting video understanding capabilities into the LLaMA model, thereby enabling more flexible video–text interactions.

3. Problem Definition

3.1. Task Overview

Video captioning is a challenging multimodal task that aims to generate natural language descriptions for video content automatically. Given an input video sequence, the goal is to produce a coherent and semantically accurate textual description that captures the key visual events, objects, and their temporal relationships. This task is particularly challenging for IoT edge cameras due to computational constraints and the need for real-time processing.

3.2. System Pipeline Overview

The core objective of video captioning is to summarize and reinterpret video content in natural language. Because of the significant heterogeneity between video and natural language, the data processing involved in this task is highly complex, which makes video captioning a major challenge in computer vision. We now formally define the mathematical representations. Let the dataset be represented as $D = \{(V_i, C_i)\}_{i=1}^{M}$, where $V_i$ denotes the $i$-th video and $C_i$ represents its corresponding ground-truth caption. Each video is decomposed into a sequence of frames $V_i = \{f_1, f_2, \ldots, f_S\}$, where $S$ represents the total number of frames. Following the approach in [26], the dataset is divided into training, testing, and validation subsets in proportions of 65%, 30%, and 5%, respectively. These disjoint subsets are denoted as the training set $D_{\text{train}}$, the testing set $D_{\text{test}}$, and the validation set $D_{\text{val}}$, with $D = D_{\text{train}} \cup D_{\text{test}} \cup D_{\text{val}}$.
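The 65%/30%/5% split can be made concrete with a short sketch. It assumes, hypothetically, that videos are split contiguously in their given order, and the function name `split_indices` is illustrative only. For a corpus of 10,000 clips such as MSR-VTT, these proportions come out close to the standard 6,513/2,990/497 training/testing/validation split.

```python
def split_indices(num_videos: int, ratios=(0.65, 0.30, 0.05)):
    """Partition indices 0..num_videos-1 into train/test/val, in that order.

    Hypothetical helper: contiguous slicing is an assumption, not the paper's code.
    """
    n_train = round(num_videos * ratios[0])
    n_test = round(num_videos * ratios[1])
    train = list(range(0, n_train))
    test = list(range(n_train, n_train + n_test))
    val = list(range(n_train + n_test, num_videos))  # remainder goes to validation
    return train, test, val


train, test, val = split_indices(10_000)
print(len(train), len(test), len(val))  # 6500 3000 500
```

Assigning the remainder to the validation subset guarantees that every video lands in exactly one subset even when the proportions do not divide the corpus size evenly.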

During the data preprocessing stage, each video is first decomposed into $S$ frames using the FFmpeg tool, i.e., $V = \{f_1, f_2, \ldots, f_S\}$. Next, the audio track of each video is extracted to construct an audio set $A$. Finally, cross-attention computation is used to select $X$ keyframes as the input to the model, denoted $F = \{f_{k_1}, f_{k_2}, \ldots, f_{k_X}\}$. During the encoding stage, the sampled frame set $F$ is fed into 2D-CNN and 3D-CNN models. The 2D-CNN model outputs a $P$-dimensional feature vector $v^{2D} \in \mathbb{R}^{P}$, while the 3D-CNN model outputs a $Q$-dimensional feature vector $v^{3D} \in \mathbb{R}^{Q}$. The corresponding audio from the audio set $A$ is input into Google's pre-trained audio model VGGish [27], which extracts an $R$-dimensional audio feature vector $v^{a} \in \mathbb{R}^{R}$. The final textual description generated by the model is represented as $Y = \{y_1, y_2, \ldots, y_T\}$, where $y_t$ is the $t$-th generated word.

4. Model Construction

4.1. Model Architecture

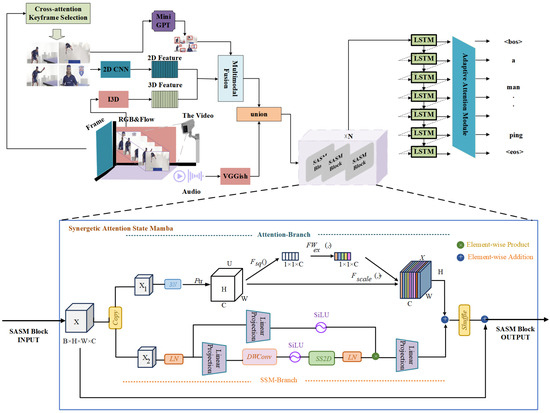

The architecture of our proposed model is illustrated in Figure 1. The model takes a video as input, which FFmpeg first decomposes into a sequence of image frames and a single-channel audio stream. Because adjacent video frames are highly similar, processing the complete frame sequence incurs substantial redundant computation, prolongs processing time, and can introduce extraneous information. To address this, we introduce a visual span-based keyframe selection mechanism (Section 4.2) that retains only the essential keyframes to supply visual features to the model, effectively reducing computational overhead. In the feature extraction stage, we derive features from different modalities: static visual features of keyframes are extracted using a 2D CNN and a pruned miniGPT architecture; spatiotemporal 3D visual features of the video are captured via the I3D network [12]; and deep audio features are obtained from the video's audio component with Google's pre-trained VGGish model [27]. We then construct a multimodal fusion network (Section 4.3) to integrate the 2D and 3D visual features and concatenate the result with the audio features extracted by VGGish. Considering the distinct representational characteristics of visual and audio temporal features, we design the Synergetic Attention State Mamba Block (Section 4.4), which adopts a dual-branch parallel architecture to jointly optimize channel attention and spatial state modeling. Finally, the fused encoding vector is fed into the adaptive sentence decoding module (Section 4.5). Within this module, the adaptive attention mechanism processes the LSTM hidden state at each time step and dynamically adjusts the weights assigned to the encoding vectors, strengthening the focus on critical content across the multimodal features. A word probability distribution is then generated via the Softmax function, ultimately yielding a textual description of the input video.

Figure 1.

Channel-spatial dual-branch lightweight video description model for IoT edge cameras. Overview of the proposed algorithmic model for video description generation. The input video is decomposed into frames and audio using FFmpeg. Keyframes are selected via a visual span-based mechanism (Section 4.2) to reduce redundancy. Features are extracted using 2D CNN with pruned miniGPT for static visuals, I3D for spatiotemporal visuals, and VGGish for audio. These are fused in a multimodal network (Section 4.3), enhanced by the Synergetic Attention State Mamba Block (Section 4.4) for synergistic optimization, and decoded adaptively (Section 4.5) to produce textual descriptions.

4.2. Visual Span Video Keyframe Selection

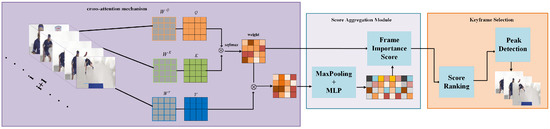

In video description generation tasks, processing every frame of a video generates substantial computational redundancy, which is time-consuming and prone to producing partially extraneous information [28,29]. To mitigate these issues, we propose a visual span keyframe selection method, as illustrated in Figure 2. Computing attention weights between consecutive frames allows scene transitions to be captured precisely [30]. When two frames differ significantly, the attention distribution is more dispersed; conversely, when two frames are highly similar, the attention is more concentrated. This mechanism efficiently identifies and extracts semantically significant frames within long video sequences, reducing computational overhead while preserving critical visual information.

Figure 2.

Visual-spanning keyframe selection. The framework processes input video sequences through attention-based mechanisms, computing attention weights between consecutive frames to precisely capture scene transitions. The architecture employs a sparse aggregation module to analyze temporal relationships: when significant differences exist between consecutive frames, the attention distribution exhibits greater dispersion, indicating potential scene transitions; conversely, when frames demonstrate high similarity, attention becomes more concentrated. Through similarity assessment, similar frames can be removed while retaining keyframes with greater differences.

The pseudocode of the algorithm is presented in Algorithm 1. Using the cross-attention mechanism, the algorithm processes a given video comprising $N$ sampled frames, denoted $\{f_1, f_2, \ldots, f_N\}$.

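| Algorithm 1 Cross-attention keyframe selection |
|---|
| **Input:** sampled frames $\{f_1, f_2, \ldots, f_N\}$; threshold $\tau$ |
| **Output:** Keyframe set $K$ |
| **Steps:** |
| 1. Extract token features $Z_i \in \mathbb{R}^{L \times d}$ for every frame $f_i$ with the visual encoder. |
| 2. For $i = 1, \ldots, N-1$: compute $Q$, $K$, $V$ from $Z_i$ and $Z_{i+1}$ (Equation (1)) and the attention matrix $A_i$ (Equation (2)). |
| 3. Pool $A_i$ into the inter-frame similarity $s_i$ (Equation (3)) and compute the difference $d_i$ (Equation (4)). |
| 4. Add the frames whose difference exceeds $\tau$ to $K$ (Equation (5)). |
| 5. Return $K$. |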

We first use a visual encoder to extract features from each frame, resulting in frame-level features $Z_i \in \mathbb{R}^{L \times d}$, where $L$ denotes the number of patches/tokens into which a frame is divided and $d$ represents the dimensionality of each token's feature vector. To evaluate scene changes, the differences between consecutive frames $f_i$ and $f_{i+1}$ are assessed iteratively.

In the cross-attention mechanism, to compute the attention distribution between frames $f_i$ and $f_{i+1}$, we treat $Z_i$ as the Query (Q) and $Z_{i+1}$ as the Key (K) and Value (V). Learnable linear projection matrices $W^Q$, $W^K$, and $W^V$ map the original features into the attention subspace, as formulated in Equation (1):

$$ Q = Z_i W^Q, \qquad K = Z_{i+1} W^K, \qquad V = Z_{i+1} W^V \tag{1} $$

$Q$, $K$, and $V$ all have dimensions $L \times d$, matching the original features, while the projections introduce a new learnable parameter space. Given $Q$ and $K$, the attention weight matrix $A_i \in \mathbb{R}^{L \times L}$ is computed as in Equation (2).

Each element $A_i(m, n)$ of the matrix represents the association between the $m$-th token of frame $f_i$ and the $n$-th token of frame $f_{i+1}$. The softmax operation ensures that the attention weights in each row sum to 1.
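A minimal NumPy sketch of Equations (1) and (2) for one pair of consecutive frames is shown below. The random matrices stand in for the learnable query and key projections, and the $1/\sqrt{d}$ scaling is the usual scaled dot-product convention, assumed here rather than taken from the text. The value projection is omitted because only the attention weights are later pooled into a frame-level score.

```python
import numpy as np


def cross_attention_weights(z_i, z_next, w_q, w_k):
    """Row-stochastic attention from tokens of frame i (queries) to frame i+1 (keys).

    z_i, z_next: (L, d) token features of consecutive frames.
    w_q, w_k:    (d, d) projections (random here; learnable in the model).
    """
    q = z_i @ w_q                                # (L, d) queries from frame i
    k = z_next @ w_k                             # (L, d) keys from frame i+1
    scores = q @ k.T / np.sqrt(q.shape[1])       # (L, L); 1/sqrt(d) scaling is an assumption
    scores -= scores.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    a = np.exp(scores)
    return a / a.sum(axis=1, keepdims=True)      # softmax over each row


rng = np.random.default_rng(0)
L, d = 16, 32
z_i = rng.standard_normal((L, d))
z_next = rng.standard_normal((L, d))
w_q = rng.standard_normal((d, d)) / np.sqrt(d)
w_k = rng.standard_normal((d, d)) / np.sqrt(d)
A = cross_attention_weights(z_i, z_next, w_q, w_k)
print(A.shape, np.allclose(A.sum(axis=1), 1.0))  # (16, 16) True
```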

In the keyframe selection task, we care about the overall frame-level difference rather than token-level outputs. To this end, an aggregation operation is applied to the attention matrix $A_i$ to derive a scalar inter-frame similarity $s_i$. The most common choices are the mean or the maximum of the elements of $A_i$, as in Equation (3):

$$ s_i = \mathrm{Pool}(A_i) \tag{3} $$

Here, Pool represents a pooling operator, such as average or max pooling. A larger $s_i$ indicates closer similarity between the two frames, whereas a smaller $s_i$ reflects a more substantial content difference. Because this work aims to identify the keyframes with "the greatest changes", we define the difference function $d_i$ as in Equation (4).

The difference function $d_i$ intuitively reflects the "magnitude of change" between frame $f_i$ and frame $f_{i+1}$. A relatively large $d_i$ (i.e., very low similarity) indicates a significant visual transition at position $i$, often corresponding to a new scene or an action turning point.

To select the keyframes that best represent significant content changes, the difference sequence $\{d_i\}$ can be sorted, or each value can be compared with a threshold $\tau$; the frames with the highest scores, or those exceeding the threshold, are selected. With $\tau$ as the reference threshold for identifying peaks of change in a video, the final keyframe set $K$ is given by Equation (5).

Here, K serves as the final set of selected keyframes, capturing the most significant scene changes.

We consider the relationships among all token pairs across the two frames, rather than only comparing corresponding positions, which allows complex spatial variations to be captured. Aggregating the attention matrix $A_i$ lifts the analysis from token-level changes to frame-level differences, giving a more comprehensive view of the temporal dynamics within the video sequence.
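The pooling, difference, and thresholding steps can be sketched end to end as follows. Several assumptions are labeled in the code: the projections are identities for brevity, the difference function is taken to be $d_i = 1 - s_i$ (the exact form of Equation (4) is not reproduced here), and each detected transition keeps frame $i+1$, the first frame showing the new content. The sketch uses max pooling because averaging all entries of a row-normalized $L \times L$ attention matrix always yields exactly $1/L$ (the $L$ rows each sum to 1), so plain mean pooling cannot distinguish similar from dissimilar frames; the last line checks this.

```python
import numpy as np


def softmax_rows(s):
    s = s - s.max(axis=1, keepdims=True)
    e = np.exp(s)
    return e / e.sum(axis=1, keepdims=True)


def frame_similarity(z_i, z_next, pool="max"):
    """Scalar similarity s_i pooled from the cross-attention matrix of two frames."""
    # Assumption: identity query/key projections, for brevity.
    a = softmax_rows(z_i @ z_next.T / np.sqrt(z_i.shape[1]))
    if pool == "max":
        return float(a.max(axis=1).mean())  # average over queries of each row's peak weight
    return float(a.mean())                  # always 1/L for a row-stochastic matrix


def select_keyframes(features, tau):
    """Indices of frames that start a new scene, using the assumed d_i = 1 - s_i."""
    keep = []
    for i in range(len(features) - 1):
        d_i = 1.0 - frame_similarity(features[i], features[i + 1])
        if d_i > tau:
            keep.append(i + 1)  # assumption: keep the first frame after the transition
    return keep


rng = np.random.default_rng(0)
L, d = 16, 64
scenes = [rng.standard_normal((L, d)) for _ in range(3)]
# 12 synthetic frames: 4 near-duplicate frames per scene
frames = [scenes[k // 4] + 0.1 * rng.standard_normal((L, d)) for k in range(12)]
print(select_keyframes(frames, tau=0.5))  # [4, 8]
print(np.isclose(frame_similarity(frames[0], frames[1], pool="mean"), 1 / L))  # True
```

Near-duplicate frames concentrate each query's attention on its matching token, so $s_i$ is close to 1; across a scene cut the attention spreads out and $s_i$ drops. A practical variant would also keep frame 0 so that the opening scene is represented.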

4.3. Multimodal Attention Fusion

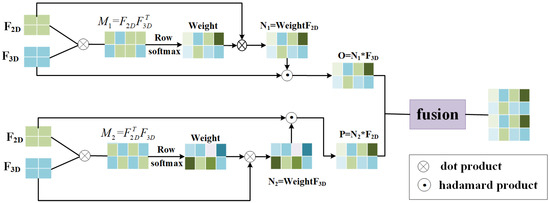

A video is composed of a series of scenes or segments organized sequentially over time, and semantic relationships exist between these segments: the current segment may depend on information conveyed by the previous one, or it may be highly correlated with subsequent ones. This reflects the interdependence of video segments and their contextual relationships [31,32,33]. In real-world scenarios, different types of representational information are strongly complementary. Leveraging multi-source information can uncover latent signals and offers both consistency and complementarity across perspectives: consistency refers to shared, common information, while complementarity refers to independent or mutually exclusive information [34,35]. We therefore extract, for each video, 2D CNN features using the ResNet152 network and 3D CNN features using the I3D network. A Self-Attention Embedding module captures the representational information of each individual modality, yielding the self-attention-enhanced single-modality representations $F_{2D}$ and $F_{3D}$. Because $F_{2D}$ and $F_{3D}$ are produced by the Self-Attention Embedding module, they already incorporate the contextual information of their respective modalities. To integrate these two types of visual information, we propose a dual-modality attention fusion mechanism, whose structure is illustrated in Figure 3.

Figure 3.

Multimodal attention fusion. The network architecture demonstrates our proposed dual-modality attention fusion mechanism designed to integrate 2D and 3D visual representational information. The architecture processes two input feature streams: F2D (2D CNN features extracted using ResNet152) and F3D (3D CNN features extracted using I3D network), both enhanced by the Self-Attention Embedding module. The fusion process employs attention weight computation through matrix operations, followed by row-wise softmax normalization to generate attention distributions. The mechanism utilizes both dot product and Hadamard product operations to capture different types of feature interactions between the two modalities. The final fused representation combines the contextual information from both 2D spatial features and 3D spatiotemporal features.

To achieve dual-modality fusion, we first compute a pair of matching matrices from the two single-modality representations to capture their interaction information, as shown in Equation (6).

Second, the attention distribution weights are obtained by applying the softmax function to the matching matrices, as shown in Equation (7). These weights represent the correlation between the 2D CNN and 3D CNN features extracted from the video; a higher weight indicates a stronger interaction between the two types of visual features, underscoring the significance of the fused information.

The attention distribution weights are then multiplied with the single-modality feature matrices to obtain the two attention representation matrices, as defined in Equation (8).

Finally, a multiplicative gating mechanism performs element-wise multiplication between each attention representation and the other single-modality representation, producing the intermodality attention matrices O and P, as defined in Equation (9). O and P are then combined to form the dual-modality attention fusion feature, as described in Equation (10).
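Because Equations (6) to (10) are not reproduced in this text, the following is only a hypothetical sketch of the fusion flow. It assumes that the matching matrices are the dot products $F_{2D} F_{3D}^{\top}$ and its transpose, that both streams share the same length $T$ and width $d$ after the Self-Attention Embedding, and that O and P are combined by concatenation; all of these choices are illustrative.

```python
import numpy as np


def softmax_rows(s):
    s = s - s.max(axis=1, keepdims=True)
    e = np.exp(s)
    return e / e.sum(axis=1, keepdims=True)


def dual_modality_fusion(f2d, f3d):
    """Hypothetical sketch of the dual-modality attention fusion.

    f2d, f3d: (T, d) self-attention-enhanced 2D and 3D visual features.
    """
    m_23 = f2d @ f3d.T              # matching matrix: every 2D step vs. every 3D step
    m_32 = m_23.T                   # matching matrix in the other direction
    alpha_23 = softmax_rows(m_23)   # row-wise attention distributions
    alpha_32 = softmax_rows(m_32)
    r_2d = alpha_23 @ f3d           # attention representation built from 3D features
    r_3d = alpha_32 @ f2d           # attention representation built from 2D features
    o = r_2d * f2d                  # multiplicative gating with the other modality
    p = r_3d * f3d
    return np.concatenate([o, p], axis=-1)  # assumption: "combine" = concatenation


rng = np.random.default_rng(0)
T, d = 8, 32
f2d = rng.standard_normal((T, d))  # stand-in for ResNet152 features after self-attention
f3d = rng.standard_normal((T, d))  # stand-in for I3D features after self-attention
fused = dual_modality_fusion(f2d, f3d)
print(fused.shape)  # (8, 64)
```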

4.4. Synergetic Attention State Mamba

4.4.1. Preliminary

Recently developed SSM-based models [36,37,38], including Structured State Space Sequence Models (S4) and Mamba, build their core mechanisms on the theory of classical continuous-time linear systems. Such a system uses an intermediate latent state $h(t) \in \mathbb{R}^{N}$ as a bridge that maps a one-dimensional input function or sequence $x(t) \in \mathbb{R}$ to an output $y(t) \in \mathbb{R}$. This process can be written as a linear Ordinary Differential Equation (ODE), as described in Equations (11) and (12):

$$ h'(t) = \mathbf{A} h(t) + \mathbf{B} x(t) \tag{11} $$

$$ y(t) = \mathbf{C} h(t) \tag{12} $$

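where $\mathbf{A} \in \mathbb{R}^{N \times N}$ represents the state matrix, while $\mathbf{B} \in \mathbb{R}^{N \times 1}$ and $\mathbf{C} \in \mathbb{R}^{1 \times N}$ denote the projection parameters.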

Because deep neural networks process discrete sequence data, the continuous-time system must be discretized. Following the Zero-Order Hold (ZOH) rule, the matrix exponential is used to discretize the system, as defined in Equations (13) and (14):

$$ \bar{\mathbf{A}} = \exp(\Delta \mathbf{A}) \tag{13} $$

$$ \bar{\mathbf{B}} = (\Delta \mathbf{A})^{-1}\left(\exp(\Delta \mathbf{A}) - \mathbf{I}\right) \cdot \Delta \mathbf{B} \tag{14} $$

where the discretization time step $\Delta$ is a critical parameter that directly determines how faithfully the system dynamics are preserved. The discretized state space equation then becomes the following recurrence:

$$ h_t = \bar{\mathbf{A}} h_{t-1} + \bar{\mathbf{B}} x_t, \qquad y_t = \mathbf{C} h_t $$

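Notably, modern SSM architectures exploit an equivalence between the recursive and convolutional forms of this computation: the system's impulse response can be represented as a structured convolution kernel,

$$ \bar{\mathbf{K}} = \left(\mathbf{C}\bar{\mathbf{B}},\ \mathbf{C}\bar{\mathbf{A}}\bar{\mathbf{B}},\ \ldots,\ \mathbf{C}\bar{\mathbf{A}}^{\ell-1}\bar{\mathbf{B}}\right), \qquad y = x * \bar{\mathbf{K}}, $$

where $\ell$ is the length of the input sequence $x$ and $*$ denotes convolution.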
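The ZOH discretization and the recurrence/convolution equivalence can be checked numerically. This sketch assumes a diagonal state matrix (as in S4D and Mamba, which makes the matrix exponential element-wise), a single input channel, and fixed, time-invariant $\Delta$, $\mathbf{B}$, and $\mathbf{C}$. With Mamba's input-dependent parameters the convolutional form no longer applies, and the recurrence is instead evaluated with a parallel scan.

```python
import numpy as np


def zoh_discretize(a_diag, b, delta):
    """ZOH for a diagonal state matrix A = diag(a_diag) (a_diag must be nonzero)."""
    da = delta * a_diag
    a_bar = np.exp(da)                        # Eq. (13), element-wise for diagonal A
    b_bar = (a_bar - 1.0) / da * (delta * b)  # Eq. (14): (dA)^{-1} (exp(dA) - I) dB
    return a_bar, b_bar


def ssm_recurrent(x, a_bar, b_bar, c):
    """h_t = A_bar h_{t-1} + B_bar x_t,  y_t = C h_t  (with h_{-1} = 0)."""
    h = np.zeros_like(a_bar)
    ys = []
    for x_t in x:
        h = a_bar * h + b_bar * x_t
        ys.append(c @ h)
    return np.array(ys)


def ssm_convolution(x, a_bar, b_bar, c):
    """Same output via the kernel K_bar = (C B_bar, C A_bar B_bar, ..., C A_bar^{T-1} B_bar)."""
    T = len(x)
    kernel = np.array([c @ (a_bar ** k * b_bar) for k in range(T)])
    # causal convolution: y_t = sum_{k=0..t} K_bar[k] * x_{t-k}
    return np.array([kernel[: t + 1] @ x[t::-1] for t in range(T)])


rng = np.random.default_rng(0)
N, T = 4, 10
a_diag = -np.linspace(0.5, 2.0, N)  # negative entries keep the system stable
b = rng.standard_normal(N)
c = rng.standard_normal(N)
a_bar, b_bar = zoh_discretize(a_diag, b, delta=0.1)
x = rng.standard_normal(T)
print(np.allclose(ssm_recurrent(x, a_bar, b_bar, c), ssm_convolution(x, a_bar, b_bar, c)))  # True
```

The recurrent form suits step-by-step inference, while the convolutional form lets training process a whole sequence at once; both produce the same outputs for a time-invariant system.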

4.4.2. SASM Block

Recent studies have validated the strong performance of the Mamba architecture across multiple application domains. Yang et al. proposed the SMamba framework [39], which addresses real-time challenges in event-driven object detection by introducing sparsification mechanisms, providing a new technical pathway for high-frequency visual processing tasks. In neuroimaging, Kannan et al. designed the BrainMT hybrid architecture [40], which integrates Mamba's sequential modeling advantages with Transformer attention mechanisms to model long-range spatiotemporal dependencies in functional magnetic resonance imaging data. Xiao et al. constructed a frequency domain-enhanced Mamba model [41] that significantly improves remote sensing image super-resolution, providing evidence for the effectiveness of combining frequency-domain priors with State Space Models. Cui et al. proposed the MGCM framework [42], which advances cancer prognosis analysis by deeply integrating multimodal graph convolution with the Mamba architecture.

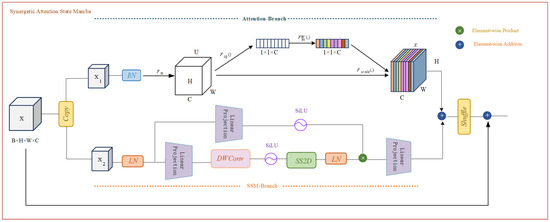

Although the aforementioned studies demonstrate that SSMs achieve efficient long-sequence modeling through their elegant mathematical formulation, their fixed linear transformation mechanism constrains the model’s adaptive processing capabilities for input features. In video content understanding scenarios, different visual feature channels carry heterogeneous semantic information. For instance, specific channels may focus on capturing object details, while others are more oriented toward scene layout or motion patterns. Traditional SSMs lack the perceptual capability for such feature heterogeneity, rendering them unable to achieve dynamic feature selection and weight allocation, thereby limiting their performance potential in complex visual tasks. Based on this observation, we propose the Synergetic Attention State Mamba (SASM) module, which achieves synergetic optimization of channel attention and spatial state modeling through a dual-branch parallel architecture. The pseudocode of the algorithm is presented in Algorithm 2.

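| Algorithm 2 Synergetic Attention State Mamba Block |
|---|
| **Input:** input features $X \in \mathbb{R}^{C \times H \times W}$; reduction ratio $r$ |
| **Output:** Output tensor $Y \in \mathbb{R}^{C \times H \times W}$ |
| **Steps:** |
| 1. Attention-Branch: $z = \mathrm{GAP}(X)$; $A = \sigma(W_2\,\mathrm{SiLU}(W_1 z))$. |
| 2. SSM-Branch: $X' = \mathrm{DWConv}(\mathrm{Linear}(\mathrm{LN}(X)))$; $X_{\text{ssm}} = \mathrm{SS2D}(X')$. |
| 3. Fusion: $Y = A \odot X_{\text{ssm}}$. |
| 4. Return $Y$. |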

As illustrated in Figure 4, the SASM module receives input features $X \in \mathbb{R}^{C \times H \times W}$ and feeds them simultaneously into the Attention-Branch and the SSM-Branch for parallel processing. The Attention-Branch models channel-level feature importance. It first compresses the spatial dimensions through global average pooling, $z = \mathrm{GAP}(X)$ with $z_c = \frac{1}{HW}\sum_{h=1}^{H}\sum_{w=1}^{W} X_{c,h,w}$, which aggregates the spatial information of each channel into a single global descriptor.

Figure 4.

Synergetic Attention State Mamba block. This demonstrates the proposed Synergetic Attention State Mamba (SASM) module, which adopts a dual-branch parallel architecture to achieve synergetic optimization of channel attention and spatial state modeling. Unlike traditional SSMs with fixed linear transformation mechanisms, the SASM module integrates dynamic feature selection and weight allocation capabilities to handle heterogeneous semantic information across different visual feature channels.

This global pooling captures the statistics of each channel over the entire spatial domain, providing a compact yet informative representation for the subsequent channel importance assessment. A bottleneck of two linear projections with a SiLU activation then learns nonlinear dependencies among channels:

$$ A = \sigma\left(W_2\,\mathrm{SiLU}(W_1 z)\right) $$

where $W_1 \in \mathbb{R}^{(C/r) \times C}$, $W_2 \in \mathbb{R}^{C \times (C/r)}$, and $r$ is the reduction ratio. The first projection reduces the dimensionality (setting $r > 1$), which lowers the parameter count and can improve generalization, while the SiLU activation provides smoother gradients than the conventional ReLU. The second projection restores the original channel count, and the Sigmoid function $\sigma$ constrains the outputs to the range $(0, 1)$, yielding normalized channel attention weights $A \in \mathbb{R}^{C}$. This moderate information bottleneck keeps model complexity under control and encourages the network to learn robust, semantically rich inter-channel relationships, so that the importance of each channel can be quantified and adjusted dynamically.
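A NumPy sketch of the Attention-Branch follows, assuming a single $C \times H \times W$ input without a batch axis and omitting bias terms; the random matrices are stand-ins for the learned $W_1$ and $W_2$.

```python
import numpy as np


def silu(x):
    return x / (1.0 + np.exp(-x))


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(x, w1, w2):
    """Attention-Branch sketch. x: (C, H, W); w1: (C//r, C); w2: (C, C//r). No biases."""
    z = x.mean(axis=(1, 2))            # global average pooling -> (C,)
    return sigmoid(w2 @ silu(w1 @ z))  # bottleneck C -> C//r -> C, weights in (0, 1)


rng = np.random.default_rng(0)
C, H, W, r = 64, 8, 8, 4
x = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
a = channel_attention(x, w1, w2)
print(a.shape, bool(((a > 0) & (a < 1)).all()))  # (64,) True
```

With $r = 4$ the two projections hold $2C^2/r = 2048$ weights, half of the $C^2 = 4096$ a single full-width $C \times C$ layer would need.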

The SSM-Branch models long-range spatial dependencies efficiently. Input features first undergo layer normalization (LN), which standardizes the features and stabilizes training, ensuring numerical stability for the subsequent state space modeling. The normalized features are then linearly projected into a representation space suited to state space processing.

The local spatial receptive field is then enhanced through depthwise separable convolution (DWConv), giving $X' = \mathrm{DWConv}(\mathrm{Linear}(\mathrm{LN}(X)))$.

Depthwise separable convolution has lower computational complexity than standard convolution while still capturing local spatial patterns effectively, providing rich local context for the subsequent global state space modeling. This local–global hierarchical strategy lets the model perceive fine-grained spatial details while establishing long-range spatial dependencies. Finally, the transformed features are fed into the two-dimensional state space module (SS2D) to model long-range spatial dependencies.
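The efficiency claim for depthwise separable convolution comes down to parameter counting. The helper names below are illustrative, biases are ignored, and 96 channels is an arbitrary example. For a $k \times k$ kernel the separable form needs $1/C_{\text{out}} + 1/k^2$ of the standard parameters, and the same ratio holds for multiply-adds per output position.

```python
def standard_conv_params(c_in, c_out, k):
    return c_in * c_out * k * k  # one k x k filter per (input, output) channel pair


def depthwise_separable_params(c_in, c_out, k):
    depthwise = c_in * k * k     # one k x k filter per input channel
    pointwise = c_in * c_out     # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


c, k = 96, 3
print(standard_conv_params(c, c, k))        # 82944
print(depthwise_separable_params(c, c, k))  # 10080 (864 depthwise + 9216 pointwise)
```

Here the separable form uses about 12% of the standard parameters ($1/96 + 1/9 \approx 0.12$).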

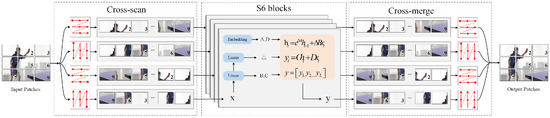

SS2D captures inter-frame temporal dependencies while inheriting the linear complexity of S6, which is crucial for generating accurate and coherent video captions. SS2D comprises three components: the Temporal Scanning and Merging operation (TSM), the visual S6 block, and the feature fusion operation. Figure 5 intuitively illustrates the internal mechanism of SS2D in video caption generation. Specifically, the temporal scanning and merging operation initially unfolds the video frame sequence into visual feature streams along four distinct directions (forward temporal, backward temporal, intra-scene keyframes, and scene transition points). Subsequently, the visual S6 block processes all sequences to extract spatiotemporal features, ensuring comprehensive capture of dynamic changes and scene information within the video content. Finally, the feature fusion operation integrates the output features from the four directions, constructing rich visual-semantic representations to generate accurate caption descriptions that are highly aligned with the video’s visual content.
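Figure 5's caption describes a cross-scan over horizontal and vertical directions followed by a cross-merge. The sketch below implements that common VMamba-style arrangement (row-major, column-major, and both reversed); it does not reproduce the temporal scan directions listed above, and `causal_scan` is a hypothetical stand-in for the learned S6 block.

```python
import numpy as np


def cross_scan(x):
    """x: (C, H, W) -> (4, C, H*W): row-major, column-major, and both reversed."""
    c = x.shape[0]
    rows = x.reshape(c, -1)                     # left->right, then top->bottom
    cols = x.transpose(0, 2, 1).reshape(c, -1)  # top->bottom, then left->right
    return np.stack([rows, cols, rows[:, ::-1], cols[:, ::-1]])


def cross_merge(y, h, w):
    """Undo each scan order and sum the four sequences back into (C, H, W)."""
    c = y.shape[1]
    rows = y[0] + y[2][:, ::-1]                      # both back in row-major order
    cols = y[1] + y[3][:, ::-1]                      # both back in column-major order
    cols = cols.reshape(c, w, h).transpose(0, 2, 1)  # column-major -> (C, H, W)
    return rows.reshape(c, h, w) + cols


def causal_scan(seq, decay=0.9):
    """Hypothetical S6 stand-in: h_t = decay * h_{t-1} + x_t along the last axis."""
    out = np.empty_like(seq)
    h = np.zeros(seq.shape[:-1])
    for t in range(seq.shape[-1]):
        h = decay * h + seq[..., t]
        out[..., t] = h
    return out


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 5, 6))
print(np.allclose(cross_merge(cross_scan(x), 5, 6), 4 * x))  # True: each pixel counted four times
z = cross_merge(causal_scan(cross_scan(x)), 5, 6)
print(z.shape)  # (8, 5, 6)
```

Because the forward and reversed row-major scans together cover every position before and after a pixel, each merged output receives context from the whole frame while the cost stays linear in $HW$.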

Figure 5.

The internal modeling process of SS2D. This demonstrates the internal modeling process of the SS2D (two-dimensional state space) module, which extends traditional one-dimensional State Space Models to two-dimensional space to effectively capture long-range dependencies across spatial domains. The architecture demonstrates the cross-scan mechanism that processes input video frames in multiple directions, followed by S6 blocks that maintain recursive updates of hidden states in both horizontal and vertical directions. The process includes cross-merge operations that integrate features from different scanning directions. Unlike self-attention mechanisms with quadratic complexity $O((HW)^2)$, the SS2D design achieves linear time complexity $O(HW)$, significantly reducing computational overhead and making it particularly suitable for rapid processing of large batches of video frames.

The SS2D module (Figure 5) extends traditional one-dimensional State Space Models to two dimensions. By recursively updating hidden states along both the horizontal and vertical directions, it captures long-range dependencies across the entire spatial domain in linear time. Compared with the quadratic complexity of self-attention, this design substantially reduces computational overhead, making it particularly suitable for processing high-resolution video frames. The collaborative fusion mechanism then integrates the features of the two branches through element-wise multiplication.

where ⊙ denotes the element-wise multiplication operation. This fusion strategy enables attention weights A to perform dynamic weighting of spatial features from the SSM-Branch along the channel dimension, achieving channel-sensitive spatial feature selection. Specifically, spatial features of important channels are amplified while contributions from redundant channels are suppressed, thereby enhancing the discriminative capability and semantic consistency of the overall feature representation. This gating mechanism effectively combines the selectivity of attention with the temporal modeling capability of State Space Models, achieving complementary advantages.
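The channel-wise gating described above can be sketched in Python. As assumptions made for illustration only, the attention branch produces its per-channel weights A through a squeeze-and-excitation-style path (global average pooling over positions, one linear layer, and a sigmoid), features are stored as a channels × positions list, and A is broadcast across positions before the element-wise product with the SSM-branch features. The function names and weights are hypothetical, and the paper's attention branch may be structured differently.

```python
import math
from typing import List

Features = List[List[float]]  # features[channel][position]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(x: Features, weights: List[List[float]], bias: List[float]) -> List[float]:
    """Hypothetical SE-style channel weights: average-pool each channel, apply a linear map, then a sigmoid."""
    pooled = [sum(ch) / len(ch) for ch in x]                      # squeeze: one value per channel
    return [sigmoid(sum(w * p for w, p in zip(row, pooled)) + b)   # excite: one gate in (0, 1) per channel
            for row, b in zip(weights, bias)]


def gated_fusion(attn_weights: List[float], ssm_features: Features) -> Features:
    """Element-wise product A ⊙ F_ssm, with each channel weight broadcast over all positions."""
    return [[a * v for v in ch] for a, ch in zip(attn_weights, ssm_features)]


if __name__ == "__main__":
    x = [[1.0, 3.0], [2.0, 2.0]]                          # input to the attention branch
    a = channel_attention(x, [[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0])
    print(gated_fusion(a, [[2.0, 4.0], [1.0, 3.0]]))
```

With all-zero weights and biases every gate equals sigmoid(0) = 0.5, so each SSM channel is scaled equally. Learned weights push gates toward 1 for informative channels and toward 0 for redundant ones, which produces the relative emphasis and suppression the text describes.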

4.5. Adaptive Attention Module

The Adaptive Attention Module is the core component connecting the multimodal video features with text generation in our video captioning framework. During caption generation, it dynamically computes attention weights between the hidden states of the LSTM decoder and the fused multimodal features F, enabling context-aware text generation that adaptively focuses on the relevant visual content at each decoding step. Specifically, in the decoding stage, an adaptive attention mechanism [43] combines the attention-fused visual features F with the hidden-state outputs of the parallel LSTM decoder [44] at each time step to produce attention-enhanced weights. This mechanism adaptively adjusts the relative contribution of the multimodal semantic hidden information and the adaptive attention outputs, so that the decoder focuses more effectively on the key content of the multimodal representations produced by the multi-feature fusion stage. The adaptive attention computation proceeds as follows:

First, Equations (23)–(25) compute the enhanced information for the adaptive attention mechanism, providing the fusion-mode attention information used by the adaptive attention module.

Here, the weight matrices in Equations (23)–(25) are learnable parameters, and F denotes the output feature vector of the modality attention fusion. To ensure dimensional alignment during the matrix addition in Equation (23), a vector whose elements all equal 1 is used for dimension adjustment, and the resulting quantity at time step t represents the feature of the dual-modality fused attention.

Second, the visual sentinel in Equation (26) adaptively regulates the output of the attention mechanism. During this regulation, the output is determined by whether it is more strongly influenced by the information reinforced through adaptive attention or by the hidden information captured through dual-modality fused attention.

The computation of the visual sentinel is defined in Equation (27).

Here, the sentinel gate is obtained from the last element of the softmax output, while the sentinel itself is derived from the fusion of the multimodal fused hidden information and the visual fused hidden information.

Unlike static attention, which treats all decoding steps uniformly, our adaptive attention accounts for the semantic evolution of the generated text to determine which visual aspects are most relevant for predicting the next word. This is achieved by conditioning the attention computation on the decoder’s hidden state, which encodes the context of the previously generated words.
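Because Equations (23)–(27) did not survive extraction, the sketch below follows the widely used visual-sentinel formulation of adaptive attention (Lu et al., 2017, "Knowing When to Look"), which matches the description above: the projected hidden state is shared across all feature positions (the role of the all-ones vector), the gate is the last element of a softmax over k + 1 scores, and the context is a gated mix of the sentinel and the attended features. That this matches the paper's exact equations is an assumption. The matrix names (`W_v`, `W_s`, `W_g`, `w`) are hypothetical, and the sentinel vector is taken as a given input rather than recomputed from Equation (27).

```python
import math
from typing import List, Tuple

Vec = List[float]
Mat = List[List[float]]


def matvec(m: Mat, v: Vec) -> Vec:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def softmax(z: Vec) -> Vec:
    top = max(z)                                   # subtract the max for numerical stability
    e = [math.exp(x - top) for x in z]
    total = sum(e)
    return [x / total for x in e]


def score(w: Vec, proj: Vec, hid: Vec) -> float:
    """Additive attention score w^T tanh(proj + hid)."""
    return sum(wi * math.tanh(p + q) for wi, p, q in zip(w, proj, hid))


def adaptive_attention(feats: List[Vec], sentinel: Vec, h: Vec,
                       W_v: Mat, W_s: Mat, W_g: Mat, w: Vec) -> Tuple[Vec, float]:
    """Return the sentinel-mixed context c_hat and the sentinel gate beta."""
    gh = matvec(W_g, h)                            # W_g h, reused at every position (the "1^T" broadcast)
    z = [score(w, matvec(W_v, f), gh) for f in feats]
    z_s = score(w, matvec(W_s, sentinel), gh)
    alpha_hat = softmax(z + [z_s])                 # k + 1 weights
    beta = alpha_hat[-1]                           # last element: weight placed on the sentinel
    alpha = softmax(z)                             # attention over the visual features only
    c = [sum(a * f[d] for a, f in zip(alpha, feats)) for d in range(len(sentinel))]
    c_hat = [beta * s + (1.0 - beta) * cd for s, cd in zip(sentinel, c)]
    return c_hat, beta
```

When `beta` is close to 1 the decoder relies mainly on the sentinel (its own language context), and when it is close to 0 it relies on the attended visual features, which is the adaptive behavior described in this section.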

5. Experimental Analysis

5.1. Experimental Platform

The model is first trained on a cloud server equipped with an Intel(R) Xeon(R) Gold 5118 CPU @ 2.30 GHz and an NVIDIA Tesla V100 GPU, and the final model is then deployed to an edge camera built on the NVIDIA Jetson TX2 to evaluate its runtime speed and performance. The Jetson TX2 combines a dual-core Denver 2 64-bit CPU with a quad-core ARM Cortex-A57 CPU complex, and its GPU is based on the NVIDIA Pascal architecture with 256 CUDA cores for parallel computing. The CPUs and the GPU share 8 GB of RAM. For model assessment, we employ the MSR-VTT and MSVD datasets, each of which comprises videos paired with human-annotated descriptive sentences (ground truth). For evaluation, we adopt the Bilingual Evaluation Understudy (BLEU) [45], the Recall-Oriented Understudy for Gisting Evaluation with Longest Common Subsequence (ROUGE-L) [46], the Metric for Evaluation of Translation with Explicit ORdering (METEOR) [47], and the Consensus-based Image Description Evaluation (CIDEr) [48]. The training platform and the experimental runtime environment are given in Table 1.
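To make the BLEU-4 metric concrete, the following Python sketch computes an unsmoothed sentence-level BLEU-4 for one candidate against several references: clipped n-gram precisions for n = 1 to 4, their geometric mean, and a brevity penalty based on the closest reference length. This is an illustration only. Captioning benchmarks usually report corpus-level scores from a standard evaluation toolkit, which aggregates counts over the whole test set, so this per-sentence function will not reproduce the numbers in Tables 2 and 3. The function names are hypothetical.

```python
import math
from collections import Counter
from typing import List


def ngrams(tokens: List[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu4(candidate: List[str], references: List[List[str]]) -> float:
    """Unsmoothed sentence-level BLEU-4 with clipped counts and a brevity penalty."""
    log_precision_sum = 0.0
    for n in range(1, 5):
        cand = ngrams(candidate, n)
        max_ref = Counter()
        for ref in references:
            max_ref |= ngrams(ref, n)            # keep the max count of each n-gram over references
        clipped = sum(min(c, max_ref[g]) for g, c in cand.items())
        total = max(sum(cand.values()), 1)
        if clipped == 0:
            return 0.0                            # geometric mean is zero without smoothing
        log_precision_sum += math.log(clipped / total) / 4
    c_len = len(candidate)
    # Closest reference length; ties are resolved toward the shorter reference.
    r_len = min((abs(len(r) - c_len), len(r)) for r in references)[1]
    bp = 1.0 if c_len > r_len else math.exp(1 - r_len / c_len)
    return bp * math.exp(log_precision_sum)


if __name__ == "__main__":
    ref = "a man is playing a guitar".split()
    print(sentence_bleu4("a man is playing".split(), [ref]))
```

In the usage example, all four n-gram precisions are 1, but the candidate has 4 words against a 6-word reference, so the score equals the brevity penalty exp(1 - 6/4).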

Table 1.

Experimental platform and environment configuration.

5.2. Data Preprocessing

The proposed model uses the MSVD [49] and MSR-VTT [26] datasets. First, the extracted video frames are formatted and resized to 224 × 224 pixels. Then, 2D CNN features are extracted for each frame using a ResNet model that incorporates channel attention. Next, 3D CNN features are extracted for each video using the pre-trained I3D model, which integrates optical flow and 3D spatial information. Finally, the audio features of each video are extracted using VGGish, a large-scale pre-trained model proposed by Google’s Speech Understanding team. VGGish is trained on the extensive AudioSet dataset [10] and offers strong generalization capabilities. For the textual descriptions, sentences are first tokenized with the Stanford NLP Toolkit and truncated to 20 words; punctuation is removed and all words are converted to lowercase. The vocabulary is then aggregated over all tokenized descriptions. The word frequency threshold is set to five: words exceeding the threshold are included in the vocabulary, and low-frequency words are excluded. The special tokens “<bos>”, “<eos>”, “<pad>”, and “<nan>” are added to the vocabulary. Finally, the vocabulary is numerically indexed, and each textual description is vectorized into a format suitable for computation.
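The caption preprocessing steps above can be sketched in Python. Several details are assumptions made only for illustration: whitespace splitting stands in for the Stanford NLP tokenizer, the special tokens receive the first four indices in the order shown, words are sorted alphabetically for deterministic indices, and out-of-vocabulary words are mapped to “<nan>” (the text does not state that token's role). The threshold follows the text: a word enters the vocabulary only if its count exceeds five.

```python
import string
from collections import Counter
from typing import Dict, List

SPECIAL_TOKENS = ["<pad>", "<bos>", "<eos>", "<nan>"]   # order and ids are an assumption
MAX_WORDS = 20
MIN_COUNT = 5   # a word must occur more than this many times ("exceeding the threshold")


def clean(sentence: str) -> List[str]:
    """Lowercase, strip punctuation, split on whitespace, and truncate to 20 words."""
    no_punct = sentence.lower().translate(str.maketrans("", "", string.punctuation))
    return no_punct.split()[:MAX_WORDS]


def build_vocab(captions: List[str]) -> Dict[str, int]:
    counts = Counter(w for c in captions for w in clean(c))
    words = sorted(w for w, n in counts.items() if n > MIN_COUNT)
    return {tok: i for i, tok in enumerate(SPECIAL_TOKENS + words)}


def encode(sentence: str, vocab: Dict[str, int]) -> List[int]:
    """Wrap with <bos>/<eos>; unknown words map to <nan> (an assumed role for that token)."""
    ids = [vocab.get(w, vocab["<nan>"]) for w in clean(sentence)]
    return [vocab["<bos>"]] + ids + [vocab["<eos>"]]


if __name__ == "__main__":
    vocab = build_vocab(["A man is cooking."] * 6 + ["A cat sleeps."])
    print(encode("A cat is cooking!", vocab))
```

Padding each encoded sequence with “<pad>” to a fixed length for batching would be the natural next step; the text does not specify how that is done.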

5.3. Experimental Parameter Settings

In the Self-Attention Embedding layer, the pooling kernel size is set to 3 × 3, and the output dimensions of the adaptive attention mechanism and other network components are all set to 1024.

During training, we use the Adam optimizer with a batch size of 64 and an initial learning rate of 0.0001. The validation score is checked every 100 iterations, and the learning rate is multiplied by 0.1 if the score has not improved for 20 epochs. Gradient clipping is applied during backpropagation to prevent gradient explosion. In the description generation phase, dropout with a rate of 0.5 is applied, randomly deactivating neurons during training. This reduces the interdependence among neurons in the video description model, improving its generalization capability and preventing overfitting.
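The learning-rate decay and gradient clipping described above can be sketched in Python. Because the text states the patience as 20 epochs while the score is checked every 100 iterations, and the dataset-dependent mapping between the two is not given, the sketch counts patience in validation checks and leaves it as a parameter; the default of 20 is a placeholder. The clipping threshold `max_norm` is also not given in the text and is hypothetical. Class and function names are illustrative.

```python
import math
from typing import List


class PlateauDecay:
    """Multiply the learning rate by `factor` when the validation score stops improving."""

    def __init__(self, lr: float = 1e-4, factor: float = 0.1, patience: int = 20):
        self.lr = lr
        self.factor = factor
        self.patience = patience   # validation checks without improvement (placeholder value)
        self.best = float("-inf")
        self.stale = 0

    def step(self, score: float) -> float:
        """Record one validation score (higher is better) and return the current learning rate."""
        if score > self.best:
            self.best, self.stale = score, 0
        else:
            self.stale += 1
            if self.stale >= self.patience:
                self.lr *= self.factor
                self.stale = 0     # restart the patience window after each decay
        return self.lr


def clip_by_global_norm(grads: List[float], max_norm: float) -> List[float]:
    """Rescale gradients so their L2 norm is at most max_norm (threshold not given in the text)."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm or norm == 0.0:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]
```

Frameworks provide equivalent utilities (for example, a reduce-on-plateau scheduler and norm-based gradient clipping); the sketch only makes the decay rule and the clipping arithmetic explicit.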

5.4. Analysis of Experimental Results

To validate the effectiveness of the proposed video description model, we compare it against state-of-the-art models, including OA-BTG, POS + VCT, ORG-TRL (IRV2 + C3D), and SGN, using the BLEU-4, ROUGE-L, METEOR, and CIDEr metrics. The quantitative results are presented in Table 2 and Table 3, and qualitative examples are shown in Figure 6.

Table 2.

Comparative experiments of models on the MSVD dataset.

Table 3.

Comparative experiments of models on the MSR-VTT dataset.

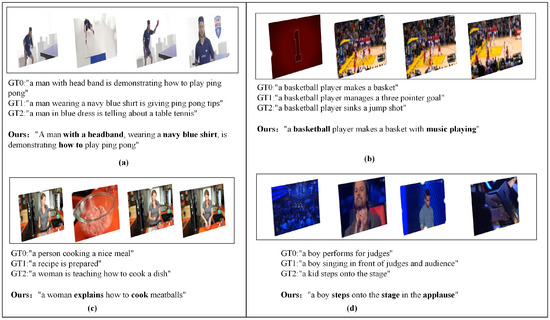

Figure 6.

Test examples of the model. Representative test examples across different video content scenarios. Each example (a–d) shows sequential video frames together with multiple ground-truth (GT) descriptions and the description generated by the model. The comparison between ground-truth annotations and model outputs illustrates the system’s ability to generate contextually appropriate and semantically accurate video descriptions across different domains and activity types.

From the perspective of short video description, although the MSVD dataset features shorter videos and relatively simple scenes, it still places high demands on the model’s ability to generate accurate descriptions and capture core actions and scene elements. As shown in Table 2, the proposed model (“ours”) achieves the highest or near-highest scores on BLEU-4, METEOR, ROUGE-L, and CIDEr, surpassing advanced methods such as ViT/L14 and ORG-TRL. Notably, “ours” shows a clear advantage on METEOR and CIDEr, which place more emphasis on semantic and content matching. This indicates that the model comprehends video actions and semantic information well, enabling precise descriptions of key content. Moreover, compared with alternative methods that require complex features or external knowledge augmentation, “ours” achieves robust performance improvements under identical or similar feature conditions, suggesting that its multimodal feature fusion and temporal-context modeling strategies effectively capture the core actions, events, and semantic information in short video scenarios.

The MSR-VTT dataset covers more diverse scenes and richer multimodal information, with longer and more complex videos, placing greater demands on a model’s ability to integrate temporal modeling, action recognition, scene understanding, and language generation. As shown in Table 3, “ours” again achieves top-tier or leading results on BLEU-4, METEOR, ROUGE-L, and CIDEr. In particular, on BLEU-4 and CIDEr, where performance differences reflect a model’s ability to capture critical semantic words and video attributes, “ours” outperforms other prominent methods, demonstrating its strength in temporal dependency modeling and visual–semantic alignment. Compared with methods that also rely on visual features or knowledge graphs (e.g., ViT/L14, TextKG, and STLF), the proposed model achieves more effective cross-modal information flow through improved multimodal feature fusion, temporal clue extraction, and language decoding strategies, balancing the diversity of video content with the accuracy of the generated language.

To validate the applicability of the proposed model in practical IoT edge computing environments, we deployed the trained model on an edge device for inference testing under resource-constrained conditions. As shown in Table 4, our model is more efficient on the NVIDIA Jetson TX2 than existing state-of-the-art approaches. Specifically, it has the lowest memory footprint, 2.97 GB, a reduction of 7.2% and 4.2% relative to Gated-ViGAT (3.2 GB) and SLSMedvc (3.1 GB), respectively. It also has the fastest inference time, 0.3000 s, which is 13.9% and 2.9% lower than that of Gated-ViGAT (0.3483 s) and SLSMedvc (0.3090 s), respectively.
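The percentages quoted above are relative reductions, (baseline - ours) / baseline × 100, computed from the Table 4 values. The short Python check below reproduces them using only the numbers stated in this paragraph; the function name is illustrative.

```python
def relative_reduction(ours: float, baseline: float) -> float:
    """Percentage by which `ours` is lower than `baseline`."""
    return (baseline - ours) / baseline * 100.0


# Values stated in the text for the NVIDIA Jetson TX2 (Table 4).
MEMORY_GB = {"Gated-ViGAT": 3.2, "SLSMedvc": 3.1}
LATENCY_S = {"Gated-ViGAT": 0.3483, "SLSMedvc": 0.3090}

if __name__ == "__main__":
    for name, base in MEMORY_GB.items():
        print(f"memory vs {name}: {relative_reduction(2.97, base):.1f}%")
    for name, base in LATENCY_S.items():
        print(f"latency vs {name}: {relative_reduction(0.3000, base):.1f}%")
```

Running it prints 7.2% and 4.2% for memory and 13.9% and 2.9% for latency, matching the figures in the text.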

Table 4.

Performance comparison of models on edge device NVIDIA Jetson TX2.

These improvements are particularly important for edge deployment, where computational resources are constrained and real-time processing is critical. Compared with Vision Transformer-based approaches, our Mamba-based architecture uses computation and memory more efficiently on edge devices. ViT architectures face computational and memory bottlenecks on long sequences because self-attention scales quadratically with sequence length, whereas our approach mitigates this issue through state space modeling with linear complexity. The Synergetic Attention State Mamba Block maintains strong representational capacity while substantially reducing computational overhead, making it well suited to practical edge computing applications in video understanding.

To comprehensively evaluate the proposed model, we implemented multiple versions of the network in the same experimental environment. These versions integrate different functional modules, including multimodal data support, uniform frame sampling, cross-attention frame selection, miniGPT-based visual localization, and adaptive attention. Ablation experiments on the same datasets allow us to examine each module’s contribution to overall performance and its impact on description quality. Table 5 and Table 6 report the performance of each configuration on the evaluation metrics, providing a basis for subsequent model selection and optimization.

Table 5.

Ablation study of the model on the MSVD dataset.

Table 6.

Ablation study of the model on the MSR-VTT dataset.

Component Contribution Analysis: The ablation results show that the components contribute to performance in a distinct progressive pattern. The miniGPT method, built on a large-scale pre-trained language model, outperforms the traditional cross-attention mechanism on both benchmarks (MSVD: BLEU-4 59.2 vs. 58.1; MSR-VTT: BLEU-4 40.3 vs. 39.2), indicating the benefit of large-scale pre-trained language models. Combining the visual localization frame selection strategy with the miniGPT framework yields a clear synergistic effect (MSR-VTT: BLEU-4 rises from 40.3 to 42.3), and adding the adaptive attention mechanism further improves performance, particularly in combination with the miniGPT architecture (a 9.4% BLEU-4 improvement on MSR-VTT). Although the multi-component configuration already performs well, the final integration of the proposed SASM module achieves the best results on all evaluation metrics, reaching BLEU-4 61.6 and CIDEr 123.9 on MSVD, and BLEU-4 45.1 and ROUGE-L 63.8 on MSR-VTT. These results indicate significant positive synergy among the proposed components.

Cross-Dataset Performance Analysis: The results on the two benchmarks show how the proposed model adapts to video content of different complexity. Overall performance on MSVD is substantially higher than on MSR-VTT (baseline configuration BLEU-4: 58.1 vs. 39.2), reflecting the inherent difference in video–text alignment difficulty between the two datasets. Advanced components contribute relatively more on MSR-VTT, the more challenging dataset. For instance, combining the adaptive attention mechanism with miniGPT improves BLEU-4 by 9.4% on MSR-VTT but by only 2.2% on MSVD, indicating that complex datasets better reveal the value of advanced components. Likewise, the SASM component in a simplified configuration yields a notable gain on MSR-VTT (a 3.7% BLEU-4 improvement over the baseline miniGPT), further supporting its capability on complex video content. SASM also provides consistent gains across configuration combinations, improving CIDEr by 1.9% on MSVD and ROUGE-L by 1.3% on MSR-VTT. These findings support the value of SASM as the final refinement component of the architecture for video description tasks.

6. Concluding Remarks

In this work, we present EdgeVidCap, a lightweight video captioning model tailored explicitly for resource-constrained IoT edge cameras. Our approach addresses the critical challenge of deploying sophisticated video understanding algorithms on edge devices while maintaining competitive performance. The key innovation lies in the Synergetic Attention State Mamba (SASM) module, which synergistically combines channel attention mechanisms with State Space Models to achieve efficient spatiotemporal feature modeling. Additionally, our adaptive attention-guided LSTM decoder dynamically adjusts feature weights to generate semantically rich and contextually accurate textual descriptions. Comprehensive experiments on MSR-VTT and MSVD datasets demonstrate that EdgeVidCap achieves competitive performance compared to state-of-the-art methods while significantly reducing computational complexity and parameter count, making it well-suited for real-time deployment on IoT edge devices.

Nevertheless, several limitations warrant consideration. The lightweight architecture may struggle with highly complex scenes involving numerous objects or rapid transitions, and the model’s ability to maintain contextual coherence across extremely long video sequences remains constrained by the inherent limitations of State Space Models for extended temporal modeling. Additionally, certain content types such as abstract videos or highly technical domains may challenge the current framework’s representational capacity. Looking ahead, extending our framework to multi-lingual caption generation and incorporating domain-specific knowledge for specialized applications such as surveillance or autonomous driving is a valuable direction. Finally, developing more sophisticated attention mechanisms that can better capture fine-grained temporal dynamics and object interactions in complex video scenarios remains an important area for future exploration. These developments will continue to advance the practical deployment of intelligent video understanding systems in edge computing environments.

Author Contributions

Methodology, writing—original draft, L.G. and X.L.; software, J.W. and P.Z.; investigation, J.X., L.L. and Y.H.; writing—review and editing, B.Y., Q.Z. and K.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key R&D Program of China, under Grant Nos. 2023YFB4503903 and 2020YFC0832500; the National Natural Science Foundation of China, under Grant Nos. U22A20261 and 61402210; the Gansu Province Science and Technology Major Project—Industrial Project, under Grant Nos. 22ZD6GA048 and 23ZDGA006; the HY-Project, under Grant No. 4E49EFF3; the Gansu Province Key Research and Development Plan—Industrial Project, under Grant No. 22YF7GA004; the Gansu Provincial Science and Technology Major Special Innovation Consortium Project, under Grant No. 21ZD3GA002; the Fundamental Research Funds for the Central Universities, under Grant Nos. lzujbky-2024-jdzx15, lzujbky-2022-kb12, lzujbky-2021-sp43, lzujbky-2020-sp02, and lzujbky-2019-kb51; the Open Project of Gansu Provincial Key Laboratory of Intelligent Transportation, under Grant No. GJJ-ZH-2024-002; and the Science and Technology Plan of Qinghai Province, under Grant No. 2020-GX-164; 2023 China Higher Education Institutions Industry-Academia-Research Innovation Fund for Digital Intelligence and Educational Projects No. 2023RY020.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Correction Statement

This article has been republished with a minor correction to the Data Availability Statement. This change does not affect the scientific content of the article.

References

- Aafaq, N.; Mian, A.; Liu, W.; Gilani, S.Z.; Shah, M. Video description: A survey of methods, datasets, and evaluation metrics. ACM Comput. Surv. 2019, 52, 1–37. [Google Scholar] [CrossRef]

- Han, D.; Shi, J.; Zhao, J.; Wu, H.; Zhou, Y.; Li, L.-H.; Khan, M.K.; Li, K.-C. LRCN: Layer-residual Co-Attention Networks for visual question answering. Expert Syst. Appl. 2025, 263, 125658. [Google Scholar] [CrossRef]

- Chen, S.; Jin, Q. Multi-modal conditional attention fusion for dimensional emotion prediction. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 571–575. [Google Scholar]

- Huang, S.-H.; Lu, C.-H. Sequence-Aware Learnable Sparse Mask for Frame-Selectable End-to-End Dense Video Captioning for IoT Smart Cameras. IEEE Internet Things J. 2023, 11, 13039–13050. [Google Scholar] [CrossRef]

- Lu, C.-H.; Fan, G.-Y. Environment-aware dense video captioning for IoT-enabled edge cameras. IEEE Internet Things J. 2021, 9, 4554–4564. [Google Scholar] [CrossRef]

- Venugopalan, S.; Rohrbach, M.; Donahue, J.; Mooney, R.; Darrell, T.; Saenko, K. Sequence to sequence-video to text. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4534–4542. [Google Scholar]

- Zhao, B.; Gong, M.; Li, X. Hierarchical multimodal transformer to summarize videos. Neurocomputing 2022, 468, 360–369. [Google Scholar] [CrossRef]

- Pan, Y.; Yao, T.; Li, Y.; Mei, T. X-linear attention networks for image captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10971–10980. [Google Scholar]

- Zhu, D.; Chen, J.; Shen, X.; Li, X.; Elhoseiny, M. Minigpt-4: Enhancing vision-language understanding with advanced large language models. arXiv 2023, arXiv:2304.10592. [Google Scholar]

- Gemmeke, J.F.; Ellis, D.P.W.; Freedman, D.; Jansen, A.; Lawrence, W.; Moore, R.C.; Plakal, M.; Ritter, M. Audio set: An ontology and human-labeled dataset for audio events. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 776–780. [Google Scholar]

- Hou, Y.; Zhou, Q.; Lv, H.; Guo, L.; Li, Y.; Duo, L.; He, Z. VLSG-net: Vision-Language Scene Graphs network for Paragraph Video Captioning. Neurocomputing 2025, 636, 129976. [Google Scholar] [CrossRef]

- Carreira, J.; Zisserman, A. Quo vadis, action recognition? A new model and the kinetics dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6299–6308. [Google Scholar]

- Liu, Y.; Sun, Y.; Chen, Z.; Feng, C.; Zhu, K. Global spatial-temporal information encoder-decoder based action segmentation in untrimmed video. Tsinghua Sci. Technol. 2024, 30, 290–302. [Google Scholar] [CrossRef]

- Zhang, B.; Gao, J.; Yuan, Y. Memory-enhanced hierarchical transformer for video paragraph captioning. Neurocomputing 2025, 615, 128835. [Google Scholar] [CrossRef]

- Jiang, K.; Wang, Z.; Chen, C.; Wang, Z.; Cui, L.; Lin, C.-W. Magic ELF: Image deraining meets association learning and transformer. arXiv 2022, arXiv:2207.10455. [Google Scholar]

- Yao, L.; Torabi, A.; Cho, K.; Ballas, N.; Pal, C.; Larochelle, H.; Courville, A. Describing videos by exploiting temporal structure. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4507–4515. [Google Scholar]

- Pan, P.; Xu, Z.; Yang, Y.; Wu, F.; Zhuang, Y. Hierarchical recurrent neural encoder for video representation with application to captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1029–1038. [Google Scholar]

- Krishna, R.; Hata, K.; Ren, F.; Fei-Fei, L.; Niebles, J.C. Dense-captioning events in videos. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 706–715. [Google Scholar]

- Wang, X.; Chen, W.; Wu, J.; Wang, Y.-F.; Wang, W.Y. Video captioning via hierarchical reinforcement learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4213–4222. [Google Scholar]

- Yu, H.; Wang, J.; Huang, Z.; Yang, Y.; Xu, W. Video paragraph captioning using hierarchical recurrent neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4584–4593. [Google Scholar]

- Zhang, J.; Peng, Y. Object-aware aggregation with bidirectional temporal graph for video captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8327–8336. [Google Scholar]

- Wang, Y.; Li, K.; Li, Y.; He, Y.; Huang, B.; Zhao, Z.; Zhang, H.; Xu, J.; Liu, Y.; Wang, Z.; et al. Internvideo: General video foundation models via generative and discriminative learning. arXiv 2022, arXiv:2212.03191. [Google Scholar]

- Guo, L.; Zhao, H.; Chen, Z.; Han, Z. Semantic guidance network for video captioning. Sci. Rep. 2023, 13, 16076. [Google Scholar] [CrossRef]

- Li, K.; He, Y.; Wang, Y.; Li, Y.; Wang, W.; Luo, P.; Wang, Y.; Wang, L.; Qiao, Y. Videochat: Chat-centric video understanding. arXiv 2023, arXiv:2305.06355. [Google Scholar]

- Zhang, H.; Li, X.; Bing, L. Video-llama: An instruction-tuned audio-visual language model for video understanding. arXiv 2023, arXiv:2306.02858. [Google Scholar]

- Xu, J.; Mei, T.; Yao, T.; Rui, Y. Msr-vtt: A large video description dataset for bridging video and language. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5288–5296. [Google Scholar]

- Hung, Y.-N.; Lerch, A. Feature-informed Latent Space Regularization for Music Source Separation. arXiv 2022, arXiv:2203.09132. [Google Scholar]

- Guo, K.; Zhang, Z.; Guo, H.; Ren, S.; Wang, L.; Zhou, X.; Liu, C. Video super-resolution based on inter-frame information utilization for intelligent transportation. IEEE Trans. Intell. Transp. Syst. 2023, 24, 13409–13421. [Google Scholar] [CrossRef]

- Liao, J.; Yu, C.; Jiang, L.; Guo, L.; Liang, W.; Li, K.; Pathan, A.-S.K. A method for composite activation functions in deep learning for object detection. Signal Image Video Process. 2025, 19, 362. [Google Scholar] [CrossRef]

- Xiao, Y.; Yuan, Q.; Jiang, K.; Chen, Y.; Wang, S.; Lin, C. Multi-Axis Feature Diversity Enhancement for Remote Sensing Video Super-Resolution. IEEE Trans. Image Process. 2025, 34, 1766–1778. [Google Scholar] [CrossRef]

- Zhang, S.; Liu, J.; Jiao, Y.; Zhang, Y.; Chen, L.; Li, K. A Multimodal Semantic Fusion Network with Cross-Modal Alignment for Multimodal Sentiment Analysis. In ACM Transactions on Multimedia Computing, Communications and Applications; ACM: New York, NY, USA, 2025. [Google Scholar]

- Liu, Z.; Dang, F.; Liu, X.; Tong, X.; Zhao, H.; Liu, K.; Li, K. A Multimodal Fusion Framework for Enhanced Exercise Quantification Integrating RFID and Computer Vision. Tsinghua Sci. Technol. 2025. [Google Scholar] [CrossRef]

- Xia, C.; Sun, Y.; Li, K.-C.; Ge, B.; Zhang, H.; Jiang, B.; Zhang, J. Rcnet: Related context-driven network with hierarchical attention for salient object detection. Expert Syst. Appl. 2024, 237, 121441. [Google Scholar] [CrossRef]

- Xu, G.; Deng, X.; Zhou, X.; Pedersen, M.; Cimmino, L.; Wang, H. Fcfusion: Fractal componentwise modeling with group sparsity for medical image fusion. IEEE Trans. Ind. Inform. 2022, 18, 9141–9150. [Google Scholar] [CrossRef]

- Zhao, H.; Guo, L.; Chen, Z.; Zheng, H. Research on video captioning based on multifeature fusion. Comput. Intell. Neurosci. 2022, 2022, 1204909. [Google Scholar] [CrossRef]

- Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752. [Google Scholar]

- Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Jiao, J.; Liu, Y. Vmamba: Visual state space model. Adv. Neural Inf. Process. Syst. 2024, 37, 103031–103063. [Google Scholar]

- Wang, F.; Wang, J.; Ren, S.; Wei, G.; Mei, J.; Shao, W.; Zhou, Y.; Yuille, A.; Xie, C. Mamba-Reg: Vision Mamba Also Needs Registers. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 14944–14953. [Google Scholar]

- Yang, N.; Wang, Y.; Liu, Z.; Li, M.; An, Y.; Zhao, X. SMamba: Sparse Mamba for Event-based Object Detection. Proc. Aaai Conf. Artif. Intell. 2025, 39, 9229–9237. [Google Scholar] [CrossRef]

- Kannan, A.; Lindquist, M.A.; Caffo, B. BrainMT: A Hybrid Mamba-Transformer Architecture for Modeling Long-Range Dependencies in Functional MRI Data. arXiv 2025, arXiv:2506.22591. [Google Scholar]

- Xiao, Y.; Yuan, Q.; Jiang, K.; Chen, Y.; Zhang, Q.; Lin, C. Frequency-Assisted Mamba for Remote Sensing Image Super-Resolution. IEEE Trans. Multimed. 2025, 27, 1783–1796. [Google Scholar] [CrossRef]

- Cui, J.; Li, Y.; Shen, D.; Wang, Y. MGCM: Multi-modal Graph Convolutional Mamba for Cancer Survival Prediction. Pattern Recognit. 2025, 169, 111991. [Google Scholar] [CrossRef]

- Yan, C.; Hao, Y.; Li, L.; Yin, J.; Liu, A.; Mao, Z. Task-adaptive attention for image captioning. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 43–51. [Google Scholar] [CrossRef]

- Jin, N.; Yang, F.; Mo, Y.; Zeng, Y.; Zhou, X.; Yan, K.; Ma, X. Highly accurate energy consumption forecasting model based on parallel LSTM neural networks. Adv. Eng. Inform. 2022, 51, 101442. [Google Scholar] [CrossRef]

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W. Bleu: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002. [Google Scholar]

- Lin, C.-Y. Rouge: A package for automatic evaluation of summaries. In Text Summarization Branches Out; Association for Computational Linguistics: Barcelona, Spain, 2004; pp. 74–81. [Google Scholar]

- Banerjee, S.; Lavie, A. METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, Ann Arbor, MI, USA, 25–30 June 2005; pp. 65–72. [Google Scholar]

- Vedantam, R.; Zitnick, C.L.; Parikh, D. Cider: Consensus-based image description evaluation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4566–4575. [Google Scholar]

- Chen, D.; Dolan, W.B. Collecting highly parallel data for paraphrase evaluation. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, Portland, OR, USA, 19–24 June 2011.

- Hou, J.; Wu, X.; Zhao, W.; Luo, J.; Jia, Y. Joint Syntax Representation Learning and Visual Cue Translation for Video Captioning. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

- Zhang, Z.; Shi, Y.; Yuan, C.; Li, B.; Wang, P.; Hu, W.; Zha, Z.J. Object Relational Graph with Teacher-Recommended Learning for Video Captioning. Computer Vision and Pattern Recognition. arXiv 2020, arXiv:2002.11566.

- Ryu, H.; Kang, S.; Kang, H.; Yoo, C.D. Semantic Grouping Network for Video Captioning. In National Conference on Artificial Intelligence. arXiv 2021, arXiv:2102.00831.

- Gu, X.; Chen, G.; Wang, Y.; Zhang, L.; Luo, T.; Wen, L. Text with knowledge graph augmented transformer for video captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 18941–18951.

- Jing, S.; Zhang, H.; Zeng, P.; Gao, L.; Song, J.; Shen, H.T. Memory-based augmentation network for video captioning. IEEE Trans. Multimed. 2023, 26, 2367–2379.

- Shen, Y.; Gu, X.; Xu, K.; Fan, H.; Wen, L.; Zhang, L. Accurate and fast compressed video captioning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 15558–15567.

- Luo, X.; Luo, X.; Wang, D.; Liu, J.; Wan, B.; Zhao, L. Global semantic enhancement network for video captioning. Pattern Recognit. 2024, 145, 109906.

- Ma, Y.; Zhu, Z.; Qi, Y.; Beheshti, A.; Li, Y.; Qing, L.; Li, G. Style-aware two-stage learning framework for video captioning. Knowl.-Based Syst. 2024, 301, 112258.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).