1. Introduction

With the rapid advancement of the unmanned aerial vehicle (UAV) domain, the quadrotor, owing to its distinctive characteristics, has been extensively employed in various UAV control systems [1,2,3,4,5]. The simple structure, high stability, strong maneuverability, rapid response, and exceptional payload adaptability of the system allow for high-precision execution of tasks, including aerial hovering and trajectory tracking [6,7,8,9]. However, in practical applications, quadrotors are often confronted with challenges, including the difficulty of accurately modeling wind dynamics, uncertainty in environmental parameters, and inherently nonlinear dynamical equations [10,11]. Thus, it is both critical and urgent to deepen our understanding of UAV adaptability, robustness, and intelligence under environmental disturbances.

Outdoor wind disturbances represent a significant factor affecting the stability and performance of quadrotor control systems. To achieve precise trajectory tracking of a quadrotor in the presence of such disturbances, existing control approaches under complex environmental conditions can be broadly categorized into model-based and data-driven strategies. Model-based methods explicitly incorporate aerodynamic disturbance terms into the quadrotor’s dynamical equations and design disturbance-rejection controllers to enhance tracking accuracy [12,13]. In [14], a robust H∞ guaranteed cost controller is developed for quadrotors, concurrently addressing model uncertainties and external disturbances to effectively attenuate perturbation effects. In [15], a sliding-mode controller is combined with a fixed-time disturbance observer to design a controller for the position–attitude system, thereby handling time-varying wind disturbances in trajectory tracking. In [16], a model predictive control-based position controller is integrated with an SO(3)-based nonlinear robust attitude control law to counteract external disturbances. Model-based controllers largely depend on accurate dynamical models of wind disturbances and can deliver stable and reliable performance under low uncertainties. Nonetheless, these controllers often exhibit limited adaptability when handling complex nonlinear and multivariable coupling effects, and their parameter tuning presents significant difficulties.

Compared to model-based control strategies, data-driven approaches demonstrate enhanced adaptive capabilities when addressing complex nonlinear, time-varying, or unknown disturbances [17,18,19]. Regarding data-driven state observers for quadrotors, ref. [20] employed Koopman operator theory to construct dynamics in two distinct environments and integrated them with an MPC controller for trajectory tracking. Ref. [21] proposed a reinforcement learning (RL) framework incorporating external force compensation and a disturbance observer to attenuate wind gust effects during trajectory tracking. In [22], a novel wind perturbation estimator utilizing neural networks and cascaded Lyapunov functions was used to derive a full backstepping controller for quadrotor trajectory tracking. Beyond observer design, deep reinforcement learning (DRL) methods enable direct policy learning through reward function design, allowing quadrotors to interact with wind-disturbed environments. In [23], an RL-based controller was trained to directly generate desired three-axis Euler angles and throttle commands for disturbance rejection. Ref. [24] proposed a distributed architecture utilizing multi-agent reinforcement learning to reduce user perception delay and minimize UAV energy consumption. Ref. [25] integrated policy relief and significance weighting into incremental DRL to enhance control accuracy in dynamic environments. In [26], dual-critic neural networks supplant the conventional actor–critic framework to approximate solutions to Hamilton–Jacobi–Bellman equations for disturbance-robust quadrotor tracking. However, such data-driven methods typically employ static neural networks whose weights are frozen after training. This approach relies on wind-condition-specific datasets during offline training, limiting generalization capability beyond the training distribution. Moreover, when environmental dynamics drift, conventional online fine-tuning strategies are highly susceptible to catastrophic forgetting.

To address trajectory tracking control for quadrotors under significantly varying wind disturbances and mitigate catastrophic forgetting during continual learning, this paper proposes a continual reinforcement learning framework. This framework resolves the inability of RL policies to adapt to substantial environmental changes during quadrotor trajectory tracking in wind-disturbed environments. Most existing continual learning methods operate at either the parameter level [

27,

28] or the functional output level [

29], focusing on global connection strength or output similarity. Continual backpropagation (CBP) [

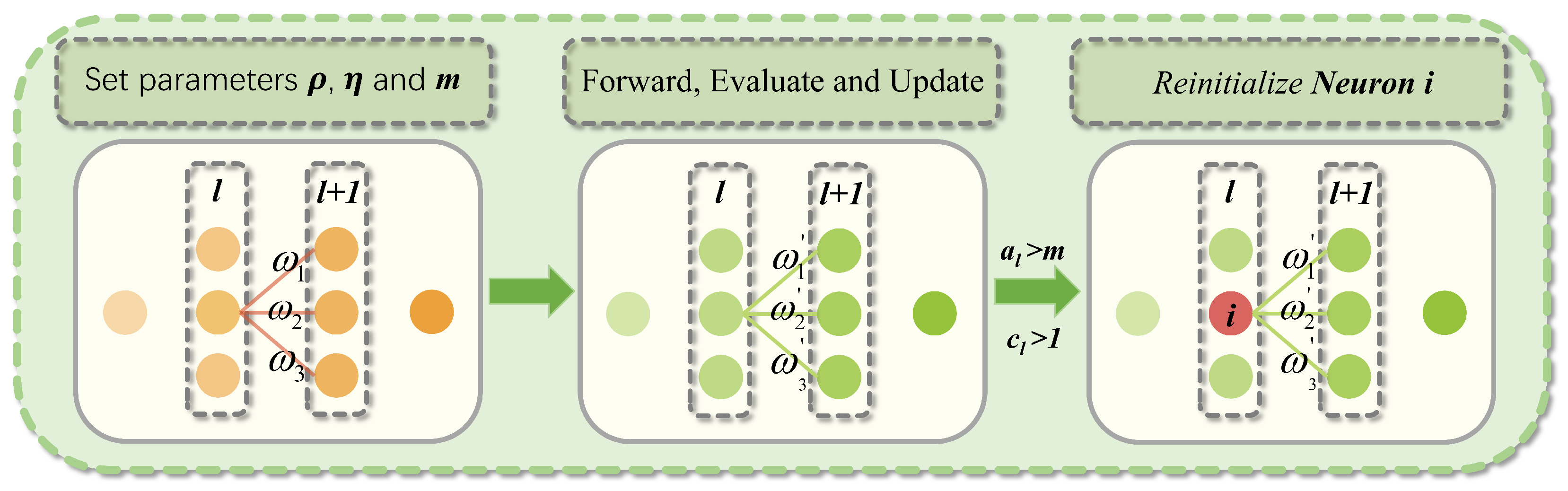

30] employs selective resetting of inefficient neurons at the structural level, continuously activating dormant units while maintaining an effective representation rank without additional loss terms, old model storage, or parameter importance evaluation, thereby enabling lightweight continual learning. As illustrated in

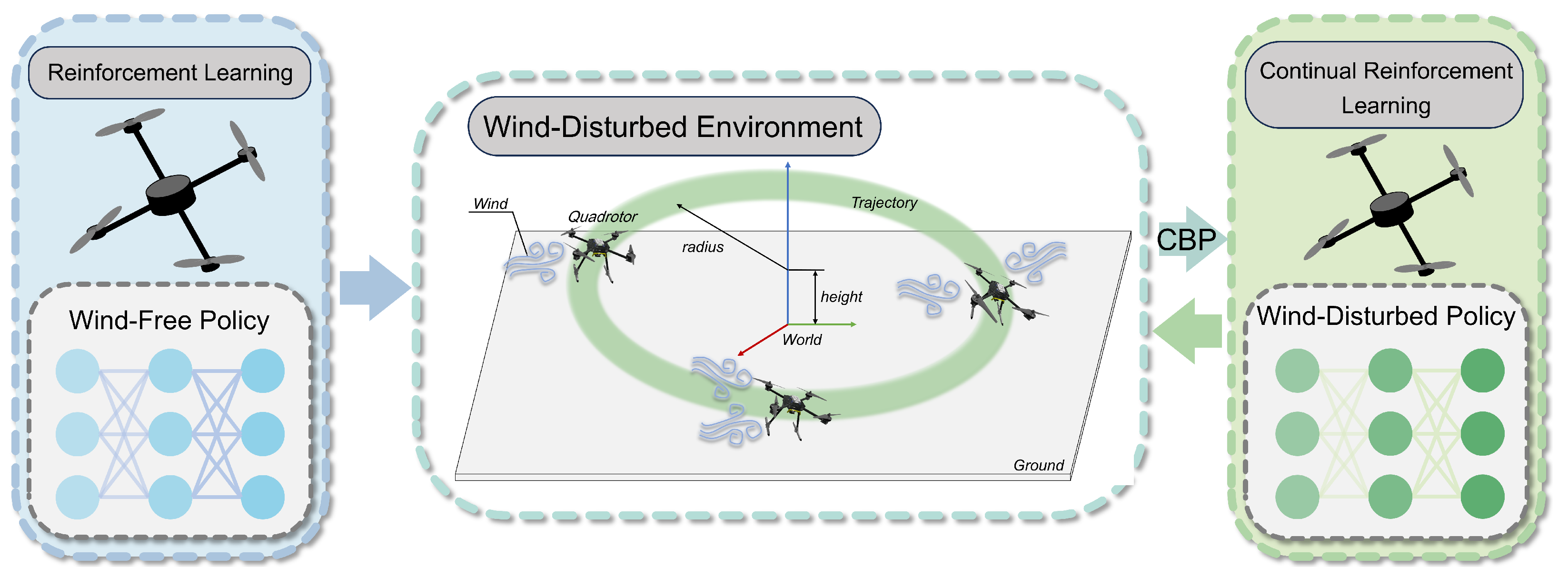

Figure 1, our framework first trains a foundation trajectory tracking policy using proximal policy optimization (PPO) in wind-free conditions. Subsequently, it integrates CBP-based continual reinforcement learning to adapt this foundation policy to progressively evolving wind fields. By enhancing neural network plasticity through CBP, the framework improves quadrotor adaptation in dynamic environments. Additionally, a novel reward function is designed to enhance policy accuracy and effectiveness. In summary, this work investigates quadrotor trajectory tracking in disturbed environments via continual deep reinforcement learning. The primary contributions are summarized as follows:

We propose a novel continual reinforcement learning framework for quadrotor trajectory tracking, which significantly enhances adaptability in continuously varying wind fields.

We analyze the characteristics of tracking control and design an innovative reward function to accelerate network training convergence and improve control precision.

We validate the proposed framework on a quadrotor simulation platform. Experimental results demonstrate that our method achieves high trajectory tracking accuracy both in wind-free conditions and under gradually increasing wind disturbances, evidencing its strong learning capability.

The remainder of this paper is organized as follows. Section 2 formulates the trajectory tracking problem and elaborates on the reward function design. Section 3 introduces the proposed continual reinforcement learning framework and its core mechanisms. Section 4 presents comprehensive experimental results with comparative analysis under wind-disturbed conditions. Finally, Section 5 concludes this paper and discusses future research directions.

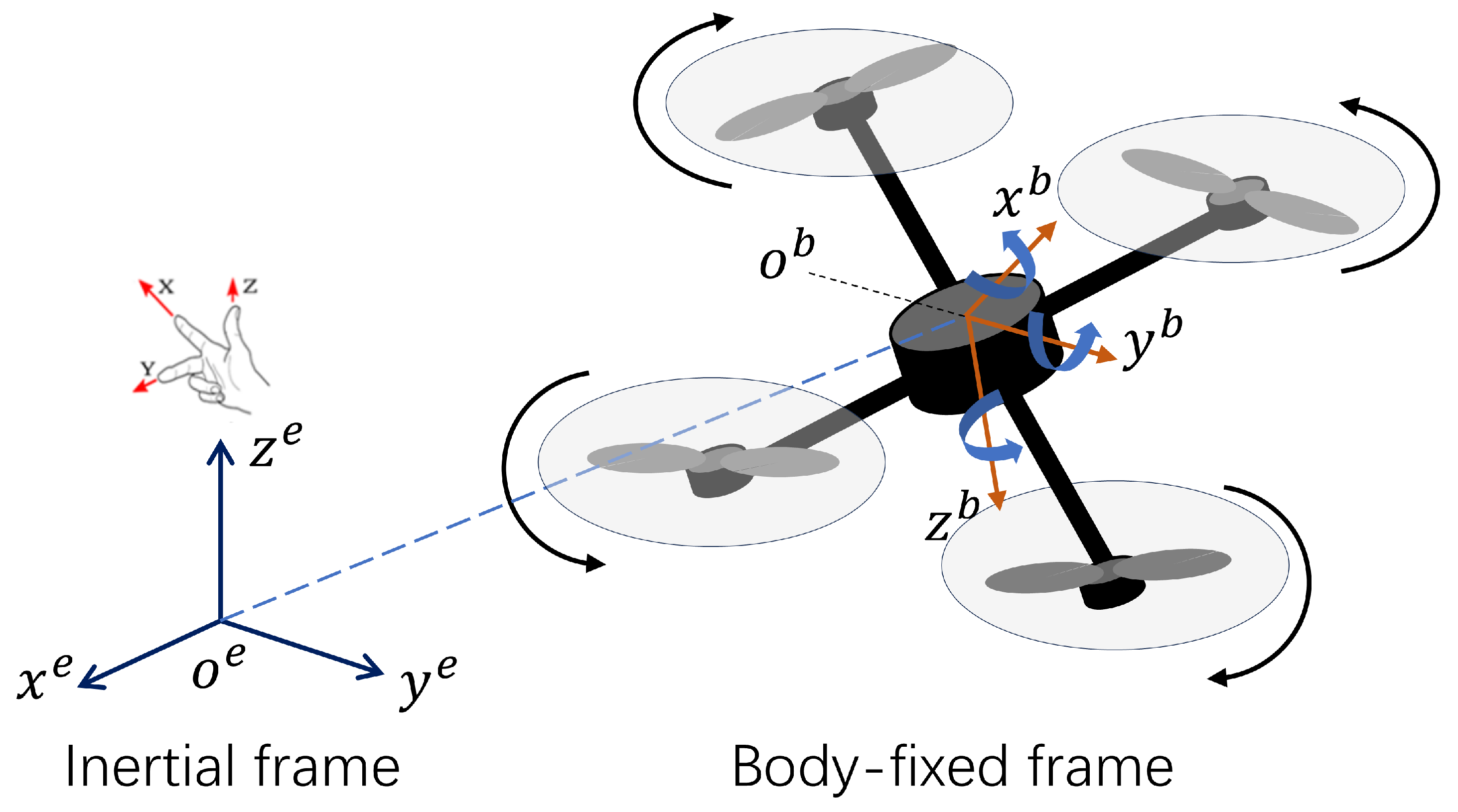

3. Continual Reinforcement Learning Method

3.1. Problem Description

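In this study, environmental variations are formulated as a sequence of temporally ordered subtasks. The state transition function drifts during environmental changes while the state and action spaces remain invariant. Let $\mathcal{E} = \{e_1, e_2, \ldots, e_H\}$ denote the dynamic environment set, where $e_h$ represents specific characteristics of the dynamic environment at the $h$-th stage. The primary objective of our reinforcement learning model is to derive an optimal control policy that maximizes the expected reward $J(\theta)$ under network parameters $\theta$. The expected long-term return is expressed as follows:

$$J(\theta) = \mathbb{E}_{\tau \sim p_{\theta}(\tau)}\left[\sum_{t=0}^{T} \gamma^{t} r_{t}\right] \tag{6}$$

where $\tau$ denotes a complete episode trajectory, $r_t$ represents the instantaneous reward after agent–environment interaction, and $\gamma$ is the discount factor. According to the policy gradient theorem, the gradient of $J(\theta)$, written as an expectation under the trajectory distribution $p_{\theta}(\tau)$, is given by

$$\nabla_{\theta} J(\theta) = \mathbb{E}_{\tau \sim p_{\theta}(\tau)}\left[\sum_{t=0}^{T} \nabla_{\theta} \log \pi_{\theta}(a_t \mid s_t)\, R(\tau)\right] \tag{7}$$

where $R(\tau) = \sum_{t=0}^{T} \gamma^{t} r_{t}$ is the discounted return of trajectory $\tau$. During the learning process, model parameters are optimized through policy approximation, expressed as follows:

$$\theta \leftarrow \theta + \alpha \nabla_{\theta} J(\theta) \tag{8}$$

where $\alpha$ is the learning rate.

The external disturbance problem refers to situations involving environmental transitions. The goal of continual learning is to enable autonomous updating from $\theta_{h}$ to $\theta_{h+1}$ when environmental characteristics transition to $e_{h+1}$:

$$\theta_{h} \xrightarrow{\; e_{h} \rightarrow e_{h+1} \;} \theta_{h+1} \tag{9}$$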
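As a minimal sketch of the discounted return that the expected long-term return averages over, the Python snippet below accumulates rewards backwards through one episode and then averages over a batch of episodes as a Monte Carlo estimate. The function names and the list-of-rewards input format are assumptions made for illustration and are not taken from the paper.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Return sum_t gamma**t * r_t for one episode.

    Accumulating from the last step backwards avoids computing powers of gamma.
    """
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


def estimate_expected_return(episodes: Sequence[Sequence[float]], gamma: float) -> float:
    """Monte Carlo estimate of the expected return: the mean over sampled episodes."""
    returns = [discounted_return(episode, gamma) for episode in episodes]
    return sum(returns) / len(returns)
```

In a policy gradient method, an estimate of this kind is what the parameter update tries to increase; the gradient itself would normally be obtained through automatic differentiation rather than computed by hand.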

3.2. The PPO Algorithm

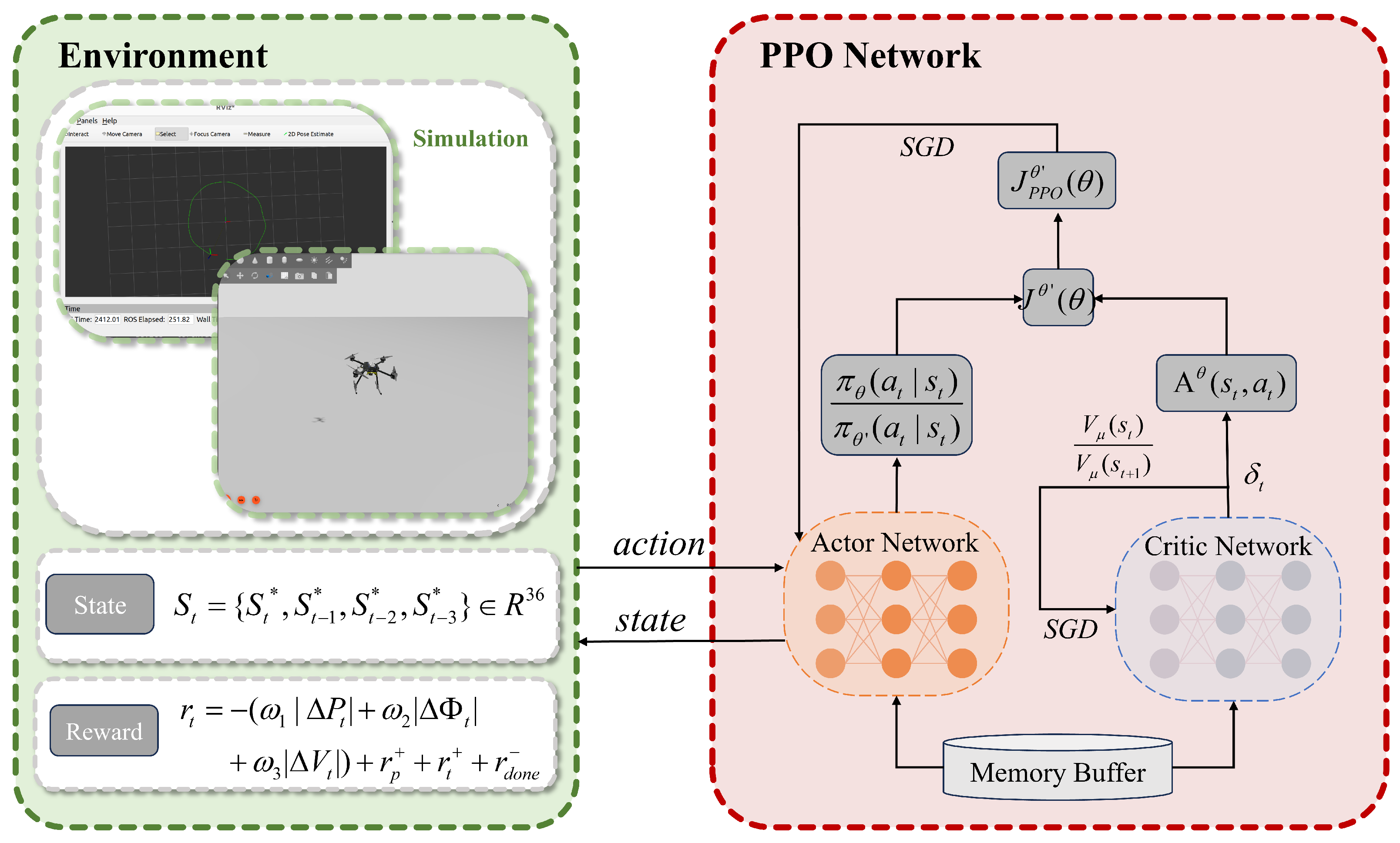

Compared to value-based methods, policy-based reinforcement learning offers distinct advantages by directly learning the policy itself, thereby accelerating the learning process and enhancing decision-making performance in continuous action spaces. PPO integrates policy gradients with importance sampling to address the inherent sample inefficiency of basic policy gradient methods. Utilizing an actor–critic architecture, the PPO-based quadrotor controller design is illustrated in Figure 3.

PPO employs generalized advantage estimation (GAE), which balances the trade-offs between Monte Carlo methods and single-step temporal difference (TD) approaches through weighted fusion of multi-step TD errors. The GAE formulation is defined as follows:

$$\hat{A}_t = \sum_{l=0}^{\infty} (\gamma\lambda)^{l}\, \delta_{t+l}, \qquad \delta_t = r_t + \gamma V(s_{t+1}) - V(s_t) \tag{10}$$

In Equation (10), $\lambda$ serves as a trade-off parameter regulating the weighting distribution of multi-step returns, $\delta_t$ denotes the single-step TD error, and $V$ represents the state-value function. This design enables the advantage function to capture global information from multi-step interactions while mitigating the high variance inherent in Monte Carlo methods.
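The weighted fusion of multi-step TD errors can be illustrated with a short backward recursion. The following is a sketch only: the function name, the default values of the discount factor and trade-off parameter, and the convention that `values` carries one extra bootstrap entry are assumptions, not the paper's settings.

```python
from typing import List, Sequence


def gae_advantages(
    rewards: Sequence[float],
    values: Sequence[float],
    gamma: float = 0.99,  # assumed discount factor
    lam: float = 0.95,    # assumed GAE trade-off parameter
) -> List[float]:
    """Generalized advantage estimates for one finite trajectory.

    `values` must hold len(rewards) + 1 entries; the last entry is the
    bootstrap value of the state reached after the final reward.
    """
    advantages = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        # Single-step TD error at time t.
        delta = rewards[t] + gamma * values[t + 1] - values[t]
        # Exponentially weighted sum of this and all later TD errors.
        running = delta + gamma * lam * running
        advantages[t] = running
    return advantages
```

Setting `lam=0` reduces the estimate to the single-step TD error, while `lam=1` recovers the Monte Carlo return minus the value baseline, which is the trade-off the paragraph above describes.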

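To enhance policy update stability and prevent excessive fluctuations that could destabilize optimization, the algorithm incorporates the advantage function $\hat{A}_t$ and importance sampling into the objective function (6), yielding the surrogate objective function:

$$J^{\theta'}(\theta) = \mathbb{E}_{(s_t, a_t) \sim \pi_{\theta'}}\left[\frac{\pi_{\theta}(a_t \mid s_t)}{\pi_{\theta'}(a_t \mid s_t)}\, \hat{A}_t\right] \tag{11}$$

In Equation (11), $\theta'$ denotes the behavioral policy parameters interacting with the environment, while $\theta$ represents the optimization parameters. The objective $J^{\theta'}(\theta)$ is computed via the product of the advantage $\hat{A}_t$ (evaluated from samples $(s_t, a_t)$) and the tractable importance ratio $\pi_{\theta}(a_t \mid s_t) / \pi_{\theta'}(a_t \mid s_t)$. To maintain proximity between the behavioral policy $\pi_{\theta'}$ and optimized policy $\pi_{\theta}$ during importance sampling, we enforce policy constraints through a clipping function, resulting in the final objective:

$$J^{\mathrm{CLIP}}(\theta) = \mathbb{E}_{(s_t, a_t) \sim \pi_{\theta'}}\left[\min\left(\frac{\pi_{\theta}(a_t \mid s_t)}{\pi_{\theta'}(a_t \mid s_t)}\, \hat{A}_t,\; \mathrm{clip}\left(\frac{\pi_{\theta}(a_t \mid s_t)}{\pi_{\theta'}(a_t \mid s_t)},\, 1-\varepsilon,\, 1+\varepsilon\right) \hat{A}_t\right)\right] \tag{12}$$

where $\varepsilon$ is the clipping range.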

By integrating generalized advantage estimation and a clipping mechanism, PPO effectively balances bias–variance trade-offs during policy optimization while preventing abrupt policy updates. These properties establish PPO as an ideal solution for trajectory tracking in quadrotors, particularly when addressing continuous action spaces and multiple disturbance scenarios.
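To make the clipping mechanism concrete, the sketch below evaluates the clipped surrogate objective on a small batch of samples. It is an illustration under stated assumptions: the function name, the use of log-probabilities as inputs, and the clipping range of 0.2 are not taken from the paper.

```python
import math
from typing import Sequence


def clipped_surrogate(
    logp_new: Sequence[float],    # log-probabilities under the policy being optimized
    logp_old: Sequence[float],    # log-probabilities under the behavioral policy
    advantages: Sequence[float],
    clip_eps: float = 0.2,        # assumed clipping range
) -> float:
    """Mean clipped surrogate objective over a batch of samples."""
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)  # importance ratio
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # The minimum makes the objective a pessimistic bound on the
        # unclipped term, so large policy shifts are not rewarded.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

With a positive advantage, a ratio above `1 + clip_eps` earns no additional objective value, and with a negative advantage the same holds for a ratio below `1 - clip_eps`; this is what keeps each update close to the behavioral policy.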

3.3. CBP Method

In dynamic environments, quadrotors must continuously adapt to fluctuating wind conditions. While traditional deep learning approaches perform well in static settings, they suffer from loss of plasticity in continual learning scenarios [30]. To enhance the agent’s adaptability to dynamic environments and facilitate efficient exploration, this study integrates continual backpropagation (CBP) into the PPO framework. Unlike conventional approaches that freeze network weights after training, CBP reinitializes neurons at the structural level to preserve architectural diversity. Figure 4 illustrates the operational principle of CBP within the neural network architecture.

The principal advantage of CBP lies in its unit utility assessment and selective reinitialization mechanism. The utility metric for hidden unit $i$ in layer $l$ quantifies its contribution to downstream layers via an exponentially weighted moving average:

$$u_{l,i,t} = \eta\, u_{l,i,t-1} + (1-\eta)\, \left|h_{l,i,t}\right| \sum_{k} \left|w_{l,i,k,t}\right| \tag{13}$$

where $\eta$ denotes the decay rate balancing historical and current contributions, $h_{l,i,t}$ represents the activation of unit $i$ in layer $l$ at time $t$, and $w_{l,i,k,t}$ denotes the weight connecting unit $i$ in layer $l$ to unit $k$ in layer $l+1$.
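The utility metric described above can be sketched for a single hidden layer as follows. The function name, the plain-list data layout, and the default decay rate are illustrative assumptions rather than the authors' implementation.

```python
from typing import List, Sequence


def update_utilities(
    utilities: Sequence[float],                  # running utility of each hidden unit
    activations: Sequence[float],                # current activation of each hidden unit
    outgoing_weights: Sequence[Sequence[float]], # outgoing_weights[i][k]: unit i -> next-layer unit k
    decay: float = 0.99,                         # assumed decay rate
) -> List[float]:
    """One exponential-moving-average utility update for a hidden layer."""
    updated = []
    for utility, activation, weights in zip(utilities, activations, outgoing_weights):
        # Contribution: |activation| times the summed magnitude of outgoing weights.
        contribution = abs(activation) * sum(abs(w) for w in weights)
        updated.append(decay * utility + (1.0 - decay) * contribution)
    return updated
```

A unit whose activation is persistently zero, or whose outgoing weights are all close to zero, sees its utility decay toward zero, which is what marks it as a candidate for reinitialization.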

When resetting a hidden unit, CBP initializes all outgoing weights to zero to prevent perturbation of learned network functions. However, this zero-initialization renders new units immediately nonfunctional and vulnerable to premature resetting. To mitigate this, newly initialized units receive a grace period of $m$ updates during which they are reset-exempt. Only after exceeding this maturity threshold $m$ are units considered mature. Subsequently, at each update step, a fraction $\rho$ of mature units per layer undergo reinitialization. In practice, $\rho$ is set to an extremely small value, ensuring approximately one unit replacement per several hundred updates.
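The maturity and replacement logic in this paragraph can be sketched as below. This is a simplified illustration, not the authors' code: the `LayerState` container, the fractional `pending` counter used to realize a very small replacement rate, and the uniform range for fresh incoming weights are all assumptions.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class LayerState:
    utilities: List[float]
    ages: List[int]
    incoming: List[List[float]]  # incoming[i]: weights into hidden unit i
    outgoing: List[List[float]]  # outgoing[i]: weights out of hidden unit i
    pending: float = 0.0         # fractional number of units due for replacement


def cbp_reset_step(layer: LayerState, maturity: int,
                   replacement_rate: float, rng: random.Random) -> List[int]:
    """Age all units, then reset the lowest-utility mature units that are due.

    Returns the indices of the units that were reset in this step.
    """
    layer.ages = [age + 1 for age in layer.ages]
    # Units are reset-exempt until their age exceeds the maturity threshold.
    mature = [i for i, age in enumerate(layer.ages) if age > maturity]
    layer.pending += replacement_rate * len(mature)
    reset = []
    while layer.pending >= 1.0 and mature:
        worst = min(mature, key=lambda i: layer.utilities[i])
        # Fresh incoming weights (hypothetical init range); zero outgoing
        # weights so the network's current output is not perturbed.
        layer.incoming[worst] = [rng.uniform(-0.1, 0.1) for _ in layer.incoming[worst]]
        layer.outgoing[worst] = [0.0 for _ in layer.outgoing[worst]]
        layer.utilities[worst] = 0.0
        layer.ages[worst] = 0
        mature.remove(worst)
        layer.pending -= 1.0
        reset.append(worst)
    return reset
```

Because `pending` grows by only `replacement_rate * len(mature)` per update, a very small rate yields roughly one replacement every several hundred updates, in line with the description above.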

During each network parameter update, CBP introduces only two additional operations compared to standard forward/backward propagation: unit utility updates and neuron resets. The unit utility update applies an exponential moving average to every neuron in each hidden layer, involving one absolute-value summation and one multiply–accumulate per weight. Its time complexity is therefore linear in the number of weights, which is on the same order as the total number of network parameters. Neuron resets select and reinitialize a constant number of mature units at a very low rate; because this occurs infrequently and affects only a fixed number of units per event, its overall overhead is negligible. In terms of memory overhead, CBP allocates for each hidden unit an additional utility value and age, so the extra storage amounts to two scalars per hidden unit. Thus, CBP maintains continuous structural variation and network plasticity with minimal extra time and space costs, fully compatible with standard neural network training pipelines.

Through CBP integration, the quadrotor UAV iteratively updates network parameters to maintain adaptability in complex, dynamically evolving environments.

3.4. Integrated Method

Reinforcement learning experiments exhibit greater stochasticity and variance compared to supervised learning due to inherent algorithmic randomness and sequential data dependencies influenced by the learning process itself [32,33].

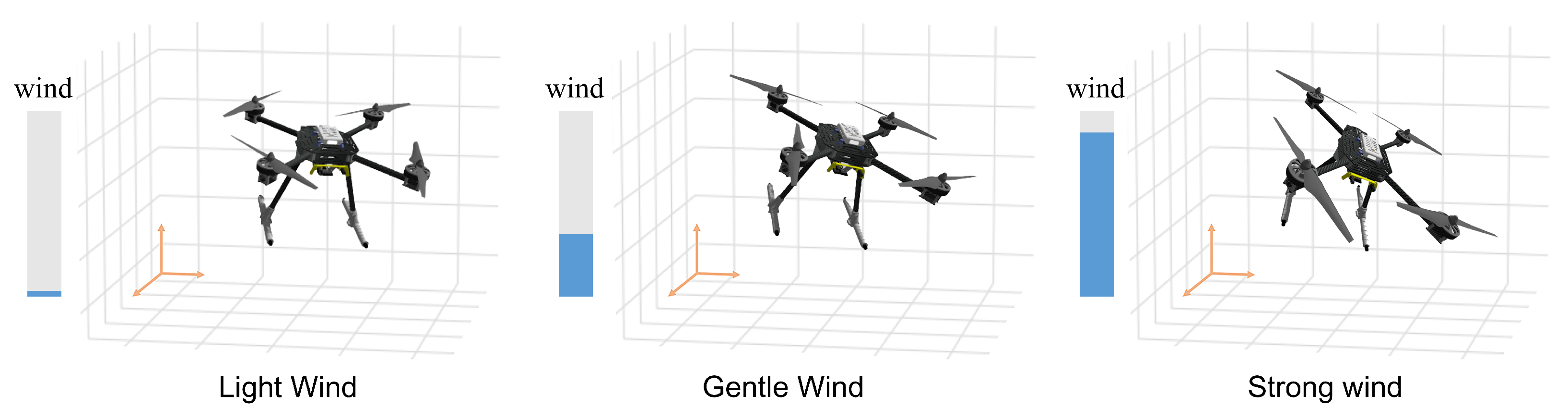

Figure 5 illustrates quadrotor flight dynamics under varying wind conditions: stable flight occurs under low winds; moderate winds induce attitude deviations and instability; and high winds cause significant flight disruption or failure.

A foundation policy trained in one environment exhibits degraded performance when deployed in an altered environment, necessitating adaptive capability. This study proposes a continual reinforcement learning framework where wind-free control strategies serve as foundational models, enabling policy adaptation to environmental changes. By integrating CBP’s continual learning mechanism into PPO’s policy gradient updates, our approach retains the learned policy while flexibly generating new action distributions, achieving rapid adaptation to dynamic wind fields.

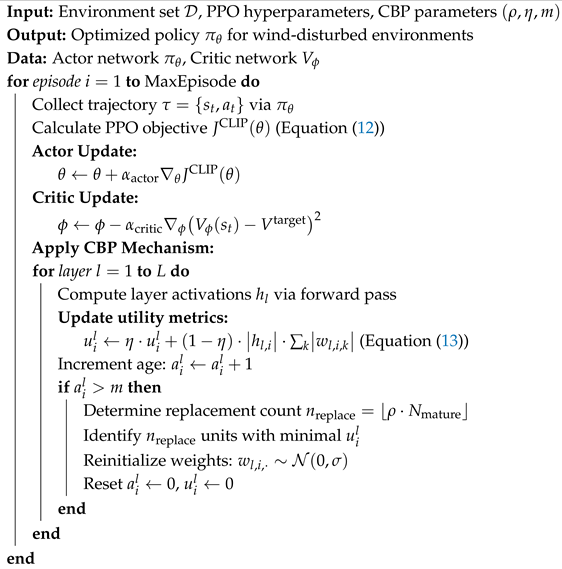

Algorithm 1 details the continual reinforcement learning process for updating the quadrotor control policy in dynamic environments. Wind disturbance signals serve as environmental variables during trajectory tracking. The policy pretrained in the wind-free environment serves as the foundation model; in each subsequent environment, the agent learns through standard policy gradients while CBP concurrently performs structural neuron updates. After each full network update, neurons meeting reinitialization criteria are reset. Since the agent has already acquired fundamental representations of the state–action space, the CBP algorithm enhances network plasticity to facilitate adaptation to environmental variations.

Algorithm 1: Continual reinforcement learning for UAV control.

4. Experiment and Discussion

4.1. Experimental Setup and Parameter Configuration

The training process was executed on an Ubuntu 22.04 system equipped with an AMD Ryzen 7 6800H CPU and NVIDIA RTX 3050 GPU. Our continual reinforcement learning control algorithm was implemented in Python 3.10 using PyTorch 2.4, with the Adam optimizer employed for network training [34]. We established a simulation environment integrating Gazebo 2 with the PX4 flight controller, leveraging Gazebo’s high-fidelity physics engine to simulate quadrotor flight under wind disturbances [35]. The parameters of the quadrotor are shown in Table 1. The learning framework follows the architecture depicted in Figure 1.

The PPO implementation utilizes separate actor and critic networks, each containing four hidden layers. The actor network processes state observations as input and outputs system actions, with hidden layer dimensions of 64, 128, 256, and 64 neurons, respectively. The critic network accepts system states and actions as input and estimates the state value, featuring hidden layers of 128, 256, 256, and 128 neurons. All hidden layers employ ReLU activation functions.

Reward function weights were empirically determined, with state error components defined as penalty terms to promote stable flight control. Given the critical importance of position and attitude control in trajectory tracking, larger weights were assigned to the position and attitude penalties, while the velocity and control smoothness penalties received lower weights. To enhance exploration and simplify training, two sparse reward components were implemented: a positional accuracy reward triggered when the position error falls within a preset tolerance, and a critical failure penalty imposed for crashes or boundary violations.

The operational boundary for trajectory tracking was defined as a cubic volume. Additional training parameters, referenced from prior works [25,36], are detailed in Table 2.

To evaluate the performance of the continual reinforcement learning-based quadrotor control strategy in windy environments, this study conducts trajectory tracking experiments under both wind-free and wind-disturbed conditions. The root mean square error (RMSE) of tracking deviation serves as the quantitative evaluation metric for assessing the tracking control performance of the proposed method. Let $M$ denote the total number of samples and $e_i$ represent the positional tracking error of the $i$-th sample. The RMSE is calculated as follows:

$$\mathrm{RMSE} = \sqrt{\frac{1}{M} \sum_{i=1}^{M} e_i^{2}} \tag{14}$$
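For illustration, the RMSE metric can be computed as in the sketch below. It assumes each sample's positional tracking error is the Euclidean distance between the reference and actual positions; the function names and this choice of error norm are assumptions, since the source does not spell them out.

```python
import math
from typing import List, Sequence, Tuple

Position = Tuple[float, float, float]


def position_errors(reference: Sequence[Position], actual: Sequence[Position]) -> List[float]:
    """Per-sample Euclidean distance between reference and actual positions."""
    return [math.dist(ref, act) for ref, act in zip(reference, actual)]


def tracking_rmse(errors: Sequence[float]) -> float:
    """Root mean square of the per-sample tracking errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))
```

For example, `tracking_rmse(position_errors(ref_path, flown_path))` would give the metric for one flight, where `ref_path` and `flown_path` are hypothetical lists of (x, y, z) positions sampled at the same time instants.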

4.2. Performance Analysis in the Wind-Free Environment

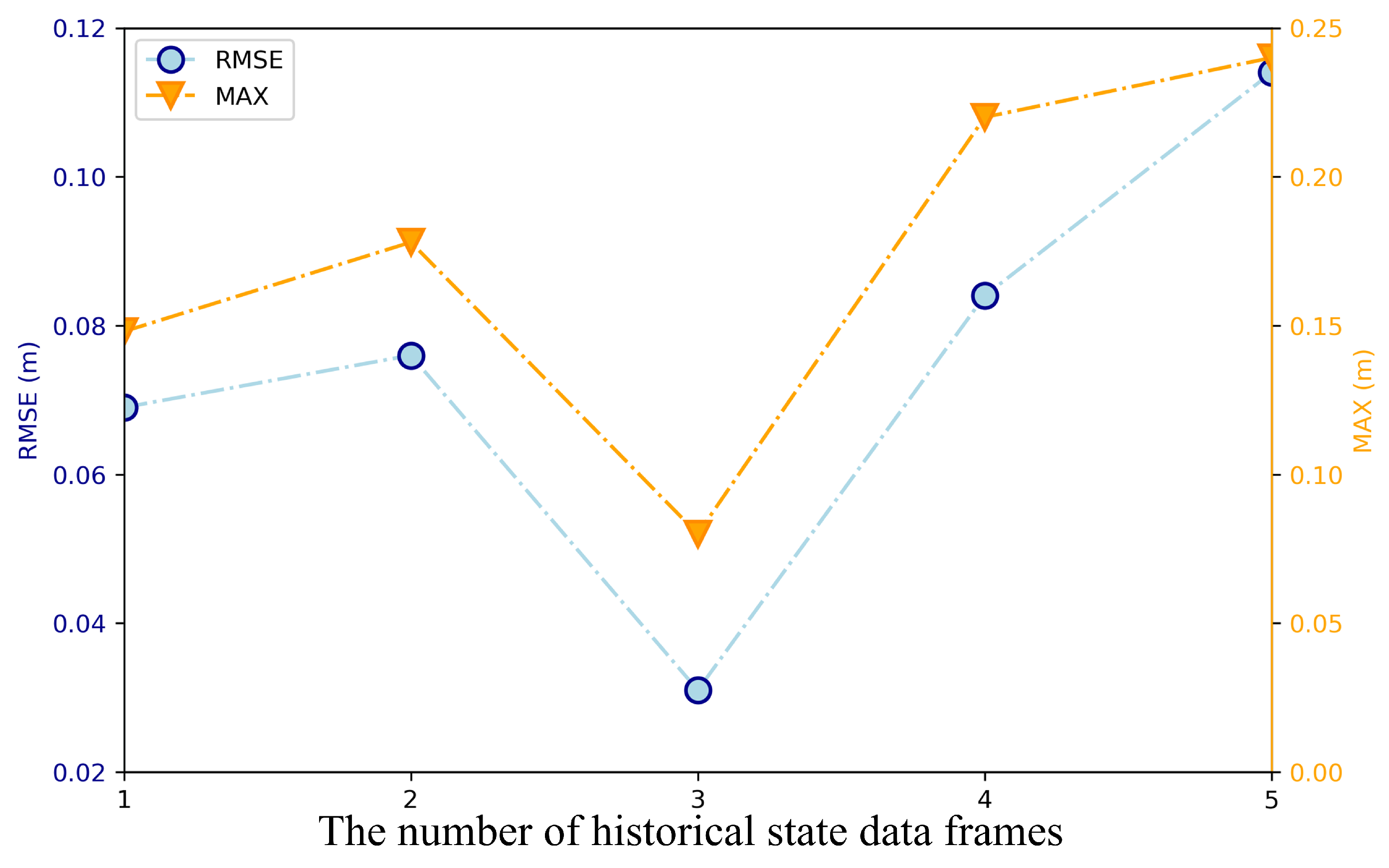

The reward function design incorporates not only the current flight state but also historical states from the preceding $n$ timesteps to capture motion trends and environmental context for policy learning. Figure 6 illustrates the variation in control error RMSE and maximum error with different history lengths $n$. The curves demonstrate significant error reduction as $n$ increases from 0 to 3. For $n > 3$, errors exhibit a slight increase, indicating that excessive historical information introduces redundant noise. Consequently, this study selects $n = 3$ as an optimal compromise between information sufficiency and network complexity.
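One simple way to make the current state and the preceding n states available together is a fixed-length buffer, sketched below. The class name, the zero padding before enough states have been observed, and the flattened output format are assumptions for illustration; how the history actually enters the authors' reward and observation design is not specified in this section.

```python
from collections import deque
from typing import List, Sequence


class StateHistory:
    """Keeps the current state plus the preceding n states."""

    def __init__(self, n: int, state_dim: int) -> None:
        # n + 1 slots: n historical states and the current one.
        # Zero padding at the start of an episode is an assumption.
        self.buffer = deque(
            ([0.0] * state_dim for _ in range(n + 1)), maxlen=n + 1
        )

    def push(self, state: Sequence[float]) -> None:
        """Append the newest state; the oldest one is dropped automatically."""
        self.buffer.append(list(state))

    def stacked(self) -> List[float]:
        """Flatten oldest-to-newest states into a single feature vector."""
        return [value for state in self.buffer for value in state]
```

With `n = 3` and a state of dimension d, the stacked vector has length 4d, which shows how a longer history directly increases the input size that the networks must handle.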

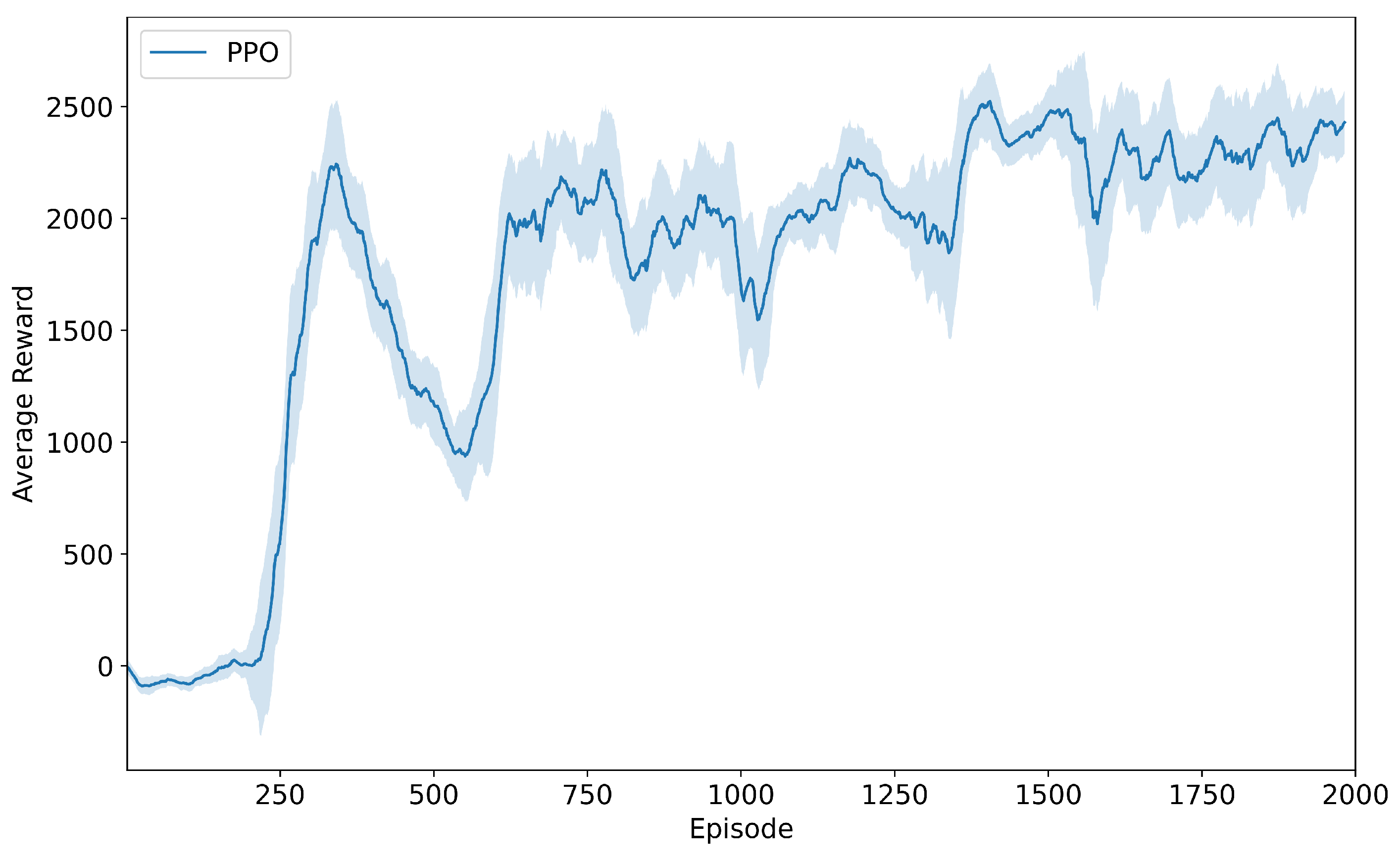

Under wind-free conditions, we trained the quadrotor’s trajectory tracking policy using the PPO algorithm over 2000 training episodes. Figure 7 shows the corresponding average reward curve. During the initial 200 episodes, the policy engaged primarily in random exploration, resulting in substantial reward fluctuations near zero. Between episodes 200 and 350, guided by the advantage function, the policy distribution converged toward optimal trajectories, causing rapid reward growth. From episodes 350 to 600, the clipped probability mechanism constrained excessive gradient updates, leading to a transient reward decrease. Beyond episode 600, entropy regularization stabilized the reward curve, maintaining high values with minimal oscillations.

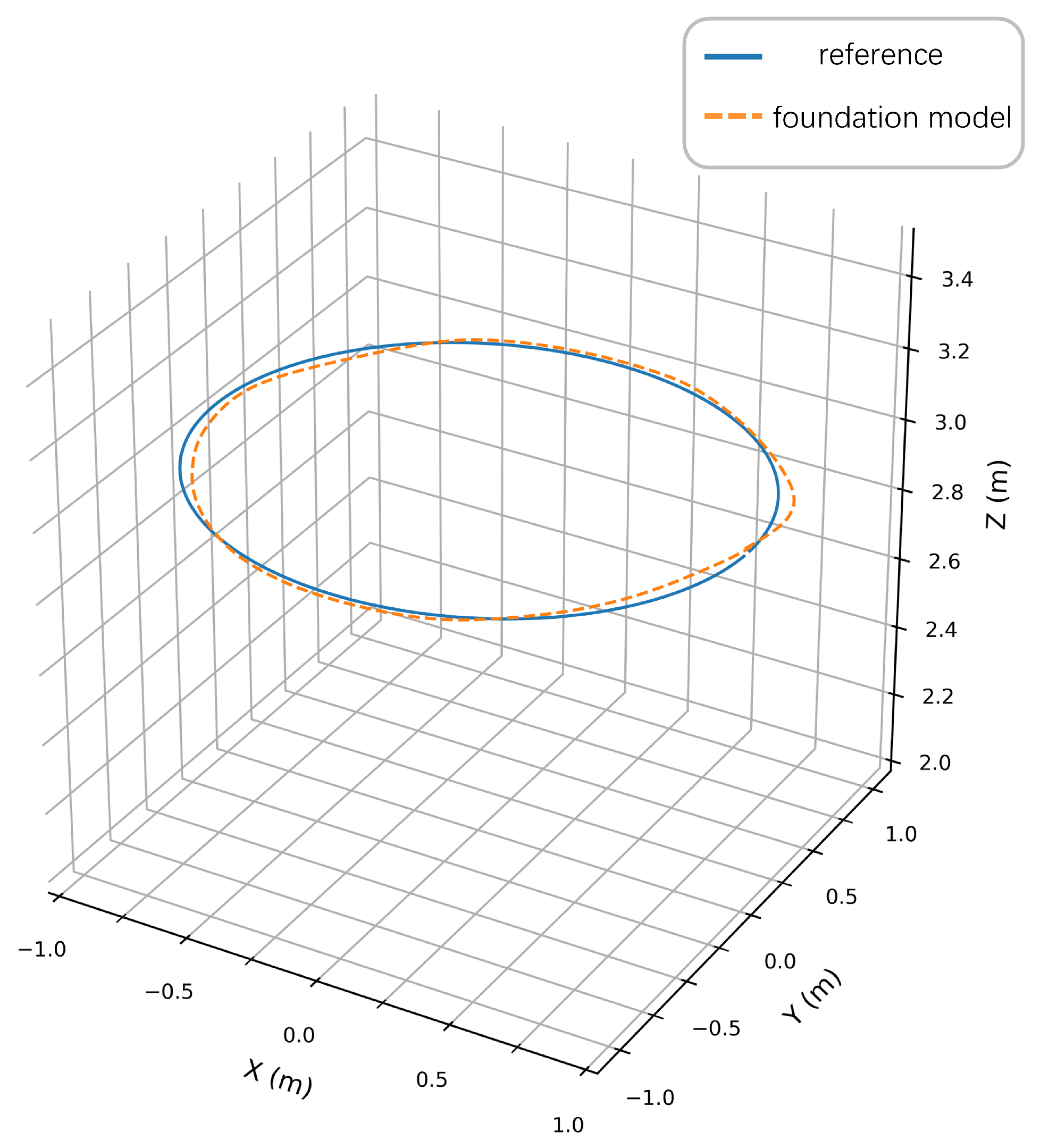

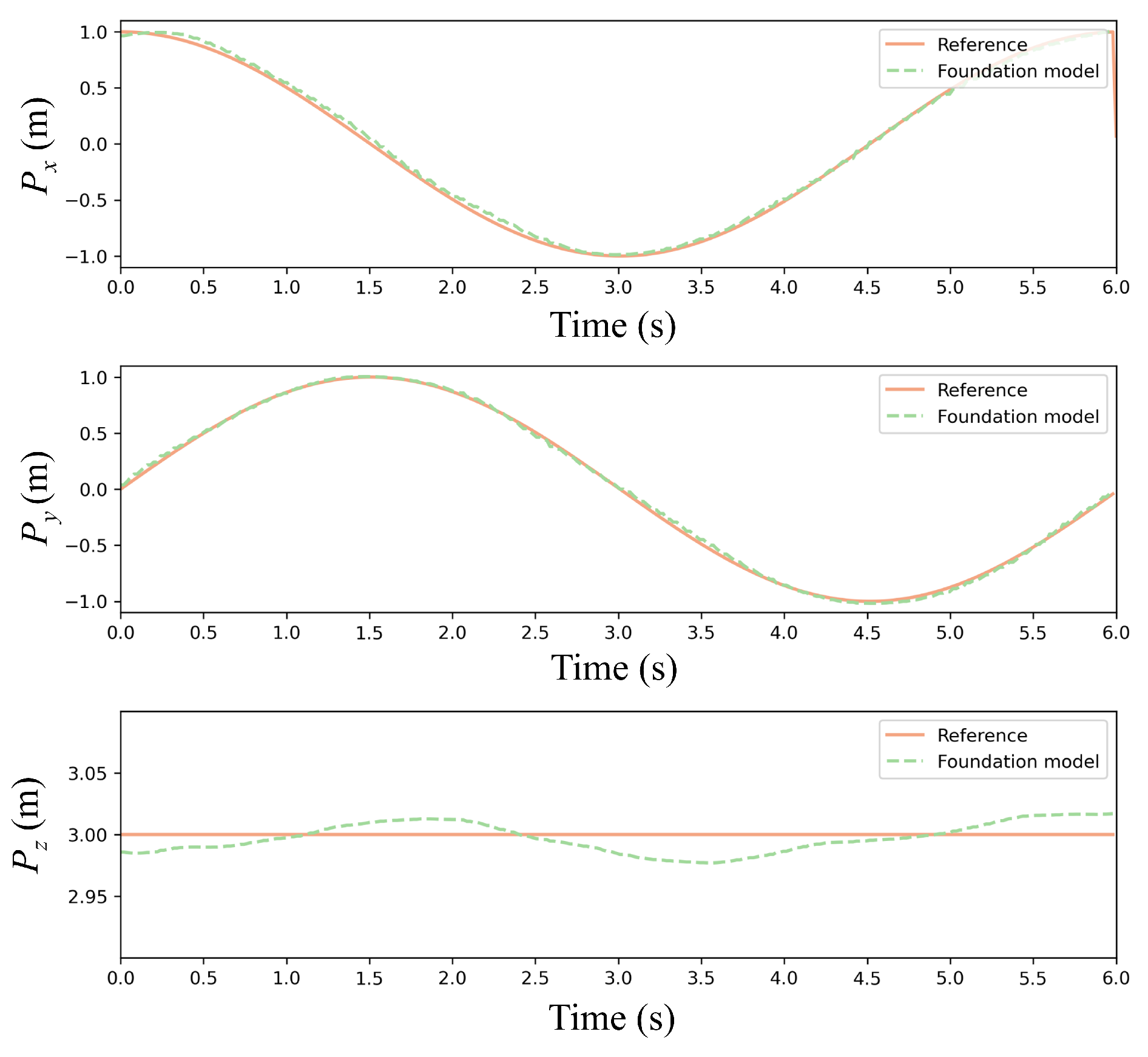

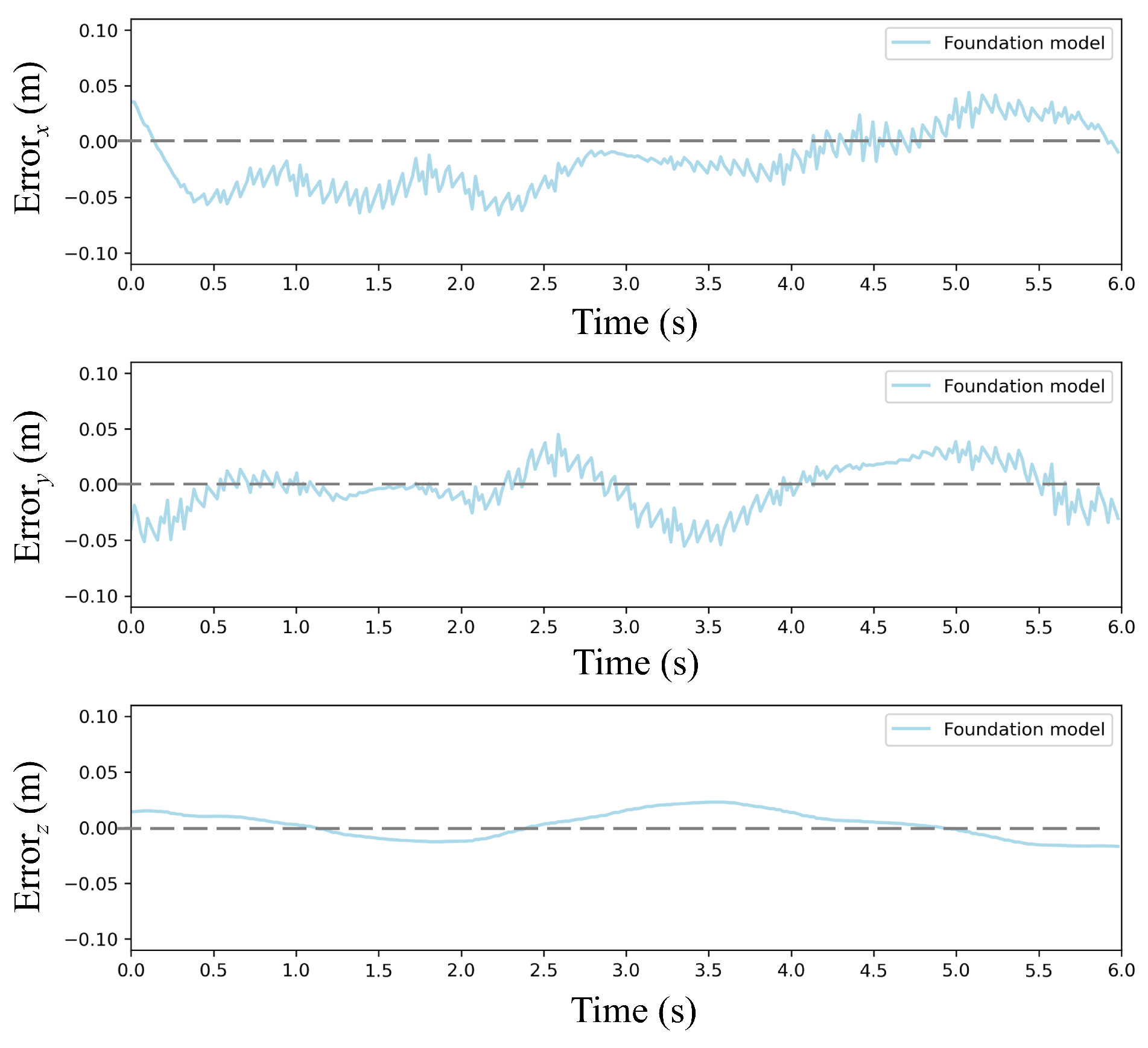

To quantitatively validate the pretrained PPO policy, Figure 8 compares the reference trajectory with the trajectory flown by the foundation model in a disturbance-free environment as a 3D plot. The quadrotor tracked a circular path with a radius of 1 m from the initial position (1, 0, 3) m at a constant linear velocity of 1 m/s. As demonstrated in Figure 9 and Figure 10, the foundation model achieves high-precision trajectory tracking in wind-free environments, with near-perfect circular closure indicating negligible cumulative drift. Maximum deviations occur at curvature maxima where centrifugal forces peak, yet errors on all axes remain below 0.05 m, satisfying precision thresholds for quadrotor maneuvering tasks.

This result empirically verifies that the PPO-derived policy converges to a robust nominal controller under ideal conditions, establishing a foundational model for subsequent continual learning.

4.3. Performance Analysis in the Wind-Disturbed Environment

4.3.1. Performance in Stepwise Wind

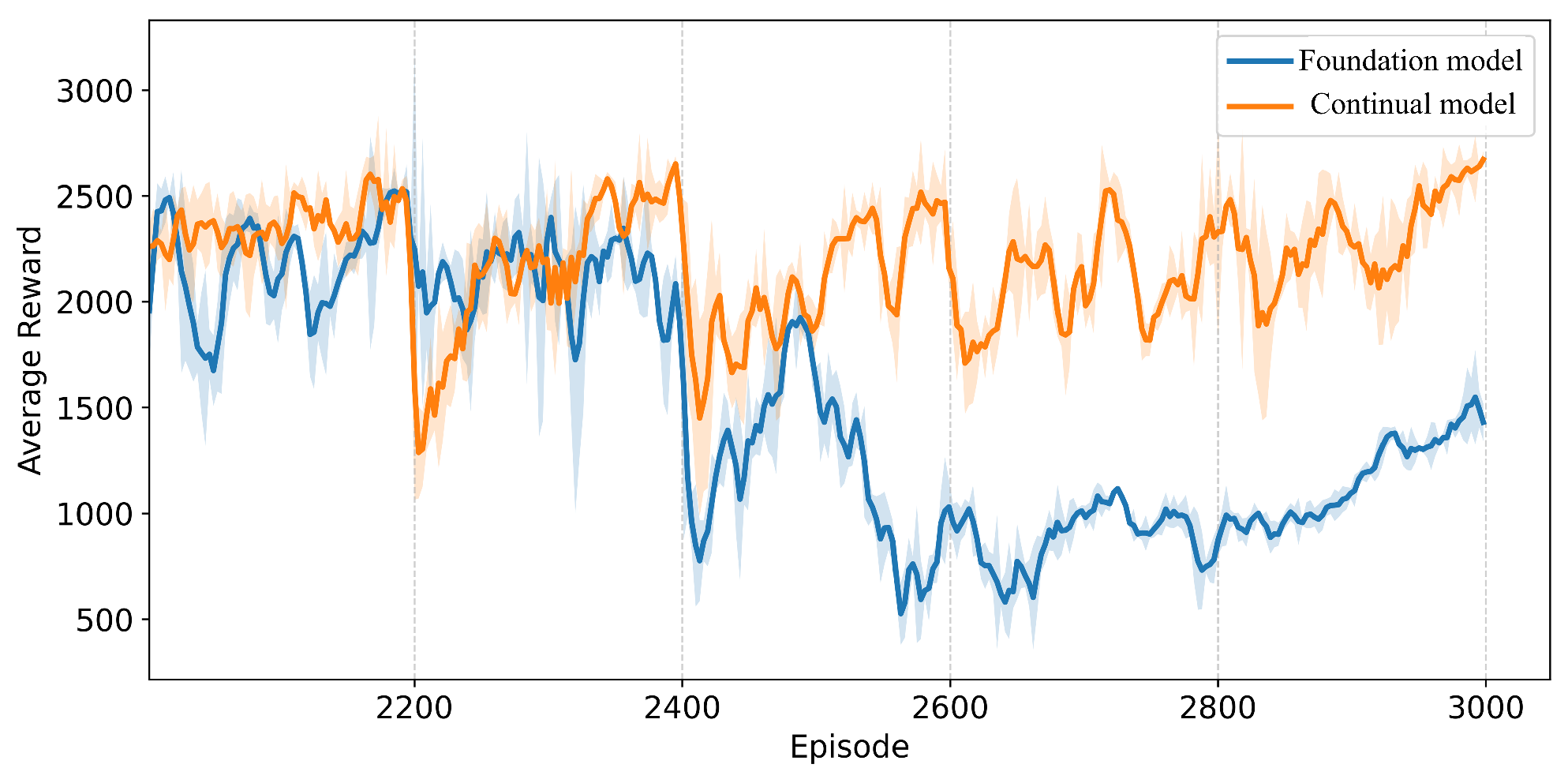

To evaluate the continual learning capability of our algorithm in dynamic wind fields, we constructed a stepwise wind variation scenario: after 2000 episodes of foundation model training, a wind speed increment of 7 m/s was applied every 200 episodes along both the x- and y-axes, creating a progressively intensifying wind field. Figure 11 compares the average reward curves of PPO and our continual reinforcement learning approach, with the horizontal axis representing training episodes and the vertical axis the average reward. Experimental results demonstrate that during low-wind phases, both methods exhibit comparable oscillation amplitudes while maintaining high returns. As wind speed progressively increases, the PPO strategy shows continuous reward degradation, indicating insufficient disturbance adaptability. In contrast, the CBP-enhanced policy exhibits transient reward drops after each wind speed change but rapidly recovers to prior levels, maintaining stable oscillations thereafter. The characteristic sawtooth reward pattern signifies substantially enhanced network plasticity, confirming the algorithm’s continual learning and rapid adaptation capabilities in dynamic environments.
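The stepwise wind scenario can be written as a small schedule function. This sketch rests on assumptions the text does not settle: episodes are counted from zero, the first 7 m/s increment takes effect at episode 2000, and the speed is capped at the 35 m/s cumulative value reported for the extreme-wind comparison. The function and parameter names are hypothetical.

```python
def stepwise_wind_speed(
    episode: int,
    pretrain_episodes: int = 2000,  # wind-free foundation training
    step_episodes: int = 200,       # episodes between wind increments
    increment: float = 7.0,         # m/s added per stage, on both x- and y-axes
    max_speed: float = 35.0,        # assumed cap (cumulative value in the text)
) -> float:
    """Wind speed in m/s applied along each of the x- and y-axes."""
    if episode < pretrain_episodes:
        return 0.0
    stage = (episode - pretrain_episodes) // step_episodes + 1
    return min(stage * increment, max_speed)
```

Under these assumptions, five stages of 200 episodes each take the wind from 7 m/s to 35 m/s, so the stepwise phase spans episodes 2000 to 2999.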

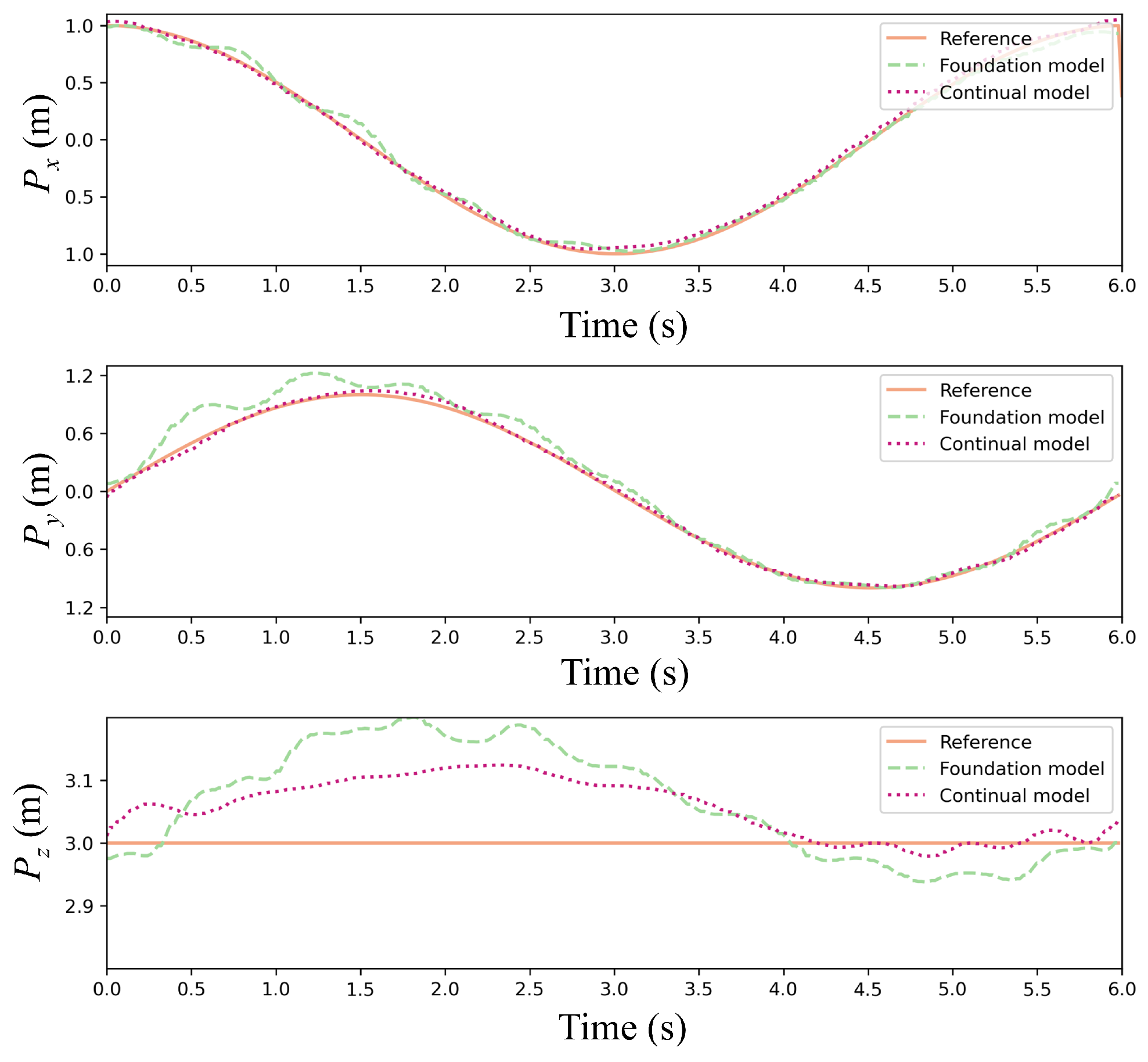

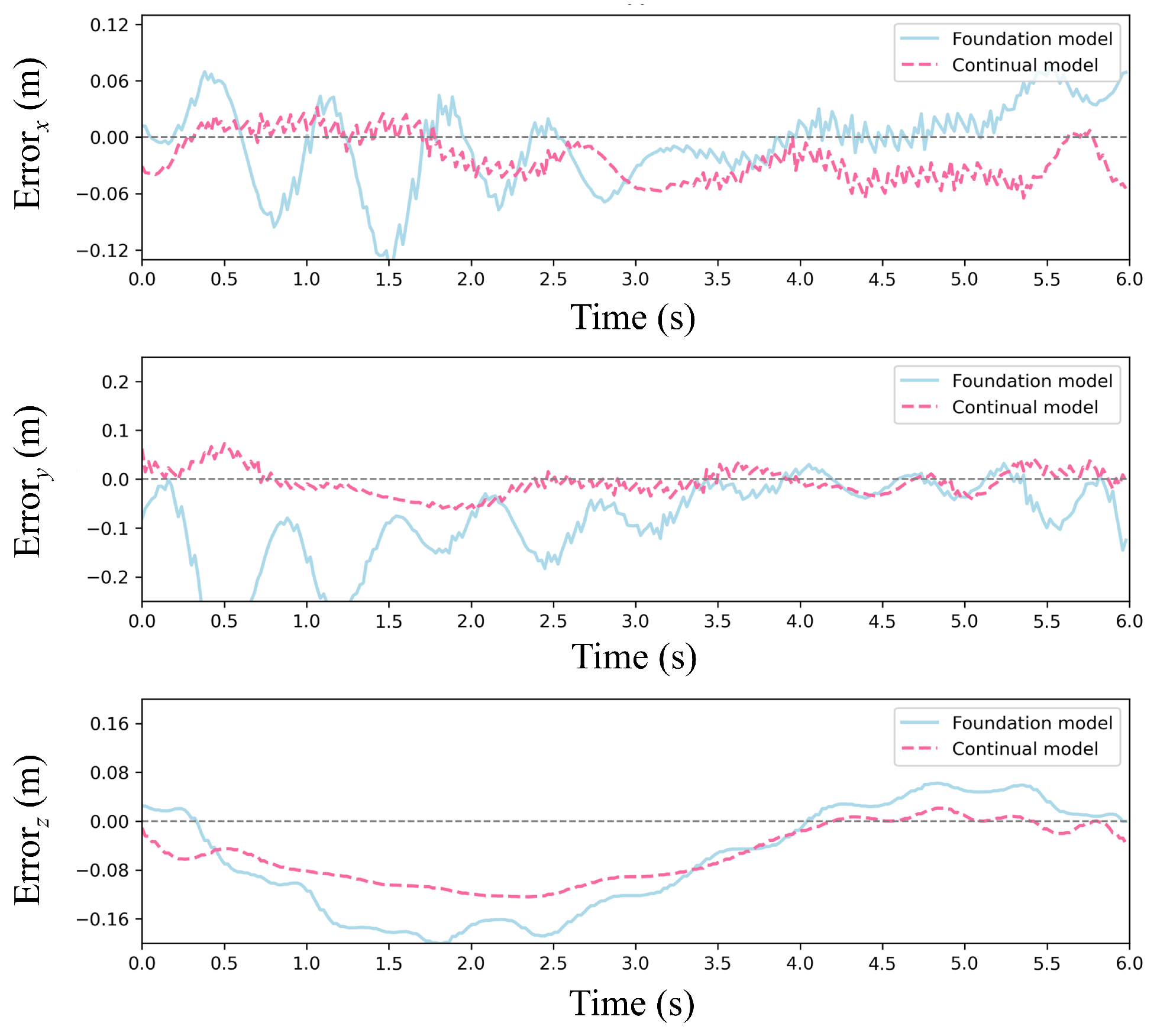

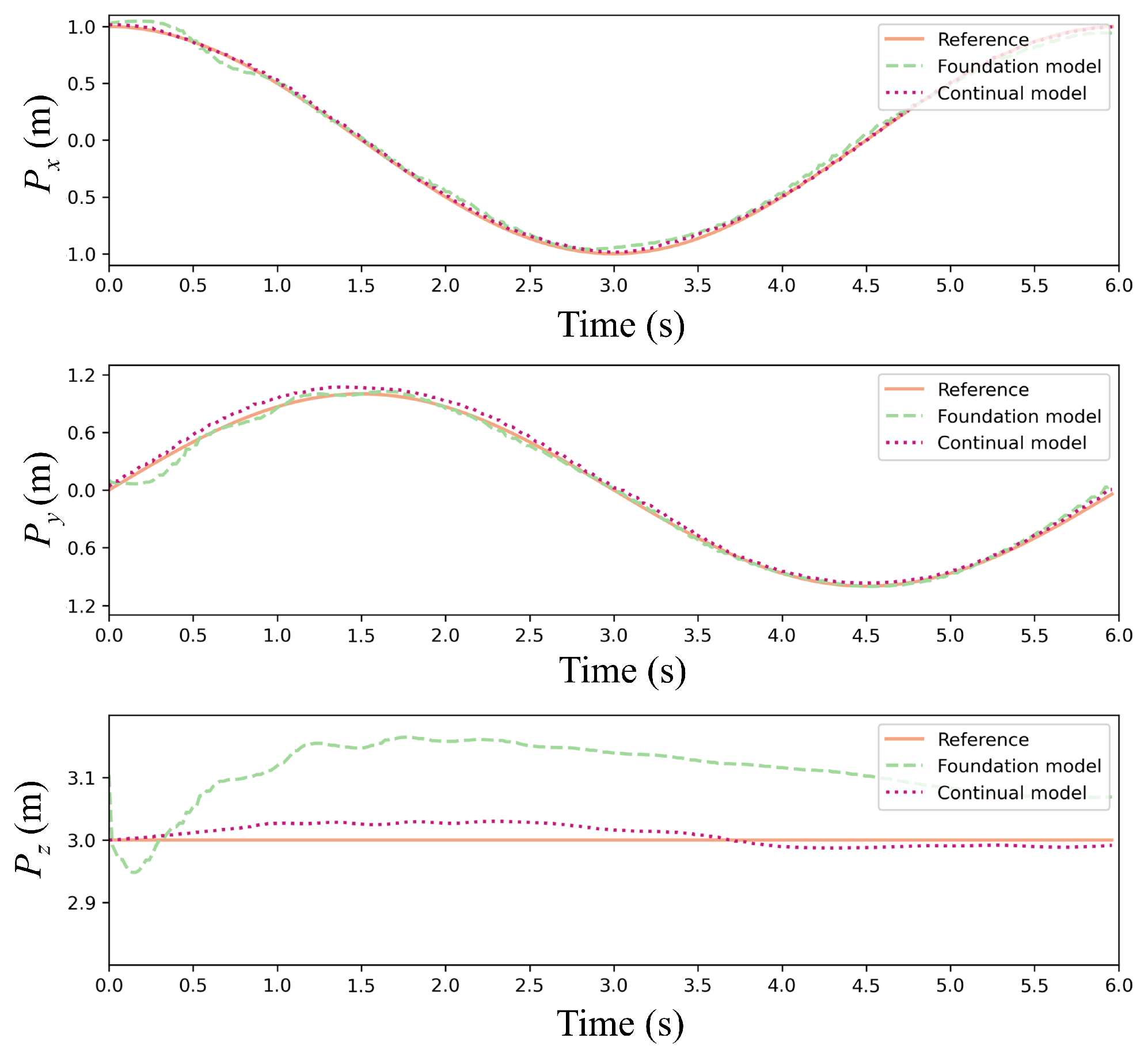

Figure 12 and Figure 13 present trajectory comparisons under extreme wind conditions (cumulative 35 m/s along the x- and y-axes). Figure 12 displays reference versus controlled trajectories in three spatial dimensions, while Figure 13 shows the corresponding positional errors. The results indicate that our continual reinforcement learning achieves lower error peaks than PPO at each wind speed increment while recovering faster to foundation performance. Overall average error is reduced by approximately 15%, validating the CBP algorithm’s significant enhancement of policy robustness and precision. Critically, the continual reinforcement learning method maintains accurate path tracking under strong disturbances, whereas standard PPO exhibits substantial deviation.

These findings demonstrate that CBP’s selective neuron resetting and structural diversity preservation empower reinforcement learning policies to maintain efficacy in evolving wind fields, significantly enhancing quadrotor adaptation to novel conditions.

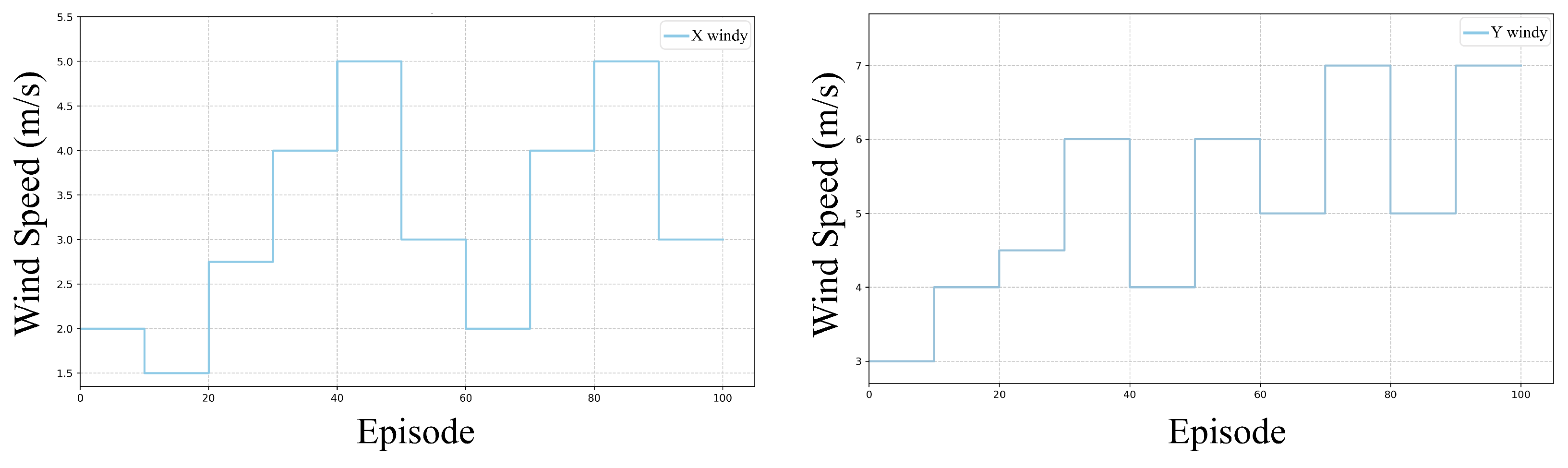

4.3.2. Performance Under Stochastic Wind

To validate the adaptability of the proposed continual reinforcement learning framework in realistic wind environments, we conducted additional trajectory tracking experiments in a stochastic wind scenario. As depicted in

Figure 14, random sampling of wind speeds in the

x-axis

and

y-axis

was performed every 10 episodes (approximately 1 min). Both

and

were subject to a normal disturbance distribution with parameters

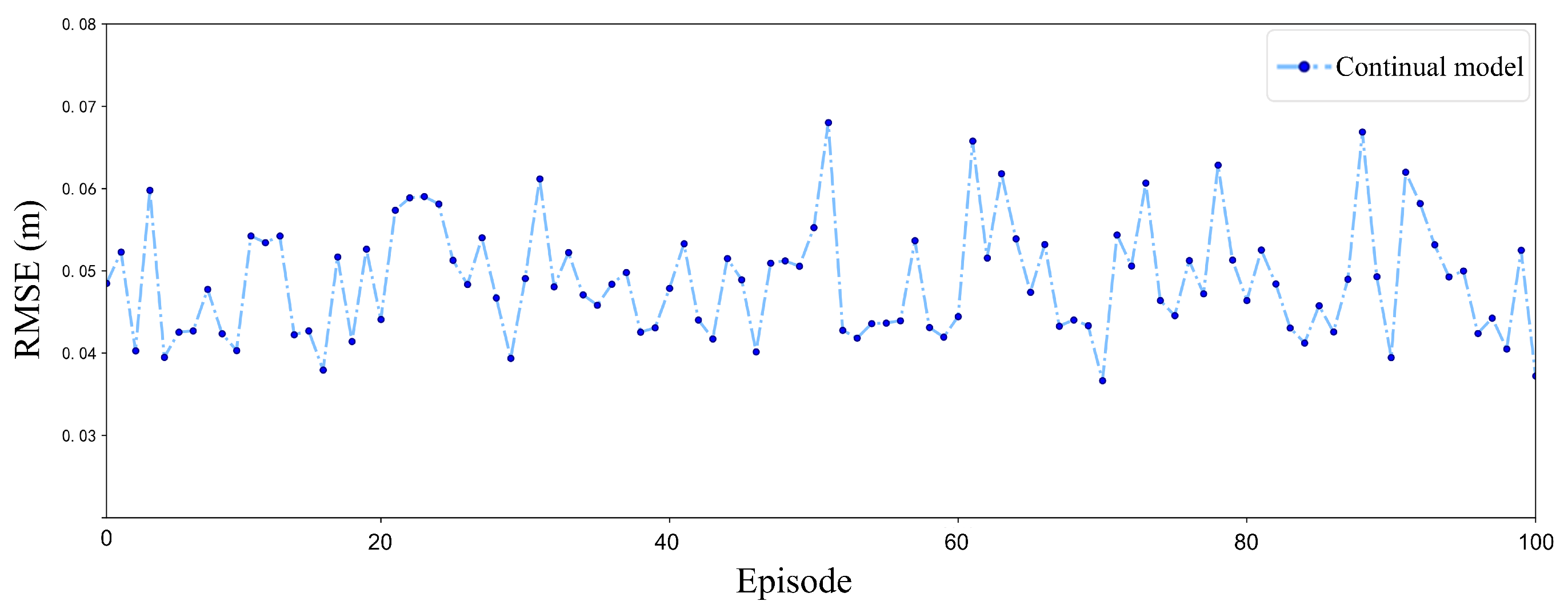

. The trajectory reference entailed a circular path with a radius of 1 m starting from the initial position at (1, 0, 3) m, moving at a constant linear velocity of 1 m/s. The evaluation criterion remained the RMSE of positional tracking, comparing two methods: the foundational model and the proposed continual reinforcement learning model. The training duration spanned 100 episodes to observe long-term adaptation trends.
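A stochastic wind schedule of this kind can be sketched as follows. The mean and standard deviation of the normal distribution used here are placeholders, since the actual parameters are not recoverable from this section; the function name and the fixed seed are likewise assumptions.

```python
import random
from typing import List, Tuple


def sample_wind_schedule(
    num_episodes: int = 100,
    resample_every: int = 10,  # a new wind sample every 10 episodes
    mean: float = 0.0,         # placeholder mean in m/s (not from the paper)
    std: float = 1.0,          # placeholder standard deviation in m/s (not from the paper)
    seed: int = 0,
) -> List[Tuple[float, float]]:
    """Per-episode (x-axis, y-axis) wind speeds, held constant between resamples."""
    rng = random.Random(seed)
    schedule: List[Tuple[float, float]] = []
    wind = (0.0, 0.0)
    for episode in range(num_episodes):
        if episode % resample_every == 0:
            # Independent normal samples for the two horizontal axes.
            wind = (rng.gauss(mean, std), rng.gauss(mean, std))
        schedule.append(wind)
    return schedule
```

Over 100 episodes this produces ten distinct wind conditions, each lasting ten episodes, which matches the resampling interval described above.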

Figure 15 illustrates the RMSE of the continual model training in a stochastic wind field over 100 episodes. The continual model exhibits notable stability throughout the training period. The RMSE values consistently remain low, predominantly below 0.06 m, indicating the efficacy of the CBP mechanism. This mechanism’s capacity to dynamically reset inefficient neurons enables the model to adjust to varying stochastic wind conditions without experiencing catastrophic forgetting. Consequently, when confronted with new wind patterns, the model promptly reconfigures its neural network to identify fresh optimal policies while retaining valuable insights from prior wind conditions.

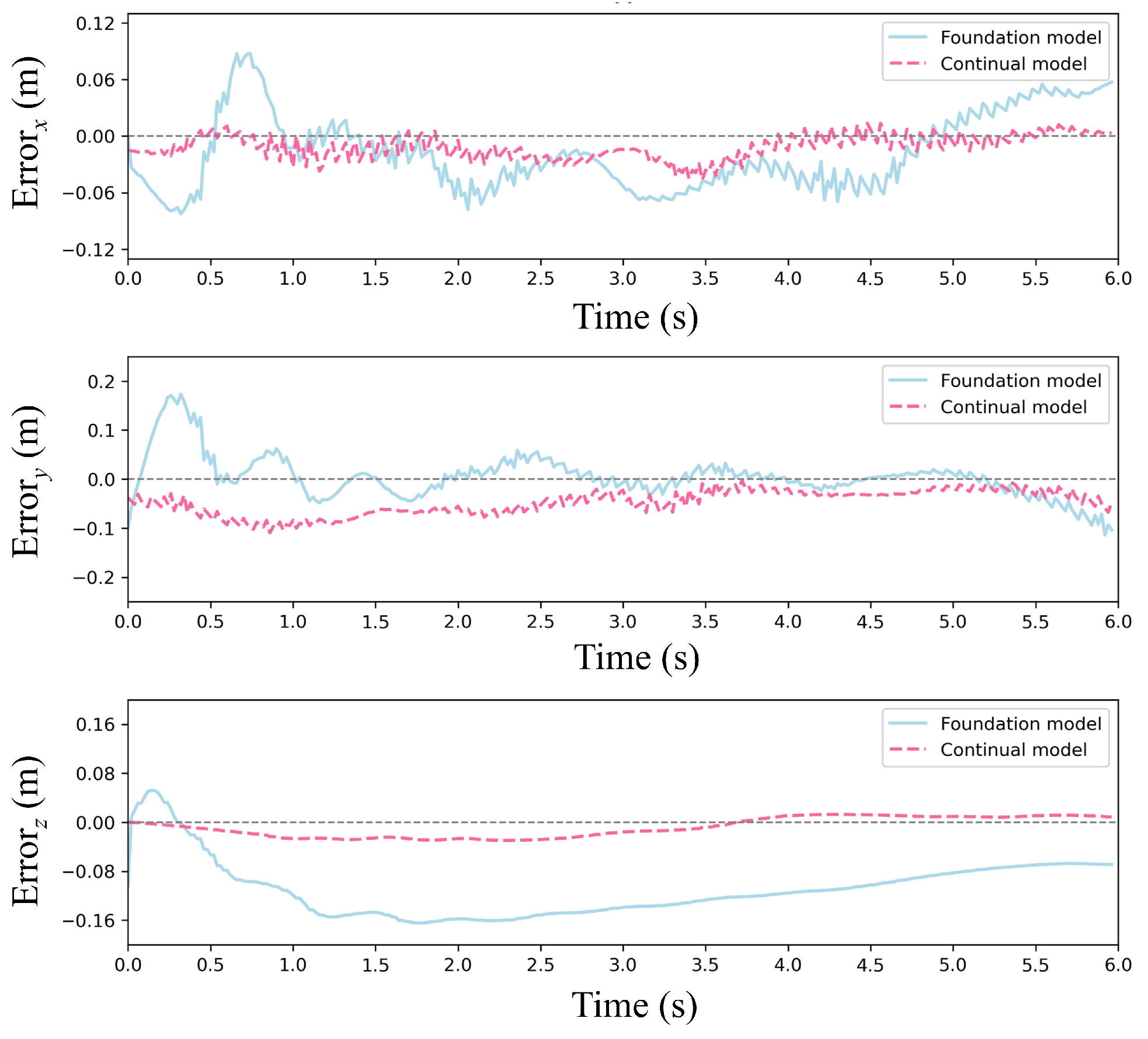

Figure 16 and Figure 17 present a comparative analysis of the foundation model and the continual model in a single episode under random wind conditions. Figure 16 depicts trajectory comparisons between the two models, showing that the continual model closely aligns with the reference trajectory in the three directions, even amidst significant random wind disturbances. Further insights from Figure 17, focusing on error dynamics, reveal that the continual model maintains positional errors within ±0.06 m across all three directions, swiftly recovering from transient wind gusts. In contrast, the foundation model exhibits persistent error accumulation, particularly evident in y-direction errors exceeding ±0.2 m in later episodes. This disparity arises from the utility-based neuron management of CBP, wherein underperforming neurons are reset to explore adaptive strategies in response to new disturbance patterns induced by random winds while retaining valuable features learned from prior wind conditions.

Stochastic wind disturbances emulate natural atmospheric conditions more accurately, featuring unstructured variations in magnitude and direction. This necessitates enhanced real-time adaptation and robustness from the controller. The outcomes in the stochastic scenario validate the superior performance of the suggested framework over the conventional PPO model in terms of real-time adaptability and robustness.