Enhanced Landslide Visualization and Trace Identification Using LiDAR-Derived DEM

Abstract

1. Introduction

2. Overview of the Study Area

3. Data

3.1. Data Collection

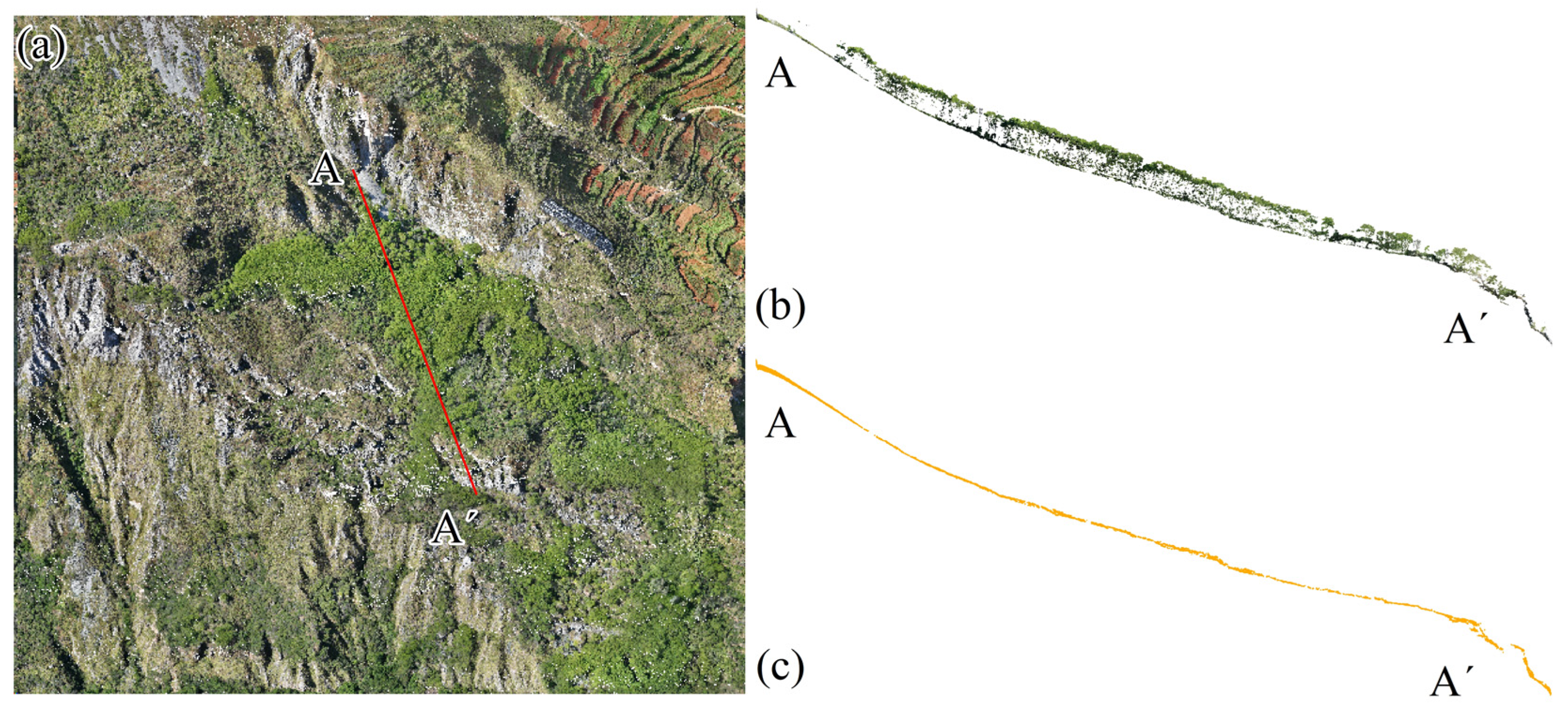

3.2. Data Preprocessing

3.3. Construction of LiDAR-DEM

4. Methods

4.1. LiDAR-DEM Visualization

4.1.1. Hillshade

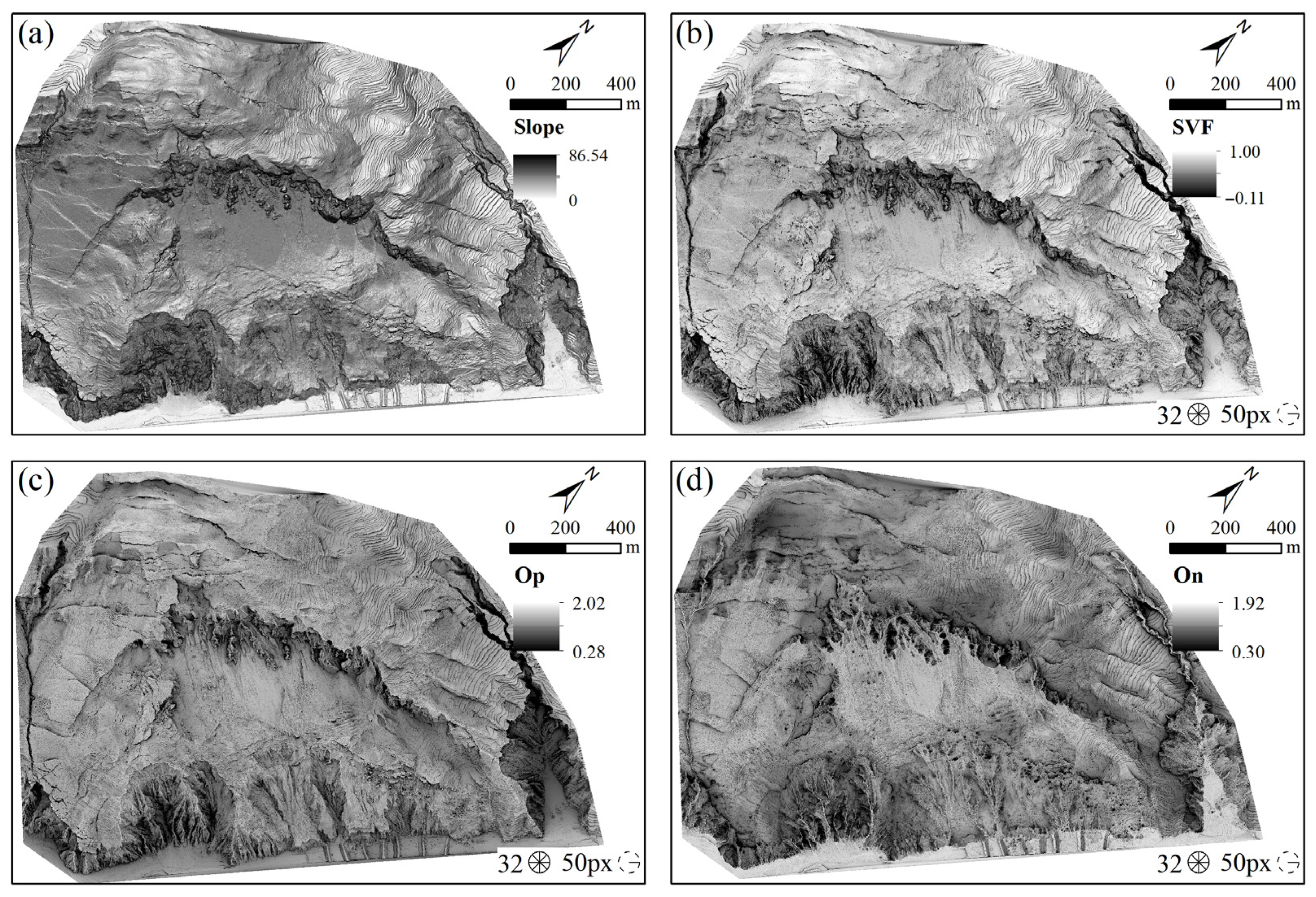

4.1.2. Terrain Slope

4.1.3. Sky View Factor

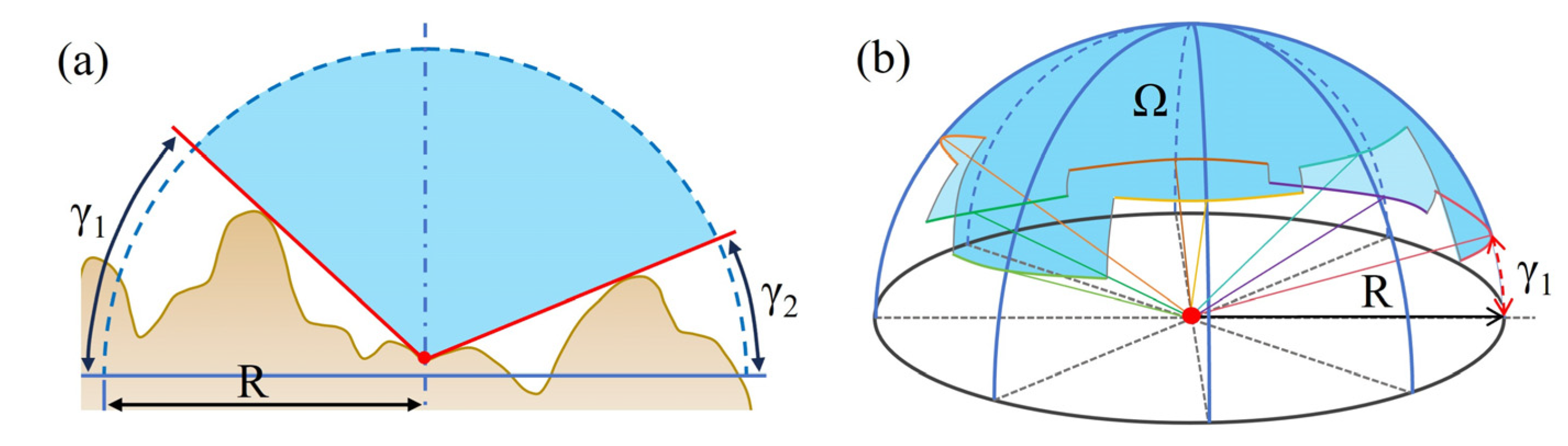

4.1.4. Terrain Openness

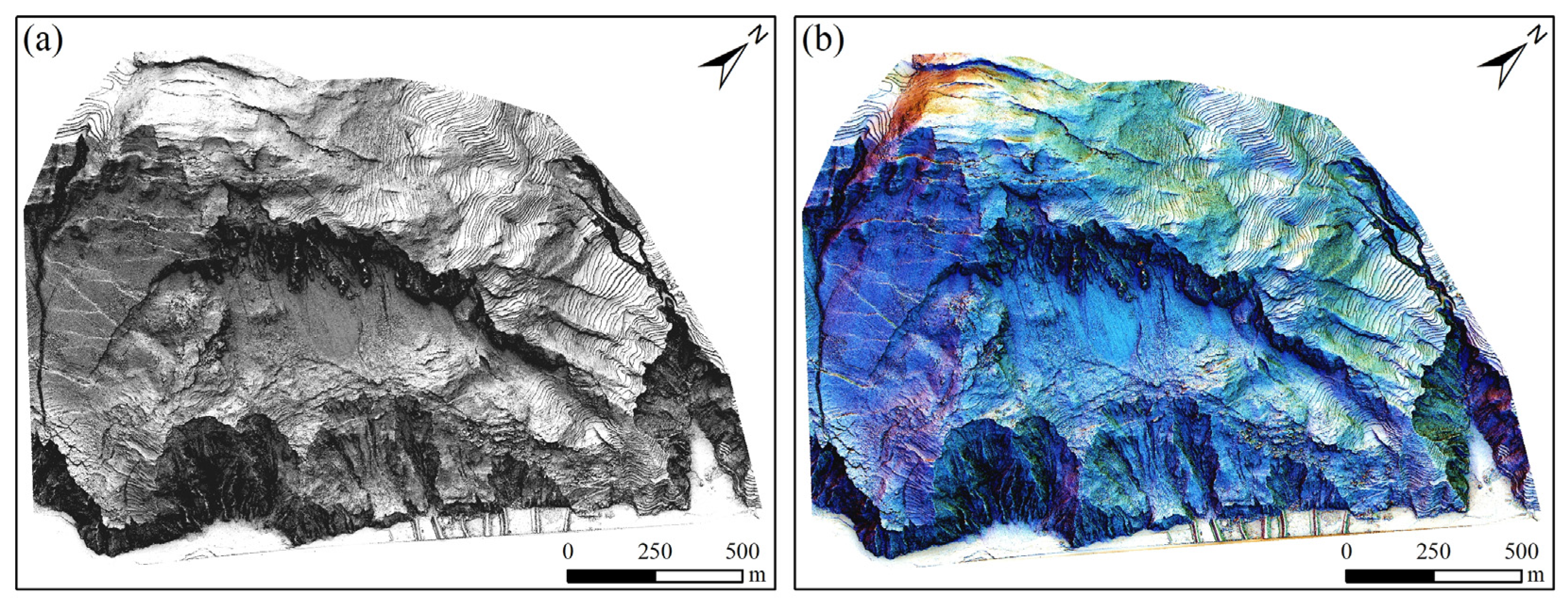

4.2. Image Fusion Technology

4.3. Recognition and Extraction of Landslide Trace

4.3.1. Concentration–Area Fractal Model

4.3.2. Landslide Trace Extraction

5. Results and Discussion

5.1. Analysis of LiDAR-DEM Visualization Results

5.2. Optimization of Calculation Parameters for SVF and Openness

5.2.1. Number of Horizontal Search Directions

5.2.2. Maximum Search Radius

5.3. Analysis of Enhanced Display Results

5.4. Landslide Trace Recognition and Extraction

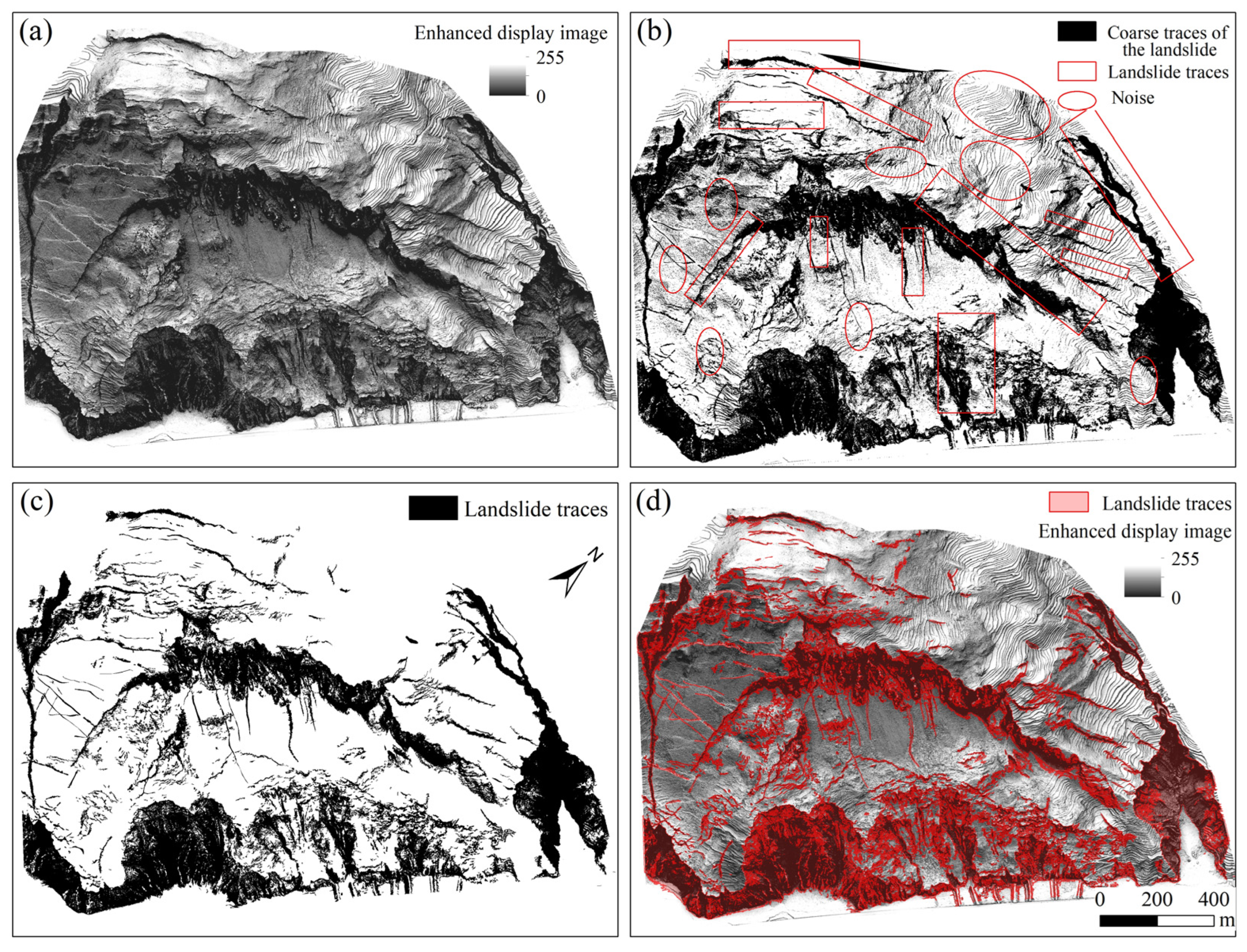

5.4.1. Coarse Identification of Landslide Traces Based on the C-A Fractal Model

5.4.2. Landslide Trace Denoising and Extraction

5.5. Landslide Trace Extraction Comparison

6. Conclusions

- (1) Enhanced display of landslide terrain based on LiDAR-DEM. Visualization images are first generated using slope, SVF, openness, and hillshade techniques. Pixel-level image fusion then integrates the features of these images into a single enhanced display image of the landslide. This image makes typical landslide geomorphic features easier to identify, including cracks at the rear edge, landslide walls, erosion gullies, landslide steps, and erosion areas at the front edge, which facilitates the accurate extraction of landslide information.

- (2) Landslide trace extraction based on enhanced display images. Using the differing image values of landslide and non-landslide areas in the enhanced display images, a segmentation threshold is obtained from a fractal model. The Mean-Shift algorithm is then applied for denoising. Together these steps achieve effective, semi-automated extraction of landslide traces and overcome the reliance of traditional methods on manual threshold selection.

- (3) Framework for enhanced landslide terrain display and trace recognition. This paper presents a method based on airborne LiDAR data that combines several terrain visualization techniques with image fusion to enhance the display of landslide terrain, and combines a fractal model with a denoising algorithm to recognize and extract landslide traces. By integrating these techniques, the framework improves both the visual presentation of terrain features and the accuracy and efficiency of trace recognition in landslide-prone areas.
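The threshold selection summarized in conclusion (2) can be illustrated with a short sketch. It is not the authors' implementation: it assumes the concentration-area relation is examined on a log-log plot of pixel value versus the number of pixels at or above that value, and that the threshold is taken at the breakpoint of a two-segment least-squares fit. The function names (`ca_curve`, `two_segment_break`, `ca_threshold`) and the parameters `n_levels` and `min_pts` are hypothetical.

```python
import numpy as np

def ca_curve(image, n_levels=50):
    """Return log10(level) and log10(area), where area is the pixel count >= level."""
    values = np.asarray(image, dtype=float).ravel()
    values = values[values > 0]  # logarithms need strictly positive values
    # endpoint=False keeps every level below the maximum, so no area is zero
    levels = np.linspace(values.min(), values.max(), n_levels, endpoint=False)
    areas = np.array([(values >= s).sum() for s in levels], dtype=float)
    return np.log10(levels), np.log10(areas)

def two_segment_break(x, y, min_pts=3):
    """Index k that minimizes the summed squared error of lines fitted to x[:k] and x[k:]."""
    best_sse, best_k = np.inf, None
    for k in range(min_pts, len(x) - min_pts + 1):
        sse = 0.0
        for xs, ys in ((x[:k], y[:k]), (x[k:], y[k:])):
            coeffs = np.polyfit(xs, ys, 1)  # straight-line fit for this segment
            sse += np.sum((np.polyval(coeffs, xs) - ys) ** 2)
        if sse < best_sse:
            best_sse, best_k = sse, k
    return best_k

def ca_threshold(image, n_levels=50):
    """Pixel value at the breakpoint of the two-segment log-log fit."""
    log_s, log_a = ca_curve(image, n_levels)
    k = two_segment_break(log_s, log_a)
    return 10 ** log_s[k]
```

A binary mask for coarse identification would then be `image >= ca_threshold(image)`. Whether landslide traces fall above or below the threshold depends on how the enhanced image is scaled, so the comparison direction is also an assumption.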

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Equipment | Metric | Parameter |
|---|---|---|
| LiDAR System | Range/m | 450 |
| | Range Accuracy/cm | ±2 |
| | Number of Returns | 5 |
| | Laser Wavelength/nm | 905 |
| POS | Horizontal Accuracy/cm | 1 |
| | Vertical Accuracy/cm | 1.5 |
| Mapping Camera | Effective Focal Length/mm | 2000 |
| | Focal Length/mm | 24 |
| Flight Parameters | Relative Flight Altitude/m | 180 |
| | Side Overlap/% | 30 |
| | Flight Speed/(m/s) | 10 |
| | Laser Pulse Rate/kHz | 240 |

| Image Type | Calculation Parameter Settings | Color Gradient | Fusion Method | Fusion Order | Opacity |
|---|---|---|---|---|---|
| SVF | Search radius: 5, number of search directions: 16 | Black to White | Multiply | 4 | 25% |
| Op | Search radius: 5, number of search directions: 16 | Black to White | Overlay | 3 | 50% |
| On | Search radius: 5, number of search directions: 16 | White to Black | Overlay | 2 | 50% |
| Slope | | Black to White | Luminosity | 1 | 50% |
| Hillshade | SEA: 35°, SAA: 45°, 135°, and 225° | Black to White | Opacity | 0 | 100% |
| RGB-PCA-Hillshade | | RGB | | | |
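The layer stack in the table above can be sketched as a sequence of pixel-level blends. This is a minimal illustration under several assumptions, not the authors' workflow: fusion order 0 is taken to be the bottom layer, every layer is treated as a single-band grayscale raster rescaled to [0, 1], the luminosity blend is simplified to its grayscale case (where it reduces to the top layer), and the RGB-PCA-Hillshade variant is omitted. The function names `normalize`, `blend`, and `fuse` are hypothetical.

```python
import numpy as np

def normalize(layer):
    """Linearly rescale a raster to [0, 1]; a constant raster maps to zeros."""
    layer = np.asarray(layer, dtype=float)
    span = layer.max() - layer.min()
    if span == 0:
        return np.zeros_like(layer)
    return (layer - layer.min()) / span

def blend(base, top, mode, opacity):
    """Blend `top` onto `base` with the given mode, then mix by opacity."""
    if mode == "multiply":
        mixed = base * top
    elif mode == "overlay":
        # Darken where the base is dark, lighten where it is bright
        mixed = np.where(base < 0.5,
                         2 * base * top,
                         1 - 2 * (1 - base) * (1 - top))
    elif mode == "luminosity":
        # Grayscale simplification: the result takes the top layer's brightness
        mixed = top
    else:
        raise ValueError(f"unknown blend mode: {mode}")
    return (1 - opacity) * base + opacity * mixed

def fuse(hillshade, slope, neg_open, pos_open, svf):
    """Apply the layers bottom-up in the assumed fusion order 0 to 4."""
    out = normalize(hillshade)                                  # order 0, 100%
    out = blend(out, normalize(slope), "luminosity", 0.50)      # order 1
    # "White to Black" gradient: invert negative openness before blending
    out = blend(out, 1 - normalize(neg_open), "overlay", 0.50)  # order 2
    out = blend(out, normalize(pos_open), "overlay", 0.50)      # order 3
    out = blend(out, normalize(svf), "multiply", 0.25)          # order 4
    return out
```

Because each blend is a convex mix of values in [0, 1], the fused raster also stays in [0, 1] and can be written out directly as an 8-bit image after scaling by 255.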

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lv, J.; Lu, C.; Ye, M.; Long, Y.; Li, W.; Yang, M. Enhanced Landslide Visualization and Trace Identification Using LiDAR-Derived DEM. Sensors 2025, 25, 4391. https://doi.org/10.3390/s25144391