Abstract

In recent years, photoplethysmography (PPG)-based heart rate detection has gained considerable attention as a cost-effective alternative to conventional electrocardiography (ECG) for applications in healthcare and fitness tracking. Although deep learning methods have shown promise in heart rate estimation and motion artifact removal from PPG signals recorded during physical activity, their computational requirements and need for extensive training data make them less practical under real-world conditions, where ground truth data is unavailable for calibration. This study presents a one-size-fits-all approach for heart rate estimation during physical activity that employs aggregation-based techniques to track heart rate and minimize the effects of motion artifacts, without relying on complex machine learning or deep learning techniques. We evaluate our method on four publicly available datasets (PPG-DaLiA, WESAD, IEEE_Training, and IEEE_Test), all recorded using wrist-worn devices, along with a new dataset, UTOKYO, which includes PPG and accelerometer data collected from a smart ring. The proposed method outperforms the CNN ensemble model on the PPG-DaLiA and IEEE_Test datasets, reducing the mean absolute error (MAE) by 1.45 bpm and 5.71 bpm, respectively, and demonstrating that effective signal processing techniques can match the performance of more complex deep learning models without requiring extensive computational resources or dataset-specific tuning.

1. Introduction

In recent years, there has been a rapid increase in the use of smart wearable devices, such as smartwatches and smart rings, which allow individuals to monitor various physiological parameters in real time [,,]. In particular, photoplethysmography (PPG) has gained considerable attention as a non-invasive and cost-effective alternative to conventional electrocardiography (ECG). Whereas an ECG device measures the heart’s electrical activity through electrodes placed on the body, a PPG device typically uses a light-emitting diode (LED) to shine light into the skin while a photodetector measures the amount of light that is either reflected from or transmitted through the tissue. Due to the pulsatile nature of the circulatory system, changes in blood volume modulate the amount of light absorbed, which can be used to derive health metrics, including heart rate (HR) [,].

In practice, PPG signals are often collected from sensors placed on the wrist or fingers, which can lead to motion artifacts (MAs), especially during physical activity. MAs can compromise the reliability of the measured PPG signal, leading to inaccuracies in heart rate detection and other derived metrics [,]. An accurate measurement of heart rate during exercise is especially useful as it provides valuable insights into intensity, recovery, and training effectiveness [,]. Beyond fitness monitoring, accurate heart rate measurement during physical movement has applications in clinical contexts, where it can assist in the monitoring of patients with cardiovascular conditions during daily activities [,,].

Despite the growing interest in PPG-based monitoring, the development of algorithms to process PPG signals and mitigate the effects of motion artifacts remains challenging. Spectrum-based approaches form the basis of many of these algorithms, leveraging time-frequency spectra derived from both PPG and acceleration signals to identify and remove periodic components caused by motion artifacts and isolate the frequencies associated with the heart rate []. Notable algorithms include SpaMa [] and its enhanced version, SpaMaPlus [], which rely on spectral analysis and focus on identifying and removing peaks in the acceleration spectrum from the PPG spectrum to mitigate motion artifacts. The highest remaining peak in the PPG spectrum is then used to calculate the heart rate. SpaMaPlus improves upon SpaMa by incorporating a mean filter over recent heart rate estimates and implementing a heart rate tracking step and a reset mechanism to handle abrupt changes in heart rate. More advanced algorithms include TROIKA [] and JOSS [], which leverage sparsity-based spectrum estimation and spectral peak tracking techniques to estimate heart rate during intense physical activity, such as treadmill running. TROIKA employs singular spectrum analysis (SSA) for signal decomposition and reconstruction, discarding the SSA components whose frequencies closely match those of motion artifacts detected from accelerometer signals. JOSS extends this approach by formulating a joint sparse signal recovery model, enabling improved spectrum estimation using both PPG and accelerometer data. The spectral peak tracking mechanism reinforces the heart rate estimation by assuming that the spectral peak corresponding to the heart rate remains constant or shifts minimally between overlapping time windows.

Many existing algorithms rely on subject-specific tuning, in which several adjustable parameters are tuned separately for each session; this limits their generalization to daily life, where ground truth data are unavailable for calibration []. Moreover, these algorithms are often evaluated on short-duration datasets, which do not capture the variability and complexity of longer-term recordings. Evaluation on longer datasets is critical for ensuring real-world applicability and robust performance across diverse scenarios. In particular, many landmark studies have relied heavily on the 2015 IEEE Signal Processing Cup datasets [,], which were collected in controlled laboratory settings over short durations with a limited range of physical activities.

More recently, deep learning methods have been proposed as powerful alternatives to classical methods of signal processing and PPG-based heart rate estimation [,,,]. Several studies have demonstrated that deep learning models, such as convolutional neural networks (CNNs) [] and recurrent neural networks (RNNs) [], can outperform traditional algorithms in mitigating motion artifacts and providing accurate heart rate measurements. Biswas et al. combined CNN and LSTM architectures to estimate heart rate and perform biometric identification using pre-processed PPG signals []. Similarly, Shen et al. [] and Shashikumar et al. [] employed a 50-layer ResNeXt CNN and a wavelet transform followed by a CNN, respectively, to detect atrial fibrillation from PPG signals. Reiss et al. [] introduced an end-to-end deep learning framework for heart rate estimation, leveraging convolutional neural networks to process the time-frequency spectra of synchronized PPG and accelerometer signals, which significantly outperformed classical approaches.

Unfortunately, deep learning approaches often come with significant computational costs, requiring high processing power and memory resources. Such requirements pose challenges for their integration into smart wearable devices, which are constrained by limited computational capabilities and battery life [,].

In this work, we demonstrate that it is possible to achieve performance comparable to state-of-the-art deep learning models without relying on machine learning. Our proposed method highlights that, in certain applications, effective signal processing and algorithmic innovations can bridge the gap traditionally addressed by deep learning. This is particularly relevant for scenarios where machine learning may not be feasible due to computational constraints, limited access to annotated training datasets, or preferences for simpler, interpretable solutions. By advancing non-machine learning approaches, we provide a valuable alternative that expands the toolbox of techniques available for wearable applications, supporting the development of lightweight and scalable solutions.

2. Materials and Methods

2.1. Datasets

Reiss et al. [] provide a comprehensive review of the most widely used PPG datasets for HR detection, highlighting their characteristics and summarizing the performance of the common algorithms evaluated on them. In this paper, we evaluate our novel algorithm on four of these publicly available datasets, in addition to a new dataset, UTOKYO, which includes 68 PPG and accelerometer recordings of activity sessions collected using a smart ring.

All the publicly available datasets evaluated in this study were recorded using a wrist-worn PPG device and an ECG device for the ground truth. To match the methods used in [] and to maintain uniformity across all the datasets, the ground truth for the heart rate is calculated over an 8 s sliding window, with a window shift of 2 s. Likewise, the PPG- and accelerometer-derived heart rates are calculated with the same sliding window size and window shift. The choice of the window duration is also supported by the fact that the heart rate does not change instantaneously.
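To make the 8 s window and 2 s shift concrete, the sketch below computes the start index of every complete window and averages a 1 Hz reference HR series (such as the per-second output of the chest strap described for UTOKYO) over each window. The function names are hypothetical; only the 8 s and 2 s values come from the text.

```python
from __future__ import annotations

import numpy as np


def window_starts(n_samples: int, fs: float, win_s: float = 8.0, shift_s: float = 2.0) -> np.ndarray:
    """Start indices of every complete win_s-second window, advanced by shift_s seconds."""
    win = int(round(win_s * fs))
    shift = int(round(shift_s * fs))
    if n_samples < win:
        return np.zeros(0, dtype=int)
    return np.arange(0, n_samples - win + 1, shift)


def windowed_reference_hr(hr_1hz: np.ndarray, win_s: float = 8.0, shift_s: float = 2.0) -> np.ndarray:
    """Mean of a 1 Hz reference HR series (bpm) over each sliding window."""
    hr_1hz = np.asarray(hr_1hz, dtype=float)
    win = int(round(win_s))  # at 1 Hz there is one sample per second
    starts = window_starts(hr_1hz.size, fs=1.0, win_s=win_s, shift_s=shift_s)
    return np.array([hr_1hz[s:s + win].mean() for s in starts])
```

For a 60 s recording at 32 Hz, `window_starts(60 * 32, 32.0)` yields 27 windows; the same helper applies to the 16 s window used for the UTOKYO PPG by passing `win_s=16.0`.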

The details of the datasets are outlined in the following paragraphs.

1. PPG-DaLiA: This dataset includes a single-channel PPG signal sampled at 64 Hz and three-axis accelerometer data sampled at 32 Hz. To maintain uniformity in the analysis, all the signals were resampled to 32 Hz. The dataset comprises 36 h of data from 15 subjects performing a sequence of daily activities, including sitting, climbing stairs, playing table soccer, cycling, driving, having lunch, walking, and working. More details on this dataset are provided in [].

2. WESAD: This dataset includes a single-channel PPG signal sampled at 64 Hz and three-axis accelerometer data sampled at 32 Hz. For uniformity, all the signals were resampled to 32 Hz. The dataset comprises data from 15 participants, recorded for approximately 100 min each, and includes a mix of activities, such as reading, watching videos, preparing for and giving a speech, and meditating. More details on this dataset are provided in [].

3. IEEE_Training: This dataset was created for the 2015 IEEE Signal Processing Cup. It includes two-channel PPG signals and three-axis accelerometer data, all sampled at 125 Hz. The signals were down-sampled to 25 Hz to match the sampling rate used in the original paper []. The dataset contains 12 treadmill sessions of approximately 5 min each, performed at varying speeds by different subjects. More details on this dataset are provided in [,,].

4. IEEE_Test: This dataset was also developed for the 2015 IEEE Signal Processing Cup, using the same recording parameters and ground truth conditions as IEEE_Training. However, it consists of 10 sessions of arm activities recorded from 8 different subjects: 4 sessions of mixed arm exercises and 6 sessions of intensive arm exercises, each lasting approximately 5 min. More details on this dataset are provided in [,,].

5. UTOKYO: This dataset includes 7 warm-up sessions, 14 walking sessions, and 47 running sessions; of the running sessions, 35 were performed outdoors and 12 on an indoor treadmill. The walking and running sessions lasted between 5 and 10 min, whereas the warm-up sessions lasted between 10 and 15 min. The data was collected from 20 different subjects, each of whom recorded at least 2 sessions. The PPG data was recorded at a sampling rate of 182 Hz, while the three-axis acceleration data was sampled at 52 Hz. For uniformity, the signals were resampled to 39 Hz. The ground truth heart rate was obtained from a chest-worn ECG device as the mean heart rate over 8 s sliding windows with a 2 s shift. However, when deriving the heart rates from the PPG and acceleration signals, a longer sliding window of 16 s (with the same 2 s shift) was used instead of the 8 s window applied to the other datasets, because the signals of this dataset contained more noise and shorter windows could be left empty after outlier removal. During the analysis, the start times of the smart ring and ECG recordings were aligned to ensure synchronization.
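The rate changes listed above (for example, 64 Hz PPG to 32 Hz, or 182 Hz PPG and 52 Hz acceleration to 39 Hz) are done in Section 2.2.2 by cubic interpolation: each signal is mapped onto a uniformly spaced time axis derived from its original timestamps and then interpolated onto a new uniform grid. A minimal sketch of that two-step reading, using SciPy's `CubicSpline`; the function name and the choice of spanning the first and last timestamps are assumptions.

```python
from __future__ import annotations

import numpy as np
from scipy.interpolate import CubicSpline


def resample_cubic(t: np.ndarray, x: np.ndarray, fs_out: float) -> tuple[np.ndarray, np.ndarray]:
    """Map x onto an evenly spaced axis spanning its timestamps, then cubic-interpolate at fs_out."""
    t = np.asarray(t, dtype=float)
    x = np.asarray(x, dtype=float)
    # Step 1: uniformly spaced time axis derived from the first and last original timestamps.
    t_uniform = np.linspace(t[0], t[-1], x.size)
    # Step 2: cubic spline evaluated on a new uniform grid at the target rate.
    spline = CubicSpline(t_uniform, x)
    t_new = np.arange(t_uniform[0], t_uniform[-1], 1.0 / fs_out)
    return t_new, spline(t_new)
```

Interpolation alone does not low-pass filter; in the described pipeline the 0.5 to 5 Hz band-pass filter runs before resampling, which keeps the retained content well below the Nyquist frequency of the 25 to 39 Hz target rates.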

Table 1 summarizes the mean and standard deviation of the ECG-derived reference heart rates across all the datasets, with the UTOKYO dataset further specified by activity type.

Table 1.

Mean and standard deviation of ECG-derived heart rates in beats per minute (bpm) across datasets.

2.2. Methodology

2.2.1. Data Collection for the UTOKYO Dataset

The dataset was collected from 20 participants of Japanese ethnicity between 20 and 30 years of age, all of whom engaged in regular exercise at the time of the data collection. The activities included running, walking, and warm-up exercises. The PPG data was collected using a smart ring (SOXAI RING 1) worn on the index or middle finger of the dominant hand, depending on participant comfort. The participants were fitted with appropriately sized rings, and the device’s position was adjusted at the start of every session to improve signal acquisition. The smart ring recorded two-channel PPG signals using red (655 nm) and infrared (940 nm) light sources. The data was transmitted to a smartphone in real time via Bluetooth Low Energy (BLE) and saved to the smartphone’s storage every 30 s. Recordings shorter than 30 s were excluded from the analysis. The reference HR data was collected at a rate of 1 Hz using a chest-worn Polar H10 N ECG device from Polar Inc.

2.2.2. Proposed Algorithm

The following algorithm was applied uniformly to both the publicly available datasets and the UTOKYO dataset, without any subject-specific or dataset-specific parameter tuning. Better performance can be achieved with the presented method by applying dataset-specific tuning, but the parameters described in this section are intended as a one-size-fits-all solution for cases where calibration is not available.

- A.

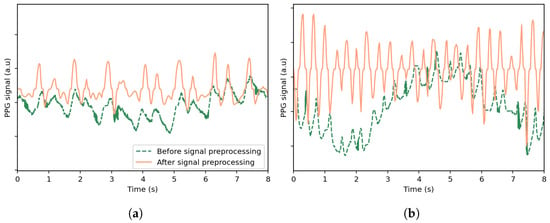

- Signal PreprocessingOutliers in the PPG signal are identified as values that are either negative or exceed three standard deviations above the mean of the positive values in the signal. As such, a value p[n] in the PPG signal is considered an outlier ifwhere and are the mean and standard deviation computed over the positive values of a specified PPG signal.Outlier values are subsequently replaced using linear interpolation between neighboring valid values to ensure continuity. A bandpass filter is then applied to the signal using a fifth-order Butterworth filter to retain frequencies between 0.5 Hz and 5 Hz. Resampling is also performed using cubic interpolation to ensure that the PPG and accelerometer signals have the same sampling frequency. Each signal is first mapped onto a uniformly spaced time axis derived from its original timestamps and then interpolated onto a new uniform time grid at the desired sampling rate. For uniformity, the signals are analyzed using a sliding window and a window shift whose sizes match the analysis of the publicly available datasets. A median filter with a kernel size of 7 and a moving average filter with a window size of 3 are also applied to reduce noise and smooth the signal.These operations are defined in the following equations:where is the output of the median filter, and is the output of the moving average filter.Figure 1 illustrates the effects of preprocessing on selected PPG signal segments from the UTOKYO dataset for both walking and running conditions. As shown, the processed signals exhibit clearer pulse peaks and reduced baseline drift.

Figure 1. Comparison of the original PPG signal and the signal after preprocessing, shown for selected time windows from the UTOKYO dataset for (a) a walking session and (b) a running session. The preprocessing steps include outlier removal with linear interpolation, bandpass filtering, resampling, and smoothing.
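A compact sketch of the preprocessing chain described above, using NumPy and SciPy (resampling is left out here). Several details are assumptions not stated in the text: the population standard deviation for $\sigma_{+}$, zero-phase filtering with `sosfiltfilt` (a single forward pass would also fit the description), a centered 3-point moving average, and filtering of the whole recording rather than each window. Note that `butter(5, ..., btype="bandpass")` designs a fifth-order prototype, which yields a tenth-order band-pass filter overall.

```python
from __future__ import annotations

import numpy as np
from scipy.signal import butter, medfilt, sosfiltfilt


def remove_outliers(p: np.ndarray) -> np.ndarray:
    """Flag negative samples and samples above mu+ + 3*sigma+, then linearly interpolate them."""
    p = np.asarray(p, dtype=float)
    pos = p[p > 0]
    mu, sigma = pos.mean(), pos.std()  # statistics of the positive values only
    bad = (p < 0) | (p > mu + 3.0 * sigma)
    n = np.arange(p.size)
    out = p.copy()
    # np.interp holds the nearest valid value for outliers at either end of the signal.
    out[bad] = np.interp(n[bad], n[~bad], p[~bad])
    return out


def bandpass(x: np.ndarray, fs: float, low: float = 0.5, high: float = 5.0, order: int = 5) -> np.ndarray:
    """Butterworth band-pass (0.5-5 Hz), applied forward and backward for zero phase."""
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, x)


def smooth(x: np.ndarray) -> np.ndarray:
    """7-sample median filter followed by a centered 3-sample moving average."""
    y_med = medfilt(x, kernel_size=7)  # zero-padded at the edges
    return np.convolve(y_med, np.ones(3) / 3.0, mode="same")


def preprocess(p: np.ndarray, fs: float) -> np.ndarray:
    # In the described pipeline, resampling sits between band-pass filtering and smoothing.
    return smooth(bandpass(remove_outliers(p), fs))
```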

Figure 1. Comparison of the original PPG signal and the signal after preprocessing, shown for selected time windows from the UTOKYO dataset for (a) a walking session and (b) a running session. The preprocessing steps include outlier removal with linear interpolation, bandpass filtering, resampling, and smoothing. - B.

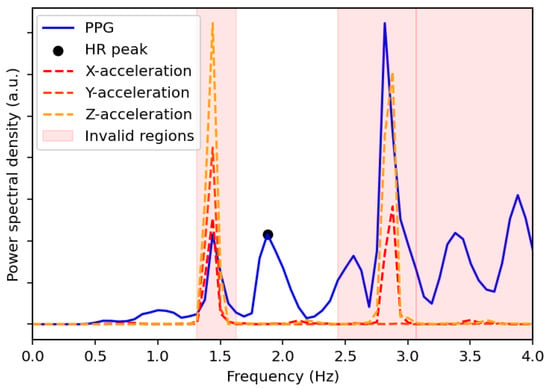

- Spectral AnalysisThe power spectral density (PSD) and its corresponding frequencies are computed using Welch’s method for the three directions of the acceleration signals and the PPG signal. The PSD peaks are subsequently identified for each signal. Only the frequencies of the two highest PSD peaks from each acceleration direction are retained, resulting in a maximum of six candidate frequencies, which can be combined to form a full set :where and denote the frequencies at the first and second highest PSD peaks of the acceleration signal in the direction . To avoid redundancy, duplicate frequencies are removed.The frequencies of the PPG signal within a defined range of ±0.1 Hz around each unique peak frequency in are flagged for removal. The removal range is further extended to include neighboring frequencies if their PSD values are within 5% of the PSD of the removed PPG peak. Additionally, all the frequencies beyond a threshold, defined as 5% greater than the highest peak frequency in , are also removed. The heart rate, HR, for the given window is then determined from the frequency, , corresponding to the highest valid peak remaining in the PPG spectrum using the following equation:Figure 2 shows the PSDs of the PPG signal and acceleration signals as a function of frequency for a specified time window of the UTOKYO dataset. The second highest peak in the spectrum of the z-axis acceleration aligns with the strongest peak in the PPG spectrum, suggesting that this dominant PPG component is a possible motion artifact. This is confirmed by the reference heart rate for the specified time window, which coincides with the highest valid PPG peak located at around 1.88 Hz (113 bpm).

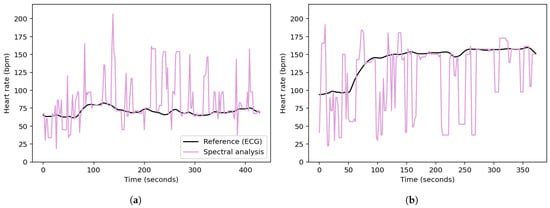


Figure 2. Power spectral density comparison between the PPG signal and the x-, y-, and z-acceleration signals for a selected time window of the UTOKYO dataset. The estimated HR peak from the spectral analysis is labeled; it corresponds to 113 bpm and matches the ground truth measurement. The regions with invalid peaks are shaded in red.

Examples of the spectral analysis step applied to selected walking and running sessions from the UTOKYO dataset are shown in panels (a) and (b) of Figure 3, respectively.

Figure 3. Heart rates obtained from the spectral analysis of (a) a walking session and (b) a running session from the UTOKYO dataset.
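The per-window spectral analysis can be sketched as follows. The text does not give Welch's segment length or FFT size, so segments of half the window (with SciPy's default 50% overlap) and 1024-point zero-padding are assumptions. So are two readings of the text: the cut-off is taken as 5% above the largest frequency in $F_{\mathrm{acc}}$, and each removal range is extended outward over contiguous bins. `estimate_hr` and its helpers are hypothetical names.

```python
from __future__ import annotations

import numpy as np
from scipy.signal import find_peaks, welch


def top_peak_freqs(f: np.ndarray, pxx: np.ndarray, k: int = 2) -> np.ndarray:
    """Frequencies of the k highest local maxima of a PSD."""
    idx, _ = find_peaks(pxx)
    top = idx[np.argsort(pxx[idx])[::-1][:k]]
    return f[top]


def psd(x: np.ndarray, fs: float, nfft: int = 1024) -> tuple[np.ndarray, np.ndarray]:
    # Assumption: segments of half the window length, SciPy's default 50% overlap, zero-padded to nfft.
    nperseg = max(8, x.size // 2)
    return welch(x, fs=fs, nperseg=nperseg, nfft=max(nfft, nperseg))


def estimate_hr(ppg: np.ndarray, acc_xyz: list[np.ndarray], fs: float, tol: float = 0.1,
                ext_rel: float = 0.05, cutoff_rel: float = 0.05) -> float | None:
    """Return the window HR in bpm, or None if no valid PPG peak remains."""
    f, p_ppg = psd(np.asarray(ppg, dtype=float), fs)
    cands: set[float] = set()
    for axis in acc_xyz:
        fa, p_acc = psd(np.asarray(axis, dtype=float), fs)
        cands.update(np.round(top_peak_freqs(fa, p_acc), 6).tolist())  # the set drops duplicates
    valid = np.ones(f.size, dtype=bool)
    for fc in cands:
        band = np.abs(f - fc) <= tol  # +/-0.1 Hz around each acceleration peak
        if not band.any():
            continue
        ref = p_ppg[band].max()  # PSD of the PPG peak being removed
        lo, hi = np.flatnonzero(band)[[0, -1]]
        # Extend the removal range while neighbouring bins stay within ext_rel of that PSD.
        while lo > 0 and abs(p_ppg[lo - 1] - ref) <= ext_rel * ref:
            lo -= 1
        while hi < f.size - 1 and abs(p_ppg[hi + 1] - ref) <= ext_rel * ref:
            hi += 1
        valid[lo:hi + 1] = False
    if cands:
        # Interpretation: drop everything more than 5% above the largest frequency in F_acc.
        valid &= f <= (1.0 + cutoff_rel) * max(cands)
    peaks, _ = find_peaks(p_ppg)
    peaks = peaks[valid[peaks]]
    if peaks.size == 0:
        return None
    return float(60.0 * f[peaks[np.argmax(p_ppg[peaks])]])
```

In a synthetic window where the strongest PPG component coincides with the dominant acceleration frequency, that component is masked and the next valid peak is returned, mirroring the situation shown in Figure 2.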

Figure 3. Heart rates obtained from the spectral analysis of (a) a walking session and (b) a running session from the UTOKYO dataset. - C.

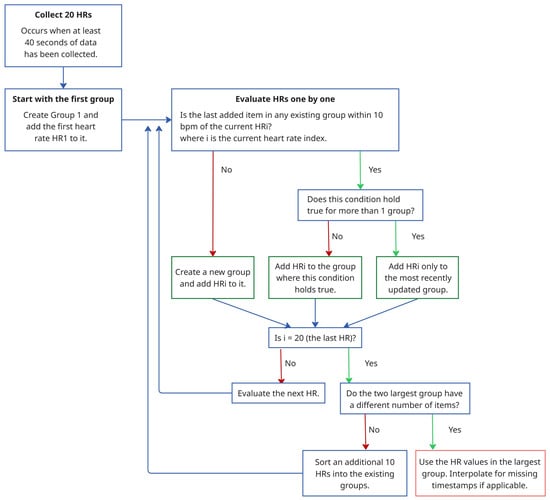

- Stability- and Aggregation-Based Heart Rate Tracking (SAB-HRT) To ensure accurate heart rate estimation, a heart rate tracking step is implemented. Like many existing algorithms, this step aims to prevent large fluctuations in heart rate between subsequent measurements in a sequence. This step can be applied continuously and begins when 20 HR values are collected, which occurs every 40 s when using a sliding window with a 2 s shift. The algorithm traverses these HR values sequentially to form stability groups, starting by assigning the first HR value to an initial group. Then, for each subsequent HR, the algorithm verifies whether the difference between the last element of each group is within 10 bpm of the current HR. If the difference is within this threshold for one or more groups, the HR is added to the most recently updated group only. Otherwise, a new group is created to accommodate the HR value. Thus, each HR value should be assigned to a single group only. After all the HR values are grouped, the largest group is selected as representing the HR values for this time period. At timestamps with missing HR values within the selected group, the HR values are interpolated to ensure continuity. If multiple groups have the same size, the algorithm extends the analysis window by half its original size (20 s) and repeats the grouping process. The logic for this process is visualized in the flowchart in Figure 4. As a final step, a continuous moving average filter with a 30 s window is applied to smooth the output signal and enhance signal readability. Importantly, the 30 s window is a conservative choice; our tests show that the size can be reduced to 10 s while keeping the MAE difference minimal.
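The grouping rule can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: the function names are hypothetical, NaN marks a window without a spectral estimate, the window is extended repeatedly (by 10 values, i.e., 20 s at a 2 s shift) for as long as the tie persists and data remain, and a tie that survives to the end of the data is resolved in favor of the most recently updated group. Only one 40 s block is processed; continuous operation would call it on successive blocks.

```python
from __future__ import annotations

import numpy as np


def stability_groups(hr: np.ndarray, tol: float = 10.0) -> list[list[int]]:
    """Group HR indices so that each value is within tol bpm of its group's last value."""
    groups: list[list[int]] = []
    for i, h in enumerate(hr):
        if np.isnan(h):
            continue  # assumption: windows without an estimate are skipped
        matches = [g for g in groups if abs(hr[g[-1]] - h) <= tol]
        if matches:
            # The most recently updated group is the one whose last index is largest.
            max(matches, key=lambda g: g[-1]).append(i)
        else:
            groups.append([i])
    return groups


def select_group(hr: np.ndarray, n_base: int = 20, tol: float = 10.0) -> tuple[list[int], int]:
    """Return the indices of the largest group and the number of values analyzed."""
    hr = np.asarray(hr, dtype=float)
    n = min(n_base, hr.size)
    while True:
        groups = stability_groups(hr[:n], tol)
        if not groups:
            return [], n
        best = max(len(g) for g in groups)
        tied = [g for g in groups if len(g) == best]
        if len(tied) == 1 or n >= hr.size:
            # Assumption: a tie that persists once the data run out goes to the most recently updated group.
            return max(tied, key=lambda g: g[-1]), n
        n = min(n + n_base // 2, hr.size)  # extend by half the base window (10 values = 20 s)
```

Because a value joins only the most recently updated compatible group, a brief run of motion-locked estimates (for example, around 150 bpm) forms its own small group and is discarded when the larger, stable group is selected.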

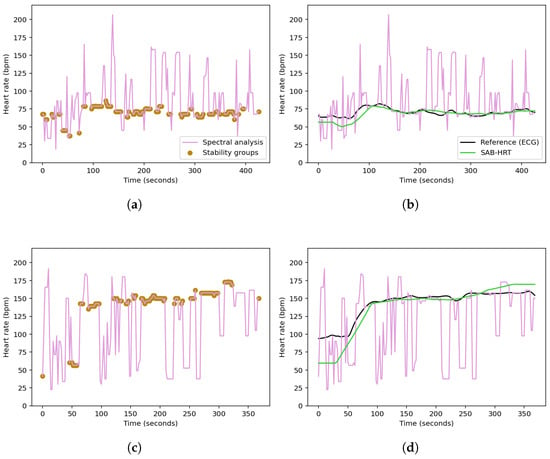

Figure 4. Flowchart illustrating the SAB-HRT procedure.

Panels (a) and (c) of Figure 5 display the stability group points identified by the SAB-HRT method from the spectral heart rate estimates of the walking and running sessions shown in Figure 3, respectively. Figure 5b,d illustrate how the selected points are connected and smoothed to address abrupt transitions.


Figure 5. Identification of the stability group points from the spectral heart rate estimates for a specified (a) walking session and (c) running session from the UTOKYO dataset. The post-processing step, in which the selected points are connected and smoothed to produce a continuous heart rate signal, is shown in (b) and (d), respectively.
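The connect-and-smooth step shown in Figure 5b,d can be sketched as linear interpolation over the selected group's span followed by a moving average. At a 2 s shift, the 30 s window corresponds to 15 values and the 10 s alternative to 5 values. Assumptions: the moving average is causal (trailing), since the text only calls it "continuous", and values outside the selected group's span are left as NaN. Function names are hypothetical.

```python
from __future__ import annotations

import numpy as np


def fill_selected(hr: np.ndarray, selected: list[int], shift_s: float = 2.0) -> np.ndarray:
    """Keep the selected group's values and linearly interpolate the gaps inside its span."""
    hr = np.asarray(hr, dtype=float)
    out = np.full(hr.size, np.nan)
    sel = np.sort(np.asarray(selected, dtype=int))
    if sel.size == 0:
        return out
    t = np.arange(hr.size) * shift_s  # window timestamps (s)
    span = slice(sel[0], sel[-1] + 1)
    out[span] = np.interp(t[span], t[sel], hr[sel])
    return out


def trailing_mean(x: np.ndarray, window_s: float = 30.0, shift_s: float = 2.0) -> np.ndarray:
    """Causal moving average over the last window_s seconds, ignoring NaNs."""
    n = max(1, int(round(window_s / shift_s)))  # 30 s at a 2 s shift -> 15 values
    x = np.asarray(x, dtype=float)
    out = np.full(x.size, np.nan)
    for i in range(x.size):
        seg = x[max(0, i - n + 1): i + 1]
        seg = seg[~np.isnan(seg)]
        if seg.size:
            out[i] = seg.mean()
    return out
```

For example, with estimates `[80, 150, 84, 86, 151, 90]` and the selected indices `[0, 2, 3, 5]`, the rejected values at indices 1 and 4 are replaced by 82 and 88 before smoothing.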

3. Results and Discussion

The results are presented as the mean absolute error (MAE) in beats per minute (bpm) for the current method without any subject-specific or dataset-specific tuning, with the same parameters applied uniformly across all the datasets. The MAE results for the publicly available datasets are compared to those of the classical methods (SpaMa, SpaMaPlus, and Schaeck2017) as well as the average and ensemble CNN models presented in [], which employed leave-one-session-out cross-validation.
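For reference, the MAE of a session is $\frac{1}{N}\sum_{i=1}^{N} \lvert \widehat{\mathrm{HR}}_i - \mathrm{HR}_i \rvert$ over its $N$ windows. The text reports MAE values with a ± spread but does not state how the spread is computed; the sketch below assumes it is the (population) standard deviation of per-subject MAEs and skips windows where either value is missing. The function names are hypothetical.

```python
from __future__ import annotations

import numpy as np


def mae(est, ref) -> float:
    """Mean absolute error (bpm) over windows where both estimate and reference exist."""
    est = np.asarray(est, dtype=float)
    ref = np.asarray(ref, dtype=float)
    ok = ~(np.isnan(est) | np.isnan(ref))
    return float(np.mean(np.abs(est[ok] - ref[ok])))


def mae_summary(pairs) -> tuple[float, float]:
    """Mean and population standard deviation of per-subject MAEs from (est, ref) pairs."""
    maes = np.array([mae(e, r) for e, r in pairs])
    return float(maes.mean()), float(maes.std())
```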

The results obtained demonstrate that non-machine learning solutions can serve as a viable alternative for heart rate estimation. Specifically, on the PPG-DaLiA dataset, our proposed method reduced the MAE by 1.45 bpm relative to the CNN ensemble, as shown in Table 2: whereas the CNN ensemble previously achieved the lowest MAE of 7.65 ± 4.2 bpm, our method obtained an MAE of 6.2 ± 2.0 bpm. On the WESAD dataset, the MAEs of the CNN ensemble (7.47 ± 3.3 bpm) and our method (8.1 ± 2.6 bpm) differed by less than 1 bpm, and our method outperformed the classical approaches (SpaMa, SpaMaPlus, and Schaeck2017) as well as the CNN average, as detailed in Table 3. On the IEEE_Test dataset, our method also achieved a lower MAE (10.8 ± 9.6 bpm) than all the other methods except SpaMa (9.2 ± 11.4 bpm).

Table 2.

Evaluation results on the dataset PPG-DaLiA achieved with the three classical methods (leave-one-session-out cross-validation), the best performing CNN architecture (ensemble prediction; results averaging 7 repetitions), and this paper’s proposed algorithm. The results are given in MAE (bpm) across all the subjects.

Table 3.

Evaluation results on the dataset WESAD achieved with the three classical methods (leave-one-session-out cross-validation), the best performing CNN architecture (ensemble prediction; results averaging 7 repetitions), and this paper’s proposed algorithm. The results are given in MAE (bpm) across all the subjects.

However, our algorithm did not perform equally well across all the datasets. Namely, on the IEEE_Training dataset, our method yielded an MAE of 6.5 ± 3.6 bpm, which was higher than that of all the other evaluated methods except SpaMa. For instance, the CNN ensemble achieved a lower MAE of 4 ± 5.4 bpm. Detailed results for the IEEE_Training and IEEE_Test datasets are shown in Table 4 and Table 5, respectively.

Table 4.

Evaluation results on the dataset IEEE_Training achieved with the three classical methods (leave-one-session-out cross-validation), the best performing CNN architecture (ensemble predictions), and this paper’s proposed algorithm. The results are given in MAE (bpm) across all the subjects.

Table 5.

Evaluation results on the dataset IEEE_Test achieved with the three classical methods (leave-one-session-out cross-validation), the best performing CNN architecture (ensemble predictions), and this paper’s proposed algorithm. The results are given in MAE (bpm) across all the subjects.

One objective of collecting the UTOKYO dataset was to explore the use of finger PPG and accelerometer signals, as all the other datasets were recorded using wrist-worn devices. A major challenge when analyzing signals from a smart ring is the high level of motion artifacts. These elevated noise levels arise because the ring is prone to rotating and shifting around the finger during movement, unlike wrist-worn devices, such as smartwatches, which usually maintain a fixed orientation. Additionally, the 35 outdoor running sessions exhibited greater motion artifacts than the sessions recorded indoors under laboratory conditions, potentially due to increased exposure to ambient light and greater variability in movements.

To quantify the influence of motion artifacts on the PPG signals of each dataset, we calculated three signal quality indices (SQIs) previously defined by Song et al. []. The P index indicates the presence of high-frequency noise by measuring the reduction in local extrema after smoothing. The Q index reflects the influence of baseline wander, and the R index assesses motion artifact contamination based on the variability in the available peak and valley points. Higher values across all three indices indicate better signal quality. To facilitate a comparison between datasets, we also report the relative signal quality index (rSQI), which expresses the signal quality of each dataset relative to the UTOKYO dataset. The SQIs are presented in Table 6. All the rSQI values are positive, indicating that the UTOKYO dataset contains the noisiest PPG signals among all the datasets evaluated.
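The text does not give a formula for rSQI. If it is the relative difference between a dataset's index and the UTOKYO value, $(\mathrm{SQI}_d - \mathrm{SQI}_{\mathrm{UTOKYO}}) / \mathrm{SQI}_{\mathrm{UTOKYO}}$, then positive values mean better quality than UTOKYO, which matches the interpretation above. The sketch implements only that assumed definition, applied to one index (P, Q, or R) at a time; it is not taken from Song et al. or from this paper.

```python
from __future__ import annotations


def relative_sqi(sqi: dict[str, float], reference: str = "UTOKYO") -> dict[str, float]:
    """Assumed rSQI: relative difference of each dataset's index from the reference dataset's index."""
    ref = sqi[reference]
    return {name: (value - ref) / ref for name, value in sqi.items() if name != reference}
```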

Table 6.

Average PPG signal quality for each dataset using the P, Q, and R indices and the relative signal quality (rSQI) with respect to the UTOKYO dataset.

The three classical methods and the current method were evaluated on the UTOKYO dataset. For the classical methods, the results were obtained with session-specific tuning. Previous findings show that these methods are highly sensitive to parameter settings [], which motivated the use of session-specific tuning to compare their lowest achievable MAEs with those of our method, whose parameters remained fixed. The adjusted parameters for the SpaMa methods included the number of PPG and acceleration peaks considered in the spectral analysis, as well as the minimum frequency difference required to remove overlapping peaks []. The adjusted parameters for the Schaeck2017 algorithm included the maximum allowable difference between two consecutive heart rates, the standard deviation used in the Gaussian band-stop filter, and the size of the correlation window [].
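Session-specific tuning of the baselines amounts to an oracle grid search: for each session, every parameter combination is run and the one with the lowest MAE against the ECG reference is kept. A minimal sketch, where `run_method` is a hypothetical callable standing in for SpaMa, SpaMaPlus, or Schaeck2017; the grid values are left to the user, since the text does not list them.

```python
from __future__ import annotations

import itertools
from typing import Callable

import numpy as np


def tune_per_session(run_method: Callable[..., np.ndarray], grid: dict[str, list],
                     ppg, acc, ref) -> tuple[dict | None, float]:
    """Oracle grid search: return the parameter set with the lowest MAE against the reference."""
    ref = np.asarray(ref, dtype=float)
    keys = list(grid)
    best_params, best_mae = None, np.inf
    for values in itertools.product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        est = np.asarray(run_method(ppg, acc, **params), dtype=float)
        err = float(np.nanmean(np.abs(est - ref)))
        if err < best_mae:
            best_params, best_mae = params, err
    return best_params, best_mae
```

Because the selection uses the ground truth of the same session, the resulting MAE is a lower bound for each baseline rather than a figure achievable without calibration data.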

The proposed method evaluated on the UTOKYO dataset achieved an overall MAE of 7.9 ± 8.2 bpm, whereas the SpaMa and SpaMaPlus methods yielded an MAE of 37.6 ± 26.2 bpm and 32.3 ± 26.0 bpm, respectively. The Schaeck2017 algorithm obtained an MAE of 14.1 ± 15.7 bpm. Results are summarized in Table 7.

Table 7.

Evaluation results on the dataset UTOKYO achieved with the three classical methods (session-optimized) and this paper’s proposed algorithm. The results are given in MAE (bpm) across all the subjects.

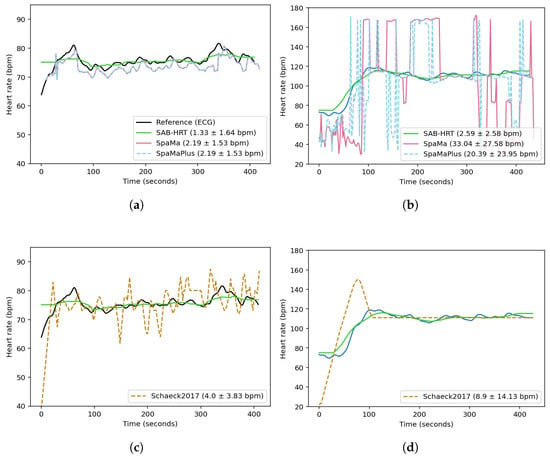

Figure 6 compares the heart rates reconstructed by the three classical methods and the current method for two sessions from the UTOKYO dataset. Figure 6a,c correspond to an indoor walking session recorded under low-noise conditions. The SpaMa and SpaMaPlus methods both yielded MAEs of 2.19 bpm, the Schaeck2017 method obtained an MAE of 4.0 bpm, and the proposed algorithm achieved an MAE of 1.33 bpm. In contrast, Figure 6b,d present an outdoor running session with substantially higher noise levels. Under these conditions, the performance of SpaMa and SpaMaPlus deteriorates significantly, with MAEs of 33.0 and 20.4 bpm, respectively, and the Schaeck2017 method yields an MAE of 8.9 bpm. The current method remains more robust in this high-noise environment, achieving an MAE of 2.6 bpm. These results suggest that while SpaMa and SpaMaPlus can be effective for signals with fewer motion artifacts, their applicability may be limited in real-world scenarios.

Figure 6.

Comparison of the reconstructed heart rates obtained using the three classical methods and this paper’s proposed algorithm across two sessions from the UTOKYO dataset. Panels (a,c) show the results for a walking session (low motion artifacts). Panels (b,d) show the results for an outdoor running session (high motion artifacts). The legend labels report the method name followed by the MAE ± standard deviation (in bpm), calculated over time for these sessions.

Although the performance of deep learning models is compared to our proposed method for the publicly available datasets, a comparison was not conducted for the UTOKYO dataset. This decision was based on the complexity involved in implementing and validating deep learning models within the scope of this work. Thus, further investigation into the application of deep learning methods for finger-based PPG and accelerometer data is warranted.

A common issue with many tracking algorithms is the accumulation of errors over time. Our method tries to mitigate this issue by not relying solely on the previous heart rate value but instead considering multiple values. As such, even in cases where the algorithm makes an incorrect prediction, the error propagation can be limited. However, this improvement comes at the cost of requiring a longer time window, which may be a limitation for real-time applications that demand immediate feedback.

In terms of computational efficiency, our method performed comparably to the three classical approaches. Specifically, averaged across all five datasets, our method was 28% faster than the Schaeck2017 method, albeit using 6% more memory; Schaeck2017 was specifically designed for embedded applications and is reported to be up to 80 times faster than the JOSS algorithm []. By contrast, our method was approximately 2% slower than SpaMa and 8% slower than SpaMaPlus, while requiring 52% and 49% less memory, respectively. Regarding deep learning-based methods, the CNN architecture presented in [] utilizes 8.5 million parameters and requires 69.5 million computations per heart rate estimation, making it unsuitable for deployment on resource-constrained devices. However, the authors also introduced a resource-optimized CNN model with only 26 K parameters, designed to operate within a 32 KB memory footprint, which increases the MAE on the PPG-DaLiA and WESAD datasets to 9.99 ± 5.9 bpm and 8.2 ± 3.6 bpm, respectively. As noted previously, we did not implement this CNN model in the current study, and further investigation is warranted for a complete assessment of its computational efficiency.
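The text does not describe how runtime and memory were measured. One common way to obtain comparable figures in Python is `time.perf_counter` for wall-clock time and `tracemalloc` for peak traced allocations (NumPy reports its array buffers to `tracemalloc`). The sketch below, including the convention that a negative relative change means faster or smaller, is an assumption and not the authors' benchmark.

```python
from __future__ import annotations

import time
import tracemalloc
from typing import Any, Callable


def profile(fn: Callable[..., Any], *args: Any, repeats: int = 5, **kwargs: Any) -> tuple[float, int]:
    """Best-of-N wall-clock time (s) and peak traced memory (bytes) of fn(*args, **kwargs)."""
    times = []
    for _ in range(repeats):
        t0 = time.perf_counter()
        fn(*args, **kwargs)
        times.append(time.perf_counter() - t0)
    # Memory is measured in a separate run because tracing slows execution down.
    tracemalloc.start()
    fn(*args, **kwargs)
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return min(times), peak


def relative_change(value: float, baseline: float) -> float:
    """Percent change of value versus baseline (negative = faster or smaller)."""
    return 100.0 * (value - baseline) / baseline
```

Under this convention, a runtime of 72 units against a baseline of 100 gives a relative change of -28%.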

Furthermore, the final post-processing step of our algorithm applies a moving average filter. We included this step because commercial PPG- and ECG-based wearables commonly apply similar post-processing to make the displayed heart rate easier for users to read. However, depending on the target application, particularly one requiring a faster response time, it may be desirable to reduce the filter's window size. To evaluate the influence of the window size, we repeated the analysis using a shorter 10-second window. The resulting changes in MAE were minimal, remaining within ±1.3 bpm across all datasets: the MAE increased for PPG-DaLiA (+0.5 bpm), UTOKYO (+0.6 bpm), and WESAD (+1.2 bpm), and decreased for IEEE_Test (–0.4 bpm) and IEEE_Training (–1.3 bpm).
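A minimal sketch of this kind of post-processing follows: a trailing (causal) moving average over the sequence of HR estimates, with the window given in seconds, plus the MAE used to compare window settings. The trailing form, the assumed 2-second spacing between consecutive estimates, the function names, and the toy values are all illustrative assumptions rather than details of the proposed method.

```python
def moving_average(hr_bpm, window_s, step_s=2.0):
    """Trailing moving average of HR estimates spaced step_s seconds apart.

    The first few outputs average over the estimates available so far.
    """
    n = max(1, round(window_s / step_s))  # number of estimates per window
    smoothed = []
    for i in range(len(hr_bpm)):
        start = max(0, i - n + 1)
        segment = hr_bpm[start:i + 1]
        smoothed.append(sum(segment) / len(segment))
    return smoothed


def mae(estimates, reference):
    """Mean absolute error (bpm) between two equally long HR sequences."""
    if len(estimates) != len(reference):
        raise ValueError("sequences must have the same length")
    return sum(abs(e - r) for e, r in zip(estimates, reference)) / len(reference)


# Toy data: raw estimates with one motion-induced spike, and a reference HR.
raw = [90, 92, 120, 94, 96, 98, 100, 102]
ref = [90, 92, 94, 96, 98, 100, 102, 104]
for window in (6.0, 10.0):
    print(window, mae(moving_average(raw, window), ref))
```

A longer window suppresses isolated spikes more strongly, but a trailing average of n estimates also delays a steady trend by (n - 1)/2 estimates, which is why applications that need fast feedback may prefer a shorter window.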

An additional drawback of the current method is its reliance on the presence of detectable PSD peaks. In scenarios where the input signal is entirely corrupted or absent, such as when the sensor loses consistent contact with the skin, this method may be less reliable than machine learning or deep learning models that can leverage other data sources and health trends to generate HR estimates.
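To make this dependence concrete, the sketch below estimates HR as the strongest periodogram peak (a basic PSD estimate) within a plausible HR band and returns None when no peak stands out. The band limits (0.5 to 3.5 Hz, i.e., 30 to 210 bpm), the Hann window, the zero-padding length, the peak-to-median power threshold, the 64 Hz sampling rate in the example, and the function name are illustrative assumptions, not the parameters of the proposed method.

```python
import numpy as np


def dominant_hr_bpm(ppg, fs, band_hz=(0.5, 3.5), min_ratio=10.0, nfft=4096):
    """Return HR (bpm) from the strongest in-band periodogram peak, or None.

    min_ratio: the peak must exceed the median in-band power by this factor;
    otherwise the spectrum is treated as having no detectable HR peak.
    """
    x = np.asarray(ppg, dtype=float)
    x = x - x.mean()  # remove the DC component before the FFT
    spectrum = np.abs(np.fft.rfft(x * np.hanning(len(x)), n=nfft)) ** 2
    freqs = np.fft.rfftfreq(nfft, d=1.0 / fs)
    in_band = (freqs >= band_hz[0]) & (freqs <= band_hz[1])
    band_power = spectrum[in_band]
    if band_power.size == 0 or band_power.max() <= 0:
        return None  # flat or empty signal, e.g. sensor off the skin
    floor = np.median(band_power)
    if floor > 0 and band_power.max() < min_ratio * floor:
        return None  # no peak stands out from the in-band noise floor
    return 60.0 * freqs[in_band][np.argmax(band_power)]


fs = 64.0
t = np.arange(0, 8, 1 / fs)  # one 8-second window
print(dominant_hr_bpm(np.sin(2 * np.pi * 1.5 * t), fs))  # close to 90 bpm
print(dominant_hr_bpm(np.zeros_like(t), fs))              # None
```

When the input carries no usable pulsatile component, a spectral method of this kind has nothing to select and can only report that no estimate is available, which is the failure mode described above.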

4. Conclusions

Our approach demonstrates that non-machine learning methods can deliver performance comparable to more complex deep learning models, offering a viable alternative for heart rate estimation. This method is especially valuable in contexts where deep learning may not be suitable, such as when computational resources are limited, annotated datasets are scarce, or simpler, more interpretable solutions are preferred. By leveraging signal processing and algorithmic solutions, our method expands the toolkit for wearable technology, providing an efficient and lightweight solution.

Moreover, many existing algorithms depend on subject-specific or dataset-specific tuning, which can limit their generalization in real-world applications where ground truth data is unavailable for calibration. To address this challenge, we propose a one-size-fits-all approach whose parameters are not tailored to any specific dataset, yet which still achieves results comparable to those of other algorithms. However, where calibration data is available, additional tuning of the parameters can further improve performance.

Author Contributions

Conceptualization, S.C.C. and F.M.; methodology, S.C.C. and F.M.; software, S.C.C.; validation, S.C.C.; formal analysis, S.C.C.; investigation, S.C.C.; resources, F.M., H.T. and Y.Y.; data curation, F.M., H.T. and Y.Y.; writing—original draft preparation, S.C.C.; writing—review and editing, S.C.C. and Y.Y.; supervision, Y.Y., T.W. and J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported in part by the Japan Science and Technology Agency (JST) MOONSHOT R&D Grant JPMJMS2021.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and the protocol was approved by the Ethics Review Board of The University of Tokyo (Application No. 21-11), ensuring compliance with ethical research guidelines involving human subjects. All subjects gave their informed consent before participating in the study.

Informed Consent Statement

Informed consent was obtained from all the subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to institutional and commercial restrictions. Access can be granted for non-commercial, academic research purposes only and is subject to approval by SOXAI Inc. and The University of Tokyo.

Conflicts of Interest

SOXAI Inc., which employs several of the authors on a full-time or part-time basis, supported the study by providing the authors' salaries, measurement equipment, and associated firmware, but had no role in the design of the study; in the collection, analyses, or interpretation of data; or in the decision to publish the results. The Chief Executive Officer (CEO) of SOXAI Inc., Dr. T. Watanabe, was involved only in a supervisory capacity to facilitate access to measuring devices and firmware. SOXAI Inc. does not intend to commercialize, patent, or incorporate the proposed algorithm into its products.

References

- Castaneda, D.; Esparza, A.; Ghamari, M.; Soltanpur, C.; Nazeran, H. A review on wearable photoplethysmography sensors and their potential future applications in health care. Int. J. Biosens. Bioelectron. 2018, 4, 195–202.

- Rawassizadeh, R.; Price, B.A.; Petre, M. Wearables: Has the age of smartwatches finally arrived? Commun. ACM 2014, 58, 45–47.

- Kim, K.B.; Baek, H.J. Photoplethysmography in wearable devices: A comprehensive review of technological advances, current challenges, and future directions. Electronics 2023, 12, 2923.

- Weiler, D.T.; Shull, C.L.; Craighead, P.M.; Whitaker, S.K. Wearable heart rate monitor technology accuracy in research: A comparative study between PPG and ECG technology. Proc. Hum. Factors Ergonom. Soc. Annu. Meet. 2017, 61, 1221–1225.

- Park, J.; Seok, H.S.; Kim, S.S.; Shin, H. Photoplethysmogram analysis and applications: An integrative review. Front. Physiol. 2022, 12, 808451.

- Pollreisz, D.; TaheriNejad, N. Detection and removal of motion artifacts in PPG signals. Mobile Netw. Appl. 2022, 27, 728–738.

- Maeda, Y.; Sekine, M.; Tamura, T. Relationship between measurement site and motion artifacts in wearable reflected photoplethysmography. J. Med. Syst. 2010, 35, 969–976.

- Povea, C.E.; Cabrera, A. Practical usefulness of heart rate monitoring in physical exercise. Rev. Colomb. Cardiol. 2018, 25, e9–e13.

- Schneider, C.; Hanakam, F.; Wiewelhove, T.; Döweling, A.; Kellmann, M.; Meyer, T.; Ferrauti, A. Heart rate monitoring in team sports—A conceptual framework for contextualizing heart rate measures for training and recovery prescription. Front. Physiol. 2018, 9, 639.

- Huikuri, H.V.; Stein, P.K. Heart rate variability in risk stratification of cardiac patients. Prog. Cardiovasc. Dis. 2013, 56, 153–159.

- Batalik, L.; Dosbaba, F.; Hartman, M.; Batalikova, K.; Spinar, J. Benefits and effectiveness of using a wrist heart rate monitor as a telerehabilitation device in cardiac patients: A randomized controlled trial. Medicine 2020, 99, 19556.

- Falter, M.; Budts, W.; Goetschalckx, K.; Cornelissen, V.; Buys, R. Accuracy of Apple Watch measurements for heart rate and energy expenditure in patients with cardiovascular disease: Cross-sectional study. JMIR mHealth uHealth 2019, 7, 11889.

- Ismail, S.; Akram, U.; Siddiqi, I. Heart rate tracking in photoplethysmography signals affected by motion artifacts: A review. EURASIP J. Adv. Signal Process. 2021, 2021, 5.

- Salehizadeh, S.M.; Dao, D.; Bolkhovsky, J.; Cho, C.; Mendelson, Y.; Chon, K.H. A novel time-varying spectral filtering algorithm for reconstruction of motion artifact corrupted heart rate signals during intense physical activities using a wearable photoplethysmogram sensor. Sensors 2015, 16, 10.

- Reiss, A.; Schmidt, P.; Indlekofer, I.; Laerhoven, K.V. PPG-based heart rate estimation with time-frequency spectra: A deep learning approach. In Proceedings of the 2018 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Singapore, 8–12 October 2018; pp. 1283–1292.

- Zhang, Z.; Pi, Z.; Liu, B. TROIKA: A general framework for heart rate monitoring using wrist-type photoplethysmographic signals during intensive physical exercise. IEEE Trans. Biomed. Eng. 2015, 62, 522–531.

- Zhang, Z. Photoplethysmography-based heart rate monitoring in physical activities via joint sparse spectrum reconstruction. IEEE Trans. Biomed. Eng. 2015, 62, 1902–1910.

- Reiss, A.; Indlekofer, I.; Schmidt, P.; Laerhoven, K.V. Deep PPG: Large-scale heart rate estimation with convolutional neural networks. Sensors 2019, 19, 3079.

- Chang, X.; Li, G.; Xing, G.; Zhu, K.; Tu, L. DeepHeart: A deep learning approach for accurate heart rate estimation from PPG signals. ACM Trans. Sensor Networks TOSN 2021, 17, 1–8.

- Biswas, D.; Everson, L.; Liu, M.; Panwar, M.; Verhoef, B.E.; Patki, S.; Kim, C.H.; Acharyya, A.; Hoof, C.V.; Konijnenburg, M.; et al. CorNET: Deep learning framework for PPG-based heart rate estimation and biometric identification in ambulant environment. IEEE Trans. Biomed. Circuits Syst. 2019, 13, 282–291.

- Naeini, E.K.; Sarhaddi, F.; Azimi, I.; Liljeberg, P.; Dutt, N.; Rahmani, A.M. A deep learning–based PPG quality assessment approach for heart rate and heart rate variability. ACM Trans. Comput. Healthcare 2023, 4, 1–22.

- Chowdhury, S.S.; Hasan, M.S.; Sharmin, R. Robust heart rate estimation from PPG signals with intense motion artifacts using cascade of adaptive filter and recurrent neural network. In Proceedings of the TENCON 2019–2019 IEEE Region 10 Conference (TENCON), Kochi, India, 17–20 October 2019; pp. 1952–1957.

- Shen, Y.; Voisin, M.; Aliamiri, A.; Avati, A.; Hannun, A.; Ng, A. Ambulatory atrial fibrillation monitoring using wearable photoplethysmography with deep learning. In Proceedings of the 25th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 1909–1916.

- Shashikumar, S.P.; Shah, A.J.; Li, Q.; Clifford, G.D.; Nemati, S. A deep learning approach to monitoring and detecting atrial fibrillation using wearable technology. In Proceedings of the 2017 IEEE EMBS International Conference on Biomedical & Health Informatics (BHI), Orlando, FL, USA, 16–19 February 2017; pp. 141–144.

- Incel, O.D.; Bursa, S.Ö. On-device deep learning for mobile and wearable sensing applications: A review. IEEE Sens. J. 2023, 23, 5501–5512.

- Lane, N.D.; Bhattacharya, S.; Georgiev, P.; Forlivesi, C.; Kawsar, F. An early resource characterization of deep learning on wearables, smartphones and internet-of-things devices. In Proceedings of the 2015 International Workshop on Internet of Things towards Applications, Seoul, Republic of Korea, 1 November 2015; pp. 7–12.

- Schmidt, P.; Reiss, A.; Duerichen, R.; Marberger, C.; Laerhoven, K.V. Introducing WESAD, a multimodal dataset for wearable stress and affect detection. In Proceedings of the 20th ACM International Conference on Multimodal Interaction, Boulder, CO, USA, 16–20 October 2018; pp. 400–408.

- Song, J.; Li, D.; Ma, X.; Teng, G.; Wei, J. PQR signal quality indexes: A method for real-time photoplethysmogram signal quality estimation based on noise interferences. Biomed. Signal Process. Control 2019, 47, 88–95.

- Schäck, T.; Muma, M.; Zoubir, A.M. Computationally efficient heart rate estimation during physical exercise using photoplethysmographic signals. In Proceedings of the 2017 25th European Signal Processing Conference (EUSIPCO), Kos, Greece, 28 August–2 September 2017; pp. 2478–2481.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).