1. Introduction

In the navigation system of an aerial vehicle, it is crucial to obtain accurate and real-time position information. Currently, aerial vehicles primarily rely on a combined navigation system that integrates an Inertial Navigation System (INS) and the Global Positioning System (GPS) for positioning [1]. INS errors accumulate over time, which prevents INS alone from meeting the demands of high-precision positioning; therefore, it needs to be combined with GPS for navigation. However, in extreme environments, GPS signals may be lost or GPS may fail to provide positioning altogether [2,3,4,5]. Geomagnetic navigation technology, which utilizes the spatial variation of the geomagnetic field, offers advantages such as all-terrain applicability, all-weather capability, strong anti-jamming performance, and no error accumulation. Consequently, geomagnetic matching technology can provide stable and precise positioning for aerial vehicles, and integrating geomagnetic navigation with inertial navigation can achieve high-precision autonomous navigation for aerial vehicles [6].

The key technologies of geomagnetic navigation can be categorized into geomagnetic filtering and geomagnetic matching [7]. Geomagnetic filtering technology associates the measured magnetic field data with the INS data and corrects the position output of the INS through filtering. Common geomagnetic filtering techniques include Kalman filtering and particle filtering. Among the navigation systems based on Kalman filtering, the most representative is Sandia Inertial Terrain Aided Navigation (SITAN) [8,9]. The SITAN algorithm continuously processes the INS data through Kalman filtering and estimates position by combining these data with terrain elevation. However, SITAN relies on linearizing the terrain information, so its navigation accuracy deteriorates when this linearization is poor or the initial position accuracy is low, which limits its robustness [10,11]. Nonlinear filtering theory has therefore attracted increasing attention. Stepanov and Toropov [12] formulated the map-aided navigation problem within the framework of Bayesian nonlinear filtering theory, providing a rigorous theoretical foundation for the design of corresponding nonlinear filtering algorithms. Particle filtering, a nonlinear filtering method based on the Monte Carlo approach, enables parameter estimation in nonlinear and non-Gaussian settings [13]. It approximates the posterior probability distribution with a large number of particles, which significantly increases the computational cost and limits real-time performance. Furthermore, particle filtering suffers from sample degeneracy and sample impoverishment [14,15]. Compared with geomagnetic filtering, geomagnetic matching technology is more flexible and convenient, is less sensitive to initial position errors, and does not accumulate errors [16].
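To make the particle approximation and the degeneracy problem concrete, the following Python sketch performs one measurement update of a generic bootstrap particle filter for a one-dimensional position. It is an illustration under stated assumptions and not the method of [13,14,15]. The map function `field_map`, the Gaussian measurement noise, the effective-sample-size threshold of N/2, and systematic resampling are all choices made for this example.

```python
import numpy as np


def effective_sample_size(weights):
    """Kish effective sample size; values far below N signal weight degeneracy."""
    return 1.0 / np.sum(weights ** 2)


def systematic_resample(weights, rng):
    """Indices of the particles kept by systematic resampling."""
    n = len(weights)
    positions = (rng.random() + np.arange(n)) / n
    cumulative = np.cumsum(weights)
    cumulative[-1] = 1.0  # guard against floating-point round-off
    return np.searchsorted(cumulative, positions)


def pf_update(particles, weights, measurement, field_map, noise_std, rng):
    """One measurement update of a bootstrap particle filter (1-D position).

    field_map is a hypothetical callable mapping positions to field values (nT);
    the measurement noise is assumed Gaussian with standard deviation noise_std.
    """
    predicted = field_map(particles)
    likelihood = np.exp(-0.5 * ((measurement - predicted) / noise_std) ** 2)
    weights = weights * likelihood
    weights = weights / weights.sum()
    # Resample only when the weights have degenerated (N/2 is a common threshold).
    if effective_sample_size(weights) < 0.5 * len(weights):
        idx = systematic_resample(weights, rng)
        particles = particles[idx]
        weights = np.full(len(particles), 1.0 / len(particles))
    return particles, weights
```

When most of the weight concentrates on a few particles, the effective sample size collapses and resampling is triggered. Repeated resampling in turn duplicates the same few particles, which is the impoverishment problem mentioned above.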

Geomagnetic matching technology matches the measured geomagnetic data against a pre-established geomagnetic database to find similar geomagnetic sequences, thereby determining the current position. The real-time capability and accuracy of positioning are predominantly determined by the performance of the geomagnetic matching algorithm [17]. Traditional geomagnetic matching algorithms include the Iterative Closest Contour Point (ICCP) algorithm and the Geomagnetic Contour Matching (MAGCOM) algorithm [18]. The MAGCOM algorithm corrects INS position errors through a translational search, but it cannot provide accurate positioning when there is a large heading deviation [19]. The ICCP algorithm can correct both heading and position errors of the INS; however, it is sensitive to large initial positioning errors and tends to fall into local optima [20]. To improve the ICCP algorithm, Xiao et al. [21] introduced the Probabilistic Data Association (PDA) algorithm and the principle of incremental modulation, which enhanced the algorithm's robustness to interference and improved positioning accuracy.
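The translational search used by MAGCOM can be sketched as follows. This minimal Python illustration assumes a gridded reference map and a pure translation error of the INS track (no heading error, which is exactly the case MAGCOM handles). It uses the mean absolute difference (MAD) as the correlation metric. The function name and arguments are hypothetical.

```python
import numpy as np


def magcom_search(field_map, track_rows, track_cols, measured, max_shift):
    """Exhaustive translational search over grid offsets (MAGCOM-style).

    field_map: 2-D reference grid of field values (nT).
    track_rows, track_cols: integer grid indices of the INS-indicated track.
    measured: magnetometer readings taken along the track.
    Returns the (row, col) offset with the smallest mean absolute difference
    (MAD), together with that MAD value.
    """
    n_rows, n_cols = field_map.shape
    best_shift, best_mad = (0, 0), np.inf
    for dr in range(-max_shift, max_shift + 1):
        for dc in range(-max_shift, max_shift + 1):
            rows, cols = track_rows + dr, track_cols + dc
            # Skip offsets that would move part of the track off the map.
            if rows.min() < 0 or cols.min() < 0 or rows.max() >= n_rows or cols.max() >= n_cols:
                continue
            mad = float(np.mean(np.abs(field_map[rows, cols] - measured)))
            if mad < best_mad:
                best_shift, best_mad = (dr, dc), mad
    return best_shift, best_mad
```

Because only translations are searched, a rotated track cannot be aligned. This is consistent with the heading-deviation limitation noted above.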

In recent years, in addition to traditional geomagnetic matching algorithms, artificial intelligence algorithms and intelligent optimization algorithms have also been applied to geomagnetic matching navigation. Xu et al. [22] presented the PSO-ICCP algorithm, which optimizes the ICCP output by incorporating a multi-attribute decision-making mechanism. By combining the Particle Swarm Optimization (PSO) algorithm with an improved particle initialization strategy, this method effectively reduces the effect of initial positioning errors on the matching precision of ICCP. Chen et al. [23] introduced the fundamentals of pattern recognition into geomagnetic matching navigation and proposed a geomagnetic vector matching algorithm based on a two-stage neural network. This method cascades a non-fully connected neural network and a probabilistic neural network (PNN) to perform preliminary and refined screening, respectively, on the geomagnetic vectors and their characteristic information. This approach achieves a high matching success rate and high positioning accuracy under low-gradient conditions, where traditional geomagnetic matching algorithms fail. Also inspired by pattern recognition, the same group later proposed a geomagnetic vector matching method based on PNN. By optimizing the smoothing parameters of the PNN with a genetic algorithm, this method significantly improves matching accuracy compared with traditional geomagnetic matching algorithms [24].
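The role of the smoothing parameter that [24] tunes with a genetic algorithm can be seen in a generic PNN classifier. The Python sketch below assumes Gaussian kernels and equal class priors. It is not the two-stage network of [23], and the names are illustrative.

```python
import numpy as np


def pnn_predict(X_train, y_train, X_query, sigma):
    """Probabilistic neural network (Parzen-window) classifier.

    Each class score is the mean Gaussian kernel between a query pattern and
    that class's training patterns; sigma is the smoothing parameter.
    """
    classes = np.unique(y_train)
    # Pairwise squared Euclidean distances, shape (n_query, n_train).
    d2 = ((X_query[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=-1)
    kernel = np.exp(-d2 / (2.0 * sigma ** 2))
    scores = np.stack([kernel[:, y_train == c].mean(axis=1) for c in classes], axis=1)
    return classes[np.argmax(scores, axis=1)]
```

A small smoothing parameter makes each class score depend only on the nearest training patterns, whereas a large one averages over many patterns. This is why its value affects matching accuracy.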

Although existing geomagnetic matching algorithms achieve high matching accuracy, accurately and rapidly determining position from geomagnetic information in high-speed dynamic environments remains a challenge. In real-world environments, geomagnetic information is uniquely associated with positional information, which matches the characteristics of a regression prediction task. Therefore, machine learning methods can be employed to fit this mapping, yielding a trained geomagnetic matching model. Once the airborne equipment acquires the geomagnetic information, it can quickly obtain the positional information through this model, enabling accurate and real-time positioning.

The Extreme Learning Machine (ELM) is an efficient machine learning approach. It simplifies the training of traditional neural networks by randomly generating the input-layer weights and biases and computing the output weights in closed form. This eliminates iterative optimization and significantly increases training speed [25]. The ELM algorithm therefore has a significant advantage in applications that involve large-scale data processing and demand real-time performance [26], making it suitable for geomagnetic matching tasks. However, the algorithm has certain limitations. Because the weights and biases are randomly initialized, the performance of ELM may be unstable across different datasets, which can affect its generalizability [27,28]. To address these shortcomings, Huang et al. [29] proposed the Kernel Extreme Learning Machine (KELM), which better handles linearly non-separable samples and improves robustness. Gao et al. [30] further employed the improved Dung Beetle Optimization (IDBO) algorithm to optimize the regularization coefficient and kernel parameters of KELM, enabling accurate identification of projectile aerodynamic parameters. Wang et al. [31] employed an adaptive neuron clipping algorithm and the PSO algorithm to improve the ELM network. They then applied the adaptive boosting (AdaBoost) algorithm to iteratively train a series of weak learners, which were combined into a strong learner for high-precision prediction. Other studies have also employed intelligent optimization algorithms to optimize the hyperparameters of machine learning models [32,33]. These works demonstrate that such hybrid models can achieve more stable and accurate performance in regression prediction tasks.
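The following NumPy sketch contrasts the basic ELM described above with KELM. It assumes a tanh activation, input weights and biases drawn uniformly from [-1, 1], and an RBF kernel for KELM. `C` (the regularization coefficient) and `gamma` (the kernel parameter) are the kind of hyperparameters tuned in [30]. The code is an illustration, not the implementation of [25,29,30].

```python
import numpy as np


def rbf(A, B, gamma):
    """RBF kernel matrix K[i, j] = exp(-gamma * ||A_i - B_j||^2)."""
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-gamma * d2)


def elm_fit(X, T, n_hidden, rng):
    """Basic ELM: random hidden layer, output weights by least squares."""
    W = rng.uniform(-1.0, 1.0, size=(X.shape[1], n_hidden))  # input-to-hidden weights
    b = rng.uniform(-1.0, 1.0, size=n_hidden)                 # hidden biases
    H = np.tanh(X @ W + b)                                    # hidden-layer output matrix
    beta = np.linalg.pinv(H) @ T                              # Moore-Penrose solution, no iterations
    return W, b, beta


def elm_predict(X, W, b, beta):
    return np.tanh(X @ W + b) @ beta


def kelm_fit(X, T, C, gamma):
    """Kernel ELM: alpha = (I / C + Omega)^(-1) T with Omega = K(X, X)."""
    omega = rbf(X, X, gamma)
    return np.linalg.solve(np.eye(len(X)) / C + omega, T)


def kelm_predict(X_new, X_train, alpha, gamma):
    return rbf(X_new, X_train, gamma) @ alpha
```

In `elm_fit`, the only training step is a single pseudoinverse, which explains the speed advantage. The random draw of `W` and `b` is also the source of the run-to-run variability discussed above, and KELM avoids it by replacing the random hidden layer with a kernel.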

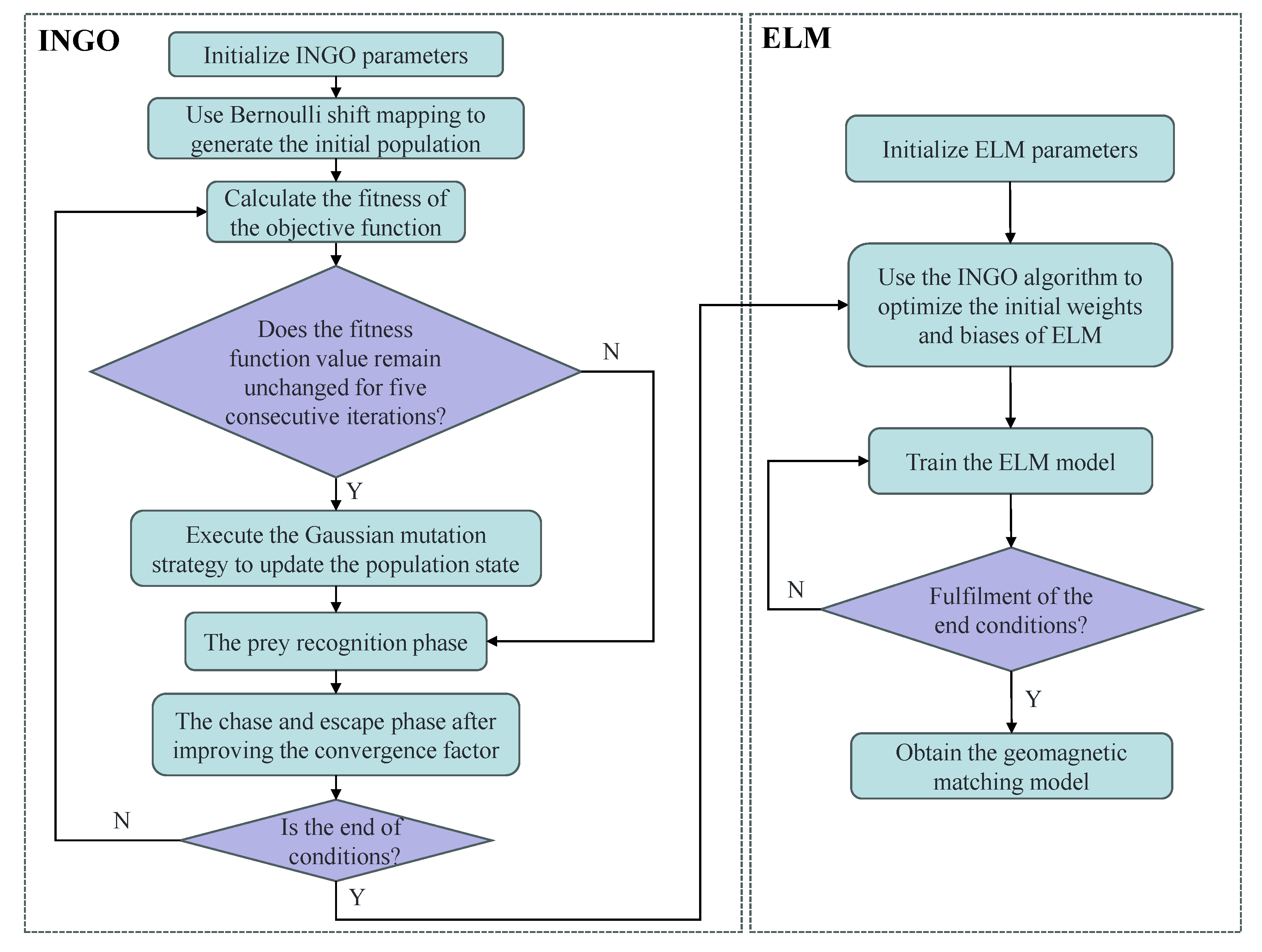

To achieve efficient and stable geomagnetic matching, this paper uses an improved Northern Goshawk Optimization (NGO) algorithm, denoted INGO, to optimize the initial weights and biases of the ELM model, yielding the proposed INGO-ELM model. The IGRF-13 model is used to simulate geomagnetic data, on which the performance of the proposed geomagnetic matching algorithm is evaluated. The innovations of this paper are as follows:

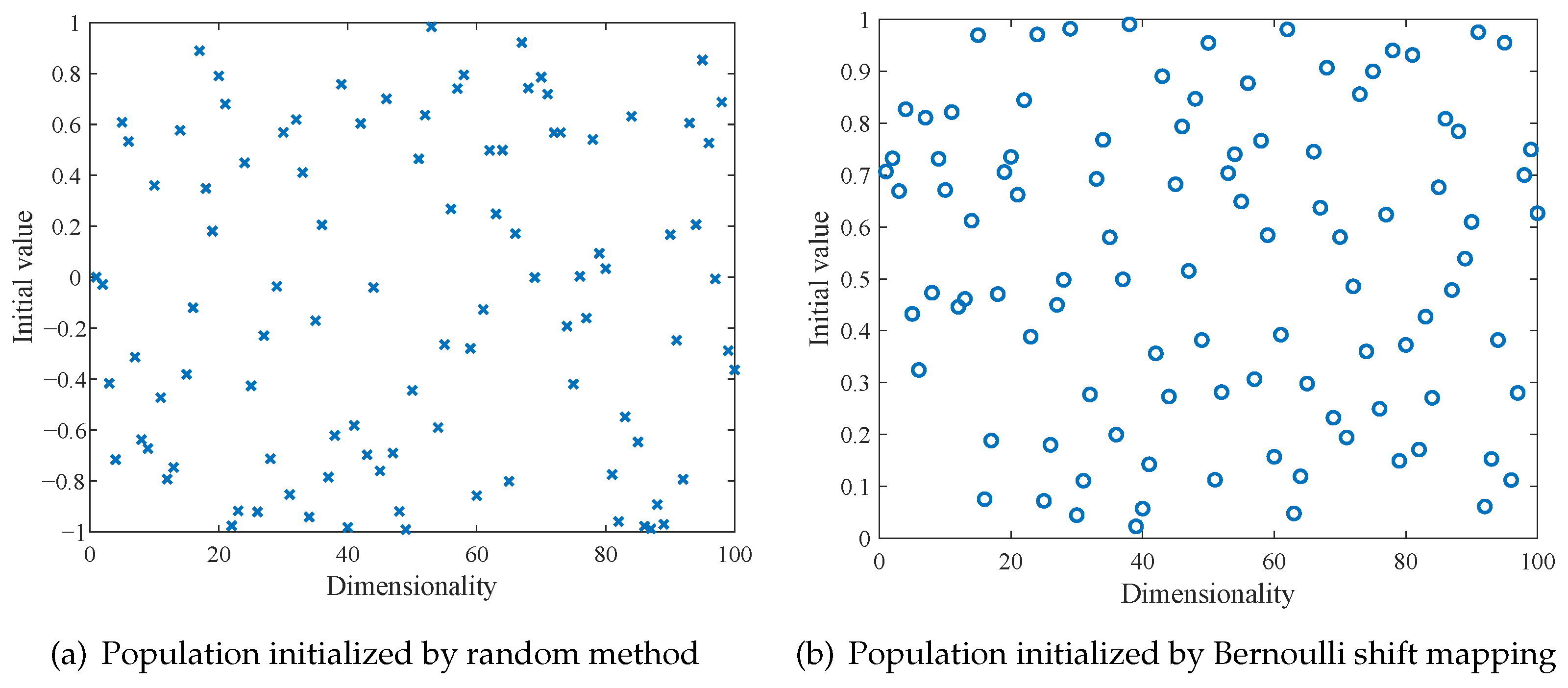

To strengthen the optimization capability of the NGO algorithm, this paper proposes three improvement measures.

This paper presents, for the first time, the INGO-ELM algorithm for geomagnetic matching-assisted navigation, where the INGO algorithm is used to optimize the initial weights and biases of the ELM, effectively enhancing the accuracy and stability of the ELM algorithm in geomagnetic matching positioning tasks.

The geomagnetic matching dataset is generated using the IGRF-13 model to validate the matching performance of the proposed geomagnetic matching-assisted navigation algorithm, with noise added to the dataset to simulate the complexity of real-world environments. The simulation results demonstrate that the INGO-ELM algorithm exhibits superior robustness and can accomplish the positioning task in real time with high matching accuracy, even in the presence of observational errors from the magnetic sensor.
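The idea of letting an optimizer choose the ELM's initial weights and biases can be sketched as a fitness function. In the minimal Python sketch below, a candidate position vector is decoded into the input weights and hidden biases, and the output weights are then computed in closed form as in the standard ELM. The sketch assumes a tanh activation and uses validation RMSE as the fitness. The helper names are hypothetical, and the NGO/INGO update rules themselves are not reproduced here.

```python
import numpy as np


def decode(position, n_inputs, n_hidden):
    """Split a flat optimizer position into ELM input weights W and hidden biases b."""
    n_w = n_inputs * n_hidden
    W = position[:n_w].reshape(n_inputs, n_hidden)
    b = position[n_w:n_w + n_hidden]
    return W, b


def elm_fitness(position, X_train, T_train, X_val, T_val, n_hidden):
    """Validation RMSE of an ELM whose W and b are taken from `position`.

    The output weights are still solved in closed form, so an optimizer only
    has to search over n_inputs * n_hidden + n_hidden values.
    """
    W, b = decode(position, X_train.shape[1], n_hidden)
    beta = np.linalg.pinv(np.tanh(X_train @ W + b)) @ T_train
    residual = np.tanh(X_val @ W + b) @ beta - T_val
    return float(np.sqrt(np.mean(residual ** 2)))
```

A population-based optimizer such as NGO or INGO would minimize `elm_fitness` over vectors of length d × L + L. Here d is the number of inputs (the three magnetic-field components in this study) and L is the number of hidden nodes.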

The remainder of this paper is organized as follows. Section 2 introduces the IGRF-13 model and the construction of the geomagnetic matching dataset. Section 3 discusses the three improvements made to the NGO algorithm, analyzes their effectiveness, and then introduces the proposed INGO-ELM model. Section 4 compares the geomagnetic matching test results of the INGO-ELM model with those of four other models and further tests the geomagnetic matching performance of each model after noise is added. Section 5 presents the conclusions and outlook of this paper.

To ensure clarity and consistency in scientific communication, we have compiled a terminology list and an acronym list, shown in Table 1 and Table 2, respectively.

4. Simulation Tests

The INGO-ELM geomagnetic matching model applies machine learning to form a one-to-one nonlinear mapping between geomagnetic information and position information for direct matching and positioning. By leveraging machine learning, this method can not only perform matching based on an existing geomagnetic database but also accurately estimate the geomagnetic data between the discrete points of the database. In effect, it constructs a continuous and complete geomagnetic matching database that enables more precise matching and positioning.

To verify the geomagnetic matching performance of the INGO-ELM model, we construct geomagnetic matching models using two other machine learning algorithms, XGBoost and BP neural networks, and compare them with the INGO-ELM model in simulation tests. To ensure a fair comparison, INGO is also used to optimize the parameters of XGBoost and the BP neural network. In addition, two further geomagnetic matching models, NGO-ELM and ELM, are built to validate the performance of INGO as an intelligent optimization algorithm.

4.1. Extreme Gradient Boosting

Extreme gradient boosting (XGBoost) [44], a classic algorithm in the field of machine learning, originates in its core design from the optimization and upgrading of the gradient boosting decision tree (GBDT). When training on large-scale datasets, XGBoost significantly accelerates training through efficient tree pruning and parallel computing. It also introduces regularization terms to prevent overfitting, enabling the model to maintain excellent generalization performance on complex datasets. The objective function Obj, the core of XGBoost, is

$$\mathrm{Obj} = \sum_{i=1}^{n} l\left(y_i, \hat{y}_i\right) + \sum_{k=1}^{K} \Omega\left(f_k\right)$$

where $l$ denotes the loss function, which measures the discrepancy between the model's predicted value $\hat{y}_i$ and the true value $y_i$ for the $i$-th of $n$ samples, and $K$ is the number of trees. $\Omega$ represents the regularization term, which controls the complexity of each tree:

$$\Omega\left(f_k\right) = \gamma T + \frac{1}{2}\lambda \sum_{j=1}^{T} w_j^{2}$$

In this formula, $f_k$ is the prediction function of the $k$-th tree; $\gamma$ is the regularization parameter; $T$ is the number of leaf nodes in the regression tree; $\lambda$ is the penalty coefficient for the leaf node weights; and $w_j$ is the weight of the $j$-th leaf node.
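One consequence of the regularization term can be shown with the standard second-order analysis of this objective. Let G and H denote the sums of the first and second derivatives of the loss over the samples in a leaf. With leaf-weight penalty λ and per-leaf cost γ, the leaf's contribution is then approximately G·w + ½(H + λ)·w² + γ, which is minimized at w* = −G/(H + λ). The Python sketch below evaluates this for the squared-error loss ½(y − ŷ)², whose gradient is ŷ − y and whose Hessian is 1. The function name is illustrative.

```python
import numpy as np


def optimal_leaf(grad, hess, lam, gamma):
    """Optimal weight and objective contribution of a single leaf.

    Uses the second-order approximation of the objective: for leaf weight w,
    the leaf contributes about G*w + 0.5*(H + lam)*w**2 + gamma, where G and H
    are the sums of the per-sample gradients and Hessians of the loss.
    """
    G, H = float(np.sum(grad)), float(np.sum(hess))
    w_star = -G / (H + lam)
    obj = -0.5 * G ** 2 / (H + lam) + gamma
    return w_star, obj
```

Setting λ = 0 recovers the mean residual of the leaf, and a larger λ shrinks the leaf weight toward zero. This is how the regularization term limits the complexity of each tree.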

4.2. Back Propagation Neural Network

The Back Propagation (BP) Neural Network [

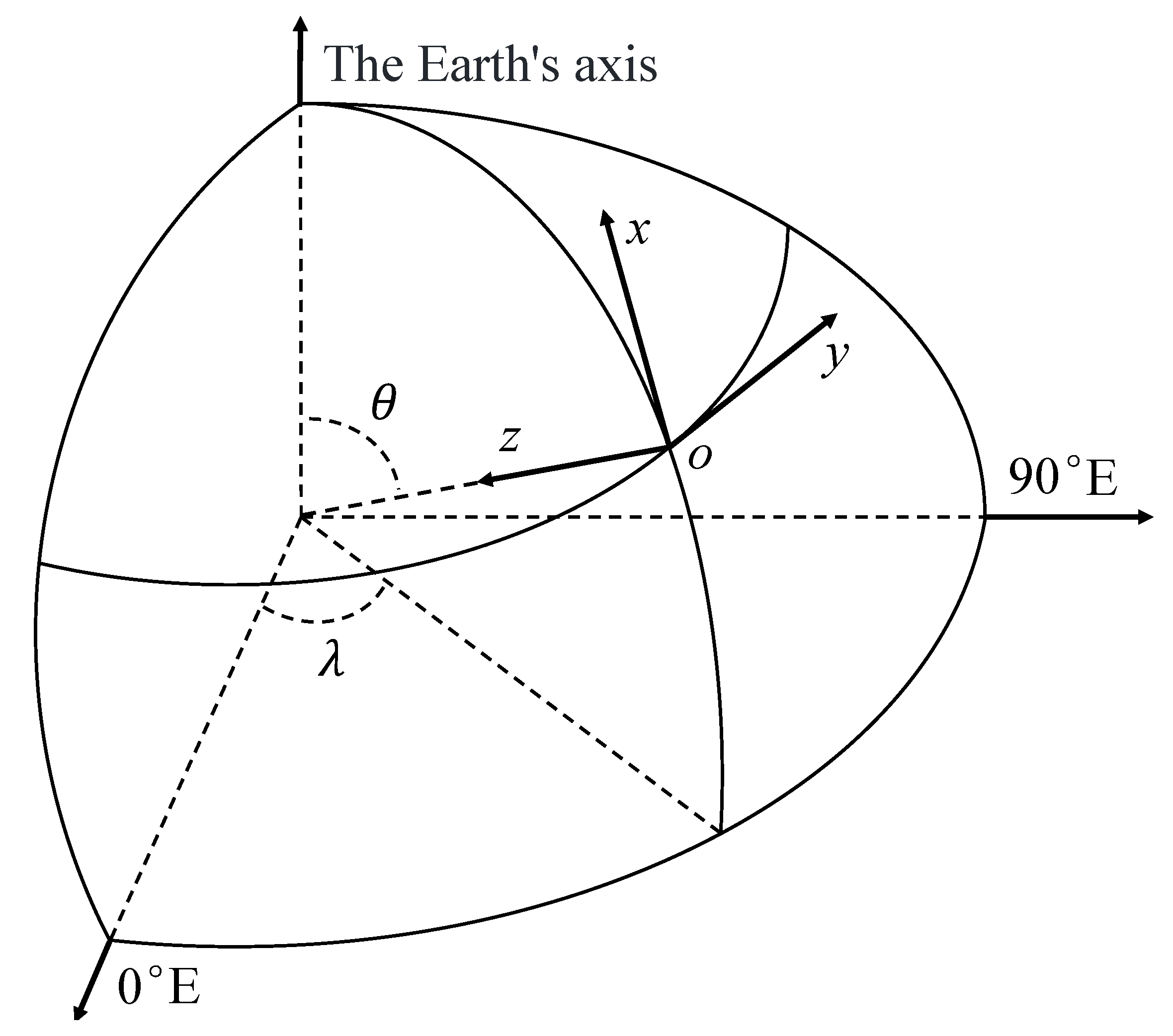

45], a feedforward neural network, exhibits a strong nonlinear fitting capability and finds extensive applications. When constructing a geomagnetic matching model using a BP neural network in this paper, the input layer consists of three nodes, which are the components of the total magnetic field intensity at the sampling point along the three axes of the NED coordinate system. The hidden layer is set with 10 nodes. The output layer contains three nodes, corresponding to the latitude, longitude, and altitude of the sampling point, respectively. The activation functions of neurons in the hidden layer and output layer are shown in Equations (22) and (23), respectively:
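The size of this 3-10-3 network determines how many values INGO must encode when it optimizes the BP weights and biases. The Python sketch below counts them and runs a forward pass. Because Equations (22) and (23) are not reproduced here, the tanh hidden activation and linear output activation are assumptions. The ordering of parameters in the flat vector is also an assumption.

```python
import numpy as np

N_IN, N_HIDDEN, N_OUT = 3, 10, 3  # (Bx, By, Bz) -> (latitude, longitude, altitude)


def n_params():
    """Number of weights and biases in the 3-10-3 network."""
    return N_IN * N_HIDDEN + N_HIDDEN + N_HIDDEN * N_OUT + N_OUT


def unpack(theta):
    """Split a flat parameter vector into W1, b1, W2, b2 (in that order)."""
    sizes = [N_IN * N_HIDDEN, N_HIDDEN, N_HIDDEN * N_OUT, N_OUT]
    W1, b1, W2, b2 = np.split(theta, np.cumsum(sizes)[:-1])
    return W1.reshape(N_IN, N_HIDDEN), b1, W2.reshape(N_HIDDEN, N_OUT), b2


def forward(theta, X):
    """Forward pass; tanh hidden layer and linear output layer are ASSUMED here."""
    W1, b1, W2, b2 = unpack(theta)
    return np.tanh(X @ W1 + b1) @ W2 + b2
```

The parameter count is 3 × 10 + 10 + 10 × 3 + 3 = 73. Under this encoding, each INGO search agent would therefore be a 73-dimensional vector.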

4.3. Geomagnetic Matching Simulation Test

To establish the comparison models, INGO is used to optimize the number of iterations, the tree depth, and the learning rate of XGBoost. Similarly, for the BP neural network, INGO is employed to optimize the weight matrix from the input layer to the hidden layer, the weight matrix from the hidden layer to the output layer, and the biases of the hidden and output layers.
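Because an optimizer such as INGO searches a continuous space while the number of iterations and the tree depth are integers, a decoding step is needed. The following Python sketch maps a position in [0, 1]³ to the three XGBoost hyperparameters. The bounds and the rounding rule are hypothetical, since the search ranges used in this study are not stated here.

```python
import numpy as np

# Hypothetical search bounds (not taken from this study).
BOUNDS = {
    "n_estimators": (50, 500),
    "max_depth": (2, 10),
    "learning_rate": (0.01, 0.3),
}


def scale(u, bounds):
    """Linearly map u in [0, 1] onto the interval given by bounds."""
    lo, hi = bounds
    return lo + u * (hi - lo)


def decode_xgb(position):
    """Map an optimizer position in [0, 1]^3 to XGBoost hyperparameters."""
    u = np.clip(np.asarray(position, dtype=float), 0.0, 1.0)
    return {
        "n_estimators": int(round(scale(u[0], BOUNDS["n_estimators"]))),
        "max_depth": int(round(scale(u[1], BOUNDS["max_depth"]))),
        "learning_rate": float(scale(u[2], BOUNDS["learning_rate"])),
    }
```

The resulting dictionary could be passed to `xgboost.XGBRegressor(**params)`, whose `n_estimators`, `max_depth`, and `learning_rate` arguments correspond to the three hyperparameters named above.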

Then, the geomagnetic matching dataset constructed in Section 2 is used to conduct comparative tests on five models, namely INGO-ELM, NGO-ELM, ELM, INGO-XGBoost, and INGO-BP. The test results are shown in Table 7, Table 8 and Table 9, which present the error statistics of the latitude, longitude, and altitude, respectively, obtained by the five geomagnetic matching models.

In Table 7, Table 8 and Table 9, the INGO-ELM model exhibits the lowest mean absolute error (MAE), root mean square error (RMSE), and mean square error (MSE) among the five models. This indicates that the average errors of the latitude, longitude, and altitude obtained with this model are the smallest and that it produces the fewest prediction outliers. Therefore, this model has the highest geomagnetic matching accuracy and stability. Taking the MAE as an example, the MAEs for latitude and longitude obtained with the INGO-ELM geomagnetic matching model are 5.728 × 10⁻⁵ degrees and 5.98 × 10⁻⁵ degrees, respectively, which correspond to approximately 6.38 m and 6.43 m after unit conversion. The MAE for altitude obtained with this model is 0.0137 m. These results demonstrate that the INGO-ELM-based geomagnetic matching model achieves very high matching accuracy.
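The unit conversions above can be reproduced with the usual spherical-Earth approximation of about 111.32 km per degree of latitude, with a degree of longitude shortened by the cosine of latitude. This Python sketch takes the latitude as 15°, the lower edge of the sampling range. That choice is an assumption, but it appears consistent with the quoted longitude value.

```python
import math

METERS_PER_DEG_LAT = 111_320.0  # spherical-Earth approximation (assumed)


def lat_deg_to_m(d_lat_deg):
    """Latitude difference in degrees -> north-south distance in meters."""
    return d_lat_deg * METERS_PER_DEG_LAT


def lon_deg_to_m(d_lon_deg, lat_deg):
    """Longitude difference in degrees -> east-west distance at latitude lat_deg."""
    return d_lon_deg * METERS_PER_DEG_LAT * math.cos(math.radians(lat_deg))


print(round(lat_deg_to_m(5.728e-5), 2))       # latitude MAE of INGO-ELM -> 6.38
print(round(lon_deg_to_m(5.98e-5, 15.0), 2))  # longitude MAE of INGO-ELM -> 6.43
# Gap between the NGO-ELM and INGO-ELM maximum latitude errors -> 37.83
print(round(lat_deg_to_m(9.554e-4) - lat_deg_to_m(6.156e-4), 2))
```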

The error evaluation indices of the NGO-ELM model are second only to those of the INGO-ELM model and remain within the same order of magnitude. However, in the latitude matching results presented in Table 7, the maximum error of the INGO-ELM model is 6.156 × 10⁻⁴ degrees, approximately 68.53 m after unit conversion. By contrast, the maximum error of the NGO-ELM model is 9.554 × 10⁻⁴ degrees, approximately 106.36 m, a difference of about 37.83 m. Similarly, in the longitude matching results shown in Table 8, the maximum errors of the two models differ by approximately 41.74 m after unit conversion. A positioning error of several tens of meters significantly affects the navigation of an aerial vehicle. Therefore, compared with the NGO-ELM algorithm, the INGO-ELM algorithm not only improves matching accuracy but also greatly improves stability. This indicates that the improvements proposed for the NGO algorithm in this paper are effective and have practical application value.

From Table 7, Table 8 and Table 9, it can be observed that the four error evaluation indices for latitude and altitude obtained with the INGO-BP model are similar to those of the INGO-ELM model. However, for longitude, the MAE of the INGO-BP model is 8.359 × 10⁻⁴ degrees, an order of magnitude larger than the MAE of 5.98 × 10⁻⁵ degrees obtained with the INGO-ELM model, indicating relatively poor performance. This discrepancy may be attributed to the tendency of the BP neural network to become stuck in local optima during training, which lowers its overall geomagnetic matching accuracy. The errors of the INGO-XGBoost model in the three tables are mostly two orders of magnitude higher than those of the INGO-ELM model. A possible reason is that the matching results of XGBoost are determined by expected values based on the conditional distribution of the features, so the model lacks the ability to capture deeper relationships between features. Because XGBoost cannot directly capture the complex feature relationships in the geomagnetic matching dataset, the INGO-XGBoost model shows poor geomagnetic matching performance. In Table 7 and Table 8, all error evaluation metrics of the ELM model are one order of magnitude higher than those of the INGO-ELM and NGO-ELM models. This indicates that optimizing the initial weights and biases of the ELM model with the INGO or NGO algorithm significantly improves its matching performance.

Furthermore, in the geomagnetic matching dataset of this paper, latitude and longitude are expressed in degrees, with small numerical values and minimal variations, whereas altitude is expressed in meters, with large numerical values and significant variations. However, the machine learning model treats all data as dimensionless numbers and does not inherently understand the physical meaning of each input and output parameter. Taken as raw numbers without units, the errors in Table 7, Table 8 and Table 9 show that the matching errors for latitude and longitude are several orders of magnitude lower than those for altitude. However, after latitude and longitude are converted from degrees to meters, their matching errors become higher than those of altitude. This indicates that the dimensional discrepancies in the geomagnetic matching dataset lead to higher positioning accuracy for altitude.

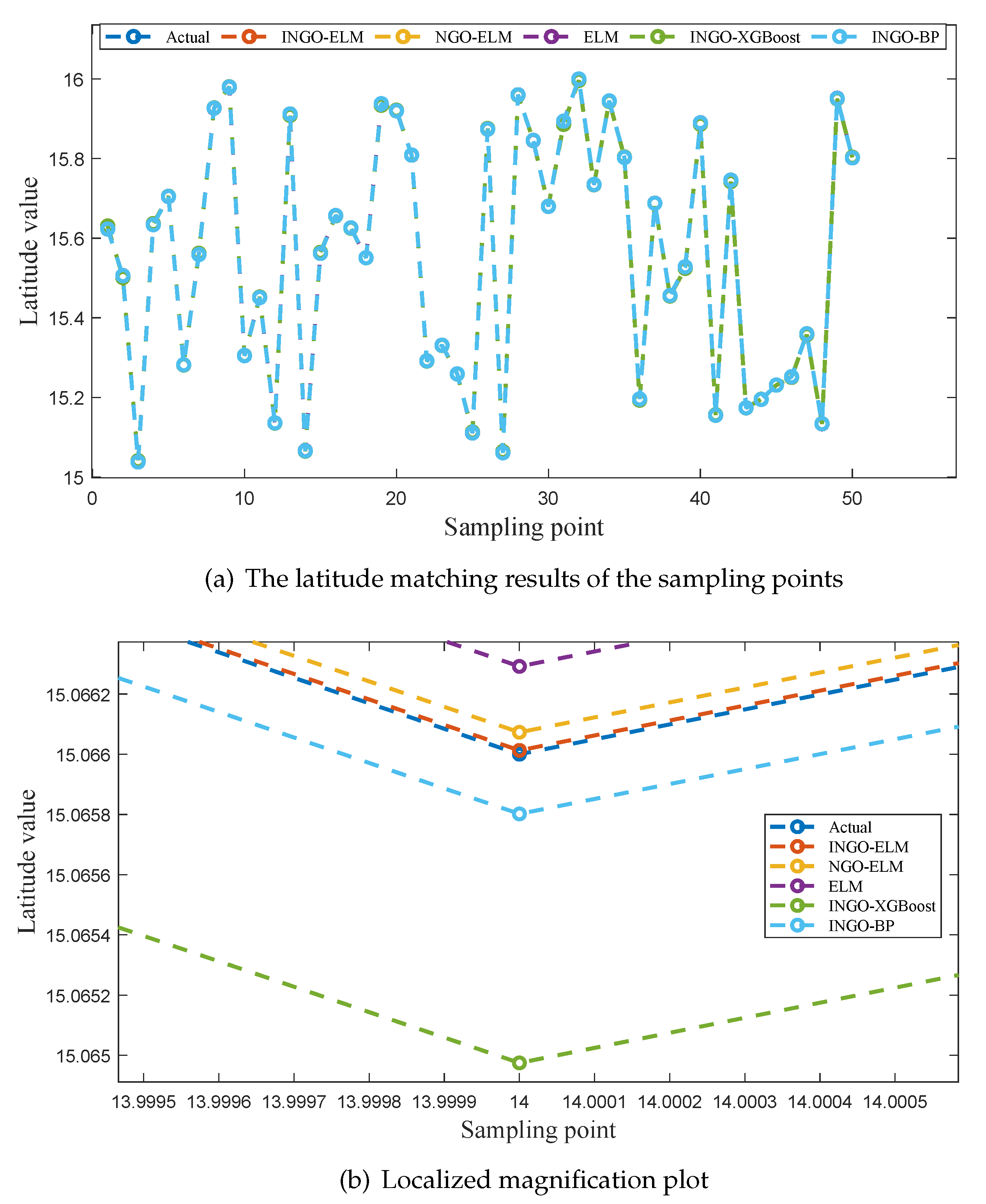

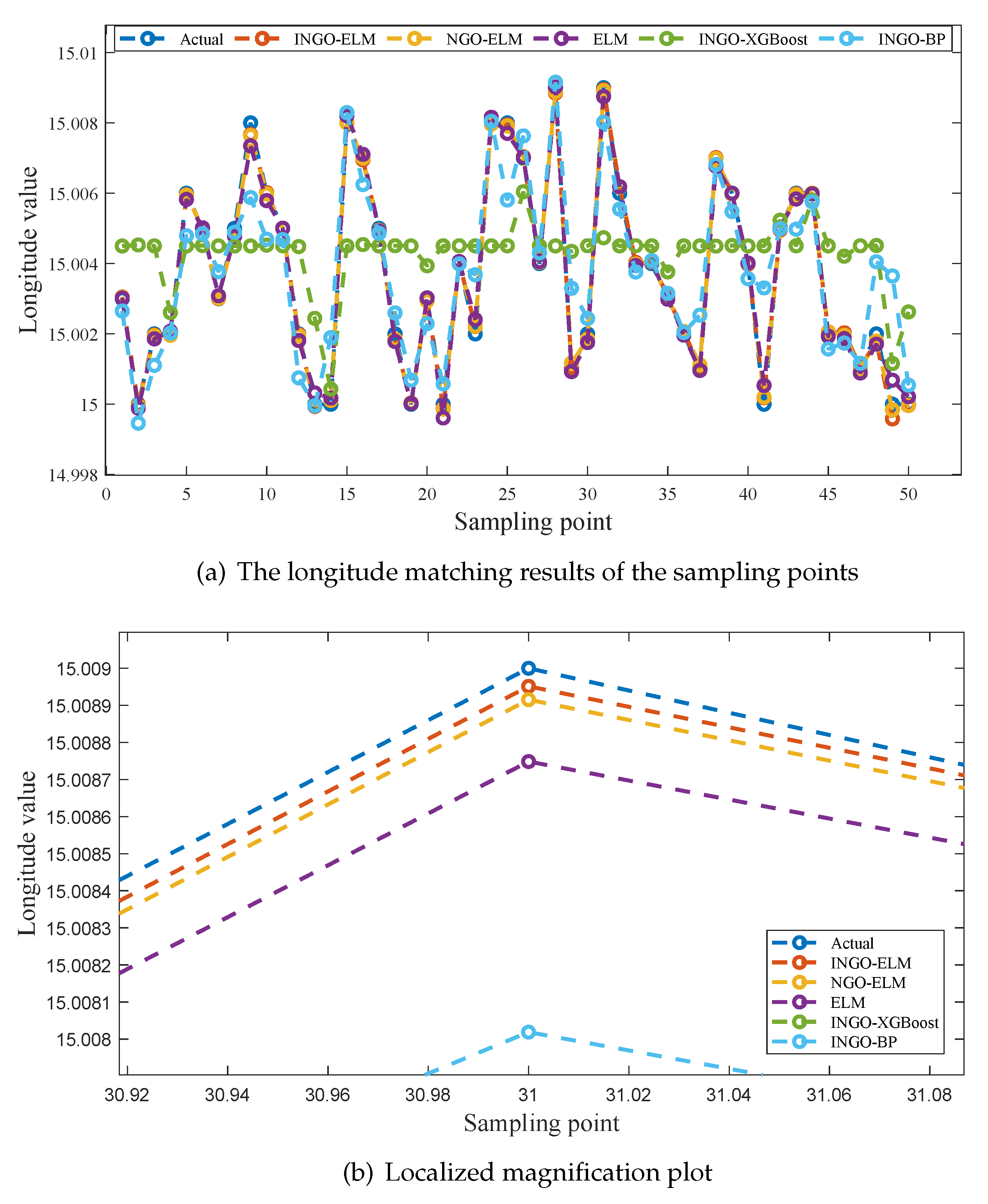

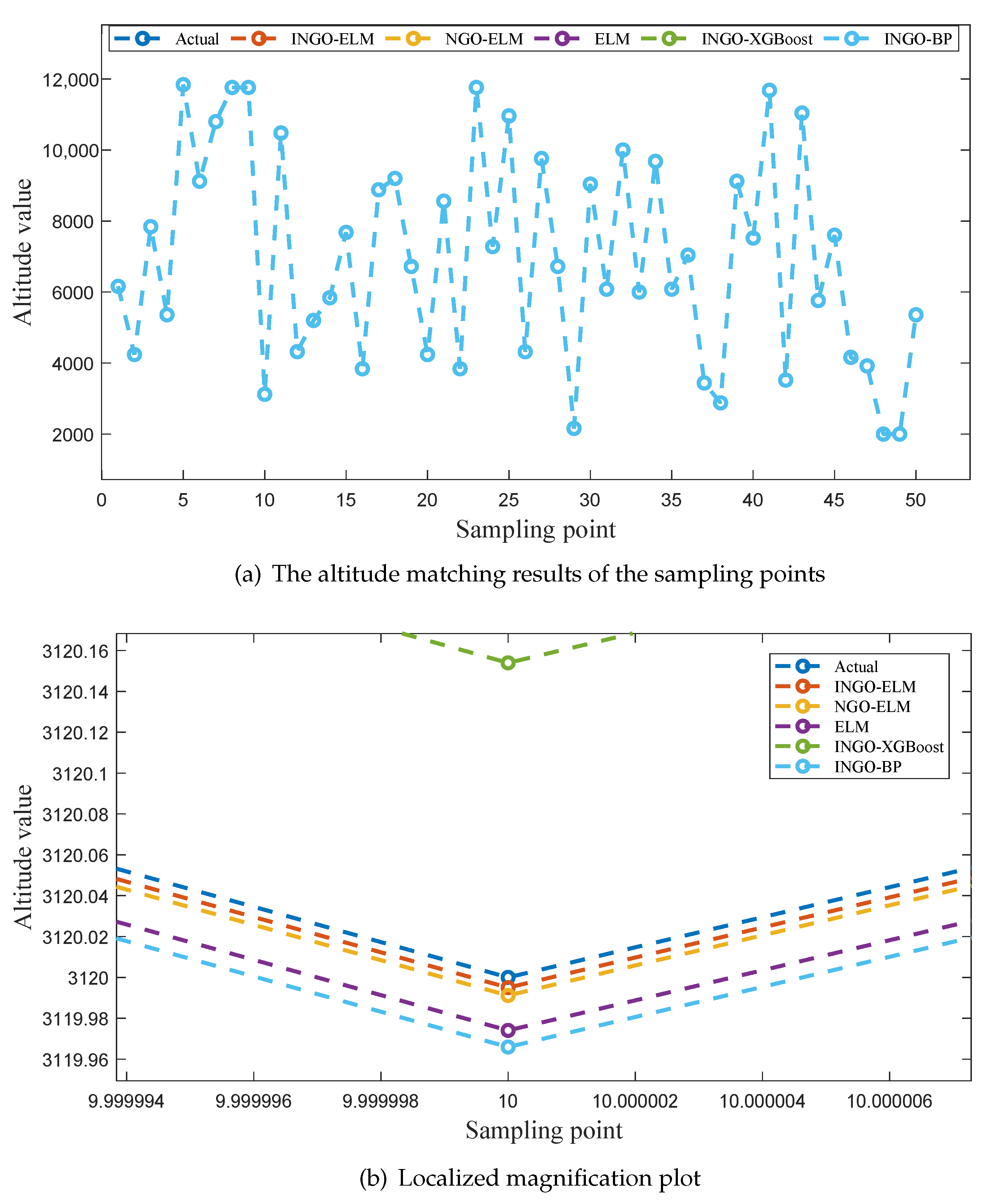

To provide a more intuitive comparison of the fitting performance of the five models, Figure 6a, Figure 7a and Figure 8a illustrate the predicted values of latitude, longitude, and altitude at the sampling points for the different models, along with the corresponding true values. Enlarged sections of these three figures are shown in Figure 6b, Figure 7b, and Figure 8b.

As seen in Figure 6a and Figure 8a, the predicted values of all five models fit the true values well at all sampling points. However, Figure 7a shows that the INGO-XGBoost and INGO-BP models fit poorly, while the other three models still perform well. This may be because, within the sampling range of this study, the longitude range [15, 15.009] is much narrower than the latitude range [15, 16], while the sampling interval for both is 0.001 degrees. As a result, only 10 distinct longitude values are sampled, compared with 1001 latitude values, so the longitude samples lack sufficient diversity. The INGO-XGBoost and INGO-BP models fail to capture key features of such data, which leads to their poor prediction performance. As shown in Figure 6b, Figure 7b, and Figure 8b, the INGO-ELM model achieves the highest matching accuracy.

Based on a comprehensive analysis of Table 7, Table 8 and Table 9 and Figure 6, Figure 7, and Figure 8, the test results confirm the effectiveness of the three improvement measures proposed for NGO in this study. In addition, once the geomagnetic data at the aerial vehicle's location have been obtained, the trained INGO-ELM model can determine the aerial vehicle's geographic coordinates in just 0.27 µs. Therefore, the proposed INGO-ELM geomagnetic matching model significantly outperforms the NGO-ELM, ELM, INGO-XGBoost, and INGO-BP models, achieving stable, precise, and real-time positioning.

It is worth noting that achieving the positioning accuracy of the INGO-ELM geomagnetic matching model imposes stringent requirements on geomagnetic sensors. Taking latitude as an example, in the IGRF-13 model, when longitude and altitude remain unchanged, the geomagnetic field may change by only a few nanoteslas (nT) per 0.001 degrees of latitude (approximately 110 m). In the simulation results of this paper, the mean absolute error of latitude matching is 6.38 m, roughly one-seventeenth of this 110 m step, so the corresponding field change is only a fraction of a nanotesla. To achieve this positioning accuracy, the magnetometer must therefore resolve field changes smaller than 1 nT. However, as shown in References [46,47,48,49], with the development of atomic magnetometer technology, geomagnetic sensors will be capable of picotesla (pT)-level or even femtotesla (fT)-level three-axis magnetic field measurements over a high dynamic range in noisy and physically demanding environments. Such sensors would meet the performance requirements of the geomagnetic matching method proposed in this paper.
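The sensor requirement argued above can be checked with a back-of-the-envelope calculation. The Python sketch below assumes that the field varies linearly across one 0.001° latitude step (about 111.32 m). It takes 1, 3, and 5 nT as hypothetical values for "a few nanoteslas".

```python
STEP_M = 111.32  # 0.001 degree of latitude in meters (spherical approximation)


def field_change_nt(gradient_nt, step_m, distance_m):
    """Field change over distance_m, assuming the field varies linearly by
    gradient_nt nanoteslas across every step_m meters."""
    return gradient_nt * distance_m / step_m


# Field change across the 6.38 m latitude MAE for hypothetical gradients.
for gradient in (1.0, 3.0, 5.0):
    print(gradient, round(field_change_nt(gradient, STEP_M, 6.38), 3))
```

Under these assumptions, the field change across 6.38 m is roughly 0.06 nT to 0.29 nT, which is well below 1 nT.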

4.4. Robustness Verification

Within the sampling range shown in Table 3, the interference of non-natural noise on the magnetic field is minimal. This study therefore primarily focuses on the impact of observational errors from the geomagnetic sensor on the INGO-ELM geomagnetic matching model. To evaluate the robustness of the INGO-ELM geomagnetic matching model, Gaussian white noise with standard deviations of 1 nT and 5 nT was added to the input data of the test set. The test results are presented in Table 10, Table 11 and Table 12.
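The noise injection described above can be sketched as follows. This minimal NumPy example assumes that the noise is independent across samples and across the three field components, with the same standard deviation on each axis. The function name and the fixed seed are illustrative.

```python
import numpy as np


def add_sensor_noise(B_test_nt, sigma_nt, seed=0):
    """Add zero-mean Gaussian white noise (std sigma_nt, in nT) to each field component."""
    rng = np.random.default_rng(seed)
    return B_test_nt + rng.normal(0.0, sigma_nt, size=B_test_nt.shape)
```

Only the test inputs are perturbed, for example with `sigma_nt=1.0` and `sigma_nt=5.0`, and the already trained models are then evaluated on the noisy inputs.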

In Table 10 and Table 11, the INGO-ELM model exhibits the smallest mean absolute error for geomagnetic matching. This indicates that it maintains the highest geomagnetic matching accuracy among the five models even after Gaussian white noise is introduced. These two tables also show that, with added noise, the mean absolute errors of INGO-ELM and NGO-ELM are significantly lower than those of the other three models. When the standard deviation of the Gaussian white noise is 1 nT, the matching accuracy of the two models is similar. However, when the standard deviation is 5 nT, the mean absolute errors of the INGO-ELM model for latitude and longitude are, after unit conversion, approximately 12.41 m and 12.28 m lower than those of the NGO-ELM model, respectively. These results suggest that the INGO-ELM model has significantly better anti-interference capability than the NGO-ELM model.

Table 12 further indicates that when Gaussian white noise with a standard deviation of 1 nT is added to the inputs of the INGO-ELM geomagnetic matching model, the order of magnitude of most error values remains unchanged. When the standard deviation increases to 5 nT, the mean absolute errors of the INGO-ELM model for latitude, longitude, and altitude become, after unit conversion, 15.83 m, 17.55 m, and 0.027 m, respectively, which still represent a high level of geomagnetic matching accuracy. Meanwhile, the maximum errors become 213.63 m, 196.13 m, and 0.409 m after unit conversion. However, such errors occur with extremely low probability, and these abnormal values can be eliminated using filtering algorithms. Therefore, a comprehensive analysis of the data in Table 10, Table 11 and Table 12 reveals that the INGO-ELM model not only achieves excellent matching accuracy but also remains robust in the presence of noise.

5. Conclusions

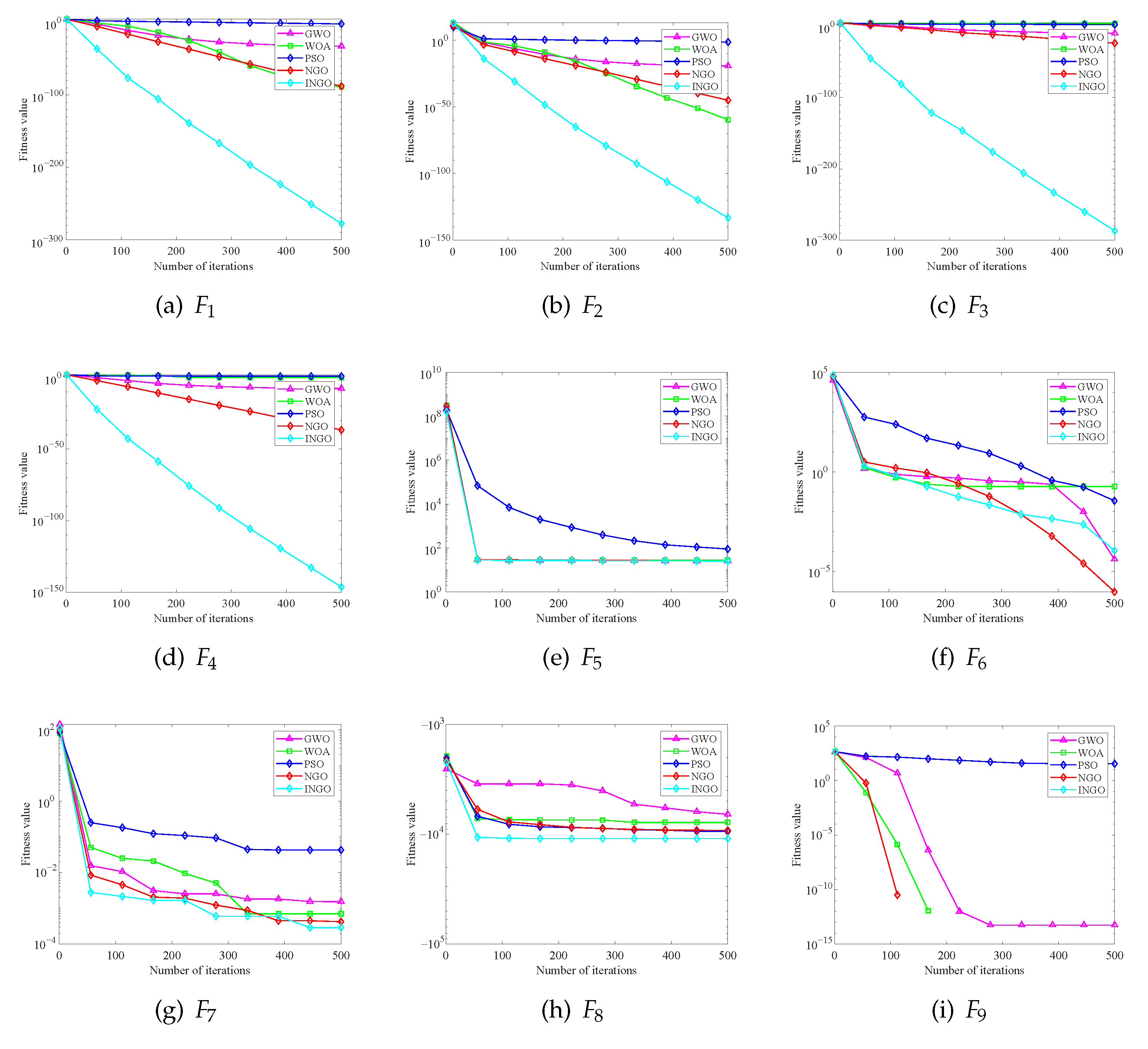

Geomagnetic matching-assisted navigation offers several advantages, including all-terrain and all-weather capability, strong anti-jamming performance, and no error accumulation, which can compensate for the shortcomings of the INS/GPS integrated navigation system. The INGO-ELM geomagnetic matching model proposed in this paper enables real-time, precise, and stable positioning for high-speed aerial vehicles. In this model, an improved NGO algorithm is used to optimize the initial weights and biases of the ELM model, and the effectiveness of the improvements is validated on the CEC2005 benchmark function suite. The results demonstrate that the INGO algorithm performs best overall in the tests and that the improvements effectively enhance the convergence rate, convergence accuracy, and stability of the NGO algorithm.

In addition, this paper employs the IGRF-13 model to generate a geomagnetic matching dataset and conducts comparative tests of several geomagnetic matching models, including INGO-ELM, NGO-ELM, ELM, INGO-XGBoost, and INGO-BP. The simulation results show that, once the airborne equipment acquires the geomagnetic data, the INGO-ELM model takes only 0.27 µs to obtain the latitude, longitude, and altitude of the aerial vehicle. After unit conversion, the corresponding mean absolute errors are approximately 6.38 m, 6.43 m, and 0.0137 m, respectively. Considering all types of geomagnetic matching error data comprehensively, the geomagnetic matching performance of the INGO-ELM model is significantly superior to that of the other four models. Furthermore, the INGO-ELM model maintained a high level of geomagnetic matching accuracy after Gaussian white noise was added to the test set inputs, indicating good robustness. Therefore, the proposed INGO-ELM algorithm can accomplish the positioning task in real time with high matching accuracy, even in the presence of observational errors from the magnetic sensor.

Future Outlook: Although the INGO-ELM model demonstrates very high geomagnetic matching accuracy, it requires precise geomagnetic data from the target area for training, and a major challenge lies in reliably acquiring such data in advance. Although the IGRF-13 model adopted in this study is a widely recognized international geomagnetic reference field model, it exhibits certain discrepancies from the real geomagnetic field. The geomagnetic matching algorithm proposed herein is, however, equally applicable to datasets generated by other, more accurate geomagnetic field models that establish a one-to-one correspondence between geomagnetic information and location information. In future work, we will focus on the construction of regional geomagnetic field models. Additionally, geomagnetic matching-assisted navigation may be severely disrupted in special circumstances, such as geomagnetic storms. It is therefore crucial to explore how to integrate the INGO-ELM model with other navigation systems to handle such situations effectively.