1. Introduction

Extended reality (XR) is an umbrella term for all immersive technologies that lie within the reality–virtuality continuum, a theoretical framework that describes the relationship between real and virtual environments [1]. The continuum ranges from the real environment at one end to the fully virtual environment at the other, with augmented reality (AR) and mixed reality (MR) in between. It helps categorize and compare different types of immersive technologies by their level of immersion and interaction with the physical world, and it provides a framework for designing immersive experiences that fit a particular point on the continuum. Virtual reality (VR) creates a completely digital environment that simulates either the physical world or an imaginary one. AR overlays digital content on top of the real world, while MR blends digital objects with the physical world in real time.

In recent decades, XR has attracted growing interest across fields including retail [2], education [3], manufacturing [4], and healthcare [5]. Technological advancements have transformed healthcare by introducing innovative approaches that enhance patient care and clinical outcomes, and XR technologies are increasingly recognized for their potential in both clinical practice and research [6]. XR made its debut in the healthcare sector with surgical procedures and has since expanded to medical training, patient care, and other areas [5]. Recent evidence indicates that XR benefits patient education, the education and training of medical students, surgical planning, medical imaging, rehabilitation, and pain management [7]. It has also been incorporated into the treatment of several conditions, including neuropsychiatric and neurodevelopmental disorders (e.g., post-traumatic stress disorder (PTSD), panic disorder, specific phobias, and autism spectrum disorder (ASD)), as well as in dental medicine, orthopedics, and obesity management [5,8].

XR can offer an engaging and authentic user experience that is practical for teaching and for providing immediate guidance during complex medical emergencies, diagnostic procedures, interventions, and procedural applications [9]. Moreover, XR can give beginners extra support as they build proficiency in making medical decisions, using medical devices and equipment, and executing medical procedures. XR also gives healthcare professionals access to people, information, and experiences that were once inaccessible, thereby reducing the geographical barriers between value-based healthcare delivery and patients [9]. Perhaps the most noteworthy benefit of XR in healthcare is its ability to provide communal and shared experiences. In the past, medical professionals who were not ill themselves might have struggled to empathize with their patients’ conditions. XR could change this by letting medical personnel experience what it feels like to be unwell and thus gain a better understanding of their patients’ perspectives. In such applications, a specialized headset simulates an immersive 3D virtual environment in which users feel physically present in a synthetic space. Through interactions with virtual characters (avatars) and real-time audio, visual, and haptic feedback, medical personnel can engage in simulations that replicate patients’ experiences, thereby fostering empathy and improving patient care [10].

Recent advances in sensor technologies have played a pivotal role in shaping the development and application of XR systems. Sensors such as inertial measurement units (IMUs), depth cameras, RGB-D sensors, LiDAR, eye trackers, and physiological sensors are now integral to most XR platforms. These sensing components enable spatial tracking, environmental awareness, and user interaction, forming the technical foundation for immersive experiences. The richness and reliability of sensor data directly affect system responsiveness, safety, and realism, factors that are especially critical in high-stakes environments such as healthcare [11].

The type and configuration of sensors differ significantly across VR, AR, and MR systems. VR systems often emphasize motion tracking and visual immersion, leveraging IMUs and optical tracking to replicate physical motion within digital spaces. AR systems require more advanced sensing, such as simultaneous localization and mapping (SLAM) and depth estimation, to anchor virtual content to the physical world accurately. MR systems go a step further by integrating multimodal inputs such as eye gaze, gesture recognition, and contextual awareness to allow real-time dynamic interaction between digital and physical elements. These differences in sensing complexity not only influence the cost and form factor of XR systems but also determine their suitability for specific clinical and operational tasks. For instance, MR systems like HoloLens 2 are better suited for image-guided surgery, while simpler VR systems may suffice for immersive procedural training.

Evaluating XR systems is far from straightforward, because numerous heterogeneous factors must be considered in an integrated manner. Recent work highlights the potential of integrating XR in healthcare and notes challenges in system-level evaluation and integration [5], although formal qualitative and quantitative comparison frameworks remain underdeveloped. This paper introduces a Decision Support System (DSS) tailored to the evaluation and selection of XR systems in the healthcare sector. The DSS is founded on a framework that integrates the Supply Chain Operations Reference (SCOR) model with the Fuzzy Analytic Network Process (FANP). It exhibits the hallmark characteristics of a DSS: it integrates diverse data sources, uses a hierarchical model, and employs algorithms such as FANP to support informed decision-making. This combination allows the influential criteria for assessing XR systems in healthcare settings to be systematically identified and prioritized. In addition, two state-of-the-art fuzzy approaches to ANP are compared, and the results are analyzed using statistical testing. The proposed SCOR-FANP system allows decision-makers to evaluate and compare available XR systems systematically and select the most suitable one, and it provides a comprehensive evaluation of the factors affecting the performance of XR systems in healthcare. It also allows researchers to evaluate an XR system early, during the design phase. The main contribution of this work lies in applying FANP, combined with the SCOR model, to healthcare XR system selection; this combination and the resulting insights constitute a distinctive contribution to the existing literature.

The remainder of this paper is organized as follows. Section 2 reviews the literature and highlights the most important features of XR systems. Section 3 describes the methodology of the study. Section 4 discusses the experimental results. Section 5 presents a performance evaluation of existing XR technologies based on the proposed criteria. Finally, Section 6 concludes the paper.

2. Literature Survey

This section provides a comprehensive review of the literature on the applications of XR technologies in healthcare. We categorize the literature into distinct sections, focusing on clinical applications, technological advancements, and evaluation studies.

Clinical Application-Based Studies. XR technologies have been introduced into healthcare over the past decade because of several potential benefits, such as scalability, increased motivation, and cost savings. The expansion of XR in the healthcare industry has the potential to change how medical services are provided to, among others, autistic children, patients with PTSD or depression, patients undergoing surgery, and patients with brain injuries [5]. Medical areas in which XR technologies are being used include medical education and training, surgical simulation, medical diagnostics, neurological rehabilitation, psychotherapy, and telemedicine [12]. VR has been used therapeutically to treat post-traumatic stress disorder, anxiety disorders, phobias, schizophrenia, addiction, eating disorders, and obesity [13,14,15,16,17]. Patients are more at ease confronting challenging situations “virtually” than in real life because they know the digital environment is not real, and new behaviors learned in this way are likely to transfer to the real world [17]. In the context of patient care, AR can help in planning surgical interventions and in communicating potential medical complications to patients and their families [18]. Ref. [8] proposed that XR technologies could significantly improve adherence to behavioral interventions and challenged researchers to explore XR-based interventions for treating adolescent obesity. Ref. [14] reviewed XR and telehealth interventions for children and adolescents with ASD to verify their efficacy and validity. Ref. [7] wrote a chapter outlining the established clinical applications of XR, including mental well-being, pain management, physiotherapy, and rehabilitation. Ref. [19] conducted a systematic review of XR-based therapies for patients with anxiety disorders, exploring the perceptions and experiences of patients and healthcare providers and comparing the effectiveness of different XR approaches in anxiety disorder treatment.

Technology-Based Studies. Using whole-slide microscopic images, Google’s AR Microscope employed machine learning to detect cancer in real time [13]. The system enables the integration of artificial intelligence (AI) into routine workflows and was shown to be useful in detecting prostate cancer and metastatic breast cancer [14]. A systematic review [20] indicated that integrating AI, XR, and human digital twin-based solutions can reduce technical errors and provide a universal framework for advancing personalized cardiology. Ref. [5] discussed the potential impact of XR on the healthcare industry, outlining its use cases, challenges, XR tools and techniques for intelligent healthcare, recent developments in XR-based healthcare services, and the potential benefits and future directions of XR in the medical domain. Ref. [21] provided a comprehensive overview of XR’s theoretical potential, highlighting its technical advancements and biomedical applications. Ref. [9] proposed a standardized taxonomy of essential XR capabilities and described key functional characteristics that could serve as a conceptual design and development framework for XR-based medical training and real-time clinical guidance for astronauts during deep space missions.

Evaluation-Based Studies. How to evaluate the effectiveness of XR systems is still an open question. Most existing studies that evaluate and/or compare XR systems have focused on qualitative assessment [22,23,24,25]. Outside healthcare, Ref. [26] evaluated AR devices from a process point of view in the manufacturing industry. Ref. [27] critically evaluated the use of AI and XR in real-world biomedical settings, comparing their outcomes with traditional healthcare practices and illustrating their effectiveness through case studies. Ref. [28] also examined the integration of AI, XR, and VR in biomedical applications, highlighting their roles in diagnosis, treatment, and medical training. Ref. [29] applied the Delphi method to enhance the transparency and quality of reporting in early-phase clinical evaluations of XR applications. Ref. [30] identified the role of immersive technologies, including VR, AR, MR, XR, the metaverse, and gamification, in supporting healthcare responses to COVID-19. Overall, the literature shows a need for a comprehensive quantitative DSS for evaluating XR systems in healthcare.

To build upon these findings and better understand how XR systems can be optimized for different healthcare applications, it is crucial to examine the role of sensing technologies that underpin XR platforms. The type and configuration of sensors integrated into XR systems directly influence their usability, fidelity, and contextual appropriateness for clinical interventions.

Table 1 presents a comparative overview of the primary sensing technologies used in XR systems, highlighting their roles in determining system performance and relevance to healthcare applications. It categorizes sensors such as IMUs, optical trackers, depth cameras, LiDAR, eye trackers, gesture sensors, physiological sensors, and SLAM techniques based on their technical functions, XR domain usage, and clinical applicability. By aligning sensor capabilities with their impact on XR fidelity and medical integration, the table bridges technical specifications with real-world healthcare needs.

Gap Analysis

While several researchers have explored the potential of XR technologies in medicine, there is a lack of work proposing a DSS that addresses the criteria influencing the selection and evaluation of XR systems in healthcare. To the best of our knowledge, this study is the first to present a DSS based on multiple-criteria analysis for evaluating and selecting XR systems for the healthcare sector. The proposed system combines the SCOR model, a literature survey, and expert knowledge to identify and rank the influential criteria for evaluating XR systems in healthcare. ANP is used to determine the relative importance of each criterion in a fuzzy environment, which helps deal with the uncertainty and imprecision surrounding the opinions of experts and the literature, allowing a more flexible and nuanced approach to factor identification and ranking [32,33,34,35]. Two state-of-the-art fuzzy approaches are compared to provide a more comprehensive analysis. The proposed system allows decision-makers to evaluate and compare available XR systems systematically and select the most suitable one, while giving researchers an early evaluation during the design phase, thereby supporting effective decision-making.

3. Materials and Methods

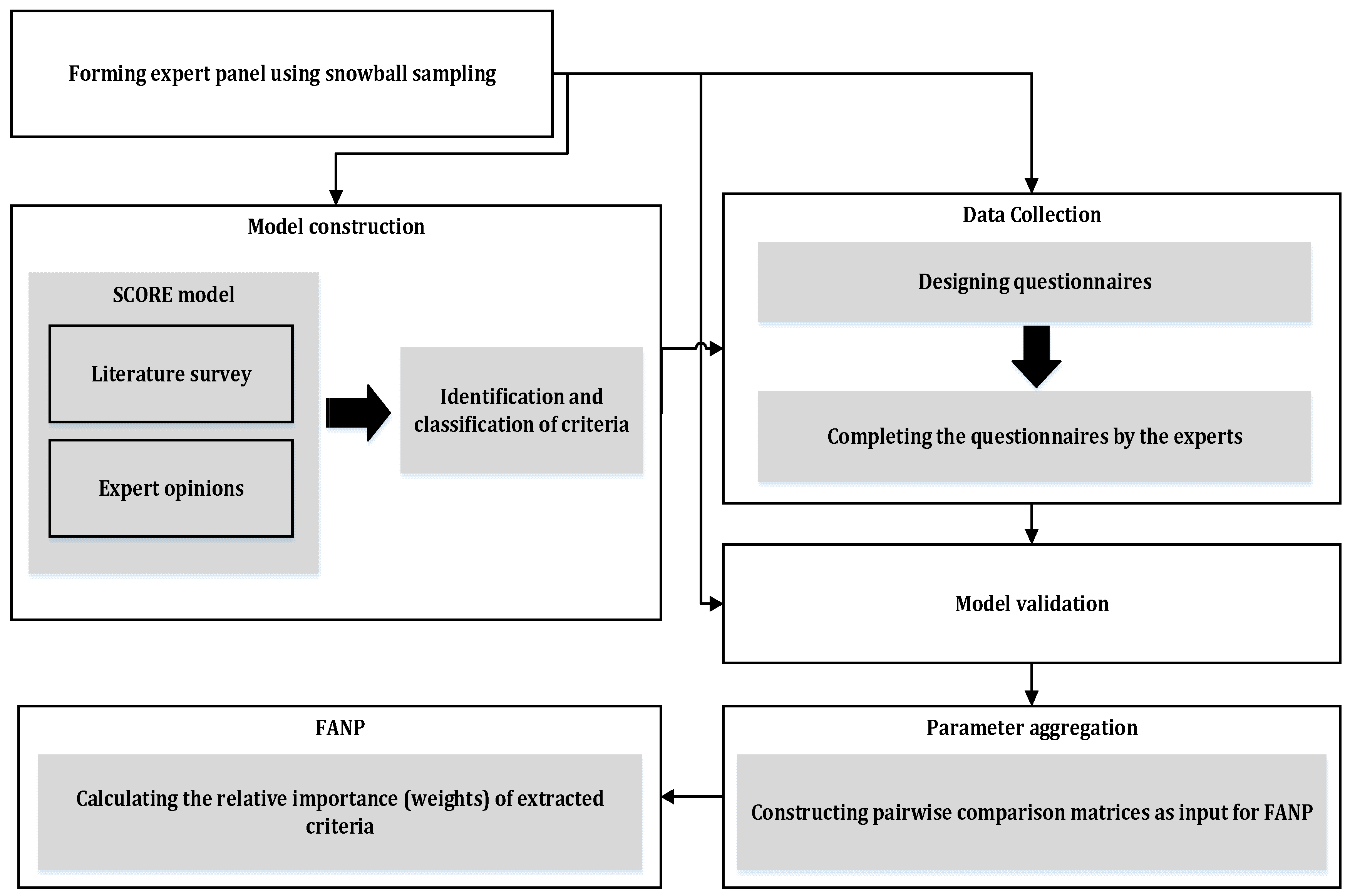

Our proposed multiple-criteria analysis approach consists of five phases: model construction, data collection, model confirmation/validation, model parameter determination, and calculation of local and global weights, as shown in Figure 1.

3.1. Model Construction

Based on an extensive review of the literature, the most critical evaluation criteria for XR systems in the healthcare domain are first identified and then classified. Loosely based on the well-established SCOR model [36,37], this paper provides a taxonomy of XR system selection criteria and classifies them into distinct classes.

The SCOR model, widely recognized as an aid to supply chain strategy formulation, serves as a robust foundation. Its key attributes, such as a standard description of supply chain processes, performance metrics, and best practices, enable a systematic approach to classifying and evaluating XR systems. Leveraging SCOR’s principles allows this paper to develop a coherent taxonomy that can adapt to the evolving needs of the healthcare industry. The taxonomy breaks the criteria for XR system selection in healthcare down into four overarching classes (reliability, responsiveness, agility, and cost), which are further subdivided into 18 specific criteria.

Reliability: The ability to perform tasks as expected. An XR system is expected to be accurate, safe, and convenient.

Accuracy: The degree to which the result of a measurement or calculation conforms to the correct value or a standard [38]. This definition includes concepts such as precision and recall.

Safety: The condition or state of being free from harm, injury, or loss [39,40].

Ease of installation and use: This includes features such as weight, whether one or both hands remain free during use, and the availability of help and documentation [37].

User control and freedom: The ability of the user to move around freely and perform intended tasks with full control [41].

Responsiveness: The speed and timeliness of service delivery.

Feedback: The response time of the system and its ability to provide real-time feedback [26,42].

Setup time: The time needed to prepare the system for operation [42,43].

Maximum usage time: The maximum time that the system can operate without interruption [43].

Interaction method: The means by which the user interacts with the XR system, which largely determines its usability. It can be vocal, gestural, touch-based, physiological, or a combination of these [41].

Information update gap: How quickly the XR system can exchange updated information with the central system, which supports a more responsive process [42].

Agility: The capacity to effectively sense and respond to external influences/stimuli.

Supported tech: Whether the system supports AR, VR, MR, or a combination of these [26].

Expandability: The degree to which a system can easily be extended or upgraded with new functionalities and abilities to address new requirements [39].

Operating environment: The environments (indoor, outdoor, and severe) in which the system can operate [42].

Operating range: The maximum area within which the system can operate [26].

Cost: All costs associated with operating the processes.

Purchase cost: The total cost paid to acquire the XR system [44].

Operating cost: All costs required to operate the XR system [45].

Maintenance cost: The total cost of keeping the system in working order [39].

Warranty cost: The cost paid to obtain a warranty for the XR system [46].

Depreciation cost: The reduction in the initial value of the XR system resulting from its use over time [46].

Figure 2 graphically showcases the hierarchical model based on this taxonomy.
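To make the hierarchy concrete, the taxonomy can be encoded as a simple mapping from each class to its sub-criteria, which later weighting steps can iterate over. This is a minimal illustrative sketch; the names `XR_CRITERIA` and `parent_of` are hypothetical and not part of the described DSS.

```python
# Hierarchical model: four SCOR-based classes, each with its sub-criteria (18 in total).
XR_CRITERIA: dict[str, list[str]] = {
    "Reliability": ["Accuracy", "Safety", "Ease of installation and use",
                    "User control and freedom"],
    "Responsiveness": ["Feedback", "Setup time", "Maximum usage time",
                       "Interaction method", "Information update gap"],
    "Agility": ["Supported tech", "Expandability", "Operating environment",
                "Operating range"],
    "Cost": ["Purchase cost", "Operating cost", "Maintenance cost",
             "Warranty cost", "Depreciation cost"],
}


def parent_of(sub_criterion: str) -> str:
    """Return the class (cluster) that a sub-criterion belongs to."""
    for cls, subs in XR_CRITERIA.items():
        if sub_criterion in subs:
            return cls
    raise KeyError(sub_criterion)


n_sub = sum(len(subs) for subs in XR_CRITERIA.values())
print(n_sub)  # 18
```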

3.2. Data Collection

Using the snowball sampling method, XR experts were recruited to confirm, identify, and categorize the factors and sub-factors.

3.2.1. Snowball Sampling Method

Snowball sampling is a sampling technique in which initial subjects recruit future study subjects from among their acquaintances. It can be used to identify experts in a particular field, and its advantages include the ability to reach hidden populations and its low cost. Snowball sampling starts with a convenience sample of initial subjects [47]. These initial subjects serve as “seeds” from which the sample expands over several steps, just as a snowball grows in size as it rolls down a hill [48]. For this work, the opinions of 15 experts working at the intersection of XR and healthcare were solicited. All experts met strict eligibility criteria regarding domain experience, graduate qualifications, and peer-reviewed contributions. A group of five to eight experts suffices for the proposed model; for example, Ref. [48] started with a snowball sample of three Internet of Things experts, which was later expanded to a panel of eight. We acknowledge that the snowball referrals used to contact some participants may have introduced a degree of network-homogeneity bias.
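The wave-by-wave growth described above can be illustrated as a breadth-first expansion over a referral network, capped by a target sample size and a maximum number of waves. This is an illustrative sketch only: the referral graph, the eligibility predicate, and the function name `snowball_sample` are hypothetical, and the simplification that only eligible contacts go on to refer others is our assumption rather than the study's recruitment protocol.

```python
from collections import deque


def snowball_sample(seeds, referrals, eligible, max_size, max_waves):
    """Grow a sample wave by wave from seed experts via their referrals.

    seeds: initial convenience sample; referrals: dict person -> referred contacts;
    eligible: predicate applying the inclusion criteria (hypothetical).
    """
    sample = [s for s in seeds if eligible(s)]
    seen = set(seeds)
    frontier = deque((s, 0) for s in sample)  # (person, wave in which they joined)
    while frontier and len(sample) < max_size:
        person, wave = frontier.popleft()
        if wave >= max_waves:  # do not expand beyond the last allowed wave
            continue
        for contact in referrals.get(person, []):
            if contact in seen:
                continue
            seen.add(contact)
            if eligible(contact):  # only eligible experts join and refer further
                sample.append(contact)
                frontier.append((contact, wave + 1))
                if len(sample) == max_size:
                    break
    return sample


# Hypothetical referral network: "C" fails the eligibility criteria.
referrals = {"A": ["B", "C"], "B": ["D"], "C": ["D", "E"], "E": ["F"]}
panel = snowball_sample(["A"], referrals, lambda p: p != "C", max_size=10, max_waves=3)
```

Here `panel` evaluates to `["A", "B", "D"]`; because "C" is excluded, the branch through "E" and "F" is never reached, which mirrors how referral chains can narrow the pool (the network-homogeneity concern noted above).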

3.2.2. Questionnaire Design

To identify and prioritize the evaluation criteria for XR technologies in healthcare, the FANP—a multi-criteria decision-making method—was employed, as it is well-suited for capturing the complexity and interdependence of evaluation criteria under uncertainty.

The questionnaire was designed based on the validated model proposed by [49], which is commonly used in prior decision analysis research. The evaluation framework was structured as a network, allowing interdependencies among criteria and sub-criteria to be considered. To gather input, we developed a set of pairwise comparison questions, each asking participants to evaluate the relative importance of one criterion over another. For instance, within the “reliability” cluster, one question was: “How important is Accuracy in comparison to Safety?” This approach was applied consistently across all other criteria and sub-criteria clusters.

Respondents expressed their judgments using the linguistic scale (e.g., equally important, moderately more important) provided in Table 2, and each judgment was then converted to a triangular fuzzy number (TFN) to account for the ambiguity and subjectivity of human judgment. This conversion allowed expert preferences to be represented more realistically in the presence of uncertainty. Two measures support the validity of the questionnaire: first, it is based on a previously validated instrument, and second, the homogeneity of the criteria within each cluster was taken into account [50].
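The conversion from linguistic answers to TFNs can be sketched as a lookup followed, when the second criterion is the preferred one, by the standard TFN reciprocal (l, m, u)^-1 = (1/u, 1/m, 1/l). The values of Table 2 are not reproduced in this text, so `LINGUISTIC_SCALE` below is a hypothetical placeholder modeled on scales commonly used in fuzzy AHP/ANP; the names `TFN` and `to_tfn` are likewise illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TFN:
    """Triangular fuzzy number (l, m, u) with 0 < l <= m <= u."""
    l: float
    m: float
    u: float

    def reciprocal(self) -> "TFN":
        # (l, m, u)^-1 = (1/u, 1/m, 1/l) for positive TFNs
        return TFN(1 / self.u, 1 / self.m, 1 / self.l)


# HYPOTHETICAL placeholder scale; substitute the actual values from Table 2.
LINGUISTIC_SCALE = {
    "equally important": TFN(1, 1, 1),
    "moderately more important": TFN(1, 3 / 2, 2),
    "strongly more important": TFN(3 / 2, 2, 5 / 2),
    "very strongly more important": TFN(2, 5 / 2, 3),
    "extremely more important": TFN(5 / 2, 3, 7 / 2),
}


def to_tfn(answer: str, first_is_preferred: bool = True) -> TFN:
    """Map a linguistic answer to a TFN; take the reciprocal if the second criterion wins."""
    tfn = LINGUISTIC_SCALE[answer]
    return tfn if first_is_preferred else tfn.reciprocal()


a_ij = to_tfn("strongly more important")         # e.g. Accuracy over Safety
a_ji = to_tfn("strongly more important", False)  # the mirrored matrix entry
```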

3.3. Model Validation

To ensure the robustness and relevance of the proposed model, a comprehensive validation process was undertaken.

3.3.1. Expert-Based Qualitative Validation

Preliminary Model Review: Initially, a preliminary version of the model was presented to a select panel of domain experts. These experts, with substantial experience in both healthcare and XR systems, meticulously reviewed the model’s structure, criteria, and sub-criteria.

Feedback and Refinement: Through focused group discussions and one-on-one interactions, the experts provided feedback on the relevance, comprehensiveness, and clarity of the model components. This iterative process allowed for the identification of any overlooked criteria, potential redundancies, or ambiguities.

Final Model Endorsement: After incorporating the suggested modifications, the refined model was once again presented to the expert panel for a final review. Their consensus endorsement confirmed the model’s validity and relevance in the target domain.

3.3.2. Questionnaire Distribution

Once the model’s validity was ascertained through expert endorsement, the next phase was the distribution of the questionnaire.

Development of the Questionnaire: The questionnaire was designed to capture the relative importance and interrelationships among the model’s criteria and sub-criteria. It integrated the findings from the literature review with insights from the preliminary expert review.

Pilot Testing: Before the full-scale distribution, the questionnaire underwent pilot testing with a subset of experts. This ensured clarity, appropriateness, and the feasibility of the questions. Feedback from this phase further fine-tuned the questionnaire.

Full-Scale Distribution: The finalized questionnaire was then disseminated among a broader set of experts in the field. Their responses facilitated the quantitative analysis of the model’s components and their interrelationships. Expert input was gathered through successive rounds over four weeks, with feedback loops until all participants confirmed their final responses. The complete questionnaire is provided in Appendix B.

Through this rigorous validation process, we ensured that our model not only stands up to academic scrutiny but also aligns well with practical, real-world insights and expertise.

3.4. Parameter Aggregation

In FANP, the results of the pairwise comparisons made by the experts are aggregated, and the parameters are calculated as follows. The responses are mapped to TFNs using the scale specified in Table 2. The weight of expert $j$ for question $i$ is then calculated using the following equation:

where $\text{distance}_{ij}$ is the difference between the response of expert $j$ to question $i$ and the average of all experts’ responses to question $i$. The aggregated TFN for each comparison is calculated from the weighted average and standard deviation of the responses to that question, and the aggregated TFNs form the pairwise comparison matrices.
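The expert-weighting equation itself is not reproduced in this text, so the sketch below only illustrates the general idea under explicitly hypothetical choices: each expert's weight for a question decreases with their distance from the question mean (here proportional to 1 / (1 + distance)), responses are treated as crisp scale values, and the aggregated TFN is taken as (weighted mean minus weighted standard deviation, weighted mean, weighted mean plus weighted standard deviation). None of these choices should be read as the paper's exact formula; `aggregate_question` is a hypothetical name.

```python
from statistics import fmean


def aggregate_question(responses):
    """Aggregate crisp expert responses to one comparison into a TFN (illustrative).

    HYPOTHETICAL choices, not the paper's equation:
      * distance_ij = |x_ij - mean of question i|; weight proportional to 1 / (1 + distance_ij);
      * TFN = (mean_w - sd_w, mean_w, mean_w + sd_w), lower bound floored at a small
        positive value so the TFN stays usable as a ratio judgment.
    """
    mean_i = fmean(responses)
    raw = [1.0 / (1.0 + abs(x - mean_i)) for x in responses]
    total = sum(raw)
    weights = [r / total for r in raw]  # expert weights for this question sum to 1
    m = sum(w * x for w, x in zip(weights, responses))  # weighted average
    sd = sum(w * (x - m) ** 2 for w, x in zip(weights, responses)) ** 0.5  # weighted std dev
    return (max(m - sd, 1e-6), m, m + sd), weights


tfn, weights = aggregate_question([1.0, 2.0, 3.0])
```

With these choices the central expert receives twice the weight of the two outlying ones (`weights == [0.25, 0.5, 0.25]`), and identical responses collapse to a crisp TFN.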

3.5. Calculating Criteria Weights

A fuzzy ANP-based model is used to calculate the local and global weights because ANP can capture more general relationships than AHP, including interdependencies and feedback among criteria. ANP is combined with fuzzy set theory because the original ANP model cannot handle the imprecision and subjectivity of the experts’ pairwise comparisons. The versatility of ANP and FANP extends well beyond healthcare; Refs. [52,53,54], for instance, demonstrate this adaptability in applications ranging from flood vulnerability assessment to soil erosion susceptibility evaluation. The common thread across these applications is the robustness of ANP and FANP in navigating intricate decision-making processes, and such cross-domain evidence supports their relevance for XR system selection in healthcare.

Let the TFN $\tilde{a}_{ij} = (l_{ij}, m_{ij}, u_{ij})$ be the element of the pairwise comparison matrix that compares criterion $i$ with criterion $j$ (the pairwise comparison ratio), where $l_{ij}$, $m_{ij}$, and $u_{ij}$ denote the smallest, the most promising, and the largest possible values describing a fuzzy event, respectively [55]. Several approaches have been proposed to extend the ANP model to a fuzzy setting. This paper focuses on two of them, both of which determine the final weights of the criteria and prioritize the criteria accordingly.

Table 3 provides a comparative summary of FANP Approaches A and B, outlining their methodological differences, computational structures, advantages, and complexity.


FANP approach A

This approach, which is based on the model proposed by [49], uses fuzzy pairwise comparison judgments instead of exact numerical values for the comparison ratios. With this method, the original fuzzy prioritization problem can be transformed into a non-linear program. The three-step procedure is described in detail as follows [56]:

Step A-1. Determine the local weights of the factors and sub-factors from the pairwise comparison matrices by solving the following mathematical programming model:

$$
\begin{aligned}
\max\ & \lambda \\
\text{subject to}\ & (m_{ij} - l_{ij})\,\lambda\, w_j - w_i + l_{ij}\, w_j \le 0, \\
& (u_{ij} - m_{ij})\,\lambda\, w_j + w_i - u_{ij}\, w_j \le 0, \\
& \sum_{k=1}^{n} w_k = 1, \quad w_k > 0,\ k = 1, \dots, n, \\
& i = 1, \dots, n-1,\ j = 2, \dots, n,\ j > i,
\end{aligned}
$$

where $(l_{ij}, m_{ij}, u_{ij})$ is the TFN comparing criterion $i$ with criterion $j$, and $w = (w_1, \dots, w_n)$ and $\lambda$ denote the local weight vector and the consistency index, respectively. If the optimal objective value $\lambda^*$ is positive, the set of fuzzy judgments is reasonably consistent; if it is negative, the fuzzy judgments are inconsistent. The closer $\lambda^*$ is to one, the greater the consistency and compatibility of the judgments. The consistency index thus gauges the level of consistency in the provided pairwise comparisons: in essence, $\lambda$ reflects how far the judgments are from perfect consistency, with larger values indicating smaller deviations. Although the exact threshold can vary with the context and complexity of the decision problem, an index of 0.6 or higher is generally deemed acceptable, implying that the judgments are sufficiently consistent for decision-making [49].
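A non-linear program of this kind can be solved in several ways. The sketch below assumes the fuzzy preference programming constraints (m_ij − l_ij)·λ·w_j − w_i + l_ij·w_j ≤ 0 and (u_ij − m_ij)·λ·w_j + w_i − u_ij·w_j ≤ 0. For a fixed λ these are linear in w and reduce to ratio bounds on w_i / w_j, i.e. difference constraints on log-weights, whose feasibility can be checked with Bellman-Ford; because the bounds tighten monotonically as λ grows, λ* can then be found by bisection. This solution strategy is our illustrative choice, not necessarily the solver used in the study, and `fpp_weights` is a hypothetical name.

```python
import math


def fpp_weights(judgments, n, tol=1e-9):
    """Fuzzy preference programming solved by bisection on lambda (illustrative).

    judgments: {(i, j): (l, m, u)} for 0-based i < j, with 0 < l <= m <= u.
    For fixed lam the constraints become ratio bounds
        l + (m - l) * lam <= w_i / w_j <= u - (u - m) * lam,
    i.e. difference constraints on x = log(w), checked with Bellman-Ford.
    Returns (lambda_star, weights).
    """

    def feasible(lam):
        edges = []  # (src, dst, c) encodes x[dst] - x[src] <= c
        for (i, j), (l, m, u) in judgments.items():
            lower = l + (m - l) * lam
            upper = u - (u - m) * lam  # stays >= m > 0 for lam <= 1
            edges.append((j, i, math.log(upper)))  # x_i - x_j <= log U
            if lower > 0:  # a non-positive lower bound never binds for w > 0
                edges.append((i, j, -math.log(lower)))  # x_j - x_i <= -log L
        dist = [0.0] * n  # implicit source with zero-weight edges to every node
        for _ in range(n):
            changed = False
            for src, dst, c in edges:
                if dist[src] + c < dist[dst] - 1e-12:  # tolerance absorbs rounding
                    dist[dst] = dist[src] + c
                    changed = True
            if not changed:  # converged: dist is a feasible log-weight vector
                z = [math.exp(x) for x in dist]
                total = sum(z)
                return [v / total for v in z]
        return None  # still relaxing after n passes: negative cycle, infeasible

    weights = feasible(1.0)
    if weights is not None:
        return 1.0, weights  # judgments are fully consistent
    lo, hi = -1.0, 1.0
    while feasible(lo) is None:  # widen the search until a feasible lambda is found
        lo *= 2
        if lo < -1e6:
            raise ValueError("no feasible lambda: crisp judgments are inconsistent")
    while hi - lo > tol:  # feasible set shrinks as lambda grows, so bisection is valid
        mid = (lo + hi) / 2
        if feasible(mid) is None:
            hi = mid
        else:
            lo = mid
    return lo, feasible(lo)


# Hypothetical, fully consistent judgments (modal values match weights 0.5, 0.3, 0.2).
lam, w = fpp_weights({(0, 1): (4 / 3, 5 / 3, 2), (0, 2): (2, 2.5, 3),
                      (1, 2): (1.2, 1.5, 1.8)}, n=3)
```

For these consistent judgments the sketch returns λ* close to 1 and weights close to (0.5, 0.3, 0.2); conflicting judgments drive λ* toward zero or below.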

Step A-2. In this step, the interdependent effects among the factors and the global weights of the factors are calculated. The interdependent effects are elicited from the experts through the questionnaires. The global weights of the factors are obtained as the product of the interdependency matrix and the local weight vector of the factors.

Step A-3. The global weight of each sub-factor is calculated by multiplying its local weight by the global weight of the corresponding factor, obtained in the previous step.
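Steps A-2 and A-3 reduce to a matrix-vector product followed by a per-cluster scaling. The sketch assumes the interdependency matrix is column-normalized (each column sums to 1), as is common in ANP, and renormalizes the result to guard against rounding; the function names and all numbers in the usage example are hypothetical.

```python
def factor_global_weights(factors, interdependency, local):
    """Step A-2: multiply the interdependency matrix by the local factor weights."""
    n = len(factors)
    raw = [sum(interdependency[r][c] * local[c] for c in range(n)) for r in range(n)]
    total = sum(raw)  # renormalise so the global weights sum to 1
    return {f: x / total for f, x in zip(factors, raw)}


def subfactor_global_weights(factor_global, sub_local):
    """Step A-3: global weight of a sub-factor = its local weight x its factor's global weight."""
    return {
        sub: lw * factor_global[factor]
        for factor, subs in sub_local.items()
        for sub, lw in subs.items()
    }


# Hypothetical two-factor example; all numbers are made up for illustration.
g = factor_global_weights(["Reliability", "Cost"],
                          [[0.7, 0.3], [0.3, 0.7]],  # columns sum to 1
                          [0.6, 0.4])
sub = subfactor_global_weights(g, {"Reliability": {"Accuracy": 0.5, "Safety": 0.5},
                                   "Cost": {"Purchase cost": 1.0}})
```

Here the factor weights become roughly 0.54 and 0.46, and Accuracy, Safety, and Purchase cost receive global weights of about 0.27, 0.27, and 0.46.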


FANP approach B

The approach presented by [57] for fuzzy prioritization is based on the fuzzy arithmetic means of [58,59] and requires a fuzzy ranking procedure to compare the final fuzzy scores. The final weights of the criteria are determined in the following steps:

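Step B-1. Calculate the fuzzy synthetic extent of criterion $i$, denoted $S_i$ (the combined judgment for criterion $i$ relative to all others), as follows [57]:

$$
S_i = \sum_{j=1}^{n} \tilde{a}_{ij} \otimes \left[ \sum_{k=1}^{n} \sum_{j=1}^{n} \tilde{a}_{kj} \right]^{-1}
= \left( \frac{\sum_{j} l_{ij}}{\sum_{k}\sum_{j} u_{kj}},\ \frac{\sum_{j} m_{ij}}{\sum_{k}\sum_{j} m_{kj}},\ \frac{\sum_{j} u_{ij}}{\sum_{k}\sum_{j} l_{kj}} \right),
$$

where $\tilde{a}_{ij} = (l_{ij}, m_{ij}, u_{ij})$ is the TFN comparing criterion $i$ with criterion $j$.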

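Step B-2. To obtain the criteria weights, fuzzy numbers must be compared. The degree of possibility (the likelihood that one fuzzy number is greater than another) for two fuzzy synthetic extents $S_2 = (l_2, m_2, u_2)$ and $S_1 = (l_1, m_1, u_1)$ is defined as follows [57]:

$$
V(S_2 \ge S_1) =
\begin{cases}
1, & \text{if } m_2 \ge m_1, \\
0, & \text{if } l_1 \ge u_2, \\
\dfrac{l_1 - u_2}{(m_2 - u_2) - (m_1 - l_1)}, & \text{otherwise.}
\end{cases}
$$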

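Step B-3. Calculate the relative preference of each criterion, denoted $d'(i)$ (the minimum degree of possibility that criterion $i$ is greater than every other criterion), and then normalize these values to obtain the criteria weights:

$$
d'(i) = \min_{k \ne i} V(S_i \ge S_k), \qquad w_i = \frac{d'(i)}{\sum_{k=1}^{n} d'(k)}.
$$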
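Steps B-1 to B-3 (extent analysis with the degree-of-possibility comparison) can be sketched compactly, with TFNs represented as `(l, m, u)` tuples in an n × n matrix. This is an illustrative implementation; the function names are hypothetical, and the fuzzy matrices in the usage example are made up.

```python
def synthetic_extents(matrix):
    """Step B-1: fuzzy synthetic extent S_i of each row of an n x n TFN matrix."""
    row_sums = [tuple(sum(t[k] for t in row) for k in range(3)) for row in matrix]
    tot_l = sum(r[0] for r in row_sums)
    tot_m = sum(r[1] for r in row_sums)
    tot_u = sum(r[2] for r in row_sums)
    # Multiply by the inverse of the grand total: (l, m, u)^-1 = (1/u, 1/m, 1/l)
    return [(l / tot_u, m / tot_m, u / tot_l) for l, m, u in row_sums]


def possibility(s2, s1):
    """Step B-2: degree of possibility V(S2 >= S1)."""
    l1, m1, _ = s1
    l2, m2, u2 = s2
    if m2 >= m1:
        return 1.0
    if l1 >= u2:
        return 0.0
    return (l1 - u2) / ((m2 - u2) - (m1 - l1))


def extent_weights(matrix):
    """Step B-3: d'(i) = min over k != i of V(S_i >= S_k), then normalise."""
    s = synthetic_extents(matrix)
    d = [min((possibility(s[i], s[k]) for k in range(len(s)) if k != i), default=1.0)
         for i in range(len(s))]
    total = sum(d)  # >= 1, since the row with the largest m has d' = 1
    return [x / total for x in d]


# Hypothetical 3 x 3 fuzzy comparison matrix.
M = [[(1, 1, 1), (1, 2, 3), (1, 2, 3)],
     [(1 / 3, 1 / 2, 1), (1, 1, 1), (1, 1, 1)],
     [(1 / 3, 1 / 2, 1), (1, 1, 1), (1, 1, 1)]]
w = extent_weights(M)
```

Criterion 0 receives the largest weight, and the two identical rows receive equal weights. A known property of this method is that a clearly dominated criterion can receive a weight of exactly zero, as happens for the second criterion of the 2 × 2 matrix `[[(1, 1, 1), (2, 3, 4)], [(1/4, 1/3, 1/2), (1, 1, 1)]]`.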

Table 3. Comparison of FANP approaches A and B.


| Aspect | FANP Approach A [49] | FANP Approach B [57] |
|---|---|---|
| Methodology | Fuzzy pairwise comparison, transformed into a non-linear program. | Fuzzy synthetic extent and fuzzy ranking procedure. |
| Step Structure | Three steps: local weight calculation, interdependent effects, and global weight computation. | Three steps: fuzzy synthetic extent, fuzzy ranking, and relative preference calculation. |
| Mathematical Foundation | Based on fuzzy optimization with constraints. | Based on fuzzy arithmetic means and possibility theory. |
| Handling of Inconsistency | The consistency index measures deviation from perfect consistency. | Relies on fuzzy ranking to compare final fuzzy scores. |
| Global Weight Calculation | Local weights are multiplied by the interdependency matrix to obtain global weights. | Final weights are determined by fuzzy synthetic extent and relative preferences. |
| Complexity | Relatively more computationally intensive due to non-linear programming. | Computationally simpler due to direct use of fuzzy arithmetic. |
| Advantages | Suitable for complex, interdependent criteria. | Simpler approach, more intuitive ranking of criteria. |

5. Performance Evaluation of Existing XR Technologies Based on the Proposed Criteria

It is important to note that the relative importance of the evaluation criteria proposed in this study may vary with the intended use case of the XR system. For instance, surgical training applications may prioritize accuracy and feedback responsiveness, whereas remote collaboration solutions may emphasize operating range and ease of use. Chiang et al. developed a VR simulator aimed at reducing the training time for cardiologists pursuing certification and experience in intracardiac interventions. Their assessment of the simulator’s efficacy was consistent with the results of our study: their evaluation ranked ‘accuracy’ as the highest priority, followed by ‘responsiveness’ and then ‘cost’ [60]. However, to enable a consistent and generalizable comparison across XR technologies, we rely on the weights derived from the FANP model developed in this study.

To assess the performance of widely available XR systems, we selected eight representative devices spanning AR, VR, and MR technologies: Microsoft HoloLens 2, Meta Quest 3, Magic Leap 2, Varjo XR-4, HTC Vive Pro 2, Apple Vision Pro, Pico 4 Enterprise, and RealWear Navigator 500. Each system was evaluated against the 18 sub-factors grouped under the four primary criteria: reliability, responsiveness, agility, and cost. The score for each sub-factor was informed by manufacturer specifications, third-party technical reviews, and relevant literature (see Section 2); scores were normalized to a 1-to-10 scale and then weighted by the FANP-derived importance. The detailed scoring tables for each XR system are available in Appendix A.
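The normalize-then-weight scoring step described above can be sketched as follows. This is a minimal illustration under assumptions: linear min-max rescaling onto 1 to 10 is assumed (the exact normalization rule is not specified here), cost-type sub-factors are assumed to be inverted so that the cheapest option scores 10, and the device names, raw values, and weights are hypothetical placeholders rather than data from this study.

```python
from typing import Dict, List


def normalize_1_to_10(values: List[float], lower_is_better: bool = False) -> List[float]:
    """Linearly rescale raw values onto [1, 10]; cost-type sub-factors are inverted
    so that the lowest raw value receives 10 (assumed convention)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [5.5 for _ in values]  # no spread: assign the scale midpoint
    scaled = [(v - lo) / (hi - lo) for v in values]
    if lower_is_better:
        scaled = [1.0 - s for s in scaled]
    return [1.0 + 9.0 * s for s in scaled]


def weighted_total(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Total score = sum over sub-factors of (FANP weight x normalized score)."""
    return sum(weights[name] * scores[name] for name in weights)


if __name__ == "__main__":
    # Hypothetical raw data for three placeholder devices (not values from this study).
    costs = normalize_1_to_10([1000, 3500, 6000], lower_is_better=True)
    usage_hours = normalize_1_to_10([2, 3, 4])
    weights = {"purchase_cost": 0.4, "max_usage_time": 0.6}  # hypothetical weights
    for i, name in enumerate(["device_a", "device_b", "device_c"]):
        scores = {"purchase_cost": costs[i], "max_usage_time": usage_hours[i]}
        print(name, round(weighted_total(scores, weights), 2))
```

Because FANP global weights sum to 1, the weighted total of 1-to-10 scores also stays on the 1-to-10 scale, which keeps device totals directly comparable.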

Figure 7 compares the strengths and weaknesses of each system across all criteria at a glance and illustrates a trade-off between cost efficiency and high-end technical capability. Devices such as the Meta Quest 3 and Pico 4 Enterprise offer affordability and ease of use but tend to score slightly lower on clinically critical dimensions such as safety and accuracy. In contrast, premium systems such as Apple Vision Pro and Varjo XR-4 deliver top-tier performance at a significantly higher cost. These distinctions underscore the need to select XR systems in light of the operational goals and constraints of the intended healthcare environment.

As displayed in Figure 7, Apple Vision Pro and Microsoft HoloLens 2 consistently achieve high scores, with most sub-factor ratings above 8 out of 10. They particularly excel in critical dimensions such as accuracy, information update gap, user control and freedom, and safety. This uniformly strong performance highlights their suitability for high-stakes healthcare applications, including surgical visualization, complex diagnostics, and real-time guided interventions, where immersive precision and system reliability are paramount.

RealWear Navigator 500 and Pico 4 Enterprise, by contrast, are strongest in cost-related and usability-focused dimensions. They achieve top scores in purchase cost, operating cost, maintenance cost, and ease of installation and use, while maintaining competitive scores in responsiveness-related sub-factors such as setup time and maximum usage time. Although they do not match the premium devices in spatial fidelity or interaction precision, their affordability and operational durability make them well suited for resource-limited healthcare settings, as well as for training, remote collaboration, and telehealth applications.

Figure 7 also shows that Varjo XR-4 achieves a perfect score in both accuracy and supported technology, reflecting its high-resolution display and hybrid MR/VR capabilities. This makes it particularly advantageous for applications requiring visual realism and high-performance computing, such as advanced simulation environments or neurosurgical planning. Similarly, Magic Leap 2 scores highly on interaction method and safety, aligning it with AR applications such as physical therapy, patient education, and in-field visualization. HTC Vive Pro 2, in turn, scores relatively well on usage-related dimensions such as ease of installation and maximum usage time but falls behind on several other metrics, including depreciation cost and setup time. This variability suggests that, while the device may be suitable for training and demonstration purposes, it may be less suited to sustained clinical deployment.

To better understand how the primary evaluation criteria contribute to the overall performance of each XR system, Figure 8 displays a stacked bar chart summarizing the weighted contributions of reliability, responsiveness, agility, and cost.
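Each segment of a stacked bar of this kind is simply the weighted score summed within one primary criterion. The sketch below illustrates that decomposition; the sub-factor names and their mapping to criteria are hypothetical and illustrative only (the study's full set of 18 sub-factors is defined earlier in the paper), as are the weights and scores in any usage.

```python
from collections import defaultdict
from typing import Dict

# Hypothetical mapping of sub-factors to primary criteria (illustrative only;
# not the study's complete sub-factor list or its confirmed grouping).
CRITERION_OF = {
    "accuracy": "reliability",
    "setup_time": "responsiveness",
    "operating_range": "agility",
    "purchase_cost": "cost",
}


def criterion_contributions(scores: Dict[str, float],
                            weights: Dict[str, float],
                            criterion_of: Dict[str, str] = CRITERION_OF) -> Dict[str, float]:
    """Sum weight x normalized score within each primary criterion; the segments
    of one device's stacked bar add up to its total weighted score."""
    contrib: Dict[str, float] = defaultdict(float)
    for name, w in weights.items():
        contrib[criterion_of[name]] += w * scores[name]
    return dict(contrib)
```

Since the contributions partition the total, two devices with similar totals can still show very different bar compositions, which is the kind of difference the discussion of Figure 8 draws on.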

Figure 8 reveals not only which XR systems score highest but also how their strengths are composed across the four decision-making criteria. A notable insight is that top-performing systems do not rely equally on all factors; instead, each has a distinct profile of emphasis. For instance, although Apple Vision Pro and Microsoft HoloLens 2 both sit at the top of the performance ranking, their category distributions show subtle but meaningful differences: Apple Vision Pro shows a more diversified contribution across reliability, responsiveness, and agility, whereas Microsoft HoloLens 2’s strength is concentrated in responsiveness and reliability. This suggests that Apple’s system may offer greater adaptability across varied environments and use cases, while HoloLens 2 may be better suited to applications demanding fast, stable interaction in known settings.

Interestingly, the cost contribution is highest among mid-ranking systems such as RealWear Navigator 500 and Pico 4 Enterprise. These systems exhibit a value-driven profile in which a substantial share of the score derives from low acquisition and operating costs, reinforcing their suitability for scaled implementations, workforce training, and budget-conscious clinical environments. In contrast, high-end systems such as Varjo XR-4 show a minimal cost contribution, reflecting that their performance stems from premium technical specifications rather than economic efficiency. This divergence underscores that systems optimized for technical superiority may require greater investment, even when their overall ranking is similar to that of more economical alternatives. A further insight concerns the responsiveness category, which is a common strength across nearly all devices, indicating that most XR manufacturers prioritize real-time feedback, interaction fluidity, and operational responsiveness. This may reflect competitive differentiation on user experience, especially as XR expands into healthcare settings where latency, gesture recognition, and feedback are essential for clinician and patient engagement. A complementary view is provided in Figure 9, which plots each XR system as a bubble in a 2D plane, with purchase cost on the x-axis and total weighted score on the y-axis.

Figure 9 offers a multidimensional view of XR system performance by simultaneously visualizing purchase cost, total weighted score, safety, and maximum usage time. From this perspective, value is clearly not linearly tied to investment: while systems such as Apple Vision Pro and Varjo XR-4 occupy the upper-right region, indicating high performance at high cost, lower-cost devices such as RealWear Navigator 500 and Pico 4 Enterprise achieve nearly comparable performance scores at significantly lower price points and with longer operating durations. Moreover, by encoding safety as color intensity and usage time as bubble size, the plot reveals an important operational insight: some lower-cost devices, such as RealWear, deliver not only economic efficiency but also durability and reliability, which are key for extended use in resource-constrained or mobile healthcare environments. This shifts the interpretation of “performance” from a purely technical measure to one that incorporates practical usability and sustainability, which is especially relevant for broader adoption across healthcare contexts.

While our evaluation offers a comprehensive comparison of XR devices using a structured set of criteria, several limitations warrant consideration. First, the scoring of individual sub-factors relied on secondary sources such as manufacturer specifications, third-party technical reviews, and peer-reviewed literature. Although these sources are valuable and commonly used in technology assessments, they may not fully capture real-world performance, particularly in clinical settings. To mitigate this limitation, we cross-referenced data from multiple independent sources whenever possible to improve the accuracy and consistency of the assigned scores, and all scores were normalized to a common 1–10 scale to allow meaningful comparison across devices and sub-criteria. Second, although we aimed to standardize the scoring process, some subjectivity remains unavoidable. To reduce this risk, the weighting of sub-factors was determined using FANP, which incorporates expert judgment in a structured and consistent manner, so that the influence of any individual subjective assessment is moderated by collective expert input.

6. Conclusions

Although the capabilities of XR systems in healthcare have not yet been fully explored, these systems can offer significant benefits for improving patient care in innovative and effective ways. We propose the first comprehensive FANP-based decision support system (DSS) specifically designed to evaluate XR technologies in healthcare. The framework integrates the SCOR model with FANP to systematically assess XR technologies by prioritizing key evaluation factors across four dimensions (reliability, responsiveness, agility, and cost) encompassing a total of 18 criteria. These factors were identified by combining the SCOR model, a literature survey, and experts’ knowledge and experience. To determine the importance of these factors and sub-factors, an integrated fuzzy ANP approach was used: two fuzzy ANP approaches from the literature were applied, and their results were analyzed to compare the relative weights of all identified factors. Kendall’s tau and Spearman’s rank correlation coefficients indicate a robust positive correlation between the rankings obtained by the two fuzzy approaches. Applying this framework to eight prominent XR systems highlighted performance trade-offs and helped identify domain-specific system strengths. Insights from the comparative analysis and sensor-based evaluation were discussed to guide procurement and development decisions in healthcare contexts. By using the proposed DSS, the selection of XR systems in healthcare can be improved, resulting in better outcomes for both patients and providers.

While this research provides a solid foundation for XR system evaluation in healthcare, there are natural areas for extension. The expert-driven scoring and weighting process, while rigorous, could be strengthened further by incorporating a broader range of perspectives or real-time user feedback. Additionally, because this study focused on a structured pre-market evaluation, future work could complement the model with longitudinal benchmarking using field data such as clinician satisfaction, patient outcomes, and user analytics. Tailoring the framework to specific clinical applications and integrating evolving sensing and AI technologies also represent meaningful directions for enriching the model and adapting it to ongoing advancements in XR.
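The rank-agreement check between the two fuzzy approaches can be illustrated with a short sketch. It assumes each approach yields a complete ranking of the factors with no ties; with tied ranks, tie-corrected variants are needed (for example, `scipy.stats.spearmanr` and `scipy.stats.kendalltau` handle ties). The rankings used in any example call are hypothetical.

```python
from itertools import combinations
from typing import Sequence


def spearman_rho(rank_a: Sequence[int], rank_b: Sequence[int]) -> float:
    """Spearman's rho via 1 - 6 * sum(d^2) / (n (n^2 - 1)); valid only without ties."""
    n = len(rank_a)
    d2 = sum((a - b) ** 2 for a, b in zip(rank_a, rank_b))
    return 1.0 - 6.0 * d2 / (n * (n * n - 1))


def kendall_tau(rank_a: Sequence[int], rank_b: Sequence[int]) -> float:
    """Kendall's tau-a: (concordant - discordant) / number of pairs; assumes no ties."""
    concordant = discordant = 0
    for i, j in combinations(range(len(rank_a)), 2):
        s = (rank_a[i] - rank_a[j]) * (rank_b[i] - rank_b[j])
        if s > 0:
            concordant += 1
        elif s < 0:
            discordant += 1
    n_pairs = len(rank_a) * (len(rank_a) - 1) // 2
    return (concordant - discordant) / n_pairs
```

For two hypothetical rankings that differ only by one adjacent swap, such as `[1, 2, 3, 4, 5]` and `[1, 3, 2, 4, 5]`, both coefficients stay close to 1, which is the pattern a robust positive correlation between the two approaches would show.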