Enhancing Time Series Anomaly Detection: A Knowledge Distillation Approach with Image Transformation

Abstract

1. Introduction

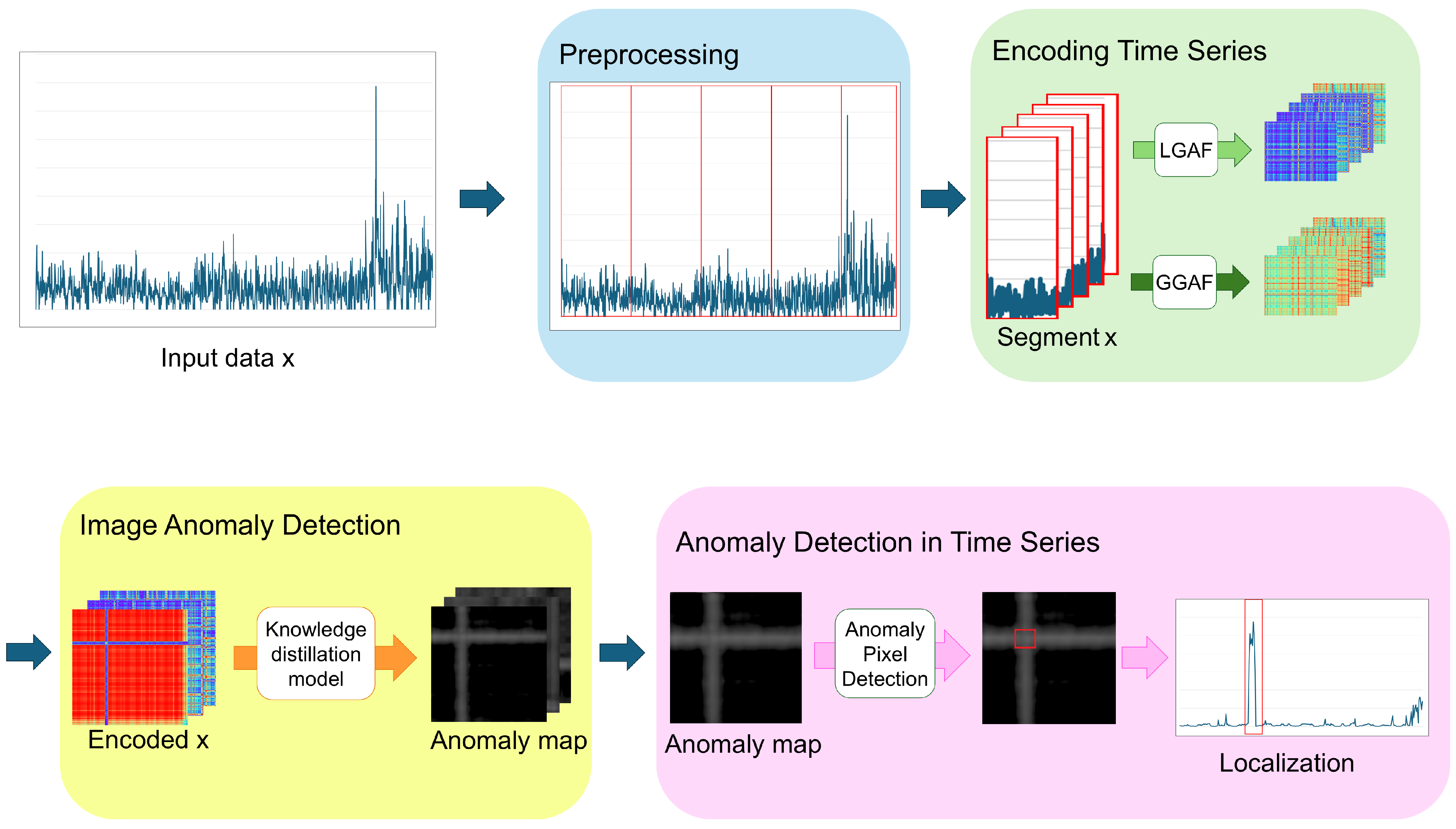

- Generalization of normal patterns through preprocessing: Normal time series data are segmented according to their repetitive patterns and transformed into images that preserve time series characteristics. Although normal patterns in time series data can be unstable due to noise and other factors, segmenting them by cycle and generalizing them into image representations makes detection more robust to such variability. This approach facilitates learning normal patterns in unsupervised anomaly detection, leading to improved detection performance.

- Anomaly-map-based time series anomaly detection: Using a knowledge-distillation-based anomaly detection model, an anomaly map is derived from image anomaly detection results. The transformed image and the derived anomaly map, which preserve the temporal dependency and time series characteristics, allow for an intuitive understanding of where anomalies occur. Leveraging this map and the preserved time series structure, we propose a more accurate and efficient anomaly detection algorithm for time series data.

- Experimental validation: Extensive experiments on various real-world datasets validate the effectiveness of the proposed framework and demonstrate significant performance gains over existing methods.

2. Related Works

2.1. Anomaly Detection of Time Series Data

2.2. Image Transformation of Time Series Data

2.3. Anomaly Detection of Image Data

3. Preliminaries

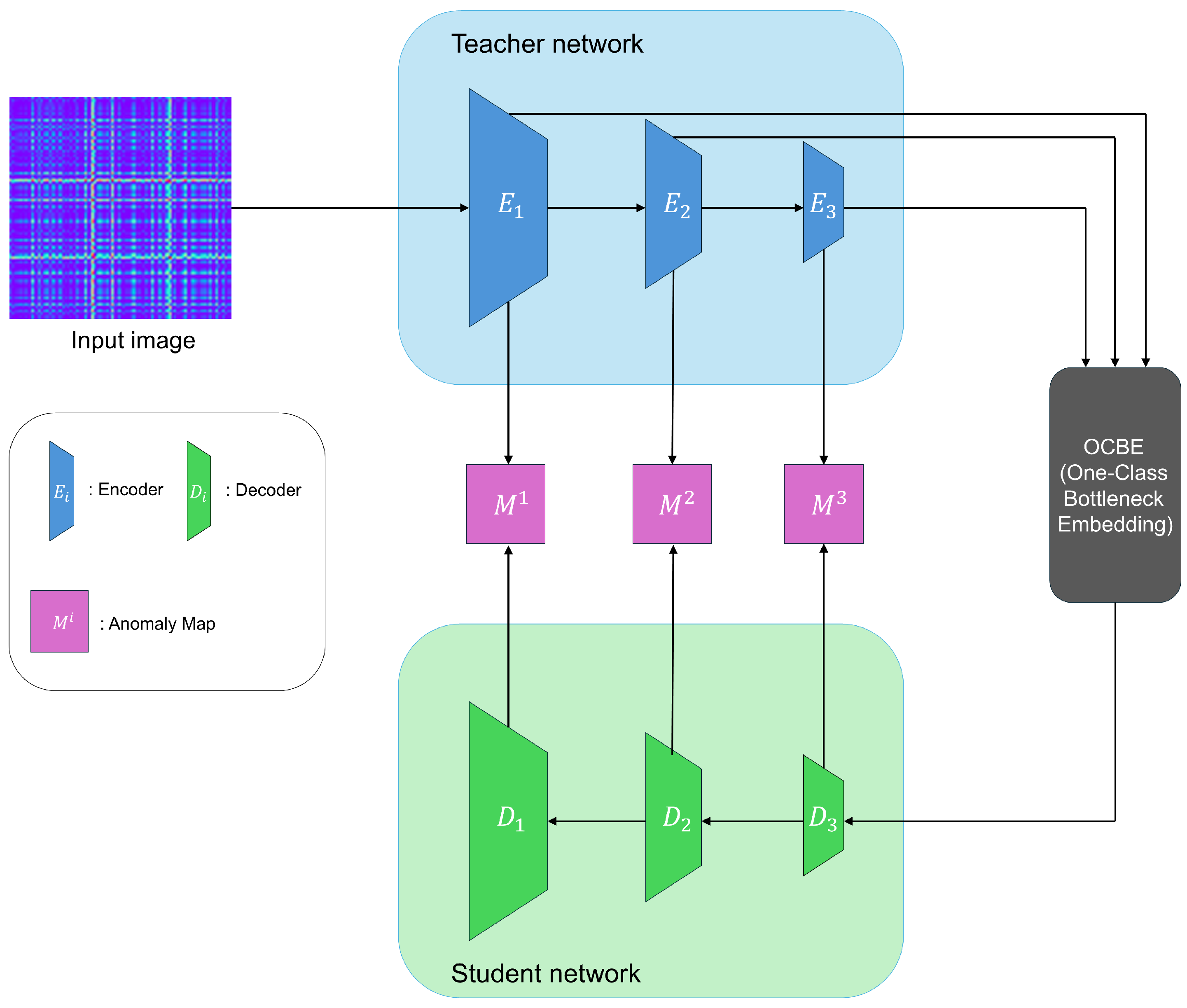

3.1. Reverse Distillation

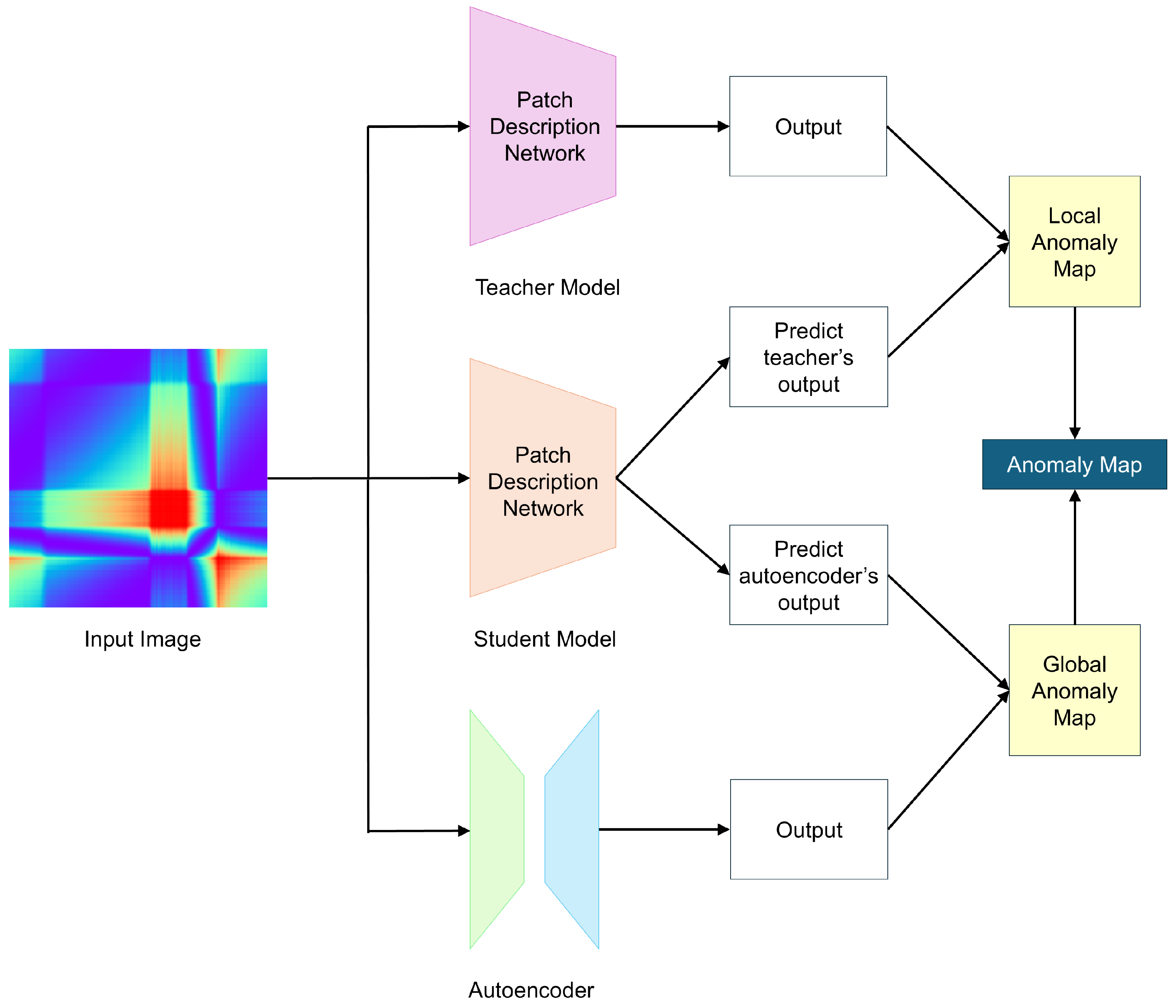

3.2. EfficientAD

4. Methods

4.1. Data Pre-Processing

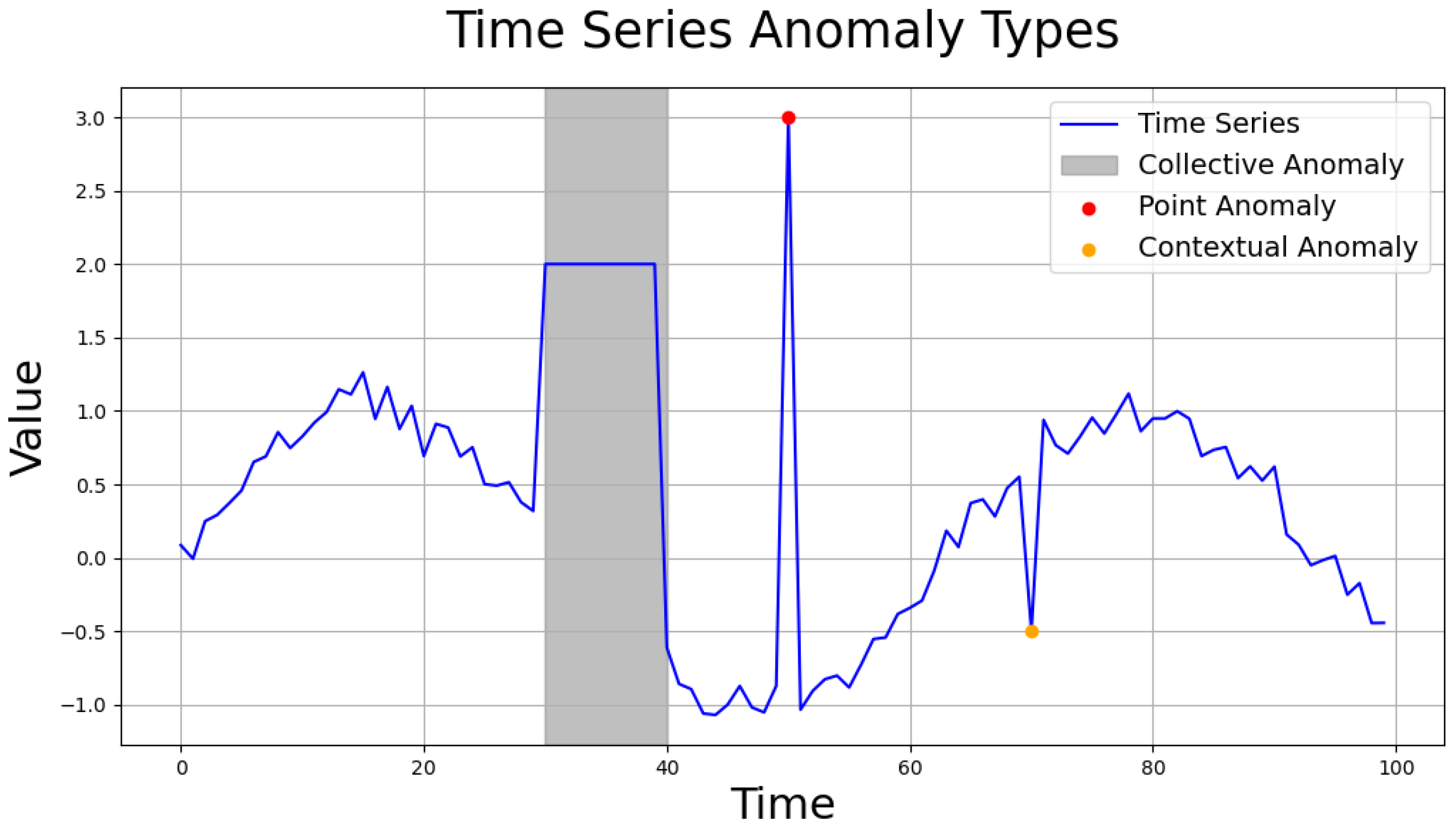

- Point anomaly: abnormal data values at specific time points.

- Contextual anomaly: data with individually normal values that appear abnormal in a temporal context.

- Collective anomaly: a group of data points that individually appear normal but together form an anomalous pattern.

4.1.1. Data Transformation

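- Min-Max Normalization scales data to the range $[0, 1]$, which accentuates small differences between values and can improve anomaly detection. However, it can distort the distribution when the data contain extreme values, because a few outliers compress all other values into a narrow interval. The transformation is expressed as $x'_t = \frac{x_t - x_{\min}}{x_{\max} - x_{\min}}$, where $x_{\min}$ and $x_{\max}$ are the minimum and maximum values of the time series $X = (x_1, \dots, x_T)$, respectively.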

- Z-Score Normalization shifts the data to a mean of zero and scales it by the standard deviation, which makes values that deviate strongly from the average easier to identify. It may underperform on non-normal distributions or on data heavily influenced by anomalies, since the anomalies themselves inflate the mean and standard deviation. The transformation is defined as $z_t = \frac{x_t - \mu}{\sigma}$, where $\mu$ and $\sigma$ are the mean and standard deviation of the time series $X$, respectively.

- Log Transformation reduces the impact of extreme values and stabilizes the data distribution. It is particularly useful for data spanning a wide range of values but may distort cyclic patterns. The log transformation is represented by the following equation:
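The three transformations can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the authors' code: the function names are hypothetical, the z-score uses the population standard deviation, and because the exact log formula is not reproduced here, the sketch assumes $\log(1 + x)$ applied to non-negative input.

```python
import math
from statistics import fmean, pstdev


def min_max_normalize(xs: list[float]) -> list[float]:
    """Scale a series to [0, 1] using its own minimum and maximum."""
    lo, hi = min(xs), max(xs)
    if hi == lo:  # constant series: avoid division by zero
        return [0.0 for _ in xs]
    return [(x - lo) / (hi - lo) for x in xs]


def z_score_normalize(xs: list[float]) -> list[float]:
    """Center a series at zero mean and divide by its population standard deviation."""
    mu, sigma = fmean(xs), pstdev(xs)
    if sigma == 0:  # constant series: only remove the mean
        return [x - mu for x in xs]
    return [(x - mu) / sigma for x in xs]


def log_transform(xs: list[float]) -> list[float]:
    """Compress large values with log(1 + x); assumes non-negative input."""
    if any(x < 0 for x in xs):
        raise ValueError("log_transform expects non-negative values")
    return [math.log1p(x) for x in xs]


series = [2.0, 4.0, 6.0, 100.0]  # three ordinary values and one extreme value
print(min_max_normalize(series))
print(z_score_normalize(series))
print(log_transform(series))
```

With this series, min-max scaling maps the outlier to 1 and squeezes the other three values below 0.05, which is the distortion noted above, while the log transform compresses the outlier itself (log(101) ≈ 4.62) far more than the small values.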

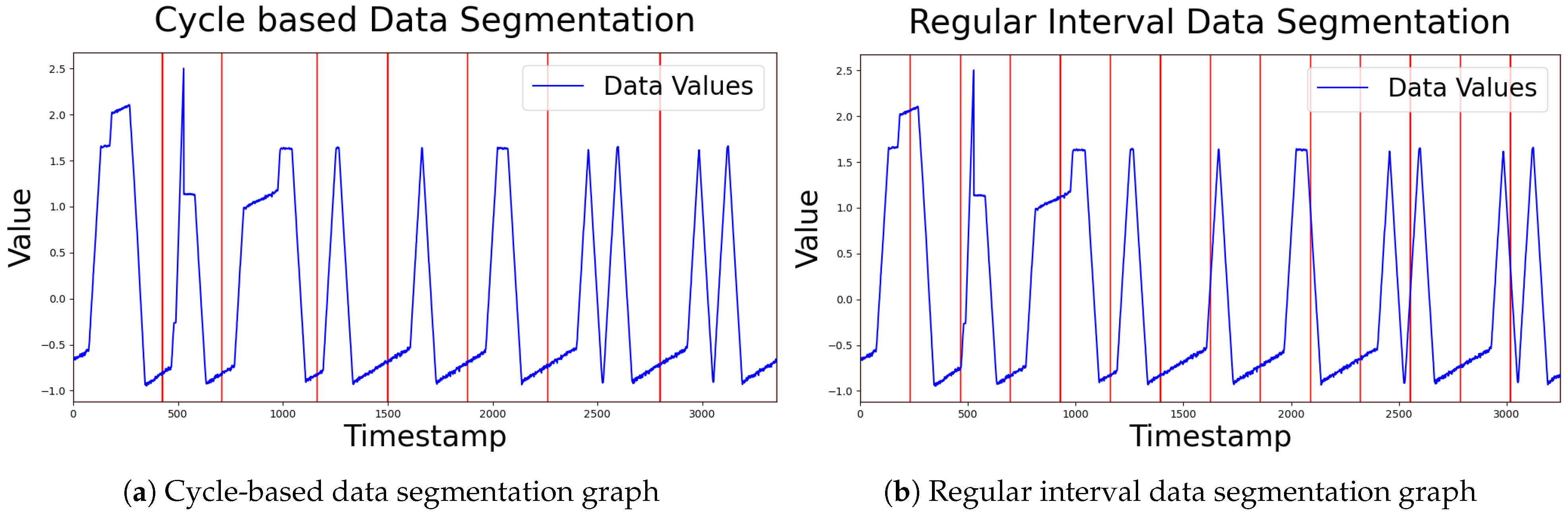

4.1.2. Time Series Segmentation
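The introduction describes segmenting normal data by its repetitive cycle rather than at arbitrary boundaries. The sketch below is one hypothetical way to do this and is not the authors' procedure: it estimates the cycle length as the lag with the largest autocorrelation inside an assumed search range (`min_lag`, `max_lag`, which must satisfy `0 < min_lag <= max_lag < len(xs)`) and then cuts the series into consecutive one-cycle segments.

```python
import math
from statistics import fmean


def autocorrelation(xs: list[float], lag: int) -> float:
    """Biased sample autocorrelation of xs at the given lag."""
    mu = fmean(xs)
    var = sum((x - mu) ** 2 for x in xs)
    if var == 0:  # a constant series has no periodic structure
        return 0.0
    cov = sum((xs[t] - mu) * (xs[t + lag] - mu) for t in range(len(xs) - lag))
    return cov / var


def estimate_cycle_length(xs: list[float], min_lag: int, max_lag: int) -> int:
    """Hypothetical helper: the lag in [min_lag, max_lag] with the highest autocorrelation."""
    return max(range(min_lag, max_lag + 1), key=lambda lag: autocorrelation(xs, lag))


def segment_by_cycle(xs: list[float], cycle: int) -> list[list[float]]:
    """Cut xs into consecutive one-cycle segments, dropping an incomplete tail."""
    return [xs[i:i + cycle] for i in range(0, len(xs) - cycle + 1, cycle)]


# Toy signal with a period of 24 samples, repeated 10 times.
signal = [math.sin(2 * math.pi * t / 24) for t in range(240)]
cycle = estimate_cycle_length(signal, min_lag=5, max_lag=60)
segments = segment_by_cycle(signal, cycle)
print(cycle, len(segments))  # prints: 24 10
```

The lower bound `min_lag` keeps the search away from very small lags, where a smooth signal is trivially correlated with itself.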

4.2. Encoding Time Series

- Local GAF (LGAF): focuses on local extrema for normalization, emphasizing subtle changes.

- Global GAF (GGAF): normalizes using global extrema, providing robustness to noise and better generalization.
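The two variants differ only in which extrema are used to rescale a segment to $[-1, 1]$ before the angular encoding. The sketch below assumes the summation form of GAF from [17], $G_{ij} = \cos(\phi_i + \phi_j)$ with $\phi_i = \arccos(\tilde{x}_i)$; the choice of the summation rather than the difference form is an assumption here, as is clipping values that fall outside the global extrema (which can occur at test time). All function names are hypothetical.

```python
import math


def rescale(xs: list[float], lo: float, hi: float) -> list[float]:
    """Map [lo, hi] onto [-1, 1]; values outside are clipped so arccos stays defined."""
    if hi == lo:
        return [0.0 for _ in xs]
    return [max(-1.0, min(1.0, (2 * x - hi - lo) / (hi - lo))) for x in xs]


def gasf(scaled: list[float]) -> list[list[float]]:
    """Gramian Angular Summation Field: G[i][j] = cos(phi_i + phi_j), phi = arccos(x)."""
    phi = [math.acos(v) for v in scaled]
    return [[math.cos(p_i + p_j) for p_j in phi] for p_i in phi]


def local_gaf(segment: list[float]) -> list[list[float]]:
    """LGAF sketch: rescale with the segment's own minimum and maximum."""
    return gasf(rescale(segment, min(segment), max(segment)))


def global_gaf(segment: list[float], global_min: float, global_max: float) -> list[list[float]]:
    """GGAF sketch: rescale with extrema taken from the whole training series."""
    return gasf(rescale(segment, global_min, global_max))


segment = [1.0, 2.0, 3.0]
print(local_gaf(segment))             # values stretched to the full [-1, 1] range
print(global_gaf(segment, 0.0, 4.0))  # same segment, absolute level preserved
```

For the same segment, `local_gaf` always stretches the values to the full range, so a small-amplitude segment looks like a large one, whereas `global_gaf` keeps its absolute level. This mirrors the trade-off above between sensitivity to subtle changes (LGAF) and robustness to noise (GGAF).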

4.3. Image Anomaly Detection via Knowledge Distillation
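Both knowledge-distillation models in Section 3 score an image by comparing teacher and student feature maps location by location. As a rough sketch rather than the reference implementations: Reverse Distillation [28] scores each location by one minus the cosine similarity of the two feature vectors, while the student-teacher branch of EfficientAD [29] uses the channel-wise mean of the squared difference. The feature maps below are toy nested lists indexed `[row][col][channel]`; the multi-scale features, upsampling, smoothing, and EfficientAD's autoencoder branch are all omitted.

```python
import math

FeatureMap = list[list[list[float]]]  # [row][col][channel]


def cosine_distance_map(teacher: FeatureMap, student: FeatureMap) -> list[list[float]]:
    """RD-style map: 1 - cosine similarity of teacher and student vectors at each location."""
    out = []
    for t_row, s_row in zip(teacher, student):
        row = []
        for t, s in zip(t_row, s_row):
            dot = sum(a * b for a, b in zip(t, s))
            norm = math.sqrt(sum(a * a for a in t)) * math.sqrt(sum(b * b for b in s))
            # Treat a zero vector as maximally dissimilar (an arbitrary choice for this sketch).
            row.append(1.0 - dot / norm if norm > 0 else 1.0)
        out.append(row)
    return out


def squared_difference_map(teacher: FeatureMap, student: FeatureMap) -> list[list[float]]:
    """EfficientAD-style student-teacher map: mean over channels of (t - s)^2."""
    return [
        [sum((a - b) ** 2 for a, b in zip(t, s)) / len(t) for t, s in zip(t_row, s_row)]
        for t_row, s_row in zip(teacher, student)
    ]


teacher = [[[1.0, 0.0], [0.0, 1.0]]]  # a 1 x 2 map with 2 channels
student = [[[2.0, 0.0], [0.0, 3.0]]]  # same directions, different magnitudes
print(cosine_distance_map(teacher, student))     # [[0.0, 0.0]]
print(squared_difference_map(teacher, student))  # [[0.5, 2.0]]
```

The cosine distance ignores feature magnitude while the squared difference does not, which is one practical difference between the two scoring rules. In both cases, a student trained only on normal images is expected to match the teacher on normal regions, so large values mark regions it failed to reproduce.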

4.4. Anomaly Detection in Time Series

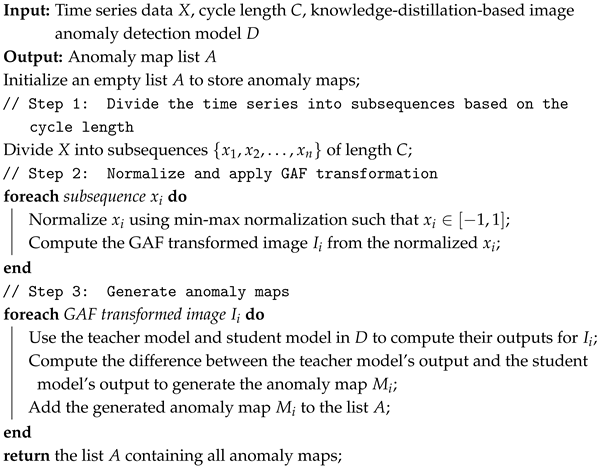

Algorithm 1: Anomaly map generation via preprocessing, GAF, and knowledge distillation model

Algorithm 2: Time series anomaly detection using anomaly map
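The body of Algorithm 2 is not preserved in this version of the text. As a hypothetical illustration only, and not the authors' procedure, one way to turn a GAF anomaly map into per-time-step decisions uses the fact that pixel (i, j) of a GAF image is computed from time steps i and j: averaging row t gives a score for step t, and a threshold turns scores into labels. The sketch assumes each anomaly map has already been resized to L × L for a segment of length L and that the threshold is chosen beforehand (e.g., on validation data); both function names are hypothetical.

```python
def time_step_scores(anomaly_map: list[list[float]]) -> list[float]:
    """Hypothetical reduction: score step t by the mean of row t of an L x L map."""
    return [sum(row) / len(row) for row in anomaly_map]


def detect(anomaly_maps: list[list[list[float]]], threshold: float) -> list[int]:
    """Concatenate per-segment scores and flag steps whose score exceeds the threshold."""
    labels: list[int] = []
    for amap in anomaly_maps:
        labels.extend(1 if score > threshold else 0 for score in time_step_scores(amap))
    return labels


# Toy map for a 3-step segment: pixels involving steps 1 and 2 are anomalous.
amap = [[0.0, 0.0, 0.0], [0.0, 0.9, 0.9], [0.0, 0.9, 0.9]]
print(time_step_scores(amap))
print(detect([amap], threshold=0.5))  # prints: [0, 1, 1]
```

Averaging a row is only one option; taking the row maximum would react more strongly to a single anomalous pixel at the cost of more false positives.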

5. Experiments

5.1. Experimental Setup

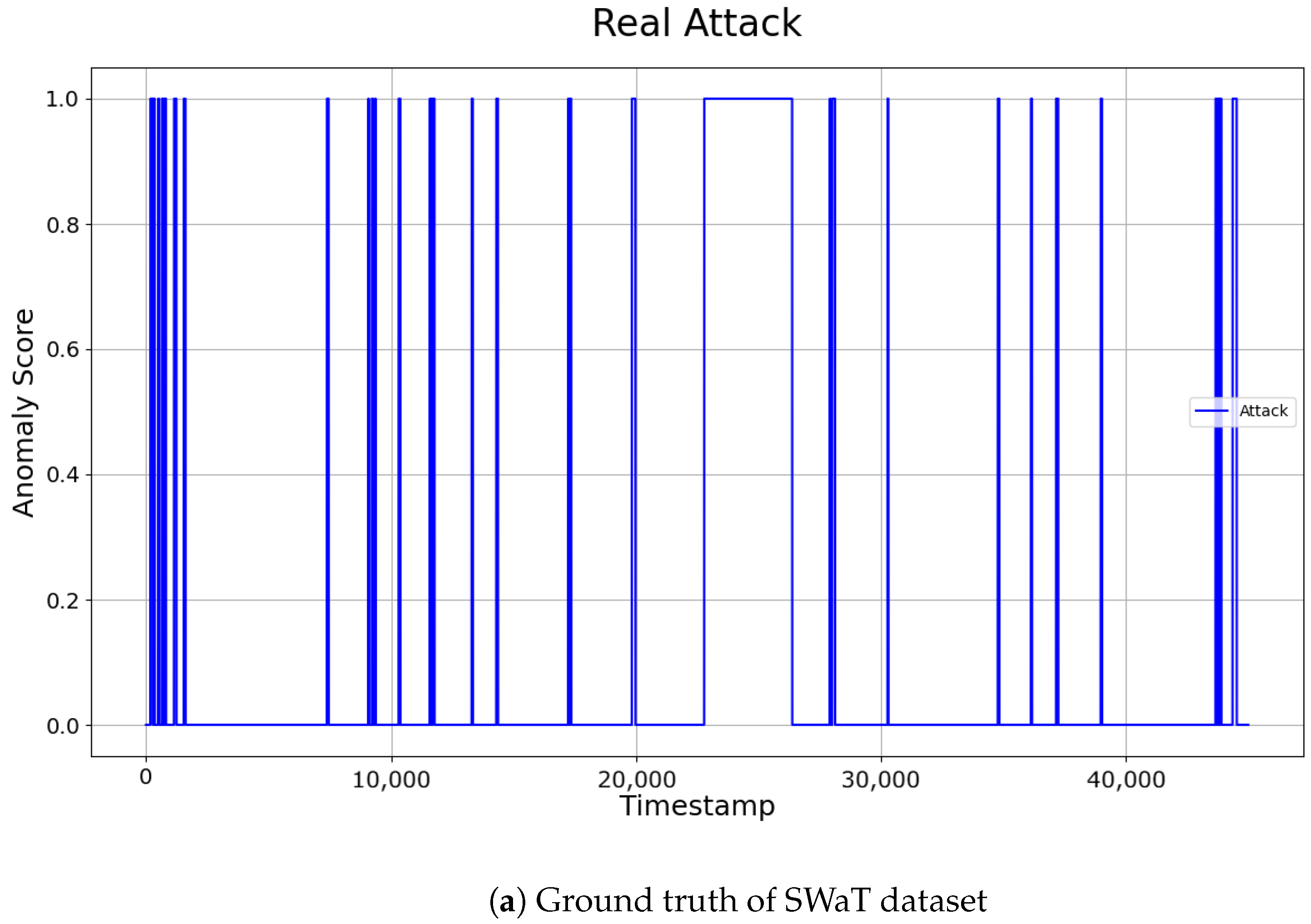

- Secure Water Treatment dataset (SWaT) [32]: The SWaT dataset consists of data collected from 51 sensors in a real water treatment process. Each sensor measures parameters such as water level, pressure, flow rate, and actuator operation. Only the normal operation data were used for training, while the abnormal operation data were used for testing. Testing was performed individually on representative sensors, and the results from these sensors were combined to determine the overall anomaly detection performance for the SWaT dataset. According to [32], the system requires approximately 5 h for stabilization. Therefore, the first 21,600 data points were removed from the training data, and down-sampling by a factor of 10 was applied to process the data efficiently.

- InternalBleeding dataset from UCR [33]: The University of California, Riverside (UCR) dataset includes time series data collected from various domains, such as industrial systems, environmental sensors, biological signal sensors, and financial data, and is widely used in time series anomaly detection research. It consists of univariate time series. In our experiments, we used the InternalBleeding data, which contain measurements of arterial blood pressure in pigs, excluding synthetic sequences generated with intentionally created anomalies.

- NAB dataset [34,35]: The Numenta Anomaly Benchmark (NAB) dataset comprises real and artificially generated univariate time series collected to evaluate anomaly detection algorithms for real-time applications. It includes data such as machine temperature sensor readings, CPU temperatures, New York taxi traffic volumes, and AWS server metrics. For our experiments, we divided the data into subsequences of length 100 and performed anomaly detection on them. Only normal data points were used for training, excluding the 233 anomaly-containing timestamps.
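The SWaT training-data preparation described above reduces to a single slice. This is a minimal sketch, assuming that down-sampling means keeping every 10th sample (averaging each block of 10 would be an equally plausible reading); the function name and its defaults are hypothetical.

```python
def preprocess_swat_train(xs: list[float], warmup: int = 21_600, factor: int = 10) -> list[float]:
    """Drop the first `warmup` points (stabilization period), then keep every `factor`-th sample."""
    return xs[warmup::factor]


# Hypothetical usage: 21,700 raw readings leave 100 after the warm-up, i.e. 10 after down-sampling.
raw = [float(i) for i in range(21_700)]
train = preprocess_swat_train(raw)
print(len(train), train[0])  # prints: 10 21600.0
```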

- True Positive (TP): an instance where an actual abnormal point is correctly identified as abnormal.

- False Positive (FP): an instance where a normal point is incorrectly identified as abnormal.

- True Negative (TN): an instance where a normal point is correctly identified as normal.

- False Negative (FN): an instance where an actual abnormal point is incorrectly identified as normal.
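These four counts yield the accuracy, precision (P), recall (R), F1 score, and false positive rate (FPR) reported in the tables below. The sketch computes them point-wise; whether any point-adjustment strategy is used in the experiments is not stated here, so none is applied, and the function names are hypothetical. Because F1 is the harmonic mean of P and R, it always lies between them, which is a quick sanity check when reading the tables.

```python
def confusion_counts(y_true: list[int], y_pred: list[int]) -> tuple[int, int, int, int]:
    """Return (TP, FP, TN, FN) for point-wise labels, with 1 = abnormal and 0 = normal."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn


def detection_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Accuracy, precision, recall, F1, and FPR; undefined ratios are reported as 0."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "fpr": fp / (fp + tn) if fp + tn else 0.0,
    }


print(detection_metrics([1, 1, 0, 0, 0, 1], [1, 0, 0, 1, 0, 1]))
```

For these six points, TP = 2, FP = 1, TN = 2, and FN = 1, so accuracy, P, R, and F1 are all 2/3 and FPR is 1/3.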

5.2. Analysis of the Proposed Framework

5.2.1. Module-Wise Analysis

5.2.2. Comparison with Baselines

6. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GAF | Gramian Angular Field |
| LGAF | Local Gramian Angular Field |
| GGAF | Global Gramian Angular Field |
| KD | Knowledge Distillation |
| RD | Reverse Distillation |
| EAD | EfficientAD |
| PDN | Patch Description Network |
| SPADE | Semantic Pyramid Anomaly Detection |
| FAS | Final Anomaly Score |
| SWaT | Secure Water Treatment dataset |
| UCR | University of California, Riverside |
| NAB | Numenta Anomaly Benchmark |
| AWS | Amazon Web Services |
| MVTecAD | MVTec Anomaly Detection dataset |
| IoT | Internet of Things |

References

1. Pang, G.; Shen, C.; Cao, L.; Hengel, A.V.D. Deep learning for anomaly detection: A review. ACM Comput. Surv. 2021, 54, 38.

2. Hinton, G. Distilling the Knowledge in a Neural Network. arXiv 2015, arXiv:1503.02531.

3. Zamanzadeh Darban, Z.; Webb, G.I.; Pan, S.; Aggarwal, C.; Salehi, M. Deep learning for time series anomaly detection: A survey. ACM Comput. Surv. 2024, 57, 15.

4. Kang, J.; Kim, M.; Park, J.; Park, S. Time-Series to Image-Transformed Adversarial Autoencoder for Anomaly Detection. IEEE Access 2024, 12, 119671–119684.

5. Saravanan, S.S.; Luo, T.; Van Ngo, M. TSI-GAN: Unsupervised Time Series Anomaly Detection Using Convolutional Cycle-Consistent Generative Adversarial Networks. In Proceedings of the Pacific-Asia Conference on Knowledge Discovery and Data Mining, Taipei, Taiwan, 7–10 May 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 39–54.

6. Namura, N.; Ichikawa, Y. Training-Free Time-Series Anomaly Detection: Leveraging Image Foundation Models. arXiv 2024, arXiv:2408.14756.

7. Roth, K.; Pemula, L.; Zepeda, J.; Schölkopf, B.; Brox, T.; Gehler, P. Towards total recall in industrial anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 14318–14328.

8. Vaswani, A. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

9. Zhou, J.; Cui, G.; Hu, S.; Zhang, Z.; Yang, C.; Liu, Z.; Wang, L.; Li, C.; Sun, M. Graph neural networks: A review of methods and applications. AI Open 2020, 1, 57–81.

10. Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

11. Chen, Y.; Xu, H.; Pang, G.; Qiao, H.; Zhou, Y.; Shang, M. Self-supervised Spatial-Temporal Normality Learning for Time Series Anomaly Detection. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Vilnius, Lithuania, 9–13 September 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 145–162.

12. Wang, R.; Liu, C.; Mou, X.; Gao, K.; Guo, X.; Liu, P.; Wo, T.; Liu, X. Deep contrastive one-class time series anomaly detection. In Proceedings of the 2023 SIAM International Conference on Data Mining (SDM), Minneapolis-St. Paul Twin Cities, MN, USA, 27–29 April 2023; SIAM: Philadelphia, PA, USA, 2023; pp. 694–702.

13. Xu, J. Anomaly transformer: Time series anomaly detection with association discrepancy. arXiv 2021, arXiv:2110.02642.

14. Tuli, S.; Casale, G.; Jennings, N.R. Tranad: Deep transformer networks for anomaly detection in multivariate time series data. arXiv 2022, arXiv:2201.07284.

15. Deng, A.; Hooi, B. Graph neural network-based anomaly detection in multivariate time series. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 19–21 May 2021; Volume 35, pp. 4027–4035.

16. Mousakhan, A.; Brox, T.; Tayyub, J. Anomaly detection with conditioned denoising diffusion models. arXiv 2023, arXiv:2305.15956.

17. Wang, Z.; Oates, T. Imaging time-series to improve classification and imputation. arXiv 2015, arXiv:1506.00327.

18. Eckmann, J.P.; Kamphorst, S.O.; Ruelle, D. Recurrence plots of dynamical systems. World Sci. Ser. Nonlinear Sci. Ser. A 1995, 16, 441–446.

19. Akcay, S.; Atapour-Abarghouei, A.; Breckon, T.P. Ganomaly: Semi-supervised anomaly detection via adversarial training. In Proceedings of the Computer Vision—ACCV 2018: 14th Asian Conference on Computer Vision, Perth, Australia, 2–6 December 2018, Revised Selected Papers, Part III 14; Springer: Berlin/Heidelberg, Germany, 2019; pp. 622–637.

20. Schlegl, T.; Seeböck, P.; Waldstein, S.M.; Schmidt-Erfurth, U.; Langs, G. Unsupervised anomaly detection with generative adversarial networks to guide marker discovery. In Proceedings of the International Conference on Information Processing in Medical Imaging, Boone, NC, USA, 25–30 June 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 146–157.

21. Gudovskiy, D.; Ishizaka, S.; Kozuka, K. Cflow-ad: Real-time unsupervised anomaly detection with localization via conditional normalizing flows. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 98–107.

22. Yu, J.; Zheng, Y.; Wang, X.; Li, W.; Wu, Y.; Zhao, R.; Wu, L. Fastflow: Unsupervised anomaly detection and localization via 2d normalizing flows. arXiv 2021, arXiv:2111.07677.

23. Kim, D.; Lai, C.H.; Liao, W.H.; Takida, Y.; Murata, N.; Uesaka, T.; Mitsufuji, Y.; Ermon, S. PaGoDA: Progressive Growing of a One-Step Generator from a Low-Resolution Diffusion Teacher. arXiv 2024, arXiv:2405.14822.

24. Cohen, N.; Hoshen, Y. Sub-image anomaly detection with deep pyramid correspondences. arXiv 2020, arXiv:2005.02357.

25. Defard, T.; Setkov, A.; Loesch, A.; Audigier, R. Padim: A patch distribution modeling framework for anomaly detection and localization. In Proceedings of the International Conference on Pattern Recognition, Virtual, 10–15 January 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 475–489.

26. Hyun, J.; Kim, S.; Jeon, G.; Kim, S.H.; Bae, K.; Kang, B.J. ReConPatch: Contrastive patch representation learning for industrial anomaly detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 1–6 January 2024; pp. 2052–2061.

27. Chen, Q.; Luo, H.; Lv, C.; Zhang, Z. A unified anomaly synthesis strategy with gradient ascent for industrial anomaly detection and localization. arXiv 2024, arXiv:2407.09359.

28. Deng, H.; Li, X. Anomaly detection via reverse distillation from one-class embedding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 9737–9746.

29. Batzner, K.; Heckler, L.; König, R. Efficientad: Accurate visual anomaly detection at millisecond-level latencies. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2024; pp. 128–138.

30. Chalapathy, R.; Chawla, S. Deep learning for anomaly detection: A survey. arXiv 2019, arXiv:1901.03407.

31. Bergmann, P.; Fauser, M.; Sattlegger, D.; Steger, C. Uninformed students: Student-teacher anomaly detection with discriminative latent embeddings. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 4183–4192.

32. Goh, J.; Adepu, S.; Junejo, K.N.; Mathur, A. A dataset to support research in the design of secure water treatment systems. In Proceedings of the Critical Information Infrastructures Security: 11th International Conference, CRITIS 2016, Paris, France, 10–12 October 2016, Revised Selected Papers 11; Springer: Berlin/Heidelberg, Germany, 2017; pp. 88–99.

33. Wu, R.; Keogh, E.J. Current time series anomaly detection benchmarks are flawed and are creating the illusion of progress. IEEE Trans. Knowl. Data Eng. 2021, 35, 2421–2429.

34. Lavin, A.; Ahmad, S. Evaluating real-time anomaly detection algorithms–the Numenta anomaly benchmark. In Proceedings of the 2015 IEEE 14th International Conference on Machine Learning and Applications (ICMLA); IEEE: Piscataway, NJ, USA, 2015; pp. 38–44.

35. Ahmad, S.; Lavin, A.; Purdy, S.; Agha, Z. Unsupervised real-time anomaly detection for streaming data. Neurocomputing 2017, 262, 134–147.

36. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. Pytorch: An imperative style, high-performance deep learning library. In Proceedings of the Advances in Neural Information Processing Systems 32 (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019.

37. Kingma, D.P. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

38. Bergmann, P.; Fauser, M.; Sattlegger, D.; Steger, C. MVTec AD–A comprehensive real-world dataset for unsupervised anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9592–9600.

39. Goh, J.; Adepu, S.; Tan, M.; Lee, Z.S. Anomaly detection in cyber physical systems using recurrent neural networks. In Proceedings of the 2017 IEEE 18th International Symposium on High Assurance Systems Engineering (HASE), Singapore, 12–14 January 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 140–145.

40. Li, D. Anomaly detection with generative adversarial networks for multivariate time series. arXiv 2018, arXiv:1809.04758.

41. Nakamura, T.; Imamura, M.; Mercer, R.; Keogh, E. Merlin: Parameter-free discovery of arbitrary length anomalies in massive time series archives. In Proceedings of the 2020 IEEE International Conference on Data Mining (ICDM), Virtual, 17–20 November 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1190–1195.

42. Hundman, K.; Constantinou, V.; Laporte, C.; Colwell, I.; Soderstrom, T. Detecting spacecraft anomalies using lstms and nonparametric dynamic thresholding. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 387–395.

43. Li, G.; Zhou, X.; Sun, J.; Yu, X.; Han, Y.; Jin, L.; Li, W.; Wang, T.; Li, S. opengauss: An autonomous database system. Proc. VLDB Endow. 2021, 14, 3028–3042.

44. Zong, B.; Song, Q.; Min, M.R.; Cheng, W.; Lumezanu, C.; Cho, D.; Chen, H. Deep autoencoding gaussian mixture model for unsupervised anomaly detection. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

45. Su, Y.; Zhao, Y.; Niu, C.; Liu, R.; Sun, W.; Pei, D. Robust anomaly detection for multivariate time series through stochastic recurrent neural network. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 2828–2837.

46. Zhang, C.; Song, D.; Chen, Y.; Feng, X.; Lumezanu, C.; Cheng, W.; Ni, J.; Zong, B.; Chen, H.; Chawla, N.V. A deep neural network for unsupervised anomaly detection and diagnosis in multivariate time series data. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 1409–1416.

47. Li, D.; Chen, D.; Jin, B.; Shi, L.; Goh, J.; Ng, S.K. MAD-GAN: Multivariate anomaly detection for time series data with generative adversarial networks. In Proceedings of the International Conference on Artificial Neural Networks, Munich, Germany, 17–19 September 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 703–716.

48. Audibert, J.; Michiardi, P.; Guyard, F.; Marti, S.; Zuluaga, M.A. Usad: Unsupervised anomaly detection on multivariate time series. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Virtual, 6–10 July 2020; pp. 3395–3404.

49. Zhao, H.; Wang, Y.; Duan, J.; Huang, C.; Cao, D.; Tong, Y.; Xu, B.; Bai, J.; Tong, J.; Zhang, Q. Multivariate time-series anomaly detection via graph attention network. In Proceedings of the 2020 IEEE International Conference on Data Mining (ICDM), Sorrento, Italy, 17–20 November 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 841–850.

50. Zhang, Y.; Chen, Y.; Wang, J.; Pan, Z. Unsupervised deep anomaly detection for multi-sensor time-series signals. IEEE Trans. Knowl. Data Eng. 2021, 35, 2118–2132.

| Dataset | Train | Test | Anomaly Rate |
|---|---|---|---|
| SWaT [32] | 47,520 | 44,991 | 12.2091% |
| UCR [33] | 31,700 | 5900 | 1.8813% |
| NAB [34,35] | 3800 | 4033 | 0.0007% |

| Data | Sensor | Regular P ↑ | Regular R ↑ | Regular F1 ↑ | Regular AUC ↑ | Cycle P ↑ | Cycle R ↑ | Cycle F1 ↑ | Cycle AUC ↑ |
|---|---|---|---|---|---|---|---|---|---|
| SWaT | LIT-101 | 0.9603 | 0.5949 | 0.7347 | 0.7958 | 0.9013 | 0.6552 | 0.7588 | 0.8226 |
| | LIT-301 | 0.9069 | 0.6334 | 0.7458 | 0.8122 | 0.9470 | 0.6858 | 0.7955 | 0.8402 |
| | AIT-202 | 0.1258 | 0.7542 | 0.2157 | 0.5127 | 0.9207 | 0.6364 | 0.7526 | 0.8144 |
| | AIT-504 | 0.1184 | 0.7883 | 0.2059 | 0.4861 | 0.9735 | 0.5811 | 0.7278 | 0.7895 |
| | MV-101 | 0.0824 | 0.0446 | 0.0579 | 0.4877 | 0.8720 | 0.6537 | 0.7473 | 0.8202 |
| | MV-303 | 0.2161 | 0.0380 | 0.0647 | 0.5094 | 0.9337 | 0.6714 | 0.7811 | 0.8324 |
| | DPIT-301 | 0.7397 | 0.6787 | 0.7079 | 0.8227 | 0.9761 | 0.6100 | 0.7515 | 0.8044 |
| UCR | - | 0.9412 | 1.0000 | 0.9697 | 0.9994 | 0.9910 | 0.9821 | 0.9865 | 0.9910 |

| Data | Sensor | LGAF P | LGAF R | LGAF F1 | LGAF AUC | GGAF P | GGAF R | GGAF F1 | GGAF AUC |
|---|---|---|---|---|---|---|---|---|---|
| SWaT | LIT-101 | 0.9283 | 0.6363 | 0.7550 | 0.8147 | 0.9013 | 0.6552 | 0.7588 | 0.8226 |
| | LIT-301 | 0.9470 | 0.6858 | 0.7955 | 0.8402 | 0.9386 | 0.6734 | 0.7842 | 0.8336 |
| | AIT-202 | 0.9229 | 0.6343 | 0.7518 | 0.8134 | 0.9207 | 0.6364 | 0.7526 | 0.8144 |
| | AIT-504 | 0.9735 | 0.5811 | 0.7278 | 0.7895 | 0.9484 | 0.5855 | 0.7240 | 0.7905 |
| | MV-101 | 0.8504 | 0.6656 | 0.7467 | 0.8246 | 0.8720 | 0.6537 | 0.7473 | 0.8202 |
| | MV-303 | 0.9337 | 0.6714 | 0.7811 | 0.8324 | 0.9330 | 0.6714 | 0.7809 | 0.8323 |
| | DPIT-301 | 0.9761 | 0.6100 | 0.7515 | 0.8044 | 1.0000 | 0.6106 | 0.7582 | 0.8053 |
| UCR | - | 0.9910 | 0.9821 | 0.9865 | 0.9910 | 0.9412 | 1.0000 | 0.9697 | 0.9994 |
| NAB | - | 0.0182 | 0.6667 | 0.0354 | 0.8199 | 0.0132 | 1.0000 | 0.0260 | 0.9721 |

| Data | Sensor | RD P | RD R | RD F1 | RD AUC | EAD P | EAD R | EAD F1 | EAD AUC |
|---|---|---|---|---|---|---|---|---|---|
| SWaT | LIT-101 | 0.9310 | 0.6308 | 0.7520 | 0.8121 | 0.9013 | 0.6552 | 0.7588 | 0.8226 |
| | LIT-301 | 0.8981 | 0.6881 | 0.7792 | 0.8386 | 0.9470 | 0.6858 | 0.7955 | 0.8402 |
| | AIT-202 | 0.9279 | 0.6304 | 0.7508 | 0.8118 | 0.9207 | 0.6364 | 0.7526 | 0.8144 |
| | AIT-504 | 0.9735 | 0.5811 | 0.7278 | 0.7895 | 0.9369 | 0.5922 | 0.7257 | 0.7933 |
| | MV-101 | 0.8720 | 0.6537 | 0.7473 | 0.8202 | 0.8627 | 0.6545 | 0.7443 | 0.8200 |
| | MV-303 | 0.9337 | 0.6714 | 0.7811 | 0.8324 | 0.9134 | 0.6758 | 0.7768 | 0.8334 |
| | DPIT-301 | 0.9761 | 0.6100 | 0.7515 | 0.8044 | 0.6434 | 0.7042 | 0.6724 | 0.8249 |
| UCR | - | 0.8279 | 0.9018 | 0.8632 | 0.9491 | 0.9910 | 0.9821 | 0.9865 | 0.9910 |
| NAB | - | 0.0171 | 1.0000 | 0.0337 | 0.9787 | 0.0132 | 1.0000 | 0.0260 | 0.9721 |

| Methods | Train Time (s) | Test Time (s) | Memory Usage (MiB) |
|---|---|---|---|
| RD | 1069 | 16 | 8978 |
| EAD | 2539 | 17 | 2030 |

| Sensor | Methods | Accuracy ↑ | P | R | F1 | FPR ↓ |
|---|---|---|---|---|---|---|
| LIT-101 | CUSUM [39] | 0.8663 | 0.3642 | 0.2686 | 0.0030 | 0.0739 |
| | GAN-AD [40] | 0.8763 | 0.5000 | 0.0175 | 0.0003 | 0.0932 |
| | Ours | 0.9492 | 0.9310 | 0.6308 | 0.7520 | 0.0065 |
| | Ours * | 0.9491 | 0.9013 | 0.6552 | 0.7588 | 0.0100 |
| LIT-301 | CUSUM | 0.8150 | 0.1292 | 0.0902 | 0.0010 | 0.0843 |
| | GAN-AD | 0.8685 | 0.2222 | 0.0104 | 0.0002 | 0.0052 |
| | Ours | 0.9544 | 0.9651 | 0.6501 | 0.7769 | 0.0033 |
| | Ours * | 0.9569 | 0.9470 | 0.6858 | 0.7955 | 0.0053 |
| AIT-202 | CUSUM | 0.5567 | 0.0924 | 0.2515 | 0.0013 | 0.3945 |
| | GAN-AD | 0.6022 | 0.0458 | 0.1710 | 0.0007 | 0.3548 |
| | Ours | 0.9489 | 0.9279 | 0.6304 | 0.7508 | 0.0068 |
| | Ours * | 0.9489 | 0.9207 | 0.6364 | 0.7526 | 0.0076 |
| DPIT-301 | CUSUM | 0.8413 | 0.1846 | 0.1714 | 0.0017 | 0.0841 |
| | GAN-AD | 0.8440 | 0.2500 | 0.0267 | 0.0005 | 0.0118 |
| | Ours | 0.9199 | 0.6643 | 0.6958 | 0.6797 | 0.0489 |
| | Ours * | 0.9203 | 0.6665 | 0.6949 | 0.6804 | 0.0484 |
| AIT-504 | CUSUM | 0.7097 | 0.0623 | 0.1438 | 0.0008 | 0.2301 |
| | GAN-AD | 0.8603 | 0.1474 | 0.1435 | 0.0014 | 0.1114 |
| | Ours | 0.9474 | 0.9624 | 0.5922 | 0.7332 | 0.0032 |
| | Ours * | 0.9474 | 0.9624 | 0.5922 | 0.7332 | 0.0032 |
| MV-303 | CUSUM | 0.7155 | 0.0967 | 0.1918 | 0.0012 | 0.2201 |
| | GAN-AD | 0.8768 | 0.1754 | 0.0300 | 0.0005 | 0.0174 |
| | Ours | 0.9460 | 0.8720 | 0.6537 | 0.7473 | 0.0133 |
| | Ours * | 0.9541 | 0.9337 | 0.6714 | 0.7811 | 0.0066 |

| Methods | UCR P | UCR R | UCR F1 | UCR AUC | NAB P | NAB R | NAB F1 | NAB AUC |
|---|---|---|---|---|---|---|---|---|
| MERLIN [41] | 0.8013 | 0.7262 | 0.7619 | 0.8414 | 0.7542 | 0.8018 | 0.7773 | 0.8984 |
| LSTM-NDT [42,43] | 0.6400 | 0.6667 | 0.6531 | 0.8322 | 0.5231 | 0.8294 | 0.6416 | 0.9781 |
| DAGMM [44] | 0.7622 | 0.7292 | 0.7453 | 0.8572 | 0.5337 | 0.9718 | 0.6890 | 0.9916 |
| OmniAnomaly [45] | 0.8421 | 0.6667 | 0.7442 | 0.8330 | 0.8346 | 0.9999 | 0.9098 | 0.9981 |
| MSCRED [46] | 0.8522 | 0.6700 | 0.7502 | 0.8401 | 0.5441 | 0.9718 | 0.6976 | 0.9920 |
| MAD-GAN [47] | 0.8666 | 0.7012 | 0.7752 | 0.8478 | 0.8538 | 0.9891 | 0.9165 | 0.9984 |
| USAD [48] | 0.8421 | 0.6667 | 0.7442 | 0.8330 | 0.8952 | 1.0000 | 0.9447 | 0.9989 |
| MTAD-GAT [49] | 0.8421 | 0.7272 | 0.7804 | 0.8221 | 0.7812 | 0.9972 | 0.8761 | 0.9978 |
| CAE-M [50] | 0.7918 | 0.8019 | 0.7968 | 0.8019 | 0.6981 | 1.0000 | 0.8222 | 0.9957 |
| GDN [15] | 0.8129 | 0.7872 | 0.7998 | 0.8542 | 0.6894 | 0.9988 | 0.8158 | 0.9959 |
| TranAD [14] | 0.8889 | 0.9892 | 0.9364 | 0.9541 | 0.9407 | 1.0000 | 0.9694 | 0.9994 |
| Ours | 0.9910 | 0.9821 | 0.9865 | 0.9910 | 0.0171 | 1.0000 | 0.0337 | 0.9787 |

| Methods | SWaT P | SWaT R | SWaT F1 | SWaT AUC |
|---|---|---|---|---|
| MERLIN | 0.6560 | 0.2547 | 0.3669 | 0.6175 |
| LSTM-NDT | 0.7778 | 0.5109 | 0.6167 | 0.7140 |
| DAGMM | 0.9933 | 0.6879 | 0.8128 | 0.8436 |
| OmniAnomaly | 0.9782 | 0.6957 | 0.8131 | 0.8467 |
| MSCRED | 0.9992 | 0.6770 | 0.8072 | 0.8433 |
| MAD-GAN | 0.9593 | 0.6957 | 0.8065 | 0.8463 |
| USAD | 0.9977 | 0.6879 | 0.8143 | 0.8460 |
| MTAD-GAT | 0.9718 | 0.6957 | 0.8109 | 0.8464 |
| CAE-M | 0.9697 | 0.6957 | 0.8101 | 0.8464 |
| GDN | 0.9697 | 0.6957 | 0.8101 | 0.8462 |
| TranAD | 0.9760 | 0.6997 | 0.8151 | 0.8491 |
| T2IAE | 0.9555 | 0.7611 | 0.8473 | - |
| Ours | 0.5673 | 0.7373 | 0.6412 | 0.8295 |
| Ours * | 0.6020 | 0.7841 | 0.6811 | 0.8560 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Park, H.; Jang, H. Enhancing Time Series Anomaly Detection: A Knowledge Distillation Approach with Image Transformation. Sensors 2024, 24, 8169. https://doi.org/10.3390/s24248169