Unsupervised Learning for Machinery Adaptive Fault Detection Using Wide-Deep Convolutional Autoencoder with Kernelized Attention Mechanism

Abstract

1. Introduction

2. Basic Theory

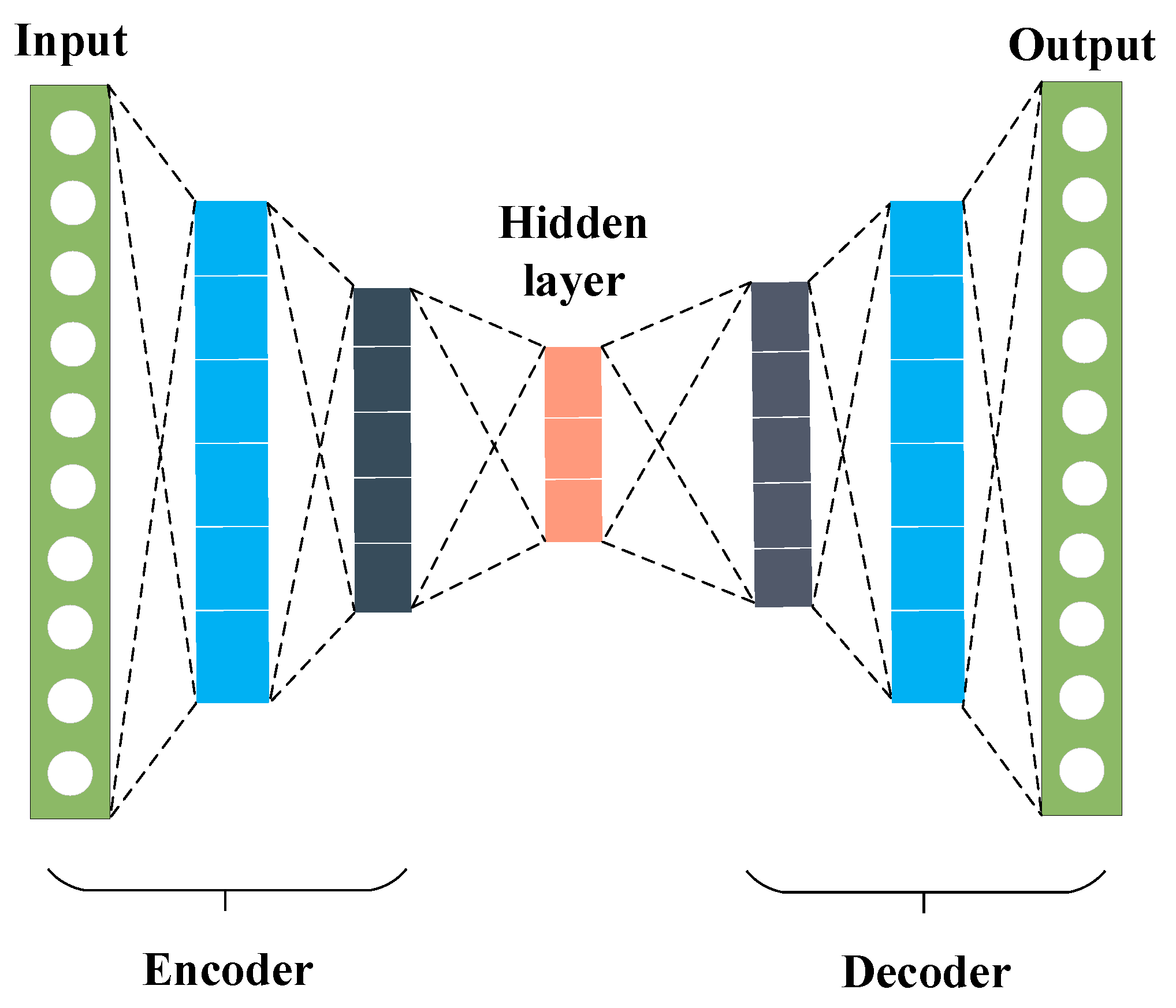

2.1. Standard Autoencoder

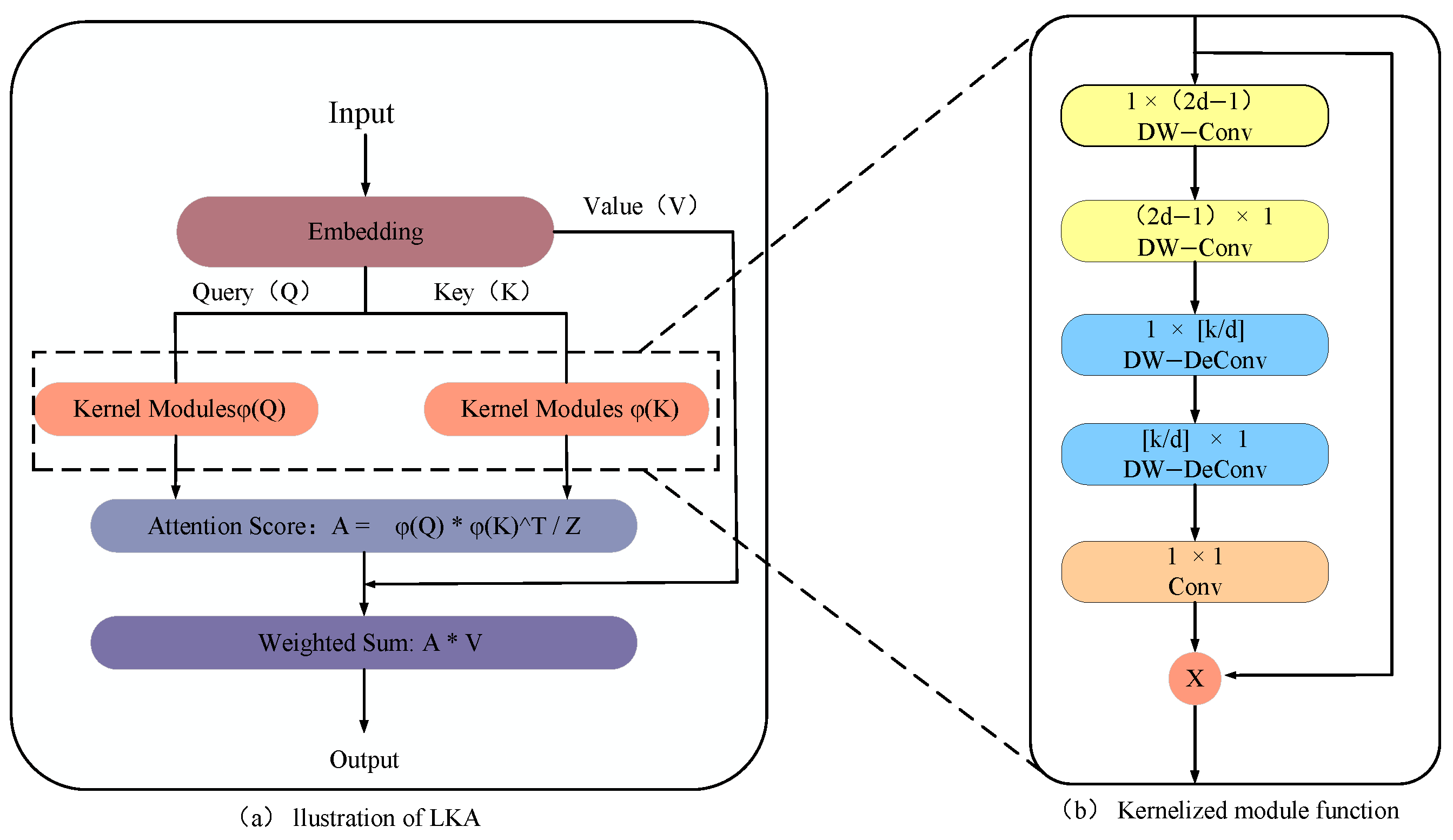

2.2. Self-Attention Mechanism

3. Proposed Intelligent Fault Diagnosis Method

3.1. Large Kernelized Attention-Based Feature Extraction

3.2. Threshold Generation with LKA-WDCAE

3.3. Threshold Adaptation with MLP

3.4. Combination Strategy Design

- Data Collection and Denoising: Acquire unlabeled data from rolling bearings, applying noise reduction techniques to filter out industrial noise interference. This step is crucial for ensuring the input data quality, allowing the model to focus on meaningful signal features.

- Feature Extraction and Reconstruction: Employ the LKA-WDCAE module to extract features from the denoised input data and perform accurate signal reconstruction. The module computes the reconstruction error of the dataset, which will be utilized in later stages for fault detection.

- Threshold Generation: Using both the reconstruction error and original signal features obtained from the LKA-WDCAE module, train the MLP module to produce an adaptive threshold. This threshold acts as the criterion for distinguishing between normal and faulty signals.

- Stability Assurance: Conduct repeated experiments and model training to ensure the stability and reliability of diagnostic results, making the system robust to various operational conditions.

Algorithm 1: LKA-WDCAE

Training stage

Input: unlabeled source datasets

1. for epoch = 1 to epochs do
2. Randomly sample source data from the unlabeled datasets.
3. Extract features with the convolutional layer, using Equation (14).
4. Compute attention scores of the feature maps with the large kernel attention layer, using Equation (15).
5. Restore the signal through the deconvolution layer, using Equation (16).
6. Calculate the reconstruction error as output, using Equation (18).
7. Use the output reconstruction error and the original signal features as input to the MLP.
8. Adaptively adjust the reconstruction error using Equation (19).
9. end for

Return: the adaptive threshold

Testing stage

Input: unseen target dataset

Model: the adaptive threshold

Output: final diagnosis decisions
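The testing stage of Algorithm 1 comes down to comparing each sample's reconstruction error with a threshold. The sketch below illustrates only that decision rule. It makes several assumptions that are not taken from the paper: `reconstruct` is a moving-average stand-in for the trained LKA-WDCAE, `make_signal` generates a hypothetical toy vibration signal, and the threshold is a fixed 1.5x margin over the largest normal-data error rather than the MLP's adaptive output.

```python
import math
import random


def reconstruct(signal, width=5):
    """Stand-in for the trained autoencoder: a centered moving average.

    Assumption for illustration only; the real model is a convolutional
    autoencoder with large kernel attention.
    """
    half = width // 2
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - half): i + half + 1]
        out.append(sum(window) / len(window))
    return out


def reconstruction_error(signal):
    """Mean squared error between a signal and its reconstruction."""
    rec = reconstruct(signal)
    return sum((s - r) ** 2 for s, r in zip(signal, rec)) / len(signal)


def diagnose(signal, threshold):
    """Flag the sample as faulty when its error exceeds the threshold."""
    return "fault" if reconstruction_error(signal) > threshold else "normal"


def make_signal(n, impulses, rng):
    """Hypothetical toy signal: a sine wave with small noise plus optional impulses."""
    sig = [math.sin(2 * math.pi * i / 64) + rng.gauss(0, 0.01) for i in range(n)]
    for _ in range(impulses):
        sig[rng.randrange(n)] += 3.0
    return sig


rng = random.Random(0)
normal_errors = [reconstruction_error(make_signal(1024, 0, rng)) for _ in range(50)]
# Stand-in for the MLP output: a fixed margin over the largest normal error.
threshold = 1.5 * max(normal_errors)

print(diagnose(make_signal(1024, 0, rng), threshold))   # normal
print(diagnose(make_signal(1024, 20, rng), threshold))  # fault
```

In the proposed method the threshold is not a fixed margin. It is produced by the MLP from the reconstruction error and the signal features, so only the final comparison carries over from this sketch.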

4. Experimental Results and Discussion

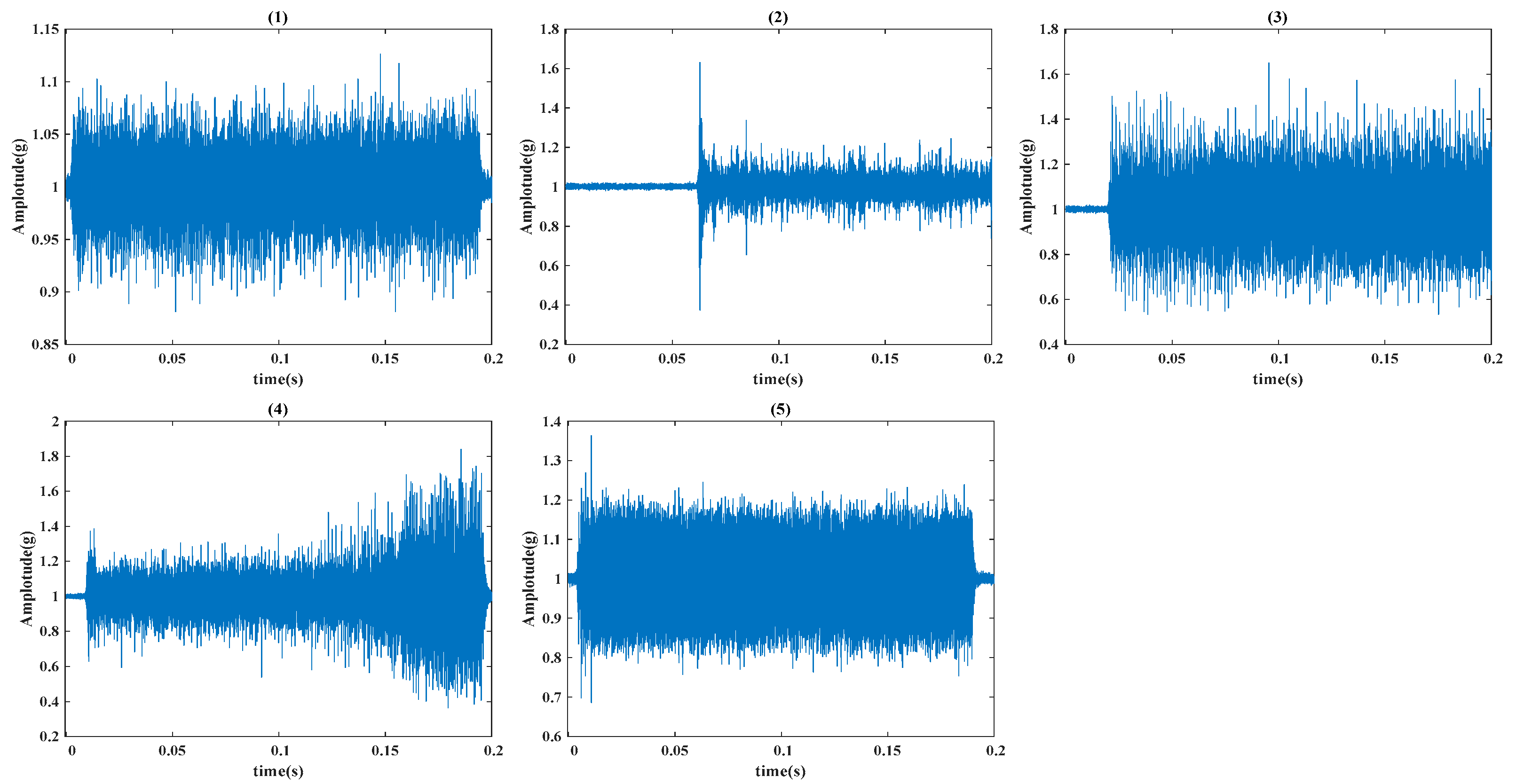

4.1. Dataset Description

- (1) CWRU Public Bearing Dataset

- (2) Ball Screw System Bearing Fault Simulation Experimental Platform

4.2. Compared Approaches and Implementation Details

4.3. Diagnosis Results and Performance Analysis

- (1) Comparison with Unsupervised AE Variants

- (2) Effectiveness of Different Modules

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Javaid, M.; Haleem, A.; Singh, R.P.; Sinha, A.K. Digital economy to improve the culture of industry 4.0: A study on features, implementation and challenges. Green Technol. Sustain. 2024, 2, 100083.

- Liu, X.; Yu, J.; Ye, L. Residual attention convolutional autoencoder for feature learning and fault detection in nonlinear industrial processes. Neural Comput. Appl. 2021, 33, 12737–12753.

- Wang, H.; Liu, Z.; Peng, D.; Yang, M.; Qin, Y. Feature-Level Attention-Guided Multitask CNN for Fault Diagnosis and Working Conditions Identification of Rolling Bearing. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 4757–4769.

- Wang, X.; Jiang, H.; Mu, M.; Dong, Y. A trackable multi-domain collaborative generative adversarial network for rotating machinery fault diagnosis. Mech. Syst. Signal Process. 2025, 224, 111950.

- Li, X. A spectral self-focusing fault diagnosis method for automotive transmissions under gear-shifting conditions. Mech. Syst. Signal Process. 2023, 200, 110499.

- Yao, R.; Jiang, H.; Yang, C.; Zhu, H.; Liu, C. An integrated framework via key-spectrum entropy and statistical properties for bearing dynamic health monitoring and performance degradation assessment. Mech. Syst. Signal Process. 2023, 187, 109955.

- Zhang, J.; Jiang, Y.; Li, X.; Huo, M.; Luo, H.; Yin, S. An adaptive remaining useful life prediction approach for single battery with unlabeled small sample data and parameter uncertainty. Reliab. Eng. Syst. Saf. 2022, 222, 108357.

- Dong, Y.; Jiang, H.; Jiang, W.; Xie, L. Dynamic normalization supervised contrastive network with multiscale compound attention mechanism for gearbox imbalanced fault diagnosis. Eng. Appl. Artif. Intell. 2024, 133, 108098.

- Wang, Y.; Wei, Z.; Yang, J. Feature Trend Extraction and Adaptive Density Peaks Search for Intelligent Fault Diagnosis of Machines. IEEE Trans. Ind. Inform. 2019, 15, 105–115.

- Zhao, C.; Shen, W. Mutual-assistance semisupervised domain generalization network for intelligent fault diagnosis under unseen working conditions. Mech. Syst. Signal Process. 2023, 189, 110074.

- Tang, G.; Yi, C.; Liu, L.; Xu, D.; Zhou, Q.; Hu, Y.; Zhou, P.; Lin, J. A parallel ensemble optimization and transfer learning based intelligent fault diagnosis framework for bearings. Eng. Appl. Artif. Intell. 2024, 127, 107407.

- Du, J.; Li, X.; Gao, Y.; Gao, L. Integrated gradient-based continuous wavelet transform for bearing fault diagnosis. Sensors 2022, 22, 8760.

- Bertocco, M.; Fort, A.; Landi, E.; Mugnaini, M.; Parri, L.; Peruzzi, G.; Pozzebon, A. Roller bearing failures classification with low computational cost embedded machine learning. In Proceedings of the 2022 IEEE International Workshop on Metrology for Automotive (MetroAutomotive), Modena, Italy, 4–6 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 12–17.

- Ding, X.; Wang, H.; Cao, Z.; Liu, X.; Liu, Y.; Huang, Z. An edge intelligent method for bearing fault diagnosis based on a parameter transplantation convolutional neural network. Electronics 2023, 12, 1816.

- Kiakojouri, A.; Lu, Z.; Mirring, P.; Powrie, H.; Wang, L. A Novel Hybrid Technique Combining Improved Cepstrum Pre-Whitening and HighPass Filtering for Effective Bearing Fault Diagnosis Using Vibration Data. Sensors 2023, 23, 9048.

- Zhao, K.; Liu, Z.; Shao, H. Class-Aware Adversarial Multiwavelet Convolutional Neural Network for Cross-Domain Fault Diagnosis. IEEE Trans. Ind. Inform. 2024, 20, 4492–4503.

- Cao, H.; Shao, H.; Zhong, X.; Deng, Q.; Yang, X.; Xuan, J. Unsupervised domain-share CNN for machine fault transfer diagnosis from steady speeds to time-varying speeds. J. Manuf. Syst. 2022, 62, 186–198.

- Zhang, Z.; Shen, Y.; Xu, S. Unsupervised learning of part-based representations using sparsity optimized auto-encoder for machinery fault diagnosis. Control Eng. Pract. 2024, 145, 105871.

- Fang, Y.; Yap, P.-T.; Lin, W.; Zhu, H.; Liu, M. Source-free unsupervised domain adaptation: A survey. Neural Netw. 2024, 174, 106230.

- Huang, D.; Zhang, W.-A.; Guo, F.; Liu, W.; Shi, X. Wavelet Packet Decomposition-Based Multiscale CNN for Fault Diagnosis of Wind Turbine Gearbox. IEEE Trans. Cybern. 2023, 53, 443–453.

- Zhao, Z.; Jiao, Y. A Fault Diagnosis Method for Rotating Machinery Based on CNN With Mixed Information. IEEE Trans. Ind. Inform. 2023, 19, 9091–9101.

- Stewart, G.; Al-Khassaweneh, M. An Implementation of the HDBSCAN* Clustering Algorithm. Appl. Sci. 2022, 12, 2405.

- Tschannen, M.; Bachem, O.; Lucic, M. Recent Advances in Autoencoder-Based Representation Learning. arXiv 2018, arXiv:1812.05069.

- Zhou, F.; Yang, S.; Wen, C.; Park, J.H. Improved DAE and application in fault diagnosis. In Proceedings of the 2018 Chinese Control And Decision Conference (CCDC), Shenyang, China, 9–11 June 2018.

- Gao, L.; La Tour, T.D.; Tillman, H.; Goh, G.; Troll, R.; Radford, A.; Sutskever, I.; Leike, J.; Wu, J. Scaling and evaluating sparse autoencoders. arXiv 2024, arXiv:2406.04093.

- Ryu, S.; Choi, H.; Lee, H.; Kim, H. Convolutional Autoencoder Based Feature Extraction and Clustering for Customer Load Analysis. IEEE Trans. Power Syst. 2020, 35, 1048–1060.

- Yu, M.; Quan, T.; Peng, Q.; Yu, X.; Liu, L. A model-based collaborate filtering algorithm based on stacked AutoEncoder. Neural Comput. Appl. 2022, 34, 2503–2511.

- Liu, L.; Zheng, Y.; Liang, S. Variable-Wise Stacked Temporal Autoencoder for Intelligent Fault Diagnosis of Industrial Systems. IEEE Trans. Ind. Inform. 2024, 20, 7545–7555.

- Zhang, J.; Zhang, K.; An, Y.; Luo, H.; Yin, S. An Integrated Multitasking Intelligent Bearing Fault Diagnosis Scheme Based on Representation Learning Under Imbalanced Sample Condition. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 6231–6242.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. arXiv 2020, arXiv:2005.12872v3.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2023, arXiv:1706.03762v7.

- Neupane, D.; Seok, J. Bearing Fault Detection and Diagnosis Using Case Western Reserve University Dataset With Deep Learning Approaches: A Review. IEEE Access 2020, 8, 93155–93178.

| Features of Error | Features of Signal | Formula |
|---|---|---|
| Maximum | Maximum | |
| Minimum | Minimum | |
| Peak-to-Peak Value | Peak-to-Peak Value | |
| Mean | Mean | |
| Variance | Variance | |
| - | Root Mean Square | |
| - | Skewness | |
| - | Kurtosis | |
| - | Wavelet Coefficient | /N |
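The table lists five error features and nine signal features, which together match the 14-value input of the MLP. The sketch below shows one way such a feature vector could be assembled. It relies on assumptions that are not confirmed by the source: the error is taken as the point-wise difference between signal and reconstruction, variance, skewness, and kurtosis use population (1/N) moments, and the wavelet feature is a hypothetical mean absolute one-level Haar detail coefficient, because the table's own formulas did not survive.

```python
import math


def basic_stats(x):
    """Maximum, minimum, peak-to-peak value, mean, and population variance."""
    n = len(x)
    mu = sum(x) / n
    var = sum((v - mu) ** 2 for v in x) / n
    return [max(x), min(x), max(x) - min(x), mu, var]


def signal_features(x):
    """Nine signal features: the five basic ones plus RMS, skewness, kurtosis, wavelet."""
    n = len(x)
    mx, mn, p2p, mu, var = basic_stats(x)
    std = math.sqrt(var)
    rms = math.sqrt(sum(v * v for v in x) / n)
    skew = sum((v - mu) ** 3 for v in x) / (n * std ** 3) if std > 0 else 0.0
    kurt = sum((v - mu) ** 4 for v in x) / (n * std ** 4) if std > 0 else 0.0
    # Assumption: mean absolute one-level Haar detail coefficient.
    details = [(x[i] - x[i + 1]) / math.sqrt(2) for i in range(0, n - 1, 2)]
    wavelet = sum(abs(d) for d in details) / len(details)
    return [mx, mn, p2p, mu, var, rms, skew, kurt, wavelet]


def mlp_input(signal, reconstruction):
    """Concatenate 5 error features and 9 signal features into 14 values."""
    error = [s - r for s, r in zip(signal, reconstruction)]
    return basic_stats(error) + signal_features(signal)


signal = [0.0, 1.0, 0.0, -1.0] * 4
reconstruction = [0.9 * v for v in signal]  # hypothetical reconstruction
features = mlp_input(signal, reconstruction)
print(len(features))  # 14
```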

| Module Name | Layer Type | Parameters | Operation |
|---|---|---|---|
| LKA-WDCAE | Convolution | Kernel 16–64 × 4 | BN, ReLU |
| LKA-WDCAE | Max-Pooling | Kernel 16–2 × 2 | / |
| LKA-WDCAE | Convolution | Kernel 32–3 × 1 | BN, ReLU |
| LKA-WDCAE | Large Kernel Attention | 256, head = 8 | ReLU, Sigmoid |
| LKA-WDCAE | Convolution | Kernel 64–3 × 1 | BN, ReLU |
| LKA-WDCAE | Max-Pooling | Kernel 64–2 × 2 | Flatten |
| LKA-WDCAE | Linear Layer1 | 64, 1024 | |
| LKA-WDCAE | Linear Layer2 | 1024, 64 × 128 | UnFlatten |
| LKA-WDCAE | ConvTranspose | Kernel 32–3 × 1 | ReLU |
| LKA-WDCAE | Large Kernel Attention | 256, head = 8 | |
| LKA-WDCAE | ConvTranspose | Kernel 16–3 × 1 | ReLU |
| LKA-WDCAE | ConvTranspose | Kernel 16–64 × 4 | ReLU |
| MLP | Input Layer | Input Size: 14 | |
| MLP | Linear | Input: 14, Output: 128 | BN |
| MLP | Linear | Input: 128, Output: 64 | ReLU |
| MLP | Dropout | p = 0.2 | |
| MLP | Linear | Input: 64, Output: 32 | ReLU |
| MLP | Linear | Input: 32, Output: 1 | Linear (None) |
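To make the MLP rows of the table concrete, the following sketch walks a 14-value feature vector through linear layers of sizes 14, 128, 64, 32, and 1 and counts the weights involved. It is an illustration under assumptions: every linear layer is taken to have a bias, the weights are random placeholders rather than trained values, and batch normalization and dropout are left out for brevity.

```python
import random

LAYER_SIZES = [14, 128, 64, 32, 1]
# ReLU placement follows the Operation column; BN and dropout are omitted here.
RELU_AFTER = [False, True, True, False]


def init_layer(n_in, n_out, rng):
    """Random placeholder weights and zero biases for one linear layer."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def forward(x, layers):
    """Apply each linear layer, with ReLU where the table lists it."""
    for (weights, biases), relu in zip(layers, RELU_AFTER):
        x = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]
        if relu:
            x = [max(0.0, v) for v in x]
    return x[0]  # a single scalar: the adaptive threshold


rng = random.Random(0)
layers = [init_layer(a, b, rng) for a, b in zip(LAYER_SIZES, LAYER_SIZES[1:])]

# Weights plus biases of the four linear layers (assumes biases are used).
n_params = sum(a * b + b for a, b in zip(LAYER_SIZES, LAYER_SIZES[1:]))
print(n_params)  # 12289

threshold = forward([0.5] * 14, layers)
print(type(threshold).__name__)  # float
```

If the batch normalization layer carries a learnable scale and shift, it would add another 2 × 128 parameters to this count.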

| Bearing State | Sensor | Diameter (mm) | Class Label | Data Length | Sample Number |
|---|---|---|---|---|---|
| Normal | DE & FE | - | Nor | 1024 | 200 |
| Rolling element fault | DE & FE | 0.178 | Ro07 | 1024 | 200 |
| Rolling element fault | DE & FE | 0.356 | Ro17 | 1024 | 200 |
| Rolling element fault | DE & FE | 0.533 | Ro21 | 1024 | 200 |
| Inner ring fault | DE & FE | 0.178 | In07 | 1024 | 200 |
| Inner ring fault | DE & FE | 0.356 | In17 | 1024 | 200 |
| Inner ring fault | DE & FE | 0.533 | In21 | 1024 | 200 |
| Outer ring fault | DE & FE | 0.178 | Ou07 | 1024 | 200 |
| Outer ring fault | DE & FE | 0.356 | Ou14 | 1024 | 200 |
| Outer ring fault | DE & FE | 0.533 | Ou21 | 1024 | 200 |
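Each class in the table contributes 200 samples of 1024 points. The source does not say how the samples are cut from the raw recordings, so the sketch below is only one plausible approach: equally spaced, possibly overlapping windows. The function name `segment`, the stride rule, and the record length are all assumptions made for illustration.

```python
def segment(signal, window=1024, count=200):
    """Cut `count` equally spaced windows of length `window` from one record."""
    if count < 1:
        raise ValueError("count must be at least 1")
    if len(signal) < window:
        raise ValueError("record is shorter than one window")
    if count == 1:
        return [signal[:window]]
    # Spread the window start positions evenly over the record.
    stride = (len(signal) - window) // (count - 1)
    if stride < 1:
        raise ValueError("record too short for the requested number of distinct windows")
    return [signal[i * stride: i * stride + window] for i in range(count)]


record = list(range(120_000))  # hypothetical record length
samples = segment(record)
print(len(samples), len(samples[0]))  # 200 1024
```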

| Signal Type | Sensor Model | Sensor Layout | Collection Device | Sampling Frequency |
|---|---|---|---|---|
| Vibration Acceleration Signal | PCB 356A16 | One attached magnetically to the fixed-end bearing seat, another to the support-end bearing seat or the mobile platform | NI 9230 | |
| Servo Information | — | — | Servo Driver Panasonic MBDLN25BE | ~2000 Hz |

| Task Name | Signal Proportion | Task Description |
|---|---|---|
| Task 1 | 100% normal signals, 0% fault signals | Simulates a fault-free baseline scenario |
| Task 2 | 95% normal signals, 5% fault signals | Simulates an early-stage fault scenario |
| Task 3 | 90% normal signals, 10% fault signals | Simulates a moderate fault scenario |
| Task 4 | 85% normal signals, 15% fault signals | Simulates more noticeable fault occurrences |
| Task 5 | 80% normal signals, 20% fault signals | Simulates a challenging scenario |
| Task 6 | 75% normal signals, 25% fault signals | Simulates real-world imbalanced datasets |
| Task 7 | 70% normal signals, 30% fault signals | Simulates highly imbalanced datasets |
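The seven tasks differ only in the share of fault signals mixed into the data. A minimal sketch of how such a mixture could be assembled is given below. The helper `build_task`, the total of 200 samples, and the integer stand-ins for signals are hypothetical, and the 0/1 labels are kept only for scoring, since the method itself is unsupervised.

```python
import random

# Fault-signal share per task, taken from the table above.
FAULT_RATIOS = {1: 0.00, 2: 0.05, 3: 0.10, 4: 0.15, 5: 0.20, 6: 0.25, 7: 0.30}


def build_task(normal, fault, fault_ratio, total, seed=0):
    """Draw a shuffled mix of normal (label 0) and fault (label 1) samples."""
    rng = random.Random(seed)
    n_fault = round(total * fault_ratio)
    n_normal = total - n_fault
    mixed = [(x, 0) for x in rng.sample(normal, n_normal)]
    mixed += [(x, 1) for x in rng.sample(fault, n_fault)]
    rng.shuffle(mixed)
    return mixed


# Integers stand in for signal samples in this illustration.
normal_pool = list(range(1000))
fault_pool = list(range(1000, 1300))

task3 = build_task(normal_pool, fault_pool, FAULT_RATIOS[3], total=200)
print(len(task3), sum(label for _, label in task3))  # 200 20
```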

| Method | Description |
|---|---|
| M1 | DAE, analogous to AE, with enhanced resistance to noisy signals. |
| M2 | VAE, analogous to AE, offering advantages in generative capability, latent space continuity, generalizability, and interpretability. |
| M3 | SAE, analogous to AE, with added sparsity constraints for learning more representative features. |
| V1 | MDAE-SAMB [32], integrates attention mechanisms within neurons for efficient and precise fault detection. |
| V2 | SOAE [14], includes two sparse optimization attributes for high-precision unsupervised fault detection. |

| Method | Wide Kernel Convolution Layer | Large Kernel Attention Layer | Self-Attention Layer | MLP Adaptive Thresholding Layer |
|---|---|---|---|---|
| A1 | × | × | × | × |
| A2 | √ | × | × | × |
| A3 | √ | √ | × | × |
| B1 | √ | × | √ | × |
| B2 | √ | × | √ | √ |
| LKA-WDCAE | √ | √ | × | √ |

| Task Name | M1 | M2 | M3 | V1 | V2 | Proposed |
|---|---|---|---|---|---|---|
| CWRU-Task 1 | 80.36% | 80.10% | 83.52% | 99.83% | 98.39% | 98.87% |
| CWRU-Task 2 | 76.74% | 76.97% | 78.99% | 93.37% | 93.89% | 95.63% |
| CWRU-Task 3 | 72.96% | 72.24% | 73.96% | 90.42% | 89.42% | 92.41% |
| CWRU-Task 4 | 67.99% | 68.03% | 68.91% | 85.44% | 85.91% | 89.25% |
| CWRU-Task 5 | 62.13% | 63.37% | 62.24% | 81.13% | 80.60% | 88.09% |
| CWRU-Task 6 | 55.76% | 56.50% | 57.68% | 77.13% | 76.28% | 84.97% |
| CWRU-Task 7 | 48.24% | 50.45% | 50.18% | 72.95% | 71.66% | 82.82% |
| CWRU-Avg | 66.12% | 66.95% | 67.93% | 85.75% | 85.16% | 90.29% |
| Ball screw-Task 1 | 68.78% | 70.89% | 73.52% | 88.21% | 86.91% | 86.25% |
| Ball screw-Task 2 | 65.45% | 67.54% | 70.45% | 82.04% | 81.93% | 82.47% |
| Ball screw-Task 3 | 61.12% | 62.32% | 65.12% | 77.84% | 77.52% | 79.60% |
| Ball screw-Task 4 | 56.32% | 58.75% | 60.78% | 71.54% | 71.66% | 75.82% |
| Ball screw-Task 5 | 52.67% | 53.90% | 54.54% | 66.43% | 67.21% | 73.12% |
| Ball screw-Task 6 | 46.90% | 47.78% | 49.40% | 61.13% | 61.49% | 71.43% |
| Ball screw-Task 7 | 41.23% | 42.11% | 42.25% | 58.02% | 53.45% | 67.45% |
| Ball screw-Avg | 56.07% | 57.61% | 59.43% | 71.90% | 72.25% | 76.59% |

| Task Name | V1 | V2 | LKA-WDCAE |
|---|---|---|---|
| CWRU-Task 1 | 98.68 | 113.13 | 96.78 |
| Ball screw-Task 1 | 104.42 | 115.10 | 101.93 |

| Task Name | A1 | A2 | A3 | B1 | B2 | LKA-WDCAE |
|---|---|---|---|---|---|---|
| CWRU-Task 1 | 75.82% | 81.63% | 98.87% | 99.21% | 99.21% | 98.87% |
| CWRU-Task 2 | 72.58% | 78.12% | 93.12% | 93.54% | 95.97% | 95.63% |
| CWRU-Task 3 | 69.30% | 73.39% | 89.37% | 90.12% | 92.84% | 92.41% |
| CWRU-Task 4 | 63.12% | 69.14% | 84.76% | 86.85% | 90.34% | 89.25% |
| CWRU-Task 5 | 56.99% | 64.51% | 80.58% | 82.37% | 88.53% | 88.09% |
| CWRU-Task 6 | 50.45% | 57.72% | 76.23% | 78.43% | 85.23% | 84.97% |
| CWRU-Task 7 | 43.67% | 51.86% | 70.88% | 73.76% | 83.45% | 82.82% |
| CWRU-Avg | 61.57% | 68.04% | 84.83% | 86.32% | 90.79% | 90.29% |
| Ball screw-Task 1 | 67.34% | 72.34% | 86.25% | 87.64% | 87.64% | 86.25% |
| Ball screw-Task 2 | 65.88% | 68.92% | 81.23% | 82.43% | 82.91% | 82.47% |
| Ball screw-Task 3 | 61.45% | 63.11% | 77.11% | 78.25% | 80.47% | 79.60% |
| Ball screw-Task 4 | 56.10% | 59.45% | 72.78% | 73.68% | 76.42% | 75.82% |
| Ball screw-Task 5 | 51.25% | 54.03% | 66.54% | 67.19% | 73.85% | 73.12% |
| Ball screw-Task 6 | 45.63% | 48.89% | 61.40% | 61.96% | 71.96% | 71.43% |
| Ball screw-Task 7 | 39.05% | 42.76% | 55.25% | 58.73% | 68.13% | 67.45% |
| Ball screw-Avg | 49.53% | 58.50% | 71.51% | 72.84% | 77.34% | 76.59% |

| Task Name | A1 | A2 | A3 | B1 | B2 | LKA-WDCAE |
|---|---|---|---|---|---|---|
| CWRU-Task 1 | 65.14 | 82.35 | 91.28 | 107.81 | 122.32 | 96.78 |
| Ball screw-Task 1 | 72.56 | 86.23 | 94.72 | 112.15 | 128.47 | 101.93 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yan, H.; Si, X.; Liang, J.; Duan, J.; Shi, T. Unsupervised Learning for Machinery Adaptive Fault Detection Using Wide-Deep Convolutional Autoencoder with Kernelized Attention Mechanism. Sensors 2024, 24, 8053. https://doi.org/10.3390/s24248053
