Effectiveness of Data Augmentation for Localization in WSNs Using Deep Learning for the Internet of Things

Abstract

1. Introduction

2. Localization Process

3. Data Augmentation in WSN Application

Algorithm 1. Generator for the coordinates of the virtual anchors

    Input: BorderLength, NodeAmount, BeaconAmount, Span, V;
           C (generation of coordinates of all nodes);
           Beacon = [C(1,1:BeaconAmount); C(2,1:BeaconAmount)];  % Coordinates of real anchors
    Output:
    for V = 5:5:25
        k = BeaconAmount × V;
        for i = NodeAmount:−1:BeaconAmount+1
            C(:,i+k) = C(:,i);  % Shift unknown nodes to leave room for virtual ones
        end
        n = 1;
        for i = 1:V+1:k+BeaconAmount
            Vcx = Span × randn(1,V);
            Vcy = Span × randn(1,V);
            for j = 0:V
                if (j == 0)
                    C(:,i+j) = Beacon(:,n);
                else
                    C(1,i+j) = C(1,i) + Vcx(1,j);
                    C(2,i+j) = C(2,i) + Vcy(1,j);
                    Bind C(:,i+j) within the BorderLength × BorderLength square if outside
                end
            end
            n = n + 1;
        end
        n = n − 1;  % Total number of real and virtual anchors n = BeaconAmount × (V + 1)
    end
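The core of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the function name `generate_virtual_anchors`, its parameters, and the list-of-tuples layout are hypothetical, and "bind within the square" is assumed to mean clipping each coordinate to the interval [0, BorderLength]. The shifting of unknown nodes inside the matrix C is omitted, since the sketch returns the anchors as a separate list.

```python
import random


def generate_virtual_anchors(real_anchors, v, span, border_length, rng=None):
    """Return each real anchor followed by its v virtual anchors.

    real_anchors: list of (x, y) tuples for the real anchors (beacons).
    v: number of virtual anchors generated around each real anchor.
    span: scale of the Gaussian offset (plays the role of Span x randn).
    border_length: side of the square deployment area.
    """
    rng = rng or random.Random()
    anchors = []
    for x, y in real_anchors:
        # j = 0 in Algorithm 1: keep the real anchor itself.
        anchors.append((x, y))
        for _ in range(v):
            # j >= 1: offset the real anchor by a Gaussian step scaled by span.
            vx = x + span * rng.gauss(0.0, 1.0)
            vy = y + span * rng.gauss(0.0, 1.0)
            # Assumption: "bind" means clipping to the square's borders.
            vx = min(max(vx, 0.0), border_length)
            vy = min(max(vy, 0.0), border_length)
            anchors.append((vx, vy))
    return anchors


if __name__ == "__main__":
    beacons = [(10.0, 10.0), (50.0, 50.0), (90.0, 20.0), (30.0, 80.0), (70.0, 70.0)]
    augmented = generate_virtual_anchors(beacons, v=5, span=12.0, border_length=100.0)
    print(len(augmented))  # BeaconAmount x (V + 1) entries
```

Keeping each real anchor immediately ahead of its virtual anchors mirrors the stride of V + 1 used by the outer loop of the algorithm.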

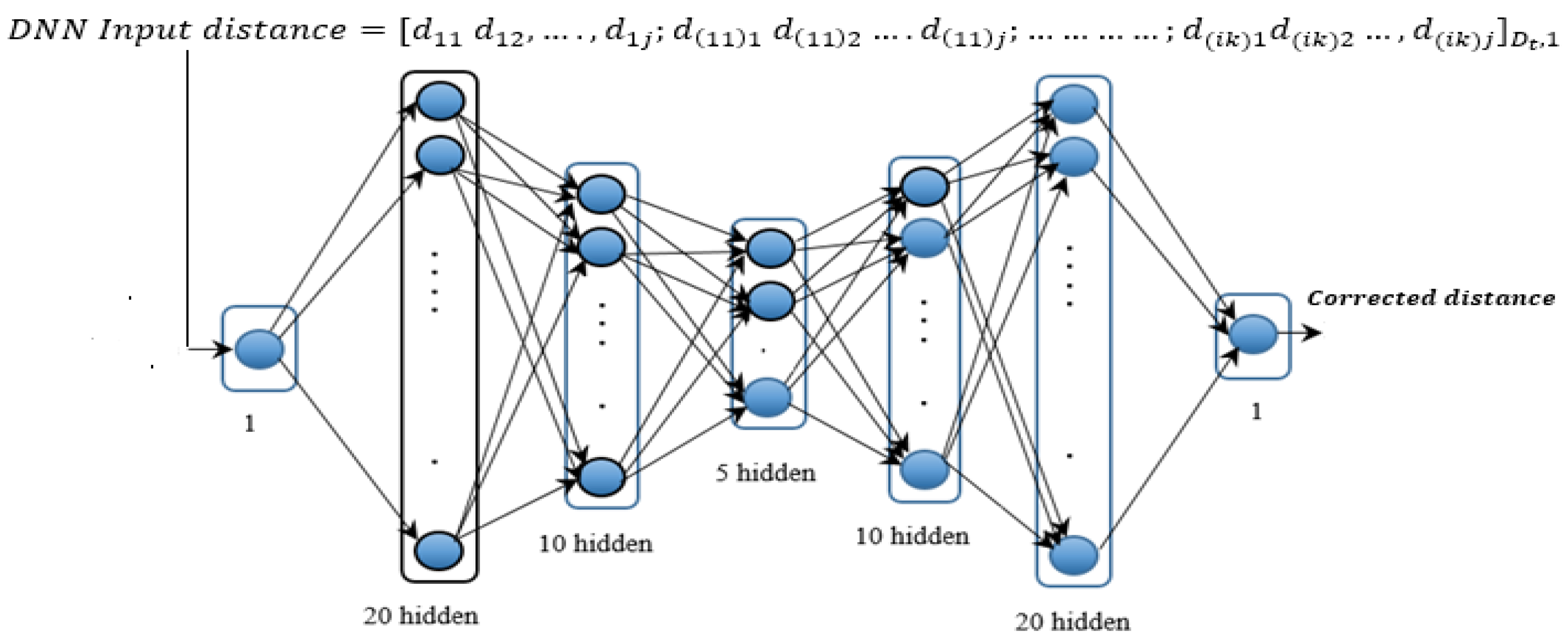

4. DNN-Based Estimated Distance Correction

5. Simulation and Performance Analysis

5.1. Experiment Results

5.1.1. Effect of Span

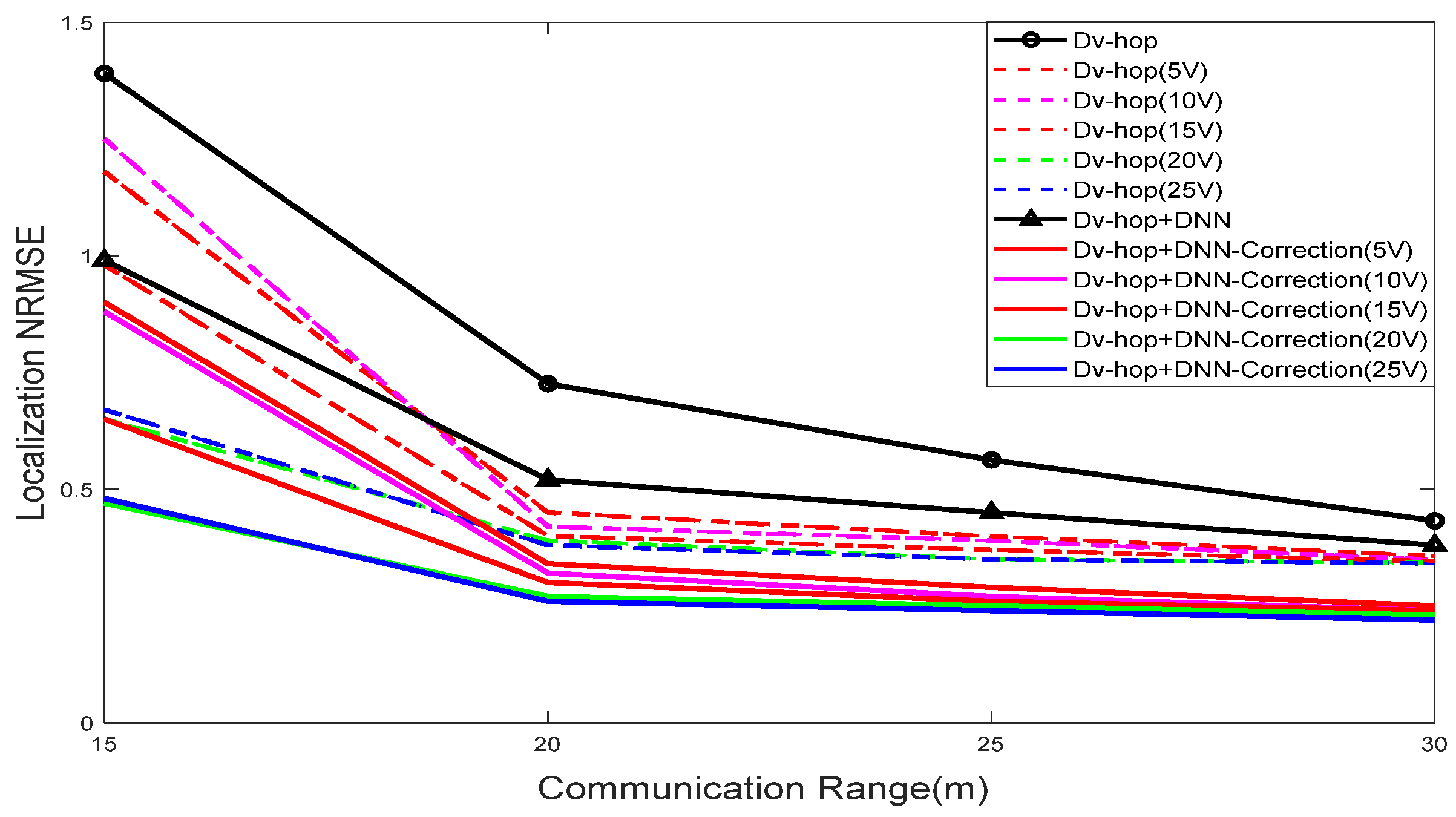

5.1.2. Effect of Node Communication Range

5.2. Performance Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


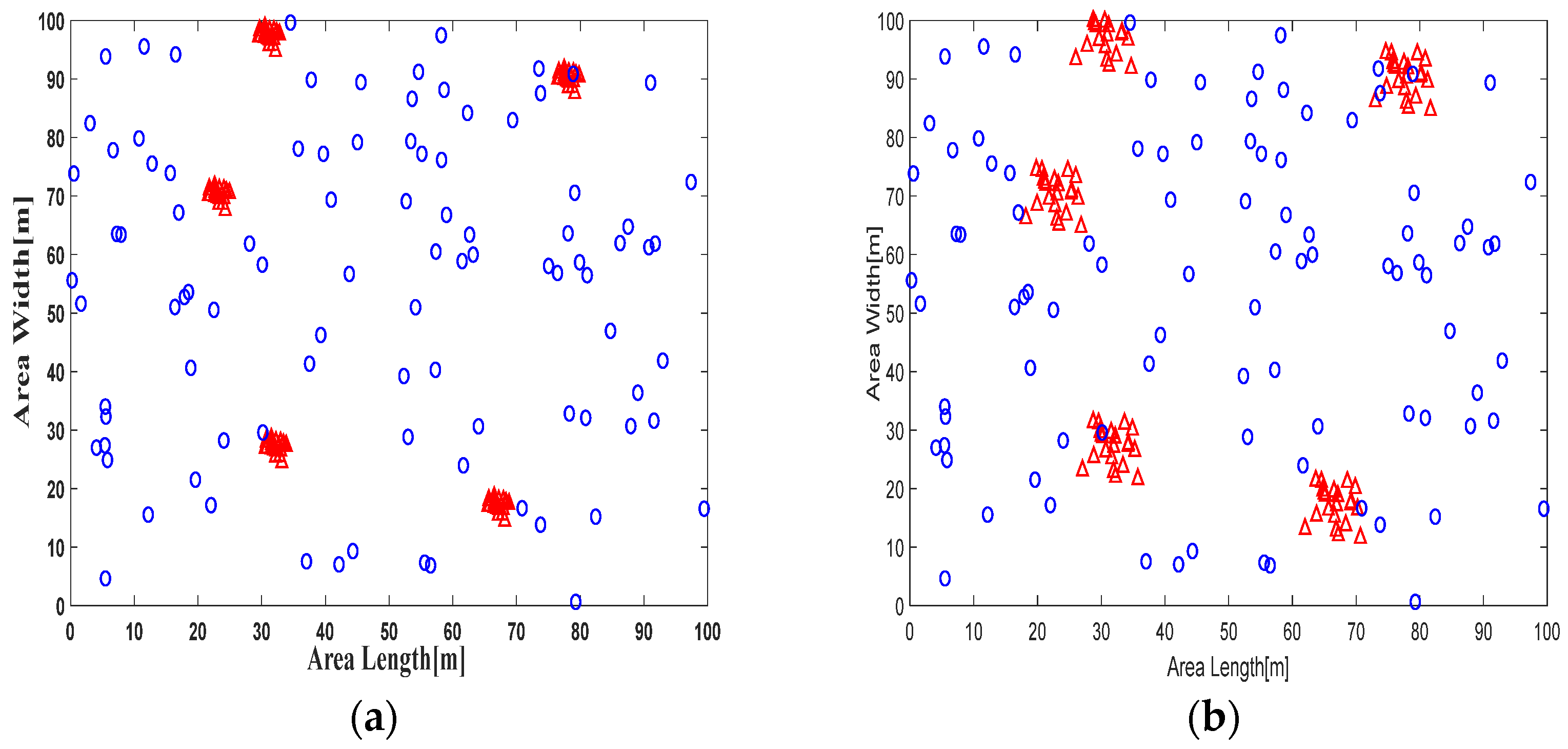

Legend: unknown nodes; real and virtual anchors (a,b).

| Virtual Anchors | Anchors with Augmentation | |
|---|---|---|
| 5 | 30 | 2850 |
| 10 | 55 | 5225 |
| 15 | 80 | 7600 |
| 20 | 105 | 9975 |
| 25 | 130 | 12,350 |
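The counts in this table follow from simple arithmetic. With 5 real anchors, each receiving V virtual anchors, the total number of anchors is 5 × (V + 1). The unlabeled third column equals that total multiplied by the 95 unknown nodes; reading it as the number of anchor-to-unknown-node pairs is an assumption drawn from the numbers, not something the table states. The names below are hypothetical.

```python
REAL_ANCHORS = 5     # number of real anchors used in the experiments
UNKNOWN_NODES = 95   # number of unknown nodes used in the experiments


def augmented_counts(v):
    """Return (total anchors, anchor/unknown-node pairs) for v virtual anchors per real anchor."""
    total_anchors = REAL_ANCHORS * (v + 1)
    # Assumption: the third column counts one entry per (anchor, unknown node) pair.
    pairs = total_anchors * UNKNOWN_NODES
    return total_anchors, pairs


if __name__ == "__main__":
    for v in (5, 10, 15, 20, 25):
        print(v, *augmented_counts(v))
```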

| Symbol | Contents of Experiments | Value |
|---|---|---|
| | Number of unknown nodes | 95 |
| A | Number of real anchors | 5 |
| V | Number of virtual anchors | 5, 10, 15, 20, 25 |
| Sa | Square area | 100 × 100 m² |
| R | Communication range | 15 m, 20 m, 25 m, 30 m |
| σ | Node density | 0.01 |
| S | Span | 1 m, 3 m, 6 m, 9 m, 12 m |
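The experiment parameters can be gathered into a small configuration object, which also makes the listed node density easy to check. This is an illustrative sketch only: the class name `ExperimentSetup` and its field names are hypothetical, and the density is assumed to be the total node count (unknown nodes plus real anchors) divided by the deployment area, which reproduces the value 0.01 given in the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExperimentSetup:
    unknown_nodes: int = 95
    real_anchors: int = 5
    side_m: float = 100.0                      # side of the square area, in metres
    virtual_anchors: tuple = (5, 10, 15, 20, 25)
    comm_ranges_m: tuple = (15, 20, 25, 30)
    spans_m: tuple = (1, 3, 6, 9, 12)

    @property
    def node_density(self):
        # Assumption: density counts unknown nodes and real anchors per square metre.
        return (self.unknown_nodes + self.real_anchors) / (self.side_m ** 2)


if __name__ == "__main__":
    setup = ExperimentSetup()
    print(setup.node_density)
```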

| NRMSE of | Span = 1 m | Span = 3 m | Span = 6 m | Span = 9 m | Span = 12 m |
|---|---|---|---|---|---|
| Dv-hop | 43.87% | 43.34% | 43.24% | 43.22% | 43.20% |
| Dv-hop (5 V) | 43.09% | 41.37% | 39.91% | 37.65% | 36.58% |
| Dv-hop (10 V) | 43.09% | 39.05% | 37.35% | 35.45% | 35.35% |
| Dv-hop (15 V) | 43.07% | 38.75% | 36.97% | 35.26% | 34.17% |
| Dv-hop (20 V) | 43.06% | 38.08% | 36.55% | 35.14% | 34.11% |
| Dv-hop (25 V) | 43.06% | 38.05% | 36.87% | 35.05% | 34.02% |
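For readers who want to reproduce a metric of this kind, the sketch below computes a normalized root-mean-square error over position estimates. The normalization is an assumption: dividing the RMSE by the communication range R is one common convention in range-free localization, and the tables here do not state which convention the authors used. The function name and argument layout are hypothetical.

```python
import math


def nrmse(true_positions, estimated_positions, comm_range):
    """RMSE of the position errors divided by the communication range.

    true_positions, estimated_positions: equal-length lists of (x, y) tuples.
    comm_range: normalization constant (assumed here to be the range R).
    """
    if len(true_positions) != len(estimated_positions) or not true_positions:
        raise ValueError("position lists must be non-empty and of equal length")
    squared_errors = [
        (xt - xe) ** 2 + (yt - ye) ** 2
        for (xt, yt), (xe, ye) in zip(true_positions, estimated_positions)
    ]
    rmse = math.sqrt(sum(squared_errors) / len(squared_errors))
    return rmse / comm_range


if __name__ == "__main__":
    truth = [(0.0, 0.0), (10.0, 0.0)]
    estimate = [(3.0, 4.0), (10.0, 5.0)]
    print(f"{100 * nrmse(truth, estimate, comm_range=20.0):.2f}%")
```

Under this convention a value above 100% means the typical position error exceeds the communication range.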

| NRMSE of | Span = 1 m | Span = 3 m | Span = 6 m | Span = 9 m | Span = 12 m |
|---|---|---|---|---|---|
| Dv-hop + DNN | 33.65% | 33.05% | 33.04% | 33.04% | 33.05% |
| Dv-hop + DNN (5 V) | 32.23% | 31.35% | 29.95% | 27.55% | 26.34% |
| Dv-hop + DNN (10 V) | 32.15% | 30.05% | 26.45% | 24.89% | 24.73% |
| Dv-hop + DNN (15 V) | 32.15% | 29.85% | 25.91% | 24.54% | 24.34% |
| Dv-hop + DNN (20 V) | 32.05% | 29.05% | 25.76% | 23.55% | 23.29% |
| Dv-hop + DNN (25 V) | 32.05% | 29.15% | 25.12% | 22.85% | 22.30% |

| NRMSE of | R = 15 m | R = 20 m | R = 25 m | R = 30 m |
|---|---|---|---|---|
| Dv-hop | 139.89% | 72.56% | 56.34% | 43.22% |
| Dv-hop (5 V) | 118.45% | 45.45% | 39.45% | 35.58% |
| Dv-hop (10 V) | 115.67% | 42.34% | 37.87% | 34.35% |
| Dv-hop (15 V) | 98.87% | 40.65% | 36.23% | 34.17% |
| Dv-hop (20 V) | 65.82% | 39.54% | 35.05% | 34.11% |
| Dv-hop (25 V) | 67.72% | 38.67% | 35.12% | 34.02% |

| NRMSE of | R = 15 m | R = 20 m | R = 25 m | R = 30 m |
|---|---|---|---|---|
| Dv-hop + DNN | 99.02% | 52.55% | 45.53% | 34.05% |
| Dv-hop + DNN (5 V) | 90.12% | 34.87% | 29.34% | 25.34% |
| Dv-hop + DNN (10 V) | 88.98% | 32.56% | 27.43% | 24.73% |
| Dv-hop + DNN (15 V) | 65.44% | 30.33% | 26.82% | 24.34% |
| Dv-hop + DNN (20 V) | 47.34% | 24.36% | 24.25% | 23.49% |
| Dv-hop + DNN (25 V) | 48.54% | 25.5% | 23.56% | 22.60% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Esheh, J.; Affes, S. Effectiveness of Data Augmentation for Localization in WSNs Using Deep Learning for the Internet of Things. Sensors 2024, 24, 430. https://doi.org/10.3390/s24020430
