Abstract

The rapid evolution of 3D technology in recent years has brought significant changes to agriculture, including precision livestock management. From 3D geometry, the weight and body-part characteristics of Korean cattle can be analyzed to help improve cattle growth. In this paper, a system of cameras is built to synchronously capture 3D data and then reconstruct a 3D mesh representation. In general, to reconstruct non-rigid objects, a system of cameras is synchronized and calibrated, and then the data of each camera are transformed to global coordinates. However, when reconstructing cattle in a real environment, difficulties such as fences and camera vibration can cause the reconstruction to fail. We propose a new scheme that automatically removes environmental fences and noise. We also propose an optimization method that interleaves camera pose updates with global mesh updates and adds the distance between each camera pose and its initial position to the objective function. The mean distance between the cameras' point clouds and the output mesh is reduced from 7.5 mm to 5.5 mm. The experimental results show that our scheme can automatically generate a high-quality mesh in a real environment. This scheme provides data that can be used in other research on Korean cattle.

1. Introduction

In general, 3D objects can be reconstructed from 2D images or from 3D cameras. With 2D images as input, objects such as buildings can be reconstructed by structure-from-motion algorithms [1], whereas 3D cameras, as used in the KinectFusion system [2], have an advantage in reconstructing objects at shorter range because of the high-quality data they capture. In 3D-camera-based reconstruction, objects are commonly divided into two types: rigid objects and non-rigid objects.

For rigid objects, a sequence of frames is captured by one camera, and then global registration is performed [2,3]. When two frames are close and overlap substantially, the ICP algorithm [4,5,6,7,8] can be used to register them. When the initial relative pose between two cameras is unknown, Rusu et al. (2009) proposed a global alignment method based on the FPFH feature [9]. After pairs of frames are aligned locally, the poses of all frames are aligned globally by a pose graph algorithm [10] or by loop closure [2].

For non-rigid objects, reconstructing a moving object with a single camera [11,12] is severely limited because the shape of the object changes quickly. In general, to reconstruct non-rigid objects, a system of cameras needs to be synchronized and calibrated, and the data from each camera are then transformed to global coordinates.

However, when reconstructing cattle in a real environment, difficulties such as occlusion by surrounding fences and camera vibration can cause the reconstruction to fail. Cameras can be shaken by wind or ground vibration, so even though the camera system is calibrated beforehand, each camera's point cloud cannot be mapped directly to global coordinates to form a well-defined 3D shape. Environmental fences, which partially block data capture, leave large empty areas on the cow's surface and produce noisy data near the fences. Camera vibration and fences together make the reconstruction a challenging task.

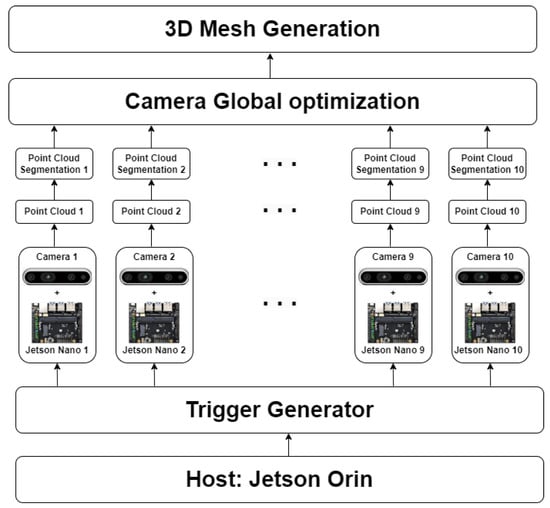

Therefore, we propose an integrated system to overcome these difficulties. The system is divided into two main parts. In the hardware part, a system of ten cameras (Figure 1) is calibrated and synchronously captures stereo images. In the application part, cow surface segmentation and camera pose optimization are performed. Each camera's initial pose is established from the calibration result, and each camera is then optimized to overlap well with the other cameras while remaining close to its initial pose. The optimization result is compared with that of a global optimization method, BCMO [13]. The contributions of this paper can be summarized as follows:

Figure 1.

The Korean cattle 3D reconstruction framework.

- A two-step optimization method. In step 1, a global mesh is generated from all cameras, and in step 2, each camera is aligned to the global mesh. This optimization works well for cameras with small overlap and considerable noise.

- Automatic 3D segmentation of cattle from the 3D data of each camera.

The rest of this paper is organized as follows. Section 2 reviews related work on global registration. Section 3 describes the materials and data acquisition, detailing the scanning system and the data acquisition process. Section 4 describes the segmentation of each camera's data and the optimization of the global camera poses. Section 5 presents the experimental results, including evaluations of time synchronization and of the optimization process, and compares our global optimization with the BCMO algorithm. Finally, Section 6 presents the conclusions and future work.

2. Related Work

Recently, reconstructing animals such as cattle has emerged as an appealing research topic. One approach uses a single 3D scanning camera to capture a sequence of frames and then applies global alignment [2,14,15]. However, this approach requires the animal to stand still. Non-rigid reconstruction is still a developing topic, and current methods can handle only small movements of non-rigid objects. Applying them to cattle is therefore impractical because animals move quickly.

Another approach uses multi-view cameras: a system of cameras synchronously scans data, and all frames are then aligned to create the point cloud [16,17,18,19]. However, in [16,17], the fences must be small; otherwise, the registration algorithm used (Super4PCS) cannot handle point clouds with small overlap. In [20], laser scanners were used to generate high-quality point clouds, but only non-moving cows were reconstructed. Camera synchronization is essential in this approach. The iterative closest point (ICP) algorithm [21] is widely used to align two roughly aligned 3D point clouds. It searches for corresponding points between the two point clouds and then optimizes an objective function that minimizes the distance between corresponding points. However, if the overlap between a pair of cameras is small, or if fences occlude parts of the surface so that data are missing, ICP alignment can fail. The global alignment method in [18,19] registers the clouds from all cameras using sets of coplanar points.
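The ICP loop just described can be sketched in Python as below. This is a minimal point-to-point variant, assuming brute-force nearest neighbours and a closed-form SVD (Kabsch) update, rather than the specific variants in [5] or [21]; the function names and the `max_dist` rejection threshold are illustrative choices, not taken from the cited works.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares rigid transform (Kabsch/SVD) mapping paired src points onto dst points."""
    src_mean, dst_mean = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_mean).T @ (dst - dst_mean)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so the result is a rotation, not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = dst_mean - R @ src_mean
    return T


def icp_point_to_point(src, dst, iterations=30, max_dist=0.05):
    """Minimal point-to-point ICP; returns the 4x4 transform aligning src to dst."""
    T = np.eye(4)
    current = src.copy()
    for _ in range(iterations):
        # Brute-force nearest neighbours (a Kd-tree would be used for real clouds).
        d2 = ((current[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        nn = d2.argmin(axis=1)
        keep = np.sqrt(d2[np.arange(len(current)), nn]) < max_dist
        if keep.sum() < 3:
            break  # too little overlap (e.g. occlusion by fences): ICP cannot proceed
        step = best_rigid_transform(current[keep], dst[nn[keep]])
        current = current @ step[:3, :3].T + step[:3, 3]
        T = step @ T
    return T
```

When fewer than three pairs survive the distance test, for example because fences leave little overlap, the loop simply stops, which mirrors the failure case described above.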

A pre-trained model, CreStereo [22], has been applied to enhance depth quality [23], and our approach similarly leverages this model to improve the reconstruction result. The camera positions are calibrated using spheres [24], and the calibration information is used in the reconstruction process. Because all cameras are calibrated before reconstruction, an initial pose is available for every camera. Unlike global optimization by the 4PCS or ICP algorithm, our method creates a global mesh from all input cameras and then repeats a two-step process: step 1 aligns all cameras to the global mesh, and step 2 recomputes the global mesh from all cameras. In step 1, the objective function is a modification of the ICP objective that keeps each camera close to its initial position, which prevents cameras from drifting away from their initial poses.

3. Material and Data Acquisition

3.1. The 3D Reconstruction Framework

In this section, we present a 3D reconstruction scheme that generates a 3D point cloud of cattle and addresses the registration issues. As indicated in Figure 1, the 3D reconstruction framework contains three main parts:

- Camera synchronization and data acquisition.

- Segmenting 3D data.

- Global 3D camera pose optimization and generating mesh.

With this system, we can generate a full cow mesh. The host, a Jetson Orin, is the center of the system; it connects to a trigger generator to synchronously capture data from the ten cameras. Data from the ten cameras are transferred to the Orin host over a Wi-Fi connection. Point cloud generation, segmentation, global camera optimization, and mesh generation all run on the Orin host.

3.2. Camera System

Our camera system includes:

- Trigger generator: sends a synchronization signal to the ten camera devices.

- Camera devices (Intel RealSense D435i): each camera captures stereo images and sends them to a Jetson Nano.

- Single-board computers (Jetson Nano): receive stereo images from the cameras and transfer them to the Jetson Orin.

- Host computer (Jetson Orin): the central device of the capture system. It sends a signal to activate the trigger generator, retrieves the stereo images from the Jetson Nano devices, and reconstructs the final 3D mesh.

Figure 2a is a rendering of the camera system model and Figure 2b is an image of the camera system at the farm. The camera system can be easily installed, dismantled, and moved, but it is susceptible to being shaken by the environment or the wind.

Figure 2.

(a) Design of multi-view camera system. (b) Real multi-view camera system.

The device specifications are detailed in Table 1.

Table 1.

The specifications of the hardware device.

3.3. Camera Synchronization System

A multi-camera synchronization system was developed to obtain time-aligned frames from the ten cameras. A TG-16 trigger device controls all cameras: it synchronously sends a capture signal to the ten camera devices to capture cattle data. In addition, the global timestamps of the Orin host and the Jetson Nano devices are synchronized using the Network Time Protocol (NTP). Consequently, simultaneously captured frames can be retrieved by gathering the frame from each camera that shares the same global timestamp.
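The timestamp-based grouping described above can be sketched as follows. The record layout `(camera_id, timestamp_ms, frame_name)`, the function name, and the optional `tolerance_ms` parameter are hypothetical; with the default of 0, only frames with identical global timestamps are grouped, as in the text, and incomplete sets are discarded.

```python
from typing import Dict, List, Tuple

# (camera_id, global_timestamp_ms, frame_name): a hypothetical record layout
Frame = Tuple[int, int, str]


def group_synchronized_frames(frames: List[Frame], num_cameras: int,
                              tolerance_ms: int = 0) -> List[Dict[int, str]]:
    """Group frames by global timestamp and keep only sets that contain every camera.

    tolerance_ms = 0 requires identical timestamps; a small positive value
    (a hypothetical knob) would absorb residual clock jitter.
    """
    groups: List[Dict[int, str]] = []
    current: Dict[int, str] = {}
    start = None
    for cam, ts, name in sorted(frames, key=lambda f: f[1]):
        if start is None or ts - start > tolerance_ms:
            if len(current) == num_cameras:
                groups.append(current)
            current, start = {}, ts
        current.setdefault(cam, name)  # ignore duplicate frames from one camera
    if len(current) == num_cameras:
        groups.append(current)
    return groups
```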

3.4. Camera Calibration System

Calibration consists of finding each camera's intrinsic parameters and the global pose of each camera. The cameras were calibrated in two stages:

- Calibration of each camera to determine its intrinsic parameters.

- Calibration of the global extrinsic matrices of all ten cameras.

A system of spheres was designed for calibration. After data are captured, the centers of all spheres visible to each camera are detected. Because the distances between the spheres are all different, the correspondence between sphere centers in the point clouds of different cameras can be determined. We calibrated all pairs of cameras to obtain calibration information for every camera. Calibration was performed twice, first in the laboratory and then after installation on the farm. The camera matrices from the calibration process are used as the initial camera poses in the camera pose optimization process.
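A minimal sketch of the two sphere-related steps follows, assuming each sphere's surface points have already been segmented from a camera's cloud and that every sphere is visible to both cameras of a pair. An algebraic least-squares fit gives each sphere center, and because the inter-sphere distances are distinct, each center gets a unique signature (its sorted distances to the other centers) that can be matched across cameras. The function names are hypothetical and this is not necessarily the authors' procedure; the matched centers would then go to a standard rigid least-squares (Kabsch/Umeyama) fit to obtain each pairwise extrinsic matrix.

```python
import numpy as np


def fit_sphere(points):
    """Algebraic least-squares sphere fit.

    Solves x^2 + y^2 + z^2 = 2*cx*x + 2*cy*y + 2*cz*z + d, with d = r^2 - |c|^2.
    Returns (center, radius).
    """
    A = np.hstack([2.0 * points, np.ones((len(points), 1))])
    b = (points ** 2).sum(axis=1)
    (cx, cy, cz, d), *_ = np.linalg.lstsq(A, b, rcond=None)
    center = np.array([cx, cy, cz])
    radius = np.sqrt(d + center @ center)
    return center, radius


def match_centers(centers_a, centers_b):
    """Match sphere centers across two cameras by sorted pairwise-distance signatures.

    Returns idx such that centers_a[i] corresponds to centers_b[idx[i]].
    Relies on the rig's inter-sphere distances all being different.
    """
    def signatures(c):
        dist = np.linalg.norm(c[:, None, :] - c[None, :, :], axis=2)
        return np.sort(dist, axis=1)  # rigid motions preserve these distances

    sa, sb = signatures(centers_a), signatures(centers_b)
    cost = np.linalg.norm(sa[:, None, :] - sb[None, :, :], axis=2)
    return cost.argmin(axis=1)
```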

4. Korean Cattle 3D Reconstruction

4.1. Point Cloud Generation and Removing Fence for a Single Camera

Point Cloud Generation and Segmentation

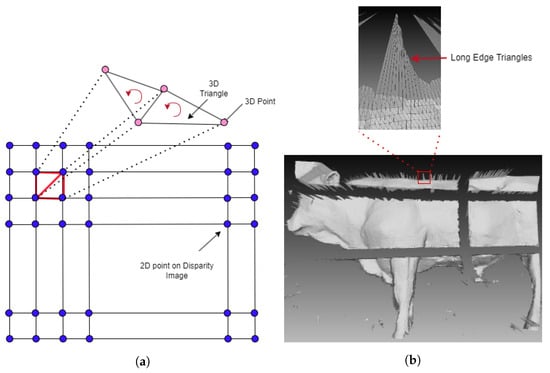

In this work, we used the pre-trained model of [22] (CreStereo) to create a disparity image from each pair of stereo images, at a resolution of 1280 × 720 pixels. A point cloud was generated from the disparity image and the camera intrinsic parameters, as in Figure 3a. We treated the disparity image as a grid: for every three neighboring, non-collinear pixels, we back-projected the pixels to 3D points and created a counter-clockwise triangle. The normal of each point is the average normal of all triangles that contain that point. The points and normals were used to generate the 3D mesh.
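The point generation and triangulation steps can be sketched as follows, assuming a rectified stereo pair so that depth is `z = fx * baseline / disparity` (disparity in pixels) and a pinhole back-projection with intrinsics `fx, fy, cx, cy`. The function names are hypothetical. Each 2 × 2 pixel block yields two triangles wound counter-clockwise in image space, which with this back-projection makes their normals face the camera; each vertex normal is the normalized average of the unit normals of its incident triangles.

```python
import numpy as np


def disparity_to_points(disparity, fx, fy, cx, cy, baseline):
    """Back-project a disparity map (pixels) to an H x W x 3 grid of points; invalid pixels -> NaN."""
    h, w = disparity.shape
    v, u = np.mgrid[0:h, 0:w].astype(float)
    with np.errstate(divide="ignore"):
        z = np.where(disparity > 0, fx * baseline / disparity, np.nan)
    return np.stack([(u - cx) * z / fx, (v - cy) * z / fy, z], axis=-1)


def grid_triangles(points):
    """Two image-space counter-clockwise triangles per 2x2 block with valid, non-collinear corners."""
    h, w, _ = points.shape
    idx = np.arange(h * w).reshape(h, w)
    i00, i10 = idx[:-1, :-1].ravel(), idx[:-1, 1:].ravel()  # pixels (u, v), (u+1, v)
    i01, i11 = idx[1:, :-1].ravel(), idx[1:, 1:].ravel()    # pixels (u, v+1), (u+1, v+1)
    tris = np.concatenate([np.stack([i00, i01, i10], axis=1),
                           np.stack([i10, i01, i11], axis=1)])
    p = points.reshape(-1, 3)[tris]                          # (n, 3 corners, xyz)
    valid = ~np.isnan(p).any(axis=(1, 2))
    area2 = np.linalg.norm(np.cross(p[:, 1] - p[:, 0], p[:, 2] - p[:, 0]), axis=1)
    valid &= np.nan_to_num(area2) > 1e-12                    # drop collinear corners
    return tris[valid]


def vertex_normals(points, tris):
    """Per-vertex normal: normalized average of the unit normals of incident triangles (NaN if none)."""
    flat = points.reshape(-1, 3)
    p = flat[tris]
    fn = np.cross(p[:, 1] - p[:, 0], p[:, 2] - p[:, 0])
    fn /= np.linalg.norm(fn, axis=1, keepdims=True)
    acc = np.zeros_like(flat)
    for k in range(3):
        np.add.at(acc, tris[:, k], fn)  # accumulate face normals onto each corner vertex
    norms = np.linalg.norm(acc, axis=1, keepdims=True)
    with np.errstate(invalid="ignore", divide="ignore"):
        return np.where(norms > 0, acc / norms, np.nan)
```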

Figure 3.

(a) The 3D mesh triangulation. (b) Mesh with long-edge triangles.

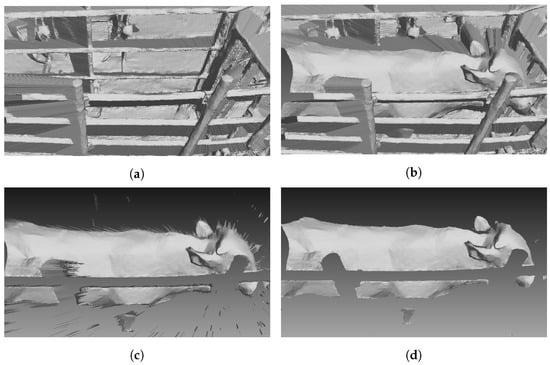

Figure 4 shows the mesh segmentation process. First, for every camera, we created a 3D mesh of the environment without cattle (Figure 4a). A mesh of the environment with cattle is shown in Figure 4b; both meshes were generated by the triangulation in Figure 3a. All points of the environment were removed from the mesh in Figure 4b to obtain a rough mesh of the cattle (Figure 4c). To reduce computation time, a Kd-tree of the environment point cloud is built once and used as the reference for removing environment points from all cattle frames.

Points near areas where the depth changes sharply can create noisy triangles with long edges, as in Figure 3b. A triangle whose normal is parallel to the camera viewing direction has short edges, whereas a triangle whose normal forms a large angle with the viewing direction has long edges. The average edge length is computed over all edges of the mesh, and an edge is considered a long edge, and treated as noise, if it is more than twice this average.

Next, the boundary loops of all mesh segments are detected. An edge is a half-edge if it belongs to only one triangle, and connecting all half-edges into loops yields the segment boundaries. We used the Visualization Toolkit (VTK) library to detect half-edges. To remove the remaining noise, the mesh boundaries are detected and deleted; in practice, this is repeated several times to remove all noise. This process yields the segmented mesh shown in Figure 4d.
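The three cleaning steps can be sketched with NumPy on an indexed triangle mesh (a vertex array plus a triangle index array). Assumptions: environment removal uses a brute-force nearest-distance test with a hypothetical threshold `thresh` (the paper instead builds a Kd-tree over the environment cloud once; `scipy.spatial.cKDTree` could play that role); the long-edge factor of 2 follows the text; and boundary edges are found by counting how many triangles share each edge, a NumPy stand-in for the VTK half-edge detection used in the paper. Function names and the number of peeling `rounds` are illustrative.

```python
import numpy as np


def remove_environment(vertices, tris, env_points, thresh):
    """Drop triangles having a vertex within `thresh` of the empty-scene (environment) cloud."""
    d2 = ((vertices[:, None, :] - env_points[None, :, :]) ** 2).sum(axis=2)
    is_env = d2.min(axis=1) < thresh ** 2
    return tris[~is_env[tris].any(axis=1)]


def remove_long_edges(vertices, tris, factor=2.0):
    """Drop triangles with an edge longer than `factor` times the mean edge length."""
    p = vertices[tris]
    edges = np.stack([np.linalg.norm(p[:, (k + 1) % 3] - p[:, k], axis=1)
                      for k in range(3)], axis=1)
    return tris[(edges <= factor * edges.mean()).all(axis=1)]


def boundary_vertices(tris):
    """Vertices on edges that belong to exactly one triangle (the mesh boundary)."""
    e = np.concatenate([tris[:, [0, 1]], tris[:, [1, 2]], tris[:, [2, 0]]])
    e = np.sort(e, axis=1)  # make (a, b) and (b, a) the same undirected edge
    uniq, counts = np.unique(e, axis=0, return_counts=True)
    return np.unique(uniq[counts == 1])


def peel_boundary(tris, rounds=2):
    """Repeatedly delete triangles touching the boundary to strip residual noise."""
    for _ in range(rounds):
        if len(tris) == 0:
            break
        on_boundary = np.isin(tris, boundary_vertices(tris)).any(axis=1)
        tris = tris[~on_boundary]
    return tris
```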

Figure 4.

Point cloud generation and segmentation. (a) Environment mesh. (b) Cattle in environment mesh. (c) Environment removed mesh. (d) Cattle segmentation mesh.

4.2. Camera Pose Optimization

In this section, we present the approach in which camera poses are optimized.

4.2.1. Objective

The input is the set of 10 segmented meshes, each containing points and normals, together with the initial camera poses $C_i$. We combine the points and normals generated by the triangulation method from all 10 cameras into a global set of points and normals and use it as input to generate a 3D model $M$ by Poisson surface reconstruction (PSR) [25,26]. For each camera's point cloud, we then find the area that overlaps with the other cameras, based on a distance threshold.

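For a transformation with a small rotation angle, we can parameterize $T_i$ by a 6-vector $\xi_i = (\alpha_i, \beta_i, \gamma_i, a_i, b_i, c_i)$, Equation (1), that represents an incremental transformation relative to the current estimate $T_i^k$. Here, $(a_i, b_i, c_i)$ is the translation, and $(\alpha_i, \beta_i, \gamma_i)$ can be interpreted as the rotation part. $T_i$ is thus approximated by a linear function of $\xi_i$:

$$
T_i \approx \begin{pmatrix} 1 & -\gamma_i & \beta_i & a_i \\ \gamma_i & 1 & -\alpha_i & b_i \\ -\beta_i & \alpha_i & 1 & c_i \\ 0 & 0 & 0 & 1 \end{pmatrix} T_i^k \tag{1}
$$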
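To illustrate why the linearization of Equation (1) is acceptable for the small rotations expected here, the sketch below compares the first-order matrix with an exact rigid transform built from the same 6-vector. The exact version assumes the rotation convention `Rz(gamma) @ Ry(beta) @ Rx(alpha)`; any convention agrees with the linear form to first order. The function names are hypothetical.

```python
import numpy as np


def linearized_increment(xi):
    """First-order 4x4 rigid increment for xi = (alpha, beta, gamma, a, b, c)."""
    alpha, beta, gamma, a, b, c = xi
    return np.array([
        [1.0, -gamma, beta, a],
        [gamma, 1.0, -alpha, b],
        [-beta, alpha, 1.0, c],
        [0.0, 0.0, 0.0, 1.0],
    ])


def exact_increment(xi):
    """Exact rigid increment for the same xi, using R = Rz(gamma) @ Ry(beta) @ Rx(alpha)."""
    alpha, beta, gamma, a, b, c = xi
    ca, sa = np.cos(alpha), np.sin(alpha)
    cb, sb = np.cos(beta), np.sin(beta)
    cg, sg = np.cos(gamma), np.sin(gamma)
    rx = np.array([[1.0, 0.0, 0.0], [0.0, ca, -sa], [0.0, sa, ca]])
    ry = np.array([[cb, 0.0, sb], [0.0, 1.0, 0.0], [-sb, 0.0, cb]])
    rz = np.array([[cg, -sg, 0.0], [sg, cg, 0.0], [0.0, 0.0, 1.0]])
    T = np.eye(4)
    T[:3, :3] = rz @ ry @ rx
    T[:3, 3] = [a, b, c]
    return T
```

At the ±3° bound used in the BCMO comparison of Section 5.2 (about 0.05 rad), the per-entry approximation error is on the order of 1e-3, and it shrinks quadratically as the angles shrink.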

Our objective is to optimize the set of camera transformations $T$, with the mesh $M$ serving as an auxiliary variable, by minimizing the objective function in Equation (2).

In the objective function, Equation (2), the first term measures the error between the point cloud of each camera and the point clouds of the other cameras. The second term measures the distance between each camera's pose and its initial pose. During data acquisition, a camera can be both rotated and translated; the translation can be large, but the rotation angle is generally small, so the second term can be approximated as in Equation (5). Thus, the optimization minimizes the error among the point clouds of all cameras while keeping each camera close to its initial pose. The two constant factors in the objective are chosen at the beginning of the optimization process.

4.2.2. Optimization

Here, we present the optimization scheme for minimizing the objective (Algorithm 1). The optimization is repeated for a number of iterations, and each iteration is divided into two steps. In step 1, the global mesh is fixed, and all 10 camera poses are optimized against it. In step 2, all camera poses are fixed, and the global mesh is recomputed from the 10 camera point clouds by PSR.

When the global mesh $M$ is fixed, the optimization reduces to aligning each camera independently to the global mesh: optimizing all camera poses in Equation (2) becomes optimizing each camera pose separately in Equation (7).

Each objective function contains only the six variables of $\xi_i$. In each iteration, the Gauss–Newton method is applied to these six variables.
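A minimal Gauss-Newton sketch of the per-camera problem with $M$ fixed follows. Because it does not reproduce Equations (2) to (7), it assumes a specific form: point-to-plane residuals against samples of $M$ with normals, found by brute-force nearest neighbours within `max_dist`, plus a hypothetical prior that penalizes the small-angle 6-vector of the deviation from the initial pose. `w_fit` and `w_prior` stand in for the two constant factors; their values and all names are illustrative. Each iteration solves a 6 × 6 linear system for `xi` and applies the increment of Equation (1), re-orthonormalized via SVD.

```python
import numpy as np


def increment(xi):
    """Rigid increment: linearized rotation of Eq. (1), projected to the nearest rotation by SVD."""
    alpha, beta, gamma, a, b, c = xi
    A = np.array([[1.0, -gamma, beta], [gamma, 1.0, -alpha], [-beta, alpha, 1.0]])
    U, _, Vt = np.linalg.svd(A)
    T = np.eye(4)
    T[:3, :3] = U @ Vt
    T[:3, 3] = [a, b, c]
    return T


def small_angle_vector(T):
    """6-vector (rotation, translation) of a near-identity transform, using R ~ I + [w]x."""
    R = T[:3, :3]
    w = 0.5 * np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    return np.concatenate([w, T[:3, 3]])


def align_camera_to_model(points, model_pts, model_normals, T_init,
                          w_fit=1.0, w_prior=10.0, iterations=20, max_dist=0.05):
    """Gauss-Newton on w_fit * sum (n.(T p - q))^2 + w_prior * |deviation from T_init|^2."""
    T = T_init.copy()
    for _ in range(iterations):
        p = points @ T[:3, :3].T + T[:3, 3]
        # Nearest samples of M (brute force); far points count as non-overlapping.
        d2 = ((p[:, None, :] - model_pts[None, :, :]) ** 2).sum(axis=2)
        nn = d2.argmin(axis=1)
        keep = np.sqrt(d2[np.arange(len(p)), nn]) < max_dist
        p, q, n = p[keep], model_pts[nn[keep]], model_normals[nn[keep]]
        r = ((p - q) * n).sum(axis=1)          # point-to-plane residuals
        J = np.hstack([np.cross(p, n), n])     # dr/dxi for xi = (alpha, beta, gamma, a, b, c)
        delta = small_angle_vector(T @ np.linalg.inv(T_init))  # current deviation from T_init
        H = w_fit * J.T @ J + w_prior * np.eye(6)
        g = w_fit * J.T @ r + w_prior * delta
        xi = np.linalg.solve(H, -g)
        T = increment(xi) @ T
        if np.linalg.norm(xi) < 1e-12:
            break
    return T
```

A large `w_prior` pins the camera to its calibrated pose, while a small one lets the data term dominate; this is the trade-off the second term of the objective controls.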

Algorithm 1.

Algorithm of alignment for all cameras.
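The interleaved two-step loop can be sketched as a driver that receives the two steps as callables. `reconstruct` would wrap a Poisson surface reconstruction (for example at depth 8, as in Section 5.2) and `align` a per-camera optimizer; both are hypothetical parameters, and the sketch makes no claim about the authors' implementation. The final output mesh would then be rebuilt from the returned poses at a higher depth.

```python
import numpy as np


def interleaved_optimization(clouds, poses_init, reconstruct, align, iterations=25):
    """Alternate global-model reconstruction (poses fixed) and per-camera alignment (model fixed).

    clouds:      list of (points, normals) arrays in each camera's own frame
    reconstruct: callable(points, normals) -> model          (hypothetical, e.g. PSR)
    align:       callable(points, normals, model, T, T_init) -> new 4x4 pose (hypothetical)
    """
    poses = [T.copy() for T in poses_init]
    for _ in range(iterations):
        # Build the global model from all cameras with the current poses held fixed.
        pts = np.vstack([p @ T[:3, :3].T + T[:3, 3] for (p, _n), T in zip(clouds, poses)])
        nrm = np.vstack([n @ T[:3, :3].T for (_p, n), T in zip(clouds, poses)])
        model = reconstruct(pts, nrm)
        # With the model fixed, each camera is aligned independently.
        poses = [align(p, n, model, T, T0)
                 for (p, n), T, T0 in zip(clouds, poses, poses_init)]
    return poses
```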

5. Experimental Results

In this section, we present the hardware specifications and the outcomes of cattle reconstruction. We also compare the reconstruction results with and without global optimization and document the reduction in error during the global optimization process.

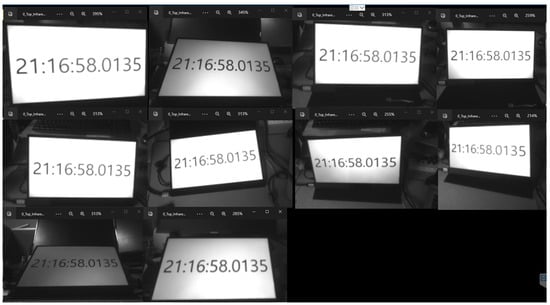

5.1. Evaluation of Synchronization Process

To assess the synchronization among the 10 cameras in the 3D reconstruction system, we generated a capture signal for all ten cameras. The synchronization was confirmed by the frames obtained from the ten cameras, which displayed the same timestamp on the screen (Figure 5).

Figure 5.

Synchronization of camera system.

5.2. Evaluation of Optimization Process

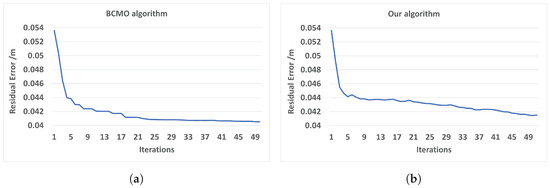

To evaluate our optimization method, we compared it with an up-to-date global optimization method, the BCMO algorithm. Because BCMO is slow, only a small data set of three cameras was optimized for this comparison.

When optimizing three camera poses, each camera has six parameters and one camera is fixed, so a total of 12 parameters were optimized. For each camera's six variables in the BCMO algorithm, the translation was bounded to the range of −0.1 m to 0.1 m and the angle to the range of −3° to 3°. The BCMO population size was set to 1000 and the number of iterations to 50. Figure 6 shows that, with all variables optimized globally, the residual error of BCMO was slightly lower than that of our method, but its computation time was much longer (Table 2). Most of the optimization time is spent finding correspondence points between clouds.

Figure 6.

Residual error for 3-camera optimization. (a) BCMO algorithm. (b) Our optimization.

Table 2.

Computation time for 3-camera optimization by BCMO algorithm and our algorithm.

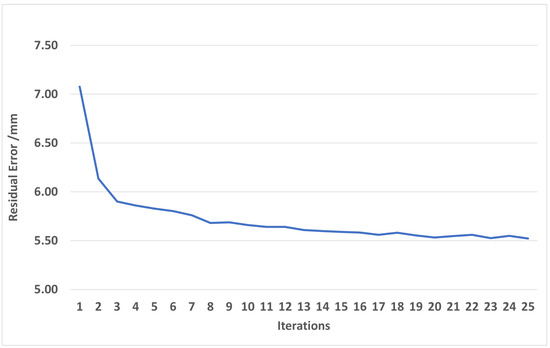

In the next experiment, the residual error was computed after aligning the 10 cameras. Model mesh $M$ was generated from the 10-camera dataset; it has about 72,000 vertices and 144,000 triangles, and the 10 cameras contain about 1.9 million overlapping points in total. When generating $M$ by PSR from points and normals, we set the depth option to 8 to create a smooth mesh, and we then used $M$ to align each camera over its overlapping area. The final output mesh was created by PSR with a depth of 9 to capture more surface detail. Each iteration consists of two parts: mesh reconstruction by PSR takes about 5 s and camera pose optimization about 6 s, for a total of about 11 s per iteration. The mean point error between the 10 cameras and model $M$ decreases from 7.5 mm to 5.5 mm after 25 iterations. The results in Figure 6 and Figure 7 differ substantially for several reasons. First, the residual in Figure 6 is computed from point-to-point distances, whereas that in Figure 7 is computed from point-to-plane distances; the point-to-plane distance between two clouds is smaller than the point-to-point distance, and the difference depends on the point resolution. Second, the residual in Figure 7 is measured between the points and mesh $M$, which approximates the average of all frames, so it is roughly half of the distance between frames. Finally, when optimizing the objective function, all points closer than 0.14 to other mesh points are also counted as valid points.
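The first reason can be checked with a small example. For a unit normal n, |n · (p − q)| ≤ ‖p − q‖ by the Cauchy–Schwarz inequality, so a point-to-plane residual never exceeds the point-to-point residual for the same correspondence, and the gap grows with the sample spacing. The sketch below uses a hypothetical function name and synthetic data in metres: a plane sampled every 5 mm, queried at points 1 mm above it and midway between samples.

```python
import numpy as np


def nn_residuals(query, ref_pts, ref_normals):
    """Point-to-point and point-to-plane distances from each query to its nearest reference sample."""
    d2 = ((query[:, None, :] - ref_pts[None, :, :]) ** 2).sum(axis=2)
    nn = d2.argmin(axis=1)
    diff = query - ref_pts[nn]
    p2p = np.linalg.norm(diff, axis=1)                  # distance to the sample itself
    p2pl = np.abs((diff * ref_normals[nn]).sum(axis=1))  # distance to the sample's tangent plane
    return p2p, p2pl


# Plane z = 0 sampled every 5 mm; queries 1 mm above the plane at the cell centres.
g = np.arange(11) * 0.005
X, Y = np.meshgrid(g, g)
ref = np.stack([X.ravel(), Y.ravel(), np.zeros(X.size)], axis=1)
normals = np.tile([0.0, 0.0, 1.0], (len(ref), 1))
c = (np.arange(10) + 0.5) * 0.005
QX, QY = np.meshgrid(c, c)
query = np.stack([QX.ravel(), QY.ravel(), np.full(QX.size, 0.001)], axis=1)
p2p, p2pl = nn_residuals(query, ref, normals)
```

Here the point-to-plane residual is 1 mm for every query, while the point-to-point residual is about 3.7 mm, dominated by the 5 mm sampling.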

Figure 7.

Residual error between input data and output model M.

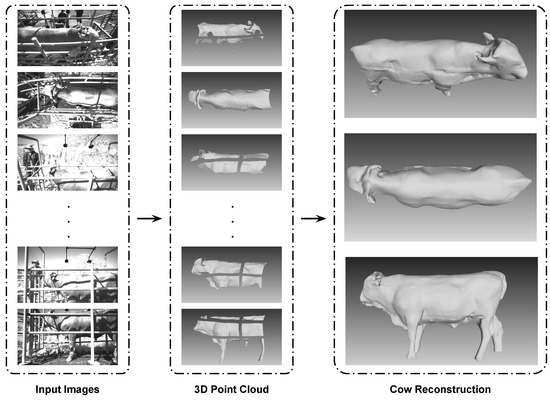

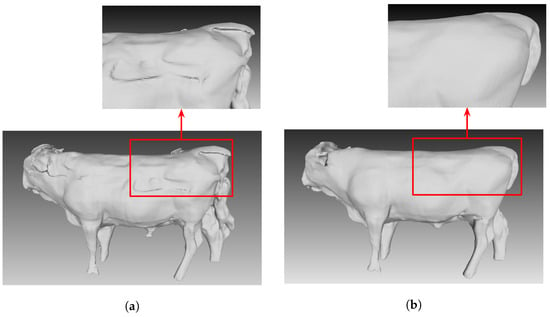

5.3. Cow Reconstruction Result

Figure 8 shows that all ten cameras can be aligned to create the final cow reconstruction model. In addition, cattle surfaces hidden by a fence in one camera view can be filled in from another camera view or by the PSR algorithm. Considering both the residual value and the computation time, the interleaved update is a practical method. Figure 9 shows the mesh surface before global optimization (Figure 9a) and after global optimization (Figure 9b).

Figure 8.

Cow reconstruction process.

Figure 9.

The reconstruction output. (a) Before global optimization. (b) After global optimization.

6. Conclusions

In this paper, an automatic process from data capture to 3D mesh generation is presented. Experimental results with actual Korean cattle data demonstrate that the output mesh has almost the same quality as that of an up-to-date global optimization algorithm, while the reconstruction is much faster. The computation time of our algorithm increases linearly with the number of cameras. From the high-quality output mesh, features of Korean cattle can easily be analyzed, and the mesh can be used as an input database for AI research. This research does not address lighting, and the reconstruction fails if the input stereo images are too bright; the lighting issue needs further analysis in the future. The computation time of BCMO depends on its population and iteration parameters, and using a GPU is a potential way to reduce it. To improve the precision of the output mesh, camera distortion should be considered. In this camera system, the 10 cameras have different distortion models, and pre-calibrating the distortion model of each camera could improve the results in the future.

Author Contributions

Conceptualization, C.G.D. and V.T.P.; methodology, V.T.P.; software, V.T.P., M.K.B., H.-P.N. and S.H.; validation, S.S.L., M.A. and S.M.L.; formal analysis, M.A. and J.G.L.; investigation, C.G.D.; resources, M.N.P.; data curation, H.-S.S.; writing—original draft preparation, V.T.P.; writing—review and editing, H.-P.N., S.H., V.T.P., C.G.D. and J.G.L.; visualization, M.K.B., S.H. and H.-P.N.; supervision, C.G.D.; project administration, C.G.D.; funding acquisition, C.G.D. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Korean Institute of Planning and Evaluation for Technology in Food, Agriculture, and Forestry (IPET) and Korea Smart Farm R&D Foundation (KosFarm) through the Smart Farm Innovation Technology Development Program, funded by the Ministry of Agriculture, Food, and Rural Affairs (MAFRA), and the Ministry of Science and ICT (MSIT), Rural Development Administration (421050-03).

Institutional Review Board Statement

The animal study protocol was approved by the IACUC at the National Institute of Animal Science (approval number: NIAS 2022-0545).

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors, Seungkyu Han, Hoang-Phong Nguyen, Min Ki Baek, Van Thuan Pham were employed by the company ZOOTOS Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| 3D | Three-dimensional |
| AI | Artificial intelligence |
| BCMO | Balancing Composite Motion Optimization |
| FPFH | Fast Point Feature Histogram |
| ICP | Iterative Closest Point |
| Kd-tree | k-dimensional tree |
| PSR | Poisson Surface Reconstruction |
| M | 3D mesh generated by the PSR algorithm from the points and normals of the 10 cameras |
| Super4PCS | Super 4-Points Congruent Sets |
| T | Transformation matrix |

References

1. Özyeşil, O.; Voroninski, V.; Basri, R.; Singer, A. A survey of structure from motion. Acta Numer. 2017, 26, 305–364.

2. Newcombe, R.A.; Izadi, S.; Hilliges, O.; Molyneaux, D.; Kim, D.; Davison, A.J.; Kohli, P.; Shotton, J.; Hodges, S.; Fitzgibbon, A. KinectFusion: Real-time dense surface mapping and tracking. In Proceedings of the 2011 10th IEEE International Symposium on Mixed and Augmented Reality, Basel, Switzerland, 26–29 October 2011; pp. 127–136.

3. Zhou, Q.Y.; Koltun, V. Simultaneous localization and calibration: Self-calibration of consumer depth cameras. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 454–460.

4. Besl, P.J.; McKay, N.D. Method for registration of 3-D shapes. In Proceedings of Sensor Fusion IV: Control Paradigms and Data Structures, SPIE, Boston, MA, USA, 1 April 1992; Volume 1611, pp. 586–606.

5. Segal, A.; Haehnel, D.; Thrun, S. Generalized-ICP. In Proceedings of Robotics: Science and Systems, Seattle, WA, USA, 28 June–1 July 2009; Volume 2, p. 435.

6. Horn, B.K. Closed-form solution of absolute orientation using unit quaternions. J. Opt. Soc. Am. A 1987, 4, 629–642.

7. Zhang, Z. Iterative point matching for registration of free-form curves and surfaces. Int. J. Comput. Vis. 1994, 13, 119–152.

8. Aiger, D.; Mitra, N.J.; Cohen-Or, D. 4-points congruent sets for robust pairwise surface registration. In ACM SIGGRAPH 2008 Papers; ACM: New York, NY, USA, 2008; pp. 1–10.

9. Rusu, R.B.; Blodow, N.; Beetz, M. Fast point feature histograms (FPFH) for 3D registration. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 3212–3217.

10. Zhang, J.; Singh, S. LOAM: Lidar odometry and mapping in real-time. In Proceedings of Robotics: Science and Systems, Berkeley, CA, USA, 12–16 July 2014; Volume 2, pp. 1–9.

11. Wang, S.; Zuo, X.; Du, C.; Wang, R.; Zheng, J.; Yang, R. Dynamic non-rigid objects reconstruction with a single RGB-D sensor. Sensors 2018, 18, 886.

12. Newcombe, R.A.; Fox, D.; Seitz, S.M. DynamicFusion: Reconstruction and tracking of non-rigid scenes in real-time. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 343–352.

13. Le-Duc, T.; Nguyen, Q.H.; Nguyen-Xuan, H. Balancing composite motion optimization. Inf. Sci. 2020, 520, 250–270.

14. Choi, S.; Zhou, Q.Y.; Koltun, V. Robust reconstruction of indoor scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5556–5565.

15. Park, J.; Zhou, Q.Y.; Koltun, V. Colored point cloud registration revisited. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 143–152.

16. Ruchay, A.; Kober, V.; Dorofeev, K.; Kolpakov, V.; Gladkov, A.; Guo, H. Live Weight Prediction of Cattle Based on Deep Regression of RGB-D Images. Agriculture 2022, 12, 1794.

17. Li, J.; Ma, W.; Li, Q.; Zhao, C.; Tulpan, D.; Yang, S.; Ding, L.; Gao, R.; Yu, L.; Wang, Z. Multi-view real-time acquisition and 3D reconstruction of point clouds for beef cattle. Comput. Electron. Agric. 2022, 197, 106987.

18. Li, S.; Lu, R.; Liu, J.; Guo, L. Super edge 4-points congruent sets-based point cloud global registration. Remote Sens. 2021, 13, 3210.

19. Bueno, M.; Bosché, F.; González-Jorge, H.; Martínez-Sánchez, J.; Arias, P. 4-Plane congruent sets for automatic registration of as-is 3D point clouds with 3D BIM models. Autom. Constr. 2018, 89, 120–134.

20. Le Cozler, Y.; Allain, C.; Caillot, A.; Delouard, J.; Delattre, L.; Luginbuhl, T.; Faverdin, P. High-precision scanning system for complete 3D cow body shape imaging and analysis of morphological traits. Comput. Electron. Agric. 2019, 157, 447–453.

21. Rusinkiewicz, S.; Levoy, M. Efficient variants of the ICP algorithm. In Proceedings of the Third International Conference on 3-D Digital Imaging and Modeling, Quebec City, QC, Canada, 28 May–1 June 2001; pp. 145–152.

22. Li, J.; Wang, P.; Xiong, P.; Cai, T.; Yan, Z.; Yang, L.; Liu, J.; Fan, H.; Liu, S. Practical stereo matching via cascaded recurrent network with adaptive correlation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16263–16272.

23. Dang, C.; Choi, T.; Lee, S.; Lee, S.; Alam, M.; Lee, S.; Han, S.; Hoang, D.T.; Lee, J.; Nguyen, D.T. Case Study: Improving the Quality of Dairy Cow Reconstruction with a Deep Learning-Based Framework. Sensors 2022, 22, 9325.

24. Staranowicz, A.N.; Brown, G.R.; Morbidi, F.; Mariottini, G.L. Practical and accurate calibration of RGB-D cameras using spheres. Comput. Vis. Image Underst. 2015, 137, 102–114.

25. Kazhdan, M.; Bolitho, M.; Hoppe, H. Poisson surface reconstruction. In Proceedings of the Fourth Eurographics Symposium on Geometry Processing, Sardinia, Italy, 26–28 June 2006; Volume 7.

26. Kazhdan, M.; Hoppe, H. Screened Poisson surface reconstruction. ACM Trans. Graph. (TOG) 2013, 32, 29.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).