Comprehensive Investigation of Unmanned Aerial Vehicles (UAVs): An In-Depth Analysis of Avionics Systems

Abstract

1. Introduction

- Water-related challenges (e.g., rain) limit UAV operations [45], as water can leak into the UAV and permanently damage sensitive electronic components.

- Humidity: high levels of air humidity induce condensation and water accumulation inside a UAV.

- High temperatures: the performance of the semiconductors inside a UAV degrades significantly at high temperatures.

2. Research Methodology

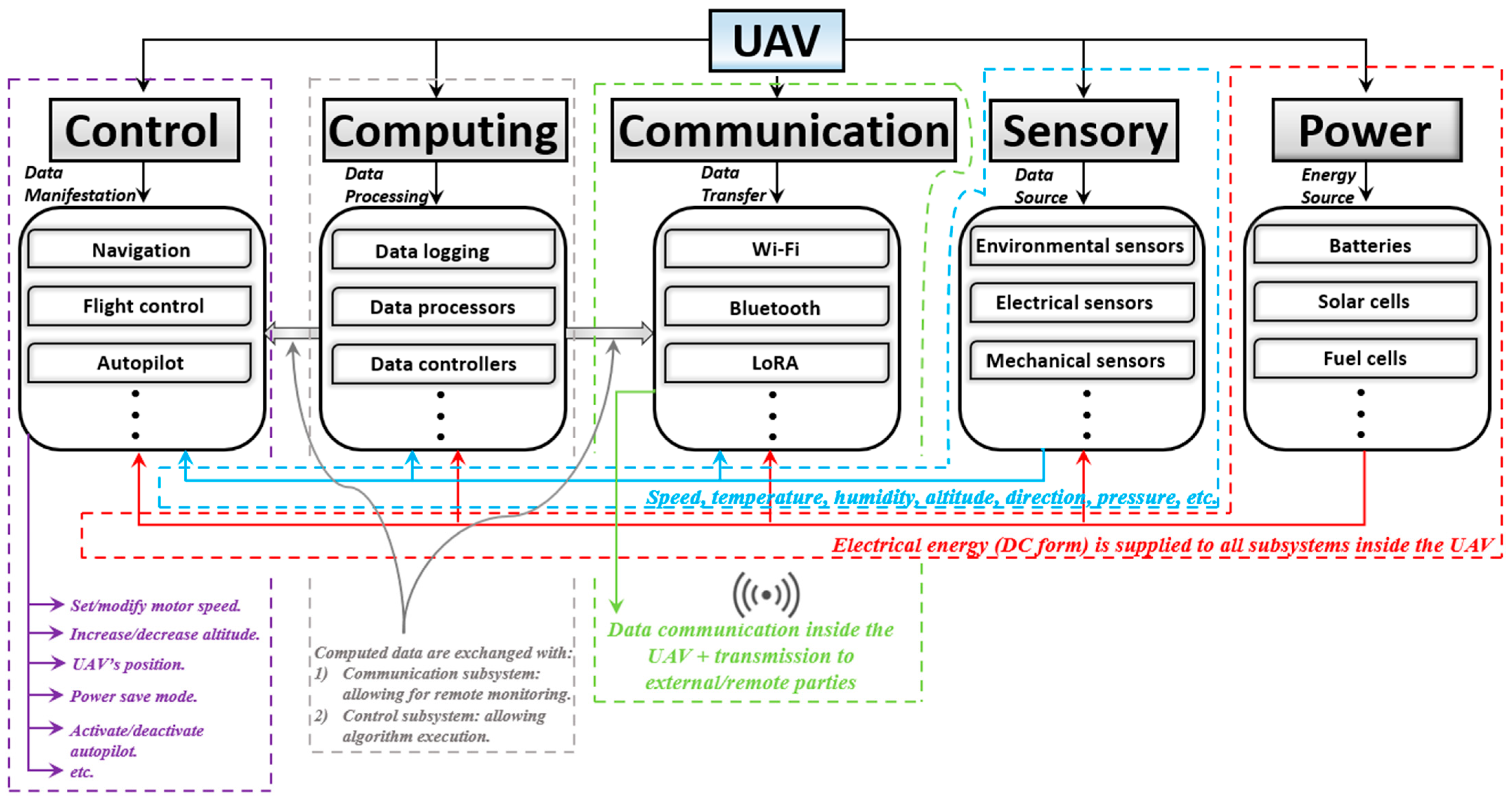

- Control: This set includes navigation systems, flight control, autopilot, collision avoidance, target tracking, fail-safe, motor speed, and other related systems that are dedicated to managing and directing a UAV’s flight.

- Computing: This set comprises the computational elements, such as data processors, onboard computers, data loggers, and all computing platforms responsible for executing the different algorithms.

- Communication: This set covers the information exchange between a UAV and external parties (e.g., for remote control), performed through different communication modules (e.g., Bluetooth, Wi-Fi, and Long Range (LoRa) modules).

- Sensory: This set includes the data captured by UAV-embedded sensors from both the internal (e.g., the UAV’s power consumption and temperature) and the external (e.g., altitude, pressure, and wind speed) environments.

- Power: This set includes the energy sources (e.g., batteries and solar cells), power distribution, and power management systems, together with the related circuitry that supplies the UAV with the power needed for proper overall operation.

- RQ1: What are the different subsystems within a UAV?

- RQ2: Is there any hierarchy between the different subsystems?

- RQ3: Is there any integration between the subsystems?

- RQ4: How can UAV reliability be enhanced by means of multiple sensory systems?

- RQ5: What are the programming languages for different computing systems?

- RQ6: What is the relationship between sensory and computing systems?

- RQ7: How is the interdependence between subsystems managed?

- RQ8: What are the standard UAV communication protocols?

- RQ9: How can fail-safe be ensured in emergencies?

- RQ10: How can limits be set for motor speed and UAV maximal altitude?

3. UAV Operational Overview

4. Avionics Assessment

4.1. Control

4.1.1. Navigation

- Strategy

- (a) Vision-based techniques

- (b) Artificial Intelligence (AI)-based techniques

- (b.1) Mathematical optimization

- PSO: the optimal path for the particles (i.e., the drones within a swarm) can be obtained with a competition-strategy-based PSO, in which the current global best path is compared with other global candidate paths [112].

- ACO: the premature convergence of a single-colony ACO algorithm can be overcome using multi-colony ACO, where multiple UAV groups search for the optimal routes to the destination [113].

- GA: the 3D position of a UAV is encoded into a chromosome, which in turn contains information about the UAV’s position and motion (e.g., acceleration, climbing-angle rate, and heading-angle rate). The current 3D coordinates are obtained by decoding the chromosome and are then evaluated by a fitness function. Finally, path selection and the exchange or loss of information between candidates are carried out through genetic operations [114].

- DE: in disaster scenarios, where navigation becomes harder, a constrained DE converges toward the optimal UAV route by favoring, among all probable traveling points, those with high fitness values and minimal constraint violations [115].

- GWO: to achieve fast convergence and efficient exploitation of the search space, the conventional GWO can be hybridized with other algorithms (e.g., a modified symbiotic organisms search), yielding better UAV path navigation [116].
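The PSO principle can be illustrated with a minimal sketch, assuming a hypothetical 2D scenario that is not taken from [112]: a UAV must fly from `START` to `GOAL` around one circular no-fly zone, and each particle encodes two free intermediate waypoints. This is a plain global-best PSO (inertia weight `w`, coefficients `c1` and `c2`); it does not implement the global-best-path competition strategy of [112], and all names and parameter values are illustrative choices.

```python
import math
import random

# Hypothetical 2D scenario (illustrative only, not from [112]): fly from START
# to GOAL around one circular no-fly zone using N_WP free intermediate
# waypoints; a particle is the flat vector [x1, y1, x2, y2].
START, GOAL = (0.0, 0.0), (10.0, 0.0)
OBST_C, OBST_R = (5.0, 0.0), 2.0
N_WP = 2
LO = [0.0, -5.0] * N_WP  # lower bound per coordinate
HI = [10.0, 5.0] * N_WP  # upper bound per coordinate


def seg_dist(p, a, b):
    """Shortest distance from point p to the segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    l2 = dx * dx + dy * dy
    t = 0.0 if l2 == 0 else ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / l2
    t = max(0.0, min(1.0, t))
    return math.dist(p, (a[0] + t * dx, a[1] + t * dy))


def path_cost(x):
    """Path length plus a heavy penalty for any segment entering the obstacle."""
    pts = [START] + [(x[i], x[i + 1]) for i in range(0, len(x), 2)] + [GOAL]
    segs = list(zip(pts, pts[1:]))
    length = sum(math.dist(a, b) for a, b in segs)
    intrusion = sum(max(0.0, OBST_R - seg_dist(OBST_C, a, b)) for a, b in segs)
    return length + 100.0 * intrusion


def pso(n_particles=30, iters=200, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Plain global-best PSO; returns (best waypoint vector, its cost)."""
    rng = random.Random(seed)
    dim = len(LO)
    pos = [[rng.uniform(LO[d], HI[d]) for d in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]  # personal best positions
    pbest_cost = [path_cost(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_cost[i])
    gbest, gbest_cost = pbest[g][:], pbest_cost[g]  # swarm-wide best path
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])  # cognitive pull
                             + c2 * r2 * (gbest[d] - pos[i][d]))    # social pull
                pos[i][d] = min(HI[d], max(LO[d], pos[i][d] + vel[i][d]))
            cost = path_cost(pos[i])
            if cost < pbest_cost[i]:
                pbest[i], pbest_cost[i] = pos[i][:], cost
                if cost < gbest_cost:
                    gbest, gbest_cost = pos[i][:], cost
    return gbest, gbest_cost


if __name__ == "__main__":
    best, cost = pso()
    print("waypoints:", [round(v, 2) for v in best], "cost:", round(cost, 3))
```

Each particle is pulled toward its own best path and toward the swarm-wide best path; the obstacle is handled here by a simple penalty term, which is one convenient choice rather than the only option.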
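The chromosome encoding and decoding described for GA can be sketched in a simplified 2D form. In this hypothetical setup (not the encoding used in [114]), each gene is a heading-angle change applied before one fixed-length leg, decoding integrates these changes into coordinates, and the fitness combines the miss distance to the goal with an obstacle penalty. Tournament selection, one-point crossover, and Gaussian mutation stand in for the genetic operations; names such as `decode`, `fitness`, and `MAX_TURN` are illustrative.

```python
import math
import random

# Hypothetical 2D simplification (not the encoding of [114]): the UAV flies
# N_STEPS legs of fixed length STEP; gene k is the heading change (rad) applied
# before leg k, so a chromosome encodes motion rather than raw coordinates.
START, GOAL = (0.0, 0.0), (10.0, 0.0)
OBST_C, OBST_R = (5.0, 0.0), 2.0
N_STEPS, STEP, MAX_TURN = 6, 2.0, math.pi / 3


def seg_dist(p, a, b):
    """Shortest distance from point p to the segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    l2 = dx * dx + dy * dy
    t = 0.0 if l2 == 0 else ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / l2
    t = max(0.0, min(1.0, t))
    return math.dist(p, (a[0] + t * dx, a[1] + t * dy))


def decode(chrom):
    """Integrate the heading changes into the sequence of 2D points flown."""
    x, y = START
    heading = 0.0  # initially pointing along +x, toward GOAL
    pts = [START]
    for turn in chrom:
        heading += turn
        x += STEP * math.cos(heading)
        y += STEP * math.sin(heading)
        pts.append((x, y))
    return pts


def fitness(chrom):
    """Lower is better: miss distance to GOAL plus an obstacle-intrusion penalty."""
    pts = decode(chrom)
    intrusion = sum(max(0.0, OBST_R - seg_dist(OBST_C, a, b))
                    for a, b in zip(pts, pts[1:]))
    return math.dist(pts[-1], GOAL) + 100.0 * intrusion


def clip(g):
    """Keep a gene within the allowed turn range."""
    return max(-MAX_TURN, min(MAX_TURN, g))


def ga(pop_size=50, generations=150, p_mut=0.2, sigma=0.15, seed=0):
    """Elitist GA: tournament selection, one-point crossover, Gaussian mutation."""
    rng = random.Random(seed)
    pop = [[rng.uniform(-MAX_TURN, MAX_TURN) for _ in range(N_STEPS)]
           for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness)
        nxt = [pop[0][:], pop[1][:]]  # elitism: keep the two best
        while len(nxt) < pop_size:
            p1 = min(rng.sample(pop, 3), key=fitness)  # tournament selection
            p2 = min(rng.sample(pop, 3), key=fitness)
            cut = rng.randrange(1, N_STEPS)  # crossover: exchange gene tails
            child = p1[:cut] + p2[cut:]
            child = [clip(g + rng.gauss(0.0, sigma)) if rng.random() < p_mut else g
                     for g in child]  # mutation: perturb some genes
            nxt.append(child)
        pop = nxt
    best = min(pop, key=fitness)
    return best, fitness(best)


if __name__ == "__main__":
    best, score = ga()
    print("turns:", [round(g, 2) for g in best], "fitness:", round(score, 3))
```

Because each chromosome is decoded before evaluation, the search operates on motion parameters while the fitness is computed on the resulting coordinates, which mirrors the encode/decode split described above in a reduced setting.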
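A constrained DE can be sketched as follows, assuming a hypothetical waypoint scenario rather than the disaster model of [115]. Path length is the objective and obstacle intrusion is the constraint violation; selection follows Deb's feasibility rules (a feasible route beats an infeasible one, a shorter feasible route beats a longer one, a smaller violation beats a larger one), which is one common constraint-handling scheme and not necessarily the one used in [115]. The function names and parameter values (`F`, `CR`) are illustrative.

```python
import math
import random

# Hypothetical 2D scenario (illustrative, not the model of [115]): reach GOAL
# from START through N_WP free waypoints without entering a circular no-fly
# zone. Length is the objective; intrusion is the constraint violation.
START, GOAL = (0.0, 0.0), (10.0, 0.0)
OBST_C, OBST_R = (5.0, 0.0), 2.0
N_WP = 2
LO = [0.0, -5.0] * N_WP
HI = [10.0, 5.0] * N_WP


def seg_dist(p, a, b):
    """Shortest distance from point p to the segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    l2 = dx * dx + dy * dy
    t = 0.0 if l2 == 0 else ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / l2
    t = max(0.0, min(1.0, t))
    return math.dist(p, (a[0] + t * dx, a[1] + t * dy))


def evaluate(x):
    """Return (path length, constraint violation); violation 0.0 means feasible."""
    pts = [START] + [(x[i], x[i + 1]) for i in range(0, len(x), 2)] + [GOAL]
    segs = list(zip(pts, pts[1:]))
    length = sum(math.dist(a, b) for a, b in segs)
    violation = sum(max(0.0, OBST_R - seg_dist(OBST_C, a, b)) for a, b in segs)
    return length, violation


def better(a, b):
    """Deb's feasibility rules: is evaluation a at least as good as b?"""
    (len_a, vio_a), (len_b, vio_b) = a, b
    if vio_a == 0.0 and vio_b == 0.0:
        return len_a <= len_b  # both feasible: shorter path wins
    if vio_a == 0.0 or vio_b == 0.0:
        return vio_a == 0.0  # feasible beats infeasible
    return vio_a <= vio_b  # both infeasible: smaller violation wins


def constrained_de(pop_size=30, iters=200, F=0.6, CR=0.9, seed=0):
    """DE/rand/1/bin with feasibility-rule selection; returns (x, (length, violation))."""
    rng = random.Random(seed)
    dim = len(LO)
    pop = [[rng.uniform(LO[d], HI[d]) for d in range(dim)] for _ in range(pop_size)]
    fit = [evaluate(x) for x in pop]
    for _ in range(iters):
        for i in range(pop_size):
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            j_rand = rng.randrange(dim)  # guarantees at least one mutated gene
            trial = []
            for d in range(dim):
                if d == j_rand or rng.random() < CR:
                    v = pop[a][d] + F * (pop[b][d] - pop[c][d])  # differential mutation
                else:
                    v = pop[i][d]
                trial.append(min(HI[d], max(LO[d], v)))
            f = evaluate(trial)
            if better(f, fit[i]):  # one-to-one survivor selection
                pop[i], fit[i] = trial, f
    # Lexicographic (violation, length) order agrees with the feasibility rules.
    best = min(range(pop_size), key=lambda i: (fit[i][1], fit[i][0]))
    return pop[best], fit[best]


if __name__ == "__main__":
    x, (length, violation) = constrained_de()
    print("waypoints:", [round(v, 2) for v in x], "length:", round(length, 3),
          "violation:", violation)
```

Keeping length and violation separate, instead of folding them into one penalized score, lets the selection prefer low-violation candidates first and only then compare route lengths.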
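For reference, the conventional GWO that such hybrids start from can be sketched as below; the hybridization with a modified symbiotic organisms search in [116] is not reproduced. In this hypothetical scenario, each wolf encodes two waypoints around a circular obstacle, every wolf moves to the average of the positions suggested by the alpha, beta, and delta leaders, and the coefficient `a` decays linearly from 2 to 0 to shift the search from exploration to exploitation. Names and parameter values are illustrative.

```python
import math
import random

# Hypothetical 2D scenario (illustrative only, not from [116]): fly from START
# to GOAL around one circular no-fly zone; a wolf is [x1, y1, x2, y2].
START, GOAL = (0.0, 0.0), (10.0, 0.0)
OBST_C, OBST_R = (5.0, 0.0), 2.0
N_WP = 2
LO = [0.0, -5.0] * N_WP
HI = [10.0, 5.0] * N_WP


def seg_dist(p, a, b):
    """Shortest distance from point p to the segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    l2 = dx * dx + dy * dy
    t = 0.0 if l2 == 0 else ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / l2
    t = max(0.0, min(1.0, t))
    return math.dist(p, (a[0] + t * dx, a[1] + t * dy))


def path_cost(x):
    """Path length plus a heavy penalty for any segment entering the obstacle."""
    pts = [START] + [(x[i], x[i + 1]) for i in range(0, len(x), 2)] + [GOAL]
    segs = list(zip(pts, pts[1:]))
    length = sum(math.dist(a, b) for a, b in segs)
    intrusion = sum(max(0.0, OBST_R - seg_dist(OBST_C, a, b)) for a, b in segs)
    return length + 100.0 * intrusion


def gwo(n_wolves=30, iters=200, seed=0):
    """Conventional GWO; returns (best waypoint vector, its cost)."""
    rng = random.Random(seed)
    dim = len(LO)
    wolves = [[rng.uniform(LO[d], HI[d]) for d in range(dim)] for _ in range(n_wolves)]
    # alpha, beta, delta: the three best (cost, position) pairs found so far
    leaders = sorted(((path_cost(w), w[:]) for w in wolves), key=lambda e: e[0])[:3]
    for t in range(iters):
        a = 2.0 * (1.0 - t / iters)  # decreases linearly from 2 toward 0
        for w in wolves:
            for d in range(dim):
                guided = []
                for _, lead in leaders:
                    A = 2.0 * a * rng.random() - a  # |A| > 1 favors exploration
                    C = 2.0 * rng.random()
                    D = abs(C * lead[d] - w[d])
                    guided.append(lead[d] - A * D)
                # New position: average of the alpha, beta, and delta suggestions.
                w[d] = min(HI[d], max(LO[d], sum(guided) / 3.0))
        pool = leaders + [(path_cost(w), w[:]) for w in wolves]
        leaders = sorted(pool, key=lambda e: e[0])[:3]
    return leaders[0][1], leaders[0][0]


if __name__ == "__main__":
    best, cost = gwo()
    print("waypoints:", [round(v, 2) for v in best], "cost:", round(cost, 3))
```

Keeping the three leaders across iterations makes the sketch elitist, so the best cost never increases; a hybrid such as the one in [116] would add its own operators on top of this loop.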

- (b.2) Training models

- Path planning/obstacle avoidance

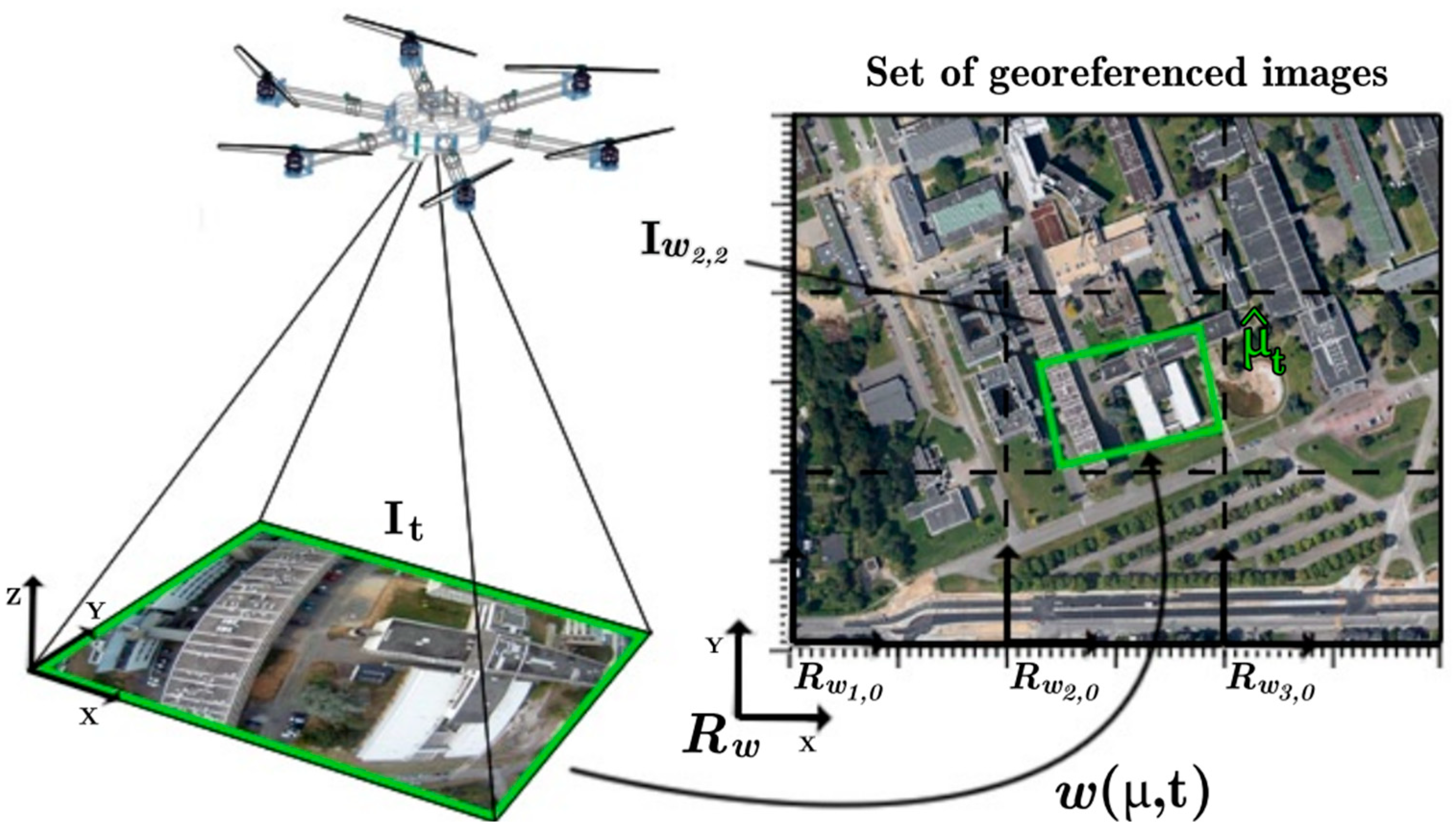

- Localization

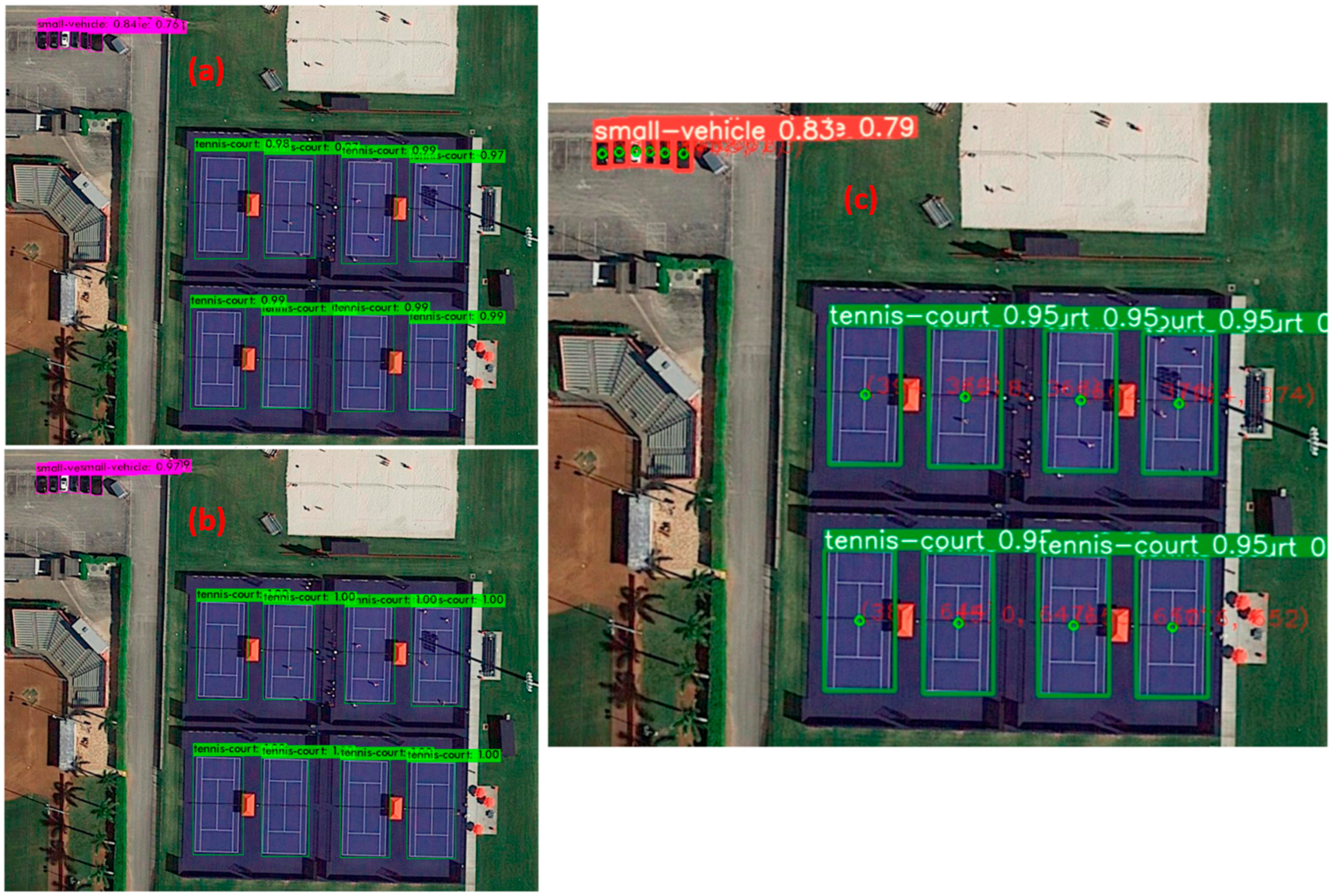

4.1.2. Target Tracking

4.1.3. Payload Integration and Control

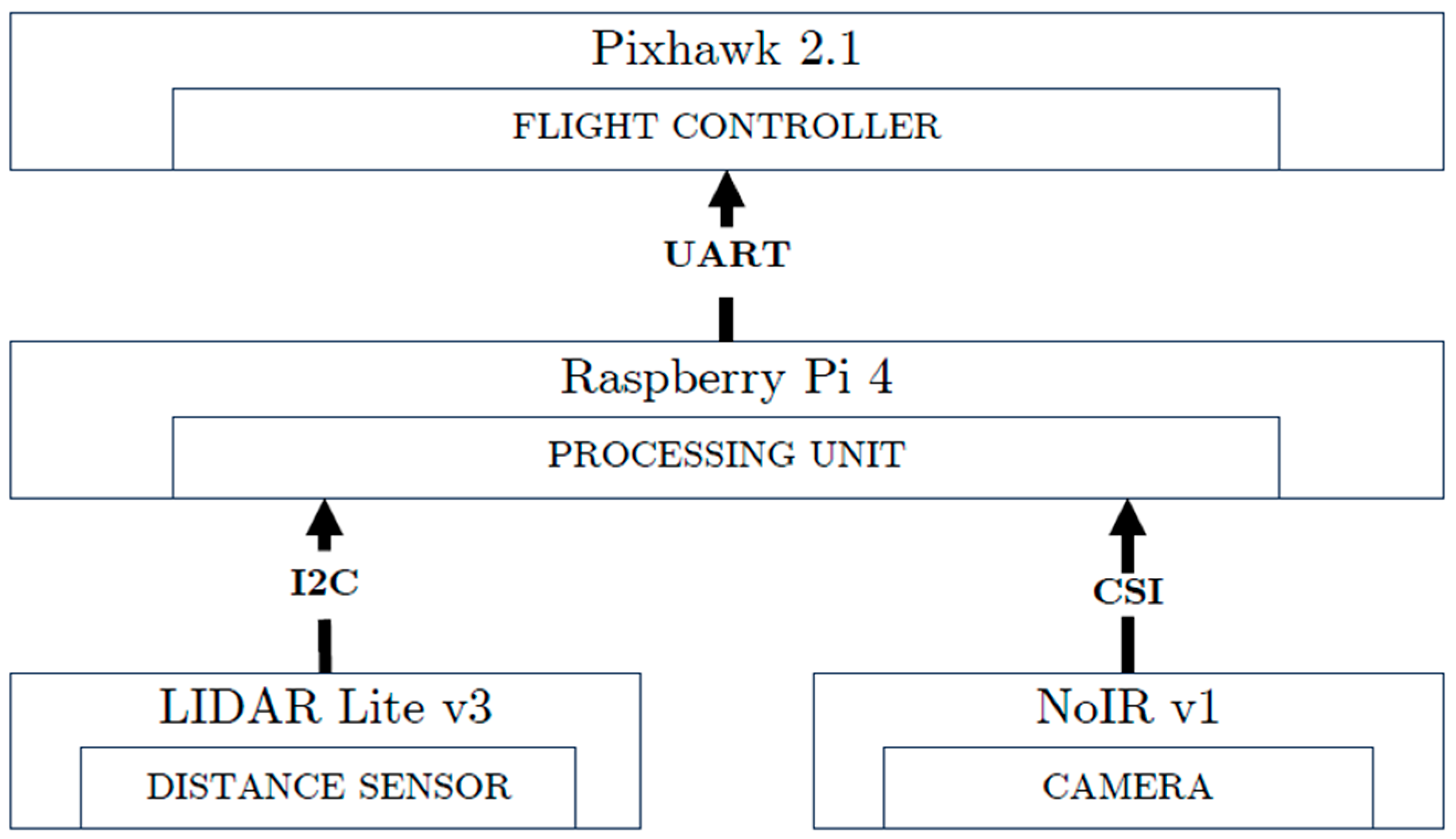

4.2. Computing

- (a) SBCs

- Raspberry Pi

- Odroid XU4

- NVIDIA Jetson

- (b) SoM

4.3. Communication

- (a) LoRa

- (b) Wi-Fi

- (c) BLE

- (d) LTE-M

4.4. Sensory

- Environmental sensors

- (a) Pressure sensors

- (b) Temperature sensors

- (c) Humidity sensors

- Vision sensors

- (a) RGB-D cameras

- (b) Thermal cameras

- Position sensors

- (a) Tracking/localization

- (b) Proximity/radar

4.5. Power

5. Discussion

6. Future Work

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Capener, A.M.; Sowby, R.B.; Williams, G.P. Pathways to Enhancing Analysis of Irrigation by Remote Sensing (AIRS) in Urban Settings. Sustainability 2023, 15, 12676. [Google Scholar] [CrossRef]

- Fragassa, C.; Vitali, G.; Emmi, L.; Arru, M. A New Procedure for Combining UAV-Based Imagery and Machine Learning in Precision Agriculture. Sustainability 2023, 15, 998. [Google Scholar] [CrossRef]

- Guan, S.; Takahashi, K.; Watanabe, S.; Tanaka, K. Unmanned Aerial Vehicle-Based Techniques for Monitoring and Prevention of Invasive Apple Snails (Pomacea canaliculata) in Rice Paddy Fields. Agriculture 2024, 14, 299. [Google Scholar] [CrossRef]

- Finigian, M.; Kavounas, P.A.; Ho, I.; Smith, C.C.; Witusik, A.; Hopwood, A.; Avent, C.; Ragasa, B.; Roth, B. Design and Flight Test of a Tube-Launched Unmanned Aerial Vehicle. Aerospace 2024, 11, 133. [Google Scholar] [CrossRef]

- Wang, Y.; Kumar, L.; Raja, V.; AL-bonsrulah, H.A.Z.; Kulandaiyappan, N.K.; Amirtharaj Tharmendra, A.; Marimuthu, N.; Al-Bahrani, M. Design and Innovative Integrated Engineering Approaches Based Investigation of Hybrid Renewable Energized Drone for Long Endurance Applications. Sustainability 2022, 14, 16173. [Google Scholar] [CrossRef]

- Alsumayt, A.; El-Haggar, N.; Amouri, L.; Alfawaer, Z.M.; Aljameel, S.S. Smart Flood Detection with AI and Blockchain Integration in Saudi Arabia Using Drones. Sensors 2023, 23, 5148. [Google Scholar] [CrossRef]

- Atanasov, A.Z.; Evstatiev, B.I.; Vladut, V.N.; Biris, S.-S. A Novel Algorithm to Detect White Flowering Honey Trees in Mixed Forest Ecosystems Using UAV-Based RGB Imaging. AgriEngineering 2024, 6, 95–112. [Google Scholar] [CrossRef]

- Povlsen, P.; Bruhn, D.; Durdevic, P.; Arroyo, D.O.; Pertoldi, C. Using YOLO Object Detection to Identify Hare and Roe Deer in Thermal Aerial Video Footage—Possible Future Applications in Real-Time Automatic Drone Surveillance and Wildlife Monitoring. Drones 2024, 8, 2. [Google Scholar] [CrossRef]

- Kabir, H.; Tham, M.-L.; Chang, Y.C.; Chow, C.-O.; Owada, Y. Mobility-Aware Resource Allocation in IoRT Network for Post-Disaster Communications with Parameterized Reinforcement Learning. Sensors 2023, 23, 6448. [Google Scholar] [CrossRef]

- Shin, H.; Kim, J.; Kim, K.; Lee, S. Empirical Case Study on Applying Artificial Intelligence and Unmanned Aerial Vehicles for the Efficient Visual Inspection of Residential Buildings. Buildings 2023, 13, 2754. [Google Scholar] [CrossRef]

- da Silva, Y.M.R.; Andrade, F.A.A.; Sousa, L.; de Castro, G.G.R.; Dias, J.T.; Berger, G.; Lima, J.; Pinto, M.F. Computer Vision Based Path Following for Autonomous Unmanned Aerial Systems in Unburied Pipeline Onshore Inspection. Drones 2022, 6, 410. [Google Scholar] [CrossRef]

- Kim, H.-J.; Kim, J.-Y.; Kim, J.-W.; Kim, S.-K.; Na, W.S. Unmanned Aerial Vehicle-Based Automated Path Generation of Rollers for Smart Construction. Electronics 2024, 13, 138. [Google Scholar] [CrossRef]

- Rossini, M.; Garzonio, R.; Panigada, C.; Tagliabue, G.; Bramati, G.; Vezzoli, G.; Cogliati, S.; Colombo, R.; Di Mauro, B. Mapping Surface Features of an Alpine Glacier through Multispectral and Thermal Drone Surveys. Remote Sens. 2023, 15, 3429. [Google Scholar] [CrossRef]

- Han, D.; Lee, S.B.; Song, M.; Cho, J.S. Change Detection in Unmanned Aerial Vehicle Images for Progress Monitoring of Road Construction. Buildings 2021, 11, 150. [Google Scholar] [CrossRef]

- Li, R.; Wu, M. Revealing Urban Color Patterns via Drone Aerial Photography—A Case Study in Urban Hangzhou, China. Buildings 2024, 14, 546. [Google Scholar] [CrossRef]

- Wu, H.; Huang, Z.; Zheng, W.; Bai, X.; Sun, L.; Pu, M. SSGAM-Net: A Hybrid Semi-Supervised and Supervised Network for Robust Semantic Segmentation Based on Drone LiDAR Data. Remote Sens. 2024, 16, 92. [Google Scholar] [CrossRef]

- Yoo, H.-J.; Kim, H.; Kang, T.-S.; Kim, K.-H.; Bang, K.-Y.; Kim, J.-B.; Park, M.-S. Prediction of Beach Sand Particle Size Based on Artificial Intelligence Technology Using Low-Altitude Drone Images. J. Mar. Sci. Eng. 2024, 12, 172. [Google Scholar] [CrossRef]

- Koulianos, A.; Litke, A. Blockchain Technology for Secure Communication and Formation Control in Smart Drone Swarms. Future Internet 2023, 15, 344. [Google Scholar] [CrossRef]

- Myers, R.J.; Perera, S.M.; McLewee, G.; Huang, D.; Song, H. Multi-Beam Beamforming-Based ML Algorithm to Optimize the Routing of Drone Swarms. Drones 2024, 8, 57. [Google Scholar] [CrossRef]

- Abdelmaboud, A. The Internet of Drones: Requirements, Taxonomy, Recent Advances, and Challenges of Research Trends. Sensors 2021, 21, 5718. [Google Scholar] [CrossRef]

- Hou, D.; Su, Q.; Song, Y.; Yin, Y. Research on Drone Fault Detection Based on Failure Mode Databases. Drones 2023, 7, 486. [Google Scholar] [CrossRef]

- Puchalski, R.; Giernacki, W. UAV Fault Detection Methods, State-of-the-Art. Drones 2022, 6, 330. [Google Scholar] [CrossRef]

- Kim, H.; Chae, H.; Kwon, S.; Lee, S. Optimization of Deep Learning Parameters for Magneto-Impedance Sensor in Metal Detection and Classification. Sensors 2023, 23, 9259. [Google Scholar] [CrossRef] [PubMed]

- Zheng, Q.; Tian, X.; Yu, Z.; Ding, Y.; Elhanashi, A.; Saponara, S.; Kpalma, K. MobileRaT: A Lightweight Radio Transformer Method for Automatic Modulation Classification in Drone Communication Systems. Drones 2023, 7, 596. [Google Scholar] [CrossRef]

- Hyun, D.; Han, J.; Hong, S. Power Management Strategy of Hybrid Fuel Cell Drones for Flight Performance Improvement Based on Various Algorithms. Energies 2023, 16, 8001. [Google Scholar] [CrossRef]

- Beliaev, V.; Kunicina, N.; Ziravecka, A.; Bisenieks, M.; Grants, R.; Patlins, A. Development of Adaptive Control System for Aerial Vehicles. Appl. Sci. 2023, 13, 12940. [Google Scholar] [CrossRef]

- Bond, E.; Crowther, B.; Parslew, B. The Rise Of High-Performance Multi-Rotor Unmanned Aerial Vehicles—How worried should we be? In Proceedings of the 2019 Workshop on Research, Education and Development of Unmanned Aerial Systems (RED UAS), Cranfield, UK, 25–27 November 2019; pp. 177–184. [Google Scholar] [CrossRef]

- Ghazali, M.H.M.; Rahiman, W.; Novaliendry, D.; Risfendra. Automated Drone Fault Detection Approach in Thrust Mode State. In Proceedings of the 2023 IEEE International Conference on Automatic Control and Intelligent Systems (I2CACIS), Shah Alam, Malaysia, 17 June 2023; pp. 17–21. [Google Scholar] [CrossRef]

- Dalwadi, N.; Deb, D.; Ozana, S. Rotor Failure Compensation in a Biplane Quadrotor Based on Virtual Deflection. Drones 2022, 6, 176. [Google Scholar] [CrossRef]

- Shin, Y.-H.; Kim, D.; Son, S.; Ham, J.-W.; Oh, K.-Y. Vibration Isolation of a Surveillance System Equipped in a Drone with Mode Decoupling. Appl. Sci. 2021, 11, 1961. [Google Scholar] [CrossRef]

- Eskandaripour, H.; Boldsaikhan, E. Last-Mile Drone Delivery: Past, Present, and Future. Drones 2023, 7, 77. [Google Scholar] [CrossRef]

- Al-Haddad, L.A.; Jaber, A.A. An Intelligent Fault Diagnosis Approach for Multirotor UAVs Based on Deep Neural Network of Multi-Resolution Transform Features. Drones 2023, 7, 82. [Google Scholar] [CrossRef]

- Meng, L.; Zhang, L.; Yang, L.; Yang, W. A GPS-Adaptive Spoofing Detection Method for the Small UAV Cluster. Drones 2023, 7, 461. [Google Scholar] [CrossRef]

- Arafat, M.Y.; Alam, M.M.; Moh, S. Vision-Based Navigation Techniques for Unmanned Aerial Vehicles: Review and Challenges. Drones 2023, 7, 89. [Google Scholar] [CrossRef]

- Fakhraian, E.; Semanjski, I.; Semanjski, S.; Aghezzaf, E.-H. Towards Safe and Efficient Unmanned Aircraft System Operations: Literature Review of Digital Twins’ Applications and European Union Regulatory Compliance. Drones 2023, 7, 478. [Google Scholar] [CrossRef]

- Wang, C.-N.; Yang, F.-C.; Vo, N.T.M.; Nguyen, V.T.T. Wireless Communications for Data Security: Efficiency Assessment of Cybersecurity Industry—A Promising Application for UAVs. Drones 2022, 6, 363. [Google Scholar] [CrossRef]

- Guo, K.; Liu, L.; Shi, S.; Liu, D.; Peng, X. UAV Sensor Fault Detection Using a Classifier without Negative Samples: A Local Density Regulated Optimization Algorithm. Sensors 2019, 19, 771. [Google Scholar] [CrossRef]

- Daniels, L.; Eeckhout, E.; Wieme, J.; Dejaegher, Y.; Audenaert, K.; Maes, W.H. Identifying the Optimal Radiometric Calibration Method for UAV-Based Multispectral Imaging. Remote Sens. 2023, 15, 2909. [Google Scholar] [CrossRef]

- Siddiqui, Z.A.; Park, U. A Drone Based Transmission Line Components Inspection System with Deep Learning Technique. Energies 2020, 13, 3348. [Google Scholar] [CrossRef]

- Saha, B.; Koshimoto, E.; Quach, C.C.; Hogge, E.F.; Strom, T.H.; Hill, B.L.; Vazquez, S.L.; Goebel, K. Battery health management system for electric UAVs. In Proceedings of the 2011 Aerospace Conference, Big Sky, MT, USA, 5–12 March 2011. [Google Scholar] [CrossRef]

- Manjarrez, L.H.; Ramos-Fernández, J.C.; Espinoza, E.S.; Lozano, R. Estimation of Energy Consumption and Flight Time Margin for a UAV Mission Based on Fuzzy Systems. Technologies 2023, 11, 12. [Google Scholar] [CrossRef]

- Bello, A.B.; Navarro, F.; Raposo, J.; Miranda, M.; Zazo, A.; Álvarez, M. Fixed-Wing UAV Flight Operation under Harsh Weather Conditions: A Case Study in Livingston Island Glaciers, Antarctica. Drones 2022, 6, 384. [Google Scholar] [CrossRef]

- Tajima, Y.; Hiraguri, T.; Matsuda, T.; Imai, T.; Hirokawa, J.; Shimizu, H.; Kimura, T.; Maruta, K. Analysis of Wind Effect on Drone Relay Communications. Drones 2023, 7, 182. [Google Scholar] [CrossRef]

- Shalaby, A.M.; Othman, N.S. The Effect of Rainfall on the UAV Placement for 5G Spectrum in Malaysia. Electronics 2022, 11, 681. [Google Scholar] [CrossRef]

- Luo, K.; Luo, R.; Zhou, Y. UAV detection based on rainy environment. In Proceedings of the 2021 IEEE 4th Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC), Chongqing, China, 18–20 June 2021; pp. 1207–1210. [Google Scholar] [CrossRef]

- Estevez, J.; Garate, G.; Lopez-Guede, J.M.; Larrea, M. Review of Aerial Transportation of Suspended-Cable Payloads with Quadrotors. Drones 2024, 8, 35. [Google Scholar] [CrossRef]

- Seidaliyeva, U.; Ilipbayeva, L.; Taissariyeva, K.; Smailov, N.; Matson, E.T. Advances and Challenges in Drone Detection and Classification Techniques: A State-of-the-Art Review. Sensors 2024, 24, 125. [Google Scholar] [CrossRef]

- Hu, D.; Minner, J. UAVs and 3D City Modeling to Aid Urban Planning and Historic Preservation: A Systematic Review. Remote Sens. 2023, 15, 5507. [Google Scholar] [CrossRef]

- De Silvestri, S.; Capasso, P.J.; Gargiulo, A.; Molinari, S.; Sanna, A. Challenges for the Routine Application of Drones in Healthcare: A Scoping Review. Drones 2023, 7, 685. [Google Scholar] [CrossRef]

- Álvarez-González, M.; Suarez-Bregua, P.; Pierce, G.J.; Saavedra, C. Unmanned Aerial Vehicles (UAVs) in Marine Mammal Research: A Review of Current Applications and Challenges. Drones 2023, 7, 667. [Google Scholar] [CrossRef]

- Quamar, M.M.; Al-Ramadan, B.; Khan, K.; Shafiullah, M.; El Ferik, S. Advancements and Applications of Drone-Integrated Geographic Information System Technology—A Review. Remote Sens. 2023, 15, 5039. [Google Scholar] [CrossRef]

- Bayomi, N.; Fernandez, J.E. Eyes in the Sky: Drones Applications in the Built Environment under Climate Change Challenges. Drones 2023, 7, 637. [Google Scholar] [CrossRef]

- Abrahams, M.; Sibanda, M.; Dube, T.; Chimonyo, V.G.P.; Mabhaudhi, T. A Systematic Review of UAV Applications for Mapping Neglected and Underutilised Crop Species’ Spatial Distribution and Health. Remote Sens. 2023, 15, 4672. [Google Scholar] [CrossRef]

- Telli, K.; Kraa, O.; Himeur, Y.; Ouamane, A.; Boumehraz, M.; Atalla, S.; Mansoor, W. A Comprehensive Review of Recent Research Trends on Unmanned Aerial Vehicles (UAVs). Systems 2023, 11, 400. [Google Scholar] [CrossRef]

- Chandran, N.K.; Sultan, M.T.H.; Łukaszewicz, A.; Shahar, F.S.; Holovatyy, A.; Giernacki, W. Review on Type of Sensors and Detection Method of Anti-Collision System of Unmanned Aerial Vehicle. Sensors 2023, 23, 6810. [Google Scholar] [CrossRef] [PubMed]

- Sihag, V.; Choudhary, G.; Choudhary, P.; Dragoni, N. Cyber4Drone: A Systematic Review of Cyber Security and Forensics in Next-Generation Drones. Drones 2023, 7, 430. [Google Scholar] [CrossRef]

- Lyu, M.; Zhao, Y.; Huang, C.; Huang, H. Unmanned Aerial Vehicles for Search and Rescue: A Survey. Remote Sens. 2023, 15, 3266. [Google Scholar] [CrossRef]

- Liang, H.; Lee, S.-C.; Bae, W.; Kim, J.; Seo, S. Towards UAVs in Construction: Advancements, Challenges, and Future Directions for Monitoring and Inspection. Drones 2023, 7, 202. [Google Scholar] [CrossRef]

- Chen, C.; Zheng, Z.; Xu, T.; Guo, S.; Feng, S.; Yao, W.; Lan, Y. YOLO-Based UAV Technology: A Review of the Research and Its Applications. Drones 2023, 7, 190. [Google Scholar] [CrossRef]

- Gugan, G.; Haque, A. Path Planning for Autonomous Drones: Challenges and Future Directions. Drones 2023, 7, 169. [Google Scholar] [CrossRef]

- Malang, C.; Charoenkwan, P.; Wudhikarn, R. Implementation and Critical Factors of Unmanned Aerial Vehicle (UAV) in Warehouse Management: A Systematic Literature Review. Drones 2023, 7, 80. [Google Scholar] [CrossRef]

- Iqbal, U.; Riaz, M.Z.B.; Zhao, J.; Barthelemy, J.; Perez, P. Drones for Flood Monitoring, Mapping and Detection: A Bibliometric Review. Drones 2023, 7, 32. [Google Scholar] [CrossRef]

- Tahir, M.A.; Mir, I.; Islam, T.U. A Review of UAV Platforms for Autonomous Applications: Comprehensive Analysis and Future Directions. IEEE Access 2023, 11, 52540–52554. [Google Scholar] [CrossRef]

- Milidonis, K.; Eliades, A.; Grigoriev, V.; Blanco, M.J. Unmanned Aerial Vehicles (UAVs) in the planning, operation and maintenance of concentrating solar thermal systems: A review. Sol. Energy 2023, 254, 182–194. [Google Scholar] [CrossRef]

- Mohammed, A.B.; Fourati, L.C.; Fakhrudeen, A.M. Comprehensive systematic review of intelligent approaches in UAV-based intrusion detection, blockchain, and network security. Comput. Netw. 2023, 239, 110140. [Google Scholar] [CrossRef]

- Buchelt, A.; Adrowitzer, A.; Kieseberg, P.; Gollob, C.; Nothdurft, A.; Eresheim, S.; Tschiatschek, S.; Stampfer, K.; Holzinger, A. Exploring artificial intelligence for applications of drones in forest ecology and management. For. Ecol. Manag. 2024, 551, 121530. [Google Scholar] [CrossRef]

- Kim, S.Y.; Kwon, D.Y.; Jang, A.; Ju, Y.K.; Lee, J.-S.; Hong, S. A review of UAV integration in forensic civil engineering: From sensor technologies to geotechnical, structural and water infrastructure applications. Measurement 2024, 224, 113886. [Google Scholar] [CrossRef]

- Vigneault, P.; Lafond-Lapalme, J.; Deshaies, A.; Khun, K.; de la Sablonnière, S.; Filion, M.; Longchamps, L.; Mimee, B. An integrated data-driven approach to monitor and estimate plant-scale growth using UAV. ISPRS Open J. Photogramm. Remote Sens. 2024, 11, 100052. [Google Scholar] [CrossRef]

- Michail, A.; Livera, A.; Tziolis, G.; Candás, J.L.C.; Fernandez, A.; Yudego, E.A.; Martínez, D.F.; Antonopoulos, A.; Tripolitsiotis, A.; Partsinevelos, P.; et al. A comprehensive review of unmanned aerial vehicle-based approaches to support photovoltaic plant diagnosis. Heliyon 2024, 10, e23983. [Google Scholar] [CrossRef] [PubMed]

- Wan, L.; Zhao, L.; Xu, W.; Guo, F.; Jiang, X. Dust deposition on the photovoltaic panel: A comprehensive survey on mechanisms, effects, mathematical modeling, cleaning methods, and monitoring systems. Sol. Energy 2024, 268, 112300. [Google Scholar] [CrossRef]

- Xu, W.; Wu, X.; Liu, J.; Yan, Y. Design of anti-load perturbation flight trajectory stability controller for agricultural UAV. Front. Plant Sci. 2023, 14, 1030203. [Google Scholar] [CrossRef] [PubMed]

- Aksland, C.T.; Alleyne, A.G. Hierarchical model-based predictive controller for a hybrid UAV powertrain. Control Eng. Pract. 2021, 115, 104883. [Google Scholar] [CrossRef]

- Kovalev, I.V.; Voroshilova, A.A.; Karaseva, M.V. On the problem of the manned aircraft modification to UAVs. J. Phys. Conf. Ser. 2019, 1399, 055100. [Google Scholar] [CrossRef]

- Riboldi, C.E.D.; Rolando, A. Autonomous Flight in Hover and Near-Hover for Thrust-Controlled Unmanned Airships. Drones 2023, 7, 545. [Google Scholar] [CrossRef]

- Huang, Y.; Li, W.; Ning, J.; Li, Z. Formation Control for UAV-USVs Heterogeneous System with Collision Avoidance Performance. J. Mar. Sci. Eng. 2023, 11, 2332. [Google Scholar] [CrossRef]

- Xiao, Q.; Li, Y.; Luo, F.; Liu, H. Analysis and assessment of risks to public safety from unmanned aerial vehicles using fault tree analysis and Bayesian network. Technol. Soc. 2023, 73, 102229. [Google Scholar] [CrossRef]

- Marques, T.; Carreira, S.; Miragaia, R.; Ramos, J.; Pereira, A. Applying deep learning to real-time UAV-based forest monitoring: Leveraging multi-sensor imagery for improved results. Expert Syst. Appl. 2024, 245, 123107. [Google Scholar] [CrossRef]

- Tahir, M.A.; Mir, I.; Islam, T.U. Control Algorithms, Kalman Estimation and Near Actual Simulation for UAVs: State of Art Perspective. Drones 2023, 7, 339. [Google Scholar] [CrossRef]

- Ge, C.; Dunno, K.; Singh, M.A.; Yuan, L.; Lu, L.-X. Development of a Drone’s Vibration, Shock, and Atmospheric Profiles. Appl. Sci. 2021, 11, 5176. [Google Scholar] [CrossRef]

- Li, B.; Zhang, H.; He, P.; Wang, G.; Yue, K.; Neretin, E. Hierarchical Maneuver Decision Method Based on PG-Option for UAV Pursuit-Evasion Game. Drones 2023, 7, 449. [Google Scholar] [CrossRef]

- Bakırcıoğlu, V.; Çabuk, N.; Yıldırım, Ş. Experimental comparison of the effect of the number of redundant rotors on the fault tolerance performance for the proposed multilayer UAV. Robot. Auton. Syst. 2022, 149, 103977. [Google Scholar] [CrossRef]

- Zhang, H.; Xue, J.; Wang, Q.; Li, Y. A security optimization scheme for data security transmission in UAV-assisted edge networks based on federal learning. Ad Hoc Netw. 2023, 150, 103277. [Google Scholar]

- Huang, J.; Tao, H.; Wang, Y.; Sun, J.-Q. Suppressing UAV payload swing with time-varying cable length through nonlinear coupling. Mech. Syst. Signal Process. 2023, 185, 109790. [Google Scholar] [CrossRef]

- Srisomboon, I.; Lee, S. Positioning and Navigation Approaches Using Packet Loss-Based Multilateration for UAVs in GPS-Denied Environments. IEEE Access 2024, 12, 13355–13369. [Google Scholar] [CrossRef]

- Akremi, M.S.; Neji, N.; Tabia, H. Visual Navigation of UAVs in Indoor Corridor Environments using Deep Learning. In Proceedings of the 2023 Integrated Communication, Navigation and Surveillance Conference (ICNS), Herndon, VA, USA, 18–20 April 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Famili, A.; Stavrou, A.; Wang, H.; Park, J.-M. PILOT: High-Precision Indoor Localization for Autonomous Drones. IEEE Trans. Veh. Technol. 2023, 72, 6445–6459. [Google Scholar] [CrossRef]

- Grunwald, G.; Ciećko, A.; Kozakiewicz, T.; Krasuski, K. Analysis of GPS/EGNOS Positioning Quality Using Different Ionospheric Models in UAV Navigation. Sensors 2023, 23, 1112. [Google Scholar] [CrossRef]

- Beirens, B.; Darrozes, J.; Ramillien, G.; Seoane, L.; Médina, P.; Durand, P. Using a SPATIAL INS/GNSS MEMS Unit to Detect Local Gravity Variations in Static and Mobile Experiments: First Results. Sensors 2023, 23, 7060. [Google Scholar] [CrossRef]

- Gyagenda, N.; Hatilima, J.V.; Roth, H.; Zhmud, V. A review of GNSS-independent UAV navigation techniques. Robot. Auton. Syst. 2022, 152, 104069. [Google Scholar] [CrossRef]

- Gao, B.; Hu, G.; Zhang, L.; Zhong, Y.; Zhu, X. Cubature Kalman filter with closed-loop covariance feedback control for integrated INS/GNSS navigation. Chin. J. Aeronaut. 2023, 36, 363–376. [Google Scholar] [CrossRef]

- Al Radi, M.; AlMallahi, M.N.; Al-Sumaiti, A.S.; Semeraro, C.; Abdelkareem, M.A.; Olabi, A.G. Progress in artificial intelligence-based visual servoing of autonomous unmanned aerial vehicles (UAVs). Int. J. Thermofluids 2024, 21, 100590. [Google Scholar] [CrossRef]

- Vetrella, A.R.; Fasano, G. Cooperative UAV navigation under nominal GPS coverage and in GPS-challenging environments. In Proceedings of the 2016 IEEE 2nd International Forum on Research and Technologies for Society and Industry Leveraging a Better Tomorrow (RTSI) 2016, Bologna, Italy, 7–9 September 2016. [Google Scholar]

- Saranya, K.C.; Naidu, V.P.; Singhal, V.; Tanuja, B.M. Application of vision based techniques for UAV position estimation. In Proceedings of the 2016 International Conference on Research Advances in Integrated Navigation Systems (RAINS) 2016, Bangalore, India, 6–7 May 2016. [Google Scholar]

- Gupta, A.; Fernando, X. Simultaneous Localization and Mapping (SLAM) and Data Fusion in Unmanned Aerial Vehicles: Recent Advances and Challenges. Drones 2022, 6, 85. [Google Scholar] [CrossRef]

- Ranftl, R.; Vineet, V.; Chen, Q.; Koltun, V. Dense monocular depth estimation in complex dynamic scenes. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2016, Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar] [CrossRef]

- Bavle, H.; De La Puente, P.; How, J.P.; Campoy, P. VPS-SLAM: Visual planar semantic slam for aerial robotic systems. IEEE Access 2020, 8, 60704–60718. [Google Scholar] [CrossRef]

- Chang, Y.; Cheng, Y.; Manzoor, U.; Murray, J. A review of UAV autonomous navigation in GPS-denied environments. Robot. Auton. Syst. 2023, 170, 104533. [Google Scholar] [CrossRef]

- Wang, F.; Zou, Y.; Cheng, Z.; Buzzatto, J.; Liarokapis, M.; Castillo, E.d.R.; Lim, J.B.P. UAV navigation in large-scale GPS-denied bridge environments using fiducial marker-corrected stereo visual-inertial localization. Autom. Constr. 2023, 156, 105139. [Google Scholar] [CrossRef]

- Nabavi-Chashmi, S.-Y.; Asadi, D.; Ahmadi, K. Image-based UAV position and velocity estimation using a monocular camera. Control Eng. Pract. 2023, 134, 105460. [Google Scholar] [CrossRef]

- Wei, P.; Liang, R.; Michelmore, A.; Kong, Z. Vision-Based 2D Navigation of Unmanned Aerial Vehicles in Riverine Environments with Imitation Learning. J. Intell. Robot. Syst. 2022, 104, 47. [Google Scholar] [CrossRef]

- Jiménez, G.A.; de la Escalera Hueso, A.; Gómez-Silva, M.J. Reinforcement Learning Algorithms for Autonomous Mission Accomplishment by Unmanned Aerial Vehicles: A Comparative View with DQN, SARSA and A2C. Sensors 2023, 23, 9013. [Google Scholar] [CrossRef]

- Çetin, E.; Barrado, C.; Pastor, E. Counter a Drone in a Complex Neighborhood Area by Deep Reinforcement Learning. Sensors 2020, 20, 2320. [Google Scholar] [CrossRef]

- Samadzadegan, F.; Dadrass Javan, F.; Ashtari Mahini, F.; Gholamshahi, M. Detection and Recognition of Drones Based on a Deep Convolutional Neural Network Using Visible Imagery. Aerospace 2022, 9, 31. [Google Scholar] [CrossRef]

- Sarkar, N.I.; Gul, S. Artificial Intelligence-Based Autonomous UAV Networks: A Survey. Drones 2023, 7, 322. [Google Scholar] [CrossRef]

- Xie, J.; Peng, X.; Wang, H.; Niu, W.; Zheng, X. UAV Autonomous Tracking and Landing Based on Deep Reinforcement Learning Strategy. Sensors 2020, 20, 5630. [Google Scholar] [CrossRef] [PubMed]

- Saha, S.; Vasegaard, A.E.; Nielsen, I.; Hapka, A.; Budzisz, H. UAVs Path Planning under a Bi-Objective Optimization Framework for Smart Cities. Electronics 2021, 10, 1193. [Google Scholar] [CrossRef]

- Xin, J.; Li, S.; Sheng, J.; Zhang, Y.; Cui, Y. Application of Improved Particle Swarm Optimization for Navigation of Unmanned Surface Vehicles. Sensors 2019, 19, 3096. [Google Scholar] [CrossRef]

- Shan, D.; Zhang, S.; Wang, X.; Zhang, P. Path-Planning Strategy: Adaptive Ant Colony Optimization Combined with an Enhanced Dynamic Window Approach. Electronics 2024, 13, 825. [Google Scholar] [CrossRef]

- Xin, J.; Zhong, J.; Yang, F.; Cui, Y.; Sheng, J. An Improved Genetic Algorithm for Path-Planning of Unmanned Surface Vehicle. Sensors 2019, 19, 2640. [Google Scholar] [CrossRef] [PubMed]

- Abdel-Basset, M.; Mohamed, R.; Hezam, I.M.; Alshamrani, A.M.; Sallam, K.M. An Efficient Evolution-Based Technique for Moving Target Search with Unmanned Aircraft Vehicle: Analysis and Validation. Mathematics 2023, 11, 2606. [Google Scholar] [CrossRef]

- Feng, J.; Sun, C.; Zhang, J.; Du, Y.; Liu, Z.; Ding, Y. A UAV Path Planning Method in Three-Dimensional Space Based on a Hybrid Gray Wolf Optimization Algorithm. Electronics 2024, 13, 68. [Google Scholar] [CrossRef]

- Huang, C.; Fei, J. UAV Path Planning Based on Particle Swarm Optimization with Global Best Path Competition. Int. J. Pattern Recognit. Artif. Intell. 2018, 32, 1859008. [Google Scholar] [CrossRef]

- Cekmez, U.; Ozsiginan, M.; Sahingoz, O.K. Multi colony ant optimization for UAV path planning with obstacle avoidance. In Proceedings of the 2016 International Conference on Unmanned Aircraft Systems (ICUAS), Arlington, VA, USA, 7–10 June 2016. [Google Scholar] [CrossRef]

- Bagherian, M.; Alos, A. 3D UAV trajectory planning using evolutionary algorithms: A comparison study. Aeronaut. J. 2016, 119, 1271–1285. [Google Scholar] [CrossRef]

- Yu, X.; Li, C.; Zhou, J. A constrained differential evolution algorithm to solve UAV path planning in disaster scenarios. Knowl.-Based Syst. 2020, 204, 106209. [Google Scholar] [CrossRef]

- Qu, C.; Gai, W.; Zhang, J.; Zhong, M. A novel hybrid grey wolf optimizer algorithm for unmanned aerial vehicle (UAV) path planning. Knowl.-Based Syst. 2020, 194, 105530. [Google Scholar] [CrossRef]

- Zhang, X.; Wang, C.; Liu, Y.; Chen, X. Decision-Making for the Autonomous Navigation of Maritime Autonomous Surface Ships Based on Scene Division and Deep Reinforcement Learning. Sensors 2019, 19, 4055. [Google Scholar] [CrossRef] [PubMed]

- Kalidas, A.P.; Joshua, C.J.; Md, A.Q.; Basheer, S.; Mohan, S.; Sakri, S. Deep Reinforcement Learning for Vision-Based Navigation of UAVs in Avoiding Stationary and Mobile Obstacles. Drones 2023, 7, 245. [Google Scholar] [CrossRef]

- Wu, L.; Wang, C.; Zhang, P.; Wei, C. Deep Reinforcement Learning with Corrective Feedback for Autonomous UAV Landing on a Mobile Platform. Drones 2022, 6, 238. [Google Scholar] [CrossRef]

- Hu, Z.; Wan, K.; Gao, X.; Zhai, Y.; Wang, Q. Deep Reinforcement Learning Approach with Multiple Experience Pools for UAV’s Autonomous Motion Planning in Complex Unknown Environments. Sensors 2020, 20, 1890. [Google Scholar] [CrossRef] [PubMed]

- Ponsen, M.; Taylor, M.E.; Tuyls, K. Abstraction and Generalization in Reinforcement Learning: A Summary and Framework. In International Workshop on Adaptive and Learning Agents; Springer: Berlin/Heidelberg, Germany, 2009; pp. 1–32. [Google Scholar] [CrossRef]

- Pham, H.X.; La, H.M.; Feil-Seifer, D.; Nguyen, L.V. Reinforcement Learning for Autonomous UAV Navigation Using Function Approximation. In Proceedings of the 2018 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Philadelphia, PA, USA, 6–8 August 2018. [Google Scholar] [CrossRef]

- Azar, A.T.; Koubaa, A.; Ali Mohamed, N.; Ibrahim, H.A.; Ibrahim, Z.F.; Kazim, M.; Ammar, A.; Benjdira, B.; Khamis, A.M.; Hameed, I.A.; et al. Drone Deep Reinforcement Learning: A Review. Electronics 2021, 10, 999. [Google Scholar] [CrossRef]

- Rezwan, S.; Choi, W. A Survey on Applications of Reinforcement Learning in Flying Ad-Hoc Networks. Electronics 2021, 10, 449. [Google Scholar] [CrossRef]

- Hassan, S.-A.; Rahim, T.; Shin, S.-Y. An Improved Deep Convolutional Neural Network-Based Autonomous Road Inspection Scheme Using Unmanned Aerial Vehicles. Electronics 2021, 10, 2764. [Google Scholar] [CrossRef]

- Kupervasser, O.; Kutomanov, H.; Levi, O.; Pukshansky, V.; Yavich, R. Using Deep Learning for Visual Navigation of Drone with Respect to 3D Ground Objects. Mathematics 2020, 8, 2140. [Google Scholar] [CrossRef]

- Menfoukh, K.; Touba, M.M.; Khenfri, F.; Guettal, L. Optimized Convolutional Neural Network architecture for UAV navigation within unstructured trail. In Proceedings of the 2020 1st International Conference on Communications, Control Systems and Signal Processing (CCSSP), El Oued, Algeria, 16–17 May 2020. [Google Scholar] [CrossRef]

- Maciel-Pearson, B.G.; Carbonneau, P.; Breckon, T.P. Extending Deep Neural Network Trail Navigation for Unmanned Aerial Vehicle Operation Within the Forest Canopy. Towards Auton. Robot. Syst. 2018, 10965, 147–158. [Google Scholar] [CrossRef] [PubMed]

- Fraga-Lamas, P.; Ramos, L.; Mondéjar-Guerra, V.; Fernández-Caramés, T.M. A Review on IoT Deep Learning UAV Systems for Autonomous Obstacle Detection and Collision Avoidance. Remote Sens. 2019, 11, 2144. [Google Scholar] [CrossRef]

- Tullu, A.; Endale, B.; Wondosen, A.; Hwang, H.-Y. Machine Learning Approach to Real-Time 3D Path Planning for Autonomous Navigation of Unmanned Aerial Vehicle. Appl. Sci. 2021, 11, 4706. [Google Scholar] [CrossRef]

- Goel, A.; Tung, C.; Lu, Y.-H.; Thiruvathukal, G.K. A Survey of Methods for Low-Power Deep Learning and Computer Vision. In Proceedings of the 2020 IEEE 6th World Forum on Internet of Things (WF-IoT), New Orleans, LA, USA, 2–16 June 2020. [Google Scholar] [CrossRef]

- Karaman, S.; Frazzoli, E. Incremental Sampling-based Algorithms for Optimal Motion Planning. arXiv 2010, arXiv:1005.0416. [Google Scholar] [CrossRef]

- Karaman, S.; Frazzoli, E. Sampling-based algorithms for optimal motion planning. Int. J. Robot. Res. 2011, 30, 846–894. [Google Scholar] [CrossRef]

- Sun, Q.; Li, M.; Wang, T.; Zhao, C. UAV path planning based on improved rapidly-exploring random tree. In Proceedings of the 2018 Chinese Control and Decision Conference (CCDC), Shenyang, China, 9–11 June 2018. [Google Scholar] [CrossRef]

- Yang, K.; Sukkarieh, S. 3D smooth path planning for a UAV in cluttered natural environments. In Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008. [Google Scholar] [CrossRef]

- Ragi, S.; Mittelmann, H.D. Mixed-integer nonlinear programming formulation of a UAV path optimization problem. In Proceedings of the 2017 American Control Conference (ACC), Seattle, WA, USA, 24–26 May 2017. [Google Scholar] [CrossRef]

- Nishira, M.; Ito, S.; Nishikawa, H.; Kong, X.; Tomiyama, H. An Integer Programming Based Approach to Delivery Drone Routing under Load-Dependent Flight Speed. Drones 2023, 7, 320. [Google Scholar] [CrossRef]

- Chamseddine, A.; Zhang, Y.; Rabbath, C.A.; Join, C.; Theilliol, D. Flatness-Based Trajectory Planning/Replanning for a Quadrotor Unmanned Aerial Vehicle. IEEE Trans. Aerosp. Electron. Syst. 2012, 48, 2832–2848. [Google Scholar] [CrossRef]

- Yan, F.; Liu, Y.-S.; Xiao, J.-Z. Path Planning in Complex 3D Environments Using a Probabilistic Roadmap Method. Int. J. Autom. Comput. 2014, 10, 525–533. [Google Scholar] [CrossRef]

- Wai, R.-J.; Prasetia, A.S. Adaptive Neural Network Control and Optimal Path Planning of UAV Surveillance System with Energy Consumption Prediction. IEEE Access 2019, 7, 126137–126153. [Google Scholar] [CrossRef]

- Benjumea, D.; Alcántara, A.; Ramos, A.; Torres-Gonzalez, A.; Sánchez-Cuevas, P.; Capitan, J.; Heredia, G.; Ollero, A. Localization System for Lightweight Unmanned Aerial Vehicles in Inspection Tasks. Sensors 2021, 21, 5937. [Google Scholar] [CrossRef] [PubMed]

- Liu, Y.; Bai, J.; Wang, G.; Wu, X.; Sun, F.; Guo, Z.; Geng, H. UAV Localization in Low-Altitude GNSS-Denied Environments Based on POI and Store Signage Text Matching in UAV Images. Drones 2023, 7, 451. [Google Scholar] [CrossRef]

- Sandamini, C.; Maduranga, M.W.P.; Tilwari, V.; Yahaya, J.; Qamar, F.; Nguyen, Q.N.; Ibrahim, S.R.A. A Review of Indoor Positioning Systems for UAV Localization with Machine Learning Algorithms. Electronics 2023, 12, 1533. [Google Scholar] [CrossRef]

- Cui, Z.; Zhou, P.; Wang, X.; Zhang, Z.; Li, Y.; Li, H.; Zhang, Y. A Novel Geo-Localization Method for UAV and Satellite Images Using Cross-View Consistent Attention. Remote Sens. 2023, 15, 4667. [Google Scholar] [CrossRef]

- Haque, A.; Elsaharti, A.; Elderini, T.; Elsaharty, M.A.; Neubert, J. UAV Autonomous Localization Using Macro-Features Matching with a CAD Model. Sensors 2020, 20, 743. [Google Scholar] [CrossRef]

- Si, X.; Xu, G.; Ke, M.; Zhang, H.; Tong, K.; Qi, F. Relative Localization within a Quadcopter Unmanned Aerial Vehicle Swarm Based on Airborne Monocular Vision. Drones 2023, 7, 612. [Google Scholar] [CrossRef]

- Zhang, Z.; Xu, X.; Cui, J.; Meng, W. Multi-UAV Area Coverage Based on Relative Localization: Algorithms and Optimal UAV Placement. Sensors 2021, 21, 2400. [Google Scholar] [CrossRef] [PubMed]

- Tong, P.; Yang, X.; Yang, Y.; Liu, W.; Wu, P. Multi-UAV Collaborative Absolute Vision Positioning and Navigation: A Survey and Discussion. Drones 2023, 7, 261. [Google Scholar] [CrossRef]

- Wei, J.; Yilmaz, A. A Visual Odometry Pipeline for Real-Time UAS Geopositioning. Drones 2023, 7, 569. [Google Scholar] [CrossRef]

- Cheng, H.-W.; Chen, T.-L.; Tien, C.-H. Motion Estimation by Hybrid Optical Flow Technology for UAV Landing in an Unvisited Area. Sensors 2019, 19, 1380. [Google Scholar] [CrossRef] [PubMed]

- Li, C.; Cui, J.; Qi, X.; Jing, Y.; Ma, H. The Improved Optimization Algorithm for UAV SLAM in Visual Odometry-Pose Estimation. In Proceedings of the 2023 35th Chinese Control and Decision Conference (CCDC), Yichang, China, 20–22 May 2023. [Google Scholar] [CrossRef]

- Leprince, S.; Barbot, S.; Ayoub, F.; Avouac, J.-P. Automatic and Precise Orthorectification, Coregistration, and Subpixel Correlation of Satellite Images, Application to Ground Deformation Measurements. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1529–1558. [Google Scholar] [CrossRef]

- Van Dalen, G.J.; Magree, D.P.; Johnson, E.N. Absolute Localization using Image Alignment and Particle Filtering. In Proceedings of the AIAA Guidance, Navigation, and Control Conference, San Diego, CA, USA, 4–8 January 2016. [Google Scholar] [CrossRef]

- Yol, A.; Delabarre, B.; Dame, A.; Dartois, J.-E.; Marchand, E. Vision-based absolute localization for unmanned aerial vehicles. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014. [Google Scholar] [CrossRef]

- Chen, C.; Tian, Y.; Lin, L.; Chen, S.; Li, H.; Wang, Y.; Su, K. Obtaining World Coordinate Information of UAV in GNSS Denied Environments. Sensors 2020, 20, 2241. [Google Scholar] [CrossRef]

- Basan, E.; Basan, A.; Nekrasov, A.; Fidge, C.; Sushkin, N.; Peskova, O. GPS-Spoofing Attack Detection Technology for UAVs Based on Kullback–Leibler Divergence. Drones 2022, 6, 8. [Google Scholar] [CrossRef]

- Kalaitzakis, M.; Vitzilaios, N. UAS Control under GNSS Degraded and Windy Conditions. Robotics 2023, 12, 123. [Google Scholar] [CrossRef]

- Li, B.; Gan, Z.; Chen, D.; Sergey Aleksandrovich, D. UAV Maneuvering Target Tracking in Uncertain Environments Based on Deep Reinforcement Learning and Meta-Learning. Remote Sens. 2020, 12, 3789. [Google Scholar] [CrossRef]

- Liu, Y.; Zhao, B.; Zhang, X.; Nie, W.; Gou, P.; Liao, J.; Wang, K. A Practical Deep Learning Architecture for Large-Area Solid Wastes Monitoring Based on UAV Imagery. Appl. Sci. 2024, 14, 2084. [Google Scholar] [CrossRef]

- Lu, F.; Li, K.; Nie, Y.; Tao, Y.; Yu, Y.; Huang, L.; Wang, X. Object Detection of UAV Images from Orthographic Perspective Based on Improved YOLOv5s. Sustainability 2023, 15, 14564. [Google Scholar] [CrossRef]

- Cao, Z.; Kooistra, L.; Wang, W.; Guo, L.; Valente, J. Real-Time Object Detection Based on UAV Remote Sensing: A Systematic Literature Review. Drones 2023, 7, 620. [Google Scholar] [CrossRef]

- Lee, Y.; An, J.; Joe, I. Deep-Learning-Based Object Filtering According to Altitude for Improvement of Obstacle Recognition during Autonomous Flight. Remote Sens. 2022, 14, 1378. [Google Scholar] [CrossRef]

- Zhao, Y.; Yan, L.; Dai, J.; Hu, X.; Wei, P.; Xie, H. Robust Planning System for Fast Autonomous Flight in Complex Unknown Environment Using Sparse Directed Frontier Points. Drones 2023, 7, 219. [Google Scholar] [CrossRef]

- Liu, M.; Wang, X.; Zhou, A.; Fu, X.; Ma, Y.; Piao, C. UAV-YOLO: Small Object Detection on Unmanned Aerial Vehicle Perspective. Sensors 2020, 20, 2238. [Google Scholar] [CrossRef]

- Sang, J.; Wu, Z.; Guo, P.; Hu, H.; Xiang, H.; Zhang, Q.; Cai, B. An Improved YOLOv2 for Vehicle Detection. Sensors 2018, 18, 4272. [Google Scholar] [CrossRef] [PubMed]

- Yeh, C.-C.; Chang, Y.-L.; Alkhaleefah, M.; Hsu, P.-H.; Eng, W.; Koo, V.-C.; Huang, B.; Chang, L. YOLOv3-Based Matching Approach for Roof Region Detection from Drone Images. Remote Sens. 2021, 13, 127. [Google Scholar] [CrossRef]

- Singha, S.; Aydin, B. Automated Drone Detection Using YOLOv4. Drones 2021, 5, 95. [Google Scholar] [CrossRef]

- Aydin, B.; Singha, S. Drone Detection Using YOLOv5. Eng 2023, 4, 416–433. [Google Scholar] [CrossRef]

- Kucukayan, G.; Karacan, H. YOLO-IHD: Improved Real-Time Human Detection System for Indoor Drones. Sensors 2024, 24, 922. [Google Scholar] [CrossRef]

- Portugal, M.; Marta, A.C. Optimal Multi-Sensor Obstacle Detection System for Small Fixed-Wing UAVs. Modelling 2024, 5, 16–36. [Google Scholar] [CrossRef]

- Ma, M.-Y.; Shen, S.-E.; Huang, Y.-C. Enhancing UAV Visual Landing Recognition with YOLO’s Object Detection by Onboard Edge Computing. Sensors 2023, 23, 8999. [Google Scholar] [CrossRef] [PubMed]

- Shahi, T.B.; Dahal, S.; Sitaula, C.; Neupane, A.; Guo, W. Deep Learning-Based Weed Detection Using UAV Images: A Comparative Study. Drones 2023, 7, 624. [Google Scholar] [CrossRef]

- Liu, J.; Gu, Q.; Chen, D.; Yan, D. VSLAM method based on object detection in dynamic environments. Front. Neurorobot. 2022, 16, 990453. [Google Scholar] [CrossRef]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar] [CrossRef]

- Terven, J.; Córdova-Esparza, D.-M.; Romero-González, J.-A. A Comprehensive Review of YOLO Architectures in Computer Vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716. [Google Scholar] [CrossRef]

- Liu, B.; Luo, H. An Improved Yolov5 for Multi-Rotor UAV Detection. Electronics 2022, 11, 2330. [Google Scholar] [CrossRef]

- Nepal, U.; Eslamiat, H. Comparing YOLOv3, YOLOv4 and YOLOv5 for Autonomous Landing Spot Detection in Faulty UAVs. Sensors 2022, 22, 464. [Google Scholar] [CrossRef]

- Norkobil Saydirasulovich, S.; Abdusalomov, A.; Jamil, M.K.; Nasimov, R.; Kozhamzharova, D.; Cho, Y.-I. A YOLOv6-Based Improved Fire Detection Approach for Smart City Environments. Sensors 2023, 23, 3161. [Google Scholar] [CrossRef]

- Zeng, Y.; Zhang, T.; He, W.; Zhang, Z. YOLOv7-UAV: An Unmanned Aerial Vehicle Image Object Detection Algorithm Based on Improved YOLOv7. Electronics 2023, 12, 3141. [Google Scholar] [CrossRef]

- Wang, G.; Chen, Y.; An, P.; Hong, H.; Hu, J.; Huang, T. UAV-YOLOv8: A Small-Object-Detection Model Based on Improved YOLOv8 for UAV Aerial Photography Scenarios. Sensors 2023, 23, 7190. [Google Scholar] [CrossRef] [PubMed]

- Tang, L.; Yun, L.; Chen, Z.; Cheng, F. HRYNet: A Highly Robust YOLO Network for Complex Road Traffic Object Detection. Sensors 2024, 24, 642. [Google Scholar] [CrossRef] [PubMed]

- Wang, X.; Wang, A.; Yi, J.; Song, Y.; Chehri, A. Small Object Detection Based on Deep Learning for Remote Sensing: A Comprehensive Review. Remote Sens. 2023, 15, 3265. [Google Scholar] [CrossRef]

- Wang, Y.; Zhang, X.; Li, L.; Wang, L.; Zhou, Z.; Zhang, P. An Improved YOLOv7 Model Based on Visual Attention Fusion: Application to the Recognition of Bouncing Locks in Substation Power Cabinets. Appl. Sci. 2023, 13, 6817. [Google Scholar] [CrossRef]

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696. [Google Scholar] [CrossRef]

- Zhai, X.; Huang, Z.; Li, T.; Liu, H.; Wang, S. YOLO-Drone: An Optimized YOLOv8 Network for Tiny UAV Object Detection. Electronics 2023, 12, 3664. [Google Scholar] [CrossRef]

- Imran, I.H.; Wood, K.; Montazeri, A. Adaptive Control of Unmanned Aerial Vehicles with Varying Payload and Full Parametric Uncertainties. Electronics 2024, 13, 347. [Google Scholar] [CrossRef]

- Bianchi, D.; Di Gennaro, S.; Di Ferdinando, M.; Acosta Lùa, C. Robust Control of UAV with Disturbances and Uncertainty Estimation. Machines 2023, 11, 352. [Google Scholar] [CrossRef]

- Li, B.; Zhu, X. A novel anti-disturbance control of quadrotor UAV considering wind and suspended payload. In Proceedings of the 2023 6th International Symposium on Autonomous Systems (ISAS), Nanjing, China, 23–25 June 2023. [Google Scholar] [CrossRef]

- Allahverdy, D.; Fakharian, A.; Menhaj, M.B. Back-Stepping Integral Sliding Mode Control with Iterative Learning Control Algorithm for Quadrotor UAV Transporting Cable-Suspended Payload. In Proceedings of the 2021 29th Iranian Conference on Electrical Engineering (ICEE), Tehran, Iran, 18–20 May 2021. [Google Scholar] [CrossRef]

- Rigatos, G.; Busawon, K.; Wira, P.; Abbaszadeh, M. Nonlinear Optimal Control of the UAV and Suspended Payload System. In Proceedings of the IECON 2018—44th Annual Conference of the IEEE Industrial Electronics Society, Washington, DC, USA, 21–23 October 2018. [Google Scholar] [CrossRef]

- Saponi, M.; Borboni, A.; Adamini, R.; Faglia, R.; Amici, C. Embedded Payload Solutions in UAVs for Medium and Small Package Delivery. Machines 2022, 10, 737. [Google Scholar] [CrossRef]

- Elmokadem, T.; Savkin, A.V. Towards Fully Autonomous UAVs: A Survey. Sensors 2021, 21, 6223. [Google Scholar] [CrossRef]

- Bassolillo, S.R.; Raspaolo, G.; Blasi, L.; D’Amato, E.; Notaro, I. Path Planning for Fixed-Wing Unmanned Aerial Vehicles: An Integrated Approach with Theta* and Clothoids. Drones 2024, 8, 62. [Google Scholar] [CrossRef]

- Falkowski, K.; Duda, M. Dynamic Models Identification for Kinematics and Energy Consumption of Rotary-Wing UAVs during Different Flight States. Sensors 2023, 23, 9378. [Google Scholar] [CrossRef] [PubMed]

- Huang, T.; Jiang, H.; Zou, Z.; Ye, L.; Song, K. An Integrated Adaptive Kalman Filter for High-Speed UAVs. Appl. Sci. 2019, 9, 1916. [Google Scholar] [CrossRef]

- Pereira, R.; Carvalho, G.; Garrote, L.; Nunes, U.J. Sort and Deep-SORT Based Multi-Object Tracking for Mobile Robotics: Evaluation with New Data Association Metrics. Appl. Sci. 2022, 12, 1319. [Google Scholar] [CrossRef]

- Johnston, S.J.; Cox, S.J. The Raspberry Pi: A Technology Disrupter, and the Enabler of Dreams. Electronics 2017, 6, 51. [Google Scholar] [CrossRef]

- Raspberry Pi Home Page. Available online: https://www.raspberrypi.com/products/raspberry-pi-4-model-b/specifications/ (accessed on 14 March 2024).

- Ortega, L.D.; Loyaga, E.S.; Cruz, P.J.; Lema, H.P.; Abad, J.; Valencia, E.A. Low-Cost Computer-Vision-Based Embedded Systems for UAVs. Robotics 2023, 12, 145. [Google Scholar] [CrossRef]

- Sheu, M.-H.; Jhang, Y.-S.; Morsalin, S.M.S.; Huang, Y.-F.; Sun, C.-C.; Lai, S.-C. UAV Object Tracking Application Based on Patch Color Group Feature on Embedded System. Electronics 2021, 10, 1864. [Google Scholar] [CrossRef]

- Delgado-Reyes, G.; Valdez-Martínez, J.S.; Hernández-Pérez, M.Á.; Pérez-Daniel, K.R.; García-Ramírez, P.J. Quadrotor Real-Time Simulation: A Temporary Computational Complexity-Based Approach. Mathematics 2022, 10, 2032. [Google Scholar] [CrossRef]

- Odroid European Distributor Home Page. Available online: https://www.odroid.co.uk/odroid-xu4 (accessed on 14 March 2024).

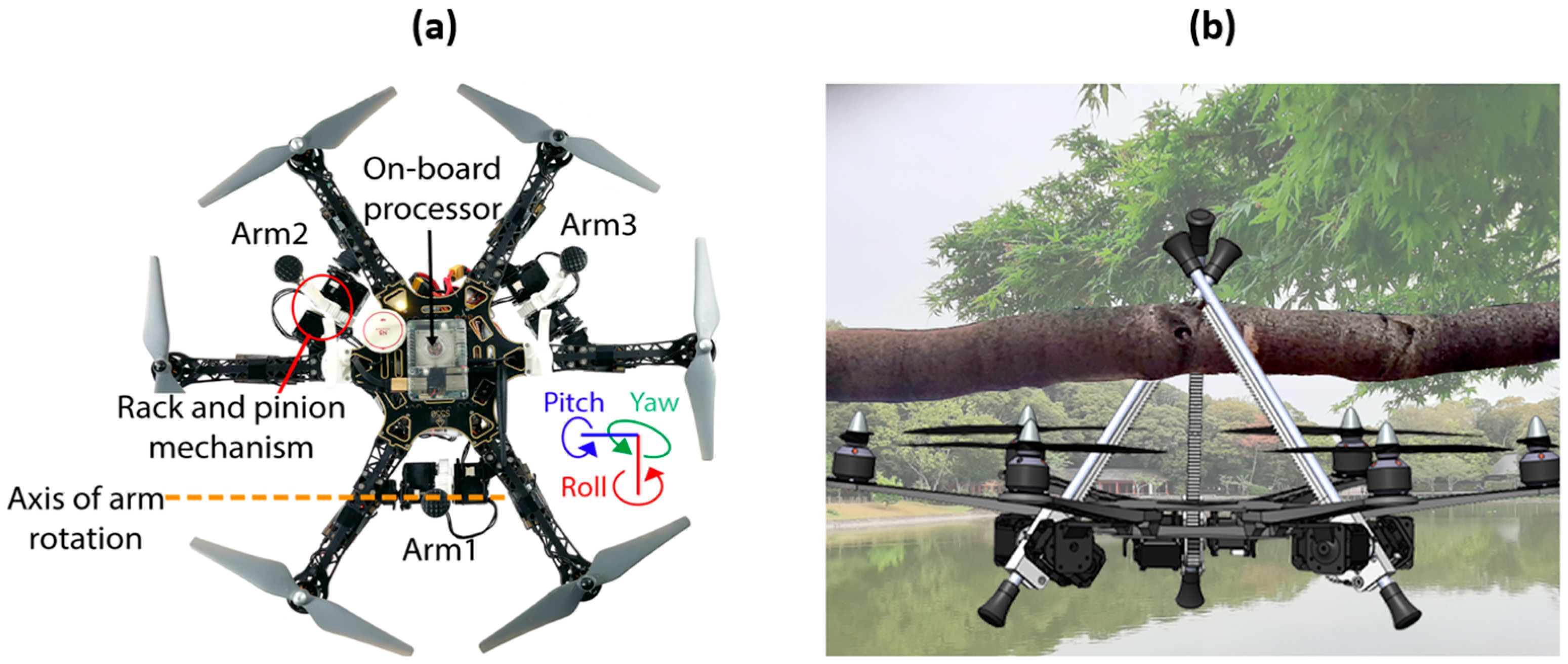

- Paul, H.; Martinez, R.R.; Ladig, R.; Shimonomura, K. Lightweight Multipurpose Three-Arm Aerial Manipulator Systems for UAV Adaptive Leveling after Landing and Overhead Docking. Drones 2022, 6, 380. [Google Scholar] [CrossRef]

- Opromolla, R.; Inchingolo, G.; Fasano, G. Airborne Visual Detection and Tracking of Cooperative UAVs Exploiting Deep Learning. Sensors 2019, 19, 4332. [Google Scholar] [CrossRef]

- Embedded Systems with Jetson Home Page. Available online: https://www.nvidia.com/de-de/autonomous-machines/embedded-systems/ (accessed on 14 March 2024).

- Oh, C.; Lee, M.; Lim, C. Towards Real-Time On-Drone Pedestrian Tracking in 4K Inputs. Drones 2023, 7, 623. [Google Scholar] [CrossRef]

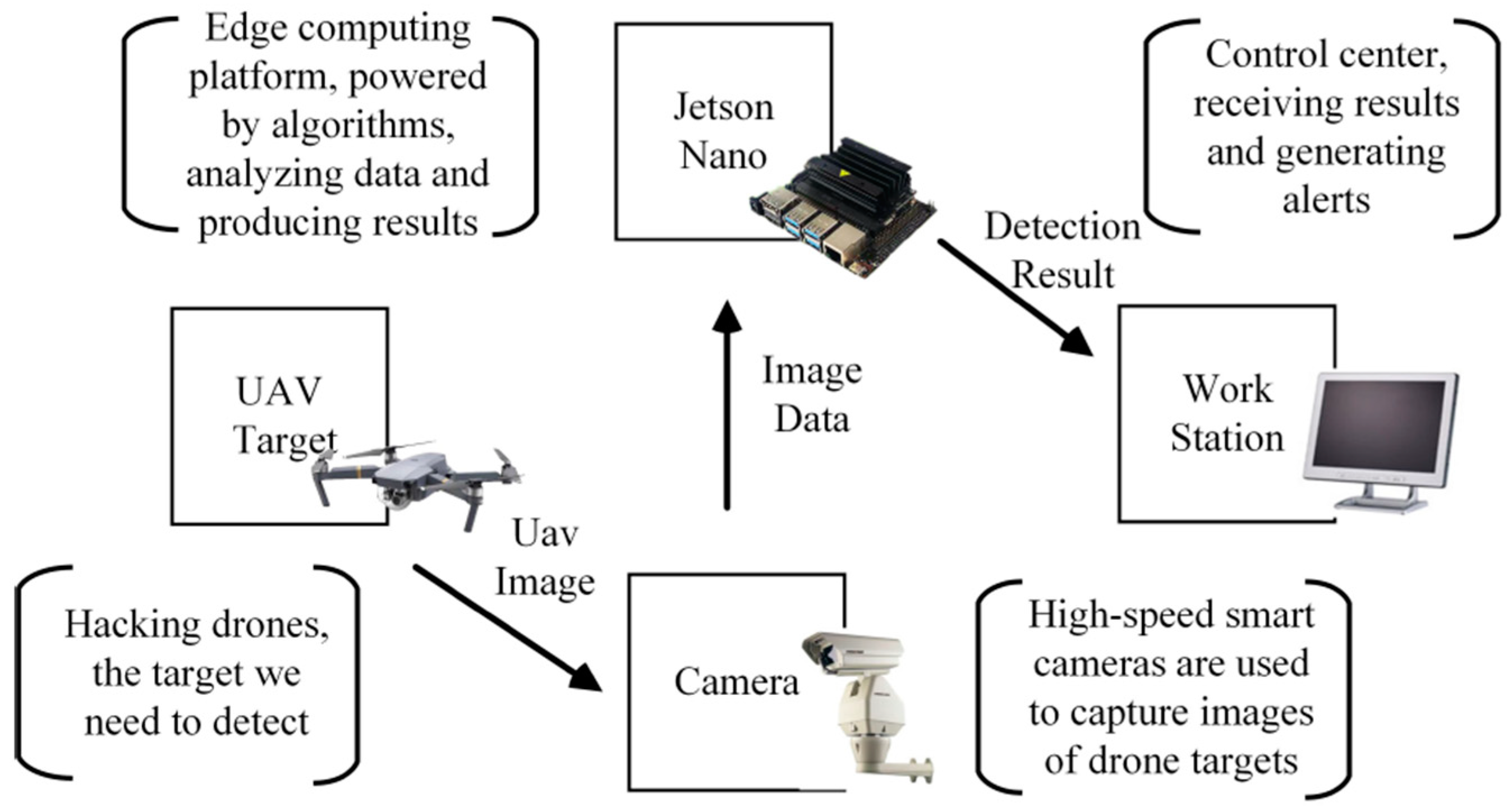

- Cheng, Q.; Wang, H.; Zhu, B.; Shi, Y.; Xie, B. A Real-Time UAV Target Detection Algorithm Based on Edge Computing. Drones 2023, 7, 95. [Google Scholar] [CrossRef]

- System on Module VS Single Board Computer. Available online: https://www.forlinx.net/industrial-news/som-vs-sbc-431.html (accessed on 15 March 2024).

- NXP i.MX 8 System on Module. Available online: https://www.variscite.de/variscite-products-imx8/ (accessed on 15 March 2024).

- High Performance CPU Recommended—Rockchip RK3399. Available online: https://www.forlinx.net/industrial-news/high-performance-cpu-recommended-rockchip-rk3399-331.html (accessed on 15 March 2024).

- Qualcomm Snapdragon Home Page. Available online: https://www.qualcomm.com/snapdragon/overview (accessed on 15 March 2024).

- STM32 32-bit Arm Cortex MCUs. Available online: https://www.st.com/en/microcontrollers-microprocessors/stm32-32-bit-arm-cortex-mcus.html (accessed on 15 March 2024).

- Martin, J.; Cantero, D.; González, M.; Cabrera, A.; Larrañaga, M.; Maltezos, E.; Lioupis, P.; Kosyvas, D.; Karagiannidis, L.; Ouzounoglou, E.; et al. Embedded Vision Intelligence for the Safety of Smart Cities. J. Imaging 2022, 8, 326. [Google Scholar] [CrossRef] [PubMed]

- Tang, G.; Hu, Y.; Xiao, H.; Zheng, L.; She, X.; Qin, N. Design of Real-time video transmission system based on 5G network. In Proceedings of the 2021 IEEE 16th Conference on Industrial Electronics and Applications (ICIEA), Chengdu, China, 1–4 August 2021. [Google Scholar] [CrossRef]

- Nguyen, P.H.; Arsalan, M.; Koo, J.H.; Naqvi, R.A.; Truong, N.Q.; Park, K.R. LightDenseYOLO: A Fast and Accurate Marker Tracker for Autonomous UAV Landing by Visible Light Camera Sensor on Drone. Sensors 2018, 18, 1703. [Google Scholar] [CrossRef] [PubMed]

- Buggiani, V.; Ortega, J.C.Ú.; Silva, G.; Rodríguez-Molina, J.; Vilca, D. An Inexpensive Unmanned Aerial Vehicle-Based Tool for Mobile Network Output Analysis and Visualization. Sensors 2023, 23, 1285. [Google Scholar] [CrossRef] [PubMed]

- Wu, T.; Guo, X.; Chen, Y.; Kumari, S.; Chen, C. Amassing the Security: An Enhanced Authentication Protocol for Drone Communications over 5G Networks. Drones 2022, 6, 10. [Google Scholar] [CrossRef]

- Cao, Y.; Qi, F.; Jing, Y.; Zhu, M.; Lei, T.; Li, Z.; Xia, J.; Wang, J.; Lu, G. Mission Chain Driven Unmanned Aerial Vehicle Swarms Cooperation for the Search and Rescue of Outdoor Injured Human Targets. Drones 2022, 6, 138. [Google Scholar] [CrossRef]

- Chen, J.I.-Z.; Lin, H.-Y. Performance Evaluation of a Quadcopter by an Optimized Proportional–Integral–Derivative Controller. Appl. Sci. 2023, 13, 8663. [Google Scholar] [CrossRef]

- Müezzinoğlu, T.; Karaköse, M. An Intelligent Human–Unmanned Aerial Vehicle Interaction Approach in Real Time Based on Machine Learning Using Wearable Gloves. Sensors 2021, 21, 1766. [Google Scholar] [CrossRef]

- Behjati, M.; Mohd Noh, A.B.; Alobaidy, H.A.H.; Zulkifley, M.A.; Nordin, R.; Abdullah, N.F. LoRa Communications as an Enabler for Internet of Drones towards Large-Scale Livestock Monitoring in Rural Farms. Sensors 2021, 21, 5044. [Google Scholar] [CrossRef]

- Saraereh, O.A.; Alsaraira, A.; Khan, I.; Uthansakul, P. Performance Evaluation of UAV-Enabled LoRa Networks for Disaster Management Applications. Sensors 2020, 20, 2396. [Google Scholar] [CrossRef] [PubMed]

- Mujumdar, O.; Celebi, H.; Guvenc, I.; Sichitiu, M.; Hwang, S.; Kang, K.-M. Use of LoRa for UAV Remote ID with Multi-User Interference and Different Spreading Factors. In Proceedings of the 2021 IEEE 93rd Vehicular Technology Conference (VTC2021-Spring), Helsinki, Finland, 25–28 April 2021. [Google Scholar] [CrossRef]

- Pan, M.; Chen, C.; Yin, X.; Huang, Z. UAV-Aided Emergency Environmental Monitoring in Infrastructure-Less Areas: LoRa Mesh Networking Approach. IEEE Internet Things J. 2021, 9, 2918–2932. [Google Scholar] [CrossRef]

- Delafontaine, V.; Schiano, F.; Cocco, G.; Rusu, A.; Floreano, D. Drone-aided Localization in LoRa IoT Networks. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Virtual, 31 May–31 August 2020; pp. 286–292. [Google Scholar] [CrossRef]

- Chen, L.-Y.; Huang, H.-S.; Wu, C.-J.; Tsai, Y.-T.; Chang, Y.-S. A LoRa-Based Air Quality Monitor on Unmanned Aerial Vehicle for Smart City. In Proceedings of the 2018 International Conference on System Science and Engineering (ICSSE), New Taipei City, Taiwan, 15 March 2018; pp. 1–5. [Google Scholar] [CrossRef]

- What Are LoRa and LoRaWAN? Available online: https://lora-developers.semtech.com/documentation/tech-papers-and-guides/lora-and-lorawan/ (accessed on 18 March 2024).

- LoRa Home Page. Available online: https://lora.readthedocs.io/en/latest/ (accessed on 18 March 2024).

- Semtech Home Page. Available online: https://www.semtech.fr/products/wireless-rf/lora-connect/sx1278 (accessed on 19 March 2024).

- Octopart Home Page. Available online: https://octopart.com/rn2483-i%2Frm101-microchip-71047793 (accessed on 19 March 2024).

- Octopart Home Page. Available online: https://octopart.com/rfm95w-868s2-hoperf-96011900 (accessed on 19 March 2024).

- Zeng, T.; Mozaffari, M.; Semiari, O.; Saad, W.; Bennis, M.; Debbah, M. Wireless Communications and Control for Swarms of Cellular-Connected UAVs. In Proceedings of the 2018 52nd Asilomar Conference on Signals, Systems, and Computers, Pacific Grove, CA, USA, 28–31 October 2018. [Google Scholar] [CrossRef]

- Alhoraibi, L.; Alghazzawi, D.; Alhebshi, R.; Rabie, O.B.J. Physical Layer Authentication in Wireless Networks-Based Machine Learning Approaches. Sensors 2023, 23, 1814. [Google Scholar] [CrossRef] [PubMed]

- Guillen-Perez, A.; Sanchez-Iborra, R.; Cano, M.-D.; Sanchez-Aarnoutse, J.C.; Garcia-Haro, J. WiFi networks on drones. In Proceedings of the 2016 ITU Kaleidoscope: ICTs for a Sustainable World (ITU WT), Bangkok, Thailand, 14–16 November 2016. [Google Scholar] [CrossRef]

- Chen, Z.; Yin, D.; Chen, D.; Pan, M.; Lai, J. WiFi-based UAV Communication and Monitoring System in Regional Inspection. In Proceedings of the 2017 International Conference on Computer Technology, Electronics and Communication (ICCTEC), Dalian, China, 19–21 December 2017. [Google Scholar] [CrossRef]

- Anggraeni, P.; Khoirunnisa, H.; Rizal, M.N.; Alfadhila, M.F. Implementation of WiFi Communication on Multi UAV for Leader-Follower Trajectory based on ROS. In Proceedings of the 2023 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Bali, Indonesia, 20–23 February 2023. [Google Scholar] [CrossRef]

- Different Wi-Fi Protocols and Data Rates. Available online: https://www.intel.com/content/www/us/en/support/articles/000005725/wireless/legacy-intel-wireless-products.html (accessed on 20 March 2024).

- ESP8266 A Cost-Effective and Highly Integrated Wi-Fi MCU for IoT Applications. Available online: https://www.espressif.com/en/products/socs/esp8266 (accessed on 20 March 2024).

- ESP32 A Feature-Rich MCU with Integrated Wi-Fi and Bluetooth Connectivity for a Wide-Range of Applications. Available online: https://www.espressif.com/en/products/socs/esp32 (accessed on 20 March 2024).

- Adafruit CC3000 WiFi. Available online: https://learn.adafruit.com/adafruit-cc3000-wifi/overview (accessed on 20 March 2024).

- Nikodem, M.; Slabicki, M.; Bawiec, M. Efficient Communication Scheme for Bluetooth Low Energy in Large Scale Applications. Sensors 2020, 20, 6371. [Google Scholar] [CrossRef] [PubMed]

- Avilés-Viñas, J.; Carrasco-Alvarez, R.; Vázquez-Castillo, J.; Ortegón-Aguilar, J.; Estrada-López, J.J.; Jensen, D.D.; Peón-Escalante, R.; Castillo-Atoche, A. An Accurate UAV Ground Landing Station System Based on BLE-RSSI and Maximum Likelihood Target Position Estimation. Appl. Sci. 2022, 12, 6618. [Google Scholar] [CrossRef]

- Ariante, G.; Ponte, S.; Del Core, G. Bluetooth Low Energy based Technology for Small UAS Indoor Positioning. In Proceedings of the 2022 IEEE 9th International Workshop on Metrology for AeroSpace (MetroAeroSpace), Pisa, Italy, 27–29 June 2022. [Google Scholar] [CrossRef]

- Guruge, P.; Kocer, B.B.; Kayacan, E. A novel automatic UAV launcher design by using bluetooth low energy integrated electromagnetic releasing system. In Proceedings of the 2015 IEEE Region 10 Humanitarian Technology Conference (R10-HTC), Cebu City, Philippines, 9–12 December 2015. [Google Scholar] [CrossRef]

- nRF54H20 System-on-Chip. Available online: https://www.nordicsemi.com/Products/nRF54H20 (accessed on 20 March 2024).

- nRF54L15 System-on-Chip. Available online: https://www.nordicsemi.com/Products/nRF54L15 (accessed on 20 March 2024).

- SimpleLink™ 32-bit Arm Cortex-M3 Multiprotocol 2.4 GHz Wireless MCU with 128kB Flash. Available online: https://www.ti.com/product/CC2650?keyMatch=CC2650&tisearch=search-everything&usecase=GPN-ALT#tech-docs (accessed on 20 March 2024).

- Borkar, S.R. Long-term evolution for machines (LTE-M). In LPWAN Technologies for IoT and M2M Applications; Chaudhari, B.S., Zennaro, M., Eds.; Academic Press: Cambridge, MA, USA, 2020; pp. 145–166. [Google Scholar]

- Kukliński, S.; Szczypiorski, K.; Chemouil, P. UAV Support for Mission Critical Services. Energies 2022, 15, 5681. [Google Scholar] [CrossRef]

- Singh, R.; Jepsen, J.H.; Ballal, K.D.; Nwabuona, S.; Berger, M.; Dittmann, L. An Investigation of 5G, LTE, LTE-M and NB-IoT Coverage for Drone Communication Above 450 Feet. In Proceedings of the 2023 IEEE 24th International Symposium on a World of Wireless, Mobile and Multimedia Networks (WoWMoM), Boston, MA, USA, 12–15 June 2023. [Google Scholar] [CrossRef]

- MC Technologies Home Page. Available online: https://mc-technologies.com/en/produkt/quectel-module-bg95-m3-lga/ (accessed on 21 March 2024).

- Techship Home Page. Available online: https://techship.com/product/telit-me310g1-ww-cat-m1-nb-iot-ssku-lga/?variant=005 (accessed on 21 March 2024).

- Surojaya, A.; Zhang, N.; Bergado, J.R.; Nex, F. Towards Fully Autonomous UAV: Damaged Building-Opening Detection for Outdoor-Indoor Transition in Urban Search and Rescue. Electronics 2024, 13, 558. [Google Scholar] [CrossRef]

- Schraml, S.; Hubner, M.; Taupe, P.; Hofstätter, M.; Amon, P.; Rothbacher, D. Real-Time Gamma Radioactive Source Localization by Data Fusion of 3D-LiDAR Terrain Scan and Radiation Data from Semi-Autonomous UAV Flights. Sensors 2022, 22, 9198. [Google Scholar] [CrossRef] [PubMed]

- Zafar, M.A.; Rauf, A.; Ashraf, Z.; Akhtar, H. Design and development of effective manual control system for unmanned air vehicle. In Proceedings of the 2011 3rd International Conference on Computer Research and Development, Shanghai, China, 11–13 March 2011. [Google Scholar] [CrossRef]

- Elamin, A.; El-Rabbany, A. UAV-Based Multi-Sensor Data Fusion for Urban Land Cover Mapping Using a Deep Convolutional Neural Network. Remote Sens. 2022, 14, 4298. [Google Scholar] [CrossRef]

- Szafranski, G.; Czyba, R.; Janusz, W.; Blotnicki, W. Altitude estimation for the UAV’s applications based on sensors fusion algorithm. In Proceedings of the 2013 International Conference on Unmanned Aircraft Systems (ICUAS), Atlanta, GA, USA, 28–31 May 2013. [Google Scholar] [CrossRef]

- MS561101BA03-50. Available online: https://www.te.com/usa-en/product-MS561101BA03-50.html (accessed on 23 March 2024).

- Pressure Sensor BMP388. Available online: https://www.bosch-sensortec.com/products/environmental-sensors/pressure-sensors/bmp388/ (accessed on 23 March 2024).

- Weber, C.; von Eichel-Streiber, J.; Rodrigo-Comino, J.; Altenburg, J.; Udelhoven, T. Automotive Radar in a UAV to Assess Earth Surface Processes and Land Responses. Sensors 2020, 20, 4463. [Google Scholar] [CrossRef]

- Ya’acob, N.; Zolkapli, M.; Johari, J.; Yusof, A.L.; Sarnin, S.S.; Asmadinar, A.Z. UAV environment monitoring system. In Proceedings of the 2017 International Conference on Electrical, Electronics and System Engineering (ICEESE), Kanazawa, Japan, 9–10 November 2017. [Google Scholar] [CrossRef]

- Raeva, P.L.; Šedina, J.; Dlesk, A. Monitoring of crop fields using multispectral and thermal imagery from UAV. In Proceedings of the 37th EARSeL Symposium: Smart Future with Remote Sensing, Prague, Czech Republic, 27–30 June 2017. [Google Scholar] [CrossRef]

- Sengupta, P. Can Precision Agriculture Be the Future of Indian Farming?—A Case Study across the South-24 Parganas District of West Bengal, India. Biol. Life Sci. Forum 2024, 30, 3. [Google Scholar] [CrossRef]

- Singh, D.K.; Jerath, H.; Raja, P. Low Cost IoT Enabled Weather Station. In Proceedings of the 2020 International Conference on Computation, Automation and Knowledge Management (ICCAKM), Dubai, United Arab Emirates, 9–10 January 2020.

- Shahadat, A.S.B.; Ayon, S.I.; Khatun, M.R. Efficient IoT based Weather Station. In Proceedings of the 2020 IEEE International Women in Engineering (WIE) Conference on Electrical and Computer Engineering (WIECON-ECE), Bhubaneswar, India, 26–27 December 2020.

- SHT75. Available online: https://sensirion.com/products/catalog/SHT75/ (accessed on 23 March 2024).

- Mestre, G.; Ruano, A.; Duarte, H.; Silva, S.; Khosravani, H.; Pesteh, S.; Ferreira, P.M.; Horta, R. An Intelligent Weather Station. Sensors 2015, 15, 31005–31022.

- Ladino, K.S.; Sama, M.P.; Stanton, V.L. Development and Calibration of Pressure-Temperature-Humidity (PTH) Probes for Distributed Atmospheric Monitoring Using Unmanned Aircraft Systems. Sensors 2022, 22, 3261.

- Ricaud, P.; Medina, P.; Durand, P.; Attié, J.-L.; Bazile, E.; Grigioni, P.; Guasta, M.D.; Pauly, B. In Situ VTOL Drone-Borne Observations of Temperature and Relative Humidity over Dome C, Antarctica. Drones 2023, 7, 532.

- Cai, W.; Du, S.; Yang, W. UAV image stitching by estimating orthograph with RGB cameras. J. Vis. Commun. Image Represent. 2023, 94, 103835.

- Intel® RealSense™ Depth Camera D435. Available online: https://www.intelrealsense.com/depth-camera-d435/ (accessed on 24 March 2024).

- Kim, W.; Luong, T.; Ha, Y.; Doh, M.; Yax, J.F.M.; Moon, H. High-Fidelity Drone Simulation with Depth Camera Noise and Improved Air Drag Force Models. Appl. Sci. 2023, 13, 10631.

- TaoZhang, R. UAV 3D mapping with RGB-D camera. In Proceedings of the 2017 Chinese Automation Congress (CAC), Jinan, China, 20–22 October 2017.

- Yeom, S. Thermal Image Tracking for Search and Rescue Missions with a Drone. Drones 2024, 8, 53.

- Feroz, S.; Abu Dabous, S. UAV-Based Remote Sensing Applications for Bridge Condition Assessment. Remote Sens. 2021, 13, 1809.

- Yang, J.-C.; Lin, C.-J.; You, B.-Y.; Yan, Y.-L.; Cheng, T.-H. RTLIO: Real-Time LiDAR-Inertial Odometry and Mapping for UAVs. Sensors 2021, 21, 3955.

- Melebari, A.; Nergis, P.; Eskandari, S.; Ramos Costa, P.; Moghaddam, M. Absolute Calibration of a UAV-Mounted Ultra-Wideband Software-Defined Radar Using an External Target in the Near-Field. Remote Sens. 2024, 16, 231.

- Mekik, C.; Arslanoglu, M. Investigation on Accuracies of Real Time Kinematic GPS for GIS Applications. Remote Sens. 2009, 1, 22–35.

- Heidarian Dehkordi, R.; Burgeon, V.; Fouche, J.; Placencia Gomez, E.; Cornelis, J.-T.; Nguyen, F.; Denis, A.; Meersmans, J. Using UAV Collected RGB and Multispectral Images to Evaluate Winter Wheat Performance across a Site Characterized by Century-Old Biochar Patches in Belgium. Remote Sens. 2020, 12, 2504.

- NEO-M8 Series Versatile u-blox M8 GNSS Modules. Available online: https://www.u-blox.com/en/product/neo-m8-series (accessed on 24 March 2024).

- Yang, M.; Zhou, Z.; You, X. Research on Trajectory Tracking Control of Inspection UAV Based on Real-Time Sensor Data. Sensors 2022, 22, 3648.

- Krzysztofik, I.; Koruba, Z. Study on the Sensitivity of a Gyroscope System Homing a Quadcopter onto a Moving Ground Target under the Action of External Disturbance. Energies 2021, 14, 1696.

- Eling, C.; Klingbeil, L.; Kuhlmann, H. Real-Time Single-Frequency GPS/MEMS-IMU Attitude Determination of Lightweight UAVs. Sensors 2015, 15, 26212–26235.

- Li, C.; Bulman, H.; Whitley, T.; Li, S. Ultra-Wideband Communication and Sensor Fusion Platform for the Purpose of Multi-Perspective Localization. Sensors 2022, 22, 6880.

- Wu, P.; Su, S.; Zuo, Z.; Guo, X.; Sun, B.; Wen, X. Time Difference of Arrival (TDoA) Localization Combining Weighted Least Squares and Firefly Algorithm. Sensors 2019, 19, 2554.

- Lian Sang, C.; Adams, M.; Hörmann, T.; Hesse, M.; Porrmann, M.; Rückert, U. Numerical and Experimental Evaluation of Error Estimation for Two-Way Ranging Methods. Sensors 2019, 19, 616.

- DWM1000 3.5–6.5 GHz Ultra-Wideband (UWB) Transceiver Module. Available online: https://www.qorvo.com/products/p/DWM1000 (accessed on 25 March 2024).

- Steup, C.; Beckhaus, J.; Mostaghim, S. A Single-Copter UWB-Ranging-Based Localization System Extendable to a Swarm of Drones. Drones 2021, 5, 85.

- Ultrasonic Distance Sensor-HC-SR04 (5V). Available online: https://www.sparkfun.com/products/15569 (accessed on 25 March 2024).

- Rahmaniar, W.; Wang, W.-J.; Caesarendra, W.; Glowacz, A.; Oprzędkiewicz, K.; Sułowicz, M.; Irfan, M. Distance Measurement of Unmanned Aerial Vehicles Using Vision-Based Systems in Unknown Environments. Electronics 2021, 10, 1647.

- MB1222 I2CXL-MaxSonar-EZ2. Available online: https://maxbotix.com/products/mb1222 (accessed on 25 March 2024).

- Yang, L.; Feng, X.; Zhang, J.; Shu, X. Multi-Ray Modeling of Ultrasonic Sensors and Application for Micro-UAV Localization in Indoor Environments. Sensors 2019, 19, 1770.

- van Berlo, B.; Elkelany, A.; Ozcelebi, T.; Meratnia, N. Millimeter Wave Sensing: A Review of Application Pipelines and Building Blocks. IEEE Sens. J. 2021, 21, 10332–10368.

- Soumya, A.; Krishna Mohan, C.; Cenkeramaddi, L.R. Recent Advances in mmWave-Radar-Based Sensing, Its Applications, and Machine Learning Techniques: A Review. Sensors 2023, 23, 8901.

- Başpınar, Ö.O.; Omuz, B.; Öncü, A. Detection of the Altitude and On-the-Ground Objects Using 77-GHz FMCW Radar Onboard Small Drones. Drones 2023, 7, 86.

- AVIA. Available online: https://www.livoxtech.com/avia (accessed on 25 March 2024).

- Luo, H.; Wen, C.-Y. A Low-Cost Relative Positioning Method for UAV/UGV Coordinated Heterogeneous System Based on Visual-Lidar Fusion. Aerospace 2023, 10, 924.

- Pourrahmani, H.; Bernier, C.M.I.; Van herle, J. The Application of Fuel-Cell and Battery Technologies in Unmanned Aerial Vehicles (UAVs): A Dynamic Study. Batteries 2022, 8, 73.

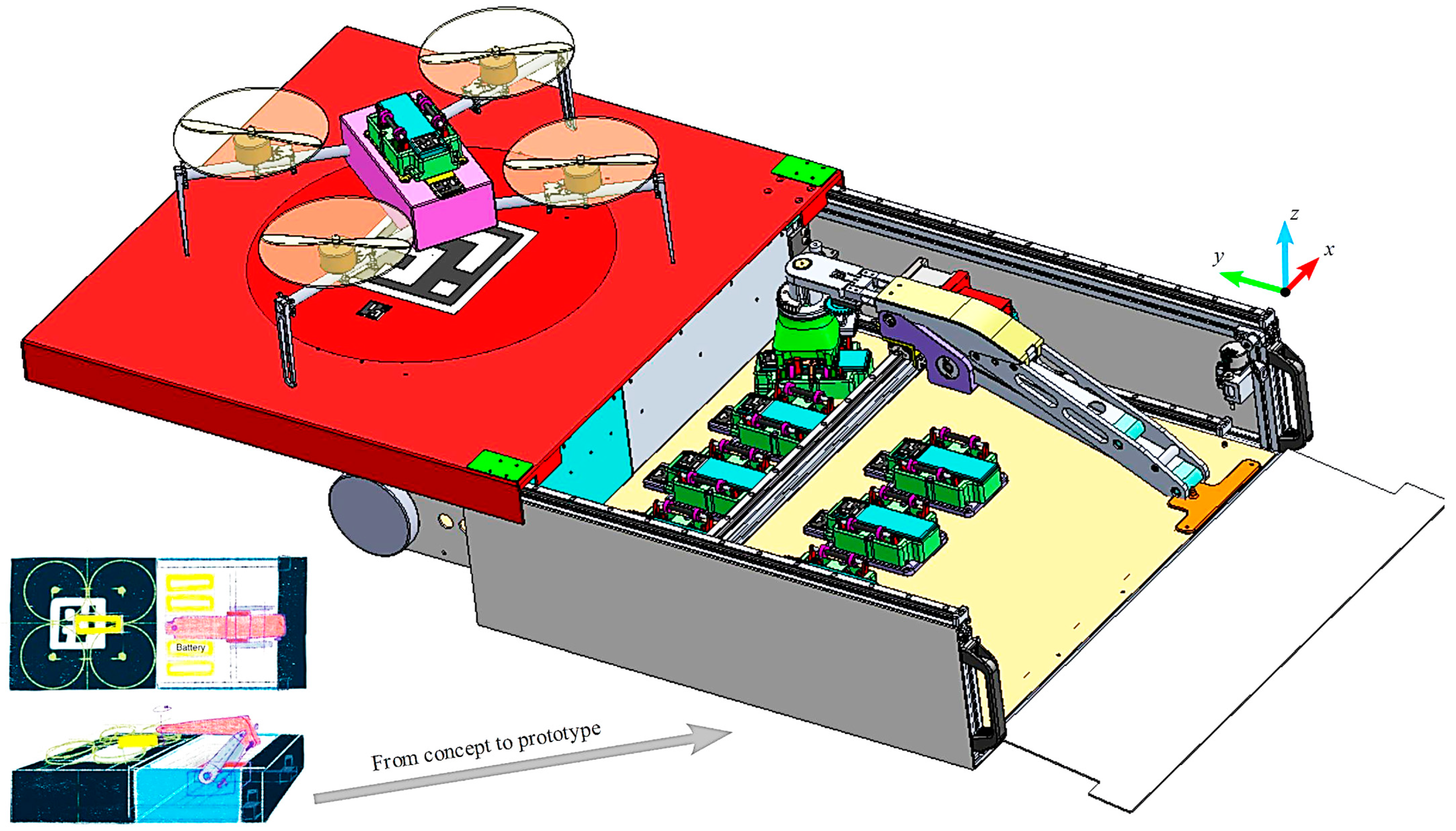

- Bláha, L.; Severa, O.; Goubej, M.; Myslivec, T.; Reitinger, J. Automated Drone Battery Management System—Droneport: Technical Overview. Drones 2023, 7, 234.

- Jarrah, K.; Alali, Y.; Lalko, A.; Rawashdeh, O. Flight Time Optimization and Modeling of a Hybrid Gasoline–Electric Multirotor Drone: An Experimental Study. Aerospace 2022, 9, 799.

- Chu, Y.; Ho, C.; Lee, Y.; Li, B. Development of a Solar-Powered Unmanned Aerial Vehicle for Extended Flight Endurance. Drones 2021, 5, 44.

- Osmani, K.; Haddad, A.; Alkhedher, M.; Lemenand, T.; Castanier, B.; Ramadan, M. A Novel MPPT-Based Lithium-Ion Battery Solar Charger for Operation under Fluctuating Irradiance Conditions. Sustainability 2023, 15, 9839.

- Camas-Náfate, M.; Coronado-Mendoza, A.; Vargas-Salgado, C.; Águila-León, J.; Alfonso-Solar, D. Optimizing Lithium-Ion Battery Modeling: A Comparative Analysis of PSO and GWO Algorithms. Energies 2024, 17, 822.

- Suti, A.; Di Rito, G.; Mattei, G. Development and Experimental Validation of Novel Thevenin-Based Hysteretic Models for Li-Po Battery Packs Employed in Fixed-Wing UAVs. Energies 2022, 15, 9249.

- Tang, P.; Li, J.; Sun, H. A Review of Electric UAV Visual Detection and Navigation Technologies for Emergency Rescue Missions. Sustainability 2024, 16, 2105.

- Guan, S.; Zhu, Z.; Wang, G. A Review on UAV-Based Remote Sensing Technologies for Construction and Civil Applications. Drones 2022, 6, 117.

- Zhang, Z.; Zhu, L. A Review on Unmanned Aerial Vehicle Remote Sensing: Platforms, Sensors, Data Processing Methods, and Applications. Drones 2023, 7, 398.

- Abro, G.E.M.; Zulkifli, S.A.B.M.; Masood, R.J.; Asirvadam, V.S.; Laouiti, A. Comprehensive Review of UAV Detection, Security, and Communication Advancements to Prevent Threats. Drones 2022, 6, 284.

- Sharma, A.; Vanjani, P.; Paliwal, N.; Basnayaka, C.M.W.; Jayakody, D.N.K.; Wang, H.-C.; Muthuchidambaranathan, P. Communication and networking technologies for UAVs: A survey. J. Netw. Comput. Appl. 2020, 168, 102739.

- Hu, X.; Assaad, R.H. The use of unmanned ground vehicles (mobile robots) and unmanned aerial vehicles (drones) in the civil infrastructure asset management sector: Applications, robotic platforms, sensors, and algorithms. Expert Syst. Appl. 2023, 232, 120897.

- Ducard, G.J.J.; Allenspach, M. Review of designs and flight control techniques of hybrid and convertible VTOL UAVs. Aerosp. Sci. Technol. 2021, 118, 107035.

- Saeed, A.S.; Younes, A.B.; Cai, C.; Cai, G. A survey of hybrid Unmanned Aerial Vehicles. Prog. Aerosp. Sci. 2018, 98, 91–105.

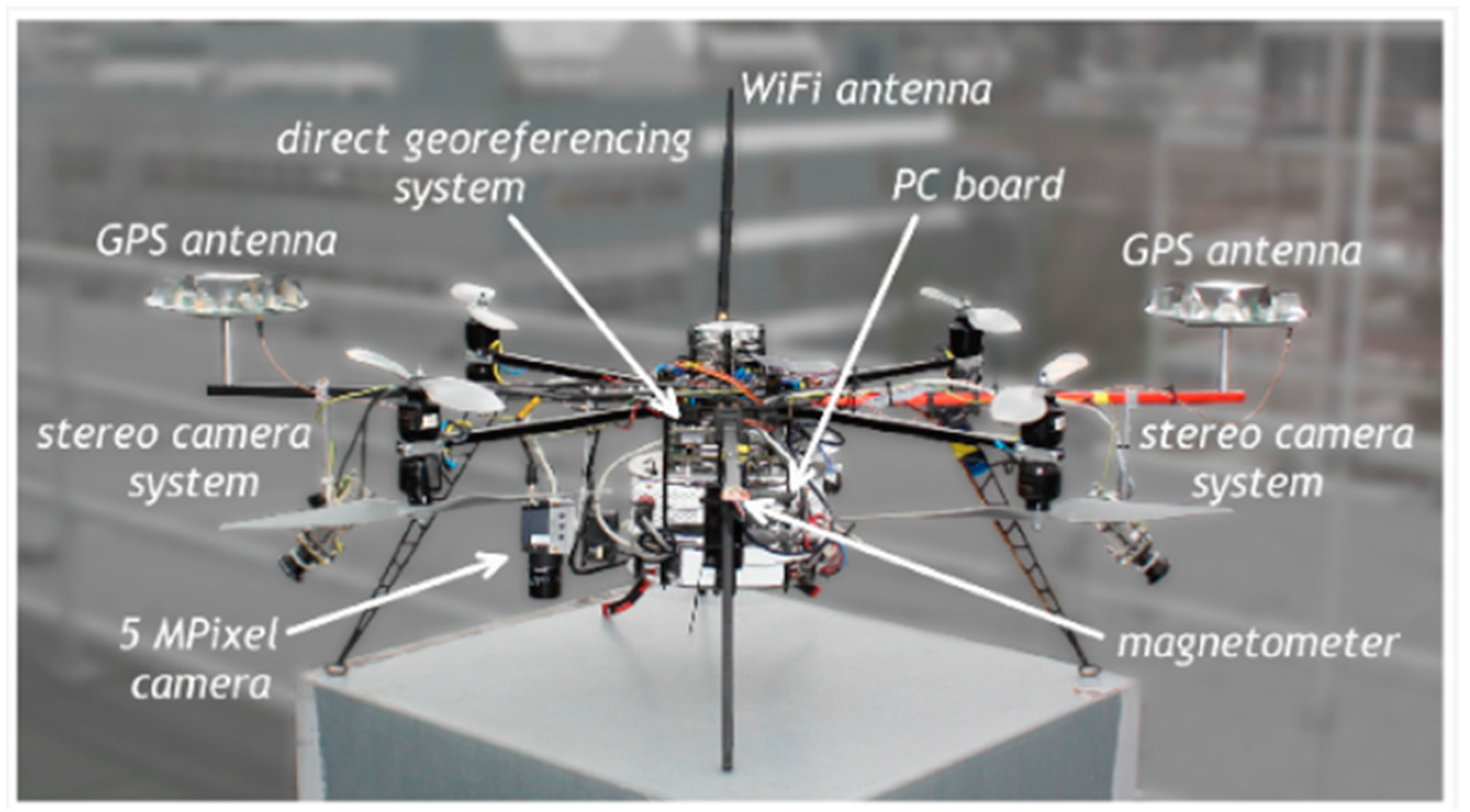

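- Digitalisierte, Rechtssichere und Emissionsarme Flugmobile Inspektion und Netzdatenerfassung mit Automatisierten Drohnen [Digitalized, Legally Compliant, and Low-Emission Airborne Inspection and Grid Data Acquisition with Automated Drones]. Available online: https://www.hsu-hh.de/rt/forschung/dned (accessed on 28 March 2024).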

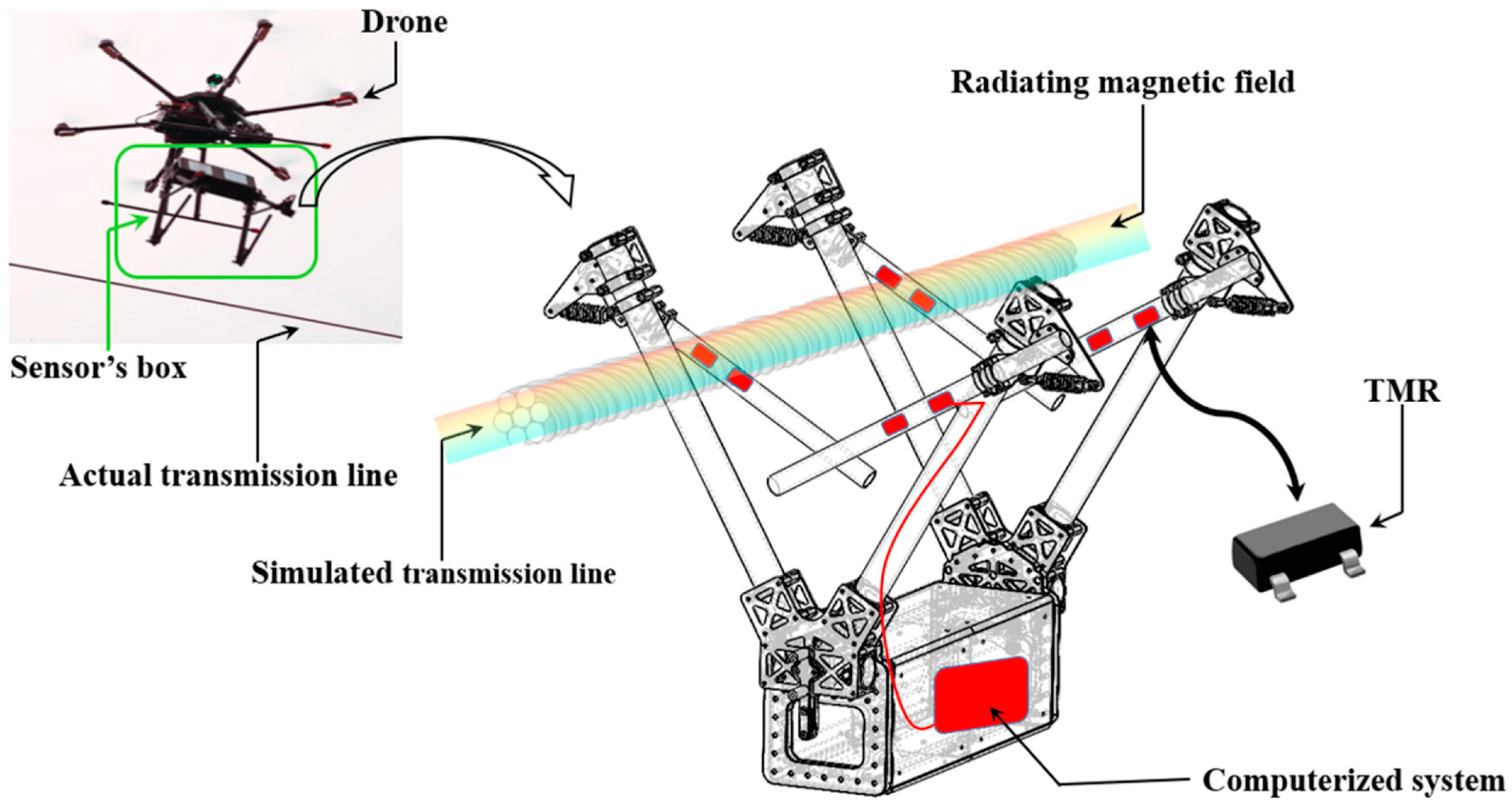

- Osmani, K.; Schulz, D. Modeling Magnetic Fields around Stranded Electrical Transmission Lines via Finite Element Analysis (FEA). Energies 2024, 17, 801.

Vision-Based Navigation for UAVs

| Advantages | Disadvantages | Challenges | Field of Application |
|---|---|---|---|
| ✓ Informative scene data | ✕ Complex environment structures increase the complexity of the navigation algorithm | Real-time processing requirements | Agriculture |
| ✓ Anti-jamming ability | ✕ Performance is degraded by adverse weather conditions | Integration with image-based sensing modalities | Surveillance |
| ✓ Relatively high accuracy | ✕ Vulnerable to visual illusions | Power consumption | Environmental monitoring |

Mathematical-Based AI Algorithms

| Algorithm | Ref. | Performance | Efficiency | Contribution |
|---|---|---|---|---|
| PSO | [112] | High | Moderate | Non-feasible paths can be attained by means of an error factor |
| ACO | [113] | Moderate | High | Intra-/inter-colony interactions yield better convergence toward an optimum |
| GA | [114] | High | High | Chromosome decoding yields path navigation acknowledgment |
| DE | [115] | Moderate | High | Better convergence is achieved by means of selective mutations |
| GWO | [116] | High | High | Flexible algorithm hybridization with UAV navigation-based data |
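
To make the PSO entry above more concrete, the following is a minimal, generic global-best PSO sketch for waypoint-based 2D path planning. It assumes a hypothetical scenario (start/goal points, circular obstacles, three free waypoints) and interprets the "error factor" as a penalty weight (`PENALTY`) that keeps infeasible paths in the search while penalizing their obstacle intrusion. All names and parameters (`w`, `c1`, `c2`, `PENALTY`) are illustrative assumptions; this is not the method of [112].

```python
import math
import random

# Hypothetical scenario (assumption): 2D start/goal and circular obstacles (x, y, radius).
START = (0.0, 0.0)
GOAL = (10.0, 10.0)
OBSTACLES = [(5.0, 5.0, 1.5), (3.0, 7.0, 1.0)]
N_WAYPOINTS = 3    # intermediate waypoints optimized by PSO
PENALTY = 100.0    # assumed "error factor" weighting infeasible (colliding) paths


def decode(position):
    """Turn a flat particle vector [x1, y1, x2, y2, ...] into a full path."""
    pts = [(position[i], position[i + 1]) for i in range(0, len(position), 2)]
    return [START] + pts + [GOAL]


def intrusion(path, samples=20):
    """Sum of penetration depths of sampled path points into obstacles (0 if feasible)."""
    total = 0.0
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        for k in range(samples + 1):
            t = k / samples
            px, py = x0 + t * (x1 - x0), y0 + t * (y1 - y0)
            for ox, oy, r in OBSTACLES:
                d = math.hypot(px - ox, py - oy)
                if d < r:
                    total += r - d
    return total


def cost(position):
    """Path length plus penalized obstacle intrusion."""
    path = decode(position)
    length = sum(math.dist(a, b) for a, b in zip(path, path[1:]))
    # Infeasible paths are not discarded; the error factor penalizes them instead.
    return length + PENALTY * intrusion(path)


def pso(n_particles=30, iterations=150, w=0.7, c1=1.5, c2=1.5, seed=1):
    """Global-best PSO over the waypoint coordinates, bounded to [0, 10]."""
    rng = random.Random(seed)
    dim, lo, hi = 2 * N_WAYPOINTS, 0.0, 10.0
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_cost = [cost(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_cost[i])
    gbest, gbest_cost = pbest[g][:], pbest_cost[g]
    for _ in range(iterations):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + cognitive (personal best) + social (global best) terms.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            c = cost(pos[i])
            if c < pbest_cost[i]:
                pbest[i], pbest_cost[i] = pos[i][:], c
                if c < gbest_cost:
                    gbest, gbest_cost = pos[i][:], c
    return decode(gbest), gbest_cost


if __name__ == "__main__":
    best_path, best_cost = pso()
    print("cost:", round(best_cost, 3))
    for x, y in best_path:
        print(f"({x:.2f}, {y:.2f})")
```

The penalty formulation is one common way to let a swarm pass through infeasible regions early and be pushed toward collision-free paths later; a larger `PENALTY` enforces feasibility more strictly at the cost of a rougher search landscape.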

| Algorithms Set | Working Mechanism |
|---|---|
| Sample-based | |
| Mathematical-based | |
| Multi-fusion | |
| Bio-inspired | |

YOLOvx-Algorithm Aspect

| Algorithm | Ref. | Working Mechanism | Additional Improvements | Performance |
|---|---|---|---|---|
| YOLOv6 | [181,182] | | Knowledge distillation (i.e., teacher–student training model) | Achieves higher mean average precision (mAP) than its predecessors at different frame rates (frames per second, FPS) |
| YOLOv7 | [183,184] | | Introduces a trainable bag-of-freebies | Improves accuracy while maintaining high detection speed |
| YOLOv8 | [185] | | Dynamic task-aligned allocator | Positive and negative samples are specified by an anchor-free detection model |

| SBC | Processor | RAM | Communication * | GPU | CPU Clock | Pros | Cons |
|---|---|---|---|---|---|---|---|
| Raspberry Pi 4 | 64-bit quad-core ARM | 4 GB LPDDR4 | Ethernet, USB, HDMI, Bluetooth, Wi-Fi, I2C, SPI, UART | VideoCore VI | 1.5 GHz | Available with up to 8 GB RAM | Overheating |
| Odroid XU4 | Samsung Exynos 5422 octa-core | 2 GB LPDDR3 | USB, Ethernet, HDMI, I2C, SPI, UART | Mali-T628 MP6 | 2 GHz | High processor performance | Not directly compatible with 3.3 V and 5 V accessories |
| NVIDIA Jetson TX2 | Dual-core NVIDIA Denver 2 64-bit; quad-core ARM Cortex-A57 | 8 GB LPDDR4 | Ethernet, USB, HDMI, UART, SPI, I2C, CAN | 256-core NVIDIA Pascal | 2 GHz | GPU acceleration | High power consumption |
| NVIDIA Jetson Nano | Quad-core ARM Cortex-A57 | 4 GB LPDDR4 | Ethernet, USB, HDMI, SPI, I2C, UART, CAN | NVIDIA Maxwell | 1.43 GHz | Good parallel processing | Overheating |

SoM Brand

| Criteria | NXP i.MX 8M | Rockchip RK3399 | Qualcomm Snapdragon | STM32 * |
|---|---|---|---|---|
| Processor | ARM Cortex-A53, A72 | ARM Cortex-A53, A72 | ARM Qualcomm Kryo | ARM Cortex-M4 |
| RAM | Up to 4 GB LPDDR4 | Up to 4 GB LPDDR4 | Up to 8 GB LPDDR4 | Up to 640 kB SRAM |
| Main programming languages | C, C#, C++, Python, Java | C, C++, Python, Java | C, C#, C++, Kotlin, Java | C, C++, MicroPython |
| Programming structure | Sequential, concurrent, asynchronous, real-time | Sequential, concurrent, asynchronous, real-time | Sequential, concurrent, asynchronous, real-time | Sequential, concurrent, asynchronous, real-time |
| Embedded wireless communication | Wi-Fi, Bluetooth | Wi-Fi, Bluetooth | Wi-Fi, Bluetooth | - |
| Power consumption | Low | Moderate | Moderate | Very low |
| Supported temperature range | −40 °C to +105 °C | −40 °C to +80 °C | −40 °C to +105 °C | −40 °C to +125 °C |
| Best suited for | Multimedia, industrial IoT | Multimedia, industrial IoT | AI, graphics processing, 5G | Real-time processing, embedded applications |

Characteristics

| Communication Technology | Module | Power Consumption | Indoor Range * [km] | Outdoor Range * [km] | Supported Frequency Range | Max Data Rate | RAM [bytes] | Transmission Power [dBm] |
|---|---|---|---|---|---|---|---|---|
| LoRa | SX1278 | Low | 5–10 | 20 | 137–1020 MHz | 300 kbps | 256–512 | 20 |
| LoRa | RN2483 | Low | 5–10 | 20 | 433/868/915 MHz | 300 kbps | 32 k | 18 |
| LoRa | HopeRF RFM95W-868S2 | Low | 5–10 | 20 | 860–1020 MHz | 300 kbps | 256–512 | 20 |
| Wi-Fi | ESP8266 | Moderate | 0.05–0.1 | 0.3 | 2.4 GHz | 72 Mbps | 96–160 k | 19 |
| Wi-Fi | ESP32 | Moderate | 0.05–0.1 | 0.3 | 2.4/5 GHz | 150 Mbps | 320–520 k | 19–20 |
| Wi-Fi | CC3000 | Moderate | 0.03 | 0.1 | 2.4 GHz | 10 Mbps | 8 k | 14 |
| BLE | nRF54H20 | Low | 0.05–0.15 | 0.2–0.4 | 2.4 GHz | 2 Mbps | 192–256 | −40 to +8 |
| BLE | nRF54L15 | Low | 0.05–0.15 | 0.2–0.4 | 2.4 GHz | 2 Mbps | 192–256 | −40 to +8 |
| BLE | CC2650 | Low | 0.05–0.15 | 0.2–0.4 | 2.4 GHz | 2 Mbps | 20–80 k | −40 to +5 |
| LTE-M | Quectel BG95-M3LGA | Low | - | - | LTE-M/NB-IoT/GSM/GPRS | 588 kbps | 32–64 M | 23 |
| LTE-M | Telit ME310G1-WW | Low | - | - | LTE-M/NB-IoT | 588 kbps | 64 M | 23 |
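
The transmission-power column is expressed in dBm, a logarithmic unit referenced to 1 mW, so P[mW] = 10^(P[dBm]/10). The Python sketch below converts a few of the tabulated values to milliwatts and estimates an idealized free-space link budget. The free-space path-loss model, the 0 dBi antenna gains, and the helper names are assumptions added for illustration; the model ignores terrain, fading, and Fresnel-zone obstruction, so practical ranges differ from those listed in the table.

```python
import math


def dbm_to_mw(p_dbm: float) -> float:
    """Convert power in dBm to milliwatts: P[mW] = 10^(P[dBm] / 10)."""
    return 10 ** (p_dbm / 10)


def fspl_db(distance_km: float, freq_mhz: float) -> float:
    """Free-space path loss in dB, with distance in km and frequency in MHz."""
    return 20 * math.log10(distance_km) + 20 * math.log10(freq_mhz) + 32.44


def received_power_dbm(tx_dbm: float, distance_km: float, freq_mhz: float,
                       gain_tx_dbi: float = 0.0, gain_rx_dbi: float = 0.0) -> float:
    """Idealized link budget (assumption): no cable, fading, or obstruction losses."""
    return tx_dbm + gain_tx_dbi + gain_rx_dbi - fspl_db(distance_km, freq_mhz)


if __name__ == "__main__":
    # Maximum transmission powers taken from the table above.
    for module, p_dbm in [("SX1278", 20), ("nRF54L15", 8), ("BG95-M3", 23)]:
        print(f"{module}: {p_dbm} dBm = {dbm_to_mw(p_dbm):.1f} mW")
    # -> 100.0 mW, 6.3 mW, 199.5 mW
    # Hypothetical 868 MHz LoRa link over 20 km with 0 dBi antennas.
    print(f"Rx power: {received_power_dbm(20, 20, 868):.2f} dBm")  # -> -97.23 dBm
```

Because the scale is logarithmic, the 3 dB gap between the 20 dBm LoRa modules and the 23 dBm LTE-M modules corresponds to roughly a doubling of radiated power.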

| Ref. | Control: Navigation | Control: Target Tracking | Control: Payload Integration | Computing: SBCs | Computing: SoM | Communication: LoRa | Communication: Wi-Fi | Communication: BLE | Communication: LTE-M | Sensory: Environmental | Sensory: Vision | Sensory: Position | Power: Battery | Power: PV–Batt | Power: Gasoline–Batt |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| This work | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| [305] | ✔ | ✔ | ✔ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |
| [306] | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✔ | ✔ | ✕ | ✕ | ✕ |
| [307] | ✔ | ✔ | ✔ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✔ | ✕ | ✕ | ✕ | ✕ |