Abstract

Online fatigue estimation is in growing demand, as fatigue can impair the health of college students and lower the quality of higher education. It is therefore essential to monitor college students’ fatigue to reduce its adverse effects on their health and academic performance. However, previous studies on student fatigue monitoring are mainly survey-based with offline analysis, rather than continuous fatigue monitoring. Hence, we propose an explainable student fatigue estimation model based on joint facial representation. This model includes two modules: a spatial–temporal symptom classification module and a data-experience joint status inferring module. The first module tracks a student’s face and uses a deep convolutional neural network (CNN) to generate spatial–temporal features for classifying abnormal fatigue symptoms; the second module infers a student’s status from the symptom classification results with maximum a posteriori (MAP) estimation under data-experience joint constraints. The model was trained on the benchmark NTHU Driver Drowsiness Detection (NTHU-DDD) dataset and tested on an Online Student Fatigue Monitoring (OSFM) dataset. Under the same training–testing setting, our method outperformed the other methods, with an accuracy of 94.47%. These results support real-time monitoring of students’ fatigue states during online classes and could also inform practical strategies for in-person education.

1. Introduction

It has been reported that prolonged online classes under COVID-19 pandemic education policies can cause moderate fatigue in college students []. Research indicates that higher fatigue levels can result in a degree of social isolation []. Both online fatigue itself and its adverse impacts can lower college students’ performance and grades, and the situation can be even more severe for students in poor physical or psychological condition. Online fatigue estimation has therefore become a timely tool, because its results can guide the teaching process and education administration.

At the beginning of the twentieth century, mass production began to replace traditional workshops in all walks of life []. The aviation and automobile industries boomed during this period, and the fatigue of metal structures in aircraft and cars became a pivotal concern, as these industries drove mass production []. From the middle of the twentieth century, a large body of fatigue research targeted defect detection and the fatigue endurance of metals and other materials to guarantee safe, high-speed industrial production []. At the same time, fatigue estimation in human operators became a focus of fatigue research, as human resources were also vital to keeping industry running at high speed []. In particular, the growth of the aviation and driving businesses made piloting fatigue an important area of fatigue research [,].

Nowadays, the heavy academic demands of higher education put college students under more physical and mental pressure than ever before []. As a result, most college students experience fatigue during class time, especially in the first class of the morning []. To date, questionnaires and surveys are the most frequently used methods in student fatigue research [,,,], whereas video-based deep learning is seldom used in this field. Building on previous piloting fatigue experiments, we devised a joint facial fatigue representation classification module and an explainable fatigue estimation module for online fatigue detection in college students. First, our model uses video data for online student fatigue monitoring. Because student states are captured directly from video, the monitoring results are more accurate and timely. Moreover, because the features from different fatigue-related facial regions are fused in the fatigue state inference process, the model is more robust and can distinguish and explain different degrees of fatigue.

The rest of the paper is organized as follows. Section 2 briefly reviews research on fatigue estimation. Section 3 describes the proposed explainable fatigue monitoring model. Section 4 presents experiments comparing the proposed student fatigue detection model with previous methods. Section 5 discusses its advantages over prior approaches, and Section 6 concludes the paper.

2. Related Works

Because fatigue is closely related to the biological recovery of the human body, numerous investigations and experiments have been carried out to estimate fatigue by various approaches. Broadly, these approaches can be categorized into four types: survey methods, instrument testing methods, observational methods, and blended methods.

2.1. Survey Methods

Questionnaire and survey methods are the traditional methods that have been frequently applied in student fatigue research. Here are some examples.

Through questionnaires, researchers have shown that near work can cause visual fatigue, trigger myopia, and increase the degree of myopia in college students [,,,,,,,,]. Since the outbreak of the COVID-19 pandemic, researchers have found that long-term online education can aggravate eye fatigue among college students [,,,]. Meanwhile, an online survey found that viral infections and psychological stress can trigger chronic fatigue syndrome among college students [].

2.2. Instrument Testing Methods

With the emergence of new materials and technologies, more and more innovative measuring instruments have been adopted in scientific, technical, and physiological examinations. At present, electroencephalograms (EEGs), electrocardiograms (ECGs), and electro-oculograms (EOGs) are widely used to test physical and mental fatigue. These instruments are noninvasive and safe, and data acquisition is nearly real-time. Moreover, the convenience and low cost of new wearable materials have greatly boosted the use of these instruments for detecting individuals’ fatigue states in different conditions and environments.

2.3. Observational Methods

Observation, the conscious noticing and detailed examination of participants’ behavior in a naturalistic setting, has been identified as a fundamental basis of all research methods []. Observation is not only one of the most important research methods in the social sciences but also one of the most complex []. It may be the main method of a project or one of several complementary qualitative methods []. Because fatigue detection and estimation belong to human behavioral science, many researchers have used observation to collect factors that influence the physical or mental states of drivers, students, workers, patients, and other populations. For example, Claudia Kedor and her colleagues observed chronic fatigue syndrome in 42 patients following COVID-19 [].

2.4. Blended Methods

Many studies combine more than one method to study the fatigue state of different populations. In particular, many researchers combine instrument testing with observation to study piloting fatigue, muscle fatigue, visual fatigue, and other types of fatigue. Nowadays, new techniques such as video technology and machine learning algorithms are combined to measure the degree of a fatigue state. Li and her colleagues stated that deep learning can readily capture complex facial features and uncover latent patterns in face images in certain settings []. Because face recognition can be made more efficient and precise by adding an attention mechanism [], introducing attention into observation-based methods can also improve their generalization and robustness. Machine learning and deep learning have been widely used to analyze visual fatigue among college students [].

In this research, we used an observational method, collecting long simulated raw videos of college students exhibiting normal and fatigue behavior, because observational methods enable real-time monitoring and thus make the research more reliable. These videos were then divided into 2474 clip samples, which were processed by machine learning to study the online fatigue of university students in relation to piloting fatigue.

3. Our Proposed Approach

3.1. The Proposed Framework

Facial fatigue symptoms include head nodding (head anomaly), yawning (mouth anomaly), and blink frequency (eye anomaly) []. However, owing to the diversity of equipment in online detection scenes, factors such as viewing angle and line of sight are likely to interfere with the detection of head movements, which are also inherently difficult to detect and therefore lead to low reliability. We therefore focus on eye anomalies and mouth anomalies as the two major fatigue symptoms in online classes, since they are also largely insensitive to head rotation. Inferring a student’s fatigue state from a direct data-to-fatigue mapping is neither reliable nor explainable: it is hard to determine the fatigue degree of the monitored subject and to act on such monitoring results. We therefore propose monitoring a student’s fatigue state through facial fatigue representations, namely eye and mouth anomalies, and then interpretably fusing all fatigue symptoms with a data-driven maximum a posteriori rule and human experience constraints.

Our model monitors the two facial representation subsets: the eye state representation and the mouth state representation. In each representation set, we distinguish the anomaly representation from the normal representation with a spatial–temporal feature extraction network constructed with temporal shift module (TSM) [] blocks. The extracted fatigue representations of both subsets are later fused with a naive Bayesian model to predict the overall fatigue state.

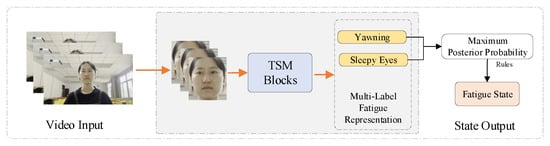

Our network comprises a facial fatigue representation classification module and an interpretable fatigue estimation module. As shown in Figure 1, in the facial fatigue representation classification module, the network takes preprocessed frame sequences as input and generates the facial fatigue representation on both subsets.

Figure 1.

Architecture of the interpretable student fatigue monitoring network. The proposed network includes an anomaly classification module and a Bayesian fatigue state inference module.

3.2. Fatigue Representation Classification

According to prior research on human fatigue, actions in the eye and mouth regions are the most significant facial indicators of a person’s fatigue state. We therefore propose a facial fatigue representation classification network built with TSM blocks to extract fatigue-relevant features from these two key areas.

In this module, the network first performs face detection on the raw video input to enhance the task-relevant region and suppress background noise. This step also benefits the robustness of the proposed model.
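A minimal sketch of the cropping step is shown below, assuming that some face detector has already returned an `(x, y, w, h)` bounding box for each frame. The detector itself is not shown, and the function names `crop_face`/`crop_sequence`, the box format, and the default 20% margin are illustrative assumptions rather than details given in the text.

```python
import numpy as np


def crop_face(frame: np.ndarray, box: tuple, margin: float = 0.2) -> np.ndarray:
    """Crop a face region from an H x W x C frame.

    `box` is a hypothetical (x, y, w, h) box from any face detector. It is
    enlarged by `margin` of its width/height on each side and clamped to the
    frame bounds, so eyes and mouth stay inside the crop while most of the
    background is discarded.
    """
    h_img, w_img = frame.shape[:2]
    x, y, w, h = box
    dx, dy = int(round(w * margin)), int(round(h * margin))
    x0, y0 = max(0, x - dx), max(0, y - dy)
    x1, y1 = min(w_img, x + w + dx), min(h_img, y + h + dy)
    return frame[y0:y1, x0:x1]


def crop_sequence(frames, boxes, margin: float = 0.2):
    """Apply crop_face to every frame of a clip with its per-frame box."""
    return [crop_face(f, b, margin) for f, b in zip(frames, boxes)]
```

In practice the crops would then be resized to the backbone's input resolution before being stacked into a clip tensor.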

Because the target fatigue symptoms in the eye and mouth regions are micro-actions, we used TSM blocks as the basic feature extraction unit to take advantage of state-of-the-art video action recognition approaches. In these units, temporal information in the input sequence is modeled by shifting part of the feature channels a short distance along the temporal dimension. In the facial fatigue representation learning module, three TSM blocks based on ResNet50 [] serve as the spatial–temporal representation extractor.
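The temporal shift can be illustrated with a short NumPy sketch of the bidirectional shift operation described for TSM: a fraction of the channels is shifted one step backward in time, another fraction one step forward, and the remaining channels are left unchanged, so neighbouring frames exchange information at zero extra FLOPs. The `fold_div=8` default (1/8 of channels per direction) follows the common TSM setting and is an assumption here; in TSM the shift is typically inserted in the residual branch of each ResNet block, which this sketch does not show.

```python
import numpy as np


def temporal_shift(x: np.ndarray, n_segment: int, fold_div: int = 8) -> np.ndarray:
    """Bidirectional temporal shift on features of shape (N * T, C, H, W).

    T == n_segment frames per clip. Channels [0, fold) take values from frame
    t + 1, channels [fold, 2 * fold) take values from frame t - 1, and the
    rest are copied unchanged; positions shifted past the clip edge are zero.
    """
    nt, c, h, w = x.shape
    n = nt // n_segment
    x = x.reshape(n, n_segment, c, h, w)
    fold = c // fold_div
    out = np.zeros_like(x)
    out[:, :-1, :fold] = x[:, 1:, :fold]                  # shift toward earlier frames
    out[:, 1:, fold:2 * fold] = x[:, :-1, fold:2 * fold]  # shift toward later frames
    out[:, :, 2 * fold:] = x[:, :, 2 * fold:]             # untouched channels
    return out.reshape(nt, c, h, w)
```

For example, with one clip of 4 frames and 8 channels, channel 0 of frame 0 receives the value of channel 0 from frame 1, while channel 1 of frame 0 is zero because there is no earlier frame to borrow from.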

Moreover, to enhance the generalizability and robustness of our model, we trained it on a driver fatigue detection benchmark dataset, which contains more data and more scenario variants. We adopted this training–testing procedure for three reasons. First, both tasks concern personnel fatigue, and drivers and students show consistent behavioral representations of fatigue. Second, our method extracts the facial region, which avoids the influence of the environmental background and other factors and can enhance the robustness of the algorithm across tasks. Third, the scarcity of datasets for student fatigue detection can be compensated for by the driver fatigue dataset.

To validate the generalizability of the trained model, we tested it on an independent dataset without fine-tuning. During training, the sigmoid cross-entropy loss was applied for multi-label facial representation classification of the extracted face sequences. Because sigmoid cross-entropy allows multiple positive classes, it was used in the anomaly detection stage to minimize the distance between the multi-label representation ground truth and the prediction. Moreover, the contributions of these independent classes were weighted separately with class weight factors, $w_j$. The loss function can be expressed as in Equation (1):

$$
\mathcal{L}(\theta) = -\frac{1}{N}\sum_{i=1}^{N}\sum_{j=1}^{C} w_j\left[y_{ij}\log\sigma(\hat{y}_{ij}) + (1-y_{ij})\log\left(1-\sigma(\hat{y}_{ij})\right)\right] + \lambda\lVert\theta\rVert_2^2 \tag{1}
$$

where $N$, $C$, $\hat{y}_{ij}$ and $y_{ij}$, $w_j$, $\theta$, and $\lambda$ are the batch size, the class number, the $j$-th class prediction and label of the $i$-th sample, the $j$-th class weight, the network parameters, and the $L_2$-regularization coefficient, respectively. The function $\sigma(x) = 1/(1+e^{-x})$ is the sigmoid activation function. The vectors $\mathbf{y}_i = (y_{i1}, \dots, y_{iC})$ and $\hat{\mathbf{y}}_i = (\hat{y}_{i1}, \dots, \hat{y}_{iC})$ are the label and prediction of the $i$-th sample in each batch.
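A class-weighted multi-label sigmoid cross-entropy with an L2 penalty, as described in the text, can be sketched in NumPy as follows. The per-sample loss is summed over classes with per-class weights and averaged over the batch; the function name, the `eps` clipping for numerical safety, and passing parameters as a list of arrays are illustrative assumptions, and in a PyTorch training loop the gradient would come from autograd rather than from this function.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def weighted_sigmoid_bce(logits, labels, class_weights, params=(), l2=0.0, eps=1e-12):
    """Class-weighted multi-label sigmoid cross-entropy plus an L2 penalty.

    logits, labels: (N, C) arrays; labels are 0/1 multi-hot vectors.
    class_weights: (C,) per-class weights.
    params: iterable of parameter arrays regularized with coefficient l2.
    """
    p = np.clip(sigmoid(np.asarray(logits, float)), eps, 1.0 - eps)
    labels = np.asarray(labels, float)
    per_elem = -(labels * np.log(p) + (1.0 - labels) * np.log(1.0 - p))
    data_term = (per_elem * np.asarray(class_weights, float)).sum(axis=1).mean()
    reg = l2 * sum(float(np.sum(np.square(w))) for w in params)
    return data_term + reg
```

With all-zero logits every element contributes log 2, so two classes with unit weights give 2 log 2 per sample; raising a class weight scales only that class's contribution.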

3.3. Interpretable Fatigue Estimation

Different fatigue symptoms represent different degrees of fatigue, so detailed symptom detection results can provide comprehensive information about a student’s fatigue state. In addition, purely data-driven methods such as support vector machines (SVMs) are susceptible to noisy data, while purely experience-driven methods such as thresholds adapt poorly to new environments. Compared with more complex classification algorithms that require large amounts of training data, the naive Bayesian classifier offers high learning efficiency and good classification performance, and it is widely used in text classification, content classification [,], and other tasks. Therefore, considering the particularity of state data labeling and the requirements for continuous and efficient detection in student fatigue monitoring scenarios, we used a MAP model constrained by experience rules to estimate the student fatigue state from the detected symptoms.

In the MAP model, the posterior probability is linked to the frequency of appearance, so noisy samples tend to be suppressed by probability averaging. The MAP model is therefore more robust under varied backgrounds and environments. To simplify the status inference problem, we assumed that the symptoms of different subsets were approximately independent, so the drowsiness state could be estimated from two approximately independent attributes under the maximum a posteriori rule. For a fatigue state $s$, the observable symptom set $X = \{x_k\}_{k \in \mathcal{K}}$, and the part subsets $\mathcal{K} = \{\text{eye}, \text{mouth}\}$, the MAP problem can be constructed as in Equation (2):

$$
\hat{s} = \arg\max_{s \in \mathcal{S}_c(X)} P(s \mid X) = \arg\max_{s \in \mathcal{S}_c(X)} \frac{P(s)\prod_{k \in \mathcal{K}} P(x_k \mid s)}{P(X)} \tag{2}
$$

where $P(s \mid X)$, $P(X)$, and $P(x_k \mid s)$ are the posterior probability, the prior probability of the symptoms, and the probability of the symptoms under each state, respectively; $P(s)$ is the prior probability of state $s$; and $\mathcal{S}_c(X)$ is the set of states permitted by the experience constraints. The prior probability of each attribute is approximated with a Gaussian distribution as in Equation (3):

$$
P(x_k \mid s) = \frac{1}{\sqrt{2\pi\sigma_{k,s}^2}}\exp\left(-\frac{(x_k - \mu_{k,s})^2}{2\sigma_{k,s}^2}\right) \tag{3}
$$

where the Gaussian distribution is determined by the mean $\mu_{k,s}$ and variance $\sigma_{k,s}^2$. The MAP model was trained with the symptom labels of the training dataset. Additionally, as shown in Equation (2), experience constraints constructed from human experience were applied to the MAP model to constrain the fatigue state result according to the eye and mouth condition sequences.
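The constrained MAP inference can be sketched as a small Gaussian naive Bayes classifier in NumPy. Here the features are assumed to be per-clip eye and mouth anomaly scores (columns 0 and 1), states are integer labels, and the experience constraints are represented as a boolean mask of allowed states per sample. The class name, the variance floor, and the rule in `rule_mask` (a very high mouth-anomaly score rules out the normal state 0) are hypothetical illustrations, not the authors' rules. The evidence term is dropped because it does not change the argmax.

```python
import numpy as np


class ConstrainedGaussianNB:
    """Gaussian naive Bayes whose MAP decision is restricted by rule masks."""

    def fit(self, X, y):
        X, y = np.asarray(X, float), np.asarray(y)
        self.classes_ = np.unique(y)
        self.prior_ = np.array([np.mean(y == c) for c in self.classes_])
        self.mu_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        # Small floor keeps the variance positive for degenerate classes.
        self.var_ = np.array([X[y == c].var(axis=0) for c in self.classes_]) + 1e-6
        return self

    def log_posterior(self, X):
        """Unnormalized log P(s | X) = log P(s) + sum_k log P(x_k | s), shape (N, K)."""
        X = np.asarray(X, float)[:, None, :]  # (N, 1, D) broadcast against (K, D)
        log_lik = -0.5 * (np.log(2 * np.pi * self.var_) + (X - self.mu_) ** 2 / self.var_)
        return np.log(self.prior_) + log_lik.sum(axis=2)

    def predict(self, X, allowed=None):
        """MAP state per sample; `allowed` is an optional (N, K) boolean mask."""
        scores = self.log_posterior(X)
        if allowed is not None:
            scores = np.where(allowed, scores, -np.inf)
        return self.classes_[np.argmax(scores, axis=1)]


def rule_mask(X, classes, yawn_threshold=0.9):
    """Hypothetical experience rule: a mouth score >= threshold forbids state 0."""
    X = np.asarray(X, float)
    allowed = np.ones((len(X), len(classes)), dtype=bool)
    allowed[X[:, 1] >= yawn_threshold, :] = np.asarray(classes) != 0
    return allowed
```

Keeping the rules as a mask rather than folding them into the likelihoods means the data-driven part and the experience part stay separately inspectable, which matches the explainability goal described in the text.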

4. Experiments

4.1. Dataset and Implementation Protocols

4.1.1. NTHU-DDD Dataset

We trained our model on the driver fatigue detection benchmark, the NTHU Driver Drowsiness Detection (NTHU-DDD) dataset [], which contains videos of 36 subjects of different ethnicities recorded in a simulated driving environment while driving normally, yawning, and exhibiting sleepiness, across various scenes and with different facial accessories. The training set of the NTHU-DDD dataset contains 18 subjects who do not appear in the testing set. The original videos were preprocessed and divided into clips before training. Frame samples of the five scenarios are shown in Figure 2.

Figure 2.

Frame samples of five scenarios in the NTHU-DDD dataset. The images in the first row are sample frames taken in daylight, and from left to right are the no-glasses, glasses, and sunglasses scenarios, respectively. The images in the second row are sample frames taken at nighttime.

4.1.2. OSFM Dataset

We produced a video dataset, named the Online Student Fatigue Monitoring (OSFM) dataset, to evaluate model performance. The videos were recorded with different mobile devices to simulate real scenarios and include male and female students filmed at different times and from different angles. The videos contain simulated normal behavior and fatigue behavior, including yawning and sleepy eyes. To enrich the dataset, some samples were taken from the same subject in different scenarios and at different times. In total, we collected 41 long raw videos from 34 subjects, which were later divided into 2474 clip samples for video-wise prediction and evaluation. Frame samples of different behaviors of different subjects are shown in Figure 3.

Figure 3.

Frame samples of different subjects in different scenarios and at different angles. The first row shows samples with darker backgrounds and closer views; the second row shows samples with better-illuminated backgrounds and more distant views.

4.1.3. Implementation

To take advantage of the rich variation in the training data, such as in scenario, illumination, and gesture, we trained our model on the benchmark NTHU-DDD dataset and tested it on the OSFM dataset without fine-tuning. The sample distribution is shown in Table 1. We implemented our model in PyTorch and used two NVIDIA RTX 3090 GPUs for both training and evaluation. The feature backbone of our model was fine-tuned from ImageNet [] pre-trained weights. At the clip level, we sampled input frames with temporal segment network (TSN) sampling [], following TSM. The sample clips were drawn from the raw videos by uniform sampling. We trained the model using a stochastic gradient descent (SGD) optimizer with a learning rate of for 120 epochs.
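TSN-style segment sampling can be sketched in a few lines: the clip is divided into equal segments and one frame index is taken from each, chosen at random during training and at the segment centre during testing. This is a simplified, integer-arithmetic version written for illustration; the function name, the handling of clips shorter than the number of segments, and ignoring remainder frames at the end are assumptions, not the exact procedure used in the paper.

```python
import numpy as np


def tsn_sample_indices(num_frames, num_segments, train=True, rng=None):
    """Return one frame index per segment in the spirit of TSN sampling.

    Training: a random frame inside each segment (temporal jitter).
    Testing: the centre frame of each segment (deterministic).
    """
    if num_frames < num_segments:
        # Too few frames: spread indices evenly, repeating some frames.
        return np.linspace(0, num_frames - 1, num_segments).round().astype(int)
    seg_len = num_frames // num_segments  # remainder frames at the end are ignored
    starts = np.arange(num_segments) * seg_len
    if train:
        if rng is None:
            rng = np.random.default_rng()
        return starts + rng.integers(0, seg_len, size=num_segments)
    return starts + seg_len // 2
```

For an 80-frame clip and 8 segments, test-time sampling returns frames 5, 15, ..., 75, giving the model a view that spans the whole clip rather than a short contiguous window.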

Table 1.

Train and test sample distributions.

4.2. Comparison of Fatigue State Estimation Techniques

In this section, the students’ fatigue state inference results are shown in Table 2, where we compare our results with those of other fatigue detection methods on the OSFM dataset. The face sampling network (FSN) [] samples key facial areas for feature fusion and state prediction; the ensemble network (E-Net) [] combines features extracted by a group of backbones to generate state predictions. For a fair comparison, we report the frame-wise precision, recall, and F1-score of the drowsy class, along with the accuracy over all samples, for all tested models. As shown in Table 2, our model achieved both higher precision and higher recall, indicating that it is more accurate and more sensitive than the previous fatigue detection methods. The metrics were calculated as in Equation (4), and the best results within each subset are shown in bold.

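$$
\text{Precision} = \frac{TP}{TP+FP},\quad \text{Recall} = \frac{TP}{TP+FN},\quad F_1 = \frac{2\cdot\text{Precision}\cdot\text{Recall}}{\text{Precision}+\text{Recall}},\quad \text{Accuracy} = \frac{TP+TN}{N} \tag{4}
$$

where $TP$, $TN$, $FP$, $FN$, and $N$ are the true positives, true negatives, false positives, false negatives, and the total number of samples, respectively.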
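The standard definitions of precision, recall, F1-score, and accuracy in terms of TP, TN, FP, and FN counts can be checked with a short sketch. It treats the drowsy class as the positive label (assumed here to be encoded as `1`) and returns 0.0 when a denominator is zero; that convention is a choice of this sketch, not something stated in the text.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Frame-wise precision, recall, F1, and accuracy for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    n = len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / n if n else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}
```

For labels `[1, 1, 0, 0, 1]` and predictions `[1, 0, 0, 1, 1]` there are 2 TP, 1 TN, 1 FP, and 1 FN, so precision, recall, and F1 are all 2/3 and accuracy is 0.6.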

Table 2.

Performance comparison.

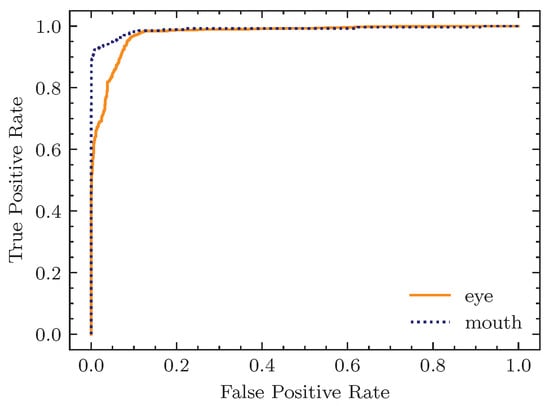

To further verify the advantages of our design, we also evaluated the model’s performance at the subset level. The multi-label classification accuracy at the subset level is shown in Table 3, which shows that on both subsets our model classifies anomalous behaviors more accurately and sensitively. The receiver operating characteristic (ROC) curve of our facial representation classifier is shown in Figure 4. For both subsets, our model achieved high true positive rates across different false positive rates.

Table 3.

Subset performance analysis.

Figure 4.

The ROC curve of our facial representation classifier.

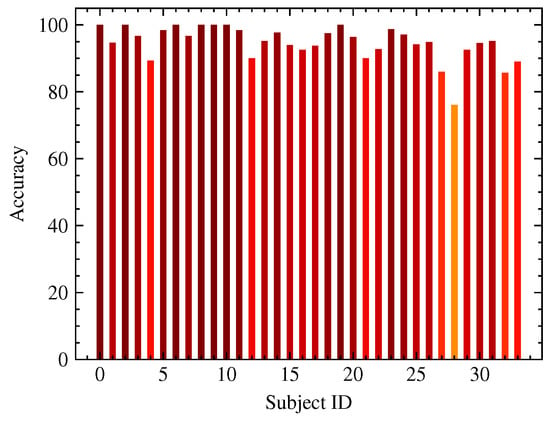

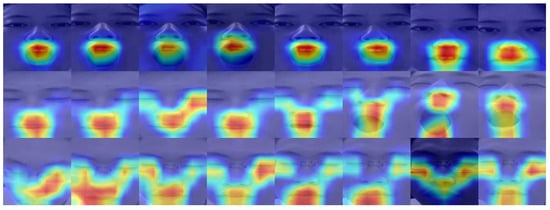

As mentioned above, our model was trained on the NTHU-DDD dataset and tested on the OSFM dataset without fine-tuning. The experimental results support our claim that the proposed model is highly robust. This robustness stems both from the rich variation in the training dataset and from the structural similarity of human faces. We summarized the subset detection accuracy for all subjects in the OSFM dataset. As shown in Figure 5, where darker colors denote higher accuracy, the model’s performance varied across subjects but remained high for most of them, indicating good overall performance. We also visualized gradient-weighted class activation maps (Grad-CAM) [] of our backbone model for samples of eye anomalies, mouth anomalies, and eye-head anomalies. As shown in Figure 6, the feature backbone was highly sensitive to mouth region anomalies and could effectively handle scenarios with facial accessories such as glasses.
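The core Grad-CAM computation can be sketched once the activations of a convolutional layer and the gradients of the target class score with respect to them are available. Obtaining those gradients requires a framework's autograd (for example, hooks in PyTorch), which is not shown; this NumPy sketch assumes they are given for a single frame as `(K, H, W)` arrays, so the temporal dimension of a video backbone is out of scope here.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heat map from one layer for one sample and target class.

    activations: (K, H, W) feature maps A^k.
    gradients:   (K, H, W) gradients of the class score w.r.t. A^k.
    """
    weights = gradients.mean(axis=(1, 2))              # per-channel importance (GAP of gradients)
    cam = np.tensordot(weights, activations, axes=1)   # weighted sum over channels -> (H, W)
    cam = np.maximum(cam, 0.0)                         # ReLU keeps positively contributing regions
    if cam.max() > 0:
        cam = cam / cam.max()                          # normalise to [0, 1] for display
    return cam
```

The resulting map is usually upsampled to the input resolution and overlaid on the face crop with a blue-to-red colour map, as in the heat maps discussed here.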

Figure 5.

The subset detection accuracy of all subjects in the OSFM dataset. The horizontal axis is the subject ID in the OSFM dataset and the vertical axis is the frame-wise fatigue state accuracy of the subjects.

Figure 6.

The class activation heatmap of our backbone model on samples of eye anomalies, mouth anomalies, and eye-head anomalies. The contribution of each region is color-coded from blue (low) to red (high).

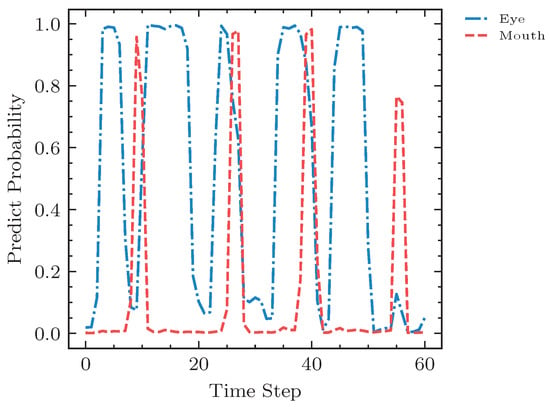

To demonstrate the explainability and advantages of our design, we further visualized the subset prediction probability–time curves, presented in Figure 7. The horizontal axis represents time and the vertical axis represents the predicted confidence. As the curves show, when the subject performed a series of anomalous behaviors in each subset, the model produced a group of probability peaks that can be regarded as the centers of the anomalous behaviors. Additionally, because our method uses both spatial and temporal information, its video-based results were more stable and smoother than frame-based predictions.
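Locating such peaks in a probability–time curve can be sketched with a simple local-maximum search above a confidence threshold. This is an illustrative post-processing step, not a procedure described by the authors; the function name and the 0.5 default threshold are assumptions, and on a flat-topped peak the last sample of the plateau is reported.

```python
import numpy as np


def anomaly_peaks(prob, threshold=0.5):
    """Indices of interior local maxima of a probability curve above threshold.

    A point counts as a peak if it is >= the previous value and strictly
    greater than the next one, so a plateau is reported at its last sample.
    """
    prob = np.asarray(prob, float)
    return [
        t
        for t in range(1, len(prob) - 1)
        if prob[t] >= threshold and prob[t] >= prob[t - 1] and prob[t] > prob[t + 1]
    ]
```

Applied separately to the eye and mouth curves, each returned index marks a candidate center of an anomalous behavior, and the threshold controls how weak a response may be and still count.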

Figure 7.

The factor analysis of the video sample from subject 005. The red and orange curves represent the predicted mouth and eye anomaly probabilities, respectively. The time-series curves show the prediction results over time for a series of fatigue behaviors in the test video sample.

To sum up, in this research we established an effective, explainable student fatigue monitoring model with joint facial representation. Our analysis of the collected videos showed that the model can recognize the online fatigue state of individual students and differentiate their fatigue degree. Under the same training–testing setting, our method achieved an accuracy of 94.47%, higher than that of the other methods.

5. Discussion

With advances in technology, fatigue research has evolved from a qualitative to a quantitative perspective. The major approaches applied in previous physical and mental fatigue research include surveys, instrument testing, observational methods, and blended methods. Among these, surveys play a major role because they can be reused by researchers. Owing to this convenience, many fatigue researchers choose surveys, especially questionnaires, as their research method [,,,,]. For example, De investigated 541 medical students and found that the mean prevalence of overall online fatigue was 48%. Like De’s survey, other surveys have also shown that online fatigue is widespread among college students, but none of them described students’ fatigue states in detail.

In surveys, researchers usually analyze the data with statistical software such as SPSS, but such analysis can only give a numerical picture of fatigue prevalence among individuals or of differences in occurrence between populations. Potential influencing factors may be identified in this way, but the data and results rely heavily on participants’ self-evaluation, so research validity cannot be fully guaranteed. Additionally, the subsequent data-management workload can be overwhelming in time and effort.

As mentioned before, various electrical instruments such as EEGs, ECGs, and EOGs have been widely used to detect physiological fatigue. For example, Marcin and his colleagues used EOGs to detect students’ fatigue states through eye anomalies, but their research relied only on eye symptoms []. Moreover, during such experiments, participants must wear the instruments on their bodies while an attached display shows brain waves, heartbeats, and eye-movement curves. These instruments are usually expensive, which makes it difficult to involve large numbers of participants. At the same time, participants must hold certain postures, which can be quite uncomfortable, as body position is especially hard to control in a fatigued state. Despite these disadvantages, these electrical instruments can accurately signal a fatigued state, including minute changes in it.

Compared with these prior methods, the video-based observational method used in this research has several advantages. First, monitoring can be performed in real time and is harmless to participants; it is also easier to control and operate than electrical instruments. Second, and most importantly, monitoring causes almost no interference with the research procedures and results. Third, owing to the prevalence of high-definition monitoring cameras in university classrooms, genuine samples and data for this kind of research can be collected more easily than survey data.

Another advantage of our fatigue estimation method is its use of artificial intelligence and machine learning. Artificial intelligence has been widely used to detect fatigue in drivers [,,,,], but it has seldom been used to detect student fatigue. Meanwhile, online education has become prevalent around the world because of the COVID-19 pandemic, and several studies have revealed that online class environments are much more likely to cause fatigue than traditional classroom environments [,,]. College students now attend classes in places such as bedrooms or dining rooms, which lack the atmosphere of a classroom, and the lack of teacher supervision and in-class engagement activities may further increase sleepiness.

At a conference in 2010, Rabey Husini et al. reported research that used blink rate and duration as measures of fatigue and mental workload [], but it relied on only one symptom, eye anomalies, to estimate students’ fatigue. Diah and colleagues used EEG technology to monitor 13 senior high school students to recognize mental fatigue. However, the sample size was very small, and the analysis method, Pearson correlation, only confirmed the presence of fatigue after a full school day’s workload.

In contrast, our research used monitored facial representations to study the online fatigue of college students. Specifically, we monitored students’ fatigue states based on facial fatigue representations, namely eye anomalies and mouth anomalies, and then interpretably fused all fatigue symptoms with a data-driven maximum a posteriori rule and human experience constraints. This fusion method can decipher a student’s fatigue state more comprehensively, which is the novelty of our method. In addition, because both spatial and temporal information is processed, the combination of multiple representations and the fusion method made our video-based results more stable and smoother than frame-based predictions.

6. Conclusions

General fatigue involves a reduced ability to perform either mental or physical work; this reduction is noticed first in work requiring attention and sustained effort and last in acts that have become automatic []. Online fatigue, one type of general fatigue, strongly affects college students’ health and performance, as their academic load is heavy given the rapid renewal of knowledge and technology. For these reasons, fatigue estimation is a very important aspect of fatigue research, because its results can provide concrete guidance for designing appropriate class hours and study content for students of different ages. For example, in 1899 Kratz investigated how to minimize fatigue in the schoolroom, and the results showed that it might be quite safe to require college students to devote at least as much time as is ordinarily taken for examination periods, without fear of either doing them an injustice from the point of view of measurement or tiring them unduly [].

Fatigue state estimation has broad applications and business potential in autopiloting, industrial worker monitoring, education, and other fields. In this research, we established an effective, explainable student fatigue monitoring model with joint facial representation. Using simulated fatigue videos and machine learning, our research not only recognized the online fatigue state of individual students but also differentiated their fatigue degrees. These results can benefit higher education management and online education by helping to design more targeted teaching plans for students of different grades, majors, or sexes. Our future work will therefore focus on fatigue differentiation among college students in different majors.

Author Contributions

Conceptualization, X.L., J.L. and Y.L.; methodology, X.L. and J.L.; software, J.L.; validation, X.L., J.L. and Z.T.; formal analysis, Z.T.; investigation, Y.L.; resources, X.L. and Y.L.; data curation, J.L. and Z.T.; writing—original draft preparation, X.L. and J.L.; writing—review and editing, X.L., J.L., Z.T. and Y.L.; visualization, J.L.; supervision, Y.L.; project administration, Z.T. and Y.L.; funding acquisition, Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Social Science Foundation of the Shaanxi Province of China, under Grant Nos. 2021K014, 2020K016, 2021K007, the Natural Science Basic Research Plan of the Shaanxi Province of China under Grant No. 2022JM-324, and Shaanxi Province Key Research and Development Program under Grant No. 2022ZDLSF07-07.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Biomedical Ethics Committee of Xian Jiaotong University Health Center (No. 2022-0085, approved on 17 February 2022).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Acknowledgments

We sincerely thank the anonymous reviewers for the critical comments and suggestions for improving the manuscript. We also wish to express our special gratitude to all the enrolled subjects who voluntarily participated in this study.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Labrague, L.J.; Ballad, C.A. Lockdown fatigue among college students during the COVID-19 pandemic: Predictive role of personal resilience, coping behaviors, and health. Perspect. Psychiatr. Care 2020, 57, 1905–1912.

- Nitschke, J.P.; Forbes, P.A.; Ali, N.; Cutler, J.; Apps, M.A.; Lockwood, P.L.; Lamm, C. Resilience during uncertainty? Greater social connectedness during COVID-19 lockdown is associated with reduced distress and fatigue. Br. J. Health Psychol. 2021, 26, 553–569.

- Scott, A.J.; Storper, M. Industrialization and regional development. In Pathways to Industrialization and Regional Development; Routledge: Oxfordshire, UK, 2005; pp. 15–28.

- Schijve, J. Fatigue of structures and materials in the 20th century and the state of the art. Int. J. Fatigue 2003, 25, 679–702.

- Halford, G.R. Fatigue Toughness of Metals Data Compilation; Technical Report; Department of Theoretical and Applied Mechanics, College of Engineering, University of Illinois: Urbana, IL, USA, 1964.

- Harris, W.V. The Effect of Ammonia and Ammonium Carbonate in the Reduction of Drowsiness in the Human Operator. Master’s Thesis, The University of Arizona, Tucson, AZ, USA, 1967.

- Day, C.K. In-Flight Aircraft Fatigue Detection; Y-58034; Battelle Northwest: Richland, WA, USA, 1968.

- Vigilance, I.S. Effects of Fatigue on Basic Processes Involved in Human Operator Performance. Driv. Simul. 1964, 17.

- Compas, B.E.; Connor-Smith, J.K.; Saltzman, H.; Thomsen, A.H.; Wadsworth, M.E. Coping with stress during childhood and adolescence: Problems, progress, and potential in theory and research. Psychol. Bull. 2001, 127, 87.

- Prestwich, D.J.; Rankin, L.L.; Housman, J. Tracking sleep times to reduce tiredness and improve sleep in college students. Californian J. Health Promot. 2007, 5, 148–156.

- Vela-Bueno, A.; Fernandez-Mendoza, J.; Olavarrieta-Bernardino, S.; Vgontzas, A.N.; Bixler, E.O.; De La Cruz-Troca, J.J.; Rodriguez-Muñoz, A.; Oliván-Palacios, J. Sleep and behavioral correlates of napping among young adults: A survey of first-year university students in Madrid, Spain. J. Am. Coll. Health 2008, 57, 150–158.

- Narisawa, H. Anxiety and its related factors at bedtime are associated with difficulty in falling asleep. Tohoku J. Exp. Med. 2013, 231, 37–43.

- Buboltz, W., Jr.; Jenkins, S.M.; Soper, B.; Woller, K.; Johnson, P.; Faes, T. Sleep habits and patterns of college students: An expanded study. J. Coll. Couns. 2009, 12, 113–124.

- Chen, Y.; Chen, H.; Andrasik, F.; Gu, C. Perceived stress and cyberloafing among college students: The mediating roles of fatigue and negative coping styles. Sustainability 2021, 13, 4468.

- Kinge, B.; Midelfart, A.; Jacobsen, G.; Rystad, J. The influence of near-work on development of myopia among university students. A three-year longitudinal study among engineering students in Norway. Acta Ophthalmol. Scand. 2000, 78, 26–29.

- Rajeev, A.; Gupta, A.; Sharma, M. Visual fatigue and computer use among college students. Indian J. Comm. Med. 2006, 31, 192–193.

- Han, C.C.; Liu, R.; Liu, R.R.; Zhu, Z.H.; Yu, R.B.; Ma, L. Prevalence of asthenopia and its risk factors in Chinese college students. Int. J. Ophthalmol. 2013, 6, 718.

- Rajabi-Vardanjani, H.; Habibi, E.; Pourabdian, S.; Dehghan, H.; Maracy, M.R. Designing and validation a visual fatigue questionnaire for video display terminals operators. Int. J. Prev. Med. 2014, 5, 841.

- Dol, K.S. Fatigue and pain related to internet usage among university students. J. Phys. Ther. Sci. 2016, 28, 1233–1237.

- Kim, S.D. Effects of yogic eye exercises on eye fatigue in undergraduate nursing students. J. Phys. Ther. Sci. 2016, 28, 1813–1815.

- Choi, J.H.; Kim, K.S.; Kim, H.J.; Joo, S.J.; Cha, H.G. Factors influencing on dry eye symptoms of university students using smartphone. Int. J. Pure Appl. Math. 2018, 118, 1–13.

- Wang, J.Q.; Zhai, Y.; Liu, Z.Y.; Yan, Y.R.; Xia, J.Y.; Deng, G.Y. Association of electronic devices usage and visual fatigue in Chinese college students. Recent Adv. Ophthalmol. 2018, 6, 65–68.

- Xu, Y.; Deng, G.; Wang, W.; Xiong, S.; Xu, X. Correlation between handheld digital device use and asthenopia in Chinese college students: A Shanghai study. Acta Ophthalmol. 2019, 97, e442–e447.

- Huseyin, K. Investigation of the effect of online education on eye health in Covid-19 pandemic. Int. J. Assess. Tools Educ. 2020, 7, 488–496.

- Kim, H.; Kim, S.J. Management of eye and vision symptoms caused by online learning among college students during COVID-19 Pandemic. J. Korean Ophthalmic Opt. Soc. 2021, 26, 73–80.

- Agarwal, S.; Bhartiya, S.; Mithal, K.; Shukla, P.; Dabas, G. Increase in ocular problems during COVID-19 pandemic in school going children-a survey based study. Indian J. Ophthalmol. 2021, 69, 777.

- Borsting, E.; Chase, C.H.; Ridder, W.H., III. Measuring visual discomfort in college students. Optom. Vis. Sci. 2007, 84, 745–751.

- Akshayaa, L.; Vishnupriya, V.; Gayathri, R. Awareness of chronic fatigue syndrome among the college students–A survey. Drug Invent. Today 2019, 11, 1369–1371.

- Adler, P.A.; Adler, P. Observational techniques. In Handbook of Qualitative Research; Sage: Thousand Oaks, CA, USA, 1994; pp. 377–392.

- Cowie, N. Observation. In Qualitative Research in Applied Linguistics: A Practical Introduction; Springer: Cham, Switzerland, 2009; pp. 165–181.

- Ciesielska, M.; Boström, K.W.; Öhlander, M. Observation methods. In Qualitative Methodologies in Organization Studies; Springer: Berlin/Heidelberg, Germany, 2018; pp. 33–52.

- Kedor, C.; Freitag, H.; Meyer-Arndt, L.; Wittke, K.; Hanitsch, L.G.; Zoller, T.; Steinbeis, F.; Haffke, M.; Rudolf, G.; Heidecker, B.; et al. A prospective observational study of post-COVID-19 chronic fatigue syndrome following the first pandemic wave in Germany and biomarkers associated with symptom severity. Nat. Commun. 2022, 13, 5104.

- Li, L.; Mu, X.; Li, S.; Peng, H. A review of face recognition technology. IEEE Access 2020, 8, 139110–139120.

- Moret-Tatay, C.; Fortea, I.B.; Sevilla, M.D.G. Challenges and insights for the visual system: Are face and word recognition two sides of the same coin? J. Neurolinguist. 2020, 56, 100941.

- Priya, D.B.; Jotheeswaran, J.; Subramaniyam, M. Visual Flow on Eye-Activity and Application of Learning Techniques for Visual Fatigue Analysis. IOP Conf. Ser. Mater. Sci. Eng. 2020, 912, 062066.

- Zhu, T.; Zhang, C.; Wu, T.; Ouyang, Z.; Li, H.; Na, X.; Liang, J.; Li, W. Research on a real-time driver fatigue detection algorithm based on facial video sequences. Appl. Sci. 2022, 12, 2224.

- Lin, J.; Gan, C.; Han, S. TSM: Temporal shift module for efficient video understanding. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 7083–7093.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Rish, I. An empirical study of the naive Bayes classifier. In Proceedings of the IJCAI 2001 Workshop on Empirical Methods in Artificial Intelligence, Seattle, WA, USA, 4–10 August 2001; Volume 3, pp. 41–46.

- Lowd, D.; Domingos, P. Naive Bayes models for probability estimation. In Proceedings of the 22nd International Conference on Machine Learning, Bonn, Germany, 7–11 August 2005; pp. 529–536.

- Weng, C.H.; Lai, Y.H.; Lai, S.H. Driver drowsiness detection via a hierarchical temporal deep belief network. In Proceedings of the Asian Conference on Computer Vision, Taipei, Taiwan, 20–24 November 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 117–133.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 248–255.

- Wang, L.; Xiong, Y.; Wang, Z.; Qiao, Y.; Lin, D.; Tang, X.; Van Gool, L. Temporal segment networks: Towards good practices for deep action recognition. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 20–36.

- Omidyeganeh, M.; Javadtalab, A.; Shirmohammadi, S. Intelligent driver drowsiness detection through fusion of yawning and eye closure. In Proceedings of the 2011 IEEE International Conference on Virtual Environments, Human-Computer Interfaces and Measurement Systems Proceedings, Ottawa, ON, Canada, 19–21 September 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 1–6.

- Dua, M.; Singla, R.; Raj, S.; Jangra, A. Deep CNN models-based ensemble approach to driver drowsiness detection. Neural Comput. Appl. 2021, 33, 3155–3168.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

- de Moraes Amaducci, C.; de Correa Mota, D.D.F.; de Mattos Pimenta, C.A. Fatigue among nursing undergraduate students. Rev. Esc. Enferm. USP 2010, 44, 1052–1058.

- Tanaka, M.; Fukuda, S.; Mizuno, K.; Kuratsune, H.; Watanabe, Y. Stress and coping styles are associated with severe fatigue in medical students. Behav. Med. 2009, 35, 87–92.

- Tanaka, M.; Mizuno, K.; Fukuda, S.; Shigihara, Y.; Watanabe, Y. Relationships between dietary habits and the prevalence of fatigue in medical students. Nutrition 2008, 24, 985–989.

- Kizhakkeveettil, A.; Vosko, A.M.; Brash, M.; Philips, M.A. Perceived stress and fatigue among students in a doctor of chiropractic training program. J. Chiropr. Educ. 2017, 31, 8–13.

- de Oliveira Kubrusly Sobral, J.B.; Lima, D.L.F.; Lima Rocha, H.A.; de Brito, E.S.; Duarte, L.H.G.; Bento, L.B.B.B.; Kubrusly, M. Active methodologies association with online learning fatigue among medical students. BMC Med. Educ. 2022, 22, 1–7.

- Meiring, G.A.M.; Myburgh, H.C. A review of intelligent driving style analysis systems and related artificial intelligence algorithms. Sensors 2015, 15, 30653–30682.

- Naz, S.; Ziauddin, S.; Shahid, A. Driver fatigue detection using mean intensity, SVM, and SIFT. Int. J. Interact. Multimed. Artif. Intell. 2019, 5, 1.

- Alshaqaqi, B.; Baquhaizel, A.S.; Ouis, M.E.A.; Boumehed, M.; Ouamri, A.; Keche, M. Driver drowsiness detection system. In Proceedings of the 2013 8th International Workshop on Systems, Signal Processing and their Applications (WoSSPA), Algiers, Algeria, 12–15 May 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 151–155.

- Singh, S.; Papanikolopoulos, N.P. Monitoring driver fatigue using facial analysis techniques. In Proceedings of the 1999 IEEE/IEEJ/JSAI International Conference on Intelligent Transportation Systems (Cat. No. 99TH8383), Tokyo, Japan, 5–8 October 1999; IEEE: Piscataway, NJ, USA, 1999; pp. 314–318.

- Coetzer, R.C.; Hancke, G.P. Eye detection for a real-time vehicle driver fatigue monitoring system. In Proceedings of the 2011 IEEE Intelligent Vehicles Symposium (IV), Baden-Baden, Germany, 5–9 June 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 66–71.

- Toney, S.; Light, J.; Urbaczewski, A. Fighting Zoom fatigue: Keeping the zoombies at bay. Commun. Assoc. Inf. Syst. 2021, 48, 10.

- Alevizou, G. Virtual schooling, Covid-gogy and digital fatigue. In Parenting for a Digital Future; Oxford University Press: Oxford, UK, 2020.

- Peper, E.; Yang, A. Beyond Zoom fatigue: Re-energize yourself and improve learning. Acad. Lett. 2021, 257, 1–7.

- Husini, R.; Lenskiy, A.A.; Lee, J.S. Distance learning and fatigue monitoring. In Proceedings of the International Forum on Strategic Technology 2010, Ulsan, Republic of Korea, 13–15 October 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 95–97.

- Poore, G. On Fatigue. Lancet 1875, 106, 163–164.

- Kratz, H. How May Fatigue in the Schoolroom Be Reduced to the Minimum? Am. Phys. Educ. Rev. 1899, 4, 350–357.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).