Human Walking Direction Detection Using Wireless Signals, Machine and Deep Learning Algorithms

Abstract

1. Introduction

- In security systems, to track the movement of individuals in restricted areas and detect any unauthorized access or suspicious behavior.

- In retail stores, to track the movement of customers and analyze their behavior, such as the areas where they spend the most time, the products with which they interact, and the path they take through the store.

- In robotics, to control the movement of robots and ensure their safety around humans.

- First, we thoroughly analyze the literature related to HAR in general and HWDD in particular.

- Then, we identify the major weaknesses of existing HWDD schemes.

- Next, we propose a new approach for HWDD that uses the CSI of all available signal streams, calibrates the phase, denoises the CSI amplitudes with the Hampel filter algorithm, extracts features from the amplitudes with DWT, and finally applies machine and deep learning algorithms to identify the human walking direction.

- Then, we perform extensive experiments under different conditions, including different environments and different users.

- Finally, we evaluate the performance of our approach using a variety of machine and deep learning classifiers, including RF, KNN, SVM, and 1D-CNN.
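
The denoising and feature-extraction steps named in the list above can be sketched as follows. This is only an illustrative sketch, not the authors' implementation: the text names the Hampel filter and DWT but does not state the window size, the outlier threshold, or the wavelet family, so `half_window`, `n_sigmas`, and the single-level Haar wavelet are assumptions, and the amplitude series is synthetic.

```python
import numpy as np

def hampel_filter(x, half_window=3, n_sigmas=3.0):
    """Replace outliers with the local median (Hampel identifier).

    half_window and n_sigmas are assumed values, not taken from the paper.
    """
    x = np.asarray(x, dtype=float)
    y = x.copy()
    k = 1.4826  # scales the MAD to a standard-deviation estimate for Gaussian data
    for i in range(len(x)):
        lo = max(0, i - half_window)
        hi = min(len(x), i + half_window + 1)
        window = x[lo:hi]
        med = np.median(window)
        mad = k * np.median(np.abs(window - med))
        if np.abs(x[i] - med) > n_sigmas * mad:
            y[i] = med
    return y

def haar_dwt(x):
    """Single-level Haar DWT: returns (approximation, detail) coefficients."""
    x = np.asarray(x, dtype=float)
    if len(x) % 2:
        x = np.append(x, x[-1])  # pad odd-length input by repeating the last sample
    even, odd = x[0::2], x[1::2]
    approx = (even + odd) / np.sqrt(2.0)
    detail = (even - odd) / np.sqrt(2.0)
    return approx, detail

# Synthetic amplitude of one subcarrier: a slow ramp with one spike at index 5.
amplitude = np.linspace(1.0, 2.0, 16)
amplitude[5] = 10.0

denoised = hampel_filter(amplitude)   # the spike is replaced by the local median
approx, detail = haar_dwt(denoised)   # approximation coefficients as smoothed features
```

In a full pipeline the same two functions would be applied to every subcarrier of every signal stream before the coefficients are passed to a classifier.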

2. Background and Literature Review

2.1. Channel State Information

2.2. Literature Review

2.2.1. Human Gesture Detection

2.2.2. Human Activity Detection

2.2.3. Human Movement Detection

2.2.4. Human Walking Direction Detection (HWDD)

3. System Design

3.1. Experiment Setup

3.2. Data Collection

3.3. Data Preprocessing

3.3.1. Phase Calibration

3.3.2. Denoising the CSI Amplitudes Using the Hampel Filter Algorithm

3.3.3. Feature Extraction from CSI Amplitudes Using Discrete Wavelet Transform

3.3.4. Comparison of Denoised Phase and Amplitudes for Different Activities

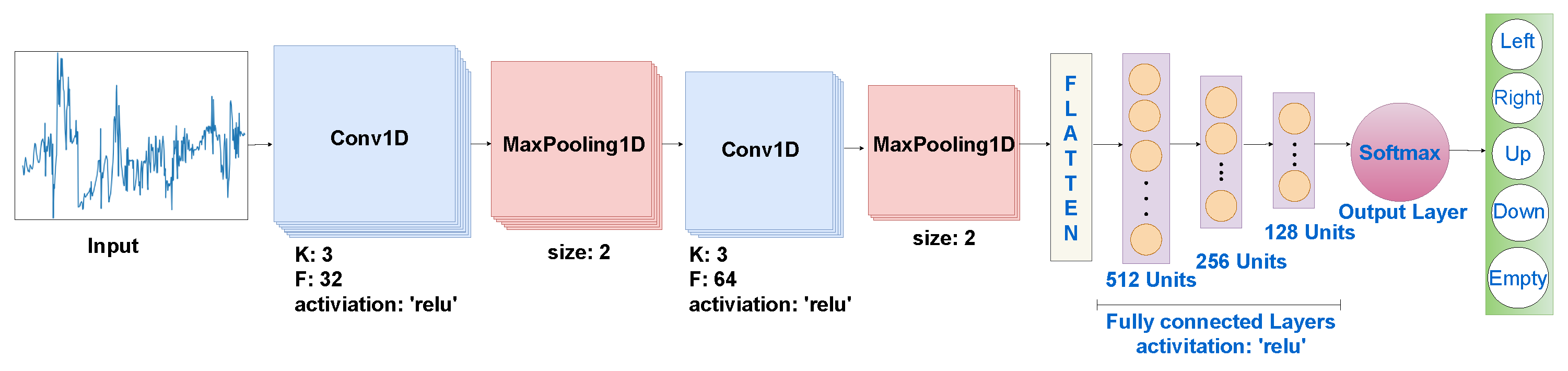

3.4. Activity Recognition

4. Performance Evaluation

4.1. Metrics

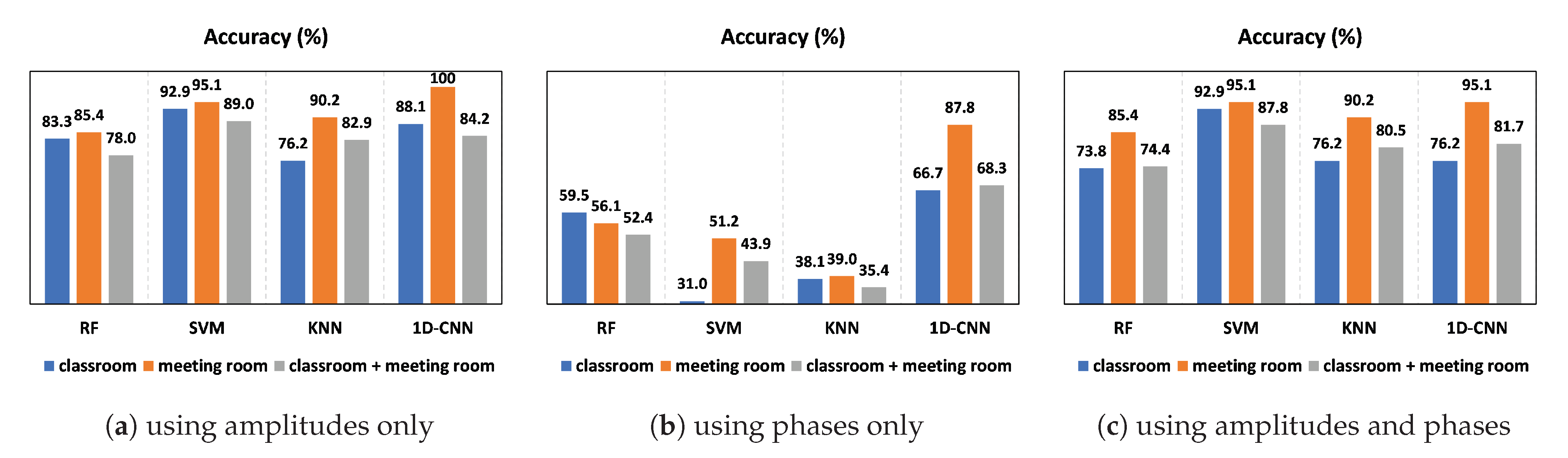

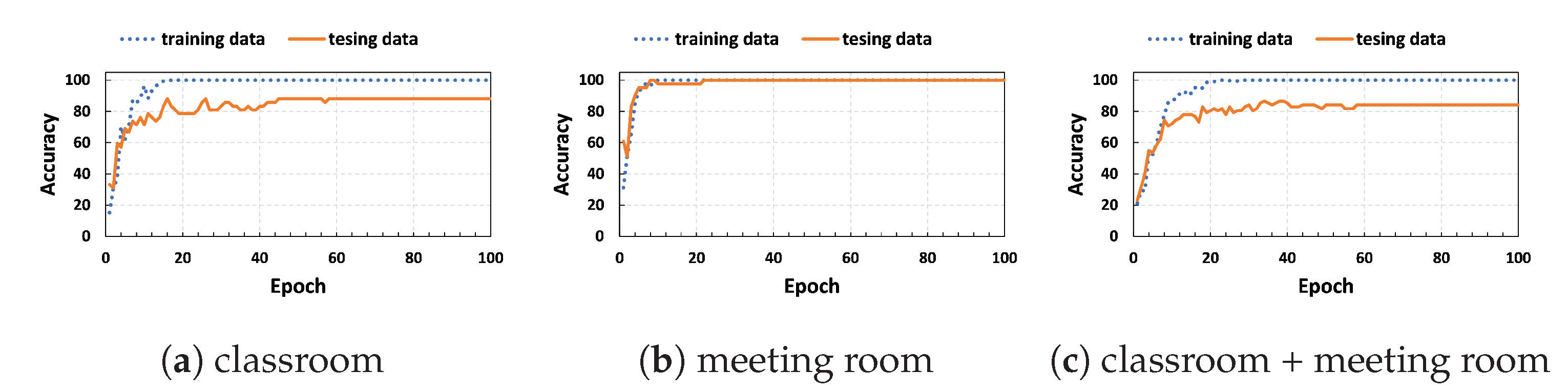

4.2. Impact of Environment Change

4.3. Impact of Individual Diversity

4.4. Impact of Increasing the Number of People

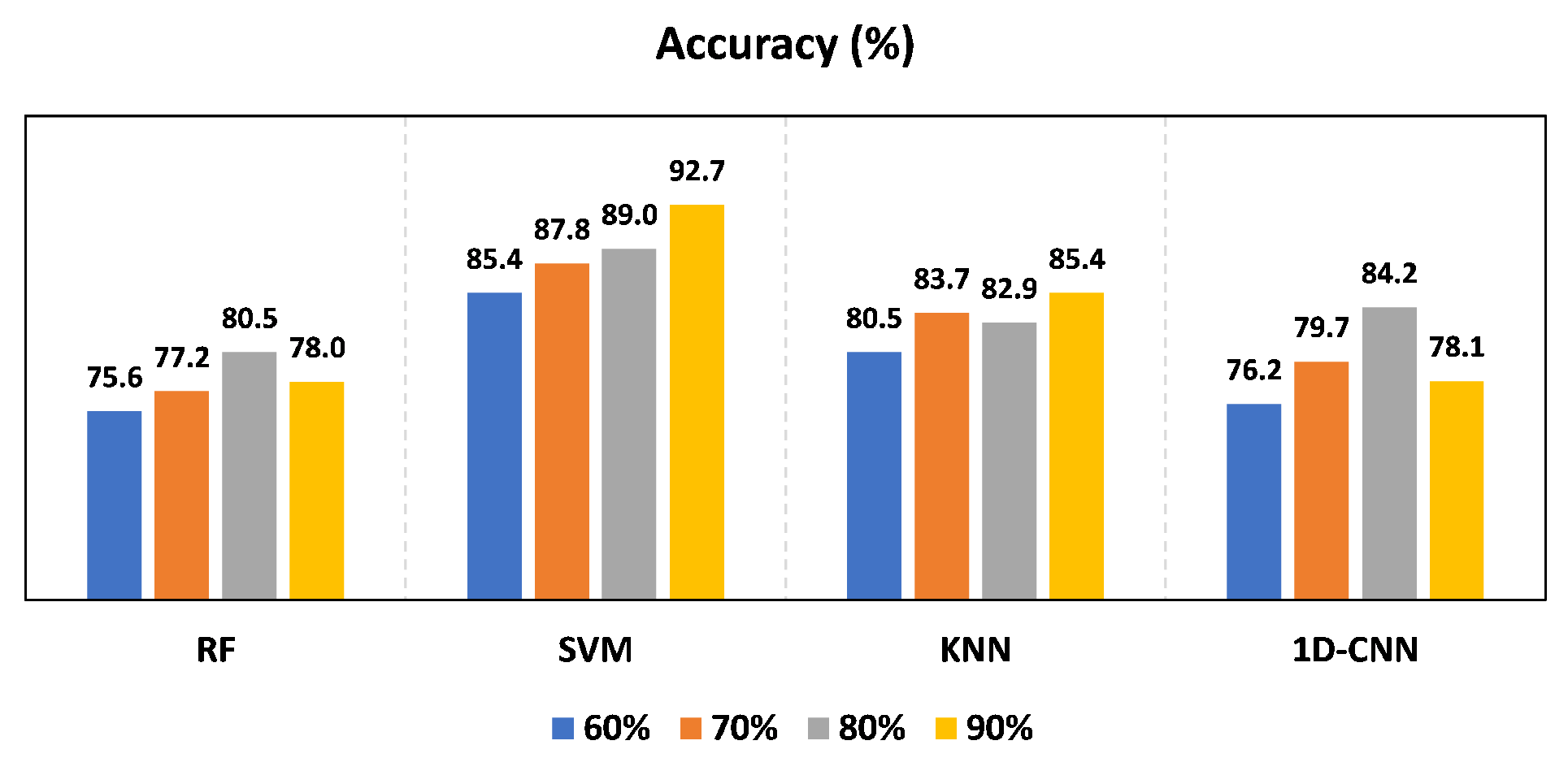

4.5. Impact of Training Size

4.6. Robustness

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| HAR | Human activity recognition |

| LOS | Line-of-sight |

| NLOS | Non-LOS |

| RSSI | Received signal strength indicator |

| CSI | Channel state information |

| HWDD | Human walking direction detection |

| DWT | Discrete wavelet transform |

| RF | Random forest |

| SVM | Support vector machine |

| KNN | K-nearest neighbor |

| CFR | Channel frequency response |

| OFDM | Orthogonal frequency division multiplexing |

| CNN | Convolutional neural network |

| 1D-CNN | One-dimensional CNN |

| LSTM | Long short-term memory |

| DNN | Deep neural network |

| CSVD-NMF | Class-estimated-basis space singular value decomposition non-negative matrix factorization |

| HMM | Hidden Markov model |

| DTW | Dynamic time warping |

| CFO | Carrier frequency offset |

| SFO | Sampling frequency offset |

Appendix A

| System | Objective of Sensing | Methodology | Performance | Complexity | Devices |

|---|---|---|---|---|---|

| WiSee [39] | gestures: strike, drag, kick, bowling, punch, circle, push, dodge | Doppler shift, pattern matching | 94% | no training needed | USRP-N210 hardware |

| Deep transfer learning for gesture recognition with Wi-Fi signals [40] | draw X, swipe right, punch 2 times, drag, circle, bowling, push, pull, swipe left, draw tick, enlargement, and shrink | CSI-based, classification with CNN–SVM, FT-CNN | 97% using FT CNN and 95.17% using CNN-SVM | training needed | TP-Link router laptop with Intel 5300 Wi-Fi network interface card (NIC) |

| WiRoI [27] | gestures: punch out, push forward, swipe left, cut down | CSI-based, classification with SVM | 88% | training needed | two mini-PCs were equipped with Ubuntu operating systems running on the Linux 4.2.0 kernel |

| Dynamic gesture recognition using wireless signals with less disturbance [41] | five hand gestures | CSI-based, classification with KNN, ICA to eliminate disturbance, to reduce data dimensionality and eliminate noise | the amplitude and phase information, respectively, yielded accuracy rates of 95% and 85% | training needed | two computers were set up, each one equipped with three-antenna Intel 5300 wireless network cards |

| Wi-Finger [30] | finger gestures: flip up/down, swipe left/right, circle left/right, and zoom in/out | CSI-based, classification with multi-dimensional dynamic time warping | 93% | training needed | in an 802.11n Wi-Fi network, a Dell LATITUDE E5540 Laptop was used as a single Wi-Fi device, while a LINKSYS E2500 N600 Wireless Router served as the wireless Access Point |

| FreeGesture [21] | gestures: down, up, pull, push, left, right. | CSI-based, classification with CNN | 95.8% | training needed | two TP-LINK N750 routers |

| Dynamic Hand Gesture Detection and Recognition with Wi-Fi Signal Based on 1D-CNN [11] | hand gestures: (7 characters “ABCDEFG”) | RSSI-based, classification with 1D-CNN | 86.91% | training needed | the transmitter utilized in this setup is a widely used IoT Wi-Fi device called CC3200, while the receivers consist of two Universal Software Radio Peripherals (USRPs) |

| AirDraw [12] | handwriting detection | CSI-based, phase tracking and triangulation | median error lower than 2.2 cm | no training needed | three mini-PCs were utilized, with one serving as the transmitter (TX) and the other two as receivers (RXs). All devices employed Ubuntu 14.04 LTS and were equipped with Intel 5300 Wi-Fi NICs |

| Wiga [23] | yoga detection | CSI-based, CNN-LSTM | 85.6% | training needed | two desktop computers were equipped with Atheros 9590 802.11n Wi-Fi NICs and operated on the Ubuntu 10.04 operating system |

| WiADG [24] | gestures: (left, right, roll left, roll right, pull, and push) | CSI-based, classification using CNN | 98% in the original environment and 66.6% under environment changes | training needed | two TP-LINK N750 wireless routers, with one serving as the transmitter (TX) and the other as the receiver (RX) |

| FingerPass [25] | finger gestures (wave up, wave down, wave left, wave right, zoom out, and zoom in, circle left, circle right) | CSI-based, classification using LSTM-based DNN | 80% | training needed | a laptop used in the experiment was an HP Pavilion 14, featuring an Intel Wi-Fi Link 5300 network interface card (NIC); this configuration allowed for the provision of 30 subcarriers on the CSI of Wi-Fi signals |

| System | Objective of Sensing | Methodology | Performance | Complexity | Devices |

|---|---|---|---|---|---|

| WiFall [13] | Activity: falling | CSI-based, one-class SVM model was employed, utilizing a set of seven features | The detection accuracy achieved was 87% with a false alarm rate of 18% | training needed | the Intel Wi-Fi Link 5300, an 802.11n network interface card (NIC) |

| PAWS [42] | Walking, standing, sitting, running, sleeping, falling, empty | RSSI-based, fusion algorithm with two features | 97.47% | training needed | COTS Wi-Fi routers |

| E-Eyes [29] | Daily activity: washing dishes, talking on the phone | CSI-based, classification of in-place and walking activity | 96% | clustering needed | the laptops utilized in the experiment were equipped with Intel 5300 NIC cards |

| CARM [8] | Sitting down, walking, falling | CSI-based, CSI speed model, and features derived from PCA | 96% | training needed | laptop with Intel 5300 and TP-link TLWDR7500 |

| WiGest [43] | Gestures: swipe left/right, open/close, wipe, hold over, toward | RSSI-based, segmentation and matching | 87.5% | no training | two routers used in the experiment were the Cisco Linksys X2000 and the Netgear N300 |

| Device-free occupant activity sensing using Wi-Fi-enabled IoT devices for smart homes [15] | Activities: run, jump, sit down, box, walk, golf | Fine-grained CSI-based, CSVD-NMF classification with KNN | 90.6% with 20 training samples | training needed | TP-Link N7500 along with other COTS Wi-Fi routers |

| Freedetector [16] | Occupied or unoccupied | CSI-based, classification with random forest | 93.73% | training needed | two TP-LINK N750 wireless dual-band routers |

| Deepsense [26] | Sitting, standing, walking, lying down, and running | CSI-based, classification with CNN | 97.4% | training needed | two TP-LINK N750 wireless routers |

| Fine-grained occupant activity monitoring with Wi-Fi channel state information [17] | Activities: cooking, eating, falling down, toileting, sleeping, walking, bathing, empty | CSI-based, feature extraction and classification with CNN-LSTM model | 96% for one user | training needed | four Lenovo T400 laptops equipped with Intel 5300 NICs, where one laptop served as the transmitter and three laptops were employed as receivers |

| Using auditory features for Wi-Fi channel state information activity recognition [18] | Activities: walking, empty, pushing, waving, boxing | CSI-based, classification with CNN classifier combined with MFCC features | 95%, 88.64%, and 78% for the CARM-9, CARM-11, and ITI datasets, respectively | training needed | TP-LINK AC-1750 used as the transmitter; desktop computer with an Intel 5300 Wi-Fi card used as the receiver |

| CDHAR [19] | Activities: walk, run, sit down, squat, and fall down | Fine-grained CSI-based, classification with ensemble learner | 90% indoor and 91% outdoor | training needed | MS-B083 mini host equipped with an Intel 5300 NIC; both the transmitter and receiver were configured with a single antenna |

| Device-free activity recognition using ultra-wideband radios [20] | Activities: standing, sitting, lying | UWB radios, classification with random forest | 95.6% | training needed | UWB (ultra-wideband) transceivers and UWB transmitter; we utilized the DW1000 Evaluation Board (EVB1000) provided by DecaWave |

| FALAR [22] | Activities: walk, run, sit down, boxing, and golf swinging | Fine-grained CSI-based, classification with CSVD-NMF | 90% | training needed | two TP-LINK N750 wireless dual-band routers |

| System | Objective of Sensing | Methodology | Performance | Complexity | Devices |

|---|---|---|---|---|---|

| WiWho [14] | Gait of single person | CSI-based, a decision tree-based classifier | 80% with 6 people | training needed | a laptop equipped with an Intel 5300 NIC and Asus RT-AC66U routers |

| A Trained-once Crowd Counting Method Using Differential Wi-Fi Channel State Information [46] | Count the number of people in an area (up to seven people) | CSI-based, classification using the linear discriminant classifier | 74% for Room A (5 m × 6 m) and 52% for Room C (6 m × 12.5 m) | training needed | a commercially available 2.4 GHz Wi-Fi b/g/n access point (AP) was utilized as a dual-antenna transmitter; additionally, a laptop running Ubuntu 10.04 LTS equipped with an Intel Wi-Fi Link 5300 wireless NIC, featuring three antennas, served as the receiver |

| WiDMove [44] | Human direction detection | CSI-based, classification using SVM | 80% | training needed | two computers were utilized in the experiment, each equipped with an Intel Core i7 processor, 8 GB of RAM, and running Ubuntu 14.04; both computers were also equipped with Atheros ath9k AR9380 NICs |

| WifiU [45] | Walking movement | CSI-based, PCA, SVM classifiers | 79.28%/89.52%/93.05% (top-1/-2/-3, 50 subjects) | training needed | NetGear JR6100 Wi-Fi router and a ThinkPad X200 laptop equipped with an Intel 5300 Wi-Fi NIC |

| System | Objective of Sensing | Methodology | Performance | Complexity | Devices |

|---|---|---|---|---|---|

| WiDir [9] | Detect walking direction | CSI-based, Fresnel direction calculation | median error of less than 10 degrees | no training needed | a Wi-Fi access point (AP) and two computers equipped with wireless cards |

| WiDar [10] | Detect human velocity (direction and speed) and location | CSI Doppler, STFT Gaussian window, pass band filter PCA | median location errors of 25 cm and 38 cm with and without initial positions, respectively; median relative velocity error of 13% | no training | three mini-desktops equipped with Intel 5300 NICs; one of the mini-desktops was designated as the transmitter, while the other two were employed as receivers |

| WiDet [31] | Walking direction detection (up/down) | RSSI-based, CNN algorithm | 94.5% for a total of 163 walking events | training needed | the system operates on a Raspberry Pi development board, running on the Linux kernel version 2.6. It comprises three Wi-Fi transmitters and a single Wi-Fi receiver |

| Wi-Fi-Enabled Smart Human Dynamics Monitoring [32] | Counting people, walking direction detection | RSSI-based, CNN algorithm | 90% | training needed | two sets of laptops, with each pair equipped with Intel 5300 802.11n Wi-Fi NICs, functioning as Wi-Fi transceivers |

| Indoor Device-Free Activity Recognition Based on Radio Signal [33] | Walking direction detection | PRR-based, KNN and SVM classifier | 91% | training needed | four USRP transmitter nodes and one receiver |

| Walking Direction Detection using Received Signal Strengths in Correlated RF Links [34] | Walking entry and exit directions | RSSI-based, dynamic time warping (DTW) | the percentage ranges from 40% to 99%, depending on the specific pair of links utilized | training needed | the XM2110 node, developed by MEMSIC Inc.; this node combines an ATmega1281 processing chip with a mote module that supports the IEEE 802.15.4 protocol |

| Gate-ID [35] | User’s gait in two walking directions (left, right) | CSI-based, deep learning algorithms | 90.7% | training needed | an HP laptop and a Netgear R7000 Wi-Fi router |

| WiDIGR [36] | Walking direction detection | CSI-based, SVM classifier | 78.28% for apartment, 92.83% for empty room for training data | training needed | three laptops equipped with Intel 5300 wireless NICs |

| Our Approach | Walking direction detection (left, right, up, down) and empty | CSI-based, SVM classifier | 92.9%, 95.1%, and 89% for untrained data of the classroom, meeting room, and classroom + meeting room, respectively | training needed | two TP-LINK TLWDR4300 wireless routers and a single-user laptop |

References

1. Aggarwal, J.K.; Ryoo, M.S. Human activity analysis: A review. ACM Comput. Surv. 2011, 43, 1–43.

2. Adib, F.; Kabelac, Z.; Katabi, D. Multi-person motion tracking via RF body reflections. In Proceedings of the Usenix NSDI, Santa Clara, CA, USA, 7–8 May 2015.

3. Adib, F.; Kabelac, Z.; Katabi, D.; Miller, R.C. 3D tracking via body radio reflections. In Proceedings of the Usenix NSDI, Berkeley, CA, USA, 2–5 April 2013.

4. Lyonnet, B.; Ioana, C.; Amin, M.G. Human gait classification using microdoppler time-frequency signal representations. In Proceedings of the IEEE Radar Conference, Arlington, VA, USA, 10–14 May 2010; pp. 915–919.

5. Dorp, P.V.; Groen, F. Feature-based human motion parameter estimation with radar. IET Radar Sonar Navig. 2008, 2, 135–145.

6. Ertin, E.; Stohs, N.; Kumar, S.; Raij, A.; al’Absi, M.; Shah, S. AutoSense: Unobtrusively wearable sensor suite for inferring the onset, causality, and consequences of stress in the field. In Proceedings of the ACM Sensys, Seattle, WA, USA, 1–4 November 2011.

7. Yatani, K.; Truong, K.N. Bodyscope: A wearable acoustic sensor for activity recognition. In Proceedings of the ACM UbiComp, Pittsburgh, PA, USA, 5–8 September 2012; pp. 341–350.

8. Wang, W.; Liu, A.X.; Shahzad, M.; Ling, K.; Lu, S. Understanding and modeling of wifi signal based human activity recognition. In Proceedings of the 21st Annual International Conference on Mobile Computing and Networking—ACM, Paris, France, 7–11 September 2015; pp. 65–76.

9. Wu, D.; Zhang, D.; Xu, C.; Wang, Y.; Wang, H. WiDir: Walking direction estimation using wireless signals. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp ’16), Heidelberg, Germany, 12–16 September 2016; pp. 351–362.

10. Qian, K.; Wu, C.; Yang, Z.; Liu, Y.; Jamieson, K. Widar: Decimeter-level passive tracking via velocity monitoring with commodity Wi-Fi. In Proceedings of the 18th ACM International Symposium on Mobile Ad Hoc Networking and Computing, New York, NY, USA, 10–14 July 2017.

11. Pan, X.; Jiang, T.; Li, X.; Ding, X.; Wang, Y.; Li, Y. Dynamic hand gesture detection and recognition with WiFi signal based on 1d-CNN. In Proceedings of the 2019 IEEE International Conference on Communications Workshops (ICC Workshops), Shanghai, China, 20–24 May 2019.

12. Han, Z.; Lu, Z.; Wen, X.; Zhao, J.; Guo, L.; Liu, Y. In-air handwriting by passive gesture tracking using commodity WiFi. IEEE Commun. Lett. 2020, 24, 2652–2656.

13. Wang, Y.; Wu, K.; Ni, L.M. Wifall: Device-free fall detection by wireless networks. IEEE Trans. Mob. Comput. 2016, 16, 581–594.

14. Zeng, Y.; Pathak, P.H.; Mohapatra, P. Wiwho: Wifi-based person identification in smart spaces. In Proceedings of the 15th International Conference on Information Processing in Sensor Networks, Vienna, Austria, 11–14 April 2016; p. 4.

15. Yang, J.; Zou, H.; Jiang, H.; Xie, L. Device-free occupant activity sensing using WiFi-enabled IoT devices for smart homes. IEEE Internet Things J. 2018, 5, 3991–4002.

16. Zou, H.; Zhou, Y.; Yang, J.; Gu, W.; Xie, L.; Spanos, C. Freedetector: Device-free occupancy detection with commodity wifi. In Proceedings of the Sensing, Communication and Networking (SECON Workshops), 2017 IEEE International Conference, San Diego, CA, USA, 12 June 2017; pp. 1–5.

17. Lee, H.; Ahn, C.R.; Choi, N. Fine-grained occupant activity monitoring with Wi-Fi channel state information: Practical implementation of multiple receiver settings. Adv. Eng. Inform. 2020, 46, 101147.

18. Tegou, T.; Papadopoulos, A.; Kalamaras, I.; Votis, K.; Tzovaras, D. Using auditory features for WiFi channel state information activity recognition. SN Comput. Sci. 2020, 1, 1–11.

19. Yang, X.; He, A.; Zhou, M.; Jiang, Q.; Li, Z. Human Activity Recognition System Based on Channel State Information. In Proceedings of the 2018 IEEE 8th Annual International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), Tianjin, China, 19–23 July 2018.

20. Sharma, S.; Mohammadmoradi, H.; Heydariaan, M.; Gnawali, O. Device-free activity recognition using ultra-wideband radios. In Proceedings of the 2019 International Conference on Computing, Networking and Communications (ICNC), Honolulu, HI, USA, 18–21 February 2019.

21. Zou, H.; Zhou, Y.; Yang, J.; Jiang, H.; Xie, L.; Spanos, C.J. WiFi-enabled device-free gesture recognition for smart home automation. In Proceedings of the 2018 IEEE 14th International Conference on Control and Automation (ICCA), Anchorage, AK, USA, 12–15 June 2018.

22. Yang, J.; Zou, H.; Jiang, H.; Xie, L. Fine-grained adaptive location-independent activity recognition using commodity WiFi. In Proceedings of the 2018 IEEE Wireless Communications and Networking Conference (WCNC), Barcelona, Spain, 15–18 April 2018.

23. Huang, S.; Wang, D.; Zhao, R.; Zhang, Q. Wiga: A wifi-based contactless activity sequence recognition system based on deep learning. In Proceedings of the 2019 15th International Conference on Mobile Ad-Hoc and Sensor Networks (MSN), Shenzhen, China, 11–13 December 2019.

24. Zou, H.; Yang, J.; Zhou, Y.; Xie, L.; Spanos, C.J. Robust wifi-enabled device-free gesture recognition via unsupervised adversarial domain adaptation. In Proceedings of the 2018 27th International Conference on Computer Communication and Networks (ICCCN), Hangzhou, China, 30 July–2 August 2018.

25. Kong, H.; Lu, L.; Yu, J.; Chen, Y.; Kong, L.; Li, M. Fingerpass: Finger gesture-based continuous user authentication for smart homes using commodity WiFi. In Proceedings of the Twentieth ACM International Symposium on Mobile Ad Hoc Networking and Computing, Catania, Italy, 2–5 July 2019.

26. Zou, H.; Zhou, Y.; Yang, J.; Xie, L.; Spanos, C. Deepsense: Devicefree human activity recognition via autoencoder long-term recurrent convolutional network. In Proceedings of the IEEE International Conference on Communications (ICC), Kansas City, MO, USA, 20–24 May 2018.

27. Guo, L.; Wen, X.; Lu, Z.; Shen, X.; Han, Z. WiRoI: Spatial region of interest human sensing with commodity WiFi. In Proceedings of the 2019 IEEE Wireless Communications and Networking Conference (WCNC), Marrakesh, Morocco, 15–18 April 2019.

28. Bellavista-Parent, V.; Torres-Sospedra, J.; Pérez-Navarro, A. Comprehensive analysis of applied machine learning in indoor positioning based on wi-fi: An extended systematic review. Sensors 2022, 22, 4622.

29. Wang, Y.; Liu, J.; Chen, Y.; Gruteser, M.; Yang, J.; Liu, H. E-eyes: Device-free location-oriented activity identification using fine-grained wifi signatures. In Proceedings of the 20th Annual International Conference on Mobile Computing and Networking, Maui, HI, USA, 7–14 September 2014; pp. 617–628.

30. Tan, S.; Yang, J. WiFinger: Leveraging commodity WiFi for fine-grained finger gesture recognition. In Proceedings of the 17th ACM International Symposium on Mobile Ad Hoc Networking and Computing, Chennai, India, 10–14 July 2016; pp. 201–210.

31. Huang, H.; Lin, S. WiDet: Wi-Fi based device-free passive person detection with deep convolutional neural networks. In Proceedings of the 21st ACM International Conference on Modeling, Analysis and Simulation of Wireless and Mobile Systems, Montreal, QC, Canada, 28 October–2 November 2018; pp. 53–60.

32. Guo, X.; Liu, B.; Shi, C.; Liu, H.; Chen, Y.; Chuah, M.C. WiFi-enabled smart human dynamics monitoring. In Proceedings of the 15th ACM Conference on Embedded Network Sensor Systems, Delft, The Netherlands, 6–8 November 2017; pp. 1–13.

33. Huang, X.; Dai, M. Indoor device-free activity recognition based on radio signal. IEEE Trans. Veh. Technol. 2016, 66, 5316–5329.

34. Liu, T.; Chen, Z.M.; Liang, Z.Q. Walking direction detection using received signal strengths in correlated RF links. In Proceedings of the 2017 11th Asian Control Conference (ASCC), Gold Coast, QLD, Australia, 17–20 December 2017; pp. 2872–2877.

35. Zhang, J.; Wei, B.; Wu, F.; Dong, L.; Hu, W.; Kanhere, S.S.; Luo, C.; Yu, S.; Cheng, J. Gate-ID: WiFi-based human identification irrespective of walking directions in smart home. IEEE Internet Things J. 2020, 8, 7610–7624.

36. Zhang, L.; Wang, C.; Ma, M.; Zhang, D. WiDIGR: Direction-independent gait recognition system using commercial Wi-Fi devices. IEEE Internet Things J. 2019, 7, 1178–1191.

37. IEEE Standard for Information Technology—Local and Metropolitan Area Networks—Specific Requirements—Part 11: Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) Specifications Amendment 5: Enhancements for Higher Throughput; IEEE Std 802.11n-2009 (Amendment to IEEE Std 802.11-2007 as amended by IEEE Std 802.11k-2008, IEEE Std 802.11r-2008, IEEE Std 802.11y-2008, and IEEE Std 802.11w-2009); IEEE: Piscataway, NJ, USA, 2009; pp. 1–565.

38. Halperin, D.; Hu, W.; Sheth, A.; Wetherall, D. Tool release: Gathering 802.11 n traces with channel state information. ACM SIGCOMM Comput. Commun. Rev. 2011, 41, 53.

39. Pu, Q.; Gupta, S.; Gollakota, S.; Patel, S. Whole-home gesture recognition using wireless signals. In Proceedings of the 19th Annual International Conference on Mobile Computing & Networking, Miami, FL, USA, 30 September–4 October 2013; pp. 27–38.

40. Bu, Q.; Yang, G.; Ming, X.; Zhang, T.; Feng, J.; Zhang, J. Deep transfer learning for gesture recognition with WiFi signals. Pers. Ubiquitous Comput. 2020, 26, 543–554.

41. Chen, J.; Li, F.; Chen, H.; Yang, S.; Wang, Y. Dynamic gesture recognition using wireless signals with less disturbance. Pers. Ubiquitous Comput. 2019, 23, 17–27.

42. Gu, Y.; Ren, F.; Li, J. Paws: Passive human activity recognition based on wifi ambient signals. IEEE Internet Things J. 2015, 3, 796–805.

43. Abdelnasser, H.; Youssef, M.; Harras, K.A. Wigest: A ubiquitous wifi-based gesture recognition system. In Proceedings of the 2015 IEEE Conference on Computer Communications (INFOCOM), Hong Kong, 26 April–1 May 2015; pp. 1472–1480.

44. Da Silva, B.S.; Laureano, G.T.; Abdallah, A.S.; Cardoso, K.V. Widmove: Sensing movement direction using ieee 802.11 n interfaces. In Proceedings of the 2018 IEEE Canadian Conference on Electrical & Computer Engineering (CCECE), Quebec, QC, Canada, 13–16 May 2018; pp. 1–4.

45. Wang, W.; Liu, A.X.; Shahzad, M. Gait recognition using wifi signals. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Heidelberg, Germany, 12–16 September 2016; pp. 363–373.

46. Di Domenico, S.; De Sanctis, M.; Cianca, E.; Bianchi, G. A trained-once crowd counting method using differential wifi channel state information. In Proceedings of the 3rd International on Workshop on Physical Analytics, Singapore, 26 June 2016; pp. 37–42.

47. Xie, Y.; Li, Z.; Li, M. Precise power delay profiling with commodity WiFi. In Proceedings of the 21st Annual international conference on Mobile Computing and Networking, Paris, France, 7–11 September 2015; pp. 53–64.

48. Schulz, M.; Wegemer, D.; Hollick, M. Nexmon: The c-Based Firmware Patching Framework. 2017. Available online: https://nexmon.org (accessed on 1 September 2023).

49. Sen, S.; Radunovic, B.; Choudhury, R.R.; Minka, T. You are facing the Mona Lisa: Spot localization using PHY layer information. In Proceedings of the 10th International Conference on Mobile Systems, Applications, and Services, Low Wood Bay Lake District, UK, 25–29 June 2012; pp. 183–196.

50. Chowdhury, T.Z. Using Wi-Fi Channel State Information (CSI) for Human Activity Recognition and Fall Detection. Ph.D. Thesis, University of British Columbia, Vancouver, BC, Canada, 2018.

51. Burkov, A. The Hundred-Page Machine Learning Book; Andriy Burkov: Quebec City, QC, Canada, 2019; Volume 1.

52. Ali, H.A.H.; Seytnazarov, S. Human-Walking-Direction-Detection-Using-Wireless-Signals-and-Machine-and-Deep-Learning. Available online: https://github.com/hananawad12/Human-Walking-Direction-Detection-Using-Wireless-Signals-and-Machine-and-Deep-Learning (accessed on 6 December 2023).

53. Ali, H.A.H. Dataset. Available online: https://figshare.com/articles/dataset/HWDD_Dataset_zip_Dataset_for_Human_Walking_Direction_Detection_Using_Wireless_Signals_Channel_State_Information_CSI_/24718371/3 (accessed on 6 December 2023).

54. Zhang, J.; Wei, B.; Hu, W.; Kanhere, S.S. Wifi-id: Human Identification Using Wifi Signal. In Proceedings of the 2016 International Conference on Distributed Computing in Sensor Systems (DCOSS), Washington, DC, USA, 26–28 May 2016; pp. 75–82.

| Activities | Left, Right, Up, Down, Empty |

|---|---|

| # of participants involved | 9 |

| # of environments used | 2 |

| Wi-Fi router | TP-link TLWDR4300 |

| Channel bandwidth | 20 MHz |

| Frequency | 2.4 GHz |

| Antennas | 2TX × 2RX |

| # of subcarriers | 56 |
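
With the settings in the table above, each received packet carries 2 × 2 × 56 = 224 complex CSI values. The sketch below shows one way such a capture could be arranged and split into amplitude and phase. The array layout, the packet count, and the random data are assumptions for illustration only; they are not taken from the authors' dataset format.

```python
import numpy as np

N_TX, N_RX, N_SUBCARRIERS = 2, 2, 56  # from the settings table
n_packets = 100                       # hypothetical capture length

rng = np.random.default_rng(0)
shape = (n_packets, N_TX, N_RX, N_SUBCARRIERS)
# Synthetic complex CSI standing in for real measurements.
csi = rng.normal(size=shape) + 1j * rng.normal(size=shape)

amplitude = np.abs(csi)
phase = np.unwrap(np.angle(csi), axis=-1)  # unwrap along the subcarrier axis

# Flatten all streams and subcarriers into one feature axis per packet.
values_per_packet = N_TX * N_RX * N_SUBCARRIERS
features = amplitude.reshape(n_packets, values_per_packet)
```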

| Activity | Classroom: Empty | Classroom: Down | Classroom: Left | Classroom: Right | Classroom: Up | Meeting Room: Empty | Meeting Room: Down | Meeting Room: Left | Meeting Room: Right | Meeting Room: Up | Classroom + Meeting Room: Empty | Classroom + Meeting Room: Down | Classroom + Meeting Room: Left | Classroom + Meeting Room: Right | Classroom + Meeting Room: Up |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Empty | 100 | 0 | 0 | 0 | 0 | 100 | 0 | 0 | 0 | 0 | 100 | 0 | 0 | 0 | 0 |
| Down | 0 | 100 | 0 | 0 | 0 | 0 | 92.86 | 0 | 0 | 7.14 | 0 | 86.36 | 0 | 4.55 | 9.09 |
| Left | 0 | 0 | 100 | 0 | 0 | 0 | 0 | 85.71 | 0 | 14.29 | 0 | 5.88 | 88.24 | 0 | 5.88 |
| Right | 0 | 0 | 0 | 88.89 | 11.11 | 0 | 0 | 0 | 100 | 0 | 0 | 0 | 7.69 | 84.62 | 7.69 |
| Up | 0 | 22.22 | 0 | 0 | 77.78 | 0 | 0 | 0 | 0 | 100 | 0 | 10 | 0 | 0 | 90 |
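
Each environment block of the confusion matrix above sums to 100 per row, so the diagonal entries can be read as per-class recall. The short sketch below checks this for the classroom block. It assumes that rows are the actual activity and columns the predicted activity, which the table does not state explicitly but which agrees with the recall values reported in the metrics table.

```python
import numpy as np

labels = ["Empty", "Down", "Left", "Right", "Up"]

# Classroom block of the confusion matrix, in percent.
# Assumed orientation: rows = actual activity, columns = predicted activity.
classroom = np.array([
    [100.0,   0.0,   0.0,   0.0,   0.0],
    [  0.0, 100.0,   0.0,   0.0,   0.0],
    [  0.0,   0.0, 100.0,   0.0,   0.0],
    [  0.0,   0.0,   0.0, 88.89, 11.11],
    [  0.0, 22.22,   0.0,   0.0, 77.78],
])

row_sums = classroom.sum(axis=1)          # each row should add up to 100 %
recall = np.diag(classroom) / row_sums    # fraction of each class predicted correctly
per_class_recall = dict(zip(labels, np.round(recall, 2)))
```

Precision cannot be recovered from the percentages alone, because it depends on how many samples each activity contributes to a column.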

| Activity | Classroom: Precision | Classroom: Recall | Classroom: F1 Score | Meeting Room: Precision | Meeting Room: Recall | Meeting Room: F1 Score | Classroom + Meeting Room: Precision | Classroom + Meeting Room: Recall | Classroom + Meeting Room: F1 Score |
|---|---|---|---|---|---|---|---|---|---|
| Empty | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 |
| Down | 0.83 | 1.0 | 0.91 | 1.0 | 0.93 | 0.96 | 0.86 | 0.86 | 0.86 |
| Left | 1.0 | 1.0 | 1.0 | 1.0 | 0.86 | 0.92 | 0.94 | 0.88 | 0.91 |
| Right | 1.0 | 0.89 | 0.94 | 1.0 | 1.0 | 1.0 | 0.92 | 0.85 | 0.88 |
| Up | 0.88 | 0.78 | 0.82 | 0.80 | 1.0 | 0.89 | 0.82 | 0.90 | 0.86 |
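
The F1 score is the harmonic mean of precision and recall. As a sanity check, the sketch below recomputes it for three meeting-room rows of the table above. The helper name `f1_score` and the choice of rows are illustrative, not part of the authors' code.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# (precision, recall, reported F1) for meeting-room rows of the table.
rows = {
    "Down": (1.0, 0.93, 0.96),
    "Left": (1.0, 0.86, 0.92),
    "Up": (0.80, 1.0, 0.89),
}

recomputed = {name: round(f1_score(p, r), 2) for name, (p, r, _) in rows.items()}
for name, (_, _, reported) in rows.items():
    print(f"{name}: recomputed {recomputed[name]:.2f}, reported {reported:.2f}")
```

Because the table lists precision and recall rounded to two decimals, recomputing from them can differ from the reported F1 in the last digit. For the classroom Up row, 0.88 and 0.78 give 0.83 rather than the reported 0.82.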

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ali, H.A.H.; Seytnazarov, S. Human Walking Direction Detection Using Wireless Signals, Machine and Deep Learning Algorithms. Sensors 2023, 23, 9726. https://doi.org/10.3390/s23249726
