A Comparative Study of the Typing Performance of Two Mid-Air Text Input Methods in Virtual Environments

Abstract

1. Introduction

1.1. Text Input in VR

1.2. VR Text Input via Mid-Air Interaction

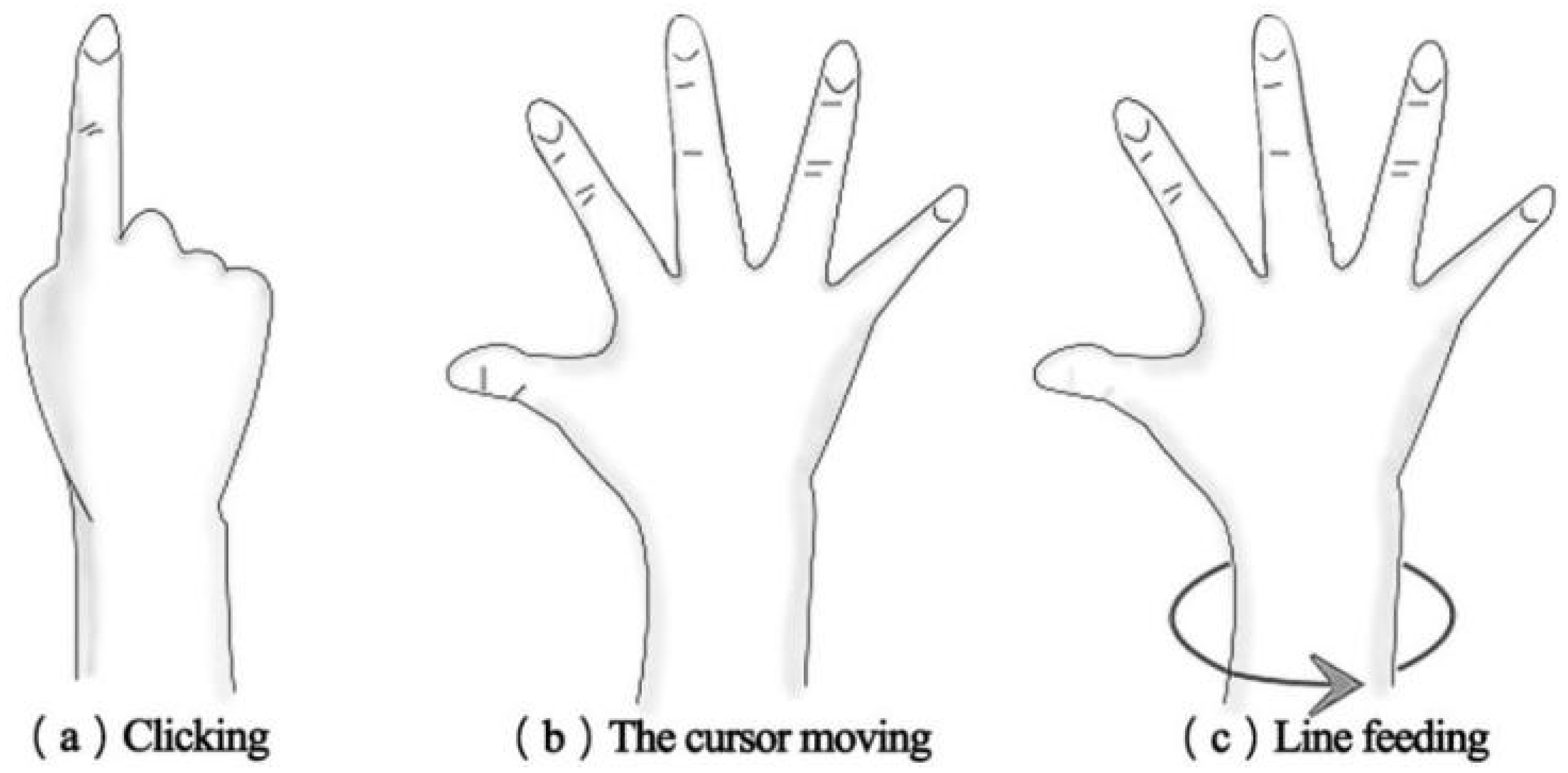

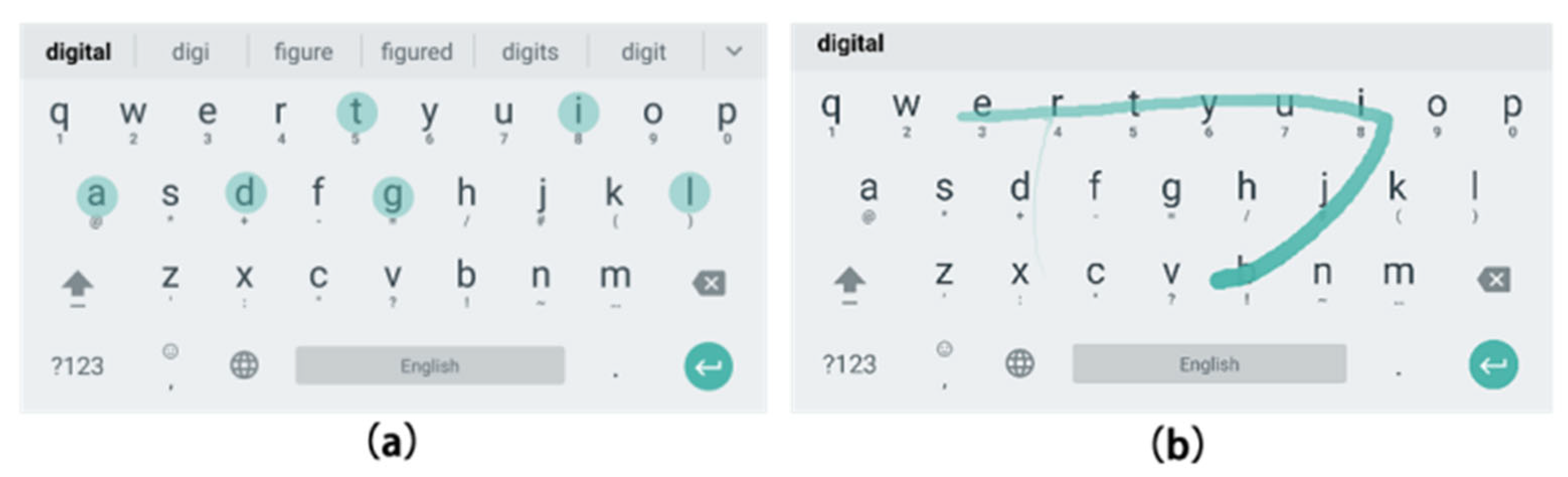

1.3. Typical Input Methods: Tap and Trace

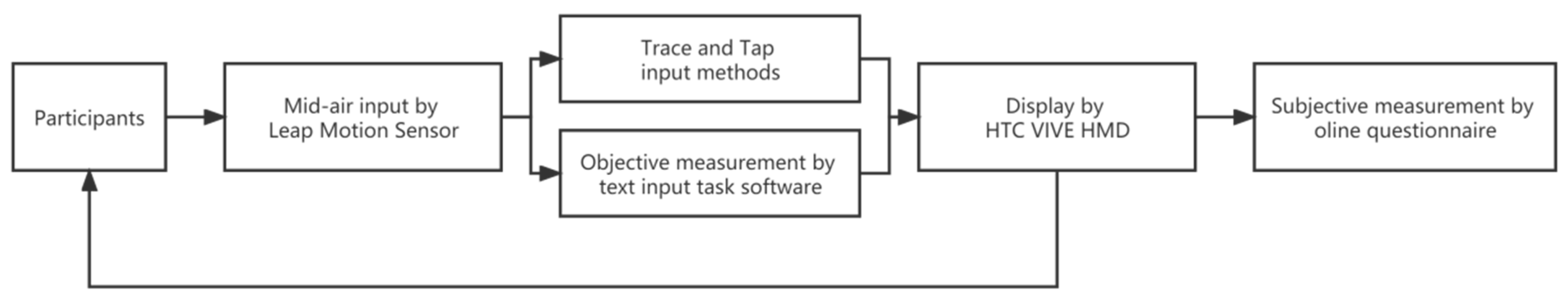

2. Materials and Methods

2.1. Participants

2.2. Apparatus

2.3. Procedure

2.4. Design

2.4.1. Typing Performance

2.4.2. Subjective Experience

2.4.3. Workload

3. Results

3.1. Typing Performance

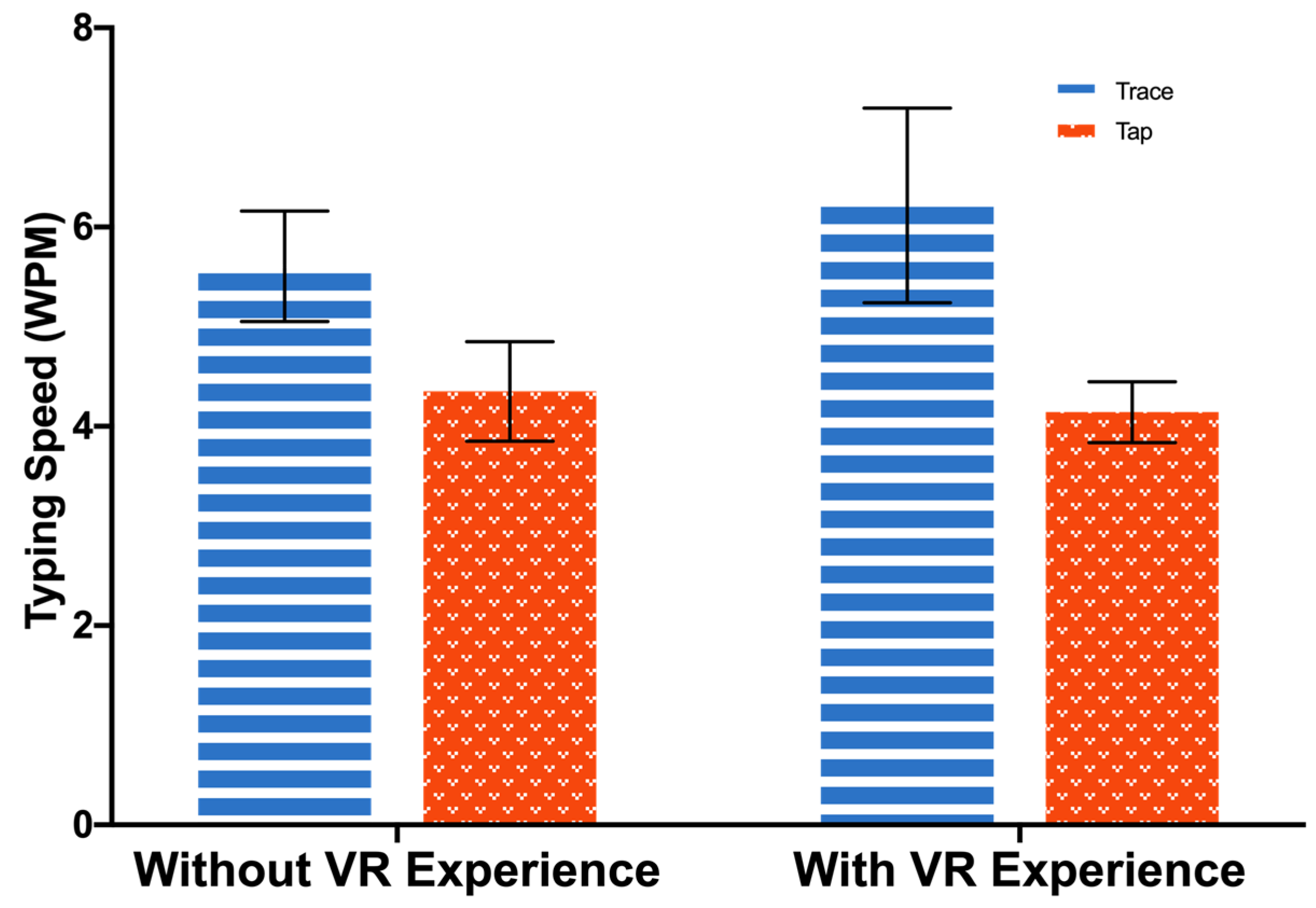

3.1.1. Words Per Minute (WPM)

3.1.2. Word Accuracy

3.1.3. Typing Efficiency

3.1.4. Effect of Learning on Typing Accuracy

3.2. Subjective Experience

3.2.1. User Experience

3.2.2. Motion Sickness

3.2.3. Perceived Exertion

3.3. Workload

3.3.1. Subjective Workload

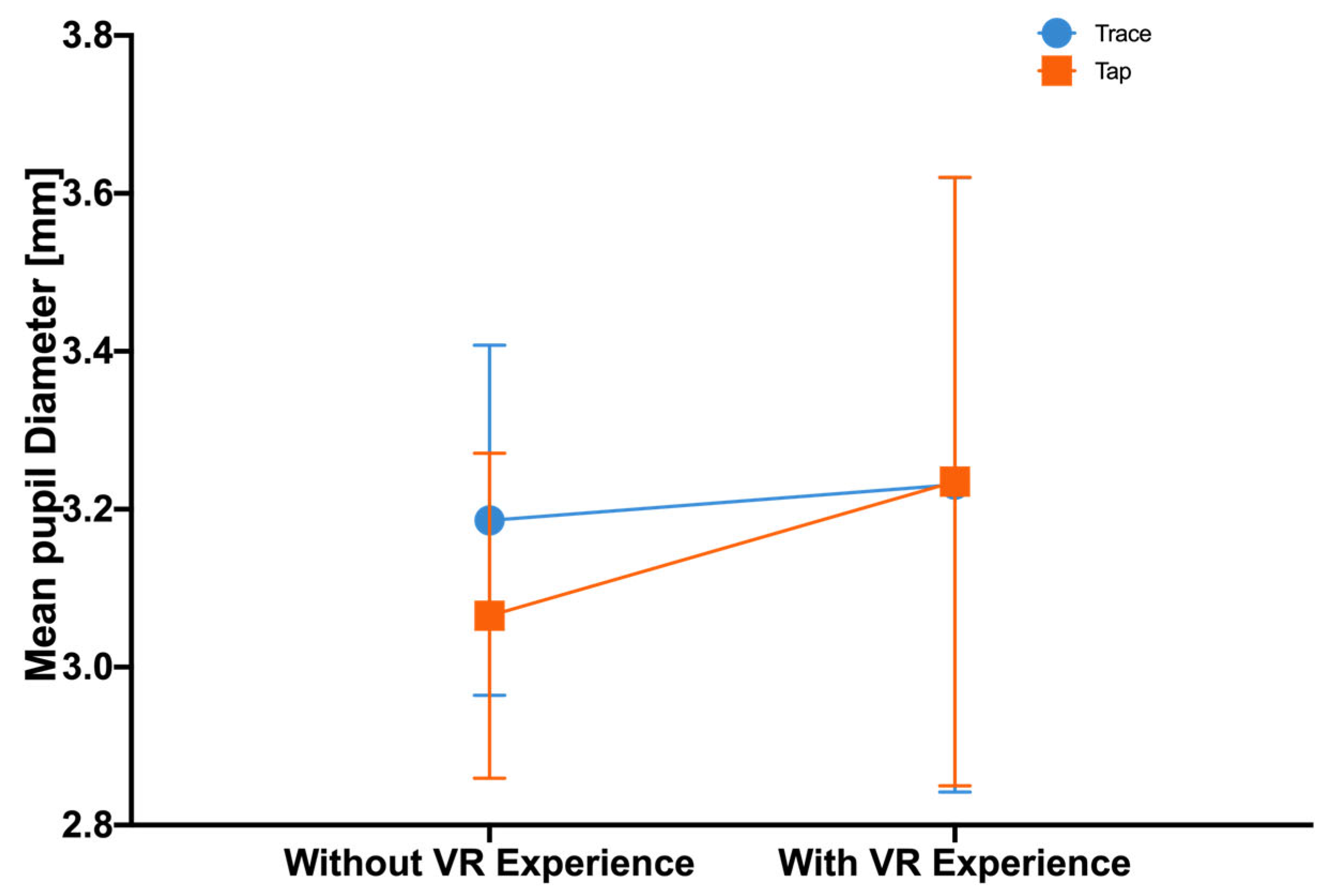

3.3.2. Cognitive Workload (Mean Pupil Diameter)

4. Discussion

4.1. The Performance of the Input Methods

4.2. The Subjective Experience of the Input Methods

4.3. The Workload of the Input Methods

5. Conclusions and Future Work

5.1. Conclusions

- (1) Regardless of prior VR experience, participants entered text more efficiently with the trace input method. Users rated the trace method as more novel, whereas they found the tap method easier to understand.

- (2) Neither input method induced significant motion sickness, but participants perceived both as requiring considerable exertion. We therefore recommend using short texts for mid-air input tasks in VR.

- (3) All participants reported higher subjective workload with the trace input method; however, once they had gained some VR experience, their frustration decreased to the level reported for the tap input method.

- (4) Participants without VR experience showed lower cognitive workload with the tap input method; however, once they had gained some VR experience, their cognitive workload increased to match that of the trace input method.

5.2. Limitation

Author Contributions

Funding

Informed Consent Statement

Conflicts of Interest


| No. | Stage | Duration (min) | Description |
|---|---|---|---|
| 1 | Pre-experiment practice | 30 | Familiarization with the tap and trace input methods: entering five phrases precisely with each method using Leap Motion |
| 2 | VR equipment training | As needed | Instruction on using the VR headset and controllers |
| 3 | Practice before experiment | 20 | Entering 15 phrases with one of the input methods for familiarization |
| 4 | Rest | 15 | Rest period to counteract potential effects of fatigue |
| 5 | Experiment | 10 | Entering 10 phrases as accurately and quickly as possible with the previously practiced input method |
| 6 | Filling out questionnaires | 10 | Removing the HMD and completing the questionnaires |
| 7 | Rest | 15 (or longer) | Rest period to alleviate possible fatigue |
| 8 | Second round of experiment | 40 | Same as steps 3–6, but with the other input method |
| 9 | Collection of demographic data | As needed | Collection of data such as age and gender |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Wang, Y.; Li, X.; Zhao, C.; Ma, N.; Guo, Z. A Comparative Study of the Typing Performance of Two Mid-Air Text Input Methods in Virtual Environments. Sensors 2023, 23, 6988. https://doi.org/10.3390/s23156988

Wang Y, Wang Y, Li X, Zhao C, Ma N, Guo Z. A Comparative Study of the Typing Performance of Two Mid-Air Text Input Methods in Virtual Environments. Sensors. 2023; 23(15):6988. https://doi.org/10.3390/s23156988

Chicago/Turabian Style: Wang, Yueyang, Yahui Wang, Xiaoqiong Li, Chengyi Zhao, Ning Ma, and Zixuan Guo. 2023. "A Comparative Study of the Typing Performance of Two Mid-Air Text Input Methods in Virtual Environments" Sensors 23, no. 15: 6988. https://doi.org/10.3390/s23156988

APA Style: Wang, Y., Wang, Y., Li, X., Zhao, C., Ma, N., & Guo, Z. (2023). A Comparative Study of the Typing Performance of Two Mid-Air Text Input Methods in Virtual Environments. Sensors, 23(15), 6988. https://doi.org/10.3390/s23156988