Robust Localization of Industrial Park UGV and Prior Map Maintenance

Abstract

1. Introduction

1.1. Odometry Based on LiDAR-IMU

1.2. The Problems in the Real-Time Localization of UGV in Industrial Parks

- Because industrial parks are large and structurally complex, traditional algorithms struggle to limit odometry drift over long distances. Low-frequency odometry with poor real-time performance can also introduce errors and delays into UGV motion control. As a result, traditional methods struggle to meet the high-frequency, real-time localization requirements of UGVs [20,21,22,23].

- Using prior maps for real-time localization can significantly improve the localization accuracy of UGVs in industrial parks. However, prior maps of large industrial parks require substantial RAM, so performing KNN searches on them is impractical for UGVs with limited onboard memory [24].

- Edge and planar features are relatively prominent in industrial park environments, but they are often accompanied by numerous unstable features. Traditional methods struggle to extract high-quality feature points efficiently and accurately, and to extract ground points separately in a targeted manner [25,26,27,28].

1.3. New Contributions

- A method is proposed for extracting high-quality feature points using a bidirectional projection plane slope difference filter. It not only reduces feature extraction time but also improves the quality of the feature points. Moreover, it extracts ground points and planar points separately.

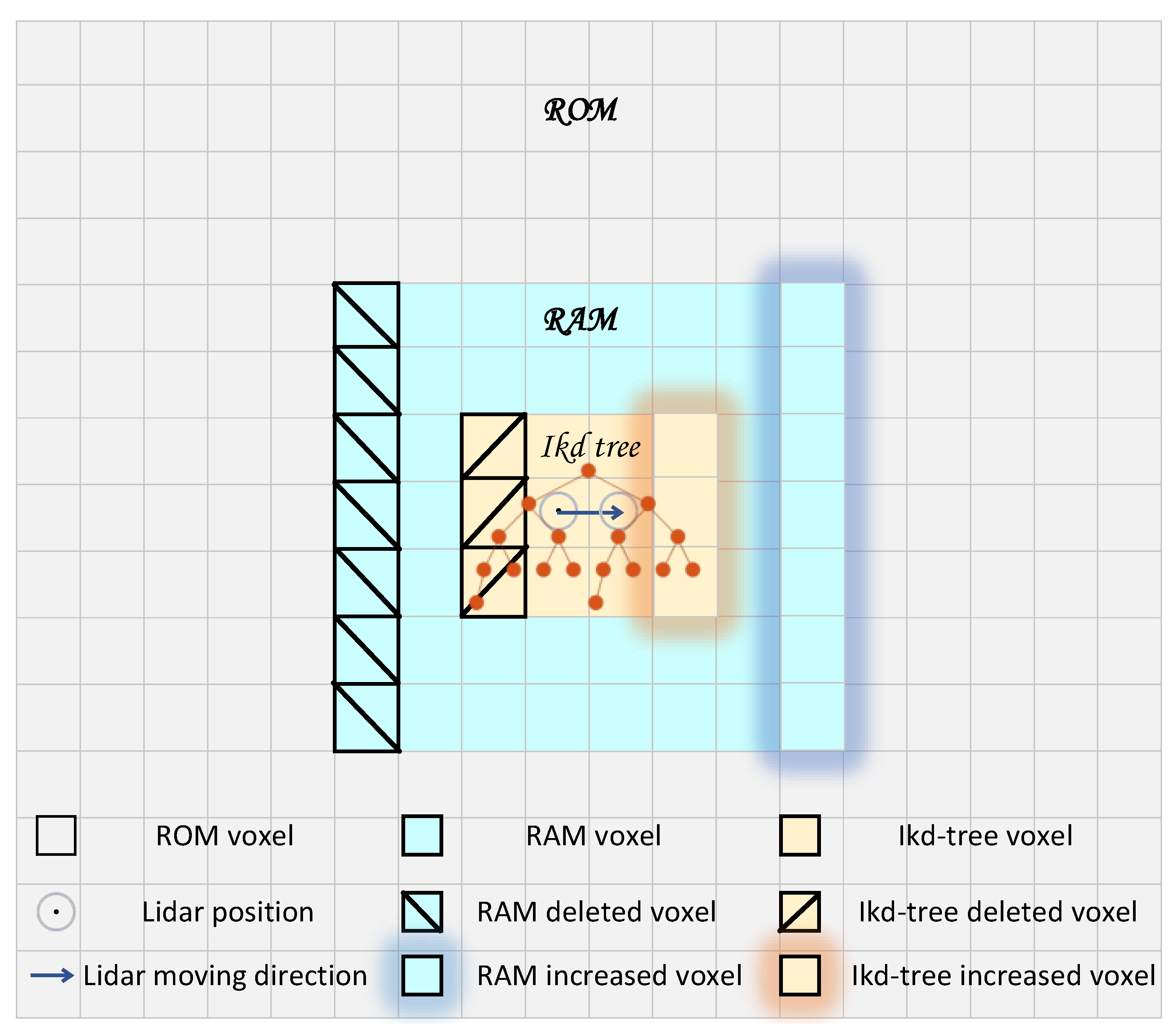

- A maintenance method for large prior maps is proposed that integrates three-layer voxels with an ikd-tree structure. This method reduces RAM consumption and enables real-time localization of UGVs on large prior maps.

- A back-end optimization model incorporating ground constraints is proposed. Using the proposed pseudo-occupancy method, it assigns adaptive weights to the observation error equations of ground and planar points, which addresses the insufficient lateral or longitudinal constraints that can arise when a plane or the ground contains only a few points.

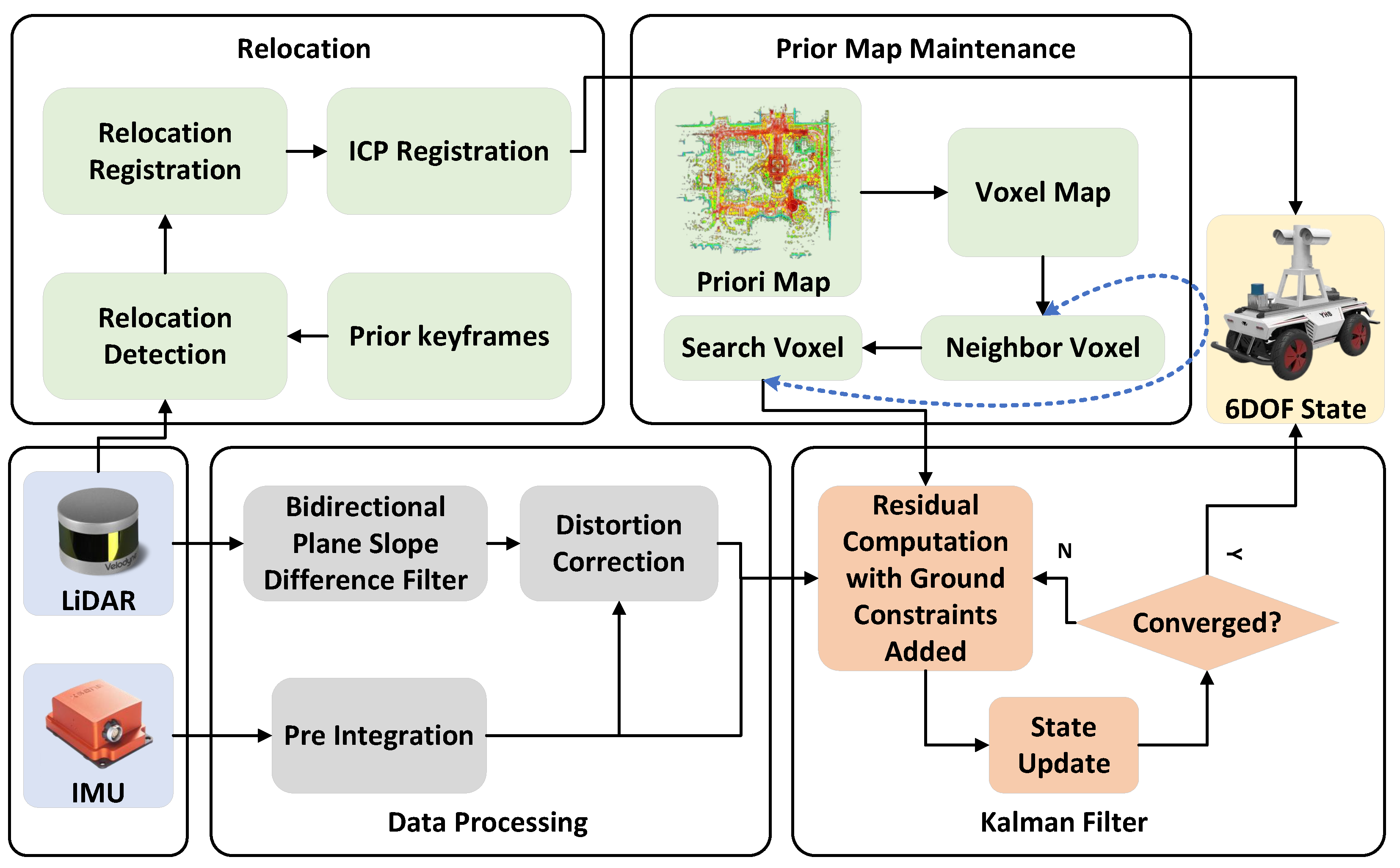

2. Overall System Framework

- Data processing for LiDAR and IMU: an initial pose estimate for the current frame is obtained by preintegrating the IMU measurements. High-quality planar and ground points are then extracted using a bidirectional projection plane slope difference filter. The IMU preintegration results are propagated backward to compensate for the motion distortion of the LiDAR points in the current frame.

- Tightly coupled LiDAR-IMU odometry: the prediction and observation error equations of the iterated extended Kalman filter (IEKF), including the ground constraints, are formulated from the extracted points and the IMU preintegration results. Within each estimation step, the pose is updated iteratively by relinearizing these equations.

- Relocation: the first frame captured by the LiDAR is matched against historical keyframes from the prior map to detect its location and correct its pose. The first frame is then registered with the successfully detected historical keyframe, which yields accurate UGV position information within the prior map.

- Prior map maintenance: three voxel layers, namely the overall ROM voxels, the nearest-neighbor RAM voxels, and the ikd-tree voxels, are maintained in real time. This keeps the prior map efficiently managed and KNN searches fast while keeping the system lightweight.

3. Tightly Coupled LiDAR-IMU Odometry

3.1. Feature Extraction Based on Bidirectional Projection Plane Slope Difference Filter

Algorithm 1: Feature extraction
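Algorithm 1 itself is not reproduced in this version of the text. As a rough illustration only, the sketch below shows one plausible reading of a bidirectional slope-difference test on a single, azimuth-ordered scan ring: each point's backward segment (previous point to current point) and forward segment (current point to next point) are compared in two projections, the horizontal XY plane and a vertical rise-over-run plane. The function `classify_ring` and the thresholds `max_turn`, `max_ground_slope` and `ground_z` are hypothetical, and the authors' actual filter may differ.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def _heading(p: Point, q: Point) -> float:
    """Direction of segment p->q projected onto the horizontal XY plane (radians)."""
    return math.atan2(q[1] - p[1], q[0] - p[0])


def _vertical_slope(p: Point, q: Point) -> float:
    """Rise over horizontal run of segment p->q (slope in a vertical projection)."""
    run = math.hypot(q[0] - p[0], q[1] - p[1])
    return (q[2] - p[2]) / run if run > 1e-9 else math.inf


def _angle_diff(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, in radians."""
    d = (a - b) % (2.0 * math.pi)
    return min(d, 2.0 * math.pi - d)


def classify_ring(points: List[Point],
                  max_turn: float = math.radians(5.0),
                  max_ground_slope: float = 0.05,
                  ground_z: float = -0.5) -> List[str]:
    """Label each point of one scan ring as 'ground', 'planar' or 'other'.

    Hypothetical thresholds: max_turn bounds the horizontal slope difference,
    max_ground_slope bounds both vertical slopes, and ground_z is a height
    gate in the sensor frame.
    """
    labels = ["other"] * len(points)  # end points lack one of the two neighbours
    for i in range(1, len(points) - 1):
        prev_p, p, next_p = points[i - 1], points[i], points[i + 1]
        # Horizontal projection: backward vs forward slope difference.
        if _angle_diff(_heading(prev_p, p), _heading(p, next_p)) > max_turn:
            continue  # sharp change: edge or unstable point, not kept as planar
        # Vertical projection: flat in both directions and low enough -> ground.
        back = _vertical_slope(prev_p, p)
        fwd = _vertical_slope(p, next_p)
        if abs(back) < max_ground_slope and abs(fwd) < max_ground_slope and p[2] < ground_z:
            labels[i] = "ground"
        else:
            labels[i] = "planar"
    return labels
```

In this sketch a point that passes the horizontal test but fails the ground test is kept as planar, so ground and planar points come out as separate sets.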

3.2. IMU Preintegration and Compensation of Point Cloud Distortion
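Section 2 states that the IMU preintegration results are propagated backward to remove motion distortion from the current LiDAR frame. The sketch below shows the underlying idea in a deliberately simplified form: planar motion (yaw plus 2D translation) at constant velocity over one sweep, with the start-of-sweep sensor frame used as the world frame, and every point re-expressed in the sensor frame at the end of the sweep. The constant-velocity model and the function `deskew` are assumptions for illustration; they are not the paper's backward-propagation scheme, which works with the full IMU-propagated 3D pose.

```python
import math
from typing import List, Sequence, Tuple

XY = Tuple[float, float]


def _rotate(x: float, y: float, yaw: float) -> XY:
    """Rotate (x, y) counter-clockwise by yaw radians."""
    c, s = math.cos(yaw), math.sin(yaw)
    return c * x - s * y, s * x + c * y


def deskew(points: Sequence[XY], times: Sequence[float],
           velocity: XY, yaw_rate: float, t_end: float) -> List[XY]:
    """Re-express each point in the sensor frame at time t_end.

    points:   (x, y) in the sensor frame at each point's own capture time
    times:    capture times relative to the start of the sweep (s)
    velocity: constant world-frame velocity (m/s); yaw_rate in rad/s
    """
    yaw_end = yaw_rate * t_end
    px_end, py_end = velocity[0] * t_end, velocity[1] * t_end
    out = []
    for (x, y), t in zip(points, times):
        # Sensor pose when this point was captured -> world coordinates.
        wx, wy = _rotate(x, y, yaw_rate * t)
        wx, wy = wx + velocity[0] * t, wy + velocity[1] * t
        # World coordinates -> sensor frame at the end of the sweep.
        out.append(_rotate(wx - px_end, wy - py_end, -yaw_end))
    return out
```

For example, a sensor moving at 1 m/s along x that sees a static landmark at x = 10 m at the start of a 0.1 s sweep and at x = 9.9 m at its end gets both observations mapped to (9.9, 0) in the end-of-sweep frame.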

3.3. Back-End Optimization Based on Iterated Extended Kalman Filter

3.3.1. Error State Estimation

3.3.2. Iterated State Update with Ground Constraints Added
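The equations of this subsection are not reproduced in this version. To illustrate the mechanics of an iterated EKF update with per-measurement adaptive weights, the sketch below uses a much smaller stand-in problem: a 2D position state observed through range measurements to known anchors. Each measurement's information 1/sigma^2 is scaled by a weight w, which is where weights such as those from the paper's pseudo-occupancy method would enter; here the weights are simply inputs. The state, the measurement model and the names `iekf_update`, `anchors` and `weights` are hypothetical and stand in for the paper's full error state and point-to-plane and ground residuals.

```python
import math
from typing import List, Sequence, Tuple

Mat2 = List[List[float]]


def _inv2(m: Mat2) -> Mat2:
    """Inverse of a 2x2 matrix."""
    a, b, c, d = m[0][0], m[0][1], m[1][0], m[1][1]
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def iekf_update(x_prior: Sequence[float], P_prior: Mat2,
                anchors: Sequence[Tuple[float, float]], ranges: Sequence[float],
                sigma: float, weights: Sequence[float],
                max_iters: int = 10, tol: float = 1e-9):
    """Iterated EKF measurement update with weighted range residuals.

    Each iteration relinearizes h(x) = ||x - anchor|| at the current iterate x_i
    and sets x_{i+1} = x_prior + K (z - h(x_i) + H (x_i - x_prior)), with
    K = (P^-1 + H^T W H)^-1 H^T W and W = diag(w_j / sigma^2).
    """
    P_inv = _inv2(P_prior)
    x = list(x_prior)
    cov = P_prior
    for _ in range(max_iters):
        A = [row[:] for row in P_inv]  # accumulates P^-1 + H^T W H
        b = [0.0, 0.0]                 # accumulates H^T W r
        for (ax, ay), z, w in zip(anchors, ranges, weights):
            dx, dy = x[0] - ax, x[1] - ay
            r = math.hypot(dx, dy)     # assumes x never coincides with an anchor
            H = (dx / r, dy / r)
            info = w / sigma ** 2      # adaptive weight scales the information
            resid = z - r + H[0] * (x[0] - x_prior[0]) + H[1] * (x[1] - x_prior[1])
            for i in range(2):
                b[i] += H[i] * info * resid
                for j in range(2):
                    A[i][j] += H[i] * info * H[j]
        cov = _inv2(A)                 # posterior covariance at this linearization
        x_new = [x_prior[k] + cov[k][0] * b[0] + cov[k][1] * b[1] for k in range(2)]
        step = math.hypot(x_new[0] - x[0], x_new[1] - x[1])
        x = x_new
        if step < tol:
            break
    return x, cov
```

In this sketch, lowering a weight (for example for a residual type backed by only a few points) reduces that residual's influence on both the correction and the posterior covariance.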

4. Relocation
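Once a historical keyframe has been detected, the first frame is registered against it. The paper's registration details are not reproduced here; the sketch below uses the classic point-to-point ICP of Besl and McKay [36], reduced to 2D with brute-force nearest neighbours so that it stays short and dependency-free. `icp_2d` and its parameters are hypothetical, and a practical system would use a KD-tree for the correspondence search and a 3D variant (for example point-to-plane).

```python
import math
from typing import List, Sequence, Tuple

XY = Tuple[float, float]


def _best_rigid_2d(src: Sequence[XY], dst: Sequence[XY]) -> Tuple[float, float, float]:
    """Closed-form rotation angle and translation minimizing sum ||R s + t - d||^2."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(p[0] for p in dst) / n, sum(p[1] for p in dst) / n
    num = den = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy
        num += ax * by - ay * bx  # cross terms -> sin(theta)
        den += ax * bx + ay * by  # dot terms   -> cos(theta)
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def icp_2d(source: Sequence[XY], target: Sequence[XY],
           max_iters: int = 30, tol: float = 1e-10) -> Tuple[float, float, float]:
    """Align source to target; returns the accumulated (theta, tx, ty)."""
    theta, tx, ty = 0.0, 0.0, 0.0
    cur: List[XY] = list(source)
    for _ in range(max_iters):
        # Brute-force nearest neighbour in the target for every current point.
        matches = [min(target, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                   for p in cur]
        d_theta, dtx, dty = _best_rigid_2d(cur, matches)
        c, s = math.cos(d_theta), math.sin(d_theta)
        cur = [(c * x - s * y + dtx, s * x + c * y + dty) for x, y in cur]
        # Compose the increment with the transform accumulated so far.
        theta += d_theta
        tx, ty = c * tx - s * ty + dtx, s * tx + c * ty + dty
        if abs(d_theta) < tol and math.hypot(dtx, dty) < tol:
            break
    return theta, tx, ty
```

ICP only converges to the right pose from a nearby starting point, which is why a keyframe detection step that supplies an approximate location precedes the registration.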

5. Prior Map Maintenance

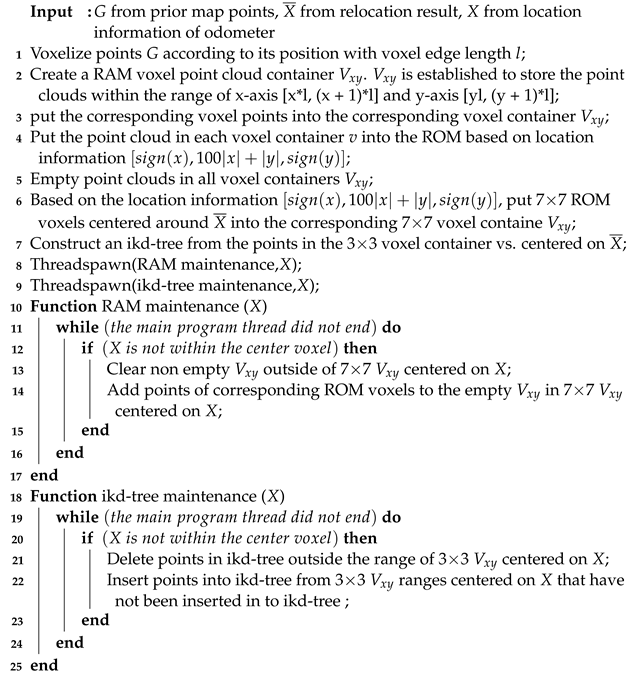

Algorithm 2: Map maintenance
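Algorithm 2 is not reproduced in this version of the text. The sketch below illustrates, under stated assumptions, how three voxel layers of the kind described in Section 2 can be kept in step with the vehicle: the full set of prior-map voxels stays on storage (the ROM layer), a square window of voxels around the UGV is held in RAM, and a smaller window is kept in the ikd-tree for KNN search. On every update it returns which voxels to load, unload, insert into the tree and delete from it. The class `ThreeLayerMap`, the 80 m voxel size and the window radii are hypothetical; the radii are chosen only so that the tree window spans 3 × 80 m = 240 m, the ikd-tree map range listed in the results table.

```python
import math
from typing import Dict, Iterable, Set, Tuple

Key = Tuple[int, int]


def voxel_key(x: float, y: float, size: float) -> Key:
    """Integer index of the square voxel containing (x, y)."""
    return math.floor(x / size), math.floor(y / size)


def window(center: Key, radius: int) -> Set[Key]:
    """All voxel keys within a (2*radius+1)^2 square around center."""
    cx, cy = center
    return {(cx + i, cy + j)
            for i in range(-radius, radius + 1)
            for j in range(-radius, radius + 1)}


class ThreeLayerMap:
    def __init__(self, stored_keys: Iterable[Key], voxel_size: float = 80.0,
                 ram_radius: int = 2, tree_radius: int = 1):
        assert tree_radius <= ram_radius, "tree window must lie inside the RAM window"
        self.stored = set(stored_keys)  # layer 1: every prior-map voxel on storage
        self.ram: Set[Key] = set()      # layer 2: voxels currently loaded in RAM
        self.tree: Set[Key] = set()     # layer 3: voxels whose points are in the ikd-tree
        self.size, self.ram_r, self.tree_r = voxel_size, ram_radius, tree_radius

    def update(self, x: float, y: float) -> Dict[str, Set[Key]]:
        center = voxel_key(x, y, self.size)
        want_ram = window(center, self.ram_r) & self.stored
        want_tree = window(center, self.tree_r) & self.stored
        actions = {
            "load": want_ram - self.ram,           # read from storage into RAM
            "unload": self.ram - want_ram,         # free RAM
            "tree_insert": want_tree - self.tree,  # add points to the ikd-tree
            "tree_delete": self.tree - want_tree,  # remove points from the ikd-tree
        }
        self.ram, self.tree = want_ram, want_tree
        return actions
```

In this sketch only the boundary voxels change when the UGV crosses a voxel border, so the tree is updated incrementally rather than rebuilt.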

6. Experiment and Analysis

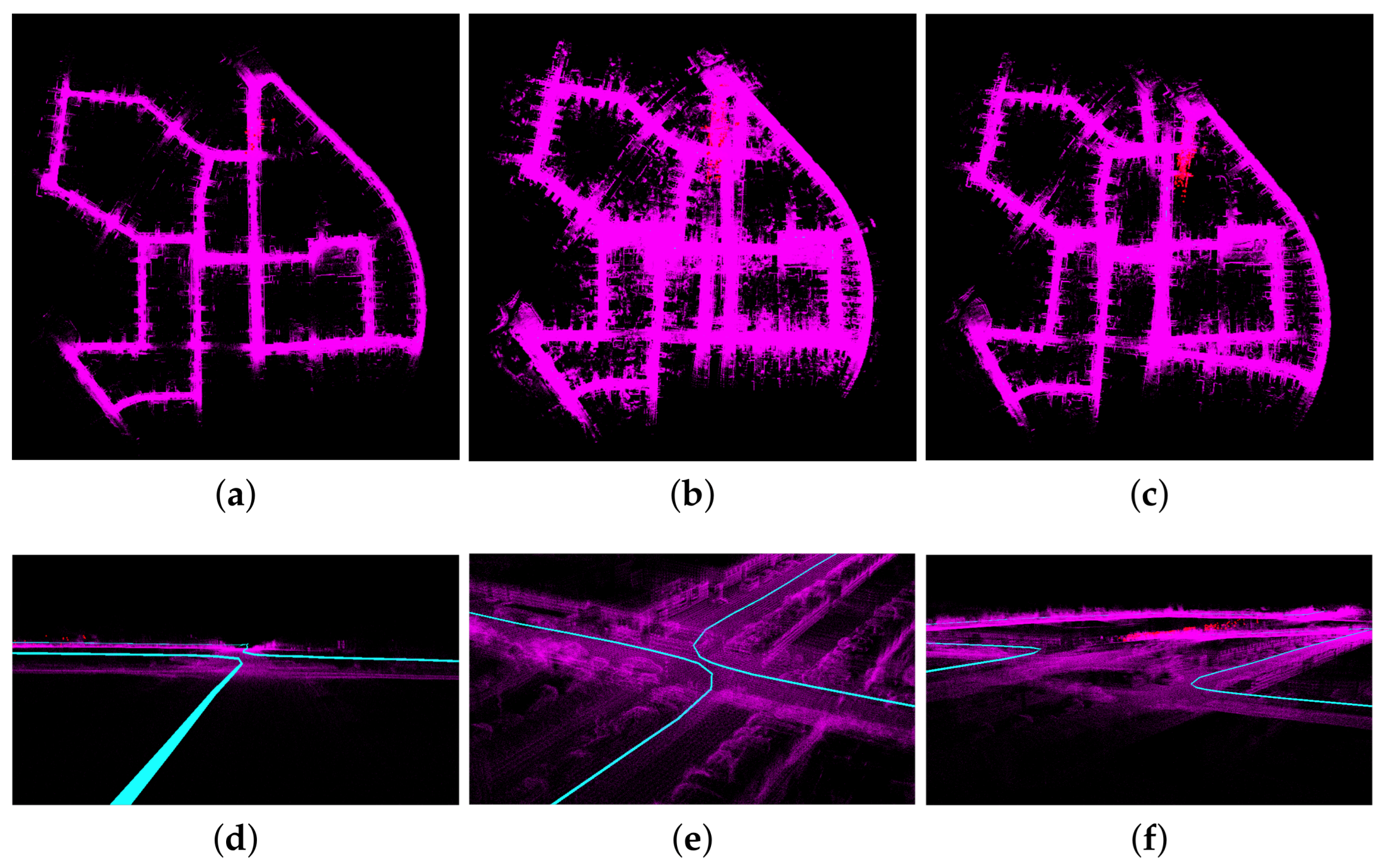

7. Analysis of Mapping Results without Prior Maps

7.1. Analysis of Mapping Accuracy without Prior Maps

7.2. Analysis of Mapping Efficiency without Prior Maps

8. Analysis of Localization Results Using Prior Maps

8.1. Analysis of Localization Accuracy Using Prior Maps

8.2. Analysis of Localization Efficiency Using Prior Maps

8.3. Analysis of Effectiveness of Map Maintenance

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Pan, Y.; Wu, N.; Qu, T.; Li, P.; Zhang, K.; Guo, H. Digital-twin-driven production logistics synchronization system for vehicle routing problems with pick-up and delivery in industrial park. Int. J. Comput. Integr. Manuf. 2021, 34, 814–828.

2. Nath, S.V.; Dunkin, A.; Chowdhary, M.; Patel, N. Industrial Digital Transformation: Accelerate Digital Transformation with Business Optimization, AI, and Industry 4.0; Packt Publishing Ltd.: Birmingham, UK, 2020.

3. Sivaneri, V.O.; Gross, J.N. UGV-to-UAV cooperative ranging for robust navigation in GNSS-challenged environments. Aerosp. Sci. Technol. 2017, 71, 245–255.

4. Guérin, F.; Guinand, F.; Brethé, J.F.; Pelvillain, H. UAV-UGV cooperation for objects transportation in an industrial area. In Proceedings of the 2015 IEEE International Conference on Industrial Technology (ICIT), Seville, Spain, 17–19 March 2015; pp. 547–552.

5. Garzón, M.; Valente, J.; Zapata, D.; Barrientos, A. An aerial–ground robotic system for navigation and obstacle mapping in large outdoor areas. Sensors 2013, 13, 1247–1267.

6. Taheri, H.; Xia, Z.C. SLAM; definition and evolution. Eng. Appl. Artif. Intell. 2021, 97, 104032.

7. Macario Barros, A.; Michel, M.; Moline, Y.; Corre, G.; Carrel, F. A comprehensive survey of visual SLAM algorithms. Robotics 2022, 11, 24.

8. Huang, L. Review on LiDAR-based SLAM techniques. In Proceedings of the 2021 International Conference on Signal Processing and Machine Learning (CONF-SPML), Stanford, CA, USA, 14 November 2021; pp. 163–168.

9. Xu, X.; Zhang, L.; Yang, J.; Cao, C.; Wang, W.; Ran, Y.; Tan, Z.; Luo, M. A review of multi-sensor fusion SLAM systems based on 3D LIDAR. Remote Sens. 2022, 14, 2835.

10. Zhang, J.; Singh, S. LOAM: Lidar odometry and mapping in real-time. In Proceedings of the Robotics: Science and Systems, Berkeley, CA, USA, 12–16 July 2014; Volume 2, pp. 1–9.

11. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

12. Shan, T.; Englot, B. LeGO-LOAM: Lightweight and ground-optimized lidar odometry and mapping on variable terrain. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 4758–4765.

13. Lin, J.; Zhang, F. Loam livox: A fast, robust, high-precision LiDAR odometry and mapping package for LiDARs of small FoV. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 3126–3131.

14. Jiao, J.; Ye, H.; Zhu, Y.; Liu, M. Robust odometry and mapping for multi-lidar systems with online extrinsic calibration. IEEE Trans. Robot. 2021, 38, 351–371.

15. Ye, H.; Chen, Y.; Liu, M. Tightly coupled 3D lidar inertial odometry and mapping. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 3144–3150.

16. Qin, C.; Ye, H.; Pranata, C.E.; Han, J.; Zhang, S.; Liu, M. LINS: A lidar-inertial state estimator for robust and efficient navigation. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 8899–8906.

17. Shan, T.; Englot, B.; Meyers, D.; Wang, W.; Ratti, C.; Rus, D. LIO-SAM: Tightly coupled lidar inertial odometry via smoothing and mapping. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 5135–5142.

18. Xu, W.; Zhang, F. FAST-LIO: A fast, robust lidar-inertial odometry package by tightly coupled iterated Kalman filter. IEEE Robot. Autom. Lett. 2021, 6, 3317–3324.

19. Xu, W.; Cai, Y.; He, D.; Lin, J.; Zhang, F. FAST-LIO2: Fast direct lidar-inertial odometry. IEEE Trans. Robot. 2022, 38, 2053–2073.

20. Wu, D.; Zhong, X.; Peng, X.; Hu, H.; Liu, Q. Multimodal Information Fusion for High-Robustness and Low-Drift State Estimation of UGVs in Diverse Scenes. IEEE Trans. Instrum. Meas. 2022, 71, 1–15.

21. Asadi, K.; Suresh, A.K.; Ender, A.; Gotad, S.; Maniyar, S.; Anand, S.; Noghabaei, M.; Han, K.; Lobaton, E.; Wu, T. An integrated UGV-UAV system for construction site data collection. Autom. Constr. 2020, 112, 103068.

22. Liu, K.; Ou, H. A light-weight lidar-inertial SLAM system with high efficiency and loop closure detection capacity. In Proceedings of the 2022 International Conference on Advanced Robotics and Mechatronics (ICARM), Guilin, China, 9–11 July 2022; pp. 284–289.

23. Liu, Z.; Chen, H.; Di, H.; Tao, Y.; Gong, J.; Xiong, G.; Qi, J. Real-time 6D lidar SLAM in large scale natural terrains for UGV. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 662–667.

24. Hamieh, I.; Myers, R.; Rahman, T. Construction of autonomous driving maps employing lidar odometry. In Proceedings of the 2019 IEEE Canadian Conference of Electrical and Computer Engineering (CCECE), Edmonton, AB, Canada, 5–8 May 2019; pp. 1–4.

25. Alharthy, A.; Bethel, J. Heuristic filtering and 3D feature extraction from LIDAR data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2002, 34, 29–34.

26. Guo, S.; Rong, Z.; Wang, S.; Wu, Y. A LiDAR SLAM with PCA-based feature extraction and two-stage matching. IEEE Trans. Instrum. Meas. 2022, 71, 1–11.

27. Li, Y.; Olson, E.B. Extracting general-purpose features from LIDAR data. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 1388–1393.

28. Serafin, J.; Olson, E.; Grisetti, G. Fast and robust 3D feature extraction from sparse point clouds. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 4105–4112.

29. Egger, P.; Borges, P.V.; Catt, G.; Pfrunder, A.; Siegwart, R.; Dubé, R. PoseMap: Lifelong, multi-environment 3D lidar localization. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 3430–3437.

30. Li, Y.; Olson, E.B. Structure tensors for general purpose LIDAR feature extraction. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 1869–1874.

31. Rusu, R.B.; Marton, Z.C.; Blodow, N.; Beetz, M. Learning informative point classes for the acquisition of object model maps. In Proceedings of the 2008 10th International Conference on Control, Automation, Robotics and Vision, Hanoi, Vietnam, 17–20 December 2008; pp. 643–650.

32. Rusu, R.B.; Bradski, G.; Thibaux, R.; Hsu, J. Fast 3D recognition and pose using the viewpoint feature histogram. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 2155–2162.

33. Raitoharju, M.; Piché, R. On computational complexity reduction methods for Kalman filter extensions. IEEE Aerosp. Electron. Syst. Mag. 2019, 34, 2–19.

34. Higham, N.J. Accuracy and Stability of Numerical Algorithms; SIAM: Philadelphia, PA, USA, 2002.

35. Kim, G.; Kim, A. Scan context: Egocentric spatial descriptor for place recognition within 3D point cloud map. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 4802–4809.

36. Besl, P.J.; McKay, N.D. Method for registration of 3-D shapes. In Proceedings of the Sensor Fusion IV: Control Paradigms and Data Structures; SPIE: Bellingham, WA, USA, 1992; Volume 1611, pp. 586–606.

37. Chen, Y.; Medioni, G. Object modelling by registration of multiple range images. Image Vis. Comput. 1992, 10, 145–155.

38. Low, K.L. Linear least-squares optimization for point-to-plane ICP surface registration. Chapel Hill Univ. North Carol. 2004, 4, 1–3.

39. Segal, A.; Haehnel, D.; Thrun, S. Generalized-ICP. In Proceedings of the Robotics: Science and Systems, Seattle, WA, USA, 28 June–1 July 2009; Volume 2, p. 435.

40. Będkowski, J.; Pełka, M.; Majek, K.; Fitri, T.; Naruniec, J. Open source robotic 3D mapping framework with ROS-robot operating system, PCL-point cloud library and cloud compare. In Proceedings of the 2015 International Conference on Electrical Engineering and Informatics (ICEEI), Denpasar, Indonesia, 10–11 August 2015; pp. 644–649.

41. Sanfourche, M.; Vittori, V.; Le Besnerais, G. eVO: A realtime embedded stereo odometry for MAV applications. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 2107–2114.

42. Muhammad, H. Htop—An Interactive Process Viewer for Linux. 2015. Available online: http://hisham.hm/htop/ (accessed on 22 May 2015).

| Method/APE | Max (m) | Mean (m) | Median (m) | Min (m) | RMSE (m) | Std (m) |
|---|---|---|---|---|---|---|
| Proposed method | 25.645 | 11.785 | 11.232 | 1.089 | 12.787 | 4.968 |
| FAST-LIO2 | 33.429 | 16.553 | 16.991 | 1.222 | 18.319 | 7.847 |
| FAST-LIO2 + curvature | 48.196 | 22.783 | 22.108 | 6.580 | 24.707 | 9.559 |

| Method | Proposed Method | FAST-LIO2 + Curvature | FAST-LIO2 |
|---|---|---|---|
| Number of feature points | 5155 | 8549 | 30321 |
| Feature extraction time (ms) | 5.423 | 8.483 | 0 |
| Odometry processing time (ms) | 7.553 | 13.164 | 29.980 |
| Total time (ms) | 12.976 | 21.647 | 29.980 |
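In the per-frame timing table, the total time is the sum of the feature extraction and odometry processing times. The short check below confirms this for each column and computes the relative reduction in total time of the proposed method, using only the values in the table; the helper `reduction_pct` is introduced here for illustration.

```python
import math

# (feature extraction, odometry processing, total) per frame, in ms, from the table
timings_ms = {
    "Proposed method": (5.423, 7.553, 12.976),
    "FAST-LIO2 + curvature": (8.483, 13.164, 21.647),
    "FAST-LIO2": (0.0, 29.980, 29.980),
}

for name, (extract, odom, total) in timings_ms.items():
    assert math.isclose(extract + odom, total, abs_tol=1e-9), name


def reduction_pct(new: float, old: float) -> float:
    """Relative reduction of `new` with respect to `old`, in percent."""
    return 100.0 * (1.0 - new / old)


for baseline in ("FAST-LIO2 + curvature", "FAST-LIO2"):
    pct = reduction_pct(timings_ms["Proposed method"][2], timings_ms[baseline][2])
    print(f"vs {baseline}: {pct:.1f}% less time per frame")
```

This gives about 40.1% less time per frame than FAST-LIO2 + curvature and about 56.7% less than FAST-LIO2.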

| Method/Closed-Loop Error | X (m) | Y (m) | Z (m) | Total (m) |
|---|---|---|---|---|
| FAST-LIO2 + Prior map (Outdoor) | 0.018 | 0.092 | 0.025 | 0.097 |
| Proposed method + Prior map method (Outdoor) | 0.030 | 0.032 | 0.0003 | 0.044 |
| FAST-LIO2 + Prior map (Indoor) | 0.018 | 0.022 | 0.004 | 0.029 |
| Proposed method + Prior map method (Indoor) | 0.017 | 0.012 | 0.0004 | 0.021 |
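In the closed-loop error table, each Total value matches the Euclidean norm of the three per-axis components to the printed precision, that is, Total = sqrt(e1^2 + e2^2 + e3^2). The check below reproduces the Total column from the three component columns; the function name `closed_loop_total` is introduced here for illustration.

```python
import math

# (component 1, component 2, component 3, reported total), in metres, from the table
closed_loop_m = {
    "FAST-LIO2 + Prior map (Outdoor)": (0.018, 0.092, 0.025, 0.097),
    "Proposed method + Prior map method (Outdoor)": (0.030, 0.032, 0.0003, 0.044),
    "FAST-LIO2 + Prior map (Indoor)": (0.018, 0.022, 0.004, 0.029),
    "Proposed method + Prior map method (Indoor)": (0.017, 0.012, 0.0004, 0.021),
}


def closed_loop_total(e1: float, e2: float, e3: float) -> float:
    """Euclidean norm of the three per-axis closed-loop errors."""
    return math.sqrt(e1 ** 2 + e2 ** 2 + e3 ** 2)


for name, (e1, e2, e3, reported) in closed_loop_m.items():
    total = closed_loop_total(e1, e2, e3)
    # Agreement to within half a unit in the third decimal place.
    assert abs(total - reported) <= 0.0005, (name, total)
    print(f"{name}: {total:.3f} m")
```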

| Method | Scene | FAST-LIO2 + Proposed Prior Map Maintenance Method | Proposed Method + Proposed Prior Map Maintenance Method |
|---|---|---|---|
| Feature extraction time (ms) | Outdoor | 0 | 0.963 |
| Feature extraction time (ms) | Indoor | 0 | 1.345 |
| Odometry processing time (ms) | Outdoor | 10.561 | 6.426 |
| Odometry processing time (ms) | Indoor | 12.634 | 6.964 |
| Total time (ms) | Outdoor | 10.561 | 7.389 |
| Total time (ms) | Indoor | 12.634 | 8.309 |

| Method | Average RAM Consumption (GB) | Average RAM Consumption Percentage | KNN Search Average Time (ms) | Number of Points in Ikd-Tree | Map Range in Ikd-Tree (m) | Prior Map Range (m) |
|---|---|---|---|---|---|---|
| Proposed map maintenance method | 15 | 98% | 0.00316 | 2,335,000 | 240 × 240 | 1320 × 1320 |
| ikd-tree | 5.2 | 34% | 0.00348 | 12,450,000 | 1320 × 1320 | 1320 × 1320 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Luo, F.; Liu, Z.; Zou, F.; Liu, M.; Cheng, Y.; Li, X. Robust Localization of Industrial Park UGV and Prior Map Maintenance. Sensors 2023, 23, 6987. https://doi.org/10.3390/s23156987
