1. Introduction

Visual object tracking is an essential branch of computer vision that has recently made significant progress and has been widely applied in various industries, including autonomous driving, intelligent security, and robotics [

1]. The current research on visual object tracking predominantly focuses on visible modality (RGB tracking). This is primarily due to the rich color and texture information that RGB images provide, making target feature extraction more feasible as well as enabling the use of relatively low-cost imaging devices based on visible modality [

2].

However, researchers have identified the limitations of RGB tracking in extreme environments such as rain, fog, and low-light conditions. Thermal infrared imaging, on the other hand, relies on the target’s thermal radiation and is less affected by intensity variations in illumination, making it possible to penetrate rain and fog. It provides a complementary and extended modality to RGB tracking [

3]. This complementarity has led researchers to progressively concentrate more on object tracking based on the fusion of these two modalities to create trackers with higher accuracy and robustness [

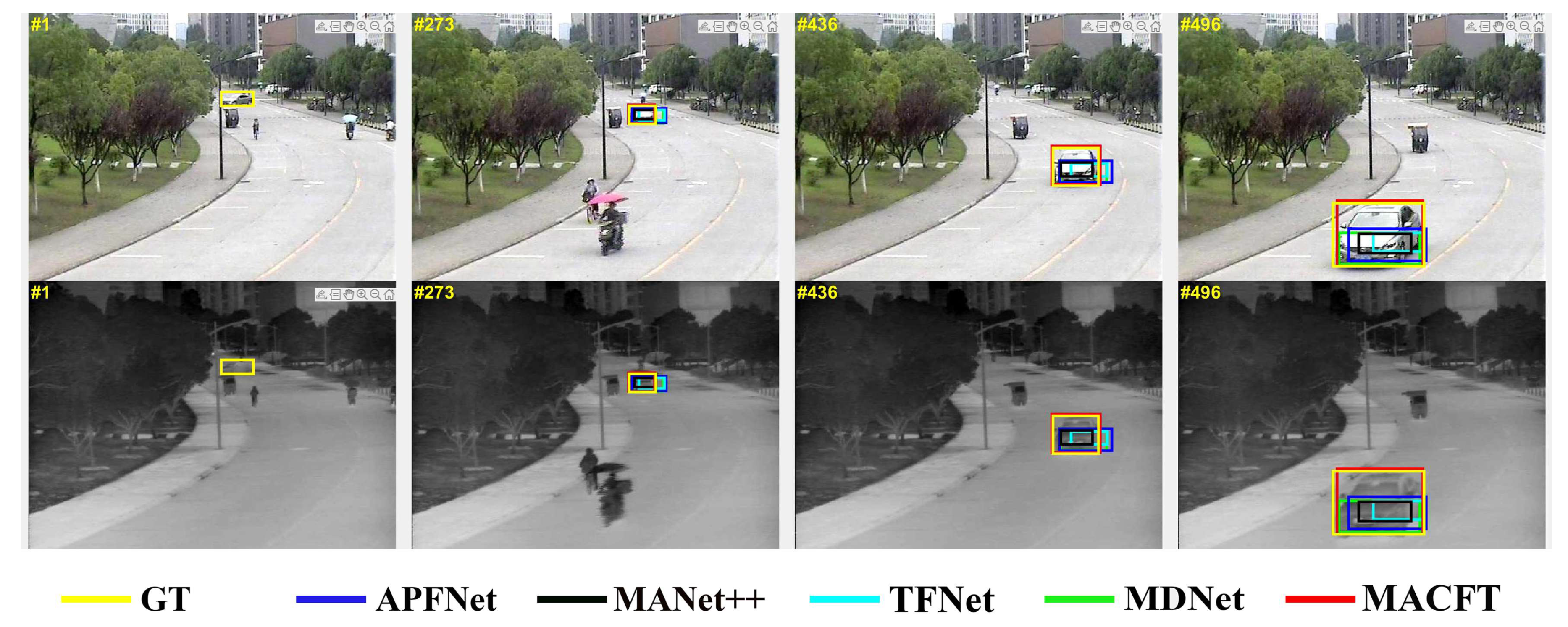

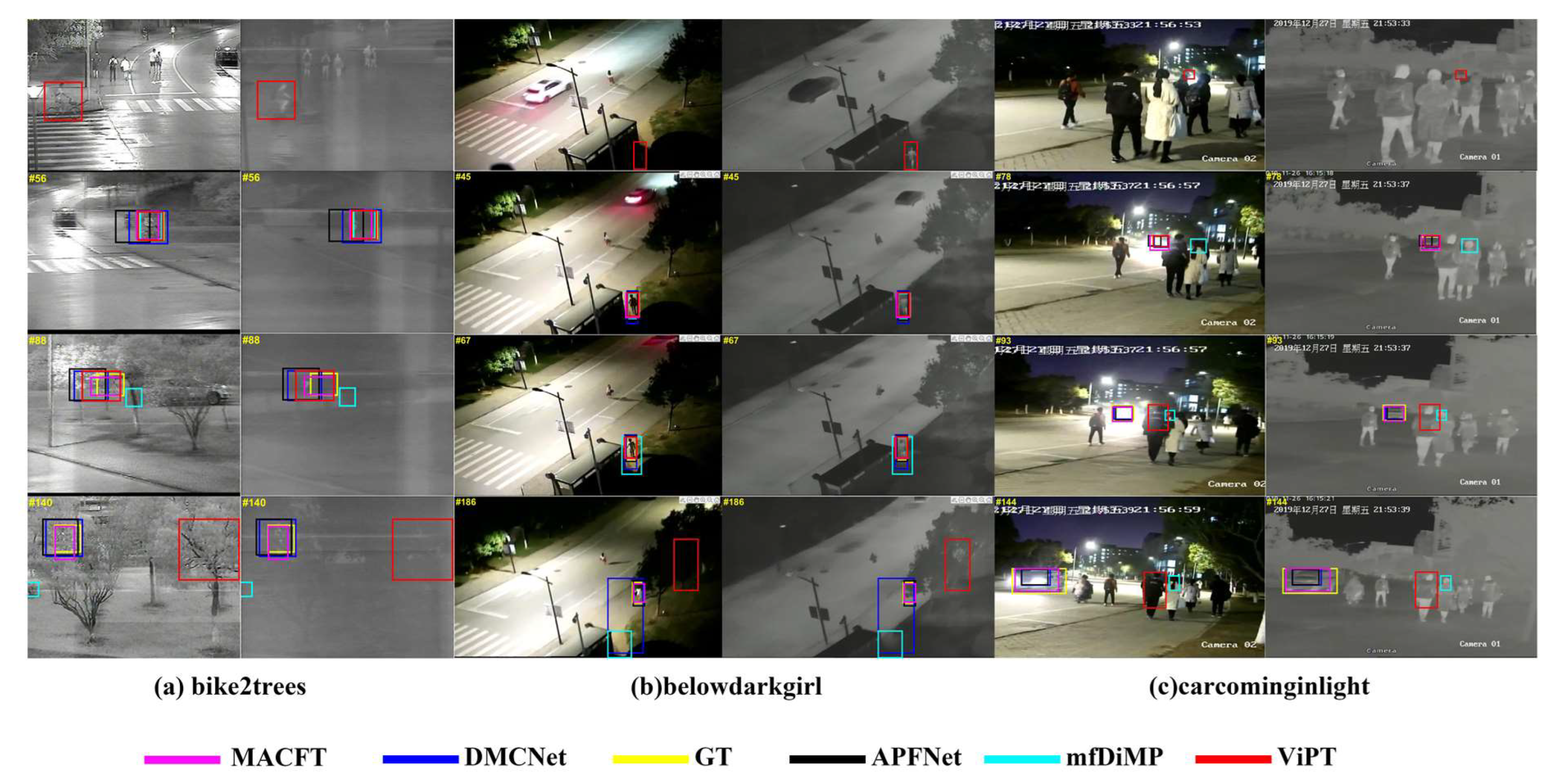

4]. As shown in

Figure 1, the complementary fusion of visible images and thermal images can provide more stable features for target-tracking tasks to overcome environmental challenges that cannot be dealt with in a single modality.

The object tracking method that combines visible and thermal modalities is known as RGB-T tracking. The key point in RGB-T tracking is to achieve complementary information fusion between the visible and thermal modalities. Early RGB-T tracking methods mostly employed CNN for feature modeling. Some methods [

5,

6] simply concatenated the features extracted from the visible and thermal modalities and fed them into a fully connected network to obtain the target position. However, this simple approach introduced excessive background noise, leading to a decrease in the network’s recognition capability. Other methods [

1,

7,

8,

9,

10,

11,

12,

13,

14,

15] first generated candidate boxes (RoIs) from the search frame and then performed feature fusion on the RoIs from different modalities using mechanisms such as gating, attention, and specific data attribute annotations. Finally, binary classification of foreground/background was conducted on the fused features, and the bounding box was regressed. One significant drawback of such methods is that the sizes and ratios of RoI regions are limited and local in nature. They cannot adapt flexibly to variations in the target’s appearance, as illustrated in

Figure 2. Additionally, these methods fail to incorporate sufficient background information for feature learning. This limitation results in inadequate modeling of the global environmental context in the search framework due to the potential insufficient feature interaction between RoIs from different modalities. Consequently, the mutual enhancement and complementary effects between the two modalities are restricted.

To enhance the adaptive fusion capability of the algorithm for complementary information from different modalities, we have designed a tracking architecture based on a combination of multiple attention mechanisms (referred to as MACFT). This architecture can learn the complementary features between visible and thermal modalities. The core of the architecture is the modality shared-specific feature interaction (SSFI) module. The SSFI module performs cross-attention and mixed-attention operations on the shared and specific features from both the visible and thermal modalities. The cross-attention operation facilitates the interaction of information between modalities, while also merging the shared and specific features to suppress the weights of low-quality modalities. The mixed-attention operation is used to enhance the fused modality information and further remove redundant noise.

Furthermore, in the feature extraction stage, considering that the effective receptive field of CNN may grow much more slowly in practice than theoretically due to the continuous downsampling process [

16], there is still a significant amount of local information retained even after multiple convolutional layers. To achieve long-range modeling of target pixels, we employ the Vision Transformer [

17] architecture, which has been widely used in recent years for Single-Object Tracking (SOT), as the backbone of our network. We extend it to a dual-branch Siamese architecture. Additionally, although visible and thermal images have different imaging wavelengths, they still share many correlations, such as object edges, contour information, and fine-grained texture details [

9]. Therefore, apart from the dual-branch backbone used to extract features from visible and thermal images separately, we introduce a shared-parameter backbone. By introducing a KL divergence loss function as a constraint, we maintain the consistency between modalities and learn shared features across modalities. Finally, we insert the SSFI module after the backbone to perform feature fusion and achieve cross-modal interaction.

After cross-fusing features from the modality-specific feature branch and the modality-shared feature branch, we generate the target bounding box with only a simple structured corner point predictor. After extensive experiments on several public RGB-T tracking datasets, we verify that the proposed method is effective and outperforms most of the current state-of-the-art RGB-T tracking models.

The main contributions of this paper are summarized as follows. Firstly, a high-performance RGB-T tracking method has been proposed, which utilizes a deep transformer-based backbone to extract both the specific features and shared features from visible and thermal modality images. This method demonstrates excellent performance in challenging scenarios such as significant target deformations and occlusions. Secondly, a novel modality feature fusion structure based on a combination of multiple attention mechanisms is designed, and through the cross-input and fusion of cross-modal shared/specific information, the complementary features between modalities can be fully interacted. It can adaptively suppress low-quality modalities and enhance the features of dominant modalities for tracking. Thirdly, as a versatile fusion strategy, the module we designed can be easily extended to other multi-modal scenarios.

3. Method

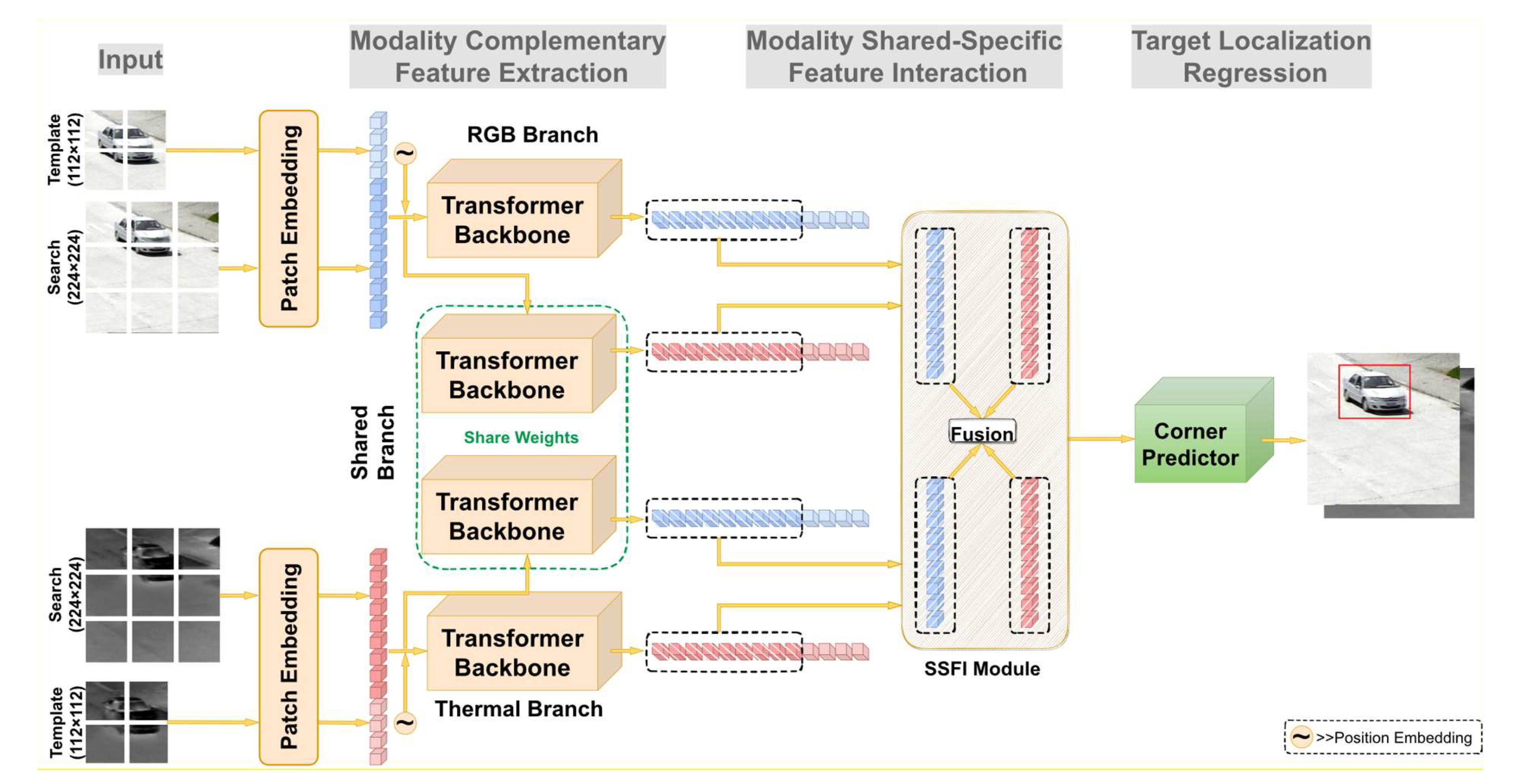

In this section, our proposed RGB-T tracking model MACFT is described in detail, as shown in

Figure 3. The whole model consists of three parts, which comprise the modality complementary feature extraction network based on the transformer backbone, the modality shared-specific feature interaction module based on the mixed-attention mechanism, and the target localization regression network; each of these components will be discussed more specifically later. The overall information pipeline of the model can be summarized as follows: after the images from the two modalities are sent to different feature extraction backbones, the modal-shared features and modal-specific features will be obtained, respectively, and then these features will be crossed in pairs and fused by the SSFI module (generating a group of shared features of visible mode and specific features of thermal mode, and a group of shared features of thermal mode and specific features of visible mode). The fused features are then fed into the target localization network to generate the position of the target. Additionally, the explanation of symbols used in this section will be shown in Abbreviations section.

3.1. Transformer-Based Complementary Feature Extraction Backbone

In our proposed model, the feature extraction part is divided into four branches, namely, the visible modal-specific feature extraction branch, the thermal modal-specific feature extraction branch, and two modal-shared feature extraction branches. ViT [

17] served as the base backbone for all four branches, initiated with parameters from the CLIP [

39] pre-trained model. Furthermore, the template image is concatenated with the search image in the same dimension to achieve information interaction. The process is detailed as follows.

3.1.1. Model-Specific Feature Extraction Branch

First, given a video sequence, we select the first frame as the reference frame and crop the template image according to the labeled bounding box, where is the size of the template image, and accordingly, the subsequent frames in the video sequence are used as the search image with the size set to . To accommodate the generic ViT backbone, we set the search image size to 224 × 224 and the template image size to 112 × 112.

Before using the transformer backbone for feature extraction, the input image is first serialized, i.e., patch embedded, by dividing the 2D image into N blocks of size (P is the size of patch; here, P = 16 since the backbone used is ViT-B-16, and C is the number of channels of the image (here C = 3), before projecting the patch onto the space with dimension D through a linear transformation. For our input template image and search image, the dimensions of the image after serialization will be transformed into and , where and .

For the transformer backbone, there is an additional step in the input process which is to add positional encoding to help the model distinguish the order of the input sequence. For the search image sequence, the pre-trained positional encoding can be used directly because its size is consistent with the pre-trained ViT backbone input. However, for the template image, its size is not aligned with the ViT input and cannot accommodate the pre-trained positional encoding, so we set up a learnable positional encoding structure that consists of a two-layer fully connected network, which is added to the template image sequence to obtain the positional encoding vector of the template image.

After adding the position encoding vector, we obtain the final input vectors

and

, and subsequently, we concatenate

along the first dimension and feed them into the transformer backbone network for learning. Let the concatenated vector be

:

Let the output of layer n in the backbone network be

; then we have:

where

denotes mixed-attention operation and

denotes feed forward network. The calculation within

can be expressed as follows:

where

.

It is in the cross-attention operations such as

and

that the model learns the relationship between the template image and the search image, and in the self-attention operations such as

and

that this relationship is self-enhanced. We construct two identical and independent feature extraction branches for RGB and thermal images based on the above method for extracting features unique between different modalities. Let the feature vectors output from the two branches be:

3.1.2. Modal-Shared Feature Extraction Branch

To better construct a complementary feature representation between the visible and thermal modalities, we add two modal-shared feature extraction backbones to the model (again using ViT as the backbone with the same input structure as the modal-specific feature branch) for learning features shared by both modalities. Further, to be able to constrain the backbone of this branch to ensure that its output features are consistent across the two modalities, we introduce a Kullback–Leibler (KL) divergence loss

. The formula is expressed as follows:

where

,

denote the output vectors (after

softmax) of the visible modality input and thermal modality input through the shared feature branch backbone, respectively, while

denote the nth term of the first dimension in

. KL divergence is based on the information entropy principle, and it can accurately measure the information lost when approximating one distribution with another, so it can be used to calculate the similarity between two distributions.

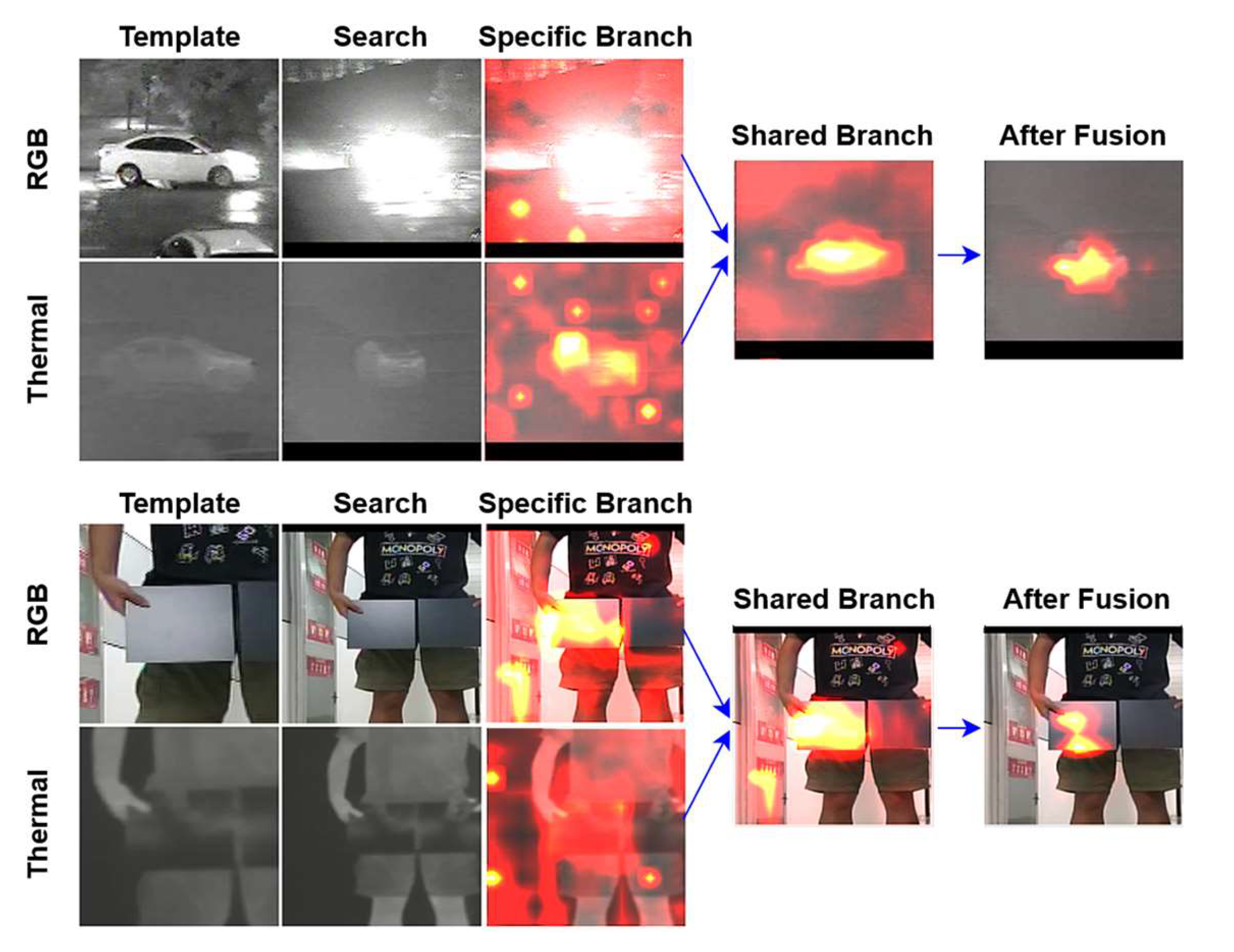

3.2. Modality Shared-Specific Feature Interaction Module

After obtaining the features from the four branches (the visible branch, the thermal branch, and the shared branches), we design a modality shared-specific feature interaction module based on the mixed-attention mechanism, with the aim of learning an adaptive discriminative weight for features from different modalities, thus enhancing high-quality modality while suppressing low-quality modality.

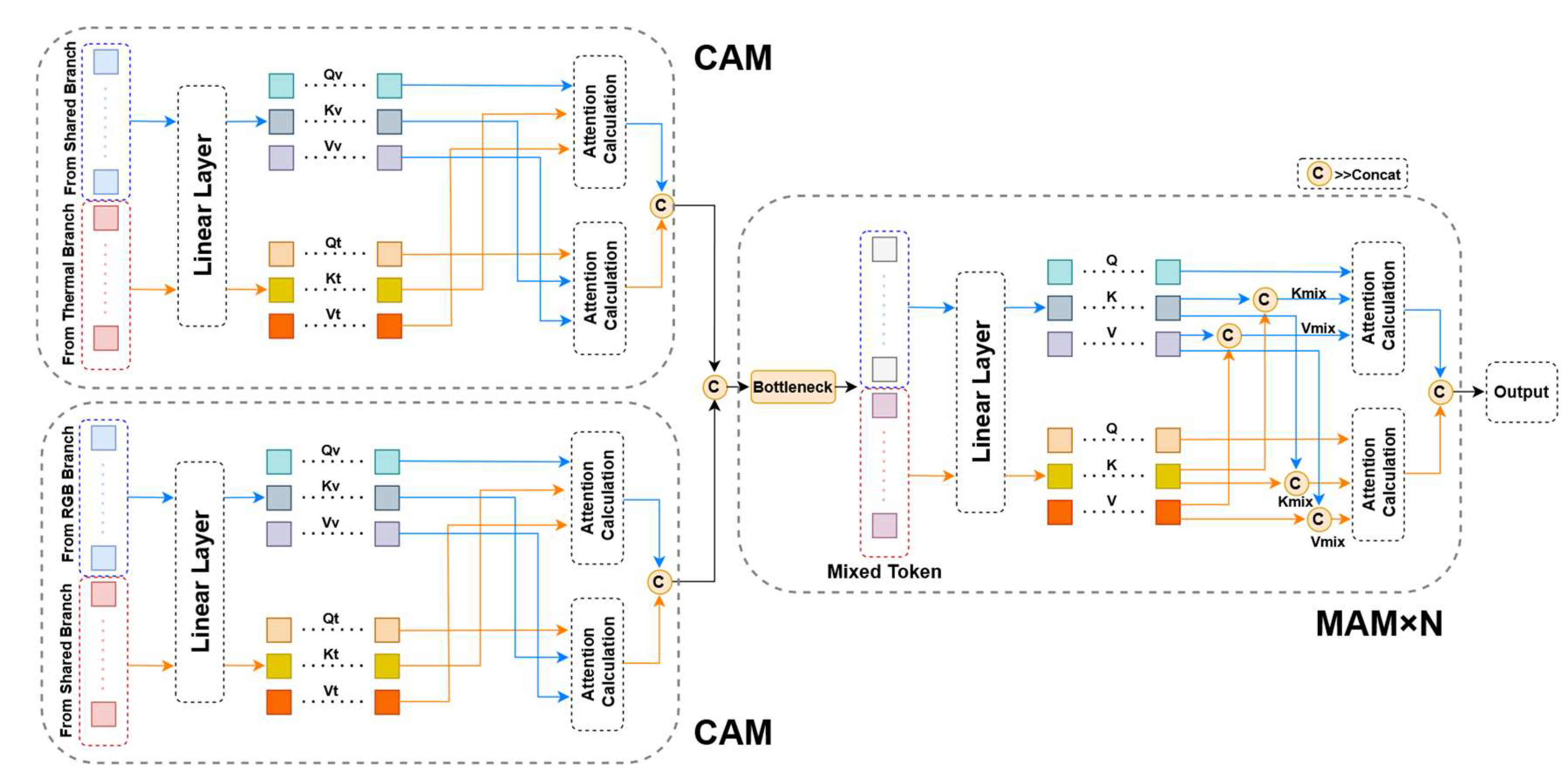

As shown in

Figure 4, we use two types of attention mechanisms in the SSFI module: the cross-attention module (CAM) and the mixed-attention module (MAM).

Given a vector consisting of visible features connected with thermal features

, we first map the input into three equally shaped weight matrices called

query,

key, and

value by a linear layer.

are used below to denote the visible part and

denote the thermal part. From (3), the calculation of MAM can be described as:

where

,

,

,

in the same way.

The CAM module, on the other hand, eliminates the self-attention component and retains only the cross-attention, which can be described as:

In the SSFI module, we connect the outputs from the visible modal-specific feature branch with the modal-shared feature branch (thermal part), and from the thermal modal-specific feature branch with the modal-shared feature branch (visible part), but only the part with the same size as the search image is selected and the template part is discarded:

Subsequently,

are fed into the CAM module for modal information interaction:

Only cross-attention is used here for the fusion of features from different branches due to the fact that sufficient self-enhancement has been performed in the backbone for the features from each modality, and there is no need to add extra self-attention modules. Additionally, removing the self-attention operation can also reduce the model’s parameter count. However, in the following MAM modules, we introduce self-attention operation because the initial interaction mapped features from different modalities into a new space, necessitating further enhancement of feature representation with the self-attention mechanism. These points will be supported in Chapter 4 in the subsection on ablation studies.

Finally, we connect the output of the CAM module and reduce the dimension and send it to the MAM module to further enhance the information interaction between the modalities:

3.3. Target Localization Regression Network

After the SSFI module, we use a lightweight corner predictor to localize the target. First, the features are reshaped into 2D; then, for the top left and bottom right corners of the bounding box, the features are downsampled using 5 convolutional layers, and finally the corner coordinates are calculated using the softArgmax layer and are reverse mapped to the coordinates corresponding to the original image according to the scale of the image crop.

3.4. Training Method

3.4.1. Training in Stages

We employ a staged approach for model training, which comprises three stages. The first two stages involve backbone training, requiring the modality fusion network to be removed while retaining only the corner predictor.

In the first stage, we freeze the modal-shared feature branch and load the pre-trained model to initialize the backbone of the modal-specific feature branches. We then train the backbone of the corresponding branches using RGB and thermal image data and save the model parameters.

In the second stage, the modal-specific feature branches are frozen, and the modal-shared branch is unfrozen. During the forward pass, RGB and thermal images are repeatedly used to update the parameters of the backbone, and the model parameters are saved.

In particular, during the training of the feature extraction backbone in the first two stages, in order to reduce the number of trainable parameters of the network and considering the shared characteristics of the low-level features between images, we freeze the first eight multi-head attention modules of the ViT backbone and only fine-tune the last four.

In the third stage, all feature extraction backbone branches are frozen, and the models trained in the first two stages are loaded for initializing the backbone parameters. The modality fusion network (SSFI) is added for training and the model parameters are saved.

3.4.2. Loss Function

The model proposed in this paper uses a total of three loss functions in the offline training phase, which are

Ɩ1 loss, generalized GioU loss [

40], and KL divergence loss, and the loss functions are combined in the first and third stages of model training in the following manner:

where

denotes the

Ɩ1 loss,

denotes the generalized GIoU loss,

denotes the target bounding boxes predicted by the model on the search image,

denotes the true value of the target bounding boxes, and

and

are the weight parameters of the loss.

In the second stage of model training, we additionally introduce the KL divergence loss, and the loss function is combined in the following way:

where

denotes KL divergence loss,

is its weight, and

, represent the target bounding boxes obtained from the RGB image and thermal image after modal-shared feature branches and corner predictors, respectively.

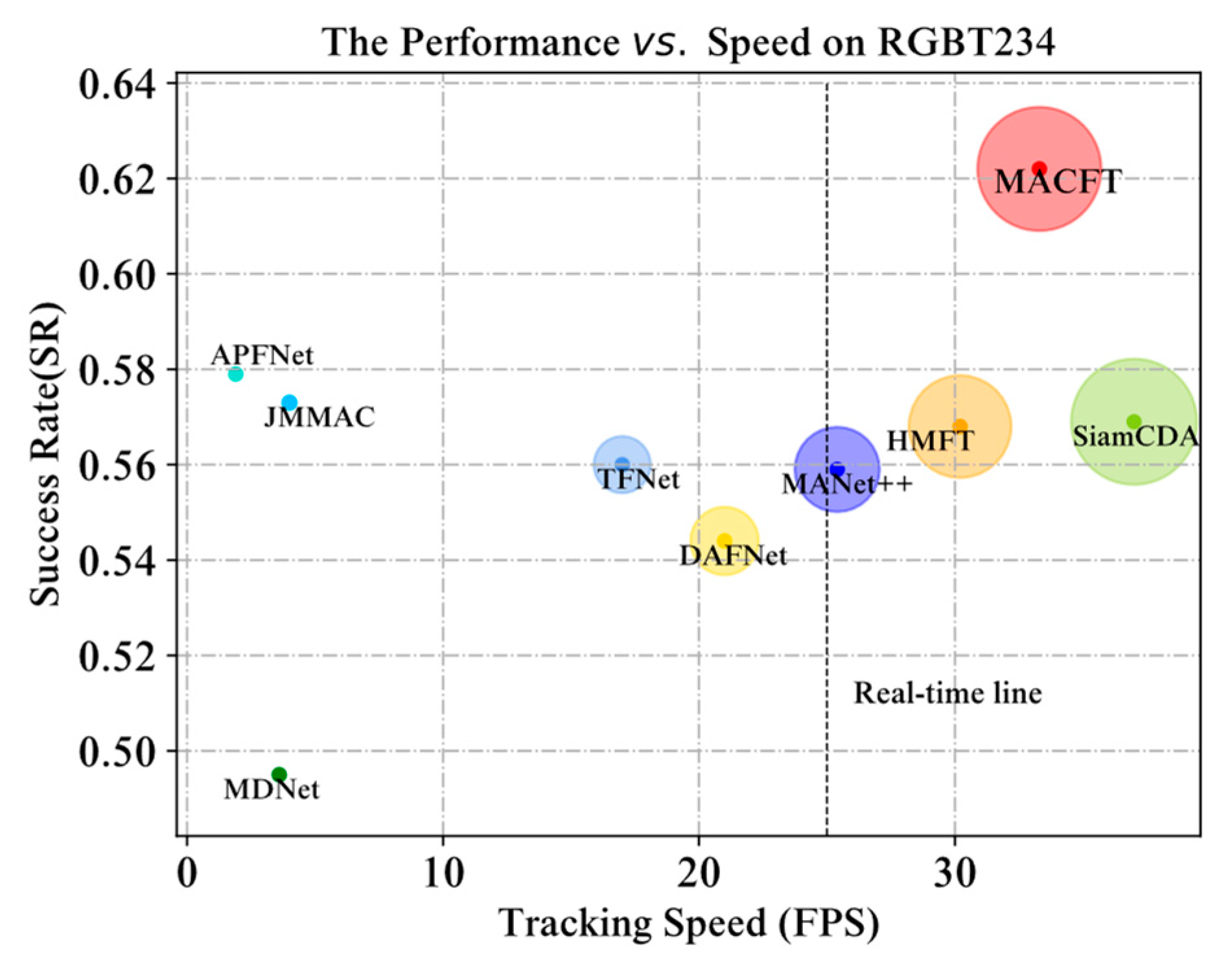

3.5. Inference

The RGB-T tracking model proposed in this paper does not contain any additional post-processing operations in the inference stage, and consists of only a few steps of image sampling, forward pass, and coordinate transformation, so MACFT can run in real time at a high speed.

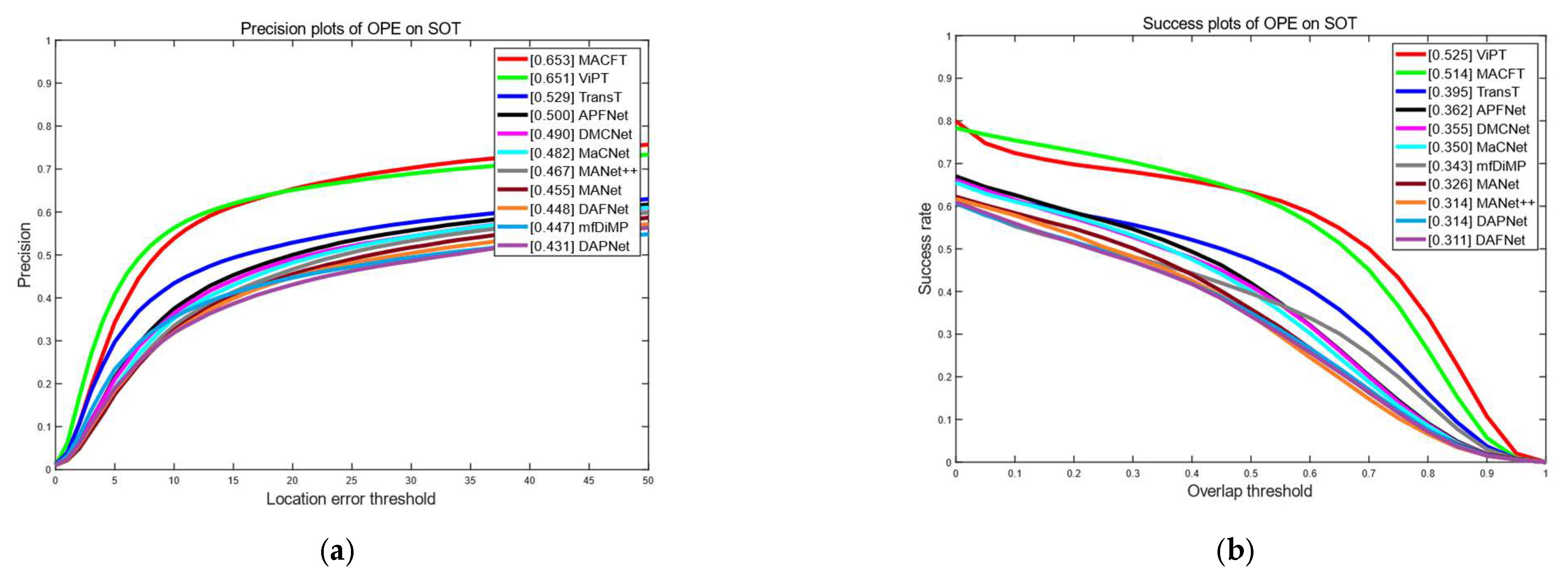

5. Conclusions

In this paper, we propose an RGB-T tracking method, MACFT, based on a mixed-attention mechanism. Four separate branches are established to extract modal-specific features and modal-shared features, and information interaction between the template image and the search image is introduced in each layer of the backbone. In the modality fusion stage, both cross-attention and mixed-attention are used to fuse the image features from different branches, which effectively reduces the noise from low-quality modality and enhances the feature information of the dominant modality. After conducting extensive experiments, we demonstrate that MACFT can understand the high-level semantic features of the target well, thus enabling it to cope with multiple challenges and achieve robust tracking.

However, our work still has some limitations, such as insufficient discriminability of target instances, large parameter count, and complex training procedures. In the future, we will address these issues by exploring various approaches, including but not limited to designing lightweight fusion modules, employing knowledge distillation to reduce parameter count, and integrating modal feature fusion into the feature extraction process to simplify training steps. At the same time, we will also explore ways to make the model compatible with more other modalities.