Abstract

Owing to their simple construction, ease of use and good environmental adaptability, handheld structured-light measurement systems have broad application prospects in 3D measurement. Here, a monocular-vision-guided line-structured-light measurement system is developed, in which the posture of the handheld device is obtained via a specially designed target attached to it. No marker points need to be adhered to the object under inspection. The key to system calibration is obtaining the coordinate transformation matrix from the sensor to the featured target coordinate system. The mathematical model of the system is first established. Then, an improved multi-view calibration method is proposed, in which image pairs are selected to improve accuracy. With this method, the maximum relative error of the measured stair heights is reduced from 0.48% to 0.16%. Measurement results for specific parts further verify the effectiveness of the proposed system and calibration method.

1. Introduction

Line-structured-light sensors (LSLSs) are based on the optical triangulation principle. They generally consist of a camera, a laser line projector and a frame that connects them [1,2]. During measurement, a laser plane emitted from the laser line projector intersects the surface under inspection, and the camera captures a perturbed stripe that carries the profile information [3,4]. Since the relative position between the laser plane and the camera is fixed, the point coordinates on the intersection profile can be calculated from the pre-calibrated sensor parameters [5].

To measure three-dimensional (3D) surfaces, the LSLS needs to be integrated with additional motion axes [6,7,8]. The type and number of motion axes vary with the measurement requirements. The sensor can be integrated with a linear stage [9], a rotational table [10], a combination of linear and rotational stages [7], robotic arms [8,9] or an articulated arm measurement system for portable applications [11,12,13]. However, the measurement range is limited by the motion range or the structural size of the mechanical system, measurement completeness is limited by the available degrees of freedom of motion, and measurement accuracy depends strongly on the motion accuracy of the mechanical devices.

Compared with motion-axis-based line-structured-light measurement systems (LSLMS), handheld measuring devices are more flexible. Objects can be scanned from different viewpoints to ensure measurement completeness [14,15,16], although the accuracy of the result relies heavily on data fusion techniques, and the fused results suffer from unsmooth edges [17,18]. Data fusion via marker points can achieve higher accuracy and has been widely adopted by commercial handheld scanners [19]. However, users must attach a sufficient number of highly reflective marker points to the object, which is inconvenient. Another option is to obtain the posture of the handheld device with an inertial measurement unit (IMU) [20,21]. However, the posture and acceleration data from an IMU are strongly affected by external forces, vibrations and the environment, so it is difficult to achieve high-precision measurement results with commercial IMU components.

Ayaz et al. [22] studied a 3D handheld scanning system based on visual–inertial navigation and structured light. This system had a limited scanning range and could only use block-matching algorithms for 3D reconstruction, resulting in low accuracy; additionally, the introduction of inertial sensors reduced the scanning efficiency. Wang et al. [23] proposed a handheld laser scanning system for the on-site measurement of large objects. To collect data points near the edges and in highly curved areas of the object, markers had to be pasted densely onto the object. Peng et al. [24] introduced a mechanical structure for an LSLS and a portable box volume measurement system based on deep learning. However, it had strict calibration requirements and exhibited significant uncertainty when measuring different surfaces of a box. These handheld devices require markers to be attached to the objects for point cloud stitching, yet pasting marker points onto the object is inconvenient and sometimes not allowed. Here, a handheld LSLMS based on a non-planar featured target is presented to make the measurement task more convenient.

System calibration of the handheld measurement system can be formulated as a hand–eye calibration problem. This problem can currently be solved using quaternion algebra [25], the Euclidean group [26], dual quaternions [27], the Kronecker product [28,29], etc. In addition, Cao et al. [30] combined neural networks with robot joint angles to compensate for non-geometric errors. Guo et al. [31] optimized the hand–eye relationship with the least squares method and the particle swarm optimization algorithm. Pachtrachai et al. [32] optimized the hand–eye matrix using rotation and translation matrices obtained by alternating iteration. These calibration methods assume that the initial position and pose deviate only slightly from their ideal values. Moreover, the raw hand–eye data used in the optimization have a significant impact on the final calibration results, and how to reject unfavorable data still needs to be analyzed.

In this paper, a high-precision calibration method for the handheld LSLMS is proposed using multi-view image pairs. Images with large noise are eliminated through data selection. The method improves the calibration accuracy of the coordinate transformation between the sensor and the featured target coordinate system, leading to better measurement results.

2. Mathematical Model

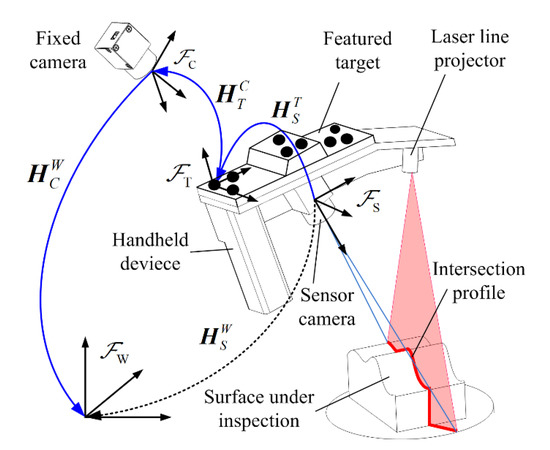

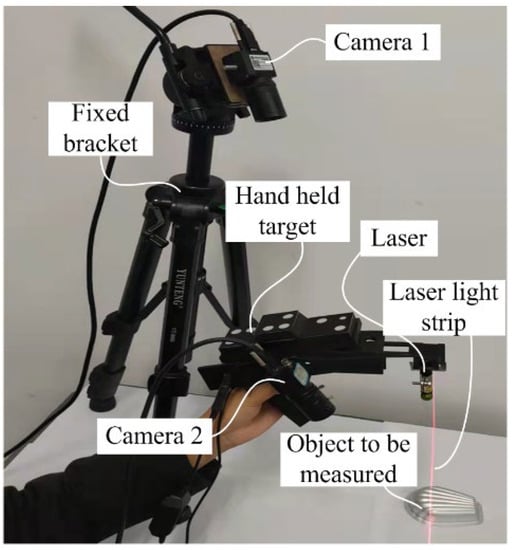

The proposed monocular-vision-guided LSLMS is shown in Figure 1. This system consists of a laser line projector, two cameras and a featured target. The laser line projector and one camera are fixed onto a handheld device, constituting an LSLS. With this sensor, an arbitrary intersection profile between the laser plane and the object under inspection can be obtained within the sensor coordinate frame S. The other camera is fixed in the world coordinate frame W and captures the image of the featured target attached to the handheld device. C and T denote the fixed camera coordinate frame and the featured target coordinate frame, respectively. The posture of the handheld device can be computed in a real-time manner. By transforming the obtained profiles from S to T and then to C, we can obtain a series of intersection profiles on the object surface.

Figure 1.

Illustration of the measurement system.

Assuming $\mathbf{P}_s = (X_s, Y_s, Z_s, 1)^T$ is a point on the intersection profile expressed in the sensor coordinate frame S and $\mathbf{P}_w = (X_w, Y_w, Z_w, 1)^T$ is the same point expressed in W, then

$$\mathbf{P}_w = \mathbf{M}_{WC}\,\mathbf{M}_{CT}\,\mathbf{M}_{TS}\,\mathbf{P}_s \qquad (1)$$

where $\mathbf{M}_{TS}$, $\mathbf{M}_{CT}$ and $\mathbf{M}_{WC}$ are the coordinate transformation matrices from S to T, from T to C and from C to W, respectively, as denoted in Figure 1. $\mathbf{M}_{CT}$ can be computed in real time when the intrinsic parameters of the fixed camera and the dot spacings on the featured target are known. $\mathbf{M}_{WC}$ only affects the position of the measurement result as a whole and has no influence on its geometrical accuracy. Thus, the key issue for system calibration is to obtain $\mathbf{M}_{TS}$.
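The frame chain of Equation (1) can be sketched with 4 × 4 homogeneous matrices in Python/NumPy. This is a minimal illustration under assumed conventions, not the authors' implementation: the names `M_TS` (S to T), `M_CT` (T to C) and `M_WC` (C to W), the helper functions and the numerical poses are hypothetical. It also shows why the C-to-W matrix has no influence on geometrical accuracy: as a rigid transformation, it moves the result as a whole but preserves the distances between measured points.

```python
import numpy as np

def rigid(R, t):
    """Assemble a 4x4 homogeneous transform from a 3x3 rotation R and a translation t."""
    M = np.eye(4)
    M[:3, :3] = R
    M[:3, 3] = t
    return M

def rot_z(angle):
    """Rotation matrix for a rotation of `angle` radians about the z axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def sensor_to_world(P_s, M_WC, M_CT, M_TS):
    """Map homogeneous sensor-frame points (N x 4) into W along S -> T -> C -> W."""
    return (M_WC @ M_CT @ M_TS @ P_s.T).T

# Hypothetical poses (mm, rad) and two profile points 10 mm apart in S.
M_TS = rigid(rot_z(0.3), [5.0, -2.0, 40.0])      # S -> T, the matrix to be calibrated
M_CT = rigid(rot_z(-0.8), [100.0, 50.0, 900.0])  # T -> C, from the featured target image
M_WC = rigid(rot_z(1.2), [0.0, 0.0, 0.0])        # C -> W, fixed
P_s = np.array([[0.0, 0.0, 300.0, 1.0],
                [10.0, 0.0, 300.0, 1.0]])

P_w = sensor_to_world(P_s, M_WC, M_CT, M_TS)
spacing = np.linalg.norm(P_w[0, :3] - P_w[1, :3])  # stays 10 mm: rigid maps preserve distances
```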

3. Calibration Method

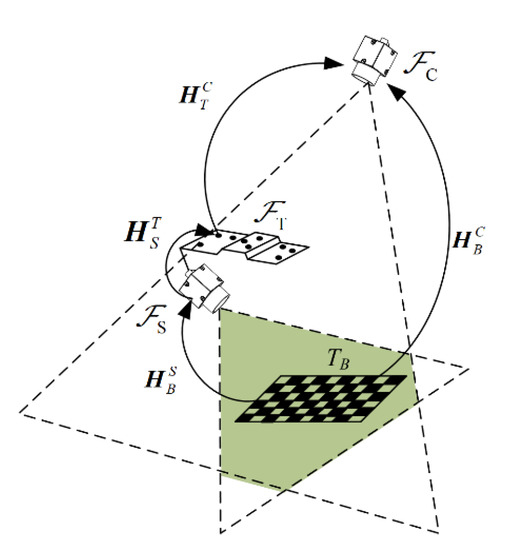

3.1. Common Field-of-View Calibration Method

The common field-of-view calibration method (CFVCM) is shown in Figure 2. A chessboard target TB is placed within the overlapping field of view of the two cameras, and the featured target on the handheld device is also located within the field of view of the fixed camera. The transformation matrix from the sensor camera to the featured target can then be expressed as

$$\mathbf{M}_{TS} = \mathbf{M}_{CT}^{-1}\,\mathbf{M}_{CB}\,\mathbf{M}_{SB}^{-1} \qquad (2)$$

where $\mathbf{M}_{SB}$ and $\mathbf{M}_{CB}$ are the coordinate transformation matrices from the chessboard target to the sensor and to the fixed camera, respectively, and $\mathbf{M}_{CT}$ is the transformation matrix from the featured target to the fixed camera. $\mathbf{M}_{SB}$ and $\mathbf{M}_{CB}$ can be calculated from the intrinsic parameters and the known target parameters.
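Equation (2) amounts to walking from S to the chessboard frame, on to C and back to T. The NumPy sketch below assumes the convention that a matrix `M_XY` maps coordinates in frame Y into frame X, so that a chessboard point reaches C through `M_CB = M_CT @ X @ M_SB`; the helper names and poses are hypothetical. Any depth error in the two chessboard-based extrinsic matrices enters the result directly, which is the weakness discussed below.

```python
import numpy as np

def rodrigues(axis, angle):
    """Rotation of `angle` radians about `axis` (Rodrigues' formula)."""
    k = np.asarray(axis, dtype=float)
    k = k / np.linalg.norm(k)
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)

def rigid(R, t):
    """4x4 homogeneous transform from rotation R and translation t."""
    M = np.eye(4)
    M[:3, :3], M[:3, 3] = R, t
    return M

def cfvcm_sensor_to_target(M_CT, M_CB, M_SB):
    """Sensor-to-target matrix from one common field-of-view shot (S -> B -> C -> T)."""
    return np.linalg.inv(M_CT) @ M_CB @ np.linalg.inv(M_SB)

# Synthetic self-check with hypothetical poses (mm, rad).
X_true = rigid(rodrigues([1, 2, 3], 0.4), [10.0, -5.0, 30.0])
M_SB = rigid(rodrigues([0, 1, 0], 0.2), [0.0, 0.0, 250.0])
M_CT = rigid(rodrigues([1, 0, 0], -0.3), [50.0, 20.0, 700.0])
M_CB = M_CT @ X_true @ M_SB          # chessboard -> C, passing through S and T
X_est = cfvcm_sensor_to_target(M_CT, M_CB, M_SB)
```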

Figure 2.

Common field-of-view calibration method.

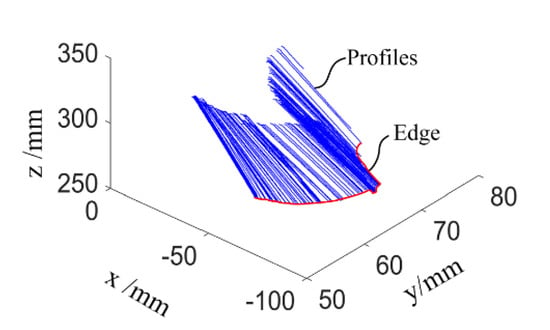

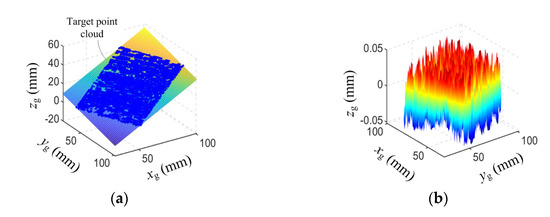

With this calibration method, a 60 × 60 mm plane was scanned, and the measured point cloud is shown in Figure 3. The result is far from what was expected. Although every intersection profile is a straight line, the profiles are arranged in a disordered manner, and the edge of the plane, which should also be a straight line, becomes an irregular space curve.

Figure 3.

Measurement result for a plane with the CFVCM.

This failure is most probably caused by an inaccurate calibration of the sensor-to-target transformation matrix, for the following reasons. To perform this calibration, both the chessboard target and the featured target must lie within the field of view of the fixed camera, and the chessboard TB must also be at a reasonable distance from the sensor camera to ensure image quality. Consequently, the chessboard and the featured target are located at significantly different depths along the optical axis of the fixed camera. This direction is also the most error-sensitive direction for extrinsic parameter estimation. This is why the calibrated sensor-to-target matrix cannot meet the accuracy requirements of the system.

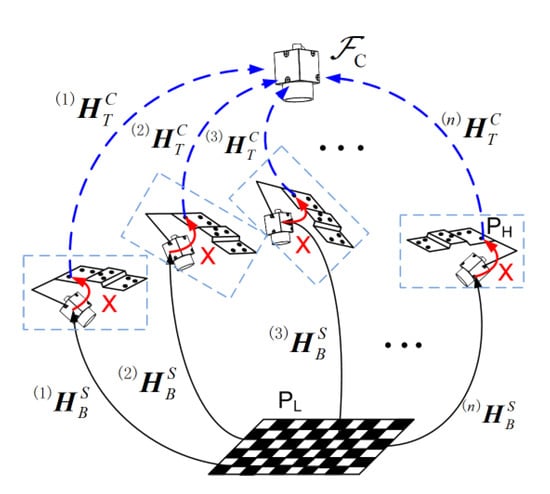

3.2. Improved Multi-View Calibration Method

To improve the calibration accuracy of the sensor-to-target transformation matrix, an improved multi-view calibration method (MVCM) is proposed, as shown in Figure 4. For ease of illustration, this matrix is denoted by X. $\mathbf{A}(n)$ denotes the posture of the featured target at the nth view of the handheld device, and $\mathbf{B}(n)$ denotes the posture of the handheld camera, computed from the chessboard underneath.

Figure 4.

Multi-view calibration method.

Because the relative position between the chessboard and the fixed camera does not change, any point on the chessboard has constant coordinates in frame C, independent of the posture of the handheld device. The following equation therefore holds for any two positions of the handheld device:

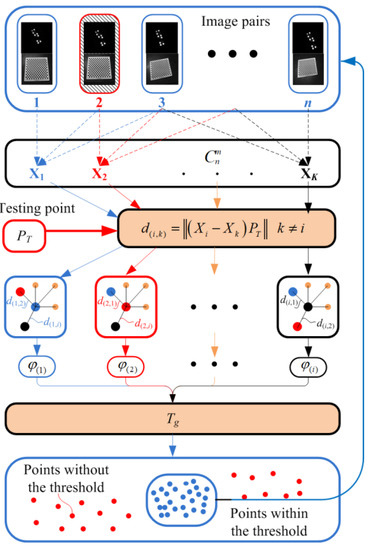

where X is the coordinate transformation matrix to be solved. Equation (3) shows that this is essentially a hand–eye calibration problem, as studied in robotics. The difference is that the posture of the handheld device is computed from the featured target attached to it rather than from the robot joint values. Here, X is calculated with the classical method of Park and Martin [26]. A calibration strategy is then proposed to identify and reject image pairs that may degrade the accuracy of the calibration result. The procedure is illustrated in Figure 5.
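Equation (3) follows from a short derivation, sketched here under assumed conventions: let $\mathbf{A}(n)$ map featured-target coordinates into C and $\mathbf{B}(n)$ map chessboard coordinates into S at view n. A fixed chessboard point then satisfies $\mathbf{A}(i)\,X\,\mathbf{B}(i) = \mathbf{A}(j)\,X\,\mathbf{B}(j)$, which rearranges to the hand–eye form $\mathbf{A}_{ij}X = X\mathbf{B}_{ij}$ with $\mathbf{A}_{ij} = \mathbf{A}(j)^{-1}\mathbf{A}(i)$ and $\mathbf{B}_{ij} = \mathbf{B}(j)\mathbf{B}(i)^{-1}$. The NumPy sketch below solves this with the closed form of Park and Martin [26]: the rotation is $R_X = (M^T M)^{-1/2} M^T$ with $M = \sum_k \beta_k \alpha_k^T$, where $\alpha_k$ and $\beta_k$ are the axis-angle vectors of the rotations of the A and B motions, and the translation follows from the linear least-squares system $(R_A - I)\,t_X = R_X t_B - t_A$. Function names and the synthetic poses are hypothetical, and at least three motions whose rotation axes are not all coplanar are assumed so that $M^T M$ is invertible.

```python
import numpy as np

def rodrigues(axis, angle):
    k = np.asarray(axis, dtype=float)
    k = k / np.linalg.norm(k)
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)

def rigid(R, t):
    M = np.eye(4)
    M[:3, :3], M[:3, 3] = R, t
    return M

def rot_log(R):
    """Axis-angle vector of a rotation matrix (assumes 0 < angle < pi)."""
    theta = np.arccos(np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0))
    w = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    return theta / (2.0 * np.sin(theta)) * w

def relative_motions(A_abs, B_abs):
    """Consecutive-view pairs A_ij = A(j)^-1 A(i) and B_ij = B(j) B(i)^-1."""
    As, Bs = [], []
    for i in range(len(A_abs) - 1):
        j = i + 1
        As.append(np.linalg.inv(A_abs[j]) @ A_abs[i])
        Bs.append(B_abs[j] @ np.linalg.inv(B_abs[i]))
    return As, Bs

def park_martin(As, Bs):
    """Closed-form least-squares solution of A_k X = X B_k for a 4x4 rigid X."""
    M = np.zeros((3, 3))
    for A, B in zip(As, Bs):
        M += np.outer(rot_log(B[:3, :3]), rot_log(A[:3, :3]))  # beta * alpha^T
    w, V = np.linalg.eigh(M.T @ M)
    R_X = V @ np.diag(1.0 / np.sqrt(w)) @ V.T @ M.T            # (M^T M)^(-1/2) M^T
    C = np.vstack([A[:3, :3] - np.eye(3) for A in As])
    d = np.concatenate([R_X @ B[:3, 3] - A[:3, 3] for A, B in zip(As, Bs)])
    t_X = np.linalg.lstsq(C, d, rcond=None)[0]
    return rigid(R_X, t_X)

# Synthetic self-check: a fixed chessboard pose in C and five handheld views.
X_true = rigid(rodrigues([1, 2, 3], 0.4), [10.0, -5.0, 30.0])
M_CB = rigid(rodrigues([0, 1, 0], 0.3), [0.0, 0.0, 500.0])
axes = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0], [0, 1, 1]]
angles = [0.2, 0.5, 0.9, 0.4, 0.7]
A_abs = [rigid(rodrigues(a, g), [20.0 * n, 5.0, 800.0])
         for n, (a, g) in enumerate(zip(axes, angles))]
B_abs = [np.linalg.inv(X_true) @ np.linalg.inv(A) @ M_CB for A in A_abs]
X_est = park_martin(*relative_motions(A_abs, B_abs))
```

OpenCV's `calibrateHandEye` offers the same Park–Martin variant; the explicit version above simply makes each step of the closed form visible.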

Figure 5.

Procedures of image pair selection for calibration.

For each position of the handheld device, two images are captured: a featured target image, used to compute the posture of the handheld device, and a chessboard image, used to compute the posture of the handheld camera. Together they form an image pair. If n image pairs are captured and m of them are used in each least-squares calibration [31], there are $C_n^m$ combinations, each yielding one calibration result Xk. A virtual test point method is proposed to detect unfavorable image pairs. In measurement, points are mapped into the world coordinate system through the transformation chain of Equation (1). Because each Xk carries a different calibration error, the same virtual point transformed with different Xk no longer coincides in the world coordinate system. The distances between these transformed points are used to detect unfavorable image pairs.
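The enumeration of combinations and virtual test points can be sketched as follows. This is an illustrative NumPy sketch, not the authors' code: `calibrate` stands for any hand–eye solver applied to a subset of image pairs, `A_ref` is a hypothetical reference posture of the featured target and `P_s` a hypothetical virtual test point in S. Points are expressed in C rather than W; since the C-to-W transformation is rigid, the pairwise distances are the same in both frames.

```python
from itertools import combinations
import numpy as np

def virtual_point_cloud(pairs, m, calibrate, A_ref, P_s):
    """Calibrate X_k on every m-subset of image pairs and map one virtual point into C.

    pairs     : list of per-view data, e.g. (A(n), B(n)) postures
    calibrate : callable returning a 4x4 X_k from a list of pairs (hypothetical hook)
    A_ref     : fixed 4x4 target-to-fixed-camera posture used for the test point
    P_s       : homogeneous virtual test point in the sensor frame S
    """
    subsets = list(combinations(range(len(pairs)), m))
    points = np.array([(A_ref @ calibrate([pairs[i] for i in s]) @ P_s)[:3]
                       for s in subsets])
    return subsets, points

def pairwise_distances(points):
    """Symmetric matrix of Euclidean distances d(i, k) between the transformed points."""
    diff = points[:, None, :] - points[None, :, :]
    return np.linalg.norm(diff, axis=-1)
```

The number of combinations grows quickly with n; for the 15 image pairs used in Section 4.2, for example, `math.comb(15, 5)` is already 3003.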

The image pairs are selected by counting how often each one contributes to results that lie within a threshold, and a more accurate calibration of X is then obtained by recalculation with the selected image pairs. Let $P_C^{(i)}$ be the ith point in C, calculated with Xi. The distances between this point and the remaining points are denoted by Di = {d(i,1), d(i,2), …, d(i,k), …, d(i,b)}, with $b = C_n^m - 1$. The interclass variance of d(i,k) within Di can be calculated by

$$\sigma^2\big(d(i,k)\big) = \omega_0\,(\mu_0 - \mu_T)^2 + \omega_1\,(\mu_1 - \mu_T)^2 \qquad (4)$$

where $\omega_0 = l/(b-1)$, l is the number of elements in Di smaller than d(i,k), $\omega_1 = 1 - \omega_0$ denotes the proportion of the other distance values, μ0 is the mean of the elements smaller than d(i,k), μ1 is the mean of the elements larger than or equal to d(i,k) and μT is the mean of all elements of Di. The value of d(i,k) at which $\sigma^2$ reaches its maximum is the optimal threshold φ(i) for Di:

$$\varphi(i) = \arg\max_{d(i,k) \in D_i} \sigma^2\big(d(i,k)\big) \qquad (5)$$

Similarly, a distance set can be obtained for every point in the fixed camera coordinate system, and its optimal threshold follows from Equations (4) and (5). These optimal thresholds form a new data set Ψ = {φ(1), φ(2), …, φ(i), …}, i = 1, 2, …, $C_n^m$. Computing the interclass variance of each value in Ψ again yields a maximum, and the corresponding φ(i) is taken as the final threshold Tg for distance segmentation.
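Equations (4) and (5), together with the second-level threshold over the per-point thresholds, form a two-stage Otsu-style segmentation. The NumPy sketch below is a hedged illustration: it uses the standard Otsu weight $\omega_0 = l/b$ instead of $l/(b-1)$ in the text (for large b the two are nearly identical), and the function names are hypothetical. `dist` is the symmetric matrix of distances between the transformed virtual points, and the self-distance is removed from each Di.

```python
import numpy as np

def interclass_variance_threshold(D):
    """Otsu-style threshold: the element of D maximising the inter-class variance.

    Each candidate t splits D into d < t and d >= t; w0, w1 are the class
    proportions and mu0, mu1, muT the class and total means, as in Eq. (4).
    """
    D = np.asarray(D, dtype=float)
    mu_T = D.mean()
    best_t, best_var = float(D.min()), -1.0
    for t in np.unique(D):
        low, high = D[D < t], D[D >= t]
        if low.size == 0:            # the smallest value has no lower class
            continue
        w0 = low.size / D.size       # standard Otsu weight (the text uses l/(b-1))
        w1 = 1.0 - w0
        var = w0 * (low.mean() - mu_T) ** 2 + w1 * (high.mean() - mu_T) ** 2
        if var > best_var:
            best_t, best_var = float(t), var
    return best_t

def global_threshold(dist):
    """phi(i) for every point's distance set D_i, then Tg as the threshold of the phi(i)."""
    n = dist.shape[0]
    phis = np.array([interclass_variance_threshold(np.delete(dist[i], i))
                     for i in range(n)])
    return interclass_variance_threshold(phis), phis
```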

Each value of d(i,k) is then compared with Tg, and the values larger than Tg are deleted. For the remaining distance values, the frequency with which each image pair appears in the corresponding image combinations is counted, and the image pair with the lowest frequency is deleted. This process is repeated until the optimal set of image pairs is found, and the remaining image pairs are used to recalculate X with the hand–eye calibration method. The detailed system description and calibration procedures are given in Section 4.
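The counting and rejection step can be sketched as below. Which image pairs receive a count for a kept distance is not fully specified in the text, so the sketch assumes that every image pair used in either of the two combinations behind a kept distance d(i,k) ≤ Tg receives one count; the function names are hypothetical. In a full loop, the rejected pair is removed, the combinations, distances and Tg are recomputed, and the process repeats.

```python
from itertools import combinations
import numpy as np

def make_subsets(n_images, m):
    """All m-combinations of image-pair indices, in the same order as the points."""
    return list(combinations(range(n_images), m))

def image_frequencies(subsets, dist, T_g, n_images):
    """Count how often each image pair appears in the combinations behind kept distances.

    A distance d(i, k) <= T_g is kept; every image pair used by combination i or
    combination k then receives one count (an assumed reading of the counting step).
    """
    counts = np.zeros(n_images, dtype=int)
    n = len(subsets)
    for i in range(n):
        for k in range(i + 1, n):
            if dist[i, k] <= T_g:
                for img in set(subsets[i]) | set(subsets[k]):
                    counts[img] += 1
    return counts

def next_rejection(subsets, dist, T_g, n_images):
    """Index of the image pair with the lowest frequency, rejected in this iteration."""
    return int(np.argmin(image_frequencies(subsets, dist, T_g, n_images)))
```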

4. Calibration Procedures

4.1. Setup of the Measurement System

The hardware of the handheld LSLMS is illustrated in Figure 6. To synchronize the images taken by the sensor camera and the vision-guiding camera, global-shutter cameras (Shenzhen Mindvision, MV-SUA134GM-T, Shenzhen, China) with an external trigger function were selected. The spectral response of the cameras' CMOS detectors spans 400 nm to 950 nm, covering the wavelengths of both the featured target and the laser. The cameras had 1280 × 1024 pixels and an image acquisition rate of 211 fps, which meets the requirements of handheld scanning. The laser wavelength was 632.8 nm (Guangdong Shengzuan Lasers Co., Ltd., Shenzhen, China), and the minimum stripe width was 0.3 mm at a projection distance of 300 mm.

Figure 6.

Prototype of handheld LSLMS.

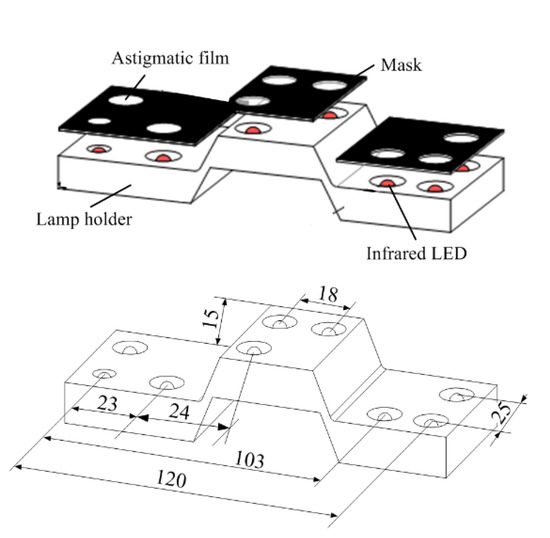

The featured target on the handheld device is also important for measurement accuracy. The composition and key parameters of the target are shown in Figure 7. The light spots of the target were arranged asymmetrically so that they can be sorted unambiguously. Each spot was illuminated by an independent 850 nm infrared LED. One side of the mask was coated with a light-scattering layer, and the other side was machined with circular holes at the LED positions to ensure the clarity of the feature points. The lamp holder was fabricated by 3D printing, and its dot positioning accuracy could not meet the measurement requirements. Therefore, a binocular method was used to recalibrate the relative distances between the feature points, and the calibrated results were used to compute the extrinsic parameters.

Figure 7.

Composition and key parameters of featured target.

4.2. Calibration Procedures

Calibration of the measurement system mainly included three steps. The first step was to calibrate the intrinsic parameters of the two cameras using Zhang's method [33]. The second step was sensor calibration, in which the laser plane equation is computed in the handheld camera coordinate system [2]. The final step was to calibrate the transformation matrix between the featured target coordinate system and the handheld camera coordinate system. Since camera and sensor calibration methods are relatively mature, we focus here on the final step. For this calibration, 15 chessboard images and the corresponding featured target images were taken at different postures of the handheld device. During this process, the laser line projector was turned off to ensure the image quality of the chessboard. The calibration was then completed using the method in Section 3.2.

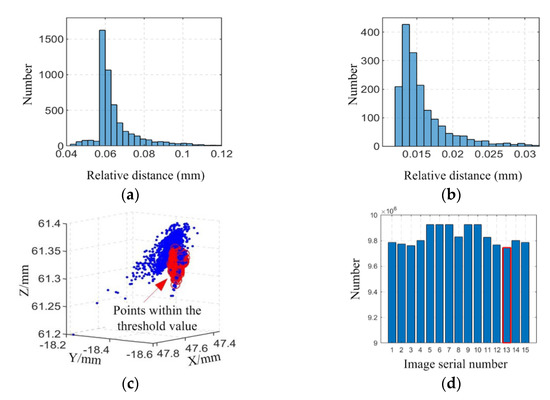

Figure 8a,b show the relative distances within the transformed point cloud. Figure 8c shows the distribution of the point cloud before and after selection. Figure 8d shows how often each image pair is used in computing the selected points, i.e., the points within the threshold Tg. The image pair with the lowest frequency is rejected in each iteration.

Figure 8.

Selection of image pairs. (a,b) Relative distance of the distribution point cloud after transformation. (c) Point clouds before and after selection. (d) Image sequence.

Table 1 shows how the proportion of large noise points (Pn) within the data sets Di changes during the selection of image pairs. One image pair is deleted in each iteration, and Pn is computed as the ratio of the number of points beyond Tg to the total number of points. Pn first decreases and then increases. As the images with large errors are eliminated, fewer data in the current set deviate from the threshold. Once the image pairs that may introduce significant calibration errors have been eliminated, the remaining image pairs satisfy the threshold and are at the same numerical level; the newly computed threshold then classifies a gradually increasing proportion of the remaining data as large noise points. Therefore, the calibration reaches its optimal result at the third iteration.
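Pn and the stopping rule can be expressed in a few lines. This is a sketch under assumptions: Pn is taken as the fraction of distinct distances d(i,k), i < k, that exceed Tg, and the optimal iteration is taken as the one where Pn is smallest, with the first entry of the history corresponding to the first iteration; the function names are hypothetical.

```python
import numpy as np

def noise_proportion(dist, T_g):
    """Pn: fraction of distances d(i, k), i < k, that lie beyond the threshold T_g."""
    iu = np.triu_indices(dist.shape[0], k=1)
    return float(np.mean(dist[iu] > T_g))

def best_iteration(pn_history):
    """1-based iteration at which Pn reaches its minimum before rising again."""
    return int(np.argmin(pn_history)) + 1
```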

Table 1.

Proportion of large noise points in the selection.

The matrix X can be obtained both before and after the image selection process. For easy comparison, the corresponding rotation angles and translation vectors are shown in Table 2. The image selection process has a significant impact on the calibration results: tz changes by more than 1 mm. The effect of the calibration matrix on measurement accuracy is shown in Section 5.1.
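The rotation angles in Table 2 can be extracted from the rotation part of X. The angle convention used for Table 2 is not stated, so the NumPy sketch below assumes Z-Y-X Euler angles, $R = R_z(r_z)R_y(r_y)R_x(r_x)$, valid for $|r_y| < 90°$; the function names are hypothetical. `compare_calibrations` reports the angle and translation changes between two calibration results, such as those obtained before and after image selection.

```python
import numpy as np

def rot_zyx_deg(rx, ry, rz):
    """R = Rz(rz) @ Ry(ry) @ Rx(rx), angles in degrees (assumed convention)."""
    a, b, c = np.radians([rx, ry, rz])
    Rx = np.array([[1, 0, 0], [0, np.cos(a), -np.sin(a)], [0, np.sin(a), np.cos(a)]])
    Ry = np.array([[np.cos(b), 0, np.sin(b)], [0, 1, 0], [-np.sin(b), 0, np.cos(b)]])
    Rz = np.array([[np.cos(c), -np.sin(c), 0], [np.sin(c), np.cos(c), 0], [0, 0, 1]])
    return Rz @ Ry @ Rx

def euler_zyx_deg(R):
    """Inverse of rot_zyx_deg for |ry| < 90 deg: returns (rx, ry, rz) in degrees."""
    ry = -np.arcsin(np.clip(R[2, 0], -1.0, 1.0))
    rx = np.arctan2(R[2, 1], R[2, 2])
    rz = np.arctan2(R[1, 0], R[0, 0])
    return np.degrees([rx, ry, rz])

def compare_calibrations(X_before, X_after):
    """Changes in rotation angles (deg) and translation (mm) between two 4x4 results."""
    d_angles = euler_zyx_deg(X_after[:3, :3]) - euler_zyx_deg(X_before[:3, :3])
    d_trans = X_after[:3, 3] - X_before[:3, 3]
    return d_angles, d_trans
```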

Table 2.

Parameters of the calibrated matrix before and after image selection.

5. Measurement Results and Discussions

5.1. Measurement of Typical Parts and Accuracy Evaluation

The plane was first measured with the system calibrated by the CFVCM, and the result failed, as shown in Figure 3. After calibration with the improved MVCM, the same plane was rescanned with the handheld LSLMS. The point cloud and the fitted plane are shown in Figure 9a. Nearly all of the points lie on the fitted plane, and the edge of the point cloud is now straight. Because of the least squares fitting criterion, some points lie below the fitted plane and are hidden from view, which is why some holes appear in the displayed result. When the tilt is removed, the residual surface can be seen, as shown in Figure 9b. The maximum deviation of the measurement result is 0.0523 mm and the absolute mean value is only 0.0285 mm, indicating high measurement accuracy with the proposed calibration method.
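The plane evaluation can be reproduced along these lines. This NumPy sketch assumes a total-least-squares plane fit via SVD and reports the maximum and mean absolute point-to-plane residuals after tilt removal. The text does not state whether the maximum deviation is a maximum absolute residual or a peak-to-valley value, so that choice is an assumption here, and the function names are hypothetical.

```python
import numpy as np

def fit_plane(points):
    """Total-least-squares plane through N x 3 points: (centroid, unit normal) via SVD."""
    centroid = points.mean(axis=0)
    _, _, Vt = np.linalg.svd(points - centroid)
    return centroid, Vt[-1]          # right singular vector of the smallest singular value

def flatness_stats(points):
    """Signed residuals after tilt removal, their maximum |value| and mean |value|."""
    centroid, normal = fit_plane(points)
    residuals = (points - centroid) @ normal
    abs_res = np.abs(residuals)
    return residuals, float(abs_res.max()), float(abs_res.mean())
```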

Figure 9.

Measurement results of a plane. (a) Point cloud. (b) Residue error.

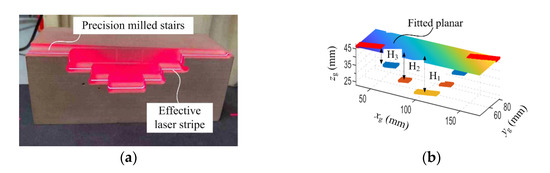

To further validate the measurement system, a part with precisely milled stairs was measured, as shown in Figure 10a. The laser stripe diffusely reflected from the stairs is clearly visible; it is thin and bright, which benefits the accuracy of the results. Figure 10b shows the measured point cloud, with the height of each stair denoted by H1, H2 and H3. The fitted plane of the top stair serves as the reference for height computation.

Figure 10.

Measurement of the stairs. (a) Stairs. (b) Measured point cloud.

To make the result more reliable, the edge points of each stair were removed. Each height value is the mean distance between the points on a stair and the fitted plane of the top stair. The stair heights measured by a coordinate measuring machine (Hexagon Global 7107, Qingdao, China) are taken as the standard values: H1 = 24.0039 mm, H2 = 18.0027 mm and H3 = 10.0021 mm. The measurement results obtained with the MVCM and with the improved MVCM were compared, with the stairs scanned five times for each method. The measurement errors, the absolute mean error (AME) and the relative error (RE) are shown in Table 3 and Table 4.
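The height and error computation can be sketched as follows, assuming an SVD plane fit on the top stair, heights taken as mean absolute point-to-plane distances and the relative error defined as the largest absolute error over the repeated scans divided by the CMM reference height. These definitions and the function names are assumptions for illustration; Tables 3 and 4 may define the quantities differently.

```python
import numpy as np

def fit_plane(points):
    """Total-least-squares plane: (centroid, unit normal) via SVD."""
    centroid = points.mean(axis=0)
    _, _, Vt = np.linalg.svd(points - centroid)
    return centroid, Vt[-1]

def stair_height(top_points, stair_points):
    """Mean distance from one stair's points to the plane fitted on the top stair."""
    centroid, normal = fit_plane(top_points)
    return float(np.mean(np.abs((stair_points - centroid) @ normal)))

def error_stats(measured, reference):
    """Absolute mean error (mm) and maximum relative error (%) over repeated scans."""
    err = np.asarray(measured, dtype=float) - reference
    ame = float(np.mean(np.abs(err)))
    re_max = float(np.max(np.abs(err)) / reference * 100.0)
    return ame, re_max
```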

Table 3.

Measurement results using MVCM (mm).

Table 4.

Measurement results using improved MVCM (mm).

The maximum relative error of the stair heights measured with the handheld device calibrated by the MVCM is 0.48%. With the improved MVCM, the maximum relative error is reduced to 0.16%.

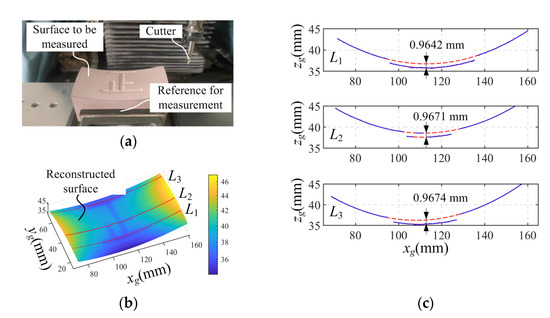

Besides the plane and the stairs, we also milled a more complex surface on a precision machine, as shown in Figure 11a. It is a saddle surface with an equation in the workpiece coordinate system of:

where xw, yw and zw are the 3D coordinates of the surface points. The ranges of xw and yw are 0–85 mm and 0–55 mm, respectively. A character is engraved in the surface with a programmed depth of 1 mm. After machining, the handheld scanning device was used to obtain the point cloud of the surface, and the reconstructed result is shown in Figure 11b. The reference surface is used for coordinate alignment between the measurement system and the workpiece coordinate system.

Figure 11.

Measurement and accuracy verification of a complex surface. (a) Surface to be measured, (b) reconstructed surface, (c) the intersection curves measured by the handheld device.

Three cross-sectional profiles, L1, L2 and L3, are selected along the xw direction on the measured surface for analysis. According to Equation (7), the profiles of the saddle surface in these three sections are all quadratic curves. Therefore, the least squares method was used to fit a quadratic curve to the data of each profile section. The average distance between the data points on the milled character profile and the corresponding fitted curve was taken as the measured milling depth, as shown in Figure 11c.
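The depth evaluation for one cross-section can be sketched with `numpy.polyfit`. The sketch assumes that the quadratic is fitted only to the points outside the character and that the depth is the mean vertical gap between the fitted curve and the character points, which approximates the normal distance when the local slope is small; the function name is hypothetical.

```python
import numpy as np

def section_depth(x_ref, z_ref, x_char, z_char):
    """Fit z = a*x**2 + b*x + c to the surface points of one section and return the
    mean vertical gap between the fitted curve and the engraved-character points."""
    coeffs = np.polyfit(x_ref, z_ref, 2)          # least-squares quadratic
    fitted = np.polyval(coeffs, x_char)
    return float(np.mean(fitted - np.asarray(z_char, dtype=float)))
```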

The depth of the character was also measured with the coordinate measuring machine, with measurement points distributed on the surface and on the bottom of the character. Equation (7) was used to fit the measurement data of the saddle surface, and the average distance between the detection points in the character section and the fitted surface was 1.0044 mm. This value, which deviates from the programmed milling depth by 4.4 μm, is used as the reference value for the measurement results. The deviations of the measured character depth from this reference value in the three cross-sections were 0.0402 mm, 0.0373 mm and 0.0370 mm, respectively.

The experiments show that the handheld scanning device has maximum errors of 0.0285 mm, 0.0243 mm and 0.0402 mm when measuring planes, stepped surfaces and complex curved surfaces, respectively, so the measurement error is within 0.0500 mm. In comparison, Creaform's handheld scanning systems have a specified measurement accuracy ranging from 0.0250 mm to 0.0500 mm [34]. Note that these systems require binocular guidance (MetraSCAN 3D) or marker points (the HandySCAN 3D series). Our measurement system, in contrast, achieves comparable accuracy with only monocular guidance and can be used without marker points. This indicates that the proposed calibration method significantly enhances the accuracy of monocular measurement systems.

5.2. Measurement of Parts with Complex Surfaces

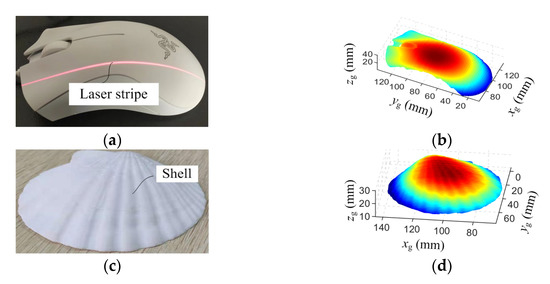

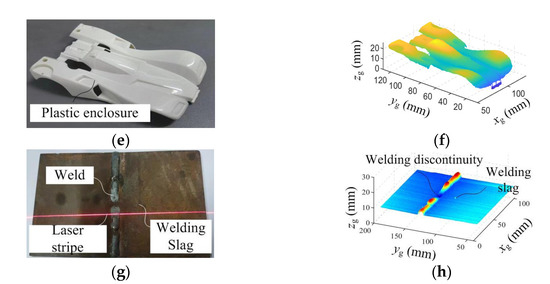

The measurement system aims to obtain the 3D geometrical information of parts for quality inspection and reverse engineering. Therefore, a mouse, a natural shell, a plastic enclosure and a welded part were scanned, as shown in Figure 12a,c,e,g. With the proposed scanning system, these parts can be scanned from different angles, which makes the system more flexible and the results more complete. The measured point clouds were processed with Delaunay triangulation, and Figure 12b,d,f,h show the triangulated surfaces.

Figure 12.

Measurement of typical parts. (a) The mouse, (c) the natural shell, (e) plastic enclosure and (g) the welded part; (b,d,f,h) are the reconstructed surfaces.

Figure 12b presents the contour of the mouse and accurately depicts the details of the scroll wheel; Figure 12d clearly displays the texture of the seashell surface; Figure 12f shows the measurement result for the toy car's enclosure and accurately represents its concave and convex features; Figure 12h shows the reconstructed surface of the welded part, revealing weld discontinuities and providing 3D point cloud information on the weld slag. This handheld scanning system therefore shows potential for defect detection in mechanical manufacturing. These reconstruction results further validate the effectiveness of the handheld scanning device in capturing the 3D geometric information of complex surfaces.

6. Conclusions

A handheld LSLMS was developed for the 3D geometrical measurement of complex parts. The system is guided by monocular vision and can be applied without linear slides or rotation tables. It consists of a movable structured-light sensor and a pose measurement camera. High-precision calibration of the coordinate transformation matrix from the movable line-structured-light sensor to the handheld target coordinate system is a prerequisite for measurement. To achieve this, an improved MVCM is proposed together with a selection procedure for image pairs, which improves the accuracy of the transformation matrix from the sensor to the featured target on the handheld device. With this method, the maximum relative error of the measured stair heights is reduced from 0.48% to 0.16%, and the maximum measurement error for the saddle surface is 0.0402 mm. The proposed system does not require markers to be pasted on the object during scanning, thereby avoiding the errors and time consumption associated with point cloud stitching. Additionally, monocular guidance lowers the usage requirements and cost, making the system suitable for quality inspection and reverse engineering. The next step is to develop a real-time display and result evaluation module for in situ measurement.

Author Contributions

Conceptualization, J.Z.; Data curation, J.Z. and Z.J.; Formal analysis, X.L.; Funding acquisition, J.Z.; Methodology, Z.J., Y.L. and X.L.; Project administration, Y.L.; Resources, Y.L. and W.Y.; Software, Z.J.; Validation, J.Z.; Visualization, W.Y. and Y.Q.; Writing—original draft, Z.J. and Y.Q.; Writing—review and editing, Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Hebei Province under Grant E2022208020, by the Hebei Provincial Department of Education under Grant CXZZS2022080 and by the S&T Program of Hebei under Grant 22311701D.

Data Availability Statement

Data sharing not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wei, Z.; Shao, M.; Wang, Y.; Hu, M. A Sphere-Based Calibration Method for Line Structured Light Vision Sensor. Adv. Mech. Eng. 2013, 5, 580417.

- Li, Y.; Zhou, J.; Mao, Q.; Jin, J.; Huang, F. Line Structured Light 3D Sensing With Synchronous Color Mapping. IEEE Sens. J. 2020, 20, 9796–9805.

- Usamentiaga, R.; Garcia, D. Multi-camera calibration for accurate geometric measurements in industrial environments. Measurement 2019, 134, 345–358.

- Li, Y.; Zhao, B.; Zhou, J.; Ren, Y. A universal method for the calibration of swing-scanning line structured light measurement system. Optik 2021, 241, 166930.

- Liang, J.; Gu, X. Development and application of a non-destructive pavement testing system based on linear structured light three-dimensional measurement. Constr. Build. Mater. 2020, 260, 119919.

- Karaszewski, M.; Sitnik, R.; Bunsch, E. On-line, collision-free positioning of a scanner during fully automated three-dimensional measurement of cultural heritage objects. Robot. Auton. Syst. 2012, 60, 1205–1219.

- Logozzo, S.; Valigi, M.C.; Canella, G. Advances in Optomechatronics: An Automated Tilt-Rotational 3D Scanner for High-Quality Reconstructions. Photonics 2018, 5, 42.

- Babu, M.; Franciosa, P.; Ceglarek, D. Spatio-Temporal Adaptive Sampling for effective coverage measurement planning during quality inspection of free form surfaces using robotic 3D optical scanner. J. Manuf. Syst. 2019, 53, 93–108.

- Li, Y.; Zhou, J.; Huang, F.; Liu, L. Sub-Pixel Extraction of Laser Stripe Center Using an Improved Gray-Gravity Method. Sensors 2017, 17, 814.

- Zhou, J.; Wang, K.; Yang, G.; Liu, X.; Du, R.; Li, Y. Real-time uncertainty estimation of stripe center extraction results using adaptive BP neural network. Measurement 2022, 194, 111022.

- Santolaria, J.; Aguilar, J.-J.; Yagüe, J.-A.; Pastor, J. Kinematic parameter estimation technique for calibration and repeatability improvement of articulated arm coordinate measuring machines. Precis. Eng. 2008, 32, 251–268.

- Santolaria, J.; Guillomía, D.; Cajal, C.; Albajez, J.A.; Aguilar, J.J. Modelling and Calibration Technique of Laser Triangulation Sensors for Integration in Robot Arms and Articulated Arm Coordinate Measuring Machines. Sensors 2009, 9, 7374–7396.

- Tai, D.; Wu, Z.; Yang, Y.; Lu, C. A Cross-Line Structured Light Scanning System Based on a Measuring Arm. Instruments 2023, 7, 5.

- Park, S.-Y.; Baek, J.; Moon, J. Hand-held 3D scanning based on coarse and fine registration of multiple range images. Mach. Vis. Appl. 2011, 22, 563–579.

- Ayaz, S.M.; Kim, M.Y. Multiview registration-based handheld 3D profiling system using visual navigation and structured light. Int. J. Optomechatron. 2017, 11, 1–14.

- Newcombe, R.A.; Izadi, S.; Hilliges, O.; Molyneaux, D.; Kim, D.; Davison, A.J.; Kohli, P.; Shotton, J.; Hodges, S.; Fitzgibbon, A. KinectFusion: Real-time dense surface mapping and tracking. In Proceedings of the 2011 10th IEEE International Symposium on Mixed and Augmented Reality, Basel, Switzerland, 26–29 October 2011; pp. 127–136.

- Han, J.; Shao, L.; Xu, D.; Shotton, J. Enhanced Computer Vision with Microsoft Kinect Sensor: A Review. IEEE Trans. Cybern. 2013, 43, 1318–1334.

- Sarbolandi, H.; Lefloch, D.; Kolb, A. Kinect range sensing: Structured-light versus Time-of-Flight Kinect. Comput. Vis. Image Underst. 2015, 139, 1–20.

- Ameen, W.; Al-Ahmari, A.M.; Hammad Mian, S. Evaluation of Handheld Scanners for Automotive Applications. Appl. Sci. 2018, 8, 217.

- Kleiner, B.; Munkelt, C.; Thorhallsson, T.; Notni, G.; Kühmstedt, P.; Schneider, U. Handheld 3-D Scanning with Automatic Multi-View Registration Based on Visual-Inertial Navigation. Int. J. Optomechatron. 2014, 8, 313–325.

- Byczkowski, T.; Lang, J. A Stereo-Based System with Inertial Navigation for Outdoor 3D Scanning. In Proceedings of the 2009 Canadian Conference on Computer and Robot Vision, Kelowna, BC, Canada, 25–27 May 2009; pp. 221–228.

- Ayaz, S.M.; Danish, K.; Bang, J.-Y.; Park, S.-I.; Roh, Y.; Kim, M.Y. A multi-view stereo based 3D hand-held scanning system using visual-inertial navigation and structured light. MATEC Web Conf. 2015, 32, 6004.

- Wang, X.; Xie, Z.; Wang, K.; Zhou, L. Research on a Handheld 3D Laser Scanning System for Measuring Large-Sized Objects. Sensors 2018, 18, 3567.

- Peng, T.; Zhang, Z.; Song, Y.; Chen, F.; Zeng, D. Portable System for Box Volume Measurement Based on Line-Structured Light Vision and Deep Learning. Sensors 2019, 19, 3921.

- Deniz, C.; Cakir, M. A solution to the hand-eye calibration in the manner of the absolute orientation problem. Ind. Robot. Int. J. Robot. Res. Appl. 2018, 45, 64–77.

- Park, F.; Martin, B. Robot sensor calibration: Solving AX = XB on the Euclidean group. IEEE Trans. Robot. Autom. 1994, 10, 717–721.

- Fu, Z.; Pan, J.; Spyrakos-Papastavridis, E.; Chen, X.; Li, M. A Dual Quaternion-Based Approach for Coordinate Calibration of Dual Robots in Collaborative Motion. IEEE Robot. Autom. Lett. 2020, 5, 4086–4093.

- Wang, G.; Li, W.-L.; Jiang, C.; Zhu, D.-H.; Xie, H.; Liu, X.-J.; Ding, H. Simultaneous Calibration of Multicoordinates for a Dual-Robot System by Solving the AXB = YCZ Problem. IEEE Trans. Robot. 2021, 37, 1172–1185.

- Li, A.G.; Wang, L.; Wu, D.F. Simultaneous robot-world and hand-eye calibration using dual-quaternions and Kronecker product. Int. J. Phys. Sci. 2010, 5, 1530–1536.

- Cao, C.-T.; Do, V.-P.; Lee, B.-R. A Novel Indirect Calibration Approach for Robot Positioning Error Compensation Based on Neural Network and Hand-Eye Vision. Appl. Sci. 2019, 9, 1940.

- Guo, Y.; Song, B.; Tang, X.; Zhou, X.; Jiang, Z. A measurement method for calibrating kinematic parameters of industrial robots with point constraint by a laser displacement sensor. Meas. Sci. Technol. 2020, 31, 075004.

- Pachtrachai, K.; Vasconcelos, F.; Chadebecq, F.; Allan, M.; Hailes, S.; Pawar, V.; Stoyanov, D. Adjoint Transformation Algorithm for Hand–Eye Calibration with Applications in Robotic Assisted Surgery. Ann. Biomed. Eng. 2018, 46, 1606–1620.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Professional and Industrial Portable 3D Scanners. Available online: https://www.creaform3d.com.cn/zh/bian-xi-shi-3d-sao-miao-yi (accessed on 20 May 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).