Enhancing UAV Detection in Surveillance Camera Videos through Spatiotemporal Information and Optical Flow

Abstract

1. Introduction

- Extension of the object detection model’s input to consecutive frames, enabling the model to leverage temporal and spatial information for improved detection performance on dynamic targets;

- Incorporation of optical flow tensors as an additional model input, so that the model receives the motion information between two consecutive frames directly and can capture drone motion features more accurately;

- Validation of the proposed method through comparative experiments against Faster R-CNN, Cascade R-CNN, and YOLOv3, YOLOv4, and YOLOv5 baselines, reporting precision, recall, F1, AP, and FPS.
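The first two contributions can be illustrated with a minimal sketch of how a multi-frame input with optical flow might be assembled. The function name `build_multiframe_input`, the channel ordering, the scaling of pixel values to [0, 1], and the (C, H, W) layout are assumptions made for illustration only. The flow fields are assumed to come from a separate optical flow estimator and are not computed here.

```python
import numpy as np


def build_multiframe_input(frames, flows=None):
    """Stack consecutive frames (and optional flow fields) along the channel axis.

    frames: list of k uint8 arrays of shape (H, W, 3), ordered oldest to newest.
    flows:  optional list of arrays of shape (H, W, 2) holding per-pixel
            (dx, dy) displacements between adjacent frames (hypothetical input).
    Returns a float32 array of shape (3 * k + 2 * len(flows), H, W).
    """
    if not frames:
        raise ValueError("at least one frame is required")
    height, width = frames[0].shape[:2]

    planes = []
    for frame in frames:
        if frame.shape != (height, width, 3):
            raise ValueError("all frames must share the shape (H, W, 3)")
        # Scale pixel values to [0, 1]; this normalization is an assumption.
        planes.append(frame.astype(np.float32) / 255.0)

    for flow in flows or []:
        if flow.shape != (height, width, 2):
            raise ValueError("each flow field must have the shape (H, W, 2)")
        planes.append(flow.astype(np.float32))

    stacked = np.concatenate(planes, axis=2)  # (H, W, C)
    return np.transpose(stacked, (2, 0, 1))   # (C, H, W)


# Two consecutive RGB frames plus one flow field give 3 * 2 + 2 = 8 channels.
previous_frame = np.zeros((4, 6, 3), dtype=np.uint8)
current_frame = np.full((4, 6, 3), 255, dtype=np.uint8)
flow_field = np.ones((4, 6, 2), dtype=np.float32)
model_input = build_multiframe_input([previous_frame, current_frame], [flow_field])
print(model_input.shape)
```

Under this layout, the only change the detector itself would need is a first convolution that accepts the wider channel count; the rest of the network would see an ordinary feature map.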

2. Related Work

2.1. Single-Frame Image Drone Detection

2.1.1. Single-Stage Drone Detection Methods

2.1.2. Two-Stage Drone Detection Methods

2.2. Video Drone Detection

3. Proposed Method

3.1. Overall Architecture

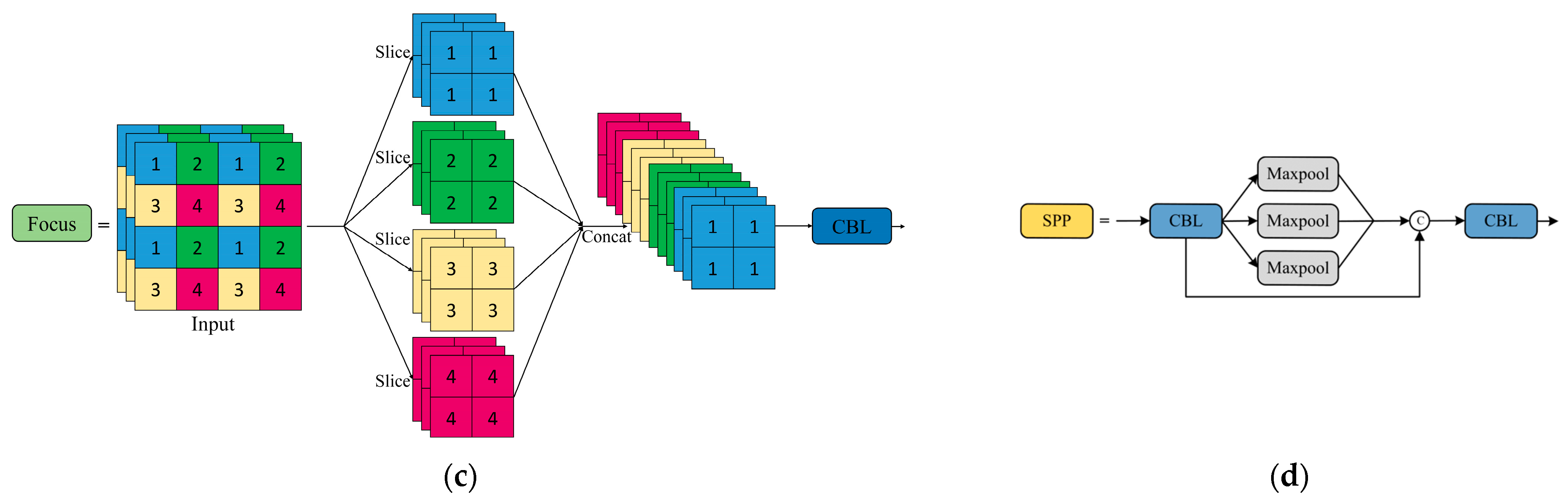

3.2. Extension of the Input Layer for Introducing Temporal Information

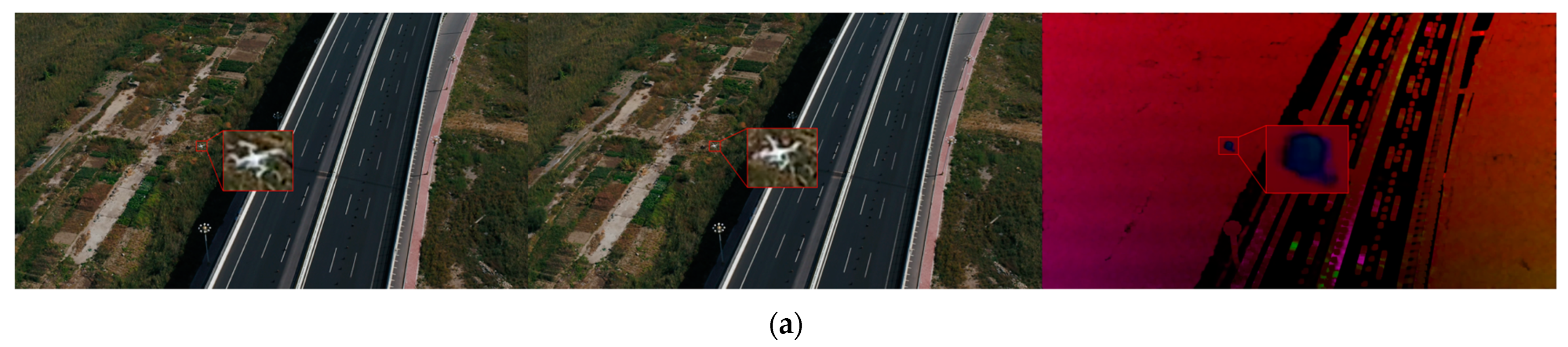

3.3. Extension of the Input Layer for Introducing Optical Flow

3.4. Loss Function

4. Experiments

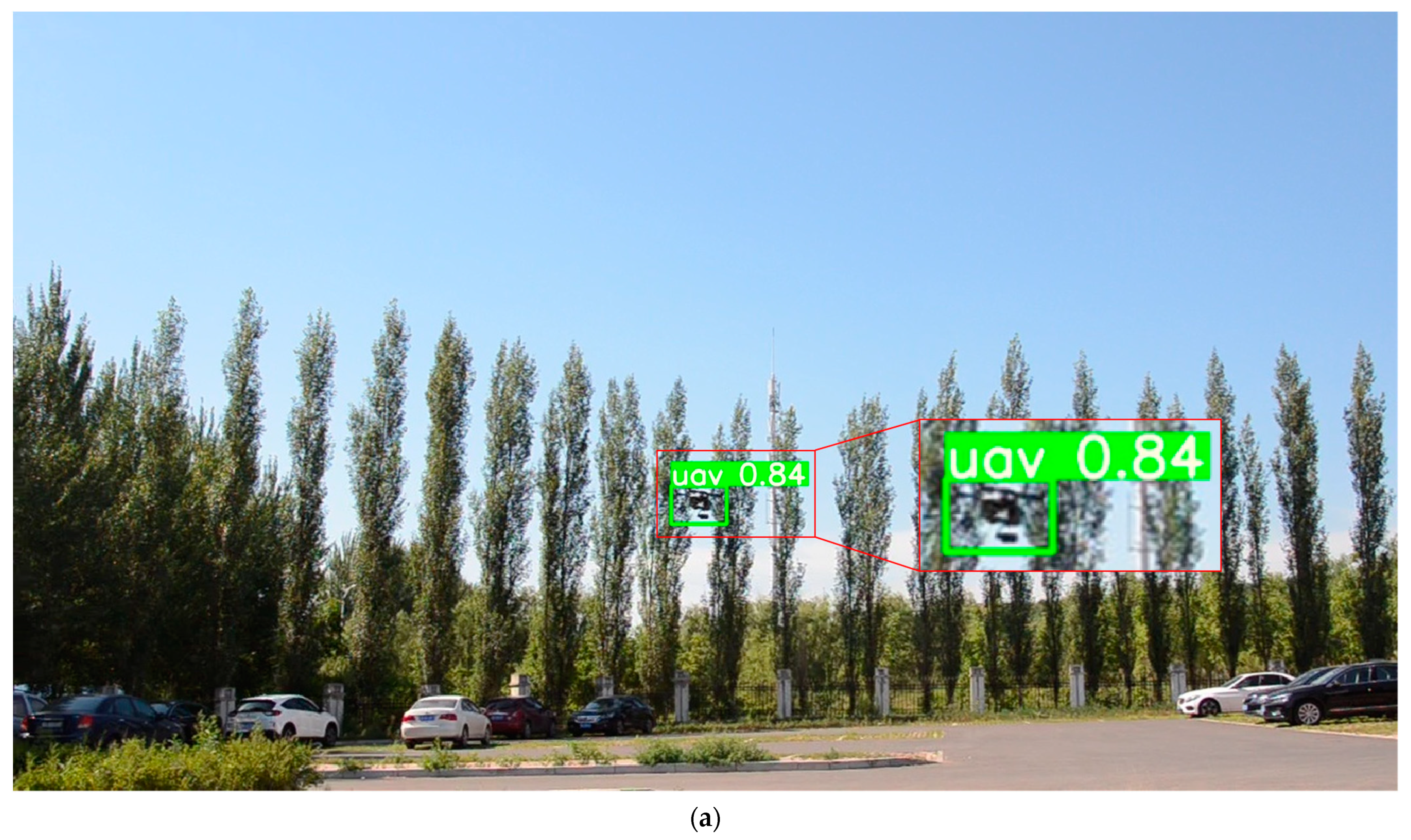

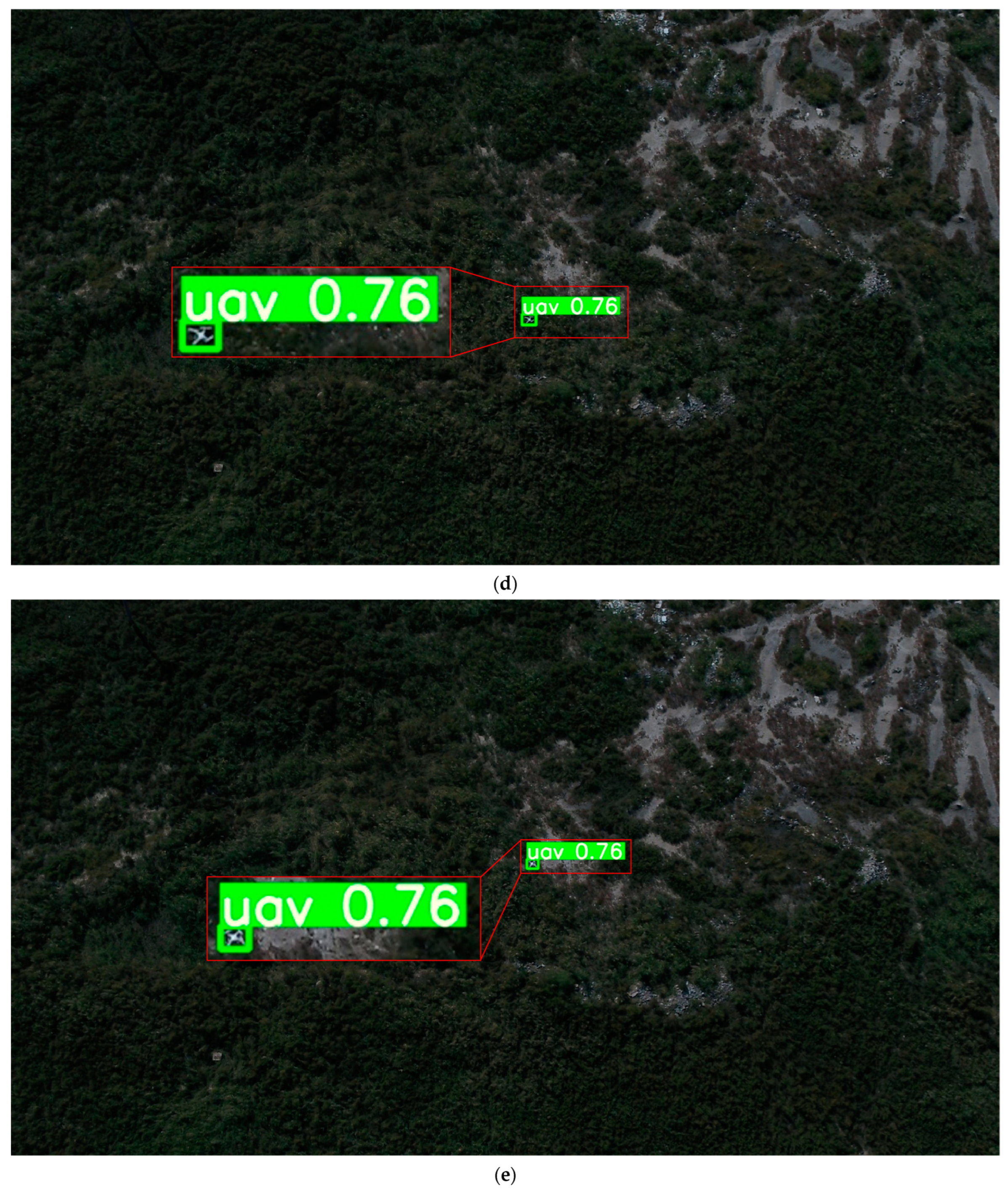

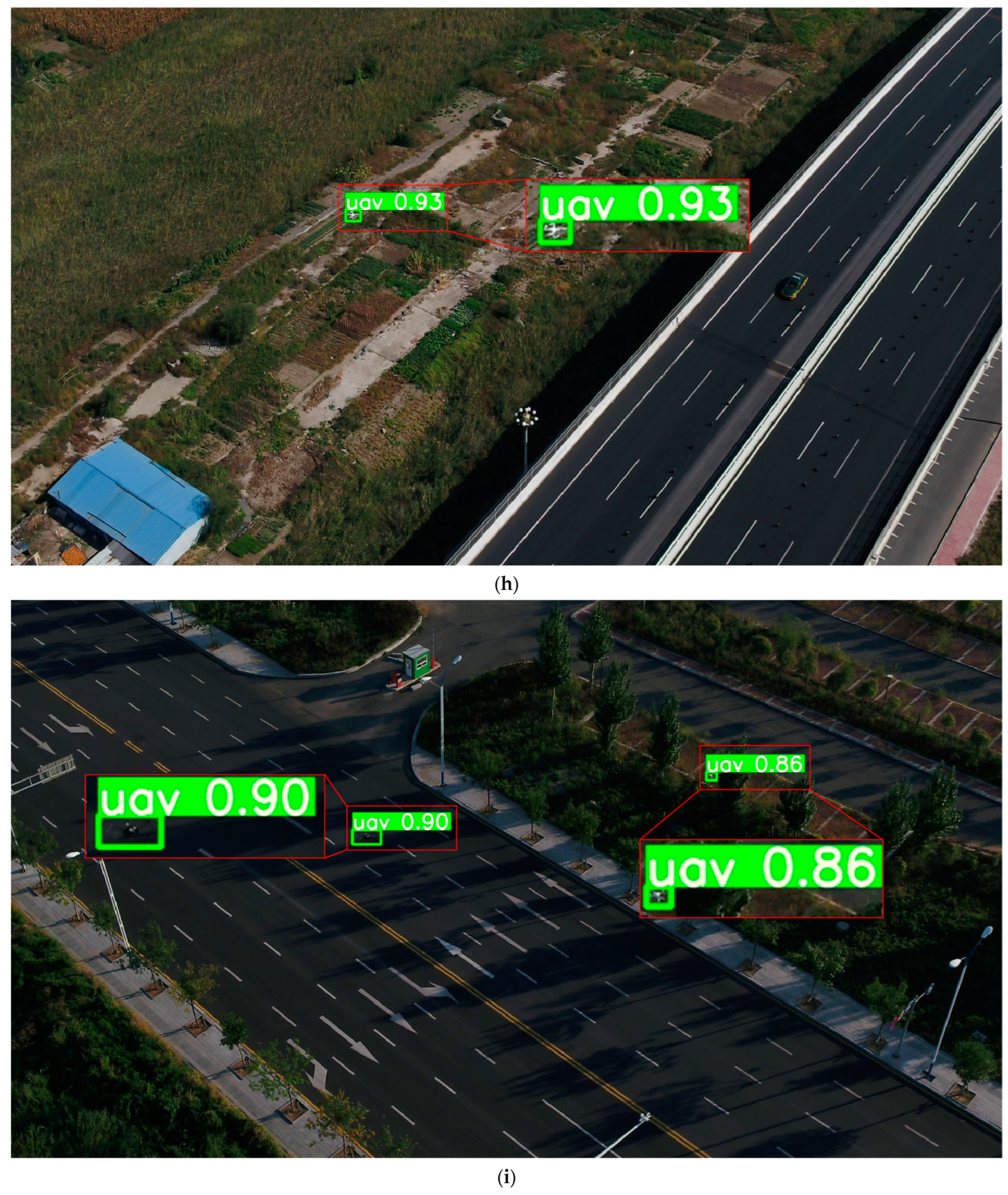

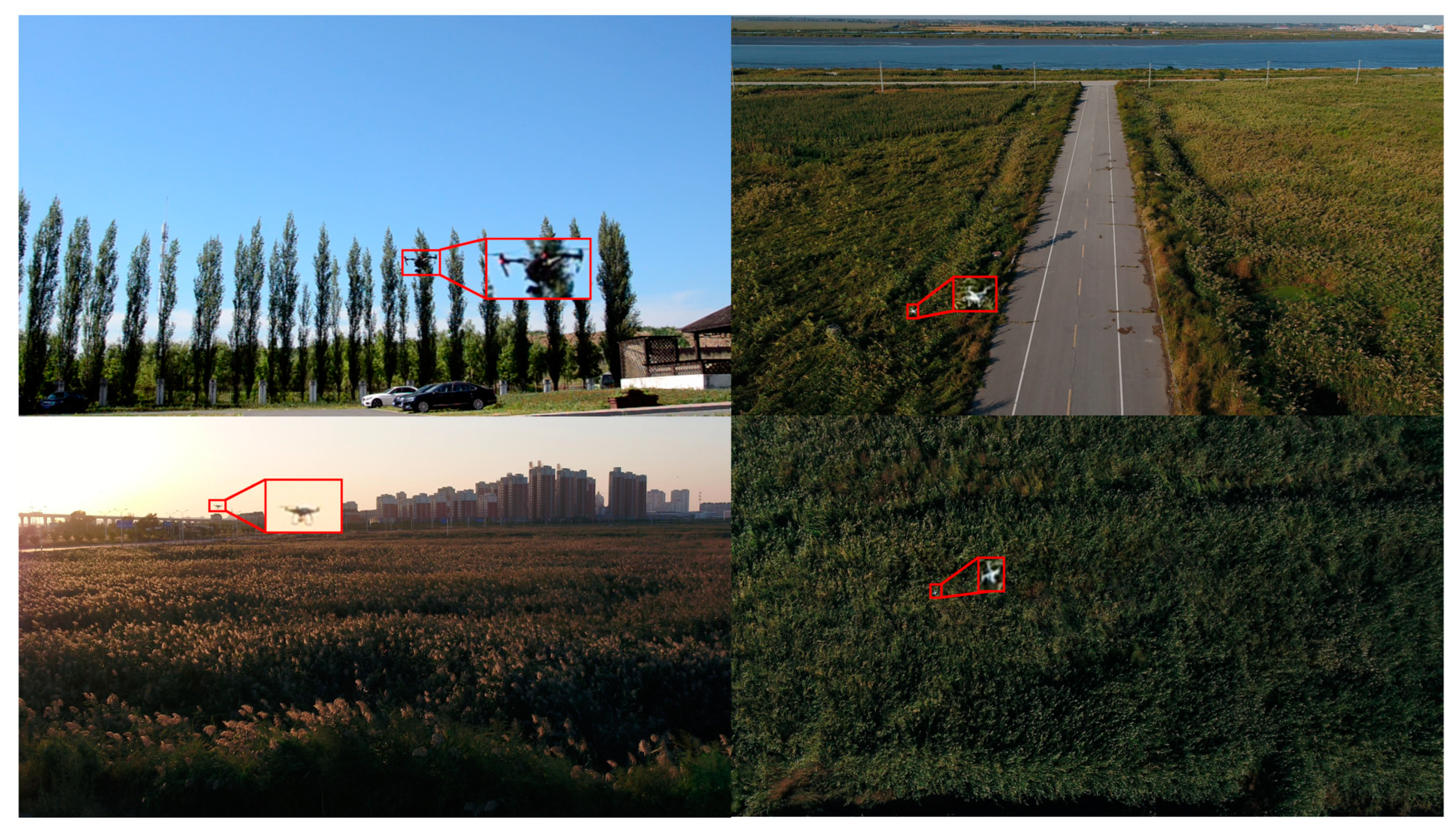

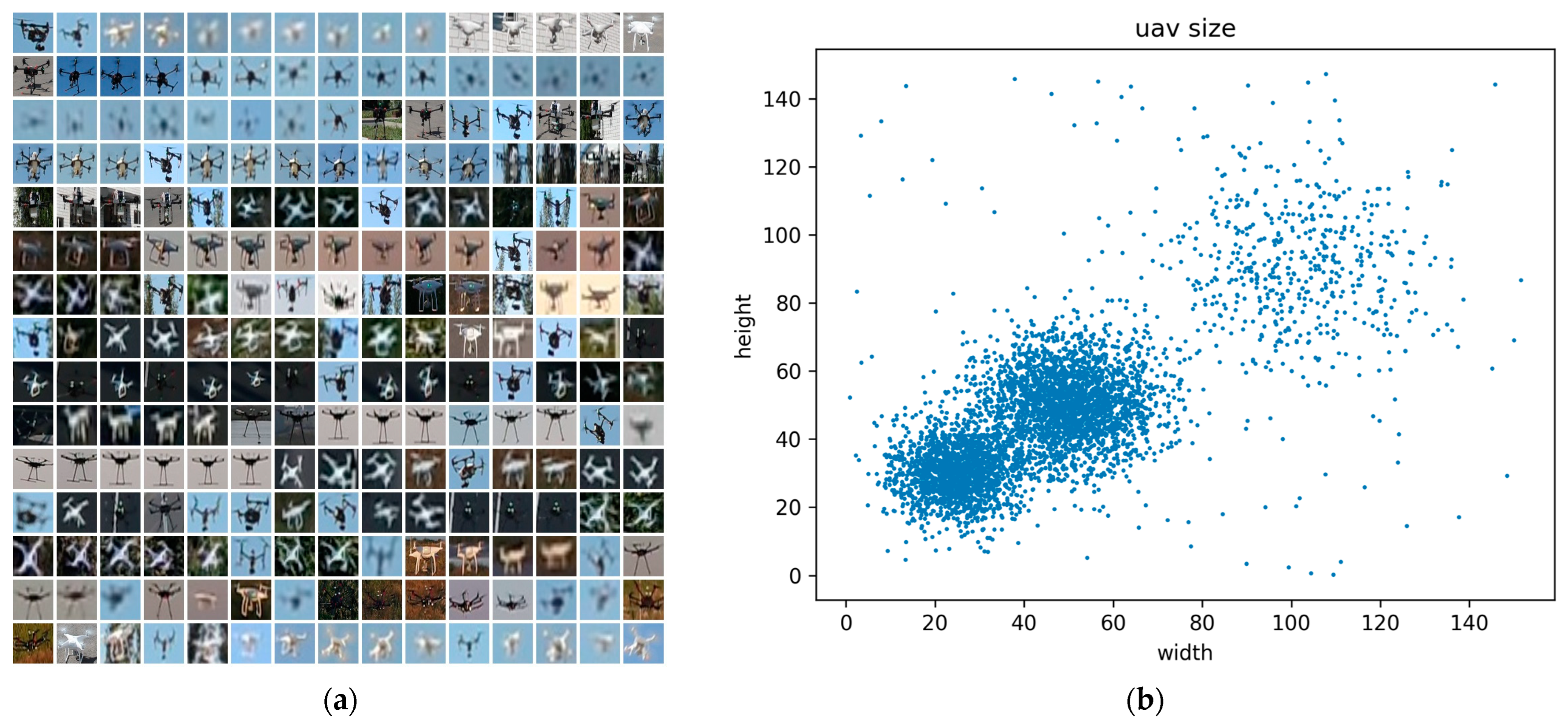

4.1. Dataset

4.2. Implementation Details

4.3. Evaluation Criteria

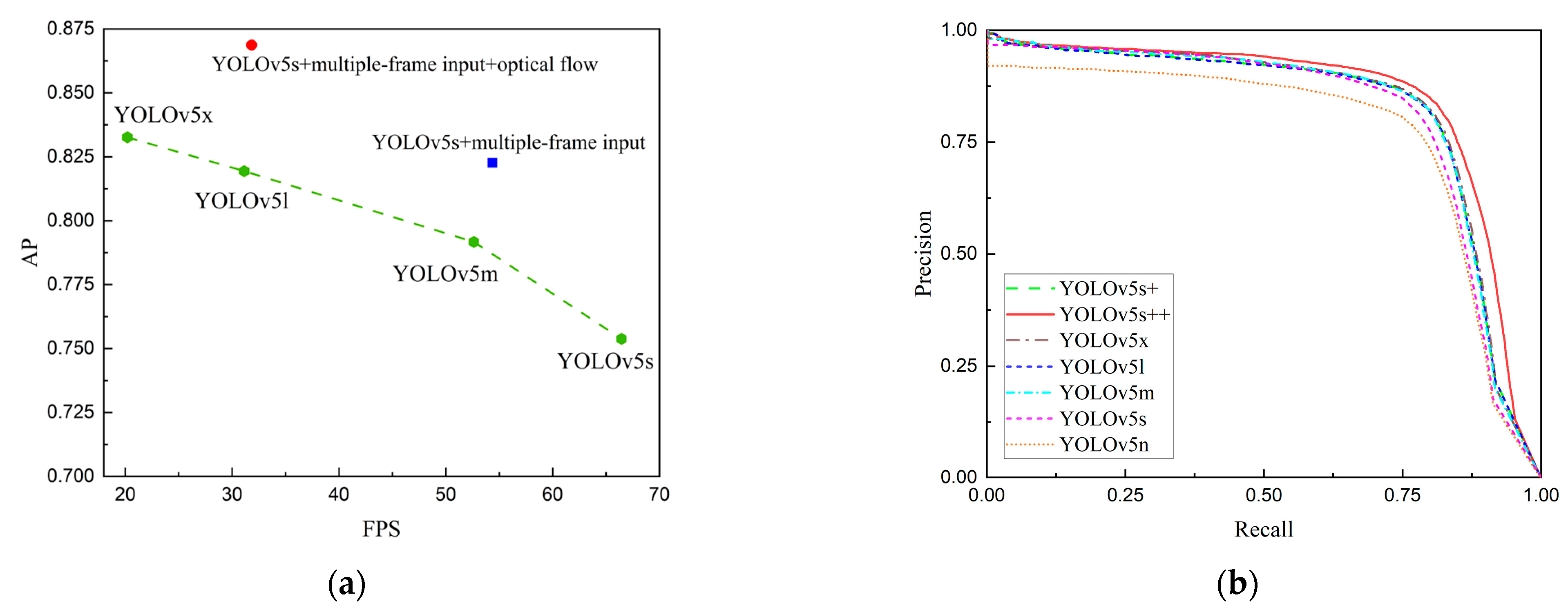

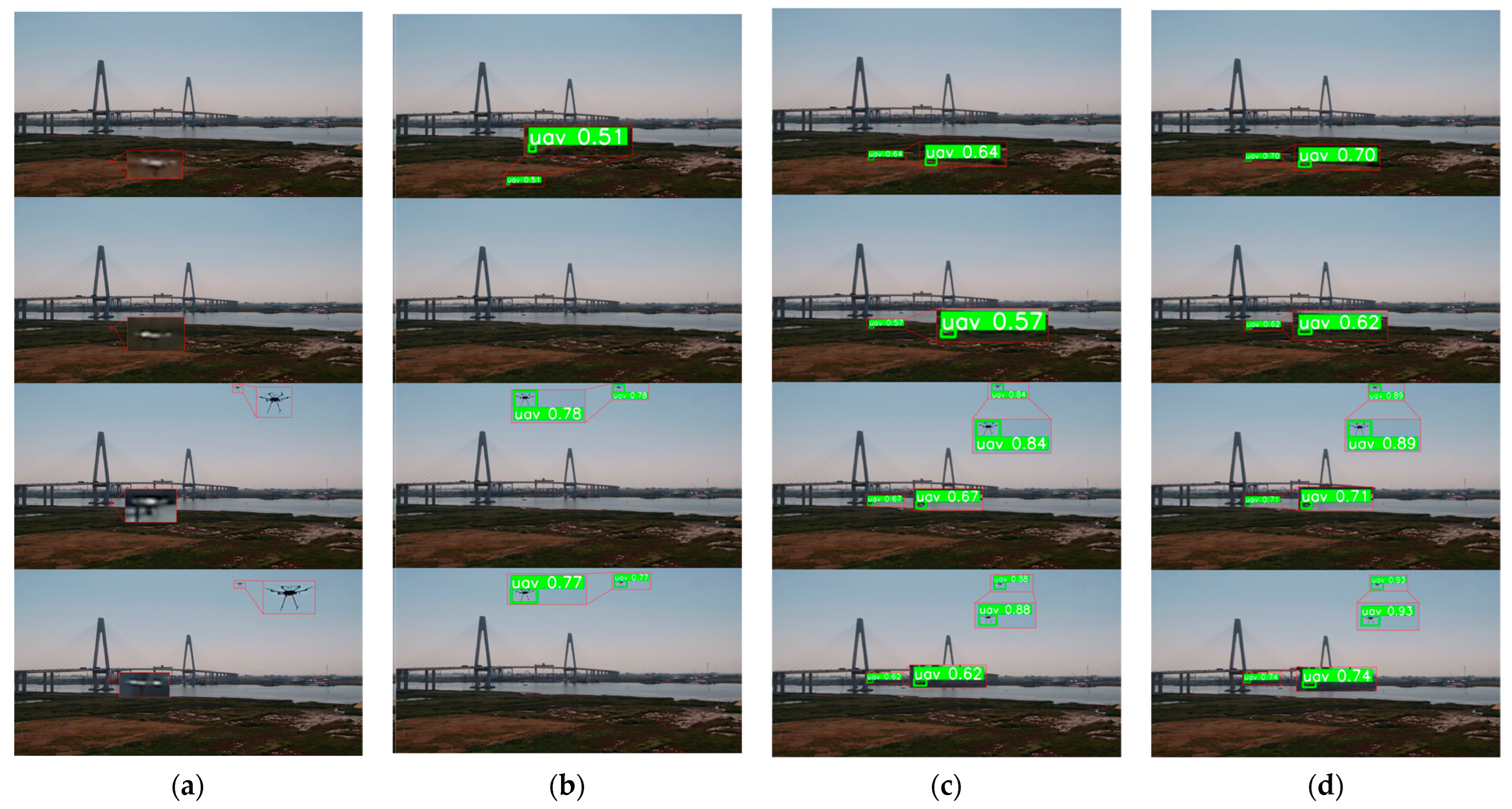

4.4. Experimental Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A


| Method | Precision (P) | Recall (R) | F1 | AP | FPS |
|---|---|---|---|---|---|
| Faster R-CNN | 70.13% | 63.20% | 0.6648 | 68.75% | 27.26 |
| Cascade R-CNN | 78.32% | 68.08% | 0.7284 | 74.68% | 8.63 |
| YOLOv3 | 78.51% | 67.98% | 0.7286 | 73.85% | 17.21 |
| YOLOv3 + multiple-frame input | 87.59% | 75.72% | 0.8122 | 79.43% | 6.30 |
| YOLOv3 + multiple-frame input + optical flow | 89.21% | 78.36% | 0.8343 | 85.18% | 0.87 |
| YOLOv4 | 83.47% | 79.15% | 0.8125 | 83.25% | 20.05 |
| YOLOv4 + multiple-frame input | 89.28% | 84.88% | 0.8702 | 86.71% | 10.17 |
| YOLOv4 + multiple-frame input + optical flow | 89.68% | 86.24% | 0.8792 | 91.75% | 1.48 |
| YOLOv5x | 85.92% | 80.18% | 0.8294 | 83.26% | 20.21 |
| YOLOv5l | 83.41% | 78.39% | 0.8082 | 81.94% | 31.14 |
| YOLOv5m | 81.56% | 73.47% | 0.7730 | 79.17% | 52.63 |
| YOLOv5s | 78.77% | 66.71% | 0.7224 | 75.38% | 66.45 |
| YOLOv5s + multiple-frame input | 86.18% | 76.43% | 0.8101 | 82.27% | 54.37 |
| YOLOv5s + multiple-frame input + optical flow | 86.96% | 80.67% | 0.8369 | 86.87% | 31.84 |
| YOLOv5n | 76.38% | 63.11% | 0.6911 | 71.51% | 75.34 |
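As a consistency check on the table, the F1 column can be recomputed from the precision and recall columns using the standard definition F1 = 2PR / (P + R). The sketch below does this for three rows. The helper name `f1_score` and the choice of rows are illustrative, and because the published precision and recall are already rounded, the recomputed values are only expected to agree with the table to about three decimal places.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall, both given as fractions in [0, 1]."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from three rows of the results table.
reported_rows = {
    "Faster R-CNN": (0.7013, 0.6320, 0.6648),
    "YOLOv4 + multiple-frame input + optical flow": (0.8968, 0.8624, 0.8792),
    "YOLOv5s + multiple-frame input + optical flow": (0.8696, 0.8067, 0.8369),
}

for method, (precision, recall, reported_f1) in reported_rows.items():
    recomputed_f1 = f1_score(precision, recall)
    difference = abs(recomputed_f1 - reported_f1)
    print(f"{method}: recomputed F1 = {recomputed_f1:.4f}, "
          f"reported = {reported_f1:.4f}, difference = {difference:.4f}")
```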


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, Y.; Zhi, X.; Han, H.; Jiang, S.; Shi, T.; Gong, J.; Zhang, W. Enhancing UAV Detection in Surveillance Camera Videos through Spatiotemporal Information and Optical Flow. Sensors 2023, 23, 6037. https://doi.org/10.3390/s23136037
