Energy Efficient Node Selection in Edge-Fog-Cloud Layered IoT Architecture

Abstract

1. Introduction

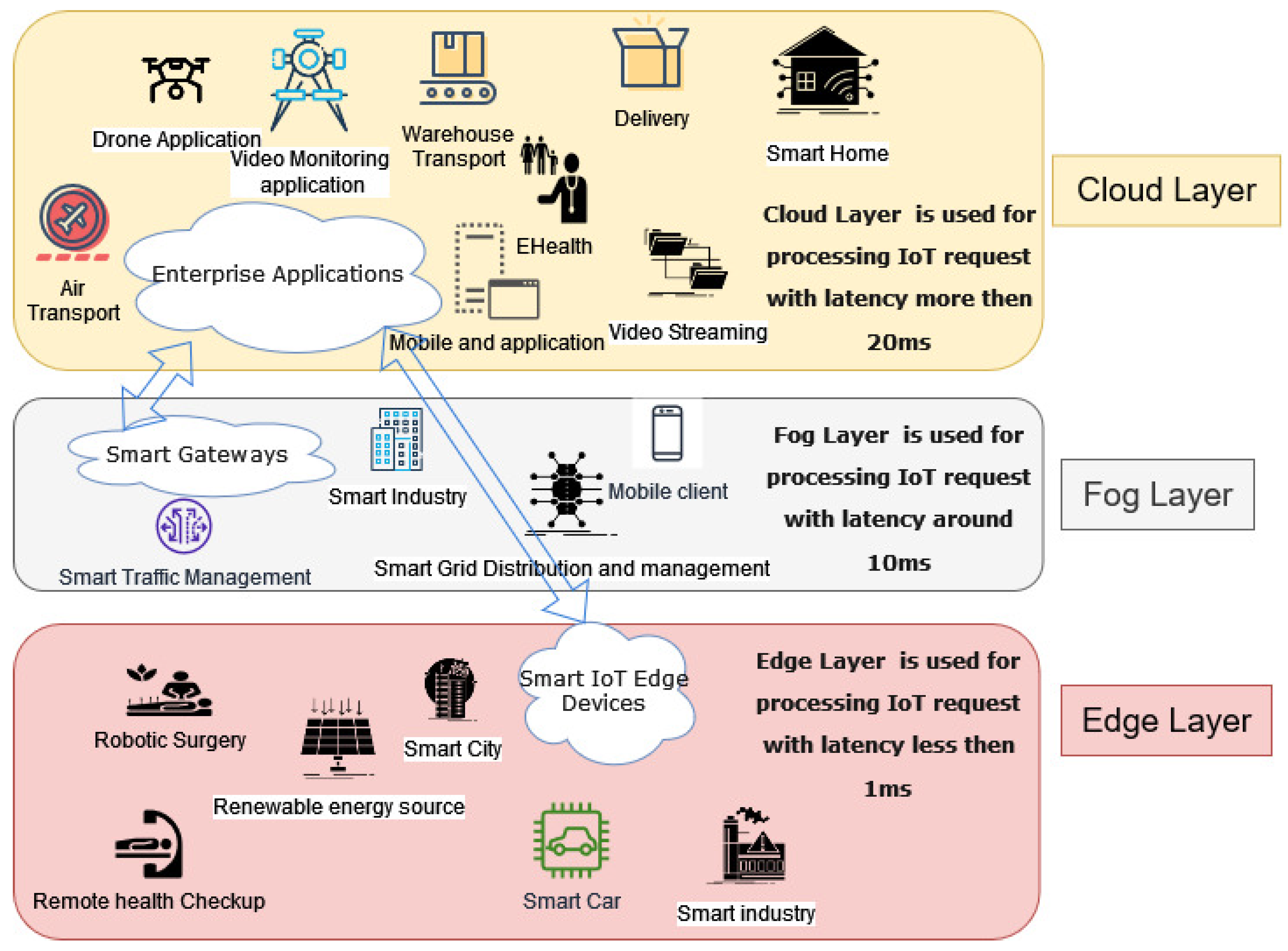

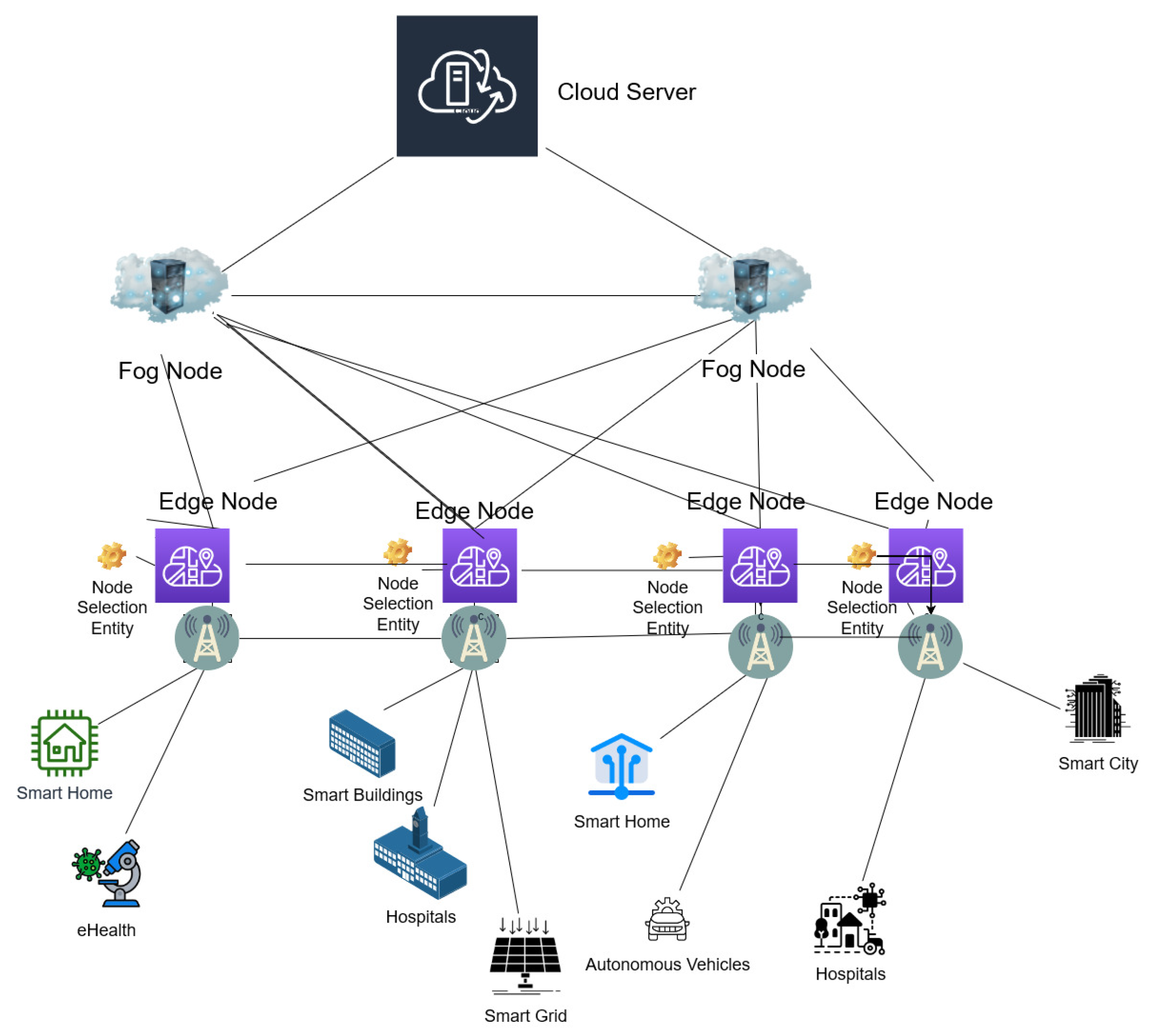

2. Background on IoT Architectures and Energy Management

2.1. IoT Architectures

2.2. Task Offloading and Node Selection in IoT networks

2.2.1. Task Offloading

2.2.2. Node Selection

2.3. Energy Management in IoT Architectures

Network and Application QoS Constraints

3. Research Challenges and Proposal

4. Optimal Node Selection Framework

4.1. Optimization Framework

4.2. Parameters and Sets

4.2.1. Sets

- Let E = 1,…, : denote the set of all nodes considered at the edge layer

- Let F = 1,…, : denote the set of all nodes considered at the fog layer

- Let C = 1,…, : denote the set of all nodes considered at the cloud layer

- Let : denote the set of all nodes across all three layers

- Let : denote the set of edge servers

- Let : denote the set of fog servers

- Let : denote the set of cloud servers

- Let : denote the list of all IoT requests/jobs from the various IoT use cases

4.2.2. Network Parameters

- : Total number of nodes installed at the cloud layer

- : Total number of nodes installed at the fog layer

- : Total number of nodes installed at the edge layer

- : Total number of nodes installed in the IoT network

- : Maximum number of edge servers that can be deployed at a single edge node

- : Maximum number of fog servers that can be deployed at a single fog node

- : Maximum number of cloud servers that can be deployed at a single cloud node

- : Parameter denoting location l where an edge node e is installed

- : Parameter denoting location l where a fog node f is installed

- : Parameter denoting location l where a cloud node c is installed

- : Parameter denoting location l where a node has been deployed in the IoT network graph

- : Parameter denoting the number of servers installed at every node in the edge layer

- : Parameter denoting the number of servers installed at every node in the fog layer

- : Parameter denoting the number of servers installed at every node in the cloud layer

- : Boolean parameter; equals 1 if the edge node has an initially deployed active server at the given position, and 0 otherwise

- : Boolean parameter; equals 1 if the fog node has an initially deployed active server at the given position, and 0 otherwise

- : Boolean parameter; equals 1 if the cloud node has an initially deployed active server at the given position, and 0 otherwise

- : Remaining resource/processing capacity at the specific location denoted by l, of an edge node

- : Remaining resource/processing capacity at the specific location denoted by l, of a fog node

- : Remaining resource/processing capacity at the specific location denoted by l, of a cloud node

- : Resource/processing capacity of each server at an edge node at location l

- : Resource/processing capacity of each server at a fog node at location l

- : Resource/processing capacity of each server at a cloud node at location l

- : Bandwidth supported for communication at the specific location denoted by l, of an edge node

- : Bandwidth supported for communication at the specific location denoted by l, of a fog node

- : Bandwidth supported for communication at the specific location denoted by l, of a cloud node

- : Total delay between a pair of nodes in the IoT network

- : Boolean parameter denoting connectivity between nodes in the IoT network architecture; equals 1 if connectivity exists between the pair of nodes, and 0 otherwise

- : Cost of activating an inactive edge node for processing

- : Cost of activating an inactive fog node for processing

- : Cost of activating an inactive server at an edge node

- : Cost of activating an inactive server at a fog node

- : Cost of running a job at the cloud

4.2.3. IoT Application Job Request Parameters

- : denotes the maximum count of IoT request/jobs

- : denotes resource/processing requirement of an IoT request

- : denotes bandwidth requirement of an IoT request

- : denotes latency requirement of an IoT request

- : denotes origin node of an IoT request

4.3. Variables

- : Boolean variable; equals 1 if the job is assigned to the edge node, and 0 otherwise

- : Boolean variable; equals 1 if the job is assigned to the fog node, and 0 otherwise

- : Boolean variable; equals 1 if the job is assigned to the cloud node, and 0 otherwise

- [e]: Boolean variable representing the currently active edge nodes; [e] = 1 if edge node e is active, and [e] = 0 otherwise

- [f]: Boolean variable representing the currently active fog nodes; [f] = 1 if fog node f is active, and [f] = 0 otherwise

- [c]: Boolean variable representing the currently active cloud nodes; [c] = 1 if cloud node c is active, and [c] = 0 otherwise

- : Boolean variable; equals 1 if the edge node has an active server at the given position, and 0 otherwise

- : Boolean variable; equals 1 if the fog node has an active server at the given position, and 0 otherwise

- : Boolean variable; equals 1 if the cloud node has an active server at the given position, and 0 otherwise

4.4. Objective Function of the Framework

4.5. Constraints

4.5.1. Network Constraints

- Each new IoT job/request must be allocated so that it is processed at exactly one node. This constraint is expressed in Equation (2), in which the assignment variables record the node at the edge, fog, or cloud layer to which each job is assigned.

- The count of currently active nodes in the IoT network architecture must not exceed the total count of nodes deployed at each layer. This is expressed in Equations (3)–(5), which bound the number of active nodes by the maximum allowable number of nodes at the edge, fog, and cloud layers, respectively.

- The count of servers activated at each node must not exceed the total count of servers installed at that node. This constraint is defined in Equations (6)–(8), which use the total count of servers installed at each node at the edge, fog, and cloud layers, respectively.
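The three network constraints above can be illustrated with a small feasibility check. This is only a sketch under stated assumptions, not the authors' formulation: the `Layer` class, the function names, and the rule that a node counts as active when it runs at least one server are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Layer:
    """One layer (edge, fog, or cloud) of a hypothetical deployment."""
    installed_nodes: int            # total nodes deployed at this layer
    servers_per_node: int           # servers installed at every node of the layer
    active_servers: Dict[int, int]  # node id -> number of currently active servers


def single_assignment_ok(assignment: Dict[int, List[int]]) -> bool:
    """Each job is processed at exactly one node (cf. Equation (2))."""
    return all(len(nodes) == 1 for nodes in assignment.values())


def active_nodes_ok(layer: Layer) -> bool:
    """Active nodes do not outnumber installed nodes (cf. Equations (3)-(5)).

    Assumption: a node counts as active when it runs at least one server.
    """
    active = sum(1 for count in layer.active_servers.values() if count > 0)
    return active <= layer.installed_nodes


def active_servers_ok(layer: Layer) -> bool:
    """Active servers per node stay within the installed count (cf. Equations (6)-(8))."""
    return all(0 <= count <= layer.servers_per_node
               for count in layer.active_servers.values())


# Hypothetical edge layer: 2 installed nodes with 3 servers each.
edge = Layer(installed_nodes=2, servers_per_node=3, active_servers={12: 2, 13: 0})
print(single_assignment_ok({1: [12], 2: [13]}))
print(active_nodes_ok(edge), active_servers_ok(edge))
```

In an actual ILP these checks would be linear constraints over Boolean variables; the procedural form here is only meant to make the intent of each constraint easy to read.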

4.5.2. Application Constraints

- To verify the delay requirement of each IoT use case, together with the network connectivity between the node where the request originated and the node selected to process it, a constraint is defined in Equations (9)–(11). At every layer, the delay, taken together with the connectivity between the two nodes, cannot exceed the latency requirement of the IoT job request.

- For each request allocated to a node, the allocated node must have enough leftover capacity to process the job, whether at the edge, fog, or cloud layer. The remaining capacity of each edge, fog, and cloud node is given by the remaining-capacity parameters of Section 4.2.2. Equations (12)–(14) uphold the constraint by verifying that the difference between the resource capacity of a node and the resource requirement of a job is non-negative across all three layers.

- A further constraint ensures that the bandwidth supported by the node is adequate to satisfy the job’s bandwidth requirement, considering the count of servers installed at each node in the edge, fog, and cloud layers. It is defined in Equations (15)–(17), respectively. To satisfy the constraint, the job’s required bandwidth is subtracted from the node’s supported bandwidth, and the result must always be a positive integer at each layer.
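The application constraints above amount to a per-job feasibility test for a candidate node. The sketch below is a simplified illustration, not the authors' model: the `Job` and `Node` classes, the dictionary-based delay and connectivity tables, and the assumption that a job processed at its origin node needs no link are all hypothetical. The job values are taken from jobs 1 and 4 of the job table; the node capacities and the delay are made up.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class Job:
    resource: float   # resource/processing requirement
    bandwidth: float  # bandwidth requirement
    latency: float    # latency requirement
    origin: int       # origin node of the request


@dataclass
class Node:
    remaining_capacity: float  # leftover processing capacity of the node
    bandwidth: float           # bandwidth supported by the node


def is_feasible(job: Job, node_id: int, nodes: Dict[int, Node],
                delay: Dict[Tuple[int, int], float],
                connected: Dict[Tuple[int, int], bool]) -> bool:
    """Return True if node_id can host the job under the three checks."""
    node = nodes[node_id]
    link = (job.origin, node_id)
    # Delay and connectivity (cf. Equations (9)-(11)).
    # Assumption: processing at the origin node needs no link and adds no delay.
    if node_id != job.origin:
        if not connected.get(link, False):
            return False
        if delay.get(link, float("inf")) > job.latency:
            return False
    # Capacity: the leftover must be non-negative (cf. Equations (12)-(14)).
    if node.remaining_capacity - job.resource < 0:
        return False
    # Bandwidth: the text asks for a positive difference (cf. Equations (15)-(17)).
    return node.bandwidth - job.bandwidth > 0


# Hypothetical node data; job values follow jobs 1 and 4 of the job table.
nodes = {12: Node(remaining_capacity=50, bandwidth=1200),
         5: Node(remaining_capacity=100, bandwidth=100)}
delay = {(11, 5): 6.0}
connected = {(11, 5): True}
job1 = Job(resource=40, bandwidth=1000, latency=10, origin=12)
job4 = Job(resource=80, bandwidth=60, latency=8, origin=11)
print(is_feasible(job1, 12, nodes, delay, connected))
print(is_feasible(job4, 5, nodes, delay, connected))
```

A solver would evaluate these conditions for every job and candidate node at once; checking them one pair at a time is simply the easiest way to see what each equation group rules out.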

4.5.3. Limits

- The number of jobs allocated to a location at each layer must not exceed the number of activated nodes at that layer. Equations (18)–(20) define this constraint for the edge, fog, and cloud layers and guarantee that, whenever an IoT request is assigned to a node, the corresponding node is active.

- While a new IoT request is processed, the total count of nodes activated at a specific layer must be greater than or equal to the total count of nodes initially installed and activated. Equations (21)–(23) define this constraint for the edge, fog, and cloud layers, ensuring that the total count of activated nodes in each layer is equal to or greater than the total count of nodes initially deployed and activated at that layer.

- The total count of servers activated at each node of a given layer for processing the incoming IoT job requests must not exceed the total count of nodes already active at that layer. Equations (24)–(26) define this constraint for the edge, fog, and cloud layers, ensuring that the activated servers at each layer cannot exceed the count of nodes already installed and active at that layer.

- The number of servers activated at each node in a layer for processing the incoming IoT requests must not fall below the number of servers already active at that location. Equations (27)–(29) enforce this constraint: the number of servers activated at the edge, fog, and cloud layers must be greater than or equal to the number of servers active during the initial deployment, respectively.
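Two of the limits above can be sketched as simple checks: jobs may only be placed on active nodes, and activation counts may not drop below their initial values. The function names and the dictionary layout are hypothetical, and the second check assumes the "greater than or equal to the initial deployment" reading of Equations (21)–(23) and (27)–(29); the sample numbers are made up.

```python
from typing import Dict, Set


def assigned_nodes_active(assignment: Dict[int, int], active_nodes: Set[int]) -> bool:
    """Every node that receives a job must be active (cf. Equations (18)-(20)).

    assignment maps a job id to the id of the node selected for it.
    """
    return all(node in active_nodes for node in assignment.values())


def activation_not_reduced(initial: Dict[str, int], current: Dict[str, int]) -> bool:
    """Per-layer activation counts never drop below the initial deployment
    (assumed reading of Equations (21)-(23) and (27)-(29))."""
    return all(current.get(layer, 0) >= count for layer, count in initial.items())


# Hypothetical state: two jobs placed, three nodes active.
assignment = {1: 12, 2: 13}
active = {12, 13, 20}
initial_counts = {"edge": 2, "fog": 1, "cloud": 1}
current_counts = {"edge": 3, "fog": 1, "cloud": 1}
print(assigned_nodes_active(assignment, active))
print(activation_not_reduced(initial_counts, current_counts))
```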

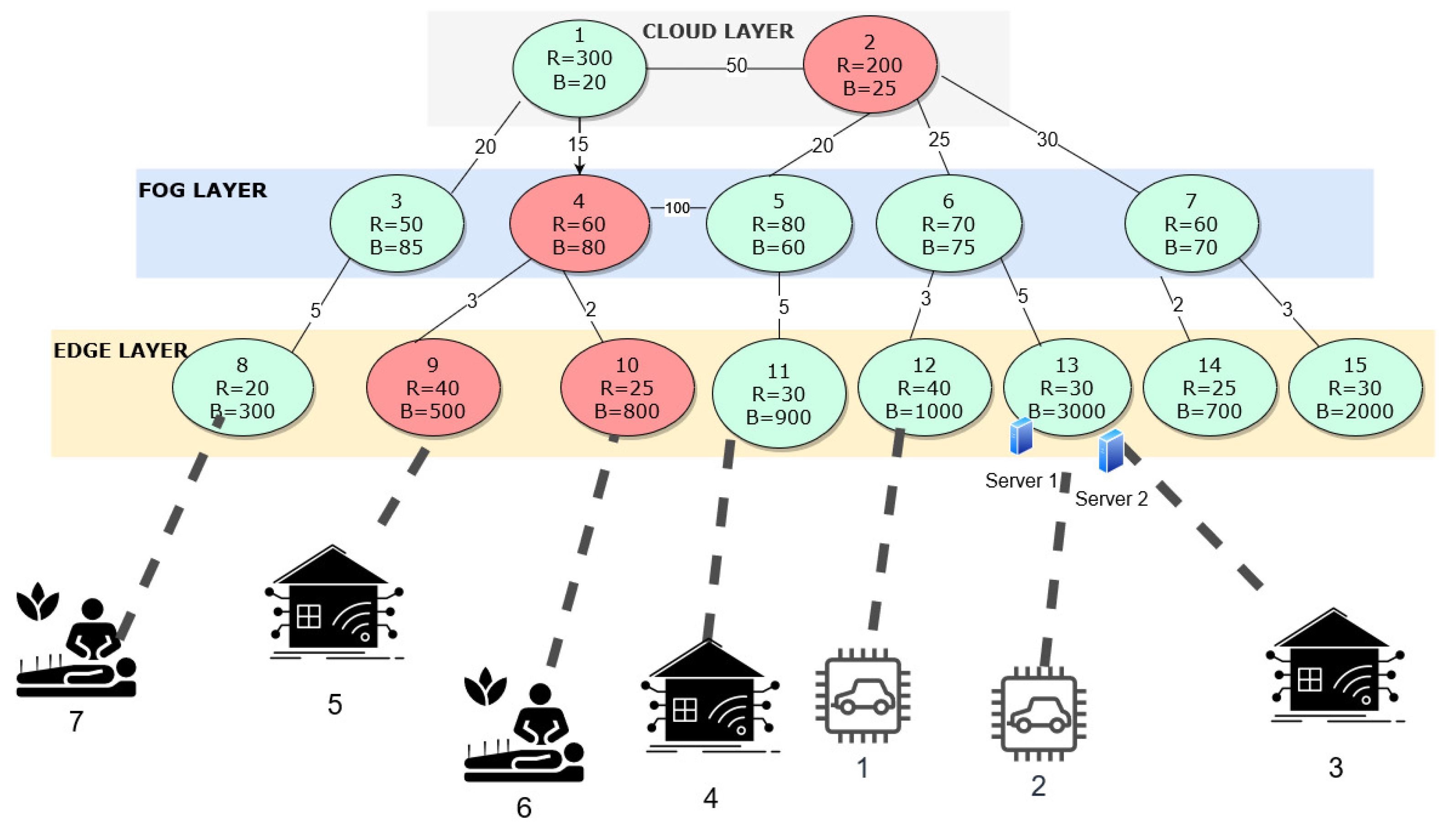

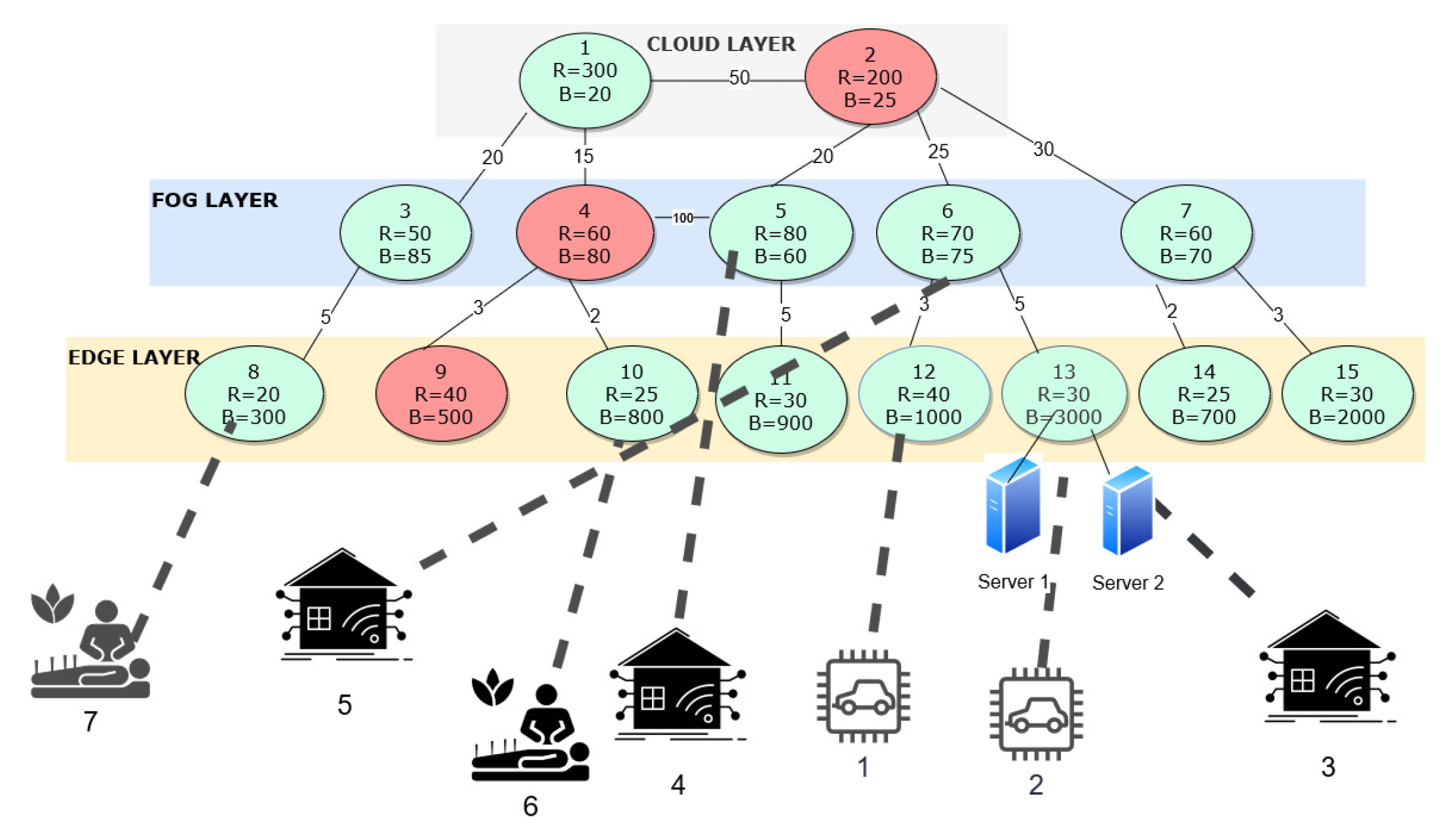

5. Framework Evaluation

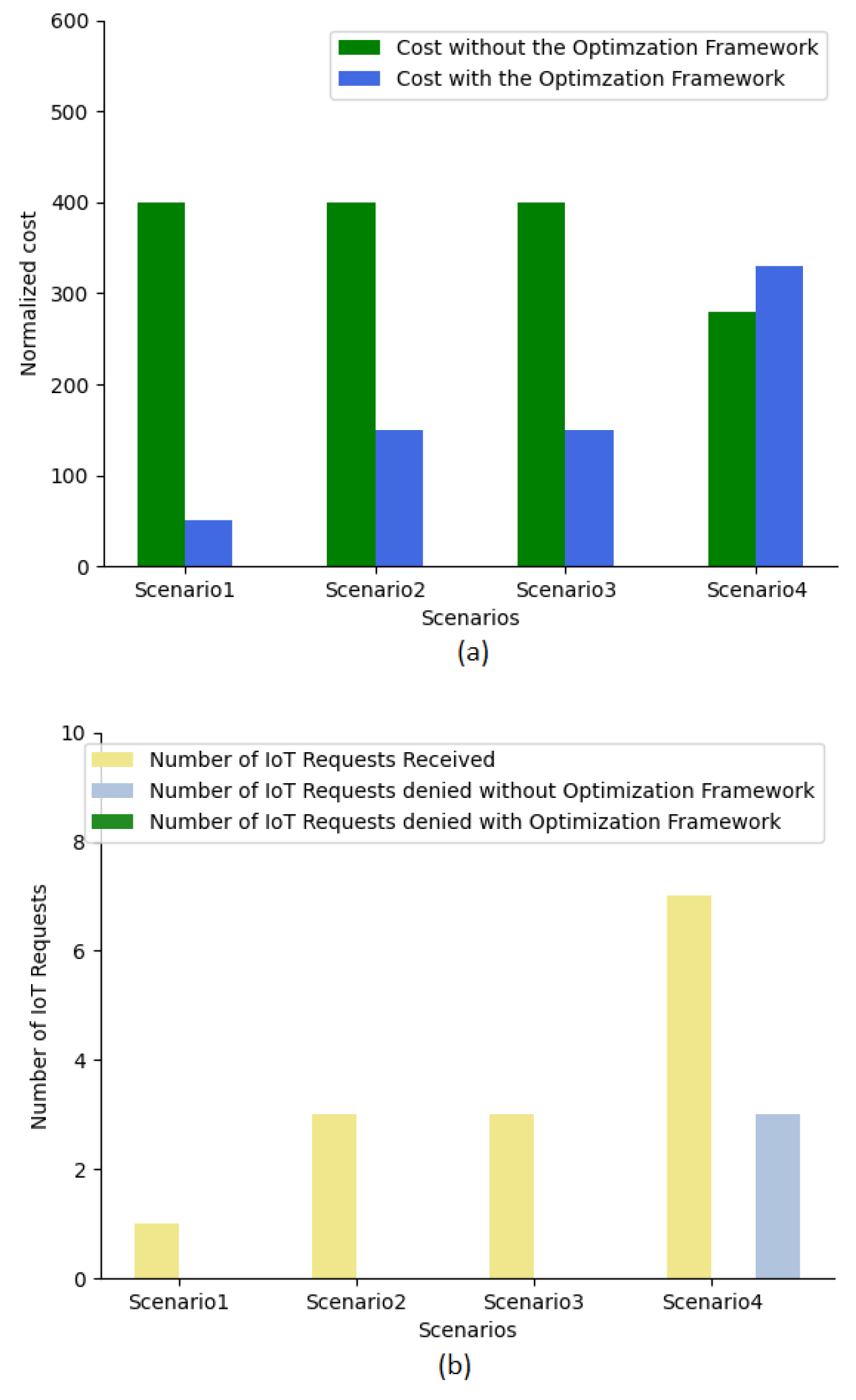

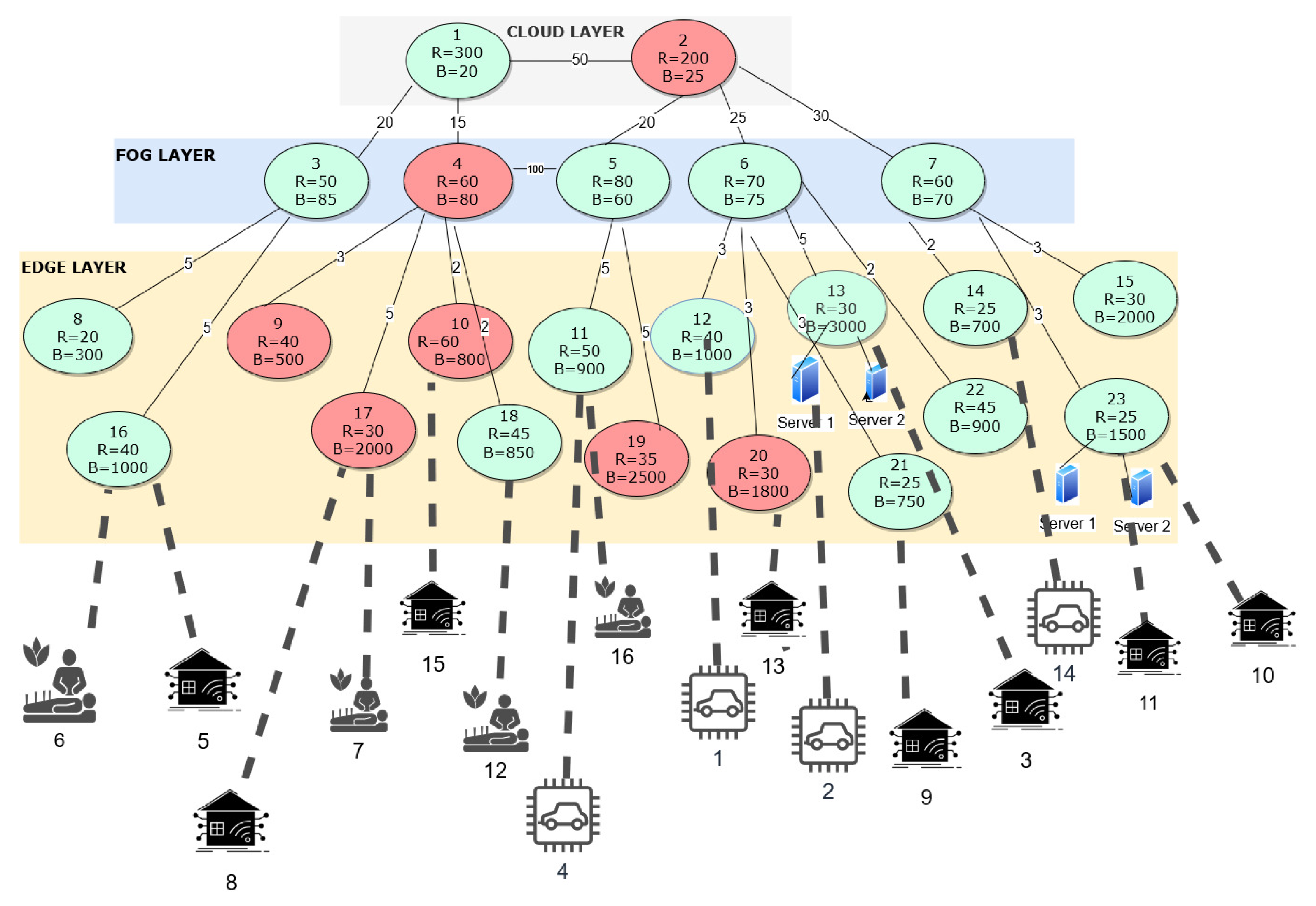

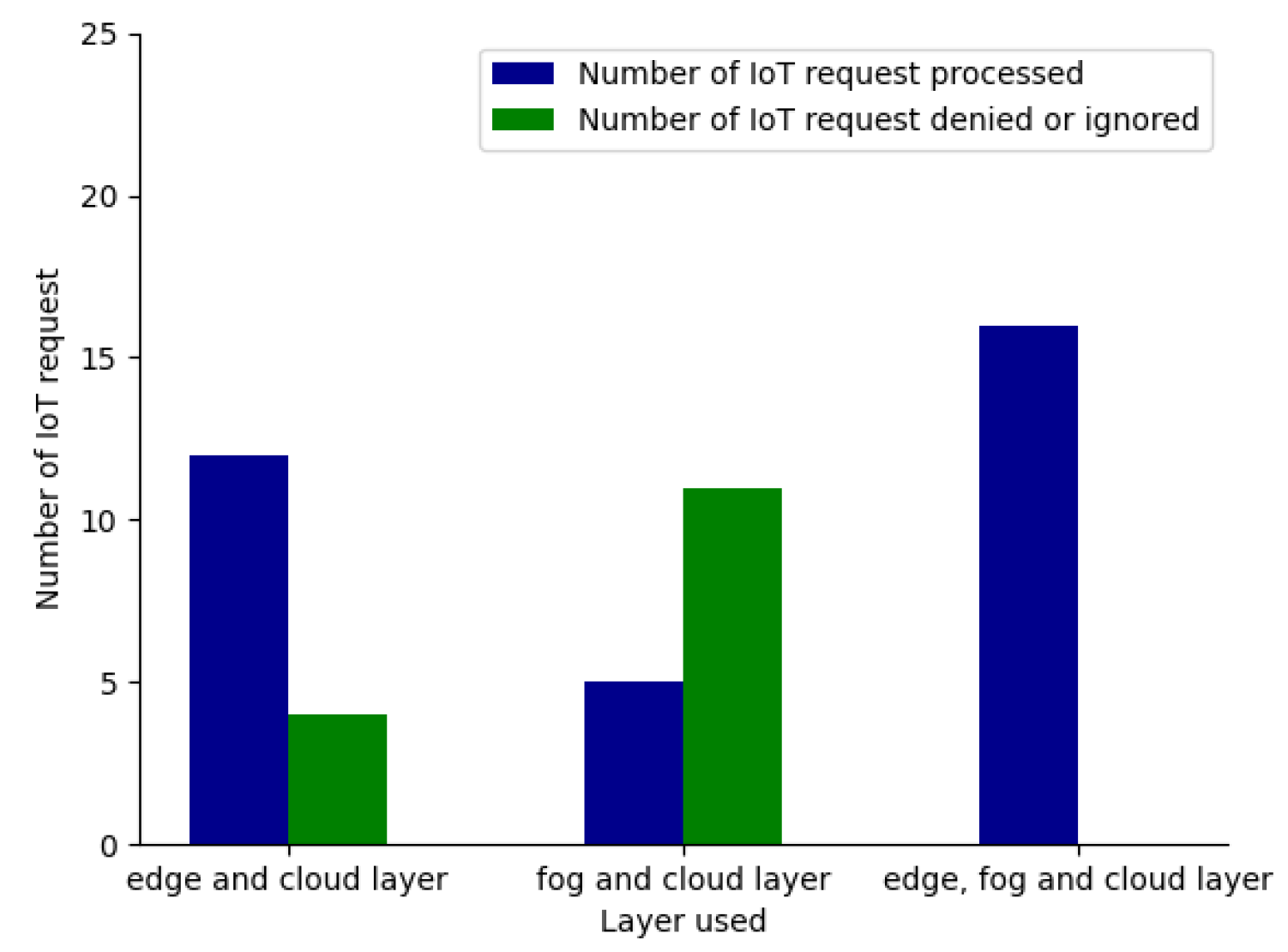

5.1. Optimal Solutions of Experiment 1

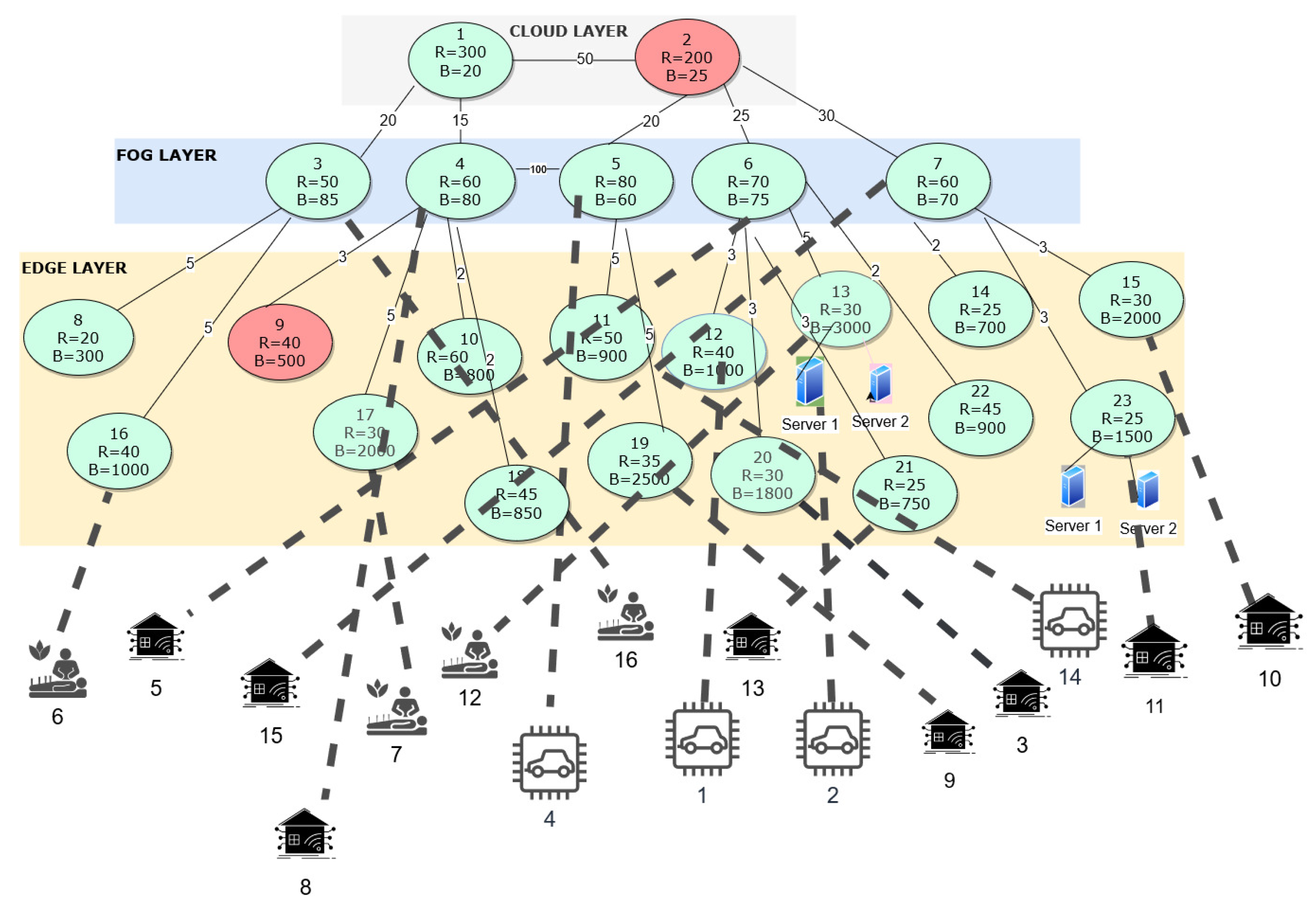

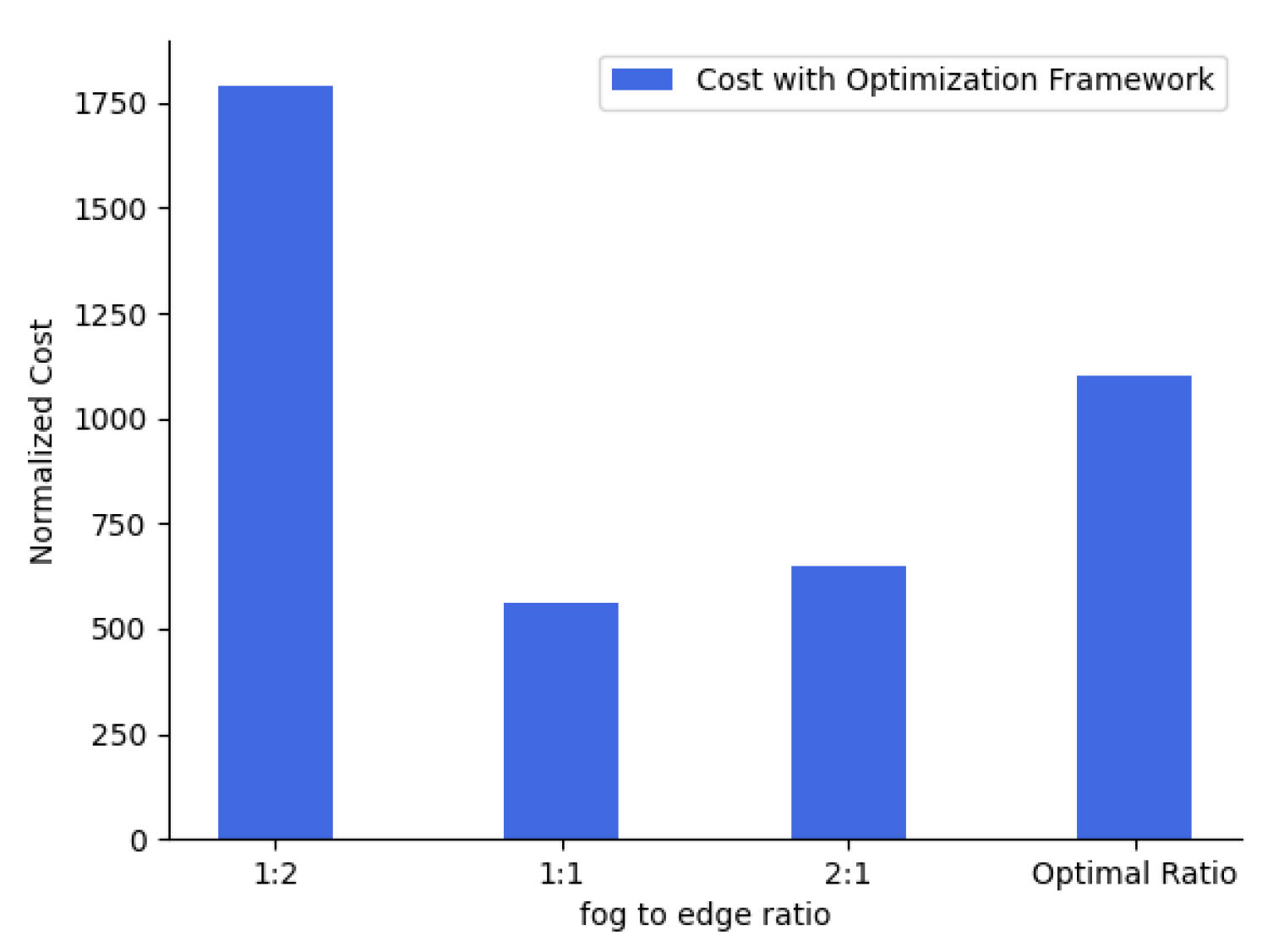

5.2. Optimal Solutions of Experiment 2

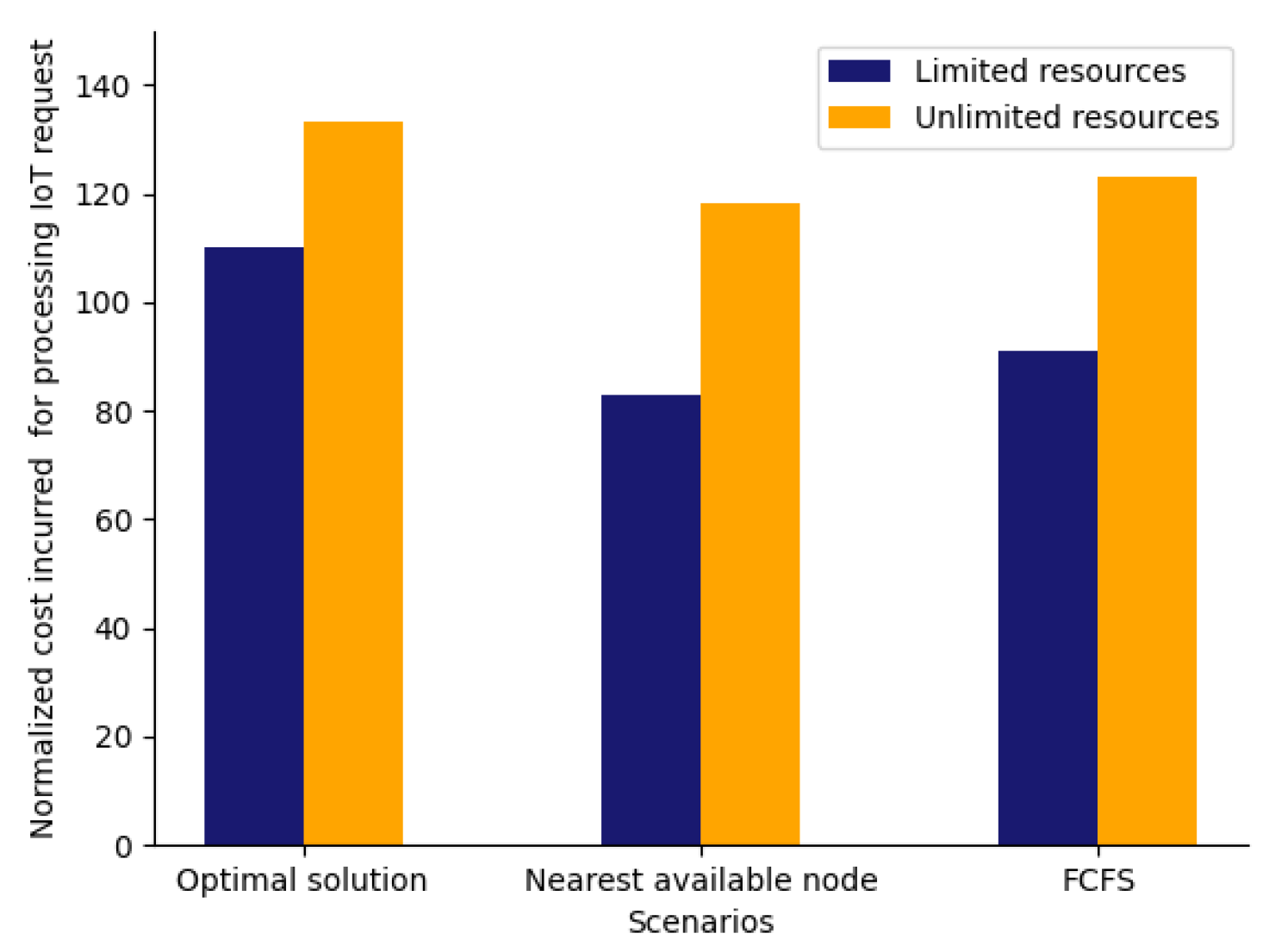

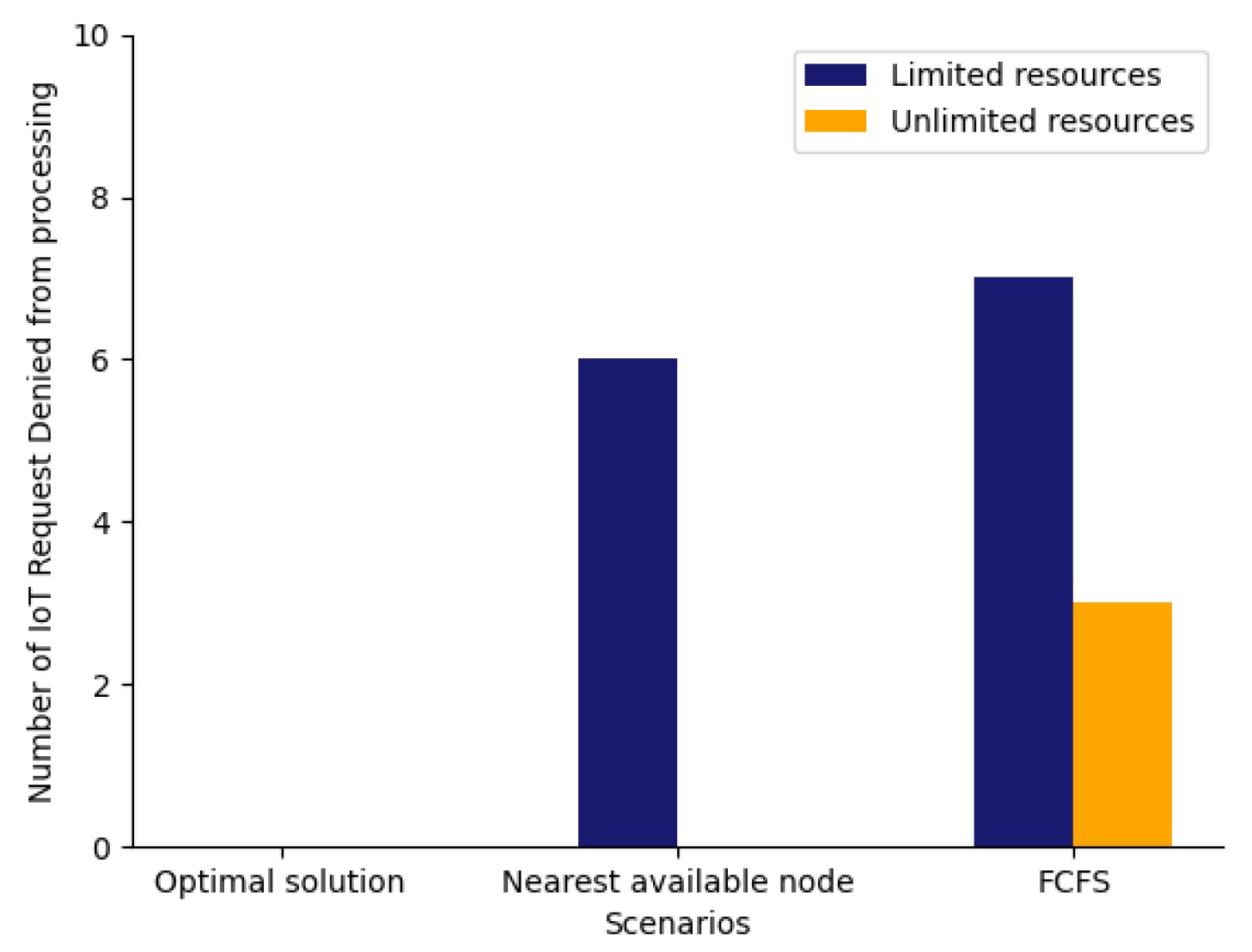

5.2.1. Scenario 0: Optimal Solution

5.2.2. Scenario 1: Cost Sensitivity

5.2.3. Scenario 2: Single Layer Deployed (Either Edge/Fog Layer)

5.2.4. Scenario 3: Allocation of IoT Requests on Basis of First Come First Serve (FCFS)

5.2.5. Scenario 4: Allocation Based on Nearest Available Node

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Edirisinghe, S.; Galagedarage, O.; Dias, I.; Ranaweera, C. Recent Development of Emerging Indoor Wireless Networks towards 6G. Network 2023, 3, 269–297.

2. Buzachis, A.; Galletta, A.; Celesti, A.; Fazio, M.; Villari, M. Development of a Smart Metering Microservice Based on Fast Fourier Transform (FFT) for Edge/Internet of Things Environments. In Proceedings of the 2019 IEEE 3rd International Conference on Fog and Edge Computing (ICFEC), Larnaca, Cyprus, 14–17 May 2019; pp. 1–6.

3. Hu, P.; Chen, W.; He, C.; Li, Y.; Ning, H. Software-Defined Edge Computing (SDEC): Principle, Open IoT System Architecture, Applications, and Challenges. IEEE Internet Things J. 2020, 7, 5934–5945.

4. Gupta, H.; Vahid Dastjerdi, A.; Ghosh, S.K.; Buyya, R. iFogSim: A toolkit for modeling and simulation of resource management techniques in the Internet of Things, Edge and Fog computing environments. Softw. Pract. Exp. 2017, 47, 1275–1296.

5. Buyya, R.; Srirama, S.N. Modeling and Simulation of Fog and Edge Computing Environments Using iFogSim Toolkit. In Fog and Edge Computing: Principles and Paradigms; Wiley Telecom: Hoboken, NJ, USA, 2019; pp. 433–465.

6. Yang, H.; Alphones, A.; Zhong, W.D.; Chen, C.; Xie, X. Learning-based energy-efficient resource management by heterogeneous RF/VLC for ultra-reliable low-latency industrial IoT networks. IEEE Trans. Ind. Inform. 2019, 16, 5565–5576.

7. Mouradian, C.; Ebrahimnezhad, F.; Jebbar, Y.; Ahluwalia, J.; Afrasiabi, S.N.; Glitho, R.H.; Moghe, A.R. An IoT Platform-as-a-Service for NFV-Based Hybrid Cloud/Fog Systems. IEEE Internet Things J. 2020, 7, 6102–6115.

8. Ranaweera, C.; Kua, J.; Dias, I.; Wong, E.; Lim, C.; Nirmalathas, A. 4G to 6G: Disruptions and drivers for optical access (Invited). IEEE/OSA J. Opt. Commun. Netw. 2022, 14, A143–A153.

9. Zerifi, M.; Ezzouhairi, A.; Boulaalam, A. Overview on SDN and NFV based architectures for IoT environments: Challenges and solutions. In Proceedings of the 2020 Fourth International Conference On Intelligent Computing in Data Sciences (ICDS), Fez, Morocco, 21–23 October 2020; pp. 1–5.

10. Bouras, M.A.; Farha, F.; Ning, H. Convergence of computing, communication, and caching in Internet of Things. Intell. Converg. Netw. 2020, 1, 18–36.

11. Dias, I.; Ruan, L.; Ranaweera, C.; Wong, E. From 5G to beyond: Passive optical network and multi-access edge computing integration for latency-sensitive applications. J. Opt. Fiber Technol. 2023, 75, 103191.

12. Lin, K.; Li, Y.; Zhang, Q.; Fortino, G. AI-Driven Collaborative Resource Allocation for Task Execution in 6G Enabled Massive IoT. IEEE Internet Things J. 2021, 8, 5264–5273.

13. Malik, U.M.; Javed, M.A.; Zeadally, S.; ul Islam, S. Energy efficient fog computing for 6G enabled massive IoT: Recent trends and future opportunities. IEEE Internet Things J. 2021, 9, 14572–14594.

14. Hussein, M.; Mousa, M. Efficient Task Offloading for IoT-Based Applications in Fog Computing Using Ant Colony Optimization. IEEE Access 2020, 8, 37191–37201.

15. Cong, R.; Zhao, Z.; Min, G.; Feng, C.; Jiang, Y. EdgeGO: A Mobile Resource-sharing Framework for 6G Edge Computing in Massive IoT Systems. IEEE Internet Things J. 2021, 9, 14521–14529.

16. Maiti, P.; Shukla, J.; Sahoo, B.; Turuk, A.K. QoS-aware fog nodes placement. In Proceedings of the 2018 4th International Conference on Recent Advances in Information Technology (RAIT), Dhanbad, India, 15–17 March 2018; pp. 1–6.

17. Li, Y.; Zhang, Y.; Liu, Y.; Meng, Q.; Tian, F. Fog Node Selection for Low Latency Communication and Anomaly Detection in Fog Networks. In Proceedings of the 2019 International Conference on Communications, Information System and Computer Engineering (CISCE), Haikou, China, 5–7 July 2019; pp. 276–279.

18. Manogaran, G.; Rawal, B.S. An Efficient Resource Allocation Scheme with Optimal Node Placement in IoT-Fog-Cloud Architecture. IEEE Sens. J. 2021, 21, 25106–25113.

19. Goudarzi, M.; Wu, H.; Palaniswami, M.; Buyya, R. An Application Placement Technique for Concurrent IoT Applications in Edge and Fog Computing Environments. IEEE Trans. Mob. Comput. 2021, 20, 1298–1311.

20. Guerrero, C.; Lera, I.; Juiz, C. A lightweight decentralized service placement policy for performance optimization in fog computing. J. Ambient. Intell. Humaniz. Comput. 2019, 10, 2435–2452.

21. Ottenwälder, B.; Koldehofe, B.; Rothermel, K.; Ramachandran, U. Migcep: Operator migration for mobility driven distributed complex event processing. In Proceedings of the 7th ACM International Conference on Distributed Event-Based Systems, Arlington, VA, USA, 29 June–3 July 2013; pp. 183–194.

22. Mebrek, A.; Esseghir, M.; Merghem-Boulahia, L. Energy-Efficient Solution Based on Reinforcement Learning Approach in Fog Networks. In Proceedings of the 2019 15th International Wireless Communications Mobile Computing Conference (IWCMC), Tangier, Morocco, 24–28 June 2019; pp. 2019–2024.

23. El Kafhali, S.; Salah, K. Efficient and Dynamic Scaling of Fog Nodes for IoT Devices. J. Supercomput. 2017, 73, 5261–5284.

24. Fereira, R.; Ranaweera, C.; Schneider, J.G.; Lee, K. Optimal Node Selection in Communication and Computation Converged IoT Network. In Proceedings of the 2022 IEEE Intl Conf on Parallel and Distributed Processing with Applications, Big Data and Cloud Computing, Sustainable Computing and Communications, Social Computing and Networking (ISPA/BDCloud/SocialCom/SustainCom), Melbourne, Australia, 17–19 December 2022; pp. 539–547.

25. Rahman, T.; Yao, X.; Tao, G.; Ning, H.; Zhou, Z. Efficient Edge Nodes Reconfiguration and Selection for the Internet of Things. IEEE Sens. J. 2019, 19, 4672–4679.

26. Aboalnaser, S.A. Energy–Aware Task Allocation Algorithm Based on Transitive Cluster-Head Selection for IoT Networks. In Proceedings of the 2019 12th International Conference on Developments in eSystems Engineering (DeSE), Kazan, Russia, 7–10 October 2019; pp. 176–179.

27. Zhu, X.; Chen, S.; Chen, S.; Yang, G. Energy and delay co-aware computation offloading with deep learning in fog computing networks. In Proceedings of the 2019 IEEE 38th International Performance Computing and Communications Conference (IPCCC), London, UK, 29–31 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6.

28. Wang, S.; Zafer, M.; Leung, K.K. Online placement of multi-component applications in edge computing environments. IEEE Access 2017, 5, 2514–2533.

29. Taneja, M.; Davy, A. Resource aware placement of IoT application modules in Fog-Cloud Computing Paradigm. In Proceedings of the 2017 IFIP/IEEE Symposium on Integrated Network and Service Management (IM), Lisbon, Portugal, 8–12 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1222–1228.

30. Pan, J.; Zhang, Y.; Wang, Q.; Yan, D.; Zhang, W. A Novel Fog Node Aggregation Approach for Users in Fog Computing Environment. In Proceedings of the 2020 IEEE Intl Conf on Dependable, Autonomic and Secure Computing, Intl Conf on Pervasive Intelligence and Computing, Intl Conf on Cloud and Big Data Computing, Intl Conf on Cyber Science and Technology Congress (DASC/PiCom/CBDCom/CyberSciTech), Calgary, AB, Canada, 17–22 August 2020; pp. 160–167.

31. Muleta, N.; Badar, A.Q.H. Study of Energy Management System and IOT Integration in Smart Grid. In Proceedings of the 2021 1st International Conference on Power Electronics and Energy (ICPEE), Bhubaneswar, India, 2–3 January 2021; pp. 1–5.

32. Ranaweera, C.; Wong, E.; Lim, C.; Nirmalathas, A. Quality of service assurance in EPON-WiMAX converged network. In Proceedings of the 2011 International Topical Meeting on Microwave Photonics jointly held with the 2011 Asia-Pacific Microwave Photonics Conference, Singapore, 18–21 October 2011; pp. 369–372.

33. Ding, J.; Nemati, M.; Ranaweera, C.; Choi, J. IoT Connectivity Technologies and Applications: A Survey. IEEE Access 2020, 8, 67646–67673.

34. Nirmalathas, A.; Song, T.; Edirisinghe, S.; Wang, K.; Lim, C.; Wong, E.; Ranaweera, C.; Alameh, K. Indoor optical wireless access networks-recent progress (Invited). IEEE/OSA J. Opt. Commun. Netw. 2021, 13, A178–A186.

35. Ranaweera, C.; Nirmalathas, A.; Wong, E.; Lim, C.; Monti, P.; Marija Furdek, L.W.; Skubic, B.; Machuca, C.M. Rethinking of optical transport network design for 5G/6G mobile communication. IEEE Future Netw. Tech Focus 2021. Available online: https://futurenetworks.ieee.org/tech-focus/april-2021/rethinking-of-optical-transport-network-design-for-5g-6g-mobile-communication (accessed on 26 June 2023).

36. Gupta, N.; Sharma, S.; Juneja, P.K.; Garg, U. SDNFV 5G-IoT: A Framework for the Next Generation 5G enabled IoT. In Proceedings of the 2020 International Conference on Advances in Computing, Communication Materials (ICACCM), Dehradun, India, 21–22 August 2020; pp. 289–294.

37. Ranaweera, C.; Monti, P.; Skubic, B.; Furdek, M.; Wosinska, L.; Nirmalathas, A.; Lim, C.; Wong, E. Optical X-haul options for 5G fixed wireless access: Which one to choose? In Proceedings of the IEEE Conference on Computer Communications (INFOCOM) Workshops, Honolulu, HI, USA, 16–19 April 2018; pp. 1–2.

38. Ranaweera, C.; Wong, E.; Lim, C.; Jayasundara, C.; Nirmalathas, A. Optimal design and backhauling of small-cell network: Implication of energy cost. In Proceedings of the 2016 21st OptoElectronics and Communications Conference (OECC) held jointly with 2016 International Conference on Photonics in Switching (PS), Niigata, Japan, 3–7 July 2016; pp. 1–3.

39. Isa, I.S.B.M.; El-Gorashi, T.E.H.; Musa, M.O.I.; Elmirghani, J.M.H. Energy Efficient Fog-Based Healthcare Monitoring Infrastructure. IEEE Access 2020, 8, 197828–197852.

40. Yu, Y.; Ranaweera, C.; Lim, C.; Wong, E.; Guo, L.; Liu, Y.; Nirmalathas, A. Optimization and Deployment of Survivable Fiber-Wireless (FiWi) Access Networks with Integrated Small Cell and WiFi. In Proceedings of the 2015 IEEE International Conference on Ubiquitous Wireless Broadband (ICUWB), Montreal, QC, Canada, 4–7 October 2015; pp. 1–5.

41. Ranaweera, C.; Monti, P.; Skubic, B.; Wong, E.; Furdek, M.; Wosinska, L.; Machuca, C.M.; Nirmalathas, A.; Lim, C. Optical Transport Network Design for 5G Fixed Wireless Access. J. Light. Technol. 2019, 37, 3893–3901.

| Ref. | Problem | Technique | Control Parameters | Outcome |
|---|---|---|---|---|
| [6] | Selecting radio frequency based visible light communication as an access point and meet the required QoS. | Markov determination procedure and replay of experience post determination and reinforcement learning methodology | Usage status of sub channel, quality, application types | Achieved energy efficiency with required data rate via network and sub channel selection |
| [27] | Allocation of energy efficient and delay restricted resource in fog | Deployment of application using Poly-time algorithm | Delay | Energy and time optimization |
| [30] | Fog computing being geographically distributed near end-users and restricted to sufficient services because of resource limitations | Linear programming | Low rental cost, minimum data | Resource optimization using collation of fog nodes and deployment of virtual machine |
| [4] | Deployment location for resource and application component in cloud-fog environment | Resource management layer using application placement and scheduling | Latency, network congestion, energy consumption and cost | Application placement optimization in IoT using edge and fog based architecture |
| [5] | Selection of suitable location for application module in fog-edge environment | ILP, analytical modelling, resource management framework | Delay, latency, energy usage | Module placement optimization in IoT using fog and edge based architecture |
| [29] | Ideal location in fog-cloud environment for application component | Module mapping algorithm | Delay, network usage, energy | Module placement optimization in IoT using fog and cloud based architecture |
| [28] | Suitable location in mobile-edge clouds for application or workload processing | Online approximation algorithms with polynomial-logarithmic (poly-log) competitive ratio for tree application graph placement | Latency, energy consumption, resource utilization | Workload placement optimization in IoT using edge cloud based architecture |

| Use Case | Metric | Value |
|---|---|---|
| Smart Grid | Delay between devices | 1 ms–10 ms |
| | Latency (end-to-end) | 1 ms |
| | Teleprotection | ≥10 ms |
| | Synchrophasor applications | ≈20 ms |
| | SCADA and VoIP applications | 100–200 ms |
| | Smart metering and others | up to a few seconds |
| | Bandwidth/throughput | 5–10 Mbps for one control area and 25–75 Mbps for inter control |
| | Data rates/transmission rate | 56 kbps–1 Mbps |
| | Reliability/availability | 99–99.99% |
| Autonomous Vehicle | Delay between devices | ≈1 ms |
| | Latency (end-to-end) | ≈1 ms |
| | Bandwidth/throughput | 512 Gbps–1024 Gbps |
| | Data rates/transmission rate | 10–24 Gbps |
| | Reliability/availability | 99.99–100% |
| e-Health | Delay between devices | 1 ms–25 ms |
| | Latency (end-to-end) | 1 ms–250 ms |
| | Bandwidth/throughput | 5 Gbps–512 Gbps |
| | Data rates/transmission rate | 5 Gbps–10 Gbps |
| | Reliability/availability | 99.99–100% |

| Job Number | Job Resource Requirement | Job Bandwidth | Job Latency | Job Origin | Optimally Selected Node |
|---|---|---|---|---|---|
| 1 | 40 | 1000 | 10 | 12 | 12 |
| 2 | 30 | 1100 | 6 | 13 | 13 |
| 3 | 30 | 1100 | 65 | 13 | 20 |
| 4 | 80 | 60 | 8 | 11 | 5 |
| 5 | 60 | 70 | 100 | 16 | 6 |
| 6 | 40 | 1000 | 10 | 16 | 16 |
| 7 | 30 | 2000 | 10 | 17 | 17 |
| 8 | 60 | 80 | 8 | 17 | 4 |
| 9 | 35 | 2500 | 65 | 21 | 19 |
| 10 | 30 | 2000 | 65 | 23 | 15 |
| 11 | 25 | 1500 | 1 | 23 | 23 |
| 12 | 25 | 1500 | 110 | 18 | 13 |
| 13 | 25 | 750 | 6 | 20 | 21 |
| 14 | 45 | 900 | 60 | 14 | 11 |
| 15 | 60 | 70 | 100 | 10 | 7 |
| 16 | 50 | 85 | 100 | 11 | 3 |
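As a small reading aid for the job table, the sketch below loads its rows and lists the jobs whose optimally selected node equals their origin node. The tuple layout and function names are illustrative assumptions; only the numbers come from the table. Run on these rows, it shows that five of the 16 jobs (1, 2, 6, 7, and 11) are processed where they originate, while the rest are placed on another node.

```python
# Rows copied from the job table:
# (job, resource requirement, bandwidth, latency, origin node, selected node)
JOBS = [
    (1, 40, 1000, 10, 12, 12),
    (2, 30, 1100, 6, 13, 13),
    (3, 30, 1100, 65, 13, 20),
    (4, 80, 60, 8, 11, 5),
    (5, 60, 70, 100, 16, 6),
    (6, 40, 1000, 10, 16, 16),
    (7, 30, 2000, 10, 17, 17),
    (8, 60, 80, 8, 17, 4),
    (9, 35, 2500, 65, 21, 19),
    (10, 30, 2000, 65, 23, 15),
    (11, 25, 1500, 1, 23, 23),
    (12, 25, 1500, 110, 18, 13),
    (13, 25, 750, 6, 20, 21),
    (14, 45, 900, 60, 14, 11),
    (15, 60, 70, 100, 10, 7),
    (16, 50, 85, 100, 11, 3),
]


def processed_at_origin(jobs):
    """Return the job numbers whose selected node is their origin node."""
    return [job for job, _res, _bw, _lat, origin, selected in jobs
            if origin == selected]


def total_resource_demand(jobs):
    """Sum the resource requirement column over all jobs."""
    return sum(res for _job, res, *_rest in jobs)


local_jobs = processed_at_origin(JOBS)
print(local_jobs)
print(len(local_jobs), "of", len(JOBS), "jobs stay at their origin node")
print(total_resource_demand(JOBS))
```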

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fereira, R.; Ranaweera, C.; Lee, K.; Schneider, J.-G. Energy Efficient Node Selection in Edge-Fog-Cloud Layered IoT Architecture. Sensors 2023, 23, 6039. https://doi.org/10.3390/s23136039
