Diversifying Emotional Dialogue Generation via Selective Adversarial Training

Abstract

1. Introduction

- More natural communication: Emotions are an important part of human communication. When dialogue systems can understand and express emotions, they can more accurately capture and respond to users’ emotional expressions, making conversations more natural and human-like.

- Emotion recognition: By understanding the user’s emotions, the dialogue system can better understand the user’s intentions and needs. Emotion recognition helps to parse user input more precisely and provide responses and support based on emotional information.

- Emotional support: The dialogue system can express emotions and provide users with emotional support and help with emotion management. When users need reassurance, encouragement, or understanding, the system’s emotional expression can have a positive impact and foster an emotional connection.

- Improvement of user experience: Emotion plays an important role in user experience. When the dialogue system is able to recognize and respond to the user’s emotions, the user feels understood and cared for, which improves the user experience and increases user satisfaction with the dialogue system.

- Emotion research and application: The ability of dialogue systems to understand and express emotions also benefits emotion research and its applications. For example, dialogue systems can serve as an experimental platform and tool for research on affective computing, affective analysis, and affective intelligence.

- We propose a selective disturbance module that uses a disturbance word selector to perturb a subset of the response words based on learned latent variables, thereby improving response diversity.

- We introduce a global emotion label constraint to control the impact of perturbations during decoding, ensuring that the model improves response diversity while maintaining emotional expression.

- Extensive experiments on two standard datasets demonstrate that our model generates more diverse emotional responses than the baselines.
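The contributions above do not specify how words are selected or how the emotion constraint enters the training objective. As an illustration only, the following pure-Python sketch shows one plausible reading under explicit assumptions: a hypothetical selector scores each response word by the dot product of its embedding with the latent vector `z`, the top `ratio` fraction of words is kept, and only those words' embeddings receive an FGM-style adversarial perturbation in the spirit of Miyato et al. (listed in the references). A cross-entropy term on a predicted response emotion stands in for the emotion label constraint, weighted by a hypothetical `lam`. None of the function names, the scoring rule, or the weighting come from the paper.

```python
import math

def selector_scores(embeddings, z):
    # Hypothetical selector: score each response word by the dot product of its
    # embedding with the latent vector z (an assumption, not the paper's formula).
    return [sum(e_j * z_j for e_j, z_j in zip(e, z)) for e in embeddings]

def select_top_k(scores, ratio):
    # Keep the ceil(ratio * n) highest-scoring positions (at least one),
    # returned in sentence order.
    k = max(1, math.ceil(ratio * len(scores)))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:k])

def fgm_perturb(embeddings, grads, selected, eps):
    # FGM-style perturbation r_i = eps * g_i / ||g_i||_2, applied only to the
    # selected words. grads[i] is assumed to be the loss gradient w.r.t. the
    # i-th word embedding, computed elsewhere. Normalising per word is a
    # simplification; Miyato et al. normalise over the whole sequence.
    out = [list(e) for e in embeddings]  # unselected words are copied unchanged
    for i in selected:
        norm = math.sqrt(sum(g * g for g in grads[i])) or 1.0  # avoid division by zero
        out[i] = [e + eps * g / norm for e, g in zip(embeddings[i], grads[i])]
    return out

def emotion_constraint(emotion_probs, target):
    # Cross-entropy between a (hypothetical) response-level emotion distribution
    # and the index of the target emotion label.
    return -math.log(emotion_probs[target])

def total_loss(nll, emotion_probs, target, lam):
    # Generation NLL plus the emotion-label term, weighted by a hypothetical lam.
    return nll + lam * emotion_constraint(emotion_probs, target)

if __name__ == "__main__":
    emb = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.0]]  # toy 2-d embeddings
    z = [1.0, 0.2]                                           # toy latent vector
    selected = select_top_k(selector_scores(emb, z), ratio=0.5)
    print(selected)  # → [0, 2]
    grads = [[3.0, 4.0], [1.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
    print(fgm_perturb(emb, grads, selected, eps=0.5))
    print(total_loss(2.0, [0.1, 0.8, 0.1], target=1, lam=0.5))
```

Restricting the perturbation to a selected subset is what makes it "selective": the remaining words keep their clean embeddings, while the emotion term penalizes outputs that drift from the target emotion. This mirrors the trade-off in the ablation table, where removing the emotion constraint (w/o EC) raises Dist-1/Dist-2 but lowers emotion accuracy.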

2. Related Works

2.1. Emotional Response Generation

2.2. Response Diversity

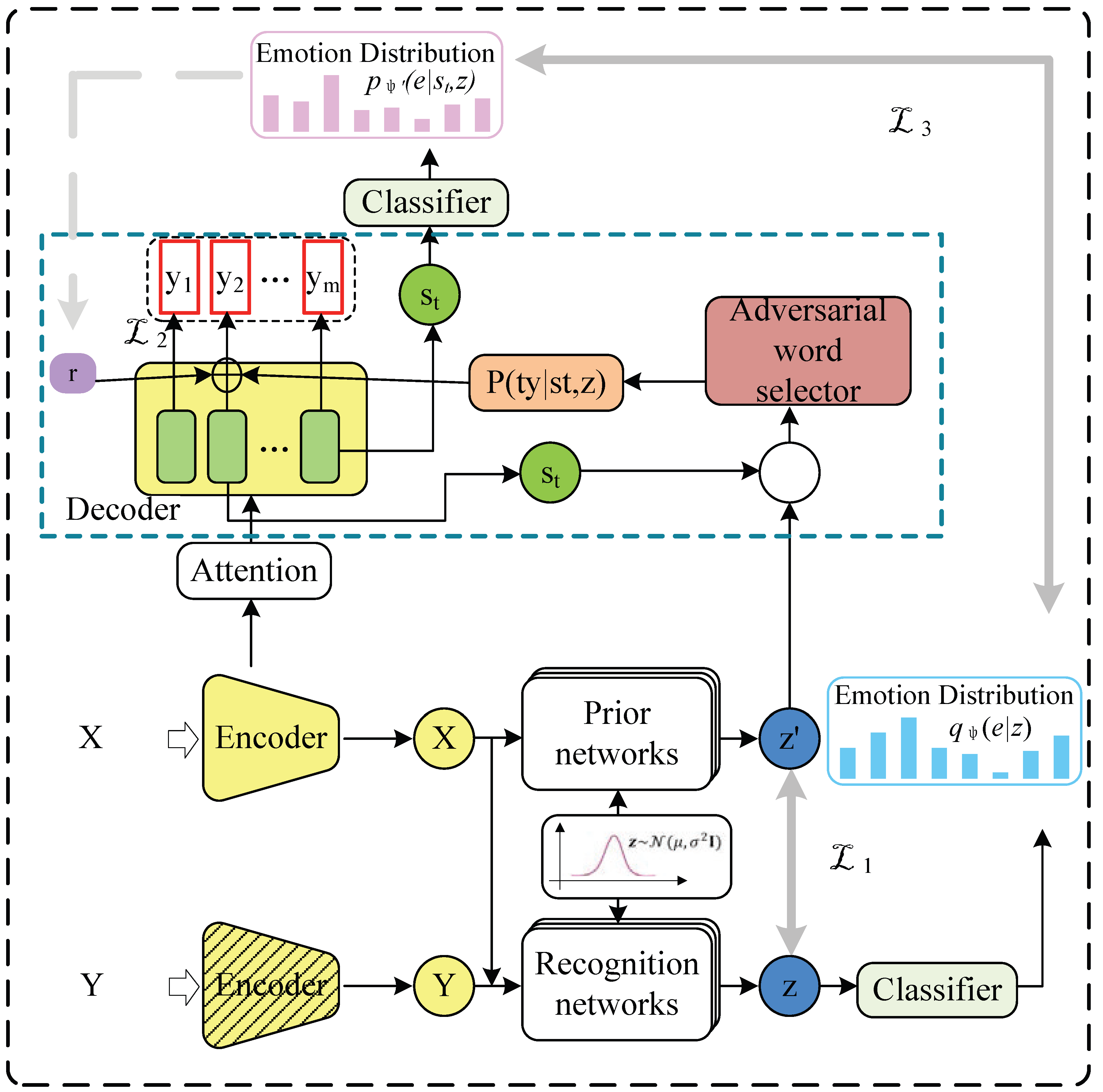

3. Approach

3.1. Formalized Definition

3.2. Model Framework

3.3. Basic Encoder-Decoder

3.4. Latent Variable Learning

3.5. Adversarial Word Selector

3.6. Selective Adversarial Decoding

3.7. Emotional Label Constraint

3.8. Loss

4. Experiments

4.1. Datasets

4.2. Baselines

4.3. Settings

4.4. Automatic Evaluation

4.5. Manual Evaluation

4.6. Ablation Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Partala, T.; Surakka, V. The effects of affective interventions in human-computer interaction. Interact. Comput. 2004, 16, 295–309.

2. Prendinger, H.; Mori, J.; Ishizuka, M. Using human physiology to evaluate subtle expressivity of a virtual quizmaster in a mathematical game. Int. J. Hum. Comput. Stud. 2005, 62, 231–245.

3. Zhou, H.; Huang, M.; Zhang, T.; Zhu, X.; Liu, B. Emotional Chatting Machine: Emotional Conversation Generation with Internal and External Memory. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence (AAAI-18), the 30th Innovative Applications of Artificial Intelligence (IAAI-18), and the 8th AAAI Symposium on Educational Advances in Artificial Intelligence (EAAI-18), New Orleans, LA, USA, 2–7 February 2018; McIlraith, S.A., Weinberger, K.Q., Eds.; AAAI Press: Washington, DC, USA, 2018; pp. 730–739.

4. Serban, I.V.; Sordoni, A.; Bengio, Y.; Courville, A.C.; Pineau, J. Building End-To-End Dialogue Systems Using Generative Hierarchical Neural Network Models. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Schuurmans, D., Wellman, M.P., Eds.; AAAI Press: Washington, DC, USA, 2016; pp. 3776–3784.

5. Gu, J.; Lu, Z.; Li, H.; Li, V.O.K. Incorporating Copying Mechanism in Sequence-to-Sequence Learning. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, ACL 2016, Berlin, Germany, 7–12 August 2016; The Association for Computer Linguistics: Cedarville, OH, USA, 2016; Volume 1: Long Papers.

6. Song, Z.; Zheng, X.; Liu, L.; Xu, M.; Huang, X. Generating Responses with a Specific Emotion in Dialog. In Proceedings of the 57th Conference of the Association for Computational Linguistics, ACL 2019, Florence, Italy, 28 July–2 August 2019; Korhonen, A., Traum, D.R., Màrquez, L., Eds.; Association for Computational Linguistics: Cedarville, OH, USA, 2019; Volume 1: Long Papers, pp. 3685–3695.

7. Rashkin, H.; Smith, E.M.; Li, M.; Boureau, Y. Towards Empathetic Open-domain Conversation Models: A New Benchmark and Dataset. In Proceedings of the 57th Conference of the Association for Computational Linguistics, ACL 2019, Florence, Italy, 28 July–2 August 2019; Korhonen, A., Traum, D.R., Màrquez, L., Eds.; Association for Computational Linguistics: Cedarville, OH, USA, 2019; Volume 1: Long Papers, pp. 5370–5381.

8. Gowda, T.; May, J. Finding the Optimal Vocabulary Size for Neural Machine Translation. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2020, Online Event, 16–20 November 2020; Cohn, T., He, Y., Liu, Y., Eds.; Association for Computational Linguistics: Cedarville, OH, USA, 2020; Volume EMNLP 2020, pp. 3955–3964.

9. Mukhoti, J.; Kulharia, V.; Sanyal, A.; Golodetz, S.; Torr, P.H.S.; Dokania, P.K. Calibrating Deep Neural Networks using Focal Loss. In Proceedings of the Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, Virtual, 6–12 December 2020; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Association for Computing Machinery: New York, NY, USA, 2020.

10. Jiang, S.; Ren, P.; Monz, C.; de Rijke, M. Improving Neural Response Diversity with Frequency-Aware Cross-Entropy Loss. In Proceedings of the World Wide Web Conference, WWW 2019, San Francisco, CA, USA, 13–17 May 2019; Liu, L., White, R.W., Mantrach, A., Silvestri, F., McAuley, J.J., Baeza-Yates, R., Zia, L., Eds.; ACM: New York, NY, USA, 2019; pp. 2879–2885.

11. Yang, C.; Xie, L.; Qiao, S.; Yuille, A.L. Knowledge Distillation in Generations: More Tolerant Teachers Educate Better Students. arXiv 2018, arXiv:1805.05551.

12. Holtzman, A.; Buys, J.; Du, L.; Forbes, M.; Choi, Y. The Curious Case of Neural Text Degeneration. In Proceedings of the 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, 26–30 April 2020.

13. Huang, C.; Zaïane, O.R.; Trabelsi, A.; Dziri, N. Automatic Dialogue Generation with Expressed Emotions. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT, New Orleans, LA, USA, 1–6 June 2018; Walker, M.A., Ji, H., Stent, A., Eds.; Association for Computational Linguistics: Cedarville, OH, USA, 2018; Volume 2 (Short Papers), pp. 49–54.

14. Colombo, P.; Witon, W.; Modi, A.; Kennedy, J.; Kapadia, M. Affect-Driven Dialog Generation. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2019, Minneapolis, MN, USA, 2–7 June 2019; Burstein, J., Doran, C., Solorio, T., Eds.; Association for Computational Linguistics: Cedarville, OH, USA, 2019; Volume 1 (Long and Short Papers), pp. 3734–3743.

15. Ruan, Y.; Ling, Z. Emotion-Regularized Conditional Variational Autoencoder for Emotional Response Generation. IEEE Trans. Affect. Comput. 2023, 14, 842–848.

16. Lin, Z.; Madotto, A.; Shin, J.; Xu, P.; Fung, P. MoEL: Mixture of Empathetic Listeners. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, EMNLP-IJCNLP 2019, Hong Kong, China, 3–7 November 2019; Inui, K., Jiang, J., Ng, V., Wan, X., Eds.; Association for Computational Linguistics: Cedarville, OH, USA, 2019; pp. 121–132.

17. Majumder, N.; Hong, P.; Peng, S.; Lu, J.; Ghosal, D.; Gelbukh, A.F.; Mihalcea, R.; Poria, S. MIME: MIMicking Emotions for Empathetic Response Generation. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing, EMNLP 2020, Online, 16–20 November 2020; Webber, B., Cohn, T., He, Y., Liu, Y., Eds.; Association for Computational Linguistics: Cedarville, OH, USA, 2020; pp. 8968–8979.

18. Majumder, N.; Ghosal, D.; Hazarika, D.; Gelbukh, A.F.; Mihalcea, R.; Poria, S. Exemplars-Guided Empathetic Response Generation Controlled by the Elements of Human Communication. IEEE Access 2022, 10, 77176–77190.

19. Zandie, R.; Mahoor, M.H. EmpTransfo: A Multi-Head Transformer Architecture for Creating Empathetic Dialog Systems. In Proceedings of the Thirty-Third International Florida Artificial Intelligence Research Society Conference, Miami, FL, USA, 17–20 May 2020; Barták, R., Bell, E., Eds.; AAAI Press: Washington, DC, USA, 2020; pp. 276–281.

20. Zheng, C.; Liu, Y.; Chen, W.; Leng, Y.; Huang, M. CoMAE: A Multi-factor Hierarchical Framework for Empathetic Response Generation. In Proceedings of the Findings of the Association for Computational Linguistics: ACL/IJCNLP 2021, Online Event, 1–6 August 2021; Zong, C., Xia, F., Li, W., Navigli, R., Eds.; Association for Computational Linguistics: Cedarville, OH, USA, 2021; Volume ACL/IJCNLP, pp. 813–824.

21. Katayama, S.; Aoki, S.; Yonezawa, T.; Okoshi, T.; Nakazawa, J.; Kawaguchi, N. ER-Chat: A Text-to-Text Open-Domain Dialogue Framework for Emotion Regulation. IEEE Trans. Affect. Comput. 2022, 13, 2229–2237.

22. Choi, B.; Hong, J.; Park, D.K.; Lee, S.W. F²-Softmax: Diversifying Neural Text Generation via Frequency Factorized Softmax. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing, EMNLP 2020, Online, 16–20 November 2020; Webber, B., Cohn, T., He, Y., Liu, Y., Eds.; Association for Computational Linguistics: Cedarville, OH, USA, 2020; pp. 9167–9182.

23. Li, Z.; Wang, R.; Chen, K.; Utiyama, M.; Sumita, E.; Zhang, Z.; Zhao, H. Data-dependent Gaussian Prior Objective for Language Generation. In Proceedings of the 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, 26–30 April 2020.

24. Lagutin, E.; Gavrilov, D.; Kalaidin, P. Implicit Unlikelihood Training: Improving Neural Text Generation with Reinforcement Learning. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, EACL 2021, Online, 19–23 April 2021; Merlo, P., Tiedemann, J., Tsarfaty, R., Eds.; Association for Computational Linguistics: Cedarville, OH, USA, 2021; pp. 1432–1441.

25. Li, M.; Roller, S.; Kulikov, I.; Welleck, S.; Boureau, Y.; Cho, K.; Weston, J. Don’t Say That! Making Inconsistent Dialogue Unlikely with Unlikelihood Training. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, ACL 2020, Online, 5–10 July 2020; Jurafsky, D., Chai, J., Schluter, N., Tetreault, J.R., Eds.; Association for Computational Linguistics: Cedarville, OH, USA, 2020; pp. 4715–4728.

26. Li, J.; Galley, M.; Brockett, C.; Gao, J.; Dolan, B. A Diversity-Promoting Objective Function for Neural Conversation Models. In Proceedings of the NAACL HLT 2016, The 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; Knight, K., Nenkova, A., Rambow, O., Eds.; The Association for Computational Linguistics: Cedarville, OH, USA, 2016; pp. 110–119.

27. Zhang, Y.; Galley, M.; Gao, J.; Gan, Z.; Li, X.; Brockett, C.; Dolan, B. Generating Informative and Diverse Conversational Responses via Adversarial Information Maximization. In Proceedings of the Advances in Neural Information Processing Systems 31: Annual Conference on Neural Information Processing Systems 2018, NeurIPS 2018, Montréal, QC, Canada, 3–8 December 2018; Bengio, S., Wallach, H.M., Larochelle, H., Grauman, K., Cesa-Bianchi, N., Garnett, R., Eds.; The Association for Computational Linguistics: Cedarville, OH, USA, 2018; pp. 1815–1825.

28. Baheti, A.; Ritter, A.; Li, J.; Dolan, B. Generating More Interesting Responses in Neural Conversation Models with Distributional Constraints. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; Riloff, E., Chiang, D., Hockenmaier, J., Tsujii, J., Eds.; Association for Computational Linguistics: Cedarville, OH, USA, 2018; pp. 3970–3980.

29. Csaky, R.; Purgai, P.; Recski, G. Improving Neural Conversational Models with Entropy-Based Data Filtering. In Proceedings of the 57th Conference of the Association for Computational Linguistics, ACL 2019, Florence, Italy, 28 July–2 August 2019; Korhonen, A., Traum, D.R., Màrquez, L., Eds.; Association for Computational Linguistics: Cedarville, OH, USA, 2019; Volume 1: Long Papers, pp. 5650–5669.

30. Wang, Y.; Zheng, Y.; Jiang, Y.; Huang, M. Diversifying Dialog Generation via Adaptive Label Smoothing. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, ACL/IJCNLP (Volume 1: Long Papers), Virtual Event, 1–6 August 2021; Zong, C., Xia, F., Li, W., Navigli, R., Eds.; Association for Computational Linguistics: Toronto, ON, Canada, 2021; pp. 3507–3520.

31. Li, Y.; Feng, S.; Sun, B.; Li, K. Diversifying Neural Dialogue Generation via Negative Distillation. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL 2022, Seattle, WA, USA, 10–15 July 2022; Carpuat, M., de Marneffe, M., Ruíz, I.V.M., Eds.; Association for Computational Linguistics: Cedarville, OH, USA, 2022; pp. 407–418.

32. Miyato, T.; Dai, A.M.; Goodfellow, I.J. Adversarial Training Methods for Semi-Supervised Text Classification. In Proceedings of the 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, 24–26 April 2017.

33. He, T.; Glass, J.R. Negative Training for Neural Dialogue Response Generation. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, ACL 2020, Online, 5–10 July 2020; Jurafsky, D., Chai, J., Schluter, N., Tetreault, J.R., Eds.; Association for Computational Linguistics: Cedarville, OH, USA, 2020; pp. 2044–2058.

34. Sohn, K.; Lee, H.; Yan, X. Learning Structured Output Representation using Deep Conditional Generative Models. In Proceedings of the Advances in Neural Information Processing Systems 28: Annual Conference on Neural Information Processing Systems 2015, Montreal, QC, Canada, 7–12 December 2015; Cortes, C., Lawrence, N.D., Lee, D.D., Sugiyama, M., Garnett, R., Eds.; Association for Computing Machinery: New York, NY, USA, 2015; pp. 3483–3491.

35. Xing, C.; Wu, W.; Wu, Y.; Liu, J.; Huang, Y.; Zhou, M.; Ma, W. Topic Aware Neural Response Generation. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Singh, S., Markovitch, S., Eds.; AAAI Press: Washington, DC, USA, 2017; pp. 3351–3357.

36. Mou, L.; Song, Y.; Yan, R.; Li, G.; Zhang, L.; Jin, Z. Sequence to Backward and Forward Sequences: A Content-Introducing Approach to Generative Short-Text Conversation. In Proceedings of the COLING 2016, 26th International Conference on Computational Linguistics, Proceedings of the Conference: Technical Papers, Osaka, Japan, 11–16 December 2016; Calzolari, N., Matsumoto, Y., Prasad, R., Eds.; ACL: Augusta, GA, USA, 2016; pp. 3349–3358.

37. Li, Y.; Su, H.; Shen, X.; Li, W.; Cao, Z.; Niu, S. DailyDialog: A Manually Labelled Multi-turn Dialogue Dataset. In Proceedings of the Eighth International Joint Conference on Natural Language Processing, IJCNLP 2017, Taipei, Taiwan, 27 November–1 December 2017; Kondrak, G., Watanabe, T., Eds.; Asian Federation of Natural Language Processing: Hong Kong, 2017; Volume 1: Long Papers, pp. 986–995.

38. Lison, P.; Tiedemann, J. OpenSubtitles2016: Extracting Large Parallel Corpora from Movie and TV Subtitles. In Proceedings of the Tenth International Conference on Language Resources and Evaluation LREC 2016, Portorož, Slovenia, 23–28 May 2016; Calzolari, N., Choukri, K., Declerck, T., Goggi, S., Grobelnik, M., Maegaard, B., Mariani, J., Mazo, H., Moreno, A., Odijk, J., et al., Eds.; European Language Resources Association (ELRA): Paris, France, 2016.

39. Busso, C.; Bulut, M.; Lee, C.; Kazemzadeh, A.; Mower, E.; Kim, S.; Chang, J.N.; Lee, S.; Narayanan, S.S. IEMOCAP: Interactive emotional dyadic motion capture database. Lang. Resour. Eval. 2008, 42, 335–359.

40. Poria, S.; Hazarika, D.; Majumder, N.; Naik, G.; Cambria, E.; Mihalcea, R. MELD: A Multimodal Multi-Party Dataset for Emotion Recognition in Conversations; Association for Computational Linguistics: Cedarville, OH, USA, 2019; pp. 527–536.

41. Chudasama, V.; Kar, P.; Gudmalwar, A.; Shah, N.; Wasnik, P.; Onoe, N. M2FNet: Multi-modal Fusion Network for Emotion Recognition in Conversation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, CVPR Workshops 2022, New Orleans, LA, USA, 19–20 June 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 4651–4660.

42. Majumder, N.; Poria, S.; Hazarika, D.; Mihalcea, R.; Gelbukh, A.F.; Cambria, E. DialogueRNN: An Attentive RNN for Emotion Detection in Conversations. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence, AAAI 2019, The Thirty-First Innovative Applications of Artificial Intelligence Conference, IAAI 2019, The Ninth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2019, Honolulu, HI, USA, 27 January–1 February 2019; AAAI Press: Washington, DC, USA, 2019; pp. 6818–6825.

43. Ghosal, D.; Majumder, N.; Poria, S.; Chhaya, N.; Gelbukh, A.F. DialogueGCN: A Graph Convolutional Neural Network for Emotion Recognition in Conversation. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, EMNLP-IJCNLP 2019, Hong Kong, China, 3–7 November 2019; Inui, K., Jiang, J., Ng, V., Wan, X., Eds.; Association for Computational Linguistics: Cedarville, OH, USA, 2019; pp. 154–164.

44. Mikolov, T.; Grave, E.; Bojanowski, P.; Puhrsch, C.; Joulin, A. Advances in Pre-Training Distributed Word Representations. In Proceedings of the Eleventh International Conference on Language Resources and Evaluation, LREC 2018, Miyazaki, Japan, 7–12 May 2018; Calzolari, N., Choukri, K., Cieri, C., Declerck, T., Goggi, S., Hasida, K., Isahara, H., Maegaard, B., Mariani, J., Mazo, H., et al., Eds.; European Language Resources Association (ELRA): Paris, France, 2018.

45. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–9 May 2015; Bengio, Y., LeCun, Y., Eds.; Scientific Research Publishing: Wuhan, China, 2015.

| Method | MELD Acc | MELD wF1 | IEMOCAP Acc | IEMOCAP wF1 |
|---|---|---|---|---|
| DialogueRNN [42] | 59.54 | 57.03 | 63.40 | 62.75 |
| DialogueGCN [43] | 59.46 | 58.10 | 65.25 | 64.18 |
| M2FNet [41] | 67.24 | 66.23 | 66.29 | 66.17 |
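The emotion-recognition results above are reported as accuracy (Acc) and weighted F1 (wF1), in percent. The text here does not define wF1; the sketch below assumes the standard definition, in which per-class F1 scores are averaged with weights proportional to each class's number of gold labels. Under that assumption it should agree with scikit-learn's `f1_score(y_true, y_pred, average="weighted")`. The function names are illustrative.

```python
from collections import Counter

def accuracy(y_true, y_pred):
    # Fraction of utterances whose predicted emotion matches the gold label.
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def weighted_f1(y_true, y_pred):
    # Per-class F1, averaged with weights proportional to each class's gold support.
    support = Counter(y_true)
    total = len(y_true)
    score = 0.0
    for label, n_label in support.items():
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = n_label - tp
        f1 = 2 * tp / (2 * tp + fp + fn)  # denominator >= n_label >= 1
        score += f1 * n_label / total
    return score

y_true = ["joy", "joy", "sad", "neutral"]
y_pred = ["joy", "sad", "sad", "neutral"]
print(accuracy(y_true, y_pred))     # → 0.75
print(weighted_f1(y_true, y_pred))  # approximately 0.75
```

Weighting by support means frequent classes dominate wF1, which matters for imbalanced corpora such as MELD; a macro-averaged F1 would instead weight every class equally.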

| Method | Acc ↑ | Dist-1 ↑ | Dist-2 ↑ | Ppl ↓ |
|---|---|---|---|---|
| CVAE [34] | 82.53 | 0.074 | 0.358 | 65.79 |
| ECM [3] | 93.27 | 0.017 | 0.073 | 62.31 |
| EmoDS [6] | 88.06 | 0.013 | 0.049 | 63.68 |
| Emo-CVAE [15] | 95.14 | 0.083 | 0.407 | 64.52 |
| Ours | 97.33 | 0.091 | 0.486 | 64.72 |

| Method | Acc ↑ | Dist-1 ↑ | Dist-2 ↑ | Ppl ↓ |
|---|---|---|---|---|
| CVAE [34] | 79.62 | 0.072 | 0.403 | 67.29 |
| ECM [3] | 88.51 | 0.018 | 0.090 | 64.41 |
| EmoDS [6] | 86.27 | 0.012 | 0.037 | 64.34 |
| Emo-CVAE [15] | 90.34 | 0.096 | 0.512 | 66.38 |
| Ours | 92.89 | 0.104 | 0.656 | 66.21 |
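Dist-1 and Dist-2 measure lexical diversity, and Ppl is perplexity. The following sketch computes both under common assumptions that the text here does not confirm. Distinct-n is taken as the number of unique n-grams divided by the total number of n-grams over all generated responses (corpus level); Li et al.'s diversity-promoting objective paper, from which the metric originates, instead scales by the total number of generated tokens, so bigram values can differ slightly between implementations. Perplexity is taken as the exponential of the mean per-token negative log-likelihood in natural log. Function names are illustrative.

```python
import math

def distinct_n(responses, n):
    # Unique n-grams / total n-grams, pooled over all tokenized responses.
    ngrams = []
    for tokens in responses:
        ngrams.extend(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return len(set(ngrams)) / len(ngrams) if ngrams else 0.0

def perplexity(token_log_probs):
    # exp of the mean negative log-likelihood per token (natural log).
    return math.exp(-sum(token_log_probs) / len(token_log_probs))

responses = [["i", "am", "so", "happy"], ["i", "am", "happy"]]
print(distinct_n(responses, 1))  # 4 unique of 7 unigrams
print(distinct_n(responses, 2))  # → 0.8 (4 unique of 5 bigrams)
print(perplexity([math.log(0.5)] * 4))  # approximately 2.0
```

Because Distinct-n rewards unrepeated n-grams regardless of emotion, it is reported alongside emotion accuracy (Acc), which checks that the extra diversity does not come at the cost of the target emotion.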

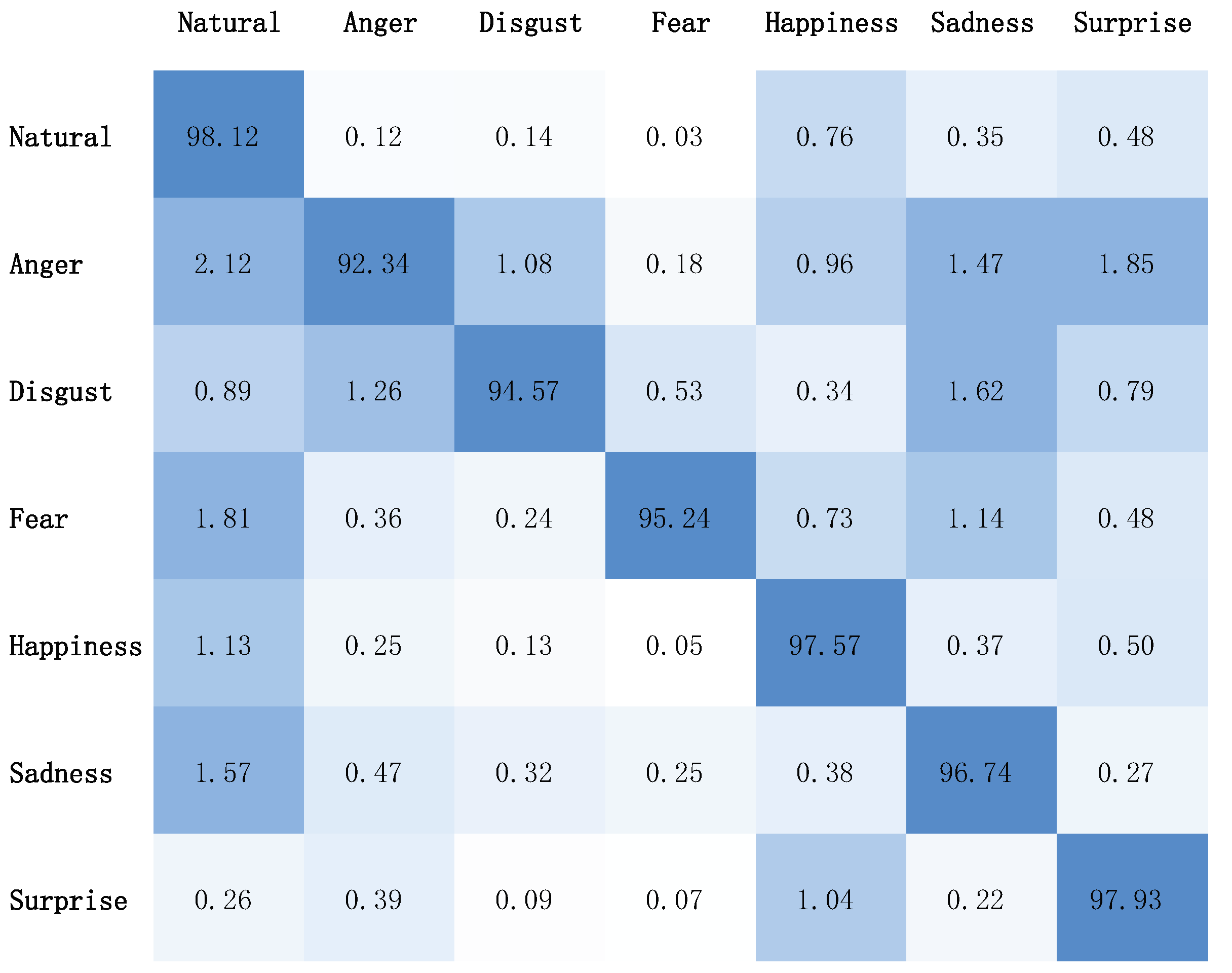

| Method | Neutral | Anger | Disgust | Fear | Happiness | Sadness | Surprise | Average |
|---|---|---|---|---|---|---|---|---|
| CVAE [34] | 96.29 | 49.27 | 74.19 | 83.85 | 90.16 | 81.79 | 85.33 | 82.53 |
| ECM [3] | 97.13 | 77.50 | 83.22 | 93.92 | 97.62 | 94.12 | 93.27 | |
| EmoDS [6] | 95.92 | 62.31 | 65.98 | 92.03 | 97.27 | 90.08 | 95.87 | 88.06 |
| Emo-CVAE [15] | 97.35 | 91.06 | 95.21 | 93.99 | 96.90 | 95.28 | 96.13 | 95.14 |
| Ours | 98.12 | 92.34 | 94.57 | 95.24 | 97.57 | 96.74 | 97.93 | 97.33 |

| Method | TT | TF | FT | FF |
|---|---|---|---|---|
| CVAE [34] | 80.69 | 16.22 | 2.39 | 0.70 |
| ECM [3] | 93.15 | 4.37 | 1.93 | 0.55 |
| EmoDS [6] | 85.43 | 16.86 | 1.89 | 0.82 |
| Emo-CVAE [15] | 94.72 | 3.81 | 1.26 | 0.21 |

|  | Win | Lose | Tie |
|---|---|---|---|
| vs. CVAE [34] | | | |
| TT | 31.2 | 11.7 | 57.1 |
| TF | 64.3 | 4.5 | 31.2 |
| FT | 13.7 | 42.6 | 43.7 |
| FF | 35.9 | 8.4 | 55.7 |
| vs. ECM [3] | | | |
| TT | 29.6 | 22.5 | 47.9 |
| TF | 79.4 | 2.7 | 17.9 |
| FT | 12.2 | 54.3 | 33.5 |
| FF | 31.3 | 14.5 | 54.2 |
| vs. EmoDS [6] | | | |
| TT | 33.8 | 19.6 | 46.6 |
| TF | 72.5 | 2.9 | 24.6 |
| FT | 9.4 | 48.7 | 41.9 |
| FF | 30.6 | 12.3 | 57.1 |
| vs. Emo-CVAE [15] | | | |
| TT | 24.9 | 19.3 | 55.8 |
| TF | 58.2 | 6.1 | 35.7 |
| FT | 9.1 | 43.4 | 47.5 |
| FF | 29.4 | 17.3 | 53.3 |

|  | Win | Lose | Tie |
|---|---|---|---|
| vs. CVAE [34] | | | |
| TT | 35.8 | 21.5 | 42.7 |
| TF | 51.0 | 22.8 | 27.2 |
| FT | 35.4 | 26.7 | 37.8 |
| FF | 37.2 | 16.9 | 45.9 |
| vs. ECM [3] | | | |
| TT | 52.3 | 17.6 | 35.1 |
| TF | 43.9 | 28.5 | 27.6 |
| FT | 33.2 | 41.5 | 25.3 |
| FF | 26.6 | 32.7 | 40.7 |
| vs. EmoDS [6] | | | |
| TT | 45.6 | 19.2 | 35.2 |
| TF | 61.3 | 11.5 | 27.2 |
| FT | 52.9 | 31.7 | 15.4 |
| FF | 31.7 | 40.5 | 27.8 |
| vs. Emo-CVAE [15] | | | |
| TT | 39.8 | 25.4 | 34.8 |
| TF | 47.6 | 12.3 | 40.1 |
| FT | 49.5 | 25.3 | 25.2 |
| FF | 33.3 | 26.7 | 40.0 |
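The pairwise tables above report, for each baseline and each response category (TT, TF, FT, FF), the percentage of comparisons in which our model wins, loses, or ties. The text here does not define the categories or describe how the judgments were collected, so the sketch below only illustrates the tallying step. It assumes each judgment is a hypothetical `(category, outcome)` pair and uses toy data rather than the paper's annotations.

```python
from collections import Counter, defaultdict

OUTCOMES = ("win", "lose", "tie")

def win_lose_tie_table(judgments):
    # judgments: iterable of (category, outcome) pairs, outcome in OUTCOMES.
    # Returns {category: {"win": %, "lose": %, "tie": %}}, rounded to one decimal.
    per_category = defaultdict(Counter)
    for category, outcome in judgments:
        per_category[category][outcome] += 1
    table = {}
    for category, counts in per_category.items():
        total = sum(counts.values())
        table[category] = {o: round(100 * counts[o] / total, 1) for o in OUTCOMES}
    return table

toy = [("TT", "win")] * 3 + [("TT", "lose")] + [("TT", "tie")] * 6
print(win_lose_tie_table(toy))  # → {'TT': {'win': 30.0, 'lose': 10.0, 'tie': 60.0}}
```

With per-row rounding to one decimal, a row can sum to 99.9 or 100.1 rather than exactly 100.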

| Method | Acc ↑ | Dist-1 ↑ | Dist-2 ↑ | Ppl ↓ |
|---|---|---|---|---|
| Ours | 97.33 | 0.091 | 0.486 | 64.72 |
| w/o SA | 97.82 | 0.018 | 0.092 | 64.25 |
| w/o EC | 83.90 | 0.121 | 0.706 | 60.43 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, B.; Zhao, H.; Zhang, Z. Diversifying Emotional Dialogue Generation via Selective Adversarial Training. Sensors 2023, 23, 5904. https://doi.org/10.3390/s23135904