Efficient Super-Resolution Method for Targets Observed by Satellite SAR

Abstract

1. Introduction

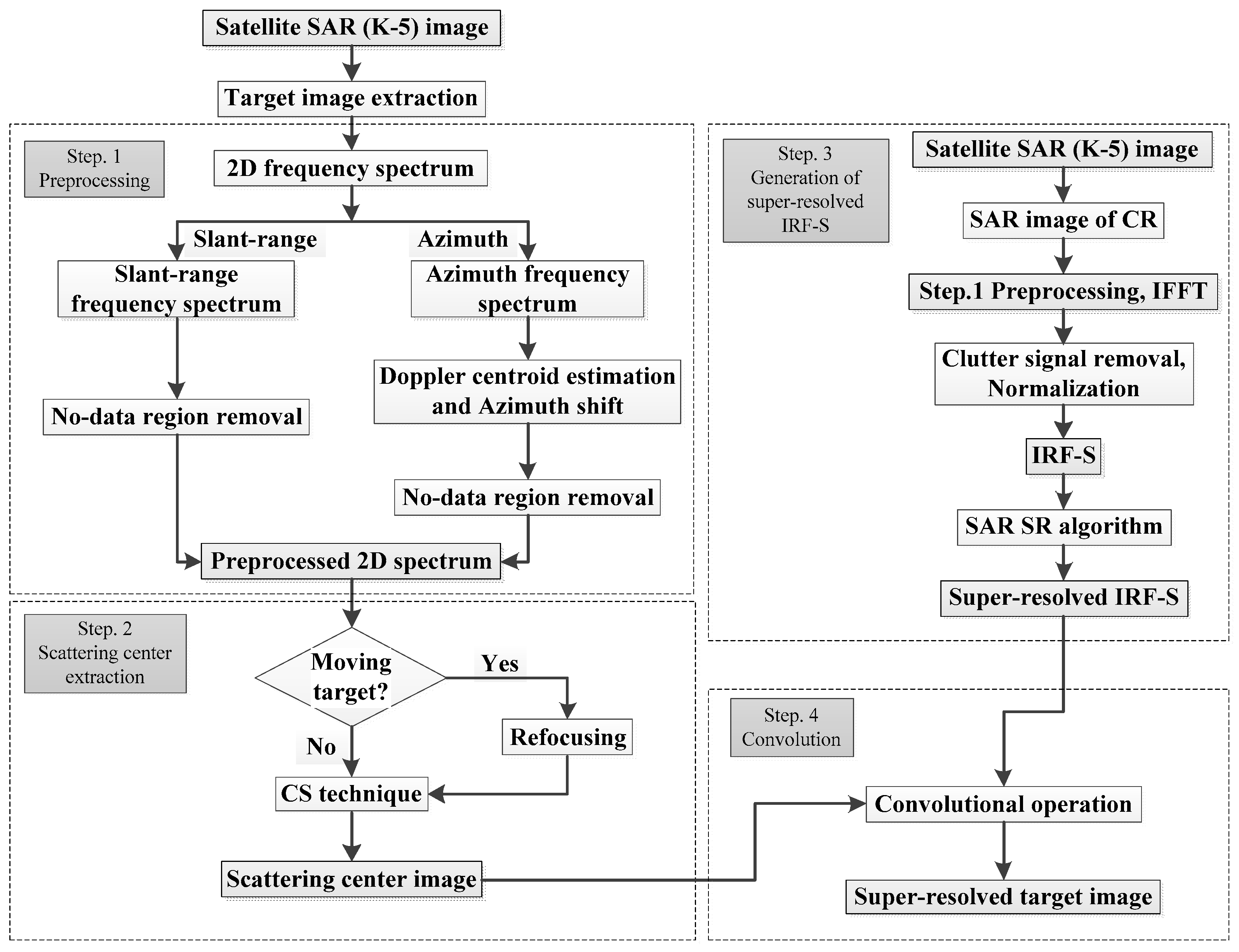

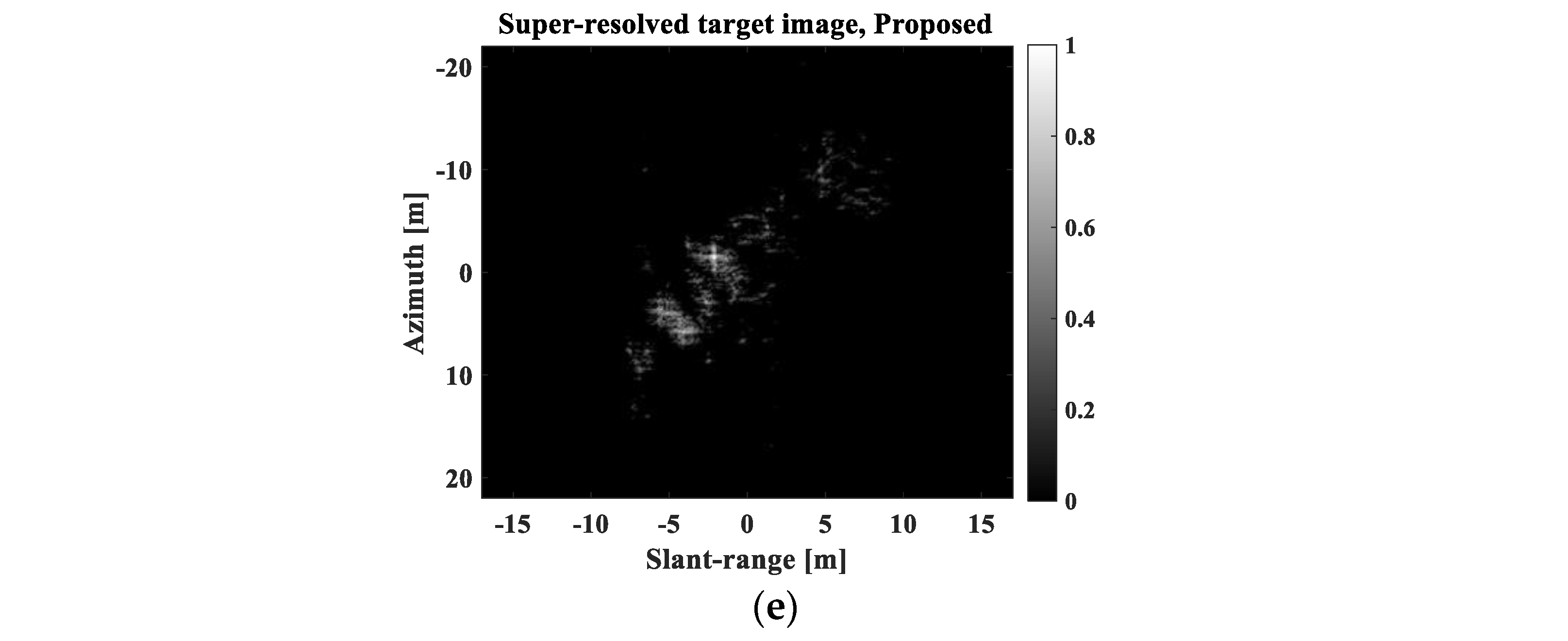

2. Proposed SR Method for Target Image

2.1. Overall Flowchart of the Proposed Method

2.2. Radar Signal Model for the Proposed Method

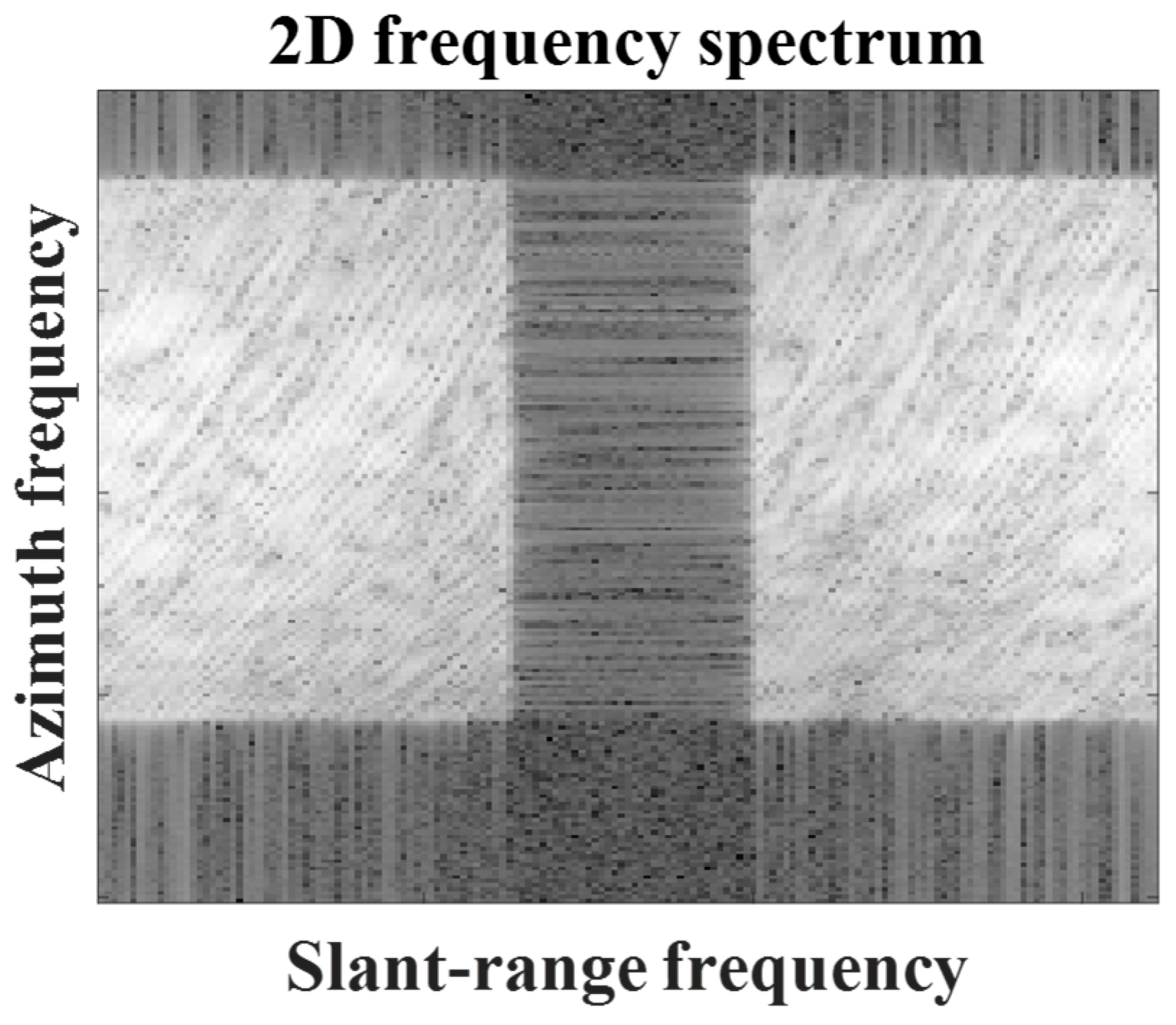

2.3. Preprocessing (Step 1)

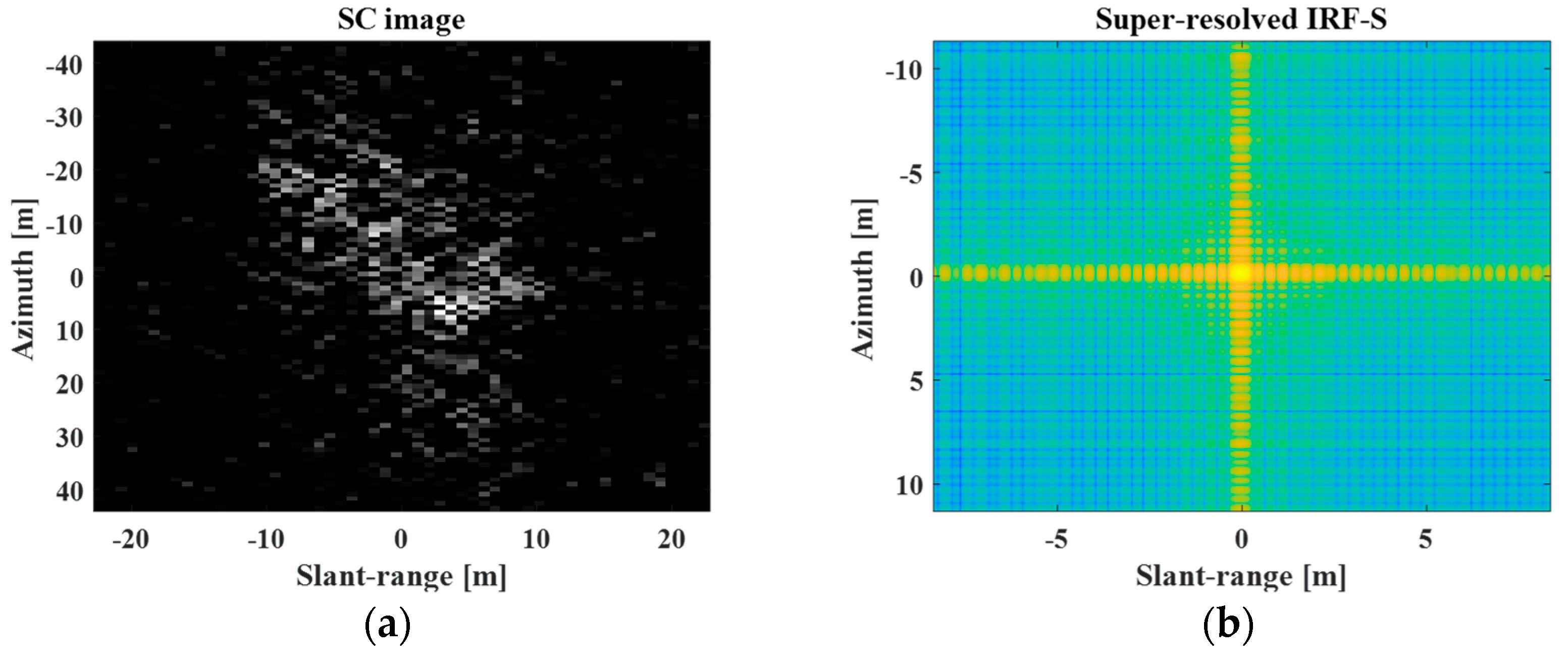

2.4. Scattering Center Extraction (Step 2)

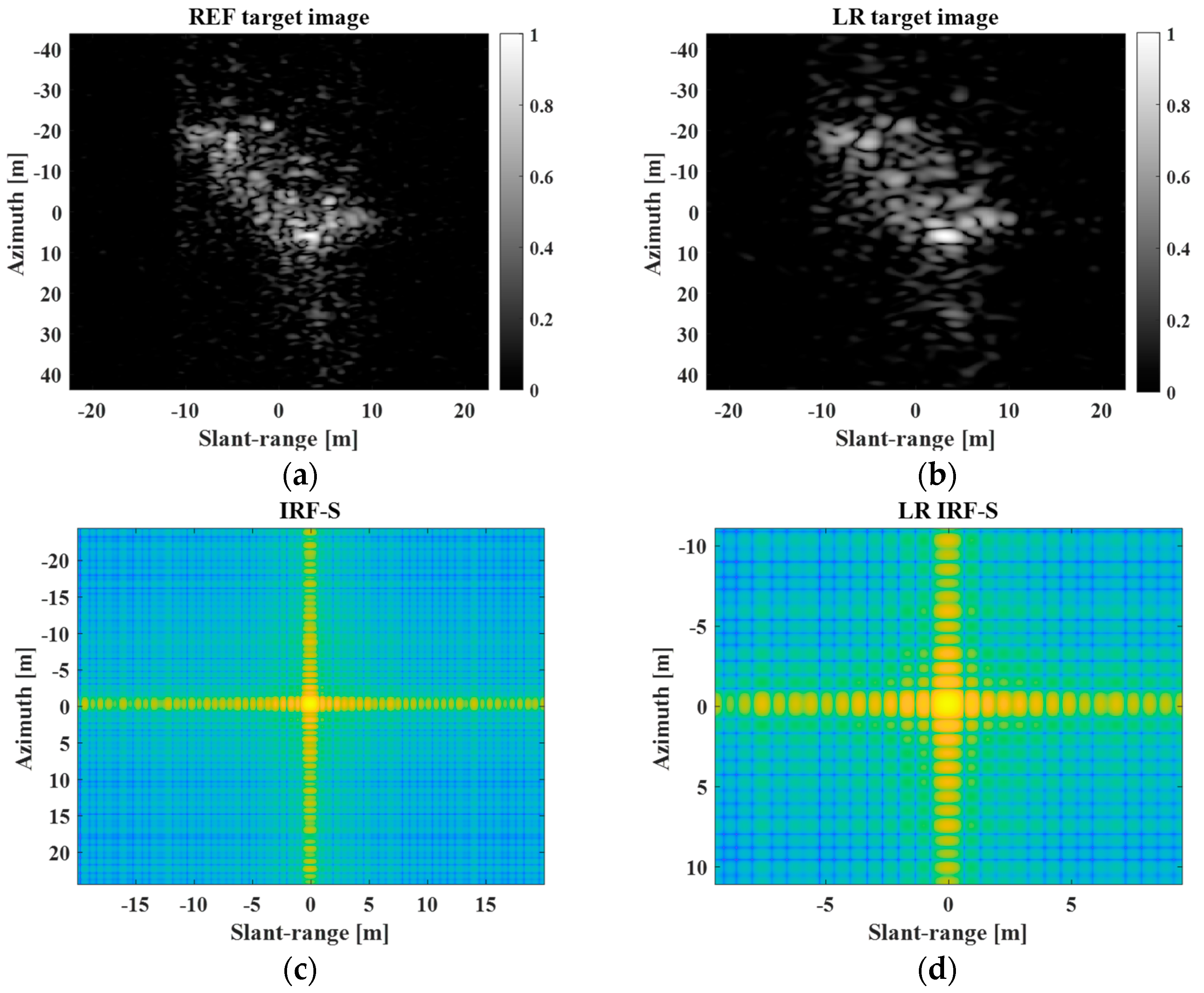

2.5. Generation of Super-Resolved IRF-S (Step 3)

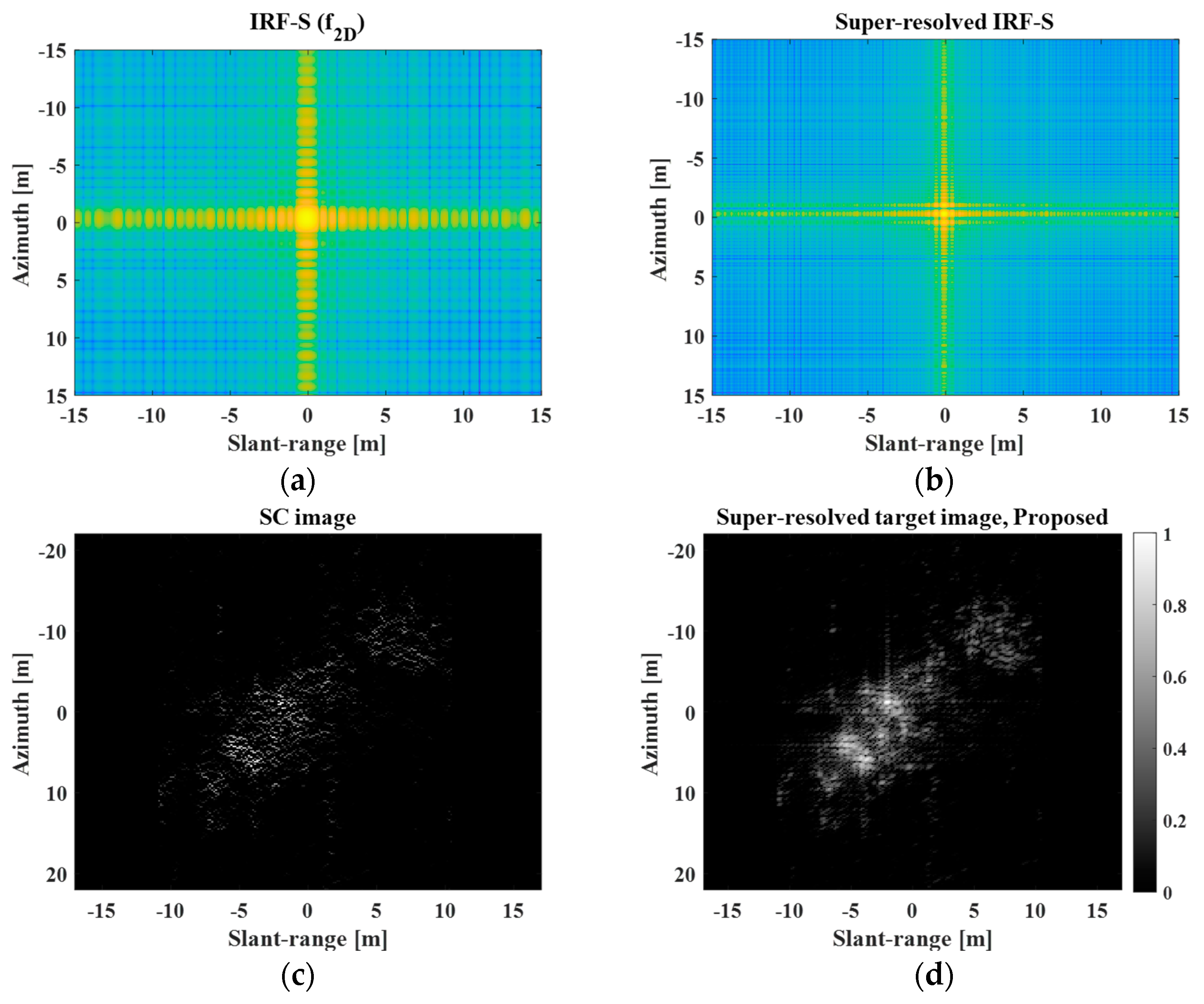

2.6. Convolution of SC Image and Super-Resolved IRF-S (Step 4)

3. Experimental Results

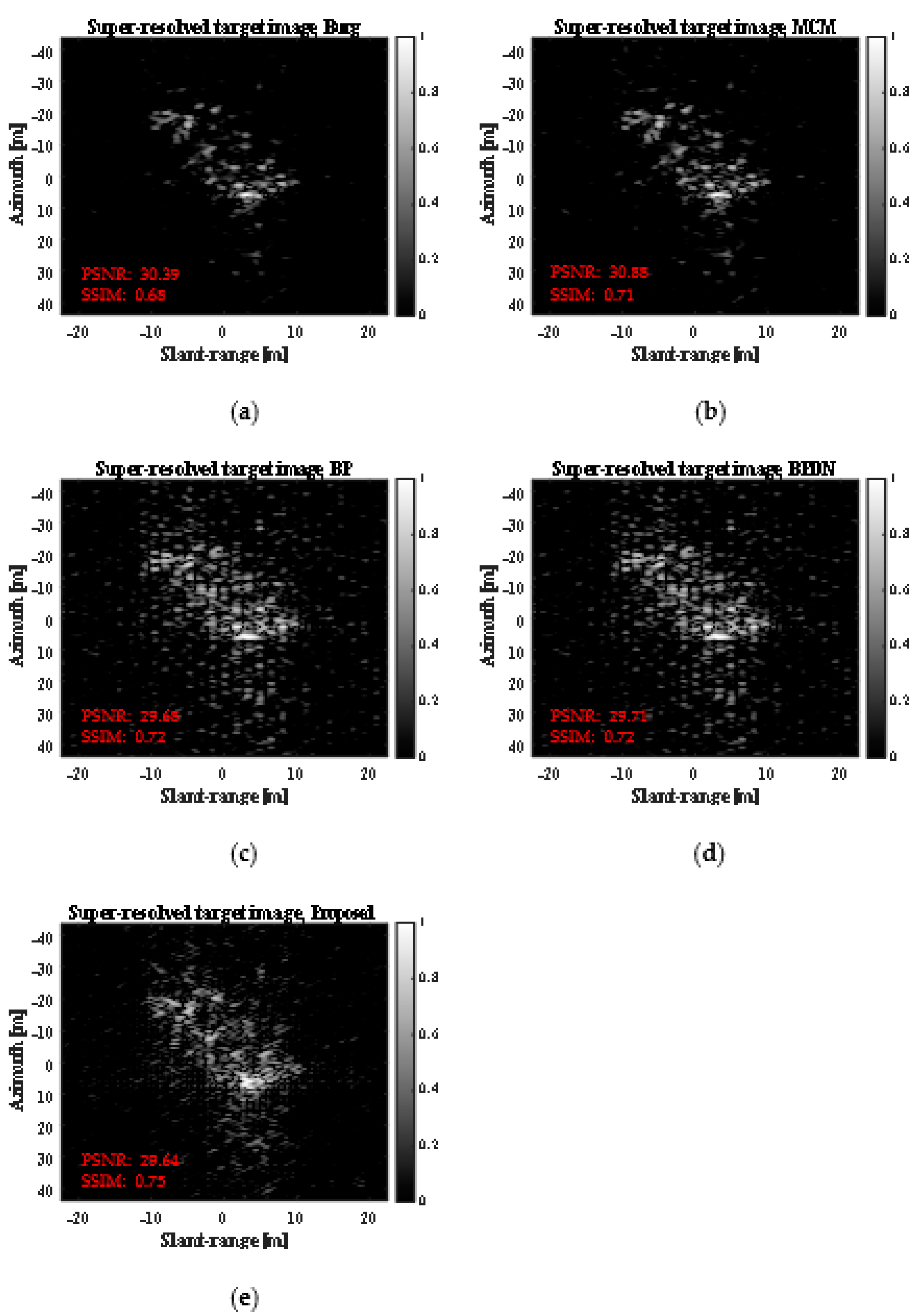

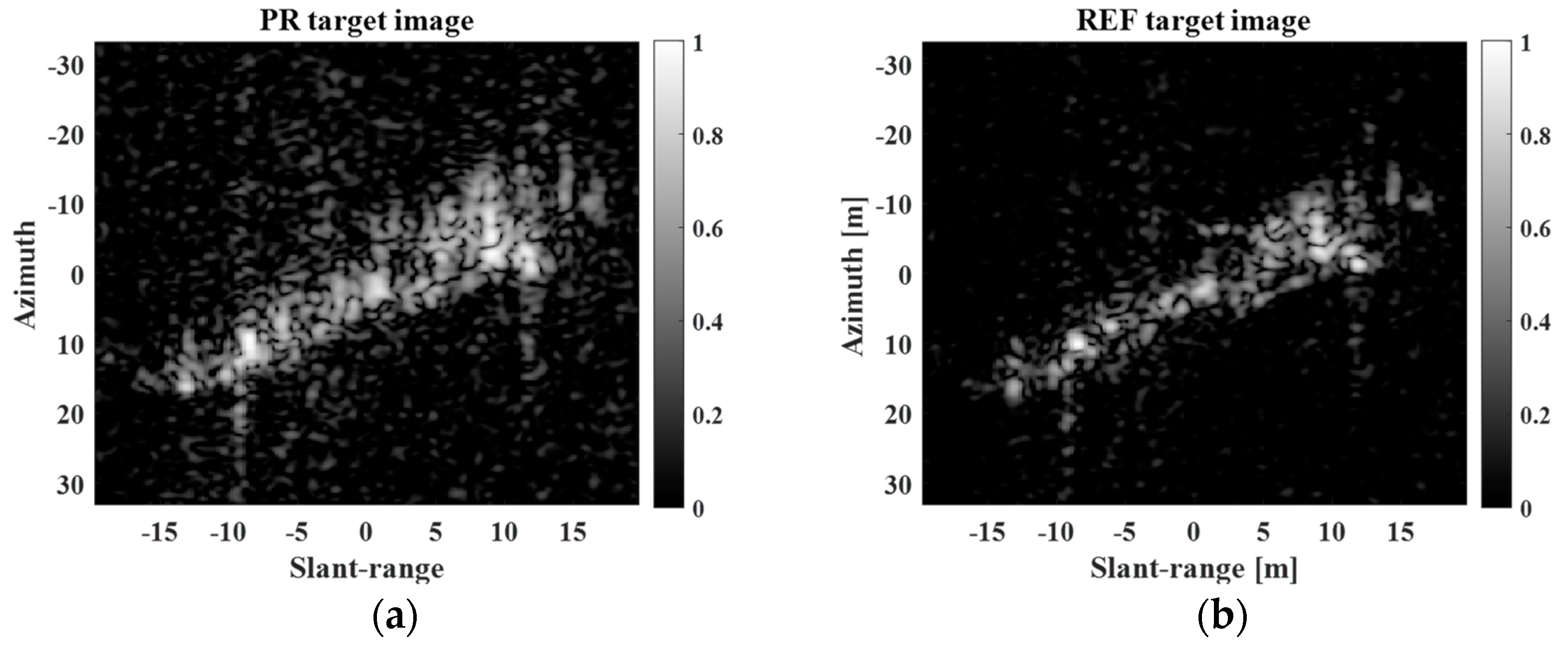

3.1. SR Results for Static Ship Target

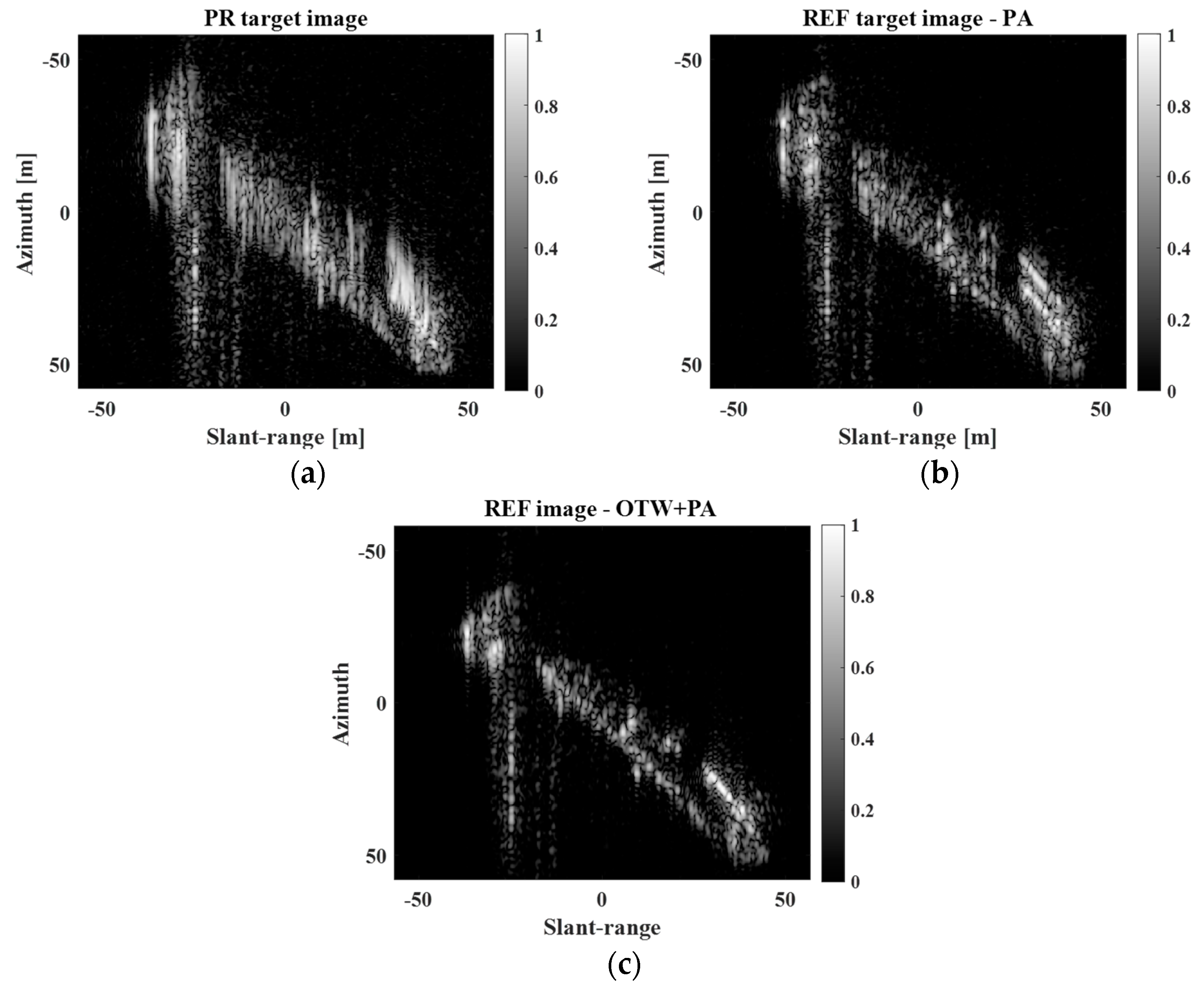

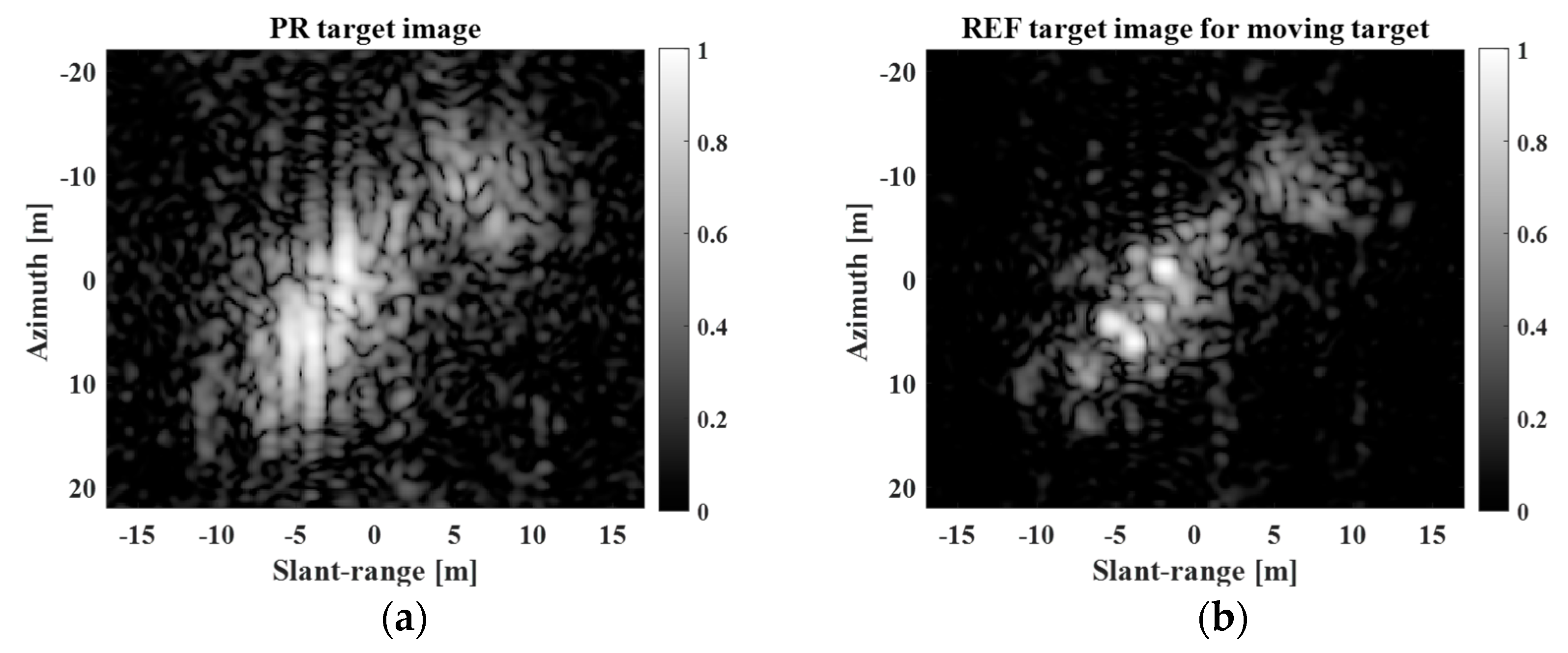

3.2. SR Results for Moving Ship Target—Moderate Motion

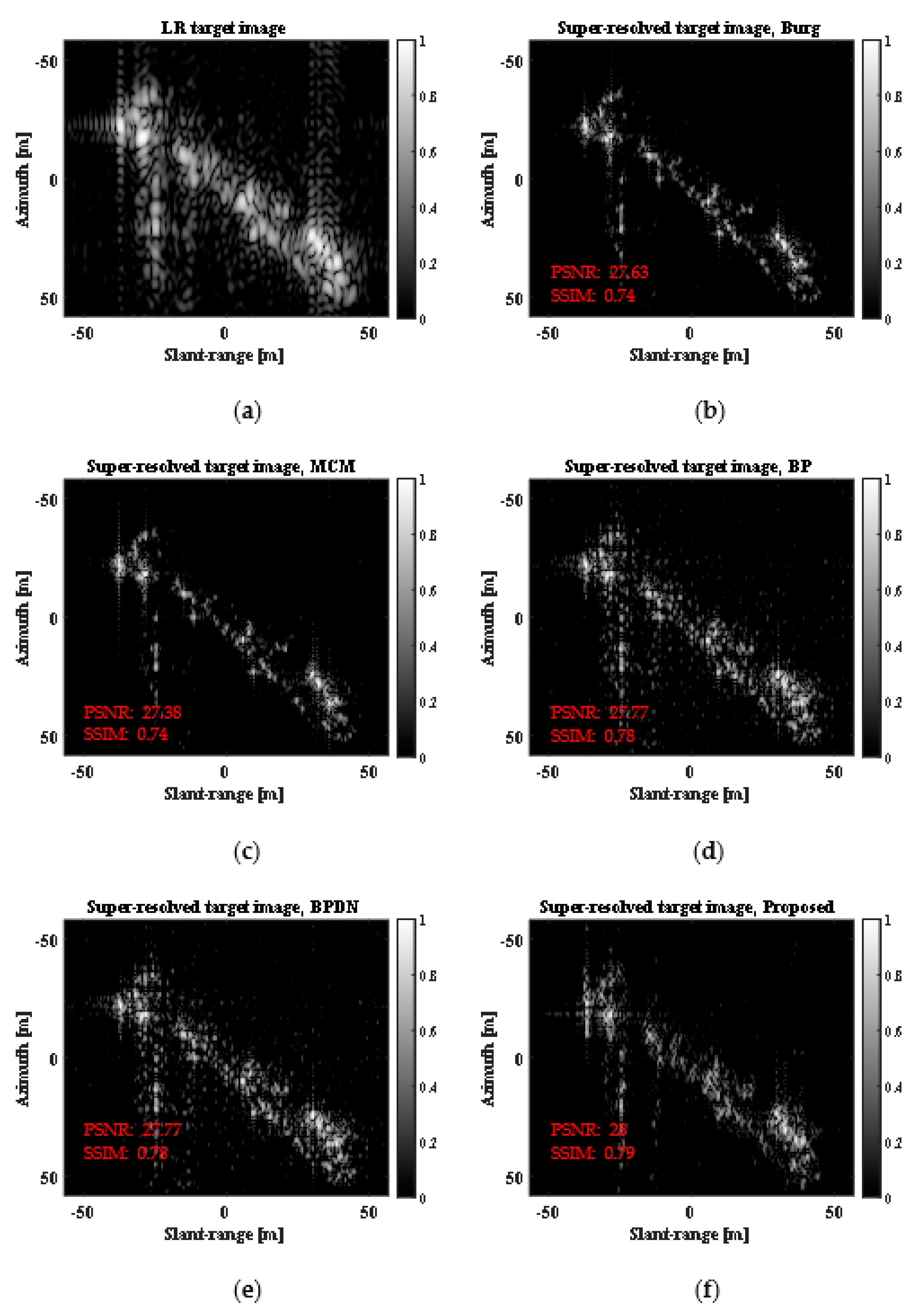

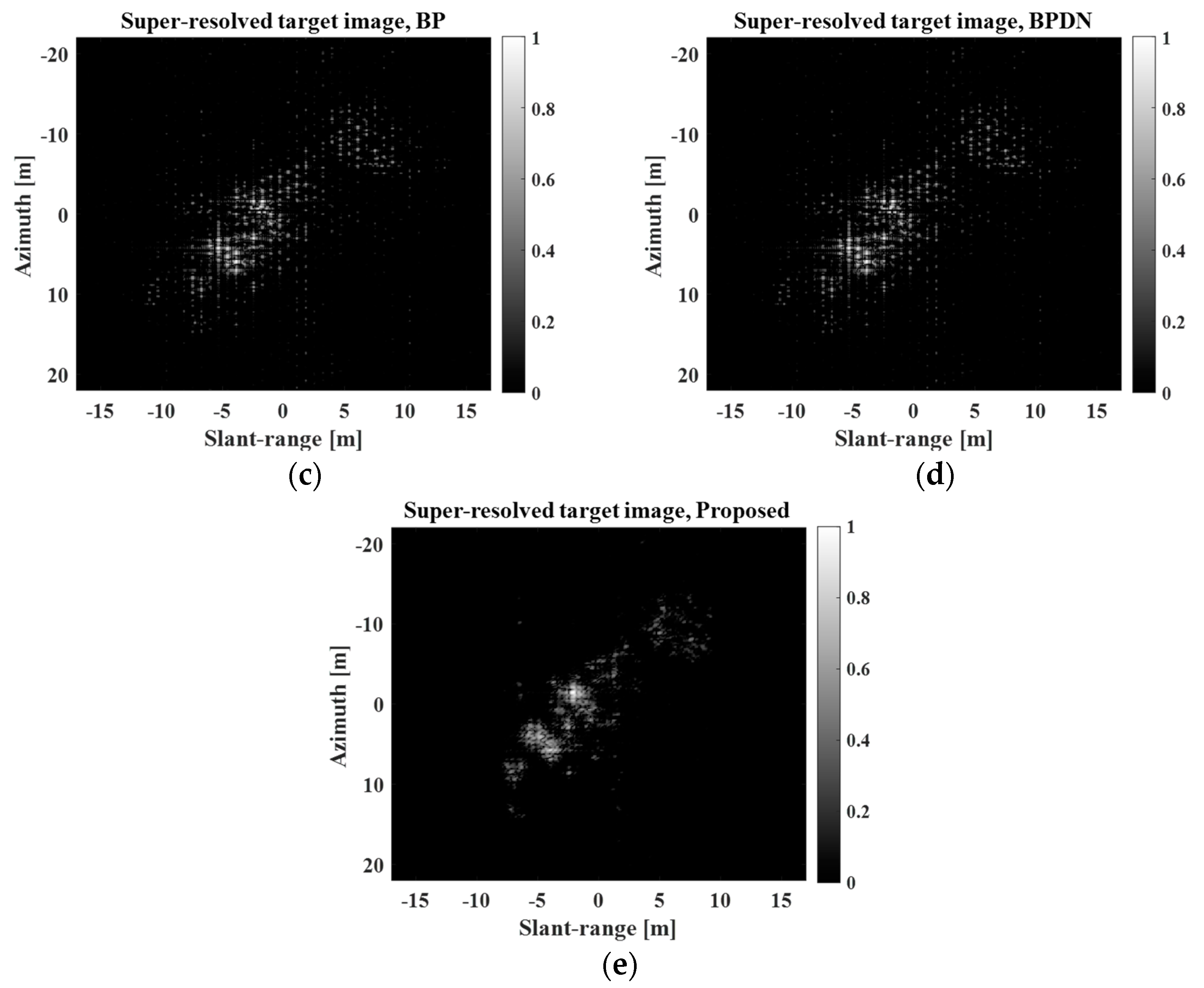

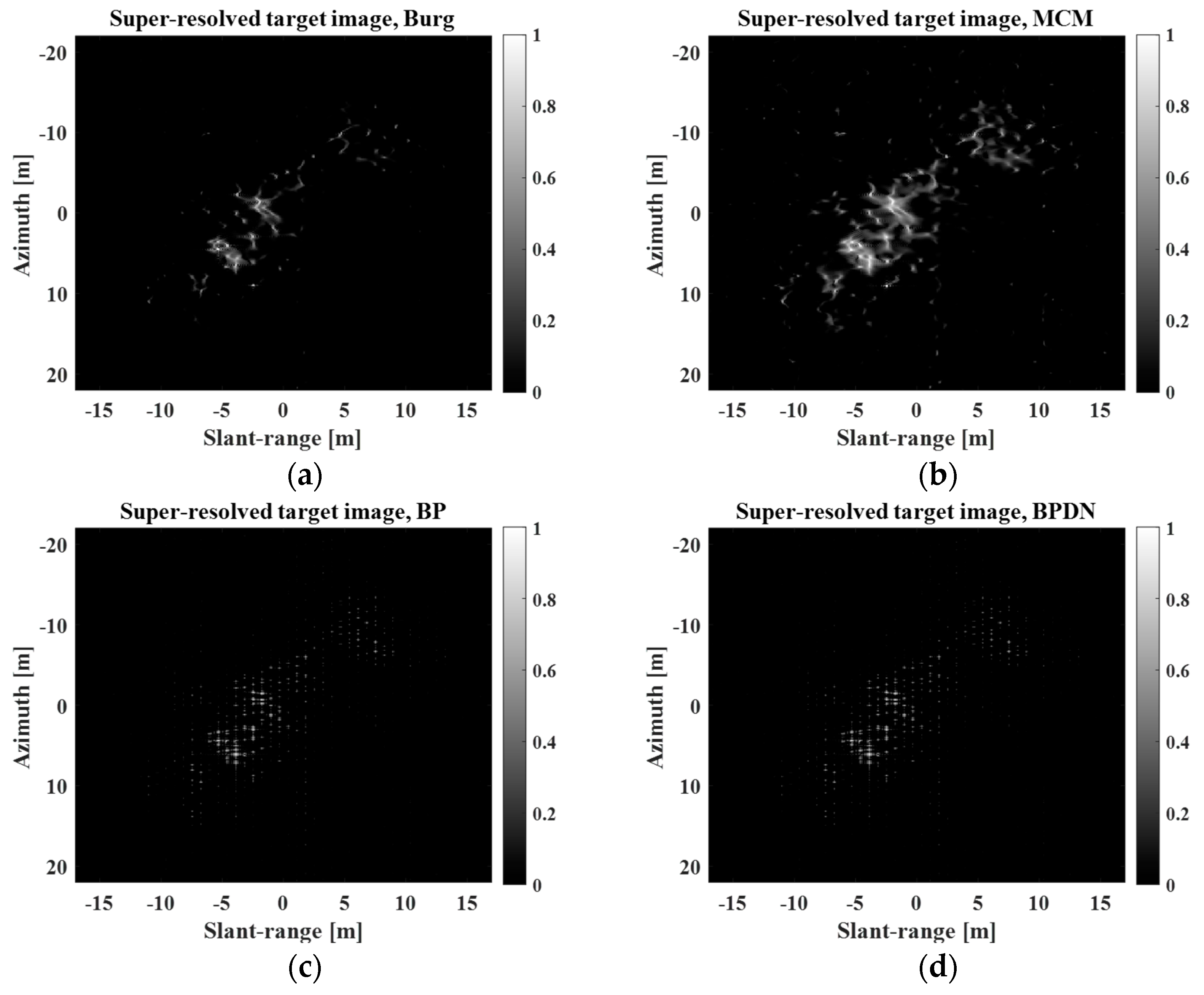

3.3. SR Results for Moving Ship Target—Complex Motion

3.4. SR Results in the Case of Improvement

4. Discussion

5. Conclusions

- (1) In terms of both restoration and improvement, the proposed scheme considerably improved the spatial resolution of the target images for various types of targets, yielding clearer information on the principal scatterers.

- (2) In particular, the proposed method exhibited excellent SR capability at high degrees of SR, in terms of PSNR, SSIM, and CT, compared with other SAR SR methods. This implies that the proposed method can extract highly precise and meaningful information regarding the targets represented in satellite SAR images.

- (3) The concept of the proposed scheme can be easily extended to other satellite SAR systems such as ICEYE, Capella, TerraSAR-X, and KOMPSAT-6, provided that the preprocessing steps are slightly adjusted to the characteristics of each SAR system.

- (4) The proposed scheme is also expected to be useful for improving target recognition capability using satellite SAR images.
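As a point of reference for the PSNR figures cited in item (2), the sketch below shows the conventional peak signal-to-noise ratio, 10·log10(peak² / MSE), between a reference image and a super-resolved estimate. It is a minimal illustration and not the authors' evaluation code: the function name `psnr`, the use of real-valued magnitude images stored as lists of rows, and the default choice of the reference maximum as the peak value are all assumptions made here.

```python
import math


def psnr(reference, estimate, peak=None):
    """Return the PSNR in dB between two equally sized 2-D images.

    Both images are lists of rows of real-valued magnitudes (assumption:
    a complex SAR image has already been converted to magnitude).
    """
    ref = [v for row in reference for v in row]
    est = [v for row in estimate for v in row]
    if len(ref) != len(est):
        raise ValueError("images must contain the same number of pixels")

    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(ref, est)) / len(ref)
    if mse == 0:
        return math.inf  # identical images

    # Assumption: when no peak is given, use the maximum of the reference.
    if peak is None:
        peak = max(ref)
    return 10.0 * math.log10(peak ** 2 / mse)


if __name__ == "__main__":
    reference = [[0.0, 1.0], [1.0, 0.0]]
    estimate = [[0.0, 0.9], [1.0, 0.1]]
    print(f"PSNR = {psnr(reference, estimate):.2f} dB")
```

A higher value indicates that the estimate is closer to the reference. The choice of peak value changes the absolute numbers, so values computed this way are comparable only when the same convention is used throughout.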

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | 2 | 2.5 | 3 | 3.5 | 4 |
|---|---|---|---|---|---|
| Burg | 30.39 | 29.08 | 28.17 | 27.72 | 27.65 |
| MCM | 30.88 | 29.01 | 28.23 | 27.58 | 27.44 |
| BP | 29.68 | 28.76 | 28.13 | 27.99 | 27.63 |
| BPDN | 29.71 | 28.76 | 28.13 | 27.99 | 27.63 |
| Proposed | 29.64 | 29.61 | 28.27 | 28.62 | 28.04 |

| | 2 | 2.5 | 3 | 3.5 | 4 |
|---|---|---|---|---|---|
| Burg | 0.68 | 0.62 | 0.51 | 0.46 | 0.44 |
| MCM | 0.71 | 0.63 | 0.54 | 0.44 | 0.42 |
| BP | 0.72 | 0.63 | 0.62 | 0.56 | 0.56 |
| BPDN | 0.72 | 0.64 | 0.62 | 0.56 | 0.56 |
| Proposed | 0.75 | 0.74 | 0.62 | 0.64 | 0.58 |

| | 2 | 2.5 | 3 | 3.5 | 4 |
|---|---|---|---|---|---|
| PSNR | | | | | |
| SSIM | | | | | |

| | 2 | 2.5 | 3 | 3.5 | 4 |
|---|---|---|---|---|---|
| Burg | 29.74 | 28.78 | 28.13 | 27.7 | 26.77 |
| MCM | 29.54 | 29.06 | 27.65 | 26.96 | 26.84 |
| BP | 27.69 | 28.12 | 26.14 | 26.45 | 26.82 |
| BPDN | 27.66 | 28.13 | 26.13 | 26.45 | 26.83 |
| Proposed | 28.93 | 28.17 | 28.36 | 28.6 | 26.86 |

| | 2 | 2.5 | 3 | 3.5 | 4 |
|---|---|---|---|---|---|
| Burg | 0.75 | 0.63 | 0.62 | 0.58 | 0.47 |
| MCM | 0.75 | 0.67 | 0.64 | 0.47 | 0.46 |
| BP | 0.71 | 0.68 | 0.64 | 0.61 | 0.56 |
| BPDN | 0.71 | 0.68 | 0.64 | 0.61 | 0.56 |
| Proposed | 0.75 | 0.74 | 0.74 | 0.72 | 0.69 |

| | 2 | 2.5 | 3 | 3.5 | 4 |
|---|---|---|---|---|---|
| Burg | 28.74 | 27.63 | 27.22 | 26.68 | 26.3 |
| MCM | 28.81 | 27.38 | 27.26 | 26.71 | 26.1 |
| BP | 28.76 | 27.77 | 27.21 | 26.96 | 26.31 |
| BPDN | 28.77 | 27.77 | 27.21 | 26.96 | 26.31 |
| Proposed | 29.42 | 28 | 28.32 | 27.7 | 27.2 |

| | 2 | 2.5 | 3 | 3.5 | 4 |
|---|---|---|---|---|---|
| Burg | 0.77 | 0.74 | 0.71 | 0.67 | 0.67 |
| MCM | 0.79 | 0.74 | 0.7 | 0.68 | 0.67 |
| BP | 0.8 | 0.78 | 0.75 | 0.74 | 0.71 |
| BPDN | 0.8 | 0.78 | 0.75 | 0.74 | 0.71 |
| Proposed | 0.8 | 0.79 | 0.76 | 0.77 | 0.77 |

| | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|
| Burg (s) | 0.15 | 0.22 | 0.29 | 0.39 | 0.48 |
| MCM (s) | 0.15 | 0.21 | 0.3 | 0.4 | 0.52 |
| BP (s) | 4.19 | 8.66 | 17.15 | 31.68 | 45.52 |
| BPDN (s) | 29.2 | 64.45 | 117.24 | 195.72 | 298.25 |
| Proposed (s) | 0.12 | 0.14 | 0.23 | 0.37 | 0.39 |

| | Algorithm | | | | | | |
|---|---|---|---|---|---|---|---|
| | PR | REF | Burg | MCM | BP | BPDN | Proposed |
| 4 | 9.74 | 8.82 | 7.64 | 7.94 | 7.63 | 7.62 | 7.13 |
| 7 | 9.74 | 8.82 | 7.03 | 7.75 | 6.51 | 6.52 | 6.69 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lee, S.-J.; Lee, S.-G. Efficient Super-Resolution Method for Targets Observed by Satellite SAR. Sensors 2023, 23, 5893. https://doi.org/10.3390/s23135893