CNN Hardware Accelerator for Real-Time Bearing Fault Diagnosis

Abstract

1. Introduction

- (1) This is a pioneering work that considers both the feasibility of circuit implementation and the real-time response capability in bearing fault diagnosis using current signals.

- (2) The signal used for diagnosis is the motor current rather than vibration. This saves the cost of a vibration sensor and suits bearings that are difficult to disassemble, so low hardware cost is achieved without losing much accuracy.

- (3) Complex data preprocessing procedures are removed without affecting accuracy.

- (4) The convolutional neural network (CNN) model is converted from the commonly used two-dimensional CNN to a one-dimensional CNN to further reduce the delay before computation can start. The circuit no longer waits for two rows of data before the first convolution; it only waits for kernel-sized data.

- (5) A new quaternary quantization method is proposed to replace the ternary quantization method, so that the CNN weights are represented more faithfully.

- (6) Accuracy is improved without increasing the number of bits that represent each weight, so the cost of the circuit implementation is reduced without losing much accuracy.

- (7) The procedure for realizing the hardware circuit is described.

- (8) The experimental results show that the proposed method achieves accuracy similar to that of previous works at a significantly lower hardware cost.
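
The start-up delay argument in contribution (4) can be illustrated with a small sketch. It assumes samples stream in row-major order and that no padding is used; the 80 × 80 image and the 3 × 3 kernel are hypothetical values chosen for illustration, while the 1 × 7 kernel matches the layer table later in this article.

```python
def samples_before_first_output_2d(row_width: int, kernel_h: int, kernel_w: int) -> int:
    """Samples that must arrive before the first 2-D window is complete.

    With row-major streaming, (kernel_h - 1) full rows plus kernel_w samples
    of the next row are needed.
    """
    return (kernel_h - 1) * row_width + kernel_w


def samples_before_first_output_1d(kernel_w: int) -> int:
    """A 1-D convolution only needs one kernel-sized run of samples."""
    return kernel_w


# Hypothetical 2-D case: 80 x 80 image, 3 x 3 kernel (assumed values).
two_d = samples_before_first_output_2d(row_width=80, kernel_h=3, kernel_w=3)
# 1-D case with a kernel of width 7.
one_d = samples_before_first_output_1d(kernel_w=7)

print(f"2-D: wait for {two_d} samples, 1-D: wait for {one_d} samples")
```

Under these assumptions the 2-D layout waits for two full rows plus three samples, whereas the 1-D layout starts after seven samples.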

2. Related Work

3. Methodology

3.1. Overview of the Proposed Method

3.2. The Proposed CNN Architecture

3.2.1. Bearing Data Preprocessing

3.2.2. CNN Architecture and Quaternary Quantization

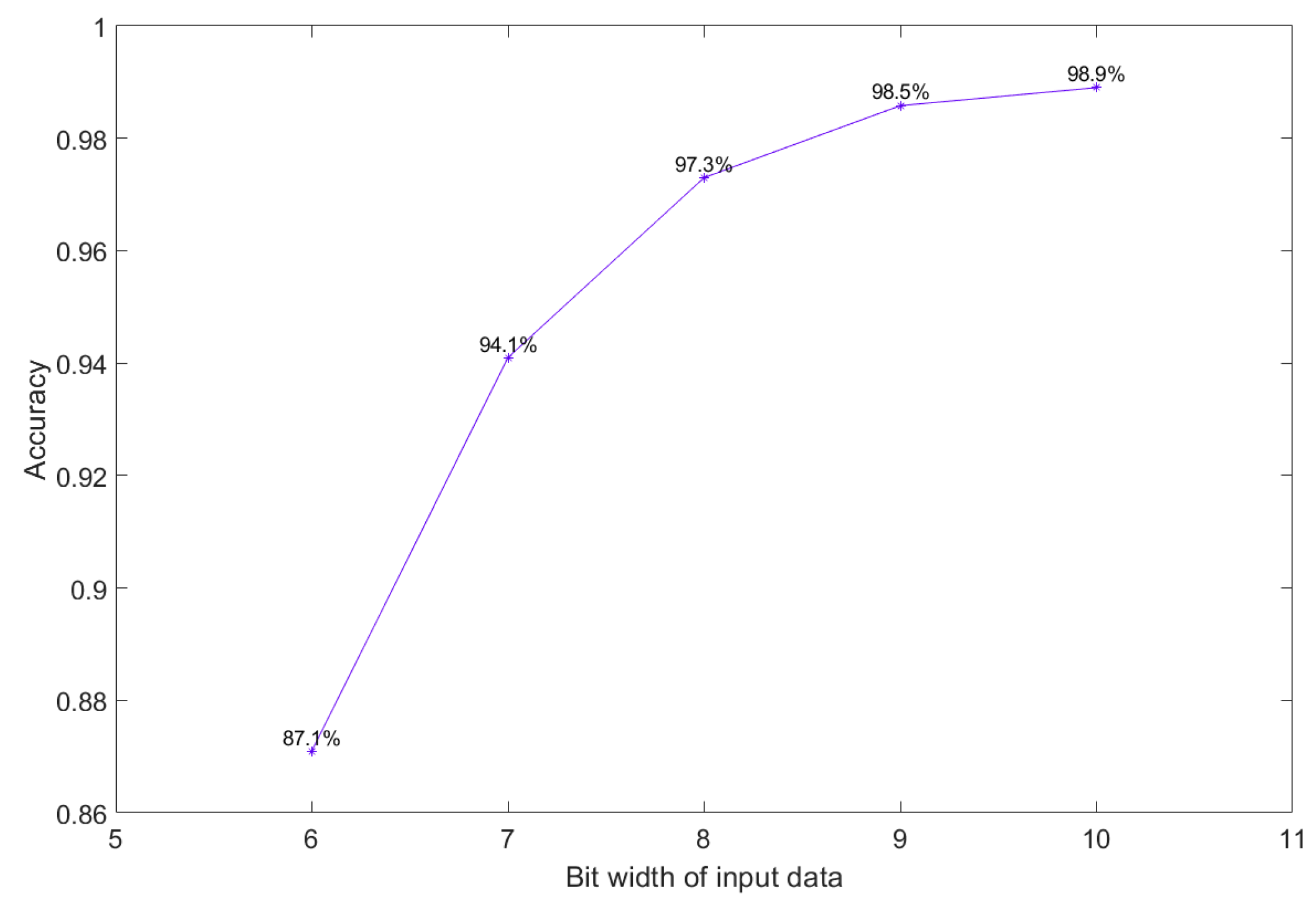

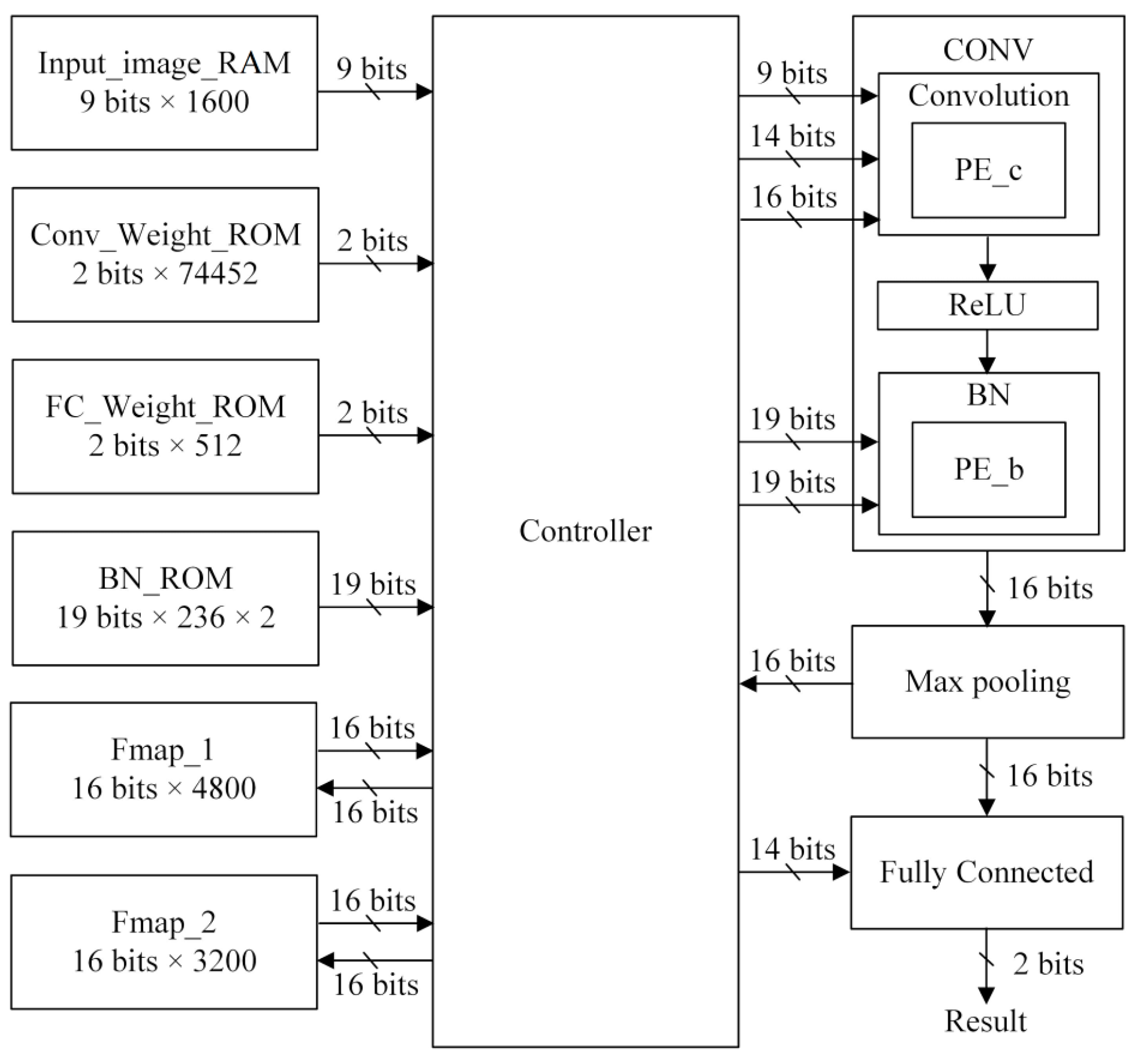

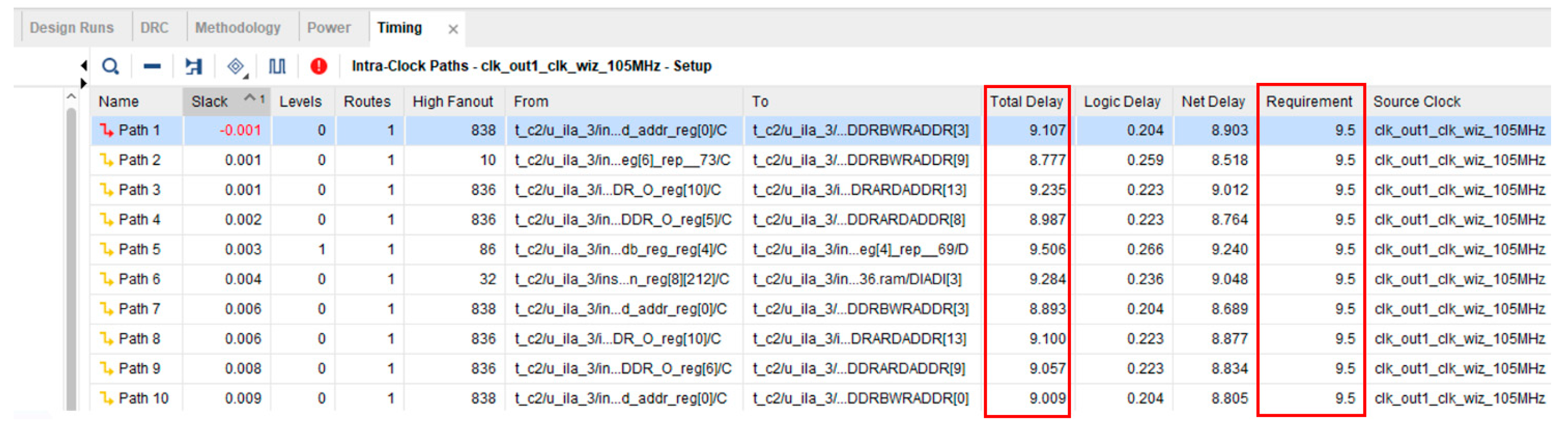

3.3. Hardware Implementation

4. Experimental Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Glossary

| Term | Definition |
|---|---|
| d | Ball diameter |
| D | Pitch diameter |
| Fi | Inner race ball-passing frequency |
| Fo | Outer race ball-passing frequency |
| | Rotary frequency |
| n | Number of rolling elements |
| | Quantization factors for negative weight |
| | Quantization factor for positive weight |
| | Quantized ternary weights |
| x | A variable for threshold adjustment in equations |
| | Bearing contact angle between the ball and the cage |
| β | Shift parameter for batch normalization |
| γ | Scale parameter for batch normalization |
| | Standard deviation of the weights in each layer |

References

- Hoang, D.T.; Kang, H.J. A motor current signal-based bearing fault diagnosis using deep learning and information fusion. IEEE Trans. Instrum. Meas. 2020, 69, 3325–3333.

- Lessmeier, C.; Kimotho, J.K.; Zimmer, D.; Sextro, W. Condition monitoring of bearing damage in electromechanical drive systems by using motor current signals of electric motors: A benchmark data set for data-driven classification. In Proceedings of the European Conference of the PHM Society, Bilbao, Spain, 5–8 July 2016; Volume 3.

- Qin, Y.; Wang, X.; Zou, J. The optimized deep belief networks with improved logistic sigmoid units and their application in fault diagnosis for planetary gearboxes of wind turbines. IEEE Trans. Ind. Electron. 2019, 66, 3814–3824.

- Zhu, C.; Chen, Z.; Zhao, R.; Wang, J.; Yan, R. Decoupled feature-temporal CNN: Explaining deep learning-based machine health monitoring. IEEE Trans. Instrum. Meas. 2021, 70, 3518313.

- Tan, Y.; Guo, L.; Gao, H.; Zhang, L. Deep coupled joint distribution adaptation network: A method for intelligent fault diagnosis between artificial and real damages. IEEE Trans. Instrum. Meas. 2021, 70, 3507212.

- Magar, R.; Ghule, L.; Li, J.; Zhao, Y.; Farimani, A.B. FaultNet: A deep convolutional neural network for bearing fault classification. IEEE Access 2021, 9, 25189–25199.

- Zhang, S.; Ye, F.; Wang, B.; Habetler, T.G. Few-shot bearing anomaly detection via model-agnostic meta-learning. In Proceedings of the International Conference on Electrical Machines and Systems (ICEMS), Hamamatsu, Japan, 24–27 November 2020; pp. 1341–1346.

- Xu, G.; Liu, M.; Jiang, Z.; Shen, W.; Huang, C. Online Fault Diagnosis Method Based on Transfer Convolutional Neural Networks. IEEE Trans. Instrum. Meas. 2020, 69, 509–520.

- Tallon-Ballesteros, A.J. Edge analytics for bearing fault diagnosis based on convolution neural network. In Fuzzy Systems and Data Mining VII; IOS Press BV: Amsterdam, The Netherlands, 2021; Volume 340, pp. 94–103.

- Zmarzły, P. Influence of Bearing Raceway Surface Topography on the Level of Generated Vibration as an Example of Operational Heredity. Indian J. Eng. Mater. Sci. 2020, 27, 356–364.

- Paderborn University Bearing Data Center Website. Available online: https://mb.uni-paderborn.de/kat/forschung/kat-datacenter/bearing-datacenter (accessed on 10 June 2023).

- Riaz, N.; Shah, S.I.A.; Rehman, F.; Gilani, S.O.; Udin, E. A novel 2-D current signal-based residual learning with optimized softmax to identify faults in ball screw actuators. IEEE Access 2020, 8, 115299–115313.

- Wagner, T.; Sommer, S. Bearing fault detection using deep neural network and weighted ensemble learning for multiple motor phase current sources. In Proceedings of the International Conference on Innovations in Intelligent Systems and Applications (INISTA), Novi Sad, Serbia, 24–26 August 2020; pp. 1–7.

- Liu, Y.; Yan, X.; Zhang, C.; Liu, W. An ensemble convolutional neural networks for bearing fault diagnosis using multi-sensor data. Sensors 2019, 19, 5300.

- Karpat, F.; Kalay, O.C.; Dirik, A.E.; Doğan, O.; Korcuklu, B.; Yüce, C. Convolutional neural networks based rolling bearing fault classification under variable operating conditions. In Proceedings of the IEEE International Symposium on Innovations in Intelligent Systems and Applications (INISTA), Kocaeli, Turkey, 25–27 August 2021; pp. 1–6.

- Sabir, R.; Rosato, D.; Hartmann, S.; Gühmann, C. LSTM based bearing fault diagnosis of electrical machines using motor current signal. In Proceedings of the IEEE International Conference on Machine Learning and Applications (ICMLA), Boca Raton, FL, USA, 16–19 December 2019; pp. 613–618.

- Zhang, S.; Zhang, S.; Wang, B.; Habetler, T.G. Deep learning algorithms for bearing fault diagnostics: A comprehensive review. IEEE Access 2020, 8, 29857–29881.

- Abid, F.B.; Zgarni, S.; Braham, A. Bearing fault detection of induction motor using SWPT and DAG support vector machines. In Proceedings of the IECON 2016 42nd Annual Conference of the IEEE Industrial Electronics Society, Florence, Italy, 24–27 October 2016; pp. 1476–1481.

- Wen, L.; Li, X.; Gao, L.; Zhang, Y. A new convolutional neural network-based data-driven fault diagnosis method. IEEE Trans. Ind. Electron. 2018, 65, 5990–5998.

- Hou, L.; Jiang, R.; Tan, Y.; Zhang, J. Input feature mappings-based deep residual networks for fault diagnosis of rolling element bearing with complicated dataset. IEEE Access 2020, 8, 180967–180976.

- Guo, Q.; Li, Y.; Song, Y.; Wang, D.; Chen, W. Intelligent fault diagnosis method based on full 1-D convolutional generative adversarial network. IEEE Trans. Ind. Inform. 2020, 16, 2044–2053.

- Wang, Y.; Ding, X.; Zeng, Q.; Wang, L.; Shao, Y. Intelligent rolling bearing fault diagnosis via vision ConvNet. IEEE Sens. J. 2021, 21, 6600–6609.

- Fang, H.; Deng, J.; Zhao, B.; Shi, Y.; Zhou, J.; Shao, S. LEFE-Net: A lightweight efficient feature extraction network with strong robustness for bearing fault diagnosis. IEEE Trans. Instrum. Meas. 2021, 70, 3513311.

- Zhao, Z.; Li, T.; Wu, J.; Sun, C.; Wang, S.; Yan, R.; Chen, X. Deep learning algorithms for rotating machinery intelligent diagnosis: An open source benchmark study. ISA Trans. 2020, 107, 224–255.

- Randall, R.B.; Antoni, J. Rolling element bearing diagnostics: A tutorial. Mech. Syst. Signal Process. 2011, 25, 485–520.

- Courbariaux, M.; Hubara, I.; Soudry, D.; El-Yaniv, R.; Bengio, Y. Binarized neural networks: Training neural networks with weights and activations constrained to +1 or −1. arXiv 2016, arXiv:1602.02830v3.

- Li, F.; Zhang, B.; Liu, B. Ternary weight networks. arXiv 2016, arXiv:1605.04711v2.

- Zhu, C.; Han, S.; Mao, H.; Dally, W.J. Trained ternary quantization. arXiv 2016, arXiv:1612.01064v3.

- Betta, G.; Liguori, C.; Paolillo, A.; Pietrosanto, A. A DSP-based FFT-analyzer for the fault diagnosis of rotating machine based on vibration analysis. IEEE Trans. Instrum. Meas. 2002, 51, 1316–1322.

- Goel, A.; Aghajanzadeh, S.; Tung, C.; Chen, S.-H.; Thiruvathukal, G.K.; Lu, Y.-H. Modular neural networks for low-power image classification on embedded devices. ACM Trans. Des. Autom. Electron. Syst. (TODAES) 2020, 26, 1–35.

| Hyperparameter | Setting |

|---|---|

| Epoch | 300 |

| Batch size | 128 |

| Learning rate | 0.001 |

| Label | Fault Type | Data Amount |

|---|---|---|

| 0 | Healthy | 6 |

| 1 | Outer Race Fault (OR) | 12 |

| 2 | Inner Race Fault (IR) | 9 |

| 3 | Combined Outer and Inner Race Fault (CR) | 5 |

| Ball-Passing Frequency | 900 rpm | 1500 rpm |

|---|---|---|

| Fo | 45.7 Hz (21 ms) | 74.1 Hz (13 ms) |

| Fi | 76.3 Hz (13 ms) | 123.6 Hz (8 ms) |
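
For reference, ball-passing frequencies such as those tabulated above are conventionally related to the bearing geometry by the standard kinematic relations for rolling-element bearings. The block below is a sketch of those textbook relations, not a statement of how the tabulated values were obtained. The symbols $F_r$ (rotary frequency, i.e., rpm/60) and $\alpha$ (contact angle) are notation assumed here; $n$, $d$, and $D$ follow the Glossary.

```latex
F_o = \frac{n}{2}\,F_r\left(1 - \frac{d}{D}\cos\alpha\right),
\qquad
F_i = \frac{n}{2}\,F_r\left(1 + \frac{d}{D}\cos\alpha\right),
\qquad
F_o + F_i = n\,F_r
```

The times in parentheses in the table are the corresponding periods, $1/F_o$ and $1/F_i$.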

| Image Size | The Observation Time of One Image | Accuracy |

|---|---|---|

| 1 × 625 | 9.7 ms | 87.6% |

| 1 × 1024 | 16 ms | 93.1% |

| 1 × 1600 | 25 ms | 96.5% |

| 1 × 2500 | 39 ms | 96.3% |

| 1 × 3600 | 56 ms | 95.7% |
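
The observation times in the table above are consistent with a fixed sampling rate, and they can be compared with the ball-passing periods listed earlier. The sketch below assumes a sampling rate of 64 kHz, which is inferred from the table itself (1600 samples in 25 ms) rather than stated in this section; the function names are illustrative.

```python
SAMPLING_RATE_HZ = 64_000  # assumption: inferred from 1600 samples in 25 ms


def observation_time_ms(num_samples: int, fs_hz: float = SAMPLING_RATE_HZ) -> float:
    """Duration covered by one 1 x num_samples input, in milliseconds."""
    return 1000.0 * num_samples / fs_hz


def period_ms(frequency_hz: float) -> float:
    """Period of one fault impulse, in milliseconds."""
    return 1000.0 / frequency_hz


# Ball-passing frequencies from the table (Fo, Fi at 900 rpm and 1500 rpm).
fault_freqs_hz = {
    "Fo @ 900 rpm": 45.7,
    "Fi @ 900 rpm": 76.3,
    "Fo @ 1500 rpm": 74.1,
    "Fi @ 1500 rpm": 123.6,
}
longest_period_ms = max(period_ms(f) for f in fault_freqs_hz.values())

for n in (625, 1024, 1600, 2500, 3600):
    t = observation_time_ms(n)
    covers = t >= longest_period_ms
    print(f"1 x {n}: {t:.2f} ms, covers longest fault period: {covers}")
```

Under this assumption, 1 × 1600 is the smallest listed size whose window spans the longest ball-passing period (about 21.9 ms for Fo at 900 rpm), which is consistent with the accuracy levelling off from that size onward.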

| Layer | Kernel Size | Input Image Size | Output Image Size |
|---|---|---|---|
| Conv1 | 1 × 7 × 12 | 1 × 1600 | 1 × 1600 × 12 |
| Max pooling | - | 1 × 1600 × 12 | 1 × 400 × 12 |
| Conv2 | 1 × 7 × 32 | 1 × 400 × 12 | 1 × 400 × 32 |
| Max pooling | - | 1 × 400 × 32 | 1 × 100 × 32 |
| Conv3 | 1 × 7 × 64 | 1 × 100 × 32 | 1 × 100 × 64 |
| Max pooling | - | 1 × 100 × 64 | 1 × 25 × 64 |
| Conv4 | 1 × 7 × 64 | 1 × 25 × 64 | 1 × 25 × 64 |
| Max pooling | - | 1 × 25 × 64 | 1 × 7 × 64 |
| Conv5 | 1 × 7 × 64 | 1 × 7 × 64 | 1 × 7 × 64 |
| Max pooling | - | 1 × 7 × 64 | 1 × 2 × 64 |
| FC | - | 1 × 128 | 1 × 4 |
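
The layer sizes in the table above can be traced with a few lines of arithmetic. The sketch assumes length-preserving ("same") convolutions and a pooling window of 4 with ceiling rounding; both are inferred from the listed sizes (for example 25 → 7) and are not stated in this section.

```python
import math

CONV_CHANNELS = [12, 32, 64, 64, 64]  # output channels of Conv1..Conv5 (from the table)
POOL_SIZE = 4                         # assumption: inferred from 1600 -> 400


def trace_shapes(length: int = 1600, channels=CONV_CHANNELS, pool: int = POOL_SIZE):
    """Return per-layer (name, length, channels) tuples and the flattened FC input size."""
    shapes = []
    for i, ch in enumerate(channels, start=1):
        # Assumed 'same' padding: the convolution keeps the signal length.
        shapes.append((f"Conv{i}", length, ch))
        # Assumed ceiling rounding so that 25 -> 7 and 7 -> 2, as in the table.
        length = math.ceil(length / pool)
        shapes.append((f"Pool{i}", length, ch))
    return shapes, length * channels[-1]


shapes, fc_inputs = trace_shapes()
for name, length, ch in shapes:
    print(f"{name}: 1 x {length} x {ch}")
print(f"FC input: 1 x {fc_inputs}")
```

The final pooled map of 1 × 2 × 64 flattens to the 1 × 128 input of the fully connected layer.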

| Quantization Method | Weight | Threshold | Accuracy |

|---|---|---|---|

| None | Floating-point | - | 99.3% |

| Binary [26] | {−1, 1} | 0 | 74.2% |

| Ternary [27] | {−1, 0, 1} | ±0.05 | 83.6% |

| Ternary Floating [28] | {−Wn, 0, Wp} | ±0.05 | 90.8% |
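
The two ternary rows in the table above differ only in the values assigned to the non-zero levels. A minimal sketch of threshold-based ternarization follows, using the fixed ±0.05 threshold listed in the table; the function name and the scale values 0.8 and 1.2 are hypothetical and only illustrate the {−Wn, 0, Wp} form.

```python
def ternarize(weights, threshold=0.05, w_neg=1.0, w_pos=1.0):
    """Map each weight to -w_neg, 0, or +w_pos using a symmetric threshold.

    With w_neg = w_pos = 1 this yields the {-1, 0, 1} levels; other values
    give the scaled {-Wn, 0, Wp} form.
    """
    quantized = []
    for w in weights:
        if w > threshold:
            quantized.append(w_pos)
        elif w < -threshold:
            quantized.append(-w_neg)
        else:
            quantized.append(0.0)
    return quantized


weights = [-0.20, -0.03, 0.00, 0.04, 0.30]
plain = ternarize(weights)                          # levels {-1, 0, 1}
scaled = ternarize(weights, w_neg=0.8, w_pos=1.2)   # hypothetical Wn, Wp
print(plain)
print(scaled)
```

Three levels already require two bits per weight, and two bits can encode four distinct codes, so a fourth level can be stored without widening the weight word.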

| Mean | Test 1 | Test 2 | Test 3 | Test 4 |

|---|---|---|---|---|

| Conv1 weight | 0.066 | −0.056 | −0.121 | −0.041 |

| Conv2 weight | −0.064 | 0.034 | −0.034 | −0.094 |

| Conv3 weight | −0.056 | −0.078 | −0.124 | 0.007 |

| Conv4 weight | −0.111 | −0.115 | −0.012 | −0.178 |

| Conv5 weight | −0.096 | 0.111 | −0.078 | 0.074 |

| Version | C1 Output Channels | C2 Output Channels | C3 Output Channels | C4 Output Channels | C5 Output Channels | Param # | Accuracy |
|---|---|---|---|---|---|---|---|
| 1 | 8 | 32 | 32 | 64 | 64 | 52 K | 88.6% |
| 2 | 8 | 32 | 32 | 64 | 128 | 82 K | 91.8% |
| 3 | 12 | 16 | 32 | 64 | 128 | 78 K | 93.7% |
| 4 | 12 | 16 | 64 | 64 | 64 | 66 K | 96.4% |
| 5 | 12 | 32 | 32 | 64 | 128 | 83 K | 97.6% |
| 6 | 12 | 32 | 64 | 64 | 64 | 75 K | 98.5% |
| 7 | 16 | 32 | 64 | 64 | 64 | 76 K | 98.7% |

| Kernel Table Total Bits | Integer Bits | Decimal Bits | Accuracy |
|---|---|---|---|
| 11 | 5 | 6 | 88.71% |
| 12 | 5 | 7 | 93.2% |
| 13 | 5 | 8 | 97.48% |
| 14 | 5 | 9 | 98.08% |
| 15 | 5 | 10 | 98.25% |

| Feature Map Value Total Bits | Integer Bits | Decimal Bits | Accuracy |
|---|---|---|---|
| 13 | 8 | 5 | 92.1% |
| 14 | 8 | 6 | 95.22% |
| 15 | 8 | 7 | 96.62% |
| 16 | 8 | 8 | 97.35% |
| 17 | 8 | 9 | 97.56% |
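
The integer/decimal bit splits in the two tables above describe fixed-point formats. The sketch below shows one common way to convert a value to such a format; it assumes signed two's-complement words with the sign bit counted among the integer bits, round-to-nearest, and saturation on overflow. These conventions are assumptions, not details taken from this section.

```python
def to_fixed_point(value: float, int_bits: int, frac_bits: int) -> float:
    """Quantize value to a signed fixed-point grid and return it as a float.

    Assumes two's complement with the sign bit included in int_bits,
    rounding to the nearest code, and saturation at the range limits.
    """
    scale = 1 << frac_bits
    total_bits = int_bits + frac_bits
    max_code = (1 << (total_bits - 1)) - 1
    min_code = -(1 << (total_bits - 1))
    code = round(value * scale)
    code = max(min_code, min(max_code, code))  # saturate instead of wrapping
    return code / scale


# 14-bit kernel-table format: 5 integer bits, 9 decimal bits.
print(to_fixed_point(0.3, int_bits=5, frac_bits=9))
# 16-bit feature-map format: 8 integer bits, 8 decimal bits.
print(to_fixed_point(3.14159, int_bits=8, frac_bits=8))
# Values outside the representable range saturate.
print(to_fixed_point(100.0, int_bits=5, frac_bits=9))
```

Each extra decimal bit halves the rounding step, which matches the diminishing accuracy gains seen toward the bottom of both tables.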

| Memory Type | Memory | Total Bits before the Fixed Point | Total Bits after the Fixed Point | Reduction Ratio |
|---|---|---|---|---|
| ROM | Conv_Weight | 2,382,464 | 148,904 | 93.75% |
| ROM | FC_Weight | 16,384 | 1024 | 93.75% |
| ROM | BN | 15,104 | 8968 | 40.62% |
| RAM | Fmap_1 | 153,600 | 76,800 | 50% |
| RAM | Fmap_2 | 102,400 | 51,200 | 50% |
| The sum of all bits | | 2,669,952 | 286,896 | 89.25% |
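
The bit counts in the table above can be reproduced from the layer table under a few assumptions: 32-bit values before conversion, 2-bit weight codes after conversion (inferred from the 93.75% ratio), 19-bit batch-normalization parameters and 16-bit feature-map values (as listed in the stage-by-stage accuracy table), two BN parameters per output channel, and feature-map buffers sized for the pooled outputs of Conv1 and Conv2. These are inferences that happen to match the totals, not details stated in this section.

```python
KERNEL = 7
CHANNELS = [1, 12, 32, 64, 64, 64]  # input channel followed by Conv1..Conv5 outputs
FC_IN, FC_OUT = 128, 4

element_counts = {
    "Conv_Weight": sum(KERNEL * cin * cout for cin, cout in zip(CHANNELS, CHANNELS[1:])),
    "FC_Weight": FC_IN * FC_OUT,
    "BN": 2 * sum(CHANNELS[1:]),  # assumed: one scale and one shift per channel
    "Fmap_1": 400 * 12,           # assumed: pooled Conv1 output
    "Fmap_2": 100 * 32,           # assumed: pooled Conv2 output
}

BITS_BEFORE = 32  # assumption: 32-bit values before fixed-point conversion
BITS_AFTER = {"Conv_Weight": 2, "FC_Weight": 2, "BN": 19, "Fmap_1": 16, "Fmap_2": 16}

before = {name: n * BITS_BEFORE for name, n in element_counts.items()}
after = {name: n * BITS_AFTER[name] for name, n in element_counts.items()}

for name in element_counts:
    ratio = 100.0 * (1 - after[name] / before[name])
    print(f"{name}: {before[name]:,} -> {after[name]:,} bits ({ratio:.2f}% reduction)")

total_before, total_after = sum(before.values()), sum(after.values())
total_ratio = 100.0 * (1 - total_after / total_before)
print(f"Total: {total_before:,} -> {total_after:,} bits ({total_ratio:.2f}% reduction)")
```

The weights dominate the original footprint, so the 2-bit weight codes account for most of the overall saving.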

| Fault Type | Label | Python | RTL |

|---|---|---|---|

| Healthy | 0 | 97.49% | 97.97% |

| OR Fault | 1 | 99.79% | 99.27% |

| IR Fault | 2 | 95.69% | 95.28% |

| CR Fault | 3 | 96.39% | 96.86% |

| Total | - | 97.34% | 97.53% |

| [1] IEEE Trans. Meas. ‘20 | [12] IEEE Access ‘20 | [13] INISTA ‘20 | [14] Sensors ‘19 | Proposed Work | ||

|---|---|---|---|---|---|---|

| Dataset | PU | PU | PU | PU | PU | |

| Signal type | Vibration | Current | Current | Current | Current | Current |

| Architecture | 2-D CNN + MLP | 2-D Residual CNN | 1-D CNN + LSTM + KNN | 1-D CNN + SVM | 1-D CNN | |

| Data pre-processing | Gray image | Normalize + overlap + gray image | None | Overlap + FFT + normalize | Reduce excess bits + overlap | |

| Type of classification | Fault location (3 types without IR + OR combined fault) | Fault location (3 types without IR + OR combined fault) | Fault location (3 types without IR + OR combined fault) | Fault location (3 types without IR + OR combined fault) | Fault location (4 types) | |

| Image size | 80 × 80 | 224 × 224 | 1 × 6400 | N/A | 1 × 1600 | |

| Accuracy | 99.4% | 98.3% | 98.7% | 88.8~98.93% | 98.17% | 98.58% |

| Memory Name | 18 Kbits RAM | 36 Kbits RAM | Total Kbits |

|---|---|---|---|

| Input_image_ROM | 1 | 0 | 18 |

| Conv_Weight_ROM | 0 | 5 | 180 |

| FC_Weight_ROM | 1 | 0 | 18 |

| BN_ROM | 1 | 0 | 18 |

| Fmap_1 | 1 | 2 | 90 |

| Fmap_2 | 0 | 2 | 72 |

| Total | 4 | 9 | 396 |

| Number of Correct | Label 0 | Label 1 | Label 2 | Label 3 |

|---|---|---|---|---|

| Test set 1 | 642 | 652 | 625 | 637 |

| Test set 2 | 644 | 644 | 615 | 634 |

| Test set 3 | 637 | 643 | 614 | 632 |

| Test set 4 | 633 | 647 | 622 | 641 |

| Test set 5 | 623 | 648 | 619 | 635 |

| Test set 6 | 631 | 643 | 623 | 624 |

| Test set 7 | 628 | 640 | 627 | 616 |

| Test set 8 | 630 | 652 | 605 | 627 |

| Test set 9 | 615 | 645 | 632 | 630 |

| Test set 10 | 627 | 638 | 621 | 636 |

| Each Stage Operation | Accuracy |

|---|---|

| Input data fixed-point training (9 bits) | 98.58% |

| BN parameters fixed-point (19 bits) | 98.3% |

| Weight value fixed-point (14 bits) | 98.08% |

| Feature map fixed-point (16 bits) | 97.34% |

| RTL code | 97.53% |

| FPGA implementation | 96.37% |

| Layer | # Cycles |
|---|---|
| Layer 1 | 134,489 |
| Layer 2 | 1,077,519 |
| Layer 3 | 1,445,905 |
| Layer 4 | 741,393 |
| Layer 5 + FC | 225,297 |
| Total | 3,624,603 |
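
The cycle counts above translate into inference latency once a clock frequency is fixed. The sketch below checks the total and converts it to time; the 100 MHz clock is a hypothetical value used only for illustration, since no clock frequency is given in this section.

```python
CYCLES_PER_LAYER = {
    "Layer 1": 134_489,
    "Layer 2": 1_077_519,
    "Layer 3": 1_445_905,
    "Layer 4": 741_393,
    "Layer 5 + FC": 225_297,
}

total_cycles = sum(CYCLES_PER_LAYER.values())


def latency_ms(cycles: int, clock_hz: float) -> float:
    """Time needed to execute the given number of cycles, in milliseconds."""
    return 1000.0 * cycles / clock_hz


HYPOTHETICAL_CLOCK_HZ = 100e6  # assumption: illustrative value only

print(f"Total cycles: {total_cycles:,}")
for layer, cycles in CYCLES_PER_LAYER.items():
    share = 100.0 * cycles / total_cycles
    print(f"{layer}: {share:.1f}% of cycles")
print(f"Latency at 100 MHz: {latency_ms(total_cycles, HYPOTHETICAL_CLOCK_HZ):.2f} ms")
```

Layers 2 and 3 together account for roughly 70% of the cycles, so they are the natural place to look for further speed-up.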

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chung, C.-C.; Liang, Y.-P.; Jiang, H.-J. CNN Hardware Accelerator for Real-Time Bearing Fault Diagnosis. Sensors 2023, 23, 5897. https://doi.org/10.3390/s23135897
