Improved Robot Path Planning Method Based on Deep Reinforcement Learning

Abstract

1. Introduction

2. Related Work

2.1. Epsilon–Greedy

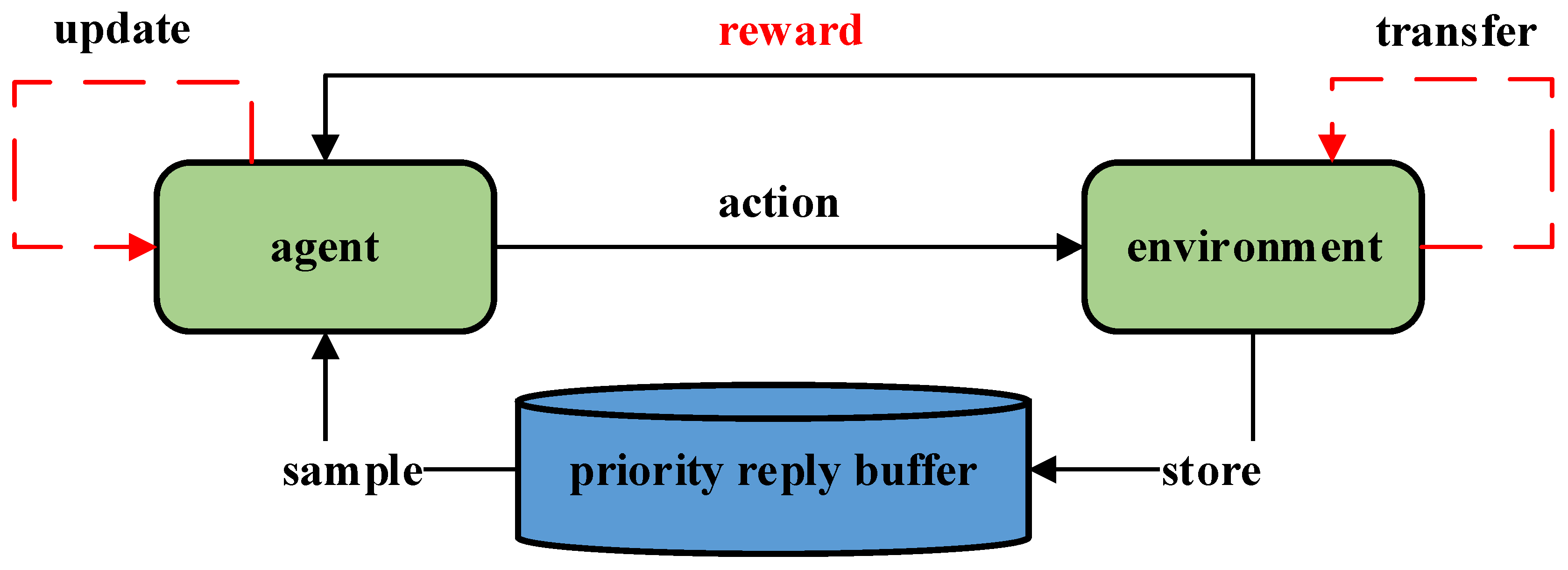

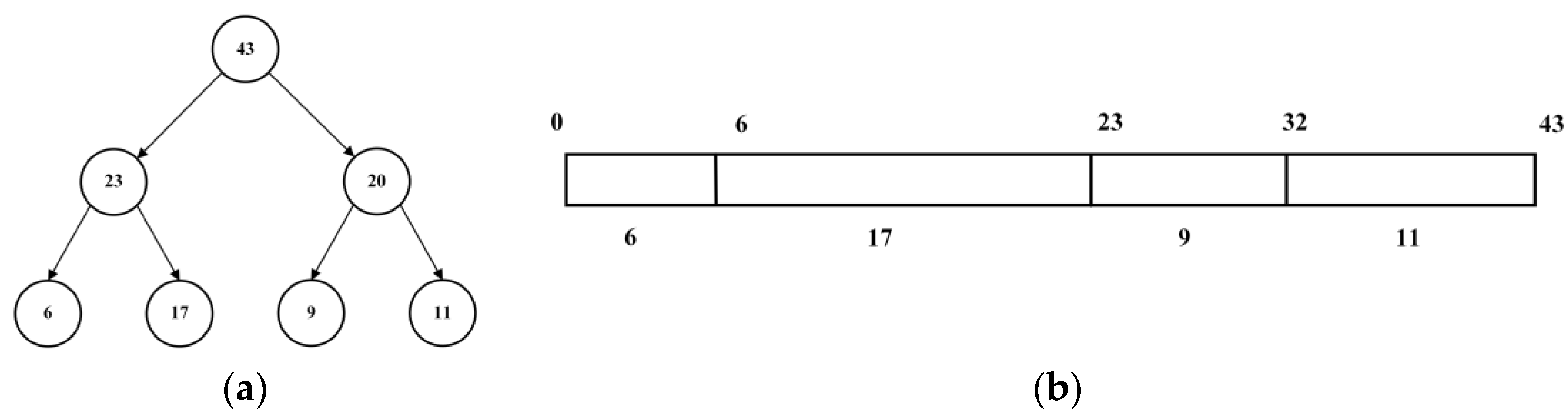
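
As a minimal sketch of the standard epsilon-greedy rule this subsection is named after (not the authors' implementation): with probability epsilon the agent takes a uniformly random action, otherwise it takes the action with the highest estimated Q-value. The function name `epsilon_greedy` and the list-based `q_values` argument are illustrative assumptions.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Return an action index chosen by the epsilon-greedy rule.

    q_values: list of estimated action values (hypothetical input format).
    epsilon:  exploration probability in [0, 1].
    """
    # Explore: uniformly random action with probability epsilon.
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    # Exploit: index of the largest Q-value (first one on ties).
    return max(range(len(q_values)), key=lambda a: q_values[a])
```

Setting `epsilon` to 0 makes the choice purely greedy, and setting it to 1 makes it purely random; in practice the value is usually decayed during training.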

2.2. Prioritized Experience Replay
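
The following is a hedged sketch of the proportional prioritization scheme described by Schaul et al. [21], written with plain Python lists. It is not the authors' code; the function names and the exponents `alpha` and `beta` as arguments are assumptions made for illustration.

```python
def per_probabilities(priorities, alpha):
    """Sampling probability of each transition: p_i^alpha / sum_k p_k^alpha."""
    scaled = [p ** alpha for p in priorities]
    total = sum(scaled)
    return [s / total for s in scaled]


def importance_weights(probs, beta):
    """Importance-sampling weights (N * P(i))^(-beta), scaled so the max is 1."""
    n = len(probs)
    raw = [(n * p) ** (-beta) for p in probs]
    largest = max(raw)
    return [w / largest for w in raw]
```

With `alpha = 0` every transition is sampled uniformly; larger values sample high-priority transitions more often, and the weights compensate for the resulting bias.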

2.3. Double DQN
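
For orientation, the Double DQN target of Van Hasselt et al. [18] can be sketched as follows: the online network selects the next action and the target network evaluates it. This is an illustrative sketch with list inputs, not the authors' implementation; the argument names are assumptions.

```python
def double_dqn_target(reward, next_q_online, next_q_target, gamma, done):
    """Compute the Double DQN bootstrap target for one transition.

    next_q_online: Q-values of the next state from the online network.
    next_q_target: Q-values of the next state from the target network.
    """
    # No bootstrapping from a terminal state.
    if done:
        return reward
    # Action selection uses the online network ...
    best = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    # ... while its value is read from the target network.
    return reward + gamma * next_q_target[best]
```

Decoupling selection from evaluation is what reduces the overestimation seen when a single network does both.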

- As the training environment becomes more complex, the number of parameters required by the model increases [3]. This can trigger the curse of dimensionality, which significantly increases the computational effort and ultimately extends the training time.

- Traditional algorithms do not solve the problem of sparse rewards in path planning [23]. This is one of the reasons why DRL training converges to a local optimum.

- Reinforcement learning is very sensitive to the initial values of the model being trained; poor initial values may lead to slow convergence or even to a local optimum [24].

3. Materials and Methods

3.1. Dimensional Discretization

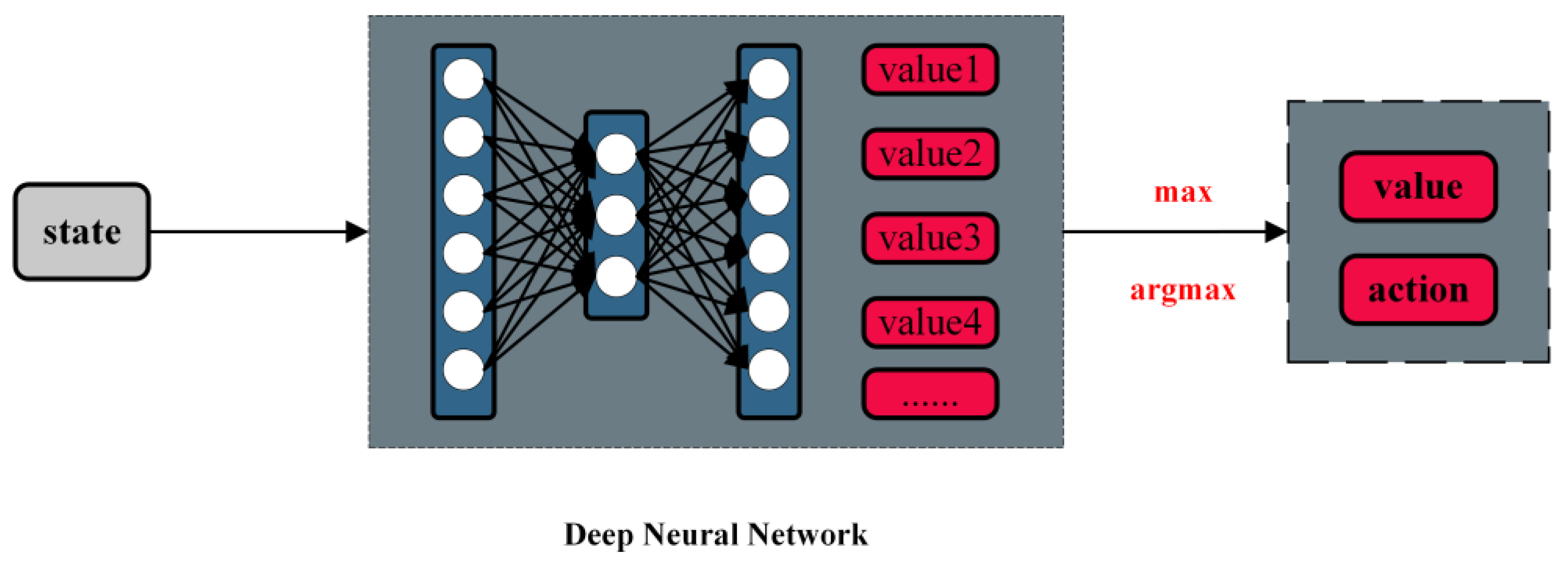

3.2. Two-Branch Network Structure

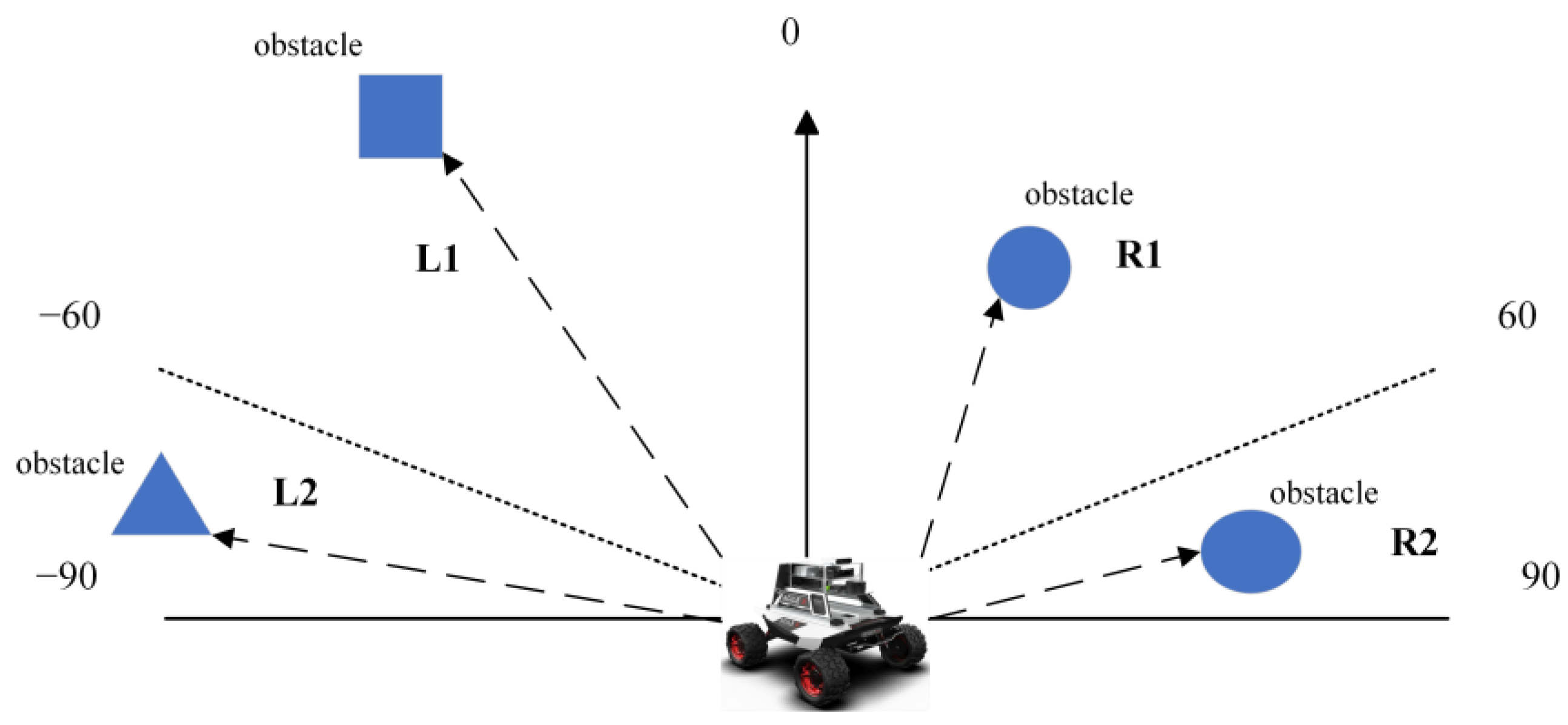

3.3. Expert Experience

3.4. Reward Function

4. Results

4.1. Experimental Procedure

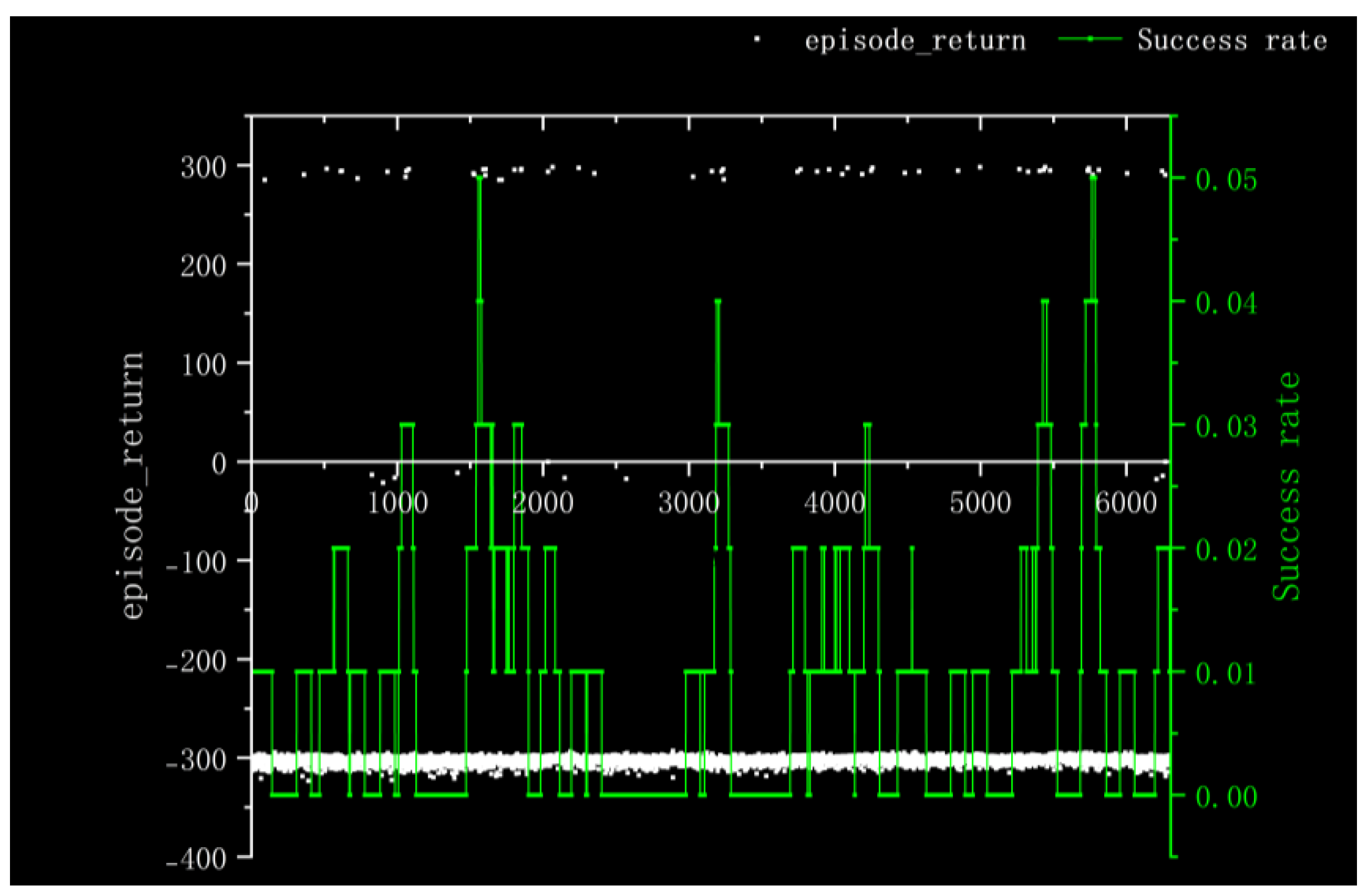

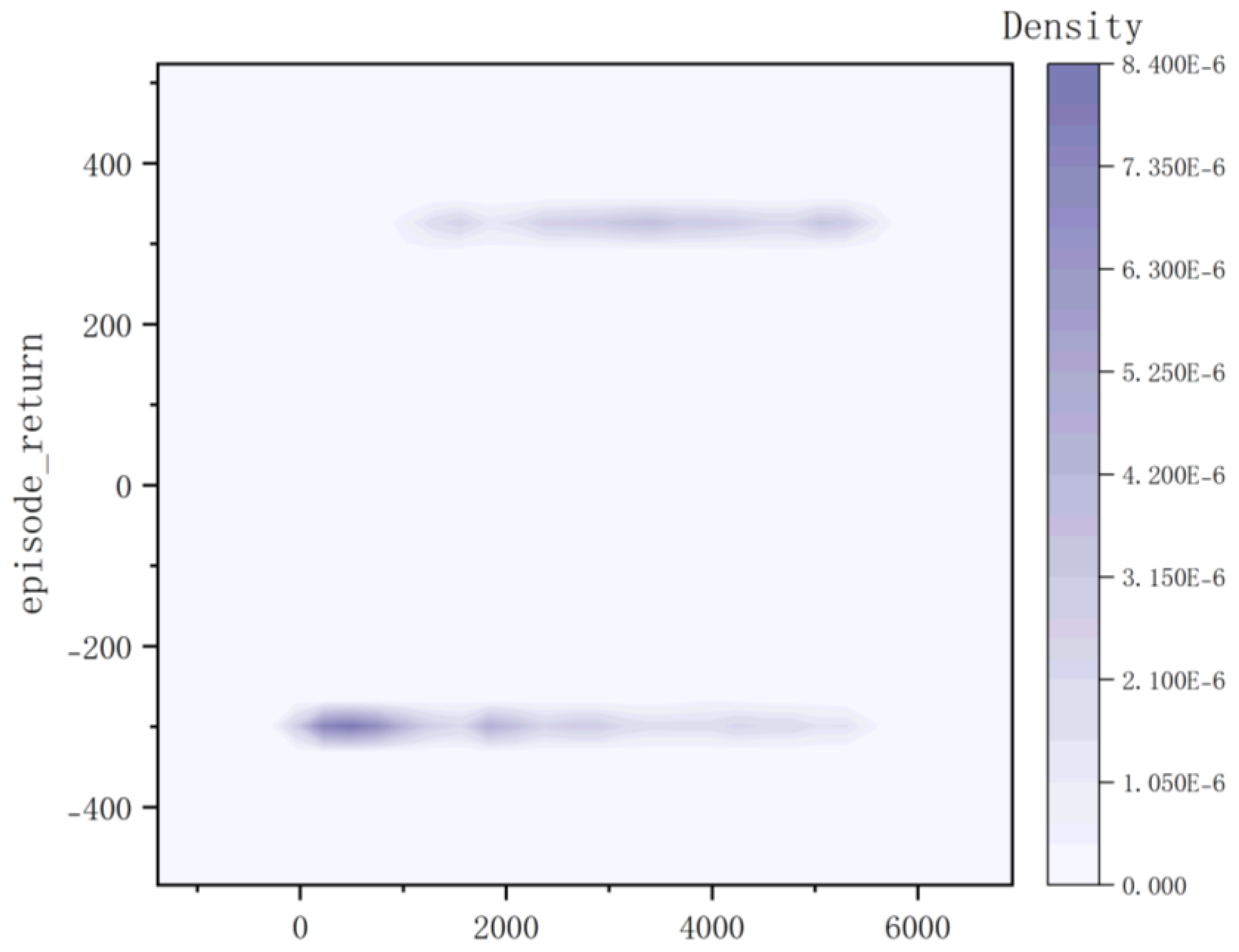

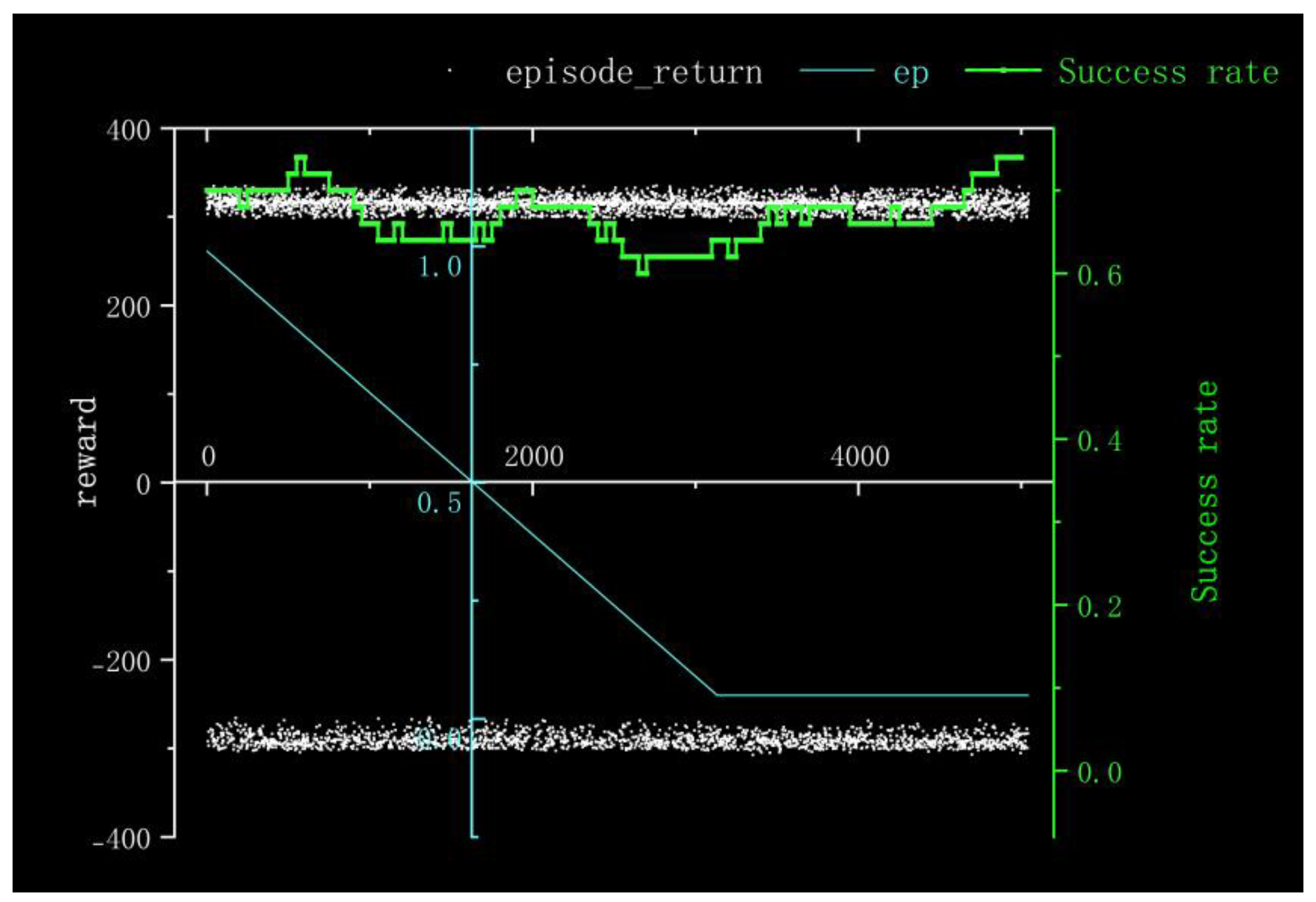

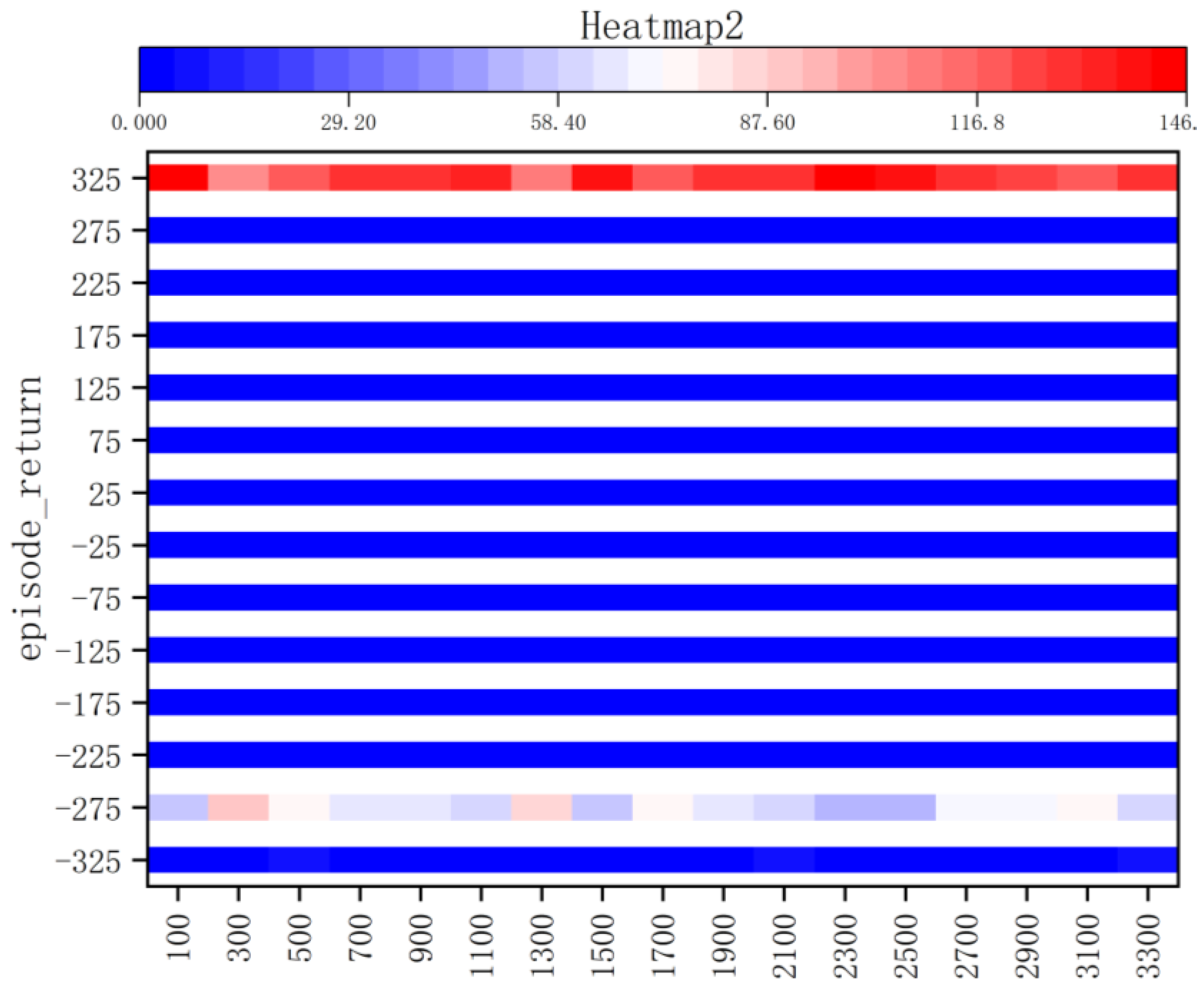

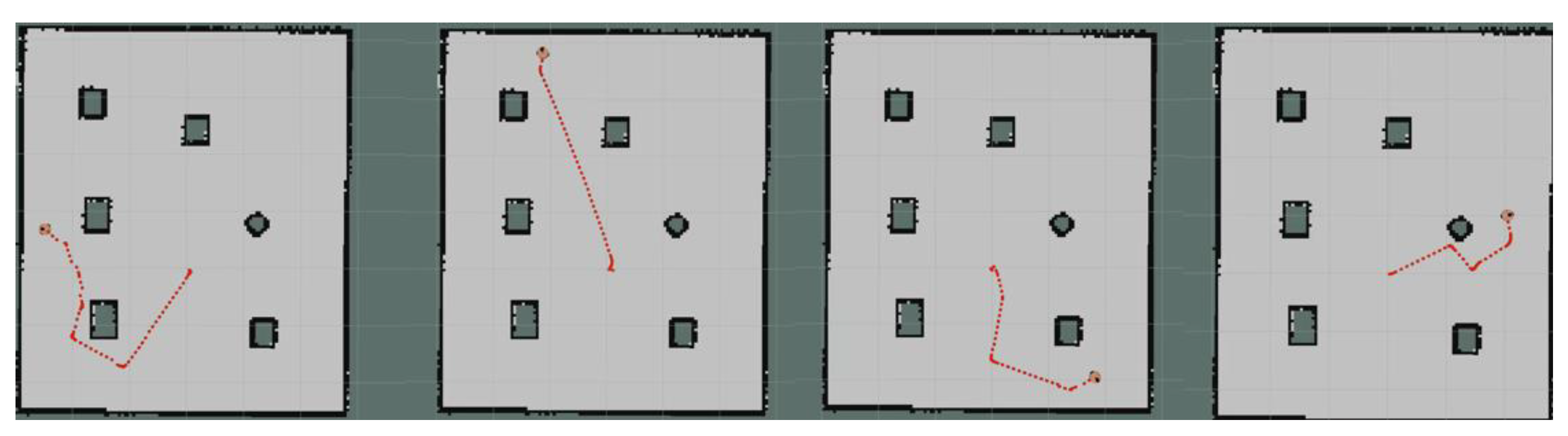

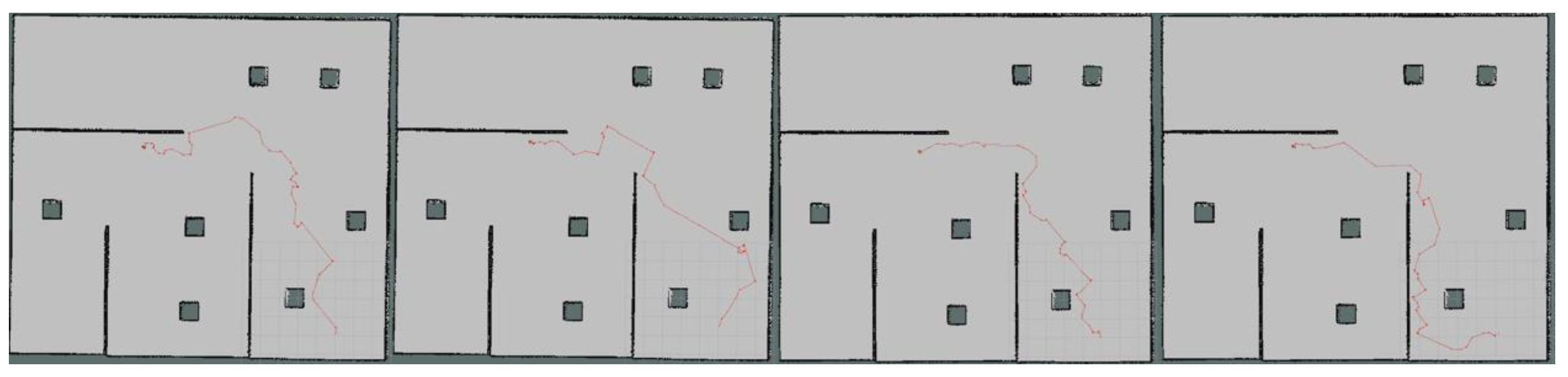

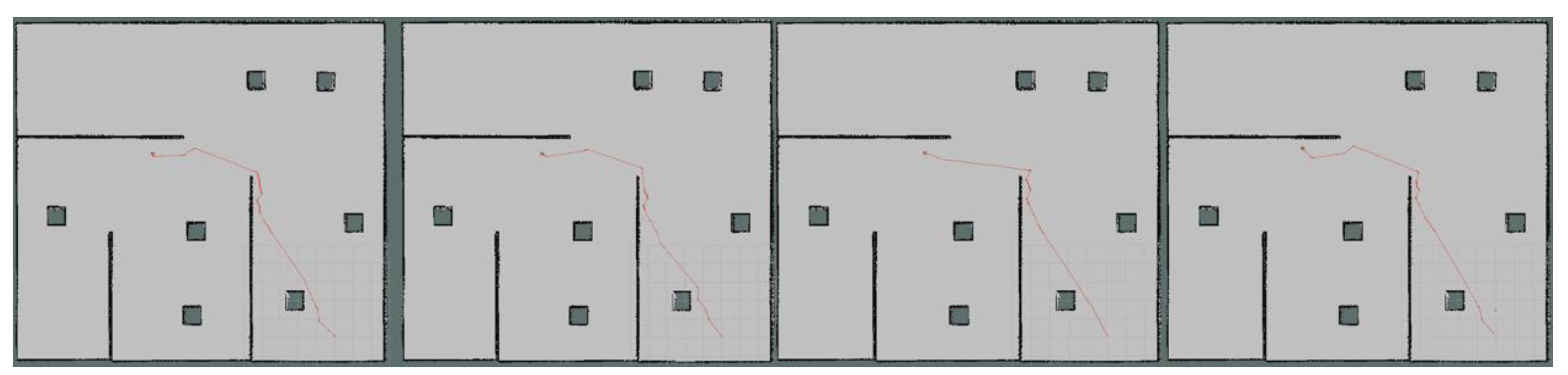

4.2. Simulation Experiments

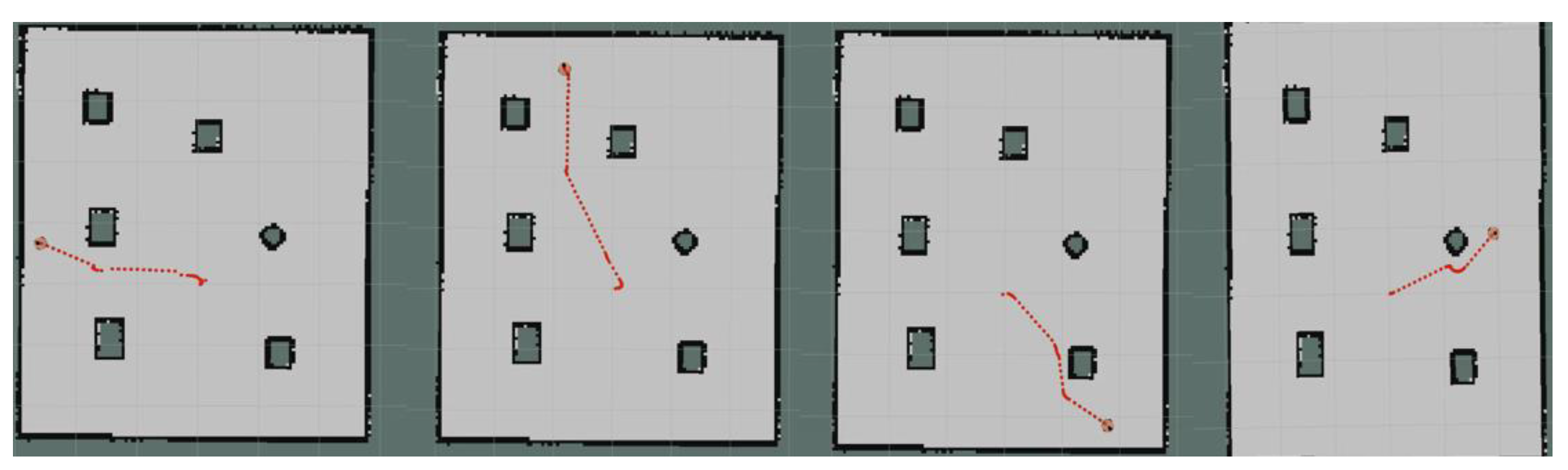

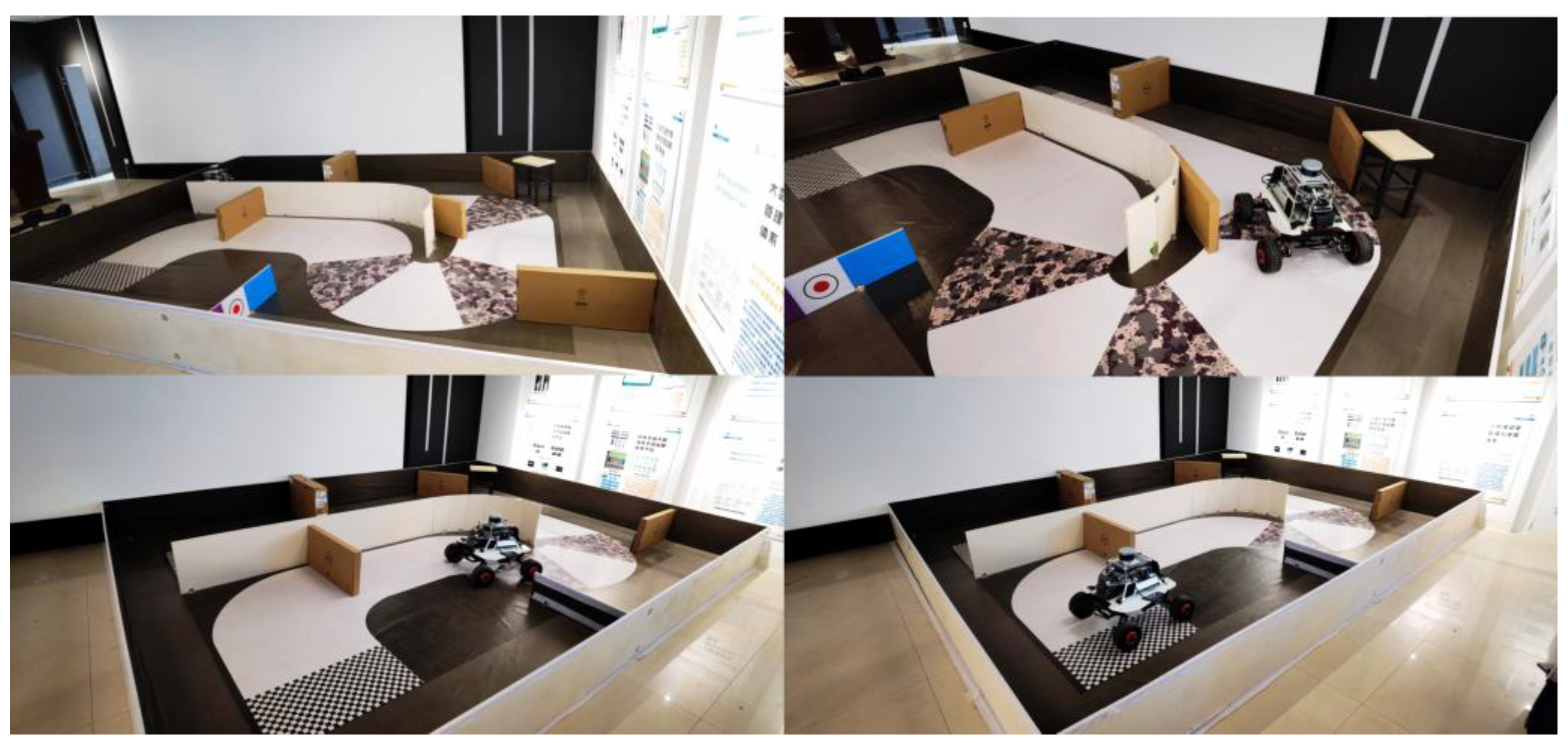

4.3. Realistic Experiments

5. Conclusions

- Introduce an Intrinsic Curiosity Module (ICM) and a Reverse Curriculum Learning module, so that the agent explores more through a curiosity mechanism, and design a reasonable step-by-step training process for distributed training of the model. This is expected to further improve training speed and stability.

- Further improve the dimensional discretization module so that it retains as much information from the original data as possible while preserving the speed and stability of the algorithm.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Mac, T.T.; Copot, C.; Tran, D.T.; De Keyser, R. Heuristic Approaches in Robot Path Planning. Robot. Auton. Syst. 2016, 86, 13–28.

2. Yin, Y.; Chen, Z.; Liu, G.; Guo, J. A Mapless Local Path Planning Approach Using Deep Reinforcement Learning Framework. Sensors 2023, 23, 2036.

3. Prianto, E.; Kim, M.; Park, J.-H.; Bae, J.-H.; Kim, J.-S. Path Planning for Multi-Arm Manipulators Using Deep Reinforcement Learning: Soft Actor–Critic with Hindsight Experience Replay. Sensors 2020, 20, 5911.

4. Zhou, C.; Huang, B.; Fränti, P. A Review of Motion Planning Algorithms for Intelligent Robots. J. Intell. Manuf. 2022, 33, 387–424.

5. Yu, J.; Su, Y.; Liao, Y. The Path Planning of Mobile Robot by Neural Networks and Hierarchical Reinforcement Learning. Front. Neurorobot. 2020, 14, 63.

6. Zhang, K.; Cao, J.; Zhang, Y. Adaptive Digital Twin and Multiagent Deep Reinforcement Learning for Vehicular Edge Computing and Networks. IEEE Trans. Ind. Inform. 2022, 18, 1405–1413.

7. Zheng, S.; Liu, H. Improved Multi-Agent Deep Deterministic Policy Gradient for Path Planning-Based Crowd Simulation. IEEE Access 2019, 7, 147755–147770.

8. Study of Convolutional Neural Network-Based Semantic Segmentation Methods on Edge Intelligence Devices for Field Agricultural Robot Navigation Line Extraction. Comput. Electron. Agric. 2023, 209, 107811.

9. Wu, K.; Abolfazli Esfahani, M.; Yuan, S.; Wang, H. TDPP-Net: Achieving Three-Dimensional Path Planning via a Deep Neural Network Architecture. Neurocomputing 2019, 357, 151–162.

10. Duguleana, M.; Mogan, G. Neural Networks Based Reinforcement Learning for Mobile Robots Obstacle Avoidance. Expert Syst. Appl. 2016, 62, 104–115.

11. Zeng, J.; Qin, L.; Hu, Y.; Yin, Q.; Hu, C. Integrating a Path Planner and an Adaptive Motion Controller for Navigation in Dynamic Environments. Appl. Sci. 2019, 9, 1384.

12. Chen, Y.; Liang, L. SLP-Improved DDPG Path-Planning Algorithm for Mobile Robot in Large-Scale Dynamic Environment. Sensors 2023, 23, 3521.

13. Nguyen, T.T.; Nguyen, N.D.; Nahavandi, S. Deep Reinforcement Learning for Multiagent Systems: A Review of Challenges, Solutions, and Applications. IEEE Trans. Cybern. 2020, 50, 3826–3839.

14. Cai, J.; Huang, W.; You, Y.; Chen, Z.; Ren, B.; Liu, H. SPSD: Semantics and Deep Reinforcement Learning Based Motion Planning for Supermarket Robot. IEICE Trans. Inf. Syst. 2023, E106.D, 765–772.

15. Nakamura, T.; Kobayashi, M.; Motoi, N. Path Planning for Mobile Robot Considering Turnabouts on Narrow Road by Deep Q-Network. IEEE Access 2023, 11, 19111–19121.

16. Cai, M.; Aasi, E.; Belta, C.; Vasile, C.-I. Overcoming Exploration: Deep Reinforcement Learning for Continuous Control in Cluttered Environments From Temporal Logic Specifications. IEEE Robot. Autom. Lett. 2023, 8, 2158–2165.

17. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-Level Control through Deep Reinforcement Learning. Nature 2015, 518, 529–533.

18. Van Hasselt, H.; Guez, A.; Silver, D. Deep Reinforcement Learning with Double Q-Learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

19. Puterman, M.L. Markov Decision Processes: Discrete Stochastic Dynamic Programming; John Wiley & Sons: Hoboken, NJ, USA, 2014; ISBN 978-1-118-62587-3.

20. Zhu, K.; Zhang, T. Deep Reinforcement Learning Based Mobile Robot Navigation: A Review. Tsinghua Sci. Technol. 2021, 26, 674–691.

21. Schaul, T.; Quan, J.; Antonoglou, I.; Silver, D. Prioritized Experience Replay. arXiv 2016, arXiv:1511.05952.

22. Lv, Y.; Ren, X.; Hu, S.; Xu, H. Approximate Optimal Stabilization Control of Servo Mechanisms Based on Reinforcement Learning Scheme. Int. J. Control Autom. Syst. 2019, 17, 2655–2665.

23. Kubovčík, M.; Dirgová Luptáková, I.; Pospíchal, J. Signal Novelty Detection as an Intrinsic Reward for Robotics. Sensors 2023, 23, 3985.

24. Gao, J.; Ye, W.; Guo, J.; Li, Z. Deep Reinforcement Learning for Indoor Mobile Robot Path Planning. Sensors 2020, 20, 5493.

25. Ecoffet, A.; Huizinga, J.; Lehman, J.; Stanley, K.O.; Clune, J. First Return, Then Explore. Nature 2021, 590, 580–586.

26. Liu, Y.; Luo, Y.; Zhong, Y.; Chen, X.; Liu, Q.; Peng, J. Sequence Modeling of Temporal Credit Assignment for Episodic Reinforcement Learning. arXiv 2019, arXiv:1905.13420.

27. Pathak, D.; Agrawal, P.; Efros, A.A.; Darrell, T. Curiosity-Driven Exploration by Self-Supervised Prediction. In Proceedings of the 34th International Conference on Machine Learning, PMLR, Sydney, Australia, 17 July 2017; pp. 2778–2787.

28. Florensa, C.; Held, D.; Wulfmeier, M.; Zhang, M.; Abbeel, P. Reverse Curriculum Generation for Reinforcement Learning. In Proceedings of the 1st Annual Conference on Robot Learning, PMLR, California, CA, USA, 18 October 2017; pp. 482–495.

| Relative Location | Discrete Values |
|---|---|
| [−20, 20) | 1 |
| [20, 80) | 2 |
| [80, 180) | 3 |
| [180, −80) | 4 |
| [−80, −20) | 5 |
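
The relative-location table above can be read as a simple binning function. The sketch below is one possible reading, not the authors' code. It assumes the relative location is an angle in degrees, that angles are wrapped to [−180, 180), and that the fourth row denotes the wrap-around range from 180 (equivalently −180) to −80. The function name is hypothetical.

```python
def discretize_relative_angle(angle_deg):
    """Map a relative angle in degrees to the discrete values 1..5.

    Assumption: the angle is first wrapped to [-180, 180), so the
    table row "[180, -80)" is treated as [-180, -80).
    """
    a = (angle_deg + 180.0) % 360.0 - 180.0
    if -20.0 <= a < 20.0:
        return 1
    if 20.0 <= a < 80.0:
        return 2
    if 80.0 <= a < 180.0:
        return 3
    if a < -80.0:
        return 4  # wrapped range [-180, -80)
    return 5      # remaining range [-80, -20)
```

Collapsing the continuous angle into five values keeps the state space small, which is the stated purpose of dimensional discretization.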

| Value | Action | Linear Velocity | Angular Velocity |
|---|---|---|---|
| −2 | Turn Right (big angle) | 0.05 | −1.5 |
| −1 | Turn Right (small angle) | 0.1 | −0.75 |
| 0 | Go Straight | 0.7 | 0 |
| 1 | Turn Left (small angle) | 0.1 | 0.75 |
| 2 | Turn Left (big angle) | 0.05 | 1.5 |
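
As an illustration, the action table above can be encoded as a lookup from the discrete action value to a velocity pair. The names `ACTIONS` and `velocity_command` are hypothetical, and the sketch makes no assumption about units because the table does not state them.

```python
# Discrete action value -> (label, linear velocity, angular velocity),
# copied from the action table.
ACTIONS = {
    -2: ("Turn Right (big angle)", 0.05, -1.5),
    -1: ("Turn Right (small angle)", 0.1, -0.75),
    0: ("Go Straight", 0.7, 0.0),
    1: ("Turn Left (small angle)", 0.1, 0.75),
    2: ("Turn Left (big angle)", 0.05, 1.5),
}


def velocity_command(value):
    """Return (linear, angular) velocity for a discrete action value."""
    _label, linear, angular = ACTIONS[value]
    return linear, angular
```

Note the pattern in the table: sharper turns use a lower linear velocity, and negative angular velocity corresponds to turning right.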

| Navigation Module Values | Expert Experience |
|---|---|
| (−20, 20) | 0 |
| (20, 80) | −1 |
| (80, 180) | −2 |
| (180, 280) | 2 |
| (280, 340) | 1 |
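
One way to express the navigation expert table above in code is sketched below. It assumes the navigation module value is an angle in degrees, that −20 and 340 denote the same direction, and that interval boundaries are handled as half-open ranges; the table itself gives open intervals and does not say how boundary values are treated. The function name is hypothetical.

```python
def navigation_expert_action(angle_deg):
    """Return the expert action value (-2..2) for a navigation angle.

    Assumption: the angle is wrapped to [0, 360) and boundaries are
    assigned to the interval that starts at them.
    """
    a = angle_deg % 360.0
    if a >= 340.0 or a < 20.0:
        return 0   # roughly ahead: go straight
    if a < 80.0:
        return -1  # (20, 80): small right turn
    if a < 180.0:
        return -2  # (80, 180): big right turn
    if a < 280.0:
        return 2   # (180, 280): big left turn
    return 1       # (280, 340): small left turn
```

The returned values use the same encoding as the action table, so the expert suggestion can be compared directly with the action chosen by the agent.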

| Obstacle Avoidance Module Value | Expert Experience |
|---|---|
| (L2, dis < 0.1) | −1 |
| (L1, 0.1 <= dis < 0.5) | −1 |
| (L1, dis < 0.1) | −2 |
| (R1, dis < 0.1) | 2 |
| (R1, 0.1 <= dis < 0.5) | 1 |
| (R2, dis < 0.1) | 1 |
| Other | 0 |
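
The obstacle avoidance expert table above can be sketched as a lookup keyed by sector and distance band. This is an illustrative reading rather than the authors' code: the sector labels L2, L1, R1 and R2 are taken from the table as opaque identifiers, the middle distance band is taken to be 0.1 <= dis < 0.5 (an assumption about the intended range), and the function name is hypothetical.

```python
# (sector, distance band) -> expert action value, following the table.
_EXPERT_TABLE = {
    ("L2", "near"): -1,
    ("L1", "mid"): -1,
    ("L1", "near"): -2,
    ("R1", "near"): 2,
    ("R1", "mid"): 1,
    ("R2", "near"): 1,
}


def obstacle_expert_action(sector, dis):
    """Return the expert action value for an obstacle reading.

    Assumption: "near" means dis < 0.1 and "mid" means 0.1 <= dis < 0.5.
    Any combination not listed in the table maps to 0 ("Other").
    """
    if dis < 0.1:
        band = "near"
    elif dis < 0.5:
        band = "mid"
    else:
        band = "far"
    return _EXPERT_TABLE.get((sector, band), 0)
```

An obstacle close on one side produces a turn towards the other side, and the turn is sharper for the inner sectors L1 and R1 at short range.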

| Parameters | Value |
|---|---|
| Memory Capacity | 5000 |
|  | 0.8 |
| Learning Rate | 3 × 10⁻⁵ |
|  | 0.99~0.05 |
|  | 0.4~1.0 |

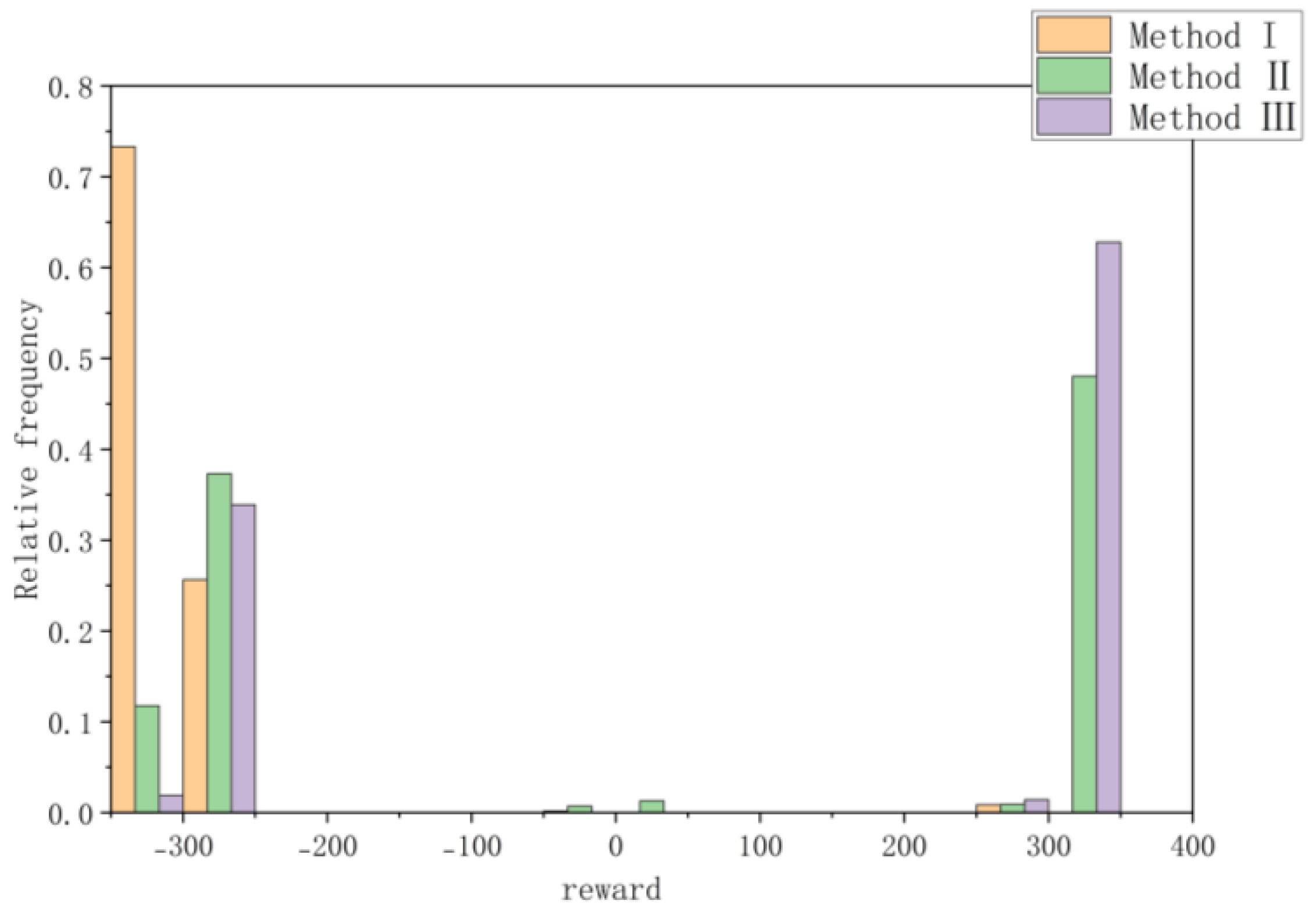

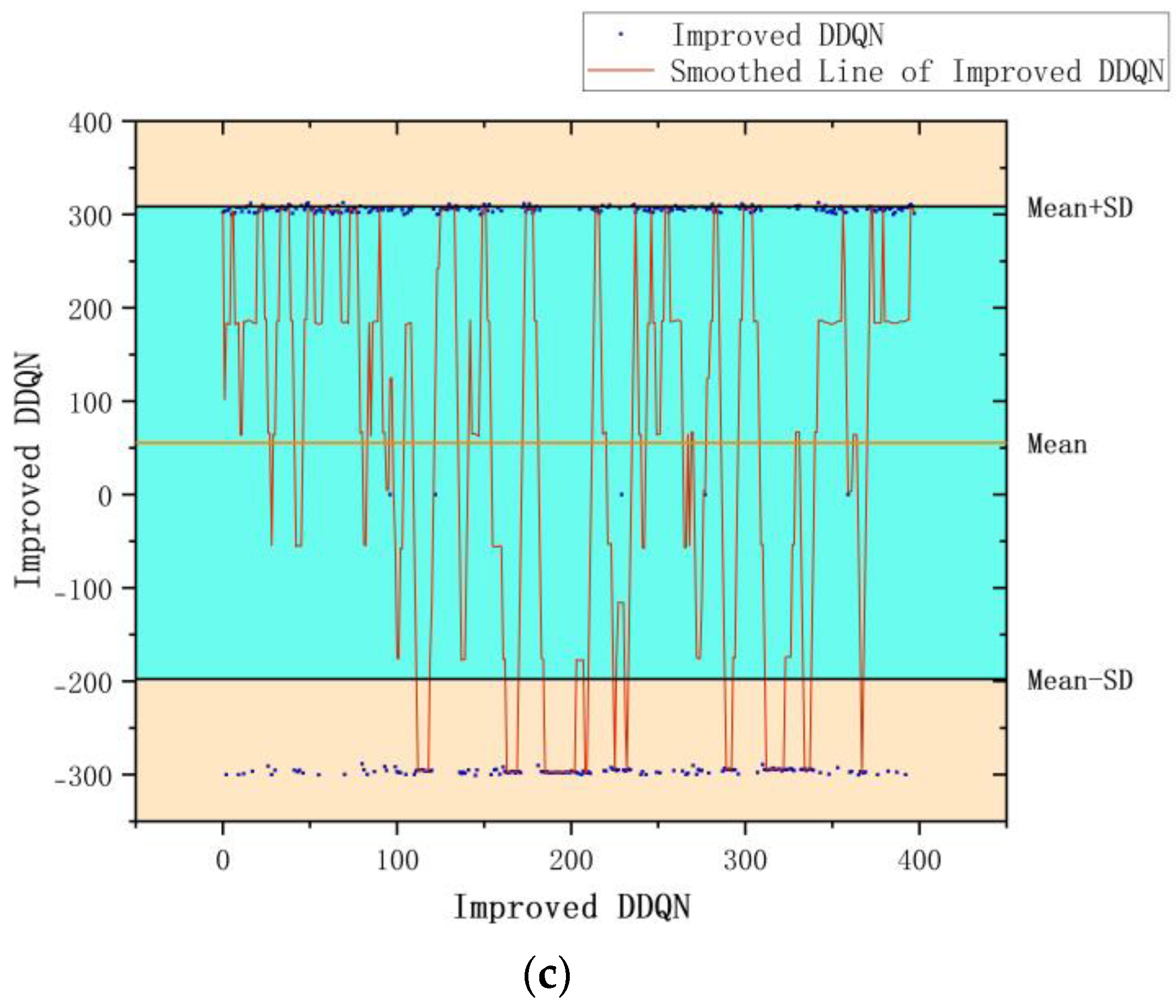

| Parameters | Method I | Method II | Method III |
|---|---|---|---|
| Mean | −296.59856 | 10.83118 | 101.8214 |
| Standard Deviation | 56.62857 | 302.85006 | 289.28139 |
| Lower 95% CI of Mean | −297.97611 | 2.84957 | 92.23436 |
| Upper 95% CI of Mean | −295.221 | 18.81279 | 111.40845 |
| Variance | 3206.79515 | 91,718.15644 | 83,683.72419 |
| 1st Quartile (Q1) | −304.03949 | −294.79913 | −286.58769 |
| Median | −301.76303 | 3.75054 | 309.77573 |
| 3rd Quartile (Q3) | −299.86398 | 317.04965 | 316.77985 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Han, H.; Wang, J.; Kuang, L.; Han, X.; Xue, H. Improved Robot Path Planning Method Based on Deep Reinforcement Learning. Sensors 2023, 23, 5622. https://doi.org/10.3390/s23125622
