Photoplethysmogram Biometric Authentication Using a 1D Siamese Network

Abstract

1. Introduction

2. Related Works

2.1. Statistical Methods

2.2. Machine Learning-Based Approaches

2.3. Deep Learning-Based Approaches

3. Methods

3.1. Dataset

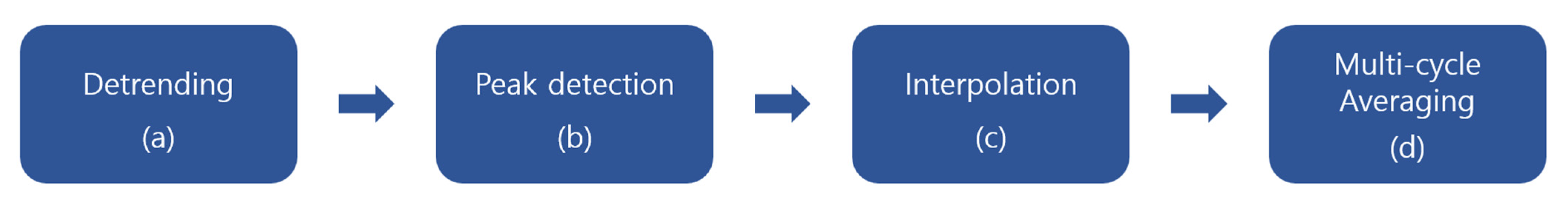

3.2. Data Processing

3.2.1. Detrending

3.2.2. Peak Detection

3.2.3. Interpolation

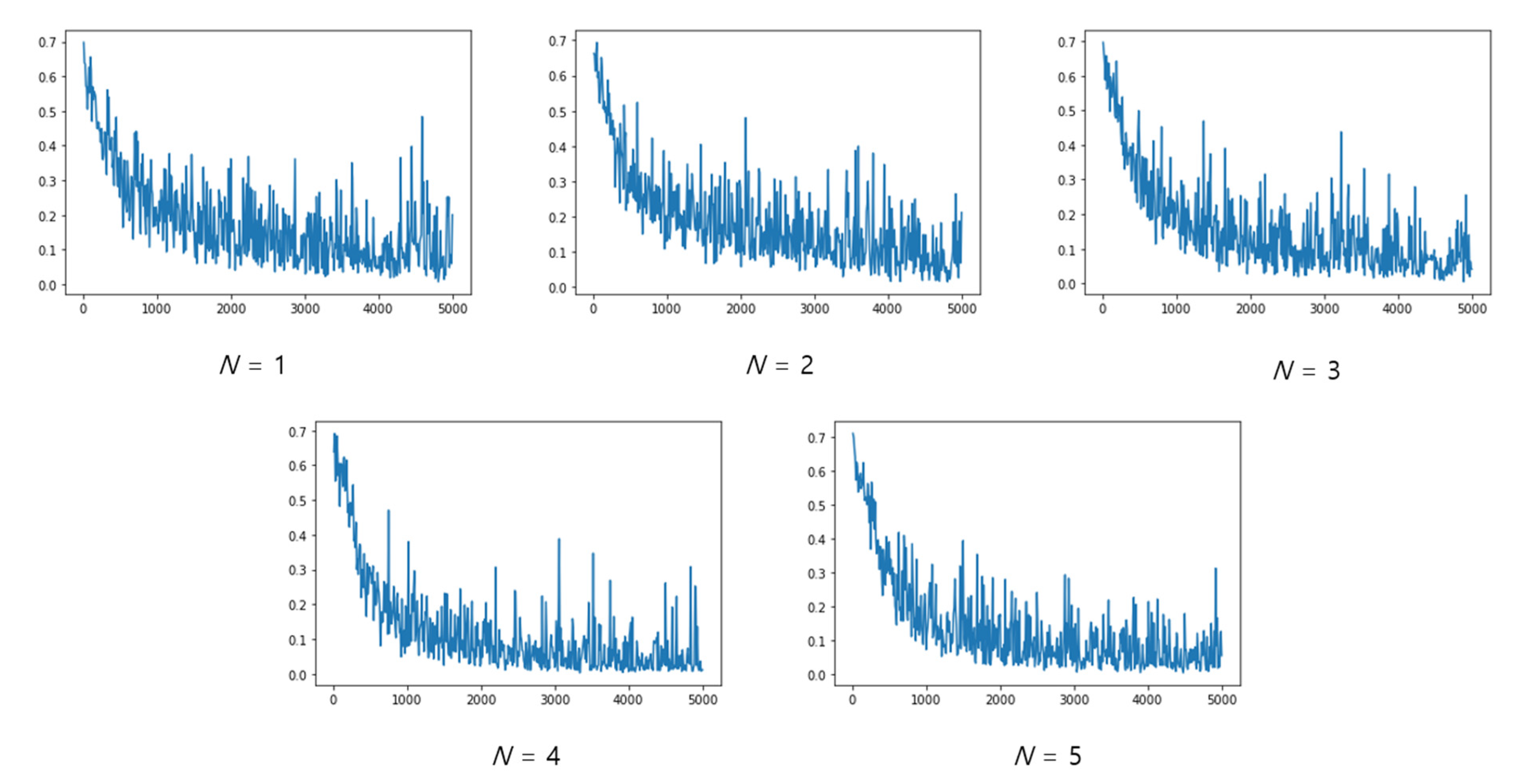

3.2.4. Multicycle Averaging

3.3. Model

3.4. Model Structure

3.5. Training

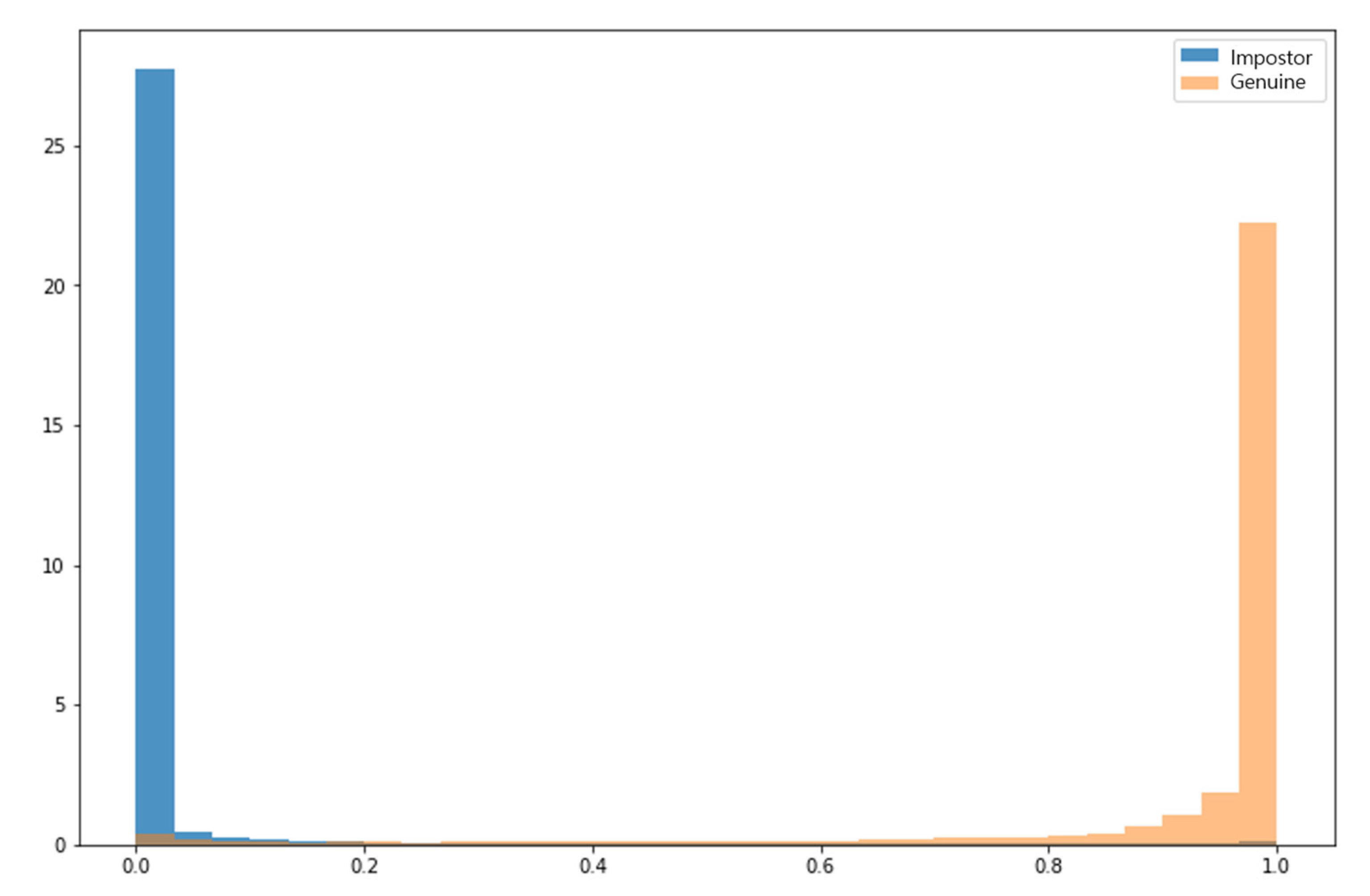

4. Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| N | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Accuracy (%) | 92.64 | 92.42 | 95.13 | 96.36 | 97.23 |
| AUC | 0.967 | 0.974 | 0.984 | 0.988 | 0.990 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Seok, C.L.; Song, Y.D.; An, B.S.; Lee, E.C. Photoplethysmogram Biometric Authentication Using a 1D Siamese Network. Sensors 2023, 23, 4634. https://doi.org/10.3390/s23104634
