Fast and Accurate ROI Extraction for Non-Contact Dorsal Hand Vein Detection in Complex Backgrounds Based on Improved U-Net

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental System

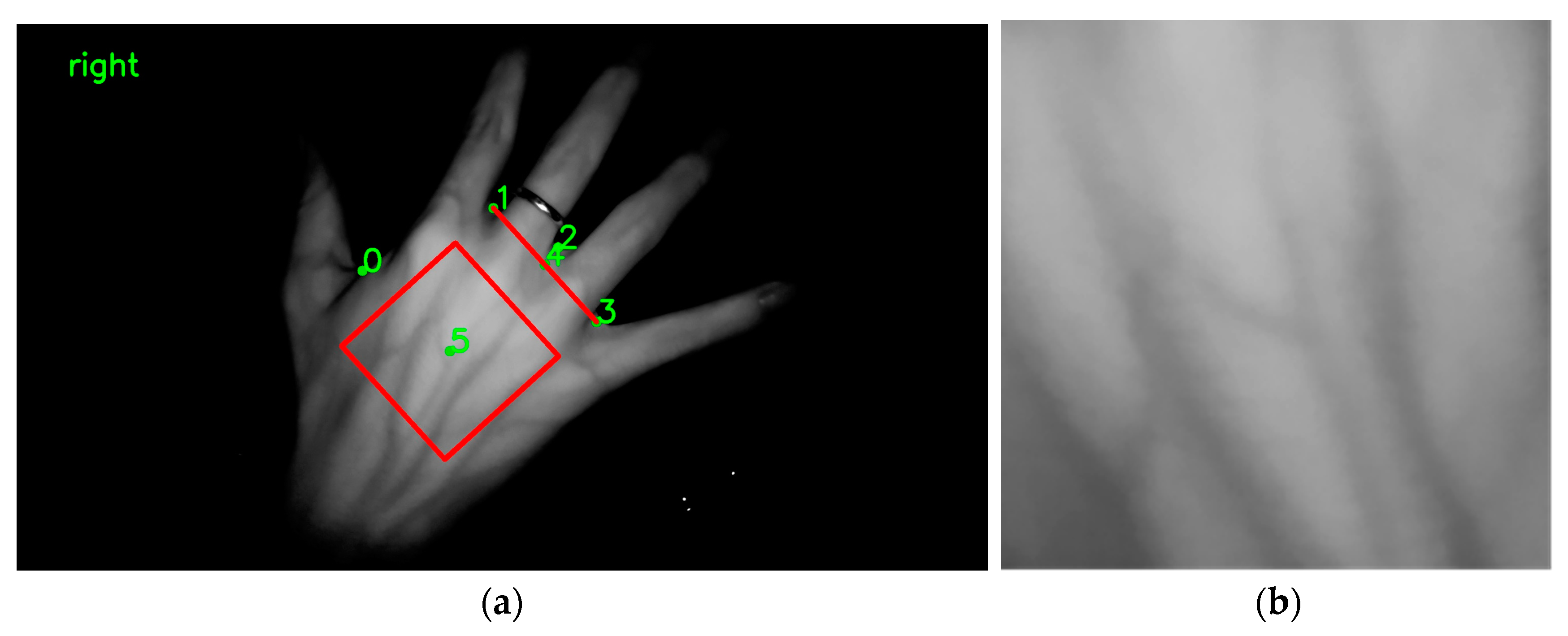

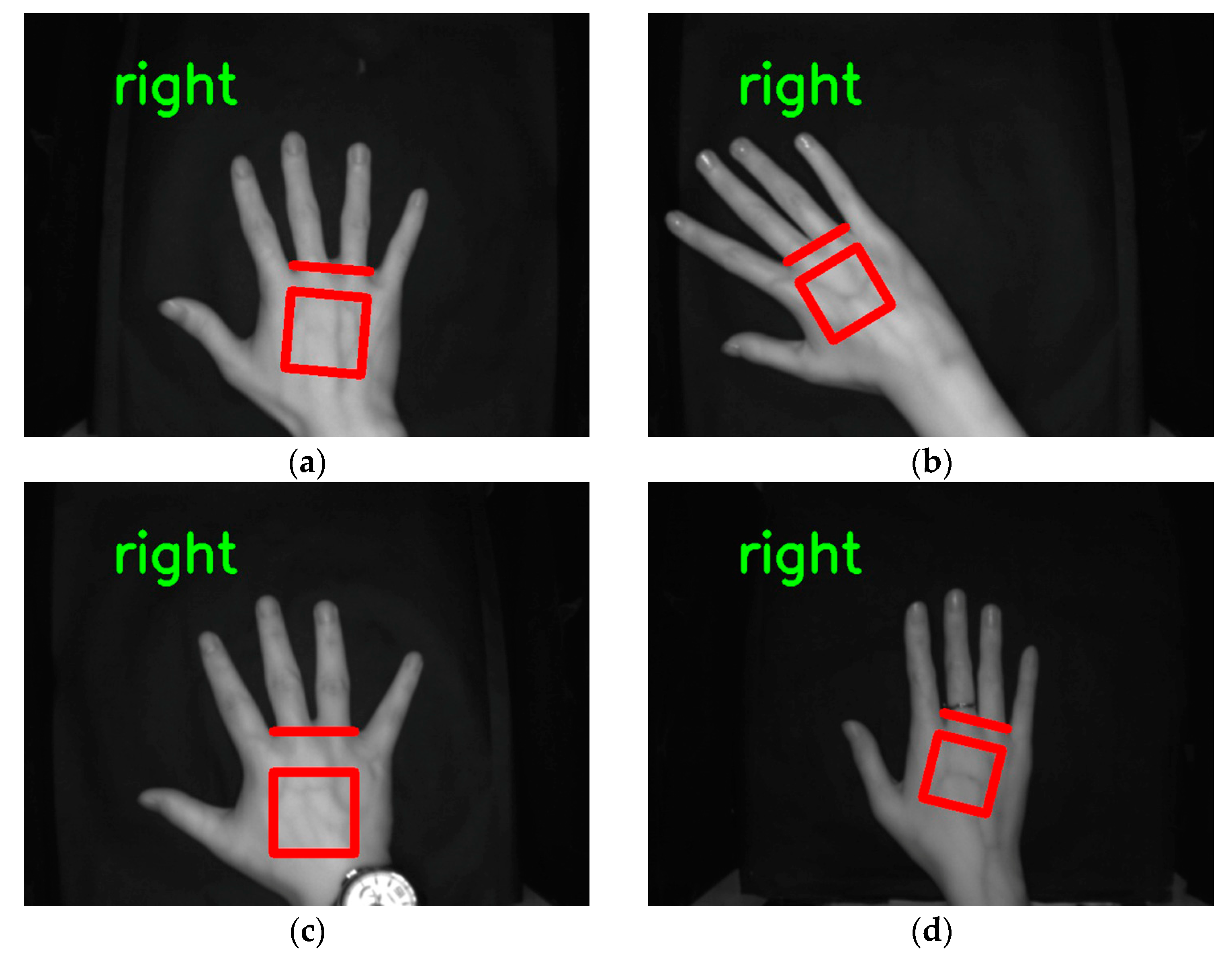

2.2. Datasets

Self-Built Dataset and Jilin University Public Dataset

2.3. Improved U-Net Model for Keypoint Detection in Dorsal Hand Vein Images

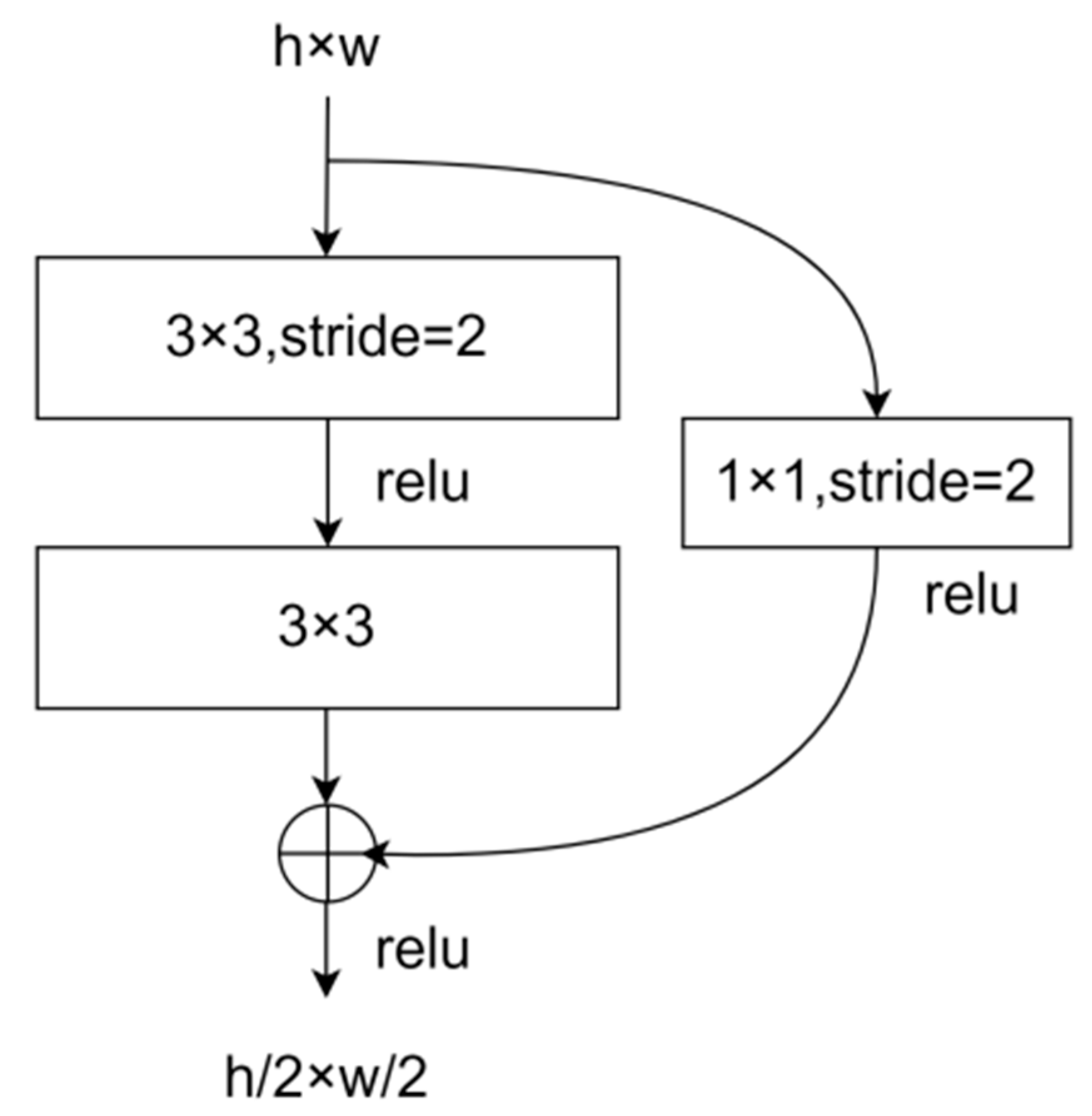

2.3.1. Residual Module for Downsampling
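A residual downsampling module halves the spatial resolution along a convolutional main path. A projection shortcut brings the input to the same shape, so the two paths can be summed. The NumPy sketch below illustrates this common ResNet-style pattern. The layer layout is an assumption, not the paper's architecture: two 3x3 convolutions on the main path, a 1x1 stride-2 projection shortcut, and no BatchNorm. The function names `conv2d` and `residual_down` are hypothetical.

```python
import numpy as np

def conv2d(x, w, stride=1, pad=0):
    """Naive multi-channel 2D convolution (cross-correlation, no bias).

    x: (C_in, H, W) feature map; w: (C_out, C_in, k, k) kernel.
    """
    c_out, _, k, _ = w.shape
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    h_out = (x.shape[1] + 2 * pad - k) // stride + 1
    w_out = (x.shape[2] + 2 * pad - k) // stride + 1
    out = np.zeros((c_out, h_out, w_out))
    for i in range(k):
        for j in range(k):
            # Input samples seen by kernel tap (i, j) at every output position.
            patch = xp[:, i:i + stride * h_out:stride, j:j + stride * w_out:stride]
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], patch)
    return out

def relu(x):
    return np.maximum(x, 0.0)

def residual_down(x, w1, w2, w_skip):
    """Hypothetical ResNet-style downsampling block (BatchNorm omitted).

    Main path: 3x3 stride-2 conv -> ReLU -> 3x3 stride-1 conv.
    Shortcut: 1x1 stride-2 projection so both paths have the same shape.
    """
    main = conv2d(relu(conv2d(x, w1, stride=2, pad=1)), w2, stride=1, pad=1)
    skip = conv2d(x, w_skip, stride=2, pad=0)
    return relu(main + skip)
```

For example, an input of shape (3, 8, 8) with 16 output channels gives an output of shape (16, 4, 4). The shortcut is what lets gradients bypass the main path.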

2.3.2. Bilinear Interpolation for Upsampling
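Unlike transposed convolution, bilinear upsampling has no learnable weights. Each output pixel is a distance-weighted average of its four nearest input pixels. The sketch below assumes the half-pixel-centre convention (often called `align_corners=False`) and clamps coordinates at the borders. The paper does not state which convention it uses, and the function name is hypothetical.

```python
import numpy as np

def bilinear_upsample(x, scale=2):
    """Upsample a (C, H, W) array by an integer factor with bilinear interpolation.

    Half-pixel centres: src = (dst + 0.5) / scale - 0.5, clamped to the image.
    """
    _, h, w = x.shape

    def src_coords(n_in):
        dst = np.arange(n_in * scale)
        src = np.clip((dst + 0.5) / scale - 0.5, 0, n_in - 1)
        i0 = np.floor(src).astype(int)
        i1 = np.minimum(i0 + 1, n_in - 1)
        return i0, i1, src - i0  # lower index, upper index, fractional weight

    y0, y1, wy = src_coords(h)
    x0, x1, wx = src_coords(w)
    wy = wy[None, :, None]
    wx = wx[None, None, :]
    # Interpolate horizontally on the two neighbouring rows, then vertically.
    top = x[:, y0][:, :, x0] * (1 - wx) + x[:, y0][:, :, x1] * wx
    bot = x[:, y1][:, :, x0] * (1 - wx) + x[:, y1][:, :, x1] * wx
    return top * (1 - wy) + bot * wy
```

For example, upsampling the single row `[0, 1]` by 2 gives `[0, 0.25, 0.75, 1]`.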

2.3.3. Jensen–Shannon (JS) Divergence Loss
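The Jensen–Shannon divergence compares two probability distributions through their mixture $M = \tfrac{1}{2}(P + Q)$:

$$\mathrm{JS}(P\|Q) = \tfrac{1}{2}\mathrm{KL}(P\|M) + \tfrac{1}{2}\mathrm{KL}(Q\|M).$$

It is symmetric and, with the natural logarithm, bounded by $\ln 2$. The sketch below shows how it could supervise a keypoint heatmap. Turning the predicted heatmap into a distribution with a softmax, and the name `heatmap_js_loss`, are assumptions for illustration.

```python
import numpy as np

def _kl(a, b):
    # Entries with a == 0 contribute 0 by convention; b >= a / 2 > 0 elsewhere.
    mask = a > 0
    return float(np.sum(a[mask] * np.log(a[mask] / b[mask])))

def js_divergence(p, q):
    """Jensen-Shannon divergence (natural log) between two non-negative arrays."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    p = p / p.sum()
    q = q / q.sum()
    m = 0.5 * (p + q)
    return 0.5 * _kl(p, m) + 0.5 * _kl(q, m)

def heatmap_js_loss(pred_logits, target_heatmap):
    """Hypothetical loss: softmax the predicted heatmap, then compare it with the target."""
    z = pred_logits - pred_logits.max()  # numerical stability
    pred = np.exp(z) / np.exp(z).sum()
    return js_divergence(pred, target_heatmap)
```

Two distributions with disjoint supports give the maximum value, $\ln 2$. Identical distributions give 0.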

2.3.4. Soft-Argmax to Obtain Keypoint Coordinates
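Soft-argmax replaces the non-differentiable argmax over a heatmap with an expectation. The heatmap is normalised by a softmax, and the keypoint coordinate is the probability-weighted mean of the pixel grid. This allows coordinate-level losses to be back-propagated and gives sub-pixel estimates. The sketch below handles one keypoint channel. The temperature `beta` and the function name are assumptions.

```python
import numpy as np

def soft_argmax_2d(heatmap, beta=1.0):
    """Differentiable (x, y) location, in pixels, from a single (H, W) heatmap.

    Computes the expected pixel coordinate under softmax(beta * heatmap).
    """
    h, w = heatmap.shape
    z = beta * heatmap
    z = z - z.max()  # numerical stability
    prob = np.exp(z)
    prob /= prob.sum()
    ys, xs = np.mgrid[0:h, 0:w]  # row (y) and column (x) index grids
    return float((prob * xs).sum()), float((prob * ys).sum())
```

For four keypoints P0 to P3, the function would be applied to each of the four output channels in turn. With two equal peaks at columns 30 and 31 of row 20, the estimate lands between them at x = 30.5.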

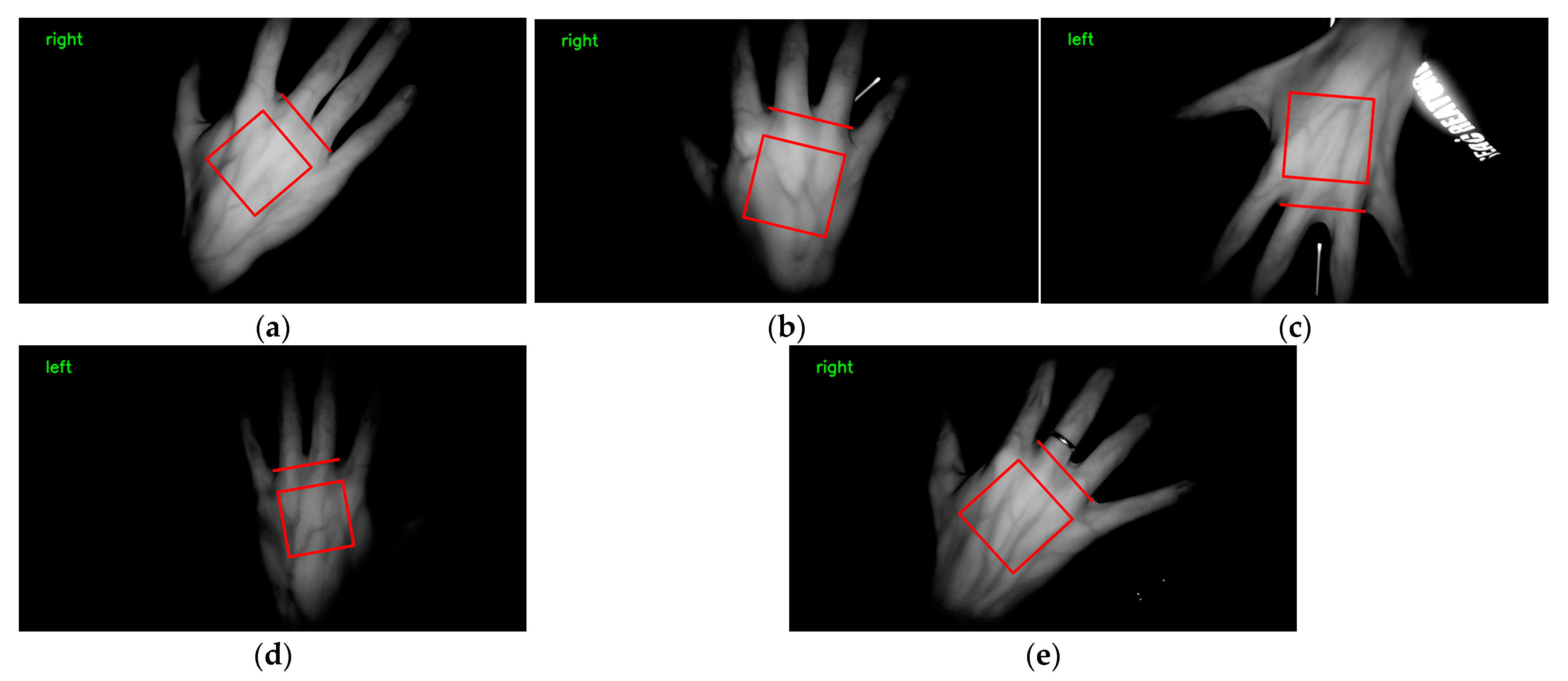

2.4. ROI Calculation Method

| Algorithm 1 Overall Pipeline of DHV ROI Extraction |
|---|
| Require: A DHV image I and our detector D. |
| 1: Feed I to D to obtain four keypoints P0, P1, P2, P3; |
| 2: Set the line through P1 and P3 as the X-axis; |
| 3: Set the direction perpendicular to P1P3 and pointing towards P0 as the positive direction of the Y-axis; |
| 4: Set the midpoint P4 of P1P3 as the origin (x0, y0); |
| 5: Take as the unit length of the axes; |
| 6: Construct the local coordinate system; |
| 7: Set the center coordinate P5 of the ROI to ; |
| 8: Set the side length of ROI to ; |
| 9: return the segmented ROI |
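Steps 2 to 4 and 6 of Algorithm 1 fully determine the local frame, so they can be sketched directly. The unit length (step 5), the ROI centre P5 (step 7) and the ROI side length (step 8) are not given in the algorithm as shown. In the sketch below they are therefore hypothetical inputs `unit`, `center_local` and `side_local`, expressed in local units. P2 is unused only because its role lies in those unspecified steps. All function names are hypothetical.

```python
import numpy as np

def local_frame(p0, p1, p3):
    """Local frame of Algorithm 1: origin P4 = midpoint of P1P3, X along P1->P3,
    Y perpendicular to P1P3 and oriented towards P0."""
    p0, p1, p3 = (np.asarray(p, dtype=float) for p in (p0, p1, p3))
    origin = 0.5 * (p1 + p3)
    ex = (p3 - p1) / np.linalg.norm(p3 - p1)
    ey = np.array([-ex[1], ex[0]])      # ex rotated by 90 degrees
    if np.dot(p0 - origin, ey) < 0:     # flip so that +Y points towards P0
        ey = -ey
    return origin, ex, ey

def roi_corners(p0, p1, p3, unit, center_local, side_local):
    """Image-space corners of a square ROI defined in the local frame.

    unit, center_local and side_local are HYPOTHETICAL: they stand in for
    steps 5, 7 and 8, whose formulas are not given in the algorithm above.
    """
    origin, ex, ey = local_frame(p0, p1, p3)

    def to_image(u, v):
        # Map local (u, v) in axis units to image coordinates.
        return origin + unit * (u * ex + v * ey)

    cu, cv = center_local
    half = 0.5 * side_local
    offsets = [(-half, -half), (half, -half), (half, half), (-half, half)]
    return np.array([to_image(cu + du, cv + dv) for du, dv in offsets])
```

The returned corners describe a rotated square. The ROI could then be cut out with a perspective or affine warp to an axis-aligned patch.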

2.5. Model Evaluation Metrics

3. Experimental Results and Analysis

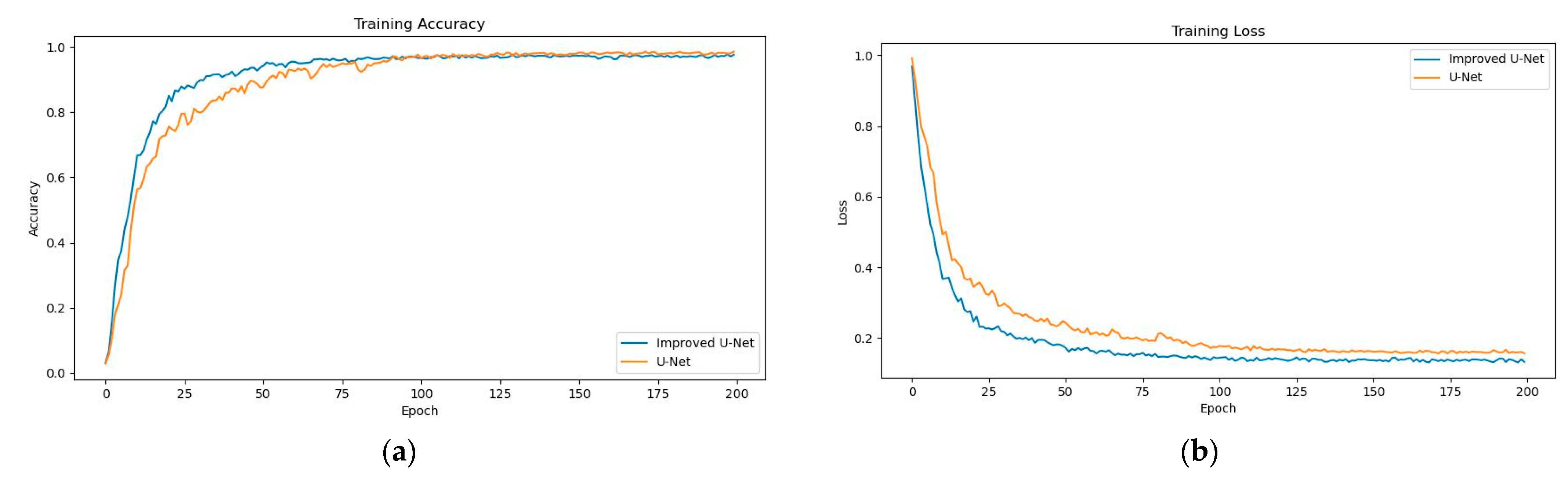

3.1. Model Training

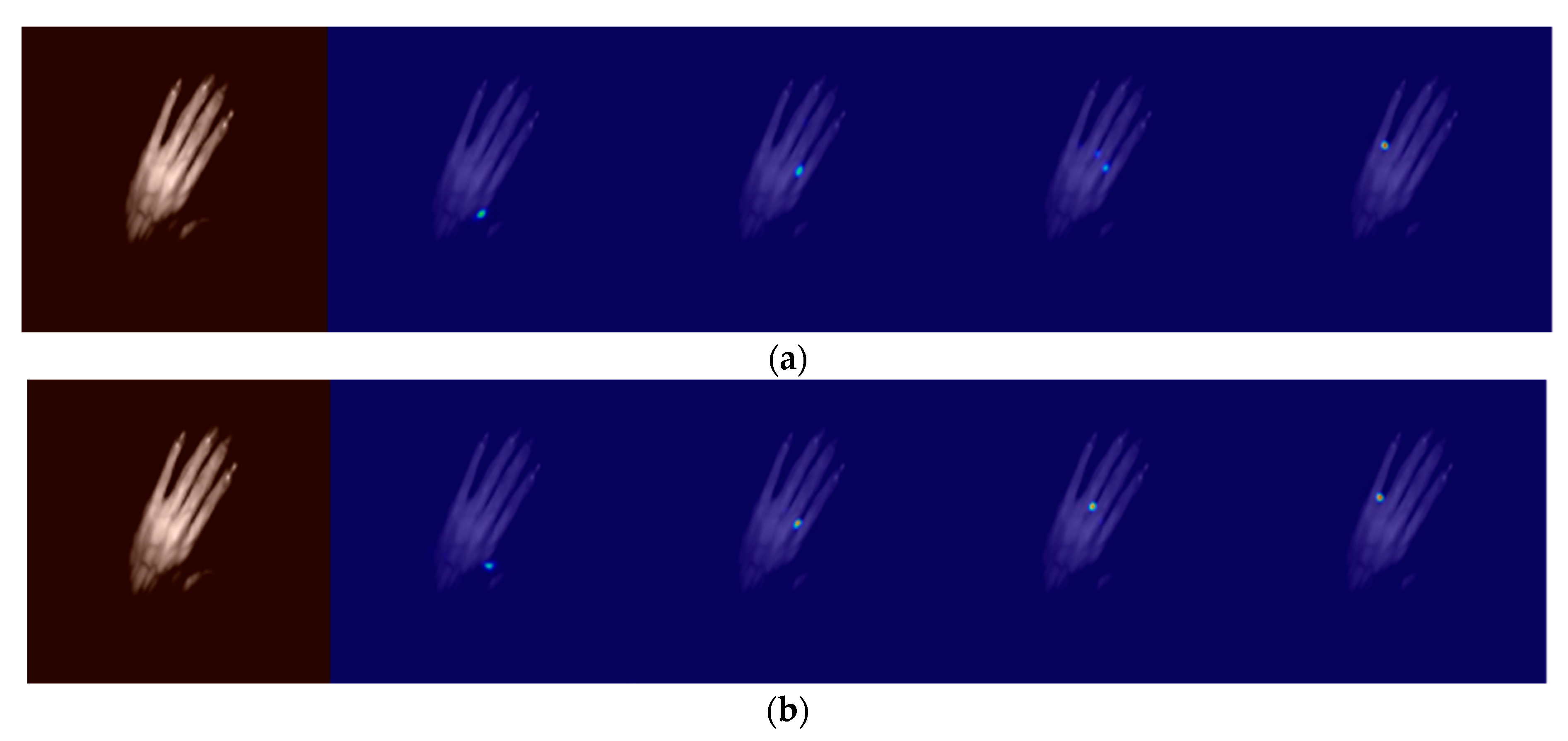

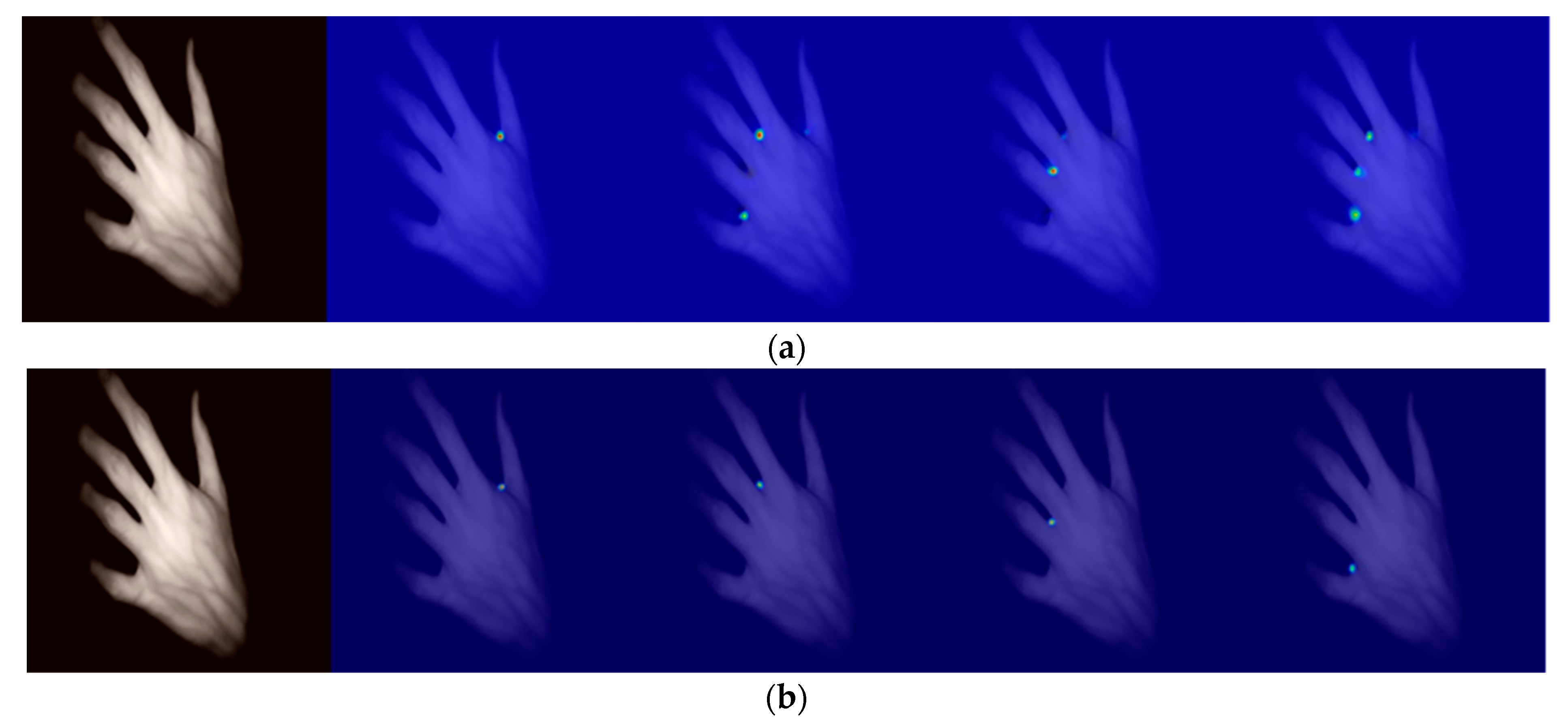

3.2. Comparison Tests of Downsampling with the Residual Module

- (1) Qualitative comparison

- (2) Quantitative comparison

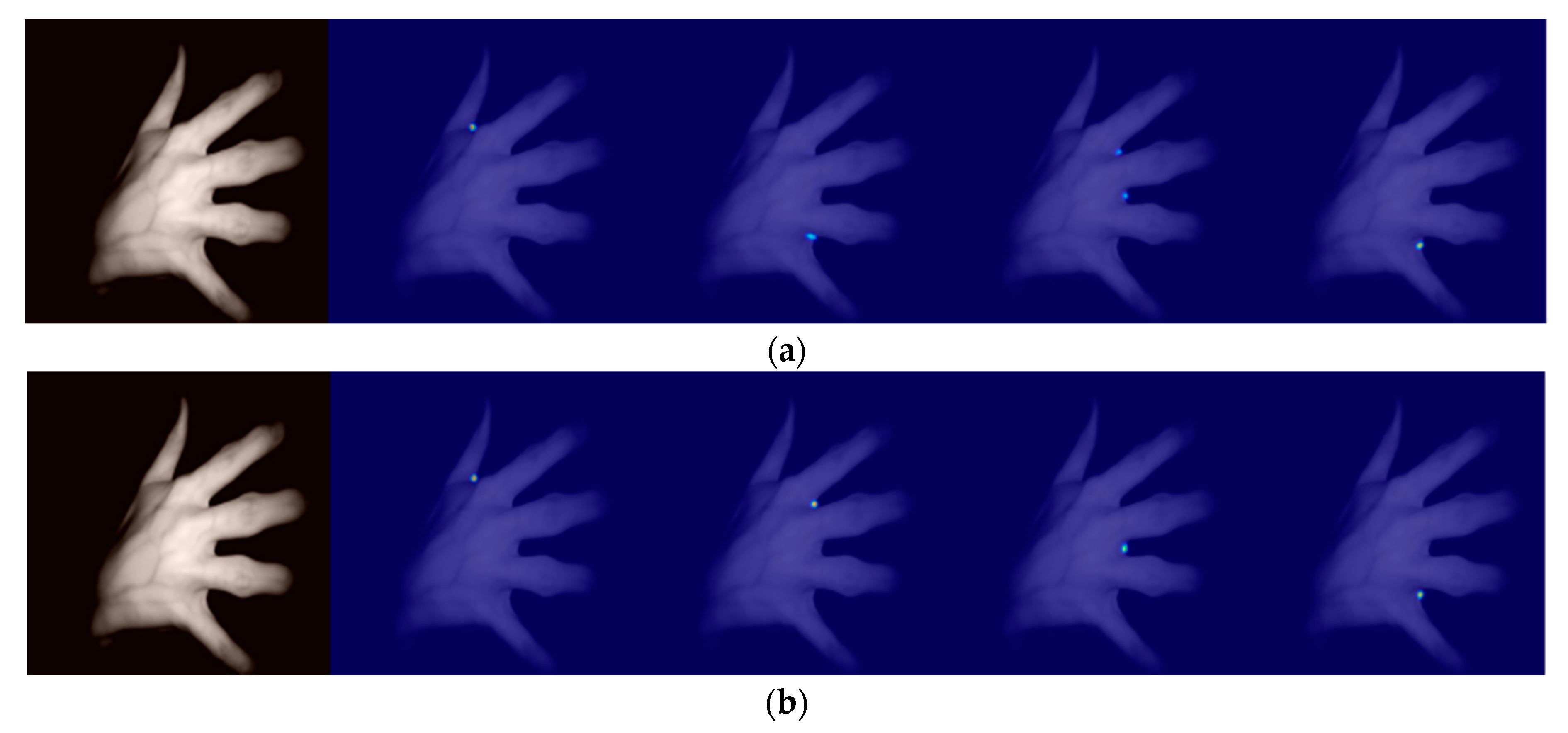

3.3. Comparison Tests of Replacing Transposed Convolution with Bilinear Interpolation

- (1) Qualitative comparison

- (2) Quantitative comparison

3.4. Comparative Tests of Supervision Effects with Different Losses

- (1) Qualitative comparison

- (2) Quantitative comparison

3.5. Comparison Test of the U-Net Model before and after the Improvement

3.6. Test Results of the Improved U-Net on the Jilin University Public Dataset

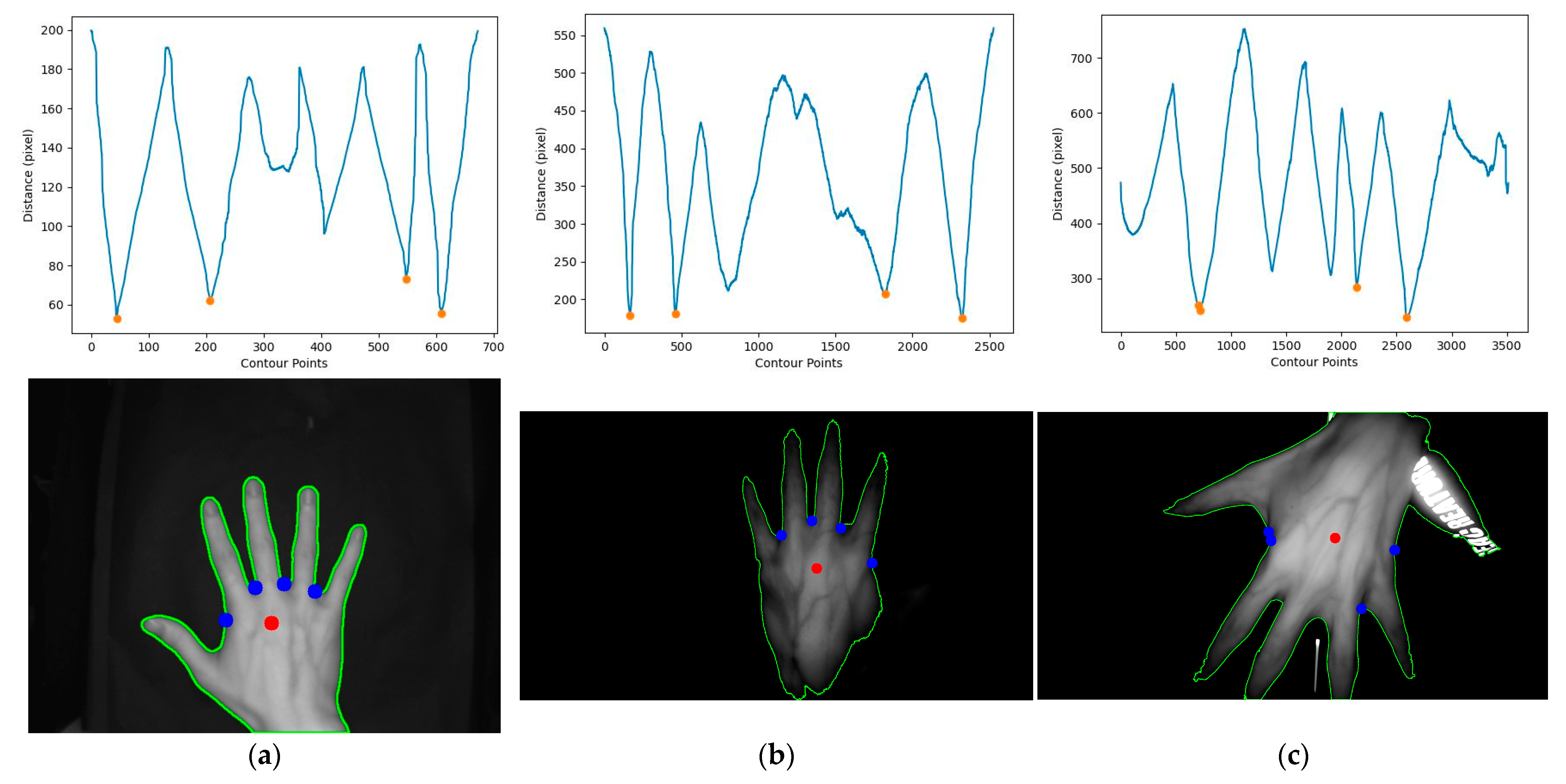

3.7. Comparison of Conventional Image Processing Methods for Locating Keypoints

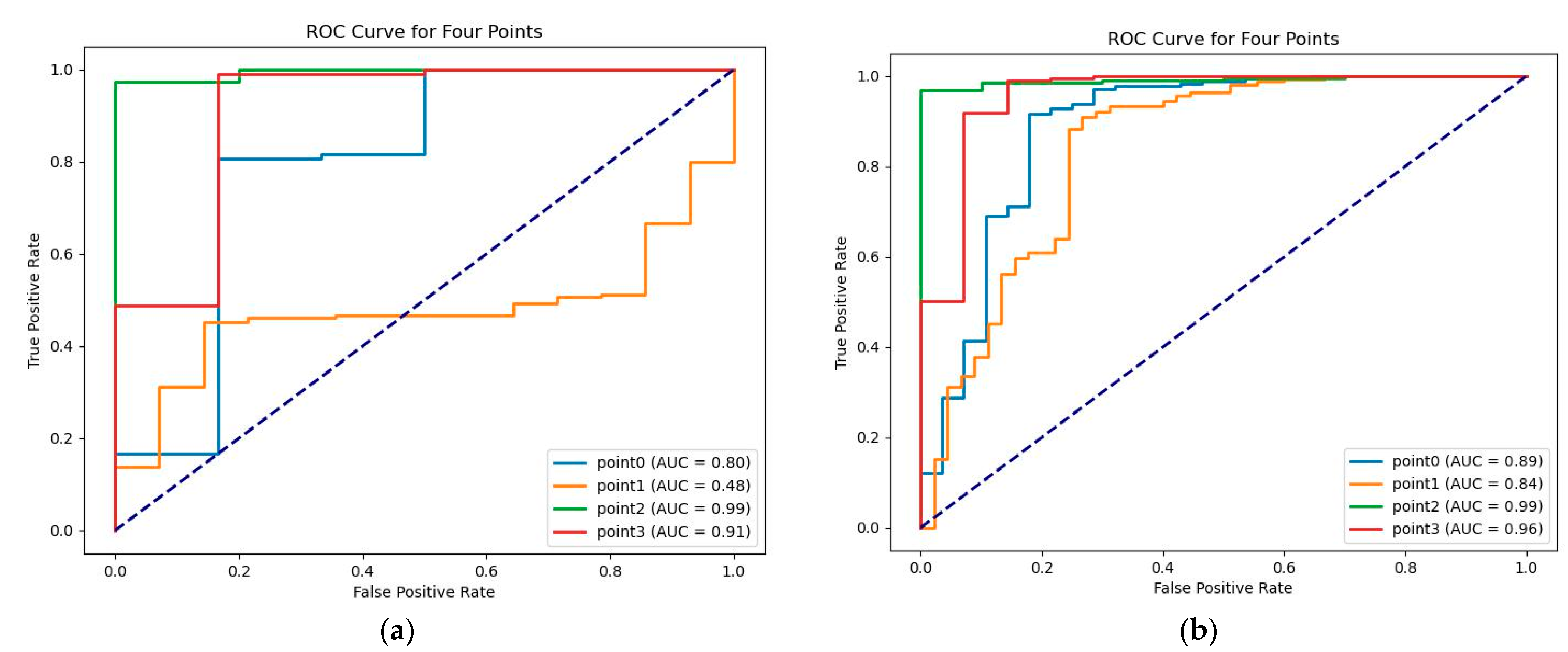

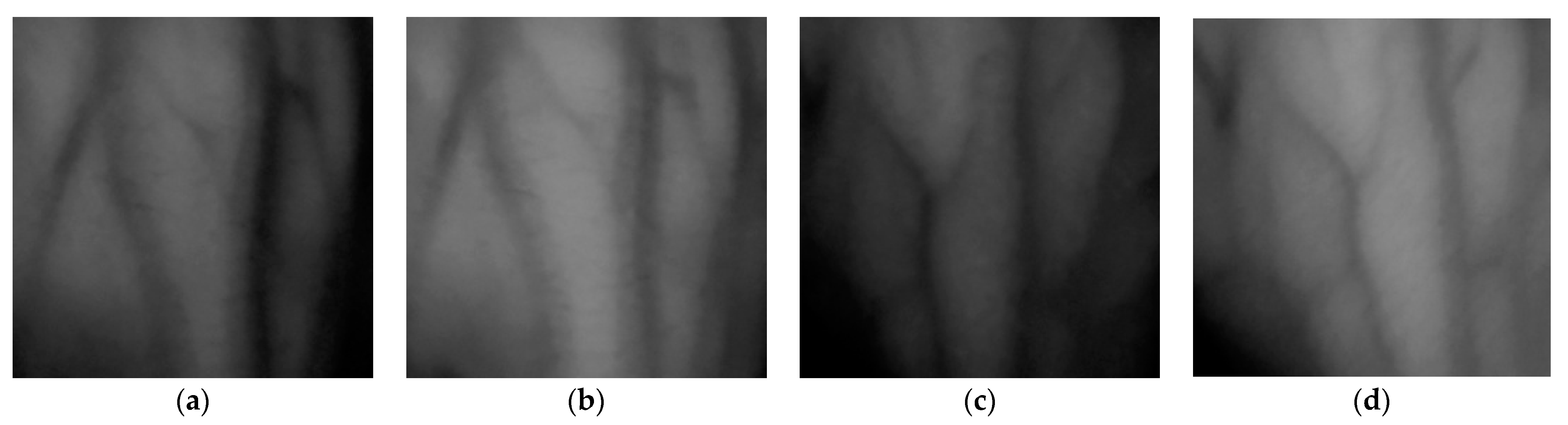

3.8. Dorsal Hand Vein Verification Experiment

4. Conclusions

- (1) The proposed improved U-Net achieved an accuracy of 98.6%, 1 percentage point higher than the original U-Net (97.6%). Its model file size was 1.16 M, nearly 1/30 of that of the original U-Net (35.0 M). The proposed method therefore achieved higher accuracy than the original U-Net while greatly reducing the number of model parameters, which makes it suitable for deployment in embedded systems.

- (2) Experiments on the self-built dataset and the Jilin University dataset showed that the model achieves high accuracy against both complex and clean backgrounds. It can therefore meet the requirements of practical applications in real environments.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Network Model | Inference Time/ms | Accuracy/% | Model Size |
|---|---|---|---|
| Without shortcut connection | 42.48 | 98.0 | 1.14 M |
| With shortcut connection | 47.57 | 98.6 | 1.16 M |

| Network Model | Inference Time/ms | Accuracy/% | Model Size |
|---|---|---|---|
| Transposed convolution | 56.32 | 96.7 | 1.18 M |
| Bilinear interpolation | 47.57 | 98.6 | 1.16 M |

| Mean Squared Error (MSE) Loss | Jensen–Shannon Divergence Loss | Euclidean Distance Loss | Accuracy/% |
|---|---|---|---|
| √ | | | 92.3 |
| | √ | | 95.0 |
| | | √ | 95.5 |
| √ | √ | √ | 98.6 |

| Network Model | Inference Time/ms | Accuracy/% | Model Size | Floating-Point Operations (FLOPs) |
|---|---|---|---|---|
| U-Net | 146.12 | 97.6 | 35.0 M | 7.44 G |
| Improved U-Net | 59.57 | 98.6 | 1.16 M | 939.0 M |
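The ratios behind the comparison in the table above, computed directly from the reported values, are:

$$\frac{35.0\ \text{M}}{1.16\ \text{M}} \approx 30.2, \qquad \frac{7.44\ \text{G}}{939.0\ \text{M}} \approx 7.9, \qquad \frac{146.12\ \text{ms}}{59.57\ \text{ms}} \approx 2.45, \qquad 98.6\% - 97.6\% = 1.0\ \text{percentage point}.$$

The improved model is therefore about 30 times smaller, requires roughly 8 times fewer floating-point operations, and runs about 2.5 times faster than the original U-Net.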

| Detection Method | Self-Built Dataset Accuracy/% | Jilin University Dataset Accuracy/% |
|---|---|---|
| Convex hull and convex | 55.0 | 99.0 |
| Centroid distance | 69.1 | 97.9 |
| Improved U-Net | 98.6 | 99.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, R.; Zou, X.; Deng, X.; Wang, Z.; Chen, Y.; Lin, C.; Xing, H.; Dai, F. Fast and Accurate ROI Extraction for Non-Contact Dorsal Hand Vein Detection in Complex Backgrounds Based on Improved U-Net. Sensors 2023, 23, 4625. https://doi.org/10.3390/s23104625

Zhang R, Zou X, Deng X, Wang Z, Chen Y, Lin C, Xing H, Dai F. Fast and Accurate ROI Extraction for Non-Contact Dorsal Hand Vein Detection in Complex Backgrounds Based on Improved U-Net. Sensors. 2023; 23(10):4625. https://doi.org/10.3390/s23104625

Chicago/Turabian Style: Zhang, Rongwen, Xiangqun Zou, Xiaoling Deng, Ziyang Wang, Yifan Chen, Chengrui Lin, Hongxin Xing, and Fen Dai. 2023. "Fast and Accurate ROI Extraction for Non-Contact Dorsal Hand Vein Detection in Complex Backgrounds Based on Improved U-Net" Sensors 23, no. 10: 4625. https://doi.org/10.3390/s23104625

APA Style: Zhang, R., Zou, X., Deng, X., Wang, Z., Chen, Y., Lin, C., Xing, H., & Dai, F. (2023). Fast and Accurate ROI Extraction for Non-Contact Dorsal Hand Vein Detection in Complex Backgrounds Based on Improved U-Net. Sensors, 23(10), 4625. https://doi.org/10.3390/s23104625