Three-Dimensional Printing Quality Inspection Based on Transfer Learning with Convolutional Neural Networks

Abstract

1. Introduction

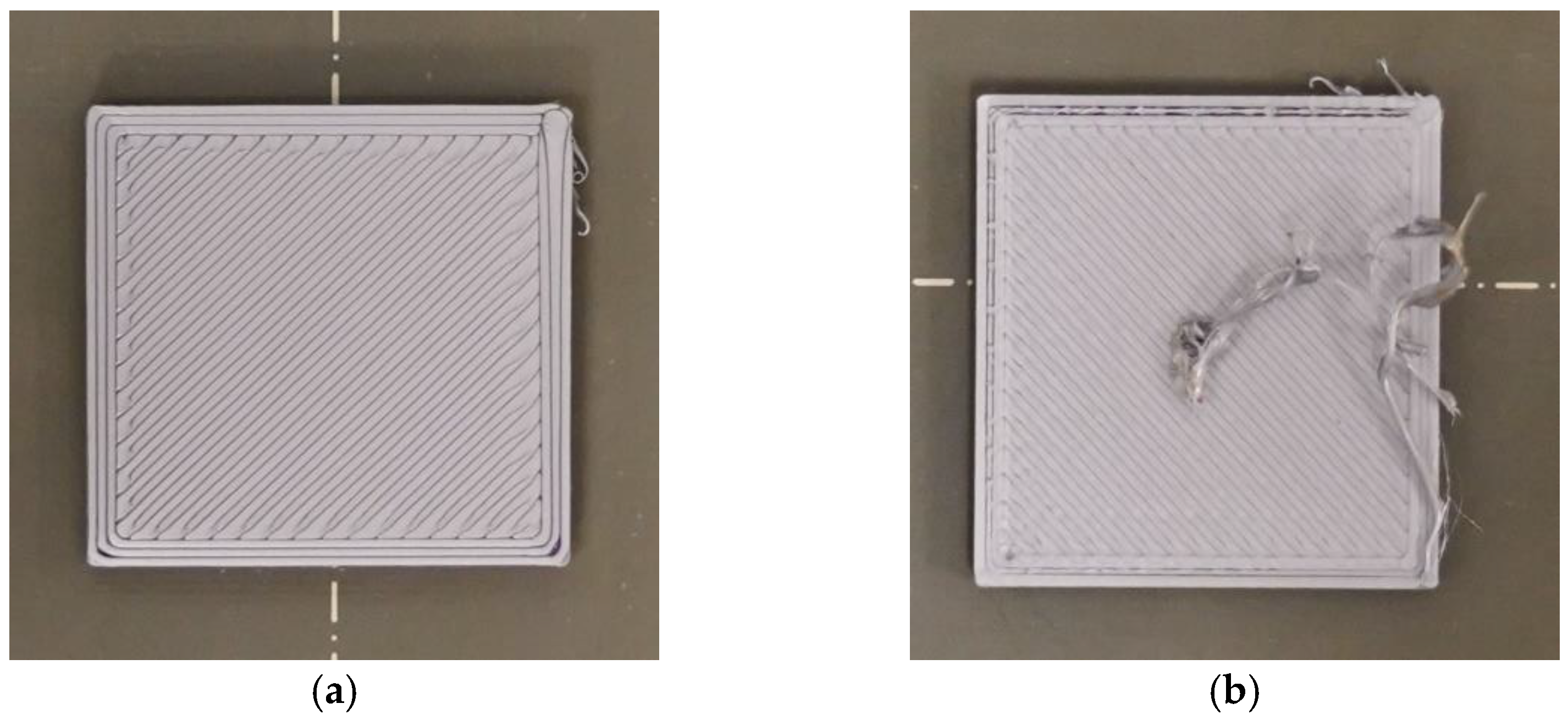

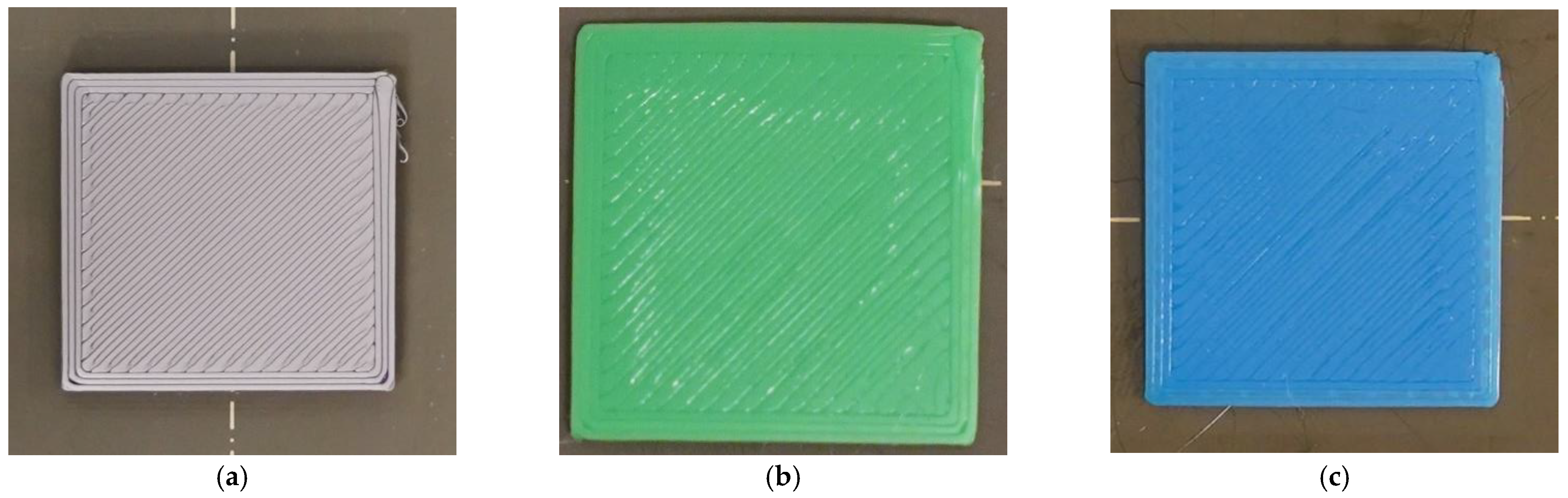

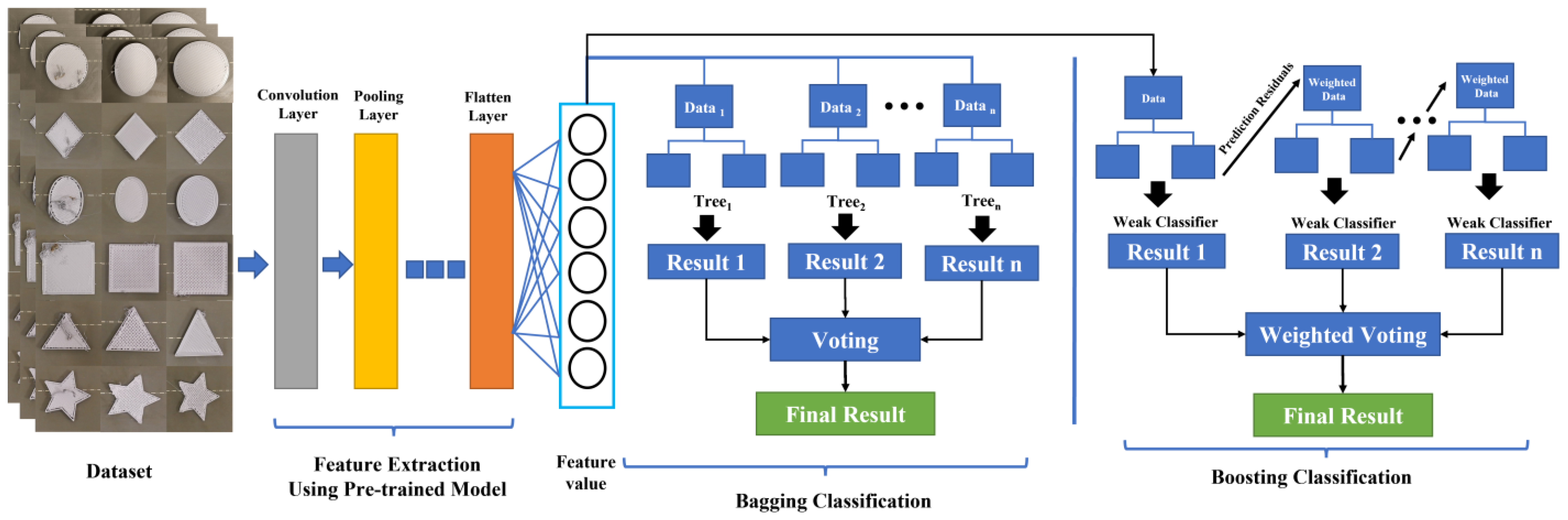

2. Materials and Methods

2.1. Classification Principles

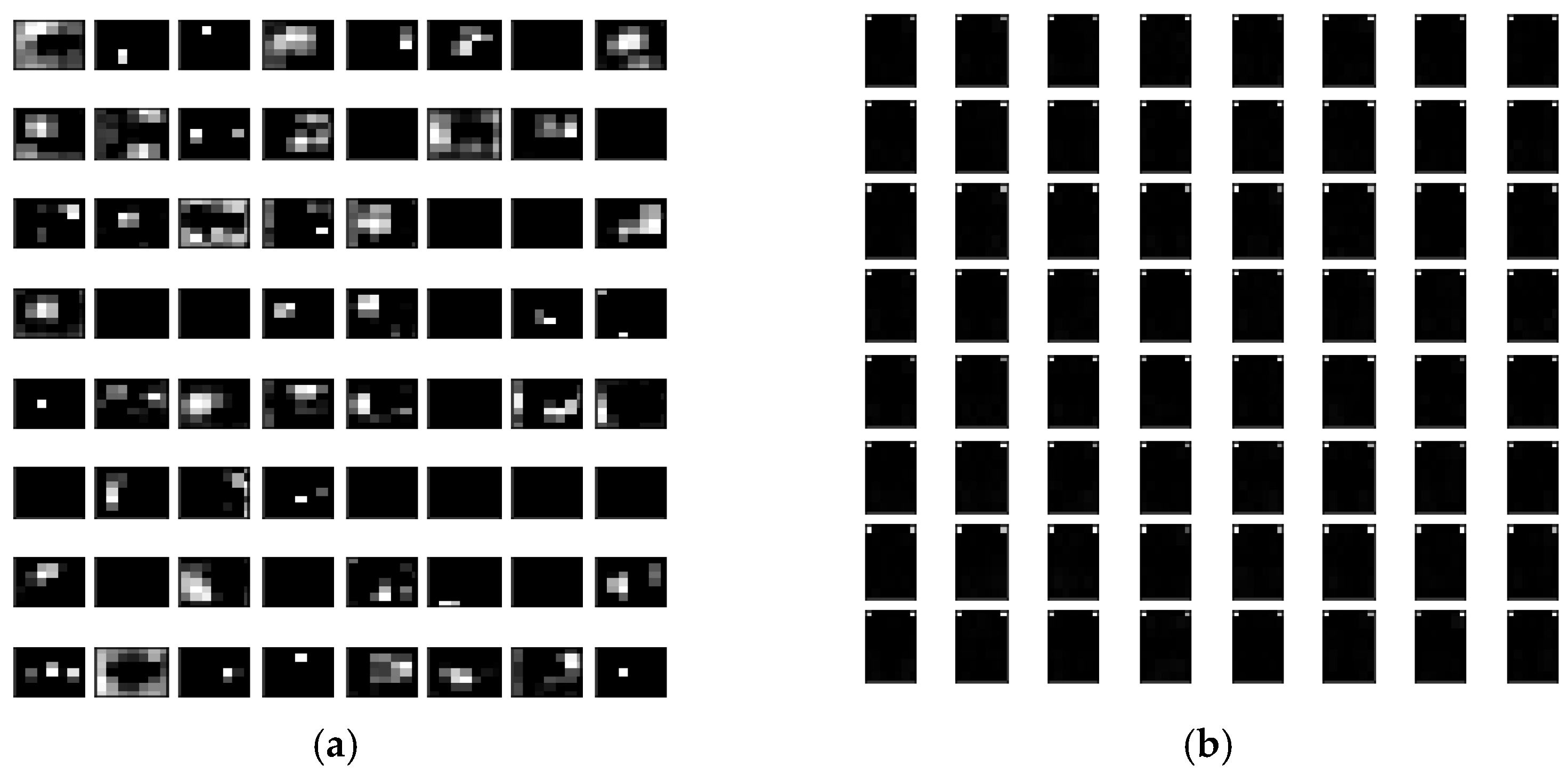

2.2. Experimental Procedures

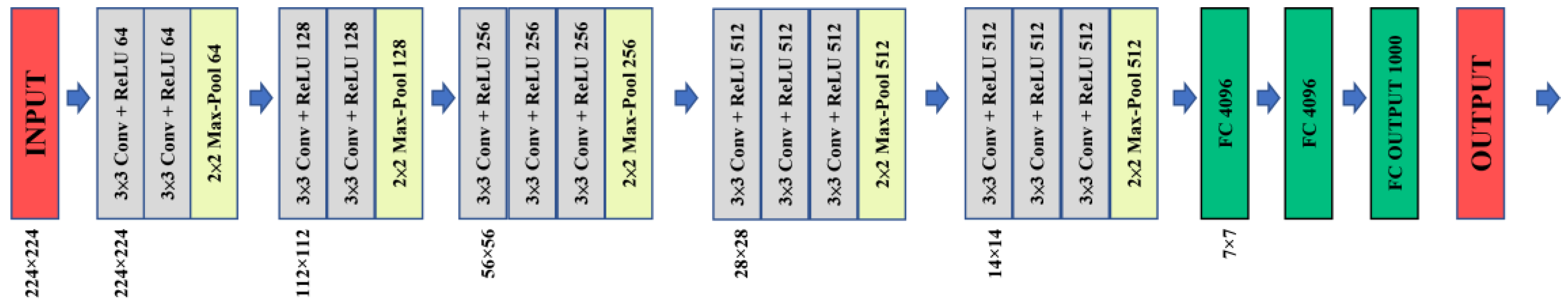

2.3. CNN

2.3.1. VGG16

2.3.2. VGG19

2.3.3. InceptionV3

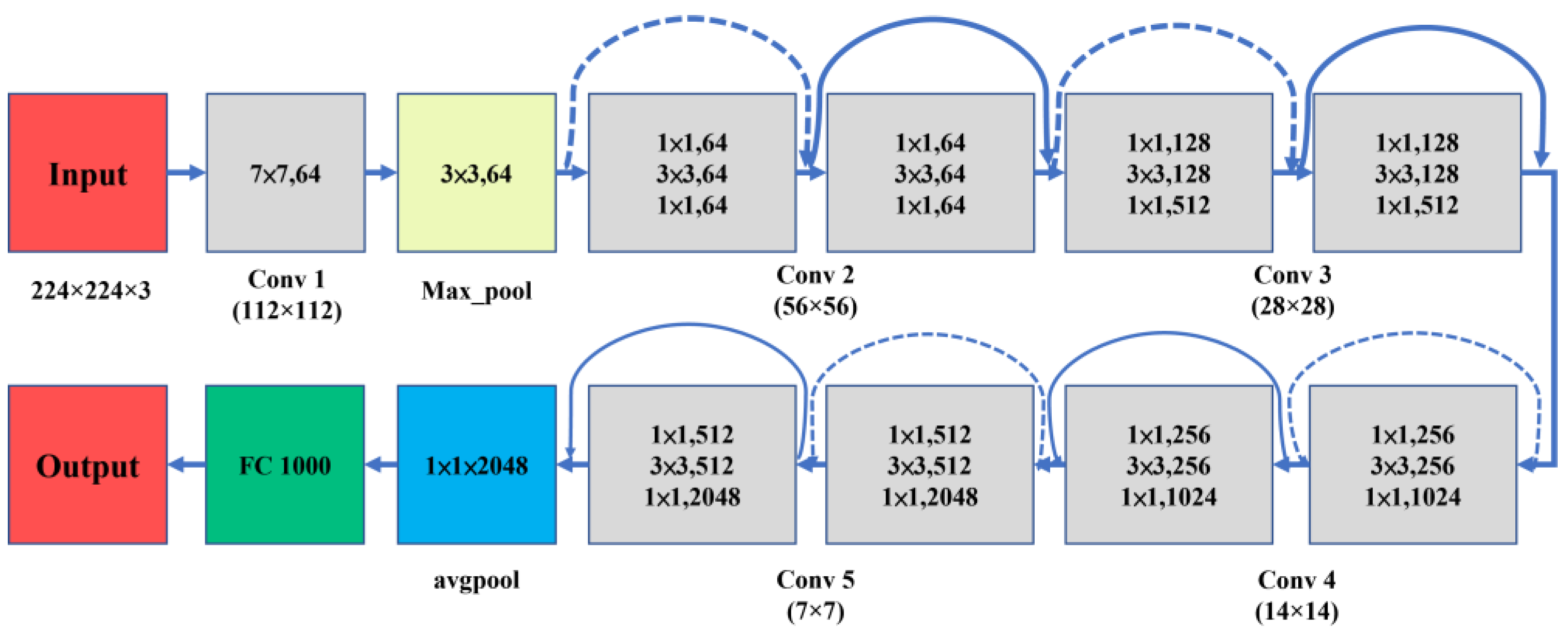

2.3.4. ResNet50

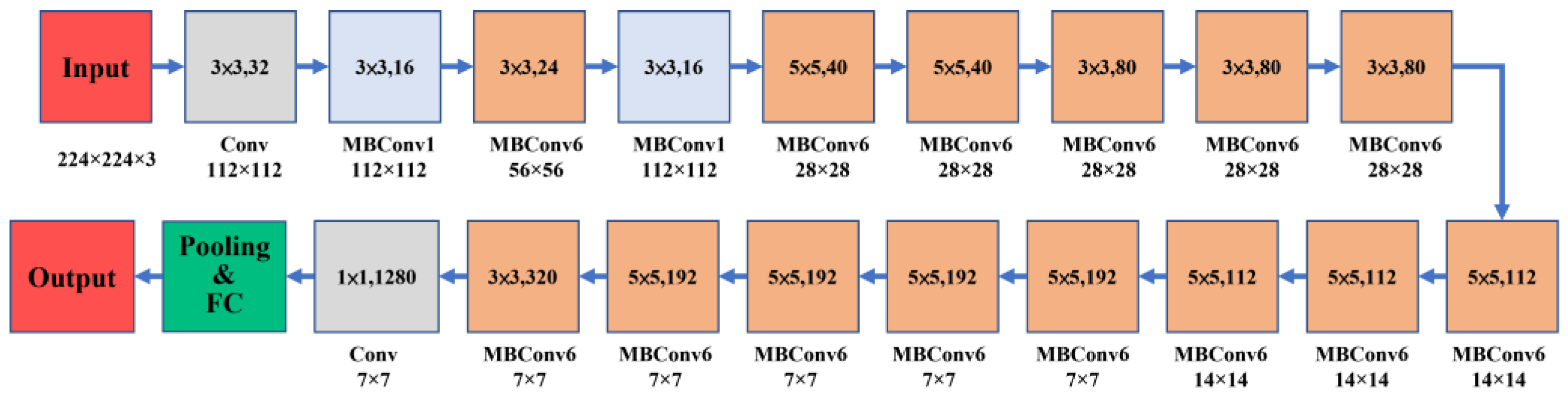

2.3.5. EfficientNetB0

2.3.6. EfficientNetV2L

2.4. Ensemble Learning Algorithms

2.4.1. Bagging

2.4.2. Boosting

1. AdaBoost: AdaBoost (adaptive boosting) builds a strong classifier from a weighted sum of the predictions of a set of weak classifiers. Each new weak classifier is trained on reweighted data in which the samples misclassified by the preceding classifiers receive larger weights. This weighted training data replaces random training samples, so that each new classifier concentrates on hard-to-classify samples. One classifier is added per iteration until a preset number of iterations is reached or the training error falls below a threshold. AdaBoost is often observed to be relatively resistant to overfitting in practice. However, because misclassified samples are repeatedly upweighted, it is sensitive to noisy data and outliers, which should be avoided to the greatest extent possible [56,57].

2. Gradient-Boosting Decision Tree (GBDT): GBDT, also known as multiple additive regression trees (MART), forms a strong learner by combining many weak learners (shallow regression trees) and is applicable to both classification and regression problems. Every prediction differs from the actual value by a residual, and each new tree is fitted to the residuals (more precisely, the negative gradients of the loss) of the current model. For classification, GBDT uses the log-likelihood (log-loss) function, so that minimizing the loss maximizes the probability assigned to the true class. To limit overfitting, the residual predicted by each new tree is multiplied by a learning rate (shrinkage) before it is added to the model. New trees are generated one after another to correct the remaining residual until it approaches 0, that is, until the prediction approaches the true value [58,59,60,61].

3. Extreme Gradient Boosting (XGBoost): XGBoost combines additive training with gradient boosting. In each iteration, the existing model is left unchanged and a new tree is added to correct the error of the previous trees. The risk of overfitting is reduced through regularization, that is, by adding to the loss function a penalty term Ω that penalizes the number of leaves and the magnitude of the leaf weights. XGBoost also borrows an idea from bagging: although the trees are built sequentially and remain dependent on one another, random feature (column) sampling can be applied when growing each tree. Whereas many machine learning methods handle sparse data poorly, XGBoost handles sparse data efficiently through sparsity-aware split finding: for each candidate split, the gain obtained by sending the missing (sparse) entries to the left child and to the right child is calculated, and the direction that gives the higher gain is selected as the default direction [61,62,63,64].

4. LightGBM: LightGBM is a GBDT implementation that uses histogram-based decision trees. Continuous feature values are first bucketed into discrete bins, and optimal split points are searched over the bin boundaries of the resulting histograms rather than over every sorted feature value. This reduces the cost of tree node splitting and makes LightGBM very memory- and time-efficient. LightGBM also uses gradient-based one-side sampling (GOSS), which retains all training instances with large gradients and randomly samples those with small gradients, and exclusive feature bundling (EFB), which bundles mutually exclusive sparse features to reduce the dimensionality [61,65,66,67].

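5. CatBoost: CatBoost is another GBDT-based model. To obtain unbiased predictions and reduce overfitting, CatBoost uses ordered boosting, in which the residual of each sample is computed with a model that has not been trained on that sample. It uses oblivious (symmetric) trees, in which every node at a given depth applies the same split condition, as base predictors. CatBoost has been reported to achieve the highest accuracies and smallest log-loss values in many competitions hosted by Kaggle [61,66,67,68,69,70].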
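The AdaBoost description above (reweight misclassified samples, add one weak classifier per round, combine by a weighted vote) can be made concrete with a minimal NumPy sketch of discrete AdaBoost for labels in {-1, +1}. It assumes one-level decision stumps as the weak classifiers; the function names are hypothetical and this is not the implementation used in this study.

```python
import numpy as np

def stump_predict(X, j, t, polarity):
    """Weak classifier: +1 where polarity * (x_j - t) >= 0, else -1."""
    return np.where(polarity * (X[:, j] - t) >= 0, 1, -1)

def fit_stump(X, y, w):
    """Exhaustively pick the stump with the lowest weighted error."""
    best = (np.inf, 0, 0.0, 1)  # (error, feature, threshold, polarity)
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j]):
            for polarity in (1, -1):
                err = w[stump_predict(X, j, t, polarity) != y].sum()
                if err < best[0]:
                    best = (err, j, t, polarity)
    return best

def adaboost_fit(X, y, n_rounds=10, tol=1e-10):
    """Discrete AdaBoost for labels y in {-1, +1}."""
    w = np.full(len(y), 1.0 / len(y))          # uniform initial sample weights
    ensemble = []
    for _ in range(n_rounds):
        err, j, t, p = fit_stump(X, y, w)
        if err >= 0.5:                         # no better than chance: stop
            break
        err = max(err, tol)                    # avoid log(0) for a perfect stump
        alpha = 0.5 * np.log((1 - err) / err)  # weight of this classifier's vote
        pred = stump_predict(X, j, t, p)
        w = w * np.exp(-alpha * y * pred)      # misclassified samples gain weight
        w /= w.sum()
        ensemble.append((alpha, j, t, p))
        if err <= tol:                         # training error below threshold
            break
    return ensemble

def adaboost_predict(ensemble, X):
    """Sign of the weighted sum of weak-classifier votes."""
    score = sum(a * stump_predict(X, j, t, p) for a, j, t, p in ensemble)
    return np.where(score >= 0, 1, -1)
```

On a toy one-feature set with labels `[1, 1, -1, -1, 1, 1]`, no single stump is correct everywhere, but three boosting rounds combine into a perfect fit on the training data.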
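The GBDT description above (fit each tree to the residual of the log-loss, shrink it by the learning rate, add trees one after another) can be sketched for binary classification on a single feature. This is a simplified illustration under stated assumptions: the weak learners are hypothetical one-split regression trees, and each leaf value is the mean residual (a plain gradient step) rather than the Newton-step leaf values used by many libraries.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fit_regression_stump(x, r):
    """Least-squares one-split tree on a 1-D feature: x <= t goes left."""
    best = None
    for t in np.unique(x)[:-1]:              # needs at least two distinct x values
        left, right = r[x <= t], r[x > t]
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if best is None or sse < best[0]:
            best = (sse, t, left.mean(), right.mean())
    return best[1:]                          # (threshold, left value, right value)

def gbdt_fit(x, y, n_trees=50, learning_rate=0.1):
    """Binary GBDT with the log-loss; y must contain both classes 0 and 1."""
    p = y.mean()
    f0 = np.log(p / (1 - p))                 # initial prediction: log-odds of class 1
    F = np.full(len(y), f0)
    trees = []
    for _ in range(n_trees):
        residual = y - sigmoid(F)            # negative gradient of the log-loss
        t, lv, rv = fit_regression_stump(x, residual)
        F += learning_rate * np.where(x <= t, lv, rv)  # shrunken correction
        trees.append((t, lv, rv))
    return f0, learning_rate, trees

def gbdt_predict_proba(model, x):
    """Probability of class 1: sigmoid of the initial log-odds plus all shrunken trees."""
    f0, learning_rate, trees = model
    F = np.full(len(x), f0, dtype=float)
    for t, lv, rv in trees:
        F += learning_rate * np.where(x <= t, lv, rv)
    return sigmoid(F)
```

The learning rate trades speed for stability: each tree moves the prediction only a fraction of the way toward the residual, so more trees are needed, but no single tree can dominate the model.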
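The sparsity-aware split finding described for XGBoost can be illustrated for one feature. This sketch assumes the regularized gain from the XGBoost paper, where `lam` and `gamma` correspond to the L2 leaf-weight penalty and the per-leaf penalty in Ω, and represents missing entries as `None`. It scans each candidate threshold twice, once with the missing entries sent right and once with them sent left. It is illustrative only and is not code from the XGBoost library.

```python
import math

def split_gain(GL, HL, GR, HR, lam=1.0, gamma=0.0):
    """Gain of splitting a node into left/right children (gradient and hessian sums)."""
    def score(G, H):
        return G * G / (H + lam)
    return 0.5 * (score(GL, HL) + score(GR, HR) - score(GL + GR, HL + HR)) - gamma

def best_split_with_default(values, grads, hess, lam=1.0, gamma=0.0):
    """values may contain None (missing); returns (gain, threshold, default_left)."""
    G, H = sum(grads), sum(hess)
    present = sorted((v, g, h) for v, g, h in zip(values, grads, hess) if v is not None)
    best = (-math.inf, None, None)
    # Pass 1: missing entries go right; accumulate the left child from the smallest value.
    GL = HL = 0.0
    for i in range(len(present) - 1):
        v, g, h = present[i]
        GL += g
        HL += h
        if present[i + 1][0] == v:
            continue                         # cannot split between equal values
        thr = (v + present[i + 1][0]) / 2    # rows with x < thr go left
        gain = split_gain(GL, HL, G - GL, H - HL, lam, gamma)
        if gain > best[0]:
            best = (gain, thr, False)
    # Pass 2: missing entries go left; accumulate the right child from the largest value.
    GR = HR = 0.0
    for i in range(len(present) - 1, 0, -1):
        v, g, h = present[i]
        GR += g
        HR += h
        if present[i - 1][0] == v:
            continue
        thr = (v + present[i - 1][0]) / 2
        gain = split_gain(G - GR, H - HR, GR, HR, lam, gamma)
        if gain > best[0]:
            best = (gain, thr, True)
    return best
```

When the missing rows have gradients resembling the large-valued rows, the chosen default direction is right. When they resemble the small-valued rows, it flips to left, without any imputation step.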
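The histogram idea behind LightGBM can be sketched as follows: gradients are summed per bin, and only the bin boundaries are scanned as split candidates. For simplicity the sketch assumes equal-width bins and drops the constant factor and per-leaf penalty from the gain (which do not change the argmax). LightGBM itself constructs its bins differently, and the function names here are hypothetical.

```python
import numpy as np

def build_histogram(feature, grads, hess, n_bins=8):
    """Bucket a continuous feature into equal-width bins and sum gradients per bin."""
    edges = np.linspace(feature.min(), feature.max(), n_bins + 1)
    bins = np.clip(np.digitize(feature, edges[1:-1]), 0, n_bins - 1)
    g_hist = np.bincount(bins, weights=grads, minlength=n_bins)
    h_hist = np.bincount(bins, weights=hess, minlength=n_bins)
    return edges, g_hist, h_hist

def best_histogram_split(edges, g_hist, h_hist, lam=1.0):
    """Scan only the n_bins - 1 inner bin boundaries instead of every distinct value."""
    G, H = g_hist.sum(), h_hist.sum()
    GL = np.cumsum(g_hist)[:-1]              # left child = bins 0..k
    HL = np.cumsum(h_hist)[:-1]
    GR, HR = G - GL, H - HL
    gain = GL**2 / (HL + lam) + GR**2 / (HR + lam) - G**2 / (H + lam)
    k = int(np.argmax(gain))
    return edges[k + 1], gain[k]             # threshold: rows with x < edge go left
```

With 16 distinct values and 8 bins, only 7 candidate splits are evaluated instead of 15, and the histogram is built in a single pass over the data. This is where the memory and time savings come from.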
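The two CatBoost ingredients named above can each be sketched in a few lines. The first function evaluates an oblivious tree, in which each depth level applies one shared test, so the leaf index is simply the bit string of test outcomes. The second illustrates the ordered principle behind ordered boosting: the prediction used for a sample's residual never sees that sample's own target. As a loudly labeled simplification, the "model" in the second function is just the running mean of the targets that precede each sample in a random permutation, not CatBoost's supporting tree models. Both functions are hypothetical illustrations, not the CatBoost library.

```python
import numpy as np

def oblivious_tree_predict(X, splits, leaf_values):
    """Evaluate an oblivious (symmetric) tree.

    splits: one (feature_index, threshold) pair per depth level; every node on that
    level applies the same test. leaf_values: array of length 2 ** len(splits).
    """
    index = np.zeros(len(X), dtype=int)
    for feature, threshold in splits:
        bit = (X[:, feature] > threshold).astype(int)
        index = (index << 1) | bit           # each level appends one bit to the leaf index
    return np.asarray(leaf_values)[index]

def ordered_residuals(y, prior=0.0, seed=0):
    """Residuals where the prediction for sample i never uses y[i] itself.

    Simplification: the 'model' for each sample is the mean target of the samples
    that precede it in a random permutation (or `prior` for the first one),
    standing in for the supporting tree models of real ordered boosting.
    """
    rng = np.random.default_rng(seed)
    perm = rng.permutation(len(y))
    residuals = np.empty(len(y))
    running_sum, count = 0.0, 0
    for i in perm:
        pred = running_sum / count if count else prior
        residuals[i] = y[i] - pred
        running_sum += y[i]
        count += 1
    return perm, residuals
```

Because all nodes on a level share one test, an oblivious tree of depth d is fully described by d (feature, threshold) pairs and 2^d leaf values, which keeps trees simple and makes prediction fast.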

3. Experiments

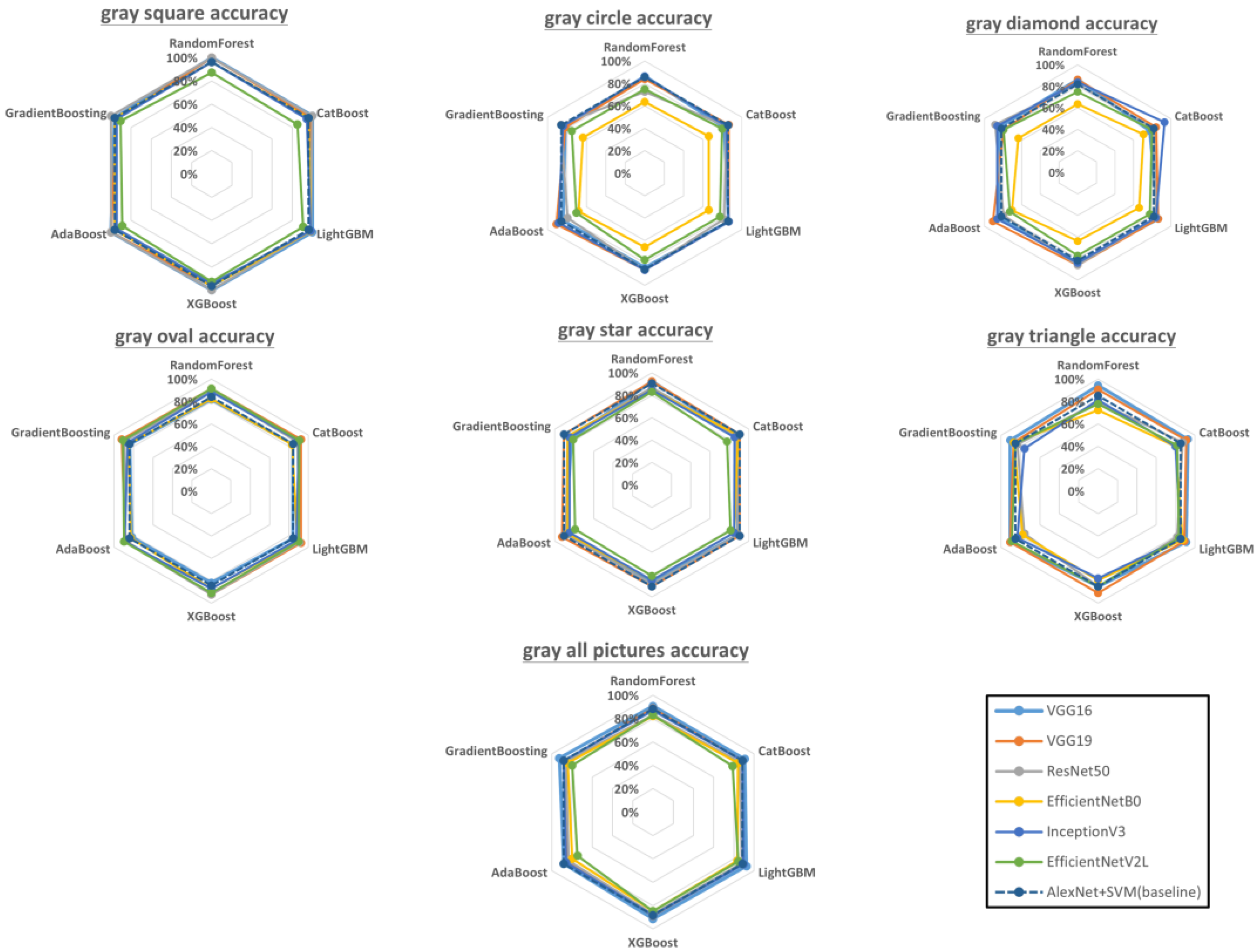

3.1. Effects of Geometric Differences

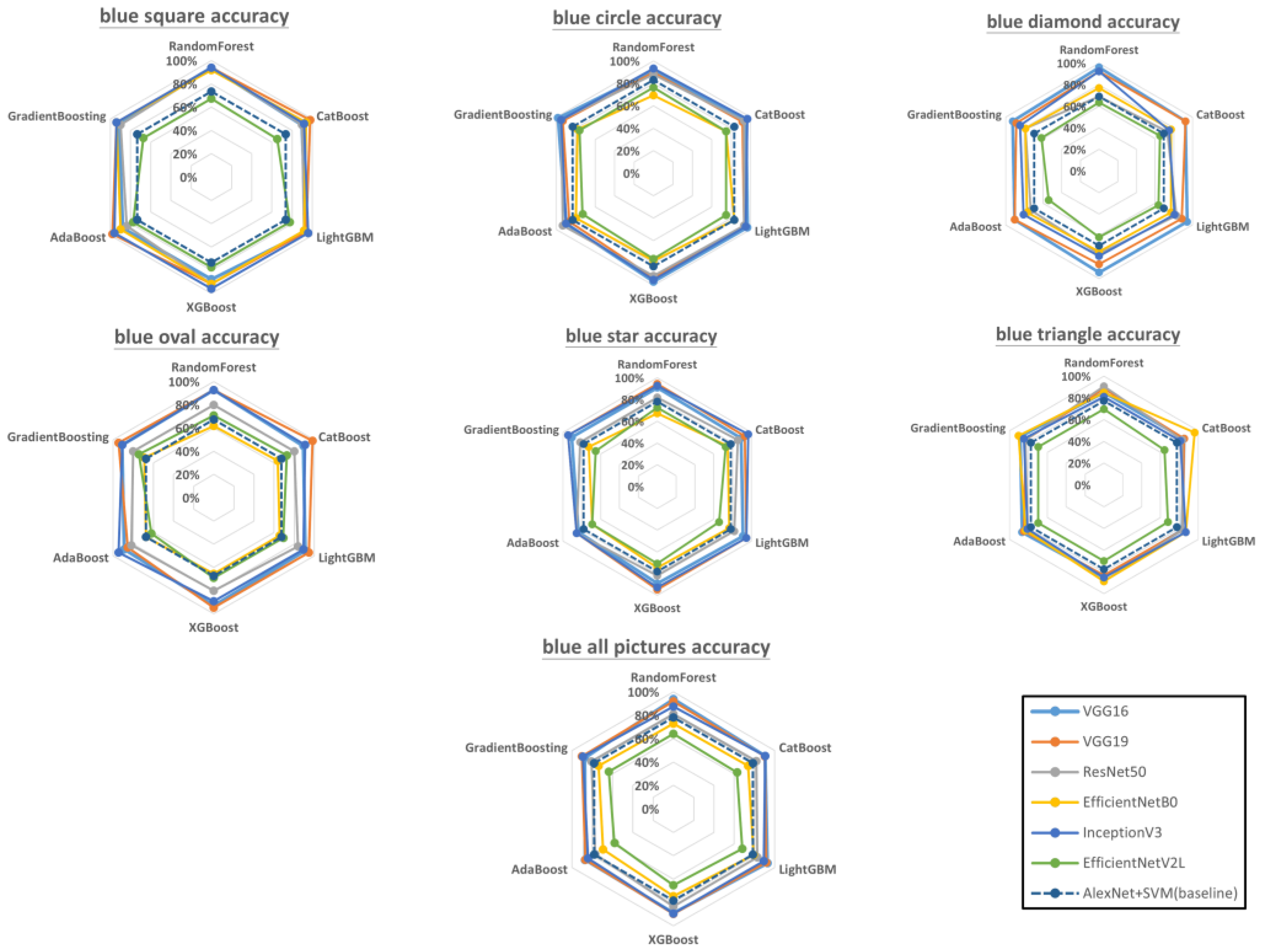

3.2. Effects of Color

4. Discussion

5. Conclusions

- The surface quality of FDM 3D-printed objects can be accurately classified by combining transfer learning with ensemble learning.

- The combination of VGG16 or VGG19 with ensemble learning gave the highest accuracy for the gray-colored objects. Although combinations involving EfficientNetB0 or EfficientNetV2L achieved the highest accuracy in a few instances, these two models were relatively inaccurate in most situations.

- Although boosting ensembles often outperform bagging ensembles, in this application (quality inspection of 3D-printed objects) combining a transfer learning model with a bagging ensemble (random forest) often gave better accuracy. Therefore, this study could not establish that boosting is superior to bagging, or vice versa.

- Although deeper networks with newer architectures often achieve higher classification accuracy, this trend did not hold for the quality inspection of FDM-printed objects in this study.

- In this study, the highest classification accuracy achieved by the model combinations did not vary significantly with color or geometry, suggesting that the filament color does not significantly affect the classification accuracy.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 100% | 100% | 100% | 96.30% | 96.30% | 87.04% |
| CatBoost | 100% | 98.15% | 100% | 96.30% | 94.44% | 85.19% |
| LightGBM | 100% | 98.15% | 98.15% | 98.15% | 98.10% | 90.74% |
| XGBoost | 100% | 96.30% | 100% | 96.30% | 94.44% | 92.59% |
| AdaBoost | 100% | 100% | 100% | 96.30% | 94.44% | 88.89% |
| GradientBoosting | 96.30% | 94.44% | 100% | 96.30% | 94.44% | 90.74% |

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 86.36% | 84.09% | 72.73% | 63.64% | 86.36% | 75.00% |
| CatBoost | 81.82% | 86.36% | 84.09% | 65.91% | 84.09% | 79.55% |
| LightGBM | 86.36% | 86.36% | 81.82% | 65.91% | 86.40% | 77.27% |
| XGBoost | 84.09% | 86.36% | 86.36% | 65.91% | 86.36% | 77.27% |
| AdaBoost | 84.09% | 90.91% | 79.55% | 68.18% | 88.64% | 70.45% |
| GradientBoosting | 81.82% | 81.82% | 86.36% | 63.64% | 84.09% | 75.00% |

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 86.36% | 86.36% | 81.82% | 63.64% | 84.09% | 75.00% |
| CatBoost | 77.27% | 84.09% | 81.82% | 70.45% | 93.18% | 79.55% |
| LightGBM | 86.36% | 86.36% | 84.09% | 65.91% | 84.10% | 77.27% |
| XGBoost | 84.09% | 86.36% | 86.36% | 63.64% | 84.09% | 77.27% |
| AdaBoost | 84.09% | 90.91% | 79.55% | 70.45% | 86.36% | 72.73% |
| GradientBoosting | 84.09% | 79.55% | 88.64% | 63.64% | 86.36% | 79.55% |

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 81.82% | 91.60% | 89.31% | 82.44% | 88.55% | 91.60% |
| CatBoost | 84.09% | 92.37% | 88.55% | 83.21% | 90.08% | 90.84% |
| LightGBM | 84.09% | 92.37% | 90.84% | 88.55% | 87.80% | 90.08% |
| XGBoost | 81.82% | 92.37% | 92.37% | 87.02% | 87.02% | 90.84% |
| AdaBoost | 81.82% | 88.55% | 89.31% | 83.21% | 89.31% | 90.08% |
| GradientBoosting | 84.09% | 92.37% | 89.31% | 84.73% | 86.26% | 90.84% |

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 92.45% | 92.45% | 86.79% | 84.91% | 84.91% | 83.02% |
| CatBoost | 86.79% | 90.57% | 86.79% | 88.68% | 84.91% | 77.36% |
| LightGBM | 88.68% | 90.57% | 88.68% | 84.91% | 84.90% | 81.13% |
| XGBoost | 86.79% | 90.57% | 88.68% | 84.91% | 84.91% | 81.13% |
| AdaBoost | 88.68% | 92.45% | 88.68% | 86.79% | 84.91% | 79.25% |
| GradientBoosting | 83.02% | 90.57% | 86.79% | 86.79% | 84.91% | 81.13% |

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 94.44% | 90.74% | 79.63% | 72.22% | 81.48% | 77.78% |
| CatBoost | 92.59% | 90.74% | 87.04% | 79.63% | 79.63% | 81.48% |
| LightGBM | 90.74% | 88.89% | 81.48% | 87.04% | 85.20% | 83.33% |
| XGBoost | 85.19% | 90.74% | 85.19% | 79.63% | 77.78% | 85.19% |
| AdaBoost | 90.74% | 90.74% | 75.93% | 77.78% | 83.33% | 88.89% |
| GradientBoosting | 90.74% | 87.04% | 83.33% | 87.04% | 75.93% | 85.19% |

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 90.72% | 88.66% | 83.16% | 82.16% | 86.94% | 83.16% |
| CatBoost | 90.72% | 88.32% | 85.91% | 84.19% | 88.32% | 78.69% |
| LightGBM | 92.44% | 89.00% | 87.63% | 83.51% | 89.30% | 84.44% |
| XGBoost | 91.41% | 88.66% | 87.29% | 84.88% | 88.32% | 84.79% |
| AdaBoost | 87.97% | 84.88% | 83.16% | 79.73% | 85.91% | 74.57% |
| GradientBoosting | 92.44% | 87.63% | 86.25% | 84.19% | 88.32% | 79.73% |

Appendix B

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 100% | 100% | 98.44% | 96.88% | 100% | 92.19% |
| CatBoost | 100% | 100% | 100% | 95.31% | 98.44% | 96.88% |
| LightGBM | 100% | 100% | 98.15% | 95.31% | 98.40% | 93.75% |
| XGBoost | 100% | 100% | 100% | 95.31% | 94.44% | 96.88% |
| AdaBoost | 100% | 100% | 98.44% | 96.88% | 98.44% | 100% |
| GradientBoosting | 100% | 100% | 100% | 96.88% | 98.44% | 96.88% |

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 90.91% | 93.94% | 84.85% | 89.39% | 93.94% | 83.33% |
| CatBoost | 89.39% | 90.91% | 89.39% | 81.82% | 84.85% | 75.76% |
| LightGBM | 89.39% | 92.42% | 90.91% | 83.33% | 86.40% | 75.76% |
| XGBoost | 90.91% | 87.88% | 87.88% | 81.82% | 83.33% | 69.70% |
| AdaBoost | 84.85% | 84.85% | 89.39% | 83.33% | 81.82% | 68.18% |
| GradientBoosting | 84.85% | 90.91% | 87.88% | 81.82% | 86.36% | 74.24% |

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 85.19% | 83.33% | 81.48% | 88.89% | 77.78% | 85.19% |
| CatBoost | 87.04% | 88.89% | 79.63% | 88.89% | 77.78% | 83.33% |
| LightGBM | 85.19% | 87.04% | 77.78% | 92.59% | 83.30% | 74.07% |
| XGBoost | 81.48% | 83.33% | 77.78% | 88.89% | 77.78% | 72.22% |
| AdaBoost | 77.78% | 83.33% | 74.07% | 81.49% | 79.63% | 77.78% |
| GradientBoosting | 79.63% | 81.48% | 84.48% | 90.74% | 79.63% | 79.63% |

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 91.53% | 96.61% | 86.44% | 86.44% | 96.61% | 81.36% |
| CatBoost | 94.92% | 98.31% | 93.22% | 88.14% | 96.61% | 81.36% |
| LightGBM | 89.83% | 93.22% | 86.44% | 88.14% | 91.50% | 81.36% |
| XGBoost | 89.83% | 94.92% | 93.22% | 86.44% | 98.31% | 79.66% |
| AdaBoost | 89.83% | 89.83% | 88.14% | 79.66% | 91.53% | 71.19% |
| GradientBoosting | 91.53% | 96.61% | 91.53% | 88.14% | 91.53% | 91.36% |

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 87.50% | 89.58% | 89.58% | 93.75% | 89.58% | 83.33% |
| CatBoost | 89.58% | 91.67% | 93.75% | 93.75% | 89.58% | 83.33% |
| LightGBM | 87.50% | 93.75% | 93.75% | 89.58% | 87.50% | 85.42% |
| XGBoost | 87.50% | 93.75% | 93.75% | 93.75% | 87.50% | 87.50% |
| AdaBoost | 89.58% | 91.67% | 95.83% | 91.67% | 87.50% | 89.58% |
| GradientBoosting | 85.42% | 89.58% | 95.83% | 95.83% | 83.33% | 83.33% |

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 86% | 90% | 80% | 76% | 90% | 84% |
| CatBoost | 90% | 90% | 80% | 78% | 88% | 82% |
| LightGBM | 94% | 84% | 76% | 80% | 86% | 88% |
| XGBoost | 92% | 88% | 82% | 80% | 84% | 82% |
| AdaBoost | 94% | 88% | 78% | 78% | 94% | 76% |
| GradientBoosting | 92% | 86% | 82% | 78% | 86% | 82% |

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 91.72% | 92.02% | 83.74% | 86.50% | 90.18% | 82.82% |
| CatBoost | 91.41% | 91.10% | 87.42% | 86.81% | 92.33% | 84.05% |
| LightGBM | 90.80% | 92.02% | 88.34% | 84.36% | 91.40% | 83.44% |
| XGBoost | 91.10% | 92.33% | 87.12% | 80.67% | 91.72% | 84.97% |
| AdaBoost | 82.21% | 88.65% | 80.67% | 77.61% | 85.28% | 76.38% |
| GradientBoosting | 90.18% | 92.02% | 86.50% | 80.67% | 91.10% | 84.66% |

Appendix C

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 93.88% | 93.88% | 93.88% | 91.84% | 93.88% | 67.35% |
| CatBoost | 89.80% | 97.96% | 89.80% | 93.88% | 91.84% | 65.31% |
| LightGBM | 93.88% | 93.88% | 91.84% | 91.84% | 95.90% | 77.55% |
| XGBoost | 87.76% | 91.84% | 91.84% | 91.84% | 95.92% | 77.55% |
| AdaBoost | 87.76% | 97.96% | 83.67% | 89.80% | 95.92% | 77.55% |
| GradientBoosting | 91.84% | 93.88% | 89.80% | 93.88% | 93.88% | 67.35% |

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 91.53% | 89.83% | 88.14% | 69.49% | 93.22% | 76.27% |
| CatBoost | 94.92% | 91.53% | 93.22% | 74.58% | 96.61% | 74.58% |
| LightGBM | 96.61% | 93.22% | 93.22% | 83.05% | 94.90% | 74.58% |
| XGBoost | 96.61% | 93.22% | 91.53% | 77.97% | 94.92% | 76.27% |
| AdaBoost | 89.83% | 86.44% | 93.22% | 79.66% | 89.83% | 72.88% |
| GradientBoosting | 98.31% | 93.22% | 94.92% | 77.97% | 94.92% | 76.27% |

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 96.15% | 92.31% | 69.23% | 76.92% | 92.31% | 63.46% |
| CatBoost | 92.31% | 92.31% | 73.08% | 76.92% | 75.00% | 65.38% |
| LightGBM | 94.23% | 88.46% | 82.69% | 76.92% | 80.80% | 63.46% |
| XGBoost | 94.23% | 86.54% | 76.92% | 75.00% | 78.85% | 61.54% |
| AdaBoost | 90.38% | 90.38% | 73.08% | 76.92% | 80.77% | 53.85% |
| GradientBoosting | 92.31% | 88.46% | 78.85% | 78.85% | 84.62% | 61.54% |

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 92.73% | 92.73% | 80.00% | 61.82% | 92.73% | 70.91% |
| CatBoost | 89.09% | 98.18% | 80.00% | 63.64% | 90.91% | 72.73% |
| LightGBM | 90.91% | 94.55% | 83.64% | 65.45% | 89.10% | 69.09% |
| XGBoost | 92.73% | 94.55% | 80.00% | 65.45% | 89.09% | 69.09% |
| AdaBoost | 89.09% | 85.45% | 81.82% | 67.27% | 94.55% | 61.82% |
| GradientBoosting | 90.91% | 94.55% | 80.00% | 69.09% | 90.91% | 74.55% |

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 90.91% | 94.55% | 81.82% | 67.27% | 92.73% | 72.73% |
| CatBoost | 89.09% | 92.73% | 85.45% | 74.55% | 96.36% | 72.73% |
| LightGBM | 90.91% | 94.55% | 81.82% | 76.36% | 94.50% | 65.45% |
| XGBoost | 89.09% | 94.55% | 81.82% | 74.55% | 92.73% | 70.91% |
| AdaBoost | 83.64% | 85.45% | 85.45% | 69.09% | 85.45% | 69.09% |
| GradientBoosting | 90.61% | 94.55% | 81.82% | 72.73% | 94.55% | 65.45% |

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 84.91% | 88.68% | 90.57% | 84.91% | 81.13% | 69.81% |
| CatBoost | 84.91% | 84.91% | 83.02% | 96.23% | 81.13% | 64.15% |
| LightGBM | 83.02% | 83.02% | 83.02% | 86.79% | 86.80% | 67.92% |
| XGBoost | 84.91% | 83.02% | 88.68% | 88.68% | 84.91% | 69.81% |
| AdaBoost | 86.79% | 84.91% | 81.13% | 83.02% | 81.13% | 69.81% |
| GradientBoosting | 88.68% | 83.02% | 83.02% | 90.57% | 84.91% | 69.81% |

| | VGG16 | VGG19 | ResNet50 | EfficientNetB0 | InceptionV3 | EfficientNetV2L |
|---|---|---|---|---|---|---|
| RandomForest | 93.73% | 91.75% | 81.19% | 72.94% | 87.46% | 64.03% |
| CatBoost | 90.43% | 90.10% | 81.85% | 73.60% | 90.43% | 62.71% |
| LightGBM | 92.74% | 91.42% | 82.84% | 78.88% | 89.10% | 67.99% |
| XGBoost | 89.11% | 89.77% | 83.17% | 74.59% | 89.44% | 65.35% |
| AdaBoost | 87.13% | 86.80% | 77.23% | 69.31% | 84.16% | 58.09% |
| GradientBoosting | 87.46% | 90.10% | 80.86% | 73.60% | 89.11% | 63.70% |

Appendix D

| | Gray | Green | Blue |
|---|---|---|---|
| Square | 96.30% | 95.31% | 73.47% |
| Triangle | 85.19% | 90.00% | 77.36% |
| Circle | 85.71% | 90.30% | 83.05% |
| Oval | 84.09% | 81.36% | 67.27% |
| Diamond | 81.82% | 61.11% | 69.23% |
| Star | 90.57% | 85.42% | 78.05% |
| All pictures | 88.32% | 86.50% | 78.22% |

References

1. Singamneni, S.; Lv, Y.; Hewitt, A.; Chalk, R.; Thomas, W.; Jordison, D. Additive Manufacturing for the Aircraft Industry: A Review. J. Aeronaut. Aerospace Eng. 2019, 8, 351–371.
2. Lee, J.Y.; An, J.; Chua, C.K. Fundamentals and applications of 3D printing for novel materials. Appl. Mater. Today 2017, 7, 120–133.
3. Liu, J.; Sheng, L.; He, Z.Z. Liquid metal wheeled 3D-printed vehicle. In Liquid Metal Soft Machines; Springer: Singapore, 2019; pp. 359–372.
4. Ricles, L.M.; Coburn, J.C.; Di Prima, M.; Oh, S.S. Regulating 3D-printed medical products. Sci. Transl. Med. 2018, 10, eaan6521.
5. Calignano, F.; Manfredi, D.; Ambrosio, E.P.; Biamino, S.; Lombardi, M.; Atzeni, E.; Salmi, A.; Minetola, P.; Iuliano, L.; Fino, P. Overview on additive manufacturing technologies. Proc. IEEE 2017, 105, 593–612.
6. Boschetto, A.; Bottini, L. Design for manufacturing of surfaces to improve accuracy in fused deposition modeling. Robot. Comput. Integr. Manuf. 2016, 37, 103–114.
7. Valerga, A.P.; Batista, M.; Salguero, J.; Girot, F. Influence of PLA filament conditions on characteristics of FDM parts. Materials 2018, 11, 1322.
8. Ottman, N.; Ruokolainen, L.; Suomalainen, A.; Sinkko, H.; Karisola, P.; Lehtimäki, J.; Lehto, M.; Hanski, I.; Alenius, H.; Fyhrquist, N. Soil exposure modifies the gut microbiota and supports immune tolerance in a mouse model. J. Allergy Clin. Immunol. 2019, 143, 1198–1206.e12.
9. Conway, K.M.; Pataky, G.J. Crazing in additively manufactured acrylonitrile butadiene styrene. Eng. Fract. Mech. 2019, 211, 114–124.
10. Heidari-Rarani, M.; Rafiee-Afarani, M.; Zahedi, A.M. Mechanical characterization of FDM 3D printing of continuous carbon fiber reinforced PLA composites. Compos. B Eng. 2019, 175, 107147.
11. Ahmed, S.W.; Hussain, G.; Al-Ghamdi, K.A.; Altaf, K. Mechanical properties of an additive manufactured CF-PLA/ABS hybrid composite sheet. J. Thermoplast. Compos. Mater. 2021, 34, 1577–1596.
12. Bacha, A.; Sabry, A.H.; Benhra, J. Fault diagnosis in the field of additive manufacturing (3D printing) using Bayesian networks. Int. J. Online Biomed. Eng. 2019, 15, 110–123.
13. Kadam, V.; Kumar, S.; Bongale, A.; Wazarkar, S.; Kamat, P.; Patil, S. Enhancing surface fault detection using machine learning for 3D printed products. Appl. Syst. Innov. 2021, 4, 34.
14. Zhang, Y.; Hong, G.S.; Ye, D.; Zhu, K.; Fuh, J.Y. Extraction and evaluation of melt pool, plume and spatter information for powder-bed fusion AM process monitoring. Mater. Des. 2018, 156, 458–469.
15. Priya, K.; Maheswari, P.U. Deep Learnt Features and Machine Learning Classifier for Texture classification. In Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2021; Volume 2070, p. 012108.
16. Delli, U.; Chang, S. Automated process monitoring in 3D printing using supervised machine learning. Procedia Manuf. 2018, 26, 865–870.
17. Khadilkar, A.; Wang, J.; Rai, R. Deep learning–based stress prediction for bottom-up SLA 3D printing process. Int. J. Adv. Manuf. Technol. 2019, 102, 2555–2569.
18. Baumgartl, H.; Tomas, J.; Buettner, R.; Merkel, M. A deep learning-based model for defect detection in laser-powder bed fusion using in-situ thermographic monitoring. Prog. Addit. Manuf. 2020, 5, 277–285.
19. Pham, G.N.; Lee, S.H.; Kwon, O.H.; Kwon, K.R. Anti-3D weapon model detection for safe 3D printing based on convolutional neural networks and D2 shape distribution. Symmetry 2018, 10, 90.
20. Katiyar, A.; Behal, S.; Singh, J. Automated Defect Detection in Physical Components Using Machine Learning. In Proceedings of the 8th International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 17–19 March 2021; IEEE Publications: Piscataway, NJ, USA, 2021; pp. 527–532.
21. Garfo, S.; Muktadir, M.A.; Yi, S. Defect detection on 3D print products and in concrete structures using image processing and convolution neural network. J. Mechatron. Robot. 2020, 4, 74–84.
22. Chen, W.; Zou, B.; Huang, C.; Yang, J.; Li, L.; Liu, J.; Wang, X. The defect detection of 3D-printed ceramic curved surface parts with low contrast based on deep learning. Ceram. Int. 2023, 49, 2881–2893.
23. Zhou, W.; Chen, Z.; Li, W. Dual-Stream Interactive Networks for No-Reference Stereoscopic Image Quality Assessment. IEEE Trans. Image Process. 2019, 28, 3946–3958.
24. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.
25. Paraskevoudis, K.; Karayannis, P.; Koumoulos, E.P. Real-time 3D printing remote defect detection (stringing) with computer vision and artificial intelligence. Processes 2020, 8, 1464.
26. Putra, M.A.P.; Chijioke, A.L.A.; Verana, M.; Kim, D.S.; Lee, J.M. Efficient 3D printer fault classification using a multi-block 2D-convolutional neural network. J. Korean Inst. Commun. Inf. Sci. 2022, 47, 236–245.
27. Zhou, J.; Yang, X.; Zhang, L.; Shao, S.; Bian, G. Multisignal VGG19 network with transposed convolution for rotating machinery fault diagnosis based on deep transfer learning. Shock Vib. 2020, 2020, 1–12.
28. Kim, H.; Lee, H.; Kim, J.S.; Ahn, S.H. Image-based failure detection for material extrusion process using a convolutional neural network. Int. J. Adv. Manuf. Technol. 2020, 111, 1291–1302.
29. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.
30. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.
31. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
32. He, K.; Zhang, X.; Ren, S.; Sun, J. Identity Mappings in Deep Residual Networks. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 630–645.
33. Jin, Z.; Zhang, Z.; Gu, G.X. Automated real-time detection and prediction of interlayer imperfections in additive manufacturing processes using artificial intelligence. Adv. Intell. Syst. 2020, 2, 1900130.
34. Ruhi, Z.M.; Jahan, S.; Uddin, J. A Novel Hybrid Signal Decomposition Technique for Transfer Learning Based Industrial Fault Diagnosis. Ann. Emerg. Technol. Comput. (AETiC) 2021, 5, 37–53.
35. He, D.; Liu, C.; Chen, Y.; Jin, Z.; Li, X.; Shan, S. A rolling bearing fault diagnosis method using novel lightweight neural network. Meas. Sci. Technol. 2021, 32, 125102.
36. Zhang, W.; Li, X.; Ding, Q. Deep residual learning-based fault diagnosis method for rotating machinery. ISA Trans. 2019, 95, 295–305.
37. Sun, W.; Luo, W.; Min, X.; Zhai, G.; Yang, X.; Gu, K.; Ma, S. MC360IQA: The Multi-Channel CNN for Blind 360-Degree Image Quality Assessment. In Proceedings of the 2019 IEEE International Symposium on Circuits and Systems (ISCAS), Sapporo, Japan, 26–29 May 2019; IEEE: Piscataway, NJ, USA, 2019.
38. Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.
39. Tan, M.; Le, Q. EfficientNetV2: Smaller Models and Faster Training. In Proceedings of the 38th International Conference on Machine Learning, Online, 18–24 July 2021; pp. 10096–10106.
40. Zhou, G.; Luo, L.; Xu, H.; Zhang, X.; Guo, H.; Zhao, H. Brick Yourself Within 3 Minutes. In Proceedings of the International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; IEEE Publications: Piscataway, NJ, USA, 2022; pp. 6261–6267.
41. Li, B.; Wang, Z.; Wu, N.; Shi, S.; Ma, Q. Dog Nose Print Matching with Dual Global Descriptor Based on Contrastive Learning. arXiv 2022, arXiv:2206.00580.
42. Wang, K.; Yu, L.; Xu, J.; Zhang, S.; Qin, J. Energy consumption intelligent modeling and prediction for additive manufacturing via multisource fusion and inter-layer consistency. Comput. Ind. Eng. 2022, 173, 108720.
43. Banadaki, Y.; Razaviarab, N.; Fekrmandi, H.; Li, G.; Mensah, P.; Bai, S.; Sharifi, S. Automated quality and process control for additive manufacturing using deep convolutional neural networks. Recent Prog. Mater. 2022, 4, 1.
44. Razaviarab, N.; Sharifi, S.; Banadaki, Y.M. Smart additive manufacturing empowered by a closed-loop machine learning algorithm. In Nano-, Bio-, Info-Tech Sensors and 3D Systems III; SPIE: Bellingham, WA, USA, 2019.
45. Jia, X.; Xiao, B.; Zhao, Z.; Ma, L.; Wang, N. Bearing fault diagnosis method based on CNN-LightGBM. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Sanya, China, 12–14 November 2021; IOP Publishing: Bristol, UK, 2021; Volume 1043, p. 022066.
46. Available online: https://www.prusa3d.com/product/original-prusa-i3-mk3s-kit-3/ (accessed on 29 December 2022).
47. Available online: https://tw-3dp.com/ (accessed on 29 December 2022).
48. Available online: https://www.sony.com.tw/en/electronics/interchangeable-lens-cameras/ilce-7m3-body-kit (accessed on 29 December 2022).
49. Available online: https://colab.research.google.com/ (accessed on 29 December 2022).
50. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.
51. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.
52. Zhao, X.; Wu, Y.; Lee, D.L.; Cui, W. iForest: Interpreting random forests via visual analytics. IEEE Trans. Vis. Comput. Graph. 2018, 25, 407–416.
53. Bühlmann, P. Bagging, boosting and ensemble methods. In Handbook of Computational Statistics; Springer: Berlin/Heidelberg, Germany, 2021; pp. 985–1012.
54. Ghojogh, B.; Crowley, M. The theory behind overfitting, cross validation, regularization, bagging, and boosting: Tutorial. arXiv 2019, arXiv:1905.12787.
55. Ganaie, M.A.; Hu, M. Ensemble Deep Learning: A Review. arXiv 2021, arXiv:2104.02395.
56. Freund, Y.; Schapire, R.E. A decision-theoretic generalization of on-line learning and an application to boosting. J. Comput. Syst. Sci. 1997, 55, 119–139.
57. Wang, F.; Li, Z.; He, F.; Wang, R.; Yu, W.; Nie, F. Feature learning viewpoint of AdaBoost and a new algorithm. IEEE Access 2019, 7, 149890–149899.
58. Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Statist. 2001, 29, 1189–1232.
59. Anghel, A.; Papandreou, N.; Parnell, T.; De Palma, A.; Pozidis, H. Benchmarking and optimization of gradient boosting decision tree algorithms. arXiv 2018, arXiv:1809.04559.
60. Shi, Y.; Li, J.; Li, Z. Gradient boosting with piece-wise linear regression trees. arXiv 2018, arXiv:1802.05640.
61. Bentéjac, C.; Csörgő, A.; Martínez-Muñoz, G. A comparative analysis of gradient boosting algorithms. Artif. Intell. Rev. 2021, 54, 1937–1967.
62. Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.
63. Sagi, O.; Rokach, L. Ensemble learning: A survey. WIREs Data Mining Knowl. Discov. 2018, 8, e1249.
64. Zounemat-Kermani, M.; Batelaan, O.; Fadaee, M.; Hinkelmann, R. Ensemble machine learning paradigms in hydrology: A review. J. Hydrol. 2021, 598, 126266.
65. Hancock, J.; Khoshgoftaar, T.M. Leveraging LightGBM for Categorical Big Data. In Proceedings of the IEEE Seventh International Conference on Big Data Computing Service and Applications (BigDataService), Athens, Greece, 17–20 July 2021; IEEE Publications: Piscataway, NJ, USA, 2021; pp. 149–154.
66. Tanha, J.; Abdi, Y.; Samadi, N.; Razzaghi, N.; Asadpour, M. Boosting methods for multi-class imbalanced data classification: An experimental review. J. Big Data 2020, 7, 1–47.
67. Zhang, Y.; Liu, J.; Shen, W. A review of ensemble learning algorithms used in remote sensing applications. Appl. Sci. 2022, 12, 8654.
68. Dorogush, A.V.; Ershov, V.; Gulin, A. CatBoost: Gradient boosting with categorical features support. arXiv 2018, arXiv:1810.11363.
69. Prokhorenkova, L.; Gusev, G.; Vorobev, A.; Dorogush, A.V.; Gulin, A. CatBoost: Unbiased boosting with categorical features. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 6639–6649.
70. Hancock, J.T.; Khoshgoftaar, T.M. CatBoost for big data: An interdisciplinary review. J. Big Data 2020, 7, 94.
71. González, S.; García, S.; Del Ser, J.; Rokach, L.; Herrera, F. A practical tutorial on bagging and boosting based ensembles for machine learning: Algorithms, software tools, performance study, practical perspectives and opportunities. Inf. Fusion 2020, 64, 205–237.
72. Shrestha, A.; Mahmood, A. Review of deep learning algorithms and architectures. IEEE Access 2019, 7, 53040–53065.
73. Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L.; et al. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 53.
74. Wang, X.; Chen, C.; Yuan, J.; Chen, G. Color reproduction accuracy promotion of 3D-printed surfaces based on microscopic image analysis. Int. J. Pattern Recognit. Artif. Intell. 2020, 34, 2054004.
75. Li, Z.; Zhang, Z.; Shi, J.; Wu, D. Prediction of surface roughness in extrusion-based additive manufacturing with machine learning. Robot. Comput. Integr. Manuf. 2019, 57, 488–495.
76. Amihai, I.; Gitzel, R.; Kotriwala, A.M.; Pareschi, D.; Subbiah, S.; Sosale, G. An Industrial Case Study Using Vibration Data and Machine Learning to Predict Asset Health. In Proceedings of the 20th Conference on Business Informatics (CBI), Vienna, Austria, 11–13 July 2018; IEEE Publications: Piscataway, NJ, USA, 2018.
77. Paolanti, M.; Romeo, L.; Felicetti, A.; Mancini, A.; Frontoni, E.; Loncarski, J. Machine Learning Approach for Predictive Maintenance in Industry 4.0. In Proceedings of the 14th IEEE/ASME International Conference on Mechatronic and Embedded Systems and Applications (MESA), Oulu, Finland, 2–4 July 2018; IEEE Publications: Piscataway, NJ, USA, 2018; pp. 1–6.
78. Jayasudha, M.; Elangovan, M.; Mahdal, M.; Priyadarshini, J. Accurate estimation of tensile strength of 3D printed parts using machine learning algorithms. Processes 2022, 10, 1158.
79. Gardner, J.M.; Hunt, K.A.; Ebel, A.B.; Rose, E.S.; Zylich, S.C.; Jensen, B.D.; Wise, K.E.; Siochi, E.J.; Sauti, G.; et al. Machines as craftsmen: Localized parameter setting optimization for fused filament fabrication 3D printing. Adv. Mater. Technol. 2019, 4, 1800653.
80. Rahmani Dabbagh, S.R.; Ozcan, O.; Tasoglu, S. Machine learning-enabled optimization of extrusion-based 3D printing. Methods 2022, 206, 27–40.

| Stage | Operator | Stride | Channels | Layers |
|---|---|---|---|---|
| 0 | Conv3 × 3 | 2 | 32 | 1 |
| 1 | Fused-MBConv1, k3 × 3 | 1 | 32 | 4 |
| 2 | Fused-MBConv4, k3 × 3 | 2 | 64 | 7 |
| 3 | Fused-MBConv4, k3 × 3 | 2 | 96 | 7 |
| 4 | MBConv4, k3 × 3, SE0.25 | 2 | 192 | 10 |
| 5 | MBConv6, k3 × 3, SE0.25 | 1 | 224 | 19 |
| 6 | MBConv6, k3 × 3, SE0.25 | 2 | 384 | 25 |
| 7 | MBConv6, k3 × 3, SE0.25 | 1 | 640 | 7 |
| 8 | Conv1 × 1 & Pooling & FC | - | 1280 | 1 |
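The EfficientNetV2L stage table above can be read as data, which makes two of its consequences easy to check: the overall downsampling factor and the number of repeated (Fused-)MBConv blocks. This sketch only does arithmetic on the table and does not build the network. It assumes "same" padding (output side = ceil(input / stride)) and treats the unlisted stride of stage 8 as 1. The input sizes used below are illustrative, not the sizes used in this study.

```python
import math

# (stage, operator, stride, output channels, layers), transcribed from the table;
# stage 8 lists no stride and is treated here as stride 1 (assumption).
STAGES = [
    (0, "Conv3x3", 2, 32, 1),
    (1, "Fused-MBConv1, k3x3", 1, 32, 4),
    (2, "Fused-MBConv4, k3x3", 2, 64, 7),
    (3, "Fused-MBConv4, k3x3", 2, 96, 7),
    (4, "MBConv4, k3x3, SE0.25", 2, 192, 10),
    (5, "MBConv6, k3x3, SE0.25", 1, 224, 19),
    (6, "MBConv6, k3x3, SE0.25", 2, 384, 25),
    (7, "MBConv6, k3x3, SE0.25", 1, 640, 7),
    (8, "Conv1x1 & Pooling & FC", 1, 1280, 1),
]

def total_stride():
    """Overall downsampling factor: product of all stage strides."""
    return math.prod(stride for _, _, stride, _, _ in STAGES)

def spatial_size_before_pooling(input_size):
    """Side length of the last feature map, assuming 'same' padding (ceil division)."""
    size = input_size
    for _, _, stride, _, _ in STAGES:
        size = math.ceil(size / stride)
    return size

def mbconv_block_count():
    """Number of Fused-MBConv and MBConv blocks in stages 1 to 7."""
    return sum(layers for stage, _, _, _, layers in STAGES if 1 <= stage <= 7)
```

Five stride-2 stages give a total stride of 32, so a 224 × 224 input ends as a 7 × 7 map with 1280 channels before global pooling, and stages 1 to 7 contain 79 blocks in total.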

| | Gray Nondefective | Gray Defective | Green Nondefective | Green Defective | Blue Nondefective | Blue Defective |
|---|---|---|---|---|---|---|
| Square | 90 | 104 | 144 | 114 | 117 | 83 |
| Star | 124 | 134 | 97 | 119 | 76 | 90 |
| Circle | 112 | 98 | 156 | 126 | 112 | 127 |
| Oval | 71 | 122 | 107 | 131 | 124 | 107 |
| Diamond | 62 | 106 | 134 | 133 | 108 | 105 |
| Triangle | 116 | 136 | 97 | 106 | 107 | 118 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, C.-J.; Huang, W.-K.; Lin, K.-P. Three-Dimensional Printing Quality Inspection Based on Transfer Learning with Convolutional Neural Networks. Sensors 2023, 23, 491. https://doi.org/10.3390/s23010491

Yang C-J, Huang W-K, Lin K-P. Three-Dimensional Printing Quality Inspection Based on Transfer Learning with Convolutional Neural Networks. Sensors. 2023; 23(1):491. https://doi.org/10.3390/s23010491

Chicago/Turabian Style: Yang, Cheng-Jung, Wei-Kai Huang, and Keng-Pei Lin. 2023. "Three-Dimensional Printing Quality Inspection Based on Transfer Learning with Convolutional Neural Networks" Sensors 23, no. 1: 491. https://doi.org/10.3390/s23010491

APA Style: Yang, C.-J., Huang, W.-K., & Lin, K.-P. (2023). Three-Dimensional Printing Quality Inspection Based on Transfer Learning with Convolutional Neural Networks. Sensors, 23(1), 491. https://doi.org/10.3390/s23010491