DTTrans: PV Power Forecasting Using Delaunay Triangulation and TransGRU

Abstract

1. Introduction

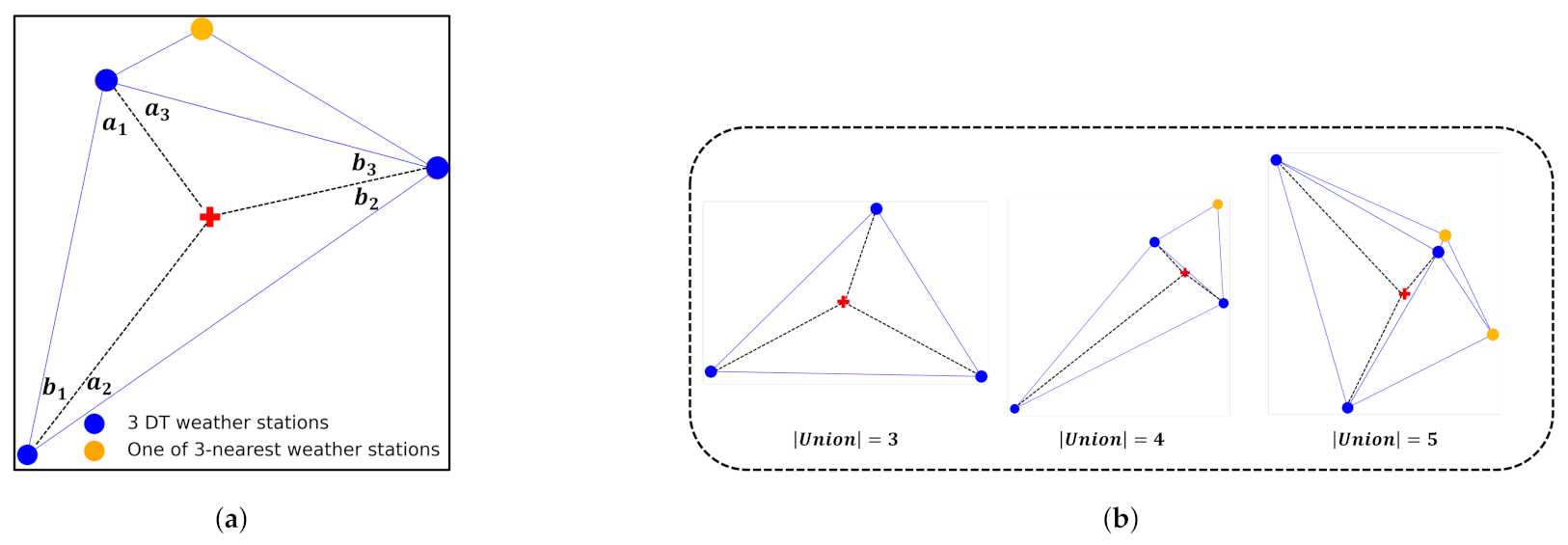

- We develop a PV generation forecasting framework based on Delaunay triangulation. Unlike previous methods, which use weather data from the closest weather station, our method selects the three weather stations that enclose the target PV site. We show that this Delaunay triangulation-based approach outperforms selecting the three nearest weather stations when the target PV site is well positioned within its Delaunay triangle.

- Interpretable AI models for PV power forecasting have not been actively studied so far. We therefore design TransGRU, an interpretable model built on a Transformer encoder that is robust against weather forecast errors. The Transformer encoder learns a feature representation of the input data that captures the importance of each feature for PV power generation. From the transformed features, the model can selectively exploit the weather stations and meteorological factors most relevant to the target PV site.

- Our framework is simple but effective, particularly for newly built PV sites with only a short period of data, e.g., one and a half years. We show that our framework overcomes the dependency on historical PV data by using Delaunay triangulation and a Transformer encoder. We provide extensive experiments using 1034 PV sites and 86 weather stations across Korea. The results show that our framework achieves a 7–15% improvement in forecasting individual PV power generation and a 41–60% improvement in forecasting aggregated PV generation when forming a virtual power plant (VPP). As a result, we achieve a forecast error of 3–4% for the VPP, which satisfies the requirement of 6% or less error for participating in the renewable energy wholesale market run by the Korea Power Exchange (KPX).
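
The station-selection idea in the first contribution can be illustrated with a minimal sketch. It assumes planar toy coordinates (not real station locations) and uses a brute-force empty-circumcircle test rather than an optimized triangulation library. The function names `delaunay_triangles` and `enclosing_stations` are hypothetical, and the Union-Inner Triangles algorithm is not reproduced here.

```python
from itertools import combinations

EPS = 1e-12


def orient(a, b, c):
    """Twice the signed area of triangle abc; positive when counter-clockwise."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def in_circumcircle(a, b, c, p):
    """True if p lies strictly inside the circumcircle of triangle abc."""
    if orient(a, b, c) < 0:
        b, c = c, b  # enforce counter-clockwise order for the determinant test
    ax, ay = a[0] - p[0], a[1] - p[1]
    bx, by = b[0] - p[0], b[1] - p[1]
    cx, cy = c[0] - p[0], c[1] - p[1]
    det = ((ax * ax + ay * ay) * (bx * cy - cx * by)
           - (bx * bx + by * by) * (ax * cy - cx * ay)
           + (cx * cx + cy * cy) * (ax * by - bx * ay))
    return det > EPS


def delaunay_triangles(points):
    """Brute force: keep each non-degenerate triple whose circumcircle is empty."""
    n = len(points)
    triangles = []
    for i, j, k in combinations(range(n), 3):
        a, b, c = points[i], points[j], points[k]
        if abs(orient(a, b, c)) < EPS:
            continue  # collinear points do not form a triangle
        others = (points[m] for m in range(n) if m not in (i, j, k))
        if not any(in_circumcircle(a, b, c, q) for q in others):
            triangles.append((i, j, k))
    return triangles


def contains(a, b, c, p):
    """True if p lies inside or on the boundary of triangle abc."""
    d = (orient(a, b, p), orient(b, c, p), orient(c, a, p))
    return not (min(d) < -EPS and max(d) > EPS)


def enclosing_stations(stations, site):
    """Indices of the three stations whose Delaunay triangle encloses the site."""
    for i, j, k in delaunay_triangles(stations):
        if contains(stations[i], stations[j], stations[k], site):
            return (i, j, k)
    return None  # the site lies outside the convex hull of the stations


# Toy layout: four corner stations and one central station.
stations = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 3.0), (2.0, 1.5)]
triangles = delaunay_triangles(stations)
selected = enclosing_stations(stations, (2.0, 0.5))
outside = enclosing_stations(stations, (5.0, 5.0))
```

A site outside the convex hull of the stations has no enclosing triangle, so the sketch returns `None` in that case; how such sites are handled in practice is not specified here.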

2. Proposed Methodologies

2.1. Overall Framework

2.2. Voronoi Tessellation and Delaunay Triangulation

2.3. Union-Inner Triangles Algorithm

Algorithm 1: Union-Inner Triangles Algorithm

2.4. Proposed TransGRU Model

2.4.1. Transformer Encoder

2.4.2. Gated Recurrent Unit (GRU)

3. Model Selection

3.1. Data Description

3.2. Hyperparameters of the Proposed TransGRU

3.3. Hyperparameters of the Compared Models

3.4. Hyperparameters of the Model Optimization

4. Experiment Results

4.1. Performance Metric

4.2. Reproduction of Weather Forecast Data

4.3. The Impact of Delaunay Triangulation and Union-Inner Triangles Algorithm

4.4. The Impact of Transformer Encoder

4.5. Forecasting of Individual PV Sites

4.6. Forecasting of Aggregated PV Sites

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Statistical parameters of PV data from each location:

| Location | # of PVs | Maximum | Mean | Conditional Mean | Standard Deviation |
|---|---|---|---|---|---|
| Gangwon | 72 | 0.9439 | 0.1418 | 0.4334 | 0.2377 |
| Gyeonggi | 77 | 0.4336 | 0.0697 | 0.1932 | 0.1059 |
| Gyeongnam | 112 | 1.3644 | 0.2189 | 0.6407 | 0.3493 |
| Gyeongbuk | 147 | 0.8453 | 0.1374 | 0.3798 | 0.2102 |
| Gwangju | 39 | 0.9845 | 0.1499 | 0.4373 | 0.2324 |
| Daegu | 27 | 1.7818 | 0.3044 | 0.8400 | 0.4550 |
| Daejeon | 5 | 0.8604 | 0.0595 | 0.3635 | 0.1419 |
| Busan | 12 | 0.0475 | 0.0086 | 0.0229 | 0.0129 |
| Seoul | 8 | 1.1770 | 0.1986 | 0.5493 | 0.3030 |
| Sejong | 4 | 3.2630 | 0.5486 | 1.5490 | 0.8344 |
| Ulsan | 4 | 0.8950 | 0.1454 | 0.4104 | 0.2245 |
| Incheon | 10 | 0.7373 | 0.1287 | 0.3522 | 0.1932 |
| Jeonnam | 243 | 0.5957 | 0.0985 | 0.2836 | 0.1544 |
| Jeonbuk | 110 | 0.4478 | 0.0700 | 0.2030 | 0.1113 |
| Chungnam | 113 | 1.8684 | 0.3018 | 0.8458 | 0.4673 |
| Chungbuk | 51 | 1.3418 | 0.2063 | 0.5972 | 0.3247 |

| Layer | Name | Dimension | Number of Parameters |
|---|---|---|---|
| 0 | Input | 288 | - |
| 1 | Transformer encoder; Feedforward input | 512 | 147,968 |
| 2 | Transformer encoder; Feedforward output | 288 | 147,744 |
| 3 | GRU | 64 | 67,968 |
| 4 | FC layer 1 | 256 | 16,640 |
| 5 | FC layer 2 | 256 | 65,792 |
| 6 | Output | 24 | 6,168 |
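
The parameter counts in the layer table can be reproduced with simple arithmetic. The sketch below assumes that each fully connected layer has a weight matrix plus one bias vector, and that the GRU keeps separate input and hidden bias vectors for each of its three gates (the convention used by, for example, PyTorch's `nn.GRU`). These conventions are assumptions inferred from the numbers, not statements from the text, and the helper names are hypothetical.

```python
def linear_params(n_in, n_out):
    """Weights plus one bias vector of a fully connected layer."""
    return n_in * n_out + n_out


def gru_params(n_in, n_hidden):
    """Three gates, each with input weights, hidden weights, and two bias vectors.

    The two-bias convention is an assumption chosen to match the table.
    """
    return 3 * (n_in * n_hidden + n_hidden * n_hidden + 2 * n_hidden)


# Dimensions taken from the layer table: 288 -> 512 -> 288 -> 64 -> 256 -> 256 -> 24.
counts = {
    "Transformer encoder; Feedforward input": linear_params(288, 512),
    "Transformer encoder; Feedforward output": linear_params(512, 288),
    "GRU": gru_params(288, 64),
    "FC layer 1": linear_params(64, 256),
    "FC layer 2": linear_params(256, 256),
    "Output": linear_params(256, 24),
}

for name, value in counts.items():
    print(f"{name}: {value:,}")
```

Under these assumptions the computed values agree with every row of the table. The table lists only the feedforward part of the Transformer encoder, so attention parameters are not counted in this sketch.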

| Hyperparameter | Value |
|---|---|
| Batch size | 18 |
| Learning rate | 0.0001 |
| Optimizer | Adam |
| Epoch | 1000 |
| Loss function | MSE |

NMAE10 (%) by model and station-selection method (VT or DT+):

| Location | Weather Forecast Error (%) | MLP (VT) | MLP (DT+) | GRU (VT) | GRU (DT+) | TransGRU (VT) | TransGRU (DT+) |
|---|---|---|---|---|---|---|---|
| Gwangju | 5 | 9.47 | 7.97 | 10.23 | 8.85 | 8.11 | 7.49 |
| Gwangju | 10 | 9.51 | 8.04 | 10.37 | 9.04 | 8.59 | 7.62 |
| Gwangju | 15 | 10.04 | 8.66 | 10.92 | 9.29 | 8.74 | 7.67 |
| Gwangju | 20 | 10.98 | 8.79 | 11.60 | 9.74 | 9.22 | 8.27 |
| Daegu | 5 | 9.48 | 8.41 | 10.07 | 9.02 | 9.40 | 8.09 |
| Daegu | 10 | 10.35 | 8.79 | 10.64 | 9.42 | 9.46 | 8.21 |
| Daegu | 15 | 10.44 | 8.99 | 11.25 | 9.37 | 9.55 | 8.22 |
| Daegu | 20 | 11.14 | 9.48 | 12.12 | 9.94 | 10.01 | 8.79 |
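
To read the table above as relative gains, the following sketch computes the percentage reduction in NMAE10 from the VT column to the DT+ column for TransGRU, using only the values listed in the table. The helper name `relative_reduction` is hypothetical, and the choice of relative reduction as the comparison is an assumption made for illustration.

```python
def relative_reduction(baseline, improved):
    """Percentage by which `improved` is lower than `baseline`."""
    return 100.0 * (baseline - improved) / baseline


# (VT, DT+) NMAE10 pairs for TransGRU at weather forecast errors of 5, 10, 15, and 20%.
transgru_nmae10 = {
    "Gwangju": [(8.11, 7.49), (8.59, 7.62), (8.74, 7.67), (9.22, 8.27)],
    "Daegu": [(9.40, 8.09), (9.46, 8.21), (9.55, 8.22), (10.01, 8.79)],
}

reductions = {
    location: [relative_reduction(vt, dt_plus) for vt, dt_plus in pairs]
    for location, pairs in transgru_nmae10.items()
}

for location, values in reductions.items():
    print(location, [f"{v:.1f}%" for v in values])
```

For these two locations, the DT+ column is lower than the VT column at every weather forecast error level, so each computed reduction is positive.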

NMAE10 (%) for aggregated PV sites by model and station-selection method (VT or DT+):

| Location | # of PVs | Capacity (MW) | Weather Forecast Error (%) | MLP (VT) | MLP (DT+) | GRU (VT) | GRU (DT+) | TransGRU (VT) | TransGRU (DT+) |
|---|---|---|---|---|---|---|---|---|---|
| Gwangju | 39 | 29.92 | 5 | 5.50 | 4.73 | 5.47 | 5.03 | 5.28 | 4.97 |
| Gwangju | 39 | 29.92 | 10 | 5.64 | 4.8 | 5.68 | 5.21 | 5.36 | 5.0 |
| Gwangju | 39 | 29.92 | 15 | 6.13 | 4.81 | 6.09 | 5.49 | 5.59 | 5.12 |
| Gwangju | 39 | 29.92 | 20 | 6.20 | 5.03 | 6.07 | 5.54 | 6.05 | 5.54 |
| Daegu | 27 | 34.47 | 5 | 5.76 | 5.29 | 5.66 | 5.09 | 6.43 | 5.35 |
| Daegu | 27 | 34.47 | 10 | 6.14 | 5.51 | 6.22 | 5.98 | 6.41 | 5.34 |
| Daegu | 27 | 34.47 | 15 | 6.67 | 5.88 | 6.50 | 5.12 | 6.44 | 5.53 |
| Daegu | 27 | 34.47 | 20 | 7.28 | 5.86 | 7.06 | 5.82 | 7.27 | 6.14 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Song, K.; Jeong, J.; Moon, J.-H.; Kwon, S.-C.; Kim, H. DTTrans: PV Power Forecasting Using Delaunay Triangulation and TransGRU. Sensors 2023, 23, 144. https://doi.org/10.3390/s23010144
