Local Spatial–Temporal Matching Method for Space-Based Infrared Aerial Target Detection

Abstract

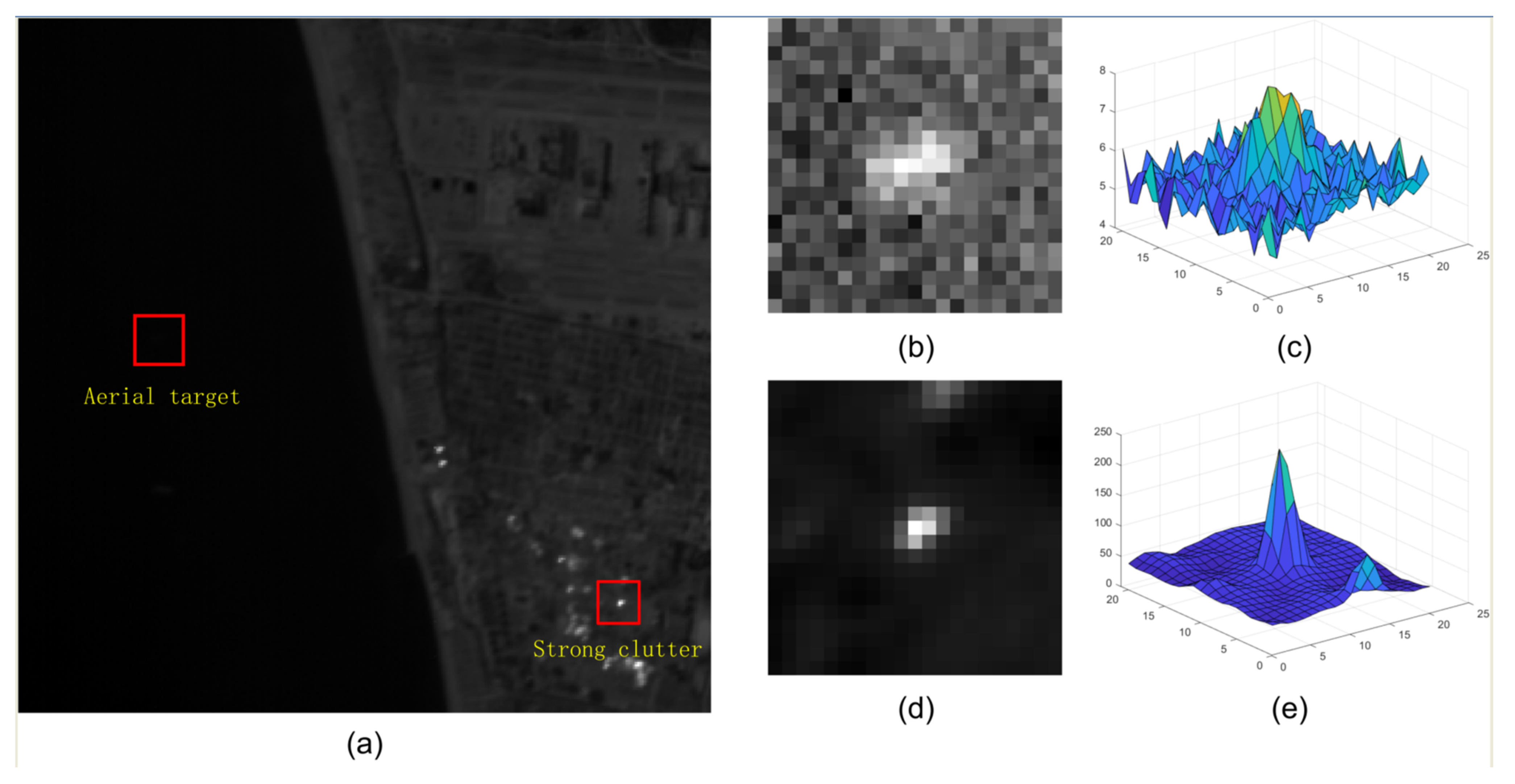

1. Introduction

- (1)

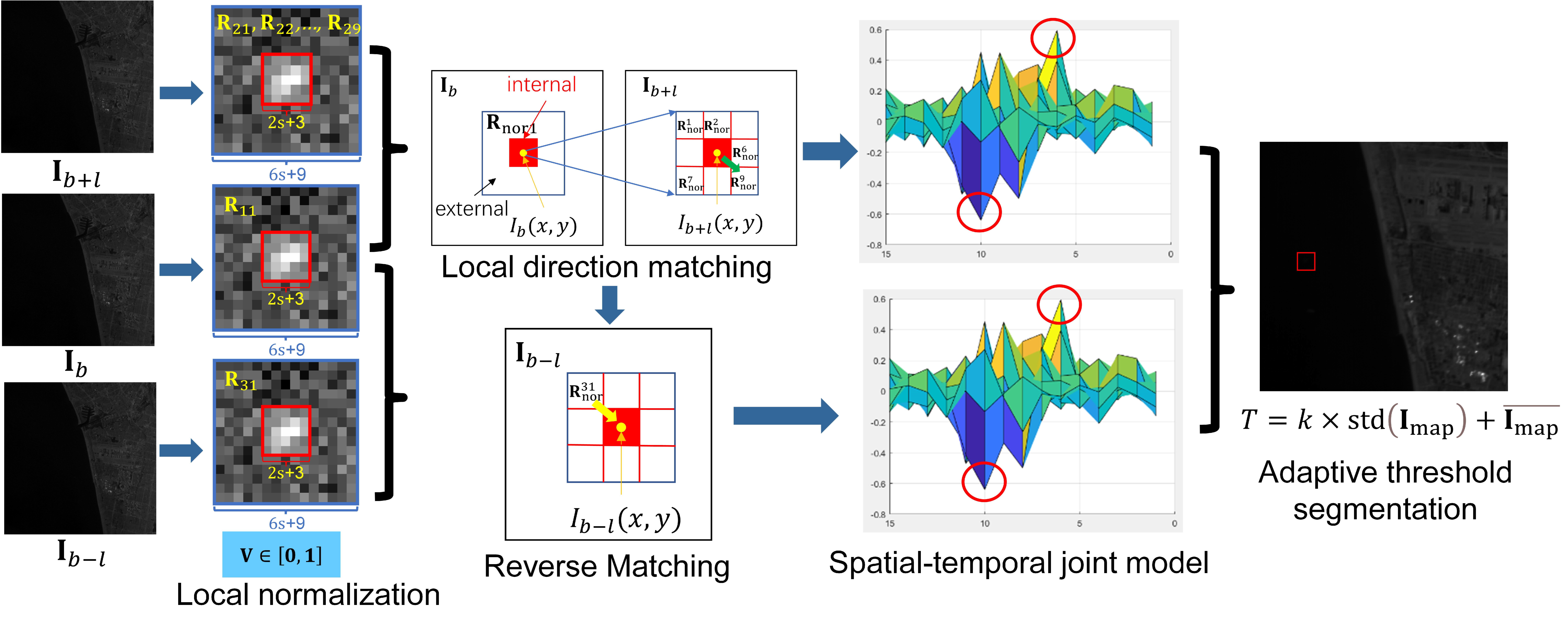

- Local normalization is proposed to shorten the difference between aerial target and strong clutter, which ensures that the weak target and strong clutter will be processed in subsequent steps within the same value domain.

- (2)

- Local direction matching and spatial–temporal joint model are constructed to suppress the strong clutter and enhance aerial target by considering the spatial–temporal difference between aerial target and background.

- (3)

- A reverse matching step is leveraged to further enhance the target and eliminate the residual clutter.

- (4)

- Experiments conducted on the space-based IR datasets demonstrate that LSM can enhance the weak target and suppress the strong clutter simultaneously and effectively and that it performs better than the existing methods on space-based IR aerial target detection.
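As a rough illustration of contributions (1) and (2), the sketch below normalizes local slices and searches for the reference-frame shift that best matches the base slice. It is not the paper's formulation: min-max scaling, the negative mean absolute difference score, and the names `extract_slice`, `normalize_slice`, and `best_direction` are all assumptions made for illustration, since Equations (1)–(7) are not reproduced here.

```python
import numpy as np


def extract_slice(frame, x, y, half):
    """Return the (2*half+1) x (2*half+1) slice centered on row x, column y."""
    return frame[x - half:x + half + 1, y - half:y + half + 1]


def normalize_slice(s, eps=1e-12):
    """Min-max normalize a slice to [0, 1] (assumed form of local normalization)."""
    s = np.asarray(s, dtype=np.float64)
    lo, hi = s.min(), s.max()
    return (s - lo) / (hi - lo + eps)


def best_direction(base, ref, x, y, half=2, search=1):
    """Find the reference-frame shift whose normalized slice best matches the base slice.

    The score is the negative mean absolute difference (0 is a perfect match);
    this score is an assumption, not the paper's matching coefficient.
    Assumes (x, y) lies at least half + search pixels away from every border.
    """
    b = normalize_slice(extract_slice(base, x, y, half))
    best_shift, best_score = None, -np.inf
    for dx in range(-search, search + 1):
        for dy in range(-search, search + 1):
            r = normalize_slice(extract_slice(ref, x + dx, y + dy, half))
            score = -float(np.abs(b - r).mean())
            if score > best_score:
                best_shift, best_score = (dx, dy), score
    return best_shift, best_score


# A weak target and a strong clutter edge end up in the same [0, 1] range.
weak = np.array([[10, 10, 10], [10, 12, 10], [10, 10, 10]])
strong = np.array([[100, 150, 200], [100, 150, 200], [100, 150, 200]])
weak_n, strong_n = normalize_slice(weak), normalize_slice(strong)

# A synthetic background that drifts one pixel to the right between frames.
rng = np.random.default_rng(0)
base = rng.random((20, 20))
ref = np.roll(base, 1, axis=1)
shift, score = best_direction(base, ref, 10, 10)
```

In this toy setup the drifting background is recovered at an offset of one column, which is the kind of residual background motion that a direction-matching step is meant to absorb before frames are compared.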

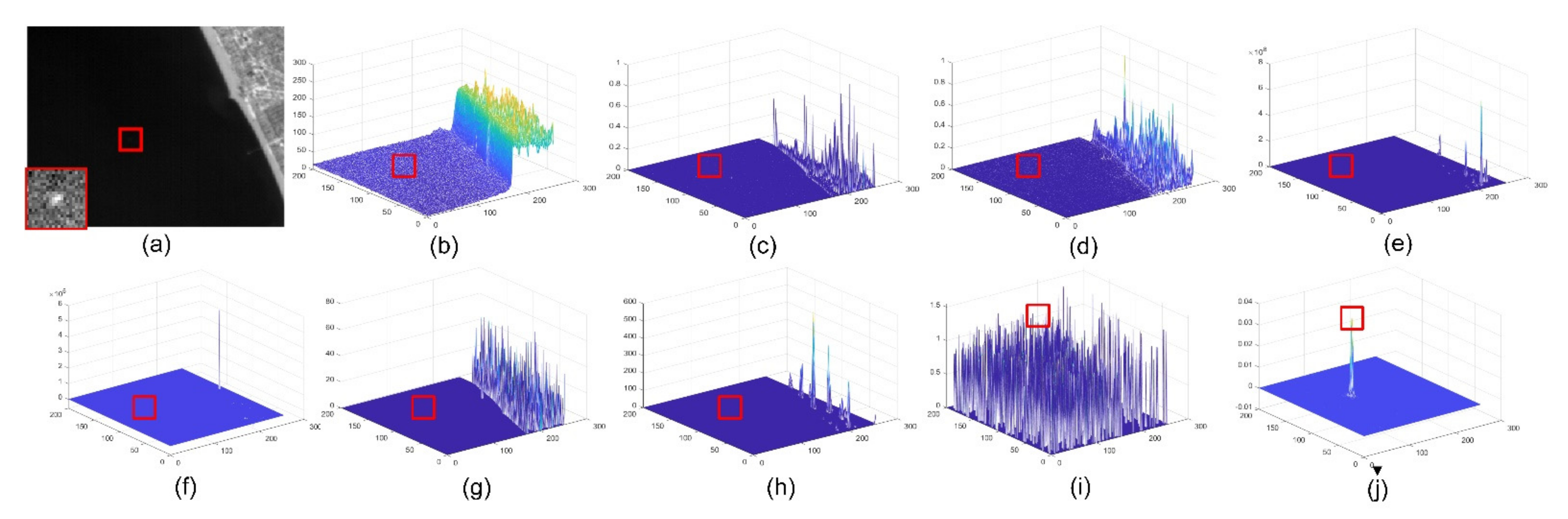

2. Proposed Methods

2.1. Local Slices Extraction and Normalization

2.2. Local Direction Matching

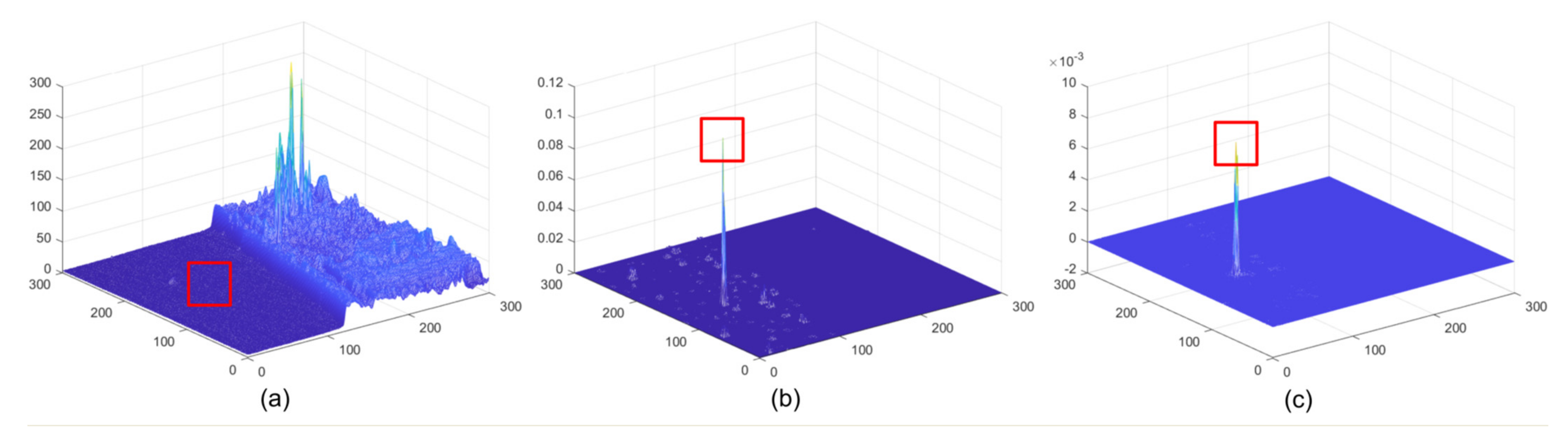

2.3. Spatial–Temporal Joint Model

2.4. Reverse Matching

2.5. Adaptive Threshold Segmentation

| Algorithm 1 Procedure of LSM. |
|---|
| Input: Base frame , reference frames and . |
| Output: The position of the aerial target. |
| (1) Obtain the size of . |
| (2) for do |
| (3) for do |
| (4) Obtain the local slices and s by Equations (1) and (2); |
| (5) Obtain the normalized slices and s by Equations (3) and (4); |
| (6) Calculate the matching coefficient r1(x,y) and determine the by Equations (5)–(7); |
| (7) Construct the spatial–temporal joint model between and and calculate by Equations (8)–(14); |
| (8) Conduct reverse matching and obtain by Equations (15)–(17); |
| (9) Calculate the normalized slice of by Equation (3); |
| (10) Calculate the matching coefficient by Equation (5); |
| (11) Construct the spatial–temporal joint model between and and calculate by Equations (8)–(14); |
| (12) Calculate the saliency map value by Equations (18) and (19); |
| (13) end for |
| (14) end for |
| (15) Obtain the saliency map ; |
| (16) Calculate the adaptive threshold by Equation (20); |
| (17) Output the position of the aerial target. |
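Steps (15)–(17) of Algorithm 1 can be sketched as follows. Equation (20) is not reproduced in this text, so the threshold rule used here, the mean of the saliency map plus `k` times its standard deviation, is an assumption borrowed from common practice in infrared small-target detection. The names `adaptive_threshold` and `detect_targets` and the default `k=3.0` are likewise hypothetical.

```python
import numpy as np


def adaptive_threshold(saliency, k=3.0):
    """Assumed threshold rule: mean + k * standard deviation of the saliency map."""
    saliency = np.asarray(saliency, dtype=np.float64)
    return saliency.mean() + k * saliency.std()


def detect_targets(saliency, k=3.0):
    """Return (row, col) positions whose saliency exceeds the adaptive threshold."""
    saliency = np.asarray(saliency, dtype=np.float64)
    t = adaptive_threshold(saliency, k)
    rows, cols = np.nonzero(saliency > t)
    return list(zip(rows.tolist(), cols.tolist()))


# Toy saliency map: flat background with a single salient pixel.
saliency_map = np.zeros((50, 50))
saliency_map[20, 30] = 1.0
positions = detect_targets(saliency_map)
```

A larger `k` trades missed detections for fewer false alarms, so in practice it would have to be tuned against the saliency statistics of the sequence being processed.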

3. Experiments

3.1. Experimental Condition and Evaluation Index

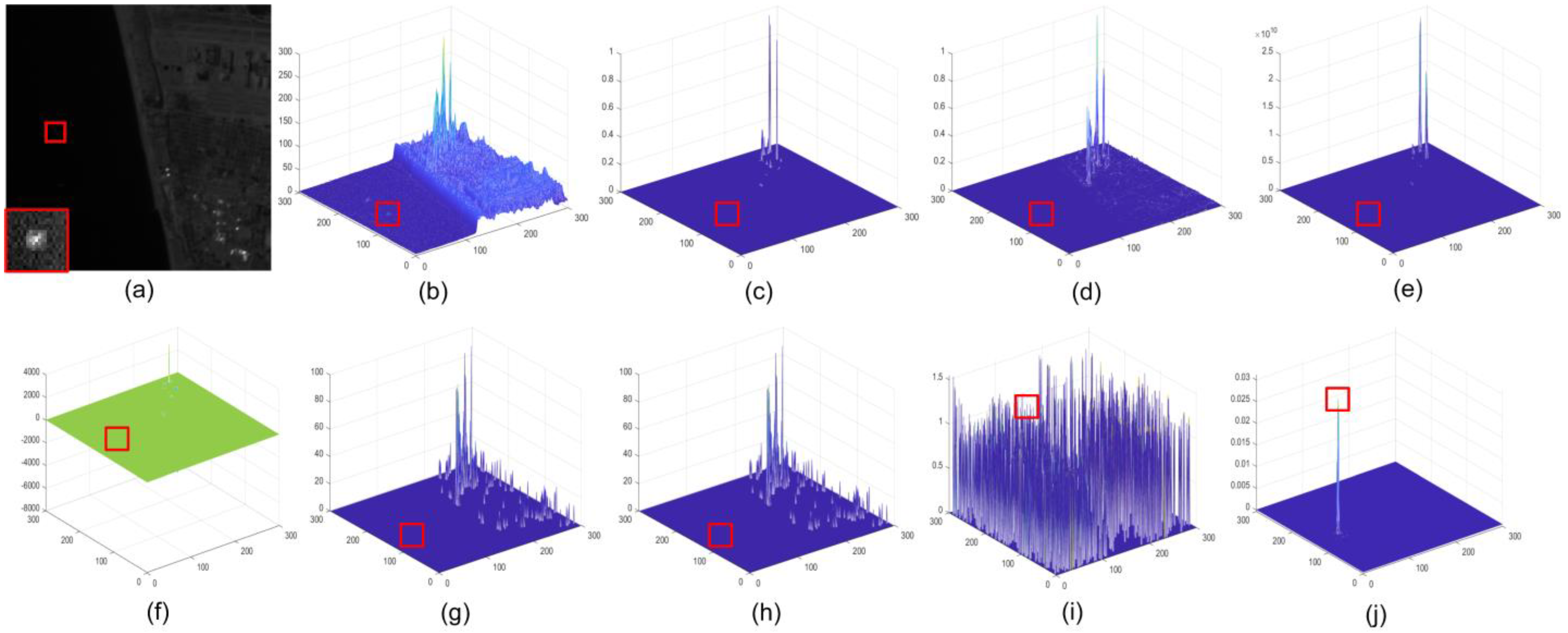

3.2. Experimental Results

4. Analysis and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Dataset | Frames | Size | Target Size | Details of Backgrounds |
|---|---|---|---|---|
| Seq.1 | 100 | | | Sea and land background, strong clutter, background moving speed is 0.11 pixel/frame |
| Seq.2 | 135 | | | |
| Seq.3 | 135 | | | |
| Seq.4 | 120 | | | |

| Methods | Seq.1 | Seq.2 | Seq.3 | Seq.4 |
|---|---|---|---|---|
| STLCF | 7.0835 | 3.7508 | 2.4573 | 4.2582 |
| STLCM | 14.9534 | 8.3428 | 13.0866 | 13.7626 |
| FSM | 11.4422 | 7.3382 | 11.1237 | 28.0876 |
| NSM | 9.0671 | 2.4982 | 13.9567 | 20.3182 |
| STJP | 5.7969 | 1.1265 | 1.9641 | 4.8315 |
| DNGM | 6.0336 | 4.1818 | 6.2833 | 14.9458 |
| MLTC | 1.2551 | 0.6357 | 1.5488 | 2.1215 |
| Proposed | 9.5974 | 6.7767 | 14.2828 | 24.0300 |

| Methods | Seq.1 | Seq.2 | Seq.3 | Seq.4 |
|---|---|---|---|---|
| | 2.9400 | 1.7764 | 2.4502 | 2.2381 |
| STLCF | 1.7490 | 0.7818 | 0.6588 | 0.0897 |
| STLCM | 1.7231 | 2.8566 | 2.0138 | 0.5797 |
| FSM | 1.7213 | 1.3366 | 5.9280 | 0.0028 |
| NSM | 0.0118 | 0.7149 | 2.1257 | 0.0269 |
| STJP | 0.6507 | 0.1349 | 0.4681 | 0.0795 |
| DNGM | 0.7587 | 12.8578 | 7.0548 | 0.4568 |
| MLTC | 13.1471 | 1.2697 | 8.7553 | 28.2292 |
| Proposed | 17.5890 | 7.4260 | 11.7774 | 28.4823 |

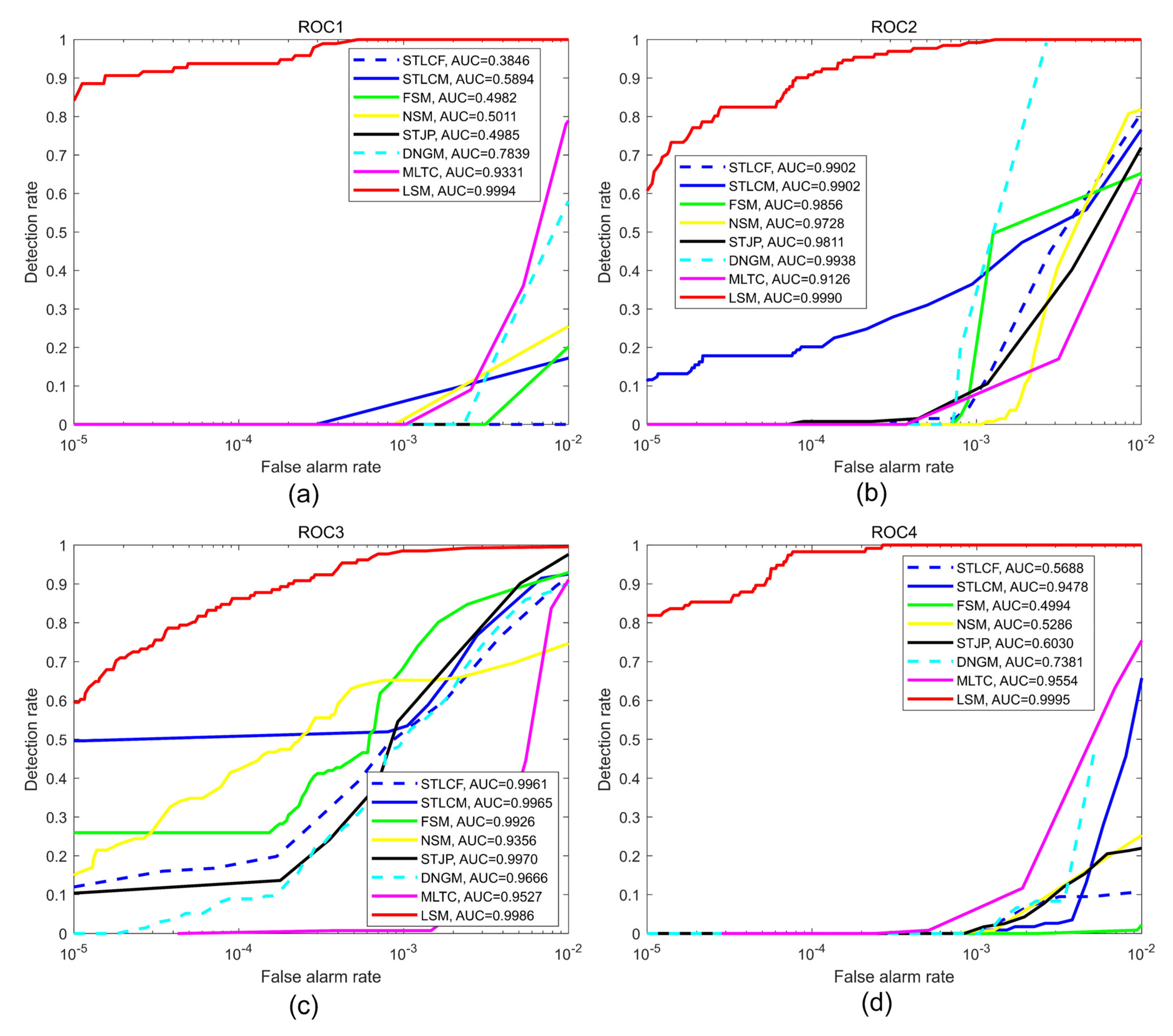

| Methods | Seq.1 | Seq.2 | Seq.3 | Seq.4 | Average |
|---|---|---|---|---|---|
| STLCF | 0.3846 | 0.9902 | 0.9961 | 0.5688 | 0.7350 |
| STLCM | 0.5894 | 0.9902 | 0.9965 | 0.9478 | 0.8809 |
| FSM | 0.4982 | 0.9856 | 0.9926 | 0.4994 | 0.7440 |
| NSM | 0.5011 | 0.9728 | 0.9356 | 0.5286 | 0.7345 |
| STJP | 0.4985 | 0.9811 | 0.9970 | 0.6030 | 0.7699 |
| DNGM | 0.7839 | 0.9938 | 0.9666 | 0.7381 | 0.8706 |
| MLTC | 0.9331 | 0.9126 | 0.9527 | 0.9554 | 0.9534 |
| Proposed | 0.9994 | 0.9990 | 0.9986 | 0.9995 | 0.9991 |

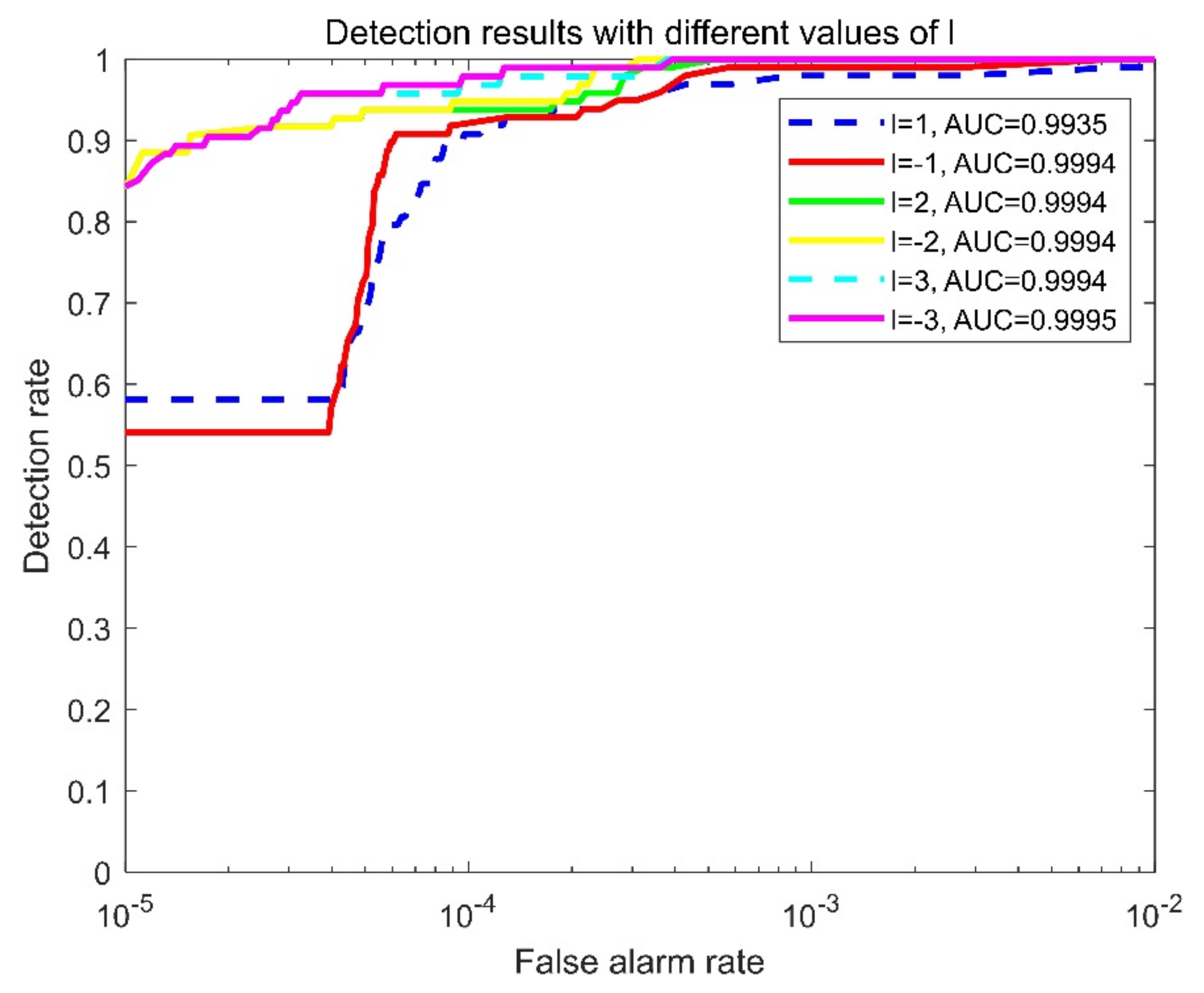

| −3 | −2 | −1 | 1 | 2 | 3 |
|---|---|---|---|---|---|
| 24.7905 | 17.2655 | 10.7153 | 10.7153 | 17.5890 | 24.7880 |
| 9.3730 | 9.4456 | 9.5933 | 9.5933 | 9.5974 | 9.3730 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, L.; Rao, P.; Chen, X.; Huang, M. Local Spatial–Temporal Matching Method for Space-Based Infrared Aerial Target Detection. Sensors 2022, 22, 1707. https://doi.org/10.3390/s22051707
