1. Introduction

Passive sensors such as infrared sensors, photoelectric sensors and cameras detect targets by receiving electromagnetic signals. Because they do not emit signals, they can probe targets covertly [1]. However, such sensors measure only the angles of incoming signals and are therefore referred to as angle-only sensors in what follows. The position of the signal source, which is of great concern in many situations, cannot be obtained with a single sensor. To determine the position of signal sources, one can connect distributed sensors with communication links and then estimate the position through a fusion algorithm. This topic has attracted wide attention from researchers in different fields in recent years [2,3,4,5].

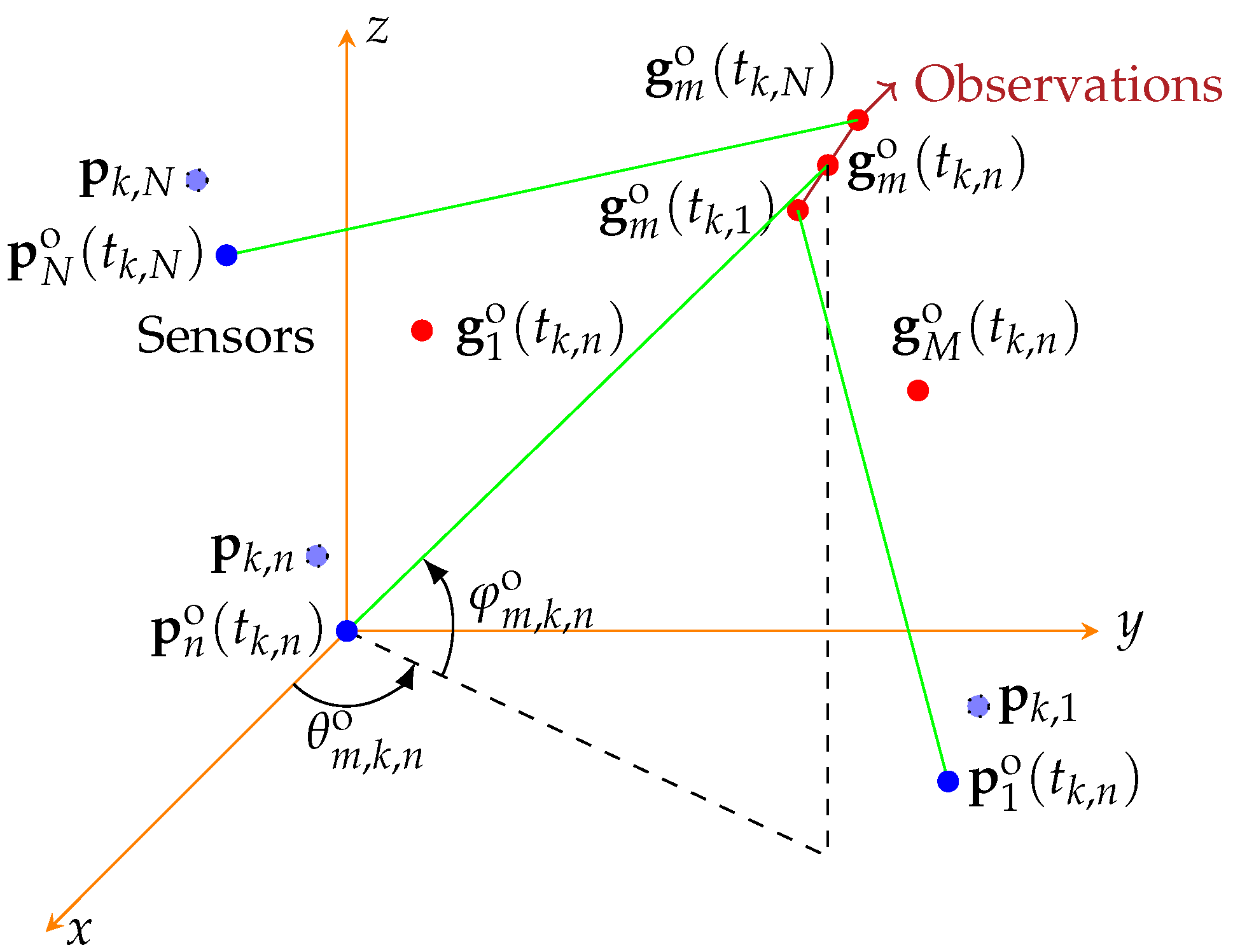

In the 3-dimensional (3D) scenario, the angle information measured by each passive sensor includes the azimuth and elevation of the signal. From a mathematical perspective, each angle observation can be represented by a straight line in space passing through the sensor and the target. If no error occurs in this process, all the lines intersect at a single point in space, which is the location of the signal source. In practice, both the sensor location measurements and the angle measurements are inevitably contaminated by measurement noise, so the lines may not intersect at a single point. However, as long as the signals come from the same target, the lines pass through a small volume, whose center can be taken as the location of the target. Following this concept, an angle-only positioning algorithm is presented in [6] and a closed-form solution is derived.

In real applications, if the targets of interest are static, or if the sampling frequency of the signals is high relative to the velocities of possible targets, the algorithms can be developed under the assumption that the target is stationary. The least squares (LS) algorithm is applied to target position estimation based on angle-only measurements by linearizing the angle observation equations [7,8,9,10]. The intersection localization algorithm is obtained by considering that the straight lines formed by the angle observations pass through a small volume in space [11,12,13]. Real sensors often make observations in an asynchronous manner, namely the observations are not obtained at the same instants. The stationary target assumption also makes the sensor synchronization problem easier, because the timing information of the observations can be dropped entirely. If the target is stationary, even if the sensors are moving, the straight lines formed by the angle measurements of multiple sensors at different times converge to a small region near the target location. Therefore, in this scenario, one just needs to solve a target positioning problem in an asynchronous manner.

Once the target moves noticeably between observation instants, target location estimation suffers greater biases and the target motion has to be taken into consideration. For moving targets, the observation instants must also be taken into account, which makes the distributed fusion algorithm more complicated. There are mainly two strategies available so far. The first strategy is to use filtering algorithms, such as the Kalman filter, which can estimate the target velocity from observations at different instants. In the target tracking theoretical framework, the angle-only observations can be described by a measurement equation, although it is heavily nonlinear. Therefore, a nonlinear filtering algorithm should be used [14,15]. For instance, the extended Kalman filtering (EKF) algorithm linearizes the angle measurement function through a first-order Taylor approximation and then applies the standard Kalman filtering algorithm to the angle-only target tracking problem [16]. The cubature Kalman filter (CKF) [17], the unscented Kalman filter (UKF) [18], the pseudo linear Kalman filter (PLKF) [19,20], the particle filter [21] and a series of sigma-point based algorithms can also be used in the target tracking problem with distributed angle-only sensors.

Although tracking algorithms have been widely used to estimate moving target positions, they require the noise distribution parameters to be known a priori. They also face convergence problems if the initial state is set improperly [22,23]. In distributed sensor networks, if each observation undergoes a tracking process, the computation cost will also be high, since the amount of observation data is often large in practice. Therefore, a good positioning algorithm should be implemented before filtering. For instance, in [15], short-term angle-only observations are fused by a distributed positioning algorithm, whose outputs are then processed by a tracking algorithm.

In the other strategy, the target position and velocity are estimated together, so the result is valid over a longer period. In this case, the tracking operation can be performed at longer intervals, so that the computation cost can be further reduced. However, if the velocity is estimated, more optimization variables are involved and the optimization problem becomes more complicated. Meanwhile, in a distributed sensor configuration, the communication cost between the sensors may be high if all the observations are transmitted to a fusion center. In this paper, we study the positioning of moving targets with distributed asynchronous angle-only sensors. We consider the scenario where multiple asynchronous passive sensors are linked with the fusion center through communication links. First, we formulate an algorithm, termed the gross LS algorithm, that takes all the angle observations of multiple sensors, together with the sensor positions, in a certain period to estimate the position and velocity of the target. Different observations contribute different lines and, with many lines available, both the target position and its velocity can be estimated. The problem is formulated as a classical LS problem such that the computation cost is greatly reduced.

Due to the huge amount of data, this algorithm still has high computational complexity and high communication cost. In order to reduce the communication and computation cost, we further present a distributed positioning algorithm, termed the linear LS algorithm, that implements the fusion in a parallel manner. In detail, both the positions and the angle observations of local sensors are processed by LS operations, whose outputs are the zeroth- and first-order Taylor series terms of the corresponding parameters. The outputs are then transmitted to a fusion center, for which we derive a fusion algorithm that efficiently combines position and velocity estimates for a higher parameter estimation accuracy. The latter algorithm greatly compresses the data rate from local sensors to the fusion center, such that the communication cost is greatly reduced. Meanwhile, local observations are represented by a few parameters, so the fusion algorithm also needs a lower computation cost. The sensor location can be recorded asynchronously with the angle observations, which makes the algorithm easier to apply. A truncated LS algorithm, which replaces the velocity estimation of the linear LS algorithm by a simple averaging operation, is also presented.

Numerical results are obtained with distributed asynchronous angle-only sensors measuring a target moving at a certain velocity. The convergence performance of the algorithms is presented first, in order to examine the impact of the number of observations on the positioning performance. Then the impact of the linear approximation of the position and angle measurements on the estimation accuracy is analyzed. It will be found that the gross LS algorithm often benefits from more observations. In contrast, although the linear LS algorithm and the truncated LS algorithm perform well if the number of observations is small, their performance degrades as the number of observations increases, as a result of the linear model mismatch. The truncated LS algorithm performs better than the linear LS algorithm over a short period but worse over a longer one. In the extreme, the estimation performance of the linear LS algorithm may deteriorate with more observations if the model mismatch is severe. We also verify that the linear LS algorithm has a lower communication cost in most situations and examine the performance loss due to inaccurate platform velocity estimates. Numerical angle distortion errors under the linear approximations are also analyzed.

2. Localization with Angle-Only Passive Sensors

2.1. Signal Model of Passive Observations

Consider a passive sensor network with

N widely separated sensors and

M targets in the surveillance volume. All the

N passive sensors can measure only the direction of arrival (DOA) of signals, based on which the true position of a signal emitter can be estimated. Assume that all the sensors operate in the same coordinate system by means of onboard position and attitude measurement devices, such as the Global Positioning System (GPS) and inertial sensors. A typical coordinate system is the earth-centered earth-fixed (ECEF) system of the World Geodetic System 84 (WGS84). Both the targets and the sensors are assumed to be in motion. The real position of the

nth sensor at instant

t is denoted by

,

, where

denotes the transpose operation, and

denote the

coordinates of the

nth sensor in the common coordinate system at instant

t, respectively. The real position of the

mth target at instant

t is denoted by

, where

denote the

coordinates of the

mth target at instant

t, respectively. The topology of the passive sensors and targets is shown in

Figure 1.

For the

nth sensor, signals are detected and their DOAs are measured at instants denoted by

, where

denotes the number of observations of the

nth sensor. At the instant

, assume that the position of the

nth sensor is measured as

where

denotes the sensor self-positioning error. For simplicity, we assume that the sensor self-positioning error follows a zero-mean Gaussian distribution with covariance matrix

, where

denotes the expectation operation.

At instant

, the real position of the

mth signal source is denoted by

Assume that all the observations regarding the same target are obtained in a short period

. In this period, assume that the location of the

mth target can be expressed by

where

denotes the location of the target at the reference instant

, and

denotes the velocity over

. The signal model in use depends on the velocity of the target and the period of observations. If all the observations are collected in a short period and the velocity is small, then one can simply assume

as [

6]. Under the signal model (

3), more observations can be used to estimate the target location in space. If the observations are obtained over a long period and the velocity is large, then this model may also be mismatched and higher-order approximations may be needed.

For the

nth angle-only passive sensor, the

lth observation at

is indexed by a triple

. For simplicity, we also encode all the triples available, corresponding to all the observations available, with a one-to-one function

. Then we define a set

by

denotes a set of signal indices detected at the instant

by the

nth sensor. Therefore,

, where

over a set denotes the cardinality of the set. Because of possible missed detections, false alarms and overlapping signal sources,

may not be equal to

M. Denote

where ∪ denotes the union operation. The total number of observations by

N sensors is denoted by

Each observation is associated with one of

M targets or the false alarm indexed by 0, represented by a set

. It can be considered as a mapping

, which is the true association and is typically unknown in practice. According to our setting, the index set

can be partitioned into

disjoint sets

, and

is defined by

where

denotes the index of observations corresponding to false alarms, and

denotes the index set of observations from the

mth signal source. As a partition of

, we have

,

,

, and

, where ∩ denotes the intersection operation of sets. Assume that

and there are totally

observations available.

The signal indices in

are composed of signal indices from all the sensors, and the subset for the

nth sensor is denoted by

which indicates the observations from the

nth sensor probing the

mth target. Denote

and then we have

and

.

For simplicity, we first assume that the mapping

is exactly known, so that the observations associated with

are exactly known. For observation

, the true azimuth and elevation angles, with respect to the

nth sensor at

, can be expressed by

respectively, where

,

,

is called the two-argument inverse tangent function [

24,

25] and

is the inverse tangent function. Denote

. The azimuth angle and elevation angle measures can be written as

where

and

represent the measurement noise of the azimuth angle and elevation angle, respectively.

For simplicity, we assume that the observation noises and are statistically independent and follow zero-mean Gaussian distributions. The covariance matrices of are denoted by , namely , which is typically affected by the SNR of the signal, where denotes the Gaussian distribution with mean and covariance matrix .
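As an illustration of the noise-free angle model, the following Python sketch computes the azimuth and elevation of a target seen from a sensor and converts them back into a unit line-of-sight vector. The axis convention (azimuth measured in the x-y plane from the x axis, elevation measured from that plane) and the function names are assumptions made for this example, not definitions taken from the paper.

```python
import math

def azimuth_elevation(sensor, target):
    """Noise-free (azimuth, elevation) in radians of `target` seen from `sensor`.

    Assumed convention: azimuth in the x-y plane from the x axis,
    elevation measured from the x-y plane.
    """
    dx, dy, dz = (t - s for t, s in zip(target, sensor))
    azimuth = math.atan2(dy, dx)  # two-argument inverse tangent
    # The horizontal range is non-negative, so atan2 coincides here with the
    # ordinary inverse tangent of dz / range and avoids a division by zero.
    elevation = math.atan2(dz, math.hypot(dx, dy))
    return azimuth, elevation

def direction_vector(azimuth, elevation):
    """Unit line-of-sight vector for the same (assumed) angle convention."""
    return (math.cos(elevation) * math.cos(azimuth),
            math.cos(elevation) * math.sin(azimuth),
            math.sin(elevation))
```

Scaling the direction vector by the sensor-to-target range and adding the sensor position returns the target position, which is the line representation that angle-only positioning relies on.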

2.2. Estimation of Target Track

Each angle-only observation contributes a line in 3D space and, without measurement error, the target lies on that line. With many angle-only observations, the true position of the target can be determined. The line associated with the

ith observation can be expressed by

where

denotes the sensor location regarding the

ith observation,

is a parameter indicating the distance to the origin

,

is the normalized direction vector associated with the angle observation

, namely

,

over a vector denotes the

-norm, and

In what follows, we consider the observations in

. From (

3), we can rewrite

where

,

denotes the bias term,

and

denotes the identity matrix. In (

18), there are 7 unknown parameters in total, and one observation provides 3 equations. Each additional observation provides another 3 equations and increases the number of unknown parameters by 1. Unless specified, we always refer to the

mth target and drop the subscripts

m in situations without ambiguity subsequently, e.g., denote

.

In order to determine the location and velocity of the target, the optimization problem can be formulated as

where

refers to the

norm subsequently unless explicitly specified,

,

is a matrix whose columns are

,

,

denotes a

all-one vector of length

,

is a matrix whose columns are

, ⊗ denotes the Kronecker product operation,

and

with a vector entry denotes a diagonal matrix with the vector as diagonal elements.

2.3. The Gross LS Algorithm

In practice, the observations are generally contaminated by measurement noise, so the lines usually do not intersect at a single point in space. With

observations, there are in total

unknown parameters and

equations. Therefore, with at least 3 observations from angle-only sensors, the resulting 9 unknown parameters can be solved for jointly. For that purpose, let

and then we can reformulate (

18). In order to minimize the mismatch, the optimization problem can be rewritten as

The combination of equations for all observation indices in

can be formulated as

where

,

denotes the vectorization operation.

where

, and

with some matrices inputs denotes a block diagonal matrix with the input matrices as block diagonal elements.

For this optimization problem, we can find the classical LS solution as

It can be proved that for symmetric matrices

and

, and

, all of appropriate sizes,

Consequently, with a fact that

, we have

where

and the following equation is used in above formulations.

It can also be proved that

where

,

and ⊗ denotes the Kronecker product operation.

Invoking (

30) again, we have

and thus

Therefore, the estimates for

and

can be written separately as

where

can be written in a concise form as

Under the assumption that all the samples under consideration are from the same target, the track, parameterized by , is identical for all observations hereafter.
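The idea behind the gross LS estimate can be sketched in Python under a simplifying assumption: the range parameter of each line is eliminated in closed form, which leaves the projection of the position error onto the plane orthogonal to the line of sight and a 6 × 6 linear system in the reference position and velocity. This is an unweighted illustration, not the closed-form expression derived above, and the names `gross_ls` and `solve_linear` are hypothetical.

```python
def solve_linear(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                f = M[r][col] / M[col][col]
                M[r] = [a - f * c for a, c in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]

def gross_ls(sensors, directions, times, t0=0.0):
    """Estimate (p0, v) of the linear target model p(t) = p0 + v * (t - t0).

    sensors:    sensor positions (x, y, z), one per observation
    directions: unit line-of-sight vectors, one per observation
    times:      observation instants
    """
    A = [[0.0] * 6 for _ in range(6)]
    b = [0.0] * 6
    for s, d, t in zip(sensors, directions, times):
        tau = t - t0
        # Projector onto the plane orthogonal to the line of sight.
        P = [[(1.0 if i == j else 0.0) - d[i] * d[j] for j in range(3)]
             for i in range(3)]
        Ps = [sum(P[i][j] * s[j] for j in range(3)) for i in range(3)]
        # Accumulate the normal equations of sum ||P (p0 + tau v - s)||^2.
        for i in range(3):
            for j in range(3):
                A[i][j] += P[i][j]
                A[i][j + 3] += tau * P[i][j]
                A[i + 3][j] += tau * P[i][j]
                A[i + 3][j + 3] += tau * tau * P[i][j]
            b[i] += Ps[i]
            b[i + 3] += tau * Ps[i]
    x = solve_linear(A, b)
    return x[:3], x[3:]
```

With noise-free directions from several widely separated sensors, this sketch recovers the position and velocity up to rounding error. With noisy inputs it returns the unweighted LS fit.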

2.4. The Linear LS Algorithm

Over a long period, one obtains a long sequence of observations. If all the observations are taken into the optimization, a huge computation cost may be required, which in some cases is unnecessary. Instead, we can extract information from local observations and then transmit the estimated parameters to the fusion center for target location estimation. The target location has been approximated by a linear model. Next, we express the DOA and sensor location by linear models as well.

For DOA measures from a sensor, we can approximate a series of angle measures by

Now consider the

nth sensor and let

For all observations in

, we can write a polynomial regression problem as

where

,

denotes bias for

,

denotes bias for

, and

It can be proved that the LS estimate of the directions for the

nth sensor can be directly written as

In this case, and , instead of and , will be transmitted to the fusion center, such that the communication cost will be greatly reduced.
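The local regression step can be illustrated with a short Python sketch that fits a straight line to a sequence of angle measurements from one sensor, so that only an intercept and a slope need to be transmitted. The function name `fit_line` and the reference instant `t0` are hypothetical, and the sketch assumes that the angle sequence does not wrap around ±π within the fitting window.

```python
def fit_line(times, values, t0=0.0):
    """Least-squares fit of values ~ a + b * (t - t0); returns (a, b).

    `a` approximates the angle at the reference instant t0 and
    `b` approximates its rate of change.
    """
    n = len(times)
    tau = [t - t0 for t in times]
    mean_tau = sum(tau) / n
    mean_val = sum(values) / n
    sxx = sum((x - mean_tau) ** 2 for x in tau)
    sxy = sum((x - mean_tau) * (y - mean_val) for x, y in zip(tau, values))
    slope = sxy / sxx
    intercept = mean_val - slope * mean_tau
    return intercept, slope
```

Applying the same fit separately to the azimuth and elevation sequences yields four numbers per sensor, regardless of how many raw observations were collected.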

In (

18),

and

affect the positioning accuracy through the normalized direction vector

. With a linear approximation model,

can be rewritten as

where

,

and

denotes the Jacobian matrix defined by

For the nth sensor, denote , , and so on.

In practice, the position of the platform is also measured by a device, such as an inertial system or a positioning system. In either case, if the

nth sensor is moving with speed

at

, the position can be expressed by

where

denotes the position of the

nth sensor,

denotes the velocity, both at

, and

denotes the bias of position estimation error.

For simplicity, we assume that the sensor location is measured at

. In practice, in this configuration, the sensor location can be measured at instants other than

. Now we can construct equations as

or in another form as

where

,

, and

for which

where

is similar to

in (

40).

The LS estimate of

is

and

With the above operations, we can obtain a linear sensor location parameter , linear DOA parameters , and linear target location parameters . The accuracy depends on the interval of observations, the speed of the target, and the speed of the sensors. A series of observations can now be approximated by two parameters, and it is no longer necessary to transmit all the local observations to a fusion center.

With only one observation available, a fusion center can now estimate the target position and velocity with the following equation

where

represents the change rate of

. By expanding Equation (

71) and ignoring the second-order term,

can be reformulated as

where

and

denotes the bias term introduced by approximating the target position and the direction of arrival with the linear models.

The following equation can be obtained using (

72) for

N sensors,

where

and

In order to minimize the total bias

, we can minimize

where

and

.

To ensure the bias is minimized for

, both the initial position bias,

, and the speed bias,

should be minimized. Therefore, we can solve the optimization problem through solving the following two optimization problems of smaller scale,

The solutions to the problems can be found directly through the LS algorithm as

where

It can be proved that

and then

where

and

.

Meanwhile,

and thus,

which is identical to the solution of (

82). A minor difference is that the matrix inverse operation is over an

matrix

.

The solution to

can be expressed by

where

and

Consequently, we can obtain

The same solution can also be derived in another way. According to (93), the change rate

of

can be expressed as

Substituting (

103) into (

84) gives

The solution of (

104) can also be found through the LS algorithm, which can be expressed as

where

2.5. The Truncated LS Algorithm

For a better performance, it is necessary to estimate the change rate

and if we ignore this term, the optimization problem becomes

whose solution, termed as truncated LS algorithm subsequently, is

which is an average operation. Note that the truncated LS algorithm shares the same position estimate with the linear LS algorithm.

The estimate of the target location at

can be written as

In (

99) and (

109), the velocity terms

are unknown and should be replaced by their estimates, typically

estimated in the LS algorithm as in (

70). In practice, besides the linear regression method performed at local sensors, there may be other methods that can output more accurate velocity and angle difference information. For instance, some inertial devices can measure the velocity more accurately than the LS algorithm in use. With a more accurate velocity estimate, it is possible to obtain a better positioning performance.

Both the linear LS algorithm and the truncated LS algorithm estimate the velocity of the target and thus allow the time-consuming nonlinear filtering operation to be updated at longer time intervals. The performances of these algorithms are analyzed with numerical results in the next section.

3. Numerical Results

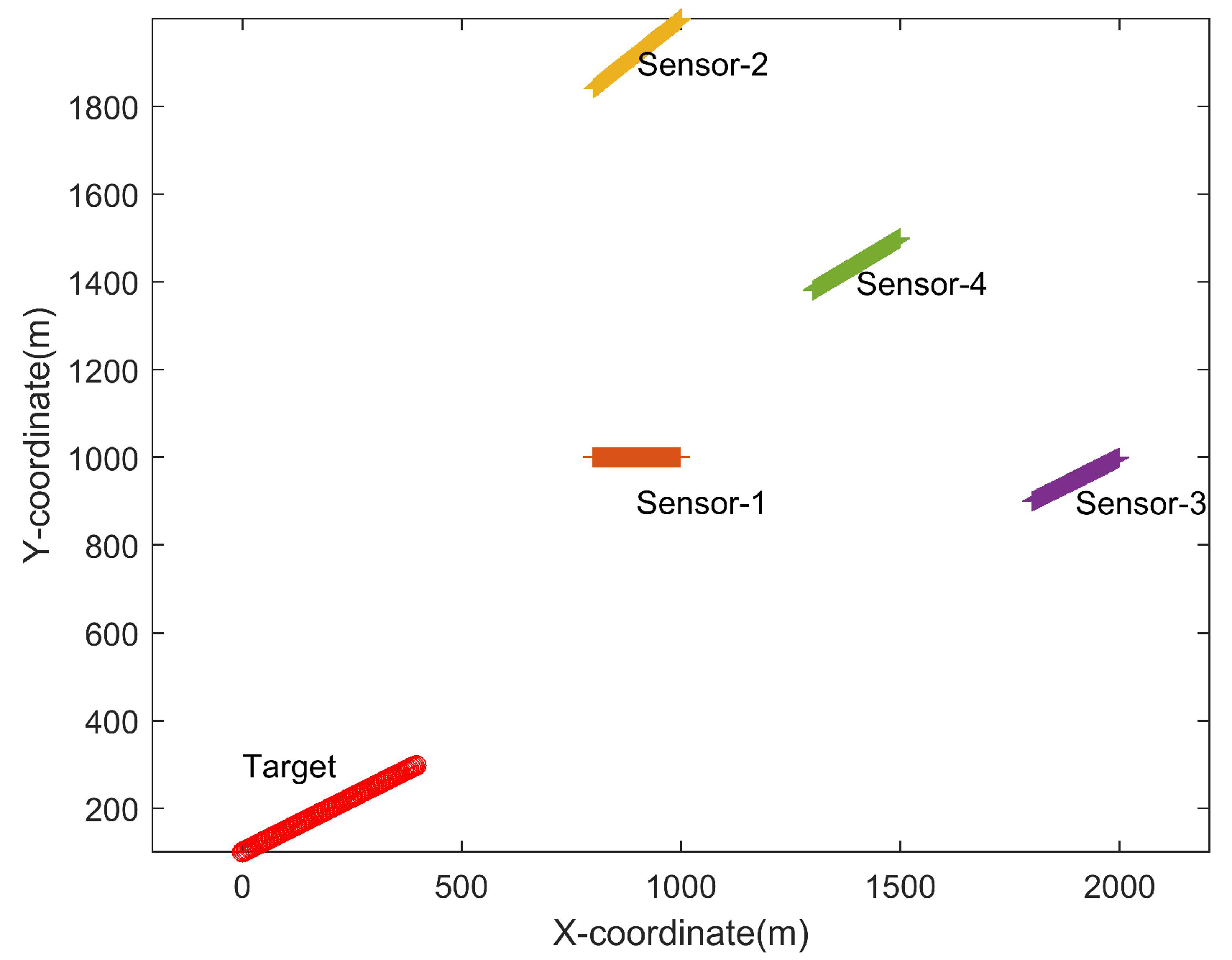

In order to evaluate the performance of the concerned positioning algorithms, we first consider a scenario where four angle-only sensors are estimating the position of a target with their angle-only observations. Both the sensors and the target are moving with a constant speed during the period of observation by assumption. The initial position and the constant speed of the sensors and the target are shown in

Table 1. The scenario is illustrated in

Figure 2.

All the sensors output observations at a frequency of 50 Hz, i.e., with a period of 20 ms. However, they operate in an asynchronous manner, namely the sensors record the observations at independent instants. The offsets between the sampling instants are randomly generated within 20 ms. This assumption is important in real situations because it allows distributed sensors to operate asynchronously. We also assume that there is no error in recording the instants of the observations and that, for all the sensors, no signal is missed in detection during the observation period.
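The asynchronous sampling described here can be sketched in Python as follows. The uniform distribution of the per-sensor offsets, the fixed seed and the function name `sampling_instants` are assumptions made for this example; the text only states that the offsets are randomly generated within 20 ms.

```python
import random

def sampling_instants(num_sensors, num_snapshots, period=0.02, seed=0):
    """Return one list of sampling instants (in seconds) per sensor.

    Every sensor samples with the same period (20 ms for 50 Hz), but each
    one starts at its own random offset drawn from [0, period].
    """
    rng = random.Random(seed)
    offsets = [rng.uniform(0.0, period) for _ in range(num_sensors)]
    return [[off + k * period for k in range(num_snapshots)]
            for off in offsets]
```

For four sensors and 100 snapshots, `sampling_instants(4, 100)` returns 400 time stamps, no two sensors sharing the same instants except by coincidence.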

Assume that the self-positioning error follows a zero-mean normal distribution, whose variance is 1 m for all the sensors, namely

The angle measurement error also follows a zero-mean normal distribution with a variance of

degree for all the observations, namely

At the current stage, we do not consider the measurement errors from the gyroscopes installed on the platforms along with the sensors. Therefore, the angle measurement error is caused by the sensors only.

In order to evaluate the performance of the algorithms, we run

random experiments and take the root mean square error (RMSE) as the resulting performance metric. The RMSE of position, RMSE of velocity and the gross RMSE at instant

t, in linear scale and in dB, are defined by

respectively, where

denotes the initial position of the target at the

kth experiment,

and

are constants during experiments, and

denotes the estimate of the target speed at the

kth experiment. In each random experiment, the position error and the angle measurement error are generated randomly.
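A minimal Python sketch of an RMSE computation over repeated random experiments is given below. It assumes that the error of each experiment is the Euclidean distance between the estimated and true vectors and that the dB value is 10 log10 of the linear-scale RMSE; both conventions, and the function names, are assumptions for illustration rather than the exact definitions used in the paper.

```python
import math

def rmse(estimates, truths):
    """Root mean square of the Euclidean errors over all experiments."""
    squared = [sum((e - t) ** 2 for e, t in zip(est, tru))
               for est, tru in zip(estimates, truths)]
    return math.sqrt(sum(squared) / len(squared))

def to_db(value):
    """Convert a positive linear-scale value to dB (assumed 10*log10)."""
    return 10.0 * math.log10(value)
```

The same `rmse` function applies to position and velocity estimates alike, since both are 3D vectors.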

3.1. The Convergence Curves

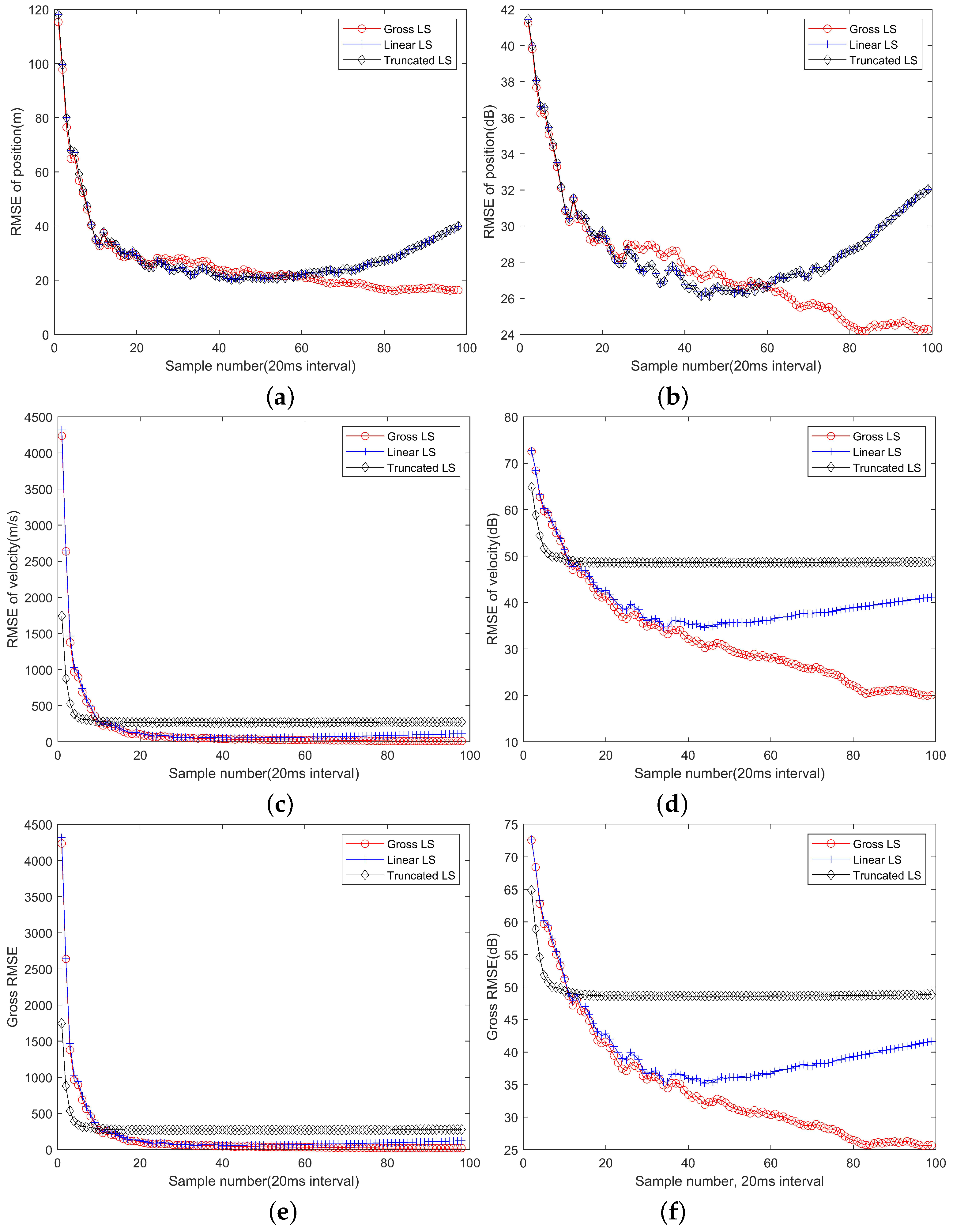

As the number of observations increases, the localization performance is expected to improve.

Figure 3 shows the mean RMSE of position and velocity and the gross RMSE of the gross LS algorithm, the linear LS algorithm and the truncated LS algorithm.

From

Figure 3a,b, it can be seen that with observations collected over a short period, roughly

s, corresponding to 40 observations, all the algorithms have close gross RMSE curves. However, as more observations become available, the linear LS algorithm and the truncated LS algorithm perform worse, and the position RMSE even increases with the number of samples. This is a predictable result, because the linear approximations of the target and platform motion gradually become inaccurate, resulting in a deteriorated positioning performance. The gross LS algorithm always benefits from more observations, because it does not rely on the linear approximation, and more observations contribute more information about the target position.

From

Figure 3c,d, it can be seen that with a few initial observations, the truncated LS algorithm performs the best, while the linear LS algorithm performs the worst, though close to the gross LS algorithm. As more observations are involved, the truncated LS algorithm converges to an RMSE level much higher than that achieved by the gross LS algorithm and the linear LS algorithm. Therefore, ignoring the term

causes a performance loss for long-term observations. The gross LS algorithm still benefits from more observations and is slightly better than the linear LS algorithm for short-term observations. The linear LS algorithm can reach a lower RMSE level but still suffers performance degradation due to the linear model mismatch. With about 40 snapshots of observations, corresponding to 160 observations and a period of

s, the velocity estimation performances of the two algorithms diverge.

The gross RMSE curves of all the algorithms are shown in

Figure 3e,f, which look very similar to

Figure 3c,d. That is because the velocity estimation errors are much greater than the positioning errors. Therefore, although the algorithms can estimate the velocity of targets, the accuracy is low due to the short observation period. In order to estimate the velocity with higher accuracy, one needs to use observations from a longer period, which can be achieved through a filtering operation.

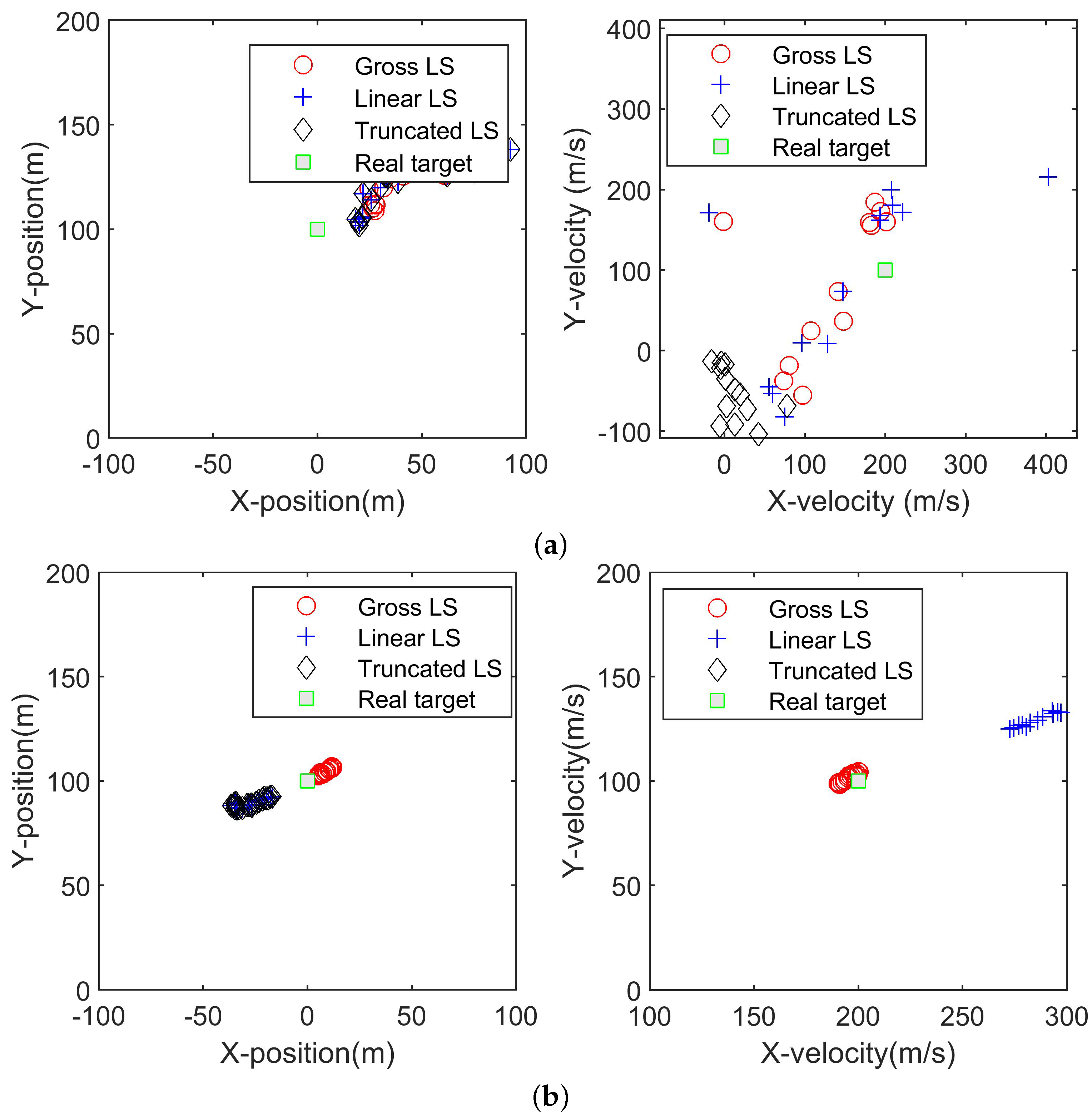

In order to show how the algorithms converge to the real value,

Figure 4a,b are presented to show the estimated target positions and velocities at the first 20 snapshots and the last 20 snapshots, respectively. It can be seen that with a few observations, the linear LS algorithm converges to the real position of the target with high accuracy. However, as more observations become available, the gross LS estimate is closer to the real target position, while the linear LS algorithm converges to other locations. Therefore, the gross LS algorithm is more robust in real applications.

3.2. Computation Cost

The advantage of the linear LS algorithm and the truncated LS algorithm lies in their computation cost and communication cost. In these algorithms, the locations of each sensor are approximated by a linear model, which is described by an initial position and a velocity term, with 6 parameters in total. Therefore, it is no longer necessary to transmit all the observations to the fusion center, and the communication cost is reduced. If 100 position estimates are described by 6 parameters, the data to transmit will be reduced to . Of course, there is a limit to which the data can be reduced, and the limit depends on the platform speed of the sensors, the positions of the sensors, the position of the target, and the period of the observations.

The computation cost reduction, for both the linear LS algorithm and the truncated LS algorithm, stems from the reduced number of multiplication and summation operations at the fusion center. Both algorithms can be implemented in a parallel structure, namely, the linear regressions of the platform position and the local DOA measurements are performed at local sensors, and the fusion center operates only on the results from the local sensors. To illustrate this fact, we record the computation times of the 20 random experiments for all the algorithms and show them in

Figure 5a,b, in linear scale and in dB, respectively. It can be seen that as the number of observations increases, the gross LS algorithm requires a longer computation time, whereas the linear LS algorithm and the truncated LS algorithm have much flatter slopes. Meanwhile, the linear LS algorithm needs a higher computation cost, as a result of estimating

and

. In fact, the computation cost of the linear LS algorithm does not vary much with the number of samples, because it always operates on the same number of parameters, which is determined by the number of sensors

N. The increase in computation cost due to more observations is now borne by the local sensors.

3.3. The Impact of Velocity Estimation Error

In the theoretical derivations, we assumed that the velocities of the sensors are estimated from position measurements provided by a device on the platform. In practice, the platform may provide other means to measure the velocity with higher accuracy. Meanwhile, in order to check whether the performance degradation of the linear LS algorithm over a long period is a result of inaccurate estimation of the platform velocity, we perform a simulation in which the estimated velocity is replaced by its real value . In this case, there is no velocity error and the only measurement bias is from the position measurement. Note that the linear LS algorithm shares the same position estimate with the truncated LS algorithm.

The RMSE of position estimation is shown in

Figure 6. In

Figure 6a,b, the sensor location uncertainty is zero-mean normal distributed with

. It can be clearly seen that for the linear LS algorithm, the RMSE curve with real sensor velocity is very close to the RMSE curve using estimated sensor velocity. In order to examine whether a higher position estimation error will make a difference, we make another simulation with

and the results are shown in

Figure 6c,d. The two RMSE curves are still very close. After some experiments with other position measurement errors, we find that the platform location and velocity regression algorithms can reach a high accuracy and thus do not cause much performance degradation. This conclusion depends heavily on the fact that the number of observations under consideration is often large in our configurations.

In fact, from (

92), the position estimate of the target does not depend much on the velocity estimate of the sensor platform. However, there is still a minor impact, because in our simulation configuration, the sensor location at

is obtained by an interpolation operation and if the platform velocity is exactly known

a priori, the position estimation will be more accurate.

From (

105), the target velocity estimation depends more on the sensor velocity. In order to examine the impact of the sensor velocity estimation on the target velocity estimation performance, we run a simulation with

and the results are shown in

Figure 7a,b, in linear scale and in dB, respectively. It can be seen that using the real sensor velocity instead of the estimated velocity makes a small difference, especially for the first few observations. As more observations are taken into account, replacing the estimated velocities by the real platform velocities makes only a minor difference. That is because more observations make the velocity estimation more accurate. However, accurate sensor velocity information does not necessarily improve the target velocity estimation, and sometimes its impact is slightly negative. As the target also moves at a constant velocity, it is reasonable to infer that the linear approximation of the signal DOA has a great impact on the target position and velocity estimation accuracy, as will be analyzed in the subsequent results.

3.4. Nonlinearity of the DOA Approximation

In order to examine the impact of DOA nonlinearity on the final performance,

Figure 7c,d show the azimuth angles and elevation angles of the target in the four sensors. The azimuth angle and elevation angle change by about

at most during 100 snapshots. Over 100 observations, corresponding to 2 s, the nonlinearity of both DOA angles becomes obvious. Explicit numerical quantities are needed to judge whether this nonlinearity is acceptable.

4. Conclusions

This paper studies the target position and velocity estimation problem with distributed passive sensors. The problem is formulated with distributed asynchronous sensors connected to a fusion center through communication links. We first present a gross LS algorithm that takes all the angle observations from distributed sensors into account to make an LS estimate. The algorithm is simplified after some matrix manipulations, but as it needs local sensors to transmit all local observations to a fusion center, the communication cost is high. Meanwhile, the computation cost at the fusion center is also high. The communication cost is mainly a result of the high-dimensional received data. In order to reduce the communication cost and computation cost, we present a linear LS algorithm that approximates local sensor locations and angle observations with linear models and then estimates the target position and velocity from the parameters of the linear models. In order to simplify the velocity estimation, we also present a truncated LS algorithm that simply takes an average to estimate the target velocity. In this manner, both the communication cost and the computation cost at the fusion center are reduced significantly. However, the linear LS algorithm and the truncated LS algorithm face the model mismatch problem, namely, if the linear approximation is no longer accurate, the performance may degrade greatly. This differs from the gross LS algorithm, which always benefits from more observations, as long as the linear target position model holds.

The performance of the concerned algorithms is verified with numerical results. It is found that with fewer observations, the truncated LS algorithm performs the best. As the number of observations increases, the linear LS algorithm and the gross LS algorithm perform better. With even more observations available, the linear models become mismatched and the gross LS algorithm performs the best. The gross LS algorithm always benefits from more local observations, which distinguishes it from the other two algorithms. The price is a higher communication cost and a higher computation cost at the fusion center. We also examined the angle distortion problem, which is the only nonlinear term in the simulation configurations. The matrix manipulations we derive often reduce the computation cost of the estimation.

Compared to the conventional localization and tracking framework, the algorithms with velocity estimation need a much lower rate of tracking operations, whose matrix inverse operations often incur a huge computation cost. Meanwhile, they can provide more accurate measurements of the target states, from which the tracking algorithm will also benefit. In our simulations, the sensor location error is not taken into account. In practice, this is inevitable. If the self-positioning error does not follow a zero-mean Gaussian distribution, one may account for it in a distributed angle-only positioning algorithm, which will be considered in our future work.