A Symbols Based BCI Paradigm for Intelligent Home Control Using P300 Event-Related Potentials

Abstract

1. Introduction

2. Materials and Methods

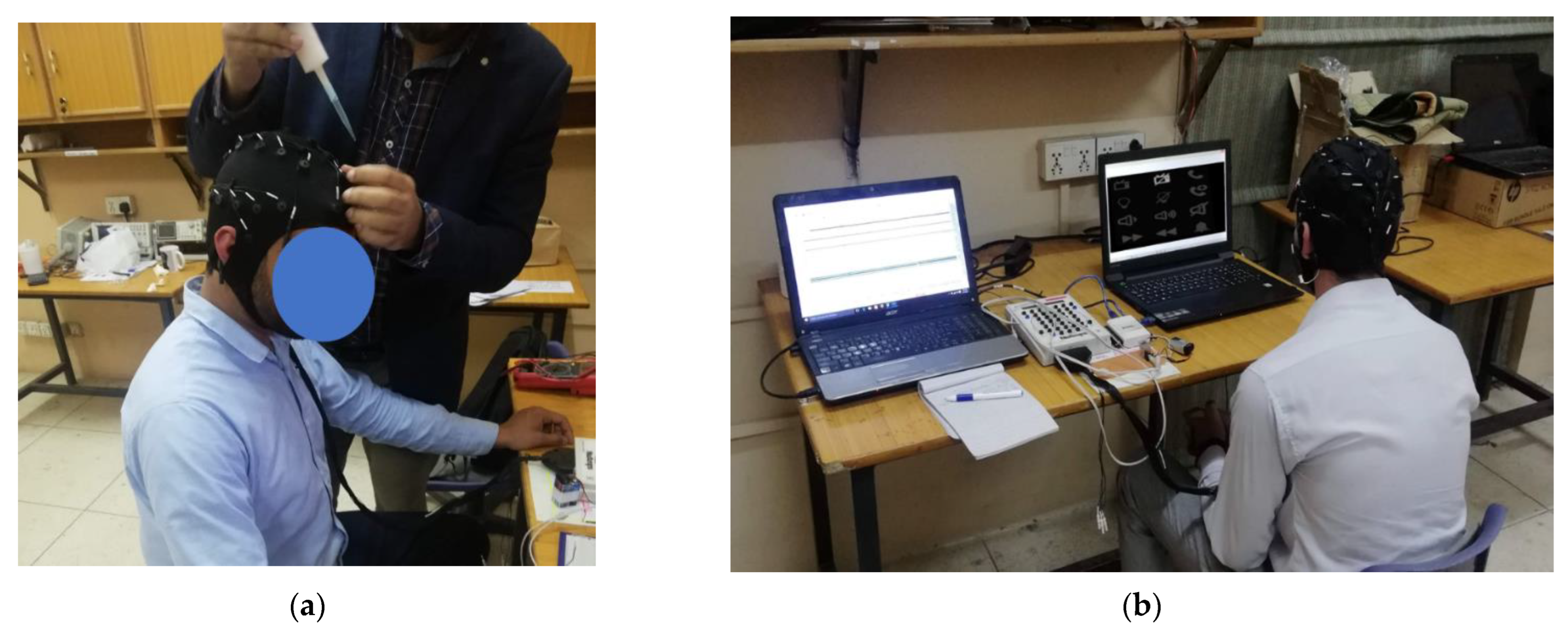

2.1. Participants

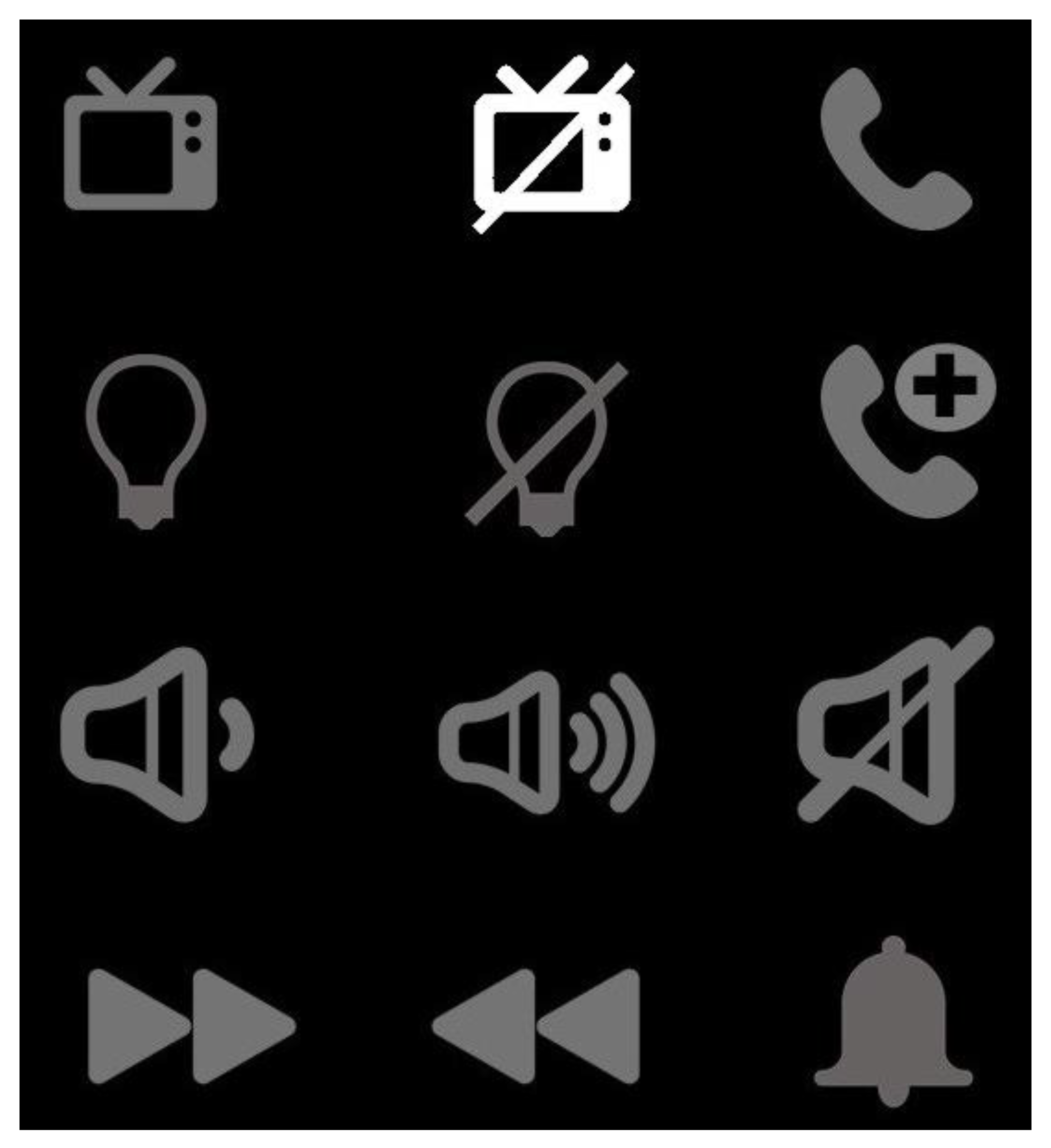

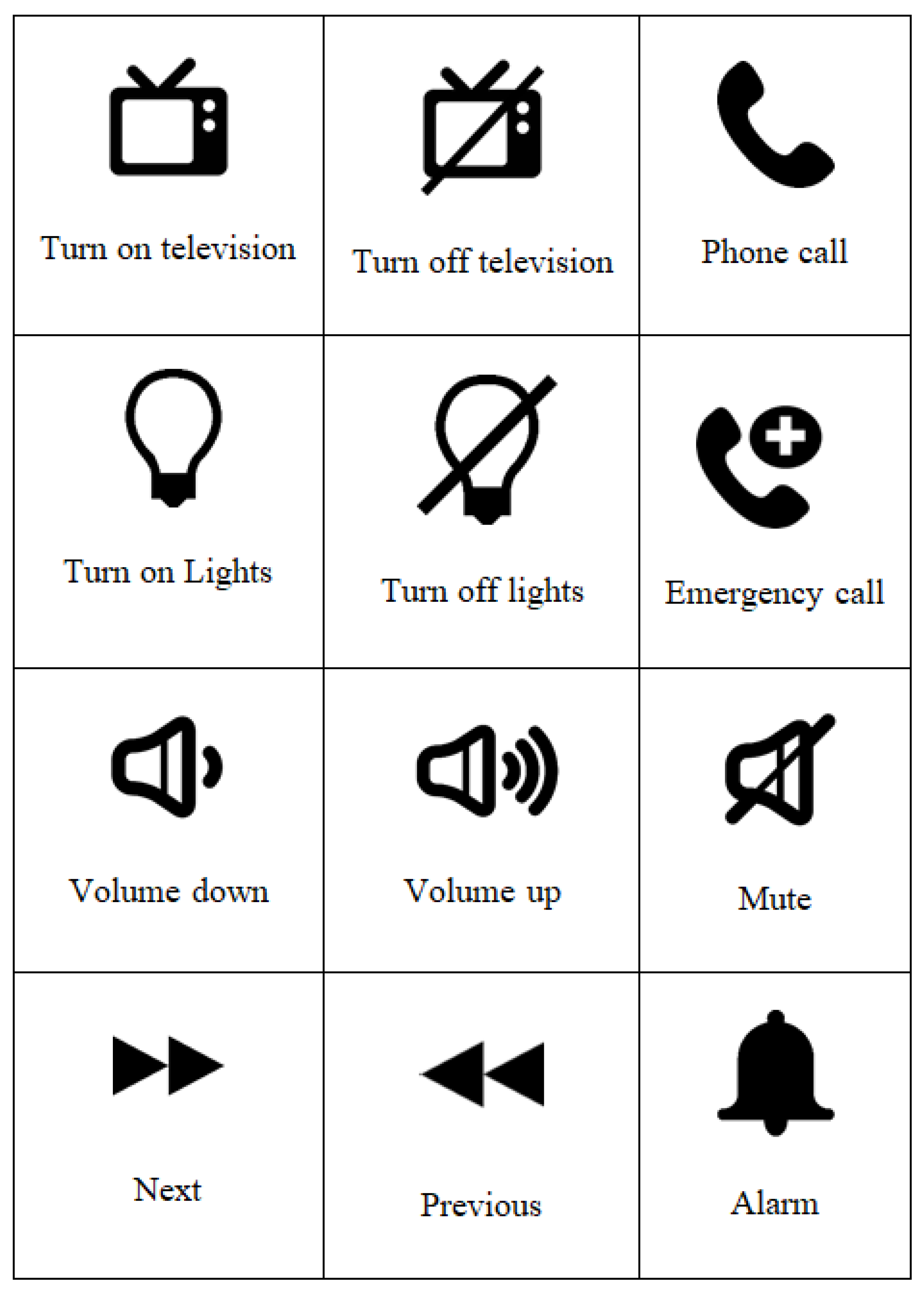

2.2. Primary Display for Controlling Home Appliances

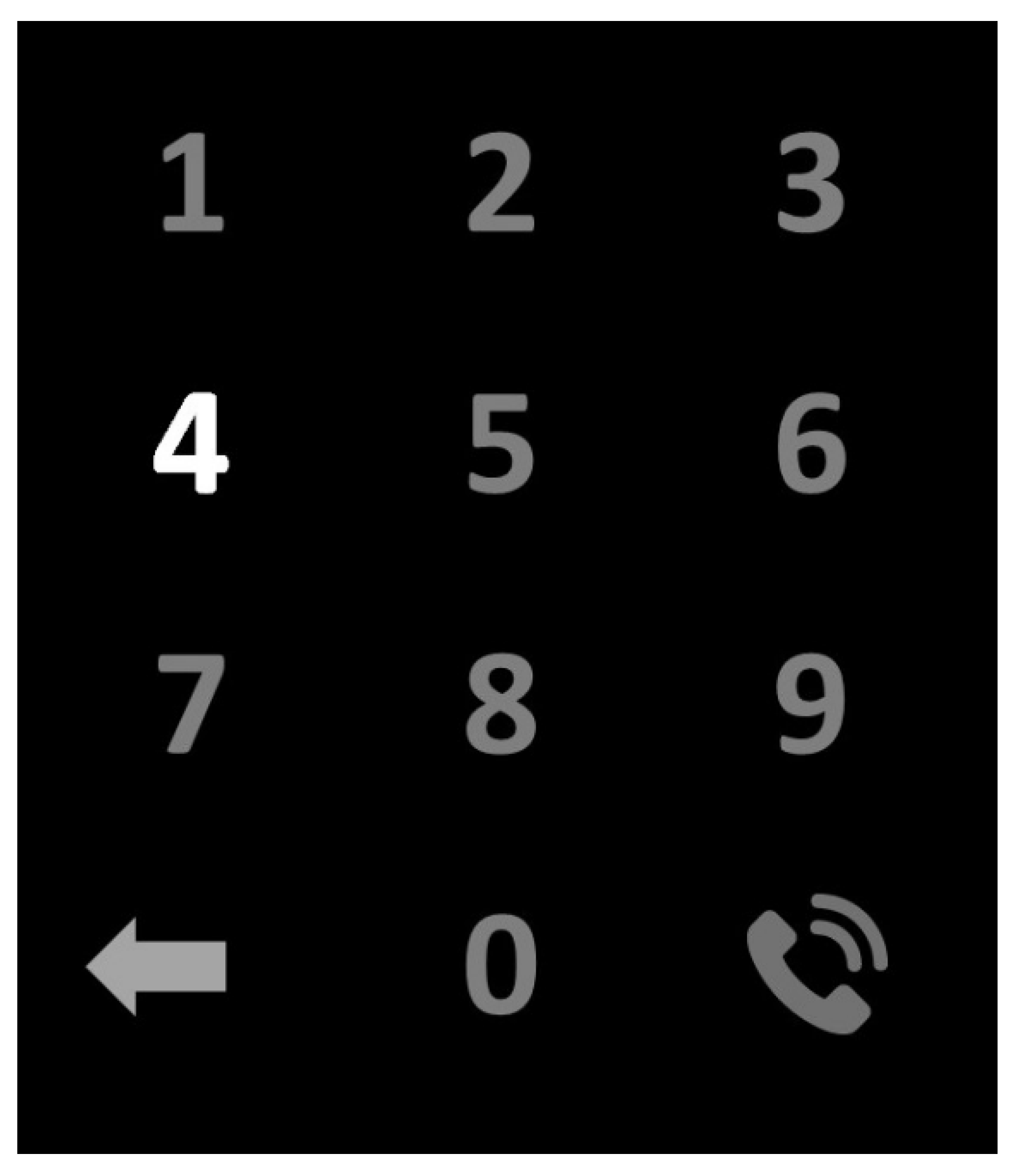

2.3. Secondary Display for Making Phone Calls

2.4. Experimental Setup

2.5. Signal Processing and Classification

3. Results

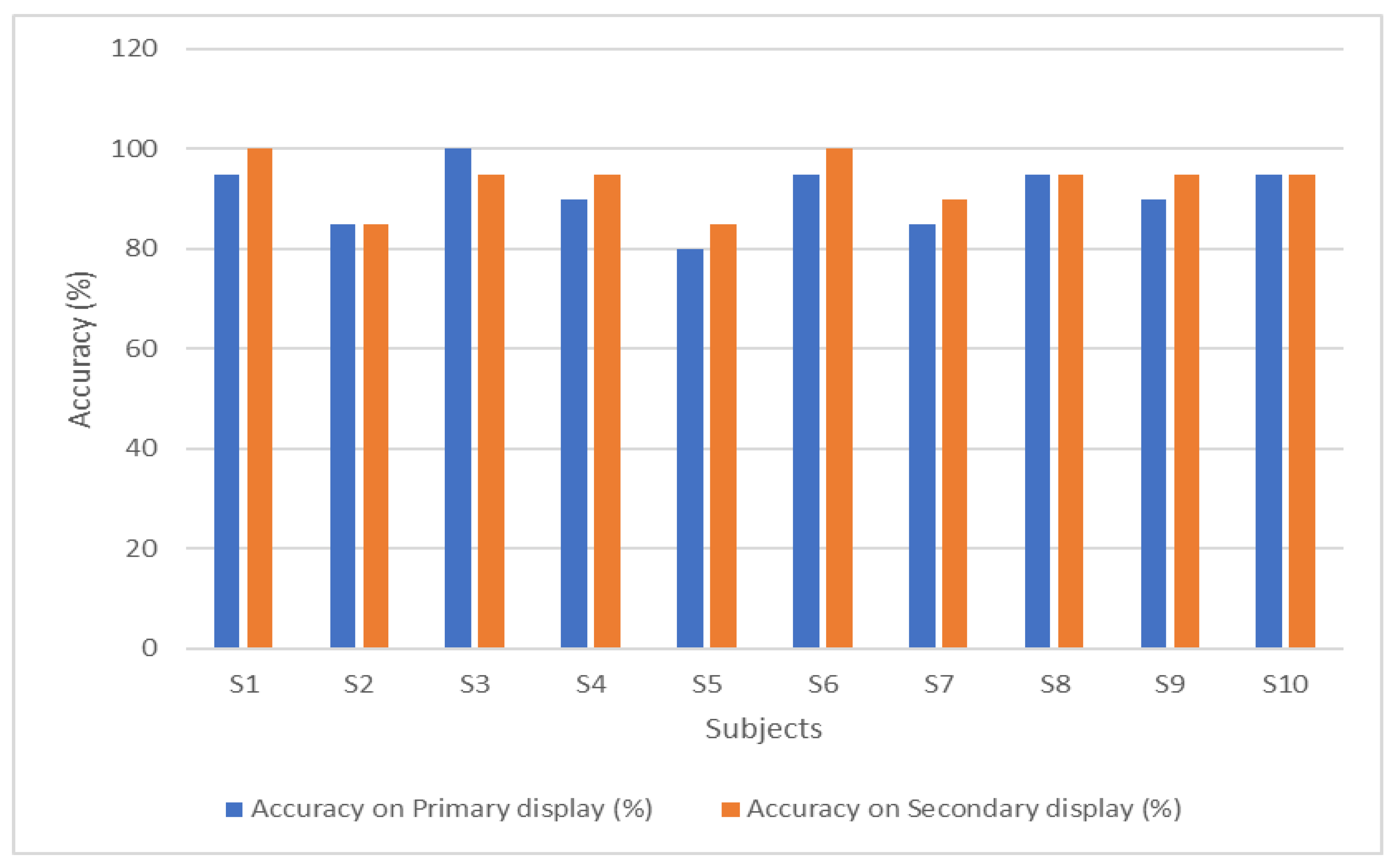

3.1. Experimental Results

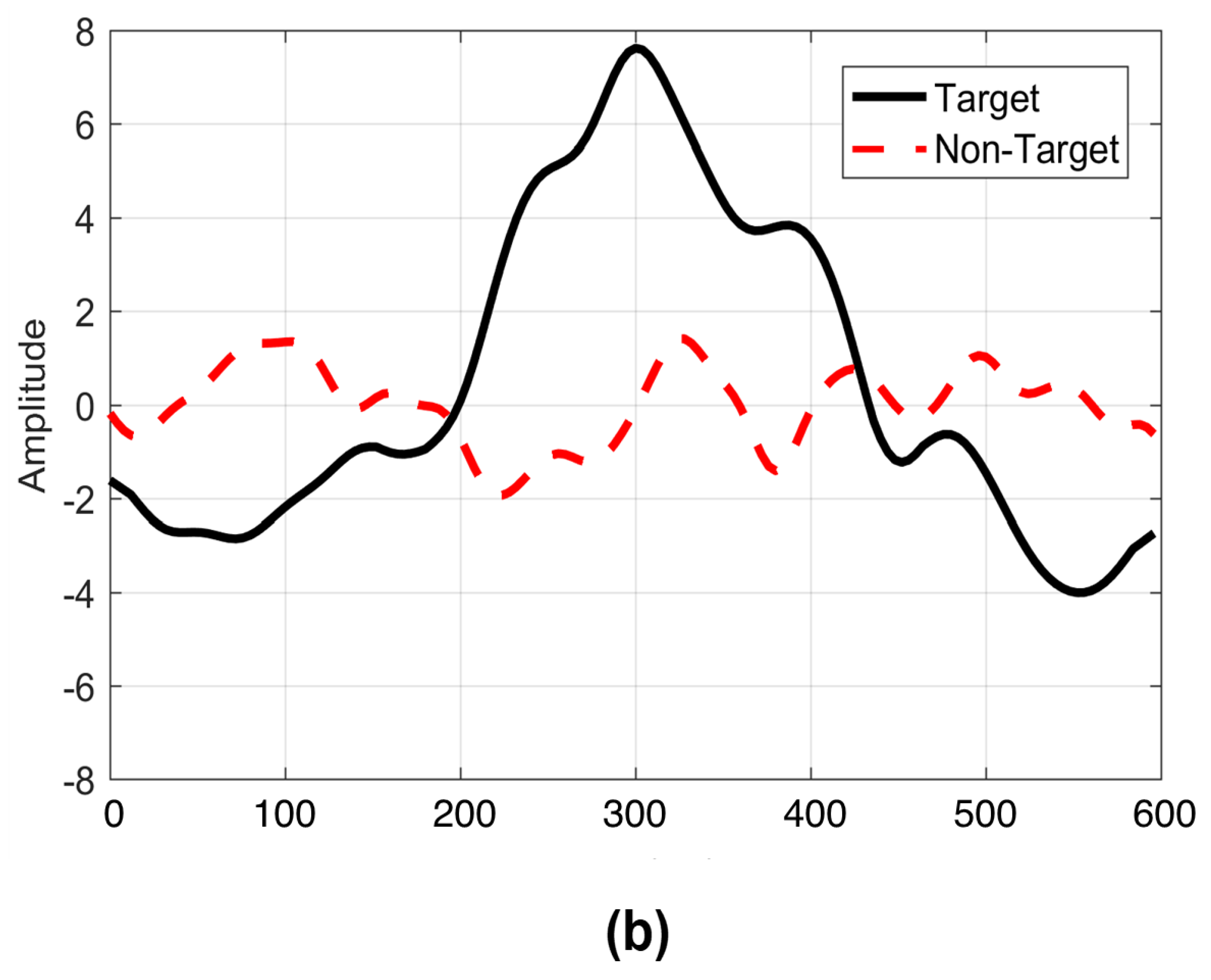

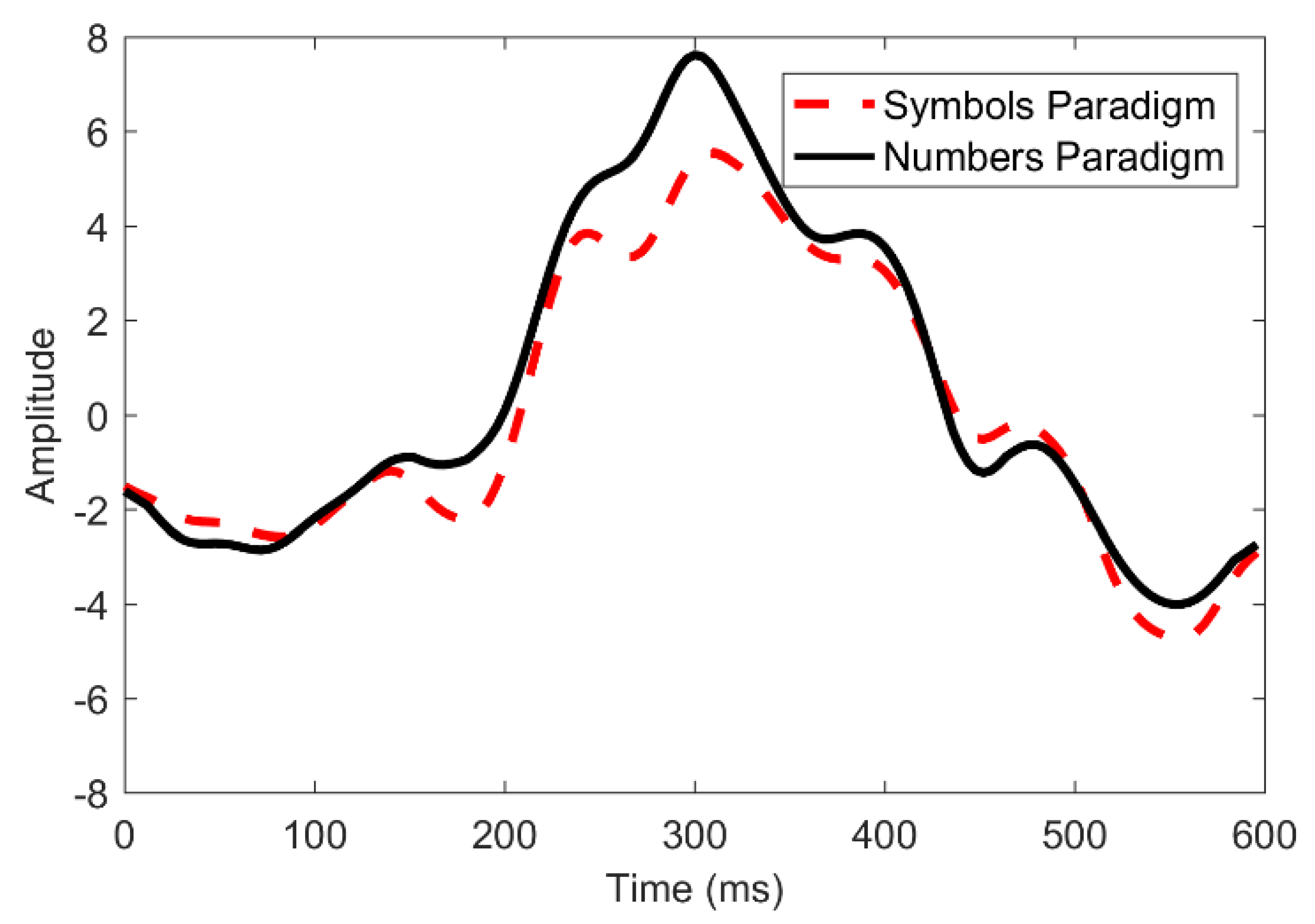

3.2. Waveform Morphologies

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BCI | Brain-Computer Interface |
| CT | Computed Tomography |
| ECoG | Electrocorticography |
| EEG | Electroencephalography |
| EMG | Electromyography |
| ERP | Event-Related Potential |
| fMRI | Functional Magnetic Resonance Imaging |
| fNIRS | Functional Near-Infrared Spectroscopy |
| kNN | k-Nearest Neighbors |
| LDA | Linear Discriminant Analysis |
| MEG | Magnetoencephalography |
| PET | Positron Emission Tomography |
| RF | Random Forest |
| SSVEP | Steady-State Visual Evoked Potential |
| SVM | Support Vector Machine |


| Parameter | Value |
|---|---|
| Intensification time | 100 ms |
| Inter-stimulus blank time | 75 ms |
| Total symbols | 12 |
| Number of repetitions per symbol | 15 |
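The stimulus parameters above fix how long one selection takes. The sketch below works through that arithmetic under assumptions the table does not state: that the 12 symbols are intensified one at a time (single-symbol flashing), that every flash is followed by the blank interval, and that no pause between selections is counted. The function names are hypothetical.

```python
def stimulus_onset_asynchrony_ms(intensification_ms: float, blank_ms: float) -> float:
    """Time from one flash onset to the next: flash duration plus blank gap."""
    return intensification_ms + blank_ms


def selection_duration_s(n_symbols: int, repetitions: int,
                         intensification_ms: float, blank_ms: float) -> float:
    """Duration of one selection in seconds.

    ASSUMPTION: single-symbol flashing (each symbol flashed once per
    repetition) and no inter-selection pause; neither is stated in the table.
    """
    soa_ms = stimulus_onset_asynchrony_ms(intensification_ms, blank_ms)
    n_flashes = n_symbols * repetitions
    return n_flashes * soa_ms / 1000.0


if __name__ == "__main__":
    soa = stimulus_onset_asynchrony_ms(100, 75)
    duration = selection_duration_s(12, 15, 100, 75)
    print(f"SOA: {soa} ms")
    print(f"Flashes per selection: {12 * 15}")
    print(f"Selection duration: {duration:.1f} s")
```

With the tabulated values this gives a 175 ms onset-to-onset interval and 180 flashes, i.e. 31.5 s per selection under the stated assumptions. A different flashing scheme (for example, row/column groups) would change the flash count and therefore the duration.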

| Subjects | Accuracy on Primary Display (%) | Accuracy on Secondary Display (%) | Average Accuracy (%) |
|---|---|---|---|
| S1 | 95 | 100 | 97.5 |
| S2 | 85 | 85 | 85 |
| S3 | 100 | 95 | 97.5 |
| S4 | 90 | 95 | 92.5 |
| S5 | 80 | 85 | 82.5 |
| S6 | 95 | 100 | 97.5 |
| S7 | 85 | 90 | 87.5 |
| S8 | 95 | 95 | 95 |
| S9 | 90 | 95 | 92.5 |
| S10 | 95 | 95 | 95 |
| Mean | 91 | 93.5 | 92.25 |
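The Average Accuracy column and the Mean row follow directly from the per-subject values. The sketch below recomputes them as a consistency check; the dictionary only transcribes the table, and the helper names `subject_average` and `summary` are hypothetical.

```python
from statistics import mean

# Per-subject accuracies (%) transcribed from the table above:
# (primary display, secondary display)
ACCURACY = {
    "S1": (95, 100), "S2": (85, 85), "S3": (100, 95), "S4": (90, 95),
    "S5": (80, 85), "S6": (95, 100), "S7": (85, 90), "S8": (95, 95),
    "S9": (90, 95), "S10": (95, 95),
}


def subject_average(primary: float, secondary: float) -> float:
    """Unweighted mean of one subject's accuracy on the two displays."""
    return (primary + secondary) / 2


def summary(acc: dict) -> tuple:
    """Return (primary mean, secondary mean, mean of per-subject averages)."""
    primary_mean = mean(p for p, _ in acc.values())
    secondary_mean = mean(s for _, s in acc.values())
    overall_mean = mean(subject_average(p, s) for p, s in acc.values())
    return primary_mean, secondary_mean, overall_mean


if __name__ == "__main__":
    for subject, (p, s) in ACCURACY.items():
        print(subject, subject_average(p, s))
    print("Mean:", summary(ACCURACY))
```

The recomputed values match the table: 91% on the primary display, 93.5% on the secondary display and 92.25% overall. The overall figure is an unweighted mean of the two displays.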

| Subjects | Random Forest (%) | SVM (%) | LDA (%) | kNN (%) |
|---|---|---|---|---|
| S1 | 97.5 | 95 | 92.5 | 95 |
| S2 | 85 | 85 | 82.5 | 85 |
| S3 | 97.5 | 97.5 | 95 | 92.5 |
| S4 | 92.5 | 95 | 90 | 92.5 |
| S5 | 82.5 | 85 | 82.5 | 80 |
| S6 | 97.5 | 92.5 | 90 | 92.5 |
| S7 | 87.5 | 85 | 87.5 | 87.5 |
| S8 | 95 | 95 | 92.5 | 90 |
| S9 | 92.5 | 92.5 | 90 | 95 |
| S10 | 95 | 87.5 | 90 | 92.5 |
| Mean | 92.25 | 91 | 89.25 | 90.25 |
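The classifier comparison can be summarized in two ways: the mean accuracy per classifier (the Mean row) and the number of subjects for which each classifier reaches the highest accuracy. The sketch below computes both from the transcribed table. The tie rule (every tied classifier gets credit) is an illustrative choice, not the authors' method, and the helper names are hypothetical.

```python
from statistics import mean

CLASSIFIERS = ("Random Forest", "SVM", "LDA", "kNN")

# Per-subject accuracies (%) transcribed from the table above,
# in the column order of CLASSIFIERS.
RESULTS = {
    "S1": (97.5, 95, 92.5, 95),
    "S2": (85, 85, 82.5, 85),
    "S3": (97.5, 97.5, 95, 92.5),
    "S4": (92.5, 95, 90, 92.5),
    "S5": (82.5, 85, 82.5, 80),
    "S6": (97.5, 92.5, 90, 92.5),
    "S7": (87.5, 85, 87.5, 87.5),
    "S8": (95, 95, 92.5, 90),
    "S9": (92.5, 92.5, 90, 95),
    "S10": (95, 87.5, 90, 92.5),
}


def classifier_means(results: dict) -> dict:
    """Mean accuracy of each classifier across all subjects."""
    columns = zip(*results.values())  # transpose rows into per-classifier columns
    return {name: mean(col) for name, col in zip(CLASSIFIERS, columns)}


def best_counts(results: dict) -> dict:
    """Count subjects where each classifier reaches the top accuracy.

    Ties credit every tied classifier (an illustrative convention).
    """
    counts = dict.fromkeys(CLASSIFIERS, 0)
    for row in results.values():
        top = max(row)
        for name, acc in zip(CLASSIFIERS, row):
            if acc == top:
                counts[name] += 1
    return counts


if __name__ == "__main__":
    print(classifier_means(RESULTS))
    print(best_counts(RESULTS))
```

Random Forest has the highest mean (92.25%) and reaches the top accuracy, alone or tied, for 7 of the 10 subjects. SVM does so for 5 subjects, kNN for 3 and LDA for 1. These are descriptive counts only and do not test whether the differences are significant.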

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Akram, F.; Alwakeel, A.; Alwakeel, M.; Hijji, M.; Masud, U. A Symbols Based BCI Paradigm for Intelligent Home Control Using P300 Event-Related Potentials. Sensors 2022, 22, 10000. https://doi.org/10.3390/s222410000
