An Efficient Algorithm for Infrared Earth Sensor with a Large Field of View

Abstract

1. Introduction

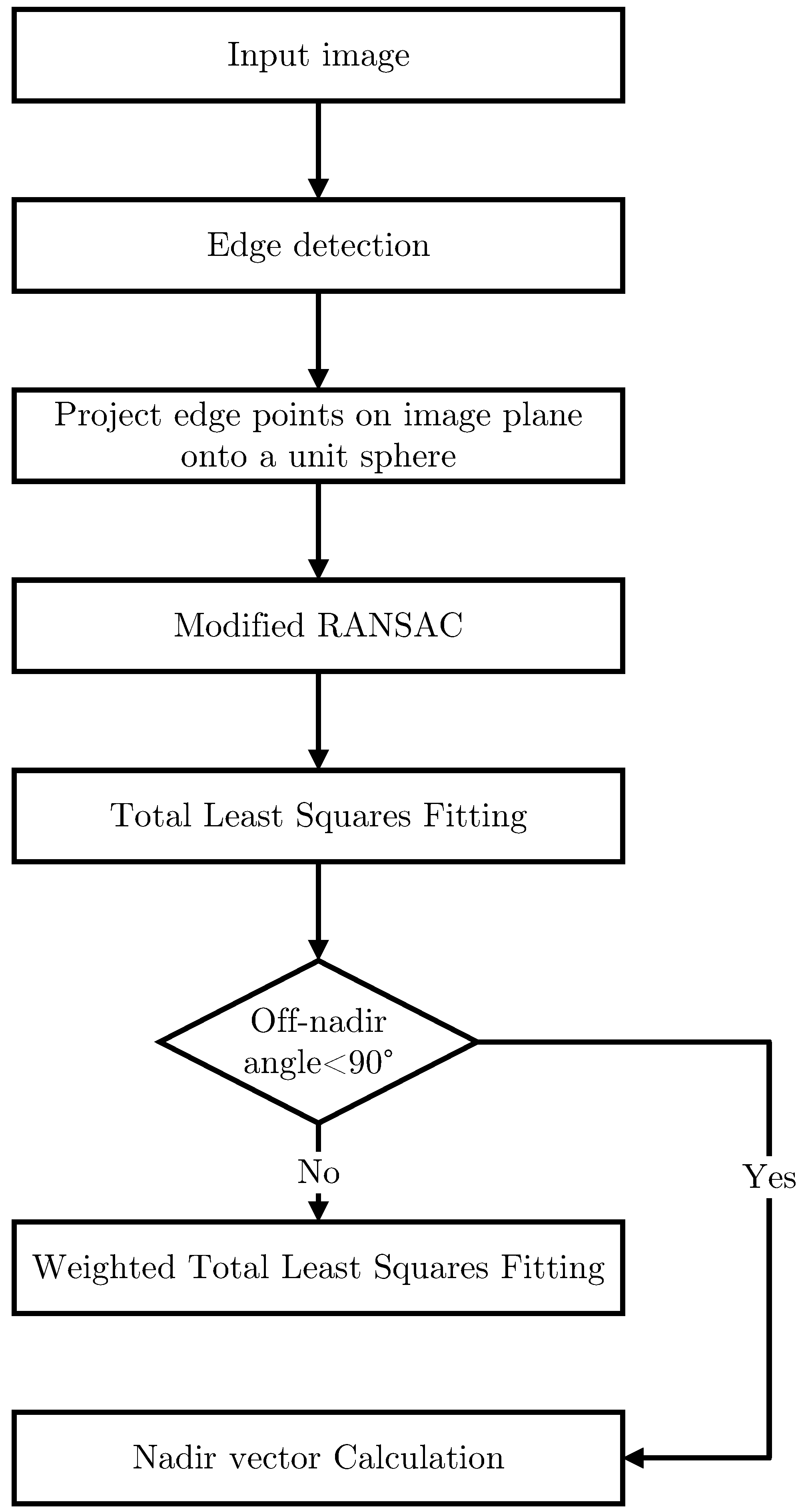

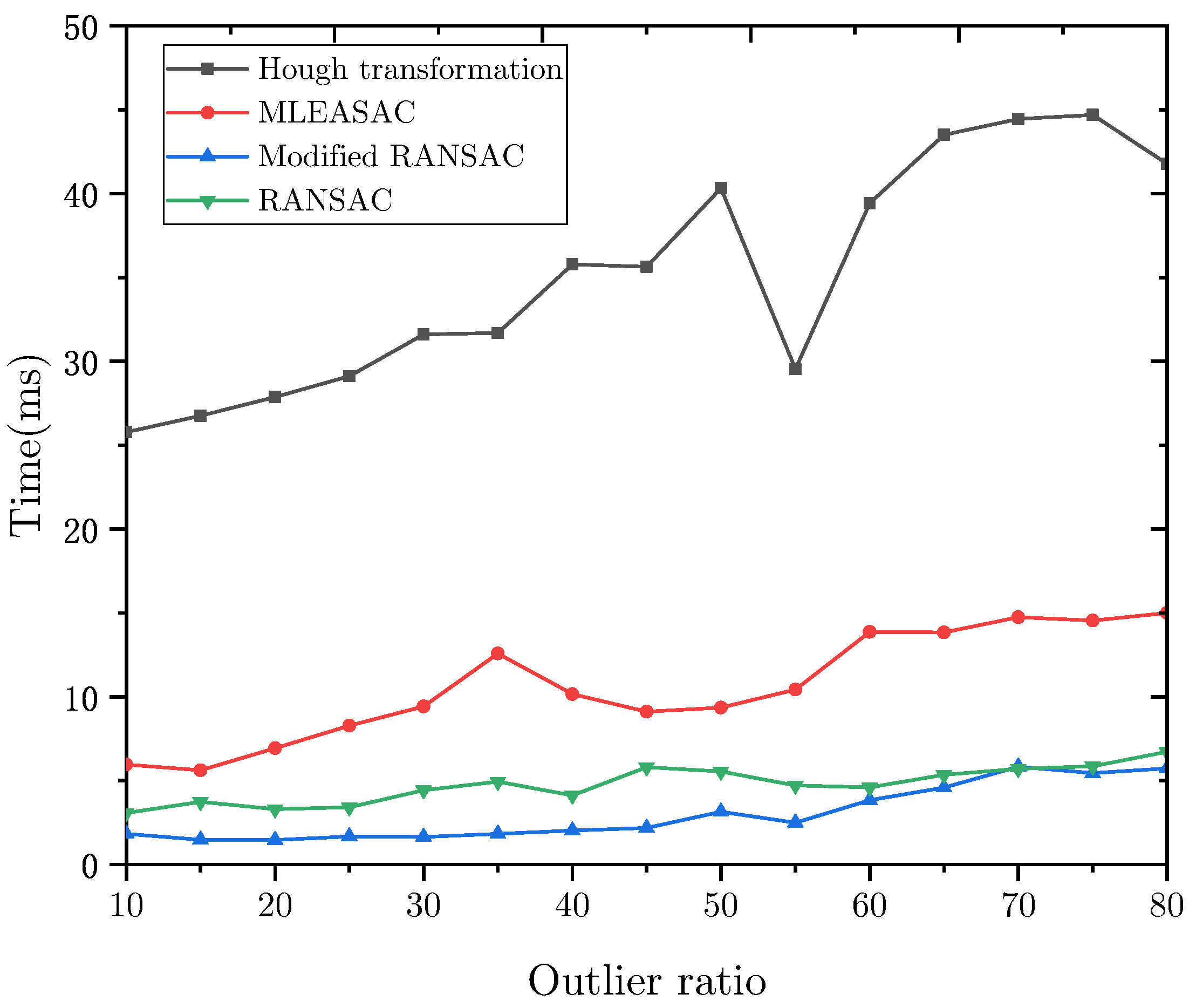

- A modified RANSAC with a pre-verification procedure is used to remove outliers. In the pre-verification procedure, a small subset of the measured data, rather than all of it, is used to qualify each candidate model, which improves efficiency.

- The Earth horizon points are mapped onto the unit sphere instead of the image plane, so they form a three-dimensional curve rather than a conic section. Fitting this 3D curve is more robust than conic fitting for panoramic annular lens (PAL) images or fisheye images.

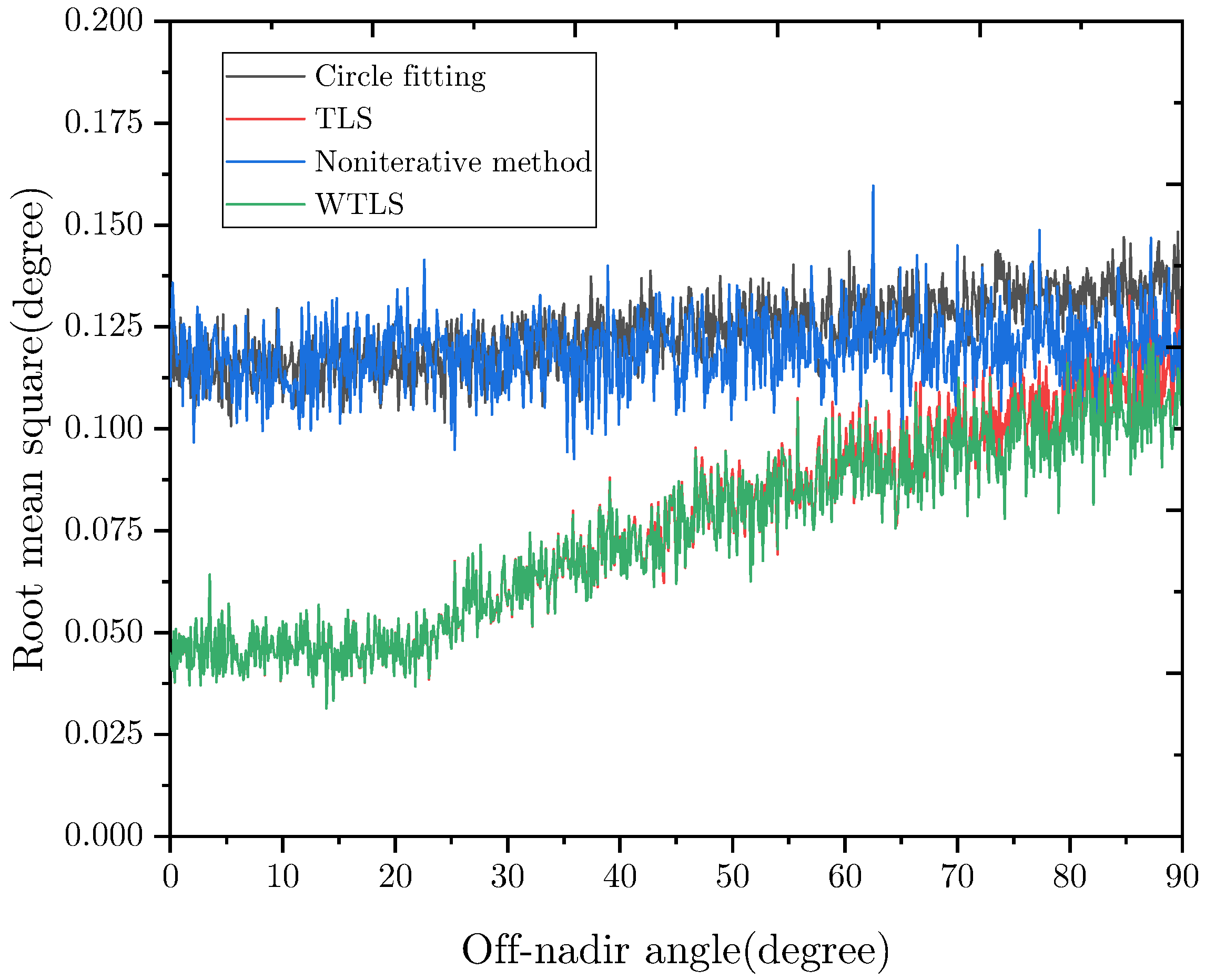

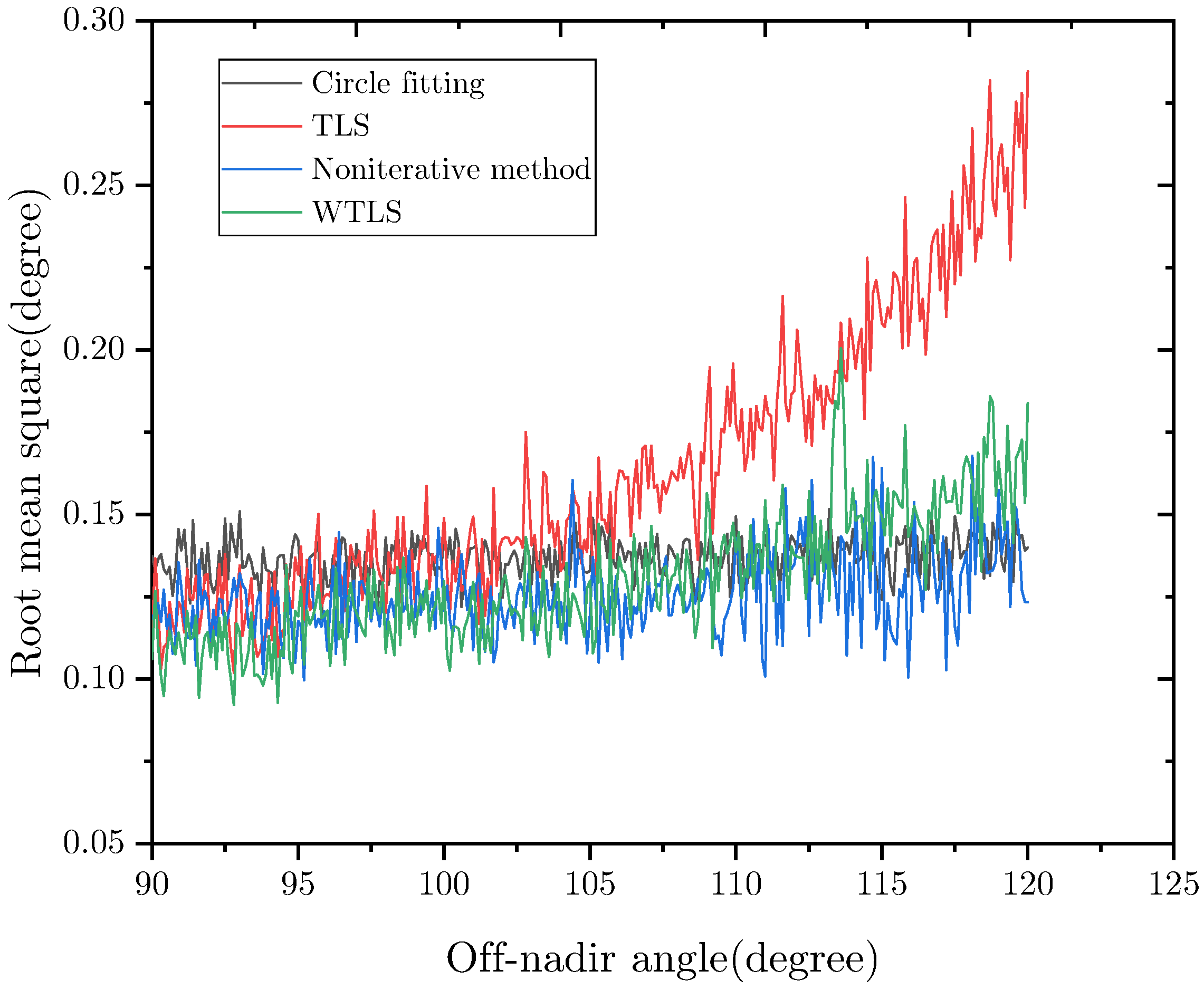

- Weighted total least squares (WTLS) is introduced into the 3D curve fitting to account for the different precision of each horizon point. Consequently, the accuracy of the sensor is improved.

2. Algorithm Description

2.1. Edge Detection

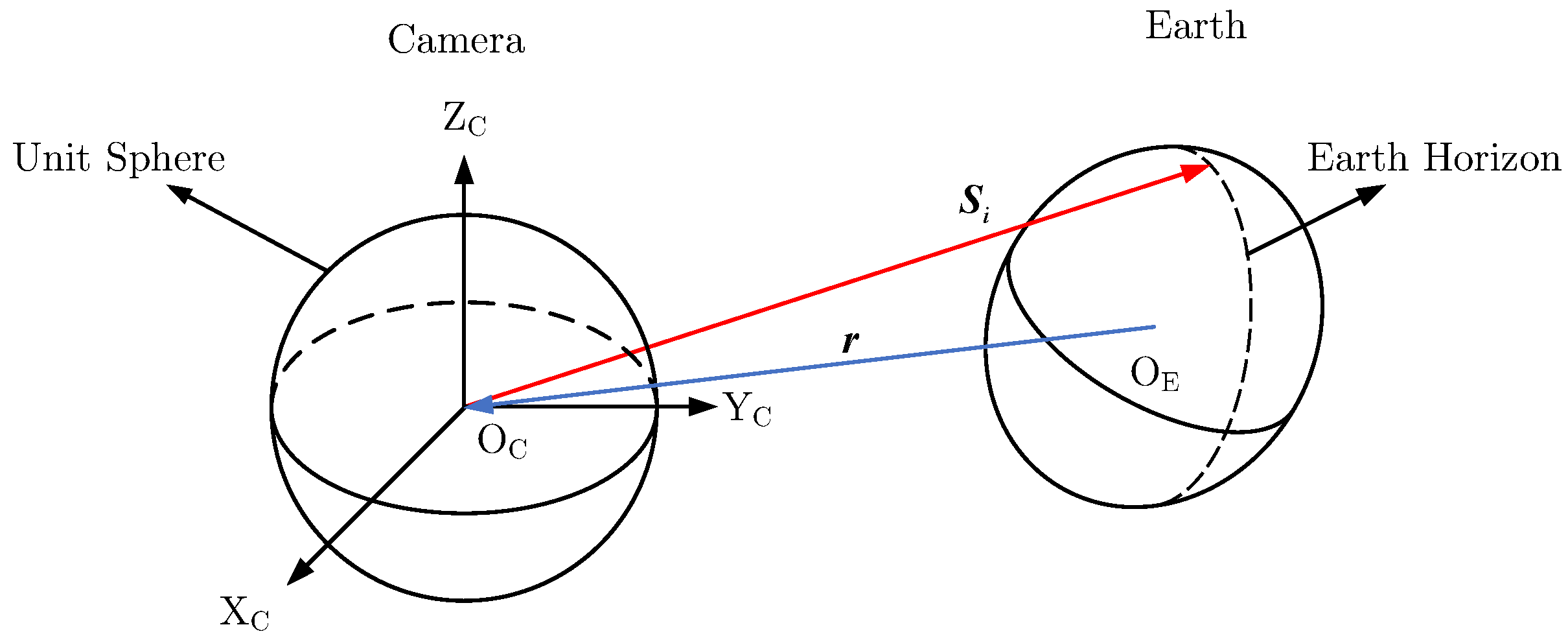

2.2. Horizon Projection

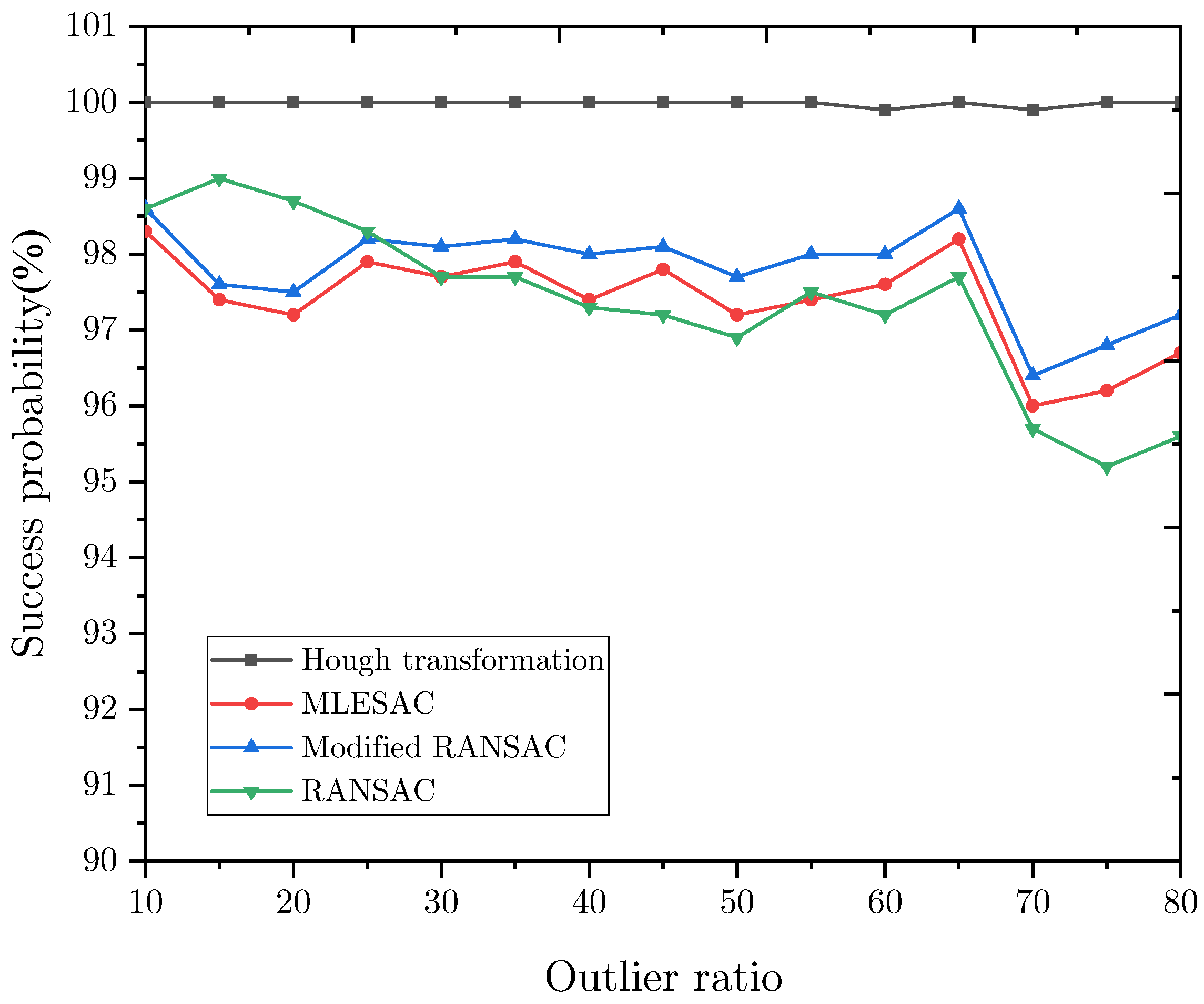
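
As a rough illustration of projecting an edge pixel onto the unit sphere, the sketch below assumes an ideal equidistant (f-theta) lens model, in which the radial distance from the principal point is proportional to the angle from the optical axis. This is an assumption made for illustration only; it is not necessarily the calibrated PAL model used by the sensor. The function name `pixel_to_unit_sphere` and the parameters `cx`, `cy`, and `f_pix` are hypothetical.

```python
import math


def pixel_to_unit_sphere(u, v, cx, cy, f_pix):
    """Map pixel (u, v) to a unit vector, assuming r = f_pix * theta.

    cx, cy : principal point in pixels (assumed known from calibration)
    f_pix  : focal length in pixels (assumed known from calibration)
    """
    dx, dy = u - cx, v - cy
    r = math.hypot(dx, dy)        # radial distance from the principal point
    if r == 0.0:
        return (0.0, 0.0, 1.0)    # pixel lies on the optical axis
    theta = r / f_pix             # angle from the optical axis, in radians
    s = math.sin(theta) / r       # scales (dx, dy) to the sphere's x, y
    return (dx * s, dy * s, math.cos(theta))
```

Every returned vector has unit length, so horizon points produced this way can be passed directly to the outlier-removal and curve-fitting stages.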

2.3. Modified RANSAC

- Step 1: Randomly select five sample points. The coordinates of the $i$-th point are denoted $(x_i, y_i, z_i)$.

- Step 2: Calculate the normal vector to the plane determined by three of them using Equation (4).

- Step 3: Calculate the angles $\theta_1$–$\theta_5$ between the normal vector and the vectors pointing from the origin to the sample points, and let $\bar{\theta}$ be the mean of these angles. If the mean deviation of the angles from $\bar{\theta}$ exceeds the pre-verification threshold, go back to Step 1.

- Step 4: Calculate the angles between the normal vector and the vectors pointing from the origin to the remaining points. If the angle for a point differs from $\bar{\theta}$ by less than the inlier threshold, the point is considered an inlier. Count the inliers.

- Step 5: If the number of inliers exceeds the largest number found so far, record it as the new maximum.

- Step 6: Repeat Steps 1–5 up to a preset number of times. Note that if a set with a sufficient number of inliers is found, the loop ends early.

- Step 7: Remove all the outliers and extract the actual horizon. Furthermore, the normal vector is approximately the nadir vector, and thus the approximate off-nadir angle can be obtained.
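
The procedure above can be sketched in plain Python as follows. This is a minimal illustration, not the authors' implementation: the function name `ransac_horizon`, the parameters `pre_tol`, `inlier_tol`, `max_iter`, and `enough`, and all default threshold values are assumptions chosen for the example. The normal is computed as the cross product of two edge vectors of the triangle formed by the first three samples, which is one common way to obtain a plane normal and may differ in form from Equation (4).

```python
import math
import random


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _unit(a):
    n = math.sqrt(_dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)


def _angle(normal, p):
    """Angle (rad) between a unit normal and the direction of point p."""
    return math.acos(max(-1.0, min(1.0, _dot(normal, _unit(p)))))


def ransac_horizon(points, n_samples=5, pre_tol=0.01, inlier_tol=0.01,
                   max_iter=200, enough=None, rng=None):
    """Return (normal, inliers); all thresholds are illustrative assumptions."""
    rng = rng or random.Random(0)          # seeded by default for repeatability
    enough = len(points) if enough is None else enough
    best_normal, best_inliers = None, []
    for _ in range(max_iter):
        sample = rng.sample(points, n_samples)
        # Plane normal from the first three samples.
        e1 = tuple(a - b for a, b in zip(sample[1], sample[0]))
        e2 = tuple(a - b for a, b in zip(sample[2], sample[0]))
        normal = _cross(e1, e2)
        if _dot(normal, normal) < 1e-18:
            continue                       # degenerate triple, resample
        normal = _unit(normal)
        if _dot(normal, sample[0]) < 0.0:  # orient the normal toward the points
            normal = (-normal[0], -normal[1], -normal[2])
        # Pre-verification on the small sample only.
        angles = [_angle(normal, p) for p in sample]
        mean = sum(angles) / len(angles)
        mean_dev = sum(abs(a - mean) for a in angles) / len(angles)
        if mean_dev > pre_tol:
            continue                       # reject model before the full check
        # Full verification against every point.
        inliers = [p for p in points
                   if abs(_angle(normal, p) - mean) < inlier_tol]
        if len(inliers) > len(best_inliers):
            best_normal, best_inliers = normal, inliers
        if len(best_inliers) >= enough:
            break                          # enough inliers found, stop early
    return best_normal, best_inliers
```

The pre-verification test costs only `n_samples` angle evaluations, so most models contaminated by outliers are discarded before the full pass over all points, which is where the saving comes from.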

2.4. Three-Dimensional Curve Fitting

2.4.1. Projection of Earth Horizon on the Unit Sphere

2.4.2. Weighted Total Least Squares

- The initial estimate is obtained from TLS

- for i=1 to N do

- end when
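
For the TLS initialization mentioned above, a classic unweighted total least squares solution can be obtained from the singular value decomposition of the augmented matrix $[A \mid b]$. The sketch below shows that step only; the per-point weighting and the iteration of WTLS are not reproduced. The horizon model used in `fit_horizon_plane` is an assumption made for illustration: for a spherical Earth, horizon points $\mathbf{p}_i$ on the unit sphere lie on a plane $\mathbf{n} \cdot \mathbf{p}_i = \cos\rho$, which can be written as $\mathbf{p}_i \cdot \mathbf{m} = 1$ with $\mathbf{m} = \mathbf{n} / \cos\rho$. Both function names are hypothetical.

```python
import numpy as np


def tls_solve(A, b):
    """Classic TLS for A x ~ b, allowing errors in both A and b.

    Requires at least n + 1 rows, where n is the number of unknowns.
    """
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float).reshape(-1, 1)
    n = A.shape[1]
    # The right singular vector of [A | b] with the smallest singular
    # value is proportional to [x; -1].
    _, _, vt = np.linalg.svd(np.hstack([A, b]), full_matrices=False)
    v = vt[-1]
    if abs(v[n]) < 1e-12:
        raise ValueError("TLS solution does not exist for this data")
    return -v[:n] / v[n]


def fit_horizon_plane(points):
    """Fit p . m = 1 and return (unit normal, cone half-angle in rad).

    Assumes a spherical Earth, so the horizon is a circle on the unit sphere.
    """
    points = np.asarray(points, dtype=float)
    m = tls_solve(points, np.ones(len(points)))
    norm = np.linalg.norm(m)
    half_angle = np.arccos(np.clip(1.0 / norm, -1.0, 1.0))
    return m / norm, half_angle
```

Treating the constant right-hand side as noisy is a simplification of plain TLS; a weighted formulation would instead assign each horizon point its own covariance.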

3. Experiments

3.1. Calibration of PAL

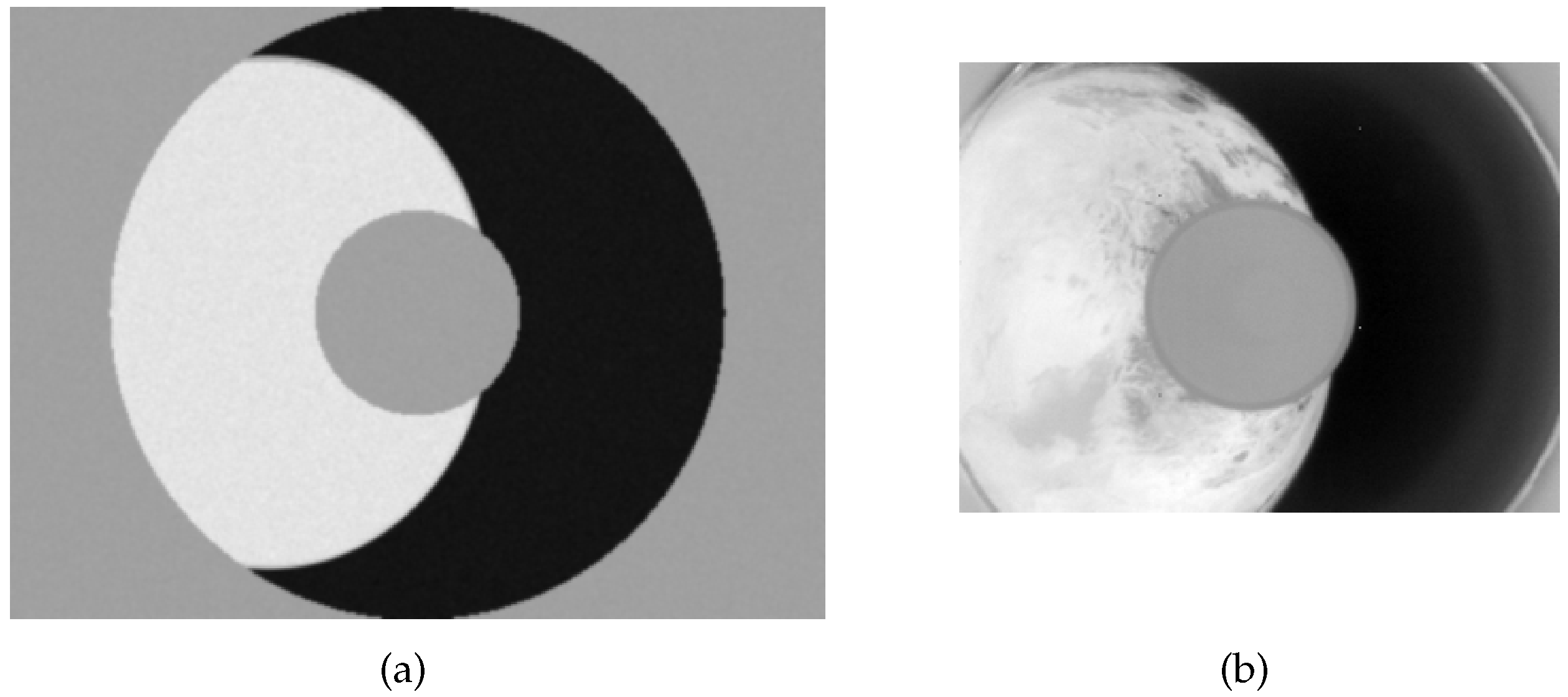

3.2. Simulation System

- Step 1: Randomly set the Earth sensor’s position and attitude.

- Step 2: For each pixel of the image sensor, set the line of sight as the vector from the camera origin to the pixel’s projection on the unit image sphere. Calculate the corresponding tangent height and latitude.

- Step 3: Calculate each pixel’s radiance from its tangent height and latitude.

- Step 4: Calculate the image intensity of each pixel using Equation (24).

- Step 5: Blur the image with a Gaussian function to simulate the effect of defocusing. Then, add Gaussian noise to the image.

- Step 6: Add noisy points to the edge points to simulate the effect of clouds.
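
The tangent-height calculation in Step 2 can be illustrated with a small sketch. It assumes a spherical Earth with a mean radius of 6371 km, which is a simplification made here for brevity; a latitude-dependent model would need an ellipsoidal Earth. The function name `tangent_height` and the constant `EARTH_RADIUS_KM` are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean Earth radius (spherical simplification)


def tangent_height(r, d, radius=EARTH_RADIUS_KM):
    """Tangent height (km) of a line of sight above a spherical Earth.

    r : sensor position in an Earth-centered frame, km
    d : line-of-sight direction (need not be normalized)
    Returns None when the ray points away from the Earth's center, because
    the closest point of the ray is then the sensor itself.
    """
    d_norm = math.sqrt(sum(c * c for c in d))
    d = tuple(c / d_norm for c in d)
    along = sum(a * b for a, b in zip(r, d))   # r . d
    if along >= 0.0:
        return None
    r2 = sum(c * c for c in r)
    # Distance from the Earth's center to the closest point on the ray.
    closest = math.sqrt(max(r2 - along * along, 0.0))
    return closest - radius
```

A negative result means the line of sight intersects the sphere, a value of zero corresponds to the geometric horizon, and positive values correspond to rays passing through the atmosphere above the limb.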

4. Results

4.1. Computational Efficiency

4.2. Accuracy

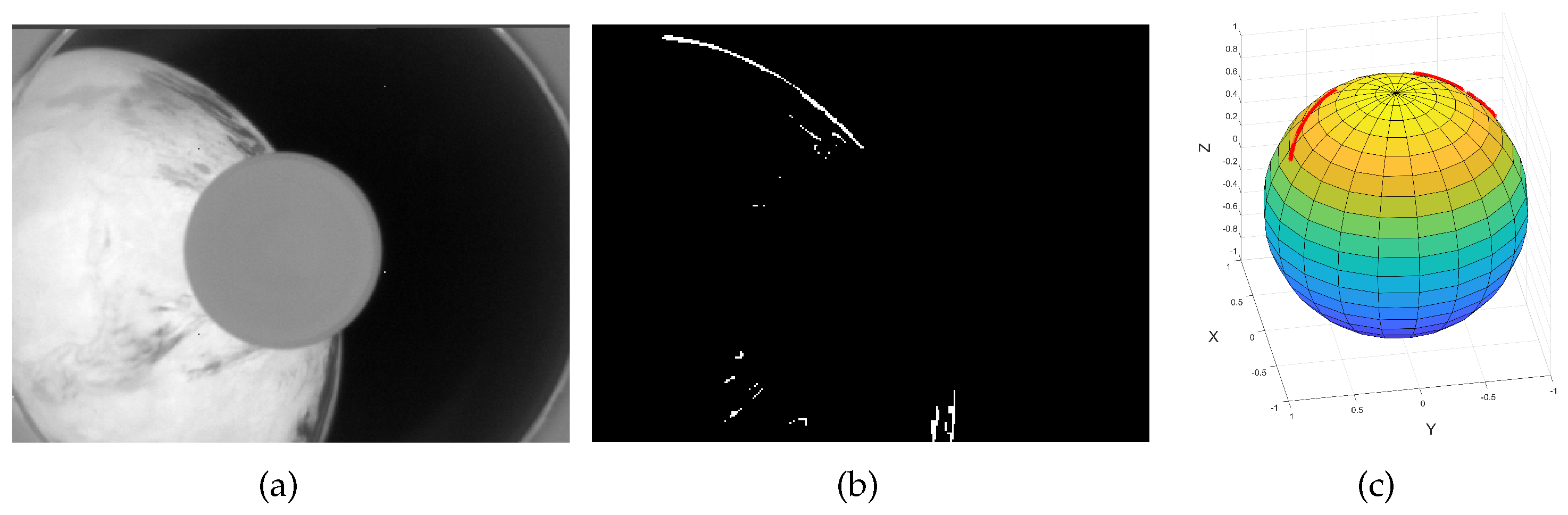

4.3. Performance on Real Earth Images

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Parameter | Value |
|---|---|
| Resolution of CMOS | |
| Size of one pixel | |
| Focal length | |
| FOV | |
| Spectral range | 8–14 μm |
| Dimension | |
| Weight | 40 g |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, B.; Wang, H.; Jin, Z. An Efficient Algorithm for Infrared Earth Sensor with a Large Field of View. Sensors 2022, 22, 9409. https://doi.org/10.3390/s22239409
