Object Detection Based on Roadside LiDAR for Cooperative Driving Automation: A Review

Abstract

1. Introduction

- The characteristics of roadside LiDAR, the challenges in object detection tasks, and recent methods of object detection based on roadside LiDAR are reviewed in depth, including methods based on a single roadside LiDAR and cooperative detection with multiple LiDARs.

- The influence of adverse weather on LiDAR and methods of LiDAR perception in adverse weather are reviewed. Moreover, this paper collects and analyzes the currently published datasets related to roadside LiDAR perception.

- The existing problems, open challenges, and possible research directions of object detection based on roadside LiDAR are discussed in depth to serve as a reference and stimulate future work.

2. Characteristics of Roadside LiDAR and Challenges in Object Detection Task

- (1)

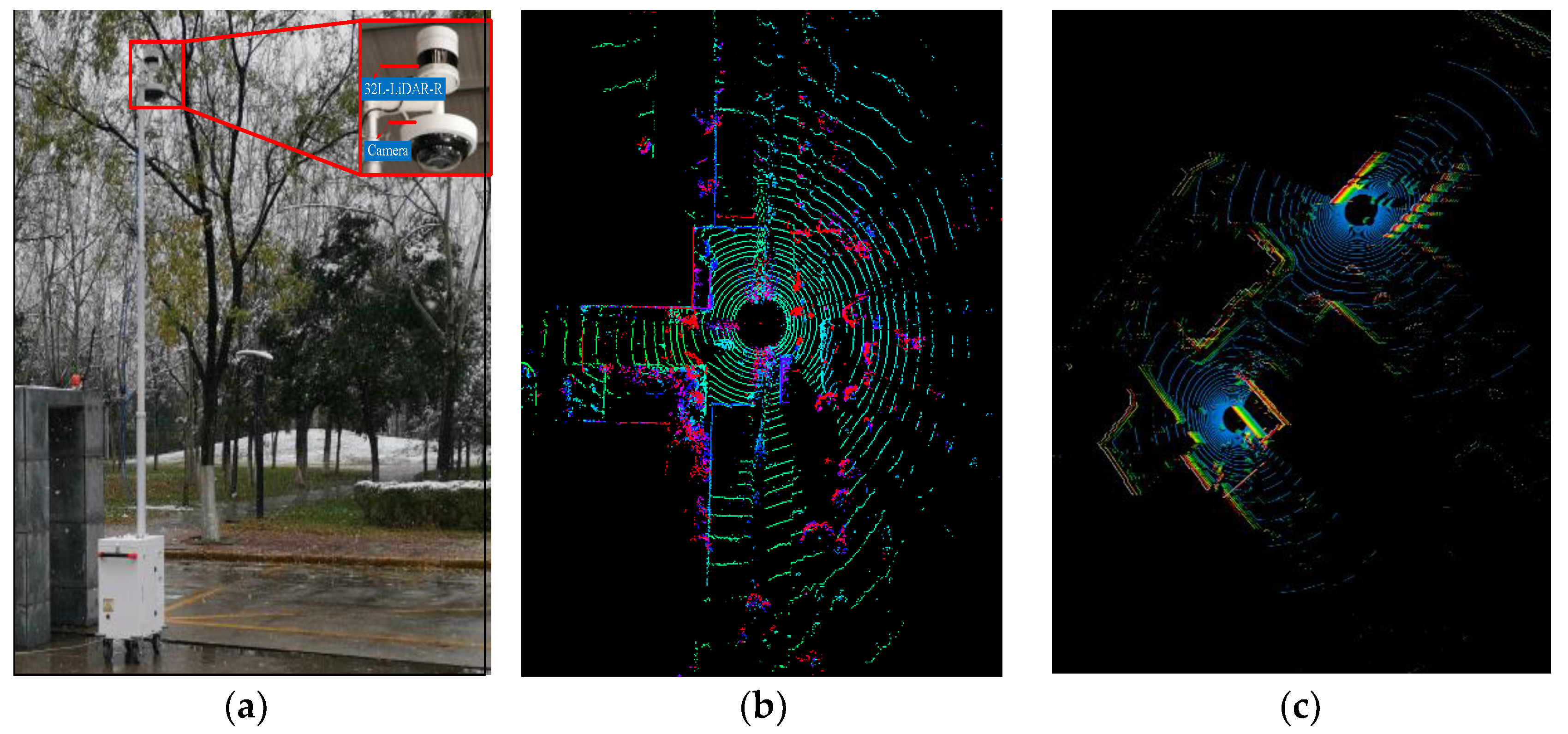

- The best LiDAR sensor type for roadside perception remains an open question; except for a few LiDAR models especially developed for roadside sensing (e.g., 32L-LiDAR-R), most of the roadside collaborative projects directly deploy the onboard LiDAR at the roadside. LiDAR can be divided into mechanical rotary and solid-state LiDAR according to its structure. Mechanical rotary LiDAR changes faster and more accurately from “line” to “surface” by continuously rotating the transmitting head and arranging multiple beams of laser in the vertical direction to form multiple surfaces, to achieve the purpose of dynamic scanning and dynamic information reception. Commercially available rotary LiDARs include 16, 32, 40, 64, 80, and 128-beam LiDARs, which can perform 360-degree rotary scanning to achieve the three-dimensional reconstruction of full-scene traffic objects; the detailed parameters of the typical LiDAR are shown in Table 1. Hybrid solid-state LiDAR uses semiconductor “micro-motion” devices (such as a MEMS scanning mirror) to replace the macro-mechanical scanner and changes the emission angle of a single emitter through a micro-galvanometer, to achieve the effect of scanning without the external rotating structure. Therefore, this type of LiDAR can achieve a higher resolution and achieve image-level point cloud output. For example, the Falcon-K LiDAR has a higher resolution equivalent to 300 beams, a horizontal angle of view of 120 degrees, an angular resolution of 0.08 degrees, and an output of 3 million points per second in the double echo return mode.

- (2) To give the roadside LiDAR a sufficiently large coverage area, it is generally deployed at a height above 5 m. Compared with the point cloud output by onboard LiDAR, the roadside point cloud is therefore more widely distributed and sparser, which increases the difficulty of object detection. In addition, the roadside LiDAR is installed at a fixed location, resulting in background point clouds that are highly similar and lack diversity. Moreover, the lack of large-scale roadside LiDAR point cloud datasets limits the use of deep learning methods, so the robustness and scene generalization ability of existing detection algorithms still face enormous challenges in practical applications.

- (3) Roadside LiDAR is deployed at a fixed roadside location and needs to work for long periods and in all weather, so it will inevitably encounter adverse weather conditions such as rain, snow, and fog. Studies have shown that adverse weather has a significant impact on LiDAR performance: it reduces the reflection intensity of the object point cloud and the number of object points, while increasing noise and reducing the resolution of objects in the point cloud, as shown in [31,32,33,34]. Therefore, the developed algorithms need to maintain high reliability and accuracy in adverse weather.

- (4)

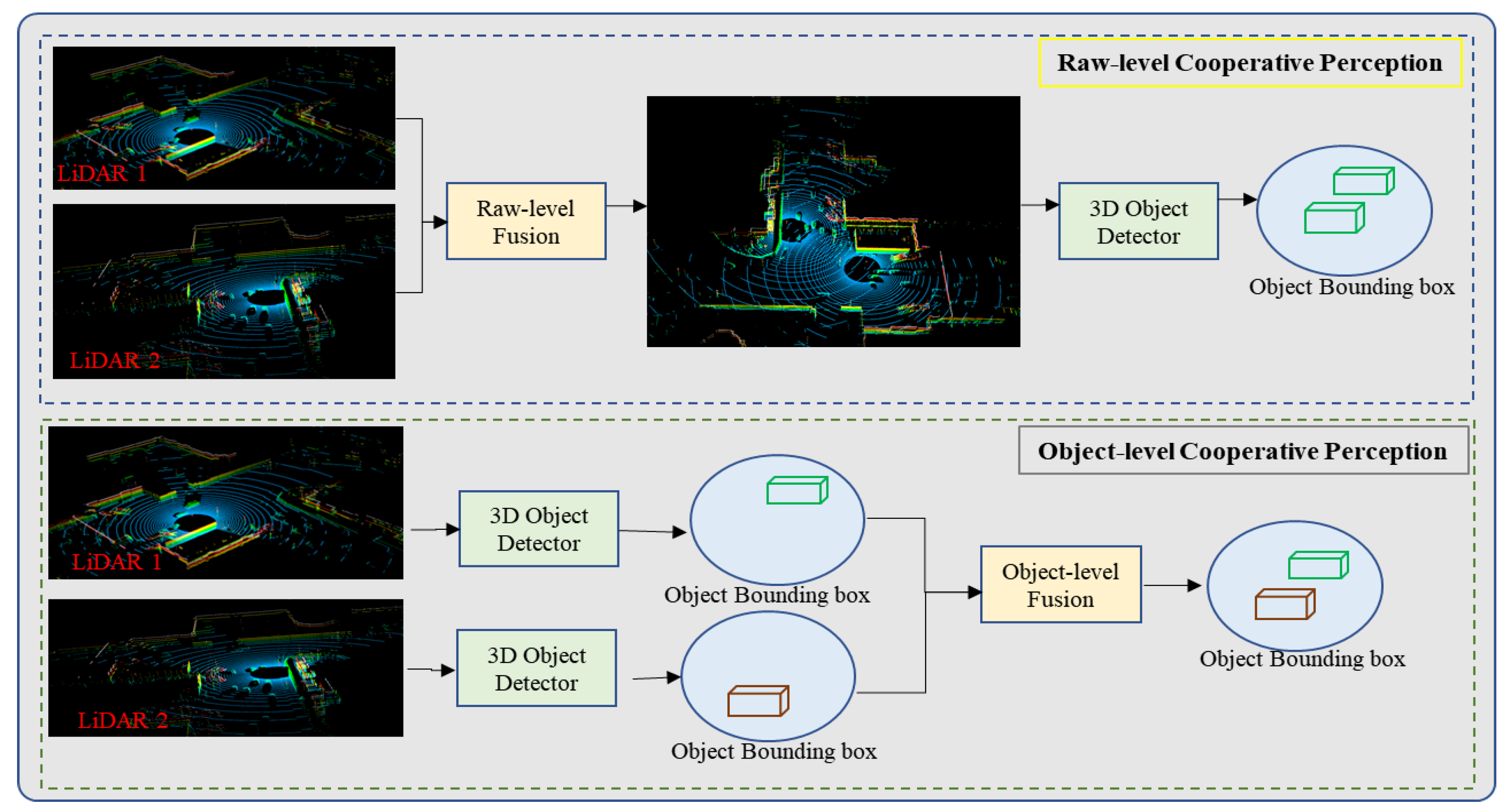

- Most of the roadside LiDAR detection methods proposed at present are based on a single LiDAR, but the field of view of a single LiDAR is limited, and the point cloud data obtained by a single LiDAR have certain defects. The accuracy of perception can be significantly improved by fusing multiple LiDAR point clouds with the diversity of the surrounding space to achieve collaborative perception.
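The multi-LiDAR fusion mentioned in item (4) can be illustrated with a minimal data-level sketch: each point cloud is mapped into a shared frame by a rigid transform and the results are stacked. This is only an illustration under stated assumptions, not a method from the reviewed literature. The helper names (`make_pose`, `to_common_frame`, `fuse_point_clouds`), the 6 m mounting height, the sensor positions, and the assumption that each sensor pose is already known from calibration are all hypothetical.

```python
import numpy as np

def make_pose(yaw_deg, translation):
    """Build a 4x4 homogeneous transform from a yaw angle (degrees) and an (x, y, z) offset."""
    yaw = np.radians(yaw_deg)
    c, s = np.cos(yaw), np.sin(yaw)
    pose = np.eye(4)
    pose[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    pose[:3, 3] = translation
    return pose

def to_common_frame(points, pose):
    """Map an (N, 3) point cloud from a sensor frame into the common frame."""
    return points @ pose[:3, :3].T + pose[:3, 3]

def fuse_point_clouds(clouds, poses):
    """Data-level fusion: transform every cloud into the common frame, then stack them."""
    return np.vstack([to_common_frame(c, p) for c, p in zip(clouds, poses)])

# Two hypothetical LiDARs facing each other across an intersection, both mounted at 6 m.
pose_a = make_pose(0.0, [0.0, 0.0, 6.0])
pose_b = make_pose(180.0, [40.0, 0.0, 6.0])

# The same ground-level object corner, expressed in each sensor's own frame.
cloud_a = np.array([[10.0, 2.0, -6.0]])
cloud_b = np.array([[30.0, -2.0, -6.0]])

fused = fuse_point_clouds([cloud_a, cloud_b], [pose_a, pose_b])
print(fused)  # both rows land on the same common-frame location
```

In practice the poses would come from a registration step, and errors in that step translate directly into misaligned fused points, which is one reason registration quality matters for cooperative perception.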

3. Object Detection Based on Roadside LiDAR

3.1. Object Detection Methods Based on a Single Roadside LiDAR

3.2. Multi-LiDAR Cooperative Detection Method

3.3. Object Detection under Adverse Weather Conditions

3.4. Datasets

4. Discussion and Future Works

- (1) Towards Scene-Adaptive High-Precision Perception: Because the background point clouds output by roadside LiDAR are homogeneous and lack diversity, existing detection methods mainly rely on a traditional cascade of background filtering, clustering segmentation, feature extraction, and classification. Such a cascade is easily affected by any change or error in an upstream stage, and the reliability and accuracy of the algorithm depend strongly on the LiDAR deployment environment. These problems limit the application of traditional perception methods in real scenes. It is therefore necessary to use the powerful learning ability of deep learning to develop an integrated object detection network with scene adaptability, in order to improve the accuracy and reliability of object detection based on roadside LiDAR. On the one hand, we can collect and annotate large amounts of roadside LiDAR data from simulation environments or real scenes to promote data-driven research on roadside LiDAR perception methods. On the other hand, based on the small number of labeled roadside point cloud datasets that already exist, we can study deep learning methods for roadside LiDAR based on few-shot learning [124].

- (2) Adaptation in Different Roadside LiDARs: According to the research reviewed in this paper, the roadside LiDARs used in existing methods and published datasets differ greatly in scanning mode and in the number of scanning lines, which ranges from 16 to 300. The observation angle of the object, the sparsity of the output point cloud, and the regional occlusion all vary with the number of scanning lines and the installation height, which makes it difficult to apply existing perception methods across different roadside LiDARs. Thus far, there is no uniform standard that indicates which type of LiDAR will dominate roadside perception applications in the future. It is therefore very important to promote research on perception algorithms that are common to different roadside LiDARs. Such algorithms can mine the characteristics of LiDAR point cloud data and try to build a domain-invariant data representation, so that a detection model trained on existing LiDAR data can be reused for new LiDARs [125].

- (3) Adaptation in Different Weather Scenarios: Existing perception algorithms based on roadside LiDAR are usually developed for scenes under normal weather and do not perform well in adverse weather conditions such as rain and snow. However, constructing roadside LiDAR point cloud datasets under adverse weather is time-consuming and costly. We can therefore try to establish a high-confidence physical model of LiDAR under adverse weather, based on studies of the impact of adverse weather on LiDAR parameters, and then use existing roadside LiDAR point clouds collected under normal weather to quickly construct large-scale LiDAR point cloud datasets under adverse weather. This will promote research on roadside LiDAR perception algorithms for weather domain adaptation.

- (4) Towards Multiple Roadside LiDAR Cooperation: Our review of roadside LiDAR perception approaches from recent years shows that most studies are based on a single LiDAR sensor, while few use multiple roadside LiDARs to enhance perception. However, the field of view of a single LiDAR is limited, and the point cloud data obtained have some defects. The accuracy of scene perception can be significantly improved by integrating point clouds from multiple LiDARs with different perspectives of the surrounding space to achieve cooperative perception. At present, achieving high-precision data sharing with low communication overhead is a major challenge for multi-LiDAR cooperative perception. Exploring fusion strategies for object information and key point features based on the outputs of multiple LiDARs, and constructing an object detection model for multiple roadside LiDARs based on the fusion of deep key point features, would provide a new approach to enhanced perception of traffic objects under low communication bandwidth.

- (5) Towards Multi-Modal Cooperation: A perception system based on multi-modal sensor information fusion can significantly outperform a single-modal sensor by exploiting the complementarity of different types of modal information (such as LiDAR point clouds and images) together with appropriate fusion technology [126]. However, the performance of multi-modal fusion is limited by the spatiotemporal asynchrony between sensors, domain bias, and noise in the data of different modalities. Future work can explore more effective spatiotemporal registration and data fusion strategies for sensors of different modalities, and thus achieve better perception performance.
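To make the traditional cascade discussed in item (1) concrete, the following is a minimal sketch of its first three stages: background filtering, clustering, and feature extraction. It is an illustrative stand-in rather than any specific published method. The voxel-occupancy background model, the naive region-growing clustering, all function names, and the toy scene with its thresholds are assumptions chosen for brevity; the classification stage is omitted.

```python
from collections import deque

import numpy as np

def voxel_keys(points, voxel_size):
    """Quantize (N, 3) points into integer voxel indices."""
    return np.floor(points / voxel_size).astype(np.int64)

def build_background(frames, voxel_size, min_ratio=0.8):
    """Voxels occupied in at least `min_ratio` of the frames count as static background."""
    counts = {}
    for frame in frames:
        for key in {tuple(k) for k in voxel_keys(frame, voxel_size)}:
            counts[key] = counts.get(key, 0) + 1
    return {k for k, n in counts.items() if n >= min_ratio * len(frames)}

def remove_background(points, background, voxel_size):
    """Keep only points whose voxel is not part of the background model."""
    keys = voxel_keys(points, voxel_size)
    mask = np.array([tuple(k) not in background for k in keys], dtype=bool)
    return points[mask]

def cluster(points, radius, min_points=2):
    """Naive Euclidean clustering by region growing; small clusters become noise (-1)."""
    labels = np.full(len(points), -1)
    next_label = 0
    for seed in range(len(points)):
        if labels[seed] != -1:
            continue
        labels[seed] = next_label
        queue, members = deque([seed]), [seed]
        while queue:
            i = queue.popleft()
            dist = np.linalg.norm(points - points[i], axis=1)
            for j in np.flatnonzero((dist <= radius) & (labels == -1)):
                labels[j] = next_label
                queue.append(j)
                members.append(j)
        if len(members) < min_points:
            labels[members] = -2  # temporary marker so these points are not revisited
        else:
            next_label += 1
    labels[labels == -2] = -1
    return labels

def box_features(points, labels):
    """Per-cluster features for a downstream classifier: point count and box extent."""
    feats = {}
    for label in np.unique(labels[labels >= 0]):
        pts = points[labels == label]
        feats[int(label)] = (len(pts), pts.max(axis=0) - pts.min(axis=0))
    return feats

# Toy scene: a static wall, one vehicle, one pedestrian, and one isolated noise point.
wall = np.array([[20.25, y + 0.25, z + 0.25] for y in range(5) for z in range(3)])
vehicle = np.array([[8.1, 1.1, 0.3], [8.4, 1.2, 0.4], [8.8, 1.3, 0.6], [9.2, 1.1, 0.5]])
pedestrian = np.array([[12.1, -3.1, 0.9], [12.2, -3.0, 1.4]])
noise = np.array([[15.0, 5.0, 3.0]])

background = build_background([wall] * 5, voxel_size=0.5)
frame = np.vstack([wall, vehicle, pedestrian, noise])
foreground = remove_background(frame, background, voxel_size=0.5)
labels = cluster(foreground, radius=0.6)
features = box_features(foreground, labels)
```

Each stage consumes the output of the previous one, so a background voxel that is wrongly kept or dropped changes every cluster and feature downstream. This is the error propagation that item (1) attributes to cascade designs.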

5. Conclusions

- (1) Because of the particular deployment location of roadside LiDAR, most current object detection methods based on roadside LiDAR adopt traditional point cloud processing, which has lower accuracy than existing object detection methods based on onboard LiDAR and adapts poorly to changing scenes and to LiDARs with different numbers of beams.

- (2) Roadside LiDAR is deployed on roadside infrastructure for long periods, so the developed algorithms must consider the impact of adverse weather on the LiDAR. At present, roadside LiDAR point cloud datasets in adverse weather are lacking, and work on roadside LiDAR detection in adverse weather focuses mainly on point cloud denoising.

- (3) Most algorithms are based on the data of a single roadside LiDAR, whereas cooperation among multiple LiDARs can better exploit the advantages of roadside LiDAR, so it is necessary to continue to promote research on the cooperative perception of multiple roadside LiDARs.

- (4) Since last year, some roadside LiDAR and image datasets have been released, but their scene coverage is limited, the data collected in adverse weather are sparse, and the number of LiDAR lines used varies greatly between datasets.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- China SAR. Energy-Saving and New Energy Vehicle Technology Roadmap 2.0; China SAR: Beijing, China, 2020. [Google Scholar]

- Rana, M.; Hossain, K. Connected and autonomous vehicles and infrastructures: A literature review. Int. J. Pavement Res. Technol. 2021. [Google Scholar] [CrossRef]

- Van Brummelen, J.; O’Brien, M.; Gruyer, D.; Najjaran, H. Autonomous vehicle perception: The technology of today and tomorrow. Transp. Res. Part C Emerg. Technol. 2018, 89, 384–406. [Google Scholar] [CrossRef]

- Liu, S.; Yu, B.; Tang, J.; Zhu, Q. Towards fully intelligent transportation through infrastructure-vehicle cooperative autonomous driving: Challenges and opportunities. In Proceedings of the 2021 58th ACM/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 5–9 December 2021; pp. 1323–1326. [Google Scholar]

- Sun, Q.; Chen, X.; Liang, F.; Tang, X.; He, S.; Lu, H. Target Recognition of Millimeter-wave Radar based on YOLOX. J. Phys. Conf. Ser. 2022, 2289, 012012. [Google Scholar] [CrossRef]

- Sheeny, M.; Wallace, A.; Wang, S. 300 GHz radar object recognition based on deep neural networks and transfer learning. IET Radar Sonar Navig. 2020, 14, 1483–1493. [Google Scholar] [CrossRef]

- Chetouane, A.; Mabrouk, S.; Jemili, I.; Mosbah, M. Vision-based vehicle detection for road traffic congestion classification. Concurr. Comput. Pract. Exp. 2022, 34, e5983. [Google Scholar] [CrossRef]

- Zou, Z.; Zhang, R.; Shen, S.; Pandey, G.; Chakravarty, P.; Parchami, A.; Liu, H. Real-time full-stack traffic scene perception for autonomous driving with roadside cameras. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–25 May 2022; pp. 890–896. [Google Scholar]

- Cho, G.; Shinyama, Y.; Nakazato, J.; Maruta, K.; Sakaguchi, K. Object recognition network using continuous roadside cameras. In Proceedings of the 2022 IEEE 95th Vehicular Technology Conference (VTC2022-Spring), Helsinki, Finland, 19–22 June 2022. [Google Scholar]

- Roy, A.; Gale, N.; Hong, L. Automated traffic surveillance using fusion of Doppler radar and video information. Math. Comput. Model. 2011, 54, 531–543. [Google Scholar] [CrossRef]

- Bai, J.; Li, S.; Huang, L.; Chen, H. Robust detection and tracking method for moving object based on radar and camera data fusion. IEEE Sens. J. 2021, 21, 10761–10774. [Google Scholar] [CrossRef]

- Wang, L.; Zhang, Z.; Di, X.; Tian, J. A roadside camera-radar sensing fusion system for intelligent transportation. In Proceedings of the 2020 17th European Radar Conference (EuRAD), Utrecht, The Netherlands, 10–15 January 2021; pp. 282–285. [Google Scholar]

- Liu, P.; Yu, G.; Wang, Z.; Zhou, B.; Chen, P. Object Classification Based on Enhanced Evidence Theory: Radar–Vision Fusion Approach for Roadside Application. IEEE Trans. Instrum. Meas. 2022, 71, 1–12. [Google Scholar] [CrossRef]

- Barad, J. Roadside Lidar Helping to Build Smart and Safe Transportation Infrastructure; SAE Technical Paper; Velodyne Lidar: San Jose, CA, USA, 2021. [Google Scholar]

- Wang, B.; Lan, J.; Gao, J. LiDAR Filtering in 3D Object Detection Based on Improved RANSAC. Remote Sens. 2022, 14, 2110. [Google Scholar] [CrossRef]

- Zhao, X.; Sun, P.; Xu, Z.; Min, H.; Yu, H. Fusion of 3D LIDAR and camera data for object detection in autonomous vehicle applications. IEEE Sens. J. 2020, 20, 4901–4913. [Google Scholar] [CrossRef]

- Lin, X.; Wang, F.; Yang, B.; Zhang, W. Autonomous vehicle localization with prior visual point cloud map constraints in GNSS-challenged environments. Remote Sens. 2021, 13, 506. [Google Scholar] [CrossRef]

- Liu, H.; Ye, Q.; Wang, H.; Chen, L.; Yang, J. A precise and robust segmentation-based lidar localization system for automated urban driving. Remote Sens. 2019, 11, 1348. [Google Scholar] [CrossRef]

- Arnold, E.; Dianati, M.; de Temple, R.; Fallah, S. Cooperative perception for 3D object detection in driving scenarios using infrastructure sensors. IEEE Trans. Intell. Transp. Syst. 2020, 23, 1852–1864. [Google Scholar] [CrossRef]

- Cui, G.; Zhang, W.; Xiao, Y.; Yao, L.; Fang, Z. Cooperative perception technology of autonomous driving in the internet of vehicles environment: A review. Sensors 2022, 22, 5535. [Google Scholar] [CrossRef]

- Li, Y.; Ma, L.; Zhong, Z.; Liu, F.; Chapman, M.A.; Cao, D.; Li, J. Deep learning for lidar point clouds in autonomous driving: A review. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 3412–3432. [Google Scholar] [CrossRef]

- Roriz, R.; Cabral, J.; Gomes, T. Automotive LiDAR technology: A survey. IEEE Trans. Intell. Transp. Syst. 2021, 23, 6282–6297. [Google Scholar] [CrossRef]

- Wu, D.; Liang, Z.; Chen, G. Deep learning for LiDAR-only and LiDAR-fusion 3D perception: A survey. Intell. Robot. 2022, 2, 105–129. [Google Scholar] [CrossRef]

- Bai, Z.; Wu, G.; Qi, X.; Liu, Y.; Oguchi, K.; Barth, M.J. Infrastructure-based object detection and tracking for cooperative driving automation: A survey. In Proceedings of the 2022 IEEE Intelligent Vehicles Symposium (IV), Aachen, Germany, 4–9 June 2022; pp. 1366–1373. [Google Scholar]

- Bula, J.; Derron, M.H.; Mariethoz, G. Dense point cloud acquisition with a low-cost Velodyne VLP-16. Geosci. Instrum. Methods Data Syst. 2020, 9, 385–396. [Google Scholar] [CrossRef]

- Carballo, A.; Lambert, J.; Monrroy, A.; Wong, D.; Narksri, P.; Kitsukawa, Y.; Takeda, K. LIBRE: The multiple 3d lidar dataset. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 1094–1101. [Google Scholar]

- Busch, S.; Koetsier, C.; Axmann, J.; Brenner, C. LUMPI: The Leibniz University Multi-Perspective Intersection Dataset. In Proceedings of the 2022 IEEE Intelligent Vehicles Symposium (IV), Aachen, Germany, 4–9 June 2022; pp. 1127–1134. [Google Scholar]

- Wang, H.; Zhang, X.; Li, Z.; Li, J.; Wang, K.; Lei, Z.; Haibing, R. IPS300+: A Challenging multi-modal data sets, including point clouds and images for Intersection Perception System. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–25 May 2022; pp. 2539–2545. [Google Scholar]

- Robosense Technology Co., Ltd. RS-Ruby 128-Channel Mechanical LiDAR. Available online: https://www.robosense.cn/rslidar/RS-Ruby (accessed on 10 November 2022).

- Yu, H.; Luo, Y.; Shu, M.; Huo, Y.; Yang, Z.; Shi, Y.; Nie, Z. DAIR-V2X: A Large-Scale Dataset for Vehicle-Infrastructure Cooperative 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 21361–21370. [Google Scholar]

- Rasshofer, R.H.; Spies, M.; Spies, H. Influences of weather phenomena on automotive laser radar systems. Adv. Radio Sci. 2011, 9, 49–60. [Google Scholar] [CrossRef]

- Filgueira, A.; González-Jorge, H.; Lagüela, S.; Díaz-Vilariño, L.; Arias, P. Quantifying the influence of rain in LiDAR performance. Measurement 2017, 95, 143–148. [Google Scholar] [CrossRef]

- Li, Y.; Duthon, P.; Colomb, M.; Ibanez-Guzman, J. What happens for a ToF LiDAR in fog? IEEE Trans. Intell. Transp. Syst. 2020, 22, 6670–6681. [Google Scholar] [CrossRef]

- Michaud, S.; Lalonde, J.F.; Giguere, P. Towards characterizing the behavior of LiDARs in snowy conditions. In Proceedings of the International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015. [Google Scholar]

- Sun, Y.; Xu, H.; Wu, J.; Zheng, J.; Dietrich, K.M. 3-D data processing to extract vehicle trajectories from roadside LiDAR data. Transp. Res. Rec. 2018, 2672, 14–22. [Google Scholar] [CrossRef]

- Zhao, J.; Xu, H.; Liu, H.; Wu, J.; Zheng, Y.; Wu, D. Detection and tracking of pedestrians and vehicles using roadside LiDAR sensors. Transp. Res. Part C Emerg. Technol. 2019, 100, 68–87. [Google Scholar] [CrossRef]

- Zheng, J.; Yang, S.; Wang, X.; Xiao, Y.; Li, T. Background Noise Filtering and Clustering With 3D LiDAR Deployed in Roadside of Urban Environments. IEEE Sens. J. 2021, 21, 20629–20639. [Google Scholar] [CrossRef]

- Sahin, O.; Nezafat, R.V.; Cetin, M. Methods for classification of truck trailers using side-fire light detection and ranging (LiDAR) Data. J. Intell. Transp. Syst. 2021, 26, 1–13. [Google Scholar] [CrossRef]

- Wu, J.; Xu, H.; Sun, Y.; Zheng, J.; Yue, R. Automatic Background Filtering Method for Roadside LiDAR Data. Transp. Res. Rec. 2018, 2672, 106–114. [Google Scholar] [CrossRef]

- Zhao, J.; Xu, H.; Xia, X.; Liu, H. Azimuth-Height background filtering method for roadside LiDAR data. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 23–30 October 2019; pp. 2421–2426. [Google Scholar]

- Lee, H.; Coifman, B. Side-fire lidar-based vehicle classification. Transp. Res. Rec. 2012, 2308, 173–183. [Google Scholar] [CrossRef]

- Song, Y.; Zhang, H.; Liu, Y.; Liu, J.; Zhang, H.; Song, X. Background filtering and object detection with a stationary LiDAR using a layer-based method. IEEE Access 2020, 8, 184426–184436. [Google Scholar] [CrossRef]

- Zhang, Z.; Zheng, J.; Xu, H.; Wang, X.; Fan, X.; Chen, R. Automatic background construction and object detection based on roadside LiDAR. IEEE Trans. Intell. Transp. Syst. 2019, 21, 4086–4097. [Google Scholar] [CrossRef]

- Liu, H.; Lin, C.; Gong, B.; Wu, D. Extending the Detection Range for Low-Channel Roadside LiDAR by Static Background Construction. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12. [Google Scholar] [CrossRef]

- Xu, J.; Zhang, R.; Dou, J.; Zhu, Y.; Sun, J.; Pu, S. Rpvnet: A deep and efficient range-point-voxel fusion network for lidar point cloud segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 16024–16033. [Google Scholar]

- Zhang, Z.Y.; Zheng, J.Y.; Wang, X.; Fan, X. Background filtering and vehicle detection with roadside lidar based on point association. In Proceedings of the 2018 37th Chinese Control Conference (CCC), Wuhan, China, 25–27 July 2018; pp. 7938–7943. [Google Scholar]

- Wu, J.; Tian, Y.; Xu, H.; Yue, R.; Wang, A.; Song, X. Automatic ground points filtering of roadside LiDAR data using a channel-based filtering algorithm. Opt. Laser Technol. 2019, 115, 374–383. [Google Scholar] [CrossRef]

- Lv, B.; Xu, H.; Wu, J.; Tian, Y.; Yuan, C. Raster-based background filtering for roadside LiDAR data. IEEE Access 2019, 7, 76779–76788. [Google Scholar] [CrossRef]

- Cui, Y.; Wu, J.; Xu, H.; Wang, A. Lane change identification and prediction with roadside LiDAR data. Opt. Laser Technol. 2020, 123, 105934. [Google Scholar] [CrossRef]

- Wu, J.; Lv, C.; Pi, R.; Ma, Z.; Zhang, H.; Sun, R.; Wang, K. A Variable Dimension-Based Method for Roadside LiDAR Background Filtering. IEEE Sens. J. 2021, 22, 832–841. [Google Scholar] [CrossRef]

- Wang, G.; Wu, J.; Xu, T.; Tian, B. 3D vehicle detection with RSU LiDAR for autonomous mine. IEEE Trans. Veh. Technol. 2021, 70, 344–355. [Google Scholar] [CrossRef]

- Wang, L.; Lan, J. Adaptive Polar-Grid Gaussian-Mixture Model for Foreground Segmentation Using Roadside LiDAR. Remote Sens. 2022, 14, 2522. [Google Scholar] [CrossRef]

- Xia, Y.; Sun, Z.; Tok, A.; Ritchie, S. A dense background representation method for traffic surveillance based on roadside LiDAR. Opt. Lasers Eng. 2022, 152, 106982. [Google Scholar] [CrossRef]

- Zhang, T.; Jin, P.J. Roadside lidar vehicle detection and tracking using range and intensity background subtraction. J. Adv. Transp. 2022, 2022, 2771085. [Google Scholar] [CrossRef]

- Zhang, J.; Xiao, W.; Coifman, B.; Mills, J. Image-based vehicle tracking from roadside LiDAR data. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2019, 2019, 1177–1183. [Google Scholar] [CrossRef]

- Zhang, J.; Xiao, W.; Coifman, B.; Mills, J.P. Vehicle tracking and speed estimation from roadside lidar. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 5597–5608. [Google Scholar] [CrossRef]

- Zhang, J.; Xiao, W.; Mills, J.P. Optimizing Moving Object Trajectories from Roadside Lidar Data by Joint Detection and Tracking. Remote Sens. 2022, 14, 2124. [Google Scholar] [CrossRef]

- Zhang, L.; Zheng, J.; Sun, R.; Tao, Y. Gc-net: Gridding and clustering for traffic object detection with roadside lidar. IEEE Intell. Syst. 2020, 36, 104–113. [Google Scholar] [CrossRef]

- Wu, J. An automatic procedure for vehicle tracking with a roadside LiDAR sensor. ITE J. 2018, 88, 32–37. [Google Scholar]

- Cui, Y.; Xu, H.; Wu, J.; Sun, Y.; Zhao, J. Automatic vehicle tracking with roadside LiDAR data for the connected-vehicles system. IEEE Intell. Syst. 2019, 34, 44–51. [Google Scholar] [CrossRef]

- Chen, J.; Tian, S.; Xu, H.; Yue, R.; Sun, Y.; Cui, Y. Architecture of vehicle trajectories extraction with roadside LiDAR serving connected vehicles. IEEE Access 2019, 7, 100406–100415. [Google Scholar] [CrossRef]

- Zhang, J.; Pi, R.; Ma, X.; Wu, J.; Li, H.; Yang, Z. Object classification with roadside lidar data using a probabilistic neural network. Electronics 2021, 10, 803. [Google Scholar] [CrossRef]

- Zhang, Y.; Bhattarai, N.; Zhao, J.; Liu, H.; Xu, H. An Unsupervised Clustering Method for Processing Roadside LiDAR Data with Improved Computational Efficiency. IEEE Sens. J. 2022, 22, 10684–10691. [Google Scholar] [CrossRef]

- Bogoslavskyi, I.; Stachniss, C. Fast range image-based segmentation of sparse 3D laser scans for online operation. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 163–169. [Google Scholar]

- Yuan, X.; Mao, Y.; Zhao, C. Unsupervised segmentation of urban 3d point cloud based on lidar-image. In Proceedings of the 2019 IEEE International Conference on Robotics and Biomimetics (ROBIO), Dali, China, 6–8 December 2019; pp. 2565–2570. [Google Scholar]

- Hasecke, F.; Hahn, L.; Kummert, A. Flic: Fast lidar image clustering. arXiv 2021, arXiv:2003.00575. [Google Scholar]

- Zhao, Y.; Zhang, X.; Huang, X. A divide-and-merge point cloud clustering algorithm for LiDAR panoptic segmentation. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–25 May 2022; pp. 7029–7035. [Google Scholar]

- Behley, J.; Garbade, M.; Milioto, A.; Quenzel, J.; Behnke, S.; Stachniss, C.; Gall, J. Semantickitti: A dataset for semantic scene understanding of lidar sequences. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9297–9307. [Google Scholar]

- Li, Y.; Le Bihan, C.; Pourtau, T.; Ristorcelli, T. Insclustering: Instantly clustering lidar range measures for autonomous vehicle. In Proceedings of the 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), Rhodes, Greece, 20–23 September 2020; pp. 1–6. [Google Scholar]

- Shin, M.O.; Oh, G.M.; Kim, S.W.; Seo, S.W. Real-time and accurate segmentation of 3-D point clouds based on Gaussian process regression. IEEE Trans. Intell. Transp. Syst. 2017, 18, 3363–3377. [Google Scholar] [CrossRef]

- Beltran, J.; Guindel, C.; Moreno, F.M.; Cruzado, D.; Garcia, F.; De La Escalera, A. BirdNet: A 3D Object Detection Framework from LiDAR Information. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 3517–3523. [Google Scholar] [CrossRef]

- Barrera, A.; Beltrán, J.; Guindel, C.; Iglesias, J.A.; García, F. BirdNet+: Two-Stage 3D Object Detection in LiDAR Through a Sparsity-Invariant Bird’s Eye View. IEEE Access 2021, 9, 160299–160316. [Google Scholar] [CrossRef]

- Yang, B.; Luo, W.; Urtasun, R. Pixor: Real-time 3d object detection from point clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7652–7660. [Google Scholar]

- Ali, W.; Abdelkarim, S.; Zidan, M.; Zahran, M.; El Sallab, A. Yolo3d: End-to-end real-time 3d oriented object bounding box detection from lidar point cloud. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018. [Google Scholar]

- Meyer, G.P.; Laddha, A.; Kee, E.; Vallespi-Gonzalez, C.; Wellington, C.K. Lasernet: An efficient probabilistic 3d object detector for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12677–12686. [Google Scholar]

- Zhou, J.; Tan, X.; Shao, Z.; Ma, L. FVNet: 3D front-view proposal generation for real-time object detection from point clouds. In Proceedings of the 2019 12th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Suzhou, China, 19–21 October 2019; pp. 1–8. [Google Scholar]

- Fan, L.; Xiong, X.; Wang, F.; Wang, N.; Zhang, Z. Rangedet: In defense of range view for lidar-based 3d object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 2918–2927. [Google Scholar]

- Zhou, Y.; Tuzel, O. Voxelnet: End-to-end learning for point cloud based 3d object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4490–4499. [Google Scholar]

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. Pointpillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12697–12705. [Google Scholar]

- Kuang, H.; Wang, B.; An, J.; Zhang, M.; Zhang, Z. Voxel-FPN: Multi-scale voxel feature aggregation for 3D object detection from LIDAR point clouds. Sensors 2020, 20, 704. [Google Scholar] [CrossRef]

- Li, Y.; Yang, S.; Zheng, Y.; Lu, H. Improved point-voxel region convolutional neural network: 3D object detectors for autonomous driving. IEEE Trans. Intell. Transp. Syst. 2021, 23, 9311–9317. [Google Scholar] [CrossRef]

- Wang, L.C.; Goldluecke, B. Sparse-Pointnet: See further in autonomous vehicles. IEEE Robot. Autom. Lett. 2021, 6, 7049–7056. [Google Scholar] [CrossRef]

- Shi, S.; Wang, Z.; Wang, X.; Li, H. Part-a2 net: 3d part-aware and aggregation neural network for object detection from point cloud. arXiv 2019, arXiv:1907.03670. [Google Scholar]

- Yan, Y.; Mao, Y.; Li, B. Second: Sparsely embedded convolutional detection. Sensors 2018, 18, 3337. [Google Scholar] [CrossRef]

- Liu, Z.; Zhao, X.; Huang, T.; Hu, R.; Zhou, Y.; Bai, X. Tanet: Robust 3d object detection from point clouds with triple attention. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 11677–11684. [Google Scholar]

- Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. Pv-rcnn: Point-voxel feature set abstraction for 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10529–10538. [Google Scholar]

- Zhou, S.; Xu, H.; Zhang, G.; Ma, T.; Yang, Y. Leveraging Deep Convolutional Neural Networks Pre-Trained on Autonomous Driving Data for Vehicle Detection from Roadside LiDAR Data. IEEE Trans. Intell. Transp. Syst. 2022, 23, 22367–22377. [Google Scholar] [CrossRef]

- Bai, Z.; Nayak, S.P.; Zhao, X.; Wu, G.; Barth, M.J.; Qi, X.; Oguchi, K. Cyber Mobility Mirror: Deep Learning-based Real-time 3D Object Perception and Reconstruction Using Roadside LiDAR. arXiv 2022, arXiv:2202.13505. [Google Scholar] [CrossRef]

- Zimmer, W.; Grabler, M.; Knoll, A. Real-Time and Robust 3D Object Detection Within Road-Side LiDARs Using Domain Adaptation. arXiv 2022, arXiv:2204.00132. [Google Scholar]

- Bai, Z.; Wu, G.; Barth, M.J.; Liu, Y.; Sisbot, E.A.; Oguchi, K. PillarGrid: Deep Learning-based Cooperative Perception for 3D Object Detection from Onboard-Roadside LiDAR. arXiv 2022, arXiv:2203.06319. [Google Scholar]

- Mo, Y.; Zhang, P.; Chen, Z.; Ran, B. A method of vehicle-infrastructure cooperative perception based vehicle state information fusion using improved kalman filter. Multimed. Tools Appl. 2022, 81, 4603–4620. [Google Scholar] [CrossRef]

- Wang, J.; Guo, X.; Wang, H.; Jiang, P.; Chen, T.; Sun, Z. Pillar-Based Cooperative Perception from Point Clouds for 6G-Enabled Cooperative Autonomous Vehicles. Wirel. Commun. Mob. Comput. 2022, 2022, 3646272. [Google Scholar] [CrossRef]

- Zhang, Z.; Zheng, J.; Tao, Y.; Xiao, Y.; Yu, S.; Asiri, S.; Li, T. Traffic Sign Based Point Cloud Data Registration with Roadside LiDARs in Complex Traffic Environments. Electronics 2022, 11, 1559. [Google Scholar] [CrossRef]

- Wu, J.; Zhang, Y.; Tian, Y.; Yue, R.; Zhang, H. Automatic vehicle tracking with LiDAR-enhanced roadside infrastructure. J. Test. Eval. 2020, 49, 121–133. [Google Scholar] [CrossRef]

- Ghamisi, P.; Rasti, B.; Yokoya, N.; Wang, Q.; Höfle, B.; Bruzzone, L.; Benediktsson, J.A. Multisource and multitemporal data fusion in remote sensing: A comprehensive review of the state of the art. IEEE Geosci. Remote Sens. Mag. 2019, 7, 6–39.

- Chen, Q.; Tang, S.; Yang, Q.; Fu, S. Cooper: Cooperative perception for connected autonomous vehicles based on 3D point clouds. In Proceedings of the 2019 IEEE 39th International Conference on Distributed Computing Systems (ICDCS), Dallas, TX, USA, 7–10 July 2019; pp. 514–524.

- Hurl, B.; Cohen, R.; Czarnecki, K.; Waslander, S. TruPercept: Trust modelling for autonomous vehicle cooperative perception from synthetic data. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 341–347.

- Chen, Q.; Ma, X.; Tang, S.; Guo, J.; Yang, Q.; Fu, S. F-Cooper: Feature based cooperative perception for autonomous vehicle edge computing system using 3D point clouds. In Proceedings of the 4th ACM/IEEE Symposium on Edge Computing, Arlington, VA, USA, 7–9 November 2019; pp. 88–100.

- Marvasti, E.E.; Raftari, A.; Marvasti, A.E.; Fallah, Y.P.; Guo, R.; Lu, H. Cooperative lidar object detection via feature sharing in deep networks. In Proceedings of the 2020 IEEE 92nd Vehicular Technology Conference (VTC2020-Fall), Victoria, BC, Canada, 18 November–16 December 2020; pp. 1–7.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

- Manivasagam, S.; Wang, S.; Wong, K.; Zeng, W.; Sazanovich, M.; Tan, S.; Urtasun, R. LiDARsim: Realistic lidar simulation by leveraging the real world. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11167–11176.

- Dosovitskiy, A.; Ros, G.; Codevilla, F.; Lopez, A.; Koltun, V. CARLA: An open urban driving simulator. In Proceedings of the 1st Conference on Robot Learning, Mountain View, CA, USA, 13–15 November 2017; pp. 1–16.

- Lopez, P.A.; Behrisch, M.; Bieker-Walz, L.; Erdmann, J.; Flötteröd, Y.P.; Hilbrich, R.; Wießner, E. Microscopic traffic simulation using SUMO. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 2575–2582.

- Wang, T.H.; Manivasagam, S.; Liang, M.; Yang, B.; Zeng, W.; Urtasun, R. V2VNet: Vehicle-to-vehicle communication for joint perception and prediction. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 605–621.

- Kutila, M.; Pyykönen, P.; Holzhüter, H.; Colomb, M.; Duthon, P. Automotive LiDAR performance verification in fog and rain. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 1695–1701.

- Park, J.I.; Park, J.; Kim, K.S. Fast and accurate desnowing algorithm for LiDAR point clouds. IEEE Access 2020, 8, 160202–160212.

- Heinzler, R.; Piewak, F.; Schindler, P.; Stork, W. CNN-based lidar point cloud de-noising in adverse weather. IEEE Robot. Autom. Lett. 2020, 5, 2514–2521.

- Roriz, R.; Campos, A.; Pinto, S.; Gomes, T. DIOR: A Hardware-assisted Weather Denoising Solution for LiDAR Point Clouds. IEEE Sens. J. 2021, 22, 1621–1628.

- Lu, Q.; Lan, X.; Xu, J.; Song, L.; Lv, B.; Wu, J. A combined denoising algorithm for roadside LiDAR point clouds under snowy condition. In Proceedings of the International Conference on Intelligent Traffic Systems and Smart City (ITSSC 2021), Zhengzhou, China, 19–21 November 2021; pp. 351–356.

- Wu, J.; Xu, H.; Zheng, J.; Zhao, J. Automatic vehicle detection with roadside LiDAR data under rainy and snowy conditions. IEEE Intell. Transp. Syst. Mag. 2020, 13, 197–209.

- Wu, J.; Xu, H.; Tian, Y.; Pi, R.; Yue, R. Vehicle detection under adverse weather from roadside LiDAR data. Sensors 2020, 20, 3433.

- Yang, T.; Li, Y.; Ruichek, Y.; Yan, Z. Performance Modeling a Near-Infrared ToF LiDAR Under Fog: A Data-Driven Approach. IEEE Trans. Intell. Transp. Syst. 2021, 23, 11227–11236.

- Kilic, V.; Hegde, D.; Sindagi, V.; Cooper, A.B.; Foster, M.A.; Patel, V.M. Lidar light scattering augmentation (LISA): Physics-based simulation of adverse weather conditions for 3D object detection. arXiv 2021, arXiv:2107.07004.

- Hahner, M.; Sakaridis, C.; Dai, D.; Van Gool, L. Fog simulation on real LiDAR point clouds for 3D object detection in adverse weather. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 15283–15292.

- Hahner, M.; Sakaridis, C.; Bijelic, M.; Heide, F.; Yu, F.; Dai, D.; Van Gool, L. Lidar snowfall simulation for robust 3D object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 16364–16374.

- Patil, A.; Malla, S.; Gang, H.; Chen, Y.T. The H3D dataset for full-surround 3D multi-object detection and tracking in crowded urban scenes. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 9552–9557.

- Huang, X.; Wang, P.; Cheng, X.; Zhou, D.; Geng, Q.; Yang, R. The ApolloScape open dataset for autonomous driving and its application. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 2702–2719.

- Sun, P.; Kretzschmar, H.; Dotiwalla, X.; Chouard, A.; Patnaik, V.; Tsui, P.; Anguelov, D. Scalability in perception for autonomous driving: Waymo open dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2446–2454.

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Beijbom, O. nuScenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11621–11631.

- Xiao, P.; Shao, Z.; Hao, S.; Zhang, Z.; Chai, X.; Jiao, J.; Yang, D. PandaSet: Advanced sensor suite dataset for autonomous driving. In Proceedings of the 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 3095–3101.

- Fong, W.K.; Mohan, R.; Hurtado, J.V.; Zhou, L.; Caesar, H.; Beijbom, O.; Valada, A. Panoptic nuScenes: A large-scale benchmark for lidar panoptic segmentation and tracking. IEEE Robot. Autom. Lett. 2022, 7, 3795–3802.

- Yongqiang, D.; Dengjiang, W.; Gang, C.; Bing, M.; Xijia, G.; Yajun, W.; Juanjuan, L. BAAI-VANJEE Roadside Dataset: Towards the Connected Automated Vehicle Highway technologies in Challenging Environments of China. arXiv 2021, arXiv:2105.14370.

- Creß, C.; Zimmer, W.; Strand, L.; Fortkord, M.; Dai, S.; Lakshminarasimhan, V.; Knoll, A. A9-Dataset: Multi-sensor infrastructure-based dataset for mobility research. In Proceedings of the 2022 IEEE Intelligent Vehicles Symposium (IV), Aachen, Germany, 4–9 June 2022; pp. 965–970.

- Wang, Y.; Yao, Q.; Kwok, J.T.; Ni, L.M. Generalizing from a few examples: A survey on few-shot learning. ACM Comput. Surv. 2020, 53, 1–34.

- Triess, L.T.; Dreissig, M.; Rist, C.B.; Zöllner, J.M. A survey on deep domain adaptation for lidar perception. In Proceedings of the 2021 IEEE Intelligent Vehicles Symposium Workshops (IV Workshops), Nagoya, Japan, 11–15 July 2021; pp. 350–357.

- Wang, S.; Pi, R.; Li, J.; Guo, X.; Lu, Y.; Li, T.; Tian, Y. Object Tracking Based on the Fusion of Roadside LiDAR and Camera Data. IEEE Trans. Instrum. Meas. 2022, 71, 1–14.

| LiDAR | LiDAR Beams | FOV (Vertical) | FOV (Horizontal) | Resolution (Vertical) | Resolution (Horizontal) | Range | Range Accuracy | Points Per Second |
|---|---|---|---|---|---|---|---|---|
| VLP-16 [25] | 16 | 30° (−15°~+15°) | 360° | 2° | 0.1~0.4° | 100 m | ±3 cm | 300,000 |
| VLP-32C [26] | 32 | 40° (−25°~+15°) | 360° | ≥0.03° | 0.1~0.4° | 200 m | ±3 cm | Single echo mode: 600,000 |
| Pandar40M [26] | 40 | 40° (−25°~+15°) | 360° | 0.33~6° | 0.2° | 120 m | ±2 cm | Single echo mode: 720,000 |
| Pandar64 [27] | 64 | 40° (−25°~+15°) | 360° | 0.167~6° | 0.2° | 200 m | ±2/5 cm | Single echo mode: 1,152,000 |
| HDL-64E [27] | 64 | 26.8° (−24.8°~+2°) | 360° | 0.33~6° | 0.08~0.35° | 120 m | ±2 cm | Single echo mode: 1,300,000 |
| RS-Ruby Lite [28] | 80 | 40° (−25°~+15°) | 360° | Up to 0.1° | 0.1~0.4° | 230 m | ±3 cm | Single echo mode: 1,440,000 |
| RS-Ruby [29] | 128 | 40° (−25°~+15°) | 360° | Up to 0.1° | 0.2~0.4° | 200 m | ±3 cm | Single echo mode: 2,304,000 |
| Falcon-K [30] | Eq. to 300 | 25° | 120° | 0.16° | 0.24° | 250 m | ±2 cm | Double echo mode: 3,000,000 |
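
To a first approximation, the single-echo point rates of the rotary LiDARs in the table follow from beam count, horizontal angular step, and rotation rate. The sketch below is only illustrative: the 10 Hz frame rate and the 0.2° horizontal step are assumptions (vendors specify ranges rather than one value), and `points_per_second` is a hypothetical helper, not a vendor formula. Under these assumptions it reproduces the listed values for Pandar40M, Pandar64, RS-Ruby Lite, and RS-Ruby.

```python
def points_per_second(beams: int,
                      horizontal_resolution_deg: float,
                      frame_rate_hz: float = 10.0) -> int:
    """Approximate single-echo point rate of a 360-degree rotary LiDAR.

    Assumes every beam fires once per horizontal step and the sensor
    completes `frame_rate_hz` full revolutions per second (assumed 10 Hz).
    """
    firings_per_revolution = round(360.0 / horizontal_resolution_deg)
    return int(beams * firings_per_revolution * frame_rate_hz)


# Beam counts are taken from the table; the 0.2 degree step is an assumption.
for name, beams in [("Pandar40M", 40), ("Pandar64", 64),
                    ("RS-Ruby Lite", 80), ("RS-Ruby", 128)]:
    print(f"{name}: {points_per_second(beams, 0.2):,} points/s")
```

Sensors whose listed rate deviates from this estimate (for example, VLP-32C at about 576,000 under the same assumptions versus the rounded 600,000 in the table) simply use a different firing pattern or a rounded datasheet figure.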

| References | Data Description | Initial Frames | Criteria of Background/Foreground | Merit | Limitation |
|---|---|---|---|---|---|
| Zhao et al. [40] | Azimuth–height table | Manually select the frame without foreground points | Height information of the background frame | High real-time performance. | Manually select the initial frame and poor environmental adaptability. |
| Lee et al. [41] | Range image | Multi-frame point cloud | The median value and the average height of the sampling points in the accumulated frame. | High real-time performance, low complexity. | Ineffective background modeling for scenes with more dynamic targets in consecutive frames. |
| Zhang et al. [43] | Spherical range image | 954 point cloud frames with few vehicle points | The maximum distance and mean distance of the sampling points in the accumulated frames. | High real-time performance, low complexity. | Not applicable to congested traffic scenarios. |
| Liu et al. [44] | Spherical range image | 315 point cloud frames | The maximum range value of consecutive frames after filtering the object points. | Background modeling uses fewer point cloud frames and can adapt to different levels of traffic scenarios. | Decrease in background modeling accuracy when LiDAR swings due to wind. |
| Wu et al. [46,47,48,49] | Voxel/3D cube | 2500 point cloud frames | The density of each cube is learned from accumulated frames. | High background modeling accuracy in areas with high point density at close range. | The size of the cube largely influences accuracy and computational cost. |
| Wu et al. [50] | Dynamic matrix | Randomly select a frame | The number of neighbors, and the distance between the points in the current frame and the aggregated frames. | Effectively filter the background points under different scenarios. | The value parameter mainly depends on experience and lacks portability. |
| Wang et al. [51] | Voxel/cubes | Multiple point cloud frames from different periods | Established a Gaussian background model with average height and number of points as parameters. | The robustness of the algorithm is good, and it still achieves good performance in the case of LiDAR shaking. | Different voxel sizes need to be set for different scenes, and it is difficult to select the appropriate size. |
| Wang et al. [52] | An adaptive grid | One frame of the LiDAR data | Established a Gaussian mixture background model with the maximum distance as the hyper-parameter. | No manual selection of background frames and high background extraction accuracy for sparse point clouds. | The algorithm has high time complexity. |
| Zhang et al. [54] | Elevation azimuth matrix | Successive frames | Established a background model by regressing the intensity features of continuous frames based on the DMD algorithm. | The algorithm process is applied directly on the scattered and discrete point clouds and has strong robustness. | Reflection intensity is attenuated in adverse weather, resulting in reduced algorithm performance. |
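
Several range-image methods in the table use the maximum range observed per cell over accumulated frames as the background criterion. The sketch below illustrates that general idea only; it is not the implementation of any cited work. The function names, the toy 2 × 3 range image, and the 0.3 m `margin` are assumptions chosen for illustration.

```python
def build_background(range_frames):
    """Per-cell maximum range over the accumulated frames.

    range_frames: list of frames, each a 2D list [row][col] of ranges in
    metres, with 0.0 marking cells that returned no point.
    """
    rows, cols = len(range_frames[0]), len(range_frames[0][0])
    return [[max(frame[r][c] for frame in range_frames) for c in range(cols)]
            for r in range(rows)]


def foreground_mask(frame, background, margin=0.3):
    """True where a valid return is closer than the background by > margin."""
    return [[0.0 < rng < bg - margin for rng, bg in zip(frame_row, bg_row)]
            for frame_row, bg_row in zip(frame, background)]


# Toy data: two beams (rows) and three azimuth cells (columns).
empty_scene = [[20.0, 20.1, 19.9], [35.0, 35.2, 34.8]]
scene_with_vehicle = [[20.0, 8.0, 19.9], [35.0, 35.2, 34.8]]

background = build_background([empty_scene, scene_with_vehicle, empty_scene])
mask = foreground_mask(scene_with_vehicle, background)
print(mask)
```

Because the background is the farthest return seen in each cell, a vehicle that passes through a cell in some frames does not corrupt the model, as long as the cell is unobstructed in at least one accumulated frame. This is also why such methods degrade in congested traffic, as the Limitation column notes.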

| References | Object Clustering | Selected Features | Classifier | Applicability |
|---|---|---|---|---|
| Zhang et al. [55,56] | Euclidean cluster | | SVM classifier with RBF kernel | Vehicle detection |
| Zhang et al. [54] | Distance-based | | - | Vehicle detection |
| Wu et al. [59,60] | DBSCAN | | Naïve Bayes, K-nearest neighbor classification, decision tree, and SVM | Vehicle classification |
| Zhang et al. [43] | DBSCAN | | - | Vehicle and pedestrian detection |
| Chen et al. [61] | DBSCAN | | SVM classifier | Vehicle detection |
| Zhang et al. [62] | DBSCAN | | Probabilistic neural network (PNN) | Pedestrian, bicycle, passenger car, and truck classification |
| Zhao et al. [30,36] | Improved DBSCAN | | Backpropagation artificial neural network (BP-ANN) | Pedestrian and vehicle classification |
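
Most of the methods in the table group foreground points with DBSCAN before classification. As a rough illustration, the following is a minimal, brute-force sketch of standard DBSCAN on 2D points; it is not the "improved" variant or the code of any cited work. The `eps` and `min_pts` values and the sample coordinates are assumptions for demonstration.

```python
import math


def dbscan(points, eps, min_pts):
    """Return one label per point: 0, 1, ... for clusters, -1 for noise."""
    UNVISITED, NOISE = None, -1
    labels = [UNVISITED] * len(points)

    def neighbors(i):
        # Brute-force O(n) range query; the point itself is included.
        return [j for j, q in enumerate(points)
                if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not UNVISITED:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE          # may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster    # border point: joins, does not expand
            if labels[j] is not UNVISITED:
                continue
            labels[j] = cluster
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_pts:
                queue.extend(j_neighbors)  # core point: keep expanding
    return labels


# Two compact groups of foreground points and one isolated return.
points = [(0.0, 0.0), (0.3, 0.0), (0.0, 0.3), (0.3, 0.3),
          (5.0, 5.0),
          (10.0, 10.0), (10.3, 10.0), (10.0, 10.3), (10.3, 10.3)]
labels = dbscan(points, eps=0.5, min_pts=3)
print(labels)
```

A fixed `eps` is a known weakness for roadside LiDAR, because point density falls with distance from the sensor; this is one motivation for distance-adaptive variants, which this sketch does not attempt to reproduce.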

| References | Year | Architecture | Dataset | Strategy |

|---|---|---|---|---|

| Wang et al. [51] | 2021 | Background filtering module + 3D CNN detectors | Training: collected using roadside LiDAR (800 frames). Testing: collected using roadside LiDAR (100 frames). |

|

| Zhang et al. [57] | 2022 | Background filtering, clustering, tracker module + PointVoxel-RCNN detector | Training: collected using a RS-LiDAR-32 roadside LiDAR (700 frames). Testing: collected using a RS-LiDAR-32 roadside LiDAR (63 frames). |

|

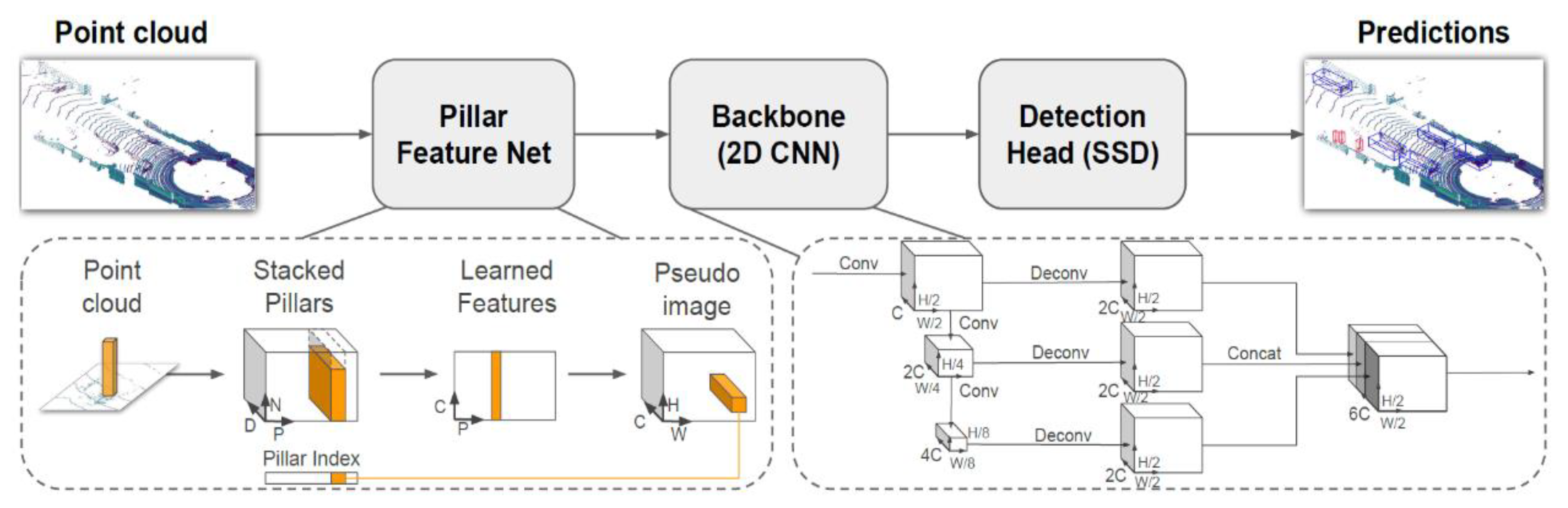

| Zhou et al. [87] | 2022 | Modified PointPillars | Training: a large-scale autonomous driving LiDAR dataset, PandaSet, captured through a Panda 64 LiDAR (11200 frames). Testing: Roadside LiDAR data collected using a Velodyne VLP-32C. (1000 frames). |

|

| Bai et al. [88] | 2022 | RPEaD + PointPillars + FPN | Training: large-scale autonomous driving LiDAR dataset, Nuscenes. Testing: 130 frames manually labelled based on the drone’s view. |

|

| Zimmer et al. [89] | 2022 | DASE-ProPillars, an improved version of the PointPillars model | Training: a semi-synthetic dataset with 6000 frames generated by a OSI-64 simulated LiDAR sensor. A9 dataset, Regensburg. Testing: A9 dataset, Regensburg Next Project. |

|

| Dataset | Year | LiDAR | Cameras | Annotated LiDAR Frames | 3D Boxes | 2D Boxes | Classes | Traffic Scenario | Weather and Times | Sensor Height |
|---|---|---|---|---|---|---|---|---|---|---|
| BAAI-VANJEE [122] | 2021 | 1 32L-LiDAR-R 32-beam LiDAR | 2 RGB cameras | 2500 frames | 74 k | 105 k | 12 | Urban | Sunny/cloudy/rainy, day/night | 4.5 m |
| IPS300+ [28] | 2022 | 1 Robosense Ruby-Lite 80-beam LiDAR | 2 color cameras | 14,198 frames | 4.54 M | - | 7 | Urban | Day/night | 5.5 m |
| DAIR-V2X-I [30] | 2022 | 1 300-beam LiDAR | 1 RGB camera | 10,084 frames | 493 k | - | 10 | Urban highway | Sunny/rainy/foggy, day/night | - |
| A9-Dataset [123] | 2022 | 1 Ouster-OS1 64-beam LiDAR | 1 RGB camera | 1098 frames | 14 k | - | 7 | Autobahn highway | Daylight | 7 m |
| LUMPI [27] | 2022 | 1 VLP-16, 1 HDL-64, 1 Pandar64, 1 PandarQT | 1 PiCam, 1 ATOM, 1 YiCam | 145 min | - | - | 6 | Urban | Sunny/cloudy/hazy | - |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sun, P.; Sun, C.; Wang, R.; Zhao, X. Object Detection Based on Roadside LiDAR for Cooperative Driving Automation: A Review. Sensors 2022, 22, 9316. https://doi.org/10.3390/s22239316
