A Convolutional Neural Networks-Based Approach for Texture Directionality Detection

Abstract

1. Introduction

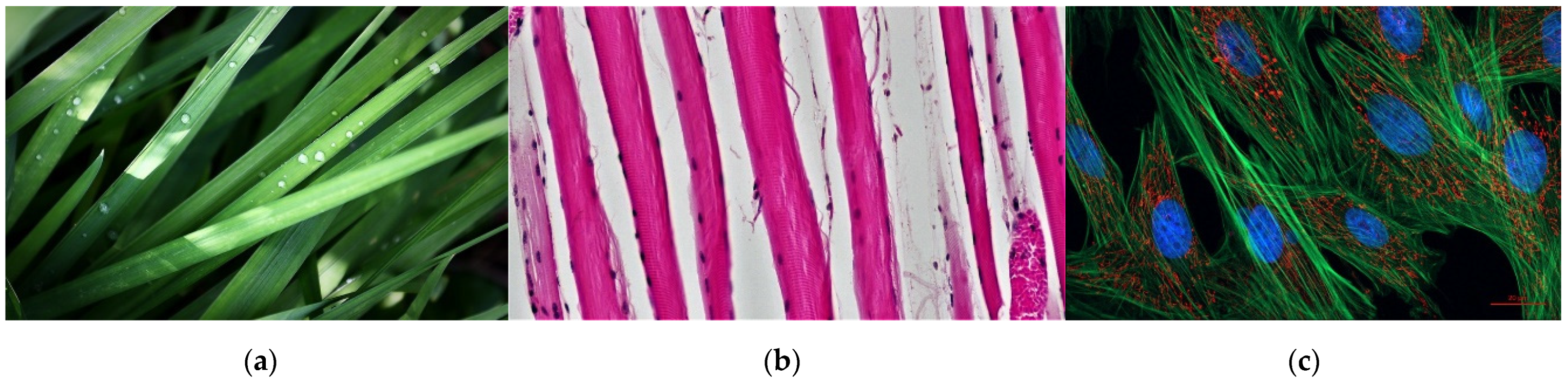

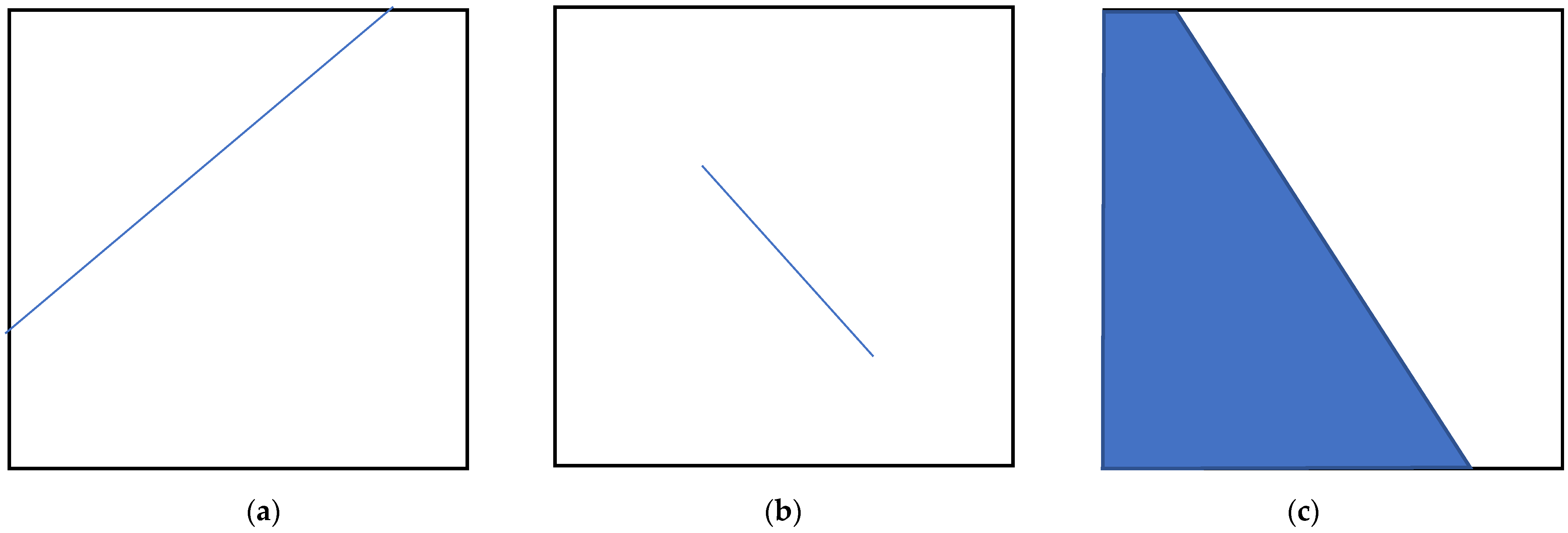

1.1. Texture Directionality Definition

1.2. Related Work

1.3. Technical Approach

2. Materials and Methods

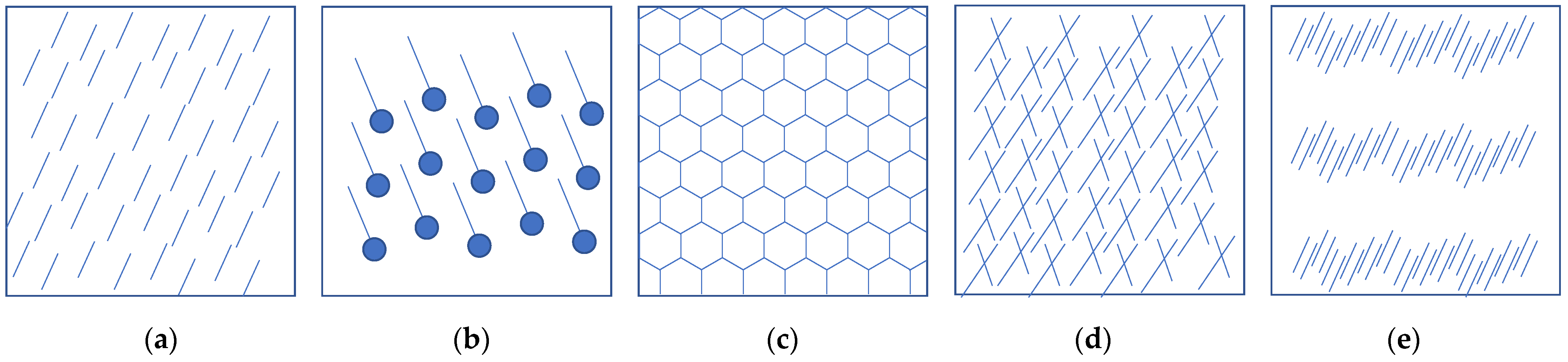

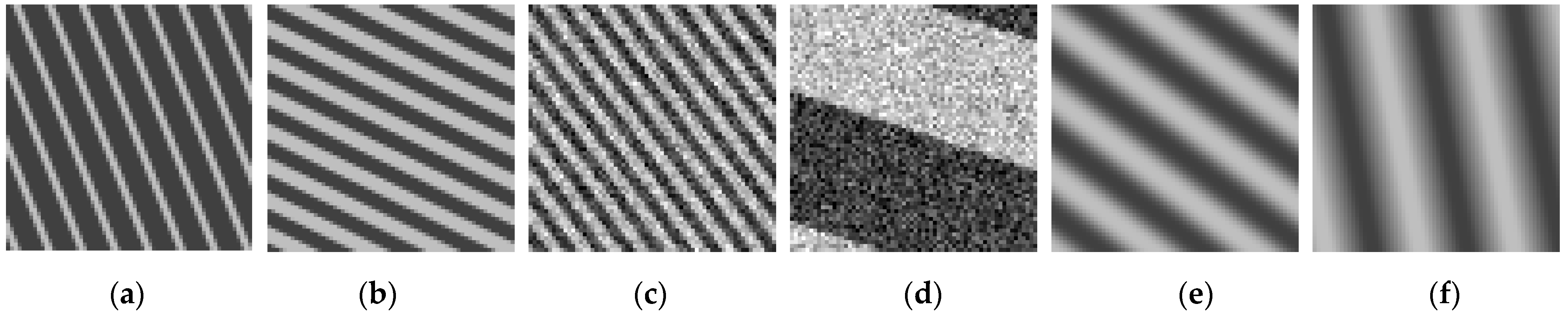

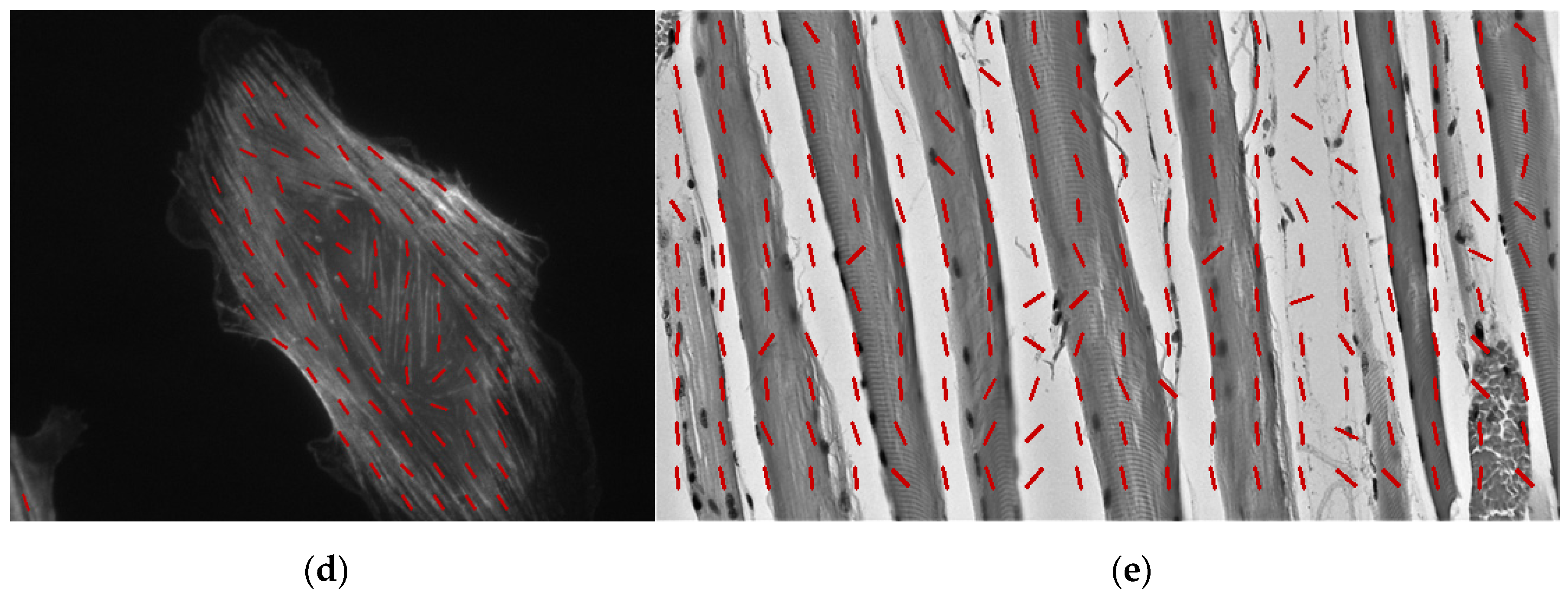

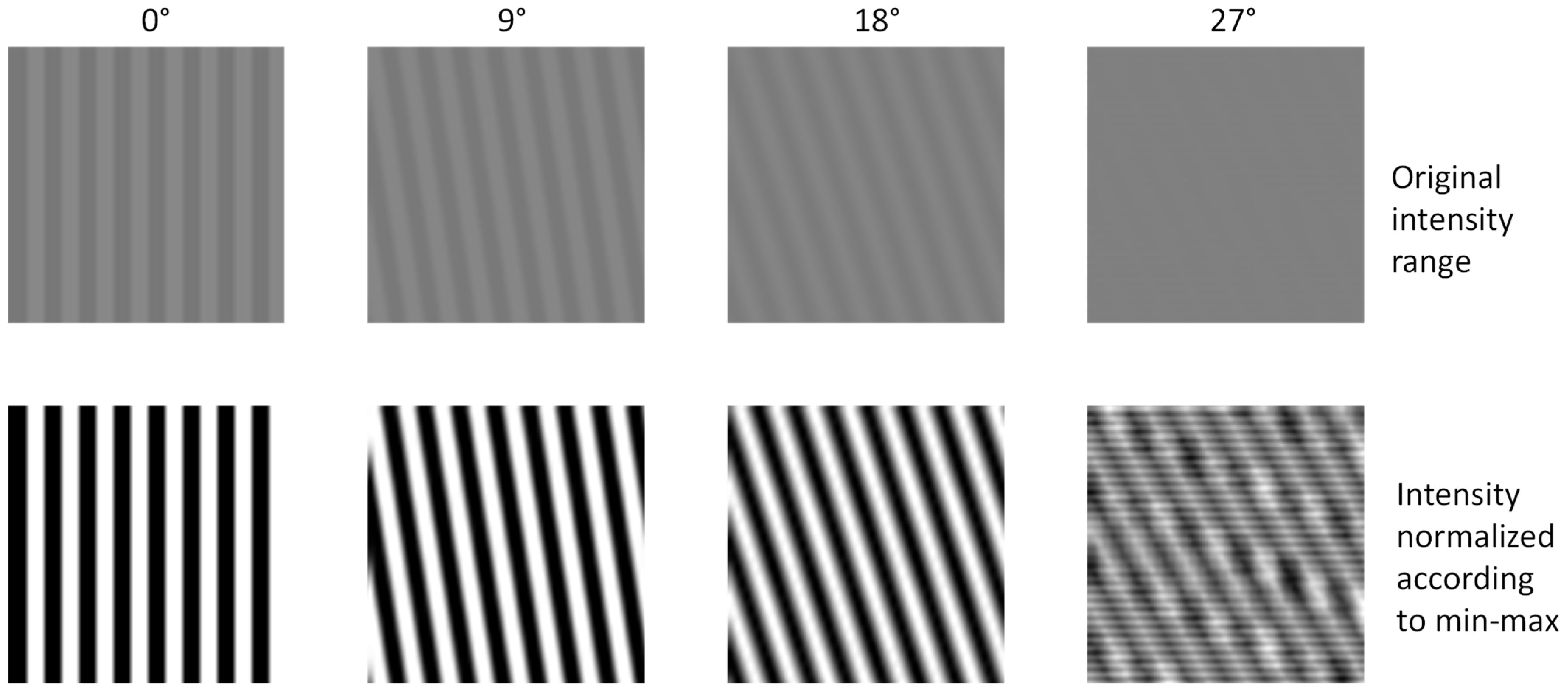

2.1. Synthetic and Real Texture Images for CNN

2.2. CNN-Based Directionality Detection

2.3. Training and Testing Procedures

3. Results

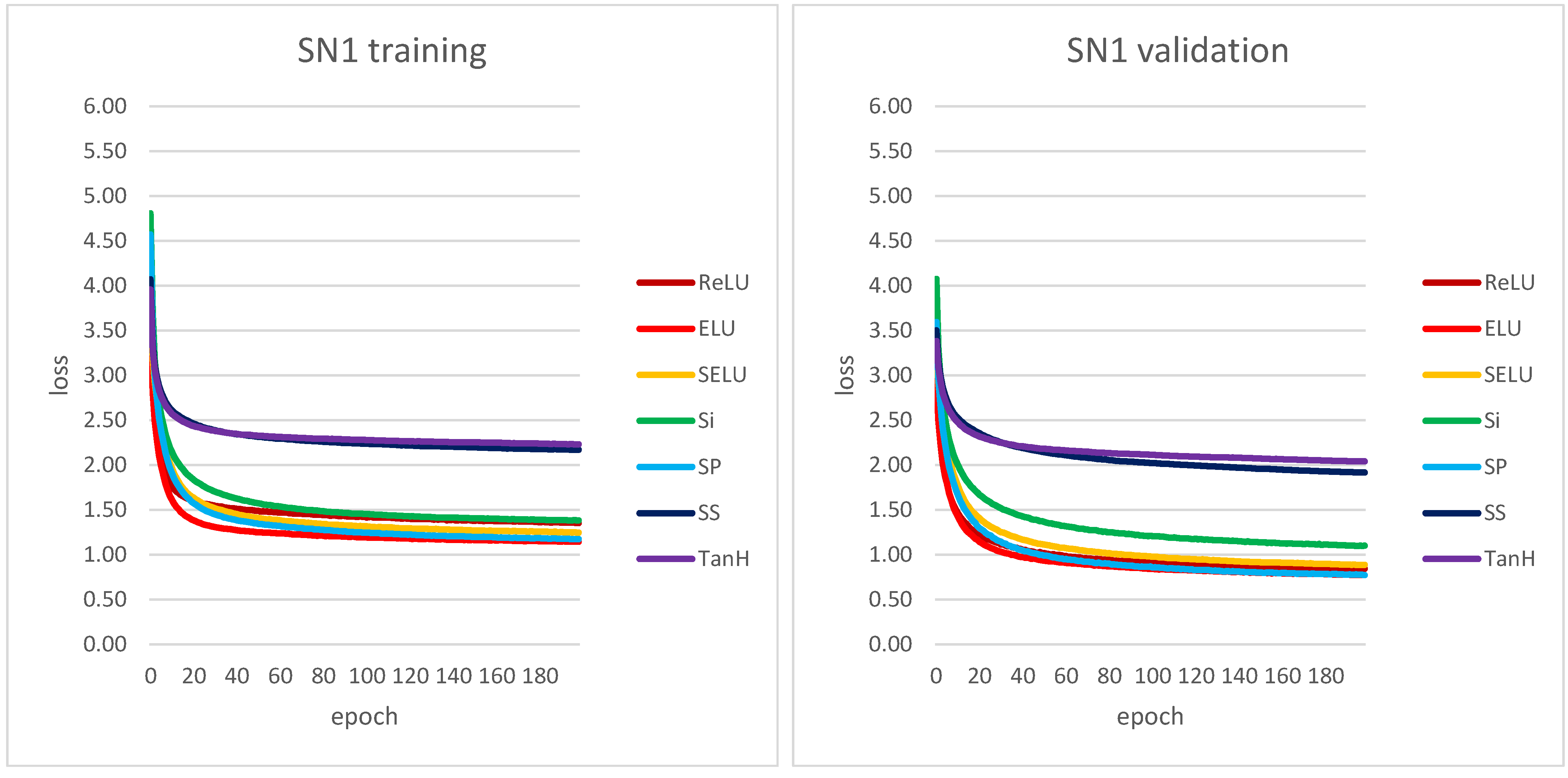

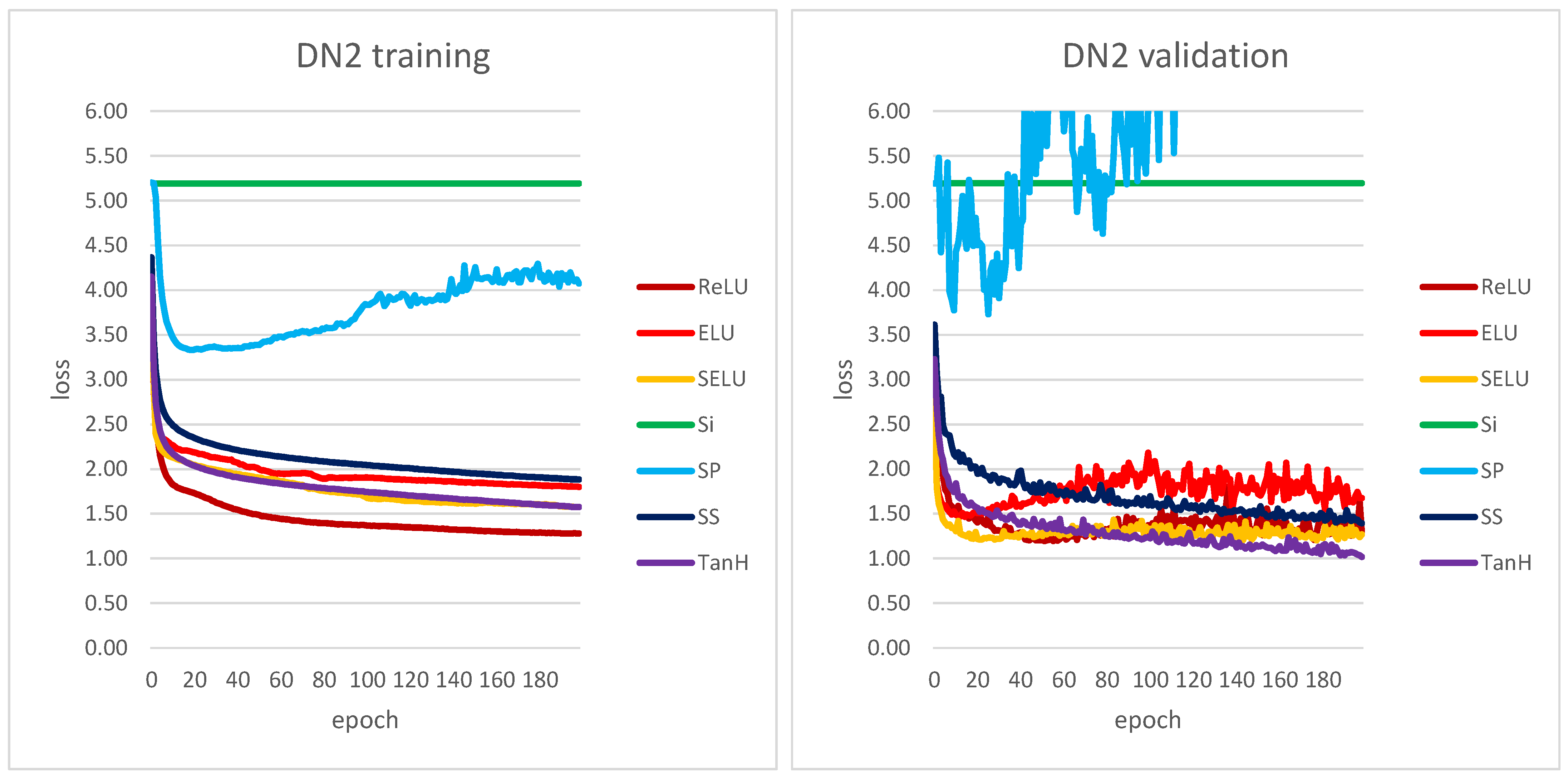

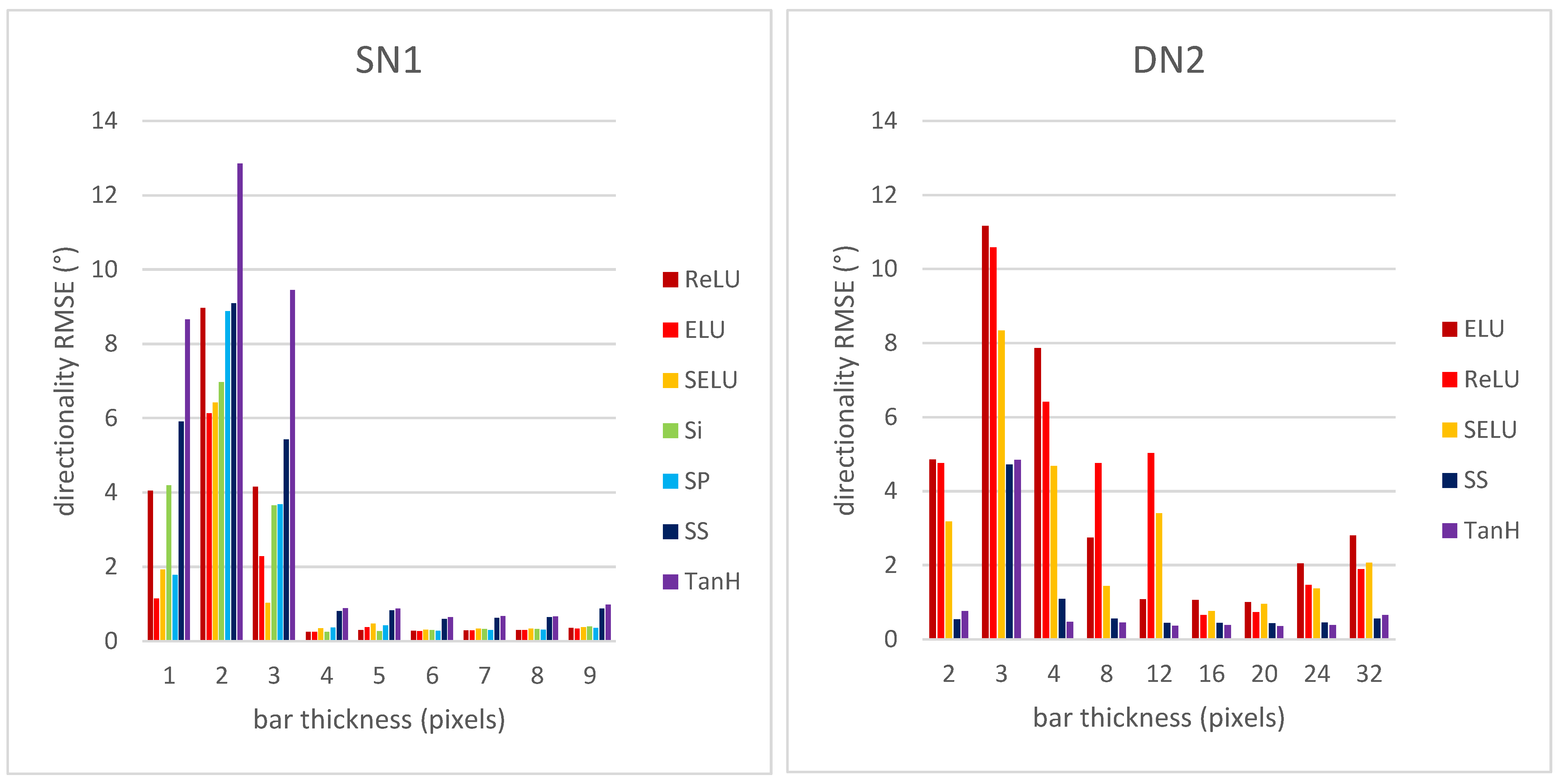

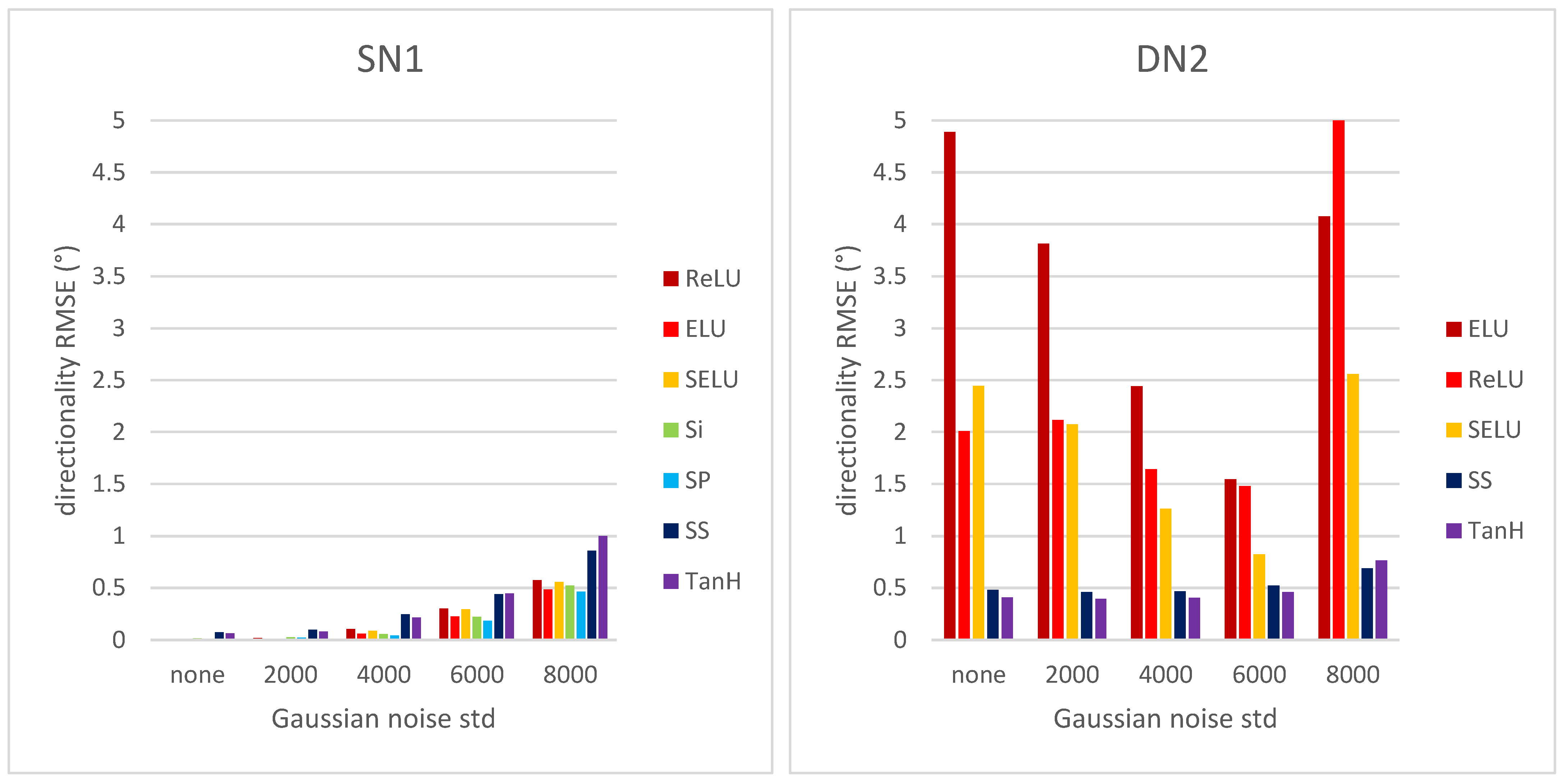

3.1. Training and Testing on Synthetic Textures

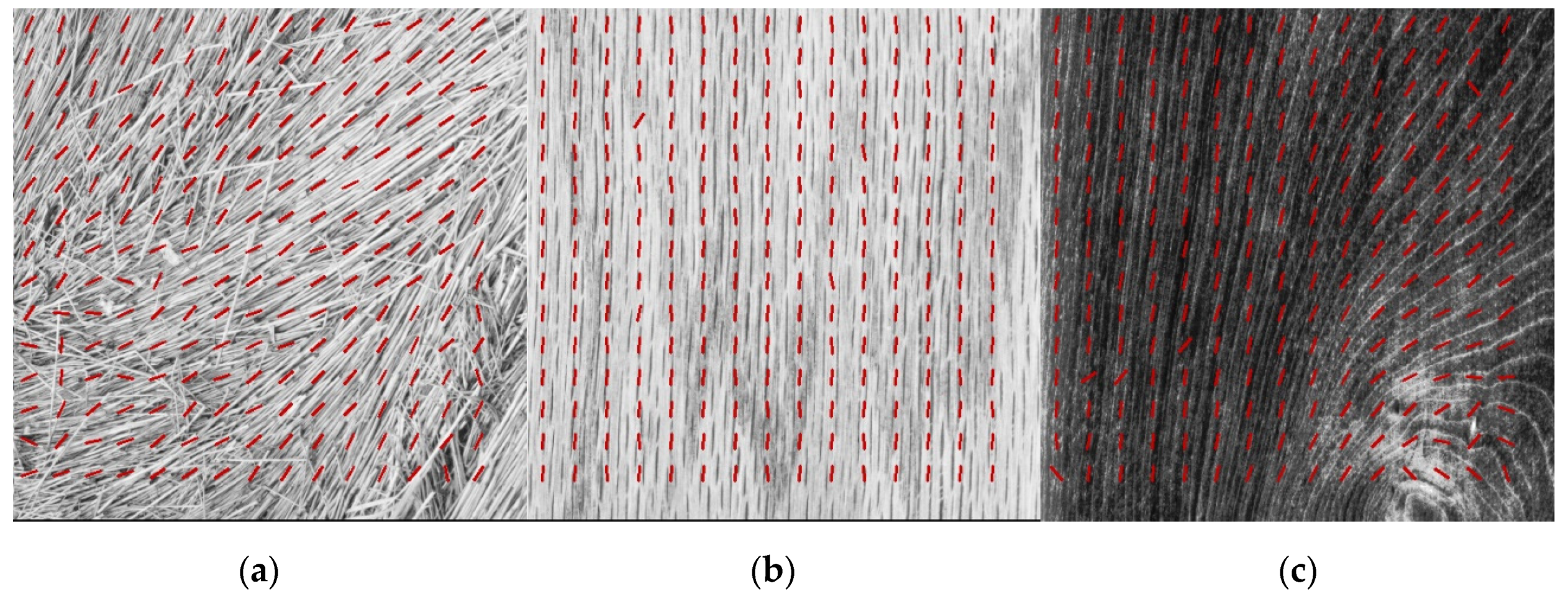

3.2. Demonstration on Non-Synthetic Textures

4. Discussion and Future Work Directions

5. Summary

6. Disclaimer

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| # | Layer Type | SN1 | SN2 | SN3 | SN4 |
|---|---|---|---|---|---|
| 1 | input (min size x, min size y, channels) | 17, 17, 1 | 17, 17, 1 | 13, 13, 1 | 13, 13, 1 |
| 2 | convolution (size x, size y, count) | 17, 17, 180 | 17, 17, 90 | 13, 13, 180 | 13, 13, 90 |
| 3 | global max pool | | | | |
| 4 | dropout | 0.25 | 0.25 | 0.25 | 0.25 |
| 5 | output (count) | 180 | 180 | 180 | 180 |
| | Total weights/parameters count | 84,780 | 42,480 | 63,180 | 31,680 |
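The totals in the last row of the SN table above can be checked by hand. The following is a minimal sketch, assuming that every convolution filter and every output unit carries one bias term, that the output layer is fully connected, and that global max pooling and dropout contribute no trainable parameters. The function names are illustrative and do not come from the paper.

```python
def conv2d_params(kx, ky, in_channels, filters):
    """Weights plus one bias per filter for a 2D convolution layer."""
    return kx * ky * in_channels * filters + filters


def dense_params(inputs, units):
    """Weights plus one bias per unit for a fully connected layer."""
    return inputs * units + units


def shallow_network_params(kernel, filters, outputs=180, in_channels=1):
    """Trainable parameters of a conv -> global max pool -> dropout -> output stack.

    Global max pooling reduces each filter's response map to a single value,
    so the output layer receives `filters` inputs. Pooling and dropout add
    no trainable parameters (assumption stated in the text above).
    """
    return (conv2d_params(kernel, kernel, in_channels, filters)
            + dense_params(filters, outputs))


# (kernel size, filter count) read from the SN table
SHALLOW = {"SN1": (17, 180), "SN2": (17, 90), "SN3": (13, 180), "SN4": (13, 90)}

totals = {name: shallow_network_params(k, f) for name, (k, f) in SHALLOW.items()}
for name, total in totals.items():
    print(f"{name}: {total:,}")
```

Under these assumptions the sketch reproduces the four listed totals, for example 17 × 17 × 180 + 180 = 52,200 convolution parameters plus 180 × 180 + 180 = 32,580 output parameters for SN1.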

| # | Layer Type | DN1 | DN2 | DN3 | DN4 |
|---|---|---|---|---|---|
| 1 | input (min size x, min size y, channels) | 36, 36, 1 | 36, 36, 1 | 36, 36, 1 | 36, 36, 1 |
| 2 | convolution (size x, size y, count) | 17, 17, 16 | 17, 17, 16 | 17, 17, 90 | 17, 17, 90 |
| 3 | max pool (size x, size y) | 2, 2 | 2, 2 | 2, 2 | 2, 2 |
| 4 | dropout | 0.25 | 0.25 | 0.25 | 0.25 |
| 5 | convolution (size x, size y, count) | 5, 5, 16 | 5, 5, 32 | 5, 5, 16 | 5, 5, 32 |
| 6 | max pool (size x, size y) | 2, 2 | 2, 2 | 2, 2 | 2, 2 |
| 7 | dropout | 0.25 | 0.25 | 0.25 | 0.25 |
| 8 | convolution (size x, size y, count) | 3, 3, 90 | 3, 3, 90 | 3, 3, 90 | 3, 3, 90 |
| 9 | global max pool | | | | |
| 10 | dropout | 0.25 | 0.25 | 0.25 | 0.25 |
| 11 | dense (count) | 90 | 90 | 90 | 90 |
| 12 | dropout | 0.5 | 0.5 | 0.5 | 0.5 |
| 13 | output (count) | 180 | 180 | 180 | 180 |
| | Total weights/parameters count | 48,676 | 68,052 | 99,736 | 148,712 |

| # | Layer Type | DN5 | DN6 | DN7 | DN8 |
|---|---|---|---|---|---|
| 1 | input (min size x, min size y, channels) | 26, 26, 1 | 26, 26, 1 | 26, 26, 1 | 26, 26, 1 |
| 2 | convolution (size x, size y, count) | 7, 7, 16 | 7, 7, 16 | 7, 7, 90 | 7, 7, 90 |
| 3 | max pool (size x, size y) | 2, 2 | 2, 2 | 2, 2 | 2, 2 |
| 4 | dropout | 0.25 | 0.25 | 0.25 | 0.25 |
| 5 | convolution (size x, size y, count) | 5, 5, 16 | 5, 5, 32 | 5, 5, 16 | 5, 5, 32 |
| 6 | max pool (size x, size y) | 2, 2 | 2, 2 | 2, 2 | 2, 2 |
| 7 | dropout | 0.25 | 0.25 | 0.25 | 0.25 |
| 8 | convolution (size x, size y, count) | 3, 3, 90 | 3, 3, 90 | 3, 3, 90 | 3, 3, 90 |
| 9 | global max pool | | | | |
| 10 | dropout | 0.25 | 0.25 | 0.25 | 0.25 |
| 11 | dense (count) | 90 | 90 | 90 | 90 |
| 12 | dropout | 0.5 | 0.5 | 0.5 | 0.5 |
| 13 | output (count) | 180 | 180 | 180 | 180 |
| | Total weights/parameters count | 44,836 | 56,562 | 78,136 | 127,112 |
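The minimum input sizes in the first row of the SN and DN tables follow from the kernel and pooling sizes. The sketch below walks each layer stack backwards from a 1 × 1 feature map. It assumes unpadded ("valid") convolutions with stride 1 and pooling with a stride equal to the pool size; neither setting is stated in the tables, and the helper name is illustrative.

```python
def min_input_size(layers):
    """Smallest square input that leaves a 1 x 1 map after the last spatial layer.

    `layers` lists the spatial layers in forward order as ("conv", k) or
    ("pool", p). Walking backwards from a 1 x 1 output, an unpadded k x k
    convolution needs k - 1 extra pixels per axis, and a p x p pooling step
    with stride p needs p times as many pixels.
    """
    size = 1
    for kind, n in reversed(layers):
        if kind == "conv":
            size = size + n - 1
        elif kind == "pool":
            size = size * n
        else:
            raise ValueError(f"unknown layer kind: {kind}")
    return size


# Spatial layers read from the tables above (dropout and dense layers omitted,
# since they do not change the spatial size).
SN1 = [("conv", 17)]
SN3 = [("conv", 13)]
DN1 = [("conv", 17), ("pool", 2), ("conv", 5), ("pool", 2), ("conv", 3)]
DN5 = [("conv", 7), ("pool", 2), ("conv", 5), ("pool", 2), ("conv", 3)]

for name, stack in [("SN1", SN1), ("SN3", SN3), ("DN1", DN1), ("DN5", DN5)]:
    print(name, min_input_size(stack))
```

For DN1 the backward walk gives 1 → 3 → 6 → 10 → 20 → 36, matching the listed 36 × 36 input. Because the final spatial layer is a global max pool, larger inputs are also accepted under these assumptions; the listed size is only the lower bound.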

| Gaussian Noise Std Value | SN1-ELU RMSE (°) | iGLCM RMSE (°) | Fourier RMSE (°) | LGO RMSE (°) |
|---|---|---|---|---|
| none | 0.00 | 0.00 | 1.58 | 2.03 |
| 2000 | 0.01 | 0.00 | 1.70 | 2.11 |
| 4000 | 0.06 | 0.07 | 2.11 | 2.11 |
| 6000 | 0.23 | 0.16 | 2.93 | 2.43 |
| 8000 | 0.48 | 0.31 | 5.64 | 2.69 |

| Blur Kernel Size | SN1-ELU RMSE (°) | iGLCM RMSE (°) | Fourier RMSE (°) | LGO RMSE (°) |
|---|---|---|---|---|
| none | 0.00 | 0.00 | 1.58 | 2.03 |
| 3 × 3 | 0.01 | 0.00 | 2.42 | 2.61 |
| 5 × 5 | 0.07 | 0.00 | 3.16 | 1.27 |
| 7 × 7 | 2.79 | 2.86 | 4.41 | 3.41 |
| 9 × 9 | 5.81 | 6.00 | 5.29 | 3.91 |
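The two tables above report errors as RMSE in degrees. How those values were computed is not given here, so the following is only a sketch of one common way to score direction estimates. It assumes that directions are periodic with a 180° period (a texture at 179° differs from one at 0° by 1°) and that each error is wrapped before squaring. The function name and the `period` parameter are illustrative.

```python
import math


def directional_rmse(predicted_deg, reference_deg, period=180.0):
    """Root-mean-square error between two lists of directions in degrees.

    Each difference is wrapped to the shortest distance on a circle of
    length `period`, so estimates on either side of the wrap-around point
    are not penalised by almost a full period. The wrapping is an
    assumption, not a detail taken from the tables.
    """
    if len(predicted_deg) != len(reference_deg) or not predicted_deg:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for p, r in zip(predicted_deg, reference_deg):
        diff = abs(p - r) % period
        diff = min(diff, period - diff)  # shortest way around the circle
        total += diff * diff
    return math.sqrt(total / len(predicted_deg))


# Two estimates that are each 1 degree away from a 0 degree reference,
# one of them across the 180 degree wrap-around point.
example = directional_rmse([179.0, 1.0], [0.0, 0.0])
print(example)
```

Without the wrapping step the first estimate in the example would count as a 179° error, which would dominate the RMSE even though the two directions are nearly identical.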

| # Tiles Tested | SN1-ELU (tiles/s) | iGLCM (tiles/s) | Fourier (tiles/s) | LGO (tiles/s) |
|---|---|---|---|---|
| 178,605 | 6613.3 | 34.4 | 902.2 | 902.1 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kociołek, M.; Kozłowski, M.; Cardone, A. A Convolutional Neural Networks-Based Approach for Texture Directionality Detection. Sensors 2022, 22, 562. https://doi.org/10.3390/s22020562
