Abstract

This paper presents a tracking controller for nonlinear systems with matched uncertainties, based on contraction metrics and disturbance estimation, that provides exponential convergence guarantees. Within the proposed approach, a disturbance estimator estimates the pointwise value of the uncertainties with a pre-computable estimation error bound (EEB). The estimated disturbance and the EEB are then incorporated into a robust Riemannian energy condition to compute the control law that guarantees exponential convergence of actual state trajectories to desired ones. Simulation results on aircraft and planar quadrotor systems demonstrate the efficacy of the proposed controller, which yields better tracking performance than existing controllers for both systems.

1. Introduction

Robotic systems generally have nonlinear dynamics and are subject to model uncertainties and disturbances. Moreover, many robotic systems are underactuated, i.e., having fewer independent control inputs than degrees of freedom, including fixed-wing aircraft, quadrotors and dynamic walking robots. The design of tracking controllers for underactuated robotic systems is a much more challenging problem compared to that for fully-actuated systems. Recently, the concept of a control contraction metric (CCM) was introduced in [] to synthesize trajectory tracking controllers for general nonlinear systems, including underactuated ones. The CCM extends contraction theory [] from analysis to constructive control design, while contraction theory is focused on analyzing nonlinear systems in a differential framework by studying the convergence between pairs of state trajectories toward each other. It was shown in [] that CCM reduces to conventional sliding and energy-based designs for fully-actuated systems. On the other hand, for underactuated systems, compared to prior approaches based on local linearization [], the CCM approach leads to a convex optimization problem for controller synthesis and generates controllers that stabilize every feasible trajectory in a region, instead of just a single target trajectory that must be known a priori [].

Control design methods for handling dynamic uncertainties in the deterministic setting can be roughly classified into adaptive and robust approaches. Robust approaches, such as H∞ control [], μ synthesis [] and robust/tube model predictive control (MPC) [,], usually consider parametric uncertainties or bounded disturbances and aim to find controllers with performance guarantees for the worst case of such uncertainties. The consideration of worst-case scenarios associated with robust approaches often leads to conservative nominal performance. Disturbance-observer (DOB) based control and related methods such as active disturbance rejection control (ADRC) [] lump all uncertainties, which may include parametric uncertainties, unmodeled dynamics and external disturbances, together as a “total disturbance”, estimate it via an observer and then compute control actions to compensate for the estimated disturbance [] to recover the nominal performance. However, for state-dependent uncertainties, DOB-based control methods usually ignore the dependence of the “disturbance” on system states and, for theoretical guarantees, rely on assumptions on the derivative of the “disturbance” that are difficult to verify [,]. Alternatively, adaptive control methods such as model reference adaptive control (MRAC) [] usually need a parametric structure for the uncertainties, rely on online estimation of the parameters for control law construction and provide asymptotic performance guarantees in most cases. One of the exceptions is L1 adaptive control [], which does not need a parameterization of the uncertainties (similar to DOB-based control) and focuses on transient performance guarantees in terms of a uniformly bounded error between the ideal and uncertain systems.

Both robust and adaptive control approaches have been explored in the context of CCM-based control in the presence of uncertainties and disturbances. In particular, adaptive control was combined with CCM to handle nonlinear control-affine systems with both parametric [] and non-parametric uncertainties []. The case of bounded disturbances in CCM-based control was addressed by leveraging input-to-state stability analysis [] or robust CCM [,]. CCM for stochastic systems was developed in [] to minimize the mean squared tracking error in the presence of stochastic disturbances. Closely relevant to this paper, [] designed an adaptive controller to augment a baseline CCM-based controller to compensate for matched nonlinear non-parametric uncertainties that can depend on both time and states. The authors of [] proved that transient tracking performance was guaranteed in the sense that the actual state trajectory exponentially converges to a neighborhood or a tube around the desired one. Compared to [], our approach relies on a disturbance observer that yields an estimation error bound and robust Riemannian energy condition and ensures that the actual state trajectory exponentially converges to the nominal one.

Statement of Contributions: We present a tracking controller for nonlinear systems subject to matched uncertainties that can depend on both time and states based on contraction metrics and disturbance estimation. Our controller leverages a disturbance estimator to estimate the pointwise value of the uncertainties, with a pre-computable estimation error bound. The estimated disturbance and the estimation error bound are then incorporated into a robust Riemannian energy condition to compute the control law that guarantees exponential convergence of actual state trajectories to nominal ones. We validate the efficacy of our controller on two simulation examples and demonstrate its advantages over existing controllers.

The idea presented in this paper is leveraged in [] for safe learning of uncertain dynamics using deep neural networks. Compared to [], this paper does not involve learning and allows the uncertainty to depend on both time and states, as opposed to states only in []. This paper also includes an aircraft example for performance illustration and conducts extensive simulation comparisons with existing adaptive approaches, neither of which is available in [].

Notations: Let $\mathbb{R}^n$, $\mathbb{R}_+$ and $\mathbb{R}^{m\times n}$ denote the n-dimensional real vector space, the set of non-negative real numbers and the set of real m by n matrices, respectively. I and 0 denote an identity matrix and a zero matrix of compatible dimensions, respectively; ‖·‖ denotes the 2-norm of a vector or a matrix. For a vector y, $y_i$ denotes its ith element. For a matrix-valued function $M: \mathbb{R}^n \to \mathbb{R}^{n\times n}$ and a vector $y \in \mathbb{R}^n$, $\partial_y M(x) \triangleq \sum_{i=1}^n \frac{\partial M(x)}{\partial x_i} y_i$ denotes the directional derivative of $M(x)$ along y. For symmetric matrices P and Q, $P > Q$ ($P \geq Q$) means $P - Q$ is positive definite (semidefinite). $\langle X \rangle$ is the shorthand notation of $X + X^\top$. Finally, ⊖ denotes the Minkowski set difference.

2. Problem Statement and Preliminaries

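Consider a nonlinear control-affine system with uncertainties

$$\dot{x}(t) = f(x(t)) + B(x(t))\big(u(t) + d(t, x(t))\big), \tag{1}$$

where $x(t) \in \mathbb{R}^n$ is the state vector, $u(t) \in \mathbb{R}^m$ is the control input vector, $f: \mathbb{R}^n \to \mathbb{R}^n$ and $B: \mathbb{R}^n \to \mathbb{R}^{n\times m}$ are known and locally Lipschitz continuous functions, and $d(t, x)$ represents the matched model uncertainty that can depend on both time and states. We assume that $B(x)$ has full column rank for any $x$. Suppose $\mathcal{X} \subset \mathbb{R}^n$ is a compact set that contains the origin, and the control constraint set is defined as $\mathcal{U} \triangleq \{u \in \mathbb{R}^m : \underline{u} \leq u \leq \bar{u}\}$, where $\underline{u}, \bar{u} \in \mathbb{R}^m$ denote the lower and upper bounds of all control channels, respectively. Furthermore, we make the following assumptions on $B(x)$ and $d(t, x)$.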

Assumption 1.

There exist known positive constants , , and such that for any and , the following inequalities hold:

Remark 1.

Assumption 1 indicates that the uncertain function is locally Lipschitz in both t and x with known Lipschitz constants and is uniformly bounded by a known constant in the compact set .

In fact, given the local Lipschitz constants and , a uniform bound on in can always be derived by using Lipschitz continuity properties if the bound on for an arbitrary in and any is known. For instance, assuming , from (3), we have for any and . In practice, some prior knowledge about the actual system and the uncertainty may be leveraged to obtain a tighter bound than the one based on the Lipschitz continuity explained earlier, which is why we directly make an assumption on the uniform bound. With Assumption 1, we will show (in Section 3.3) that the pointwise value of at any time t can be estimated with a pre-computable estimation error bound.

For the system in (1), assume we have a nominal state and input trajectory, and , which satisfy the nominal, i.e., uncertainty-free, dynamics:

We would like to design a state-feedback controller in the form of

so that the actual state trajectory exponentially converges to the nominal one . Our solution is based on CCM and disturbance estimation. Next, we briefly review CCM for uncertainty-free systems.

Control Contraction Metrics (CCMs)

We first introduce some notations related to Riemannian geometry, most of which are from []. A Riemannian metric on is a symmetric positive-definite matrix function , smooth in x, which defines a “local Euclidean” structure for any two tangent vectors and through the inner product and the norm . A metric is called uniformly bounded if holds and for some scalars . Let be the set of smooth paths connecting two points a and b in , where each is a piecewise smooth mapping, , satisfying . We use the notation , and . Given a metric and a curve , we define the Riemannian energy of as . The Riemannian energy between a and b is defined as .
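As a small numerical illustration of the Riemannian energy defined above, the following sketch approximates the energy integral of a path with the midpoint rule, assuming the standard form of the energy (the integral over [0, 1] of the squared metric norm of the path derivative). The helper names `riemannian_energy` and `straight_line`, the constant metric `M_const`, and the two test paths are hypothetical choices made only for this example. For a constant metric, the straight line between two points is a geodesic, so its energy should not exceed that of any other path with the same endpoints.

```python
def riemannian_energy(path, metric, n_seg=200):
    """Midpoint-rule approximation of E = int_0^1 g_s(s)^T M(g(s)) g_s(s) ds.

    path(s) returns a point as a list of floats for s in [0, 1];
    metric(x) returns a symmetric positive-definite matrix as a list of rows.
    """
    h = 1.0 / n_seg
    energy = 0.0
    for k in range(n_seg):
        p0, p1 = path(k * h), path((k + 1) * h)
        mid = [(a + b) / 2.0 for a, b in zip(p0, p1)]
        vel = [(b - a) / h for a, b in zip(p0, p1)]  # finite-difference path derivative
        M = metric(mid)
        n = len(vel)
        Mv = [sum(M[i][j] * vel[j] for j in range(n)) for i in range(n)]
        energy += h * sum(v * w for v, w in zip(vel, Mv))
    return energy


def straight_line(a, b):
    """Straight-line path from a to b, parameterized over [0, 1]."""
    return lambda s: [ai + s * (bi - ai) for ai, bi in zip(a, b)]


# Hypothetical constant metric and endpoints, chosen only for illustration.
M_const = [[2.0, 0.5], [0.5, 1.0]]
a, b = [0.0, 0.0], [1.0, 2.0]

E_line = riemannian_energy(straight_line(a, b), lambda x: M_const)
# A curved path with the same endpoints: (s, 2 s^2).
E_bent = riemannian_energy(lambda s: [s, 2.0 * s * s], lambda x: M_const)
```

With a state-dependent metric the minimizing path is generally not a straight line, which is why the controller has to solve a geodesic optimization problem online.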

Contraction theory [] draws conclusions on the convergence between pairs of state trajectories toward each other by studying the evolution of the distance between any two infinitesimally close neighbouring trajectories. CCM generalizes contraction analysis to the controlled dynamics setting in which the analysis jointly searches for a controller and a metric that describes the contraction properties of the resulting closed-loop system. Following [,], we now briefly review CCMs by considering the nominal, i.e., uncertainty-free, system:

where and . The differential form of (7) is given by where with denoting the ith column of . Consider a function for some positive definite metric , which can be viewed as the Riemannian squared differential length at point x. Differentiating and imposing that the squared length decreases exponentially with rate , one obtains

where . We first recall some basic results related to CCM.

Definition 1

([]). The system (7) is said to be universally exponentially stabilizable if, for any feasible desired trajectory and , a feedback controller can be constructed that for any initial condition , a unique solution to (7) exists and satisfies where λ and R are the convergence rate and overshoot, respectively, independent of the initial conditions.

Lemma 1

([]). If there exists a uniformly bounded metric M(x), i.e., for some positive constants and , such that for all x and satisfying ,

hold, then the system (7) is universally exponentially stabilizable in the sense of Definition 1 via continuous feedback defined almost everywhere, and everywhere in the neighborhood of the target trajectory with the convergence rate λ and overshoot .

The condition (9) ensures that the dynamics orthogonal to the input are contracting, i.e., (8) holds in the presence of and is often termed the strong CCM condition []. In particular, the condition (9b) can be satisfied by enforcing that each column of forms a Killing vector field for the metric , i.e., for all . The CCM condition (9) can be transformed into a convex constructive condition for the metric by a change of variables. Let (commonly referred to as the dual metric), and be a matrix whose columns span the null space of the input matrix B (i.e., ). Then, condition (9) can be cast as convex constructive conditions for :

The existence of a contraction metric is sufficient for stabilizability via Lemma 1. What remains is to construct a feedback controller that achieves the universal exponential stabilizability (UES). As mentioned in [,], one way to derive the controller is to interpret the Riemannian energy, , as an incremental control Lyapunov function and use it to construct a min-norm controller that renders for any time t

Specifically, at any time , given the metric and a desired/actual state pair , a minimum-energy path, i.e., a geodesic, connecting these two states (i.e., and ), can be computed (e.g., using the pseudospectral method in [] to solve a nonlinear programming problem). Consequently, the Riemannian energy of the geodesic is defined as , where , can be calculated. As noted in [], from the formula for the first variation of energy [], . Therefore, (11) can be rewritten as

where and . Therefore, the control signal with a minimum norm for can then be obtained by solving the following quadratic programming (QP) problem:

at each time t, which is guaranteed to be feasible under condition (9) []. The minimization problem (13) is often termed as the pointwise min-norm control problem and has an analytic solution []. The above discussions can be summarized in the following theorem. The proof is trivial by following Lemma 1 and the subsequent discussions and is thus omitted.
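A QP with a quadratic norm objective and a single affine inequality constraint has a well-known closed-form solution: the projection of the origin onto a half-space. The sketch below illustrates this under the assumption that the decision variable is the feedback correction k added to the nominal input and that the constraint has the generic form phi0 + phi1^T k <= 0. The function name `min_norm_control` and the numerical values are hypothetical; the sketch does not reproduce the paper's exact expressions for the two coefficients.

```python
def min_norm_control(phi0, phi1):
    """Closed-form solution of  min ||k||^2  s.t.  phi0 + phi1^T k <= 0.

    phi0 is a float and phi1 a list of floats (generic constraint data,
    assumed for illustration).
    """
    if phi0 <= 0.0:
        # The constraint already holds with zero correction.
        return [0.0] * len(phi1)
    norm_sq = sum(p * p for p in phi1)
    if norm_sq == 0.0:
        # phi0 > 0 with phi1 = 0 means no k can satisfy the constraint.
        raise ValueError("constraint is infeasible")
    # Smallest correction that makes the constraint active.
    scale = -phi0 / norm_sq
    return [scale * p for p in phi1]


k_active = min_norm_control(2.0, [1.0, 1.0])     # constraint must be enforced
k_inactive = min_norm_control(-1.0, [3.0, 4.0])  # constraint already satisfied
```

When the constraint is active, the returned correction lies along phi1 and satisfies the constraint with equality, so no smaller-norm correction is feasible.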

Theorem 1

([]). Given a nominal system (7), assume that there exists a uniformly bounded metric that satisfies (10) for all . Then, the control law constructed by solving (13) with , universally exponentially stabilizes the system (7) in the sense of Definition 1, where with and being two positive constants satisfying .

Remark 2.

According to Definition 1 and Theorem 1, under the conditions of Theorem 1, given any feasible trajectory () of (7), a controller can always be constructed to ensure that the actual state trajectory exponentially converges to .

3. Robust Trajectory Tracking Using CCM and Disturbance Estimation

In Section 2, we have shown that existence of a CCM for a nominal (i.e., uncertainty-free) system can be used to construct a feedback control law to guarantee the universal exponential stabilizability (UES) of the system. In this section, we present a controller based on CCM and disturbance estimation to ensure the UES of the uncertain system (1), whose architecture is depicted in Figure 1.

Figure 1.

Block diagram of the closed-loop system with the proposed DE-CCM controller.

3.1. CCMs for the Actual System

To apply the contraction method to design a controller that guarantees the UES of the uncertain system (1), we first need to search for a valid CCM for it. Following Section 2, we can derive the counterparts of the strong CCM condition (9) or (10). Owing to the particular structure of (1) attributed to the matched uncertainty assumption, we have the following lemma. A similar observation has been made in [] for the case of matched parametric uncertainties. The proof is straightforward and thus omitted. One can refer to [] for more details.

Lemma 2.

The strong (dual) CCM condition for the uncertain system (1) is the same as the strong (dual) CCM condition, i.e., (9) and (10), for the nominal system.

Remark 3.

As a result of Lemma 2, a metric (dual metric ) satisfying the condition (9) and (10) for the nominal system (7) is always a CCM (dual CCM) for the true system (1).

Define , where is introduced in Assumption 1. Assumption 1 indicates for any and . As mentioned in Section 2, given a CCM and a desired trajectory and for a nominal system, a control law can be constructed to ensure exponential convergence of the actual state trajectory to the desired state trajectory . In practice, we have access to only the nominal dynamics (5) instead of the true dynamics to plan a trajectory and . The following lemma gives the condition when , planned using the nominal dynamics (5), is also a feasible state trajectory for the true system.

Lemma 3.

Given a desired trajectory and satisfying the nominal dynamics (5) with , if

then is also a feasible state trajectory for the true system (1).

Proof.

Define . Since and , which is due to and Assumption 1, we have . By comparing the dynamics in (1) and (5), we conclude that and satisfy the true dynamics (1) and thus are a feasible state and input trajectory for the true system. □

Lemma 3 provides a way to verify whether a trajectory planned using the nominal dynamics is a feasible trajectory for the true system in the presence of actuator limits. In the absence of such limits, any feasible trajectory for the nominal dynamics is also a feasible trajectory for the true dynamics due to the particular structure of (1) associated with the matched uncertainty assumption.
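The feasibility check of Lemma 3 can be sketched numerically under two assumptions that are not spelled out in the text as extracted: the control constraint set is a box with per-channel lower and upper bounds, and the uncertainty set is a 2-norm ball of radius b_d. Under these assumptions the Minkowski difference of the box and the ball is the same box shrunk by b_d on every side, because each coordinate of a vector in the ball can reach ±b_d. The function names and numbers below are hypothetical.

```python
def in_shrunken_box(u_star, u_lower, u_upper, b_d):
    """True if u_star + d stays in the box [u_lower, u_upper] for every ||d||_2 <= b_d."""
    return all(lo + b_d <= u <= hi - b_d
               for u, lo, hi in zip(u_star, u_lower, u_upper))


def nominal_inputs_feasible(u_traj, u_lower, u_upper, b_d):
    """Check the shrunken-box condition at every sample of a nominal input trajectory."""
    return all(in_shrunken_box(u, u_lower, u_upper, b_d) for u in u_traj)


# Hypothetical two-channel example with input bounds [-1, 1] and b_d = 0.3.
lower, upper = [-1.0, -1.0], [1.0, 1.0]
ok = nominal_inputs_feasible([[0.5, -0.5], [0.0, 0.6]], lower, upper, 0.3)
too_close = nominal_inputs_feasible([[0.5, -0.5], [0.8, 0.0]], lower, upper, 0.3)
```

A sampled check of this kind only covers the sample instants; the condition in Lemma 3 is stated for the whole trajectory.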

3.2. Robust Riemannian Energy Condition

Section 2 shows that, given a nominal system and a CCM for such a system, a control law can be constructed via solving a QP problem (13) with a condition to constrain the decreasing rate of the Riemannian energy, i.e., condition (12). When considering the uncertain dynamics in (1), the condition (12) becomes

where represents the true dynamics evaluated at , and as defined in (5). Several observations follow immediately. First, it is clear that (15) is not implementable due to its dependence on the true uncertainty through . Second, if we could have access to the pointwise value of at each time t, (15) will become implementable even when we do not know the exact functional representation of . Third, if we could estimate the pointwise value of at each time t with a bound to quantify the estimation error, then we could derive a robust condition for (15). Specifically, assume is estimated as at each time t with a uniform estimation error bound (EEB) , i.e., Then, we could immediately get the following sufficient condition for (15):

where

Moreover, since satisfies the CCM condition (9), that satisfies (16) is guaranteed to exist for any , regardless of the size of , if the input constraint set is sufficiently large. We term condition (16) the robust Riemannian energy (RRE) condition.
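The step from the non-implementable condition to the robust one rests on a Cauchy–Schwarz argument: if the true disturbance lies within distance delta of its estimate, then for any vector a the inner product of a with the true disturbance is at most the inner product of a with the estimate plus ||a|| times delta. The sketch below checks this bound numerically. The vector `a` stands in for the coefficient that multiplies the uncertainty in the energy condition; its values, the estimate `d_hat`, and all helper names are assumptions made for illustration.

```python
import math
import random


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def norm(u):
    return math.sqrt(dot(u, u))


def robust_uncertainty_term(a, d_hat, delta):
    """Upper bound on a^T d over all d with ||d - d_hat||_2 <= delta."""
    return dot(a, d_hat) + norm(a) * delta


def sample_in_ball(center, radius, rng):
    """Draw a point inside the 2-norm ball of the given radius around center."""
    direction = [rng.gauss(0.0, 1.0) for _ in center]
    scale = radius * rng.random() / norm(direction)
    return [c + scale * g for c, g in zip(center, direction)]


# Hypothetical data: coefficient vector, disturbance estimate and error bound.
a, d_hat, delta = [1.0, -2.0], [0.3, 0.1], 0.05
bound = robust_uncertainty_term(a, d_hat, delta)

rng = random.Random(0)
samples = [sample_in_ball(d_hat, delta, rng) for _ in range(1000)]
# The bound is attained by the disturbance that deviates from d_hat along a.
worst = [dh + delta * ai / norm(a) for dh, ai in zip(d_hat, a)]
```

Because the bound is attained by the worst-case disturbance, the added term cannot be tightened without a smaller estimation error bound.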

3.3. Disturbance Estimation with a Computable EEB

We now introduce a disturbance estimation scheme to estimate the pointwise value of the uncertainty with a pre-computable EEB, which can be systematically improved by tuning a parameter in the estimation law. The estimation scheme is based on the piecewise-constant estimation (PWCE) law in [], which was originally from []. The PWCE law consists of two elements, namely a state predictor and a piecewise-constant update law. The state predictor is defined as:

where is the prediction error, and a is an arbitrary positive constant. The estimation, , is updated in a piecewise-constant way:

where T is the estimation sampling time, and . Finally, the pointwise value of at time t is estimated as

where is the pseudoinverse of . The following lemma establishes the EEB associated with the estimation scheme in (18) and (19). The proof is similar to that in []. For completeness, it is given in Appendix A.
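To make the estimation scheme concrete, the following sketch simulates a piecewise-constant estimation law on a scalar toy system. The equations of the law are not reproduced in the text as extracted, so the sketch assumes the form commonly used for piecewise-constant estimation: a predictor that copies the known dynamics and adds the current estimate minus a times the prediction error, and an update gain of -a/(exp(aT) - 1) applied to the prediction error at each sampling instant. The toy dynamics, the disturbance, the explicit Euler integration and every name in the code are hypothetical choices for illustration.

```python
import math


def simulate_pwce(a=1.0, T=0.005, dt=1e-4, t_end=2.0):
    """Simulate a scalar system with an assumed piecewise-constant estimation law.

    Returns a list of (time, |d_hat - d|) pairs, one per integration step.
    """
    f = lambda x: -x                      # toy known drift (assumption)
    B = 1.0                               # toy constant input gain (assumption)
    d = lambda t, x: 0.5 * math.sin(2.0 * t) + 0.2 * x  # toy matched uncertainty

    steps_per_sample = round(T / dt)
    gain = -a / math.expm1(a * T)         # assumed update gain -a / (e^{aT} - 1)

    x, x_hat, sigma_hat = 1.0, 1.0, 0.0
    errors = []
    for k in range(round(t_end / dt)):
        t = k * dt
        x_tilde = x_hat - x               # prediction error
        if k % steps_per_sample == 0:
            sigma_hat = gain * x_tilde    # held constant until the next sample
        d_hat = sigma_hat / B             # pseudoinverse of a nonzero scalar B
        errors.append((t, abs(d_hat - d(t, x))))

        u = 0.0                           # open loop, to isolate the estimator
        x_dot = f(x) + B * (u + d(t, x))
        x_hat_dot = f(x) + B * u + sigma_hat - a * x_tilde
        x += dt * x_dot                   # explicit Euler step
        x_hat += dt * x_hat_dot
    return errors


errs = simulate_pwce()
first_interval_error = errs[0][1]                 # no information yet
later_max_error = max(e for _, e in errs[50:])    # from the first update onward
```

In this toy run the estimate carries no information during the first sampling interval, since the prediction error starts at zero, and becomes accurate from the first update onward; shrinking T in the sketch reduces the later error further.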

Lemma 4.

Proof.

See Appendix A. □

Remark 4.

Lemma 4 implies that theoretically, for , the disturbance estimation after a single sampling interval can be made arbitrarily accurate by reducing T, which further indicates that the conservatism with the RRE condition can be arbitrarily reduced after a sampling interval.

In practice, the value of T is subject to limitations related to computational hardware and sensor noise. Additionally, using a very small T tends to introduce high-frequency components into the control loop, potentially harming the robustness of the closed-loop system, e.g., against time delay. This is similar to the use of a high adaptation rate in model reference adaptive control schemes, as discussed in []. Therefore, one should avoid a very small T for the sake of robustness unless a low-pass filter is used to filter the estimated disturbance before it is fed into (16), as suggested by the adaptive control theory [].

Remark 5.

The estimation in cannot be arbitrarily accurate. This is because the estimation in depends on according to (19). Considering that is purely determined by the initial state of the system, , and the initial state of the predictor, , it does not contain any information of the uncertainty. Since T is usually very small in practice, lack of a tight estimation error bound for the interval will not cause an issue from a practical point of view. Additionally, the estimation of ϕ defined in (23) could be quite conservative. Further considering the frequent use of Lipschitz continuity and inequalities related to matrix/vector norms in deriving the constant , can be overly conservative. Therefore, for practical implementation, one should leverage some empirical study, e.g., performing simulations under a few user-selected functions of and determining a bound for . In our experiments, we found the theoretical bound computed according to (21) was usually at least 10 and could be times more conservative.

3.4. Exponentially Convergent Trajectory Tracking

Based on the review of contraction control in Section 2 and the discussions in Section 3.2 and Section 3.3, the control law can be obtained by solving the following QP problem at each time t:

subject to

where , according to (17), depends on , which is from the disturbance estimation law defined by (18) to (20), as defined in (21), and as defined in (5). Similar to (13), problem (24) is a pointwise min-norm control problem and has an analytic solution []. Specifically, denoting and , (25) can be written as , and the solution for (24) is given by

To move forward with analysis, we need to verify that when , the control signal resulting from solving the QP problem (24) satisfies . Deriving verifiable conditions to ensure this set bound is outside the scope of this paper and will be addressed as future work. We are now ready to state the main result of the paper.

Theorem 2.

Given an uncertain system represented by (1) satisfying Assumption 1, assume that there exists a metric such that for all , (10) holds and holds for positive constants and . Furthermore, suppose that a nominal trajectory () planned using the nominal dynamics (5) and the initial actual states satisfy (14) and

for any . Then, if from solving (24) satisfies for any , the control law constructed by solving (24) ensures for any , and furthermore, universally exponentially stabilizes the uncertain system (1) in the sense of Definition 1 with , i.e.,

Proof.

We use contradiction to show for all . Assume this is not true. According to (27), . Since is continuous, there must exist a time such that

Now let us consider the system evolution in . Since by assumption and for any t in , the EEB in (21) holds in . As a result, the control law obtained from solving (24) ensures satisfaction of the RRE condition (16) and thus satisfaction of the Riemannian energy condition (15) for the uncertain system (1), and thereby universally exponentially stabilizes the uncertain system (1) in , in the sense of Definition 1 with , according to Theorem 1. On the other hand, satisfaction of (14) implies that is a feasible state trajectory for the uncertain system (1) according to Lemma 3. Further considering Theorem 1, we have for any t in . Due to (27), the preceding inequality indicates that remains in the interior of for t in . This, together with the continuity of , immediately implies , which contradicts (29). Therefore, we conclude that for all . From the development of the proof, it is clear that with the control law given by the solution of (24), the UES of the closed-loop system in the sense of Definition 1 with for all is achieved, which is mathematically represented by (28). The proof is complete. □

3.5. Discussion

Theorem 2 essentially states that, under certain assumptions, the proposed controller guarantees exponential convergence of the actual state trajectory to a desired one . With the exponential guarantee, if the actual trajectory meets the desired trajectory at a certain time , then these two trajectories will stay together afterward. While the exponential convergence guarantee is stronger than the performance guarantees provided by existing adaptive CCM-based approaches [,] that deal with similar settings (i.e., matched uncertainties), the proposed method requires knowledge of Lipschitz bounds of the uncertainty and the input matrix function on a compact set known a priori (see Assumption 1). It also requires the actual control inputs to stay in a compact set known a priori, which cannot currently be verified because no bound on the control inputs is available. These requirements are not needed in [,].

The approach here is related to the robust control Lyapunov-based approaches [] which provide robust stabilization around an equilibrium point (as opposed to a trajectory considered in this paper) in the presence of uncertainties.

Remark 6.

The exponential convergence guarantee stated in Theorem 2 is based on a continuous-time implementation of the controller. In practice, a controller is normally implemented on a digital processor or controller with a fixed sampling time. As a result, the property of exponential convergence may be slightly violated.

Computational cost: As can be seen from Sections 2 and 3.2 to 3.4, computation of the control signal at each time t includes three steps: (i) updating the estimated disturbance via (18) to (20), (ii) computing the geodesic connecting the actual and nominal states (see the discussion below (11)), and (iii) computing the control signal via (26). The computational costs of steps (i) and (iii) are quite low, as they only involve integration and algebraic calculation. In comparison, step (ii) has a relatively high computational cost, as it requires solving a nonlinear programming (NLP) problem. However, since the NLP problem does not involve dynamic constraints, it is much easier to solve than a nonlinear model predictive control (MPC) problem []. Following [], such a problem can be efficiently solved by applying a pseudospectral method.

4. Simulation Results

In this section, we illustrate the performance of our proposed tracking controller based on the RRE condition and disturbance estimation, denoted as DE-CCM, using aircraft and planar quadrotor examples. For both examples, we perform comparisons of DE-CCM with standard CCM controllers that ignore the uncertainties and adaptive CCM (Ad-CCM) controllers considering parametric uncertainties designed using the approach in []. All the computations and simulations were performed in Matlab R2021b.

4.1. Longitudinal Dynamics of an Aircraft

We first implement our method on the simplified pitch dynamics of an aircraft borrowed from []:

where , and q are the pitch angle (in rad), angle of attack (in rad), and pitch rate (in rad/s), respectively. Here and are the aerodynamic lift and moment, respectively. Using flat plate theory, these two aerodynamic terms are approximated by and with unknown parameters and . For all the simulations, the true values are chosen to be , , while the nominal values of these parameters used in designing all the tested controllers are set to , . As a result, the dynamics can be recast in the form of (1) with , and . The control objective is to drive the system from nominal initial states to terminal states . For CCM search and trajectory planning, the following constraints are enforced: s, /s.

We set the convergence rate to 1. By gridding the set of and evaluating the constraints (9) at those grid points, we found a CCM as a quadratic function of with the SPOT toolbox [] (to formulate the convex optimization problem) and the Mosek solver []. Additionally, the constants and in (28) such that for all were found to be and . We planned a nominal trajectory (, ) using OptimTraj [,] to drive the system from the initial states to the terminal states , while minimizing the task completion time () and energy consumption characterized by the cost function . For simulation, OPTI [] and Matlab fmincon solvers were used to solve the geodesic optimization problem (see Section 2). The initial states of the actual system were chosen to be , slightly deviated from the planned ones to better illustrate the tracking performance. We implemented our proposed DE-CCM, Ad-CCM from [] and a standard CCM that neglects the uncertainty entirely. For the Ad-CCM design, the adaptation gain was chosen to be diag to achieve a relatively good tracking result; further increasing it did not noticeably improve the tracking performance. The design procedure for Ad-CCM in [] requires a parametric structure for the uncertainty, which is given by

where is the known base function and is the unknown parameter vector to be estimated by the adaptive law proposed in []. The control signals under all three controllers were updated at 200 Hz.

It is easy to notice from Assumption 1 that and since the input matrix is constant, and the uncertainty is time-invariant. We can also verify that the disturbance is bounded by and has a Lipschitz constant . By gridding the space and making use of the control input bound, the system derivative can also be bounded by a constant . According to (21), if we want to achieve an EEB for all , the maximum value for the estimation sample time T is s. However, as mentioned in Remark 5, the way to compute the EEB is quite conservative. In the simulations we found that was more than enough to ensure the EEB and therefore used for implementing the DE-CCM controller.

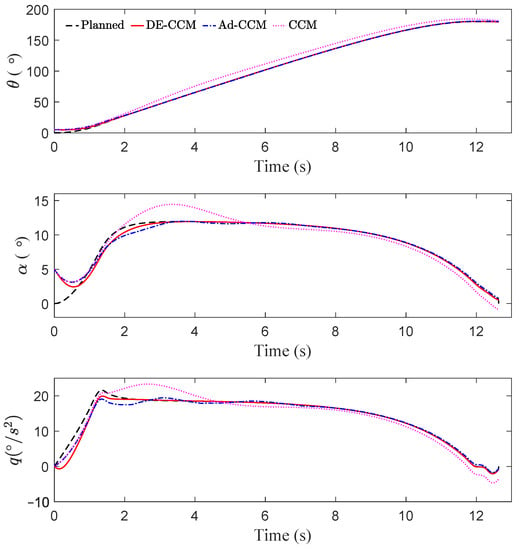

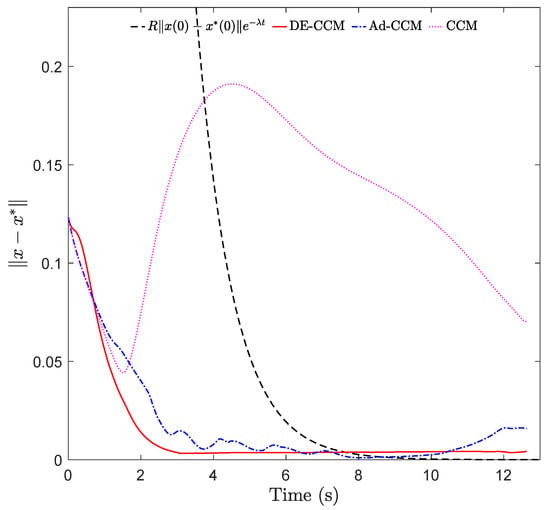

As shown in Figure 2 and Figure 3, because it ignores the uncertainties, CCM yielded a large tracking error between 2 and 6 s. The state trajectories under Ad-CCM had some oscillations, which lasted roughly up to 8 s. All three states under DE-CCM achieved good tracking performance without large deviations from the planned trajectories, unlike those under Ad-CCM and CCM. From Figure 3, we notice that the tracking error represented by under DE-CCM monotonically decreases and achieves the smallest steady-state error. The small non-zero tracking error at the end under DE-CCM, which is inconsistent with the performance guarantee in (28), is due to the limited control update frequency, whereas the performance guarantee in Theorem 2 holds under continuous update of the control signal, i.e., an infinitely high update frequency. Table 1 shows the mean squared error (MSE) for state trajectory tracking defined by

where N is the number of data points, under DE-CCM, Ad-CCM and CCM. We observe that DE-CCM outperforms CCM and Ad-CCM in terms of MSE by 54% and 2%, respectively.

Figure 2.

Trajectory tracking performance of different controllers.

Figure 3.

Tracking error under different controllers.

Table 1.

MSE for state trajectory tracking for the aircraft example.

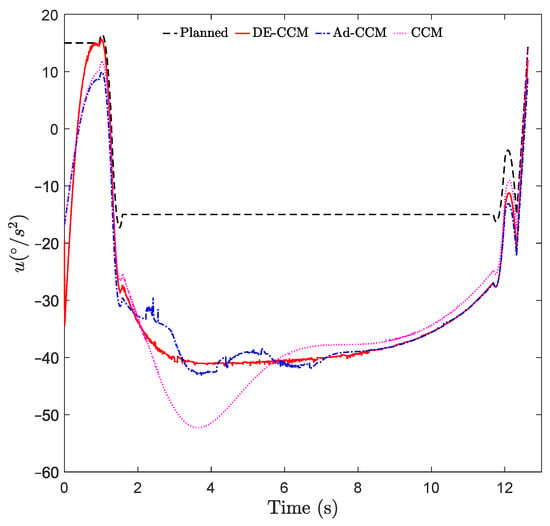

From Figure 4, we observe that the input of DE-CCM is smoother than that of Ad-CCM. The small oscillations between 2 s and 8 s in the DE-CCM input are due to the finite tolerance in the geodesic optimization. Decreasing the tolerance and the sample time will reduce the oscillations but require more iterations (and thus more time) to compute the control signal at each time step.

Figure 4.

Control inputs yielded by different controllers.

4.2. Planar Quadrotor

A planar quadrotor system is borrowed from []. The state vector is defined as , where and are the positions in the x and z directions, respectively, and are the slip (lateral) velocity and the velocity along the thrust axis in the body frame of the vehicle, and is the angle between the x direction of the body frame and the x direction of the inertial frame. The input vector contains the thrust force produced by each of the two propellers. The dynamics of the vehicle are given by

where m and J denote the mass and moment of inertia about the out-of-plane axis, and l is the distance between each of the propellers and the vehicle center, and denotes the unknown disturbances exerted on the propellers. The parameters were set as kg, , and m. The uncertainty was set to be . We imposed the following constraints: , .

When searching for CCM, we parameterized the CCM W by and and imposed the constraint . The convergence rate was chosen to be 0.8. More details about synthesizing the CCM can be found in []. For estimating the disturbance using (18) to (20), we set . It is easy to verify that , , , and (due to the fact that B is constant) satisfy (2). By gridding the space , the constant in (23) can be determined as . According to (21), if we want to achieve an EEB for all , then the estimation sampling time needs to satisfy s. However, as noted in Remark 5, the EEB computed according to (21) could be overly conservative. In the simulations, we found that the estimation sampling time of s was more than enough to ensure the desired EEB and therefore simply set s.
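The gridding step mentioned above can be sketched as follows. The code evaluates the Euclidean norm of a vector-valued function on a uniform grid over a box and returns the largest value found. Everything here is illustrative: `grid_points`, `max_norm_on_grid` and the toy right-hand side `toy_rhs` are hypothetical names, and the function being bounded would in practice be the one appearing in (23). Note that a grid maximum can only underestimate the true supremum, so a finer grid or a safety margin may be needed.

```python
import itertools
import math


def grid_points(lower, upper, n_per_axis):
    """Yield all points of a uniform grid over the box [lower, upper]."""
    axes = []
    for lo, hi in zip(lower, upper):
        step = (hi - lo) / (n_per_axis - 1)
        axes.append([lo + i * step for i in range(n_per_axis)])
    return itertools.product(*axes)


def max_norm_on_grid(func, lower, upper, n_per_axis=11):
    """Largest Euclidean norm of func(point) over the grid (n_per_axis >= 2)."""
    best = 0.0
    for point in grid_points(lower, upper, n_per_axis):
        value = math.sqrt(sum(c * c for c in func(point)))
        best = max(best, value)
    return best


def toy_rhs(z):
    """Toy pendulum-like vector field, used only to exercise the sketch."""
    x1, x2 = z
    return (x2, -math.sin(x1))
```

A call such as `max_norm_on_grid(toy_rhs, [-1.0, -2.0], [1.0, 2.0], 5)` returns the largest norm over the 25 grid points; the cost grows exponentially with the number of gridded dimensions, which is worth keeping in mind for higher-dimensional state spaces.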

We consider the task of navigation from to while avoiding three obstacles depicted by black circles in Figure 5. A nominal trajectory (, ) was generated using OptimTraj [] to minimize the cost , where is the arrival time. The OPTI [] and MATLAB fmincon solvers were used to solve the geodesic optimization problem (see Section 2). The actual start point was set to be , which was different from the planned start point, to reveal the trajectory convergence pattern.

Figure 5.

Trajectory tracking performance of different controllers.

For comparison, we also designed a standard CCM controller by completely ignoring the uncertainty and two adaptive CCM (Ad-CCM) controllers following the approach in []. To apply the approach in [] which can only handle parametric uncertainties, we parameterized the uncertainty as

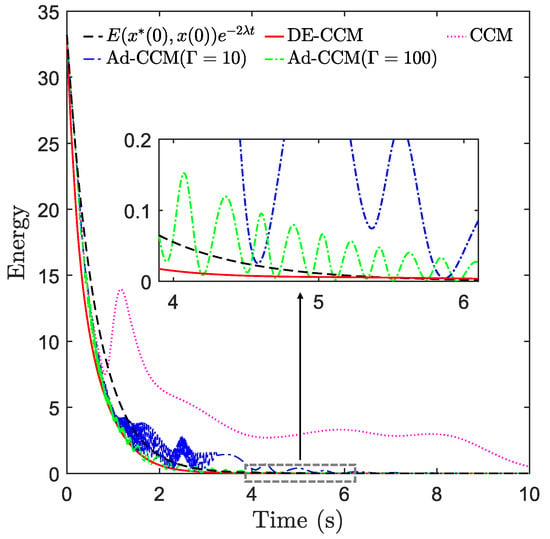

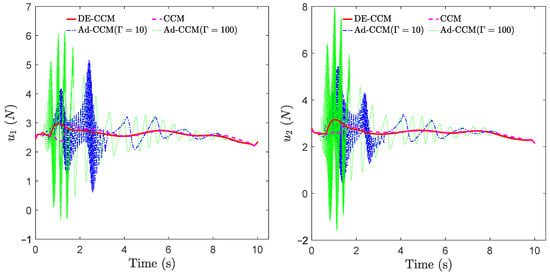

where is the basis function that is assumed to be known, and is the vector of unknown parameters. With the parametric structure (33), we designed two adaptive CCM controllers using and , respectively, where denotes the adaptive gain. Figure 5 shows the planned and actual trajectories under the CCM, Ad-CCM, and our proposed controller based on the RRE condition and disturbance estimation, denoted as DE-CCM, while Figure 6 and Figure 7 show the Riemannian energy and the control inputs, respectively. One can see that the actual trajectories yielded by the CCM controller deviated substantially from the planned ones and collided with one obstacle. On the other hand, the actual trajectories yielded by the DE-CCM controller converged to the desired trajectory as expected and almost overlapped with it afterward. In fact, the slight deviations of actual trajectories from the desired ones under the DE-CCM controller were due to the finite step size associated with the ODE solver used for the simulations (see Remark 6). Table 2 shows the MSE for state trajectory tracking defined in (32). We observe that DE-CCM outperforms CCM and Ad-CCM in terms of MSE by 46% and 14%, respectively.

Figure 6.

Riemannian energy under different controllers.

Figure 7.

Control inputs yielded by different controllers.

Table 2.

MSE for state trajectory tracking for the planar quadrotor example. For Ad-CCM, only the result for , which corresponds to better tracking performance compared to , is included.

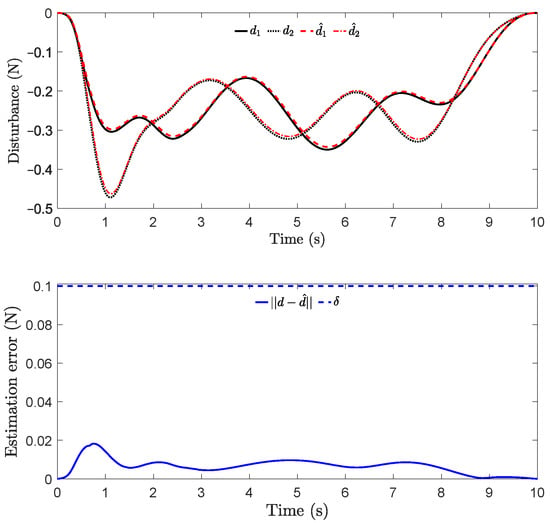

From Figure 6, one can see that the magnitude of under the DE-CCM controller decreased exponentially, and the magnitude was bounded by the curve from above except at the very end when the energy is close to zero. In comparison, Ad-CCM with yielded similar tracking performance to DE-CCM, while the tracking performance of Ad-CCM with was relatively worse and characterized by larger oscillations. Additionally, from Figure 7, one can see that the control inputs generated by both of the Ad-CCM controllers have high-frequency oscillations before 3 s, which is undesirable for practical deployment. Finally, Figure 8 shows the actual and estimated disturbances as well as the estimation error. One can see that the estimated disturbance is quite close to the actual one for both channels, and the EEB of 0.1 is respected throughout the simulation.
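Both observations (the exponentially decaying energy and the respected EEB) can be checked on logged simulation data with a short script. The sketch below assumes that the bounding curve has the standard form E(0)·exp(−2λt), where λ is the convergence rate (0.8 in this example); this form is an assumption, since the exact expression of the curve is not reproduced here. The function names and the tolerance argument `tol` are hypothetical; the tolerance is included because small violations can occur when the energy is close to zero.

```python
import math


def exponential_envelope(e0, rate, t):
    """Assumed energy bound E(0) * exp(-2 * rate * t)."""
    return e0 * math.exp(-2.0 * rate * t)


def respects_envelope(times, energies, rate, tol=0.0):
    """True if every logged energy sample lies below the envelope (plus tol)."""
    t0, e0 = times[0], energies[0]
    return all(
        e <= exponential_envelope(e0, rate, t - t0) + tol
        for t, e in zip(times, energies)
    )


def within_eeb(actual, estimated, bound):
    """True if |d - d_hat| <= bound for every logged sample of one channel."""
    return all(abs(d - d_hat) <= bound for d, d_hat in zip(actual, estimated))
```

In use, `respects_envelope` would be called with the logged time stamps and Riemannian energy values, and `within_eeb` once per disturbance channel with `bound=0.1`.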

Figure 8.

Actual and estimated disturbances (top) and the estimation error (bottom). Note that and () represent the ith element of d (actual disturbance) and (estimated disturbance), respectively. The blue dashed line in the bottom plot denotes the EEB used in computing the control inputs.

5. Conclusions

This paper presents a robust trajectory tracking controller with exponential convergence for uncertain nonlinear systems based on control contraction metrics (CCM) and disturbance estimation. The controller uses a disturbance estimator to estimate the pointwise value of the uncertainty with a pre-computable estimation error bound (EEB). The estimated disturbance and the EEB are then incorporated into a robust Riemannian energy condition, which guarantees exponential convergence of actual trajectories to desired ones. The efficacy of the proposed controller is validated in simulations. In particular, the proposed controller outperforms an existing adaptive CCM controller in terms of tracking performance by 2% for the aircraft example and 14% for the planar quadrotor example, while not needing to know the basis functions to parameterize the uncertainties that are needed by the adaptive CCM controller.

This paper considers only matched uncertainties, which are added to the system through the same channels as control inputs. In the future, we would like to address unmatched uncertainties that widely exist in practical systems. Additionally, we would like to experimentally validate the proposed controller on real hardware.

Author Contributions

The individual contributions of the authors are as follows. Conceptualization, methodology and formal analysis, P.Z.; software and investigation, Z.G. and P.Z.; validation and visualization, Z.G.; supervision, N.H.; writing—original draft preparation, P.Z.; writing—review and editing, Z.G. and N.H.; project administration, P.Z.; funding acquisition, N.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by AFOSR, in part by NASA, and in part by NSF under the RI grant #2133656 and NRI grant #1830639.

Institutional Review Board Statement

Not available.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not available.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Proof of Lemma 4:

Note that (and thus due to (20)) for any according to (19). Further considering the bound on in (4), we have

We next derive the bound on for . For any (), we have

Since is continuous, the preceding equation implies

where the first and last equalities are due to the estimation law (19).

Since is continuous, is also continuous given Assumption 1. Furthermore, considering that is always positive, we can apply the first mean value theorem for definite integrals in an element-wise manner (note that this theorem only holds for scalar-valued functions) to (A3), which leads to

for some with and , where is the j-th element of , and

The estimation law (19) indicates that for any t in , we have The preceding equality and (A4) imply that for any t in with , there exist () such that

Note that

where . Similarly,

where , and the last inequality is due to the fact and (4). Therefore, for any (), we have

for some , where the equality is due to (A5), and the last inequality is due to (A6) and (A7). The dynamics in (1) indicate that

where is defined in (23). As a result, the inequality (A9) implies that

where the last inequality is due to the fact that

Therefore, we have

where the second inequality is due to Assumption 1 and the last inequality is due to (A10) and (A11). Finally, plugging (A12) into (A8) leads to

for any . From (A2) and (A14) and the relation between and in (20), we arrive at (21). Considering Assumption 1 and the assumption that and are compact, the constants involved in the definition of in (22) are all finite. As a result, we have , which further indicates that for any . The proof is complete. □

References

- Manchester, I.R.; Slotine, J.J.E. Control contraction metrics: Convex and intrinsic criteria for nonlinear feedback design. IEEE Trans. Autom. Control 2017, 62, 3046–3053.

- Lohmiller, W.; Slotine, J.J.E. On contraction analysis for non-linear systems. Automatica 1998, 34, 683–696.

- Manchester, I.R.; Tang, J.Z.; Slotine, J.J.E. Unifying robot trajectory tracking with control contraction metrics. In Robotics Research; Springer: Cham, Switzerland, 2018; pp. 403–418.

- Tedrake, R.; Manchester, I.R.; Tobenkin, M.; Roberts, J.W. LQR-trees: Feedback motion planning via sums-of-squares verification. Int. J. Robot. Res. 2010, 29, 1038–1052.

- Doyle, J.; Glover, K.; Khargonekar, P.; Francis, B. State-space solutions to standard H2 and H∞ control problems. IEEE Trans. Autom. Control 1989, 34, 831–847.

- Packard, A.; Doyle, J. The complex structured singular value. Automatica 1993, 29, 71–109.

- Mayne, D.Q.; Seron, M.M.; Raković, S. Robust model predictive control of constrained linear systems with bounded disturbances. Automatica 2005, 41, 219–224.

- Mayne, D.Q. Model predictive control: Recent developments and future promise. Automatica 2014, 50, 2967–2986.

- Han, J. From PID to active disturbance rejection control. IEEE Trans. Ind. Electron. 2009, 56, 900–906.

- Chen, W.H.; Yang, J.; Guo, L.; Li, S. Disturbance-observer-based control and related methods—An overview. IEEE Trans. Ind. Electron. 2015, 63, 1083–1095.

- Li, S.; Yang, J.; Chen, W.; Chen, X. Generalized extended state observer based control for systems with mismatched uncertainties. IEEE Trans. Ind. Electron. 2012, 59, 4792–4802.

- Ioannou, P.A.; Sun, J. Robust Adaptive Control; Dover Publications, Inc.: Mineola, NY, USA, 2012.

- Hovakimyan, N.; Cao, C. L1 Adaptive Control Theory: Guaranteed Robustness with Fast Adaptation; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2010.

- Lopez, B.T.; Slotine, J.J.E. Adaptive nonlinear control with contraction metrics. IEEE Control Syst. Lett. 2020, 5, 205–210.

- Lakshmanan, A.; Gahlawat, A.; Hovakimyan, N. Safe feedback motion planning: A contraction theory and L1-adaptive control based approach. In Proceedings of the 59th IEEE Conference on Decision and Control (CDC), Jeju Island, Korea, 14–18 December 2020; pp. 1578–1583.

- Singh, S.; Landry, B.; Majumdar, A.; Slotine, J.J.; Pavone, M. Robust feedback motion planning via contraction theory. Int. J. Robot. Res. 2019. under review.

- Zhao, P.; Lakshmanan, A.; Ackerman, K.; Gahlawat, A.; Pavone, M.; Hovakimyan, N. Tube-certified trajectory tracking for nonlinear systems with robust control contraction metrics. IEEE Robot. Autom. Lett. 2022, 7, 5528–5535.

- Manchester, I.R.; Slotine, J.J.E. Robust control contraction metrics: A convex approach to nonlinear state-feedback H∞ control. IEEE Control Syst. Lett. 2018, 2, 333–338.

- Tsukamoto, H.; Chung, S.J. Robust controller design for stochastic nonlinear systems via convex optimization. IEEE Trans. Autom. Control 2020, 66, 4731–4746.

- Zhao, P.; Guo, Z.; Cheng, Y.; Gahlawat, A.; Kang, H.; Hovakimyan, N. Guaranteed nonlinear tracking control in the presence of DNN-learned dynamics with contraction metrics and disturbance estimation. IEEE Conf. Decis. Control. 2022. under review.

- Leung, K.; Manchester, I.R. Nonlinear stabilization via control contraction metrics: A pseudospectral approach for computing geodesics. In Proceedings of the American Control Conference, Seattle, WA, USA, 24–26 May 2017; pp. 1284–1289.

- Do Carmo, M.P.; Flaherty Francis, J. Riemannian Geometry; Springer: Boston, MA, USA, 1992.

- Freeman, R.; Kokotovic, P.V. Robust Nonlinear Control Design: State-Space and Lyapunov Techniques; Springer Science & Business Media: Berlin, Germany, 2008.

- Zhao, P.; Mao, Y.; Tao, C.; Hovakimyan, N.; Wang, X. Adaptive robust quadratic programs using control Lyapunov and barrier functions. In Proceedings of the 59th IEEE Conference on Decision and Control, Jeju Island, Korea, 14–18 December 2020; pp. 3353–3358.

- Cao, C.; Hovakimyan, N. L1 adaptive output feedback controller for non strictly positive real reference systems with applications to aerospace examples. In Proceedings of the AIAA Guidance, Navigation and Control Conference and Exhibit, Honolulu, HI, USA, 18–21 August 2008; p. 7288.

- Lopez, B.T.; Slotine, J.J.E.; How, J.P. Robust Adaptive Control Barrier Functions: An Adaptive and Data-Driven Approach to Safety. IEEE Control Syst. Lett. 2021, 5, 1031–1036.

- Megretski, A. Systems Polynomial Optimization Tools (SPOT). 2010. Available online: https://github.com/spot-toolbox/spotless (accessed on 1 September 2021).

- Andersen, E.D.; Andersen, K.D. The MOSEK interior point optimizer for linear programming: An implementation of the homogeneous algorithm. In High Performance Optimization; Springer: New York, NY, USA, 2000; pp. 197–232.

- Kelly, M. An introduction to trajectory optimization: How to do your own direct collocation. SIAM Rev. 2017, 59, 849–904.

- Kelly, M.P. OptimTraj User’s Guide, Version 1.5. 2016. Available online: https://github.com/MatthewPeterKelly/OptimTraj (accessed on 1 September 2021).

- Currie, J.; Wilson, D.I. OPTI: Lowering the barrier between open source optimizers and the industrial MATLAB user. In Proceedings of the Foundations of Computer-Aided Process Operations, Savannah, GA, USA, 8–11 January 2012.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).