Abstract

In this work, we apply Convolutional Neural Networks (CNNs) to detect gravitational wave (GW) signals from compact binary coalescences, using single-interferometer data from real LIGO detectors. Here, we adopt a resampling white-box approach to advance towards a statistical understanding of the uncertainties intrinsic to CNNs in GW data analysis. We use Morlet wavelets to convert strain time series into time-frequency images. Moreover, we only work with data containing non-Gaussian noise and hardware injections, which removes the freedom to set signal-to-noise ratio (SNR) values in GW templates by hand and thus reproduces more realistic experimental conditions. After hyperparameter adjustments, we found that resampling through repeated k-fold cross-validation smooths the stochasticity of mini-batch stochastic gradient descent present in accuracy perturbations by a factor of . CNNs are quite precise in detecting noise, for H1 data and for L1 data, but not sensitive enough to recall GW signals, for H1 data and for L1 data, although recall values depend on the expected SNR. Our predictions are transparently understood by exploring the distribution of probabilistic scores output by the softmax layer, and they are strengthened by a receiver operating characteristic analysis and a paired-sample t-test comparing against a random classifier.

Keywords:

gravitational waves; Deep Learning; convolutional neural networks; binary black holes; LIGO detectors; probabilistic binary classification; resampling regime; white-box testing; uncertainty

PACS:

04.30.-w; 07.05.Kf; 07.05.Mh

1. Introduction

Beginning with the first direct detection of a gravitational wave (GW) by the LIGO and Virgo collaborations [1], the observation of GW events emitted by compact binary coalescences (CBCs) has made GW astronomy, to some extent, a routine practice. Eleven of these GW events were observed during the O1 and O2 scientific runs and are collected in the GWTC-1 catalogue [2] of CBCs; more recently, 39 events have been reported in the new GWTC-2 catalogue [3], corresponding to detections during the first half of the third scientific run, namely O3a. This last run was recorded with the network formed by the LIGO Livingston (L1) and Hanford (H1) twin observatories and the Virgo (V1) observatory; recently, the KAGRA observatory has also joined the network of GW detectors [4].

The sensitivity of GW detectors has increased remarkably in recent years, and in this improvement process GW data analysis has played a crucial role in quantifying and minimizing, as much as possible, the effects of non-Gaussian noise, mainly short-duration transients called “glitches” [5]. In several cases, such as blip transients [6], there is still no full explanation of their physical causes. Monitoring the signal-to-noise ratio (SNR) of all detections is an essential procedure here; indeed, it lies at the heart of the standard algorithm used for the detection and characterization of GWs in LIGO and Virgo, namely the Matched Filter (MF) [7,8,9]. This technique, which is the GW detection stage of current LIGO CBC search pipelines, assumes that the signals to be detected are embedded in additive Gaussian noise, and it consists of correlating the (whitened) detector data, one by one, with each GW template of a template bank. The matches that maximize the SNR [10] are then selected. Standard pipelines that include MF and are used in LIGO and Virgo analyses are PyCBC [11,12], GstLAL [13], and MBTA [14].
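The matched-filter idea described above can be illustrated with a minimal sketch. It assumes data that are already whitened, so that the noise is white, Gaussian, and of unit variance; under that assumption the noise-weighted inner product reduces to a plain dot product. The sine-Gaussian "templates", the toy sampling rate, and the function names are hypothetical illustrations, not LIGO waveforms or pipeline code.

```python
import numpy as np

def matched_filter_snr(data, template):
    """SNR time series of one template against whitened, unit-variance data.

    Assumes white Gaussian noise with unit variance, so the optimal filter is
    the template itself and the SNR is the correlation divided by its norm.
    """
    norm = np.sqrt(np.dot(template, template))
    # 'valid' mode slides the template over every full-overlap offset
    return np.correlate(data, template, mode="valid") / norm

def best_template(data, bank):
    """Return (index, peak |SNR|) of the bank template with the largest SNR."""
    peaks = [np.max(np.abs(matched_filter_snr(data, h))) for h in bank]
    best = int(np.argmax(peaks))
    return best, peaks[best]

rng = np.random.default_rng(0)
t = np.arange(512) / 512.0
# Toy bank of sine-Gaussian bursts at 30, 60, and 90 Hz (hypothetical templates)
bank = [np.exp(-((t - 0.5) / 0.05) ** 2) * np.sin(2 * np.pi * f0 * t)
        for f0 in (30.0, 60.0, 90.0)]
data = rng.standard_normal(4096)
data[1000:1512] += 3.0 * bank[1]  # hide the 60 Hz template in the noise
idx, snr = best_template(data, bank)
print(idx, round(float(snr), 1))
```

The template whose correlation peak is largest is selected, mirroring the "maximize the SNR" rule; real pipelines additionally weight the inner product by the detector PSD and maximize over phase and time.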

MF has proven to be a powerful tool, playing a crucial role in all confirmed detections of GWs emitted by CBCs. However, there are significant reasons for exploring alternative detection strategies. To begin with, the noise output by interferometric detectors is non-Gaussian, conflicting with the Gaussian-noise assumption of MF. In general, and also in LIGO search pipelines, supplementary tools such as whitening techniques [15] are used to address this issue. However, because glitches remain, statistical consistency tests [16] and coincidence procedures such as cross-correlation [17,18] are applied across a network of detectors, which leaves open the problem of how to deal systematically with single-interferometer data. In sum, the Gaussian-noise assumption of MF raises the question of how to detect glitches in single-interferometer data.

Moreover, one would like to perform more general GW searches. The natural next step is to include precessing spins [19], orbital eccentricity [20], and neutron star tidal deformability [21], among other aspects. The more general a GW search, the wider the parameter space, until MF becomes computationally prohibitive.

Even if we are not interested in replacing MF, it is still pertinent to have alternative algorithms for independent verification and/or for increasing the confidence level of GW detections.

In this context, Machine Learning (ML) [22] and its successor, Deep Learning (DL) [23], emerge as promising alternatives or, at least, complementary tools to MF. These techniques make no assumptions about the background noise, which is clearly advantageous when working with single-interferometer data: non-Gaussian noise can be identified without needing a network of detectors. In addition, they have proven remarkably useful for analyzing enormous amounts of data through sequential or online learning processes, which could be a significant improvement for more general real-time GW searches. Finally, when there are no deterministic templates to predict GW signals, such as those emitted by core-collapse supernovae (CCSNe), or to anticipate noise artifacts such as non-Gaussian glitches, unsupervised ML and DL algorithms (which do not need prior labeled training samples) could be interesting for future exploration.

The implementation of ML algorithms in GW data analysis dates back only a few years. Biswas et al. [24] contributed a pioneering work in which Artificial Neural Networks (ANNs), Support Vector Machines, and Random Forest algorithms were used to detect glitches in data from the H1 and L1 detectors recorded during the S4 and S6 runs, obtaining performance results that were competitive at the time. Later works, addressing several problems, reported better results. Examples include ANNs for the detection of GWs associated with short gamma-ray bursts [25], Dictionary Learning for denoising [26], Difference Boosting Neural Networks and Hierarchical Clustering for the detection of glitches [27], ML and citizen science for glitch classification [28], and background reduction for CCSN searches with single-interferometer data [29], among others.

Applications of DL, in particular Convolutional Neural Network (CNN) algorithms, are even more recent in GW data analysis. Gabbard et al. [30] and George and Huerta [31] provided the first works, in which CNNs were implemented to detect simulated GW signals from binary black holes (BBHs) embedded in Gaussian noise. They claimed that the performance of CNNs was, respectively, similar to and much better than that of MF. Later, George and Huerta extended their work by embedding simulated GWs and analytical glitches in real non-Gaussian noise data from LIGO detectors, with similar results [32]. Thereafter, CNN algorithms have been applied to several instrumental and physical problems, with further improvements. Examples include the detection of glitches [33], trigger generation for locating the coalescence time of GWs emitted by BBHs [34], the detection of GWs from BBHs [35] and binary neutron star (BNS) systems [36], the detection of GWs emitted by CCSNe using both phenomenological [37] and numerical [38] waveforms, and the detection of continuous GWs from isolated neutron stars [39], among others.

From a practical point of view, previous works applying CNN algorithms to GW detection and characterization reached competitive performance according to standard metrics, such as accuracy, loss functions, and false alarm rates, showing the feasibility of DL in GW data analysis in the first place. However, from a formal statistical point of view, we warn that, beyond applications alone, deeper explorations are needed that seriously take into account the inevitable uncertainty involved in DL algorithms before they can be presented as real alternatives to the standard pipelines of LIGO and Virgo.

For this research, our general goal is to draw on CNN architectures to perform a standard GW detection. In particular, starting from a training set of single-interferometer strain data that are transformed into time-frequency images with a Morlet wavelet transform, the aim is to distinguish samples that contain only non-Gaussian noise from samples that contain a GW embedded in non-Gaussian noise; in ML language, this is simply a binary classification. Moreover, as a novel contribution, we take two ingredients into consideration to advance towards a statistically informed understanding of the uncertainties involved, namely resampling and a white-box approach. Resampling consists of repeated experiments for training and testing the CNNs, and the white-box approach involves a clear mathematical understanding of how these CNNs work internally. See Section 2.4 for more details.

We chose Morlet as the mother wavelet because we want to capture all the time-frequency information of each sample at once, rather than performing multiresolution analyses to detect closely spaced features, given that our CNN algorithms will precisely be in charge of feature extraction for our image classification. Consequently, this setup removes, from the outset, the need to work with compactly supported wavelets for multiresolution analysis, such as Haar, Symlet, and Daubechies, among others [40]. Our Morlet wavelet is also normalized to conserve energy, and it is symmetric.

In this work, we are not interested in reaching higher performance metric values than those reported in previous works, nor in testing new CNN architectures or using the latest real LIGO data and/or simulated templates, but rather in facing the question of how to deal cleverly with the uncertainties of CNNs, which is an inescapable requirement if we claim that DL techniques are real alternatives to current pipelines. Moreover, as a secondary goal, we want to show that CNN algorithms for GW detection, even when considering repeated experiments, can easily be run on a single local CPU and reach good performance results. The key motivation for this is to make reproducibility easier for the scientific community. We have also released all of the code used in this work [41].

With regard to data, we decided to use recordings from the S6 LIGO run, separately from the H1 and L1 detectors, considering only CBC GW signals generated by hardware injections. This choice is ultimately motivated by the fact that, to reach stronger conclusions, CNN algorithms should be tested under conditions as adverse as possible. Working solely with hardware injections eliminates the freedom to set SNR values by hand and, therefore, removes a choice that could influence the performance of CNN algorithms. We draw on S6 data because they contain a greater number of hardware injections than more recent data. See Section 2.2 for more details.

2. Methods and Materials

2.1. Problem Statement

As the starting point of our problem, we have the $j$th slice of raw strain data recorded at one of the LIGO detectors. In mathematical notation, this slice of data is expressed as a column vector of time series in $N$ dimensions,

$$\mathbf{s}^{(j)} = \left(s^{(j)}(t_0),\, s^{(j)}(t_1),\, \dots,\, s^{(j)}(t_{N-1})\right)^{\mathsf{T}}, \tag{1}$$

where the sampling times are $t_n = n\,\Delta t$ with $n = 0, 1, \dots, N-1$, the sampling frequency is $f_s = 1/\Delta t$, and the time length of the slice is $T = N\,\Delta t$ (in seconds), with $N$ data points. The next step is to model this slice of data theoretically; therefore, we introduce the following expression:

$$\mathbf{s}^{(j)} = \mathbf{n}^{(j)} + \mathbf{h}^{(j)}, \tag{2}$$

where $\mathbf{n}^{(j)}$ is the non-Gaussian noise from the detector and $\mathbf{h}^{(j)}$ is the observed strain, which is a function of the antenna response of the interferometer when a GW, with unknown duration, arrival time, and waveform, is detected. For this research, the problem we address is to decide whether a segment of strain data contains only noise or contains noise plus an unknown GW signal. In practice, then, we implement CNN algorithms that perform a binary classification: they take strain samples of a fixed time length as input and decide, for each sample, whether it does not contain a GW signal (i.e., the sample belongs to class 1) or contains a GW signal (i.e., the sample belongs to class 2).
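As a toy illustration of the data model and the two classes, the sketch below builds one sample of noise alone (class 1) and one of noise plus a signal (class 2). White Gaussian noise stands in for the non-Gaussian detector noise and a linear chirp stands in for a CBC waveform; both substitutions, the 4096 Hz rate, and the function name `toy_sample` are assumptions made only for illustration.

```python
import numpy as np

FS = 4096  # sampling frequency in Hz (assumed for this toy example)

def toy_sample(duration, with_signal, rng):
    """Return (strain, label): label 1 = noise alone, label 2 = noise plus signal.

    White Gaussian noise is a stand-in for real (non-Gaussian) detector noise,
    and the linear chirp is a stand-in for a CBC waveform; both are assumptions.
    """
    t = np.arange(int(duration * FS)) / FS
    noise = rng.standard_normal(t.size)
    if not with_signal:
        return noise, 1
    # chirp whose instantaneous frequency sweeps linearly from 50 Hz to 300 Hz
    f0, f1 = 50.0, 300.0
    phase = 2 * np.pi * (f0 * t + 0.5 * (f1 - f0) / duration * t**2)
    return noise + 0.5 * np.sin(phase), 2

rng = np.random.default_rng(1)
x1, y1 = toy_sample(0.25, False, rng)
x2, y2 = toy_sample(0.25, True, rng)
print(x1.shape, y1, y2)  # (1024,) 1 2
```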

2.2. Dataset Description

For this study, we use real data provided by the LIGO detectors, which are freely available at the LIGO-Virgo Gravitational Wave Open Science Center (GWOSC), https://www.gw-openscience.org (accessed on 1 April 2019) [42]. For the reasons presented at the end of Section 1, we decided to use data from the sixth science run (S6), recorded from 7 July 2009 to 20 October 2010. During this run, the detectors achieved a sensitivity given by a power spectral density around , with uncertainties up to in amplitude [43]. Each downloaded strain data slice has a time length of 4096 s and a sampling frequency of 4096 Hz.

S6 contains hardware injections that were already added to the noise strain data. These injections are generated by actuating the test masses (mirrors), thereby changing the physical length of the detector arms. This procedure simulates the effects of GWs and is used for experimental tests and calibration of the detectors [44]. For this research, we work solely with hardware injections of GWs emitted by CBCs: 724 injections in data from the H1 detector and 656 injections in data from the L1 detector. For each injection, we know the coalescence (or merger) time in Global Positioning System (GPS) time, the masses of the two components in solar mass units ($M_\odot$), the distance D to the source in Mpc, and the expected and recovered signal-to-noise ratios. All of this information is provided by LIGO on the aforementioned website.

Because S6 contains a much greater number of hardware injections of CBC GW signals than later public data, it is the best option for our study. Besides, since the sensitivity of S6 is lower than that of later public data, good results with S6 data would provide convincing evidence for GW detection using CNNs, and we could even expect our algorithms to perform better on later public data.

Keep in mind that our approach allows us to advance towards more realistic experimental conditions for evaluating the CNNs. By construction, we have no control over the distribution of recorded real signals and, therefore, no ability to influence the performance results through it. The SNR values of recorded GW events and their frequency of occurrence are given (non-adjustable) facts. Throughout a LIGO run, in both the hardware-injection set and the set of real observed signals, each event occurred once in real time and never again. Therefore, if our CNN algorithms are able to deal with this adverse evaluation constraint from the start, we are advancing towards the more general purpose of analyzing arbitrarily distributed data without drawing on ad hoc choices that could generate excessively optimistic performance results.

The above advantage does not lie in the set of parameters that characterize the hardware injections, that is, in the data populations. In general, hardware injections have been used to perform end-to-end validation checks [45], and they are experimentally expensive to repeat as often as software injections in order to generate diverse distributions of GW events. Rather, the point of our approach lies in the fact that we cannot vary these populations by hand; that is, the advantage lies in the constraint on how this population is generated, not in the population itself. This advantage cannot be achieved with software injections, which remain adjustable, so the evaluation results can be influenced. For instance, with random software injections we could vary the injection rate to influence the trade-off between the variance and bias of stochastic predictions and, therefore, improve the performance of a CNN algorithm by artificially balancing the dataset with respect to target values and/or the costs of different classification errors.

It should be stressed that the problem of bias and fairness (with regard to ad hoc choices in datasets and algorithms) is an open and highly debated issue in the ML community [46], with deep ethical implications when ML/DL is applied in social contexts. Of course, GW data analysis has no implications of this kind, but this does not remove the fact that, at a purely statistical level, bias and fairness issues are still present. If we are committed to a deep multidisciplinary understanding of ML, we need to face this challenge by drawing on evaluations that are as transparent as possible.

In any case, even if the above advantage is not considered, our approach is still scientifically relevant, because it lays the groundwork for future calibration procedures with CNN detection algorithms that take hardware injections as input.

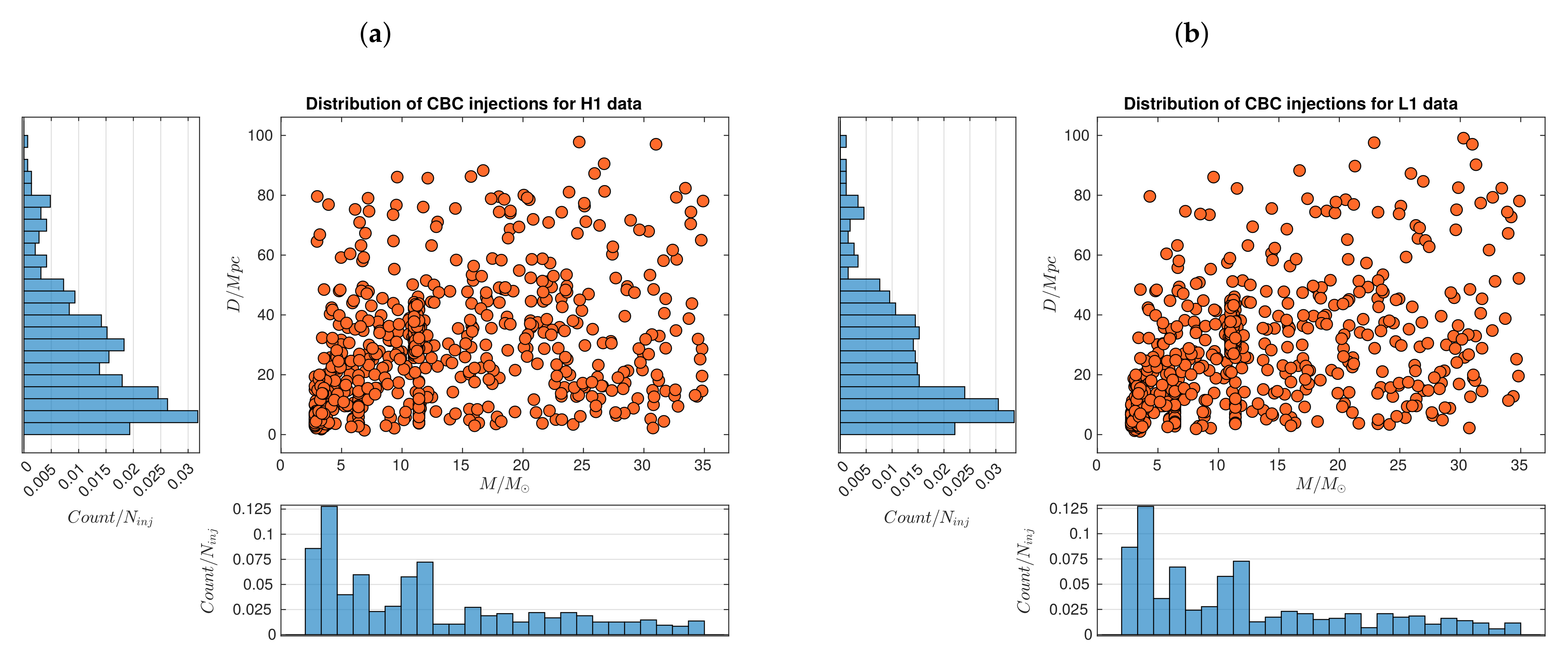

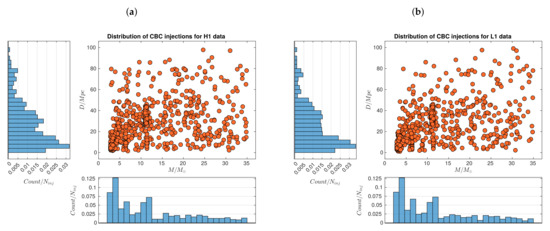

Figure 1 shows the distributions of injections present in S6 data from the H1 and L1 detectors, detailing the total mass of each source and the distance D to it. These injections simulate stellar BBHs located at the scale of our galaxy supercluster, covering distances from 1 Mpc to 100 Mpc and masses from to . In both panels, there is a high occurrence of events with masses from to at distances from approx. 1 Mpc to 50 Mpc. Within each of these regions, there is a subregion where the highest concentration of events appears, namely for masses from to and distances from approx. 1 Mpc to 26 Mpc, corresponding to the scale of nearby galaxy clusters. In the region and Mpc, we observe a clear decrease in the frequency of occurrence of BBHs, which is even more pronounced for the farthest events, at distances Mpc, for all masses. In any case, from the marginal histograms of distances alone, the clearest trend in the data is that GW events become less frequent as D increases beyond 4 Mpc.

Figure 1.

Distributions of hardware injections simulating GWs emitted by CBCs present in S6 data from the H1 (a) and L1 (b) LIGO detectors. The total masses M in solar mass units and the distances D from the sources in megaparsecs are shown. Depending on M and D, there are several regions in which GW events are more or less frequent. In any case, the clearest trend in the data is that GW events become less frequent as the distance D increases beyond 4 Mpc. Data downloaded from the LIGO-Virgo GWOSC: https://www.gw-openscience.org (accessed on 1 April 2019).

We downloaded 722 slices of strain data from the H1 detector and 652 slices from the L1 detector, to be pre-processed as explained in Section 2.3. We only consider hardware injections whose parameters are known, because we will characterize the detected GW events according to their expected SNR values, as shown in the results of Section 3.3.

2.3. Data Pre-Processing

With the LIGO raw strain data segments at hand, the next methodological step is data pre-processing. This has three stages, namely data cleaning, the construction of strain samples, and the application of the wavelet transform.

2.3.1. Data Cleaning

Data cleaning, or data conditioning, is a standard stage for reducing noise and flattening the power spectral density (PSD). It consists of three steps. Firstly, for each slice of raw strain data introduced in Equation (1), a segment around the coalescence time is extracted via a Blackman window of time length 128 s. If a slice does not contain an injection, it is not possible to extract a 128 s segment, and the slice does not go beyond this step. Moreover, among all segments containing injections, we discard those with NaN (not a number) entries. This rejection is justified because the NaN entries span time ranges from 2 s to 50 s, which are longer than the time resolutions that we use for building the samples input to the CNNs (see Section 2.3.2), namely from s to s (see Section 3.2). Here, feature engineering techniques such as imputation or interpolation are not suitable. At this point, the number of data segments decreases from 722 to 501 for the H1 detector, and from 652 to 402 for the L1 detector.
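The first cleaning step can be sketched as follows, assuming the 128 s window is centred on the coalescence time and that each slice is a NumPy array starting at a known GPS time. The function `extract_segment` and the toy sampling rate are hypothetical; `np.blackman` is NumPy's Blackman window.

```python
import numpy as np

def extract_segment(strain, gps_start, t_coal, fs, length=128.0):
    """Return a Blackman-tapered `length`-second segment centred on t_coal, or None.

    None is returned when the segment does not fit inside the slice or when it
    contains NaN entries, mirroring the rejection rules described above.
    """
    n_seg = int(round(length * fs))
    start = int(round((t_coal - gps_start) * fs)) - n_seg // 2
    if start < 0 or start + n_seg > strain.size:
        return None  # coalescence time too close to the slice border
    segment = strain[start:start + n_seg]
    if np.isnan(segment).any():
        return None  # NaN gaps are too long to impute or interpolate
    return segment * np.blackman(n_seg)

fs = 256  # toy sampling rate, kept low so the example is small
slice_ = np.random.default_rng(2).standard_normal(256 * fs)  # a 256 s toy slice
seg = extract_segment(slice_, gps_start=0.0, t_coal=100.0, fs=fs)
print(seg.shape)  # (32768,)
```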

Secondly, whitening is applied to each $j$th of these segments as

$$s_w^{(j)}[n] = \frac{1}{N}\sum_{\ell=0}^{N-1}\frac{\tilde{s}^{(j)}[\ell]}{\sqrt{S^{(j)}[\ell]}}\,e^{i2\pi \ell n/N}, \quad n = 0, 1, \dots, N-1, \tag{3}$$

where $\tilde{s}^{(j)}[\ell]$ is the $N$-point Discrete Fourier Transform (DFT) of the 128 s segment of raw strain data $s^{(j)}[n]$, $S^{(j)}[\ell]$ is the $N$-point two-sided PSD of the raw data, $N$ is the number of data points in the 128 s raw strain segment, and $i$ is the imaginary unit. In theory, the PSD is defined as the Fourier transform of the autocorrelation of the raw data. In practice, we implemented Welch's estimate [47], computing the PSD between 0 Hz and 2048 Hz at a resolution of Hz with Hann-windowed epochs of time length 128 s and an overlap of 64 s. Ultimately, the goal of the whitening procedure is to bring the strain data close to a Gaussian stochastic process defined by the autocorrelation

$$R_w[m] = \left\langle s_w^{(j)}[n]\, s_w^{(j)}[n+m] \right\rangle = \sigma^2\,\delta[m], \tag{4}$$

with $\sigma^2$ denoting the variance and $\delta[m]$ the discrete-time unit impulse function, defined by $\delta[m] = 1$ for $m = 0$ and $\delta[m] = 0$ for $m \neq 0$. Finally, a Butterworth band-pass filter from 20 Hz to 1000 Hz is applied to the whitened segment. This filtering removes extreme frequencies outside our region of interest, and 16 s are discarded at the borders of the segment to avoid spurious effects produced by the whitening, which results in a new segment of time length s. Edge effects appear because the DFT used in the whitening implicitly treats each finite segment as one period of an infinitely long periodic signal, formed by repeating the segment in consecutive bins. This inescapable assumption produces spectral leakage: the discontinuities between consecutive repetitions generate new, artificial frequencies.
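A NumPy-only sketch of the whitening and filtering steps follows. It makes several assumptions: the PSD is estimated with a simple Welch average of Hann-windowed periodograms with 50% overlap; the one-sided real FFT is used, which for real data is equivalent to dividing by the two-sided PSD at both $+f$ and $-f$; the Butterworth filter is replaced by a brick-wall frequency mask (SciPy's `scipy.signal.butter` with `sosfiltfilt` would be closer to the text); and 16 s are cropped at each edge. The epoch length `nperseg` and the function names are illustrative choices.

```python
import numpy as np

def welch_psd(x, fs, nperseg):
    """One-sided Welch PSD: average of Hann-windowed periodograms, 50% overlap."""
    win = np.hanning(nperseg)
    step = nperseg // 2
    scale = fs * np.sum(win**2)
    periodograms = [np.abs(np.fft.rfft(x[i:i + nperseg] * win)) ** 2
                    for i in range(0, x.size - nperseg + 1, step)]
    psd = np.mean(periodograms, axis=0) / scale
    psd[1:-1] *= 2.0  # fold negative frequencies in (assumes even nperseg)
    return np.fft.rfftfreq(nperseg, 1.0 / fs), psd

def clean_segment(x, fs, nperseg, f_lo=20.0, f_hi=1000.0, crop_s=16.0):
    """Whiten x by its Welch PSD, band-limit it, and crop crop_s seconds per edge."""
    freqs = np.fft.rfftfreq(x.size, 1.0 / fs)
    psd_freqs, psd = welch_psd(x, fs, nperseg)
    # interpolate the PSD onto the frequency grid of the full-length DFT
    xf = np.fft.rfft(x) / np.sqrt(np.interp(freqs, psd_freqs, psd))
    # brick-wall band-pass (a stand-in for the Butterworth filter in the text)
    xf[(freqs < f_lo) | (freqs > f_hi)] = 0.0
    white = np.fft.irfft(xf, n=x.size)
    n_crop = int(crop_s * fs)
    return white[n_crop:x.size - n_crop]

# Toy usage: mildly coloured noise, 128 s at 2048 Hz
fs = 2048
rng = np.random.default_rng(3)
x = np.convolve(rng.standard_normal(128 * fs), [0.5, 0.5], mode="same")
y = clean_segment(x, fs, nperseg=4 * fs)
print(y.size / fs)  # 96.0
```

On this toy input, the output spectrum is roughly flat inside the 20 Hz to 1000 Hz band, which is the property the white-noise autocorrelation condition expresses.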

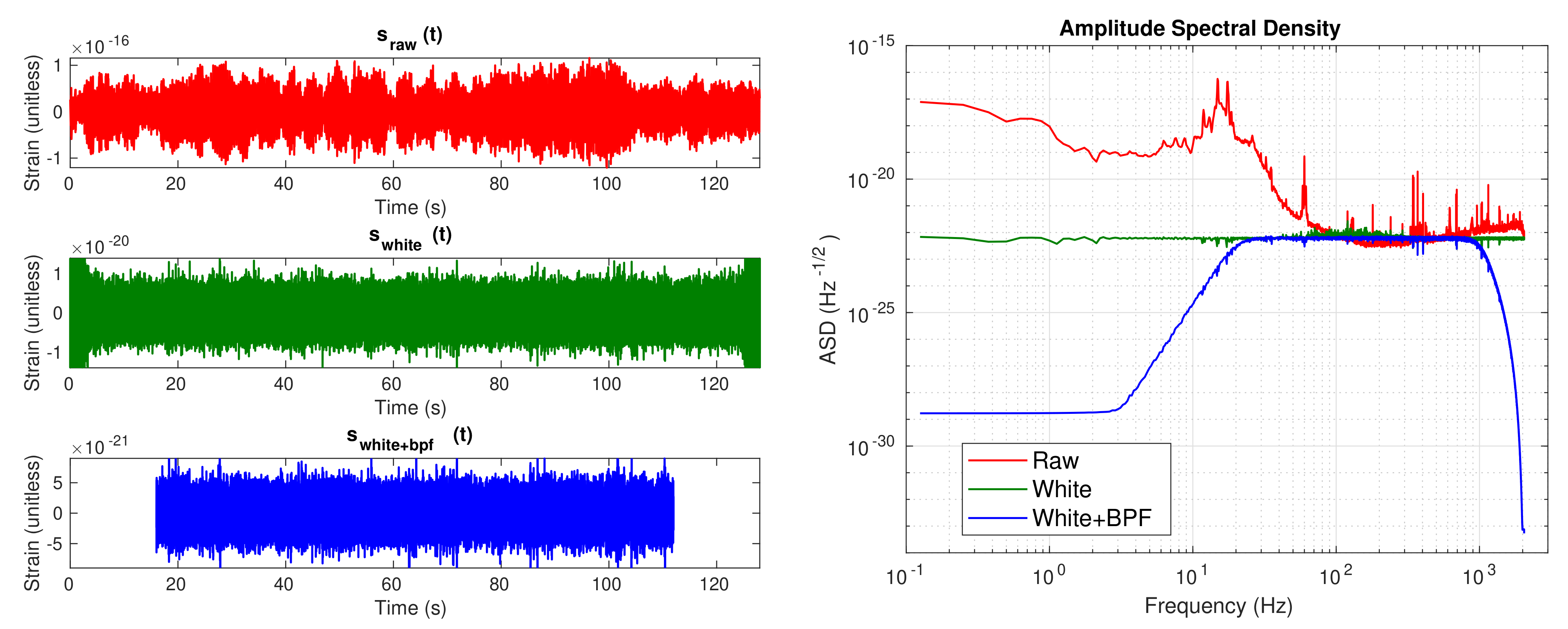

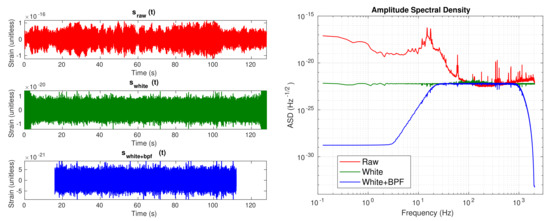

Figure 2 shows how our strain data segments look after applying the whitening and the band-pass filtering, both in the time domain and in terms of the amplitude spectral density (ASD), computed as the square root of the PSD. The time-domain plots show that, after the cleaning, the amplitude of the strain data is reduced by 5 orders of magnitude, from to , and the edge effects after whitening are clearly visible in the middle plot. Before cleaning, the ASD shows the known noise profile describing the sensitivity of LIGO detectors, which is the sum of the contributions of all noise sources [48].

Figure 2.

Illustration of the raw strain data cleaning. The left panel shows three time-series plots. First, a 128 s segment of raw strain data after applying the Blackman window. Second, the strain data after whitening, in which the spurious effects at the edges are noticeable. Finally, the cleaned segment after applying band-pass filtering and edge removal to the whitened segment. In the right panel, the same data are plotted as amplitude spectral density (ASD) vs. frequency.

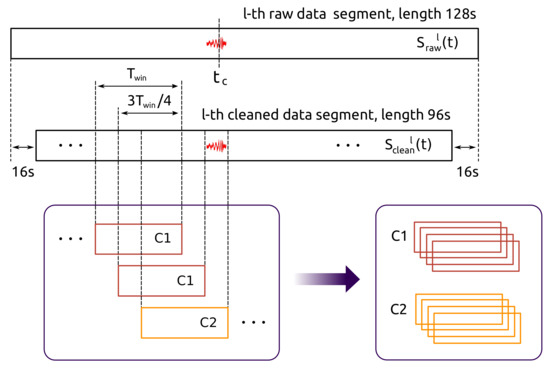

2.3.2. Strain Samples

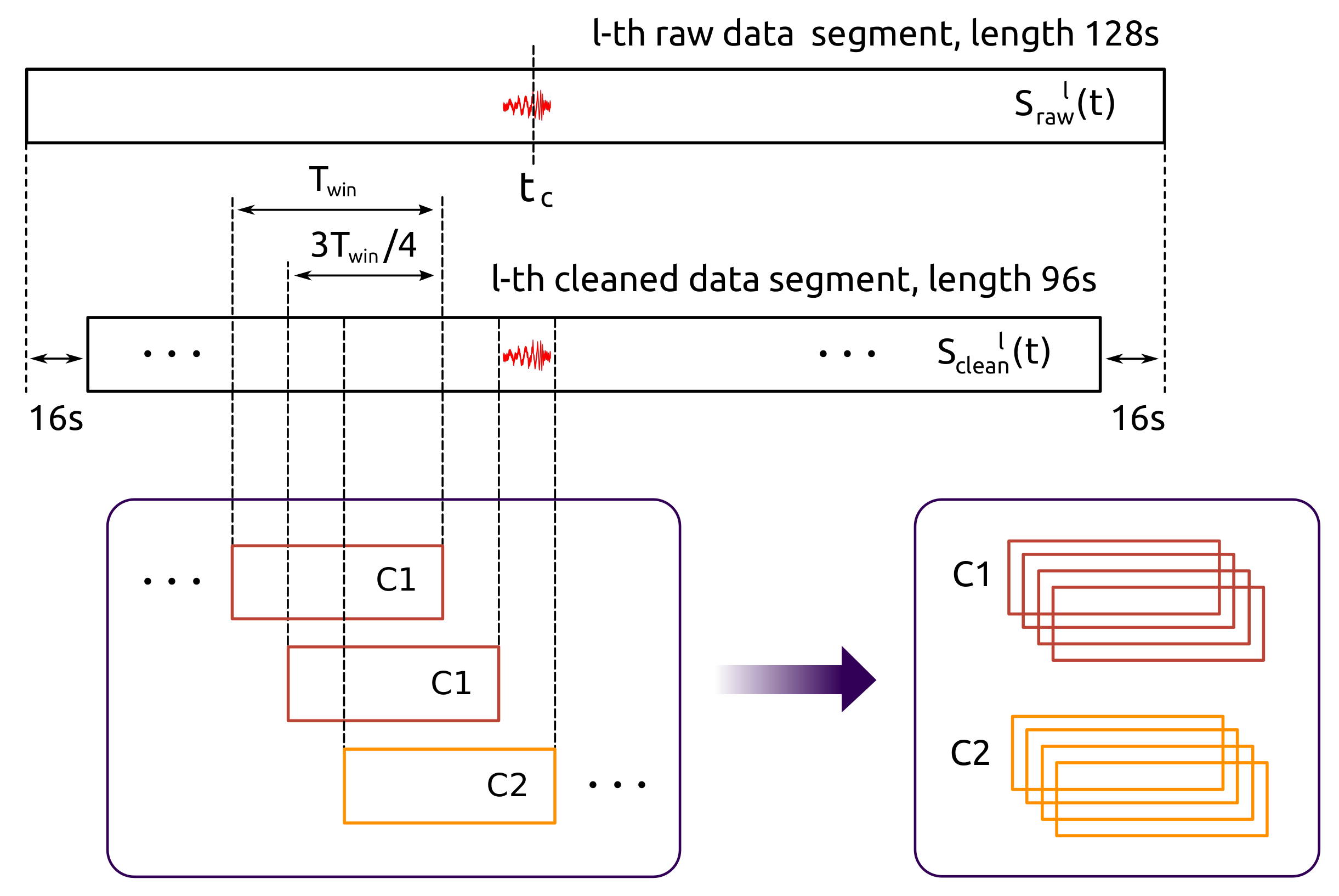

The construction of the strain samples that will be input to our CNNs is the next stage of pre-processing, schematically depicted in Figure 3. This procedure has two steps. First, a cleaned strain data segment is split into overlapping windows of fixed duration, identifying whether or not each window contains an injection; this time length is at least of the order of the injected waveform duration. Classes are then assigned: if a window of data contains an injection, class 2 is assigned; otherwise, class 1 is assigned. This class assignment is applied to all windows of data. Next, from the set of all tagged windows, and discarding beforehand those windows with , we select four consecutive windows of class 1 and four consecutive windows of class 2. This procedure is applied to each segment of clean data and, to avoid confusion with notation, we denote the $k$th windowed strain sample as:

where according to the time length of the samples, with the sampling frequency. In practice, as we initially have 501 segments from H1 and 402 segments from L1, and strain samples, respectively, are generated. Consequently, the index k appearing in Equation (5) takes values from 0 to 4007 for H1 detector data, and from 0 to 3359 for L1 detector data. Besides, is a resolution measure and, as will be seen later, we run our code with several values of in order to identify which of them are optimal with respect to the performance of the CNNs.
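The windowing and labelling step can be sketched as below. The labelling rule (a window is class 2 if it contains the coalescence time), the stride, the toy sampling rate, and the function name `make_samples` are assumptions; the additional window-discarding criterion mentioned in the text is not reproduced here.

```python
import numpy as np

def make_samples(segment, fs, t0, t_coal, width, stride, n_per_class=4):
    """Split a cleaned segment into overlapping windows and pick balanced samples.

    Windows containing the coalescence time t_coal get class 2, all others
    class 1 (a simplifying criterion). The first n_per_class windows of each
    class are kept; with t_coal away from the segment start, both selections
    are runs of consecutive windows.
    """
    n_win, n_step = int(round(width * fs)), int(round(stride * fs))
    starts = np.arange(0, segment.size - n_win + 1, n_step)
    t_start = t0 + starts / fs
    labels = np.where((t_start <= t_coal) & (t_coal < t_start + width), 2, 1)
    chosen = np.concatenate([np.flatnonzero(labels == c)[:n_per_class] for c in (1, 2)])
    samples = np.stack([segment[s:s + n_win] for s in starts[chosen]])
    return samples, labels[chosen]

fs = 256
segment = np.random.default_rng(4).standard_normal(96 * fs)  # 96 s cleaned toy segment
X, y = make_samples(segment, fs, t0=0.0, t_coal=10.3, width=0.5, stride=0.125)
print(X.shape, y)  # (8, 128) [1 1 1 1 2 2 2 2]
```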

Figure 3.

Strain sample generation by sliding windows. A CBC GW injection is located at its coalescence time within a 128 s raw strain data segment. After cleaning, the segment is split into overlapping windows, individually identifying whether or not each window contains the GW injection. Finally, among all these windows, and discarding windows with , a set of eight strain samples is selected, four with noise alone (class 1) and four with noise plus the mentioned CBC GW injection (class 2). This set of eight samples will be part of the input dataset of our CNNs. This procedure is applied to all raw strain data segments.

2.3.3. Wavelet Transform

Some works in GW data analysis have used raw strain time series directly as input to deep convolutional neural networks (e.g., Refs. [31,34]); however, we do not follow this approach. Instead, we apply CNNs to the task for which they were originally designed [49] and in which they have dramatically improved in recent decades [50], namely image recognition. We therefore need a method to transform our strain vectors into image matrices, i.e., grids of pixels. For this research, we decided to use the wavelet transform (WT), a well-known approach in signal processing for working in the time-frequency representation [51]. One of the great advantages of the WT is that, by using a localized wavelet as kernel (also called the “mother wavelet”), it allows tiny changes of the frequency structure over time to be visualized and, therefore, improves the search for GW candidates, which arise as non-stationary short-duration transients on top of the detector noise.

In general, several wavelets can be used as kernel. Here, we decided to work with the complex Morlet wavelet [52], which, in its discrete version, has the following form:

having a Gaussian form along time and along frequency, with standard deviations and , respectively. Moreover, these standard deviations are not independent of each other, because they are related by and , where is the width of the wavelet and its center in the frequency domain.

It is important to clarify that, because the Morlet wavelet has a Gaussian shape, it does not have compact support. For this reason, the mesh on which we defined Equation (6), i.e., the set of discrete time and frequency values, is in principle unbounded. Instead, the time and frequency resolutions are constrained only by our system resources and/or by the extent to which we want to economize these resources.

Subsequently, to perform the WT of the strain sample (defined by Equation (5)) with respect to the kernel wavelet (defined by Equation (6)), we just need to compute the following convolution operation:

where is the th element of the column vector . Besides, and , where and define the size of each th image generated by the WT, being just the element or pixel of each generated image. In practice, we set our WT such that it outputs images with dimensions and pixels.

In general, the grid of pixels defined by all the time and frequency values depends on the formulation of the problem. Here, we chose frequencies varying from Hz to Hz, with a resolution of Hz, since this range is consistent with the GW signals that we want to detect. In addition, as we apply the WT to each cleaned strain data sample, we have discrete time values varying from s to , with a resolution of , where Hz is the sampling frequency of the initial segments of strain data.
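The following sketch computes a Morlet time-frequency image by convolving a strain sample with one complex Morlet wavelet per frequency and keeping the magnitude. It assumes the common parameterization $\sigma_t = w/(2\pi f)$, so that $\sigma_f = 1/(2\pi\sigma_t) = f/w$, with $w$ the wavelet width; the width value, the truncation at about $\pm 5\sigma_t$, the unit-energy normalization of the discrete wavelet, and the function names are assumptions and need not match the exact implementation behind this work. Rows index frequency and columns index time.

```python
import numpy as np

def morlet(f, fs, width=7.0):
    """Discrete complex Morlet wavelet centred at f (Hz), normalized to unit energy.

    Assumes sigma_t = width / (2*pi*f), hence sigma_f = f / width, and truncates
    the Gaussian envelope at about +/- 5 sigma_t.
    """
    sigma_t = width / (2.0 * np.pi * f)
    half = int(5 * sigma_t * fs)
    t = np.arange(-half, half + 1) / fs  # symmetric grid around t = 0
    psi = np.exp(-t**2 / (2 * sigma_t**2)) * np.exp(2j * np.pi * f * t)
    return psi / np.sqrt(np.sum(np.abs(psi) ** 2))

def morlet_scalogram(x, fs, freqs, width=7.0):
    """|W| image (rows: frequencies, columns: times), one convolution per frequency."""
    rows = []
    for f in freqs:
        psi = morlet(f, fs, width)
        full = np.convolve(x, psi, mode="full")
        start = (psi.size - 1) // 2  # keep the part aligned with x
        rows.append(np.abs(full[start:start + x.size]))
    return np.array(rows)

fs = 4096
t = np.arange(int(0.25 * fs)) / fs
x = np.sin(2 * np.pi * 200.0 * t)  # pure 200 Hz tone as a sanity check
freqs = np.array([50.0, 100.0, 200.0, 300.0, 400.0])
image = morlet_scalogram(x, fs, freqs)
print(image.shape, freqs[image.mean(axis=1).argmax()])  # (5, 1024) 200.0
```

Taking the "full" convolution and slicing it keeps every row the same length as the input even when a low-frequency wavelet is longer than the sample.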

Although the output images are not very large, we decided to resize them from pixels to pixels. Keep in mind that, as we need to analyze several thousand images, using their original size would be unnecessarily expensive for system resources. The resizing was performed by bicubic interpolation, in which each pixel of a reduced image is a weighted average of the pixels in the nearest four-by-four neighborhood.
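The bicubic reduction can be written as two separable one-dimensional interpolations, each using the four nearest input pixels, so that every output pixel is a weighted average over a four-by-four neighborhood. This sketch uses the Keys cubic kernel with $a = -0.5$, pixel-centre alignment, and edge clamping, all of which are assumptions; library implementations may differ in alignment and in whether they widen the kernel to antialias when downscaling. The function names are hypothetical.

```python
import numpy as np

def cubic_kernel(s, a=-0.5):
    """Keys cubic convolution kernel; a = -0.5 is a common choice."""
    s = np.abs(s)
    return np.where(s <= 1, (a + 2) * s**3 - (a + 3) * s**2 + 1,
                    np.where(s < 2, a * s**3 - 5 * a * s**2 + 8 * a * s - 4 * a, 0.0))

def resize_matrix(n_in, n_out):
    """(n_out, n_in) matrix of bicubic weights; each row uses 4 neighbouring pixels."""
    W = np.zeros((n_out, n_in))
    centres = (np.arange(n_out) + 0.5) * n_in / n_out - 0.5  # pixel-centre alignment
    for i, c in enumerate(centres):
        base = int(np.floor(c))
        for j in range(base - 1, base + 3):  # the 4 nearest input pixels
            W[i, min(max(j, 0), n_in - 1)] += cubic_kernel(c - j)  # clamp at edges
    return W

def bicubic_resize(img, out_shape):
    """Separable bicubic resize: rows then columns, 4x4 neighbourhood per pixel."""
    Wr = resize_matrix(img.shape[0], out_shape[0])
    Wc = resize_matrix(img.shape[1], out_shape[1])
    return Wr @ img @ Wc.T

img = np.random.default_rng(5).random((64, 128))
small = bicubic_resize(img, (32, 32))
print(small.shape)  # (32, 32)
```

Because the Keys weights sum to one for any fractional offset, a constant image is left unchanged, which is a quick sanity check.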

In summary, after applying the WT and the resizing to each strain data sample, we generated an image dataset , where depicts each image as a matrix of pixels (notice that we are inverting the standard notation used in image processing of , because we defined our images by a discrete plot of x axis vs. y axis), the classes that we are working with, and , depending on whether we are using data from H1 or L1, respectively.

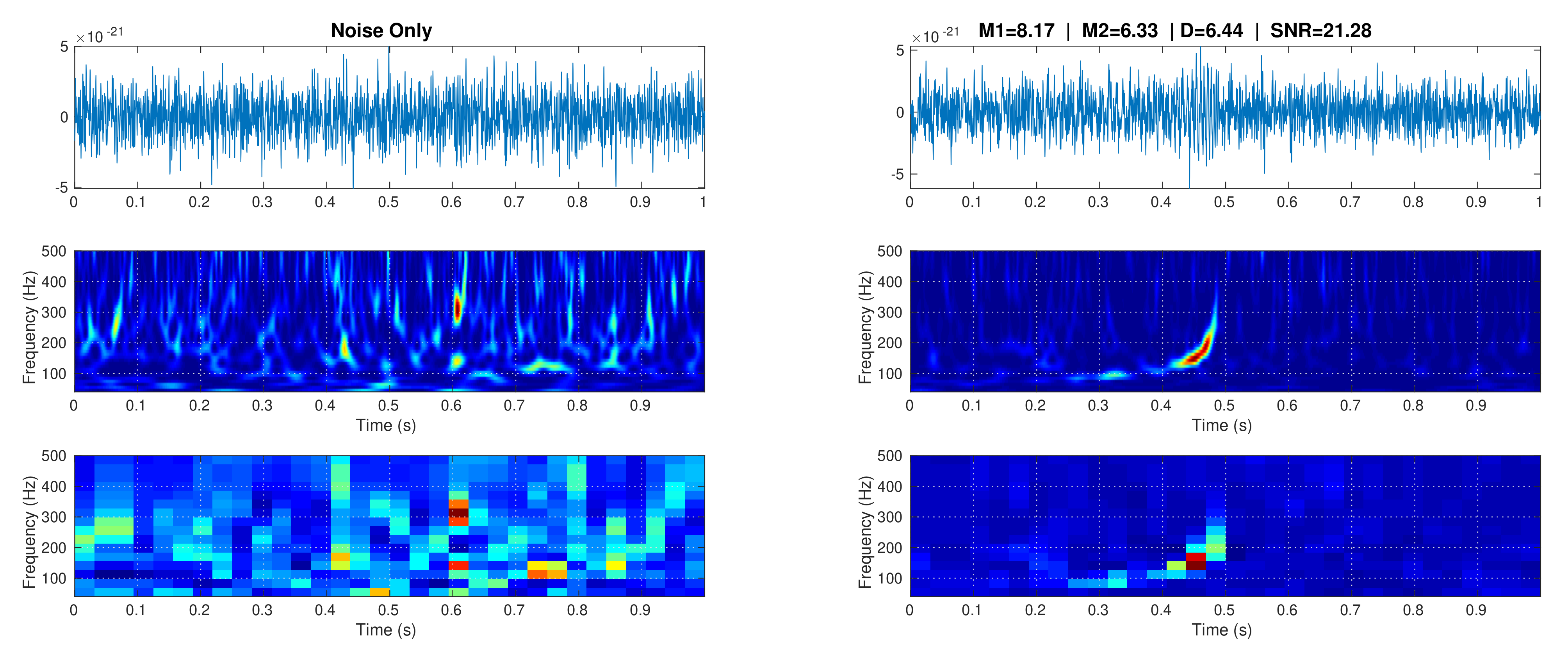

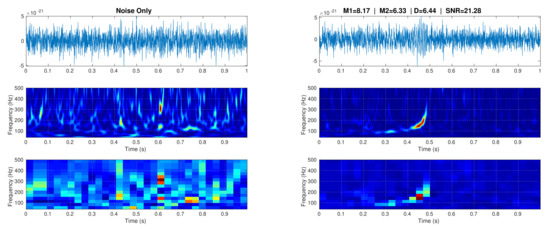

Figure 4 shows two representative strain data samples (one belonging to class 1 and the other to class 2) as time-domain signals, their time-frequency representations obtained by the WT with a Morlet wavelet as kernel, and their resized forms. Both samples were generated from a 4096 s strain data segment recorded by the L1 detector at GPS time 932335616. The image sample on the right shows a GW transient with a frequency varying approximately between 100 Hz and 400 Hz. It is important to clarify that, before being input to the CNNs, the image samples are rearranged such that the whole dataset has size , denoting images of size pixels with 1 channel for grayscale; the sample input images shown in Figure 4 are in color just for illustrative purposes.

Figure 4.

Visualization of two image samples of s, generated from a strain data segment of length 4096 s and GPS initial time 932335616, recorded at the L1 detector. The left panels show a sample of noise alone (class 1), and the right panels a sample of noise plus GW (class 2). For the class 2 sample, masses are in solar mass units, distance D in Mpc, and SNR is the expected signal-to-noise ratio. Upper panels show strain data in the time domain, middle panels show time-frequency representations after applying a WT, and bottom panels show images resized by bicubic interpolation.

2.4. Resampling and White-Box Approach

In Section 1, we briefly mentioned our resampling white-box approach for dealing with the uncertainties intrinsic to CNN algorithms. Here, we clarify the subtleties and advantages of this approach.

A CNN algorithm does not take waveform templates only as isolated samples; it is also influenced by the distribution of these templates across the whole training and testing datasets, as Gebhard et al. [34] critically pointed out. Indeed, in all previous works of DL applied to GW data analysis, the distributions of training samples were artificially class-balanced by hand, that is, with equal or similar numbers of samples for each class, even though the real occurrences of the classes in the observational data recorded by LIGO are very different from each other (works such as [53,54] have reported information about the BBH population from the O1 and O2 runs). It is common practice in ML and DL to draw on artificially balanced datasets to make CNN algorithms easily tractable with respect to hyperparameter tuning, the choice of performance metrics, and misclassification costs. Nonetheless, when uncertainty is taken into account, the real frequency of occurrence of the samples cannot be ignored, because it defines how reliable our decision criterion is when an algorithm outputs a score for a single input sample. These kinds of details are well known in the ML community [55], and they need to be seriously explored in GW data analysis beyond purely hands-on approaches. Deeper multidisciplinary research is necessary to advance this field.
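Why the real frequency of occurrence matters can be shown with Bayes' rule: for a fixed recall (true-positive rate) and false-positive rate, the probability that a sample flagged as a GW really contains one depends on the class prevalence. The recall and false-positive values below are hypothetical, not results of this work.

```python
def precision_at_prevalence(tpr, fpr, prevalence):
    """Bayes' rule: P(signal | classifier says signal) for a given class prevalence."""
    hit = tpr * prevalence                  # true positives per unit of data
    false_alarm = fpr * (1.0 - prevalence)  # false positives per unit of data
    return hit / (hit + false_alarm)

# Hypothetical classifier: recall (TPR) 0.80, false-positive rate 0.05
for prevalence in (0.5, 0.1, 0.01):
    print(prevalence, round(precision_at_prevalence(0.80, 0.05, prevalence), 3))
```

With these numbers, the same classifier gives a precision of about 0.94 at a balanced 50% prevalence, 0.64 at 10%, and only about 0.14 at 1%.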

For now, the full problem of dealing with arbitrarily imbalanced datasets is beyond the scope of our research. Nonetheless, even when working with balanced datasets, a good starting point is to include stochasticity by resampling, which we define as repeated experiments of a global k-fold cross-validation (CV) routine. This stochasticity is different from that usually introduced in each learning epoch by taking a mini-batch of the whole training set to update the model parameters (e.g., with a stochastic gradient descent algorithm) and thereby minimize the cross-entropy. In this research, we consider both sources of stochasticity.

Stochastic resampling helps to alleviate the artificiality introduced by a balanced dataset, because the initial splitting into k folds is totally random and each complete k-fold CV experiment is not deterministically reproducible. Moreover, this approach implements an experimental setup in which uncertainties are even more evident and must be treated seriously, beyond just reporting single-value metrics. Indeed, in the most common situations, such as really big datasets (i.e., millions, billions, or more samples), statistical tools for decreasing system resources, and data that change over time, among others, CNN algorithms are generally set up such that their predictions are not deterministic, leading to distributions of performance metrics instead of single-value metrics and demanding formal probabilistic analyses. Given these distributions, with their inherent uncertainties, a statistical paired-sample test is necessary to formally conclude how close to or far from a totally random classifier our CNNs are; we perform such a test in this work.

On the other hand, our white-box approach works as a complementary tool to understand how uncertainties influence the performance of CNN algorithms. In principle, white-box testing depends on the complex and still open problem of explainability in ML/DL. Nonetheless, for our purposes it is enough to define “white-box” as a methodology that draws on information about how the CNN algorithms behave internally. A crucial aspect here is to describe explicitly (mathematically) how each layer of a CNN works and why it was chosen. This is actually the most basic explanatory procedure, considering that, from a fundamental point of view, we still lack analytical theories that explain the low generalization errors in DL or even establish analytical criteria to unequivocally choose a specific architecture for a particular task [56]; in practice, we only have a large number of CNN architectures previously implemented for other problems, which must be tested on a new problem by trial and error. This white-box approach allowed us to choose a reduced set of hyperparameters to adjust, which was very useful for avoiding unnecessarily intensive explorations given our limited system resources. Moreover, this approach helped us understand why the resulting performances of our classifications are class- and threshold-dependent, based on the distribution of the output probabilistic scores.

2.5. CNN Architectures

Our CNN algorithms consist of two main stages in terms of functionality: feature extraction and classification. The first stage begins by inputting images from a training dataset, and the second stage ends by outputting the predicted class for each image sample. Classification is our ultimate goal and consists of a perceptron stage plus an activation function, as usual in ANNs. Feature extraction, on the other hand, is the core of CNNs, because it provides the ability to recognize images; it consists of three substages: convolution, detection, and pooling. These substages are implemented through layers that, as a whole, define a stack. This stack can then be connected to a second stack, and so on, until the last stack is connected to the classifier. For this work, we tested CNNs with 1, 2, and 3 stacks.

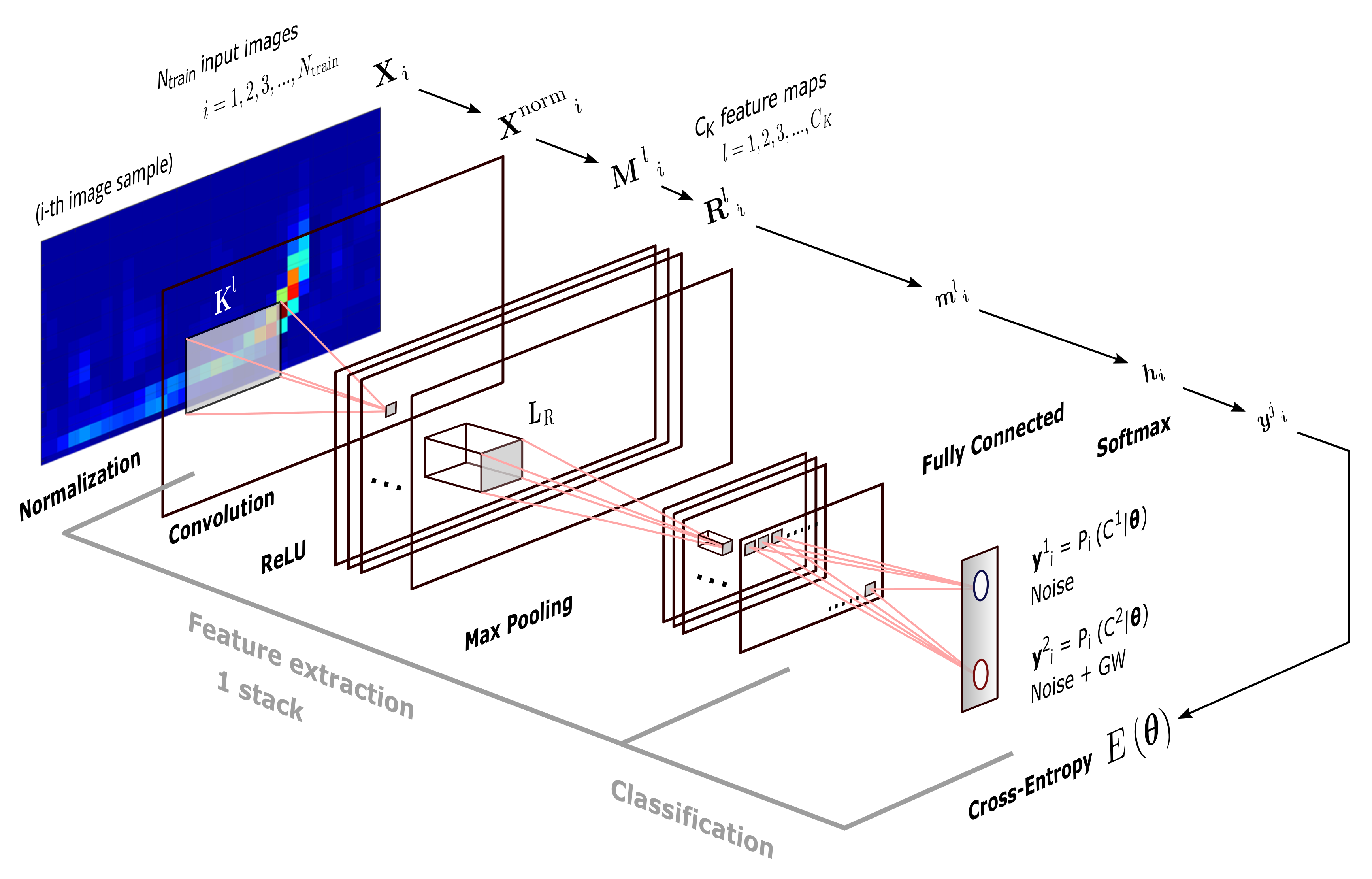

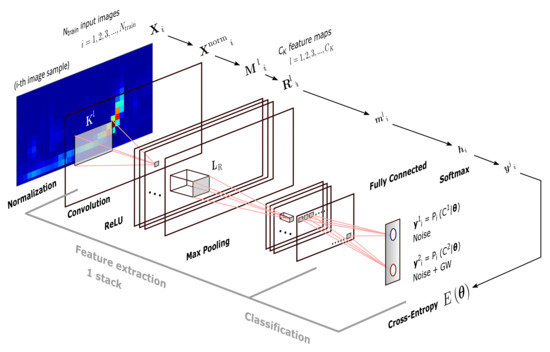

Knowing the general functionality of our CNNs is a must but, to contribute a white-box approach, we need to understand what each layer does. For this reason, we mathematically describe each kind of layer that we used, not only those involved in the (sub)stages of the CNN mentioned above, but also those required by our hands-on implementation with the MATLAB Deep Learning Toolbox [57]. Keep in mind that the output of a layer is the input of the next layer, as detailed in Figure 5 for a single-stack CNN.

Figure 5.

Single-stack CNN architecture used as the basis for this research. same-size image samples are simultaneously inputted but, for simplicity, we detail the procedure for a single image; moreover, although this image is shown in color, the training image dataset occupies just one channel. A image is fed to the CNN, and a two-dimensional vector is output, giving the posterior probabilities of class 1 (noise alone) and class 2 (noise + GW), both conditioned on the model parameters included in vector . After that, the cross-entropy is computed. The notation for input (output) matrices and vectors is the same as that introduced in Section 2.5 to mathematically describe the several kinds of layers used in the CNNs.

- Image Input Layer. Inputs images and applies zero-center normalization. Denoting an th input sample as the matrix of pixels belonging to a dataset of same-size training images, this layer outputs the normalized image: where the second term is the average image of the whole dataset. Normalization is useful for feature scaling, as it shifts each attribute, i.e., each pixel across all images, to a common zero-mean reference. Because normalization does not seriously distort relative intensities and helps to enhance image contrast, we can apply it to the entire training dataset, regardless of the class each image belongs to.

- Convolution Layer. Convolves each image with sliding kernels of dimension . Denoting each th kernel by with , this layer outputs feature maps, each of which is an image composed of the elements or pixels: where b is a bias term, and indices p and q run over all values that lead to legal subscripts of and . Depending on the parametrization of subscripts m and n, the dimensions of the images can vary. If, just for notation, we collect the width and height of the output maps (in pixels) in a two-dimensional vector , these spatial sizes are computed by: where str (i.e., stride) is the step size in pixels with which a kernel moves over , and padd (i.e., padding) denotes the time rows and/or frequency columns of pixels added to so that the kernel can move beyond the borders of the former. During training, the components of the kernels and the bias terms are iteratively learned from appropriately chosen initial values (see Section 2.6); then, once the CNN has learned and fixed optimal values for these parameters, the convolution is applied to all testing images.

- ReLU Layer. Applies the Rectified Linear Unit (ReLU) activation function to each neuron (pixel) of each feature map obtained from the previous convolutional layer, outputting the following: In practice, this layer introduces the nonlinearity that allows the CNN to detect nonlinear features in the input images; moreover, its neurons can output true zero values, generating sparse interactions that are useful for reducing system requirements. Besides, this layer does not lead to saturation of hidden units during learning, because its form, as given by Equation (11), does not converge to a finite asymptotic value for positive inputs. (Saturation is the effect in which an activation function located in a hidden layer of a CNN rapidly converges to its finite extreme values, making the CNN insensitive to small variations of the input data over most of its domain. In feedforward networks, activation functions such as the logistic sigmoid or the hyperbolic tangent are prone to saturation; hence, their use is discouraged except when the output layer has a cost function able to compensate for their saturation [23], for example, the cross-entropy function.)

- Max Pooling Layer. Downsamples each feature map by taking the maximum over local sliding regions of dimension . Each pixel of a resulting reduced feature map is given by the following: where the ranges of indices r and s depend on the spatial sizes of the output maps; these sizes, i.e., width and height , collected in a two-dimensional vector just for notation, are computed by: where the padding and stride values have the same meanings as in the convolutional layer. Interestingly, apart from reducing system requirements, the max pooling layer makes the output values approximately invariant under small translations of the input images, which could be useful when working with a network of detectors, where a detected GW signal appears with a time lag between two detectors.

- Fully Connected Layer. This is the classic perceptron layer used in ANNs, and it performs the binary classification. It maps all images to the two-dimensional vector by the affine transformation: where is a vector of dimensions, with the total number of neurons considering all input feature maps, a two-dimensional bias vector, and a weight matrix of dimension . As in the convolutional layer, the elements of and are model parameters to be learned during training. Matrix includes the pixels of all feature maps (with ) as a single “flattened” column vector of pixels; therefore, information about the topology or edges of the sample images is lost.

- Softmax Layer. Applies the softmax activation function to each component j of vector : where , depending on the class. The softmax layer is the multiclass generalization of the sigmoid function, and we include it in the CNN because, by definition, it transforms the real output values of the fully connected layer into probabilities. In fact, according to [58], the output values are interpreted as posterior distributions of the class conditioned on the model parameters. That is to say, , where is a multidimensional vector containing all model parameters. It is common to refer to the values as the output scores of the CNN.

- Classification Layer. Stochastically takes samples and computes the cross-entropy function: where and are the two posterior probabilities output by the softmax layer, and is a likelihood function. Cross-entropy is a measure of the risk of our classifier and, following a discriminative approach [22], Equation (16) defines the maximum likelihood estimation for the parameters included in . We now need a learning algorithm to maximize the likelihood or, equivalently, minimize , with respect to the model parameters; Section 2.6 introduces this algorithm. (Keep in mind that our approach estimates the model parameters with gradients propagated backward from the classification layer to the previous layers of the CNN. Alternatively, since by Bayes' theorem the posterior probability output by the softmax layer can be written in terms of the class-conditional likelihood and the class prior, another approach would be to maximize the likelihood function with respect to the model parameters. This alternative approach is called generative and will not be considered in our CNN model. However, in Section 3.4 we present the simplest generative models, namely Naive Bayes classifiers, to compare with our CNN algorithms.)
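The layer operations described above can be illustrated with a minimal, dependency-free Python sketch of a single-stack forward pass: zero-centering, convolution, ReLU, max pooling, flattening plus a fully connected layer, softmax, and cross-entropy. This is not the MATLAB Deep Learning Toolbox implementation used in this work. The sketch assumes a single input channel, stride 1 and no padding in the convolution, implements the convolution as a cross-correlation (as most DL toolboxes do), and averages the cross-entropy over the mini-batch; all image, kernel, and weight values are hypothetical.

```python
import math

def zero_center(images):
    """Image input layer: subtract the pixel-wise average image of the dataset."""
    n = len(images)
    rows, cols = len(images[0]), len(images[0][0])
    mean = [[sum(img[r][c] for img in images) / n for c in range(cols)]
            for r in range(rows)]
    return [[[img[r][c] - mean[r][c] for c in range(cols)] for r in range(rows)]
            for img in images]

def conv2d(x, kernel, bias=0.0, stride=1):
    """Convolution layer without padding (cross-correlation: kernel not flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(x) - kh) // stride + 1
    out_w = (len(x[0]) - kw) // stride + 1
    return [[sum(x[m * stride + p][n * stride + q] * kernel[p][q]
                 for p in range(kh) for q in range(kw)) + bias
             for n in range(out_w)]
            for m in range(out_h)]

def relu(x):
    """ReLU layer: max(0, v) applied pixel by pixel."""
    return [[max(0.0, v) for v in row] for row in x]

def max_pool(x, size=2, stride=2):
    """Max pooling layer over size x size regions."""
    out_h = (len(x) - size) // stride + 1
    out_w = (len(x[0]) - size) // stride + 1
    return [[max(x[r * stride + i][s * stride + j]
                 for i in range(size) for j in range(size))
             for s in range(out_w)]
            for r in range(out_h)]

def fully_connected(feature_maps, weights, biases):
    """Flatten all feature maps into one column vector and apply W x + b."""
    flat = [v for fmap in feature_maps for row in fmap for v in row]
    return [sum(w * v for w, v in zip(w_row, flat)) + b
            for w_row, b in zip(weights, biases)]

def softmax(z):
    """Turn the fully connected outputs into class probabilities."""
    shift = max(z)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(v - shift) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(prob_batch, targets):
    """Average negative log-probability of the true class over a mini-batch.
    `targets` holds the 0-based index of each sample's class."""
    return -sum(math.log(p[t]) for p, t in zip(prob_batch, targets)) / len(targets)

# Toy forward pass with hypothetical values: two 6x6 images, one 3x3 kernel.
images = [[[float((r * c + k) % 5) for c in range(6)] for r in range(6)]
          for k in range(2)]
kernel = [[0.1, 0.0, -0.1], [0.2, 0.0, -0.2], [0.1, 0.0, -0.1]]
centered = zero_center(images)
pooled = max_pool(relu(conv2d(centered[0], kernel)))  # 6x6 -> 4x4 -> 2x2
weights = [[0.5, -0.5, 0.3, 0.1], [-0.2, 0.4, 0.0, 0.2]]  # 2 classes x 4 inputs
probs = softmax(fully_connected([pooled], weights, [0.0, 0.0]))
loss = cross_entropy([probs], [1])  # pretend the sample belongs to class 2
```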

Table 1 shows the three architectures that we implemented for this study. A standard choice for the convolutional kernel size is , but, as we need to recognize images whose width is greater than their height, we set a first kernel of size . Besides, we chose a size for the first max pooling region, which is also a standard downsampling option. With these dimensions, our CNN algorithms can have at most 3 stacks, at which point the minimum output layer size is reached, given that the kernel and max pooling region sizes become smaller in subsequent stacks. This scenario is very convenient, because it allowed us to substantially reduce the system resources required by our hyperparameter adjustments, as will be seen in Section 3.2. For choosing the number of kernels , there is no a priori criterion, so we leave it as a matter of experimentation.
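The limit on the number of stacks follows from repeatedly applying the output-size formulas of the convolution and max pooling layers. The Python sketch below traces the feature-map size through successive stacks, assuming stride 1 and no padding in the convolutions and 2 × 2 max pooling with stride 2. The 28 × 56 image size and the kernel sizes are hypothetical placeholders, not the values used in this work.

```python
def output_size(size, window, stride=1, padding=0):
    """Spatial output size along one axis for a convolution or pooling window.
    `padding` is the total number of rows (or columns) added across both borders."""
    return (size - window + padding) // stride + 1

def trace_stacks(height, width, kernels, pool=(2, 2), pool_stride=2):
    """Follow the feature-map size through successive conv + max-pool stacks.
    Stops as soon as a kernel or a pooling region no longer fits, and returns
    the (height, width) after each complete stack."""
    sizes = []
    h, w = height, width
    for kh, kw in kernels:
        if h < kh or w < kw:  # kernel larger than the current map
            break
        h, w = output_size(h, kh), output_size(w, kw)
        if h < pool[0] or w < pool[1]:  # pooling region larger than the map
            break
        h = output_size(h, pool[0], stride=pool_stride)
        w = output_size(w, pool[1], stride=pool_stride)
        sizes.append((h, w))
    return sizes

# Hypothetical 28 x 56 image (wider than tall) with shrinking kernels per stack.
print(trace_stacks(28, 56, [(5, 9), (3, 5), (3, 3), (3, 3)]))
# prints [(12, 24), (5, 10), (1, 4)]
```

With these assumed sizes, a fourth stack no longer fits because the 1-pixel-high map is smaller than the 3 × 3 kernel, which is the kind of limit described above.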

Table 1.

The CNN architecture of 3 stacks used for this research. The number of kernels in convolutional layers is variable, and it took values . Part of this illustration is also valid for the CNN architectures of 1 or 2 stacks (also implemented in this research), in which the image input layer is followed by the next three or six layers, respectively, as feature extractor, and then by the fully connected layer up to the output cross-entropy layer in the classification stage. All of these CNNs were implemented with the MATLAB Deep Learning Toolbox.

2.6. Model Training

As detailed above, the model parameters are included in vector , which, in turn, appears in the cross-entropy function (16). Starting from given initial values, these parameters have to be learned by minimizing the cross-entropy over random image samples, i.e., a mini-batch. To update the model parameters, we use the well-known gradient descent algorithm, including a momentum term to accelerate the iterations. Denoting the model parameters at the th iteration or epoch as , their update at the th iteration is given by the following optimization rule:

where is the gradient of the cross-entropy E with respect to the model parameters, and the learning rate and the momentum are two empirical quantities set by hand. Because the cross-entropy is computed over random samples, the above rule is called the mini-batch stochastic gradient descent (SGD) algorithm. For all our experiments, we set a learning rate , a momentum , and a mini-batch size of image samples.
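As a sketch of the optimization rule, we assume it takes the standard momentum form θ_{ℓ+1} = θ_ℓ − α∇E(θ_ℓ) + γ(θ_ℓ − θ_{ℓ−1}), with learning rate α and momentum γ, which is the form documented for the `sgdm` solver of MATLAB's `trainingOptions`; the symbols here are our own. To keep the example self-contained, the Python code below replaces the CNN cross-entropy with a squared-error loss on a one-parameter toy problem (fitting y = 3x); the data, learning rate, momentum, and batch size are hypothetical.

```python
import random

def sgdm(grad_fn, theta0, n_samples, lr=0.05, momentum=0.9,
         batch_size=16, iterations=500, seed=0):
    """Mini-batch SGD with momentum:
    theta_{l+1} = theta_l - lr * grad E_batch(theta_l) + momentum * (theta_l - theta_{l-1}).
    `grad_fn(theta, batch)` returns the loss gradient on a mini-batch of indices."""
    rng = random.Random(seed)
    theta, prev_theta = theta0, theta0  # no previous step at the first iteration
    for _ in range(iterations):
        batch = rng.sample(range(n_samples), batch_size)  # random mini-batch
        step = -lr * grad_fn(theta, batch) + momentum * (theta - prev_theta)
        prev_theta, theta = theta, theta + step
    return theta

# Toy problem (hypothetical): fit y = 3x with a squared-error loss.
data_rng = random.Random(1)
xs = [data_rng.uniform(-1.0, 1.0) for _ in range(200)]
ys = [3.0 * x for x in xs]

def mse_grad(theta, batch):
    # derivative of mean((theta * x - y)^2) over the mini-batch
    return sum(2.0 * xs[i] * (theta * xs[i] - ys[i]) for i in batch) / len(batch)

theta_hat = sgdm(mse_grad, 0.0, len(xs))
```

The momentum term reuses the previous displacement θ_ℓ − θ_{ℓ−1}, so consecutive mini-batch gradients that point in similar directions accumulate, while the randomness of each mini-batch is what produces the epoch-to-epoch fluctuations discussed in Section 3.1.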

With regard to the initialization of weights, we use the Glorot initializer [59]. This scheme independently samples values from a uniform distribution with zero mean and a variance given by , where and for convolutional layers, and and for the fully connected layer (recall that is the number of kernels of dimension and the vector input to the fully connected layer). All biases, on the other hand, are initialized with zeros.
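The Glorot scheme can be sketched in a few lines of Python. We assume the usual uniform variant, U(−a, a) with a = √(6/(n_in + n_out)), whose variance a²/3 equals 2/(n_in + n_out). For a convolutional layer, we take n_in and n_out as the kernel area times the number of input channels and the kernel area times the number of kernels, respectively, which is our reading of the convention described above. The 3 × 3 kernels, single channel, and 20 kernels are hypothetical.

```python
import math
import random

def glorot_uniform(fan_in, fan_out, count, seed=0):
    """Sample `count` weights from U(-a, a), a = sqrt(6 / (fan_in + fan_out)).
    Mean 0, variance a**2 / 3 = 2 / (fan_in + fan_out)."""
    rng = random.Random(seed)
    a = math.sqrt(6.0 / (fan_in + fan_out))
    return [rng.uniform(-a, a) for _ in range(count)]

def conv_fans(kernel_h, kernel_w, in_channels, num_kernels):
    """Fan-in and fan-out of a convolution layer (assumed convention): kernel
    area times input channels, and kernel area times number of kernels."""
    area = kernel_h * kernel_w
    return area * in_channels, area * num_kernels

# Hypothetical first convolution: 3x3 kernels, one input channel, 20 kernels.
fan_in, fan_out = conv_fans(3, 3, 1, 20)
weights = glorot_uniform(fan_in, fan_out, 100_000)
biases = [0.0] * 20  # biases start at zero
```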

Finally, whether we work with data from the H1 or L1 detector, will be significantly greater than ; nevertheless, its specific value depends on our global validation technique, explained in Section 2.7.

2.7. Global and Local Validation

For this research, we only used real LIGO strain data with a given and limited number of CBC hardware injections, removing beforehand the instrumental freedom to generate an arbitrarily diverse bank of GW templates based on software injections with numerical and/or analytical templates. With this approach, we intentionally adopt a limitation under which we cannot generate big datasets, so global validation techniques based on resampling are required to reach good statistical confidence and perform fair model evaluations. Even when using synthetic data from numerical relativity, because of current limitations in system resources [60], the order of magnitude of generated templates is still quite small compared to the big data regime [61], with volumes of petabytes, exabytes, or even more; hence, the aforementioned techniques are still required. For these reasons, we implemented the k-fold CV technique [62], which consists of the following recipe: (i) split the original dataset into k nonoverlapping subsets to perform k trials; then (ii) in each trial, use a different subset for testing, leaving the rest of the data for training; and finally (iii) compute the average of each performance metric across all trials.

It is known that the value of k in k-fold CV defines a trade-off between bias and variance. When k equals the number of samples (i.e., leave-one-out cross-validation), the estimate is nearly unbiased, but its variance can be high; when k is small, the estimate has lower variance, but bias could be a problem [63]. For several classic ML algorithms, previous works have suggested that k = 10 represents the best trade-off option [64,65,66], so we decided to take this value as a first approach. Moreover, following [67,68], we decided to perform 10 repetitions of the 10-fold cross-validation process [55], in order to reach good stability of our estimates, to present fair values of the cross-entropy function given the stochastic nature of our resampling approach and, more importantly, to obtain information about the distribution of accuracy (and other standard metrics), which involves uncertainty.
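The resampling regime, i.e., repeated k-fold CV yielding one mean accuracy per repetition, can be sketched in Python as follows. The `dummy_evaluate` callback is a hypothetical stand-in for training a CNN on the training indices and returning its test accuracy; here it only returns a noisy constant so that the example runs without any model.

```python
import random
import statistics

def k_fold_indices(n_samples, k, rng):
    """Randomly split the indices 0..n_samples-1 into k nonoverlapping folds
    whose sizes differ by at most one."""
    idx = list(range(n_samples))
    rng.shuffle(idx)
    return [idx[i::k] for i in range(k)]

def repeated_k_fold(n_samples, k, repeats, evaluate, seed=0):
    """Run `repeats` independent k-fold CV experiments and return one mean
    (over folds) accuracy per repetition."""
    rng = random.Random(seed)
    run_means = []
    for _ in range(repeats):
        folds = k_fold_indices(n_samples, k, rng)  # new random split each run
        fold_accuracies = []
        for i, test_idx in enumerate(folds):
            train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
            fold_accuracies.append(evaluate(train_idx, test_idx))
        run_means.append(statistics.mean(fold_accuracies))
    return run_means

# Hypothetical stand-in for "train a CNN on train_idx, return its accuracy on test_idx".
noise = random.Random(42)
def dummy_evaluate(train_idx, test_idx):
    return 0.8 + noise.uniform(-0.02, 0.02)

runs = repeated_k_fold(n_samples=200, k=10, repeats=10, evaluate=dummy_evaluate)
print(statistics.mean(runs), statistics.stdev(runs))  # mean of mean accuracies, spread
```

The function returns the full distribution of per-run mean accuracies, so the mean of mean accuracies and its dispersion can be reported together rather than as a single value.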

Moreover, k-fold CV helps to alleviate the artificiality introduced by a balanced dataset, because the initial splitting into k folds is totally random. Gebhard et al. [34] warn that CNNs not only capture the GW templates themselves, but also carry over to the test stage the exact probability distribution given in the training set. This claim is true, but it is important to keep in mind that working with balanced datasets as a first approach is simply motivated by the fact that many standard performance metrics give excessively optimistic results for the more frequent classes in imbalanced datasets, and dealing with arbitrarily imbalanced datasets is not a trivial task. In any case, including k-fold CV as random resampling, starting from a balanced dataset, is desirable for statistical purposes.

With the dataset sizes detailed at the end of Section 2.3.3 and 10-fold CV, we have a training set of samples from the H1 detector and of samples from the L1 detector. Consequently, for H1 data and for L1 data.

Local validation, that is, validation performed within a learning epoch, is also a crucial ingredient of our methodology. In particular, our algorithm splits the training set into two subsets, one for training itself and the other for validation. Validation works as a preparatory mini-test that is useful for monitoring learning and generalization during the training process.

With regard to regularization techniques, local validation was performed once every epochs, and the cross-entropy was monitored with a validation patience (a value set by hand), which simply means that, if occurs p times during validation, the training process is automatically stopped. Besides, to avoid overfitting, the training samples were randomized before training and before validation, only in the first learning epoch. This randomization is performed such that the link between each training image and its respective class is left intact, because we do not want to “mislead” our CNN when it learns from known data.
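The validation patience rule can be sketched as a simple early-stopping check in Python. We assume a common reading of the rule: a validation check counts as a failure when the validation cross-entropy is greater than or equal to the smallest value seen so far, the counter resets whenever a new minimum is reached, and training stops once p consecutive failures accumulate. This sketch is illustrative and is not the toolbox's internal implementation; the loss history is hypothetical.

```python
def patience_stop(val_losses, patience):
    """Return the index of the validation check at which training stops,
    or None if the rule never triggers.

    Assumed rule: a check is a failure when the validation loss is >= the best
    (smallest) loss seen so far; `patience` consecutive failures stop training.
    """
    best = float("inf")
    failures = 0
    for check, loss in enumerate(val_losses):
        if loss < best:
            best, failures = loss, 0  # new minimum: reset the counter
        else:
            failures += 1
            if failures >= patience:
                return check
    return None

# Hypothetical validation cross-entropies, one per validation check.
history = [0.69, 0.52, 0.47, 0.49, 0.45, 0.46, 0.47, 0.48, 0.44]
print(patience_stop(history, patience=3))  # prints 7
```

A larger patience tolerates isolated bumps in the validation loss, such as the anomalous peaks discussed in Section 3.1, at the cost of a few extra epochs.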

2.8. Performance Metrics

Once our CNN is trained, the goal is to predict the classes of unseen data, i.e., data on which the model was not trained, and, of course, to achieve good performance. Hence, performance metrics in the test are especially crucial. Considering the last layer of our CNNs, a natural metric to monitor during training and validation is the cross-entropy. The other metrics that we use come from counting predictions. As our task is a binary classification, these metrics can be computed from the elements of a confusion matrix, namely true positives (TP), false positives (FP) or type I errors, true negatives (TN), and false negatives (FN) or type II errors, as detailed in Table 2.
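As a minimal Python sketch of how the confusion-matrix counts translate into standard metrics, the code below thresholds the softmax score of class 2 and computes accuracy, precision, recall, fall-out, and the F1 score. Treating class 2 (noise + GW) as the positive class and using a 0.5 threshold are assumptions made for illustration; the scores and labels are hypothetical.

```python
def confusion_counts(scores, labels, threshold=0.5):
    """Count TP, FP, TN, FN with class 2 (noise + GW) as the positive class.
    `scores` are softmax probabilities of class 2; `labels` are 1 or 2."""
    tp = fp = tn = fn = 0
    for s, y in zip(scores, labels):
        predicted_positive = s >= threshold
        if predicted_positive and y == 2:
            tp += 1
        elif predicted_positive:
            fp += 1  # type I error
        elif y == 2:
            fn += 1  # type II error
        else:
            tn += 1
    return tp, fp, tn, fn

def metrics(tp, fp, tn, fn):
    """Standard metrics derived from the confusion matrix (nan when undefined)."""
    precision = tp / (tp + fp) if tp + fp else float("nan")
    recall = tp / (tp + fn) if tp + fn else float("nan")
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,                                  # true positive rate
        "fall_out": fp / (fp + tn) if fp + tn else float("nan"),  # false positive rate
        "f1": (2 * precision * recall / (precision + recall)
               if precision + recall else float("nan")),
    }

# Hypothetical class-2 scores and true labels for six test images.
scores = [0.9, 0.8, 0.3, 0.6, 0.2, 0.1]
labels = [2, 2, 2, 1, 1, 1]
print(metrics(*confusion_counts(scores, labels)))
```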

Table 2.

Confusion matrix for a binary classifier and its consequent standard performance metrics. In general, to have a complete view of the classifier, it is suitable to draw on at least accuracy, precision, recall, and fall-out. The F1 score and G mean1 are useful metrics for imbalanced multilabel classification, and they also provide important moderation features that help in model evaluation. Each metric has its own probabilistic interpretation.

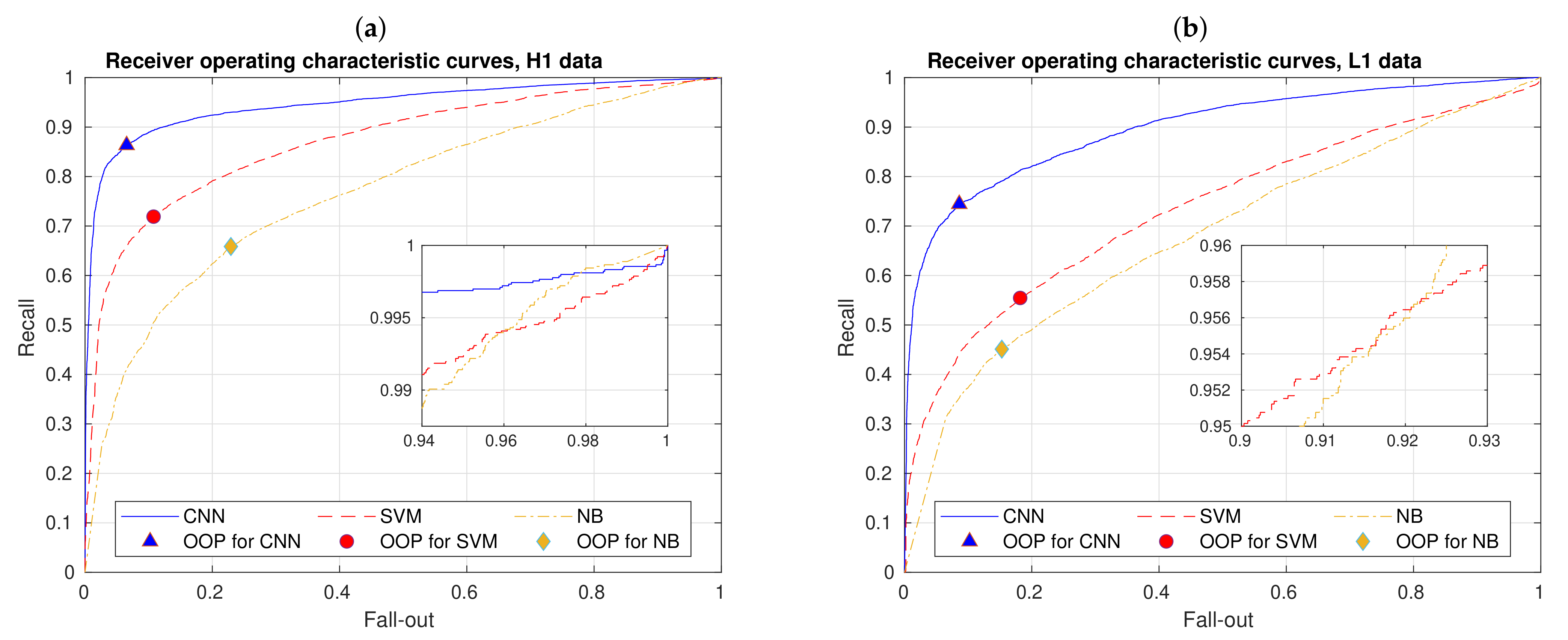

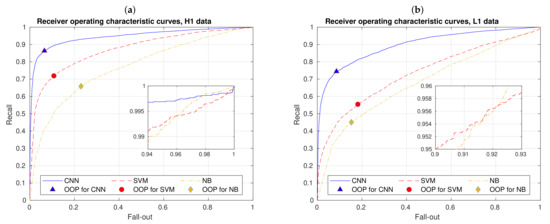

Accuracy is the most widely used standard metric in binary classification. Moreover, all of the metrics shown in the right panel of Table 2 depend on a chosen threshold as a crucial part of our decision criterion. Depending on this threshold and on the output score for an input image sample, our CNN algorithms assign class 1 or class 2. Although the threshold is fixed for all these metrics, one can also vary it to generate the well-known Receiver Operating Characteristic (ROC) [69] and Precision-Recall [70] curves, among others. The first curve describes the performance of the CNN in the fall-out (or false positive rate) vs. recall (or true positive rate) space, and the second one in the recall vs. precision space. Given that the whole curves are generated by varying the threshold, each point on those curves represents the performance of the CNN for a specific threshold. As we worked with balanced datasets, ROC curves are the most suitable option. In particular, for a probabilistic binary classifier, each point of its ROC curve is given by the ordered pair:

where . Finally, the F1 score and G mean1 each summarize a pair of other metrics in a single value and are, in general, useful for imbalanced multiclass classification. We decided to compute these two metrics because they provide useful moderation features for performance evaluation; more details are presented in Section 3.3.
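Varying the threshold to trace the ROC curve can likewise be sketched in Python. The code sweeps the threshold over every distinct output score (plus an infinite threshold that predicts nothing as positive), records the (fall-out, recall) pair at each value, and integrates the resulting curve with the trapezoidal rule to obtain the area under the curve (AUC), which is 0.5 for a classifier that cannot separate the classes. Class 2 is again assumed to be the positive class, and the scores in the usage example are hypothetical.

```python
def roc_points(scores, labels, positive=2):
    """Sweep the decision threshold over every distinct score (plus +inf) and
    return (fall-out, recall) pairs, i.e. (FPR, TPR), sorted by increasing FPR."""
    n_pos = sum(1 for y in labels if y == positive)
    n_neg = len(labels) - n_pos
    points = []
    for t in sorted(set(scores)) + [float("inf")]:
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == positive)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y != positive)
        points.append((fp / n_neg, tp / n_pos))
    return sorted(points)

def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))

# Hypothetical class-2 scores and true labels.
scores = [0.9, 0.8, 0.3, 0.6, 0.2, 0.1]
labels = [2, 2, 2, 1, 1, 1]
print(auc(roc_points(scores, labels)))  # approximately 0.889
```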

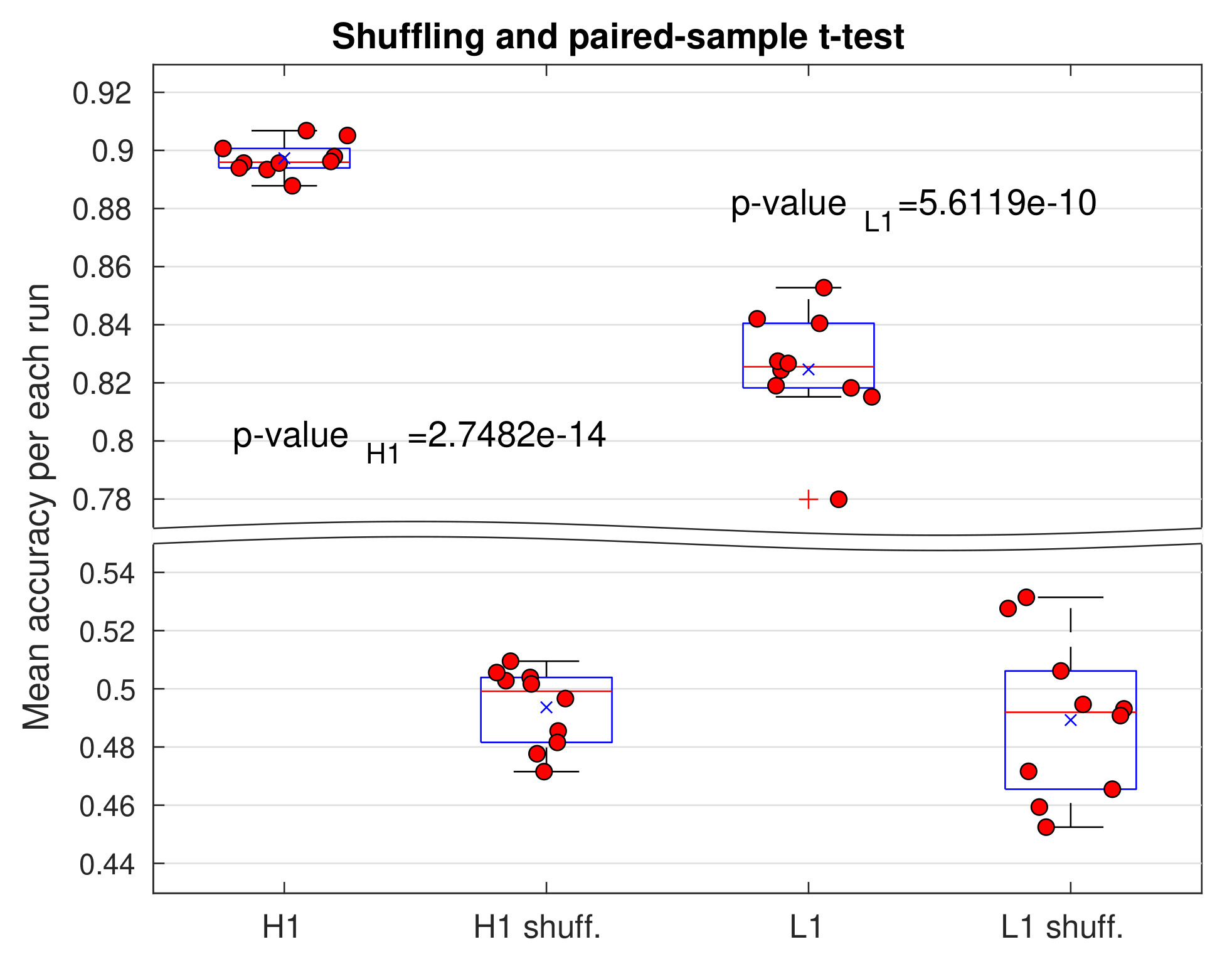

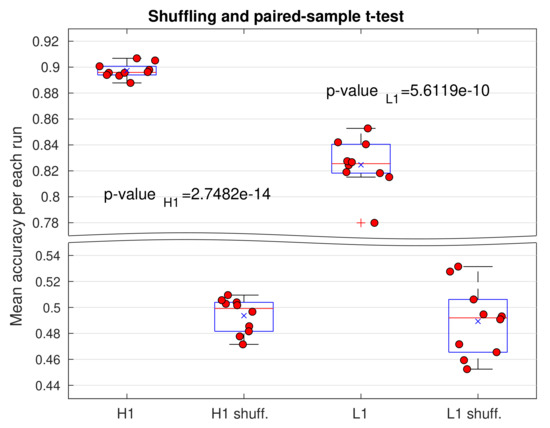

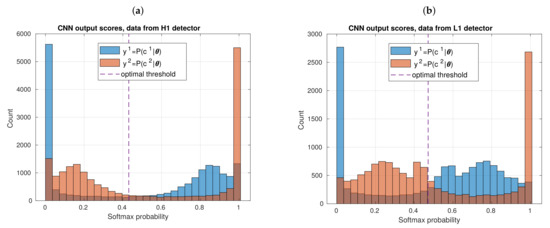

We also perform a shuffling test to ensure that our results are statistically significant. Our algorithm already randomizes the training set before training and before validation to prevent overfitting, as mentioned in Section 2.7. However, the shuffling applied here is more radical, because it breaks the link between training images and their respective classes; it is performed by a random permutation over the indices i of the matrices only, which belong to training set , before each training run. Then, if this shuffling is present and our results are truly significant, we expect the testing accuracy to be lower than that computed without shuffling, reaching values around 0.5, as this is the chance level for a binary classifier on a balanced dataset. This will be explored visually by looking at the dispersion of mean accuracies in boxplots and, more formally, confirmed by a paired-sample t-test of statistical inference. For the distributions of mean accuracies with and without shuffling, we define the sets and , respectively. Then, with the means of these sets, namely and , the goal is to test the null hypothesis ; we then only need to compute the p-value, i.e., the probability of obtaining accuracies at least as extreme as those observed, assuming that the null hypothesis is true, and compare it with a given level of significance. Section 3.5 presents more details about shuffling and the consequent statistical tests.
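The shuffling test and the paired-sample t-test can be sketched with the Python standard library. Permuting the images while keeping the labels fixed, as described above, is equivalent to permuting the labels, which is what `shuffle_labels` does. The `paired_t_statistic` function computes the t statistic of the per-run differences between mean accuracies without and with shuffling. Since the standard library provides no Student's t distribution, this sketch compares the statistic against the tabulated two-sided critical value for 9 degrees of freedom (10 runs) at a 0.05 significance level, about 2.262, instead of computing an exact p-value. The per-run accuracies in the usage example are hypothetical.

```python
import math
import random
import statistics

def shuffle_labels(labels, seed=None):
    """Randomly permute the class labels, breaking the image-label link
    while leaving the images themselves in place."""
    shuffled = list(labels)
    random.Random(seed).shuffle(shuffled)
    return shuffled

def paired_t_statistic(without_shuffle, with_shuffle):
    """Paired-sample t statistic on per-run mean accuracies.
    H0: the mean of the paired differences is zero. Returns (t, df)."""
    diffs = [a - b for a, b in zip(without_shuffle, with_shuffle)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1

# Two-sided critical value of Student's t for df = 9 at a 0.05 significance level.
T_CRIT_DF9 = 2.262

# Hypothetical per-run mean accuracies (10 runs) without and with shuffling.
acc_plain = [0.81, 0.80, 0.83, 0.79, 0.82, 0.81, 0.80, 0.82, 0.81, 0.80]
acc_shuffled = [0.50, 0.49, 0.51, 0.50, 0.52, 0.48, 0.50, 0.51, 0.49, 0.50]
t, df = paired_t_statistic(acc_plain, acc_shuffled)
reject_h0 = df == 9 and abs(t) > T_CRIT_DF9
```

Pairing the runs removes the run-to-run variability shared by both conditions, so the test focuses on the difference that the shuffling itself produces.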

3. Results and Discussion

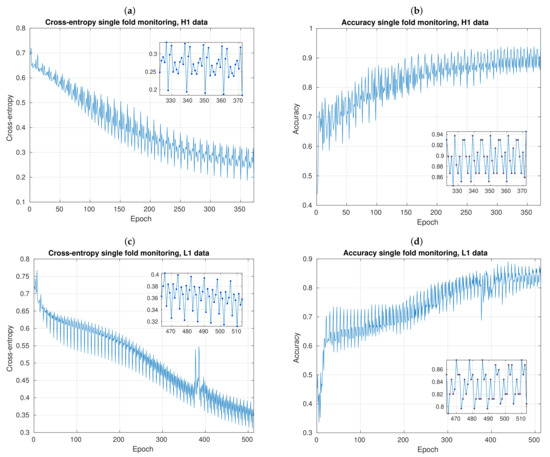

3.1. Learning Monitoring per Fold

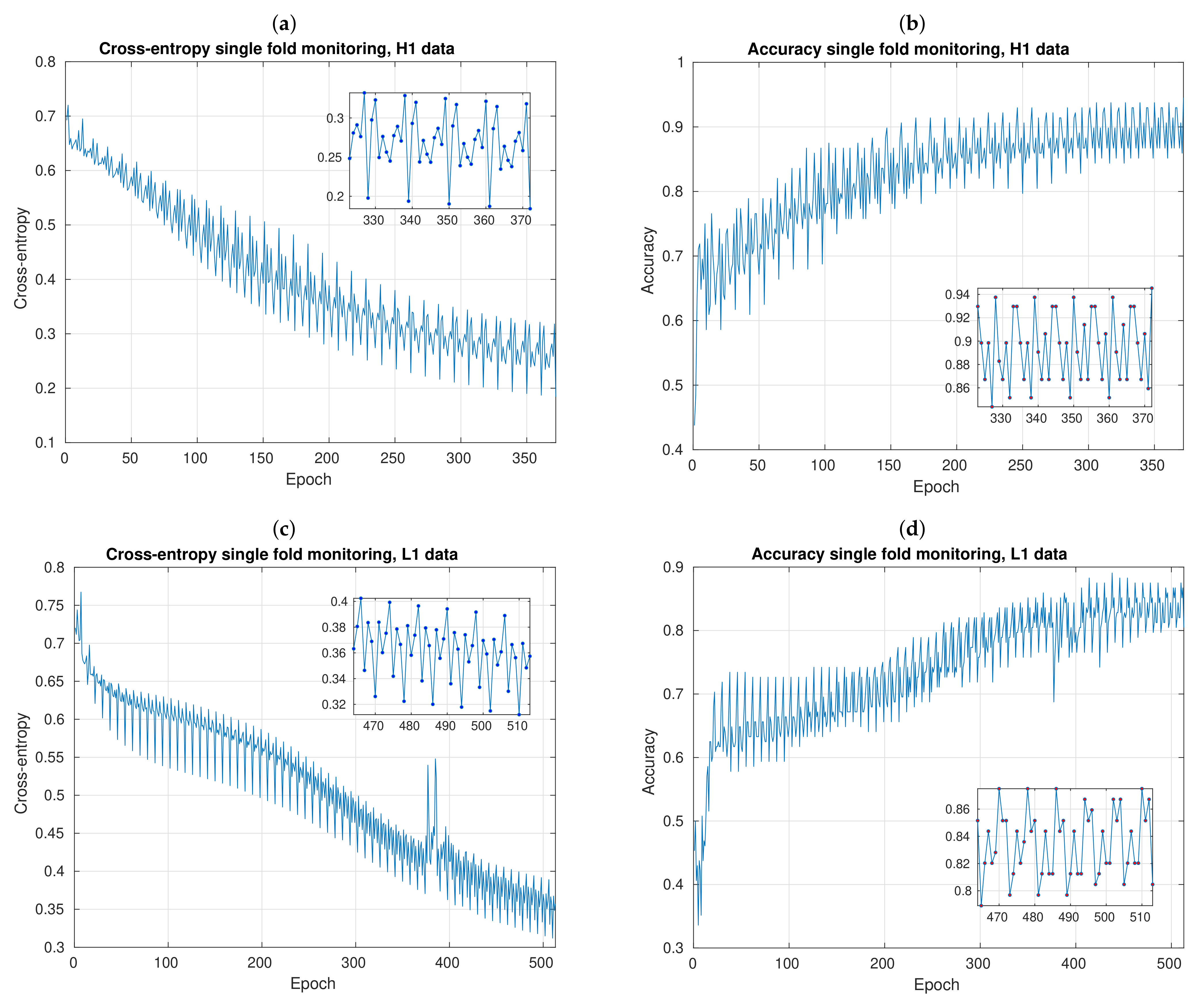

While the mini-batch SGD was running, we monitored the evolution of cross-entropy and accuracy over the epochs. This is the very first check to ensure that our CNNs were properly learning from the data and that our local validation criteria stopped the learning algorithm at the right moment. If our CNNs were correctly implemented, we expected the cross-entropy to be minimized, reaching values as close as possible to 0, and the accuracy to reach values as close as possible to 1. If this check gave wrong results, there would be no point in computing subsequent metrics.

Figure 6 shows two representative examples of this check, using data from the H1 and L1 detectors. Both were performed during a single fold of a 10-fold CV experiment, from the first to the last mini-batch SGD epoch. Here, we used a time resolution of s with 2 stacks and 20 kernels in the convolutional layers. Notice that the cross-entropy shows decreasing trends (Figure 6a,c) and the accuracy increasing trends (Figure 6b,d). The total number of epochs was 372 for H1 data and 513 for L1 data, which suggests that the CNN has greater difficulty learning its parameters from L1 data than from H1 data. When the CNN finishes its learning process, the cross-entropy and accuracy reach values of and , respectively, using H1 data, and and , respectively, using L1 data.

Figure 6.

Evolution of cross-entropy (left panels: (a,c)) and accuracy (right panels: (b,d)) as a function of the epochs of the learning process, using data from the H1 detector (upper panels) and the L1 detector (lower panels). Overall, the cross-entropy decreases and the accuracy increases, even if there is a clear stochastic component due to the mini-batch SGD learning algorithm. Here, we set the length of the sliding windows to s, and the CNN architecture has 2 stacks and 20 kernels. Some anomalous peaks appear when data from the L1 detector are used, but they do not affect the general trends of the mentioned metrics.

Notice that fluctuations appear in all plots shown in Figure 6. This is actually expected since, in the mini-batch SGD algorithm, a random subset of samples is taken at each iteration, which introduces stochastic noise. Besides, when using data from the L1 detector, some anomalous peaks appear between epochs 350 and 400, but this is not a problem, because the CNN continues its learning process normally and the trends in both metrics are not affected. This resilience is a consequence of our validation patience criterion, which is implemented to prevent our CNN algorithm from stopping prematurely and to avoid manually adjusting the total number of epochs for each learning fold.

Still focusing on the SGD fluctuations, the zoomed plots in Figure 6 show their order of magnitude, i.e., the highest peak minus the lowest peak. When we work with H1 data, cross-entropy fluctuations are about (Figure 6a) and accuracy fluctuations are about (Figure 6b). On the other hand, when we learn from L1 data, both cross-entropy and accuracy fluctuations are about (Figure 6c,d). Here, it should be stressed that, although mini-batch SGD perturbations contribute their own uncertainty, when we compute mean accuracies over all folds in Section 3.2, we will see that the magnitude of these perturbations does not fully account for the data dispersion present in the distribution of mean accuracies.

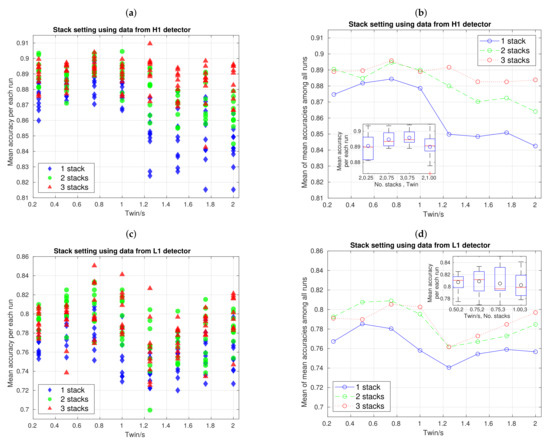

3.2. Hyperparameter Adjustments

Our CNN models introduce several hyperparameters, namely the number of stacks, the size and number of kernels, the size of pooling regions, the number of layers in each stack, stride, and padding, among many others. A systematic study of all hyperparameters is beyond the scope of our research. However, given the CNN architectures shown in Table 1, we decided to study and adjust two of them: the number of stacks and the number of kernels in the convolutional layers. In addition, although it is not a hyperparameter of the CNN, the time length of the samples is a resolution that also needed to be set to reach optimal performance, so we included it in the following analyses.

A good or bad choice of hyperparameters will affect the performance, and this choice introduces uncertainty. Nonetheless, once we find a set of hyperparameters defining an optimal setting, regardless of how sophisticated our adjustment method is, the goal is to use only this setting for predictions. That is to say, once we find optimal hyperparameters, we remove the randomness that would be introduced into predictions if we ran our CNN algorithms with different hyperparameters. For this reason, we say that the uncertainty present in hyperparameter selection sits at a level prior to the intrinsic uncertainties of an already chosen setting; in the Bayesian formalism, it plays the role of a prior belief. The latter uncertainties are riskier, because they introduce stochasticity into all predictions of our models, even though we are working with a fixed hyperparameter setting.

In any case, we emphasize that our methodology for hyperparameter selection is robust. Thanks to our white-box approach, a clear understanding of the internal behavior of our CNNs was gained and, based on this understanding, we heuristically proposed a reduced set of hyperparameters on which to perform a transparent statistical exploration of meaningful values, obtaining good results, as will be seen. This methodology departs from the blind brute-force approach of performing unnecessarily large and expensive explorations (many hyperparameter settings are not worth the time spent on them), and even further from the naive perspective of treating model decisions as a superficial detail and/or as something opaquely given without a clear exploration.

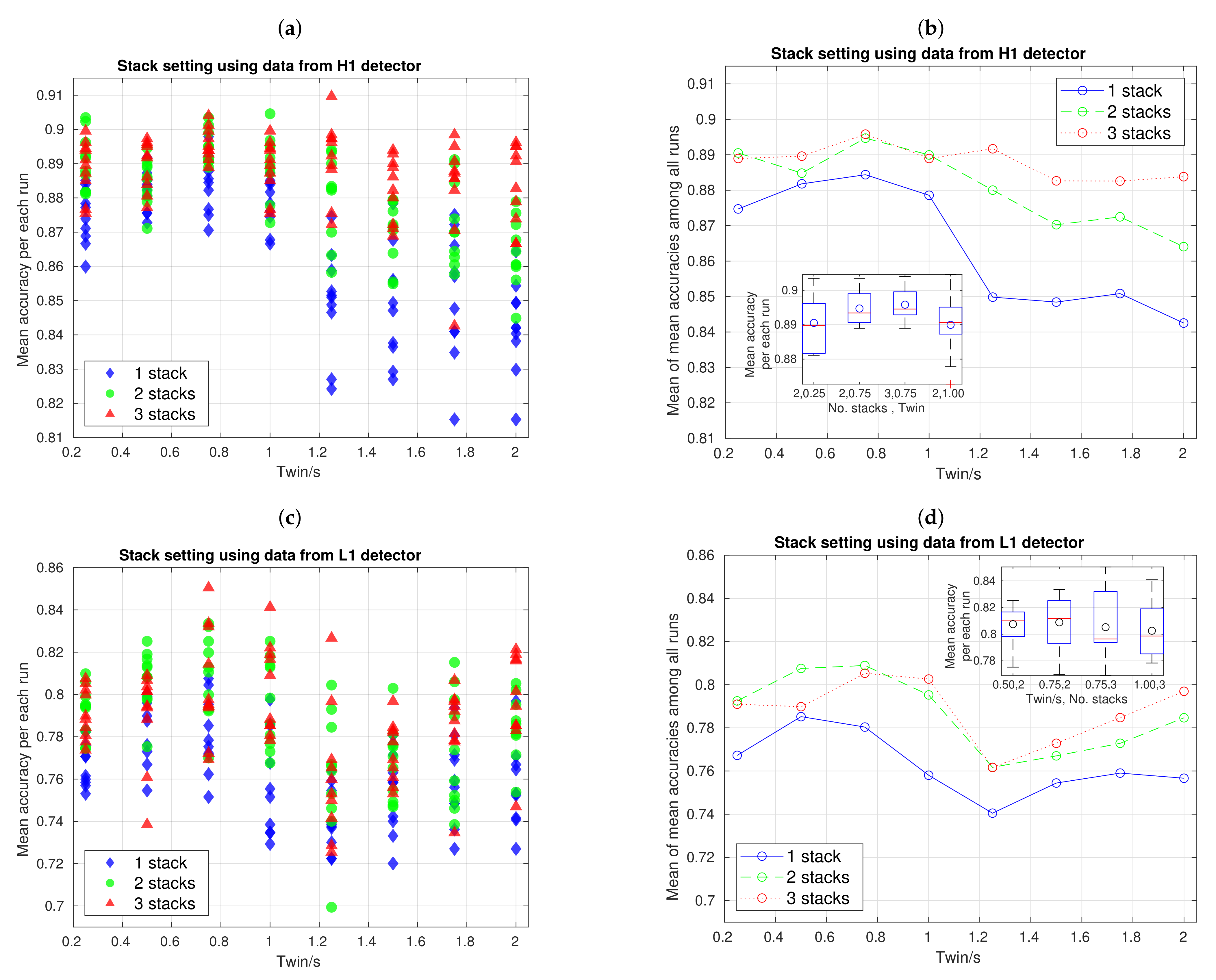

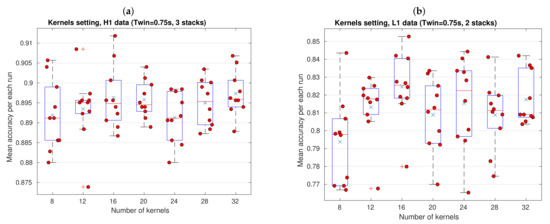

The first hyperparameter adjustment is shown in Figure 7, and it was implemented to find the optimal number of stacks and time length resolution, according to the resulting mean accuracies. The left panels (Figure 7a,c) show the distribution of mean accuracy over all 10 repetitions, or runs, of the entire 10-fold CV experiment, as a function of . Each of these mean accuracies, which we denote as , is the average of all fold accuracies of the th run of the 10-fold CV. In addition, the right panels (Figure 7b,d) show the mean of mean accuracies over all 10 runs, i.e., , as a function of . Inside the right plots, we have included small boxplots that, as will be seen next, are useful for studying the dispersion and skewness of the mean accuracy distributions; circles inside the boxplots are the distribution mean values. The line plots show the contributions of our three CNN architectures, i.e., with 1, 2, and 3 stacks.

Figure 7.

Hyperparameter adjustment to find the best CNN architecture, i.e., number of stacks, and time resolution . Left panels, (a,c), show all samples of mean accuracies, and right panels, (b,d), the mean of mean accuracies over all runs, all as functions of for 1, 2, and 3 stacks in the CNN. Small boxplots are included inside the right plots to provide clearer information about the dispersion and skewness of the distributions of mean accuracies that are most relevant and from which we chose our optimal settings. Based on this, we concluded that s, with 3 stacks (H1 data) and with 2 stacks (L1 data), are optimal settings. We used 20 convolutional kernels, repeating a 10-fold CV experiment 10 times.

Consider the top panels of Figure 7 for H1 data. Notice from Figure 7b that, for all CNN architectures, the mean of mean accuracies tends to decrease when s, and this decrease is more pronounced with fewer stacks. Besides, when s, a slight increase appears, even if local differences are of the order of SGD fluctuations. In short, given our mean accuracy sample dataset, the highest mean of mean accuracies, about , occurs when s with a CNN of 3 stacks. Then, to decide whether this setting is optimal, we need to explore Figure 7a together with the boxplots inside Figure 7b. From Figure 7a, we see that not only the mentioned setting gives high mean accuracy values, but also s and s, both with 2 stacks, s with 2 and 3 stacks, and even s with 3 stacks; all these settings reach a mean accuracy greater than . The setting s with 3 stacks can be discarded because its maximum mean accuracy is clearly an outlier and, to elucidate which of the remaining settings is optimal, we need to explore the boxplots.

Here, it is crucial to understand that the optimal setting to choose actually depends on what we specifically prioritize. Let us focus on Figure 7b. If dispersion does not worry us too much and we want a high probability of occurrence for many high values of mean accuracy, the setting of s with 2 stacks is the best, because its distribution has a slightly negative skewness, concentrating most of the probability mass at upper mean accuracy values. On the other hand, if we prefer more stable estimates with less dispersion at the expense of a clear positive skewness (in fact, a high mass concentration in a region that does not reach mean accuracy values as high as the range from the median to the third quartile of the previous setting), the setting of s with 3 stacks is the natural choice. In practice, we would like to work with greater dispersions if they help to reach the highest mean accuracy values but, as all our boxplots have similar maximum values, we decided to keep our initial choice of s with 3 stacks for H1 data.

From Figure 7a, it can be seen that, regardless of the number of stacks, data dispersion in s is greater than in s, even if in the former region dispersion tends to decrease slightly as we increase the number of stacks. This is a clear visual hint that, together with the evident decrease of mean accuracy as increases, motivates discarding all settings for s. However, this hint is not present in the bottom panels, in which a trend of decrease and then increase appears (clearer in the right plot), and the dispersions of the mean accuracy distributions are similar for almost all time resolutions. For this reason, although the procedure for hyperparameter adjustment is the same as for the upper panels, one should be cautious: decisions here are more tentative, especially if we expect to increase the amount of data.

In any case, given our current L1 data and based on the scatter plot in Figure 7c and the line plot of the mean of mean accuracies in Figure 7d, the best performance(s) should be among the settings of s with 2 stacks, s with 2 and 3 stacks, and s with 3 stacks. Now, exploring the boxplots in Figure 7d, we notice that, even if the settings of s with 3 stacks reach the highest mean accuracy values, their positive skewness, which concentrates samples toward lower mean accuracies, is undesirable. Therefore, the two remaining settings, which in fact have negative skewness toward higher mean accuracy values, are the optimal options, and again choosing one or the other depends on the extent to which we tolerate data dispersion. Unlike in the upper panels, here a larger dispersion increases the probability of reaching higher mean accuracy values; therefore, we finally decide to work with the setting of s with 2 stacks for L1 data.

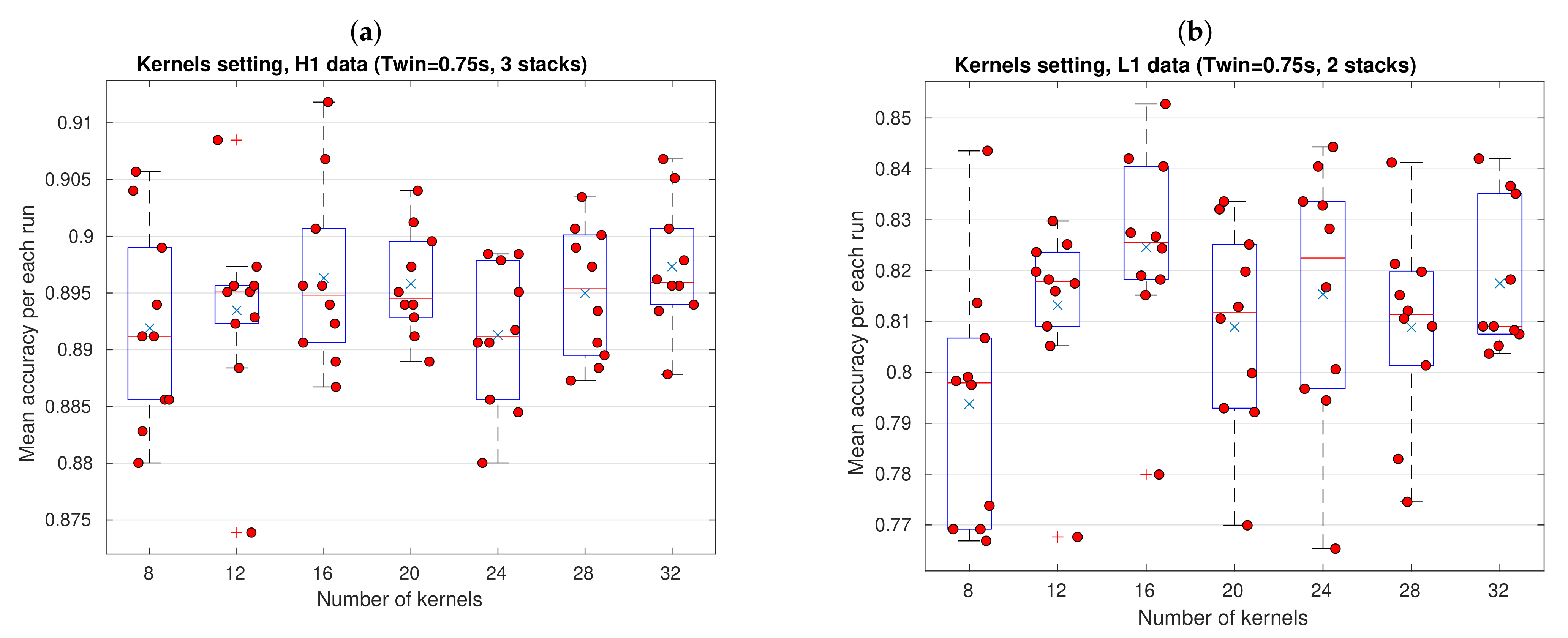

To find the optimal number of kernels in the convolution layers, we performed the adjustment shown in Figure 8, again separately for data from each LIGO detector. Considering the information provided by the previous adjustment, we set s, and the number of stacks to 3 for H1 data and 2 for L1 data. Then, once the 10-fold CV was run 10 times as usual, we generated boxplots for CNN configurations with several numbers of kernels, as anticipated in Table 1, including the mean accuracy of each run (red circles). The average of each boxplot is also included (blue crosses). The markers inside each boxplot were spread randomly along the horizontal direction to avoid visual overlap; this does not mean that samples were obtained with a number of kernels different from those specified on the horizontal axis.
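The "10-fold CV run 10 times" protocol can be sketched in plain Python as below. This is only an illustration under assumptions: the generator `repeated_kfold` and the callback `fit_and_score` are hypothetical names, folds are built by shuffling without stratification, and the CNN training itself is left to the callback. Each repetition contributes one mean accuracy, that is, one marker in a boxplot such as those of Figure 8.

```python
import random


def repeated_kfold(n_samples, k=10, repeats=10, seed=0):
    """Yield (repeat, fold, train_idx, test_idx) for repeated (unstratified) k-fold CV."""
    rng = random.Random(seed)
    indices = list(range(n_samples))
    for r in range(repeats):
        rng.shuffle(indices)  # a fresh random partition for every repetition
        folds = [indices[f::k] for f in range(k)]  # k disjoint folds covering all samples
        for f, test_idx in enumerate(folds):
            test_set = set(test_idx)
            train_idx = [i for i in indices if i not in test_set]
            yield r, f, train_idx, test_idx


def mean_accuracies(n_samples, fit_and_score, k=10, repeats=10, seed=0):
    """Return one mean accuracy per repetition (average over its k folds).

    `fit_and_score(train_idx, test_idx)` is a hypothetical callback that trains
    a fresh model on train_idx and returns its accuracy on test_idx.
    """
    per_repeat = [[] for _ in range(repeats)]
    for r, _, train_idx, test_idx in repeated_kfold(n_samples, k, repeats, seed):
        per_repeat[r].append(fit_and_score(train_idx, test_idx))
    return [sum(accs) / len(accs) for accs in per_repeat]


# Usage with a dummy scorer standing in for CNN training and testing.
accs = mean_accuracies(200, lambda train_idx, test_idx: 0.5)
print(len(accs))  # 10
```

Reshuffling before every repetition is what makes the ten mean accuracies differ even for a deterministic learner, so their spread reflects sensitivity to the data partition as well as SGD randomness.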

Figure 8.

Adjustment to find the optimal number of kernels in CNNs for H1 data (a) and L1 data (b). Both panels show boxplots of the distributions of mean accuracies as a function of the number of kernels, with the values of and the number of stacks found in previous hyperparameter adjustments. Based on the location of mean accuracy samples, dispersion, presence of outliers, and skewness of the distributions, CNN architectures with 32 kernels and 16 kernels are the optimal choices to reach the highest mean accuracy values when working with data from the H1 and L1 detectors, respectively.

Let us concentrate on the kernel adjustment for H1 data in the left panel of Figure 8. From these results, a CNN with 12 kernels gives by far the most stable results, because most of its mean accuracies lie in the region of smallest dispersion: discarding outliers, half of the mean accuracies are concentrated in a tiny interquartile region located near . On the other hand, the CNN configuration with 24 kernels is the least suitable setting of all, not because its mean accuracy values are low per se (values from to are actually good), but because, unlike the other cases, the nearly zero skewness of its distribution does not favor sample values beyond the third quartile. The configuration with 8 kernels has a distribution mean very close to that of the setting with 24 kernels and even reaches two mean accuracy values of about . Nonetheless, given that the settings with 16, 20, 28, and 32 kernels have a mean of mean accuracies greater than or equal to (and, hence, boxplots located toward relatively higher mean accuracy values), these last four configurations offer the best options. In the end, we decided to work with 32 kernels, because this setting combines a whole set of desirable features: the highest mean of mean accuracies, namely , a relatively low dispersion, and a positive skewness defined by a pretty small range from the first quartile to the median.
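The phrase "a positive skewness defined by a pretty small range from the first quartile to the median" can be made quantitative with the quartile (Bowley) skewness, which compares the median-to-Q3 span with the Q1-to-median span. It is offered here only as one possible measure; the text does not state which skewness estimator, if any, was computed, and the function name is hypothetical.

```python
import statistics


def bowley_skewness(sample):
    """Quartile (Bowley) skewness in [-1, 1].

    ((Q3 - Q2) - (Q2 - Q1)) / (Q3 - Q1): positive when the span from Q1 to the
    median is shorter than the span from the median to Q3.
    """
    q1, q2, q3 = statistics.quantiles(sample, n=4, method="inclusive")
    if q3 == q1:  # degenerate sample: no interquartile spread
        return 0.0
    return ((q3 - q2) - (q2 - q1)) / (q3 - q1)


print(bowley_skewness([1, 2, 3, 10, 20]))  # 0.75
```

Because it uses only quartiles, this measure ignores the whiskers and outliers, which suits the boxplot-based reasoning above better than a moment-based skewness that a single outlier can dominate.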

The kernel adjustment for L1 data is shown in the right panel of Figure 8. Here, the situation is easier to analyze, because the performance differences appear visually clearer than those for H1 data. Settings with 8, 20, and 28 kernels lead to mediocre performances, especially the first one, which has a high dispersion with of its samples below mean accuracy. Notice that, as in the adjustment for H1 data, the setting with 12 kernels shows the smallest dispersion (discarding an outlier below ), with mean accuracies from to , and, again, this option would be suitable if we were mainly interested in reaching stable estimates. We decided to pick the setting with 16 kernels, which has the highest distribution mean, , of mean accuracy samples above the distribution mean, an acceptable data dispersion (not counting the clear outlier), and a relatively small region from the minimum to the median.

In summary, based on all of the above adjustments, the best time resolution is s, with a CNN architecture of 3 stacks and 32 kernels when working with data from the H1 detector, and 2 stacks and 16 kernels when working with data from the L1 detector. We use only these hyperparameter settings hereinafter.

Now, let us finish this subsection by reporting an interesting additional result. We can ask to what extent the magnitude of perturbations from the mini-batch SGD algorithm influences the dispersion of the mean accuracy distributions, as mentioned at the end of Section 3.1. Here, we can compare the order of magnitude of the SGD perturbations with the dispersion present in the boxplots. In the previous subsection, we found that, when s and we work with a CNN architecture of 2 stacks and 20 kernels in the convolution layers, the order of magnitude of SGD fluctuations in accuracy is about . Interestingly, this value is much greater than the dispersion of the data distribution shown in the left panel of Figure 7, which reaches a value of , that is to say, times smaller. These results are good news because, apart from showing that the stochasticity of mini-batch SGD perturbations does not entirely define the dispersion of mean accuracy distributions, they suggest that our resampling approach actually smooths the stochastic effects of mini-batch SGD perturbations and, hence, decreases the uncertainty in these distributions. This is a very important result that could serve as motivation and as a standard guide for future works. This motivation is highly relevant, given that very few previous ML/DL works applied to GW detection have transparently reported their results under a resampling regime, i.e., clearly showing the distributions of their performance metrics (for instance, [29,39]). Resampling is a fundamental tool in ML/DL that should be used even if the involved algorithms are deterministic, because there will always be uncertainty given that data are always finite. Moreover, under this regime, it is important to report the distributions of metrics to understand the probabilistic behavior of our algorithms, beyond mere averages or single values from arbitrarily picked runs.
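A toy model helps to see why averaging over folds can shrink SGD-driven fluctuations. Assuming, purely for illustration, that each fold accuracy equals a fixed value plus independent Gaussian noise with standard deviation `sigma` (a stand-in for mini-batch SGD perturbations), the spread of a k-fold mean falls by roughly sqrt(k). The function name and all numbers below are hypothetical.

```python
import math
import random
import statistics


def fold_average_spread(sigma=0.02, k=10, trials=20000, seed=0):
    """Return sigma / std(k-fold mean) under an independent-noise toy model."""
    rng = random.Random(seed)
    true_acc = 0.8  # hypothetical underlying accuracy
    fold_means = []
    for _ in range(trials):
        # k fold accuracies, each perturbed by independent Gaussian "SGD noise"
        folds = [true_acc + rng.gauss(0.0, sigma) for _ in range(k)]
        fold_means.append(statistics.fmean(folds))
    return sigma / statistics.stdev(fold_means)


ratio = fold_average_spread()
print(f"reduction factor ~ {ratio:.2f} (sqrt(10) = {math.sqrt(10):.2f})")
```

In real cross-validation the fold estimates are correlated, because training sets overlap, so the actual reduction is usually smaller than sqrt(k); the sketch only illustrates the direction of the effect reported above, not its measured size.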

3.3. Confusion Matrices and Standard Metrics

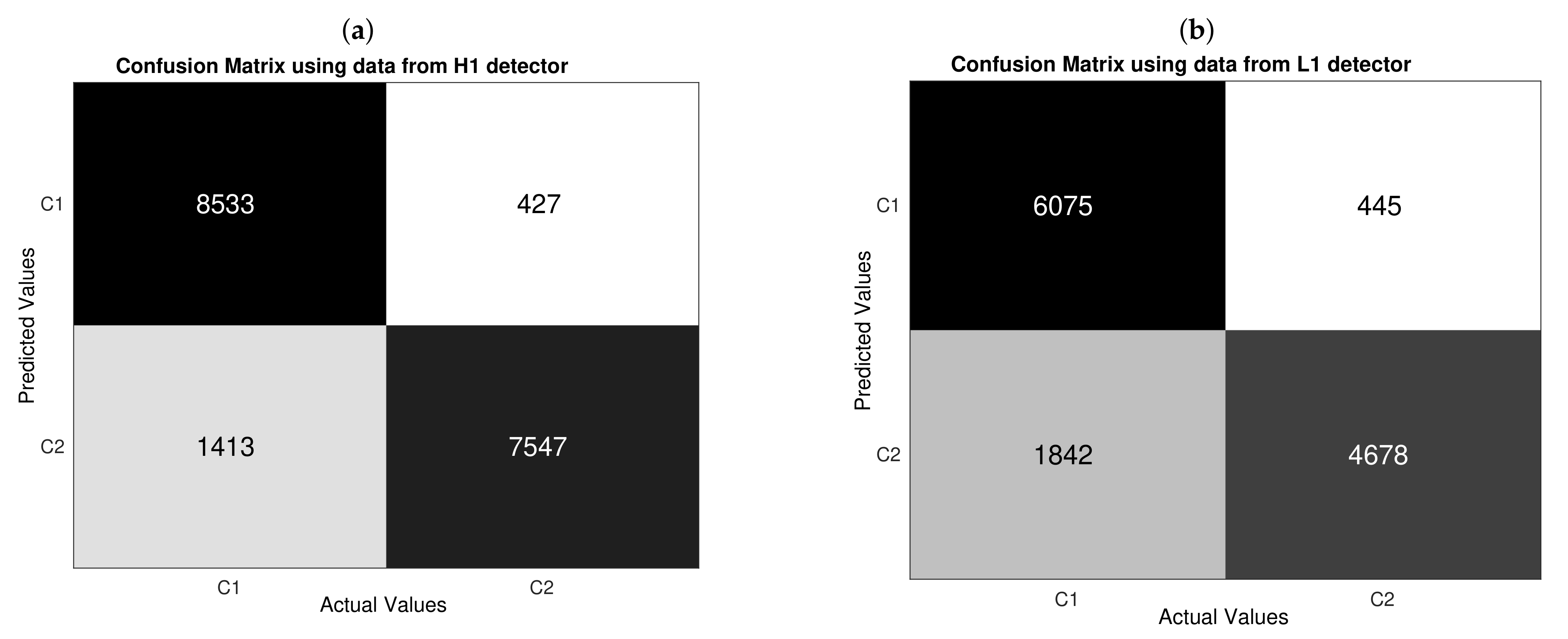

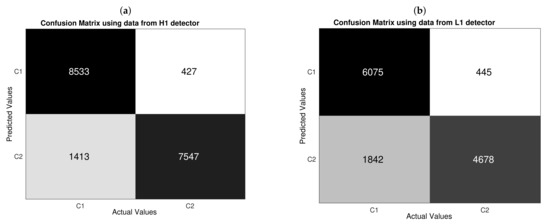

In general, accuracy gives the probability of a successful classification, whether we are classifying a noise alone sample () or a noise plus GW sample (); that is to say, it is a performance metric with a multi-label focus. However, we would like to elucidate to what extent our CNNs are proficient in separately detecting samples of each class, so it is useful to introduce performance metrics with a single-label focus. A standard tool is the confusion matrix, which is shown in Figure 9 for data from each detector. As we are under a resampling regime, each element of the confusion matrices is computed considering the entire set of predictions, which, in turn, results from concatenating all prediction vectors of dimension that are output by the 10 runs of the 10-fold CV.
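Pooling predictions over all runs before filling the confusion matrix can be sketched as follows. The class names `"noise"` and `"gw"`, the layout (rows = actual class, columns = predicted class), and the function names are assumptions made for illustration.

```python
def confusion_matrix(y_true, y_pred, labels=("noise", "gw")):
    """2D count matrix: rows = actual class, columns = predicted class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for actual, predicted in zip(y_true, y_pred):
        matrix[index[actual]][index[predicted]] += 1
    return matrix


def pooled_confusion_matrix(runs, labels=("noise", "gw")):
    """Concatenate (y_true, y_pred) pairs from every CV run, then count once."""
    all_true, all_pred = [], []
    for y_true, y_pred in runs:
        all_true.extend(y_true)
        all_pred.extend(y_pred)
    return confusion_matrix(all_true, all_pred, labels)


# Two hypothetical runs with two test samples each.
runs = [(["noise", "gw"], ["noise", "noise"]), (["gw", "gw"], ["gw", "noise"])]
print(pooled_confusion_matrix(runs))  # [[1, 0], [2, 1]]
```

Counting once over the concatenated vectors weights every test prediction equally, which is what the pooled matrices of Figure 9 describe; averaging per-run matrices would give the same proportions only when all runs have the same number of test samples.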

Figure 9.

Confusion matrices computed from the testing for H1 data (a) and L1 data (b). is the label for noise only and the label for noise plus GW injection. Our CNN has 32 kernels and 3 stacks with H1 data, and 16 kernels and 2 stacks with L1 data. Time resolution is s. Working with H1 data, predictions for are correct and predictions for are incorrect and, working with L1 data, predictions for are correct and predictions for are incorrect. These results show that our CNN classifies noise samples very precisely, at the cost of a non-negligible number of false GW predictions.

A first glance at the confusion matrices shown in Figure 9 reveals that our CNNs perform better in detecting noise alone samples than in detecting noise plus GW samples, because (we use the notation to represent each element of a confusion matrix) the element is greater than for both matrices. Yet, the number of successful predictions of noise plus GW is reasonably good, because it considerably surpasses a totally random performance, which would be described by successful detections, or of the order of of the total negative samples.

Moreover, from Figure 9, based on wrong predictions, the CNNs are more likely to make a type II error than a type I error, because for both confusion matrices. On closer inspection, this result leads to an advantage and a disadvantage. The advantage is that our CNN performs a “conservative” detection of noise alone samples, in the sense that a sample will not be classified as belonging to class 1 unless the CNN is very sure; that is, the CNN is quite precise in detecting noise samples. Using H1 data, of the samples predicted as belonging to this class are actually noise; and, using L1 data, of the samples predicted as belonging to this class are actually noise. This is an important benefit if, for instance, we wanted to apply our CNNs to remove noise samples from a data segment with a narrow margin of error, in addition to other detection algorithms and/or analyses focused on generating triggers. Nonetheless, the disadvantage is that a non-negligible number of noise samples are lost by wrongly classifying them as GW event samples. In terms of false negative rates, of actual noise samples are misclassified with H1 data, and of actual noise samples are misclassified with L1 data. This would be a serious problem if our CNNs were implemented to decide whether an individual trigger is actually a GW signal and not a noise sample, either Gaussian or non-Gaussian.
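The rates discussed above follow from the four counts of a binary confusion matrix once noise is taken as the positive class, as the text does when it calls noise classified as GW a type II error. A minimal sketch, assuming rows = actual (noise, GW) and columns = predicted (noise, GW); the function name is hypothetical and the counts in the example are invented to mimic the qualitative pattern of Figure 9 (more type II than type I errors), not taken from it.

```python
def binary_metrics(matrix):
    """Standard metrics from [[TP, FN], [FP, TN]] with noise as the positive class."""
    (tp, fn), (fp, tn) = matrix
    return {
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
        "noise_precision": tp / (tp + fp),        # predicted noise that is noise
        "false_negative_rate": fn / (tp + fn),    # noise lost as "GW" (type II)
        "false_positive_rate": fp / (fp + tn),    # GW taken as noise (type I)
        "gw_recall": tn / (tn + fp),              # GW samples labeled as GW
    }


hypothetical = [[60, 40], [5, 95]]
for name, value in binary_metrics(hypothetical).items():
    print(f"{name}: {value:.3f}")
```

With these made-up counts, noise precision is high (about 0.92) while 40% of actual noise samples are lost as GW, which is the "conservative" pattern described in the paragraph above.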

Keep in mind that, according to statistical decision theory, there is always a trade-off between type I and type II errors [71]. Hence, given our CNN architecture and datasets, it is not possible to reduce the value of element without increasing the value of element. In principle, keeping the total number of training samples fixed, we could generalize the CNN architecture to a multi-label classification that further specifies the noise, including several kinds of glitches, as implemented in works such as [33,38]. Indeed, starting from our current problem, such a multiclass generalization could be motivated by the aim of redistributing the current false negative counts into new elements of a bigger confusion matrix, where several of these misclassifications would become new successful detections located along a new, longer diagonal. Nonetheless, it is not clear how to keep the bottom edge of the diagonal of the original binary confusion matrix constant when the number of noise classes is increased; not to mention that this approach can be seen as a totally different problem rather than a generalization.
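The trade-off can be illustrated by moving the decision threshold on the softmax score of the noise class: for a two-class softmax, choosing the class with the larger probability is the same as thresholding that score at 0.5. The sketch below uses made-up scores and a hypothetical function name; raising the threshold can only remove type I errors and add type II errors, and lowering it does the opposite.

```python
def error_counts(noise_scores, is_noise, threshold):
    """Return (type_I, type_II) counts with noise as the positive class.

    A sample is predicted as noise when its noise score >= threshold.
    Type I: actual GW predicted as noise. Type II: actual noise predicted as GW.
    """
    type_i = sum(1 for s, y in zip(noise_scores, is_noise) if s >= threshold and not y)
    type_ii = sum(1 for s, y in zip(noise_scores, is_noise) if s < threshold and y)
    return type_i, type_ii


scores = [0.95, 0.90, 0.70, 0.60, 0.40, 0.30, 0.55, 0.20]  # made-up softmax scores
labels = [True, True, True, False, True, False, False, False]  # True = actual noise
for t in (0.3, 0.5, 0.8):
    print(t, error_counts(scores, labels, t))  # (3, 0), (2, 1), (0, 2)
```

Sweeping the threshold over [0, 1] and recording both rates traces out a ROC curve, which is why a single operating point cannot lower one error type without raising the other.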

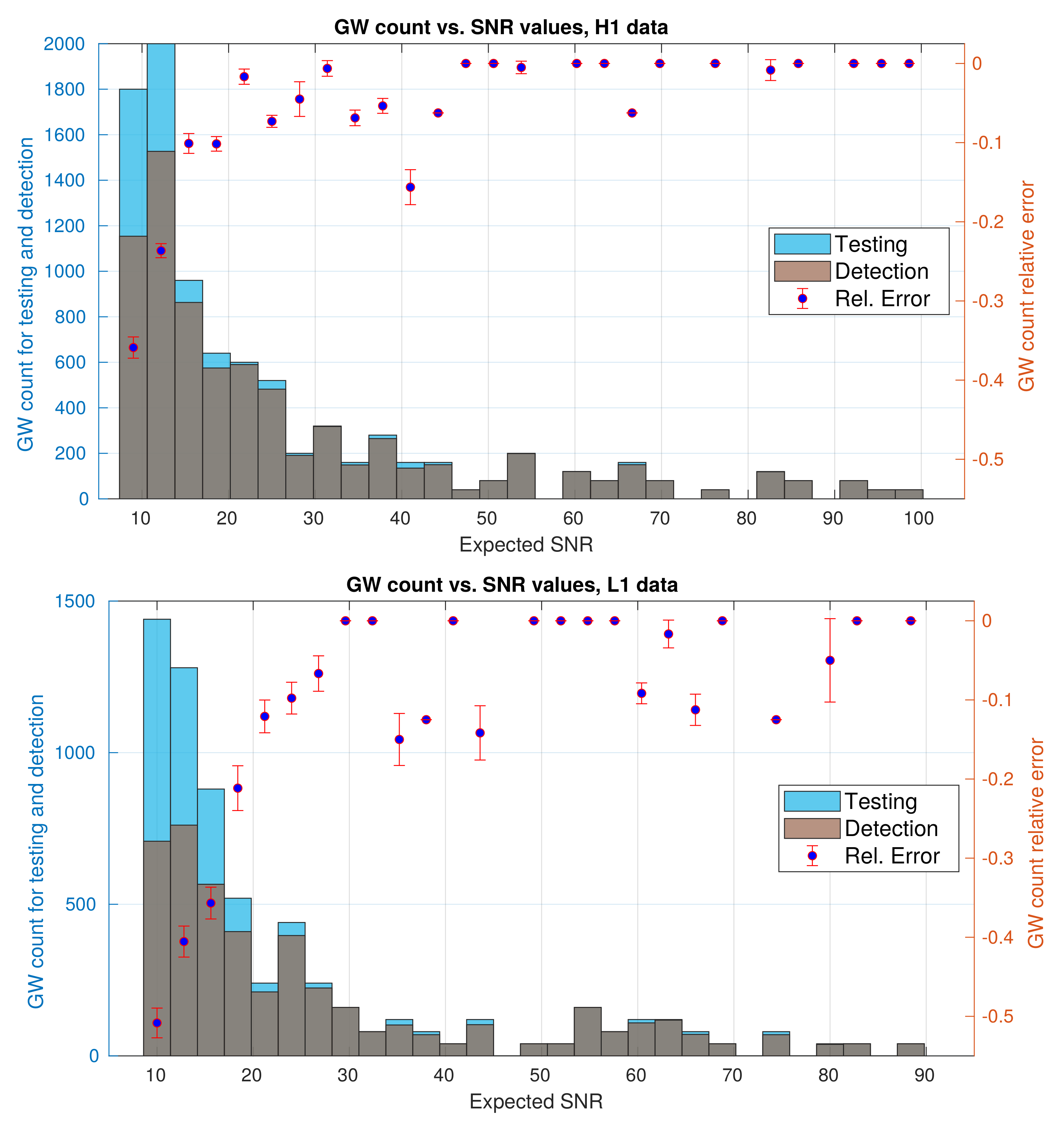

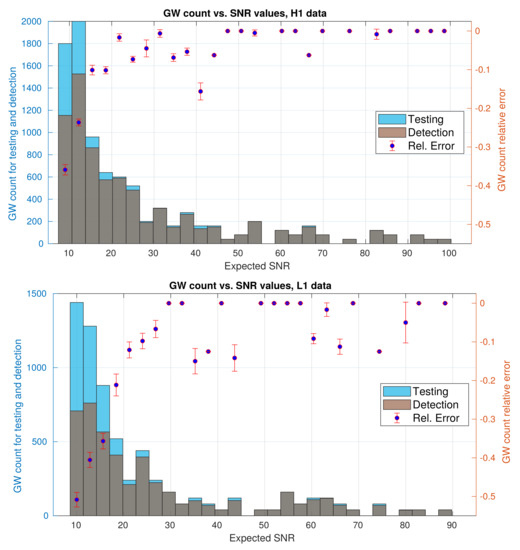

With regard to misclassified GW event samples, although they are considerably fewer than misclassified noise samples, we would like to understand more about them. Therefore, we decided to study the ability of the CNN to detect GW events depending on the values of their expected SNR, which are provided with the LIGO hardware injections. The results are shown in Figure 10: the upper panel for data from the H1 detector, and the lower panel for data from the L1 detector. Both panels include a blue histogram of actual (injected) GW events from the testing set, a gray histogram of GW events detected by the CNN, and the bin-by-bin discrepancy between both histograms as scatter points. As a first approach, we defined this bin-by-bin discrepancy as the relative error:

$$\epsilon_i = \frac{\lvert n^{\mathrm{det}}_i - n^{\mathrm{inj}}_i \rvert}{n^{\mathrm{inj}}_i},$$

where $n^{\mathrm{det}}_i$ and $n^{\mathrm{inj}}_i$ are the detected GW count and the injected GW count, respectively, and the index $i$ represents a bin. Here, we set 29 equal-length bins for both histograms, from a lower edge to an upper edge for H1 data, and from to for L1 data. For the testing histograms, the count of events comes from our predictions given our resampling regime.
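The bin-by-bin comparison can be sketched in a few lines. Assumptions: the relative error is taken as |detected − injected| / injected, bins with no injections are reported as `None`, the bin edges `lo` and `hi` are parameters (their values are not reproduced here), and the function name is hypothetical.

```python
def binned_relative_error(snr_injected, snr_detected, lo, hi, n_bins=29):
    """Histogram injected and detected GW samples by expected SNR and compare bins.

    snr_injected: expected SNR of every GW sample in the test set(s).
    snr_detected: expected SNR of the GW samples the CNN classified as GW.
    Returns (injected counts, detected counts, relative error per bin).
    """
    width = (hi - lo) / n_bins

    def histogram(values):
        counts = [0] * n_bins
        for v in values:
            if lo <= v <= hi:
                # the upper edge is included in the last bin
                i = min(int((v - lo) / width), n_bins - 1)
                counts[i] += 1
        return counts

    inj, det = histogram(snr_injected), histogram(snr_detected)
    rel = [abs(d - n) / n if n else None for d, n in zip(det, inj)]
    return inj, det, rel


inj, det, rel = binned_relative_error(
    [0.5, 0.6, 1.5, 28.9, 29.0], [0.5, 1.5, 29.0], lo=0.0, hi=29.0
)
print(rel[:3], rel[-1])  # [0.5, 0.0, None] 0.5
```

Running it once per repetition of the 10-fold CV and taking the mean and standard deviation of each bin's relative error would give scatter points with error bars of the kind shown in Figure 10.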

Figure 10.

Histograms counting the GW samples present in the test set and the GW samples detected by the CNN. This count was made from all predictions, given that we have SGD learning-testing runs. Besides, the relative error between both histograms is shown as scatter points, with their standard deviations computed from the 10 repetitions of the 10-fold CV routine. From the plots, our CNN detects more CBC GW events insofar as they have a for H1 data (upper panel) and for L1 data (bottom panel).

Comparing most bins in both panels of Figure 10, we notice that the detected GW count is greater where there are more actual injections in the testing set. Besides, most GW events are concentrated in the region of smaller SNR values. For H1 data, most events are in the first six bins, namely from to ; with 6520 actual GW events and 5191 detected GW events, representing approximately and of the total number of actual GW events and detected GW events, respectively. For L1 data, on the other hand, most events are in the first seven bins, from to ; with 5040 actual GW events and 3277 detected GW events, representing approximately and of the total number of actual GW events and detected GW events, respectively.
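The counts quoted above also give the fraction of injected GW samples that the CNN recovers in the low-SNR region. The short calculation below assumes that the gray histogram counts only injected GW samples classified as GW (so detected ≤ actual in every bin); the function name is hypothetical.

```python
# Counts quoted in the text for the low-SNR region (first six bins for H1,
# first seven bins for L1): (actual GW samples, detected GW samples).
low_snr_counts = {"H1": (6520, 5191), "L1": (5040, 3277)}


def pooled_recall(actual, detected):
    """Fraction of actual GW samples in the region that the CNN labeled as GW."""
    return detected / actual


for det, (actual, detected) in low_snr_counts.items():
    print(f"{det}: {pooled_recall(actual, detected):.3f}")
# H1: 0.796
# L1: 0.650
```

Under the same assumption, the pooled relative error over these bins is one minus the recall, about 0.20 for H1 and 0.35 for L1.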