Character Recognition of Components Mounted on Printed Circuit Board Using Deep Learning

Abstract

1. Introduction

- Data collection: Acquiring data during the inspection process is difficult, because any delay caused by data collection can decrease productivity.

- Data privacy: Many PCB manufacturers do not allow data collection due to confidentiality concerns.

- Unique problems with the data: Because each PCB production plant uses different types of PCBs and components, data collected at one plant cannot represent data from all production plants.

- We propose a data augmentation method that generates representative training datasets from data collected at only a few factories, so that the resulting model can be applied across multiple factories to inspect the characters printed on top of components. The method accounts for color, shape, size, and font shape.

- PCB tester manufacturers can train, distribute, and upgrade a single deep learning model for multiple factories in one batch, instead of building a separate model for each factory.

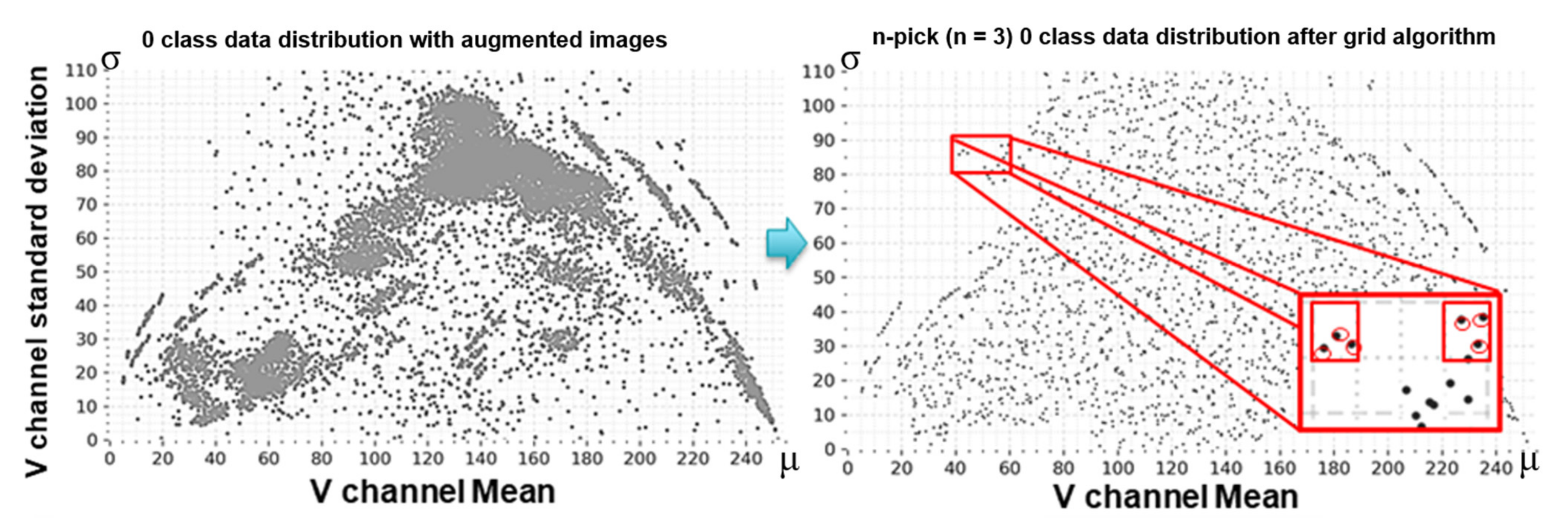

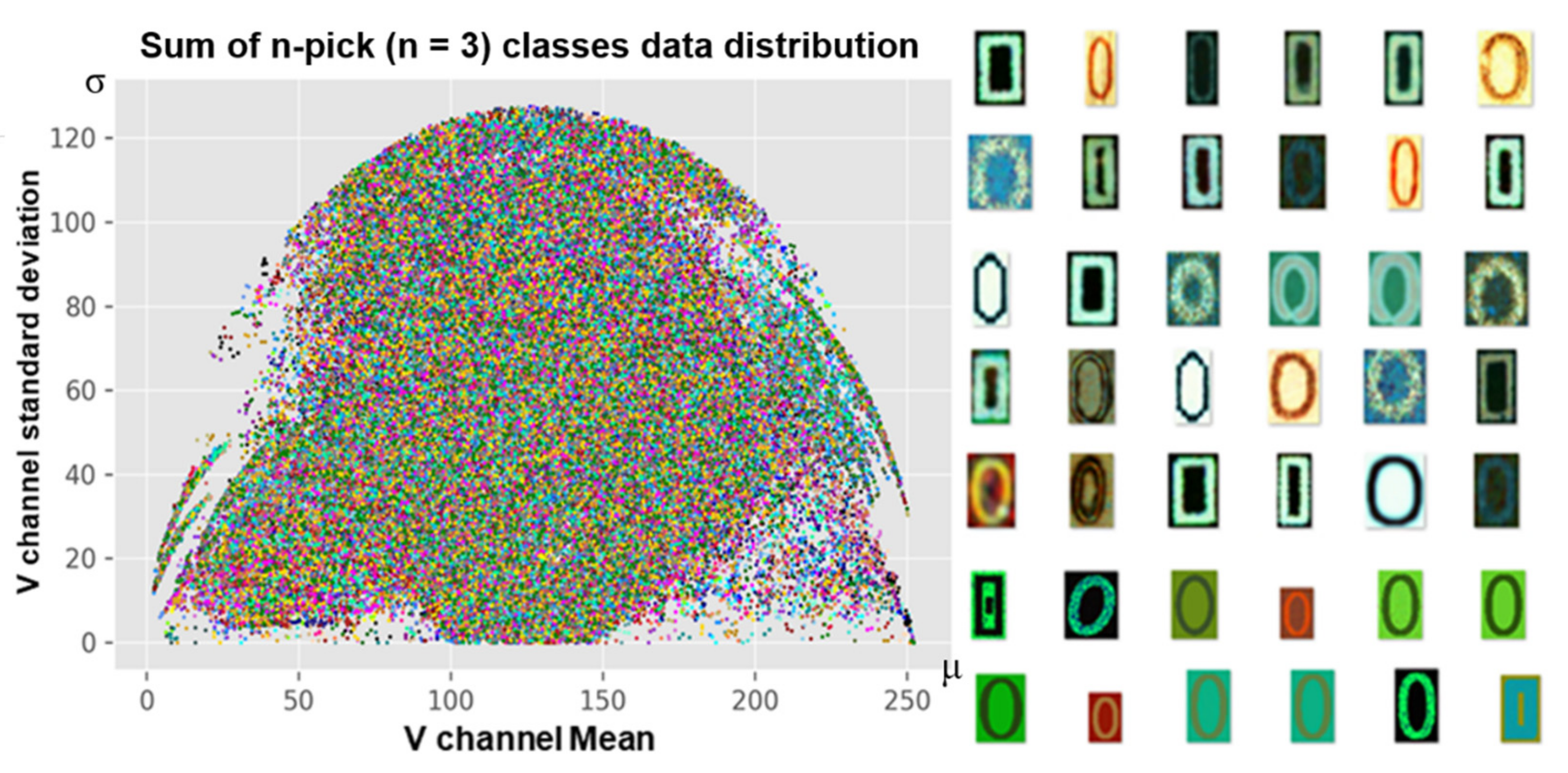

- The number of PCB component types continues to grow, so accumulating and retraining on all collected data takes more and more time. In this work, we use n-pick grid sampling to retain sufficient features while minimizing the amount of accumulated data that must be kept, which enables continuous learning with a simple method.

2. Related Work

2.1. PCB-Related Optical Character Recognition (OCR) Research and Component Defect Inspection

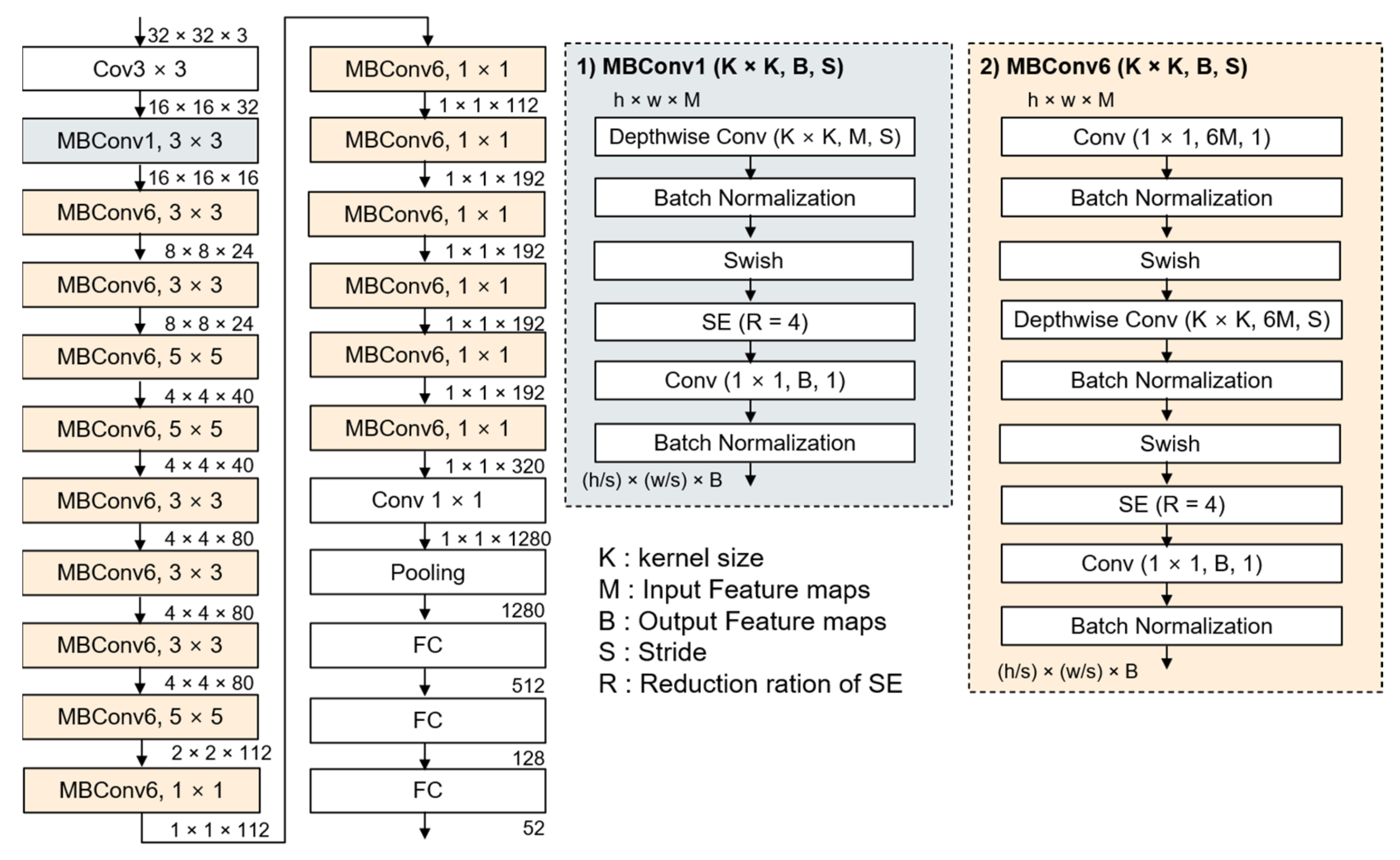

2.2. EfficientNet

3. Data Collection and Processing

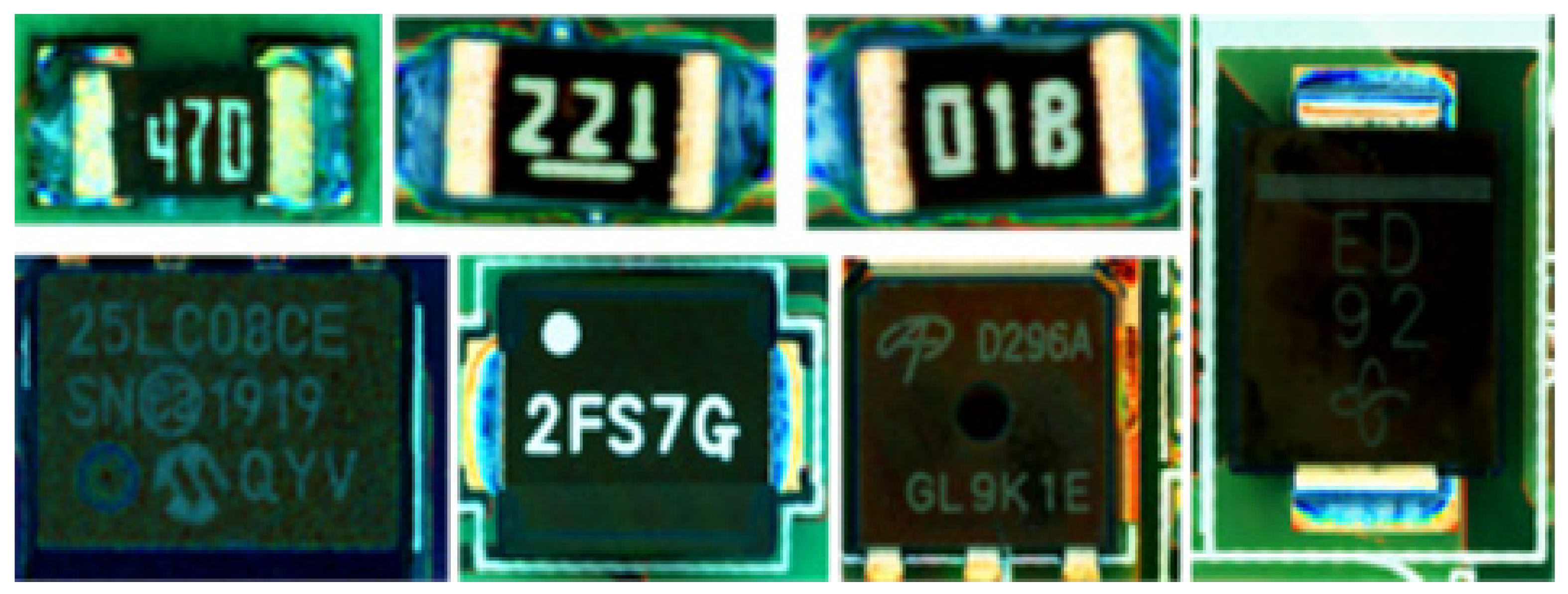

3.1. Data Collection

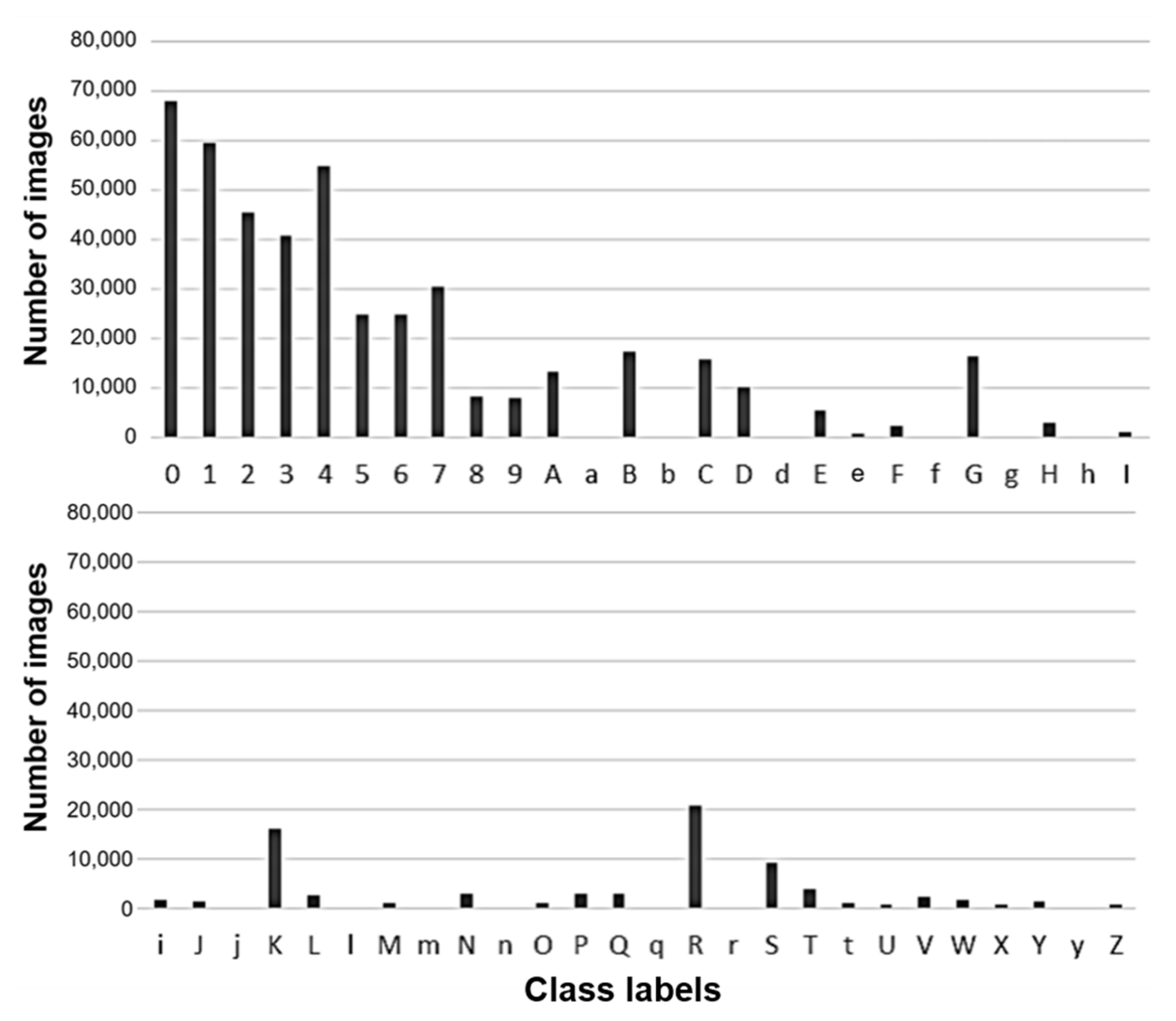

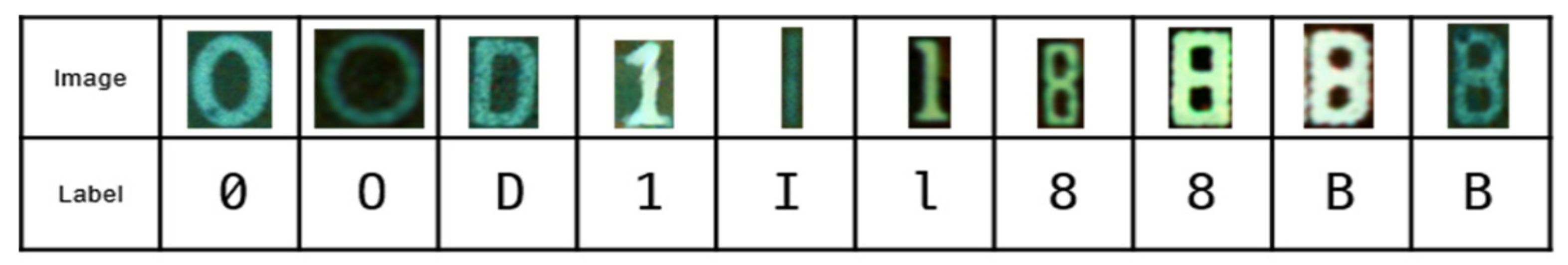

3.2. Class Definition

3.3. Analysis of Data Imbalance Issues

3.4. Enhancement and Augmentation of Character Data

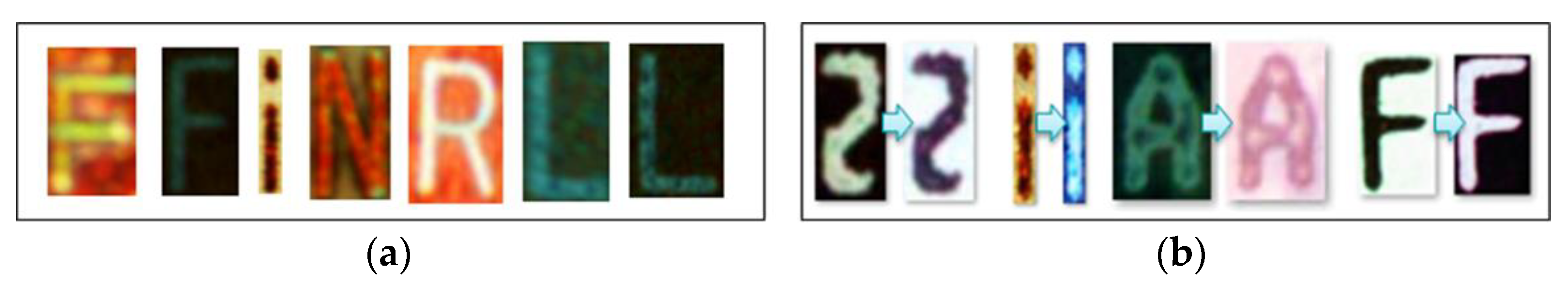

3.4.1. Illumination Variance

3.4.2. Rotation Variance

3.4.3. Size Variance

3.4.4. Noise and Contamination (Occlusion) Variance

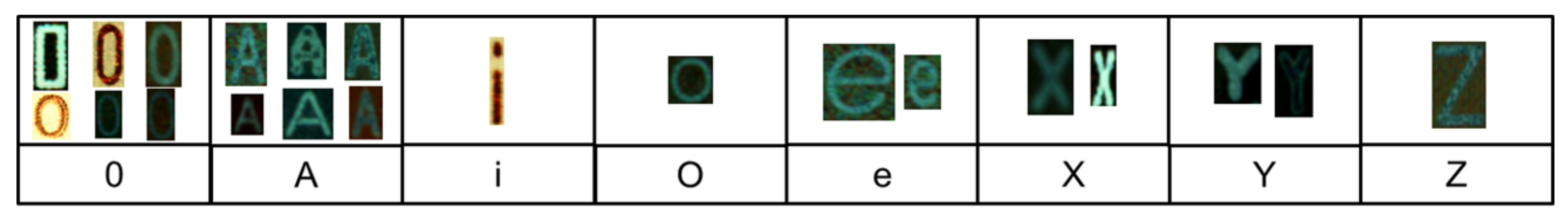

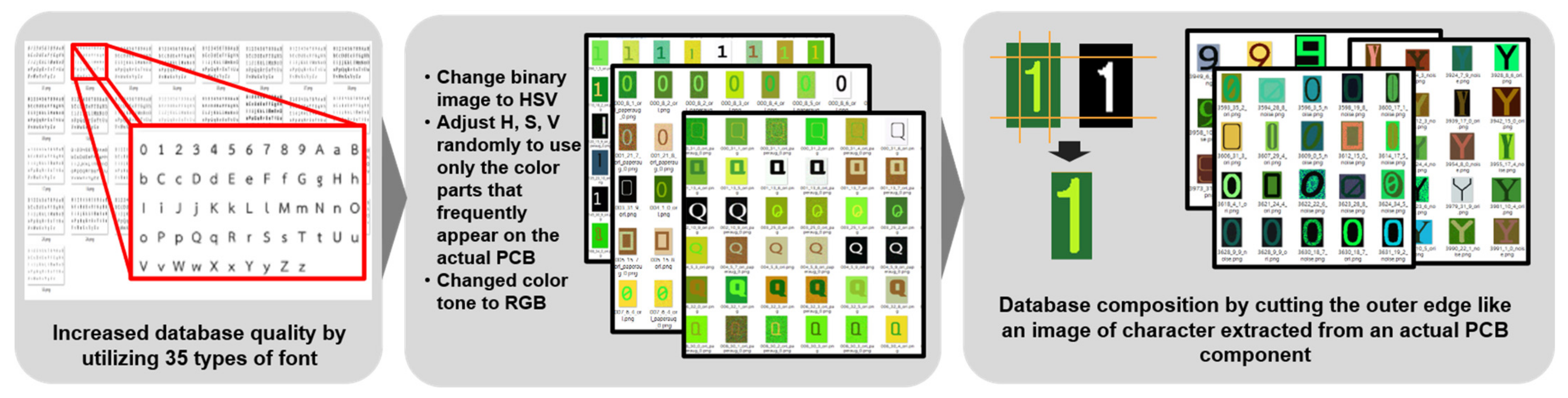

3.4.5. Font Shapes Within Class Variance

- (1) Fonts installed on the computer (35 fonts in this study) were used to generate images for all 52 classes. One image was generated per character and per font, giving 52 × 35 = 1820 images. In each image, the foreground (the character) was black and the background was white.

- (2) For each of the 52 classes (from 0 to Z), one of the 35 font styles was selected at random.

- (3) The image was converted from the RGB color space to the hue, saturation, value (HSV) color space [29].

- (4) A pixel whose R, G, and B values were all 0 was treated as foreground (i.e., part of the character), and its H, S, and V values were set randomly within the ranges 0 ≤ H < 30, 100 ≤ S < 256, and 100 ≤ V < 256.

- (5) Conversely, a pixel whose R, G, and B values were all 255 was treated as background, and its H, S, and V values were set randomly within the ranges 0 ≤ H < 30, 100 ≤ S < 256, and 0 ≤ V < 150.

- (6) Steps (4) and (5) were applied to every pixel in the image. The H, S, and V ranges in steps (4) and (5) are only examples; they were set empirically to match colors frequently found on PCBs.

- (7) The HSV image was converted back to RGB and saved.

- (8) Random noise was added to half of the images produced by steps (2) to (7).

- (9) Steps (2) to (8) were repeated as many times as specified by the user. In this work, this procedure was used to create 8000 images per class.
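
Steps (4) to (8) can be sketched in Python as below. This is a minimal sketch under several assumptions: the input is one grayscale glyph already rendered in step (1); the hue range 0 to 30 is read on OpenCV's 8-bit hue scale (0 to 179), since the text does not name a library; the standard-library `colorsys` module stands in for the RGB/HSV conversions of steps (3) and (7); and the "random noise" of step (8) is modeled as Gaussian with a hypothetical `noise_std`. The function names `colorize_glyph` and `random_rgb` are hypothetical. The text reads as drawing a fresh color for every pixel; `per_pixel=False` gives the alternative reading of one color per region.

```python
import colorsys

import numpy as np

# HSV ranges from steps (4) and (5). Reading them on OpenCV's 8-bit scale
# (H in [0, 180), S and V in [0, 256)) is an assumption.
FG_RANGE = {"h": (0, 30), "s": (100, 256), "v": (100, 256)}
BG_RANGE = {"h": (0, 30), "s": (100, 256), "v": (0, 150)}


def random_rgb(rng, ranges):
    """Draw one HSV triple from half-open integer ranges; return it as RGB in [0, 255]."""
    h = rng.integers(*ranges["h"])
    s = rng.integers(*ranges["s"])
    v = rng.integers(*ranges["v"])
    r, g, b = colorsys.hsv_to_rgb(h / 180.0, s / 255.0, v / 255.0)
    return np.array([r, g, b]) * 255.0


def colorize_glyph(glyph, rng, per_pixel=True, noise_prob=0.5, noise_std=10.0):
    """Recolor a black-on-white grayscale glyph (H x W, uint8) into an RGB image.

    Pure black pixels (0) are foreground and pure white pixels (255) are
    background (steps (4)-(6)); any other gray level is copied unchanged,
    as it would survive an RGB -> HSV -> RGB round trip untouched.
    With probability noise_prob, Gaussian noise is added (step (8));
    the Gaussian model and noise_std are hypothetical choices.
    """
    out = np.repeat(glyph[..., None], 3, axis=2).astype(np.float64)
    for mask, ranges in ((glyph == 0, FG_RANGE), (glyph == 255, BG_RANGE)):
        if per_pixel:
            # One independent color per pixel, as the text describes.
            for y, x in zip(*np.nonzero(mask)):
                out[y, x] = random_rgb(rng, ranges)
        else:
            # Alternative reading: one color shared by the whole region.
            out[mask] = random_rgb(rng, ranges)
    if rng.random() < noise_prob:
        out += rng.normal(0.0, noise_std, size=out.shape)
    return np.clip(out, 0, 255).astype(np.uint8)


rng = np.random.default_rng(0)
glyph = np.full((16, 16), 255, dtype=np.uint8)
glyph[4:12, 6:10] = 0  # a crude vertical bar standing in for a rendered character
image = colorize_glyph(glyph, rng)
print(image.shape, image.dtype)  # (16, 16, 3) uint8
```

Step (9) then amounts to calling this in a loop, once per class and iteration, until the target count (8000 images per class in this work) is reached.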

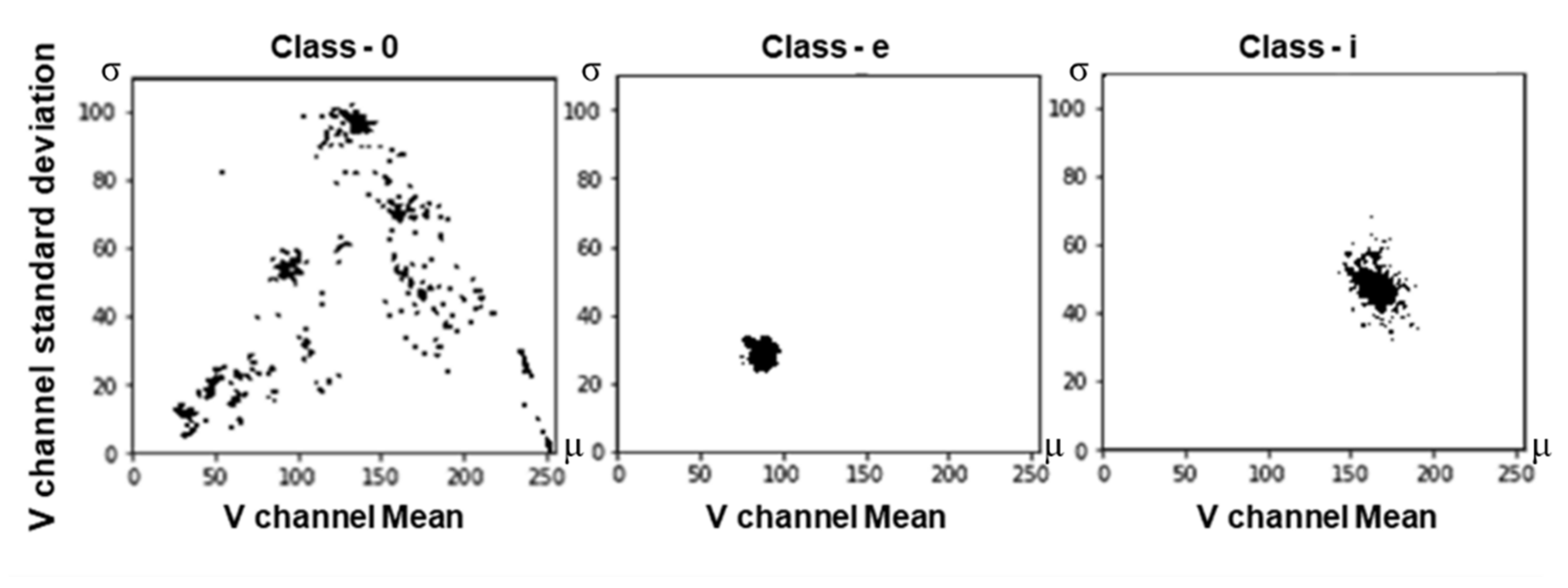

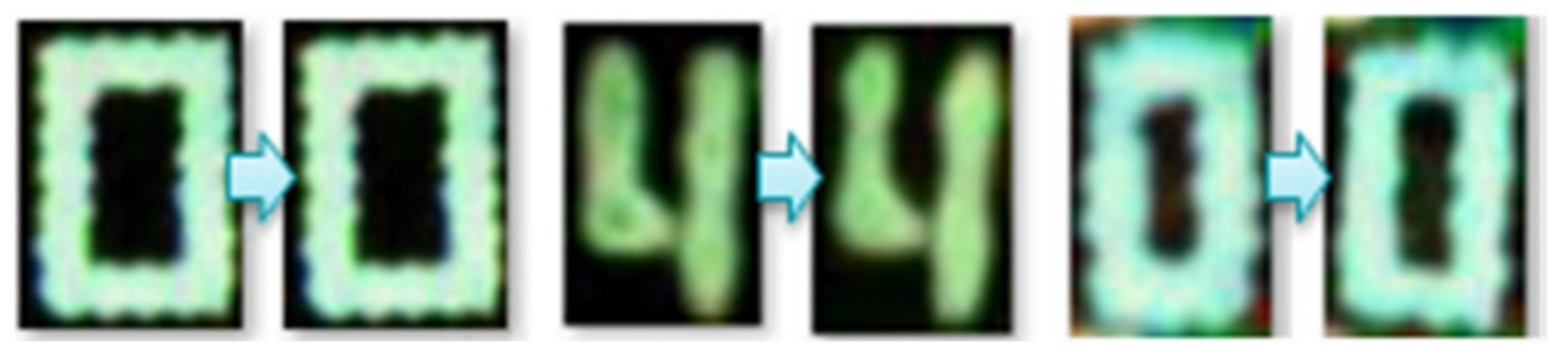

3.5. Data Sampling and Grid Algorithm

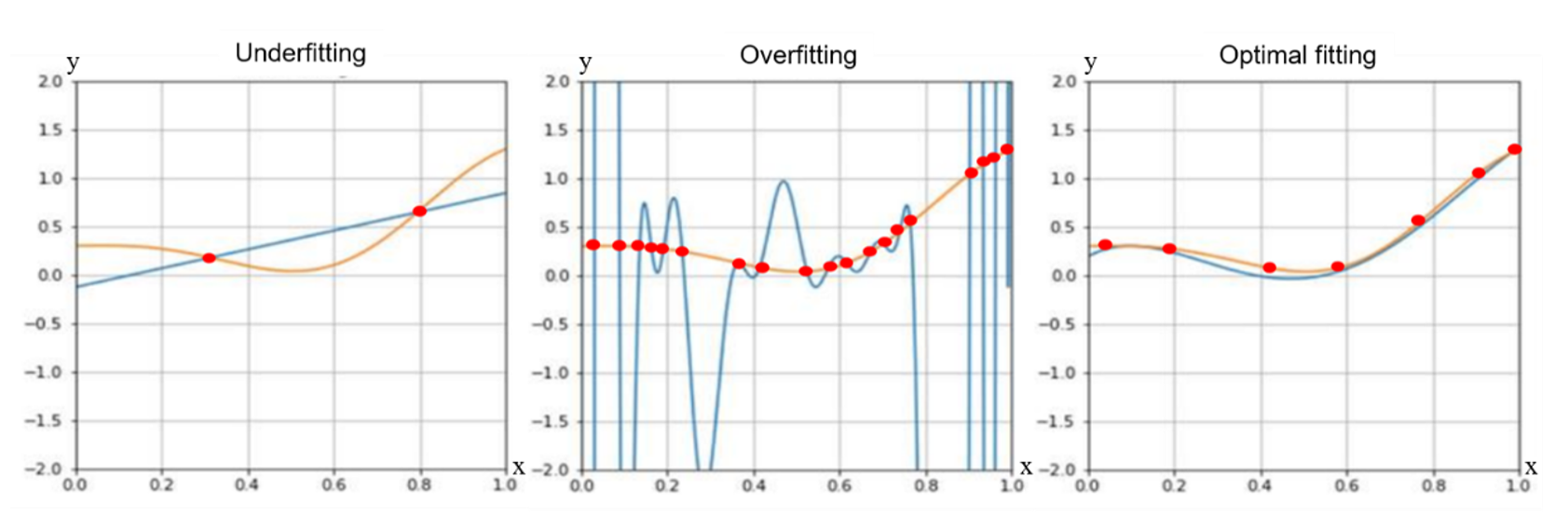

3.5.1. Overfitting and Underfitting

3.5.2. Grid Sampling

| Algorithm 1: Grid and n-pick sampling algorithm |

| imgNum = number of images; n = number of images picked per grid cell in n-pick sampling; m = total number of images extracted from the original dataset by grid sampling; imageList = list of all images (image paths); randomPickupImg = random sampling function; graph_w = 255, graph_h = 150, cut_num = 5; grid_w = int(graph_w/cut_num), grid_h = int(graph_h/cut_num) /* Count the number of images in each grid cell */ for i to imgNum: for x to grid_w: for y to grid_h: if mean[i] >= x*cut_num and mean[i] < (x + 1)*cut_num: if std[i] >= y*cut_num and std[i] < (y + 1)*cut_num: countNumberOfImgInGrid[x,y] += 1 /* Calculate the fraction of images in each grid cell */ imgRatioInGrid[x,y] = countNumberOfImgInGrid[x,y]/imgNum /* n-pick sampling: pick n images from each non-empty grid cell */ if nPick grid sampling: for x to grid_w: for y to grid_h: if countNumberOfImgInGrid[x,y] > 0: finalSampledDataList += randomPickupImg(imageList in grid[x, y]) until n /* Grid sampling for a total of m images */ else: for x to grid_w: for y to grid_h: if countNumberOfImgInGrid[x,y] > 0: if (m * imgRatioInGrid[x,y]) >= 1: z = (int)(m * imgRatioInGrid[x,y]) else: z = 1 finalSampledDataList += randomPickupImg(imageList in grid[x, y]) until z if Number of finalSampledDataList > m: finalSampledDataList = randomPickupImg(finalSampledDataList) until m |

| /* Save image in sampling folder */ Save(finalSampledDataList into sampling folder) |
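
Algorithm 1 can be sketched in Python as follows. This is a minimal sketch, not the authors' code: it assumes that mean[i] and std[i] are the mean and standard deviation of image i's grayscale pixel intensities (consistent with graph_w = 255 and graph_h = 150, but not stated in the text), and the helper names `grid_cells`, `n_pick_sampling`, and `grid_sampling` are hypothetical. The final save step is omitted.

```python
import numpy as np


def grid_cells(means, stds, cut_num=5, graph_w=255, graph_h=150):
    """Group image indices by their cell on the (mean, std) plane.

    Cell (x, y) holds images with x*cut_num <= mean < (x+1)*cut_num and
    y*cut_num <= std < (y+1)*cut_num. As in Algorithm 1, images that fall
    outside the grid_w x grid_h grid are not counted.
    """
    grid_w, grid_h = graph_w // cut_num, graph_h // cut_num
    cells = {}
    for i, (mu, sd) in enumerate(zip(means, stds)):
        x, y = int(mu // cut_num), int(sd // cut_num)
        if 0 <= x < grid_w and 0 <= y < grid_h:
            cells.setdefault((x, y), []).append(i)
    return cells


def n_pick_sampling(cells, n, rng):
    """Pick up to n images at random from every non-empty cell."""
    picked = []
    for members in cells.values():
        # Assumption: a cell with fewer than n images contributes all of them.
        k = min(n, len(members))
        picked.extend(rng.choice(members, size=k, replace=False).tolist())
    return picked


def grid_sampling(cells, m, img_num, rng):
    """Sample about m images; each cell's quota is proportional to its share of img_num."""
    picked = []
    for members in cells.values():
        # Every non-empty cell contributes at least one image (z = 1 branch).
        z = max(1, int(m * len(members) / img_num))
        z = min(z, len(members))
        picked.extend(rng.choice(members, size=z, replace=False).tolist())
    if len(picked) > m:
        picked = rng.choice(picked, size=m, replace=False).tolist()
    return picked


rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(200, 32, 32))  # stand-in grayscale images
cells = grid_cells(images.mean(axis=(1, 2)), images.std(axis=(1, 2)))
subset = n_pick_sampling(cells, n=3, rng=rng)
```

Computing each image's cell with floor division, instead of scanning all grid_w × grid_h cells per image as the pseudocode does, yields the same cell assignment in a single pass over the images.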

4. Proposed Method

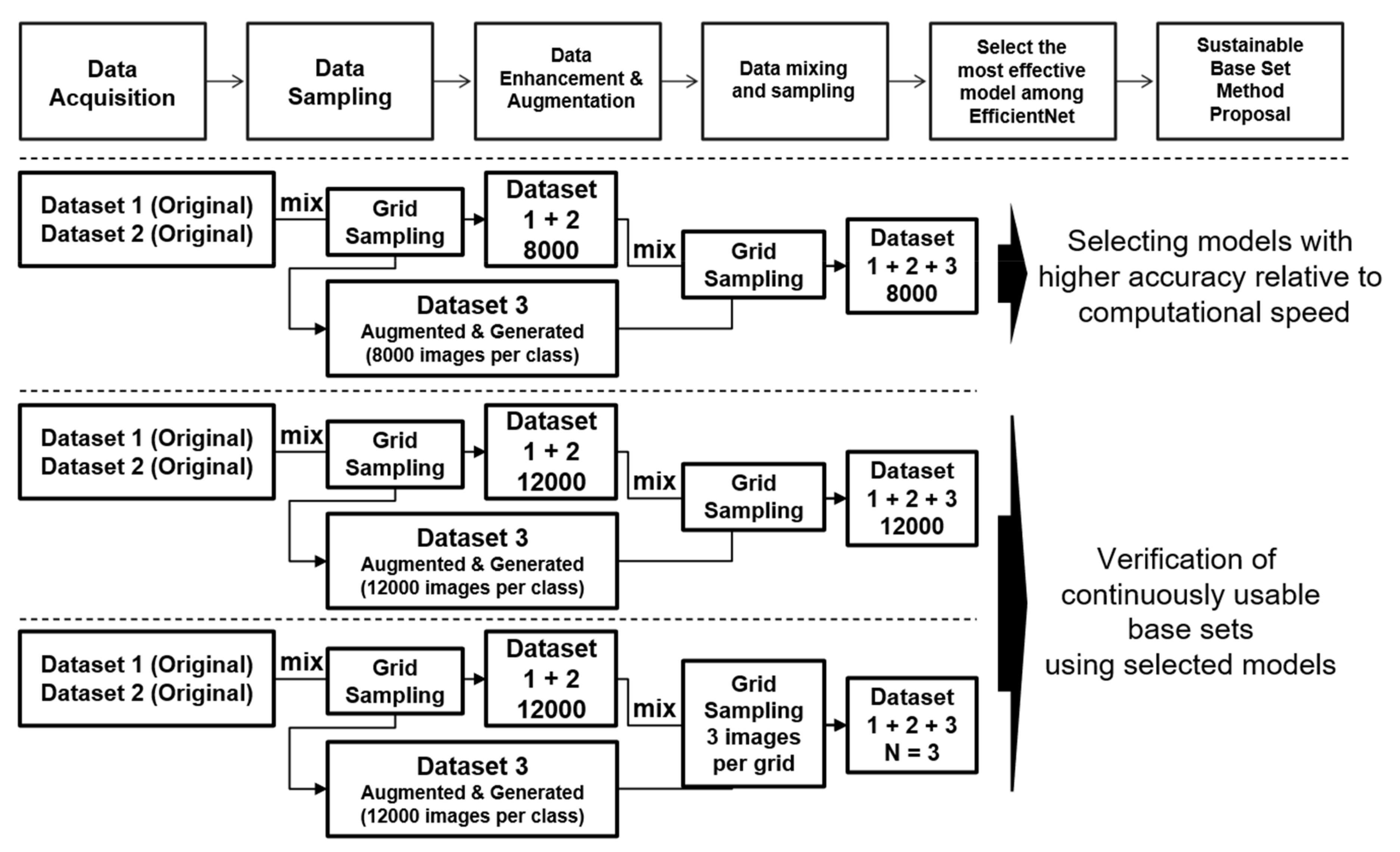

4.1. Process of the Proposed Method

- Models trained on sample data from only a few factories cannot serve as models for all factories.

- Experiments over all combinations showed that mixing in newly generated font data yields higher accuracy than conventional data augmentation alone.

- With the grid sampling algorithm, accuracy improves as more images are sampled. However, considering training time, about 8000 images is an effective sample size.

- The n-pick algorithm has not been described in previous studies.

4.2. Configuration of the Coreset

5. Implementation and Experimental Results

5.1. Selection of Models with High Accuracy and Good Computation Speed

5.2. A Continuously Available Base Set for Future Learning

5.3. Analysis of Results and Failure Factors

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Chen, X.W.; Lin, X. Big data deep learning: Challenges and perspectives. IEEE Access 2014, 2, 514–525.

- [2] Zhang, Q.; Yang, L.T.; Chen, Z.; Li, P. A survey on deep learning for big data. Inf. Fusion 2018, 42, 146–157.

- [3] Gang, S.; Fabrice, N.; Lee, J.J. Coresets for PCB Character Recognition based on Deep Learning. In Proceedings of the 2020 International Conference on Artificial Intelligence in Information and Communication, ICAIIC 2020, Fukuoka, Japan, 19–21 February 2020; pp. 637–642.

- [4] Fabrice, N.; Gang, S.; Lee, J.J. Training Data Sets Construction from Large Data Set for PCB Character Recognition. J. Multimed. Inf. Syst. 2019, 6, 225–234.

- [5] Gang, S.; Lee, J. Coreset Construction for Character Recognition of PCB Components Based on Deep Learning. J. Korea Multimed. Soc. 2021, 24, 382–395.

- [6] Bachem, O.; Lucic, M.; Krause, A. Practical coreset constructions for machine learning. arXiv 2017, arXiv:1703.06476.

- [7] Jung, J.; Park, T. A PCB Character Recognition System Using Rotation-Invariant Features. J. Inst. Control. Robot. Syst. 2006, 12, 241–247.

- [8] Kim, W.; Lee, J.; Ko, Y.; Son, C.; Jin, C. Parallel Pre-Process Model to Improve Accuracy of Tesseract-OCR. In Proceedings of the Conference on Korea Information Science Society, Jeju Island, Korea, 27–29 April 2018; pp. 641–643.

- [9] Li, W.; Neullens, S.; Breier, M.; Bosling, M.; Pretz, T.; Merhof, D. Text recognition for information retrieval in images of printed circuit boards. In Proceedings of the IECON 2014—40th Annual Conference of the IEEE Industrial Electronics Society, Dallas, TX, USA, 29 October–1 November 2014; pp. 3487–3493.

- [10] Nava-Dueñas, C.F.; Gonzalez-Navarro, F.F. OCR for unreadable damaged characters on PCBs using principal component analysis and Bayesian discriminant functions. In Proceedings of the 2015 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 7–9 December 2015; pp. 535–538.

- [11] Lee, S.H.; Song, J.K.; Yoon, B.W.; Park, J.S. Improvement of character recognition for parts book using pre-processing of deep learning. J. Inst. Control. Robot. Syst. 2019, 25, 241–248.

- [12] Song, J.; Park, T. Segmentation of Feature Extraction Regions for PCB Solder Joint Defect Classification. In Proceedings of the Conference on Information and Control Systems, Mokpo, Korea, 26–28 October 2017; pp. 13–14.

- [13] Baek, Y.T.; Sim, J.G.; Pak, C.Y.; Lee, S.H. PCB Defect Inspection using Deep Learning. In Proceedings of the Korean Society of Computer Information Conference, Jeju Island, Korea, 16–18 July 2018; pp. 325–326.

- [14] Cho, T. Detection of PCB Components Using Deep Neural Nets. J. Semicond. Disp. Technol. 2020, 19, 11–15.

- [15] Tang, S.; He, F.; Huang, X.; Yang, J. Online PCB Defect Detector on a New PCB Defect Dataset. arXiv 2019, arXiv:1902.06197.

- [16] Silva, L.H.D.S.; Azevedo, G.O.D.A.; Fernandes, B.J.T.; Bezerra, B.L.D.; Lima, E.B.; Oliveira, S.C. Automatic Optical Inspection for Defective PCB Detection Using Transfer Learning. In Proceedings of the 2019 IEEE Latin American Conference on Computational Intelligence (LA-CCI), Guayaquil, Ecuador, 11–15 November 2019.

- [17] Huang, W.; Wei, P.; Zhang, M.; Liu, H. HRIPCB: A challenging dataset for PCB defects detection and classification. J. Eng. 2020, 2020, 303–309.

- [18] Li, Y.T.; Guo, J.I. A VGG-16 based Faster RCNN Model for PCB Error Inspection in Industrial AOI Applications. In Proceedings of the 2018 IEEE International Conference on Consumer Electronics-Taiwan (ICCE-TW), Taichung, Taiwan, 19–21 May 2018.

- [19] Kuo, C.W.; Ashmore, J.D.; Huggins, D.; Kira, Z. Data-efficient graph embedding learning for PCB component detection. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 7–11 January 2019; pp. 551–560.

- [20] Li, Y.T.; Kuo, P.; Guo, J.I. Automatic Industry PCB Board DIP Process Defect Detection with Deep Ensemble Method. IEEE Int. Symp. Ind. Electron. 2020, 2020, 453–459.

- [21] Hu, B.; Wang, J. Detection of PCB Surface Defects with Improved Faster-RCNN and Feature Pyramid Network. IEEE Access 2020, 8, 108335–108345.

- [22] Tan, M.; Le, Q.V. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 10691–10700.

- [23] He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- [24] Bazi, Y.; Rahhal, M.M.A.; Alhichri, H.; Alajlan, N. Simple yet effective fine-tuning of deep cnns using an auxiliary classification loss for remote sensing scene classification. Remote Sens. 2019, 11, 2908.

- [25] Tan, M.; Chen, B.; Pang, R.; Vasudevan, V.; Sandler, M.; Howard, A.; Le, Q.V. Mnasnet: Platform-aware neural architecture search for mobile. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 2815–2823.

- [26] Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

- [27] Mariani, G.; Scheidegger, F.; Istrate, R.; Bekas, C.; Malossi, C. BAGAN: Data augmentation with balancing GAN. arXiv 2018, arXiv:1803.09655.

- [28] Bowles, C.; Chen, L.; Guerrero, R.; Bentley, P.; Gunn, R.; Hammers, A.; Dickie, D.A.; Hernández, M.V.; Wardlaw, J.; Rueckert, D. GAN augmentation: Augmenting training data using generative adversarial networks. arXiv 2018, arXiv:1810.10863.

- [29] HSL and HSV—Wikipedia. Available online: https://en.wikipedia.org/wiki/HSL_and_HSV (accessed on 25 March 2021).

- [30] Bonaccorso, G. Machine Learning Algorithms; Vikrant, P., Singh, A., Eds.; Packt Publishing Ltd.: Birmingham, UK, 2017; ISBN 9781785889622.

- [31] Phillips, J.M. Coresets and sketches. Handb. Discret. Comput. Geom. Third Ed. 2017, 1269–1288.

- [32] Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic Minority Over-sampling Technique. J. Artif. Intell. Res. 2002, 16, 321–357.

- [33] Johnson, J.M.; Khoshgoftaar, T.M. Survey on deep learning with class imbalance. J. Big Data 2019, 6.

- [34] Gu, K.; Tao, D.; Qiao, J.; Lin, W. Learning a no-reference quality assessment model of enhanced images with big data. arXiv 2019, arXiv:1904.08632.

- [35] Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6.

| No | Database | Details |
|---|---|---|
| 1 | Dataset 1 | Collected data from the 1st factory |
| 2 | Dataset 2 | Collected data from the 2nd factory |
| 3 | Dataset 3 | Augmented images from datasets 1 and 2; generated font data |
| 4 | Test dataset | Data collected from the 1st factory, the 2nd factory, and other factories (more than five); data from the 1st and 2nd factories: 10% of the total; data from other factories: 90% of the total; collected at a different period |

| No | Images per Class | Number of Learning Data | Number of Validation Data | Number of Test Data |
|---|---|---|---|---|
| 1 | 8000 | 332,800 | 83,200 | 367,510 |
| 2 | 12,000 | 499,200 | 124,800 | |
| 3 | 3 images per grid | 79,395 | 19,848 | |
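
The learning and validation counts in rows 1 and 2 are consistent with 52 classes split 80/20 between learning and validation; the ratio is inferred from the numbers rather than stated in the text. Row 3 (79,395 + 19,848 = 99,243 images) is also within one image of an 80/20 split. The quick check below assumes that split; `split_counts` is a hypothetical helper.

```python
NUM_CLASSES = 52  # character classes, from Section 3.4.5


def split_counts(images_per_class, learning_percent=80, num_classes=NUM_CLASSES):
    """Return (learning, validation) image counts for an assumed percentage split."""
    total = images_per_class * num_classes
    learning = total * learning_percent // 100  # integer arithmetic avoids float rounding
    return learning, total - learning


print(split_counts(8000))   # (332800, 83200)
print(split_counts(12000))  # (499200, 124800)
```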

| Experiments | ResNet 56 Layers | EfficientNet B0 | EfficientNet B7 |
|---|---|---|---|
| Original dataset1 | 79.917 | 97.741 | 97.887 |

| Experiments | ResNet 56 Layers Top1 | ResNet 56 Layers Top5 |
|---|---|---|
| 8000 images per class | 97.763 | 99.9 |

| Category | CPU | GPU |
|---|---|---|
| ResNet 56 | 0.00606 | 0.00028 |

| No. | Experiments | EfficientNet Top1 | EfficientNet Top5 |
|---|---|---|---|
| 1 | B0 | 98.274 | 99.881 |
| 2 | B1 | 99.003 | 99.965 |
| 3 | B2 | 98.869 | 99.955 |
| 4 | B3 | 98.135 | 99.900 |
| 5 | B4 | 98.643 | 99.955 |
| 6 | B5 | 98.395 | 99.942 |
| 7 | B6 | 98.965 | 99.940 |
| 8 | B7 | 99.065 | 99.965 |

| Category (EfficientNet model) | B0 | B1 | B2 | B3 | B4 | B5 | B6 | B7 |
|---|---|---|---|---|---|---|---|---|
| CPU | 0.00120 | 0.00170 | 0.00183 | 0.00235 | 0.00326 | 0.00479 | 0.00644 | 0.00883 |
| GPU | 0.00027 | 0.00036 | 0.00037 | 0.00043 | 0.00055 | 0.00071 | 0.00083 | 0.00113 |

| No. | Experiments | EfficientNet B0 Top1 | EfficientNet B1 Top1 |
|---|---|---|---|
| 1 | 12,000 images per class | 99.829 | 99.851 |
| 2 | n-pick (n = 3) dataset | 98.921 | 99.275 |

| Category | Precision | Recall | F1-score | Support |
|---|---|---|---|---|
| micro avg | 0.9904 | 0.9904 | 0.9904 | 367,510 |
| macro avg | 0.7067 | 0.7339 | 0.7158 | 367,510 |
| weighted avg | 0.9922 | 0.9904 | 0.9912 | 367,510 |
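
In the table above, the micro average (F1 0.9904) is far higher than the macro average (F1 0.7158). For single-label classification, micro-averaged precision, recall, and F1 all equal overall accuracy, whereas the macro average gives every class the same weight, so a few rare or poorly recognized classes can pull it down even when almost all test images are correct. The sketch below reproduces this effect with made-up labels; the function name `averaged_f1` is hypothetical, and averaging only over classes that occur in the labels or predictions mirrors scikit-learn's default behavior.

```python
import numpy as np


def averaged_f1(y_true, y_pred):
    """Return (micro, macro, weighted) F1 for single-label multi-class predictions."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    classes = np.union1d(y_true, y_pred)  # classes seen in labels or predictions
    tp = np.array([np.sum((y_pred == c) & (y_true == c)) for c in classes])
    fp = np.array([np.sum((y_pred == c) & (y_true != c)) for c in classes])
    fn = np.array([np.sum((y_pred != c) & (y_true == c)) for c in classes])
    support = tp + fn  # number of true samples per class

    per_class = 2 * tp / (2 * tp + fp + fn)  # denominator > 0 for every class in `classes`
    micro = 2 * tp.sum() / (2 * tp.sum() + fp.sum() + fn.sum())
    macro = per_class.mean()
    weighted = np.sum(per_class * support) / support.sum()
    return float(micro), float(macro), float(weighted)


# Hypothetical labels: class 0 dominates, class 1 is rare and always misread as 0.
y_true = [0] * 98 + [1, 2]
y_pred = [0] * 98 + [0, 2]
micro, macro, weighted = averaged_f1(y_true, y_pred)
print(f"micro={micro:.3f}, macro={macro:.3f}, weighted={weighted:.3f}")
# micro=0.990, macro=0.665, weighted=0.985
```

One misread sample out of 100 barely moves the micro and weighted scores, but the rare class scores F1 = 0 and costs the macro average a full third.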

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gang, S.; Fabrice, N.; Chung, D.; Lee, J. Character Recognition of Components Mounted on Printed Circuit Board Using Deep Learning. Sensors 2021, 21, 2921. https://doi.org/10.3390/s21092921

Chicago/Turabian Style: Gang, Sumyung, Ndayishimiye Fabrice, Daewon Chung, and Joonjae Lee. 2021. "Character Recognition of Components Mounted on Printed Circuit Board Using Deep Learning" Sensors 21, no. 9: 2921. https://doi.org/10.3390/s21092921

APA StyleGang, S., Fabrice, N., Chung, D., & Lee, J. (2021). Character Recognition of Components Mounted on Printed Circuit Board Using Deep Learning. Sensors, 21(9), 2921. https://doi.org/10.3390/s21092921