1. Introduction

Numerical or mathematical models are common tools in civil and structural engineering for analyzing the internal forces, displacements, and modal attributes of a structure, or its vibration response to dynamic loading. When all structural parameters are known, these quantities can be obtained by direct analysis. However, structural degradation during service life makes some structural properties unknown or uncertain. Structural system identification (SSI), as one form of inverse analysis, evaluates the actual condition of existing structures, which is of primary importance for their safety.

Most research works focus on deterministic SSI and probabilistic approaches [1,2,3], which aim to find the structural parameters of a numerical model that give the best possible fit between the model output and the observed data. Nevertheless, given the uncertainties in the structural model and the observed data, uncertainty quantification (UQ) is necessary to assess the effect of uncertainty on the accuracy of the estimates [4]. A detailed literature review of UQ approaches is given in Section 2.

The observability method (OM) has been used in many fields, such as hydraulics, electrical and power networks, and transportation. This mathematical approach has been applied as a static SSI method [5,6,7,8]. The numerical OM [9] and the constrained observability method (COM) [10,11] were developed from the observability method for static and dynamic analysis. To obtain accurate and reliable parameters, OM identification needs to be robust to the modeling uncertainty introduced when modeling complex systems and to the measurement uncertainty caused by the quality of the test equipment and the accuracy of the sensors [12]. Therefore, applying OM accurately and with the required reliability calls for a UQ analysis. This is the objective of the present paper for the case in which dynamic data are used.

UQ analysis may appear to be independent of optimal sensor placement. In practice, however, the sensors need to be installed at the most informative positions, that is, the locations that provide the least uncertainty in the bridge parameter evaluations [13]. One of the best-known and most commonly adopted approaches for optimal sensor placement was developed by Kammer [14]. Since then, several variants of this approach have been suggested to resolve the positioning of SSI sensors [13,15,16,17]. However, no research work has noted that the best sensor positions might change when different sources of uncertainty are considered in the uncertainty analysis. To fill this gap, one of the major contributions of this study is to investigate whether there is a best measurement set (the sensor deployment providing the most accurate results) that holds regardless of the source of uncertainty.

This research aims to understand how the uncertainty in the model parameters and in the sensor data affects the uncertainty of the output variables, that is, how uncertainties from different sources propagate and influence the estimated result. Moreover, dividing the sources of uncertainty into aleatory and epistemic gives important insight into how much of the uncertainty can potentially be removed.

Epistemic uncertainty is caused by a lack of knowledge; with time and more data acquisition, it can therefore be reduced. Aleatory uncertainty, on the other hand, is the intrinsic uncertainty that stems from the random nature of the observed property or variable. It cannot be removed no matter how much data is used [18], as the noise of measurement sensors always exists.

From a practical point of view, determining the level of uncertainty of the parameters estimated through the dynamic observability method is of interest for establishing the robustness of the method. Moreover, an informed decision-making process requires not only a point estimate of the variables but also the level of confidence in that estimate. Knowing the uncertainty of the structural parameters allows a more accurate reliability analysis of the structure. Additionally, it is essential to compare the advantages and disadvantages of this method with those of existing methods to show the applicability of COM.

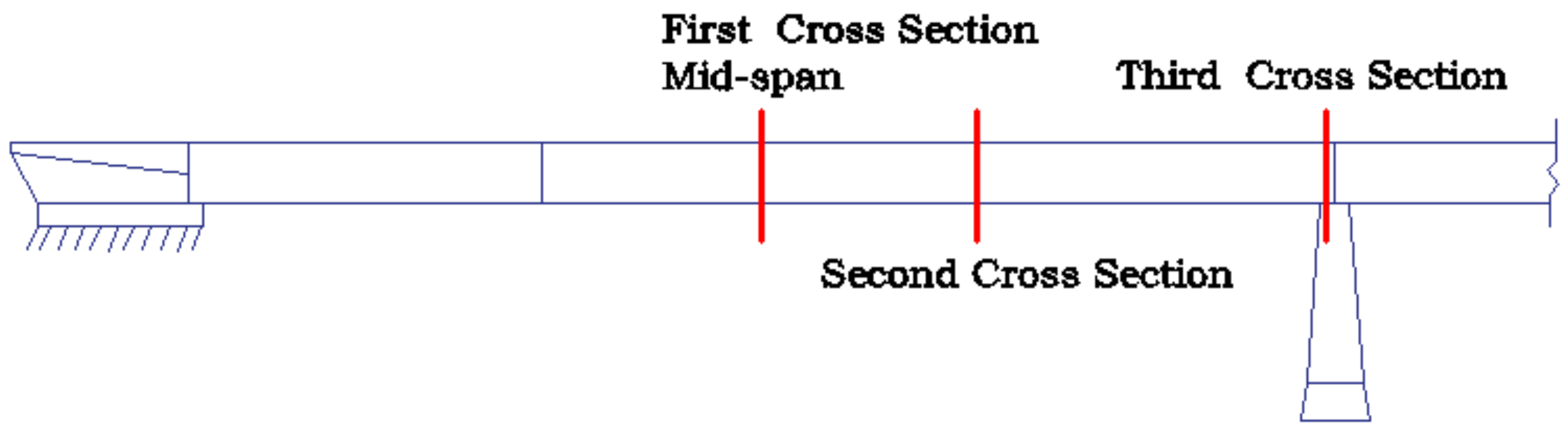

The motivation of this paper is to check whether insight into the uncertainty can be gained before the actual monitoring of a structure. The Dutch bridge known as ‘Hollandse Brug’ is used as an example. This bridge was monitored without a previous evaluation, and after its monitoring the conclusion was that the uncertainty was too large to make any conclusive assessment. In addition, the UQ analysis is developed within the framework of the observability method to fill this gap in the OM, and the merits of COM for UQ analysis are discussed.

This paper is organized as follows. In Section 2, an overview of available UQ approaches is given. The principle of the constrained observability method (COM) is described in Section 3. Section 4 presents the case study, the Dutch bridge. This section covers the uncertainty analysis by COM considering aleatory and epistemic uncertainty, and the analysis conducted to choose the best measurement set of sensors under different uncertainty scenarios. In Section 5, COM is compared with one existing approach, the Bayesian method, and the proposed COM is discussed. Finally, some conclusions are drawn in Section 6.

2. Literature Review of Uncertainty Quantification

The inverse SSI problem is frequently ill-posed, and SSI is extremely susceptible to uncertainties. Uncertainty quantification is a tool to explore and improve the robustness of SSI methods. In general, methods for quantifying uncertainty can be divided into two major categories: probabilistic and non-probabilistic approaches. Probabilistic approaches reflect the traditional way of modeling uncertainty, set on the firm foundations of probability theory: probability density functions (PDFs) are assigned to the unknown quantities, and these PDFs are then propagated to probabilistic output descriptions. Non-probabilistic methods use random matrix theory to construct an uncertain output of the prediction model operator [4,19].

Both families are represented in the existing literature: non-probabilistic approaches, such as interval methods [20,21,22], fuzzy theory [23], and convex model theory [24], and probabilistic methods, such as the maximum likelihood estimation method [25], the Bayesian method [26,27], the stochastic inverse method [28], the non-parametric minimum power method [29], and probabilistic neural networks [30].

In the management of uncertainty, probabilistic Bayesian theory is an attractive framework. It has been widely applied, for example in the identification of material parameters in a cable-stayed bridge [31], plate structures [32], and steel towers [33]. Although the probabilistic method is commonly seen as the most rigorous methodology for dealing with uncertainties and is exceptionally robust to sensor errors [16], it is not especially suitable for epistemic uncertainty modeling [34,35,36]. The argument relates to the definition of the (joint) PDFs describing the unknown quantities: adequate qualitative knowledge for constructing a truthful and representative probabilistic model is hardly ever available. This matters because model uncertainty has a major effect on the estimation of structural reliability [37].

In response to these limitations of the probabilistic approach, related to the construction of PDFs and the modeling of epistemic uncertainty, the last few decades have seen an increase in non-probabilistic techniques for uncertainty modeling. One such technique was developed by Soize [19,38,39,40,41], based on the principle of maximum entropy. Most non-probabilistic methods build on interval analysis. Interval methods are useful for considering crisp bounds on the non-deterministic values [20]. The non-probabilistic fuzzy approach, an extension of the interval method, was introduced in 1965 by Zadeh [42] and aims to evaluate the response membership function at different confidence degrees [43,44]. Ben-Haim developed the convex model method for evaluating model usability based on robustness to uncertainties [45]. Interval approaches, however, cannot by themselves capture the dependency between different model responses, which may make them severely over-conservative with regard to the real behavior of the model responses. Most non-probabilistic methods rely in some way on a hypercubic approximation of the result of the interval numerical model and therefore neglect possible dependence between the output parameters [46,47].
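The over-conservatism of a hypercubic (box) approximation can be illustrated with a toy example. The sketch below is not taken from the cited works: it assumes two hypothetical outputs, y1 = a + b and y2 = a - b, driven by interval inputs a and b in [0, 1], and compares the area of the box that bounds each output independently with the area of the set of output pairs that can actually occur.

```python
import numpy as np

# Hypothetical linear model: outputs y = T @ x with interval inputs x = (a, b).
T = np.array([[1.0, 1.0],    # y1 = a + b
              [1.0, -1.0]])  # y2 = a - b
lower = np.array([0.0, 0.0])
upper = np.array([1.0, 1.0])

# Interval (hypercubic) bounds: each output is bounded on its own,
# so any coupling between y1 and y2 is lost.
radius = np.abs(T) @ (upper - lower) / 2
box_area = np.prod(2 * radius)

# Exact reachable set: the image of the input box under T, a parallelogram
# whose area is |det T| times the area of the input box.
true_area = abs(np.linalg.det(T)) * np.prod(upper - lower)

print(f"box area = {box_area:.1f}, reachable area = {true_area:.1f}")
```

In this toy case the bounding box is twice as large as the reachable set, because the box admits combinations such as y1 = 2 with y2 = 1 that no pair (a, b) can produce.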

It is worth mentioning that perturbation approaches have proved useful for the uncertainty analysis of discrete structural models [48,49,50]. However, these approaches work well for aleatory uncertainty (sensitivity to eigenvalues and eigenvectors) but not for epistemic uncertainty.

In this paper, a probabilistic UQ approach is proposed to analyze SSI through the dynamic constrained observability method, considering both epistemic and aleatory uncertainty. To overcome some of the drawbacks mentioned above, the different mode orders are first considered separately; all the mode orders involved are then combined to estimate the output parameters through an objective function. This simultaneous evaluation can appropriately take into account the dependence between the various parameters.
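How two sources of uncertainty propagate to an identified parameter can be sketched with a sampling experiment. The example below is only an illustration under stated assumptions, not the COM procedure: it uses a hypothetical single-degree-of-freedom oscillator in which the stiffness is identified as k = ω²m, treats the mass as the epistemic input (5% scatter) and the measured frequency as the aleatory input (2% scatter), and all numerical values are invented for the illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 10_000
k_true, m_nominal = 4.0, 1.0
omega_true = np.sqrt(k_true / m_nominal)

def identify_k(omega_measured, mass):
    # Inverse analysis of the toy model: k = omega^2 * m.
    return omega_measured**2 * mass

# Assumed input scatter (illustrative values only).
mass = m_nominal * (1 + 0.05 * rng.standard_normal(n))    # epistemic: model parameter
omega = omega_true * (1 + 0.02 * rng.standard_normal(n))  # aleatory: sensor noise

k_epistemic = identify_k(omega_true, mass)   # only the model parameter varies
k_aleatory = identify_k(omega, m_nominal)    # only the measurement varies
k_both = identify_k(omega, mass)             # both sources together

for name, k in [("epistemic", k_epistemic), ("aleatory", k_aleatory), ("both", k_both)]:
    print(f"{name:9s} mean = {k.mean():.3f}  std = {k.std():.3f}")
```

Running the three cases separately shows how much of the output scatter each source contributes, which is the kind of information needed to judge how much uncertainty better knowledge of the model could remove.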

3. Dynamic Structural System Identification Methodology

In dynamic SSI by COM, the finite element model (FEM) of the structure has to be defined first. Subsequently, the dynamic equation is obtained with no damping and no externally applied forces. For illustration, assume that the system of equations is as follows:

$$K\,\phi_i = \lambda_i\,M\,\phi_i \qquad (1)$$

In Equation (1), $K$, $M$, $\lambda_i$, and $\phi_i$ represent the global stiffness matrix, the mass matrix, the squared frequency, and the mode shape vector, respectively. For two-dimensional models with Bernoulli beam elements and nodes, the global stiffness matrix is composed of the characteristics of the beam elements (i.e., length $L$, elastic modulus $E$, area $A$, and inertia $I$). The mass matrix $M$ is either the consistent mass matrix or the lumped one. In this paper, the consistent mass matrix was used with a unit mass density. The squared frequency $\lambda_i$ is that of free vibration. The vector $\phi_i$ stands for the displacement shape of the vibrating system and contains, at each node $k$ and for each vibration mode $i$, the displacement in the x-direction, the displacement in the y-direction, and the rotation. Three degrees of freedom are thus considered per node.

In the direct analysis, every element of the matrices $K$ and $M$ is assumed to be known, and the squared frequency $\lambda_i$ and mode shape vector $\phi_i$ are obtained by solving Equation (1). In dynamic SSI by COM, which is an inverse analysis, the matrix $K$ is only partially known. The parameters appearing in the matrices $K$ and $M$ are the length, elastic modulus, area, inertia, and mass density of each element. It is generally assumed that the length $L$, the unit mass density, and the area $A$ are known, whereas the elastic modulus $E$ and the inertia $I$ are unknown. Since the main objective of SSI is to assess the condition of the structure, the estimation of the bending stiffnesses $EI$ is of primary importance.
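The direct analysis can be sketched numerically on a very small model. The example below is an illustration only, not the bridge model of this paper: it assumes a single Bernoulli beam element clamped at one end (a cantilever), with unit bending stiffness, unit mass per length, and unit length, so that only the transverse displacement and rotation of the free end remain as degrees of freedom. The names `EI`, `rhoA`, and `L` are chosen for this sketch.

```python
import numpy as np

EI, rhoA, L = 1.0, 1.0, 1.0  # assumed unit properties

# Stiffness and consistent mass matrices of one Bernoulli beam element,
# reduced to the free-end degrees of freedom (deflection, rotation).
K = EI / L**3 * np.array([[12.0, -6 * L],
                          [-6 * L, 4 * L**2]])
M = rhoA * L / 420 * np.array([[156.0, -22 * L],
                               [-22 * L, 4 * L**2]])

# Direct analysis: solve K phi = lambda M phi for squared frequencies and shapes.
lam, phi = np.linalg.eig(np.linalg.solve(M, K))
order = np.argsort(lam.real)
lam, phi = lam.real[order], phi.real[:, order]
omega = np.sqrt(lam)  # circular frequencies

print(omega)
```

With these unit properties the first circular frequency comes out near 3.53, close to the value of about 3.516 known for a continuous cantilever; the second mode is much less accurate with a single element. In the inverse analysis the roles are swapped: frequencies and parts of the mode shapes are measured, and entries of the stiffness matrix are the unknowns.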

Once the unknowns in the stiffness matrix, the boundary conditions, and the measurements have been determined, dynamic SSI by COM can be conducted. Here, the measurement sets consist of the frequencies and the corresponding partial modal information of each vibration mode.

Firstly, the columns of the stiffness and mass matrices are separated so that the unknown variables they contain are moved into the mode shape vector of each vibration mode, which yields a modified stiffness matrix and a modified mass matrix. The correspondingly modified mode shape vectors contain both known and unknown terms, and each is partitioned into a sub-vector of known terms and a sub-vector that includes unknown terms. The dimensions of each element are given by its superscripts. The modified stiffness and mass matrices are partitioned and labeled according to the same split of the mode shapes, as shown in Equation (2), where the mode index runs up to the number of measured modes.

Secondly, the system is rearranged so that all its unknowns are gathered in one column vector, as shown in Equation (3). The system of each vibration mode is then linearized by treating every product of unknowns, such as an unknown stiffness multiplied by an unknown modal displacement, as a single linear variable rather than as a product of separate variables.

Thirdly, when multiple modal frequencies are considered together, the equation is built by combining the information of the individual modes. Equation (4) is an example of the system formed by the first measured modes. In this expression, the coefficient matrix contains only constants, the right-hand-side vector is fully known, and the remaining vector contains the full set of unknown variables.

Fourthly, Equation (4) is treated as a system of linear equations. Its general solution is the sum of a particular solution and a homogeneous one, the latter being the solution of the system with a zero right-hand side. The general solution is expressed as Equation (5). The null-space matrix that appears in the homogeneous part is critical for the result: if any row of this matrix is composed only of zeros, the corresponding entry of the particular solution is the unique solution of that parameter. A parameter obtained in this step is categorized as an observed parameter. The newly observed parameters are fed into the next iteration (steps 1–4), and the process is repeated until no new parameters are identified.
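The null-space test of the fourth step can be sketched as follows. This is a minimal illustration rather than the implementation used in this paper: the function name `observability_check`, the symbols `B`, `D`, and `V`, and the small system are all assumptions made for the example, and the system is assumed to be consistent (the right-hand side lies in the range of the coefficient matrix).

```python
import numpy as np

def observability_check(B, D, tol=1e-10):
    """Return a particular solution, a null-space basis, and observability flags."""
    z_p = np.linalg.pinv(B) @ D            # one particular solution of B z = D
    _, s, Vt = np.linalg.svd(B)
    rank = int(np.sum(s > tol * s.max()))
    V = Vt[rank:].T                        # columns span the null space of B
    # An unknown is observed when its row of V is entirely zero: the
    # homogeneous part then cannot change its value.
    observable = np.all(np.abs(V) < 1e-8, axis=1)
    return z_p, V, observable

# Hypothetical system: z1 = 2 and z2 + z3 = 5.
B = np.array([[1.0, 0.0, 0.0],
              [0.0, 1.0, 1.0]])
D = np.array([2.0, 5.0])

z_p, V, observable = observability_check(B, D)
print(observable)        # which unknowns are uniquely determined
print(z_p[observable])   # their values, taken from the particular solution
```

Here only the first unknown is observed, since the second and third can be traded against each other without violating the equations. In the iterative scheme described above, such an observed value would be inserted as known data before the system is rebuilt.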

Lastly, an objective function, Equation (6), is applied to optimize the system of equations extracted from the last iteration. Here, in order to uncouple the observed variables, the implicit conditions among them are imposed as constraints in the objective function, i.e., each product variable must equal the product of its factors.

Equation (6) minimizes the squared sum of the frequency-related error and the mode-shape-related error. The frequency-related error is the difference between the measured and the estimated circular frequencies. The mode-shape-related error is based on the modal assurance criterion (MAC), which measures the closeness between the calculated mode shape, obtained from the inverse analysis using the estimated stiffnesses and areas, and the measured mode shape, as shown in Equation (7). Two weighting factors scale the mode shape components and the circular frequency components, respectively. In most analyses, the two weighting factors are assumed to be equal [51]. In this paper, the effect of the weighting factors was ignored. The specific implementation steps can be found in the literature [11].
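The two error terms can be sketched in code. The `mac` function below follows the standard definition of the modal assurance criterion; the `objective` function is only one plausible way of combining the terms and is an assumption of this sketch, since the exact form, normalization, and weighting of Equation (6) are not reproduced here. The names `w_freq` and `w_shape` are hypothetical.

```python
import numpy as np

def mac(phi_measured, phi_calculated):
    """Modal assurance criterion: 1 for parallel shapes, 0 for orthogonal ones."""
    num = np.dot(phi_measured, phi_calculated) ** 2
    den = np.dot(phi_measured, phi_measured) * np.dot(phi_calculated, phi_calculated)
    return num / den

def objective(omega_meas, omega_est, phi_meas, phi_est, w_freq=1.0, w_shape=1.0):
    """Assumed error measure: squared relative frequency errors plus squared (1 - MAC)."""
    omega_meas = np.asarray(omega_meas, dtype=float)
    omega_est = np.asarray(omega_est, dtype=float)
    freq_err = (omega_est - omega_meas) / omega_meas
    shape_err = np.array([1.0 - mac(pm, pe) for pm, pe in zip(phi_meas, phi_est)])
    return w_freq * np.sum(freq_err**2) + w_shape * np.sum(shape_err**2)

# Usage with made-up data: one mode, estimate equal to measurement up to scaling.
phi = np.array([1.0, 2.0, 3.0])
print(mac(phi, 2.0 * phi))                           # scaling does not affect MAC
print(objective([10.0], [10.0], [phi], [2.0 * phi]))  # perfect match gives zero error
```

Because the MAC is insensitive to the scaling of a mode shape, the measured and calculated shapes do not need to be normalized in the same way before they are compared.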

5. Discussion

The Hollandse bridge was studied in the InfraWatch project [49,50,51]. After much effort in collecting and analyzing data, no conclusive results were obtained in the structural identification process because of the large level of uncertainty. This fact motivated the present work: it is important to know in advance whether the uncertainty of a given SSI approach, when applied to a specific structural setup, is acceptable in real practice. With proper sensor placement, the range of the 90% confidence interval was found to be as small as 0.222 and 0.183 for the two estimated stiffnesses when both sources of uncertainty were considered (Table 6). This means that the estimated stiffness presents around 10% of uncertainty in each direction, given that the range was reasonably unbiased. This uncertainty range seems very reasonable considering the high level of uncertainty of the input variables (e.g., 50% in the case of the Young's modulus or 30% in the rotation of a node in the mode shape).
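As a quick check of the "around 10%" figure, and assuming that the reported ranges are expressed relative to the real stiffness and that the intervals are roughly centered on it, the half-widths of the two intervals are:

```latex
\frac{0.222}{2} = 0.111 \approx 11\%, \qquad \frac{0.183}{2} = 0.0915 \approx 9\%
```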

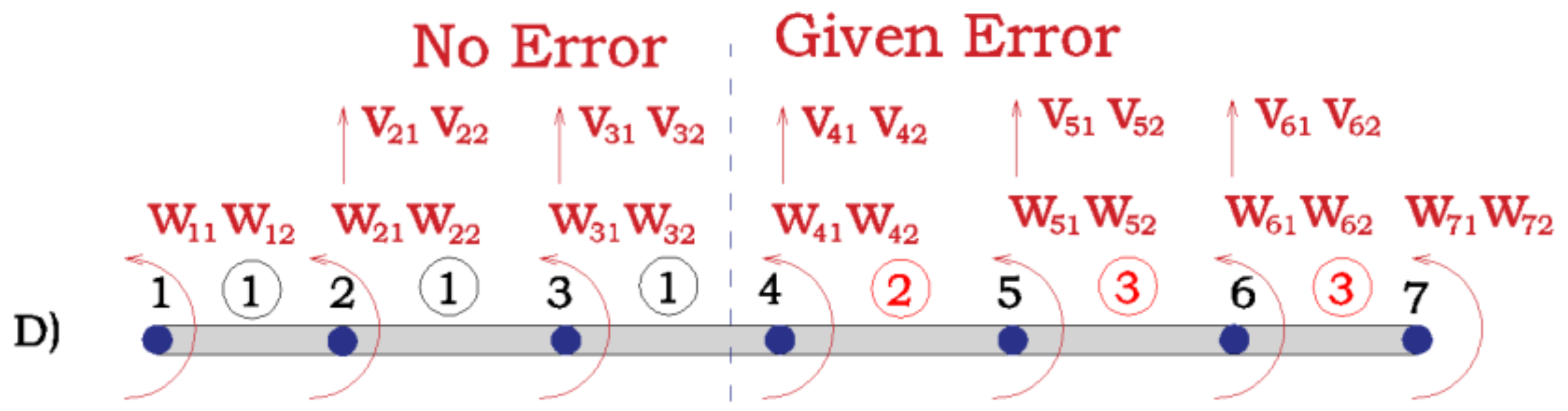

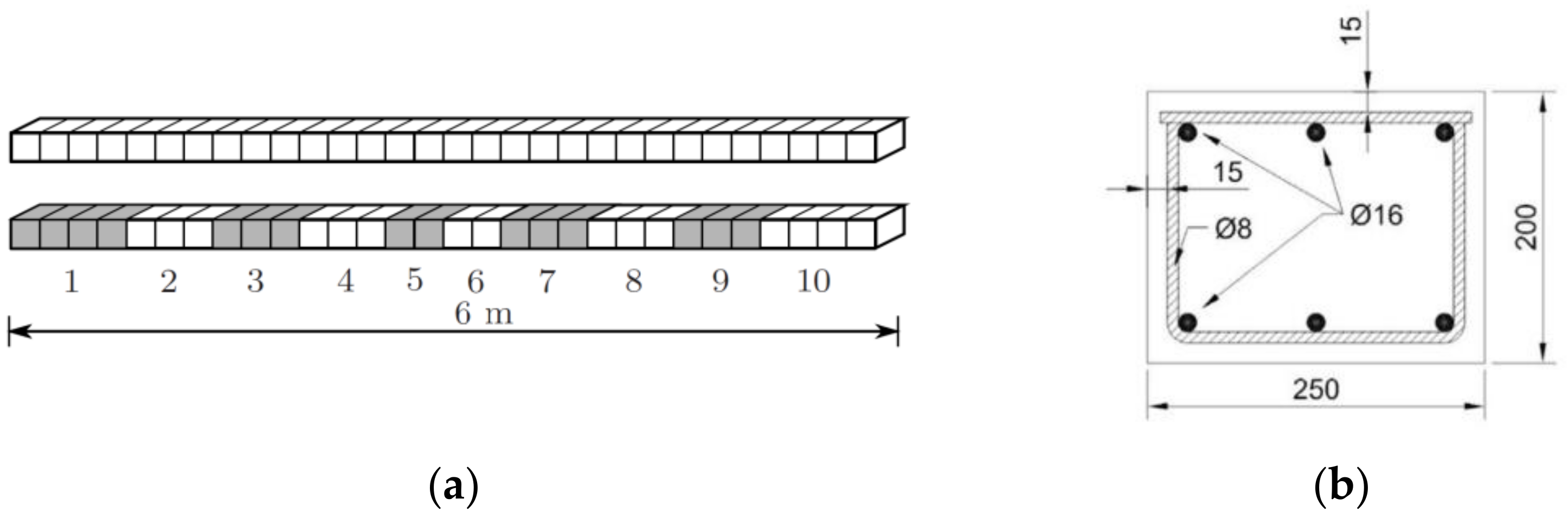

To assess to which extent the dynamic COM provides acceptable results in terms of uncertainty when compared with other SSI methods in the literature, the example proposed by [

4], and further investigated in [

11] was used (see

Figure 11). This is a reinforced concrete beam with a length of 6 m divided into 10 substructures with a uniform stiffness value, as shown in

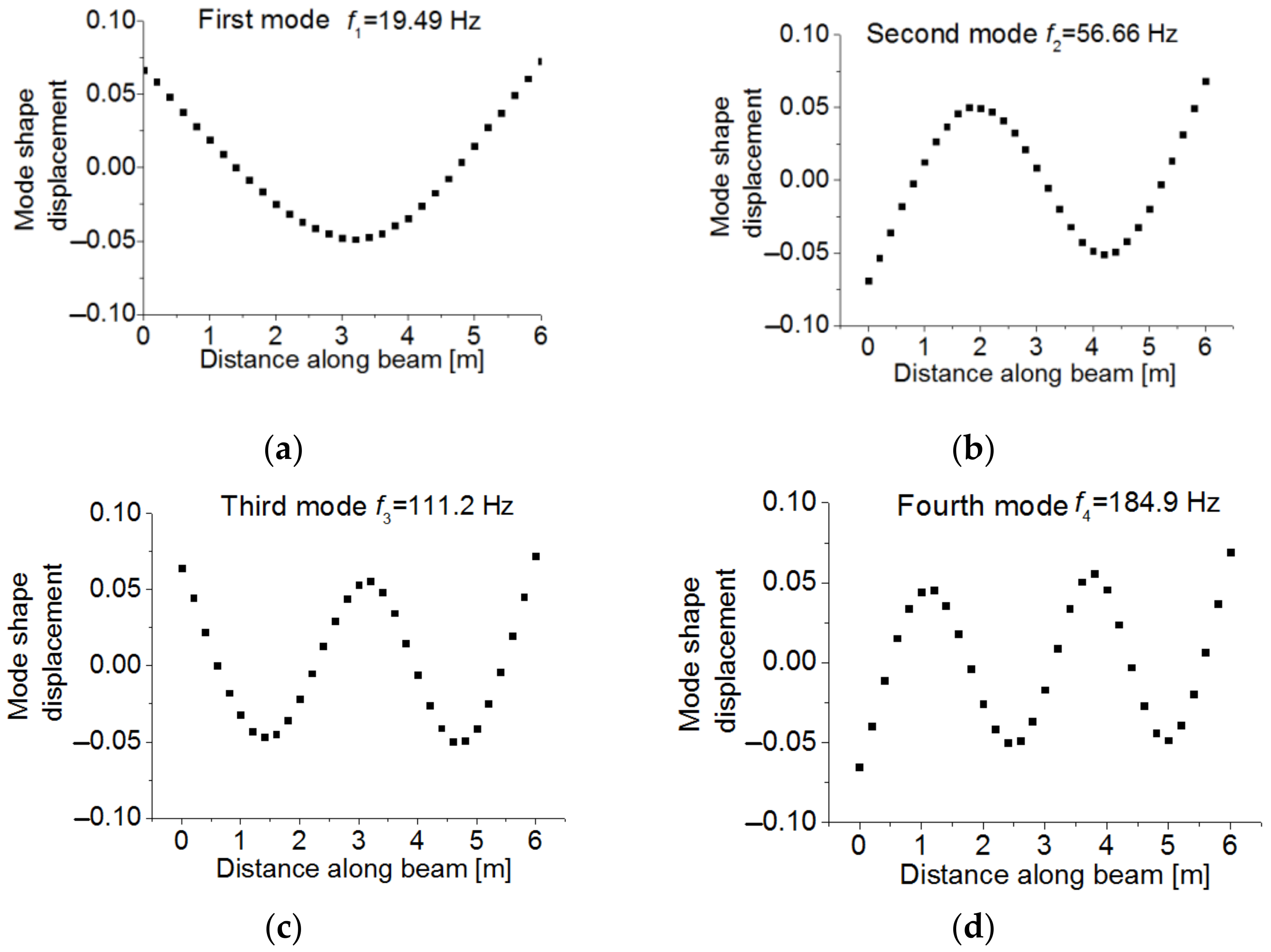

Figure 11. The measured transverse mode shape displacements were observed at equidistant positions along the beam at 31 points. The resulting mode shape measurements are shown in

Figure 12 with their corresponding natural frequencies. The stiffness of these 10 elements given in Reference [

4] were taken as the real values for this beam. The considered measurement set includes the frequencies and vertical displacement at the 31 points given by the same reference. Regarding the errors considered, to introduce the epistemic uncertainty, given that it is a free-free vibration beam with unknown stiffness, only the input parameter

is considered. It takes the common density of reinforced concrete

(probabilistic distribution

, the same as in

Table 2). The aleatory uncertainty was calculated through the difference between the experimental bending modes and frequencies and the corresponding theoretical data at each of these 31 points. The average values of the obtained uncertainty are given in

Table 7.

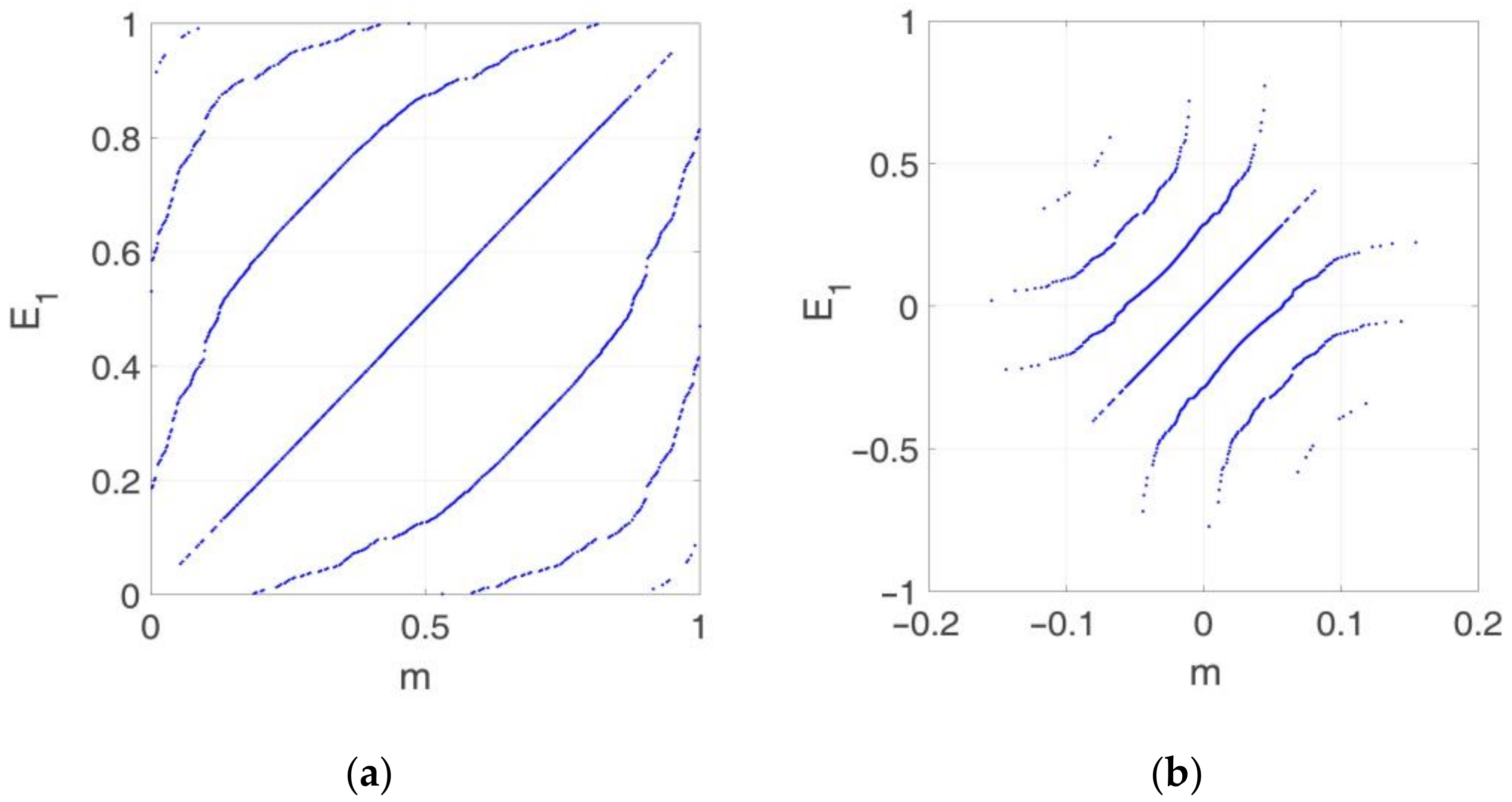

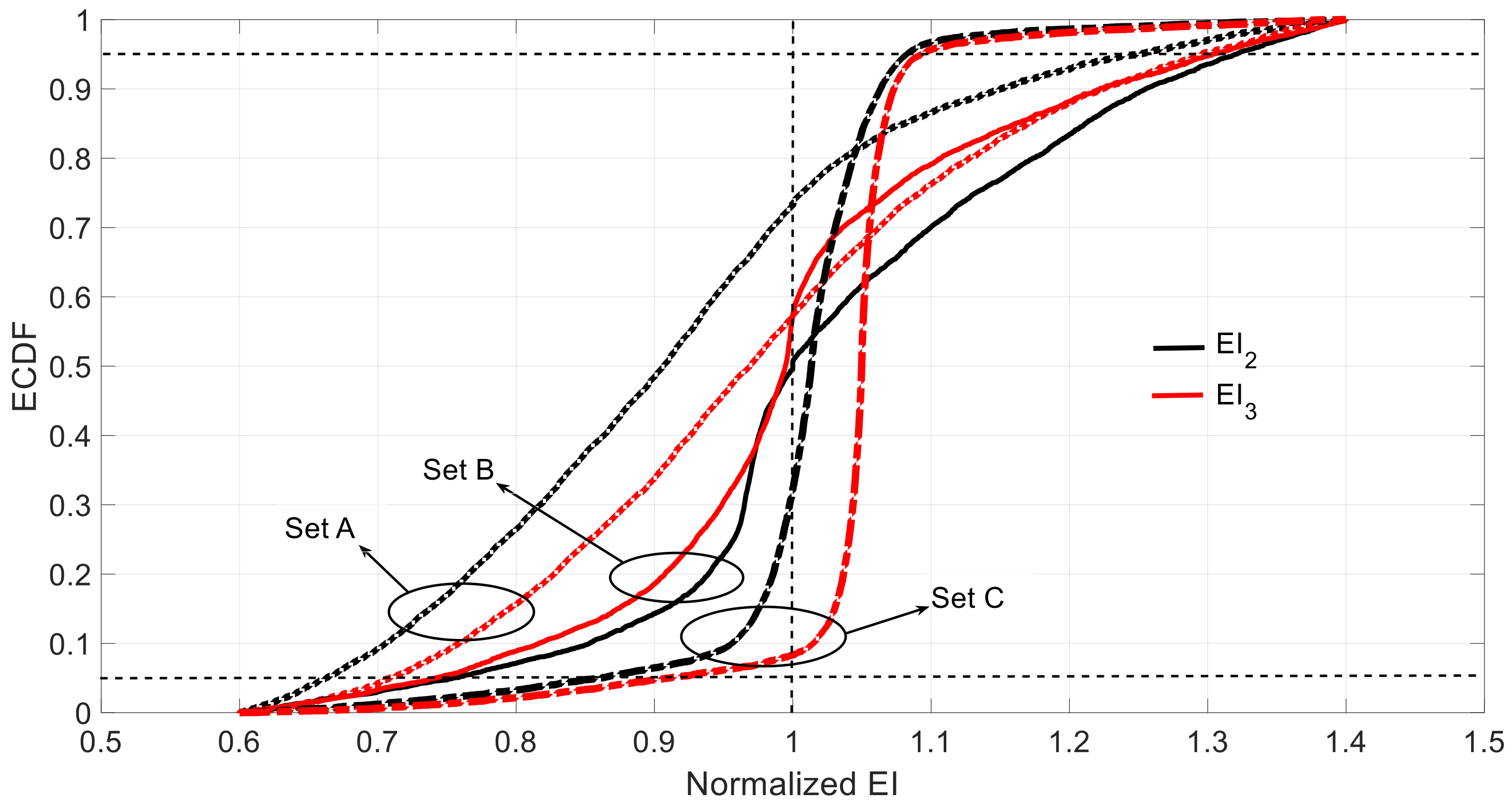

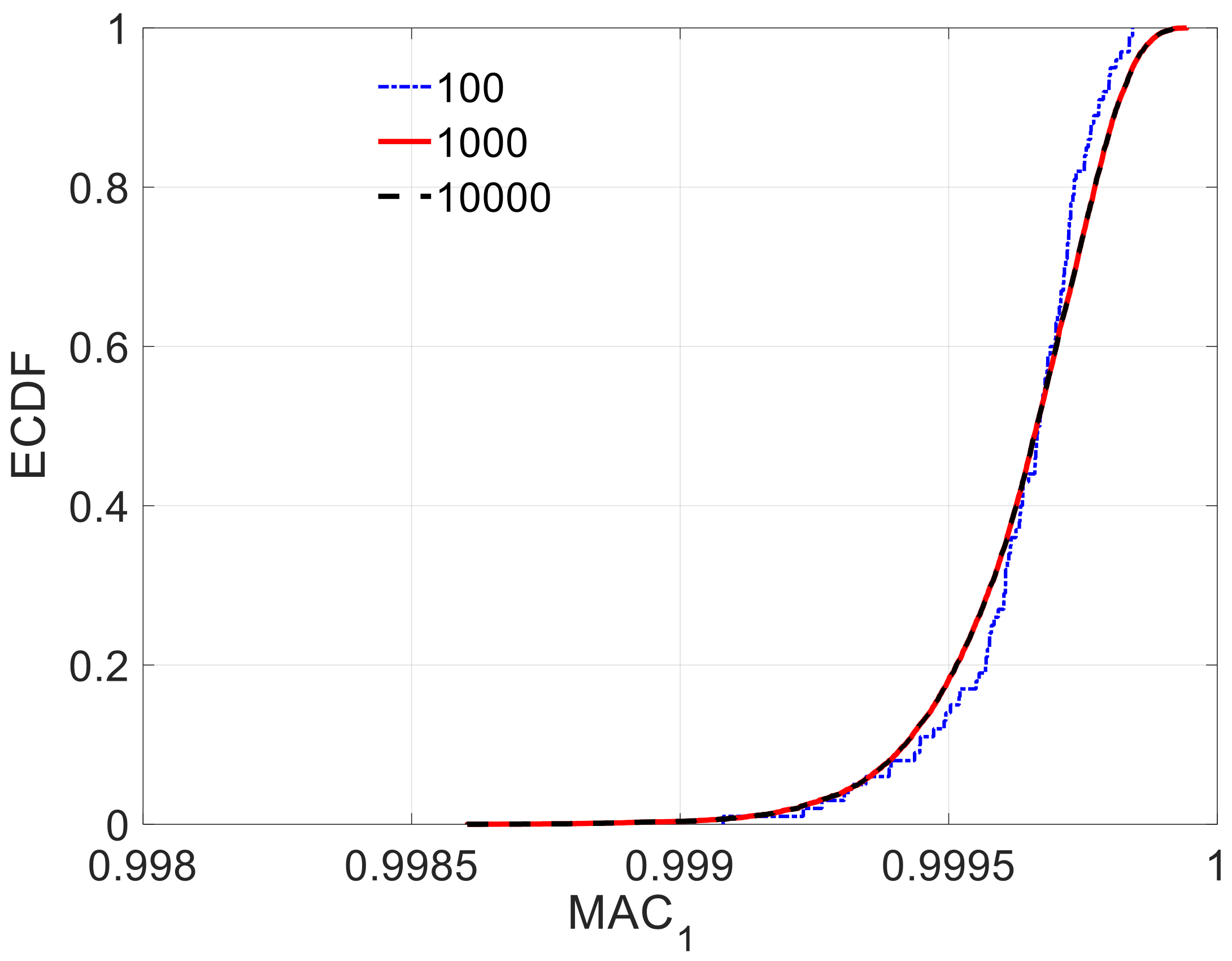

Considering the epistemic and aleatory uncertainty together, the sample size was determined from the empirical cumulative distribution function (ECDF) of the estimated quantity, as shown in Figure 13. The distributions obtained for sample sizes of $10^3$ and $10^4$ were very close to each other, which implies that a sample size of $10^3$ was enough to guarantee the accuracy of the estimate.
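A sample-size check of this kind can be sketched by comparing the ECDFs of two sample sets of different size. The example below is an illustration under assumptions: the function `simulate` is a stand-in that draws a lognormal ratio of estimated to real stiffness instead of running the identification, and the largest vertical gap between the two ECDFs is used as the closeness measure, which is a choice of this sketch rather than the criterion of the paper.

```python
import numpy as np

def ecdf(samples, grid):
    """Empirical CDF of `samples` evaluated at the points in `grid`."""
    s = np.sort(samples)
    return np.searchsorted(s, grid, side="right") / s.size

rng = np.random.default_rng(1)

def simulate(n):
    # Stand-in for n runs of the identification (purely illustrative):
    # ratio of estimated to real stiffness, lognormally scattered around 1.
    return rng.lognormal(mean=0.0, sigma=0.1, size=n)

small, large = simulate(10**3), simulate(10**4)
grid = np.linspace(0.6, 1.4, 401)

# Largest vertical distance between the two ECDFs on the grid.
max_gap = np.max(np.abs(ecdf(small, grid) - ecdf(large, grid)))
print(f"largest ECDF difference: {max_gap:.3f}")
```

If the gap is small compared with the precision needed for the confidence intervals, the smaller sample can be regarded as sufficient; otherwise the sample size is increased and the comparison repeated.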

Figure 14 shows the estimated unknown stiffnesses and their standard deviations. COM tended to slightly underestimate the mean values of the stiffness when all mode-shape information was used. The stiffness range associated with the 99% confidence interval obtained by COM is shown in red in Figure 15, in comparison with the results reported by Simoen using a Bayesian approach for the SSI (grey shading). The real values are indicated with a thick black line. For all the elements, COM provides less uncertain estimations. All in all, this figure shows that the UQ associated with COM provides reasonable and acceptable results, which were slightly better than those of the Bayesian approach.

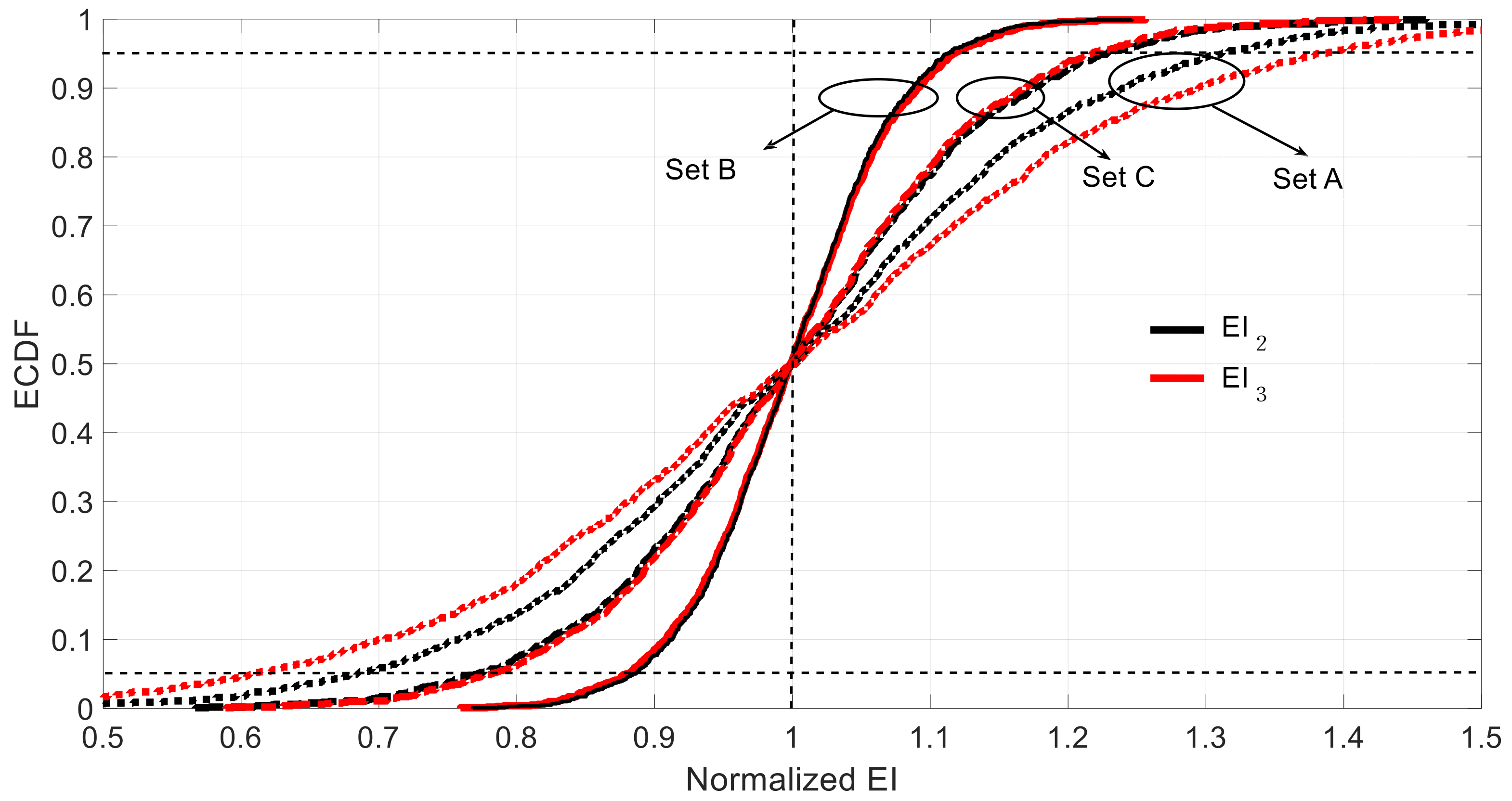

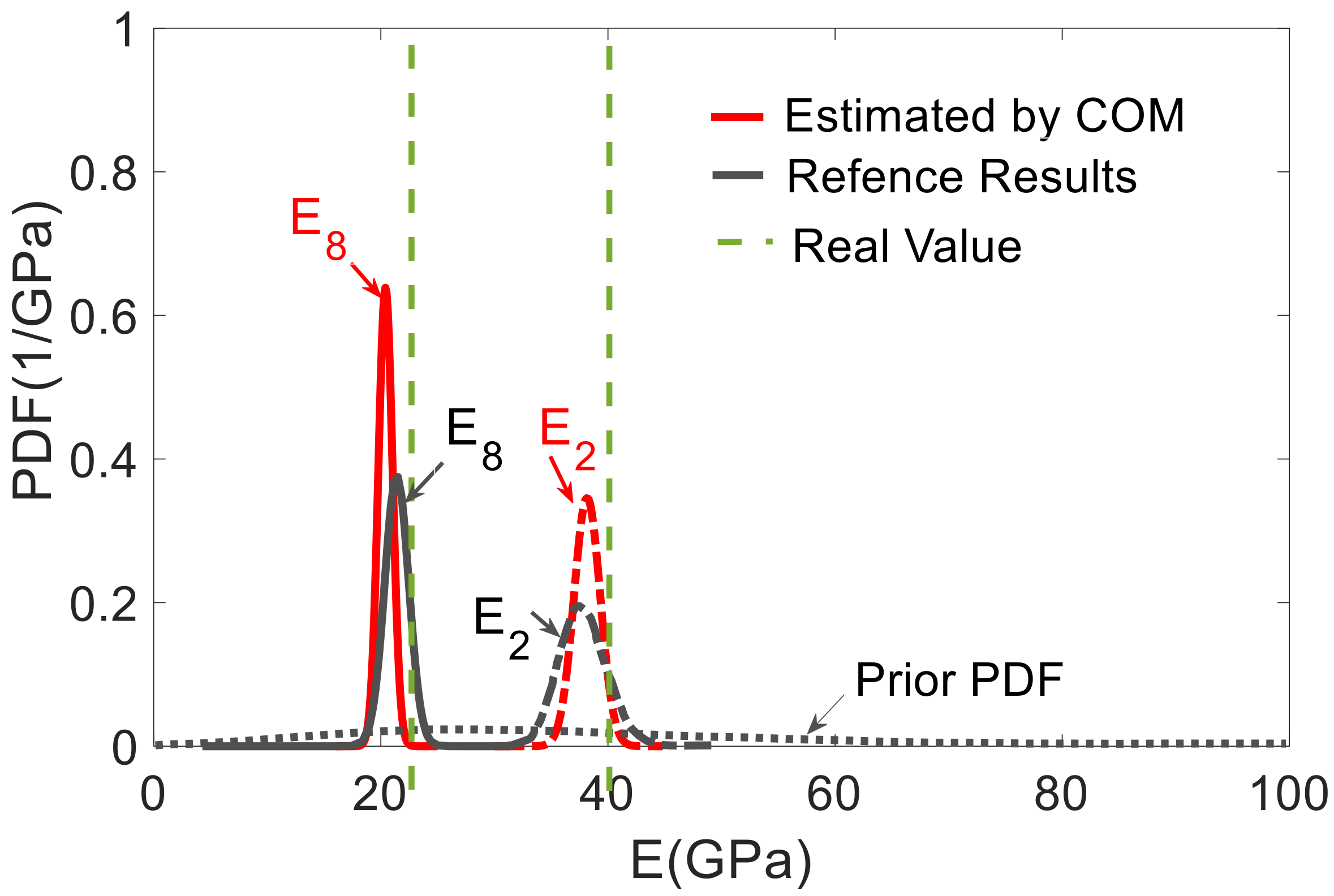

Figure 16 depicts the distributions of two of the Young's moduli obtained by the UQ analysis of COM (red line) and by the Bayesian approach (grey line). It shows that the proposed approach did not require a prior joint PDF to obtain an accurate stiffness probability distribution.

Even when the obtained uncertainty is acceptable, it is always desirable to minimize it. The analysis of the two sources of uncertainty becomes relevant in this context. For instance, there was no bias or skewness in Table 3 (epistemic uncertainty), whereas obvious bias and skewness are present in Table 5 and Table 6, which means that these were caused by the sensor error. Thus, increasing the sensor accuracy might reduce the bias and skewness effects. Besides, comparing the estimates for Sets A, B, and C in Table 3, Table 5, and Table 6, the optimal sensor set shifts from Set B under a single source of uncertainty to Set C when both sources are considered. This means that selecting the optimal placement of the sensor sets is, in addition to increasing the sensor accuracy, an effective way to lower the uncertainty of the output. However, because the aleatory uncertainty is hard to remove [18], efforts must be made to minimize the epistemic uncertainty involved in the problem. The more information is available about the structural setup, the closer the UQ of the SSI will be to the analysis of Section 4.3.2.