Automated IoT Device Identification Based on Full Packet Information Using Real-Time Network Traffic

Abstract

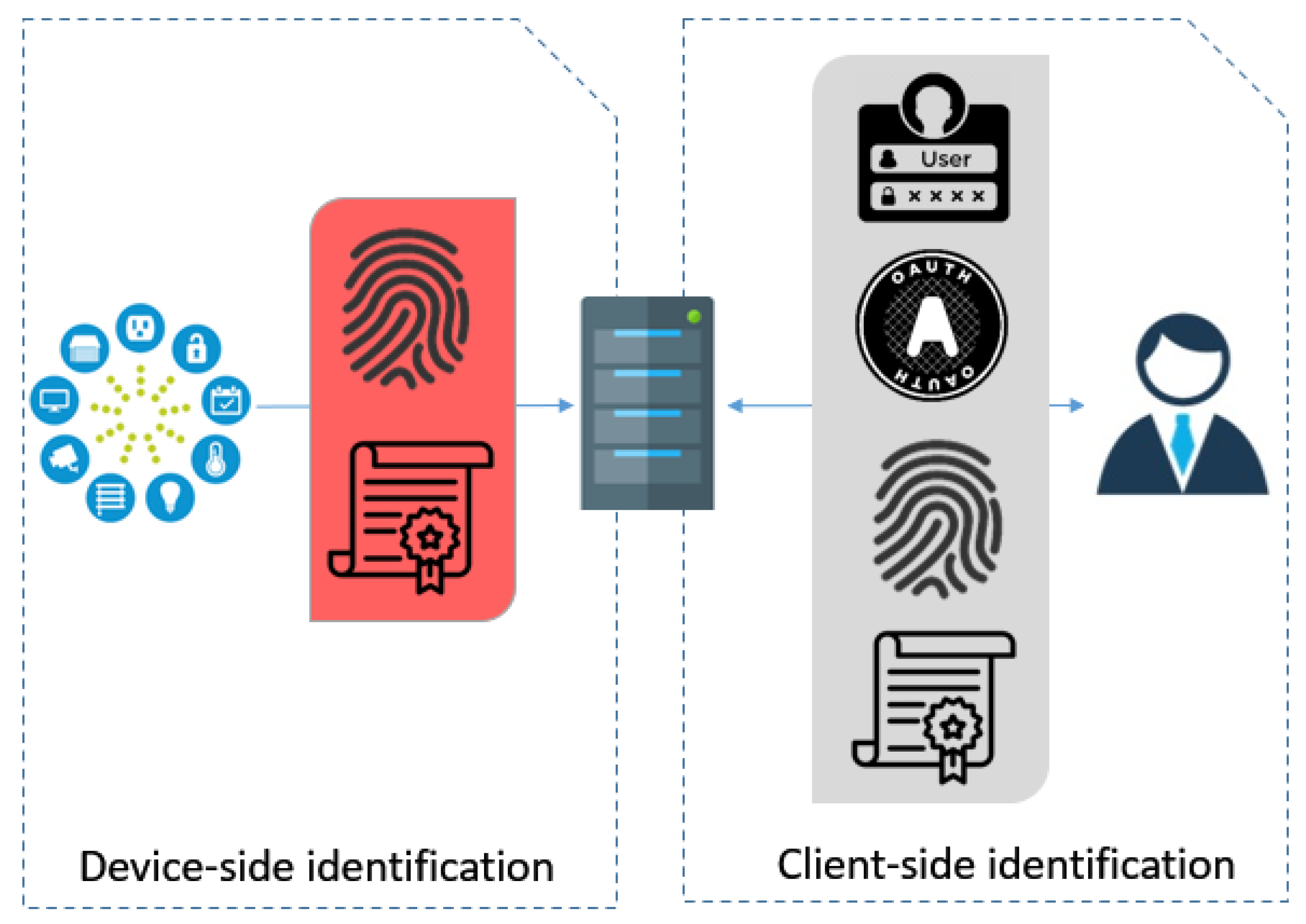

1. Introduction

2. Literature Review

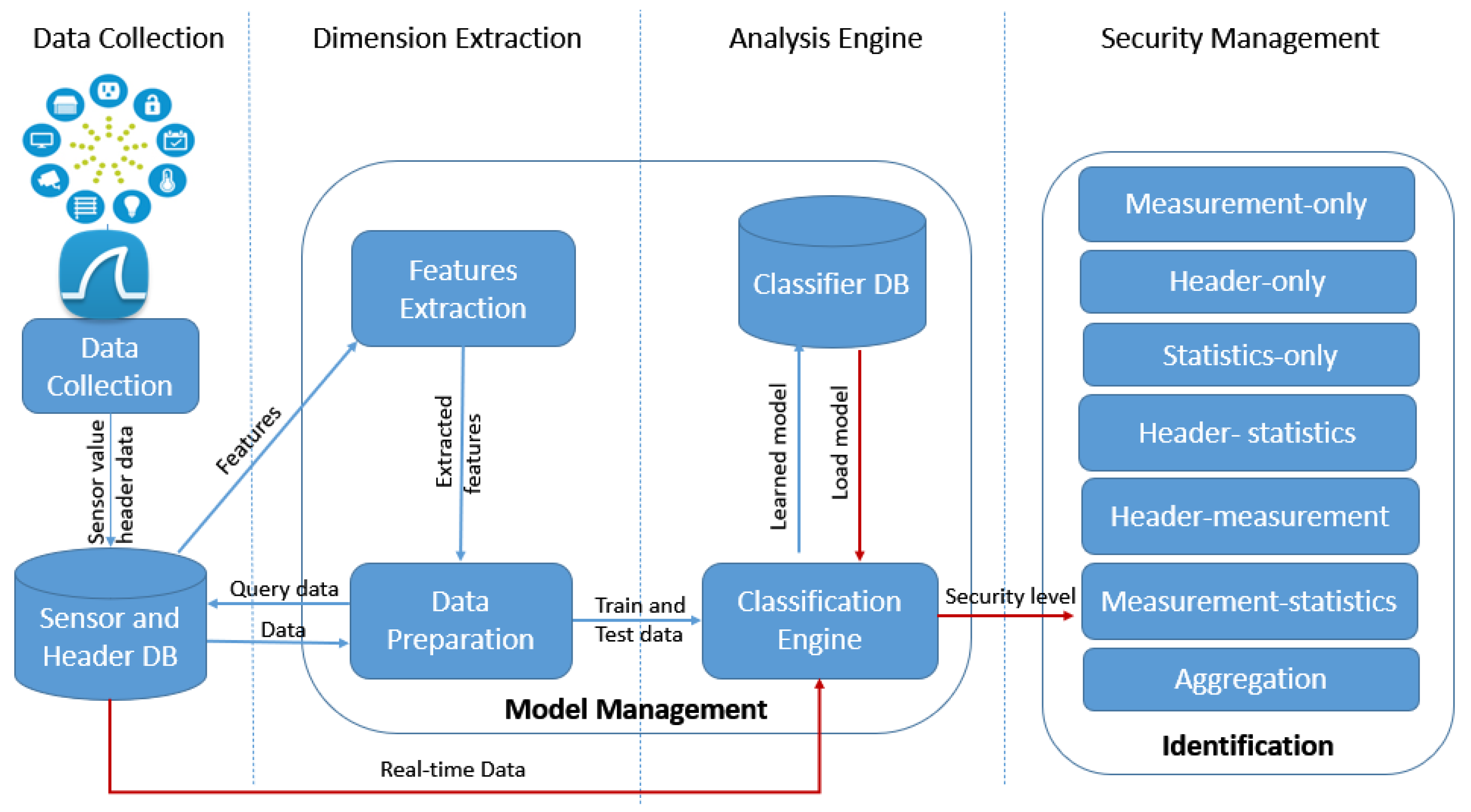

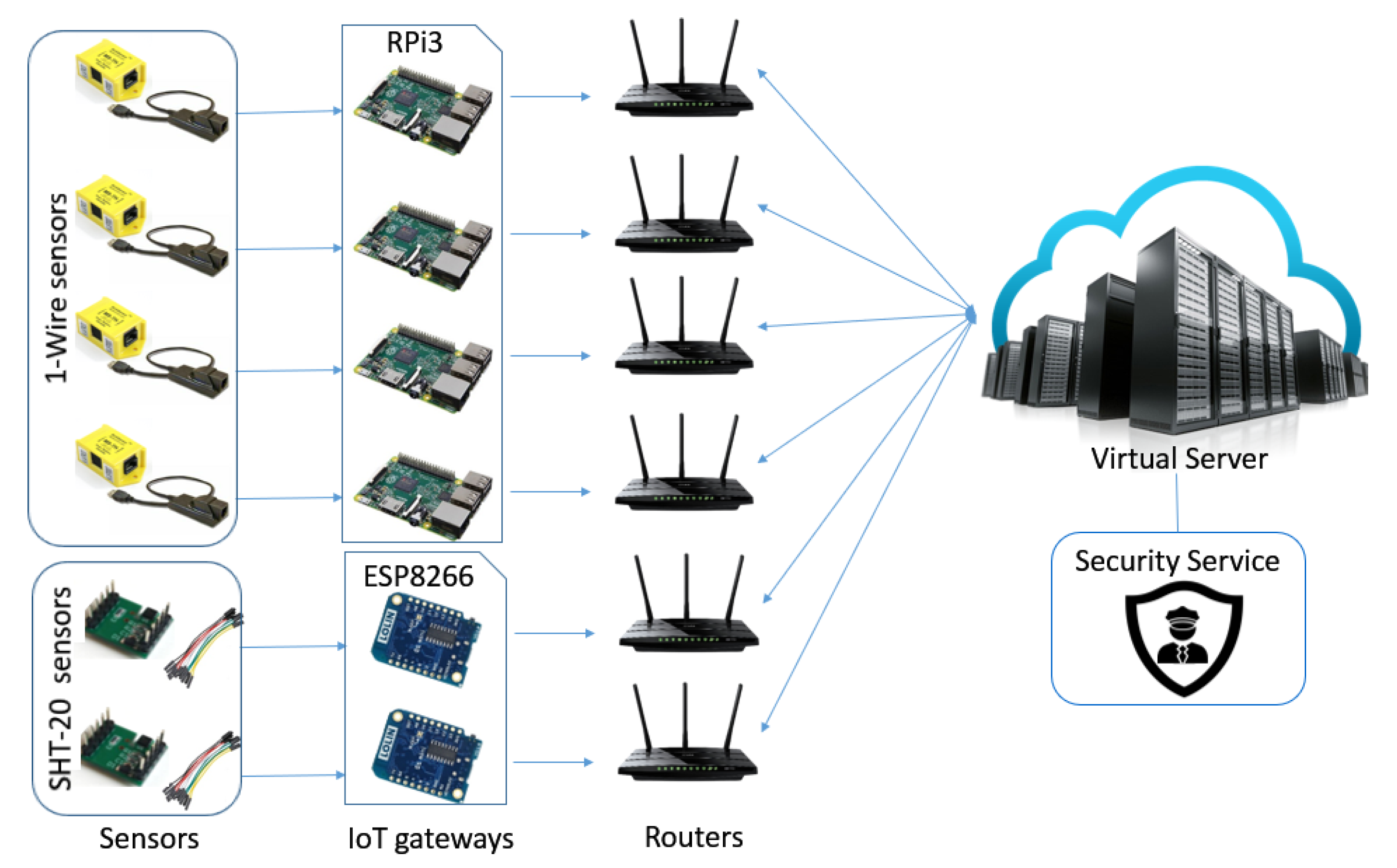

3. Device Identification Framework

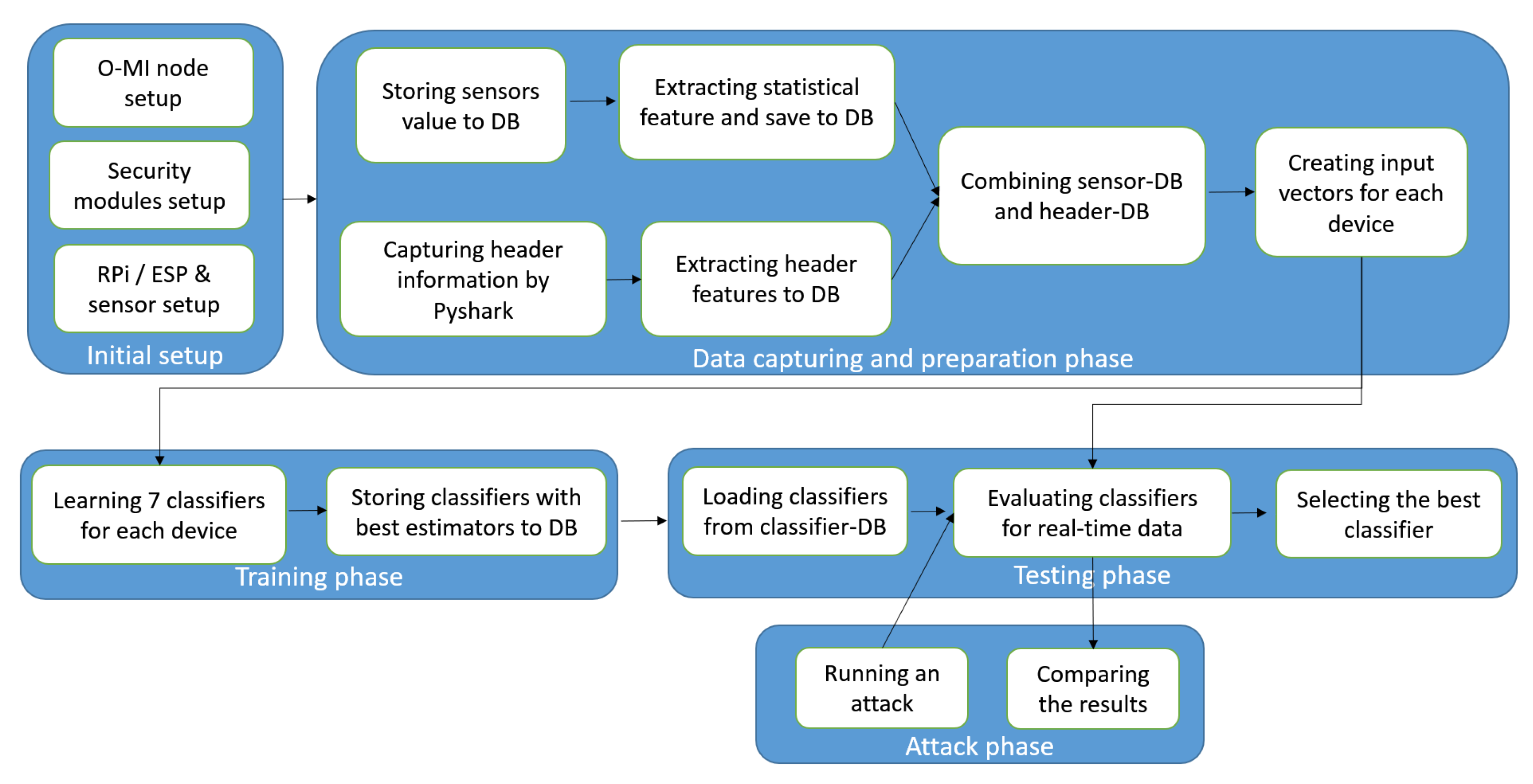

4. Enforcement

4.1. Methodology

4.2. Model Creation and Selection

4.3. Feature Extraction

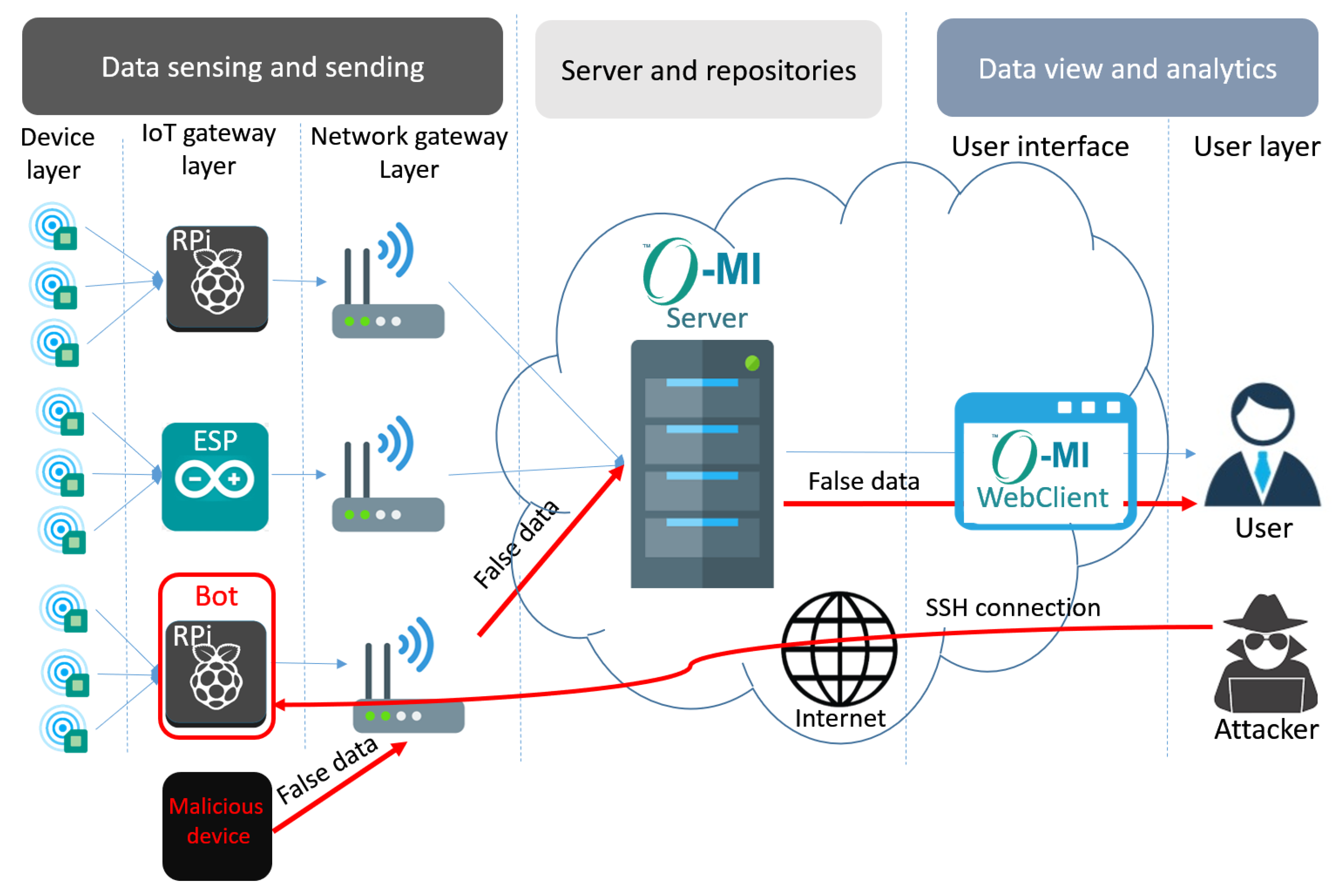

5. Adversary Model

5.1. Our Attack Model

5.1.1. Physical Attack

5.1.2. Remote Access Attack

6. Evaluation

6.1. Scenario Description

6.2. Performance Metrics

6.2.1. Confusion Matrix

- True Positive (TP): The predicted class and the actual class are both the true class (1).

- True Negative (TN): An element is predicted to be in the false class (0) and actually belongs to the false class (0).

- False Positive (FP): The system predicts that an element is in the true class (1), but it actually belongs to the false class (0).

- False Negative (FN): The system predicts that an element is in the false class (0), but it actually belongs to the true class (1).
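The four outcomes defined above can be illustrated with a minimal sketch. The function name `confusion_counts` and the label vectors are hypothetical and serve only as an example; they are not taken from the evaluation in this paper.

```python
from collections import Counter


def confusion_counts(actual, predicted, positive=1):
    """Count TP, TN, FP and FN for a binary classification task."""
    counts = Counter()
    for a, p in zip(actual, predicted):
        if p == positive:
            # Predicted true class: correct (TP) or wrong (FP)
            counts["TP" if a == positive else "FP"] += 1
        else:
            # Predicted false class: wrong (FN) or correct (TN)
            counts["FN" if a == positive else "TN"] += 1
    return {key: counts[key] for key in ("TP", "TN", "FP", "FN")}


# Hypothetical labels: 1 = true class, 0 = false class
actual = [1, 1, 0, 0, 1, 0, 1, 0]
predicted = [1, 0, 0, 1, 1, 0, 1, 0]
counts = confusion_counts(actual, predicted)
print(counts)
```

For a multi-class problem such as device identification, the same counting would presumably be repeated per class in a one-vs-rest fashion.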

6.2.2. Accuracy

6.2.3. Recall or Sensitivity

6.2.4. Precision

6.2.5. F-Score
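As a rough sketch, the metrics named in Sections 6.2.2 to 6.2.5 can be computed from the confusion-matrix counts of Section 6.2.1 using their standard binary definitions. The function name `classification_metrics` and the input counts are hypothetical; how the per-class values are averaged for the multi-class results reported here is not shown and is not assumed.

```python
def classification_metrics(tp, tn, fp, fn):
    """Standard binary metrics derived from confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0        # sensitivity
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    denom = precision + recall
    # F-score as the harmonic mean of precision and recall (F1)
    f_score = 2 * precision * recall / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "recall": recall,
        "precision": precision,
        "f_score": f_score,
    }


# Hypothetical counts, not taken from the reported experiments
metrics = classification_metrics(tp=3, tn=3, fp=1, fn=1)
print(metrics)
```

The zero-denominator guards return 0.0 when a class is never predicted or never occurs, which is one common convention among several.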

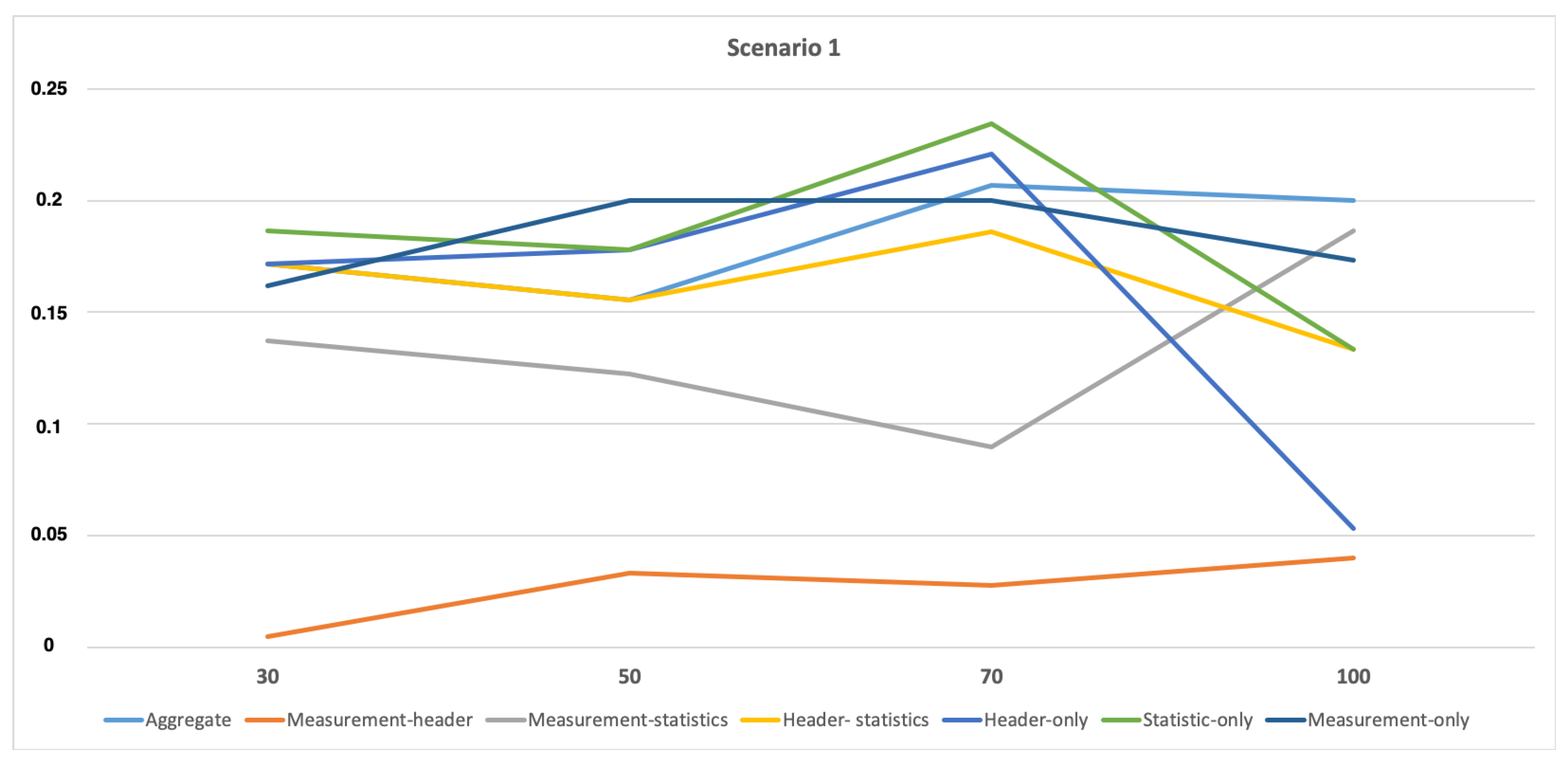

6.3. Classification Results under Normal Situation

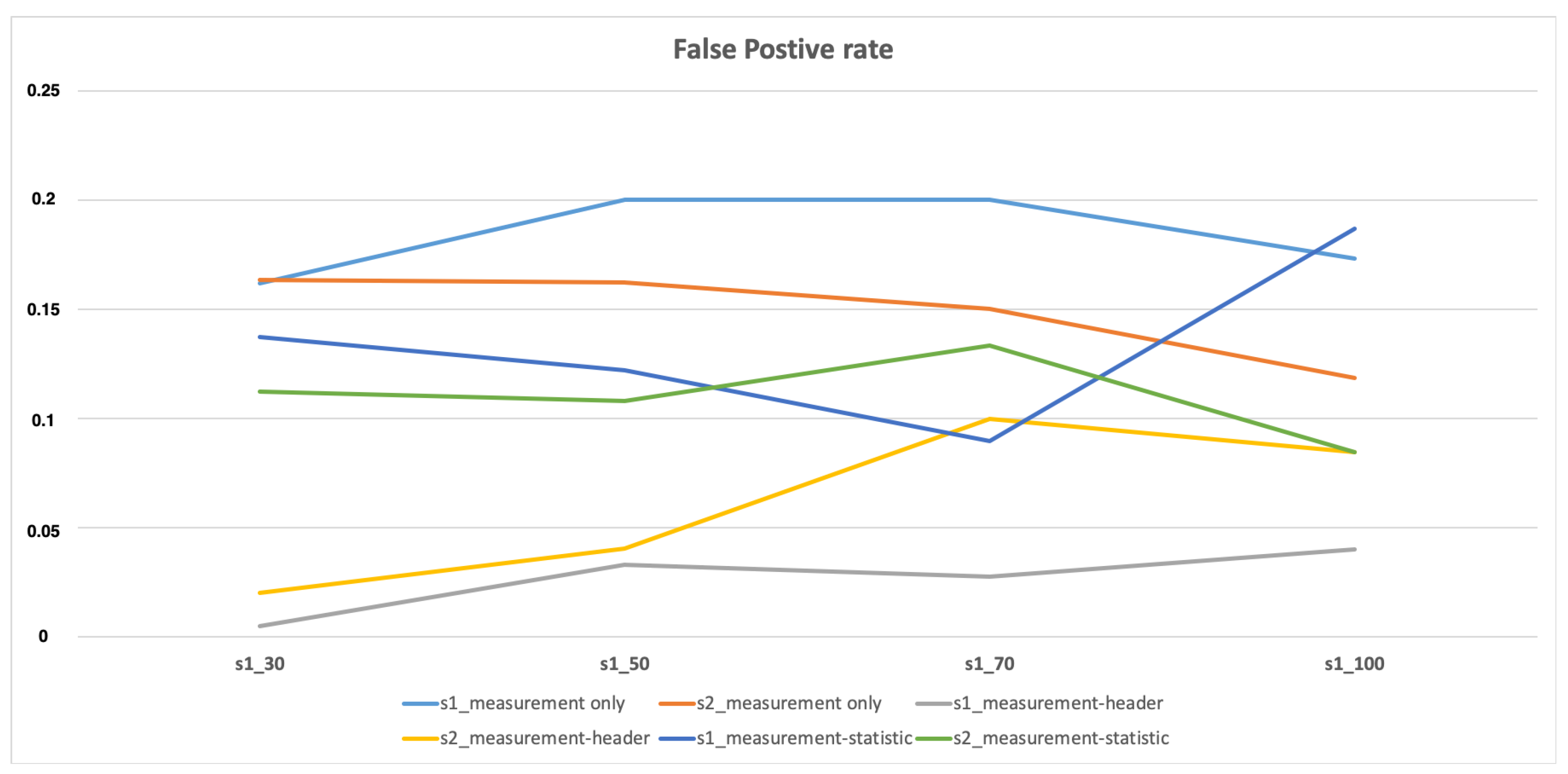

6.4. Classification Results under Attack Situation

7. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Gartner Newsroom. 2015. Available online: https://gartner.com/en/newsroom/id/283971 (accessed on 4 June 2020).

2. Bertino, E.; Islam, N. Botnets and internet of things security. Computer 2017, 50, 76–79.

3. Shah, T.; Venkatesan, S. Authentication of IoT device and IoT server using secure vaults. In Proceedings of the 2018 17th IEEE International Conference On Trust, Security And Privacy In Computing And Communications/12th IEEE International Conference On Big Data Science And Engineering (TrustCom/BigDataSE), New York, NY, USA, 31 July–3 August 2018; pp. 819–824.

4. Aksoy, A.; Gunes, M.H. Automated IoT Device Identification using Network Traffic. In Proceedings of the 2019 IEEE International Conference on Communications, ICC 2019, Shanghai, China, 20–24 May 2019; pp. 1–7.

5. Raval, M.; Sunkireddy, R. Hardware Root of Trust on IoT Gateway. 2019. Available online: https://www.techdesignforums.com/practice/technique/hardware-roots-of-trust-for-iot-security/ (accessed on 8 April 2021).

6. Yousefnezhad, N.; Filippov, R.; Javed, A.; Buda, A.; Madhikermi, M.; Främling, K. Authentication and Access Control for Open Messaging Interface Standard. In Proceedings of the 14th EAI International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services, Melbourne, Australia, 7–10 November 2017; Gu, T., Kotagiri, R., Liu, H., Eds.; ACM: New York, NY, USA, 2017; pp. 20–27.

7. Li, B.; Gunes, M.H.; Bebis, G.; Springer, J. A supervised machine learning approach to classify host roles on line using sflow. In Proceedings of the First Edition Workshop on High Performance and Programmable Networking, New York, NY, USA, 18 June 2013; pp. 53–60.

8. Yousefnezhad, N.; Madhikermi, M.; Främling, K. MeDI: Measurement-based Device Identification Framework for Internet of Things. In Proceedings of the 16th IEEE International Conference on Industrial Informatics, INDIN 2018, Porto, Portugal, 18–20 July 2018; pp. 95–100.

9. Sarma, A.C.; Girão, J. Identities in the future internet of things. Wirel. Pers. Commun. 2009, 49, 353–363.

10. Mahalle, P.; Babar, S.; Prasad, N.R.; Prasad, R. Identity management framework towards internet of things (IoT): Roadmap and key challenges. In International Conference on Network Security and Applications; Springer: Berlin/Heidelberg, Germany, 2010; pp. 430–439.

11. Miettinen, M.; Heuser, S.; Kronz, W.; Sadeghi, A.; Asokan, N. ConXsense: Automated context classification for context-aware access control. In Proceedings of the 9th ACM Symposium on Information, Computer and Communications Security, ASIA CCS ’14, Kyoto, Japan, 3–6 June 2014; pp. 293–304.

12. Perera, C.; Zaslavsky, A.B.; Christen, P.; Georgakopoulos, D. Context Aware Computing for The Internet of Things: A Survey. IEEE Commun. Surv. Tutorials 2014, 16, 414–454.

13. Kohno, T.; Broido, A.; Claffy, K.C. Remote Physical Device Fingerprinting. In Proceedings of the 2005 IEEE Symposium on Security and Privacy (S&P 2005), Oakland, CA, USA, 8–11 May 2005; pp. 211–225.

14. Arackaparambil, C.; Bratus, S.; Shubina, A.; Kotz, D. On the reliability of wireless fingerprinting using clock skews. In Proceedings of the Third ACM Conference on Wireless Network Security, WISEC 2010, Hoboken, NJ, USA, 22–24 March 2010; pp. 169–174.

15. Jana, S.; Kasera, S.K. On Fast and Accurate Detection of Unauthorized Wireless Access Points Using Clock Skews. IEEE Trans. Mob. Comput. 2010, 9, 449–462.

16. Barbosa, R.R.R.; Sadre, R.; Pras, A. Flow whitelisting in SCADA networks. Int. J. Crit. Infrastruct. Prot. 2013, 6, 150–158.

17. Radhakrishnan, S.V.; Uluagac, A.S.; Beyah, R. GTID: A technique for physical device and device type fingerprinting. IEEE Trans. Dependable Secur. Comput. 2015, 12, 519–532.

18. Miettinen, M.; Marchal, S.; Hafeez, I.; Asokan, N.; Sadeghi, A.; Tarkoma, S. IoT SENTINEL: Automated Device-Type Identification for Security Enforcement in IoT. In Proceedings of the 37th IEEE International Conference on Distributed Computing Systems, ICDCS 2017, Atlanta, GA, USA, 5–8 June 2017; pp. 2177–2184.

19. Sharaf-Dabbagh, Y.; Saad, W. On the authentication of devices in the Internet of things. In Proceedings of the 17th IEEE International Symposium on A World of Wireless, Mobile and Multimedia Networks, WoWMoM 2016, Coimbra, Portugal, 21–24 June 2016; pp. 1–3.

20. Noguchi, H.; Kataoka, M.; Yamato, Y. Device Identification Based on Communication Analysis for the Internet of Things. IEEE Access 2019, 7, 52903–52912.

21. Bezawada, B.; Bachani, M.; Peterson, J.; Shirazi, H.; Ray, I.; Ray, I. Behavioral Fingerprinting of IoT Devices. In Proceedings of the 2018 Workshop on Attacks and Solutions in Hardware Security, ASHES@CCS 2018, Toronto, ON, Canada, 19 October 2018; Chang, C., Rührmair, U., Holcomb, D., Guajardo, J., Eds.; ACM: New York, NY, USA, 2018; pp. 41–50.

22. Meidan, Y.; Bohadana, M.; Shabtai, A.; Guarnizo, J.D.; Ochoa, M.; Tippenhauer, N.O.; Elovici, Y. ProfilIoT: A machine learning approach for IoT device identification based on network traffic analysis. In Proceedings of the Symposium on Applied Computing, SAC 2017, Marrakech, Morocco, 3–7 April 2017; Seffah, A., Penzenstadler, B., Alves, C., Peng, X., Eds.; ACM: New York, NY, USA, 2017; pp. 506–509.

23. Meidan, Y.; Bohadana, M.; Shabtai, A.; Ochoa, M.; Tippenhauer, N.O.; Guarnizo, J.D.; Elovici, Y. Detection of Unauthorized IoT Devices Using Machine Learning Techniques. arXiv 2017, arXiv:1709.04647.

24. Sivanathan, A.; Gharakheili, H.H.; Loi, F.; Radford, A.; Wijenayake, C.; Vishwanath, A.; Sivaraman, V. Classifying IoT Devices in Smart Environments Using Network Traffic Characteristics. IEEE Trans. Mob. Comput. 2019, 18, 1745–1759.

25. Hamad, S.A.; Zhang, W.E.; Sheng, Q.Z.; Nepal, S. IoT Device Identification via Network-Flow Based Fingerprinting and Learning. In Proceedings of the 18th IEEE International Conference On Trust, Security And Privacy In Computing And Communications/13th IEEE International Conference On Big Data Science And Engineering, TrustCom/BigDataSE 2019, Rotorua, New Zealand, 5–8 August 2019; pp. 103–111.

26. Shahid, M.R.; Blanc, G.; Zhang, Z.; Debar, H. IoT Devices Recognition Through Network Traffic Analysis. In Proceedings of the IEEE International Conference on Big Data, Big Data 2018, Seattle, WA, USA, 10–13 December 2018; pp. 5187–5192.

27. Marchal, S.; Miettinen, M.; Nguyen, T.D.; Sadeghi, A.R.; Asokan, N. Audi: Toward autonomous iot device-type identification using periodic communication. IEEE J. Sel. Areas Commun. 2019, 37, 1402–1412.

28. Eom, J.h.; Han, Y.J.; Park, S.H.; Chung, T.M. Active cyber attack model for network system’s vulnerability assessment. In Proceedings of the 2008 International Conference on Information Science and Security (ICISS 2008), Jammu, India, 16–20 December 2008; pp. 153–158.

29. SolarWinds. Detecting and Preventing Rogue Devices. 2017. Available online: https://www.solarwinds.com/-/media/solarwinds/swdcv2/licensed-products/user-device-tracker/resources/whitepaper/udt_wp_detect_prevent_rogue_devices.ashx?rev=7a261fece0b940ceb814f4985e1d74ff (accessed on 8 April 2021).

30. Buckland, M.K.; Gey, F.C. The Relationship between Recall and Precision. J. Am. Soc. Inf. Sci. 1994, 45, 12–19.

| Paper | Purpose | Identification Method | Considered Features | Implementation | Attack Status |
|---|---|---|---|---|---|
| [20] | To identify the device type and device model | Calculating the similarity of features | Communication features extracted from header | Network cameras and factory-used devices | No attack |
| [21] | To employ behavioral fingerprinting for identification and authentication | K-nearest-neighbors (K-NN), Decision Trees (DT), gradient boosting, and majority voting | Header features and payload-based features | 14 home IoT devices | No attack |
| [4] | To automatically classify the IoT devices using TCP/IP packets | ML algorithms (DT, J48, OneR, PART) to classify device type | Genetic algorithm (GA) to determine the most unique features from network, transport, and application layer | A database from [18] | No attack |
| [22] | To identify IoT devices using ML algorithms on network traffic data | Two-stage classifier: I. distinguish IoT vs. non-IoT; II. determine device class | Features from network, transport, and application layer, plus data from Alexa Rank and GeoIP | 9 distinct IoT devices, plus PCs and smartphones | No attack |
| [23] | To identify IoT device types from the white list | Multi-class classifier using Random Forest (RF) | Features from Transmission Control Protocol/Internet Protocol (TCP/IP) sessions | 17 different IoT devices (9 device types) by different vendors | Based on violations of local organizational security policies |
| [24] | To classify IoT devices using traffic characteristics | Multi-stage ML: Stage-0. Naïve Bayes; Stage-1. RF | Statistical attributes: activity cycles, port number, signaling patterns, and cipher suites | A living lab with 28 IoT devices | User Datagram Protocol (UDP) reflection and TCP SYN attacks |
| [26] | To recognize IoT devices by analyzing the generated network traffic | RF, DT, Support Vector Machine (SVM), K-NN, Artificial Neural Network, and Gaussian Naïve Bayes | Size of first 10 packets sent/received and interval times | Experimental smart home network of 4 devices | No attack |
| [25] | To automatically identify white-listed device types | ML classifiers (e.g., SVM and K-NN) | Behavioural and flow-based features | 31 off-the-shelf IoT devices (27 device types) | Adversaries compromising devices on network |
| [27] | To identify device type without human intervention | Unsupervised learning method | 4 types of features: periodic flows, periodic accuracy, period duration, and period stability | A dataset comprising 33 typical commercial IoT devices | Spoofing device fingerprints |
| Our work | To identify the device using device profiling | ML methods (RF, SVM, and Logistic Regression (LR)) | Header information, sensor measurements, and statistical features | 2 types of sensors in an office | Physical and remote attacks (object emulation and botnet attack) |

| Type | Attribute | Header Sub-Attributes | Importance |
|---|---|---|---|
| Header (19) | Network layer (2) | `length` | 0.0429 |
| | | `time_to_live` | 0.0071 |
| | Transport layer (15) | `source_port` | 0.0095 |
| | | `stream_index` | 0.0034 |
| | | `length` | 0.0498 |
| | | `sequence_number` | 0.0288 |
| | | `next_sequence_number` | 0.0662 |
| | | `header_length` | 0.0069 |
| | | `window_size_value` | 0.011 |
| | | `window_size` | 0.0187 |
| | | `window_size_scalefactor` | 0.0061 |
| | | `options` | 0.0077 |
| | | `analysis_initial_rtt` | 0.0017 |
| | | `analysis_bytes_in_flight` | 0.0214 |
| | | `analysis_push_bytes_sent` | 0.0341 |
| | | `time_relative` | 0.0334 |
| | | `time_delta` | 0.0037 |
| | Application layer (1) | `content-length` | 0.0655 |
| | Packet length | | 0.0514 |
| Measurements (2) | Temperature | | 0.2409 |
| | Humidity | | 0.0597 |
| Statistics (2) | Flow duration | | 0.1997 |
| | Inter-arrival time | | 0.181 |
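The two statistical features in the table above, flow duration and inter-arrival time, could be derived from packet capture timestamps roughly as follows. This is a minimal sketch under stated assumptions: the function name `flow_statistics` is hypothetical, the timestamps are invented, and averaging the inter-arrival gaps over a flow is an assumption rather than the extraction procedure used in this work.

```python
def flow_statistics(timestamps):
    """Return (flow_duration, mean_inter_arrival_time) in seconds for one flow."""
    ts = sorted(timestamps)
    if len(ts) < 2:
        # A single packet has no duration and no inter-arrival gap
        return 0.0, 0.0
    # Gap between each pair of consecutive packets
    gaps = [later - earlier for earlier, later in zip(ts, ts[1:])]
    duration = ts[-1] - ts[0]
    return duration, sum(gaps) / len(gaps)


# Hypothetical capture times in seconds for four packets of one flow
duration, mean_iat = flow_statistics([0.0, 30.0, 60.0, 90.0])
print(duration, mean_iat)
```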

| Classifier | Accuracy | Recall | Precision | F_Score | Build Time |
|---|---|---|---|---|---|
| RF | 81.36% | 0.6666 | 0.5205 | 0.7989 | 12 |
| SVM | 86.20% | 0.6734 | 0.5574 | 0.8134 | 2.96 |
| LR | 81.33% | 0.8475 | 0.5888 | 0.8305 | 15.06 |

| Classifier | Accuracy | Recall | Precision | F_Score | Build Time |
|---|---|---|---|---|---|
| RF | 86.21% | 0.4611 | 0.4799 | 0.8232 | 13.56 |
| SVM | 88.47% | 0.5792 | 0.6949 | 0.8696 | 2.91 |
| LR | 85.41% | 0.9247 | 0.6704 | 0.8633 | 17.75 |

| Classifier | Accuracy | Recall | Precision | F_Score | Build Time |
|---|---|---|---|---|---|
| RF | 85.65% | 0.4958 | 0.4236 | 0.8334 | 12.64 |
| SVM | 89.42% | 0.7932 | 0.6664 | 0.8935 | 4.20 |
| LR | 91.79% | 0.5909 | 0.7595 | 0.8518 | 3.88 |

| Classifier | Accuracy | Recall | Precision | F_Score | Build Time |
|---|---|---|---|---|---|
| RF | 81.82% | 0.5101 | 0.3975 | 0.7871 | 12.20 |
| SVM | 81.47% | 0.5984 | 0.4803 | 0.808 | 3.13 |
| LR | 78.45% | 0.7540 | 0.5483 | 0.7959 | 16.38 |

| Classifier | Accuracy | Recall | Precision | F_Score | Build Time |
|---|---|---|---|---|---|
| RF | 89.64% | 0.5885 | 0.6786 | 0.8777 | 11.93 |
| SVM | 92.62% | 0.6461 | 0.7911 | 0.9118 | 3.4 |
| LR | 89.66% | 0.5263 | 0.5766 | 0.8609 | 2.46 |

| Classifier | Accuracy | Recall | Precision | F_Score | Build Time |
|---|---|---|---|---|---|
| RF | 80.35% | 0.3803 | 0.2884 | 0.7665 | 12.88 |
| SVM | 82.57% | 0.4482 | 0.4106 | 0.7958 | 5.95 |
| LR | 80.14% | 0.6440 | 0.4679 | 0.7922 | 19.75 |

| Classifier | Accuracy | Recall | Precision | F_Score | Build Time |
|---|---|---|---|---|---|
| RF | 71.94% | 0.2939 | 0.1719 | 0.6929 | 11.55 |
| SVM | 72.55% | 0.2915 | 0.2288 | 0.6894 | 2.2 |
| LR | 75.99% | 0.0448 | 0.05 | 0.7005 | 2.58 |

| Feature Set | Scenario | Inter-Arrival Time (s) | Accuracy | Recall | Precision | F_Score |
|---|---|---|---|---|---|---|
| Measurement-Only | Scenario 1 | 30 | 80% | 0.4 | 0.2556 | 0.815 |
| | | 50 | 76.19% | 0.4 | 0.24 | 0.7648 |
| | | 70 | 74.12% | 0.2 | 0.2 | 0.7592 |
| | | 100 | 74.44% | 0.2 | 0.0667 | 0.7029 |
| | Scenario 2 | 30 | 79.09% | 0.2267 | 0.26 | 0.8194 |
| | | 50 | 77.65% | 0.2 | 0.1333 | 0.7892 |
| | | 70 | 75.71% | 0.1 | 0.2 | 0.7675 |
| | | 100 | 75.71% | 0.04 | 0.2 | 0.782 |
| Measurement-Header | Scenario 1 | 30 | 93.91% | 0.4 | 0.37 | 0.9131 |
| | | 50 | 89.52% | 0.36 | 0.35 | 0.8742 |
| | | 70 | 88.24% | 0.18 | 0.2 | 0.855 |
| | | 100 | 0.9% | 0.4 | 0.36 | 0.8804 |
| | Scenario 2 | 30 | 90.91% | 0.16 | 0.2 | 0.8884 |
| | | 50 | 88.24% | 0.2 | 0.2 | 0.862 |
| | | 70 | 81.43% | 0.15 | 0.2 | 0.8107 |
| | | 100 | 84.29% | 0.2 | 0.2 | 0.8331 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yousefnezhad, N.; Malhi, A.; Främling, K. Automated IoT Device Identification Based on Full Packet Information Using Real-Time Network Traffic. Sensors 2021, 21, 2660. https://doi.org/10.3390/s21082660
