3D Sensors for Sewer Inspection: A Quantitative Review and Analysis

Abstract

1. Introduction

- We simulate the sewer environment using two setups: a clean laboratory environment with reflective plastic pipes and an outdoor above-ground setup with four wells connected by pipes of different diameters and topologies.

- We use the laboratory and above-ground setups to systematically test passive stereo, active stereo, and time-of-flight 3D sensing technologies under a range of illuminance levels.

- We use the laboratory setup to assess how active stereo and time-of-flight sensors are affected by different water levels in the sewer pipe.

- We systematically evaluate the reconstruction performance of the 3D sensors from the experiments and compare it with reference models of the sewer pipes.

2. Related Work

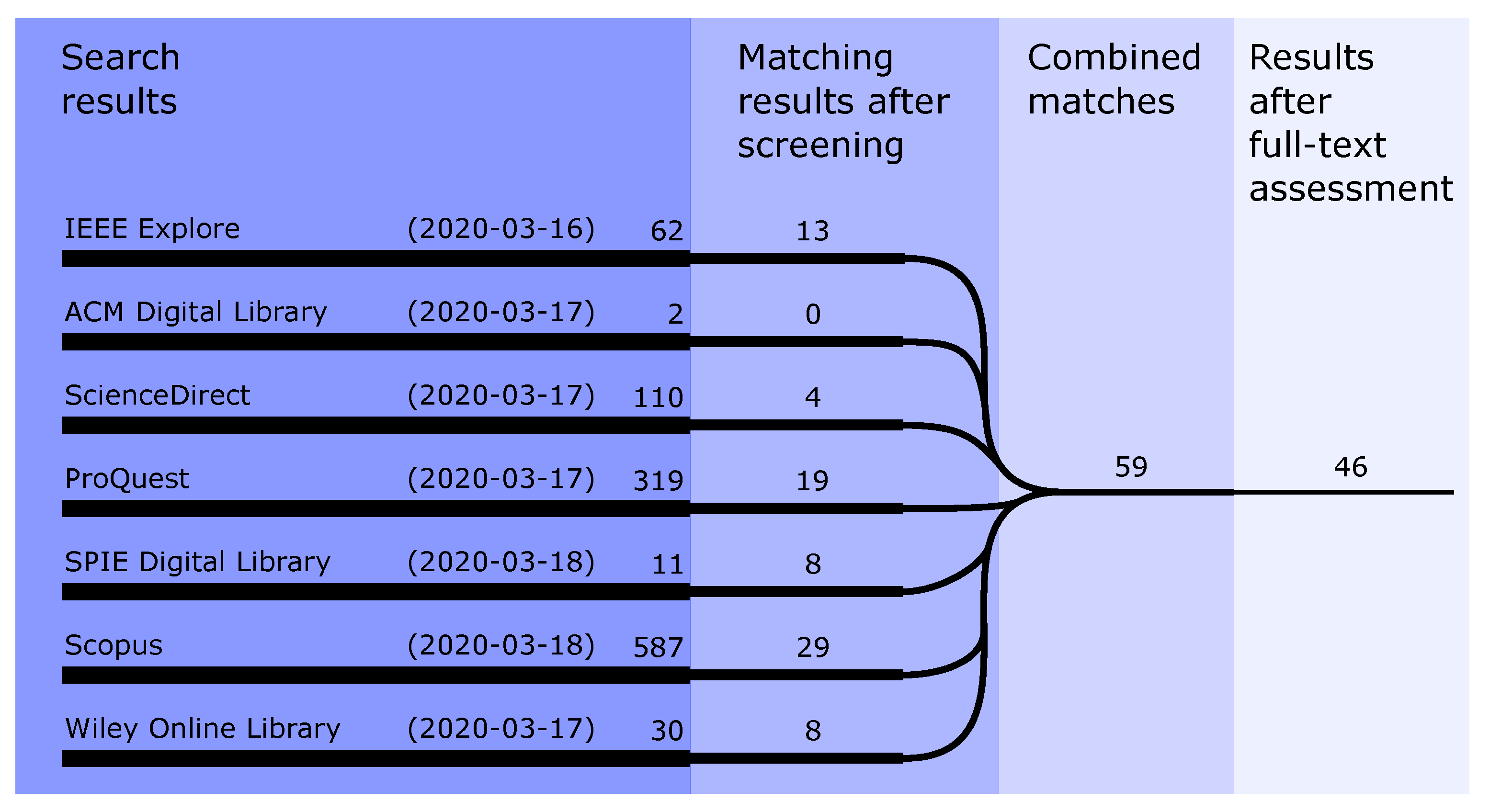

- Sewer OR pipe;

- 3D;

- Inspection OR reconstruction OR assessment.

2.1. Laser-Based Sensing

2.2. Omnidirectional Vision

2.3. Stereo Matching

2.4. Other Sensing Approaches

3. Depth Sensors

4. Materials and Methods

- Different illuminance levels in the pipe;

- Different water levels in the pipe.

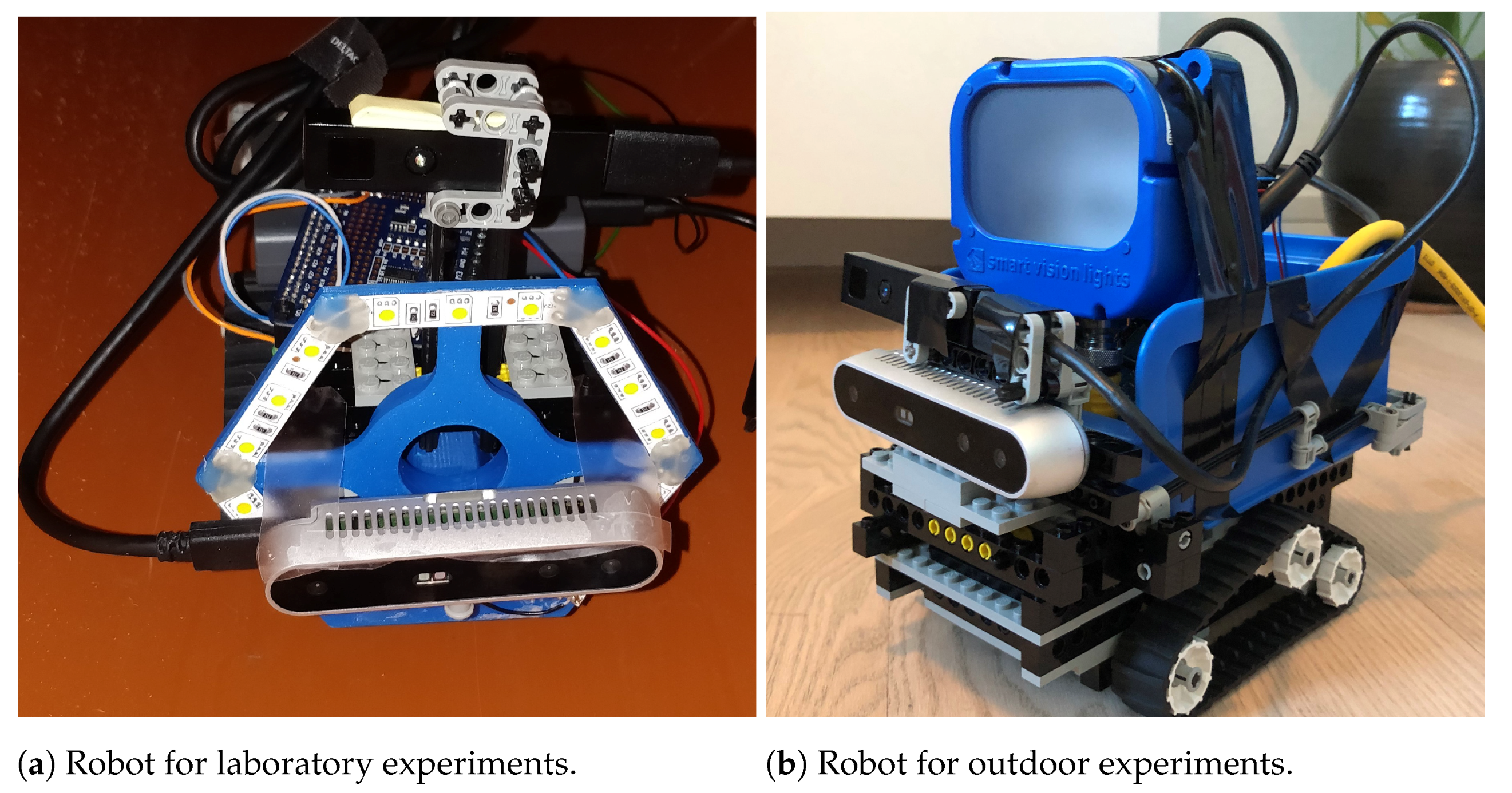

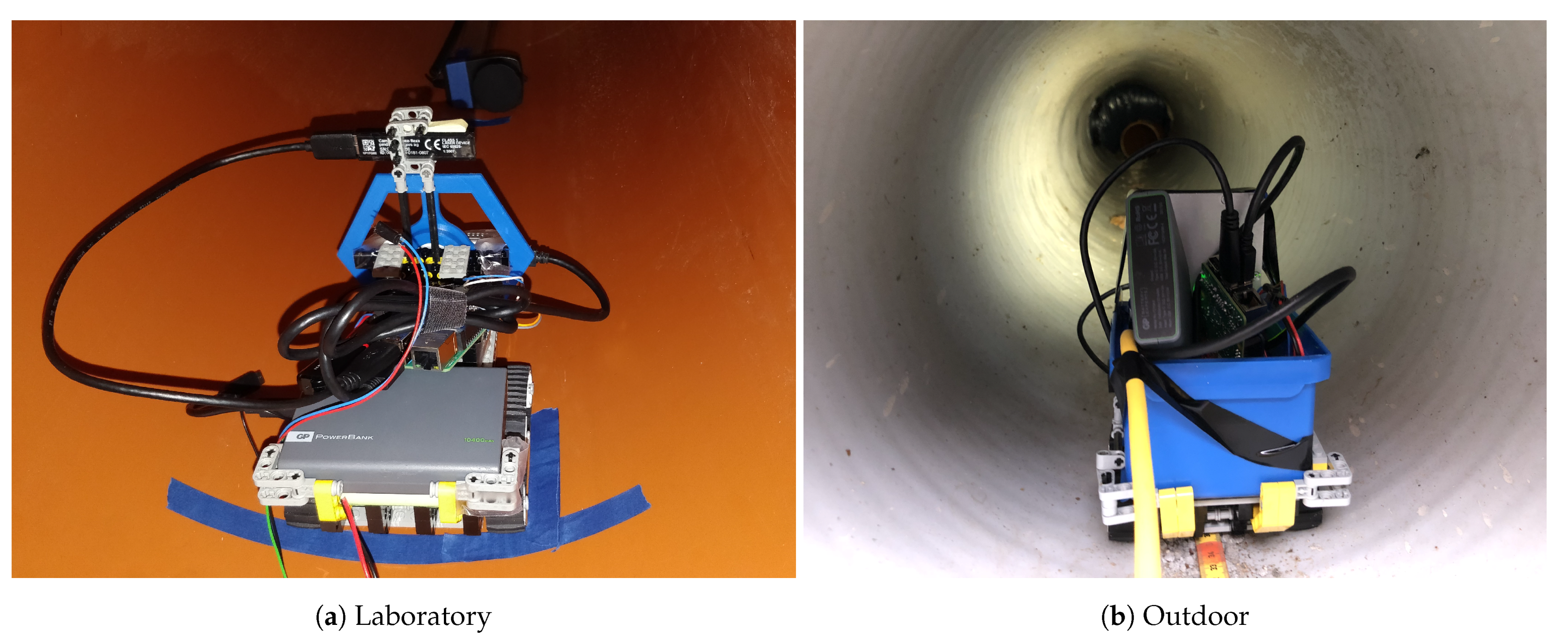

4.1. Robotic Setup

4.2. Assessing the Point Clouds

- Point-to-Point

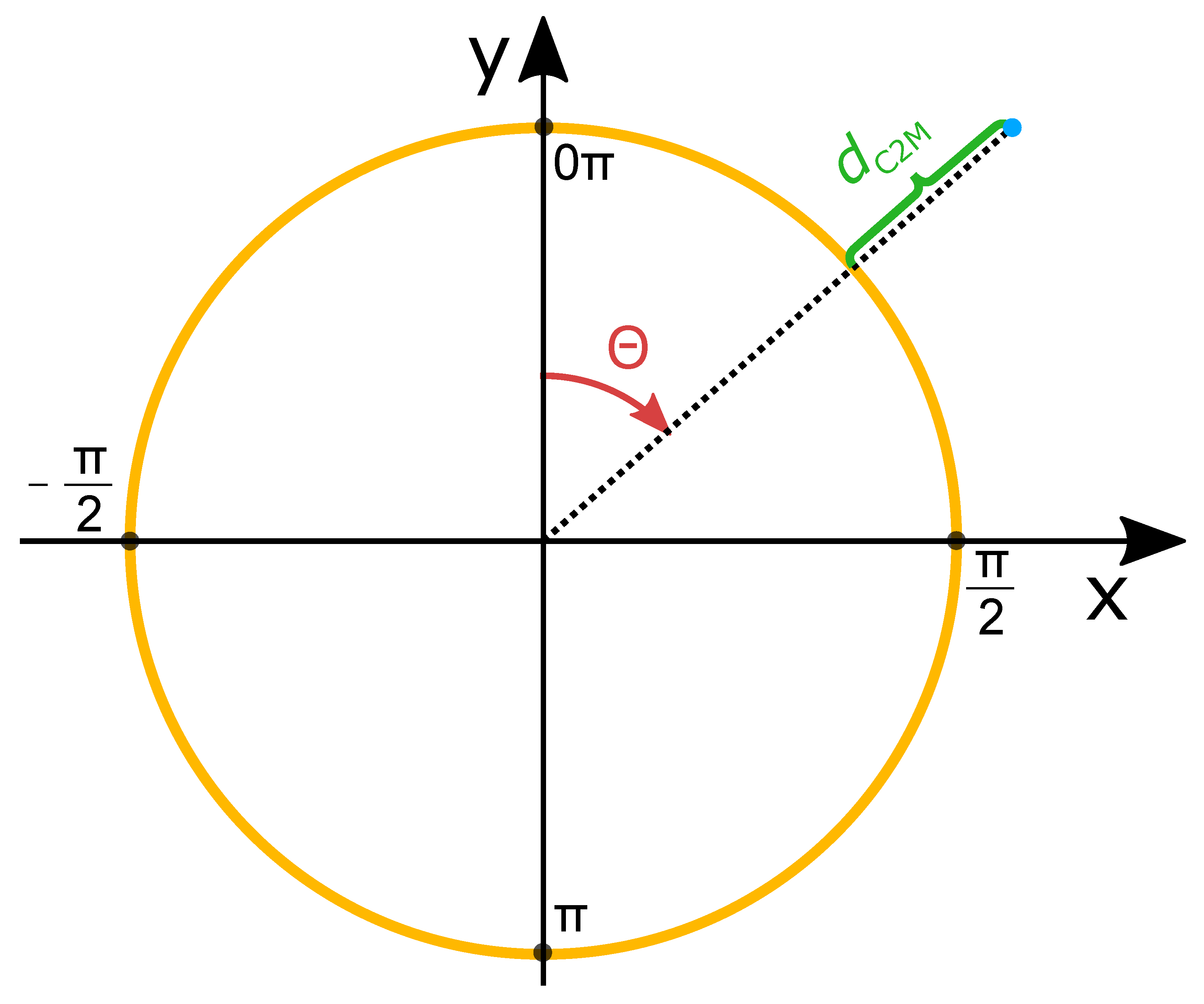

- The distance to the nearest neighbor is found by measuring the distances between a given point and every point in the reference point cloud and keeping the smallest. This is illustrated in Figure 4a, where the closest point in the reference point cloud is found and the distance d is the matching error for the point pair marked in green.

- Point-to-Plane

- The method estimates the local surface of the reference point cloud. For each matched point in the reference cloud, the surface is computed from the neighboring points around it. In Figure 4b, the neighborhood is illustrated by the gray oval, and the estimated surface is represented by the dotted red line. The point-to-point vector d is projected onto the estimated surface; removing this projection from d leaves an error vector that is normal to the estimated surface in the reference cloud [73].

- Point-to-Mesh

- Distances between a point cloud and a reference mesh are defined either as the orthogonal distance from a point in the measured cloud to the triangular plane in the reference mesh or, if the orthogonal projection of the point falls outside the triangle, as the distance to the nearest edge [74]. The two possibilities are illustrated in Figure 4c.
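As a minimal sketch of the three distance definitions above, the following NumPy code computes each of them for small inputs. It is not the implementation used in the experiments: the brute-force neighbor search, the neighborhood size `k`, the SVD-based plane fit, and all function names are assumptions made for illustration.

```python
import numpy as np


def point_to_point(points, reference):
    """Distance from each point to its nearest neighbor in the reference cloud."""
    # Brute-force pairwise distances, shape (N, M); only suitable for small clouds.
    diff = points[:, None, :] - reference[None, :, :]
    dists = np.linalg.norm(diff, axis=2)
    nearest = dists.argmin(axis=1)
    return dists[np.arange(len(points)), nearest], nearest


def point_to_plane(points, reference, k=8):
    """Distance along the surface normal estimated around the matched reference point."""
    _, nearest = point_to_point(points, reference)
    out = np.empty(len(points))
    for i, j in enumerate(nearest):
        # Neighborhood: the k reference points closest to the matched point.
        d = np.linalg.norm(reference - reference[j], axis=1)
        nbrs = reference[np.argsort(d)[:k]]
        # The plane normal is the direction of least variance in the neighborhood.
        _, _, vt = np.linalg.svd(nbrs - nbrs.mean(axis=0))
        normal = vt[-1]
        # Keep only the component of the point-to-point vector along the normal.
        out[i] = abs(np.dot(points[i] - reference[j], normal))
    return out


def _segment_distance(p, a, b):
    """Distance from p to the line segment between a and b."""
    ab = b - a
    t = np.clip(np.dot(p - a, ab) / np.dot(ab, ab), 0.0, 1.0)
    return np.linalg.norm(p - (a + t * ab))


def point_to_triangle(p, a, b, c):
    """Orthogonal distance if the projection is inside the triangle, else nearest edge."""
    n = np.cross(b - a, c - a)
    n = n / np.linalg.norm(n)
    signed = np.dot(p - a, n)
    proj = p - signed * n
    # Barycentric coordinates of the projected point.
    v0, v1, v2 = b - a, c - a, proj - a
    d00, d01, d11 = v0 @ v0, v0 @ v1, v1 @ v1
    d20, d21 = v2 @ v0, v2 @ v1
    denom = d00 * d11 - d01 * d01
    v = (d11 * d20 - d01 * d21) / denom
    w = (d00 * d21 - d01 * d20) / denom
    if v >= 0 and w >= 0 and v + w <= 1:
        return abs(signed)
    return min(
        _segment_distance(p, a, b),
        _segment_distance(p, b, c),
        _segment_distance(p, c, a),
    )
```

A point-to-mesh distance for a whole cloud would take the minimum of `point_to_triangle` over the candidate triangles for each point; practical tools use spatial indexing rather than the exhaustive search shown here.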

- A point cloud is constructed from each depth image by back-projecting the depth points by the use of the intrinsic parameters from each camera.

- A 3D model of the sewer pipe is loaded into the CloudCompare software toolbox [78]. The 3D model is made such that it matches the interior diameter of the sewer pipe.

- The point cloud of each sensor is loaded into CloudCompare and cropped if necessary.

- The loaded point cloud from the sensors are likely displaced and rotated with respect to the 3D model which means that they cannot be directly compared. There are two methods for aligning the point cloud to the 3D model:

- (a)

- Directly measuring the rotation and displacement of the depth sensor with respect to the pipe.

- (b)

- Estimating the transformation by proxy methods that works directly on the point cloud.

- For these experiments, method (b) is chosen as it was not possible to directly measure the position of the sensor with the required accuracy. A RANSAC-based method [79] available as a plug-in to CloudCompare is used to estimate cylinders from the point cloud of each sensor. If the reconstructed point cloud is noisy, the RANSAC estimation might produce several cylinders. The cylinder that resembles the physical pipe is selected manually. The affine transformation that relates the cylinder to the origin of the coordinate frame is obtained.

- The inverse of the transformation obtained from step 5 is used to align the point cloud to the 3D model, assuming that the 3D model is centered at , that the circular cross-section of the cylindrical model is parallel to the XY-plane, and that the height of the cylinder is expanding along the z-axis.

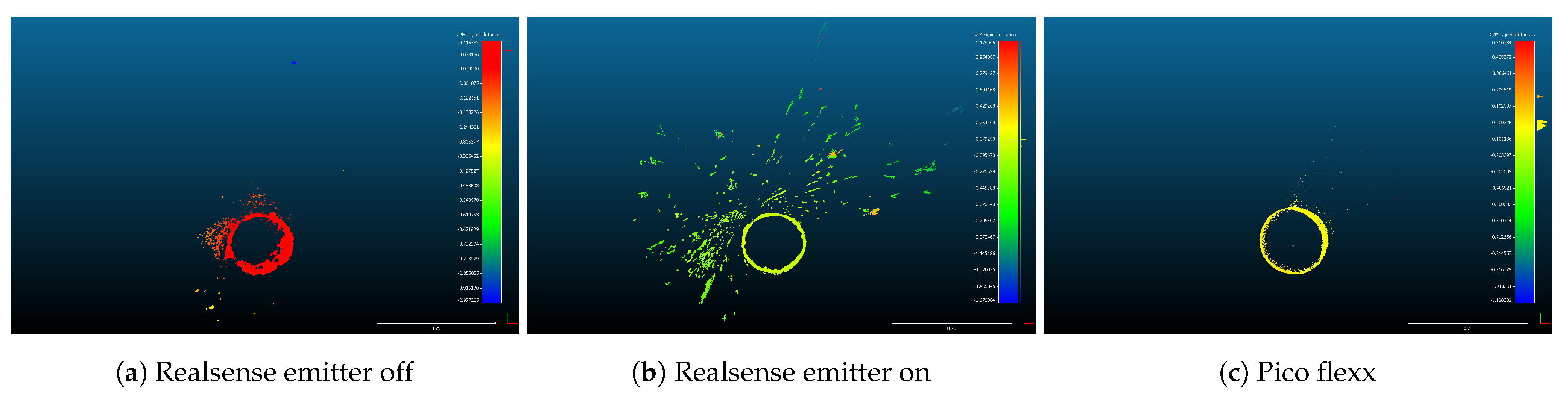

- The distance between the point cloud from the sensor and the 3D model is computed by using the “Cloud to mesh distance” tool from CloudCompare. The distance is stored as a scalar field in a new point cloud. Sample distance measurements from the Realsense and pico flexx depth sensors are shown in Figure 5.
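The processing chain above, from back-projection through alignment to distance computation, can be sketched as follows. This is a simplified stand-in and not the CloudCompare workflow itself: it assumes a pinhole camera model with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, takes the fitted cylinder pose as a hypothetical 4×4 matrix `cylinder_pose` (such as a RANSAC cylinder fit might provide), and replaces the cloud-to-mesh tool with the analytic radial distance to an ideal cylinder whose axis is the z-axis.

```python
import numpy as np


def back_project(depth, fx, fy, cx, cy):
    """Back-project a depth image (in meters) into an (N, 3) point cloud."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    valid = depth > 0  # assumption: zero depth marks a missing measurement
    z = depth[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.column_stack([x, y, z])


def align_to_model(points, cylinder_pose):
    """Map points into the model frame with the inverse of the fitted cylinder pose."""
    homogeneous = np.column_stack([points, np.ones(len(points))])
    aligned = homogeneous @ np.linalg.inv(cylinder_pose).T
    return aligned[:, :3]


def distance_to_cylinder(points, radius):
    """Signed radial distance to an ideal cylinder around the z-axis (positive = outside)."""
    return np.hypot(points[:, 0], points[:, 1]) - radius
```

For a Ø400 mm pipe, `radius` would be roughly 0.2 m; the exact interior radius has to be taken from the reference model. Unlike a mesh comparison, the analytic distance ignores the finite length of the pipe, so it is only meaningful for points that lie within the modeled section.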

4.3. Illumination Tests

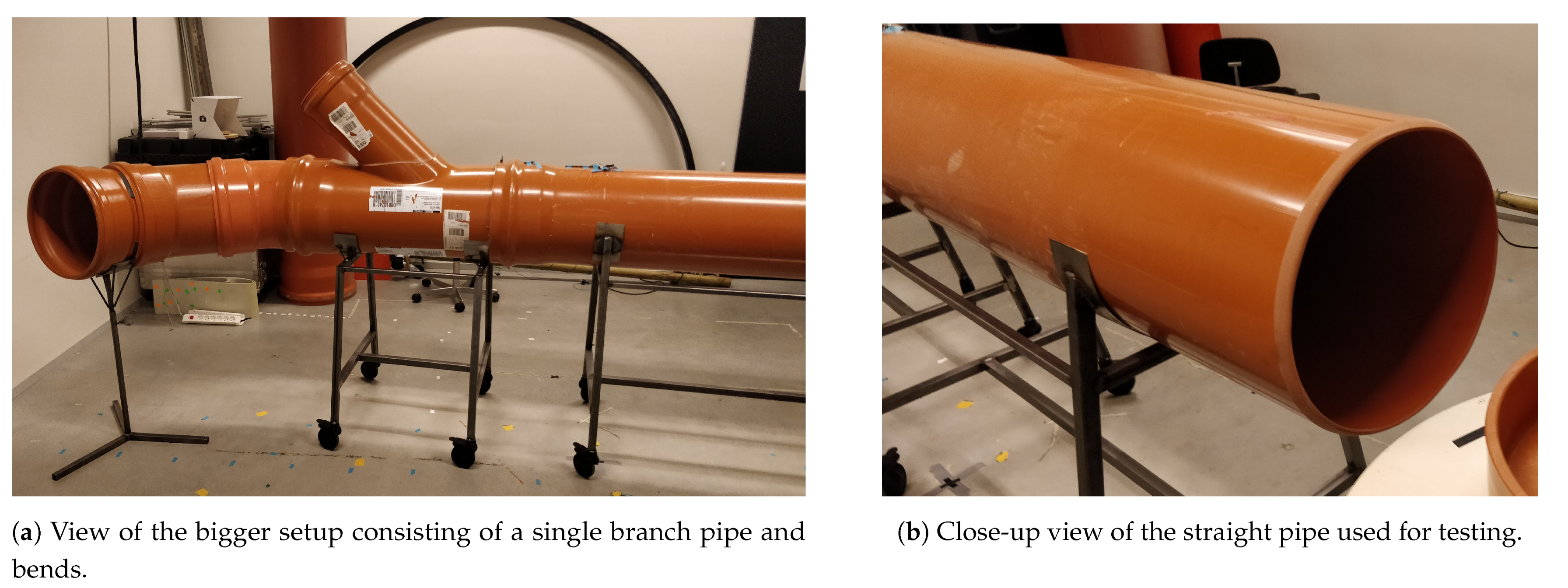

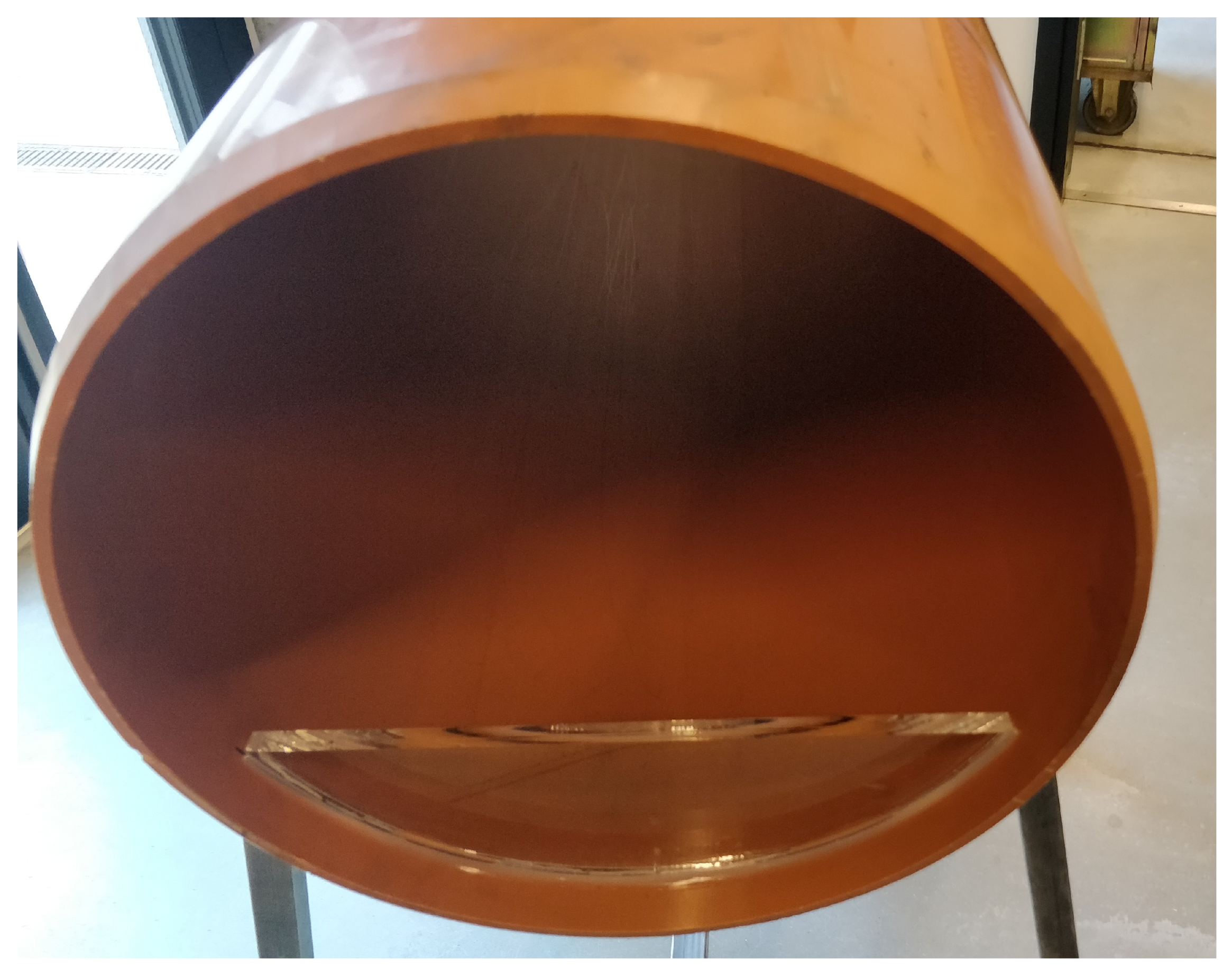

- A windowless university laboratory that emulates the complete darkness of certain sections of a sewer pipe. A standard Ø400 mm PVC pipe with a length of 2 m is mounted on a metal frame that enables easy access for experimentation. The setup is shown in Figure 6.

- An above-ground outdoor test setup consisting of four wells connected by Ø200 mm and Ø400 mm PVC pipes. Each well may be covered by a wooden top to prevent direct sunlight from entering the pipe. The pipes are laid out in different configurations to simulate straight and curved pipes, branch pipes, and transitions between pipes of different materials and diameters. The pipes are covered with sand to block sunlight from entering the pipes and to enable rapid manipulation of the pipe configuration. The above-ground setup is pictured in Figure 7.

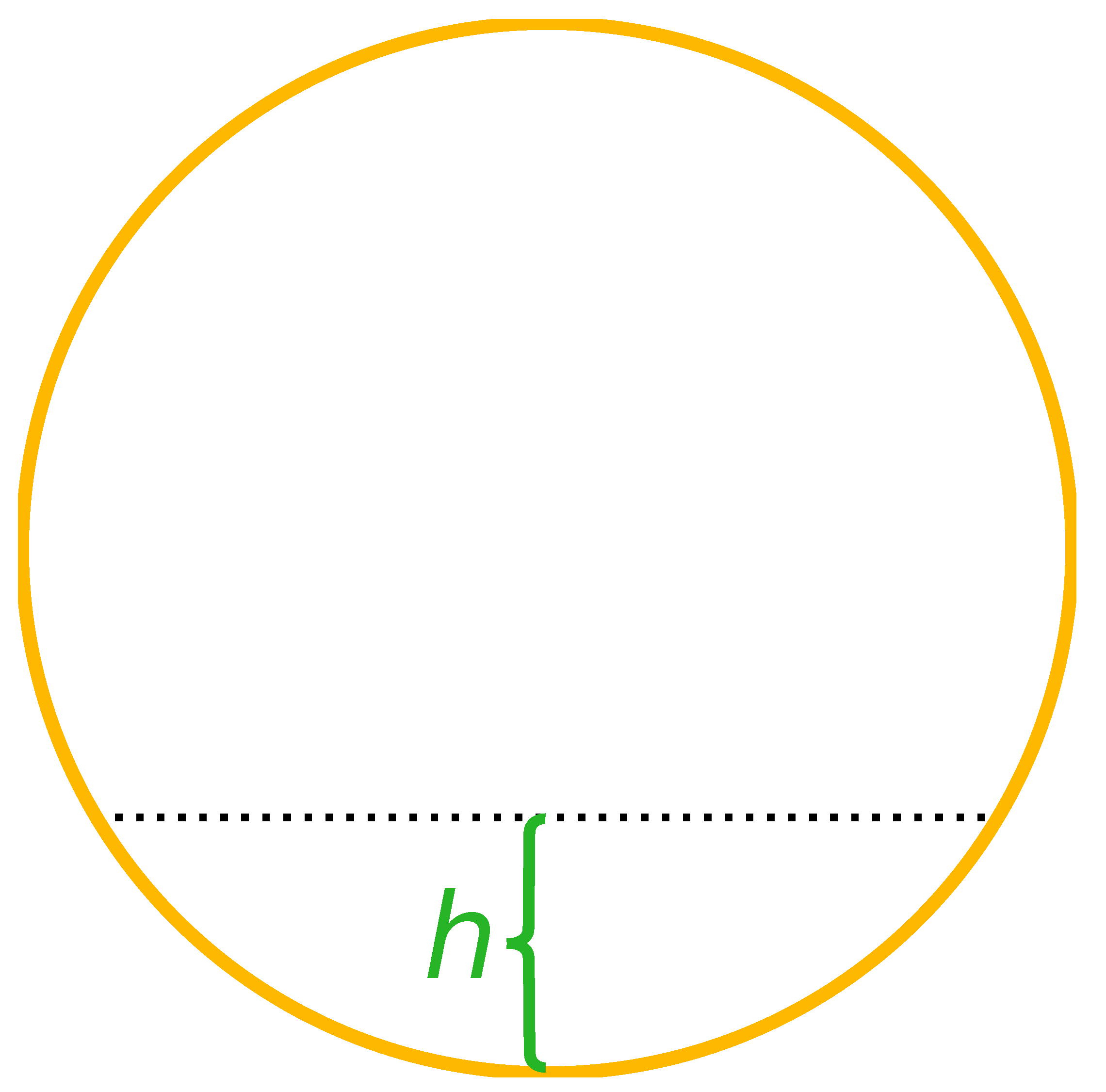

4.4. Water Level Tests

5. Experimental Results

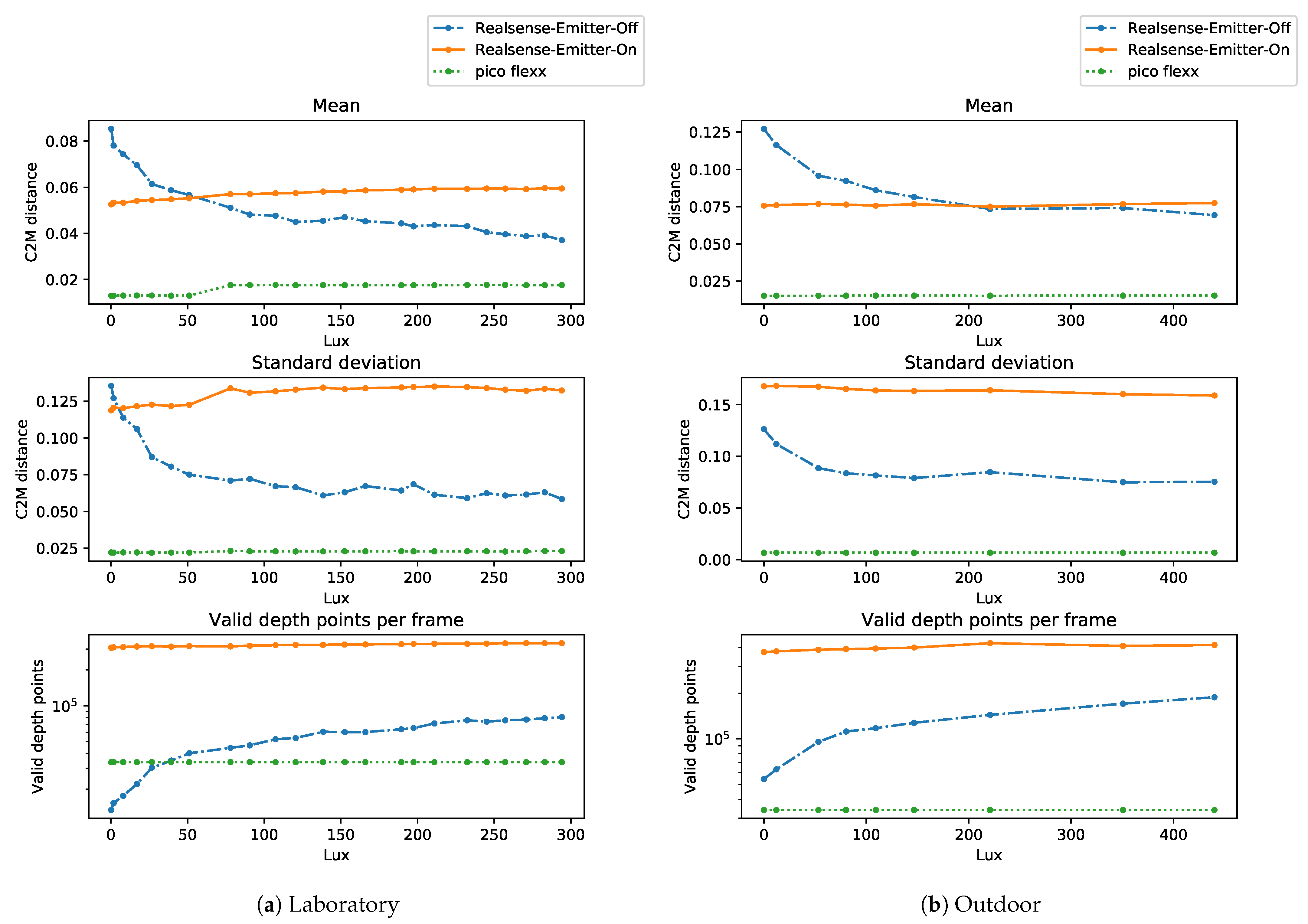

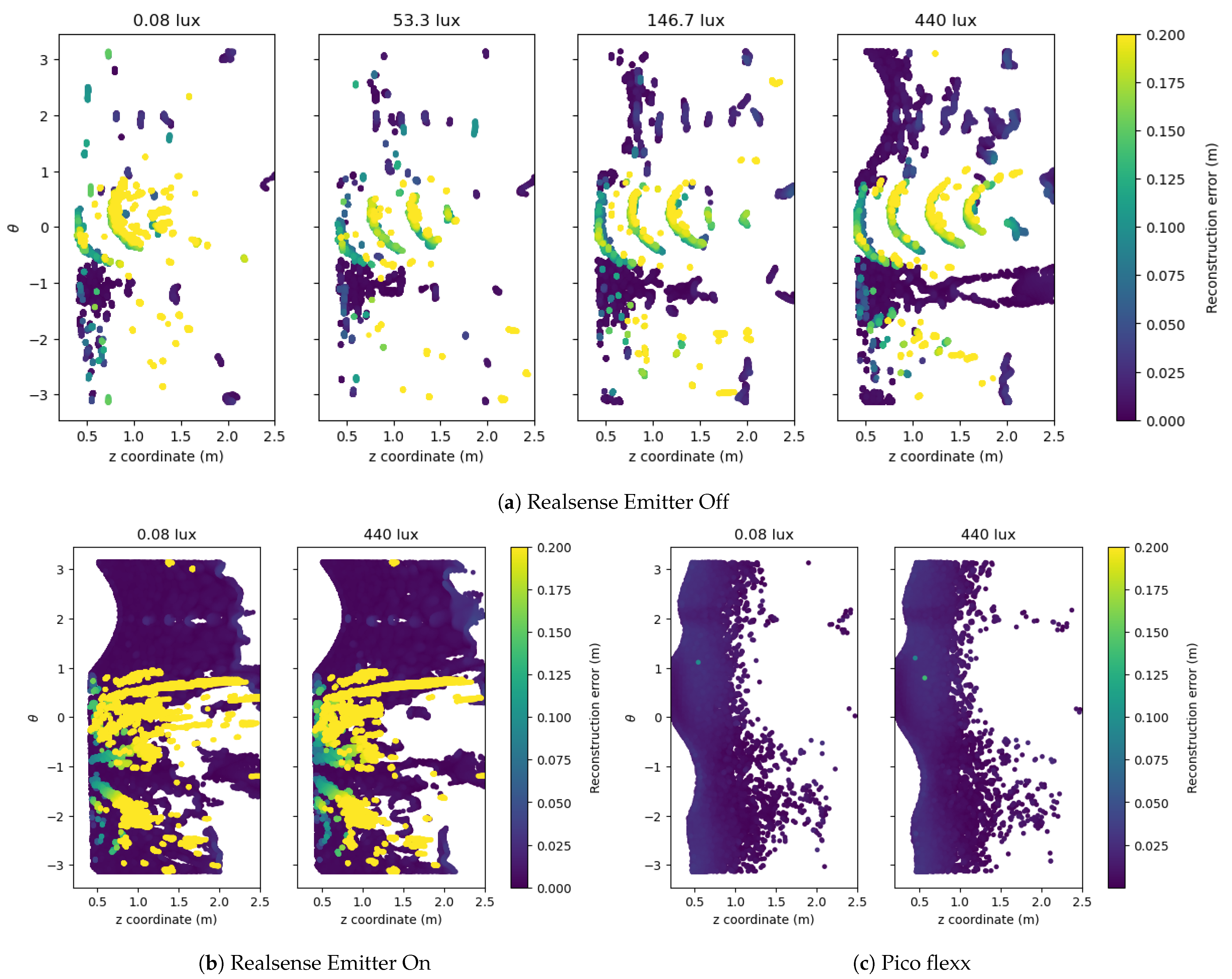

5.1. Illumination Tests

5.1.1. Reconstruction of the Cylindrical Pipe

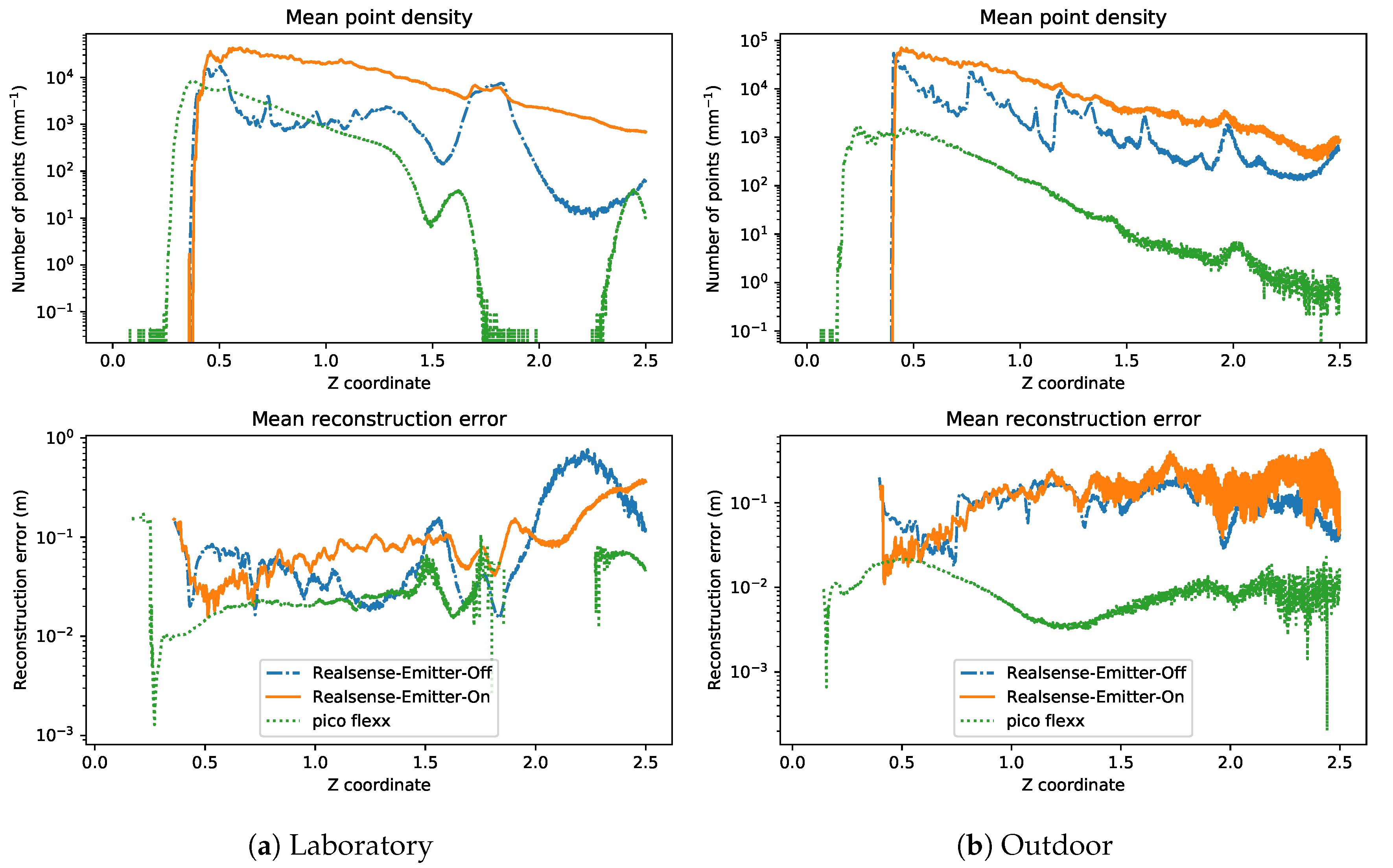

5.1.2. Reconstruction Performance Along the z-Axis

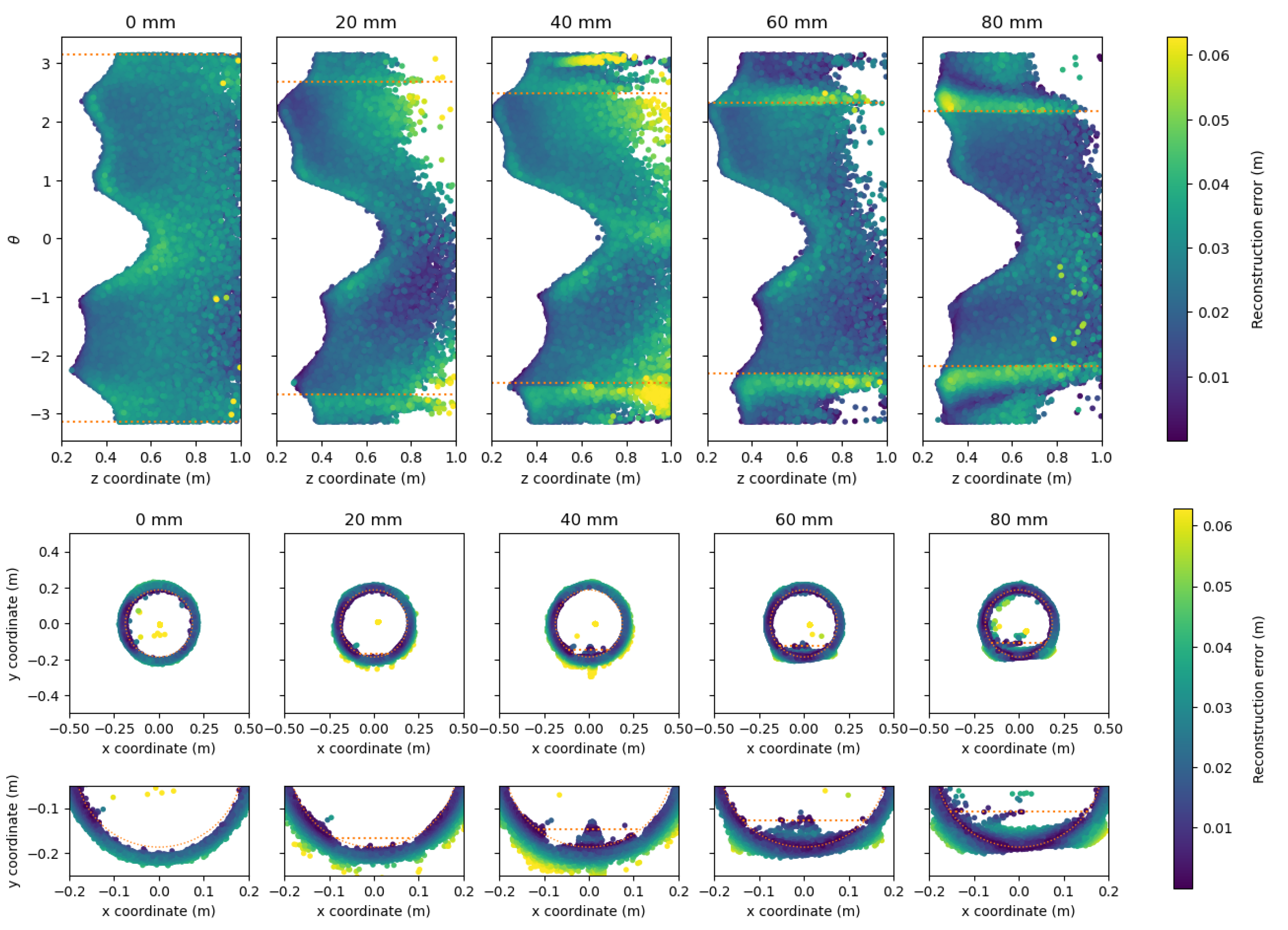

5.2. Water Level Tests

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| C2M | Cloud-to-mesh |
| CCD | Charge-Coupled Device |
| CCTV | Closed-Circuit Television |
| CMOS | Complementary metal–oxide–semiconductor |
| GNSS | Global Navigation Satellite System |
| ICP | Iterative Closest Point |
| IMU | Inertial measurement unit |
| SfM | Structure-from-motion |
| SLAM | Simultaneous Localization and Mapping |
| TOF | Time-of-flight |

References

1. American Society of Civil Engineers (ASCE). 2021 Infrastructure Report Card – Wastewater. 2021. Available online: https://infrastructurereportcard.org/wp-content/uploads/2020/12/Wastewater-2021.pdf (accessed on 5 April 2021).

2. Statistisches Bundesamt. Öffentliche Wasserversorgung und öffentliche Abwasserentsorgung – Strukturdaten zur Wasserwirtschaft 2016; Technical Report; Fachserie 19 Reihe 2.1.3.; Statistisches Bundesamt: Wiesbaden, Germany, 2018.

3. Berger, C.; Falk, C.; Hetzel, F.; Pinnekamp, J.; Ruppelt, J.; Schleiffer, P.; Schmitt, J. Zustand der Kanalisation in Deutschland – Ergebnisse der DWA-Umfrage 2020. Korrespondenz Abwasser Abfall 2020, 67, 939–953.

4. IBAK. Available online: http://www.ibak.de (accessed on 5 April 2021).

5. Reverte, C.F.; Thayer, S.M.; Whittaker, W.; Close, E.C.; Slifko, A.; Hudson, E.; Vallapuzha, S. Autonomous Inspector Mobile Platform. U.S. Patent 8,024,066, 20 September 2011.

6. Haurum, J.B.; Moeslund, T.B. A Survey on image-based automation of CCTV and SSET sewer inspections. Automat. Construct. 2020, 111, 103061.

7. Kirchner, F.; Hertzberg, J. A prototype study of an autonomous robot platform for sewerage system maintenance. Autonom. Robot. 1997, 4, 319–331.

8. Kolesnik, M.; Streich, H. Visual orientation and motion control of MAKRO-adaptation to the sewer environment. In Proceedings of the Seventh International Conference on the Simulation of Adaptive Behavior (SAB’02), Edinburgh, UK, 4–9 August 2002; pp. 62–69.

9. Nassiraei, A.A.; Kawamura, Y.; Ahrary, A.; Mikuriya, Y.; Ishii, K. Concept and design of a fully autonomous sewer pipe inspection mobile robot “kantaro”. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Rome, Italy, 10–14 April 2007; pp. 136–143.

10. Chataigner, F.; Cavestany, P.; Soler, M.; Rizzo, C.; Gonzalez, J.P.; Bosch, C.; Gibert, J.; Torrente, A.; Gomez, R.; Serrano, D. ARSI: An Aerial Robot for Sewer Inspection. In Advances in Robotics Research: From Lab to Market; Springer: Berlin/Heidelberg, Germany, 2020; pp. 249–274.

11. Alejo, D.; Mier, G.; Marques, C.; Caballero, F.; Merino, L.; Alvito, P. A ground robot solution for semi-autonomous inspection of visitable sewers. In ECHORD++: Innovation from LAB to MARKET; Springer: Berlin/Heidelberg, Germany, 2019.

12. Tur, J.M.M.; Garthwaite, W. Robotic devices for water main in-pipe inspection: A survey. J. Field Robot. 2010, 4, 491–508.

13. Rome, E.; Hertzberg, J.; Kirchner, F.; Licht, U.; Christaller, T. Towards autonomous sewer robots: The MAKRO project. Urban Water 1999, 1, 57–70.

14. Totani, H.; Goto, H.; Ikeda, M.; Yada, T. Miniature 3D optical scanning sensor for pipe-inspection robot. In Microrobotics and Micromechanical Systems; International Society for Optics and Photonics: Bellingham, WA, USA, 1995; Volume 2593, pp. 21–29.

15. Kuntze, H.B.; Haffner, H. Experiences with the development of a robot for smart multisensoric pipe inspection. In Proceedings of the 1998 IEEE International Conference on Robotics and Automation (Cat. No.98CH36146), Leuven, Belgium, 20 May 1998; Volume 2, pp. 1773–1778.

16. Kampfer, W.; Bartzke, R.; Ziehl, W. Flexible mobile robot system for smart optical pipe inspection. In Nondestructive Evaluation of Utilities and Pipelines II; International Society for Optics and Photonics: Bellingham, WA, USA, 1998; Volume 3398, pp. 75–83.

17. Lawson, S.W.; Pretlove, J.R. Augmented reality and stereo vision for remote scene characterization. In Telemanipulator and Telepresence Technologies VI; International Society for Optics and Photonics: Bellingham, WA, USA, 1999; Volume 3840, pp. 133–143.

18. Kolesnik, M.; Baratoff, G. 3D interpretation of sewer circular structures. In Proceedings of the 2000 ICRA, Millennium Conference, IEEE International Conference on Robotics and Automation, Symposia Proceedings (Cat. No. 00CH37065), San Francisco, CA, USA, 24–28 April 2000; Volume 2, pp. 1453–1458.

19. Kolesnik, M.; Baratoff, G. Online distance recovery for a sewer inspection robot. In Proceedings of the 15th International Conference on Pattern Recognition, ICPR-2000, Barcelona, Spain, 3–7 September 2000; Volume 1, pp. 504–507.

20. Lawson, S.W.; Pretlove, J.R.; Wheeler, A.C.; Parker, G.A. Augmented reality as a tool to aid the telerobotic exploration and characterization of remote environments. Pres. Teleoperat. Virtual Environ. 2002, 11, 352–367.

21. Nüchter, A.; Surmann, H.; Lingemann, K.; Hertzberg, J. Consistent 3D model construction with autonomous mobile robots. In Proceedings of the Annual Conference on Artificial Intelligence, Hamburg, Germany, 15–18 September 2003; Springer: Berlin/Heidelberg, Germany, 2003; pp. 550–564.

22. Yamada, H.; Togasaki, T.; Kimura, M.; Sudo, H. High-density 3D packaging sidewall interconnection technology for CCD micro-camera visual inspection system. Electron. Commun. Jpn. 2003, 86, 67–75.

23. Orghidan, R.; Salvi, J.; Mouaddib, E.M. Accuracy estimation of a new omnidirectional 3D vision sensor. In Proceedings of the IEEE International Conference on Image Processing 2005, Genova, Italy, 14 September 2005.

24. Ma, Z.; Hu, Y.; Huang, J.; Zhang, X.; Wang, Y.; Chen, M.; Zhu, Q. A novel design of in pipe robot for inner surface inspection of large size pipes. Mech. Based Des. Struct. Mach. 2007, 35, 447–465.

25. Kannala, J.; Brandt, S.S.; Heikkilä, J. Measuring and modelling sewer pipes from video. Mach. Vis. Appl. 2008, 19, 73–83.

26. Wakayama, T.; Yoshizawa, T. Simultaneous measurement of internal and external profiles using a ring beam device. In Two-and Three-Dimensional Methods for Inspection and Metrology VI; International Society for Optics and Photonics: Bellingham, WA, USA, 2008; Volume 7066, p. 70660D.

27. Chaiyasarn, K.; Kim, T.K.; Viola, F.; Cipolla, R.; Soga, K. Image mosaicing via quadric surface estimation with priors for tunnel inspection. In Proceedings of the 2009 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 537–540.

28. Esquivel, S.; Koch, R.; Rehse, H. Reconstruction of sewer shaft profiles from fisheye-lens camera images. In Proceedings of the Joint Pattern Recognition Symposium, Jena, Germany, 9–11 September 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 332–341.

29. Esquivel, S.; Koch, R.; Rehse, H. Time budget evaluation for image-based reconstruction of sewer shafts. In Real-Time Image and Video Processing 2010; International Society for Optics and Photonics: Bellingham, WA, USA, 2010; Volume 7724, p. 77240M.

30. Saenz, J.; Elkmann, N.; Walter, C.; Schulenburg, E.; Althoff, H. Treading new water with a fully automatic sewer inspection system. In Proceedings of the ISR 2010 (41st International Symposium on Robotics) and ROBOTIK 2010 (6th German Conference on Robotics), Munich, Germany, 7–9 June 2010; pp. 1–6.

31. Hansen, P.; Alismail, H.; Browning, B.; Rander, P. Stereo visual odometry for pipe mapping. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, IEEE, San Francisco, CA, USA, 25–30 September 2011; pp. 4020–4025.

32. Zhang, Y.; Hartley, R.; Mashford, J.; Wang, L.; Burn, S. Pipeline reconstruction from fisheye images. J. WSCG 2011, 19, 49–57.

33. Buschinelli, P.D.; Melo, J.R.C.; Albertazzi, A., Jr.; Santos, J.M.; Camerini, C.S. Optical profilometer using laser based conical triangulation for inspection of inner geometry of corroded pipes in cylindrical coordinates. In Optical Measurement Systems for Industrial Inspection VIII; International Society for Optics and Photonics: Bellingham, WA, USA, 2013; Volume 8788, p. 87881H.

34. Ying, W.; Cuiyun, J.; Yanhui, Z. Pipe defect detection and reconstruction based on 3D points acquired by the circular structured light vision. Adv. Mech. Eng. 2013, 5, 670487.

35. Kawasue, K.; Komatsu, T. Shape measurement of a sewer pipe using a mobile robot with computer vision. Int. J. Adv. Robot. Syst. 2013, 10, 52.

36. Larson, J.; Okorn, B.; Pastore, T.; Hooper, D.; Edwards, J. Counter tunnel exploration, mapping, and localization with an unmanned ground vehicle. In Unmanned Systems Technology XVI; International Society for Optics and Photonics: Bellingham, WA, USA, 2014; Volume 9084, p. 90840Q.

37. Arciniegas, J.R.; González, A.L.; Quintero, L.; Contreras, C.R.; Meneses, J.E. Three-dimensional shape measurement system applied to superficial inspection of non-metallic pipes for the hydrocarbons transport. In Dimensional Optical Metrology and Inspection for Practical Applications III; International Society for Optics and Photonics: Bellingham, WA, USA, 2014; Volume 9110, p. 91100U.

38. Dobie, G.; Summan, R.; MacLeod, C.; Pierce, G.; Galbraith, W. An automated miniature robotic vehicle inspection system. In AIP Conference Proceedings; American Institute of Physics: College Park, MD, USA, 2014; Volume 1581, pp. 1881–1888.

39. Aghdam, H.H.; Kadir, H.A.; Arshad, M.R.; Zaman, M. Localizing pipe inspection robot using visual odometry. In Proceedings of the 2014 IEEE International Conference on Control System, Computing and Engineering (ICCSCE 2014), IEEE, Penang, Malaysia, 28–30 November 2014; pp. 245–250.

40. Skinner, B.; Vidal-Calleja, T.; Miro, J.V.; De Bruijn, F.; Falque, R. 3D point cloud upsampling for accurate reconstruction of dense 2.5 D thickness maps. In Proceedings of the Australasian Conference on Robotics and Automation, ACRA, Melbourne, VIC, Australia, 2–4 December 2014.

41. Striegl, P.; Mönch, K.; Reinhardt, W. Untersuchungen zur Verbesserung der Aufnahmegenauigkeit von Abwasserleitungen. ZfV-Zeitschrift Geodäsie Geoinformation Landmanagement. 2014. Available online: https://www.unibw.de/geoinformatik/publikationen-und-vortraege/pdf-dateien-wissenschaftliche-publikationen/zfv_2014_2_striegl_moench_reinhardt.pdf (accessed on 5 April 2021).

42. Bellés, C.; Pla, F. A Kinect-Based System for 3D Reconstruction of Sewer Manholes. Comput. Aided Civ. Infrastruct. Eng. 2015, 30, 906–917.

43. Hansen, P.; Alismail, H.; Rander, P.; Browning, B. Visual mapping for natural gas pipe inspection. Int. J. Robot. Res. 2015, 34, 532–558.

44. Wu, T.; Lu, S.; Tang, Y. An in-pipe internal defects inspection system based on the active stereo omnidirectional vision sensor. In Proceedings of the 2015 12th International Conference on Fuzzy Systems and Knowledge Discovery (FSKD), Zhangjiajie, China, 15–17 August 2015; pp. 2637–2641.

45. Huynh, P.; Ross, R.; Martchenko, A.; Devlin, J. Anomaly inspection in sewer pipes using stereo vision. In Proceedings of the 2015 IEEE International Conference on Signal and Image Processing Applications (ICSIPA), Kuala Lumpur, Malaysia, 19–21 October 2015; pp. 60–64.

46. Huynh, P.; Ross, R.; Martchenko, A.; Devlin, J. 3D anomaly inspection system for sewer pipes using stereo vision and novel image processing. In Proceedings of the 2016 IEEE 11th Conference on Industrial Electronics and Applications (ICIEA), Hefei, China, 5–7 June 2016; pp. 988–993.

47. Elfaid, A. Optical Sensors Development for NDE Applications Using Structured Light and 3D Visualization. Ph.D. Thesis, University of Colorado Denver, Denver, CO, USA, 2016.

48. Stanić, N.; Lepot, M.; Catieau, M.; Langeveld, J.; Clemens, F.H. A technology for sewer pipe inspection (part 1): Design, calibration, corrections and potential application of a laser profiler. Automat. Const. 2017, 75, 91–107.

49. Nieuwenhuisen, M.; Quenzel, J.; Beul, M.; Droeschel, D.; Houben, S.; Behnke, S. ChimneySpector: Autonomous MAV-based indoor chimney inspection employing 3D laser localization and textured surface reconstruction. In Proceedings of the 2017 International Conference on Unmanned Aircraft Systems (ICUAS), Miami, FL, USA, 13–16 June 2017; pp. 278–285.

50. Yang, Z.; Lu, S.; Wu, T.; Yuan, G.; Tang, Y. Detection of morphology defects in pipeline based on 3D active stereo omnidirectional vision sensor. IET Image Process. 2017, 12, 588–595.

51. Mascarich, F.; Khattak, S.; Papachristos, C.; Alexis, K. A multi-modal mapping unit for autonomous exploration and mapping of underground tunnels. In Proceedings of the 2018 IEEE Aerospace Conference, IEEE, Big Sky, MT, USA, 3–10 March 2018; pp. 1–7.

52. Reyes-Acosta, A.V.; Lopez-Juarez, I.; Osorio-Comparán, R.; Lefranc, G. 3D pipe reconstruction employing video information from mobile robots. Appl. Soft Comput. 2019, 75, 562–574.

53. Zhang, X.; Zhao, P.; Hu, Q.; Wang, H.; Ai, M.; Li, J. A 3D Reconstruction Pipeline of Urban Drainage Pipes Based on Multiview Image Matching Using Low-Cost Panoramic Video Cameras. Water 2019, 11, 2101.

54. Jackson, W.; Dobie, G.; MacLeod, C.; West, G.; Mineo, C.; McDonald, L. Error analysis and calibration for a novel pipe profiling tool. IEEE Sens. J. 2019, 20, 3545–3555.

55. Chen, X.; Zhou, F.; Chen, X.; Yang, J. Mobile visual detecting system with a catadioptric vision sensor in pipeline. Optik 2019, 193, 162854.

56. Oyama, A.; Iida, H.; Ji, Y.; Umeda, K.; Mano, Y.; Yasui, T.; Nakamura, T. Three-dimensional Mapping of Pipeline from Inside Images Using Earthworm Robot Equipped with Camera. IFAC-PapersOnLine 2019, 52, 87–90.

57. Nahangi, M.; Czerniawski, T.; Haas, C.T.; Walbridge, S. Pipe radius estimation using Kinect range cameras. Automat. Construct. 2019, 99, 197–205.

58. Piciarelli, C.; Avola, D.; Pannone, D.; Foresti, G.L. A vision-based system for internal pipeline inspection. IEEE Trans. Indust. Inform. 2018, 15, 3289–3299.

59. Fernandes, R.; Rocha, T.L.; Azpúrua, H.; Pessin, G.; Neto, A.A.; Freitas, G. Investigation of Visual Reconstruction Techniques Using Mobile Robots in Confined Environments. In Proceedings of the 2020 Latin American Robotics Symposium (LARS), 2020 Brazilian Symposium on Robotics (SBR) and 2020 Workshop on Robotics in Education (WRE), Natal, Brazil, 9–13 November 2020; pp. 1–6.

60. Chuang, T.Y.; Sung, C.C. Learning and SLAM Based Decision Support Platform for Sewer Inspection. Remote Sens. 2020, 12, 968.

61. Kolvenbach, H.; Wisth, D.; Buchanan, R.; Valsecchi, G.; Grandia, R.; Fallon, M.; Hutter, M. Towards autonomous inspection of concrete deterioration in sewers with legged robots. J. Field Robot. 2020, 37, 1314–1327.

62. Yoshimoto, K.; Watabe, K.; Tani, M.; Fujinaga, T.; Iijima, H.; Tsujii, M.; Takahashi, H.; Takehara, T.; Yamada, K. Three-Dimensional Panorama Image of Tubular Structure Using Stereo Endoscopy. Available online: www.ijicic.org/ijicic-160302.pdf (accessed on 5 April 2021).

63. Zhang, G.; Shang, B.; Chen, Y.; Moyes, H. SmartCaveDrone: 3D cave mapping using UAVs as robotic co-archaeologists. In Proceedings of the 2017 International Conference on Unmanned Aircraft Systems (ICUAS), Miami, FL, USA, 13–16 June 2017; pp. 1052–1057.

64. Godard, C.; Mac Aodha, O.; Brostow, G.J. Unsupervised monocular depth estimation with left-right consistency. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 270–279.

65. Godard, C.; Mac Aodha, O.; Firman, M.; Brostow, G.J. Digging into self-supervised monocular depth estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 3828–3838.

66. Tölgyessy, M.; Dekan, M.; Chovanec, L.; Hubinskỳ, P. Evaluation of the Azure Kinect and Its Comparison to Kinect V1 and Kinect V2. Sensors 2021, 21, 413.

67. Chen, S.; Chang, C.W.; Wen, C.Y. Perception in the dark – Development of a ToF visual inertial odometry system. Sensors 2020, 20, 1263.

68. Khattak, S.; Papachristos, C.; Alexis, K. Vision-depth landmarks and inertial fusion for navigation in degraded visual environments. In Proceedings of the International Symposium on Visual Computing, Las Vegas, NV, USA, 19–21 November 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 529–540.

69. pmdtechnologies ag. Development Kit Brief CamBoard Pico Flexx. Available online: https://pmdtec.com/picofamily/wp-content/uploads/2018/03/PMD_DevKit_Brief_CB_pico_flexx_CE_V0218-1.pdf (accessed on 8 January 2021).

70. Intel. Intel® RealSense™ D400 Series Product Family – Datasheet. Available online: https://www.intel.com/content/dam/support/us/en/documents/emerging-technologies/intel-realsense-technology/Intel-RealSense-D400-Series-Datasheet.pdf (accessed on 8 January 2021).

71. Smart Vision Lights. S75 Brick Light Spot Light. Available online: https://smartvisionlights.com/wp-content/uploads/S75_Datasheet-2.pdf (accessed on 5 April 2021).

72. pmdtechnologies ag, Am Eichengang 50, 57076 Siegen, Germany. CamBoard pico flexx – Getting Started, 2018.

73. Javaheri, A.; Brites, C.; Pereira, F.; Ascenso, J. Subjective and objective quality evaluation of 3D point cloud denoising algorithms. In Proceedings of the 2017 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Hong Kong, China, 10–14 July 2017; pp. 1–6.

74. Cignoni, P.; Rocchini, C.; Scopigno, R. Metro: Measuring Error on Simplified Surfaces; Computer graphics forum; Wiley: Hoboken, NJ, USA, 1998; Volume 17, pp. 167–174.

75. Su, H.; Duanmu, Z.; Liu, W.; Liu, Q.; Wang, Z. Perceptual quality assessment of 3D point clouds. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 3182–3186.

76. Mekuria, R.; Blom, K.; Cesar, P. Design, implementation, and evaluation of a point cloud codec for tele-immersive video. IEEE Trans. Circ. Syst. Video Technol. 2016, 27, 828–842.

77. Lague, D.; Brodu, N.; Leroux, J. Accurate 3D comparison of complex topography with terrestrial laser scanner: Application to the Rangitikei canyon (NZ). ISPRS J. Photogram. Remote Sens. 2013, 82, 10–26.

78. Girardeau-Montaut, D. CloudCompare. 2016. Available online: https://www.danielgm.net/cc (accessed on 5 April 2021).

79. Schnabel, R.; Wahl, R.; Klein, R. Efficient RANSAC for Point-Cloud Shape Detection; Computer graphics forum; Wiley: Hoboken, NJ, USA, 2007; Volume 26, pp. 214–226.

80. Elma Instruments A/S. Manual Elma 1335.

81. Haurum, J.B.; Moeslund, T.B. Sewer-ML: A Multi-Label Sewer Defect Classification Dataset and Benchmark. arXiv 2021, arXiv:2103.10895.

| Paper | Year | Camera | Stereo | Laser | Omni-Directional | Other Sensors | Reconstruction Technique |
|---|---|---|---|---|---|---|---|
| [14] | 1995 | X | Laser profilometry | | | | |
| [13,15] | 1998 | X | X | Ultras. | Laser profilometry | | |
| [16] | 1998 | X | X | Laser profilometry | | | |
| [17] | 1999 | X | X | None | | | |
| [18] | 2000 | X | Image proc. + Hough transform | | | | |
| [19] | 2000 | X | X | Image proc. + Hough transform | | | |
| [20] | 2002 | X | X | None | | | |
| [21] | 2003 | LiDAR | ICP | | | | |
| [22] | 2003 | X | Laser profilometry | | | | |
| [23] | 2005 | X | X | X | Laser profilometry | | |
| [24] | 2007 | X | Laser profilometry | | | | |
| [9] | 2007 | X | X | X | Laser profilometry | | |
| [25] | 2007 | X | X | SfM + cylinder fitting | | | |
| [26] | 2008 | X | X | Laser profilometry | | | |
| [27] | 2009 | X | Sparse 3D + mosaicing | | | | |
| [28,29] | 2009 | X | X | SfM | | | |
| [30] | 2010 | X | X | X | Ultras. | Image proc. + feat. match. | |
| [31] | 2011 | X | X | Dense stereo matching | | | |
| [32] | 2011 | X | X | Dense stereo matching | | | |
| [33] | 2013 | X | Laser profilometry | | | | |
| [34] | 2013 | X | X | Laser profilometry | | | |
| [35] | 2013 | X | X | Laser profilometry | | | |
| [36] | 2014 | X | LiDAR | ICP | | | |
| [37] | 2014 | X | X | Structured light | | | |
| [38] | 2014 | X | X | Dense stereo matching | | | |
| [39] | 2014 | X | Fourier image correspond. | | | | |
| [40] | 2014 | X | Commercial laser scanner | | | | |
| [41] | 2014 | X | ToF | Hough transf. (cylinder) | | | |
| [42] | 2015 | X | X | Kinect | RGB-D SLAM | | |
| [43] | 2015 | X | X | Structured light + sparse 3D | | | |
| [44] | 2015 | X | X | X | X | Laser profilometry | |
| [45] | 2015 | X | X | Dense stereo matching | | | |
| [46] | 2016 | X | X | Dense stereo matching | | | |
| [47] | 2016 | X | X | Laser profilometry | | | |
| [48] | 2017 | X | X | Laser profilometry | | | |
| [49] | 2017 | X | X | LiDAR | Dense stereo matching | | |
| [50] | 2018 | X | X | X | X | Laser profilometry | |
| [51] | 2018 | X | X | ToF | Visual inertial odometry | | |
| [52] | 2019 | X | Dense stereo matching | | | | |
| [53] | 2019 | X | X | Dense stereo matching + SfM | | | |
| [54] | 2019 | X | X | Laser profilometry | | | |
| [55] | 2019 | X | X | X | Laser profilometry | | |
| [56] | 2019 | X | Dense stereo matching | | | | |
| [57] | 2019 | X | LiDAR | Radius estimation from curvature | | | |
| [58] | 2019 | X | X | Dense stereo matching | | | |
| [59] | 2020 | X | X | X | LiDAR | RGB-D SLAM | |
| [60] | 2020 | X | X | Kinect | ICP + visual SLAM | | |
| [61] | 2020 | X | X | LiDAR | ICP | | |
| [62] | 2020 | X | X | Dense stereo matching | | | |

| Camera | Resolution (pixels) | Frame Rate (fps) | Range (m) | Power (W) | Size (mm) | Weight (g) |
|---|---|---|---|---|---|---|
| PMD CamBoard pico flexx | 224 × 171 | 45 | 0.1–4 | ≈0.3 | 68 × 17 × 7.25 | 8 |
| RealSense D435 | 1920 × 1080 | 30 | 0.3–3 | <2 | 90 × 25 × 25 | 9 |

| Test Setup | Pipe | Light Source | Lowest Illuminance | Highest Illuminance | Number of Levels |
|---|---|---|---|---|---|
| Lab | Ø400 mm PVC | Custom ring light | 0.02 lx | 294 lx | 23 |
| Outdoor | Ø400 mm PVC | Smart Vision Lights S75-Whi [71] | 0.08 lx | 440 lx | 9 |

| Water Level | Amount of Ground Coffee | Amount of Milk |
|---|---|---|
| 0 mm | 0 g | 0.0% |
| 20 mm | 35 g | 0.5% |
| 40 mm | 97 g | 0.2% |
| 60 mm | 97 g | 0.5% |
| 80 mm | 270 g | 0.5% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bahnsen, C.H.; Johansen, A.S.; Philipsen, M.P.; Henriksen, J.W.; Nasrollahi, K.; Moeslund, T.B. 3D Sensors for Sewer Inspection: A Quantitative Review and Analysis. Sensors 2021, 21, 2553. https://doi.org/10.3390/s21072553