The major goal of developing the augmented reality eye tracking toolkit is to enable researchers to easily use eye tracking in AR settings with the HoloLens 2. It should allow efficient integration into Unity 3D scenes, enable recording of a comprehensive set of eye tracking signals (see Table 1), and support seamless analysis of the data via our R package. This would simplify the integration of eye tracking into existing AR research, such as Strzys et al. [37] and Kapp et al. [38]. Independently of the study reported in this publication, our toolkit is currently being used in two ongoing research studies, which provide first evidence in this direction. One study uses the Microsoft HoloLens 2 to display two-dimensional plots at a fixed distance while the participant is moving; the other investigates stationary augmentations on a table. The initial feedback from the study organizers, the developers of the AR application, and the experimenters is positive. No major issues occurred during the recordings, which indicates high robustness, and the ease of use of the web interface was, informally, rated high.

Our toolkit can also be used to facilitate gaze-based interaction and real-time adaptive applications using the data provider module. For instance, prior research proposed to use eye tracking and HMDs to augment the episodic memory of dementia patients by storing artificial memory sequences and presenting them when needed [39]. Other works include approaches for gaze-based analysis of the users’ attention engagement and cognitive states for proactive content visualization [40], and multi-focal plane interaction, such as object selection and manipulation at multiple fixation distances [41]. The toolkit can also be used in research on selection techniques in AR [42,43]. Its utility for realizing real-time adaptive applications has been shown in Reference [44]. The prototype presented there uses video and gaze information obtained via our toolkit to automatically recognize and augment attended objects in an uninstrumented environment.

5.1. Evaluation of Accuracy and Precision

The results from our evaluation show significant differences in spatial accuracy for varying distances in settings I and II. This supports our hypothesis H1. However, for setting I, the pairwise comparisons reveal that only the results for the smallest distance m and the distances and m differ significantly. For setting II, the results differ significantly for all pairs except for the two farthest distances of m and m. Further, our results confirm hypotheses H2 and H3: the accuracy and precision for each distance differ significantly between setting I and setting II, with poorer results for setting II.

Our observations also show that the spatial accuracy in degrees of visual angle increases with increasing distance (see Table 2 and Table 4). Findings from the literature suggest that the accuracy decreases with increasing deviation from the calibration distance, i.e., the distance at which the fixation targets of the calibration routine are shown [18,20,45]. This leads us to assume that the fixation targets of the built-in calibration routine of the HoloLens 2 are placed 2 to 4 m from the user, which is supported by the fact that Microsoft recommends an interaction distance of 2 m [46]. It is possible that this increase in angular accuracy is an effect of the vergence-accommodation conflict [47], as only a combined gaze ray is made available by the device.

The official HoloLens 2 documentation reports a vague range for the spatial accuracy of “approximately within 1.5 degrees” with “slight imperfections” to be expected [26]. Overall, our results are consistent with this specification, but they are considerably more fine-grained. For the resting setting (I), we observe better spatial accuracy values ranging from

degrees of visual angle for a

m distance to

degrees for

m. For the walking setting (II), which has a lower spatial accuracy overall, the results for

m and

m are outside the official range with

and

degrees of visual angle, respectively. The two other conditions lie within the specified boundary of

degrees. The documented sampling rate of “approximately 30 Hz” was also met with a new gaze sample being observed every

ms.

Based on our findings, we suggest minimum target sizes for eye tracking research and gaze-based interaction with the HoloLens 2. Similar to Feit et al. [34], who investigate the gaze estimation error for remote eye tracking, we calculate the minimum size such that

of all gaze samples hit the target. We use their formula that computes the minimum size based on a 2-dimensional Gaussian function as

with the spatial accuracy of the eye tracker as offset

O and the spatial precision of the gaze signal as

. The resulting minimum target sizes for varying distances are listed in

Table 9. For a distance of

m, Microsoft recommends a target size of 5–10 cm, which conforms with our findings for setting I: we suggest a target size of

cm in this case. However, if the participant is meant to move around, the targets should be significantly larger.
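The target-size computation described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the exact procedure used for Table 9: it assumes the formula by Feit et al. takes the form S = 2(O + 2σ), with O the accuracy offset and σ the precision, where the factor of two standard deviations corresponds to roughly 95% of samples of a Gaussian. The conversion from degrees to centimetres uses standard visual-angle geometry, which is also an assumption here. The function names and the numeric inputs are hypothetical placeholders and are not results from our evaluation.

```python
import math


def min_target_size_deg(offset_deg: float, sigma_deg: float) -> float:
    """Minimum target size in degrees of visual angle.

    Assumed form of the formula by Feit et al.: S = 2 * (O + 2 * sigma).
    """
    return 2.0 * (offset_deg + 2.0 * sigma_deg)


def visual_angle_to_cm(size_deg: float, distance_m: float) -> float:
    """Extent in cm of a target that subtends size_deg at distance_m.

    The target is assumed to be centred on, and perpendicular to, the line of sight.
    """
    return 2.0 * distance_m * 100.0 * math.tan(math.radians(size_deg) / 2.0)


# Placeholder inputs for illustration only (not measured values).
example_offset_deg = 0.5
example_sigma_deg = 0.25
example_distance_m = 2.0

size_deg = min_target_size_deg(example_offset_deg, example_sigma_deg)
size_cm = visual_angle_to_cm(size_deg, example_distance_m)
print(f"{size_deg:.2f} deg, {size_cm:.2f} cm at {example_distance_m} m")
```

Because the size grows linearly in both O and σ, a setting with poorer accuracy or precision, such as walking, directly translates into larger required targets.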

In setting III, we explore the characteristics of the gaze estimation error for stationary targets. The average distance to the stationary target of

cm is comparable to the

m distance in setting I. However, the mean spatial accuracy is better and the precision is lower. This better result for spatial accuracy could be explained by the longer fixation durations and the varying viewing angles in setting III: on average, the mean gaze positions seem to balance around the fixation target, while the dispersion stays high (see Figure 9). Based on Feit et al. [34], we suggest a minimum target size of

cm. This is

larger than the recommendation for setting I, and

of the recommended size for setting II. Altogether, the results suggest that the fixation duration and the user condition, i.e., walking versus not walking, influence the spatial accuracy and precision, which should be considered when designing interactive and, potentially, mobile research applications.

Finally, we compare the results of the HoloLens 2 eye tracker to available head-mounted eye trackers without an HMD. Macinnes et al. [48] evaluated the spatial accuracy and precision of three mobile eye trackers for multiple distances while participants were seated. They included (i) the Pupil Labs 120 Hz Binocular glasses with an accuracy of

and a precision of

, (ii) the SensoMotoric Instruments (SMI) Eye Tracking Glasses 2 with an accuracy of

and a precision of

, and (iii) the Tobii Pro Glasses 2 with an accuracy of

and a precision of

. On average, our results for the HoloLens 2 in setting I, which is the closest match to the setting in Reference [48], yield an accuracy of

and a precision of

. This is similar to the results of the Pupil Labs glasses, which ranked best in the experiment by Macinnes et al. [48], and suggests that the eye tracking data from the HoloLens 2 can effectively be used in research experiments. However, one drawback is that the sampling rate of 30 Hz is lower than that of the devices evaluated in their experiment.

5.2. Limitations

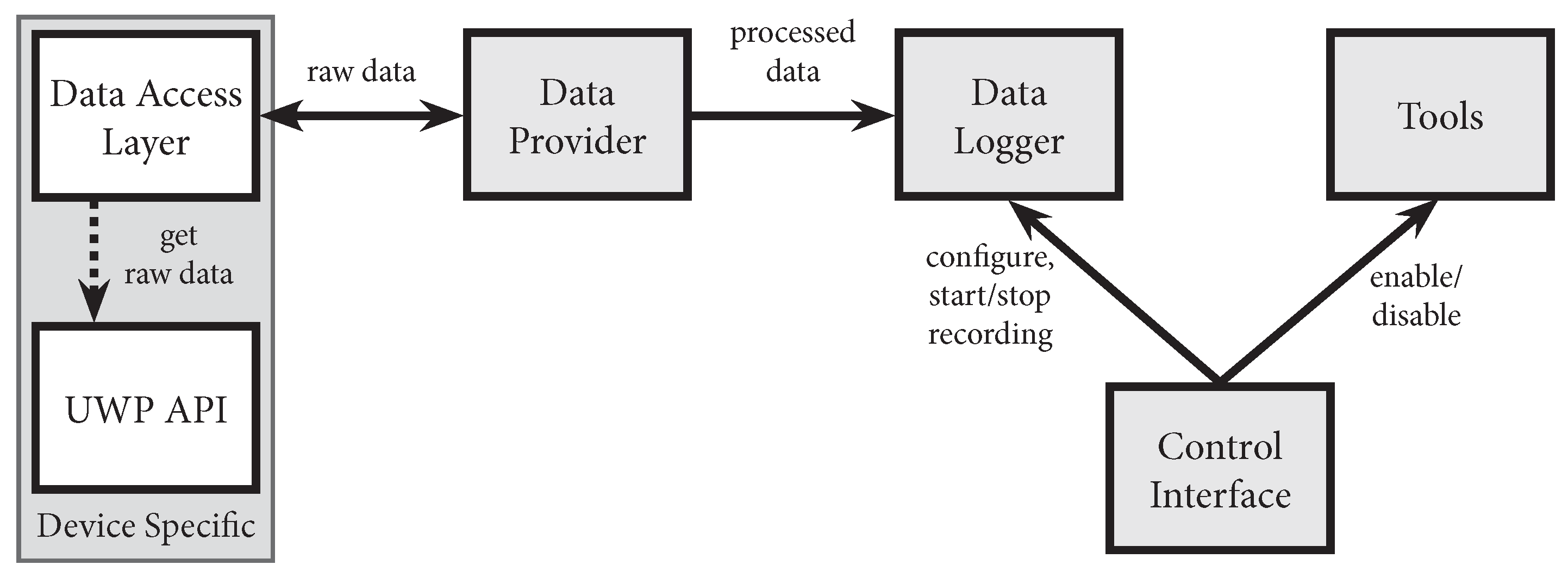

Our toolkit enables access to raw gaze data and provides additional tools for processing them. However, it is limited to the data that are made available through the APIs of the device. For instance, the API reports a joint gaze vector for both eyes, while many commercial binocular eye tracking glasses report separate gaze rays. This makes it necessary to intersect the gaze ray with the virtual environment to obtain a point of gaze. Separate rays, in contrast, can be intersected with each other to extract gaze points without intersecting any surface and to infer the fixation depth. In addition, such a gaze point can be used to find nearby AOIs. Our evaluation focuses on a limited set of interaction settings that probably do not generalize to all possible settings in AR environments. However, with setting III, we include a more realistic setting that more closely matches typical AR environments with a moving user and fixed visualizations. We cannot rule out effects of the experiment order, as it was identical for all participants.
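To illustrate what separate gaze rays would make possible, the following sketch estimates a gaze point as the midpoint of the shortest segment between a left and a right gaze ray, and derives a fixation depth from it. This is a standard closest-approach computation, shown only as an example: the HoloLens 2 API does not expose per-eye rays, and the function names, the eye positions, and the tolerance `eps` are hypothetical.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _along(origin: Vec3, direction: Vec3, t: float) -> Vec3:
    return (origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2])


def vergence_point(left_origin: Vec3, left_dir: Vec3,
                   right_origin: Vec3, right_dir: Vec3,
                   eps: float = 1e-9) -> Optional[Vec3]:
    """Midpoint of the shortest segment between two gaze rays.

    Returns None if the rays are (nearly) parallel or if the closest
    approach lies behind either eye, i.e., the rays diverge.
    """
    w = _sub(left_origin, right_origin)
    a = _dot(left_dir, left_dir)
    b = _dot(left_dir, right_dir)
    c = _dot(right_dir, right_dir)
    d = _dot(left_dir, w)
    e = _dot(right_dir, w)
    denom = a * c - b * b
    if denom < eps:
        return None
    t_left = (b * e - c * d) / denom
    t_right = (a * e - b * d) / denom
    if t_left <= 0.0 or t_right <= 0.0:
        return None
    p_left = _along(left_origin, left_dir, t_left)
    p_right = _along(right_origin, right_dir, t_right)
    return ((p_left[0] + p_right[0]) / 2.0,
            (p_left[1] + p_right[1]) / 2.0,
            (p_left[2] + p_right[2]) / 2.0)


def fixation_depth(left_origin: Vec3, right_origin: Vec3, point: Vec3) -> float:
    """Distance from the midpoint between both eyes to the gaze point."""
    centre = ((left_origin[0] + right_origin[0]) / 2.0,
              (left_origin[1] + right_origin[1]) / 2.0,
              (left_origin[2] + right_origin[2]) / 2.0)
    return math.dist(centre, point)


# Hypothetical example: eyes 6 cm apart, both directed at a point 1 m ahead.
left_eye, right_eye = (-0.03, 0.0, 0.0), (0.03, 0.0, 0.0)
point = vergence_point(left_eye, (0.03, 0.0, 1.0), right_eye, (-0.03, 0.0, 1.0))
depth = fixation_depth(left_eye, right_eye, point) if point else None
```

With real data, the two rays rarely intersect exactly, which is why the midpoint of the closest approach is used; small angular errors also have a large effect on the estimated depth at far distances.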

Currently, our toolkit is constrained to the Microsoft HoloLens 2 as the eye tracking device. However, all device-specific functionality is encapsulated in the data access layer, which makes it possible to adapt the toolkit to other eye tracking enabled AR devices in the future. Note that the gaze estimation itself is device-specific: the results from our evaluation of spatial accuracy and spatial precision do not hold for other devices. In addition, the sampling rate might differ, which would need to be addressed by re-configuring the data pulling rate. The data access layer could also subscribe to gaze events or connect to a signal stream, if this is supported by the new device.
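The idea of encapsulating device-specific functionality behind a data access layer with a configurable pulling rate can be sketched as follows. This is a conceptual illustration only, not the toolkit's implementation: the toolkit targets Unity, Python is used here merely to keep the example compact, and all class and method names (`GazeSample`, `GazeSource`, `PollingDataAccessLayer`, `ReplaySource`) are hypothetical.

```python
import time
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class GazeSample:
    timestamp_s: float
    origin: Vec3
    direction: Vec3


class GazeSource(ABC):
    """Device-specific part: one subclass per eye tracking device."""

    @abstractmethod
    def read_latest(self) -> Optional[GazeSample]:
        """Return the most recent sample, or None if none is available."""


class PollingDataAccessLayer:
    """Pulls samples at a configurable rate and forwards only new ones."""

    def __init__(self, source: GazeSource, rate_hz: float,
                 on_sample: Callable[[GazeSample], None]) -> None:
        if rate_hz <= 0:
            raise ValueError("rate_hz must be positive")
        self._source = source
        self._interval_s = 1.0 / rate_hz
        self._on_sample = on_sample
        self._last_timestamp_s: Optional[float] = None

    def poll_once(self) -> bool:
        """Forward the latest sample if its timestamp is new."""
        sample = self._source.read_latest()
        if sample is None or sample.timestamp_s == self._last_timestamp_s:
            return False
        self._last_timestamp_s = sample.timestamp_s
        self._on_sample(sample)
        return True

    def run(self, duration_s: float) -> None:
        """Poll repeatedly for duration_s seconds at the configured rate."""
        deadline = time.monotonic() + duration_s
        while time.monotonic() < deadline:
            self.poll_once()
            time.sleep(self._interval_s)


class ReplaySource(GazeSource):
    """Stand-in device that replays a fixed list of samples."""

    def __init__(self, samples: Sequence[GazeSample]) -> None:
        self._samples = list(samples)
        self._index = 0

    def read_latest(self) -> Optional[GazeSample]:
        if self._index >= len(self._samples):
            return None
        sample = self._samples[self._index]
        self._index += 1
        return sample


# Usage with made-up samples; the duplicate timestamp is filtered out.
zero, forward = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)
received: List[GazeSample] = []
source = ReplaySource([GazeSample(0.000, zero, forward),
                       GazeSample(0.000, zero, forward),
                       GazeSample(0.033, zero, forward)])
layer = PollingDataAccessLayer(source, rate_hz=30.0, on_sample=received.append)
results = [layer.poll_once() for _ in range(4)]
```

In such a design, supporting a new device would amount to adding another `GazeSource` subclass and adjusting `rate_hz`; an event-based device could instead call `on_sample` directly from its own callback.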