Stochastic Decision Fusion of Convolutional Neural Networks for Tomato Ripeness Detection in Agricultural Sorting Systems

Abstract

1. Introduction

2. Materials and Methods

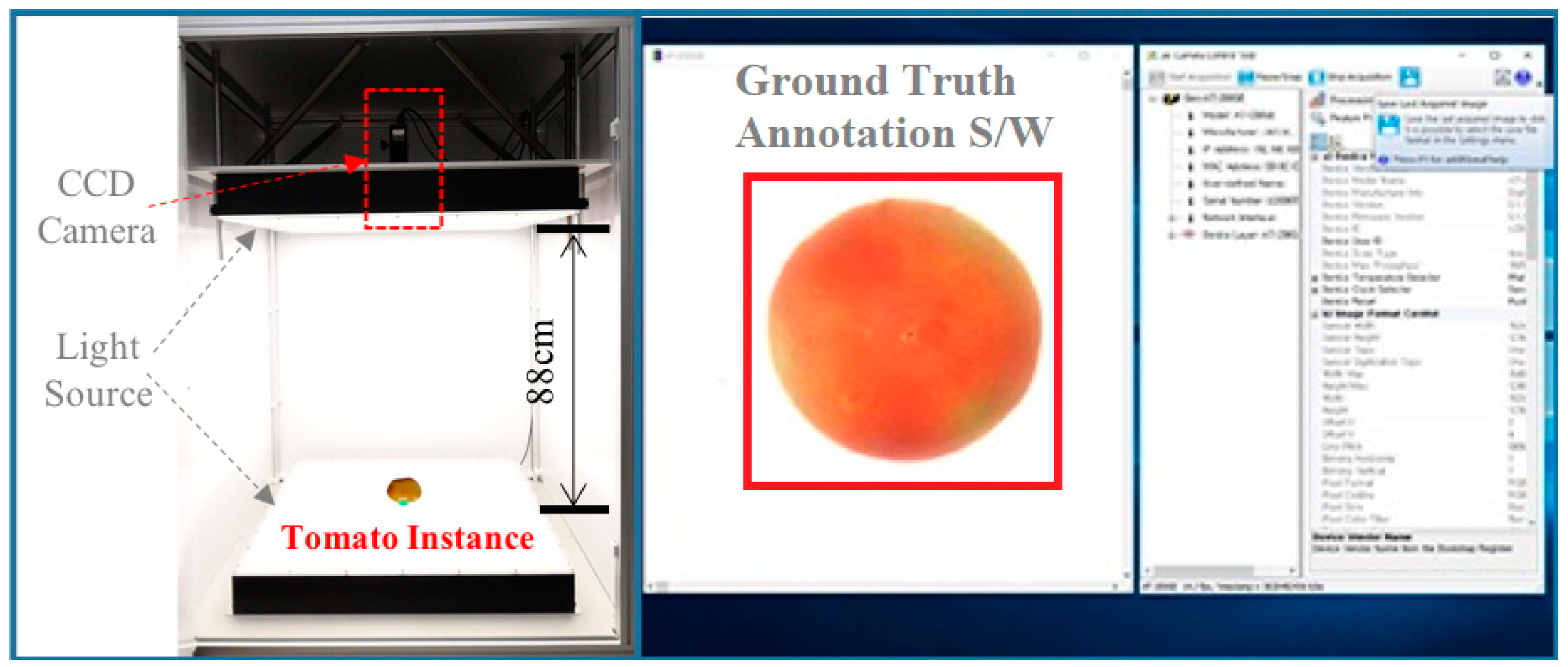

2.1. Tomato Image Acquisition

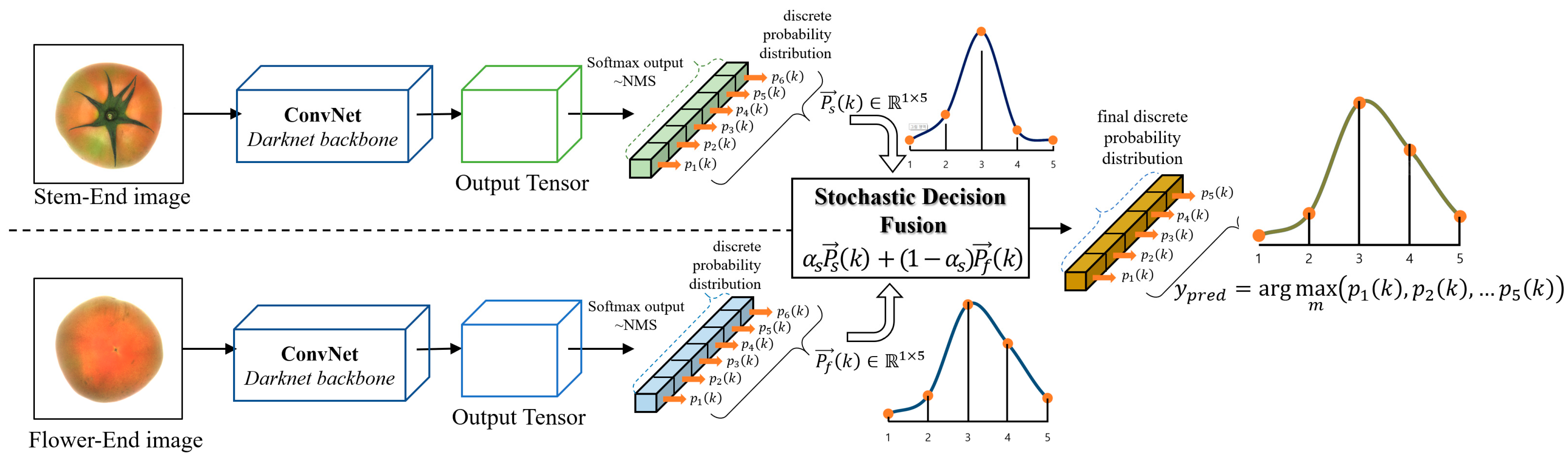

2.2. Accurate Tomato Ripeness Detection Using the SDF-ConvNets

2.2.1. Initial Tomato Ripeness Detection Based on YOLOv3

- Learning rate: 0.01 (scaled by 0.1 at steps 25,000 and 35,000);

- Maximum training iterations: 50,000;

- Mini-batch size/subdivisions: 32/8;

- Weight decay: 0.0005;

- Learning momentum: 0.9;

- Total number of convolutional layers: 79;

- Scales (strides) of the final feature maps: 8, 16, 32.
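The schedule and batch settings above map naturally onto a Darknet-style `[net]` configuration (keys such as `learning_rate`, `policy=steps`, `steps`, `scales`, `batch`, `subdivisions`). Assuming Darknet-style semantics, which this section does not state explicitly, the minimal sketch below computes the learning rate at a given iteration and the number of images per forward pass. It ignores any warm-up (`burn_in`) phase, since none is listed; the constant and function names are illustrative.

```python
"""Sketch of the step learning-rate schedule and mini-batch split implied by the
YOLOv3 training settings listed above (Darknet-style "steps" policy assumed)."""

BASE_LR = 0.01
STEPS = (25_000, 35_000)  # iterations at which the rate is rescaled
SCALE = 0.1               # multiplicative factor applied at each step
MAX_ITERS = 50_000
BATCH, SUBDIVISIONS = 32, 8


def learning_rate(iteration: int) -> float:
    """Rate at `iteration` under a step policy, ignoring any burn-in warm-up."""
    rate = BASE_LR
    for step in STEPS:
        if iteration >= step:  # scaling takes effect once the step is reached
            rate *= SCALE
    return rate


def images_per_forward_pass(batch: int, subdivisions: int) -> int:
    """Darknet-style training splits each mini-batch into `subdivisions` chunks and
    accumulates gradients, so batch // subdivisions images are processed at once."""
    if batch % subdivisions:
        raise ValueError("batch must be divisible by subdivisions")
    return batch // subdivisions


if __name__ == "__main__":
    for it in (0, 24_999, 25_000, 35_000, MAX_ITERS - 1):
        print(f"iteration {it:>6}: lr = {learning_rate(it):.5f}")
    print("images per forward pass:", images_per_forward_pass(BATCH, SUBDIVISIONS))
```

With these settings the rate drops from 0.01 to 0.001 at iteration 25,000 and to 0.0001 at 35,000, and each weight update accumulates gradients over 8 forward passes of 4 images. Weight decay (0.0005) and momentum (0.9) enter the SGD update itself and are not modeled here.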

2.2.2. Stochastic Decision Fusion

3. Results

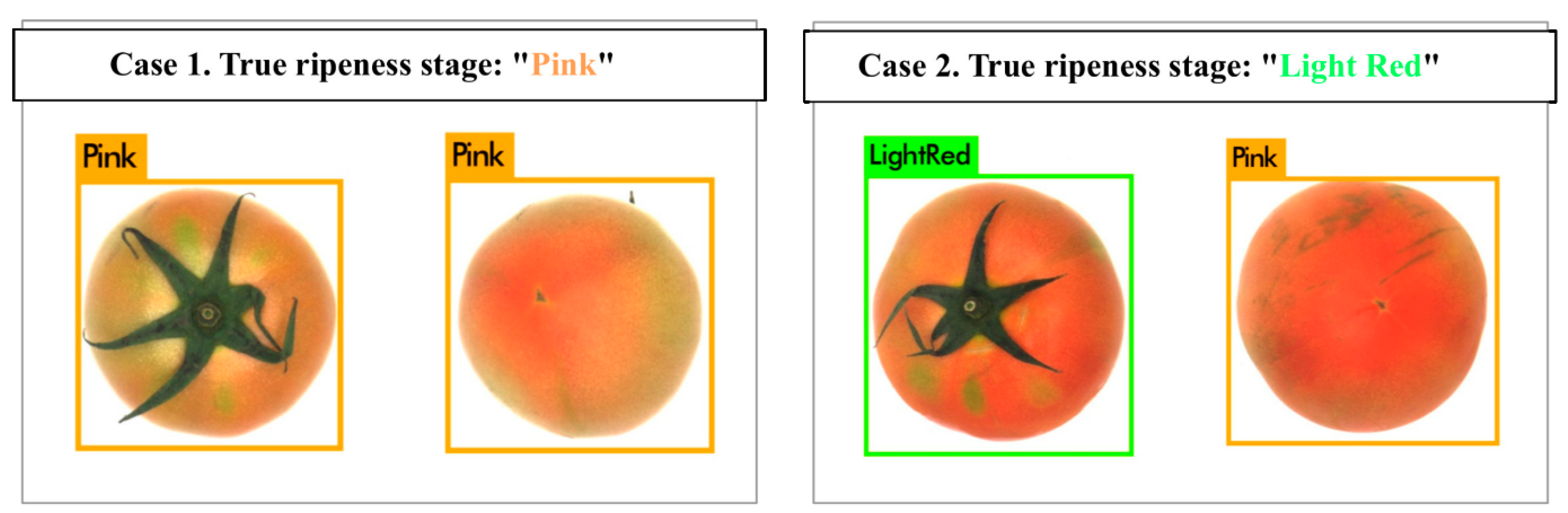

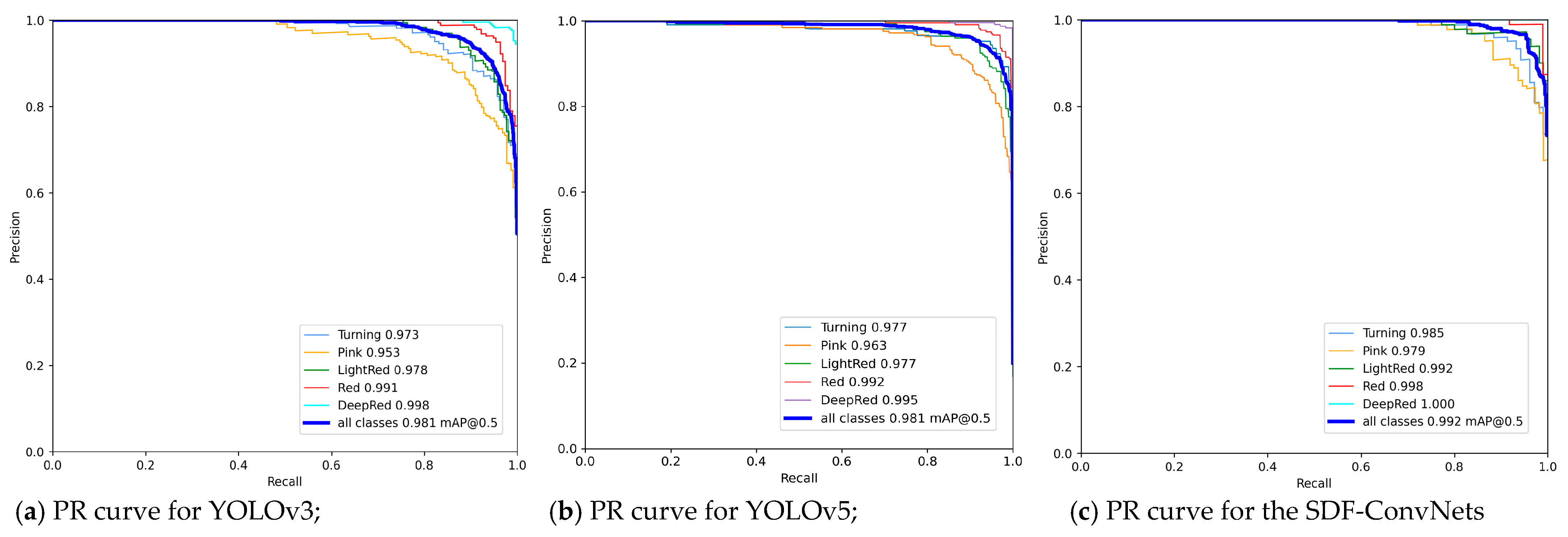

3.1. Experiments for Tomato Ripeness Detection

3.2. Experiments for Stochastic Decision Fusion

3.3. Comparison of State-of-the-Art Algorithms

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Nagy, A.; Riczu, P.; Tamás, J. Spectral evaluation of apple fruit ripening and pigment content alteration. Sci. Hortic. 2016, 201, 256–264.

2. Dumas, Y.; Dadomo, M.; Di Lucca, G.; Grolier, P. Effects of environmental factors and agricultural techniques on antioxidant content of tomatoes. J. Sci. Food Agric. 2003, 83, 369–382.

3. Radzevičius, A.; Karklelienė, R.; Viskelis, P.; Bobinas, C.; Bobinaite, R.; Sakalauskiene, S. Tomato (Lycopersicon esculentum Mill.) fruit quality and physiological parameters at different ripening stages of Lithuanian cultivars. Agron. Res. 2009, 7, 712–718.

4. Gautier, H.; Diakou-Verdin, V.; Bénard, C.; Reich, M.; Buret, M.; Bourgaud, F.; Poëssel, J.L.; Caris-Veyrat, C.; Génard, M. How Does Tomato Quality (Sugar, Acid, and Nutritional Quality) Vary with Ripening Stage, Temperature, and Irradiance? J. Agric. Food Chem. 2008, 56, 1241–1250.

5. Klee, H.J.; Giovannoni, J.J. Genetics and Control of Tomato Fruit Ripening and Quality Attributes. Annu. Rev. Genet. 2011, 45, 41–59.

6. Hoeberichts, F.A.; Van der Plas, L.H.W.; Woltering, E.J. Ethylene perception is required for the expression of tomato ripening-related genes and associated physiological changes even at advanced stages of ripening. Postharvest Biol. Technol. 2002, 26, 125–133.

7. Barua, S.; Rahi, T.; Hossain, O.; Mazumder, A.; Sharmin, R.; Zaman, T.; Ghosh, D.; Ahmed, S. Optimization of Ethylene inhibitor-mediated controlled ripening of tomato (Solanum lycopersicum L.). Adv. Agric. Sci. 2018, 6, 49–60.

8. Jia, W.; Li, Y.; Qu, R.; Baranowski, T.; Burke, L.E.; Zhang, H.; Bai, Y.; Mancino, J.M.; Xu, G.; Mao, Z.-H.; et al. Automatic food detection in egocentric images using artificial intelligence technology. Public Health Nutr. 2019, 22, 1168–1179.

9. Ege, T.; Yanai, K. Image-based food calorie estimation using recipe information. IEICE Trans. Inf. Syst. 2018, 101, 1333–1341.

10. Kaur, G.; Kaushik, A.; Sharma, S. Cooking is creating emotion: A study on Hinglish sentiments of YouTube cookery channels using semi-supervised approach. Big Data Cogn. Comput. 2019, 3, 37.

11. Zhao, Y.; Gong, L.; Zhou, B.; Huang, Y.; Liu, C. Detecting tomatoes in greenhouse scenes by combining AdaBoost classifier and colour analysis. Biosyst. Eng. 2016, 148, 127–137.

12. Liu, G.; Mao, S.; Kim, J.H. A mature-tomato detection algorithm using machine learning and color analysis. Sensors (Switzerland) 2019, 19, 2023.

13. Hu, C.; Liu, X.; Pan, Z.; Li, P. Automatic Detection of Single Ripe Tomato on Plant Combining Faster R-CNN and Intuitionistic Fuzzy Set. IEEE Access 2019, 7, 154683–154696.

14. Sun, J.; He, X.; Wu, M.; Wu, X.; Shen, J.; Lu, B. Detection of tomato organs based on convolutional neural network under the overlap and occlusion backgrounds. Mach. Vis. Appl. 2020, 31, 31.

15. Goel, N.; Sehgal, P. Fuzzy classification of pre-harvest tomatoes for ripeness estimation – An approach based on automatic rule learning using decision tree. Appl. Soft Comput. J. 2015, 36, 45–56.

16. El-Bendary, N.; El Hariri, E.; Hassanien, A.E.; Badr, A. Using machine learning techniques for evaluating tomato ripeness. Expert Syst. Appl. 2015, 42, 1892–1905.

17. Wan, P.; Toudeshki, A.; Tan, H.; Ehsani, R. A methodology for fresh tomato maturity detection using computer vision. Comput. Electron. Agric. 2018, 146, 43–50.

18. Nanni, L.; Ghidoni, S.; Brahnam, S. Handcrafted vs. non-handcrafted features for computer vision classification. Pattern Recognit. 2017, 71, 158–172.

19. Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90.

20. Li, C.; Cao, Q.; Guo, F. A method for color classification of fruits based on machine vision. WSEAS Trans. Syst. 2009, 8.

21. Arakeri, M.P.; Lakshmana. Computer Vision Based Fruit Grading System for Quality Evaluation of Tomato in Agriculture industry. Procedia Comput. Sci. 2016, 79, 426–433.

22. Simonyan, K.; Zisserman, A. Two-Stream Convolutional Networks for Action Recognition in Videos. In Advances in Neural Information Processing Systems 27; Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N.D., Weinberger, K.Q., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2014; pp. 568–576.

23. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. pjreddie.com. 2018. Available online: https://pjreddie.com/media/files/papers/YOLOv3.pdf (accessed on 14 January 2021).

24. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

25. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. arXiv 2014, arXiv:1409.4842.

26. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 2, 1097–1105.

27. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27 June–3 July 2016; Volume 2016, pp. 770–778.

28. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 1137–1149.

29. Redmon, J.; Divvala, S.; Girshick, R. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

30. Yang, Z.; Yu, Y.; You, C.; Steinhardt, J.; Ma, Y. Rethinking bias-variance trade-off for generalization of neural networks. arXiv 2020, arXiv:2002.11328.

31. Redmon, J. Darknet: Open Source Neural Networks in C. Available online: https://pjreddie.com/darknet/ (accessed on 14 January 2021).

32. Kumar, S.D.; Esakkirajan, S.; Bama, S.; Keerthiveena, B. A Microcontroller based Machine Vision Approach for Tomato Grading and Sorting using SVM Classifier. Microprocess. Microsyst. 2020, 76, 103090.

33. Kuznetsova, A.; Maleva, T.; Soloviev, V. Detecting Apples in Orchards Using YOLOv3 and YOLOv5 in General and Close-Up Images. In Proceedings of the International Symposium on Neural Networks, Cairo, Egypt, 3–4 December 2020; pp. 233–243.

| Ripening Stage | Turning (T) | Pink (P) | Light Red (L) | Red (R) | Deep Red (D) |
|---|---|---|---|---|---|
| Sample image (Dafnis), flower-end viewpoint | | | | | |
| Sample image (Dafnis), stem-end viewpoint | | | | | |
| Number of samples, training set | 416 | 417 | 458 | 429 | 444 |
| Number of samples, test set | 105 | 114 | 92 | 119 | 118 |

Stem-end view image (rows: true stage; columns: predicted stage):

| True \ Predicted | T | P | L | R | D |
|---|---|---|---|---|---|
| T | 0.9 | 0.04 | 0 | 0 | 0 |
| P | 0.1 | 0.92 | 0.05 | 0 | 0 |
| L | 0 | 0.04 | 0.9 | 0.02 | 0.02 |
| R | 0 | 0 | 0.05 | 0.9 | 0.08 |
| D | 0 | 0 | 0 | 0.08 | 0.9 |

Flower-end view image (rows: true stage; columns: predicted stage):

| True \ Predicted | T | P | L | R | D |
|---|---|---|---|---|---|
| T | 0.8 | 0.1 | 0.03 | 0 | 0 |
| P | 0.15 | 0.8 | 0.06 | 0 | 0 |
| L | 0.05 | 0.1 | 0.82 | 0.1 | 0.05 |
| R | 0 | 0 | 0.06 | 0.8 | 0.1 |
| D | 0 | 0 | 0.03 | 0.1 | 0.85 |

In both matrices each column sums to 1 (entries are normalized per predicted stage), so the diagonal entries give the per-stage precision.
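Every column of the stem-end and flower-end confusion matrices above sums to 1, which indicates the entries are normalized per predicted stage; under that reading each diagonal entry M[k][k] is the precision for stage k. The plain-Python sketch below (illustrative names, values transcribed from the tables) checks the column sums and extracts precision as diagonal over column total, a formula that works equally on raw counts. Recall would need per-true-stage (row) totals of raw counts, which a column-normalized matrix does not preserve.

```python
STAGES = ("T", "P", "L", "R", "D")

# Transcribed from the tables above: rows = true stage, columns = predicted stage.
STEM_END = [
    [0.90, 0.04, 0.00, 0.00, 0.00],
    [0.10, 0.92, 0.05, 0.00, 0.00],
    [0.00, 0.04, 0.90, 0.02, 0.02],
    [0.00, 0.00, 0.05, 0.90, 0.08],
    [0.00, 0.00, 0.00, 0.08, 0.90],
]
FLOWER_END = [
    [0.80, 0.10, 0.03, 0.00, 0.00],
    [0.15, 0.80, 0.06, 0.00, 0.00],
    [0.05, 0.10, 0.82, 0.10, 0.05],
    [0.00, 0.00, 0.06, 0.80, 0.10],
    [0.00, 0.00, 0.03, 0.10, 0.85],
]


def column_sums(matrix: list[list[float]]) -> list[float]:
    """Total mass assigned to each predicted stage."""
    return [sum(row[j] for row in matrix) for j in range(len(matrix[0]))]


def is_column_normalized(matrix: list[list[float]], tol: float = 1e-9) -> bool:
    """True if every column sums to 1 within `tol`."""
    return all(abs(s - 1.0) <= tol for s in column_sums(matrix))


def precision_per_stage(matrix: list[list[float]]) -> dict[str, float]:
    """Precision_k = M[k][k] / sum_i M[i][k]; reduces to the diagonal when columns sum to 1."""
    totals = column_sums(matrix)
    return {stage: matrix[k][k] / totals[k] for k, stage in enumerate(STAGES)}


if __name__ == "__main__":
    for name, m in (("stem-end", STEM_END), ("flower-end", FLOWER_END)):
        p = precision_per_stage(m)
        print(name, is_column_normalized(m), {s: round(v, 2) for s, v in p.items()})
```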

Average performance of the ripeness detection for the flower-end and stem-end image sets:

| Ripening Stage | Flower-End Precision | Flower-End Recall | Flower-End F1-Score | Stem-End Precision | Stem-End Recall | Stem-End F1-Score |
|---|---|---|---|---|---|---|
| Turning | 0.942 | 0.924 | 0.932 | 0.960 | 0.896 | 0.926 |
| Pink | 0.870 | 0.914 | 0.888 | 0.864 | 0.898 | 0.878 |
| Light Red | 0.920 | 0.920 | 0.918 | 0.912 | 0.956 | 0.936 |
| Red | 0.980 | 0.972 | 0.978 | 0.942 | 0.952 | 0.946 |
| Deep Red | 0.996 | 0.988 | 0.994 | 0.976 | 0.952 | 0.966 |
| Average | 0.942 | 0.944 | 0.942 | 0.931 | 0.931 | 0.931 |
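The Average rows of the flower-end/stem-end table above appear to be unweighted (macro) means of the five per-stage rows. The short sketch below (illustrative names) recomputes them from the transcribed values with Python's `statistics.mean`; it reproduces every reported average to within ±0.001, consistent with rounding to three decimals.

```python
from statistics import mean

# (precision, recall, F1-score) per ripening stage, transcribed from the table above.
FLOWER_END = {
    "Turning": (0.942, 0.924, 0.932),
    "Pink": (0.870, 0.914, 0.888),
    "Light Red": (0.920, 0.920, 0.918),
    "Red": (0.980, 0.972, 0.978),
    "Deep Red": (0.996, 0.988, 0.994),
}
STEM_END = {
    "Turning": (0.960, 0.896, 0.926),
    "Pink": (0.864, 0.898, 0.878),
    "Light Red": (0.912, 0.956, 0.936),
    "Red": (0.942, 0.952, 0.946),
    "Deep Red": (0.976, 0.952, 0.966),
}


def macro_average(per_stage: dict[str, tuple[float, float, float]]) -> tuple[float, float, float]:
    """Unweighted mean of each metric across stages (macro averaging)."""
    precision, recall, f1 = zip(*per_stage.values())
    return mean(precision), mean(recall), mean(f1)


if __name__ == "__main__":
    for name, table in (("flower-end", FLOWER_END), ("stem-end", STEM_END)):
        p, r, f = macro_average(table)
        print(f"{name}: precision={p:.4f} recall={r:.4f} F1={f:.4f}")
```

Macro averaging weights each ripening stage equally regardless of how many test images it contains.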

Ripeness-detection performance with equal-weight and stochastic decision fusion:

| Ripening Stage | Equal-Weight Precision | Equal-Weight Recall | Equal-Weight F1-Score | Stochastic Precision | Stochastic Recall | Stochastic F1-Score |
|---|---|---|---|---|---|---|
| Turning | 0.960 | 0.928 | 0.944 | 0.964 | 0.934 | 0.948 |
| Pink | 0.904 | 0.942 | 0.924 | 0.908 | 0.948 | 0.930 |
| Light Red | 0.962 | 0.962 | 0.964 | 0.964 | 0.962 | 0.964 |
| Red | 0.986 | 0.984 | 0.988 | 0.986 | 0.982 | 0.986 |
| Deep Red | 0.998 | 0.992 | 0.998 | 0.996 | 0.992 | 0.998 |
| Average | 0.962 | 0.962 | 0.964 | 0.964 | 0.964 | 0.965 |
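The decision-fusion results above combine the outputs obtained from the stem-end and flower-end views of the same tomato. How the stochastic weights are derived is not described in this excerpt, so the sketch below only illustrates generic decision-level (late) fusion under stated assumptions: each view yields a score vector over the five stages (assumed), and the fused decision is the argmax of a convex combination of the two vectors. `fuse_scores`, `decide`, and `w_stem` are hypothetical names; `w_stem = 0.5` presumably corresponds to equal-weight fusion, and other fixed values are illustrative only, not the paper's stochastic weighting.

```python
STAGES = ("Turning", "Pink", "Light Red", "Red", "Deep Red")


def fuse_scores(stem_scores: list[float], flower_scores: list[float],
                w_stem: float = 0.5) -> list[float]:
    """Convex combination of per-view stage scores (hypothetical fixed weight)."""
    if len(stem_scores) != len(flower_scores):
        raise ValueError("both views must score the same set of stages")
    if not 0.0 <= w_stem <= 1.0:
        raise ValueError("w_stem must lie in [0, 1]")
    return [w_stem * s + (1.0 - w_stem) * f for s, f in zip(stem_scores, flower_scores)]


def decide(scores: list[float]) -> str:
    """Return the stage with the highest fused score."""
    best = max(range(len(scores)), key=lambda k: scores[k])
    return STAGES[best]


if __name__ == "__main__":
    # Hypothetical per-view scores for a single tomato (not data from the paper).
    stem = [0.05, 0.55, 0.35, 0.05, 0.00]
    flower = [0.00, 0.25, 0.70, 0.05, 0.00]
    print(decide(fuse_scores(stem, flower)))              # → Light Red
    print(decide(fuse_scores(stem, flower, w_stem=0.9)))  # → Pink
```

In this toy case the two views disagree (Pink vs. Light Red), and the fused label depends on how much weight each view receives, which is the degree of freedom a learned or stochastic weighting would control.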

| Model | Category | No. of Classes | No. of Images | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|
| Proposed (equal weighting) | Tomato | 5 | 548 | 0.962 | 0.962 | 0.964 |
| Proposed (stochastic weighting) | Tomato | 5 | 548 | 0.964 | 0.964 | 0.965 |
| YOLOv3 (without decision fusion) | Tomato | 5 | 548 | 0.937 | 0.938 | 0.937 |
| YOLOv3 [33] | Apple | 2 | 878 | 0.908 | 0.922 | 0.915 |
| ANN [17] | Tomato | 3 | 768 | - | - | 0.902 |
| SVM [32] | Tomato | 2 | 82 | 0.976 | 0.988 | 0.982 |
| LDA+SVM [16] | Tomato | 5 | 250 | - | - | 0.908 |
| Fuzzy classifier [15] | Tomato | 6 | 36 | 0.952 | 0.967 | 0.953 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ko, K.; Jang, I.; Choi, J.H.; Lim, J.H.; Lee, D.U. Stochastic Decision Fusion of Convolutional Neural Networks for Tomato Ripeness Detection in Agricultural Sorting Systems. Sensors 2021, 21, 917. https://doi.org/10.3390/s21030917


Chicago/Turabian style: Ko, KwangEun, Inhoon Jang, Jeong Hee Choi, Jeong Ho Lim, and Da Uhm Lee. 2021. "Stochastic Decision Fusion of Convolutional Neural Networks for Tomato Ripeness Detection in Agricultural Sorting Systems" Sensors 21, no. 3: 917. https://doi.org/10.3390/s21030917
