Abstract

Tracking consumer empathy is one of the biggest challenges for advertisers. Although numerous studies have shown that consumers’ empathy affects purchasing, there are few quantitative and unobtrusive methods for assessing whether a viewer shares congruent emotions with an advertisement. This study proposes a non-contact method for measuring empathy by evaluating the synchronization of micro-movements between consumers and people within the media. Thirty participants viewed 24 advertisements classified as either empathy or non-empathy advertisements. For each viewing, we recorded facial video and subjective empathy scores. Using a camera, without attaching any sensor to the participant, we remotely recorded the facial micro-movements that reflect ballistocardiography (BCG) motion transmitted through the carotid artery. Synchronization in cardiovascular measures (e.g., heart rate) is known to indicate higher levels of empathy. Cross-entropy analysis showed that the more similar the micro-movements of the participant and the person in the advertisement, the higher the participant’s empathy score for the advertisement. The study suggests that non-contact BCG methods can be used where sensor attachment is impractical (e.g., measuring empathy between a viewer and media content) and can complement subjective empathy scales.

1. Introduction

Empathy, a crucial factor in successful digital content marketing [1], is generally conceptualized as a multidimensional construct that includes both cognitive and affective responses to others in dyadic interactions [2,3,4]. Empathy for digital content, however, involves the emotional engagement of a viewer with a character in a causal and probable narrative [5]. For example, eliciting consumer emotions congruent with the emotions in the content may maximize an advertisement’s effect. Viewers who empathize with content tend to understand the story better and hold more positive attitudes: they are more attentive and engaged [6,7,8], feel favorably toward products and brands [9,10], and are less likely to skip an advertisement [11,12]. Moreover, heightened empathy promotes the consumption of content in addition to attitudinal acceptance [13,14]. Such behavioral acceptance implies that viewer empathy is a critical predictor of the success of media content.

Empathy measures have been used to predict the success of commercials. Escalas and Stern developed a battery of scale items to measure empathy toward advertisements, which has been widely used in consumer research [15]. Other prominent subjective measures include Schlinger’s Viewer Response Profile [16,17,18], the Balanced Emotional Empathy Scale [19], the Empathy Quotient [20], the Toronto Empathy Questionnaire [21], the Interpersonal Reactivity Index [22,23], the Basic Empathy Scale [24], and the Hogan Empathy Scale [25].

However, such subjective evaluations cannot capture the dynamics of empathy over time. Empathy questionnaires are limited to assessing dispositional empathy, that is, an individual’s capacity (i.e., personality trait) to empathize with others.

The dynamics of empathy during digital content consumption require a novel measure that can capture emotional fluctuations over time. The ever-changing interplay between the viewer’s emotions and the emotions in the content demands a more direct, sensitive, real-time measure, such as a physiological one, to assess the degree of empathy properly. Such direct physiological measures can also capture unconscious levels of empathy that are not verbally reportable and therefore escape subjective evaluation.

1.1. Psychophysiological Basis of Empathy

Empathy includes motor mimicry and emotional contagion associated with autonomically activated neural mechanisms of the other’s feelings [26,27,28,29]. It also includes mirroring responses between people, in which explicit and implicit physiology become synchronized [30,31,32]. Explicit responses from empathy involve the synchronization of faces, gestures, and body movements. Changes in body motion synchronization are associated with the degree of empathy during face-to-face communication [33,34]. Greater synchronization of head motion was observed when a listener empathized with a speaker in a lecture [35]. In addition, body synchronization was reported between counselors and clients when they shared empathy [36,37].

Such observable synchronized behavior is a result of an implicit empathic response. The implicit process constitutes the synchronization of physiological activities between individuals [38], which can be measured through electroencephalography (EEG) [39,40], electrocardiography (ECG) [41,42,43,44], and skin conductance [45,46]. For example, the synchronization of electrodermal activity (i.e., skin response) between a therapist and a patient correlates with the patient’s perceived empathy toward the therapist [47,48].

Neuroscientific bases have been identified for the synchronization of brain activity among participants during empathic communication [49,50,51]. EEG research on empathy has focused mainly on the sharing of painful experiences, and several empathy-elicited asymmetries and activations in pain-related brain areas have been reported. Left frontal asymmetry has been related to the suffering of the other, and right frontal asymmetry to the pain and sorrow of the other [52]. Moreover, empathy-related activation has been reported in the fronto-insular and anterior cingulate cortices, regions associated with pain [53]. Peng et al. showed that sharing painful experiences can trigger brain-to-brain synchronization and strengthen social bonds [54].

1.2. Cardiovascular Measures of Empathy

Measures of cardiovascular activity reflect both attentional and affective states [55]. Cardiovascular measures can be obtained using a piezoelectric transducer, ECG, or analysis of facial micro-movements. Cardiovascular activity has been understudied in empathy research compared with other physiological measures [56], but recent advances in vision technology have enabled novel methodologies such as remote ballistocardiography (rBCG).

Kodama et al. [57] examined a psychotherapy session between a counselor and a client and found synchronization in heart rate, suggesting a promising indicator that leads to the building of rapport and empathy. Salminen et al. [58] found that higher synchrony in respiration rates, which has a positive relationship with heart rate, is associated with higher empathy. The synchronization of the heart rate can also enhance closeness [59] and intimacy [60].

However, the measurement of synchronization between the cardiovascular activities of viewers and people in media content has been less studied, mainly due to multiple technical issues.

First, viewers need a sensor attached to capture physiological measurements, which is a significant barrier to general adoption. Second, measuring dyadic synchronization is paramount for evaluating empathy: the cardiovascular information of both the viewer and the person in the media content must be obtained and analyzed. Obviously, sensors cannot be attached to a person who appears in pre-recorded digital content.

However, advances in vision technology for cardiac measurement, such as remote photoplethysmography (rPPG) and rBCG, suggest promising ways to overcome these challenges. rPPG was developed to detect changes in blood volume remotely, without direct contact between a photosensor (i.e., contact PPG) and the skin [61]. Non-contact data acquisition is possible with various devices, including infrared [62], thermal [63], and RGB [64] cameras. Because rPPG uses band-pass filters to eliminate motion components from images [65], it is less suited to cardiovascular activity that manifests as motion itself, referred to as ballistocardiographic motion [66]. rBCG measures ballistocardiographic head movements remotely using a camera and vision-based analysis. These vision technologies have improved considerably in recent years, enabling the heartbeat signals of both the viewer and the person in the digital content to be estimated without skin contact.

Specifically, BCG motion causes microscopic vibrations (i.e., micro-movements), which appear in the face through the carotid artery [67]. Micro-movement refers to subtle facial movement that the human eye cannot easily see; it is caused by the regular vibrations of the heartbeat transmitted to the face. Micro-movements can be obtained from frontal facial video by filtering the frequency band corresponding to the normal heart rate [68,69,70,71]. Analyzing the similarity of micro-movements between viewers and digital content (e.g., advertisements) may reveal whether the viewer is empathizing with the content. We analyzed the similarity of micro-movements through cross-entropy analysis and compared it with the participants’ subjective empathy as measured by a questionnaire. To our knowledge, no study has used an rBCG method to investigate the relationship between the micro-movements of a participant and those of a person in real-world media content, such as an advertisement.

2. Materials and Methods

2.1. Research Hypothesis

This study sought to verify the following hypothesis:

Hypothesis 1 (H1).

The more similar the micro-movements between the participant and the person in the advertisement, the higher the participant’s empathy scores for the advertisement.

The following section explains our operational definition of micro-movement signals, how the signals were measured from the participant and the advertisement, and how the participant’s subjective assessment of empathy was acquired.

2.2. Experimental Design

The main experiment used a one-factor design (empathy) with two levels (empathy and non-empathy). Each participant was exposed to both conditions (i.e., within-subject design), presented as empathy or non-empathy advertisements, and responded to an empathy questionnaire. The design of the stimuli (i.e., advertisements) and the questionnaire is explained in Section 2.4.

The dependent measure was the similarity of micro-movements between the participant and the stimulus, specifically, the similarity between the micro-movement signals extracted from the participant and those extracted from the person in the advertisement. Cross-entropy was used as the similarity metric because it is suitable for comparing periodic distributions: the more similar the two distributions, the lower the cross-entropy [72]. This study extracted the micro-movement signals by filtering the power spectrum between 0.75 Hz and 2.5 Hz, corresponding to 45–150 bpm at rest. However, this filtering range may vary with context, situation, and use case. The details of the analysis are explained in Section 3.
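The cross-entropy comparison can be illustrated with a minimal Python sketch. It assumes (our assumption; the exact formula and normalization behind [72] are not given here) that each micro-movement signal is converted into a probability distribution by normalizing its power spectrum within the 0.75–2.5 Hz band, and that cross-entropy is H(p, q) = −Σ p_k log q_k. The function names and the synthetic signals are hypothetical, and the absolute values will not match those reported in Section 4.

```python
import numpy as np

def band_power_distribution(signal, fs, f_lo=0.75, f_hi=2.5):
    """Normalized power spectrum of `signal` within [f_lo, f_hi] Hz (sums to 1)."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                          # remove DC offset
    power = np.abs(np.fft.rfft(x)) ** 2       # one-sided power spectrum
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    band = (freqs >= f_lo) & (freqs <= f_hi)  # 45-150 bpm heart-rate band
    p = power[band]
    return freqs[band], p / p.sum()

def cross_entropy(p, q, eps=1e-12):
    """H(p, q) = -sum_k p_k * log(q_k); eps guards against log(0)."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    return float(-np.sum(p * np.log(q + eps)))

# Hypothetical signals: 30 s at 30 fps (900 samples).
fs = 30.0
t = np.arange(900) / fs
rng = np.random.default_rng(0)
viewer = np.sin(2 * np.pi * 1.2 * t) + 0.3 * rng.standard_normal(t.size)
actor_same = np.sin(2 * np.pi * 1.2 * t) + 0.3 * rng.standard_normal(t.size)
actor_diff = np.sin(2 * np.pi * 1.8 * t) + 0.3 * rng.standard_normal(t.size)

_, p = band_power_distribution(viewer, fs)
_, q_same = band_power_distribution(actor_same, fs)
_, q_diff = band_power_distribution(actor_diff, fs)
ce_same = cross_entropy(p, q_same)   # viewer vs. actor with the same rhythm
ce_diff = cross_entropy(p, q_diff)   # viewer vs. actor with a different rhythm
print(f"matched: {ce_same:.3f}  mismatched: {ce_diff:.3f}")
```

Because H(p, q) = H(p) + D_KL(p || q), cross-entropy is minimized when q equals p, where it equals the entropy H(p) rather than necessarily zero; subtracting H(p) yields the KL divergence, which is exactly zero for identical spectra.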

2.3. Participants

Thirty participants (15 males and 15 females) voluntarily took part in the experiment. Their mean age was 22 (±2) years. None had a medical history of cardiovascular disease. All participants had uncorrected or corrected visual acuity of 0.6 or better; soft contact lenses were permitted, but glasses were not. Written informed consent was obtained from all participants prior to the experiment. All participants were compensated for their participation.

Empathy varies with demographic characteristics, such as age [28], race [73], education [74], and gender [75]. Researchers have suggested an inverted-U-shaped pattern as a function of age, with middle-aged adults showing higher empathy than young adults [28]. Meta-analyses of gender differences in empathy indicate that women show more empathy than men [28,75,76]. One study reported a decline in empathy among undergraduate nursing students as they advanced through training [74]. The empathic neural response is increased for members of one’s own race, but not for members of other races [73]. Because of such demographic variance, the most recent (2021) large-scale survey (n = 3486) on the experience of empathy [77] used quota sampling to reflect the U.S. population on demographic parameters. However, all empirical lab studies on empathy, including ours, are limited in generalizability. We balanced gender (15 participants each), confirmed that gender had no effect on the dependent measures, and ensured that the ethnicity of the participants (i.e., Korean) matched that of the characters in the video stimuli. Nevertheless, the findings may apply only to younger adults, and further studies are needed to confirm whether they generalize to other age groups.

2.4. Procedures and Materials

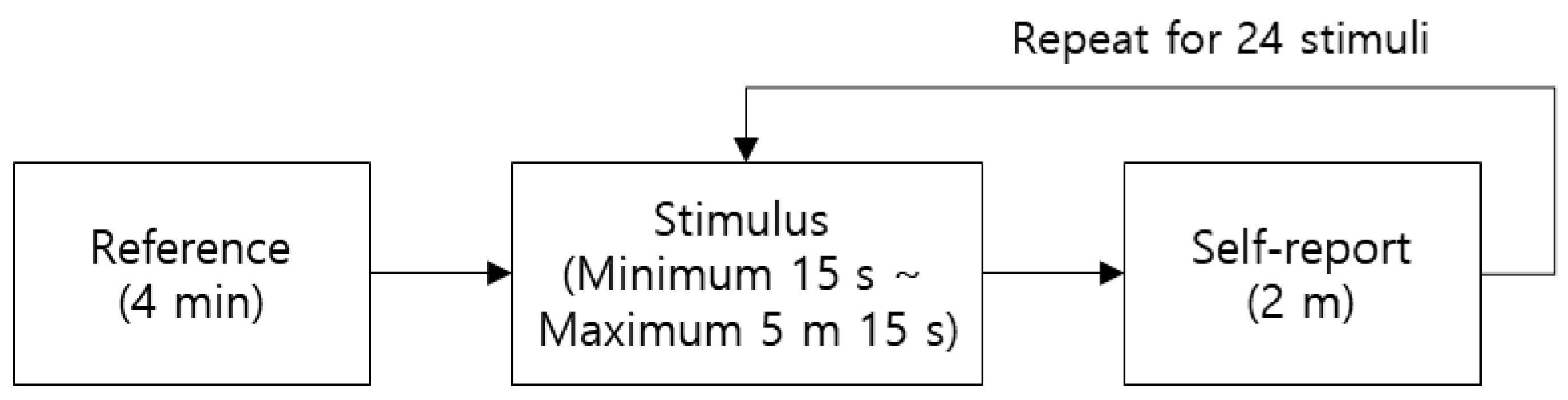

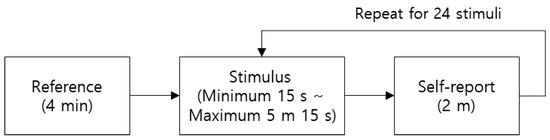

The experimental procedure is shown in Figure 1. The participants looked at a blank screen for four minutes to stabilize their physiological state. For each stimulus, participants viewed an advertisement video and responded to a self-report questionnaire. Each condition (empathy and non-empathy) had 12 stimuli, so participants viewed 24 advertisements in total. The stimuli were presented in random order.

Figure 1.

Experimental procedure.

Participants’ frontal views, which were necessary for extracting the micro-movement signals, were recorded at 30 fps, 1920 × 1080 pixels, using a web camera installed on the monitor while they viewed the stimuli, as shown in Figure 2.

Figure 2.

Experimental environment.

2.4.1. Video Stimuli (Advertisements)

Marketing researchers have explored empathy as a construct for estimating advertising effects. Escalas and Stern suggested that well-developed stories elicit higher levels of empathy than poorly developed ones [15]. Classical drama advertisements that have clear causality have been better able to hook viewers into commercials than vignettes. Emotionally driven advertisements have a positive impact on consumers’ engagement and empathy [8,78,79]. In short, advertisements that elicit viewers’ empathy tend to provide a clear context behind the story, in addition to an emotional appeal [14,79,80]. As a result, we chose three criteria for selecting the video stimuli: (1) causality of the storyline, (2) advertising appeal type, and (3) the degree of empathy.

Nine emotion researchers viewed and assessed 50 candidate advertisements. The candidates were limited to those targeting people in their 20s and 30s, consistent with the participant pool. For each criterion, the researchers rated each candidate from −3 to +3 on a six-point Likert scale. For criterion 1, they scored the story of the advertisement from −3 (ambiguous causality) to +3 (clear causality). For criterion 2, they scored the advertising appeal type from −3 (rational appeal) to +3 (emotional appeal). Finally, for criterion 3, they scored the advertisement from −3 (not empathetic) to +3 (empathetic).

We classified a candidate as an empathy advertisement if the evaluators’ average score was above zero for all three criteria and as a non-empathy advertisement if the average score was below zero. Within each advertisement group (empathy and non-empathy), we sorted the advertisements into four product groups, including energy boosters, snacks, and computer peripheral devices (e.g., printers), and selected the three best advertisements for each product group. That is, we selected 12 advertisements for each condition (empathy and non-empathy).
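The selection rule above can be sketched in Python. This is illustrative only: the criterion keys are hypothetical, applying the below-zero rule to all three criteria for non-empathy advertisements is our assumption, and because the text does not say how the “three best” advertisements were ranked, the sketch assumes ranking by the mean score on criterion 3 (empathy).

```python
from collections import defaultdict
from statistics import mean

CRITERIA = ("causality", "appeal", "empathy")  # hypothetical keys for criteria 1-3

def classify_ad(scores):
    """scores: dict criterion -> list of evaluator ratings (-3..+3)."""
    means = [mean(scores[c]) for c in CRITERIA]
    if all(m > 0 for m in means):
        return "empathy"
    if all(m < 0 for m in means):   # assumption: below zero on all criteria
        return "non-empathy"
    return None                     # mixed profile: not used as a stimulus

def pick_best_ads(ads, condition, k=3):
    """ads: list of (ad_id, product_group, scores). Keeps the k most extreme ads
    per product group on the empathy criterion (hypothetical ranking rule)."""
    groups = defaultdict(list)
    for ad_id, group, scores in ads:
        if classify_ad(scores) == condition:
            groups[group].append((mean(scores["empathy"]), ad_id))
    chosen = {}
    for group, items in groups.items():
        items.sort(reverse=(condition == "empathy"))  # highest first for empathy ads
        chosen[group] = [ad_id for _, ad_id in items[:k]]
    return chosen

# Hypothetical ratings from two evaluators.
ads = [
    ("A1", "snacks", {"causality": [2, 3], "appeal": [2, 1], "empathy": [3, 2]}),
    ("A2", "snacks", {"causality": [1, 1], "appeal": [1, 2], "empathy": [1, 1]}),
    ("A3", "snacks", {"causality": [-2, -1], "appeal": [-3, -2], "empathy": [-2, -2]}),
]
print(pick_best_ads(ads, "empathy", k=1))  # {'snacks': ['A1']}
```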

Empathy advertisements tend to be longer than non-empathy advertisements because viewers need time for the narrative to “sink in”. In contrast, non-empathy advertisements focus on presenting prominent models and products. For example, the empathy advertisement for an energy booster tells the story of a student, exhausted from studying, who is revitalized after drinking an energy drink. The non-empathy advertisement, by contrast, features a character dancing with an energy drink and has no particular narrative.

2.4.2. Subjective Evaluations

As empathy is a multifaceted construct that includes both cognitive and affective processes, we adopted a comprehensive questionnaire that has been empirically validated with participants of the same ethnicity (i.e., Korean). We used the Consumer Empathic Response to Advertising Scale [81,82], which consists of 11 items, as shown in Table 1. Its factor loadings exceeded 0.4, and its Cronbach’s alpha exceeded 0.8. The questionnaire covers three empathy factors: cognitive empathy, affective empathy, and identification empathy. The dependent variable for analysis was the sum of all 11 items.

Table 1.

Questionnaire on Empathy toward Video Content.

All questions were rated on a seven-point Likert scale. Participants indicated their degree of agreement with each empathy statement, from “strongly disagree” (lowest) to “strongly agree” (highest). Responses were collected through a web survey rather than a paper questionnaire.
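The reliability statistic cited for the questionnaire, Cronbach’s alpha, can be computed as α = k/(k − 1) · (1 − Σσ²_item / σ²_total), where k is the number of items and σ²_total is the variance of each respondent’s summed score. The short Python sketch below illustrates the formula on a tiny hypothetical matrix; it is not the validation data of [81,82].

```python
import numpy as np

def cronbach_alpha(items):
    """Cronbach's alpha for a (respondents x items) score matrix:
    alpha = k/(k-1) * (1 - sum of item variances / variance of total score)."""
    x = np.asarray(items, dtype=float)
    k = x.shape[1]
    item_var = x.var(axis=0, ddof=1).sum()     # sum of per-item sample variances
    total_var = x.sum(axis=1).var(ddof=1)      # variance of summed scores
    return k / (k - 1) * (1.0 - item_var / total_var)

# Tiny worked example: 3 respondents, 2 items.
example = [[1, 2], [2, 1], [3, 3]]
print(round(cronbach_alpha(example), 4))  # 0.6667
```

Here each item has variance 1 and the summed score has variance 3, so α = 2 · (1 − 2/3) = 2/3; perfectly redundant items would give α = 1.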

3. Analysis

This study aimed to analyze whether the similarity of micro-movement signals between participants and advertisements differs according to the participant’s perceived empathy (i.e., subjective evaluation) with the advertisement video. Section 3.1 describes in detail the signal processing used to isolate the micro-movements caused by the heartbeat. In addition, a method for calculating the cross-entropy, an indicator of similarity between the two signals, is described. Section 3.2 describes the statistical analysis of how the participant–advertisement similarity measured by cross-entropy differs according to the empathy score.

3.1. Signal Processing

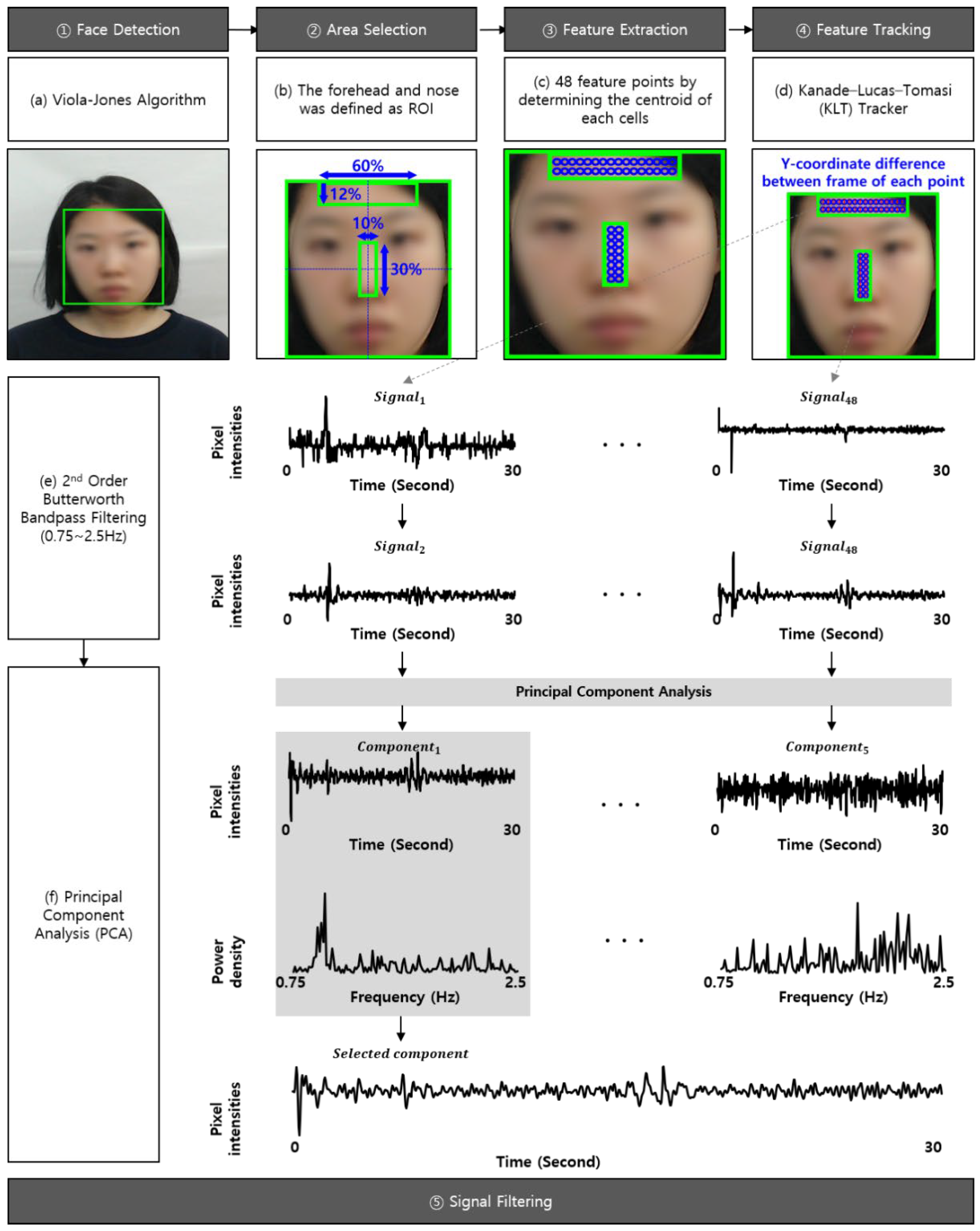

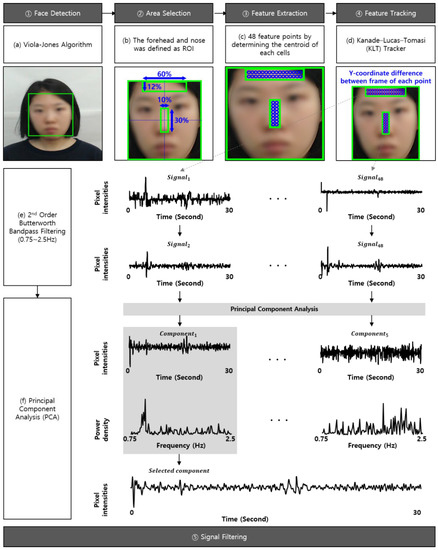

The micro-movement signals were measured from the participants’ facial videos, as shown in Figure 3. Ballistocardiographic changes are reflected in the face and can be measured at a distance, as validated by Balakrishnan [68]. The face was detected in the facial video using the Viola-Jones face detector and defined as the region of interest (ROI). Because the forehead and nose are more robust to facial expressions than other facial regions, the ROI was divided into two sub-ROIs: a forehead region, cropped to the middle 60% of the width and the top 12% of the height, and a nose region, cropped to the middle 10% of the width and the middle 30% of the height.

Figure 3.

Signal processing of the micro-movements [71]. (a) Face detection using the Viola-Jones algorithm; (b) Area selection using the forehead and nose defined as ROIs; (c) Feature extraction using the GFTT algorithm; (d) Feature tracking using the KLT tracker; (e) Band-pass filtering of signals in a 30 s window buffer using a second-order Butterworth filter; (f) Decomposition of noise using PCA.

Feature points had to be determined within the ROIs to measure the movements induced by BCG. Although several remote BCG studies employed the good-features-to-track (GFTT) algorithm [83,84], the number of feature points was not fixed in those studies because the algorithm selects points based on strong corner (edge) components. The GFTT algorithm was difficult to employ in this study because the feature points would have had to be re-determined quickly owing to frequent changes in the screen and face movement.

Instead, the forehead and nose ROIs were divided into cells using 16 × 2 and 2 × 8 grids, respectively, and the centroid of each cell was used as a feature point, yielding 48 feature points (32 on the forehead and 16 on the nose). Movements were measured by tracking the frame-to-frame y-coordinate difference of each feature point with the Kanade-Lucas-Tomasi (KLT) tracker, because the heartbeat generates BCG movements in the vertical direction [85,86,87].
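The ROI geometry and fixed-grid feature points described above can be sketched as follows. This is a hypothetical illustration: the face box is assumed to come from a Viola-Jones detector as (x, y, width, height), “16 × 2” is read as 16 columns by 2 rows and “2 × 8” as 2 columns by 8 rows (our interpretation), and the KLT step itself is not shown. In practice, a pyramidal Lucas-Kanade tracker such as OpenCV’s `cv2.calcOpticalFlowPyrLK` could supply the per-frame positions passed to `vertical_motion`.

```python
import numpy as np

def crop(face, x0, x1, y0, y1):
    """Sub-rectangle of face box (x, y, w, h) spanning fractions [x0,x1] x [y0,y1]."""
    fx, fy, fw, fh = face
    return (fx + x0 * fw, fy + y0 * fh, (x1 - x0) * fw, (y1 - y0) * fh)

def grid_centroids(roi, cols, rows):
    """Centroids of a cols x rows grid laid over roi, listed row by row."""
    x, y, w, h = roi
    cw, ch = w / cols, h / rows
    return [(x + (c + 0.5) * cw, y + (r + 0.5) * ch)
            for r in range(rows) for c in range(cols)]

def feature_points(face):
    """48 fixed points: 16x2 grid on the forehead plus 2x8 grid on the nose."""
    forehead = crop(face, 0.20, 0.80, 0.00, 0.12)  # middle 60% width, top 12% height
    nose = crop(face, 0.45, 0.55, 0.35, 0.65)      # middle 10% width, middle 30% height
    return np.array(grid_centroids(forehead, 16, 2) + grid_centroids(nose, 2, 8))

def vertical_motion(tracks):
    """tracks: (n_frames, n_points, 2) tracked (x, y) positions.
    Returns frame-to-frame y-coordinate differences, shape (n_frames - 1, n_points)."""
    return np.diff(np.asarray(tracks, dtype=float)[:, :, 1], axis=0)

pts = feature_points((100, 50, 200, 240))  # hypothetical face box in pixels
print(pts.shape)  # (48, 2)
```

Fixing the points to grid centroids keeps the number of tracked points constant (48) from frame to frame, which is the property the text contrasts with GFTT.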

The movements measured from the face combine facial expressions, voluntary head movements, and micro-movements. Therefore, motion artifacts due to facial expressions and voluntary head movements must be removed from the measured movements. First, the movements were filtered by a second-order Butterworth band-pass filter with cut-off frequencies of 0.75–2.5 Hz, corresponding to 45–150 bpm. The movements were then normalized to z-scores using their mean (μ) and standard deviation (σ). Values outside μ ± 2σ were regarded as noise, because genuine micro-movements are subtle, and were corrected to the mean value (μ). Finally, principal component analysis (PCA) was performed to estimate the micro-movement from the mixed movements by separating out the noise from facial expressions and voluntary head movements. Five components were extracted using PCA, and the component with the highest peak in its power spectrum, computed with a fast Fourier transform, was selected as the micro-movement signal.
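The filtering, outlier correction, and PCA steps above can be sketched in Python with NumPy and SciPy. This is a minimal sketch under stated assumptions, not the authors’ implementation: zero-phase filtering with `scipy.signal.filtfilt` is our choice (the text does not say whether causal or zero-phase filtering was used), out-of-range samples are replaced by the mean (0 after z-scoring) as our reading of “corrected to the mean value”, and PCA is done via an SVD. The input is one window (e.g., 30 s) of per-point vertical displacements.

```python
import numpy as np
from scipy.signal import butter, filtfilt

def extract_micro_movement(dy, fs=30.0, f_lo=0.75, f_hi=2.5, n_components=5):
    """dy: (n_frames, n_points) vertical displacements for one window.
    Returns the single PCA component taken as the micro-movement signal."""
    # 1) Second-order Butterworth band-pass, 0.75-2.5 Hz (45-150 bpm).
    #    Zero-phase filtfilt is an assumption; the source does not specify.
    b, a = butter(2, [f_lo, f_hi], btype="bandpass", fs=fs)
    x = filtfilt(b, a, np.asarray(dy, dtype=float), axis=0)
    # 2) z-score each feature-point trajectory.
    x = (x - x.mean(axis=0)) / x.std(axis=0)
    # 3) Samples outside mu +/- 2 sigma are treated as noise and set to the
    #    mean, which is 0 after z-scoring (our reading of the text).
    x[np.abs(x) > 2.0] = 0.0
    # 4) PCA via SVD of the column-centred data; keep n_components scores.
    xc = x - x.mean(axis=0)
    _, _, vt = np.linalg.svd(xc, full_matrices=False)
    scores = xc @ vt[:n_components].T
    # 5) Select the component whose FFT power spectrum has the highest peak.
    power = np.abs(np.fft.rfft(scores, axis=0)) ** 2
    best = int(np.argmax(power.max(axis=0)))
    return scores[:, best]

# Synthetic check (hypothetical data): 48 points sharing a 1.2 Hz "pulse",
# plus a slow 0.1 Hz sway and independent noise, 30 s at 30 fps.
fs = 30.0
t = np.arange(900) / fs
rng = np.random.default_rng(1)
pulse = 0.5 * np.sin(2 * np.pi * 1.2 * t)[:, None]
sway = 1.0 * np.sin(2 * np.pi * 0.1 * t)[:, None]
dy = pulse + sway + 0.2 * rng.standard_normal((t.size, 48))
mm = extract_micro_movement(dy, fs)
freqs = np.fft.rfftfreq(mm.size, d=1 / fs)
peak = freqs[np.argmax(np.abs(np.fft.rfft(mm)) ** 2)]
print(peak)  # expected near 1.2 Hz, the simulated pulse rate
```

The band-pass removes the slow sway, and because the pulse is shared by all 48 points, it concentrates in one principal component whose spectral peak dominates the selection.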

3.2. Statistical Analysis

As empathy is an individualized experience, each stimulus affects each participant differently. Individual responses are affected by factors such as empathy capability, predisposed tendencies, and past experience (for an extensive review of empathy as a concept, see [88]). The observer’s (i.e., the empathizer’s) mood and personality are also important modulating factors [89]. Such individual differences mean that, in our study, the empathy stimuli selected by the emotion experts did not necessarily elicit empathy from the participants. Therefore, we applied an inclusion criterion based on the participants’ subjective empathy scores to select the stimuli whose response sets were analyzed. For the empathy condition, we selected data from stimuli with an average score (i.e., the mean across all 30 participants) of four or higher; on the seven-point Likert scale, four is the midpoint, labeled “Neutral”. Conversely, for the non-empathy condition, we selected data from stimuli with an average score below four. This selection yielded response sets from four of the original 12 stimuli in the empathy condition and six of the original 12 stimuli in the non-empathy condition.
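The inclusion criterion above can be expressed as a small Python function. It is a sketch under the assumption that each condition’s ratings are held in a participants × stimuli matrix and that the function is applied separately to the empathy and non-empathy stimulus sets; the function name and the ratings below are hypothetical.

```python
import numpy as np

def include_stimuli(scores, condition, threshold=4.0):
    """scores: (n_participants, n_stimuli) empathy ratings on the 1-7 scale
    for one expert-assigned condition. Returns indices of stimuli kept."""
    stim_means = np.asarray(scores, dtype=float).mean(axis=0)  # mean over participants
    if condition == "empathy":
        keep = stim_means >= threshold   # at or above the "Neutral" midpoint
    else:
        keep = stim_means < threshold    # below the midpoint
    return np.flatnonzero(keep).tolist()

# Hypothetical ratings: 3 participants x 4 stimuli.
ratings = [[5, 3, 4, 2],
           [6, 4, 4, 3],
           [5, 2, 4, 4]]
print(include_stimuli(ratings, "empathy"))      # [0, 2]
print(include_stimuli(ratings, "non-empathy"))  # [1, 3]
```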

In short, we analyzed 60 samples (30 participants × two empathy conditions), each consisting of a subjective empathy score and cross-entropy data. A paired t-test was used to test H1.
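A paired t-test compares the within-participant differences d = a − b against zero with t = mean(d) / (sd(d) / √n) and n − 1 degrees of freedom, which gives the df of 29 for 30 participants. The Python sketch below assumes (the aggregation is not stated in the text) that each participant’s responses are averaged within a condition to form 30 pairs; the numbers are simulated, not the study’s data, and the hand-written statistic is checked against `scipy.stats.ttest_rel`.

```python
import numpy as np
from scipy import stats

def paired_t(a, b):
    """Paired t-test on matched samples: t = mean(d) / (sd(d) / sqrt(n)), d = a - b."""
    d = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    n = d.size
    t = d.mean() / (d.std(ddof=1) / np.sqrt(n))
    p = 2 * stats.t.sf(abs(t), df=n - 1)   # two-sided p-value, df = n - 1
    return float(t), float(p)

# Hypothetical per-participant condition means (NOT the study's data).
rng = np.random.default_rng(2)
non_empathy = rng.normal(3.3, 0.7, size=30)
empathy = non_empathy + rng.normal(1.8, 0.6, size=30)
t, p = paired_t(non_empathy, empathy)
res = stats.ttest_rel(non_empathy, empathy)  # SciPy reference implementation
print(t, res.statistic, p, res.pvalue)
```

The sign of t depends on argument order: passing the non-empathy values first yields a negative t when empathy values are higher, which would be consistent with the signs reported in Section 4 (negative for empathy scores, positive for cross-entropy).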

4. Results

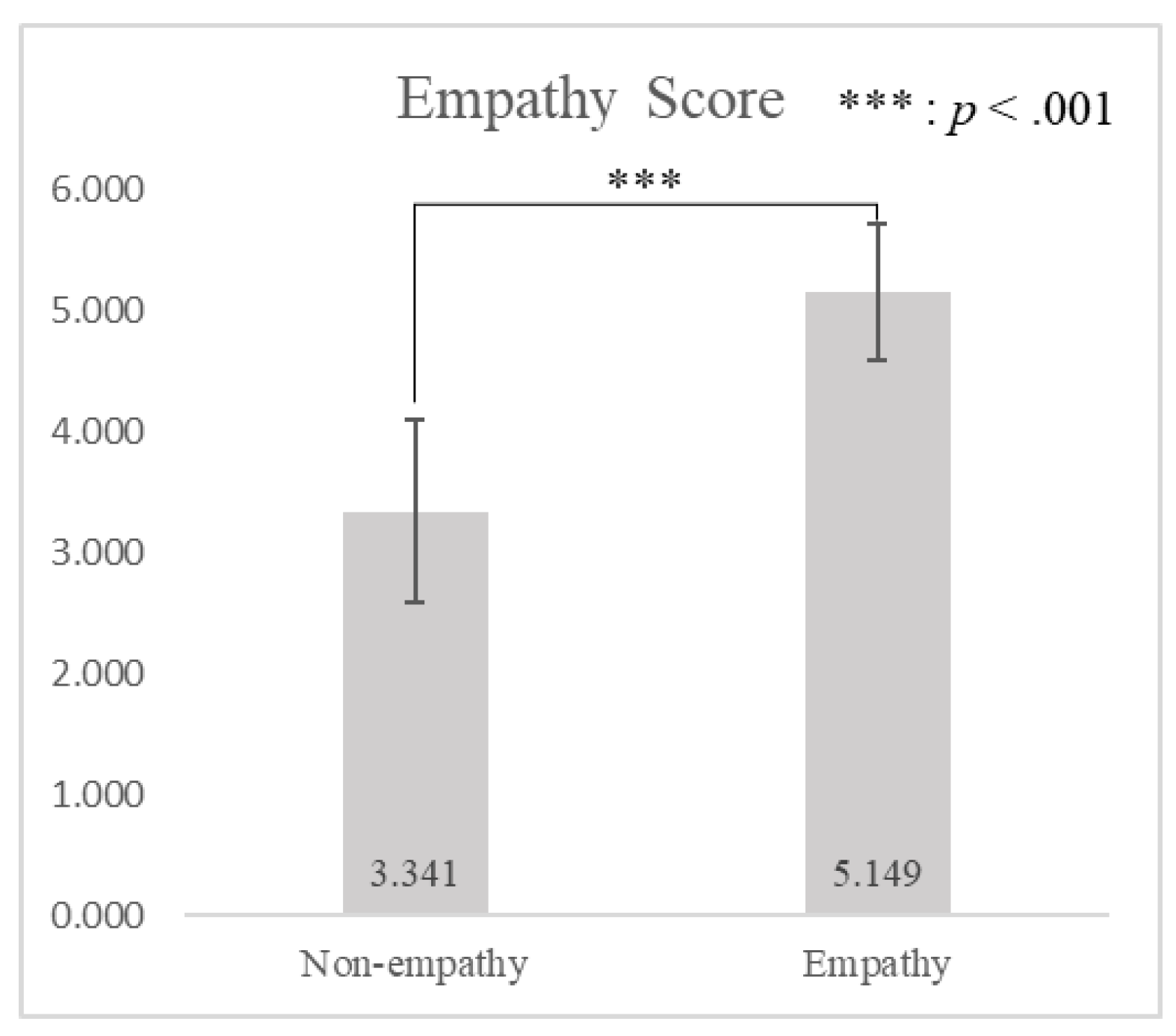

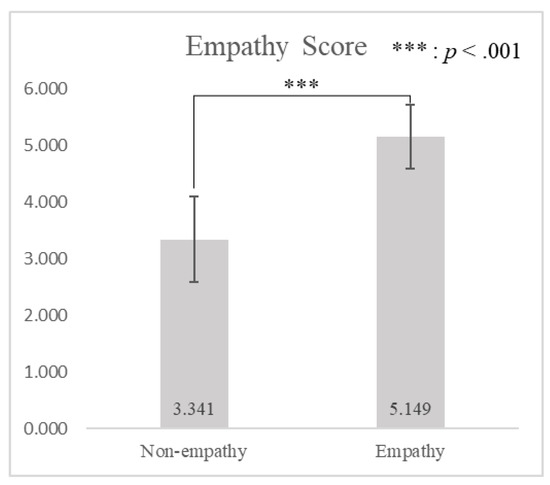

The study analyzed differences between the empathy and non-empathy conditions using paired t-tests. First, subjective empathy scores differed significantly between the empathy and non-empathy advertisements (t(29) = −11.754, p < 0.001), as shown in Figure 4. The subjective empathy score was significantly higher for empathy advertisements (μ = 5.149, σ = 0.564) than for non-empathy advertisements (μ = 3.341, σ = 0.759).

Figure 4.

A comparison of empathy scores for non-empathy and empathy advertisements by paired t-test.

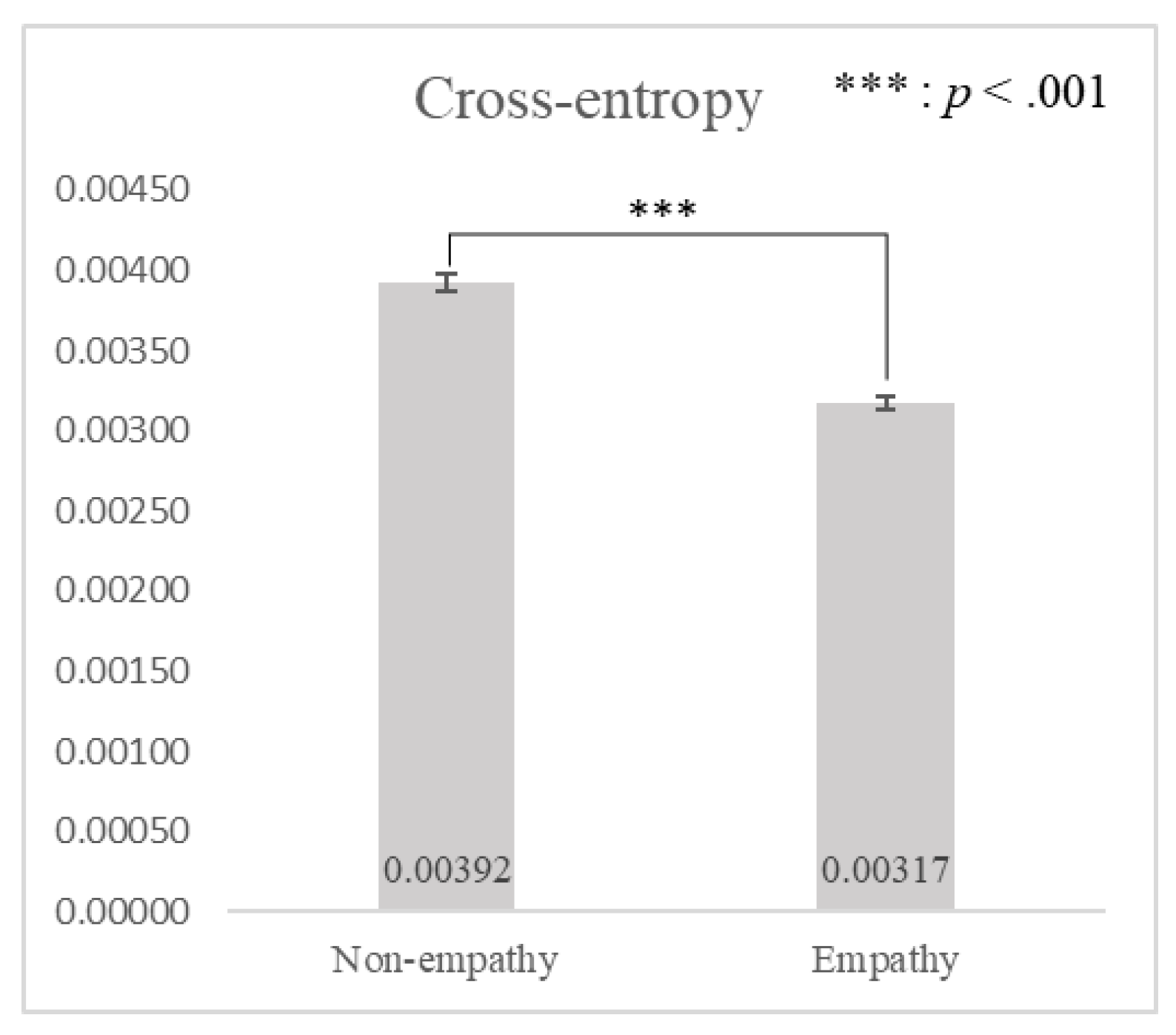

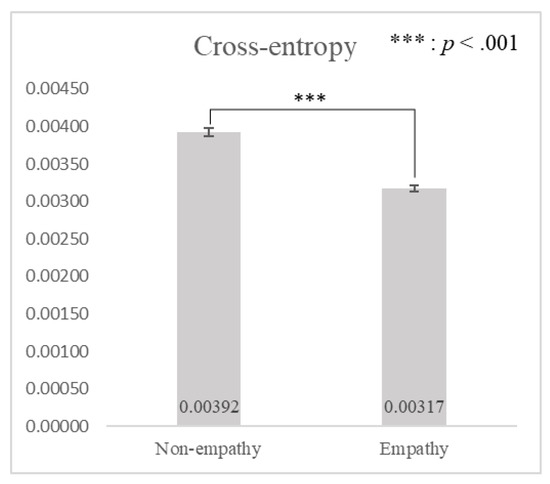

There was a statistically significant difference in cross-entropy between empathy and non-empathy advertisements (t(29) = 61.019, p < 0.001), as shown in Figure 5. As predicted, cross-entropy was significantly lower for empathy advertisements (μ = 0.00317, σ = 0.00005) than for non-empathy advertisements (μ = 0.00392, σ = 0.00005). This supports hypothesis H1, which stated that the more similar the micro-movements (i.e., the lower the cross-entropy) between the participant and the person in the advertisement, the higher the participant’s empathy scores for the advertisement (i.e., empathy advertisements).

Figure 5.

A comparison of cross-entropy between non-empathy and empathy advertisements by paired t-test.

Pearson correlation analysis showed that cross-entropy was also significantly associated with the empathy score (r = −0.796, p < 0.001), indicating an inverse relationship: the lower the cross-entropy, the higher the empathy score.
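Pearson’s r is the covariance of the two variables divided by the product of their standard deviations, and its significance can be tested with t = r√(n − 2) / √(1 − r²) on n − 2 degrees of freedom. The Python sketch below assumes the 60 (cross-entropy, empathy score) samples are pooled into one correlation (the text does not state how they were combined) and uses simulated data, not the study’s; `scipy.stats.pearsonr` serves as a cross-check.

```python
import numpy as np
from scipy import stats

def pearson_r(x, y):
    """Pearson correlation: covariance of x and y over the product of their SDs."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc, yc = x - x.mean(), y - y.mean()
    return float(xc @ yc / np.sqrt((xc @ xc) * (yc @ yc)))

def r_to_t(r, n):
    """t statistic (df = n - 2) for testing H0: rho = 0."""
    return r * np.sqrt(n - 2) / np.sqrt(1 - r ** 2)

# Hypothetical 60 (cross-entropy, empathy score) pairs; illustrative only.
rng = np.random.default_rng(3)
ce = rng.normal(0.0035, 0.0004, size=60)
score = 8.0 - 900.0 * ce + rng.normal(0, 0.3, size=60)
r = pearson_r(ce, score)
r_ref, p_ref = stats.pearsonr(ce, score)
print(round(r, 3), round(float(r_ref), 3), r_to_t(r, 60))
```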

5. Discussion

In summary, participants in our study viewed advertisements that experts had classified as empathy or non-empathy advertisements. We recorded their facial video during each viewing and obtained their subjective empathy scores afterward. The cross-entropy between the micro-movements extracted from the participant’s face and those extracted from the face of the person in the advertisement was significantly lower when participants viewed empathy advertisements than when they viewed non-empathy advertisements.

To the best of our knowledge, this is the first study to apply remote BCG methods to empathy-based micro-movement synchronization in a real-world use case (i.e., viewing an advertisement). Our results showed that the higher the similarity of micro-movements between the participants and the advertisements, the higher the subjective empathy. These results support remote BCG methods, combined with the accompanying analysis (e.g., cross-entropy analysis), as an alternative or complement to subjective empathy scales.

Our findings also have implications for understanding the empathic interactions of human dyads. In human communication, information is shared through natural language (i.e., explicit channels), whereas empathy is mainly shared through embodied synchrony (i.e., implicit channels). Such synchronization is widely observed in human communication and is reflected in the harmonization of heart rhythms; in other words, a person’s heartbeat tends to follow the rhythm of the person with whom they empathize. This mutual entrainment has been defined as the process by which two interacting nonlinear oscillating systems with different periods come to share a common period [90]. Although it is challenging, advances in technology enable us to capture heartbeat traces transmitted through the carotid artery and reflected in facial micro-movements. Our study confirmed that these microscopic vibrations are a valid indicator of dyadic empathic synchronization in an ecologically valid scenario.

Previous studies that measured empathy based on unconscious physiological responses [41,43] also found that the correlation between the heartbeat patterns of two people was higher in the empathy condition than in the non-empathy condition. They measured heart rate patterns with ECG sensors attached to the participants’ skin. Their empathy-eliciting tasks were overly simplified (e.g., facing each other) and concerned only momentary emotions, which limits generalization. Although such tasks can effectively elicit a definite empathic response, they do not account for emotional dynamics.

In addition, few applications have measured empathy for digital content because of the obtrusiveness of measurement and the dynamic nature of empathy. This study proposes a practical method for measuring empathy that addresses the problem of contact-based measurement obstructing users’ immersion in the content.

The hypothesis of the present study was tested under experimental conditions using product advertisements. We acknowledge that the durations of the stimuli differed considerably and that the stimuli were limited to product commercials. However, the differences in duration should not have substantially affected the similarity, because the similarity between the two signals was analyzed in the frequency domain; that is, the periodicity of the two signals was compared, so the length of the signal had little effect. Even if duration had an effect, it would have worked against our hypothesis: for the longer empathy stimuli to appear similar, the two signals had to vibrate at a similar frequency over a longer period than for the shorter non-empathy stimuli.

This study suggests an application framework for evaluating empathy in interaction (e.g., viewing) with digital content. As our suggested method is non-contact and unobtrusive to real-life behavior (e.g., consuming media), future research agendas seem promising. Specifically, future studies may investigate content in other media domains (e.g., movies, TV shows, video games).

However, we acknowledge that our sample (N = 30) is small and that a larger N would provide more adequate statistical power. We therefore conducted a post hoc power analysis with G*Power [91] (power = 0.8, α = 0.05, d = 0.5, two-tailed). The results suggest that an N of approximately 34 would be needed to achieve adequate statistical power.
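The sample-size figure can be reproduced approximately with the noncentral t distribution. The Python sketch below assumes the analysis corresponds to G*Power’s matched-pairs t-test, in which d is interpreted as d_z (the standardized mean of the paired differences); under that assumption, the search for the smallest N reaching 80% power should land near the value of about 34 reported above. The function names are hypothetical.

```python
import numpy as np
from scipy import stats

def paired_power(n, d=0.5, alpha=0.05):
    """Two-tailed power of a paired t-test with n pairs and effect size d (d_z)."""
    df = n - 1
    ncp = d * np.sqrt(n)                     # noncentrality parameter
    t_crit = stats.t.ppf(1 - alpha / 2, df)  # two-tailed critical value
    # Probability that the noncentral t falls in either rejection region.
    return stats.nct.sf(t_crit, df, ncp) + stats.nct.cdf(-t_crit, df, ncp)

def required_n(d=0.5, alpha=0.05, power=0.8):
    """Smallest number of pairs whose power reaches the target."""
    n = 2
    while paired_power(n, d, alpha) < power:
        n += 1
    return n

n_needed = required_n()
print(n_needed, round(paired_power(30), 3), round(paired_power(n_needed), 3))
```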

Empathy is a multifaceted social psychological construct affected by many factors, such as the relationship and history between the observer (i.e., empathizer) and the observed. Such social relationships are shaped by intimacy, and favorability also comes into play. As empathy depends on context and task [89,92], our study has an inherent limitation in generalization.

We also acknowledge that empathic expression results from a combination of many nonverbal modalities (e.g., voice, facial expression, posture). We focused on a single modality, the facial movements driven by the involuntary heartbeat, because the other modalities could be confounded by noise. Moreover, there may be a gap between the emotion the actor actually felt and the physiological measurement we acquired. Such a gap could be examined by combining expressive measures (facial muscle movement, gestures) and implicit measures (heart rate, GSR). Future studies may investigate multimodal recognition of empathy in addition to facial micro-movements.

We strove to isolate the signals representing empathy from the rest of the signal spectrum by instructing participants not to move and to refrain from exaggerated facial expressions. We did not include anyone likely to make significant movements that would confound our results, such as individuals with Tourette syndrome or bruxism.

Privacy issues arising from the identification of individuals can be crucial in research on prosocial behaviors. However, the proposed method of recognizing empathy can enhance privacy by not saving personally identifiable data (i.e., the original video recordings) in the database. By analyzing video frames in real time without recording face images, only the processed secondary data (i.e., micro-movement signals) need to be saved. The synchronization can then be analyzed using only a key (e.g., a random number) that matches an advertisement to a viewer. Because the micro-movement features can hardly be restored to the original facial image, identifying individuals from these data is practically impossible.

Author Contributions

A.C. designed the study based on an investigation of previous studies, performed the experiments, and prepared the original draft; S.P. reviewed and edited the manuscript and investigated previous studies; H.L. analyzed the raw data and developed the micro-movement algorithm; M.W. conceived the study and was in charge of overall direction and planning. All authors have read and agreed to the published version of the manuscript.

Funding

“This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (NRF-2020R1A2B5B02002770)” and “This work was supported by Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (NRF-2021R1I1A1A01045641)”.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Institutional Review Board of Sangmyung University, Seoul, Korea (SMUIRB C-2019-015).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zillmann, D. Empathy: Affective reactivity to others’ emotional experiences. Psychol. Entertain. 2006, 151–181.

- Hoffman, M.L. Interaction of affect and cognition in empathy. Emot. Cogn. Behav. 1984, 103–131.

- Kerem, E.; Fishman, N.; Josselson, R. The Experience of Empathy in Everyday Relationships: Cognitive and Affective Elements. J. Soc. Pers. Relatsh. 2001, 18, 709–729.

- Davis, M.H. Empathy. In Handbook of the Sociology of Emotions; Springer: Boston, MA, USA, 2006; pp. 443–466.

- Busselle, R.; Bilandzic, H. Measuring Narrative Engagement. Media Psychol. 2009, 12, 321–347.

- Eisenberg, N. Empathy and Sympathy: A Brief Review of the Concepts and Empirical Literature. Anthrozoös 1988, 2, 15–17.

- Galimberti, C.; Gaggioli, A.; Brivio, E.; Caroli, F.; Chirico, A.; Rampinini, L.; Trognon, A.; Vergine, I. Transformative Conversations. Questioning collaboration in digitally mediated interactions. Annu. Rev. Cyberther. Telemed. 2020, 18, 77–80.

- Teixeira, T.; Wedel, M.; Pieters, R. Emotion-Induced Engagement in Internet Video Advertisements. J. Mark. Res. 2012, 49, 144–159.

- Batra, R.; Ray, M.L. Affective Responses Mediating Acceptance of Advertising. J. Consum. Res. 1986, 13, 234–249.

- Howard, D.J.; Gengler, C. Emotional Contagion Effects on Product Attitudes. J. Consum. Res. 2001, 28, 189–201.

- Belanche, D.; Flavián, C.; Rueda, A.P. Understanding Interactive Online Advertising: Congruence and Product Involvement in Highly and Lowly Arousing, Skippable Video Ads. J. Interact. Mark. 2017, 37, 75–88.

- Jeon, Y.A. Skip or Not to Skip: Impact of Empathy and Ad Length on Viewers’ Ad-Skipping Behaviors on the Internet. In International Conference on Human-Computer Interaction; Springer: Cham, Switzerland, 2018; pp. 261–265.

- Adelaar, T.; Chang, S.; Lancendorfer, K.M.; Lee, B.; Morimoto, M. Effects of Media Formats on Emotions and Impulse Buying Intent. J. Inf. Technol. 2003, 18, 247–266.

- Deighton, J.; Romer, D.; McQueen, J. Using Drama to Persuade. J. Consum. Res. 1989, 16, 335–343.

- Escalas, J.E.; Stern, B.B. Sympathy and Empathy: Emotional Responses to Advertising Dramas. J. Consum. Res. 2003, 29, 566–578.

- Steyn, P.; Pitt, L.; Chakrabarti, R. Financial services ads and viewer response profiles: Psychometric properties of a shortened scale. J. Financ. Serv. Mark. 2011, 16, 210–219.

- Stout, P.A.; Rust, R.T. Emotional Feelings and Evaluative Dimensions of Advertising: Are They Related? J. Advert. 1993, 22, 61–71.

- Hyun, S.S.; Kim, W.; Lee, M.J. The impact of advertising on patrons’ emotional responses, perceived value, and behavioral intentions in the chain restaurant industry: The moderating role of advertising-induced arousal. Int. J. Hosp. Manag. 2011, 30, 689–700.

- Balconi, M.; Canavesio, Y. Emotional contagion and trait empathy in prosocial behavior in young people: The contribution of autonomic (facial feedback) and Balanced Emotional Empathy Scale (BEES) measures. J. Clin. Exp. Neuropsychol. 2013, 35, 41–48.

- Lawrence, E.J.; Shaw, P.; Baker, D.; Baron-Cohen, S.; David, A.S. Measuring empathy: Reliability and validity of the Empathy Quotient. Psychol. Med. 2004, 34, 911–920.

- Spreng, R.N.; McKinnon, M.C.; Mar, R.A.; Levine, B. The Toronto Empathy Questionnaire: Scale Development and Initial Validation of a Factor-Analytic Solution to Multiple Empathy Measures. J. Pers. Assess. 2009, 91, 62–71.

- Jabbi, M.; Swart, M.; Keysers, C. Empathy for positive and negative emotions in the gustatory cortex. NeuroImage 2007, 34, 1744–1753.

- De Corte, K.; Buysse, A.; Verhofstadt, L.L.; Roeyers, H.; Ponnet, K.; Davis, M.H. Measuring Empathic Tendencies: Reliability and Validity of the Dutch Version of the Interpersonal Reactivity Index. Psychol. Belg. 2007, 47, 235–260.

- Albiero, P.; Matricardi, G.; Speltri, D.; Toso, D. The assessment of empathy in adolescence: A contribution to the Italian validation of the “Basic Empathy Scale”. J. Adolesc. 2009, 32, 393–408.

- Hogan, R. Development of an empathy scale. J. Consult. Clin. Psychol. 1969, 33, 307–316.

- Singer, T.; Klimecki, O.M. Empathy and compassion. Curr. Biol. 2014, 24, R875–R878.

- Pfeifer, J.H.; Iacoboni, M.; Mazziotta, J.C.; Dapretto, M. Mirroring others’ emotions relates to empathy and interpersonal competence in children. NeuroImage 2008, 39, 2076–2085.

- O’Brien, E.; Konrath, S.; Grühn, D.; Hagen, L. Empathic Concern and Perspective Taking: Linear and Quadratic Effects of Age Across the Adult Life Span. J. Gerontol. Ser. B 2013, 68, 168–175.

- Rizzolatti, G.; Craighero, L. The mirror-neuron system. Annu. Rev. Neurosci. 2004, 27, 169–192.

- Barsade, S.G. The Ripple Effect: Emotional Contagion and its Influence on Group Behavior. Adm. Sci. Q. 2002, 47, 644–675.

- Husserl, E. Phenomenology and the Foundations of the Sciences; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2001.

- Husserl, E. Cartesian Meditations: An Introduction to Phenomenology; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2013.

- Duffy, K.A.; Chartrand, T.L. Mimicry: Causes and consequences. Curr. Opin. Behav. Sci. 2015, 3, 112–116.

- Bavelas, J.B.; Black, A.; Lemery, C.R.; Mullett, J. “I show how you feel”: Motor mimicry as a communicative act. J. Pers. Soc. Psychol. 1986, 50, 322.

- Yokozuka, T.; Ono, E.; Inoue, Y.; Ogawa, K.-I.; Miyake, Y. The Relationship between Head Motion Synchronization and Empathy in Unidirectional Face-to-Face Communication. Front. Psychol. 2018, 9.

- Komori, M.; Nagaoka, C. The relationship between body movements of clients and counsellors in psychotherapeutic counselling: A study using the video-based quantification method. Jpn. J. Cogn. Psychol. 2011, 8, 1–9.

- Nagaoka, C.; Komori, M. Body Movement Synchrony in Psychotherapeutic Counseling: A Study Using the Video-Based Quantification Method. IEICE Trans. Inf. Syst. 2008, E91-D, 1634–1640.

- Palumbo, R.V.; Marraccini, M.E.; Weyandt, L.L.; Wilder-Smith, O.; McGee, H.A.; Liu, S.; Goodwin, M.S. Interpersonal Autonomic Physiology: A Systematic Review of the Literature. Pers. Soc. Psychol. Rev. 2016, 21, 99–141.

- Stratford, T.; Lal, S.; Meara, A. Neurophysiology of therapeutic alliance. Gestalt. J. Aust. 2009, 5, 19–47.

- Stratford, T.; Lal, S.; Meara, A. Neuroanalysis of therapeutic alliance in the symptomatically anxious: The physiological connection revealed between therapist and client. Am. J. Psychother. 2012, 66, 1–21.

- Park, S.; Choi, S.J.; Mun, S.; Whang, M. Measurement of emotional contagion using synchronization of heart rhythm pattern between two persons: Application to sales managers and sales force synchronization. Physiol. Behav. 2019, 200, 148–158.

- Ferrer, E.; Helm, J.L. Dynamical systems modeling of physiological coregulation in dyadic interactions. Int. J. Psychophysiol. 2013, 88, 296–308.

- Chatel-Goldman, J.; Congedo, M.; Jutten, C.; Schwartz, J.-L. Touch increases autonomic coupling between romantic partners. Front. Behav. Neurosci. 2014, 8, 95.

- Reed, R.G.; Randall, A.K.; Post, J.H.; Butler, E.A. Partner influence and in-phase versus anti-phase physiological linkage in romantic couples. Int. J. Psychophysiol. 2013, 88, 309–316.

- Feijt, M.A.; de Kort, Y.A.; Westerink, J.H.; Okel, S.; IJsselsteijn, W.A. The effect of simulated feedback about psychophysiological synchronization on perceived empathy and connectedness. Annu. Rev. Cyberther. Telemed. 2020, 117.

- Marci, C.D.; Ham, J.; Moran, E.; Orr, S.P. Physiologic Correlates of Perceived Therapist Empathy and Social-Emotional Process during Psychotherapy. J. Nerv. Ment. Dis. 2007, 195, 103–111.

- Koole, S.L.; Tschacher, W. Synchrony in Psychotherapy: A Review and an Integrative Framework for the Therapeutic Alliance. Front. Psychol. 2016, 7, 862.

- Ramseyer, F.; Tschacher, W. Nonverbal synchrony of head- and body-movement in psychotherapy: Different signals have different associations with outcome. Front. Psychol. 2014, 5, 979.

- Stephens, G.; Silbert, L.J.; Hasson, U. Speaker-listener neural coupling underlies successful communication. Proc. Natl. Acad. Sci. USA 2010, 107, 14425–14430.

- Yun, K.; Watanabe, K.; Shimojo, S. Interpersonal body and neural synchronization as a marker of implicit social interaction. Sci. Rep. 2012, 2, srep00959.

- Zaki, J.; Ochsner, K.N. The neuroscience of empathy: Progress, pitfalls and promise. Nat. Neurosci. 2012, 15, 675–680.

- Tullett, A.M.; Harmon-Jones, E.; Inzlicht, M. Right frontal cortical asymmetry predicts empathic reactions: Support for a link between withdrawal motivation and empathy. Psychophysiology 2012, 49, 1145–1153.

- Singer, T.; Seymour, B.; O’Doherty, J.P.; Stephan, K.E.; Dolan, R.J.; Frith, C.D. Empathic neural responses are modulated by the perceived fairness of others. Nature 2006, 439, 466–469.

- Peng, W.; Lou, W.; Huang, X.; Ye, Q.; Tong, R.K.-Y.; Cui, F. Suffer together, bond together: Brain-to-brain synchronization and mutual affective empathy when sharing painful experiences. NeuroImage 2021, 238, 118249.

- McGuigan, F.J.; Andreassi, J.L. Psychophysiology—Human Behavior and Physiological Response. Am. J. Psychol. 1981, 94, 359.

- Neumann, D.L.; Westbury, H.R. The psychophysiological measurement of empathy. In Psychology of Empathy; Griffith University: Queensland, Australia, 2011; pp. 119–142.

- Kodama, K.; Tanaka, S.; Shimizu, D.; Hori, K.; Matsui, H. Heart Rate Synchrony in Psychological Counseling: A Case Study. Psychology 2018, 9, 1858–1874.

- Salminen, M.; Jarvela, S.; Ruonala, A.; Harjunen, V.; Jacucci, G.; Hamari, J.; Ravaja, N. Evoking Physiological Synchrony and Empathy Using Social VR with Biofeedback. IEEE Trans. Affect. Comput. 2019, 3045, 1.

- Werner, J.; Hornecker, E. United-Pulse: Feeling Your Partner’s Pulse. In Proceedings of the MobileHCI, Amsterdam, The Netherlands, 2–5 September 2008; ACM: New York, NY, USA, 2008; pp. 535–538.

- Janssen, J.H.; Bailenson, J.N.; Ijsselsteijn, W.; Westerink, J.H.D.M. Intimate Heartbeats: Opportunities for Affective Communication Technology. IEEE Trans. Affect. Comput. 2010, 1, 72–80.

- Rouast, P.; Adam, M.; Chiong, R.; Cornforth, D.; Lux, E. Remote heart rate measurement using low-cost RGB face video: A technical literature review. Front. Comput. Sci. 2018, 12, 858–872.

- Van Gastel, M.M.; Stuijk, S.; De Haan, G.G. Motion Robust Remote-PPG in Infrared. IEEE Trans. Biomed. Eng. 2015, 62, 1425–1433.

- Blanik, N.; Abbas, A.K.; Venema, B.; Blazek, V.; Leonhardt, S. Hybrid optical imaging technology for long-term remote monitoring of skin perfusion and temperature behavior. J. Biomed. Opt. 2014, 19, 016012.

- Verkruysse, W.; Svaasand, L.O.; Nelson, J.S. Remote plethysmographic imaging using ambient light. Opt. Express 2008, 16, 21434–21445.

- Kamshilin, A.A.; Miridonov, S.; Teplov, V.; Saarenheimo, R.; Nippolainen, E. Photoplethysmographic imaging of high spatial resolution. Biomed. Opt. Express 2011, 2, 996–1006.

- Moco, A.V.; Stuijk, S.; De Haan, G. Ballistocardiographic artifacts in PPG imaging. IEEE Trans. Biomed. Eng. 2015, 63, 1804–1811.

- Starr, I.; Rawson, A.J.; Schroeder, H.A.; Joseph, N.R. Studies on the Estimation of Cardiac Output in Man, and of Abnormalities in Cardiac Function, from the heart’s Recoil and the blood’s Impacts; the Ballistocardiogram. Am. J. Physiol. Leg. Content 1939, 127, 1–28.

- Balakrishnan, G.; Durand, F.; Guttag, J. Detecting Pulse from Head Motions in Video. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 3430–3437.

- Shan, L.; Yu, M. Video-based heart rate measurement using head motion tracking and ICA. In Proceedings of the 2013 6th International Congress on Image and Signal Processing (CISP), Melbourne, Australia, 15–18 September 2013; Volume 1, pp. 160–164.

- Hassan, M.A.; Malik, A.S.; Fofi, D.; Saad, N.M.; Ali, Y.S.; Meriaudeau, F. Video-Based Heartbeat Rate Measuring Method Using Ballistocardiography. IEEE Sens. J. 2017, 17, 4544–4557.

- Lee, S.; Cho, A.; Whang, M. Vision-Based Measurement of Heart Rate from Ballistocardiographic Head Movements Using Unsupervised Clustering. Sensors 2019, 19, 3263.

- Liu, W.; Pokharel, P.P.; Principe, J.C. Correntropy: A localized similarity measure. In Proceedings of the 2006 IEEE International Joint Conference on Neural Network Proceedings, Vancouver, BC, Canada, 16 July 2006; pp. 4919–4924.

- Chiao, J.Y.; Mathur, V.A. Intergroup Empathy: How Does Race Affect Empathic Neural Responses? Curr. Biol. 2010, 20, R478–R480.

- Ward, J.; Cody, J.; Schaal, M.; Hojat, M. The Empathy Enigma: An Empirical Study of Decline in Empathy among Undergraduate Nursing Students. J. Prof. Nurs. 2012, 28, 34–40.

- Christov-Moore, L.; Simpson, E.A.; Coudé, G.; Grigaityte, K.; Iacoboni, M.; Ferrari, P.F. Empathy: Gender effects in brain and behavior. Neurosci. Biobehav. Rev. 2014, 46, 604–627.

- Thompson, A.; Voyer, D. Sex differences in the ability to recognise non-verbal displays of emotion: A meta-analysis. Cogn. Emot. 2014, 28, 1164–1195.

- Depow, G.J.; Francis, Z.; Inzlicht, M. The Experience of Empathy in Everyday Life. Psychol. Sci. 2021, 32, 1198–1213.

- Bagozzi, R.P.; Moore, D.J. Public service advertisements: Emotions and empathy guide prosocial behavior. J. Mark. 1994, 58, 56–70.

- Rawal, M.; Saavedra Torres, J.L. Empathy for Emotional Advertisements on Social Networking Sites: The Role of Social Identity. 2017. Available online: http://www.mmaglobal.org/publications/MMJ/MMJ-Issues/2017-Fall/MMJ-2017-Fall-Vol27-Issue2-Rawal-SaavedraTorres-pp88-102.pdf (accessed on 10 October 2021).

- Green, M.C.; Brock, T.C. In the Mind’s Eye: Transportation-Imagery Model of Narrative Persuasion; Psychology Press: New York, NY, USA, 2002.

- Soh, H. Measuring Consumer Empathic Response to Advertising Drama. J. Korea Contents Assoc. 2014, 14, 133–142.

- Yoo, S.; Whang, M. Vagal Tone Differences in Empathy Level Elicited by Different Emotions and a Co-Viewer. Sensors 2020, 20, 3136.

- Haque, M.A.; Irani, R.; Nasrollahi, K.; Moeslund, T.B. Facial video-based detection of physical fatigue for maximal muscle activity. IET Comput. Vis. 2016, 10, 323–330.

- Tommasini, T.; Fusiello, A.; Trucco, E.; Roberto, V. Making good features track better. In Proceedings of the 1998 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (Cat. No.98CB36231), Santa Barbara, CA, USA, 6 August 2002; pp. 178–183.

- Bouguet, J.Y. Pyramidal implementation of the affine Lucas Kanade feature tracker description of the algorithm. Intel. Corp. 2001, 5, 4.

- Yang, S.; Luo, P.; Loy, C.C.; Tang, X. WIDER FACE: A Face Detection Benchmark. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 5525–5533.

- Tomasi, C.; Kanade, T.T. Detection and Tracking of Point Features, Carnegie Mellon University Technical Report CMU-CS-91-132, April 1991. Available online: https://cecas.clemson.edu/~stb/klt/tomasi-kanade-techreport-1991.pdf (accessed on 10 October 2021).

- Cuff, B.; Brown, S.; Taylor, L.; Howat, D.J. Empathy: A Review of the Concept. Emot. Rev. 2016, 8, 144–153.

- De Vignemont, F.; Singer, T. The empathic brain: How, when and why? Trends Cogn. Sci. 2006, 10, 435–441.

- Schimansky-Geier, L.; Kuramoto, Y. Chemical Oscillations, Waves, and Turbulence; Springer Series in Synergetics 19; Springer: Berlin/Heidelberg, Germany; New York, NY, USA; Tokyo, Japan, 1984; ISBN 3-540-13322-4.

- Erdfelder, E.; Faul, F.; Buchner, A. GPOWER: A general power analysis program. Behav. Res. Methods Instrum. Comput. 1996, 28, 1–11.

- Davis, M.H. Empathy: A Social Psychological Approach; Routledge: London, UK, 2018.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).