1. Introduction

Affective computing, the study, analysis, and interpretation of human emotional reactions by means of artificial intelligence [1], has become a hot topic in the scientific community. Possible applications include accurate neuromarketing techniques, more efficient human-machine interfaces, and new wellness and/or healthcare practices, with innovative therapies for phobias and mental illnesses [2,3,4,5,6]. Recently, research in this area has begun to address the prevention of violent attacks on vulnerable people through the early detection of fear or panic reactions [7].

In affective computing, many research areas merge to provide efficient and accurate systems capable of classifying the emotion felt by a person. Apart from psychology, neuroscience and physiology, other disciplines are required to automate the emotion detection process as well as to allow in-depth data analysis and useful feedback.

Human emotions are the consequence of biochemical reactions in the brain. External stimuli are processed in certain brain regions such as the amygdala, insula and prefrontal cortex [8,9,10]. These areas activate the autonomic nervous system, which triggers physiological changes as an emotional response. Within the global emotional response, conscious and unconscious processes can be distinguished. The cognitive component of the emotion reaches a high degree of consciousness and can feed back into the chain of physiological reactions.

Measuring and processing these physiological reactions allows the emotion detection and classification process to be automated, which is known as affective computing. If this detection involves several sources of information, it is known as multimodal affective computing. Validity and corroboration issues have made physiological variables the most attractive to researchers. Commonly used multimodal recordings are Galvanic Skin Response (GSR), ElectroMyoGraphy (EMG) (frequency of muscle tension), Heart Rate (HR), Respiration Rate (RR), ElectroEncephaloGraphy (EEG), functional Magnetic Resonance Imaging (fMRI), and Positron Emission Tomography (PET) [11], although behavioural measurements such as facial expressions, voice, movement, and subjective self-reporting can also be useful for experimental purposes.

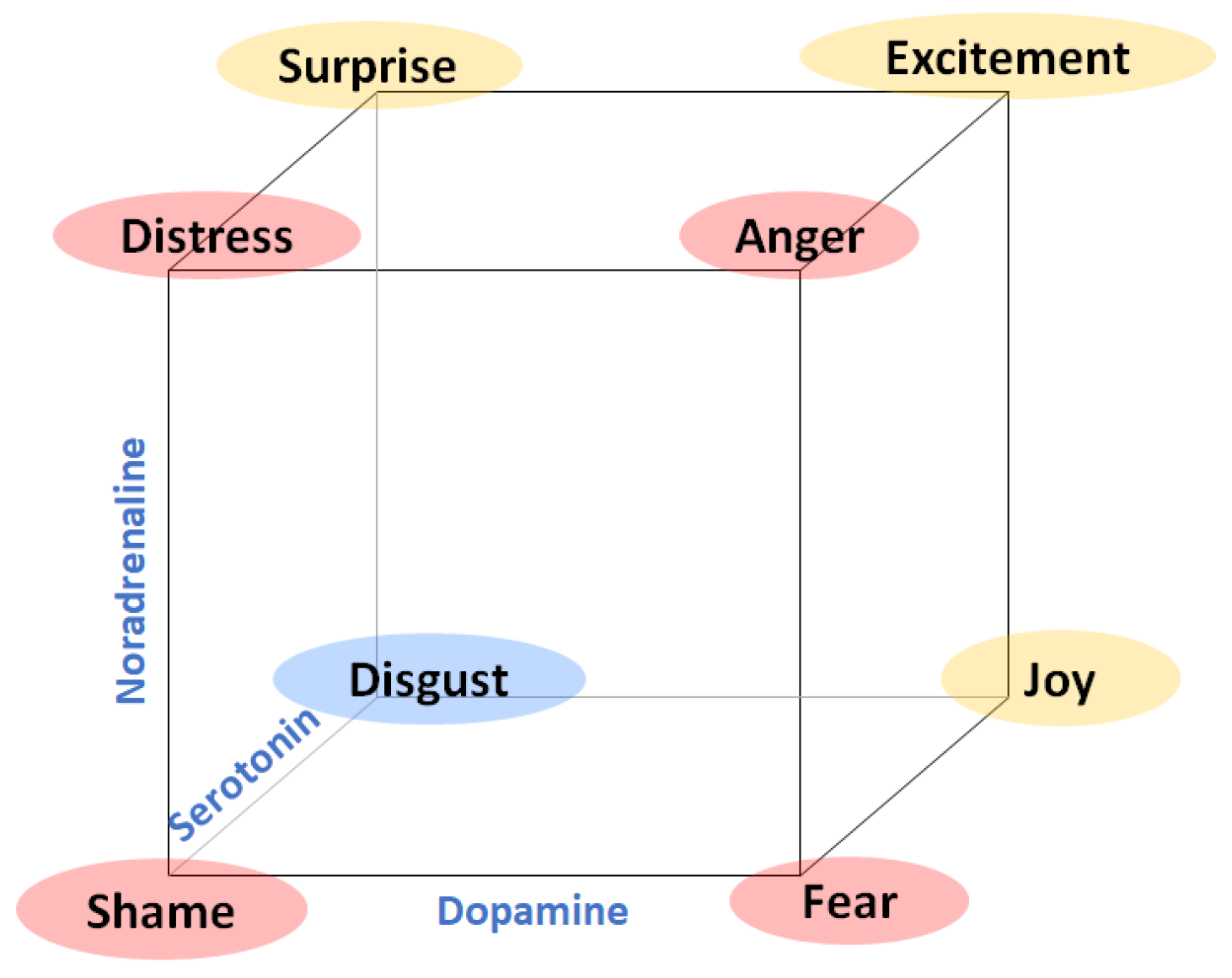

In this sense, some authors have related non-external physiological variables to emotional reactions [12]. For example, the levels of neurotransmitters in the brain or of circulating catecholamines vary depending on a person’s emotional state, affecting the activity of physiological variables. Although measuring them is very invasive, the relation between changes in physiological variables and the concentration of these molecules makes them interesting for some applications of affective computing. For example, in risk situations, the early detection of fear or panic could trigger a protection response for the person in danger. To date, no study using catecholamine concentration in blood plasma for emotion detection has included an experimental sample of humans; only theoretical studies exist.

The concentration of catecholamines is usually measured in urine to diagnose or rule out certain tumours, such as pheochromocytoma or neuroblastoma, because these tumours raise the levels significantly. Under basal conditions, however, the levels are low, although they can be detected in blood by high-performance liquid chromatography (HPLC).

Continuous and autonomous measurement of these molecules is not currently available, but if they prove useful, wearable analysis devices could be designed and developed, similar to insulin micropumps [13].

In this work, a methodology and protocol are proposed to connect the elicitation of human emotions with the variation of plasma catecholamine concentration. For this first test, fear is chosen as the target emotion for two main reasons. On the one hand, the relationship between these neurotransmitters and stress or fear is well documented in the literature, as they are responsible for activating the body’s fight-or-flight mechanisms. On the other hand, the protection of women against gender-based violence has been chosen as the target application, where the objective is to detect fear automatically so that an alarm is triggered to protect women in danger. Although there is already work in this area, so far only physiological variables have been used. To validate whether including plasma catecholamine concentration improves the results, an immersive virtual reality environment has been arranged to provoke realistic situations in which the volunteer could have intense emotional reactions. Continuous monitoring of physiological variables with a research toolkit system (for the sake of comparison with other affective computing research works) is connected to the virtual environment, as well as to an interface for classifying the emotions elicited. The detection of emotions in humans through the plasma concentration of catecholamines has been analysed and compared with externally measured physiological variables, such as skin temperature (SKT), heart rate (HR) and electrodermal activity (EDA). The main results are very positive for the physiological variables but inconclusive for the catecholamine concentration in blood plasma.

The main contributions of this work can be summarized as:

The design of a methodology for plasma catecholamine concentration measurement along with physiological variables under audiovisual stimuli for automatic fear detection.

An experimental test involving 21 volunteers where dopamine, adrenaline and noradrenaline are measured along with blood volume pulse, skin temperature, galvanic skin response, respiration rate, and electromyography.

An analysis of the data collected, considering physiological variables and catecholamine concentration both separately and combined.

An implementation and comparison of three artificial intelligence methods for fear detection using the measurements collected in the experimental test, in order to assess whether including plasma catecholamine concentration benefits fear detection systems.

The rest of this paper is organized as follows:

Section 2 provides a review of the state of the art regarding emotion theory, automatic emotion detection, and the physiological response related to catecholamines and emotion. As a result, we formulate the hypothesis of this work.

Section 3 describes the methodology used in this work for the experimental setup, including the sample description, the design of the study, the stimuli used, the labelling method, and the collected measurements.

Section 4 presents the experimental results (for labelling, physiological variables and catecholamine concentration). Additionally, we present an artificial intelligence algorithm analysis in order to validate the hypothesis formulated previously.

Section 5 presents the discussion.

Section 6 concludes the work.

3. Material and Methods

In this section, we present the proposed methodology for collecting data on both physiological variables and catecholamines in an immersive environment for emotion elicitation. Since the design of this experiment involves the extraction of blood samples for the analysis of catecholamines in blood plasma, and the number of samples cannot be high, fear has been chosen as the target emotion because, as discussed in Section 2, it is highly related to the release of catecholamines.

In addition, some considerations have to be taken into account. As stated before, one of the objectives of the authors is to apply multimodal affective computing to the protection of women victims of gender-based violence. For this reason, the sample of this study is entirely composed of women, and the proposed final application also influences the choice of one of the audio-visual stimuli, which is directly related to gender violence.

3.1. Sample of the Study

The study population consisted of 21 volunteers, all of them apparently healthy Spanish women working as healthcare workers. Study subjects were not allowed to perform strenuous exercise, smoke, eat certain foods, or take drugs or certain medicines (Table 1) for at least 24 h before the analysis, to avoid interference with the catecholamine measurements.

The main data of the volunteers are registered in Table 2. The mean age of the volunteers is 36 years. Only 5 of them had one child, and 13 volunteers were single. With regard to Body Mass Index (BMI), only 4 volunteers presented values between 25 and 30, indicative of overweight. Finally, 4 volunteers were menopausal. Some volunteers (6) were receiving treatment for chronic illnesses (hypertension, chronic pain, heart failure, ulcerative colitis, anaemia, and diabetes).

The study conforms to the ethical principles outlined in the Declaration of Helsinki. The design of the study was approved by the Research Ethics Committee (REC) of Principe de Asturias Hospital with protocol number: CLO (LIB 10/2019). All participants received a detailed description of the purpose and design of the study and signed an informed consent form approved by the REC.

3.2. Design of the Study

The study consisted of measuring the physiological variables of a set of volunteers while they watched four emotion-related videos in an immersive virtual reality environment. Additionally, several blood extractions were performed after three of these videos to analyse plasma catecholamine levels (dopamine, adrenaline, and noradrenaline). Furthermore, after each video, the volunteer labelled the emotions elicited while watching it.

Each participant fasted for at least twelve hours before the experiment. Beforehand, the participant filled in a form providing information such as personality traits, sex, age group, recent physical activity, medication (which could alter the participant’s physiological response), self-identified emotional loads, and mood bias (fears, phobias, or traumatic experiences), summarized in Table 2. This information could be relevant to the participants’ emotional reactions during the experiment, since it may affect their cognition, appraisal, and attention.

The experiment was designed to last 2 h in total. Figure 3 shows the schedule of the experiment. After the interview, the questionnaire, and the signing of the informed consent, the test schedule and protocol were explained to each volunteer, and a demonstration of the virtual reality environment was given. Then, the sensors for measuring the physiological variables were placed. The BioSignalPlux® research toolkit system was used to record the evolution of the physiological variables throughout the study: forearm skin temperature, galvanic skin response, finger blood volume pulse (BVP), trapezius electromyogram, and chest respiration. The sensors are placed at different locations on the volunteer’s body (arm, hand, chest, and finger) (Figure 4). These physiological signals were selected because they could easily be implemented in an inconspicuous and comfortable wearable device, avoiding any inconvenience to the user. Some smartwatches already integrate BVP, GSR, and SKT sensors, and respiration and EMG could be integrated into a patch or band. This wearability is mandatory for this type of application.

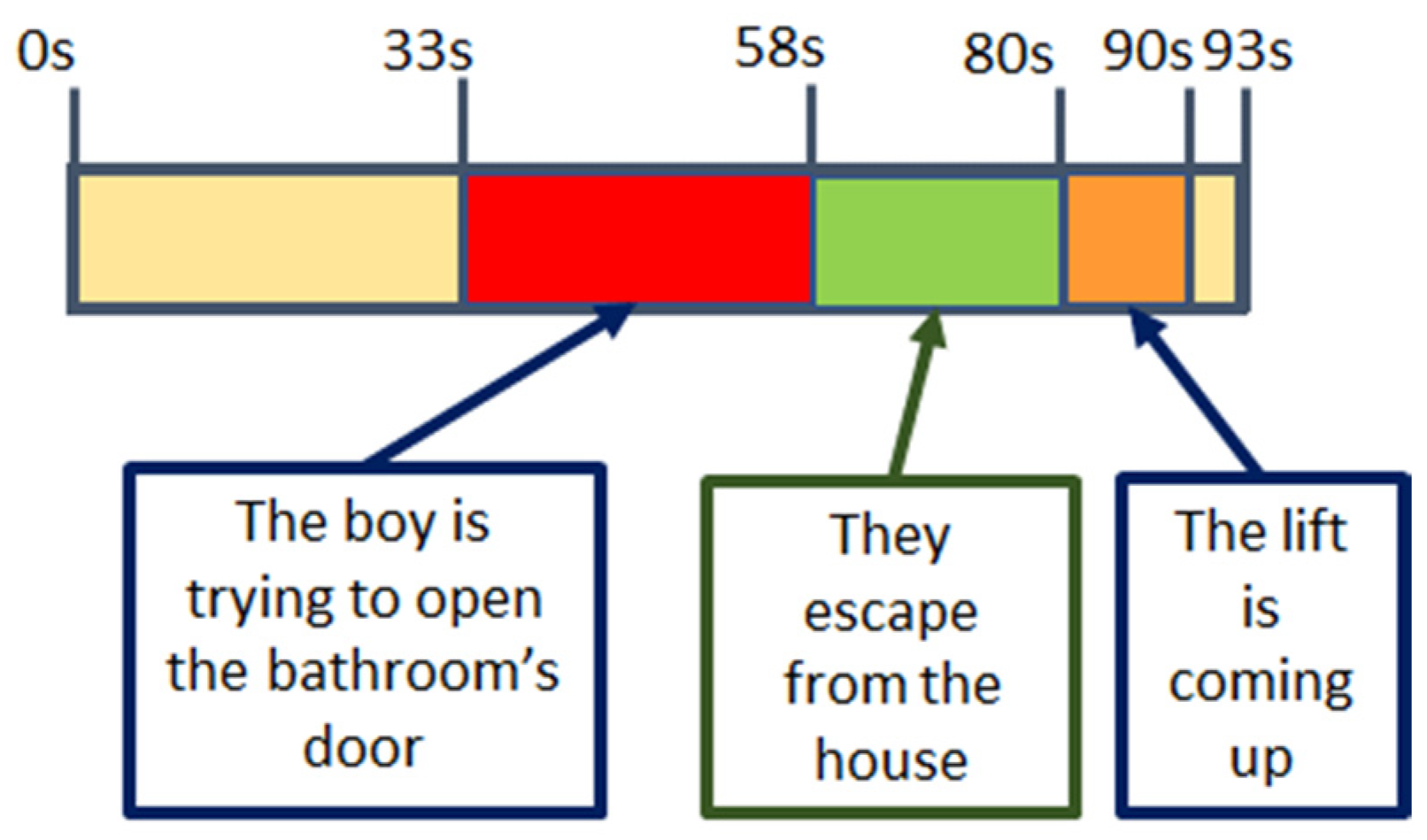

Once the volunteer had been shown how to handle the equipment for labelling each video, the nurse placed an intravenous line (via) in the antecubital vein to extract blood samples at different time points of the study: at the beginning (basal point) and after videos 2, 3 and 4. Each subject watched four videos, not known to her in advance, related to different emotions, and labelled each one according to what she was feeling at that moment. Starting just after the end of videos 2, 3 and 4, five blood samples were collected at 1-min intervals to monitor the changes in catecholamine levels (Figure 5).
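The sampling scheme just described (one basal draw, then five draws at 1-min intervals after each of videos 2, 3 and 4) can be sketched as a simple schedule generator. This is a hypothetical illustration, not the authors' software: the `BloodSample` and `sampling_schedule` names are ours, and placing the first post-video draw at minute 0 is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BloodSample:
    label: str   # "basal" or the clip after which the sample is drawn
    minute: int  # minutes after the end of the clip (0 for the basal draw)


def sampling_schedule(sampled_clips=("V2", "V3", "V4"), draws_per_clip=5):
    """Hypothetical helper listing every blood draw in one session."""
    schedule = [BloodSample("basal", 0)]
    for clip in sampled_clips:
        # Draws 1 min apart; the first is assumed to happen right after the clip ends.
        schedule.extend(BloodSample(clip, m) for m in range(draws_per_clip))
    return schedule


plan = sampling_schedule()
print(len(plan))  # 16 = 1 basal + 3 clips x 5 draws
```

The total of 16 draws per volunteer matches the figure given in the Discussion and Conclusions.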

3.3. Audiovisual Stimulus

Every subject watched four videos: two related to the emotion of fear, one related to calm, and one related to joy. The schedule was calm, fear, joy, fear, and the order of the two fear-related videos was randomly set for each volunteer.
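One way to implement the calm-fear-joy-fear schedule with a per-volunteer random order of the two fear clips is sketched below. The function name, the seeding scheme and the use of Python's `random.Random` are illustrative assumptions. The source does not describe how the randomization was implemented.

```python
import random


def presentation_order(volunteer_id: str, seed: int = 0) -> list:
    """Hypothetical sketch: calm-fear-joy-fear pattern with the two fear clips
    shuffled independently (but reproducibly) for each volunteer."""
    rng = random.Random(f"{seed}:{volunteer_id}")  # string seeds are deterministic
    fear_clips = ["V2", "V4"]
    rng.shuffle(fear_clips)
    return ["V1", fear_clips[0], "V3", fear_clips[1]]


print(presentation_order("v01"))
```

Seeding from the volunteer identifier makes each order reproducible, so the schedule shown to a given volunteer can be reconstructed later.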

The video clips used for the experiment were selected from the UC3M4Safety Database of audiovisual stimuli, which is aimed at eliciting different emotional reactions through an immersive virtual reality environment [62] (see Figure 6). Most of the clips were 360-degree scenes, which provide more realistic experiences.

The Oculus™ Rift S headset was used with an application built on Unity™ that connects the video clip projection to the physiological monitoring system and records the emotion labelling. The whole data recording system was initiated by the virtual reality environment, which manages both the video stimuli and the sensor measurements. A TCP/IP port connection was created at the beginning of the trial to communicate with the OpenSignals application. Data storage was divided by scenes: each file contained the information collected between two timestamps (start and end of each scene) set by the environment, thus enabling synchronization.
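The scene-based storage described above amounts to cutting each continuous channel at the start and end timestamps sent by the environment. The sketch below assumes, hypothetically, that timestamps are expressed in seconds from the first recorded sample and that each channel is a plain list sampled at a fixed rate. It does not reproduce the Unity or OpenSignals interfaces, and `SceneMarker` and `split_by_scene` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneMarker:
    name: str
    start_s: float  # scene start, seconds from the first recorded sample (assumed)
    end_s: float    # scene end, same time base


def split_by_scene(samples, fs_hz, markers):
    """Cut one continuous channel into per-scene segments covering [start_s, end_s)."""
    segments = {}
    for m in markers:
        first = max(0, round(m.start_s * fs_hz))
        last = min(len(samples), round(m.end_s * fs_hz))
        segments[m.name] = samples[first:last]
    return segments


recording = list(range(200 * 10))  # 10 s of a dummy channel at 200 Hz
scenes = split_by_scene(recording, 200, [SceneMarker("V1", 1.0, 3.5)])
print(len(scenes["V1"]))  # 500 samples = 2.5 s x 200 Hz
```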

The four video clips were V1, V2, V3, and V4, aimed at provoking calm, fear (related to gender-based violence), joy and fear, respectively.

V1: “Nature”—calm

V2: “Refugiado”—fear related to gender-based violence

V3: “Don’t stop me now”—joy

V4: “Inside chamber of horrors”—general fear

These videos achieved very high unanimity in the discrete emotion labels, which was higher among women for the fear and joy clips, while the means and standard deviations of the PAD (pleasure, arousal, dominance) affective space dimensions were also closer than expected for the fear clips and for women (Table 3). This table shows, for the experiment detailed in [28], the discrete emotion labelled for every video as well as the three dimensions of the PAD affective space. As can be seen, V2 and V4 achieve very high unanimity in the discrete emotion of fear among women. Regarding the PAD variables, the dispersion and the mean comply with the expected ranges.
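As an illustration of the statistics summarized in Table 3, the sketch below computes a unanimity score and PAD means and standard deviations for one clip. Defining unanimity as the share of raters choosing the most frequent discrete emotion is our assumption, since the UC3M4Safety work may define it differently. The ratings are toy values, not data from the study.

```python
from collections import Counter
from statistics import mean, stdev


def unanimity(labels):
    """Most frequent discrete label and the share of raters who chose it (assumed definition)."""
    top_label, top_count = Counter(labels).most_common(1)[0]
    return top_label, top_count / len(labels)


def pad_summary(ratings):
    """(mean, sample SD) for pleasure, arousal and dominance; ratings are (P, A, D) tuples."""
    return [(mean(dim), stdev(dim)) for dim in zip(*ratings)]


# Toy ratings for one clip (illustrative only).
print(unanimity(["fear", "fear", "fear", "disgust"]))  # ('fear', 0.75)
print(pad_summary([(2, 8, 3), (1, 9, 2), (3, 7, 4)]))
```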

3.4. Labelling

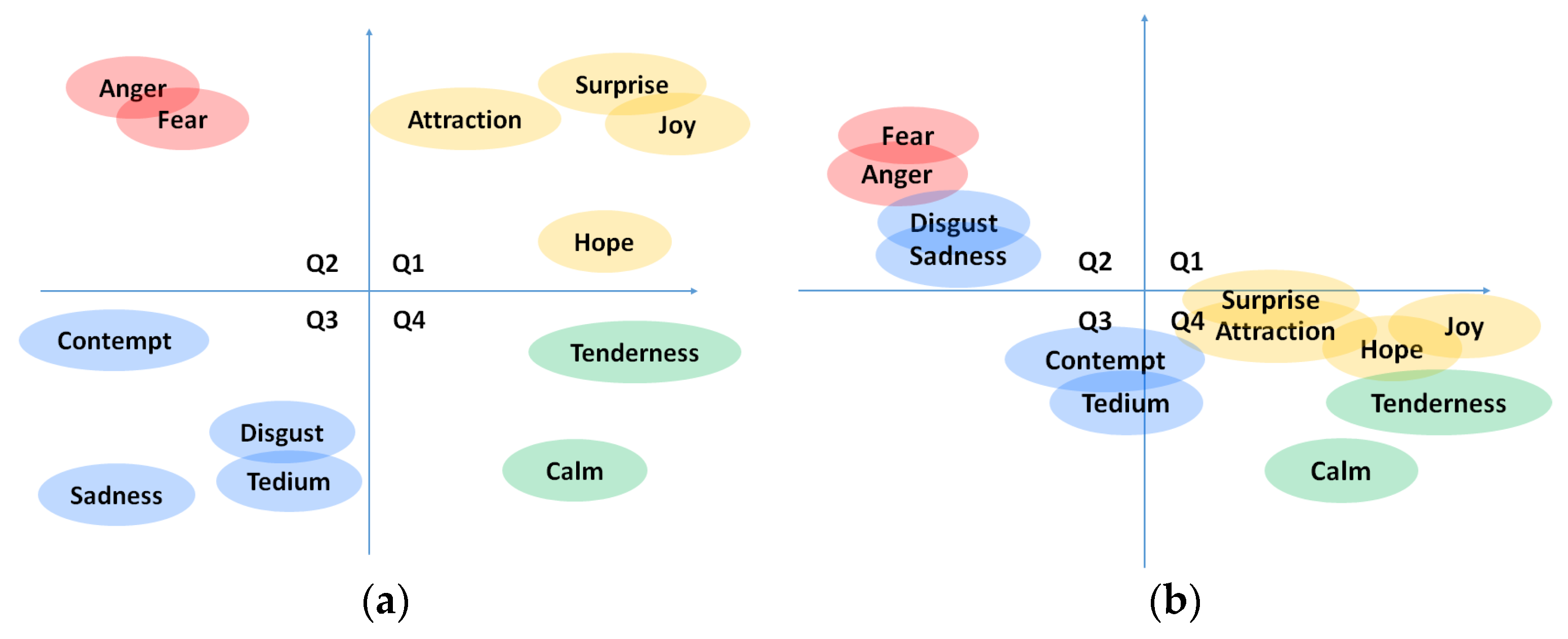

To overcome the labelling problems mentioned above, in this work we have decided to include a discrete classification of emotions (joy, hope, surprise, attraction, tenderness, calm, tedium, contempt, sadness, fear, disgust, and anger), together with an indicator of emotional intensity to capture more nuances, as well as the classification in the PAD affective space using the Self-Assessment Manikin (SAM) methodology [63] (see Figure 7). As depicted in Figure 3, the labelling is carried out just after the blood sample collection.
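A labelling record combining the three elements (discrete emotion, intensity, and SAM-based PAD ratings) could be represented as follows. The class and field names are hypothetical. The 1 to 9 ranges for intensity and for the SAM scales are assumptions, since the text does not state the scales used.

```python
from dataclasses import dataclass

DISCRETE_EMOTIONS = frozenset({
    "joy", "hope", "surprise", "attraction", "tenderness", "calm",
    "tedium", "contempt", "sadness", "fear", "disgust", "anger",
})


@dataclass(frozen=True)
class EmotionLabel:
    """One volunteer's label for one clip (hypothetical record layout)."""
    video: str
    emotion: str    # one of the 12 discrete emotions offered to the volunteer
    intensity: int  # assumed 1-9 scale
    pleasure: int   # SAM ratings, assumed 1-9 scales
    arousal: int
    dominance: int

    def __post_init__(self):
        # Reject emotions outside the discrete set and out-of-range ratings.
        if self.emotion not in DISCRETE_EMOTIONS:
            raise ValueError(f"unknown discrete emotion: {self.emotion}")
        for field_name in ("intensity", "pleasure", "arousal", "dominance"):
            value = getattr(self, field_name)
            if not 1 <= value <= 9:
                raise ValueError(f"{field_name} out of range: {value}")


label = EmotionLabel("V4", "fear", intensity=8, pleasure=2, arousal=8, dominance=3)
```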

3.5. Measurement of Dopamine, Adrenaline and Noradrenaline

Catecholamines were determined in 3 mL of plasma by high-performance liquid chromatography (HPLC). Blood samples were collected in pre-chilled EDTA-treated tubes in the morning, after a 12-h overnight fast and resting period. As several samples had to be taken at short intervals after each video, an intravenous line was placed to facilitate sample collection at each point of the study. To prevent catecholamine degradation, plasma was immediately separated by centrifugation at 2000× g for 15 min at 4 °C, collected in clean, pre-chilled tubes, and stored at −80 °C until measurement. All plasma samples were sent to Reference Laboratory S.A. (L’Hospitalet de Llobregat, Barcelona, Spain), where adrenaline, noradrenaline and dopamine were measured by HPLC.

Measurement of serotonin requires serum instead of plasma, which would have required additional 5 mL blood samples from each volunteer. Apart from the extra cost, equivalent to that of measuring the three catecholamines, the large number of samples required prevented the authors from analysing the evolution of serotonin concentrations during the study.

5. Discussion

The study conducted in this work presents four main results. First, a methodology and protocol have been defined to connect the elicitation of human emotions with the variation of plasma catecholamine concentration. An immersive virtual reality environment has been arranged to provoke realistic situations in which the volunteer could have intense emotional reactions. Continuous monitoring of physiological variables with a research toolkit system (for the sake of comparison with other affective computing research works) is connected to the virtual environment, as is a labelling procedure for discrete emotions and continuous PAD affective space dimensions. These three elements have been presented in previous works by the authors [65]. The novelty added to this method is to determine whether a person’s emotions can be reliably recorded, assessing the differences or similarities between recording different physiological variables and measuring plasma catecholamine levels. The blood extraction must be performed after the video clip visualization so as not to interfere with the emotion elicitation, but as soon as possible in order to detect the concentration peaks and valleys caused by the emotion processed in the brain, which changes the plasma catecholamine concentration. We searched for a pattern in the concentration variation and applied different classifiers typical of affective computing to determine the feasibility of using catecholamines to detect fear in a person.

Second, the emotion labels obtained during the study confirmed the elicitation of the target emotions. The video clips selected from the UC3M4Safety database [62] were those with the best unanimity scores in both discrete and continuous emotion classifications. The video clips lasted between 60 s and 119 s. The 21 volunteers labelled the emotion felt during each video clip very close to the target emotion, especially for video clips V04 (fear) and V01 (calm), while for the other clips at least the PA quadrant is maintained (Figure 8). Every video clip provoked its target emotion, and, except for two volunteers, each volunteer’s labels matched those of the rest (Figure 9). Therefore, the variation in the physiological variables and plasma catecholamine concentration for each video clip, whatever it was, can be associated with a specific emotion.
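Checking whether a rating maintains the PA quadrant reduces to comparing the signs of pleasure and arousal relative to the scale midpoint. This minimal sketch assumes a 1 to 9 SAM scale with midpoint 5 and assigns ratings exactly at the midpoint to the positive side. Both choices are assumptions, and the function names are hypothetical.

```python
def pa_quadrant(pleasure, arousal, midpoint=5.0):
    """Pleasure-arousal quadrant, e.g. '-P+A' for unpleasant, high-arousal ratings."""
    p = "+P" if pleasure >= midpoint else "-P"
    a = "+A" if arousal >= midpoint else "-A"
    return p + a


def same_quadrant(rating, target, midpoint=5.0):
    """True when a (pleasure, arousal) rating falls in the target's quadrant."""
    return pa_quadrant(*rating, midpoint) == pa_quadrant(*target, midpoint)


print(pa_quadrant(2, 8))              # -P+A
print(same_quadrant((3, 6), (2, 8)))  # True
```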

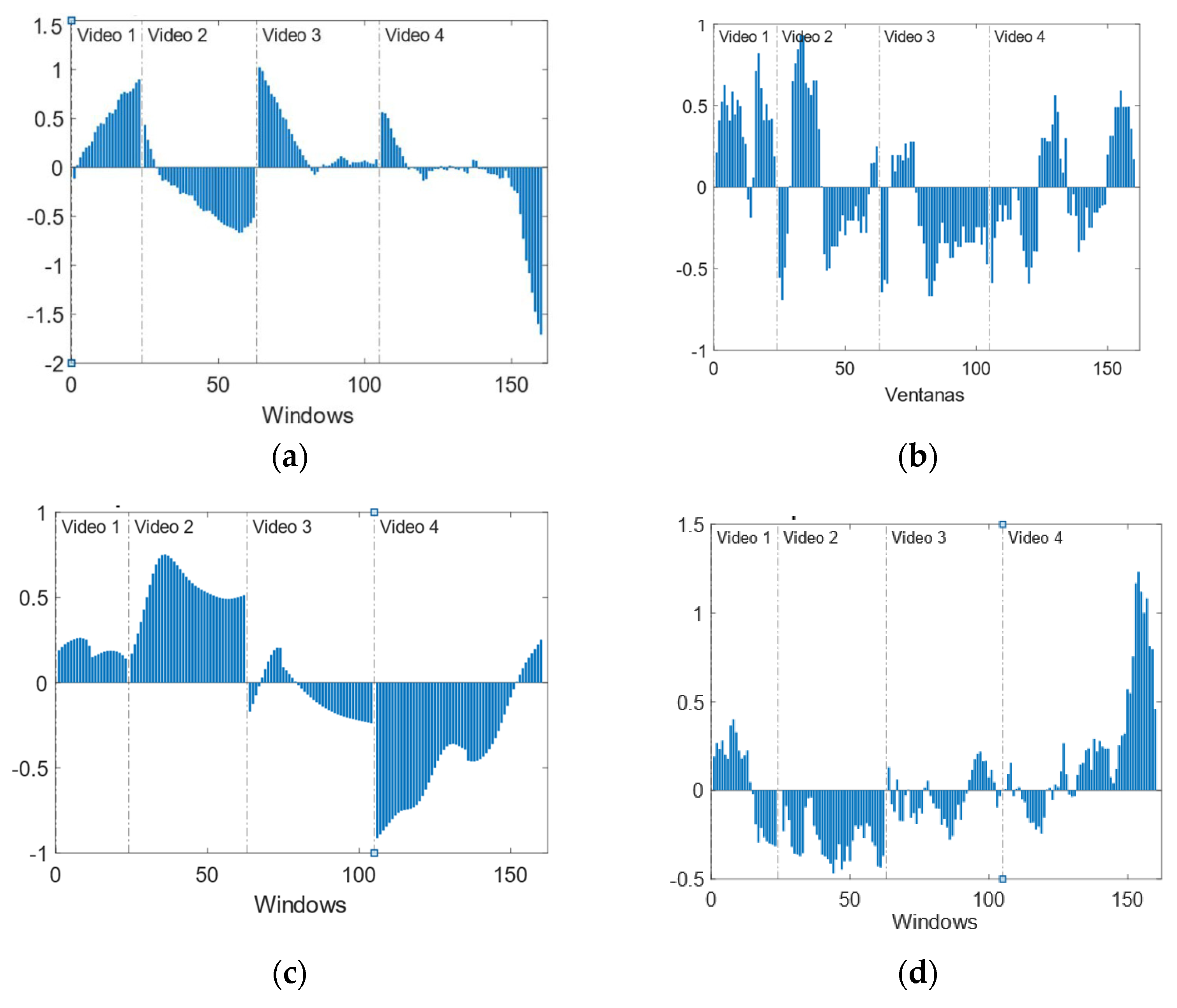

Third, the physiological variables measured during the study, and the features extracted from them (IBI, GSR number of peaks, GSR mean and HRV), behave similarly to those reported in previous works [7,65]. Statistically significant differences were found for the GSR mean between the fear-related video clip V04 and the joy and calm clips (V03 and V01), and for IBI between V01 (calm), V02 (fear related to gender-based violence) and V04 (fear). The classifiers applied to generate an artificial intelligence algorithm for detecting fear reactions present good results for windows of 20 s and 60 s, although the results were better for the wider window and the ENS model, with a true negative rate of 1 and a true positive rate of 0.83 (Table 8).
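The true positive and true negative rates reported for the classifiers follow from the confusion counts of fear versus non-fear windows. The sketch below uses made-up labels only to show the arithmetic. It does not reproduce the study's data or the ENS model, and `true_rates` is a hypothetical name.

```python
def true_rates(y_true, y_pred, positive="fear"):
    """True positive rate (sensitivity) and true negative rate (specificity)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    tpr = tp / (tp + fn) if tp + fn else float("nan")
    tnr = tn / (tn + fp) if tn + fp else float("nan")
    return tpr, tnr


# Toy labels (not the study's data): 5 of 6 fear windows detected, no false alarms.
y_true = ["fear"] * 6 + ["no-fear"] * 4
y_pred = ["fear"] * 5 + ["no-fear"] * 5
tpr, tnr = true_rates(y_true, y_pred)
print(round(tpr, 2), tnr)  # 0.83 1.0
```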

It should be noted that the amount of data compiled during the experiment was large because of the sampling frequency (200 Hz), which made the training and testing processes for affective computing tasks easier.
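At 200 Hz, a 20 s window contains 4000 samples and a 60 s window 12,000, so a 119 s clip yields five complete 20 s windows but only one 60 s window. The sketch below shows non-overlapping windowing plus two simple GSR features. Discarding the incomplete tail window and using a naive local-maximum peak counter are assumptions. This is not the feature-extraction code used in the study.

```python
from statistics import mean

FS_HZ = 200  # sampling frequency of the recordings


def windows(signal, window_s, fs_hz=FS_HZ):
    """Split a signal into non-overlapping windows of window_s seconds (tail dropped)."""
    size = int(window_s * fs_hz)
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, size)]


def count_peaks(x, min_height):
    """Naive peak count: local maxima at or above min_height (stand-in for SCR detection)."""
    return sum(
        1 for i in range(1, len(x) - 1)
        if x[i] > x[i - 1] and x[i] >= x[i + 1] and x[i] >= min_height
    )


def gsr_features(gsr, window_s, min_height):
    """Per-window GSR mean and number of peaks."""
    return [
        {"gsr_mean": mean(w), "gsr_peaks": count_peaks(w, min_height)}
        for w in windows(gsr, window_s)
    ]


clip = [0.0] * (119 * FS_HZ)  # placeholder for a 119 s clip
print(len(windows(clip, 20)), len(windows(clip, 60)))  # 5 1
```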

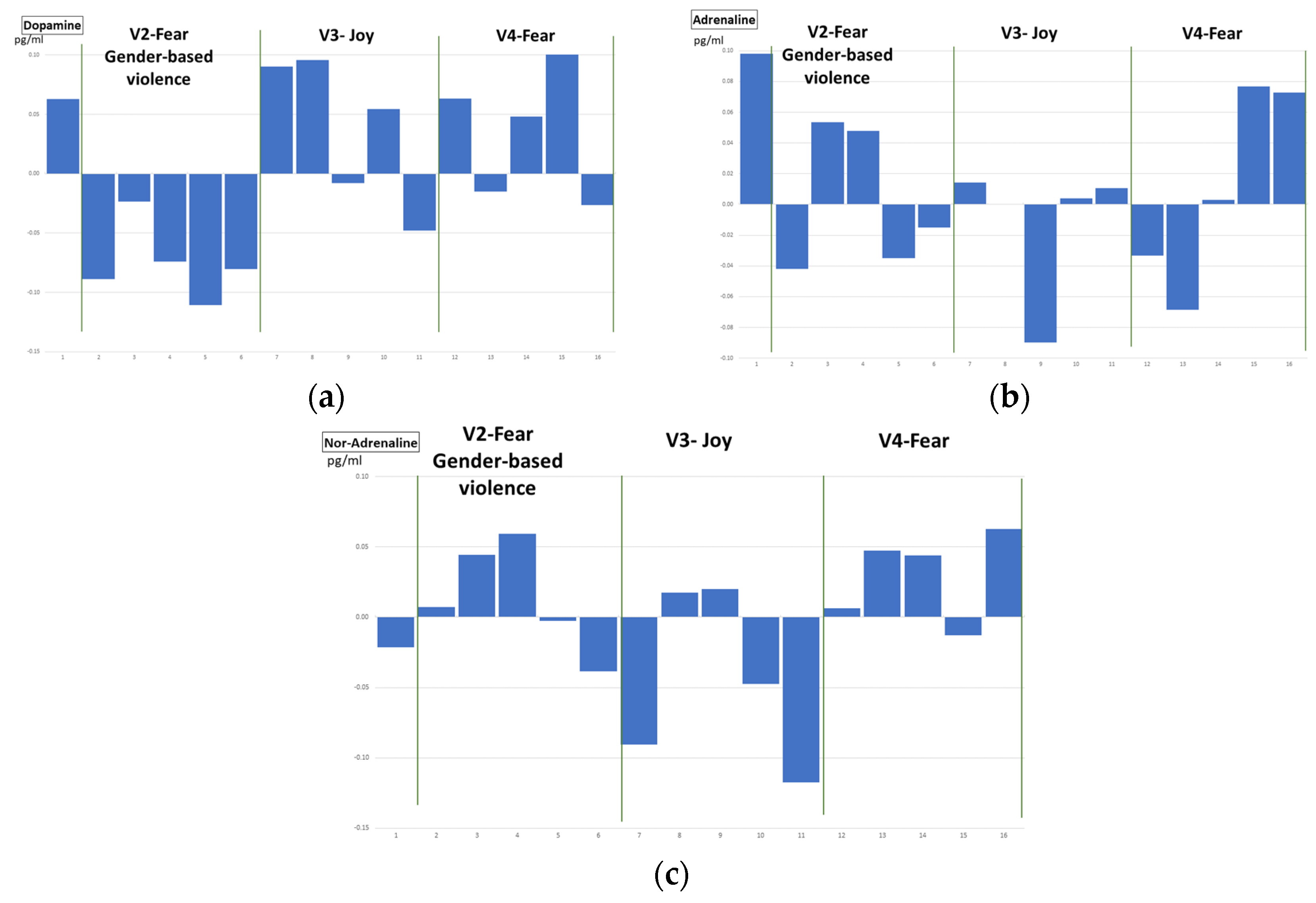

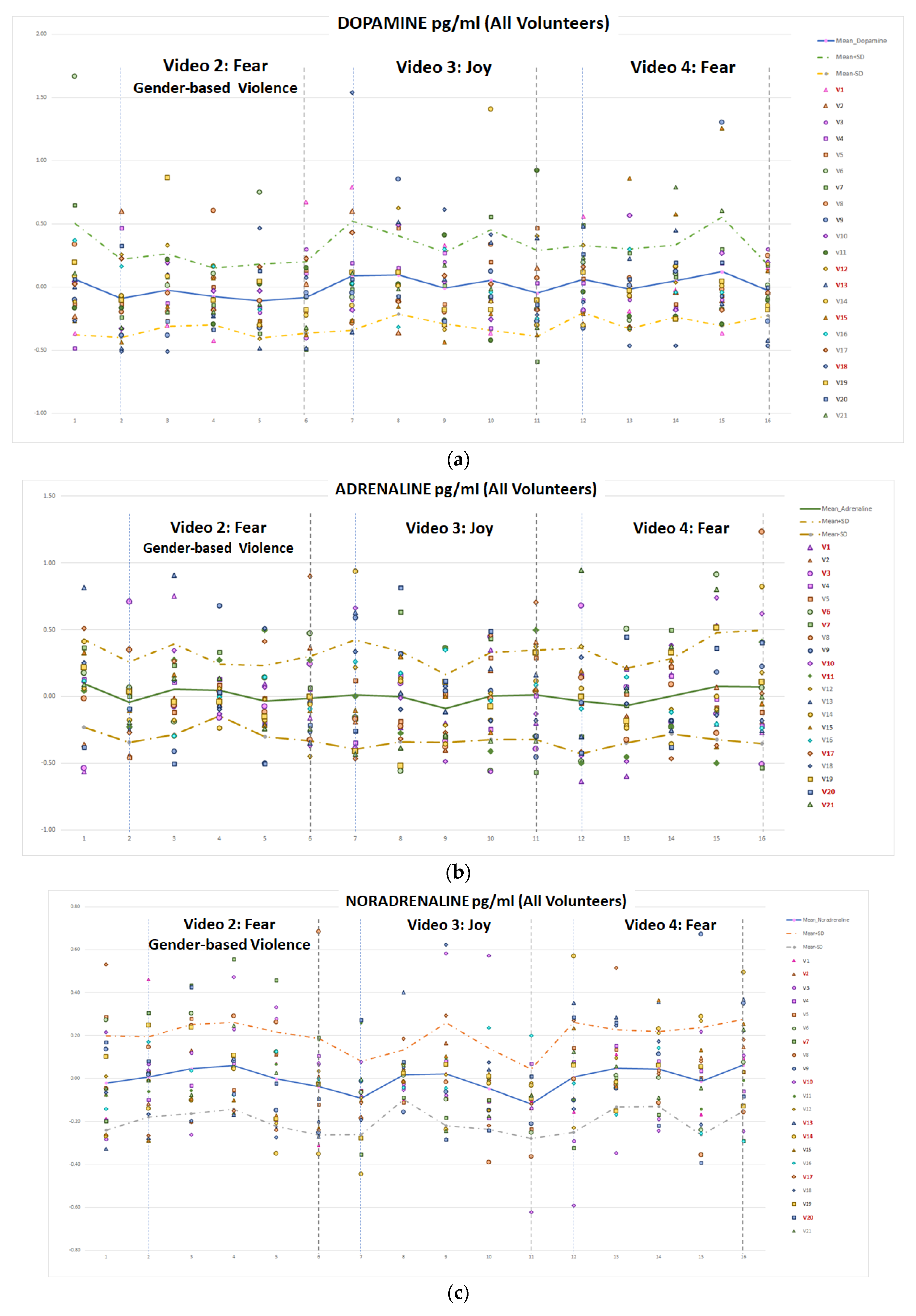

Finally, the plasma catecholamine concentration measurements provided data with no apparent connection to the emotion elicited. ANOVA found no significant differences between blood plasma catecholamine levels after watching the video clips of the different emotions. In addition, the clustering analysis (fear/no-fear) of the data obtained from the 21 volunteers did not produce a valid result. Moreover, the classifiers selected as artificial intelligence algorithms to detect fear reactions yielded poor metrics, mainly due to the lack of sufficient data for training, testing and generalization.
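For reference, the one-way ANOVA used to compare catecholamine levels across clips reduces to the ratio of between-group to within-group mean squares, F = [SS_between / (k - 1)] / [SS_within / (N - k)]. The pure-Python sketch below computes F and its degrees of freedom on toy numbers. The p-value then comes from the F distribution, which `scipy.stats.f_oneway` returns together with the statistic.

```python
from statistics import mean


def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA over the given groups."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = mean(x for g in groups for x in g)
    group_means = [mean(g) for g in groups]
    # Between-group and within-group sums of squares.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Toy concentrations for three clips (illustrative numbers only).
print(one_way_anova_f([1, 2, 3], [4, 5, 6], [7, 8, 9]))  # (27.0, 2, 6)
```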

This problem of insufficient plasma catecholamine data (only five samples per video, i.e., per emotion) is difficult to solve. Even in an experimental study, research ethics advise against making volunteers suffer unnecessarily. Sixteen blood samples per volunteer per session, even though taken through an intravenous line, while watching emotionally intense video clips in a virtual reality environment, are a fairly good number with which to test the hypothesis of this research work. To the best of our knowledge, there is no similar study in the literature; most proposals are theoretical hypotheses and/or analyses of previous experimental results obtained for other purposes.

However, the data obtained were expected to reveal some response patterns for the different target emotions. Although a similar behaviour can be observed in the temporal evolution of adrenaline and noradrenaline concentrations after both fear-related clips (V02 and V04), no statistically significant relations were found, and the affective computing classifiers did not provide good results.

It is true that plasma catecholamine levels are altered by some foods, drinks, medicines and drugs, as well as by strenuous physical exercise and/or recent intense stressful episodes. Amines found in banana, avocado, walnuts, beans, cheese, beer and red wine can modify the blood concentration of these hormones. Foods and drinks containing cocoa, coffee, tea, chocolate, liquorice, or vanilla, as well as drugs (nicotine, cocaine and ethanol) and medicines (aspirin, tricyclic antidepressants, tetracycline, theophylline, blood pressure control agents, and nitroglycerin), have similar effects.

Moreover, emotional responses, including the response to stress and the conditioned response to fear, are shaped by prior life experiences. Traumatic stress-induced fear memories may affect the physiological response and plasma catecholamine levels. There is strong evidence that central catecholamines are involved in the regulation of fear memory through activation of the sympathetic nervous system, and elevated basal catecholamine levels are common in patients with post-traumatic stress disorder (PTSD).

In the study presented, attention was paid to the volunteers’ activity before the experiment, the substances they had taken, and any previous traumatic or stressful experiences.

Although the volunteers had been informed of the recommendations beforehand, they reported the following data. With regard to medicines taken as regular treatment, six volunteers reported the following chronic diseases: diabetes mellitus (1), hypertension (2), cardiac failure (1), ulcerative colitis (1), anaemia (1), and chronic pain (1). Additionally, one volunteer was taking contraceptives, and four volunteers had taken ibuprofen or another anti-inflammatory drug during the two days prior to the experiment. Regarding the avoidance of stimulants in food, drinks and drugs in the 24 h prior to the experiment, 13 volunteers had coffee or tea in that period, and one volunteer drank alcohol. Additionally, three of them ate citrus fruits in that period.

Only four volunteers (v06, v11, v13, v19) fully complied with the recommendations to avoid stimulant foods, drinks and drugs, and they did not take any medication. They were young women aged 23, 30, 29, and 23, respectively. Likewise, three volunteers (v01, v04, and v17) only had a coffee, complied with the rest of the recommendations, and did not take any medication either; they were aged 21, 55, and 24, respectively. Seven volunteers (v02, v05, v09, v12, v14, v15, and v20) only had a coffee and took medication, without presenting differences in their catecholamine concentration levels. In summary, 14 volunteers can be considered fully compliant, while the remaining 7 could present some deviation from regular catecholamine activity.

Regarding prior stressful experiences or specific fears, seven volunteers (v01, v03, v04, v12, v15, v16, and v20) reported previous traumas that could be reactivated by situations like those in video clips V02 and V04. Two of them identified themselves as victims of gender-based violence. However, the evolution of their plasma catecholamine concentration levels did not differ from that of the other volunteers (Figure 15 and Figure 16).

Apart from the extrinsic and intrinsic factors that may affect the results of the study, the authors wish to highlight the low concentrations of these catecholamines in blood plasma. We also tested the ELISA technique, which showed lower sensitivity for these catecholamines: nine women volunteers followed a similar experimental protocol, and 15 blood samples per volunteer were analysed with ELISA kits.

With respect to the hypothesis stated in this work, measuring dopamine, noradrenaline and adrenaline concentrations in blood plasma provides neither better classification nor more accurate differentiation of fear reactions in women.

6. Conclusions

In this work, a methodology and a protocol have been proposed to connect the elicitation of human emotions with the variation of plasma catecholamine concentration. To this end, an immersive virtual reality environment has been arranged to provoke realistic situations in which the volunteer could have intense emotional reactions. Continuous monitoring of physiological variables with a research toolkit system (for the sake of comparison with other affective computing research works) was connected to the virtual environment, together with a labelling procedure for discrete emotions and continuous PAD affective space dimensions.

Using this methodology, an experimental study with 21 volunteers has been conducted with fear as the target emotion, eliciting fear and non-fear states while measuring physiological variables and extracting blood samples after the video stimuli. In this first study, 16 blood samples were extracted per volunteer: 1 basal sample and 5 after each of the three emotion-related video clips (fear related to gender-based violence, joy, and fear). These samples were extracted at 1-min intervals after the visualization of the video clip. Along with the blood samples for plasma catecholamine analysis, physiological variables were measured during the visualization of the video clips: skin temperature, galvanic skin response, blood volume pulse, respiration, and trapezius electromyogram, all measured with a commercial research toolkit.

Additionally, the emotion labelling for every video clip by all the volunteers has been analysed and there is a high degree of agreement in the discrete emotion, which was even better in the PAD affective space dimensions, especially for fear-related video V04. Therefore, we can affirm that the selected video clips are meaningful for the experiment.

The results for the evolution of the features extracted from the physiological variables, as well as the ANOVA statistical analysis, are in accordance with previous works. Differences between features measured during the fear-related clips and during the calm- and joy-related clips have been found for the GSR mean (60 s windows). Additionally, differences have been found between the calm-related and the fear/gender-based-violence fear-related video clips for the interbeat interval (IBI), derived from heart rate. Furthermore, the temporal evolution of these features has been analysed and correlated with the fear-related video clips, identifying precise moments at which the features’ behaviour can be associated with the scene development.

We can conclude that the ANOVA statistical analysis yields no significant p-values that would allow the elicited emotion to be differentiated using only the evolution of plasma catecholamine concentration levels as a variable. Additionally, the temporal evolution of these levels has been analysed without identifying precise patterns that distinguish the fear-related video clips from the joy-related video clip.

In addition, artificial intelligence algorithms for fear classification have been tested with physiological variables and plasma catecholamine concentration levels, both separately and together. The best results were obtained with the features extracted from the physiological variables. Adding either the maximum variation of catecholamines during the five minutes after the video clip visualization or the five measurements of these levels (at 1-min intervals) does not improve classifier performance.
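The two catecholamine feature sets compared above can be built per clip as in the sketch below. Reading "maximum variation" as the largest absolute change from the basal level is our assumption, since the text does not define it. The function and key names are hypothetical, and the concentrations are toy values without units.

```python
def catecholamine_features(basal, post_clip, prefix):
    """Five post-clip concentrations (1 min apart) plus their maximum absolute change
    from the basal level (assumed meaning of 'maximum variation')."""
    if len(post_clip) != 5:
        raise ValueError("expected five post-clip measurements")
    features = {f"{prefix}_t{i}": c for i, c in enumerate(post_clip)}
    features[f"{prefix}_max_var"] = max(abs(c - basal) for c in post_clip)
    return features


def combined_features(physio, catechol):
    """Merge physiological and catecholamine features into one flat feature dict."""
    return {**physio, **catechol}


nad = catecholamine_features(100.0, [110.0, 95.0, 130.0, 120.0, 105.0], "noradrenaline")
print(nad["noradrenaline_max_var"])  # 30.0
```

Either the single `_max_var` entry or the five `_t0` to `_t4` entries could then be appended to the physiological feature vector, which corresponds to the two variants tested.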

The small number of samples, together with the low concentration of catecholamines in blood plasma, makes it impossible to use these data with machine learning techniques for fear classification in this experiment.

Finally, research on this topic should continue, considering the following future actions:

Although the results of this study show that measuring catecholamine concentration does not improve the detection and identification of emotions, a larger sample of volunteers would be desirable in order to detect patterns of variation in this concentration that validate this conclusion.

Following Lövheim’s theory, adding the measurement of blood serotonin concentration would be advisable, since it could improve the discrimination of fear from joy, which are both emotions with a high theoretical degree of activation. In this study, although its inclusion was considered, adding the serotonin measurement entailed the use of another analysis technique, which meant extracting twice as many samples from each volunteer; this was not advisable from an ethical point of view.
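Lövheim’s cube places eight basic emotions at the corners of a cube whose axes are serotonin, dopamine and noradrenaline levels. In that model, fear and joy share high dopamine and low noradrenaline and differ only in serotonin, which is why measuring serotonin is expected to help separate them. The mapping below reproduces the cube as we understand it from Lövheim’s 2012 proposal and should be checked against the original before use. The helper function is hypothetical.

```python
# Corners of Lövheim's cube: (serotonin, dopamine, noradrenaline) -> basic emotion.
LOVHEIM_CUBE = {
    ("low", "low", "low"): "shame/humiliation",
    ("high", "low", "low"): "contempt/disgust",
    ("low", "high", "low"): "fear/terror",
    ("high", "high", "low"): "enjoyment/joy",
    ("low", "low", "high"): "distress/anguish",
    ("high", "low", "high"): "surprise",
    ("low", "high", "high"): "anger/rage",
    ("high", "high", "high"): "interest/excitement",
}
AXES = ("serotonin", "dopamine", "noradrenaline")


def differing_axes(emotion_a, emotion_b):
    """Monoamine axes on which two corners of the cube differ."""
    corner = {emotion: levels for levels, emotion in LOVHEIM_CUBE.items()}
    return [axis for axis, a, b in zip(AXES, corner[emotion_a], corner[emotion_b]) if a != b]


print(differing_axes("fear/terror", "enjoyment/joy"))  # ['serotonin']
```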

In the search for non-invasive emotion detection systems, it would be interesting to analyse the concentration of stress-related biomarkers in sweat (e.g., cortisol) or in saliva (e.g., alpha-amylase). If significant differences were found, these variables could be included in the design of automatic emotion detection systems.

However, in the search for additional information, instead of clustering fear and non-fear emotions, a behaviour pattern was examined for each volunteer following Krohne [66], who suggests that there are two main strategies in stress reaction: vigilance and avoidance. Using an unsupervised approach, the k-means algorithm revealed four clear groups, two of them being a symmetrical representation of the other two. In two of the groups, the third clip (V3) shows a negative variation that lies below the other two clips, whereas the other two groups show a peak in V3 above the values for the other two videos.
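The unsupervised grouping described above can be illustrated with plain Lloyd's k-means. Each point is assumed, hypothetically, to hold one volunteer's catecholamine variation after each of three clips. The toy data, the fixed initial centroids and the use of two clusters are chosen only to make the example deterministic, whereas the study reports four groups. In practice, `sklearn.cluster.KMeans` with several random initializations would be the usual choice.

```python
from math import dist


def kmeans(points, init, iters=100):
    """Lloyd's k-means: alternate nearest-centroid assignment and centroid update."""
    centroids = [tuple(c) for c in init]
    k = len(centroids)
    assignment = []
    for _ in range(iters):
        # Assignment step: index of the closest centroid for every point.
        assignment = [min(range(k), key=lambda j: dist(p, centroids[j])) for p in points]
        # Update step: mean of each cluster's members (keep the old centroid if empty).
        updated = []
        for j in range(k):
            members = [p for p, a in zip(points, assignment) if a == j]
            if members:
                updated.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                updated.append(centroids[j])
        if updated == centroids:
            break
        centroids = updated
    return centroids, assignment


# Toy per-volunteer variations after three clips: a dip versus a peak at the middle clip.
volunteers = [(0.1, -1.0, 0.2), (0.0, -1.2, 0.1), (0.2, 1.1, 0.0), (0.1, 0.9, 0.2)]
centroids, groups = kmeans(volunteers, init=[volunteers[0], volunteers[2]])
print(groups)  # [0, 0, 1, 1]
```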