Abstract

Invasive or uncomfortable procedures, especially during healthcare, trigger emotions. Technological development of the equipment and systems for monitoring and recording psychophysiological functions enables continuous observation of changes in how a person responds to a situation. The presented study focused on the analysis of the individual’s affective state. The results reflect the excitation expressed by the subjects’ statements collected with psychological questionnaires. The research group consisted of 49 participants (22 women and 25 men). The measurement protocol included acquiring the electrodermal activity signal, cardiac signals, and accelerometric signals in three axes. Subjective measurements were acquired for affective state using the JAWS questionnaire, for cognitive skills using the DST, and for verbal fluency using the VFT. The physiological and psychological data were subjected to statistical analysis and then to a machine learning process using different feature selection methods (JMI or PCA). The highest accuracy of the kNN classifier (81.63%) was achieved in combination with the JMI method, with the classes defined by the JAWS test results. The classification sensitivity and specificity were 85.71% and 71.43%, respectively.

1. Introduction

Effective disease management requires a constant search for factors relevant to improving the quality of care and patient safety [1,2]. Analysis of affective state refers to the way the person feels at any given time. The affective state consists of emotional responses, including experience, expression, and physiology. Those components aid in the interpretation or appraisal of the situation that provokes a given emotional response. It could be helpful in clinical practice to identify factors responsible for patient activation and engagement in the therapy [3]. Results of longitudinal studies suggest that patient activation predicts future health outcomes [4] and that it is possible to affect whether the patient successfully participates in the treatment and feels responsible for the healthcare process [5]. Emotions during healthcare are mainly triggered by invasive or frightening treatment experiences and pain during surgical procedures [6,7]. Technological development of the equipment and systems for monitoring and recording psychophysiological functions enables continuous observation of the changes in responding to a situation, making it possible to supplement the psychological data obtained through self-descriptive questionnaires with physiological ones [8,9,10].

According to classic theories, emotions, as complex ways of reacting, emerge in a multi-stage process [11,12]. Emotions are triggered when an event important for safety occurs. The response is formed due to the nervous system activation, cognitive assessment and behavioural reaction [13,14,15]. To evoke an emotion, physiological excitation has to be maintained to determine the significance of the situation for the individual [16]. According to Izard’s concept, four systems are responsible for emotion activation. They include the neural system, which is based on electric stimulation of the brain; the sensorimotor system, which activates the sensations through the body posture and facial expression; the motivation system, in which emotions are generated as a result of sensory experiences and drives; and the cognitive system, which applies to the categorization, assessment and comparison processes [11,12]. Russell and Carroll introduced differentiation of emotions based on arousal (presence or absence of stimulation) and valence (pleasure or displeasure) [17]. Arousal covers emotions on the biological level, in the form of physiological stimulation, while valence applies to the mental experiencing of emotions. The theory developed by van Katwyk et al. [18] includes, in addition to Russell and Carroll’s concept [17], the findings on the occurrence and course of a stress reaction [13,19]. In this approach, positive emotions with a high activation level are described as eustress, while negative emotions with a high degree of intensity are referred to as distress [18].

The previous results indicate the significance of neuroticism and negative emotions for intensifying stress experience during physiotherapeutic procedures in a non-clinical group [9,10]. Anxiety and stress are interrelated and condition one another [20,21,22]. Anxiety disturbs the correct rhythm of a person’s reaction and leads to exaggerated and inadequate reactions even to weak stimuli [23]. Persons characterized by a higher anxiety level tend to be shy, lack self-confidence, be reluctant to take on challenges, and be unable to cope with stress effectively. If persons with high anxiety levels are subject to assessment, they exhibit many behaviours that are useless for fulfilling the task or even hinder it [24]. While negative emotions limit the number of cognitively available solutions to the problem, positive emotions extend the range of solutions [25,26,27]. Moreover, positive emotions play an essential role in building resistance to stress [28,29]. Experiencing negative affective states is also linked to low involvement in the action, which means that the persons devote less energy and show less will to undertake and continue the effort to complete the task and fulfil the requirements set for them [30]. According to Kahn’s assumptions, persons who invest more energy in doing a task have a broader perspective and are open to possibilities and opportunities, which results in them facilitating others’ work [31].

In the biological approach, the emotions triggering is attributed to the amygdala [32]. It is responsible for the body’s defence reactions, stimulating the sympathetic nervous system [33]. The stimulation of sympathetic nerve fibres leads to the activation of eccrine glands, which immediately contributes to the changes in the skin conductivity and can be measured using the skin’s electrodermal activity (EDA) signal [34]. The EDA is one of the most often used signals for al and physiological analysis, as it represents both the prescriptive and pathological condition [35]. The sympathetic activity of the nervous system manages the cognitive and affective states, so the analysis of the EDA signal provides information about the autonomous emotion regulations [36]. The sympathetic nervous system stimulants affect the heart function as well—they improve conduction in the sinoatrial and atrioventricular nodes, which is reflected in such signals as the blood volume pulse (BVP) or heart rate (HR) values [37], being the second most commonly analyzed group of physiological data which characterize emotional states [38]. In addition, the acceleration signal indicates the subject’s movement, and the body or head movements can be used to detect emotions and their magnitude [39].

There is an increasing demand for solutions that support the traditional assessment of affective and cognitive states by analysing physiological signals (e.g., EDA, BVP, ECG or temperature) with machine or deep learning methods, with the aim of building a stand-alone application that predicts the patient’s condition and maximizes diagnostic accuracy [40,41].

The effectiveness of using machine learning methods and data from physiological signals (EDA), compared to the subjective assessment of depression was tested [8,42]. A multimodal approach was using similar bio-signals like in the presented article (BVP, EDA, EMG, temperature, and respiration) to develop a classifier to recognize negative emotions [43]. Another system based on ECG, EDA, skin temperature signals and SVM classifier were designed to assess emotions independently and reflect emotions on the autonomic nervous system [44]. An application has been developed using a photoplethysmographic and EDA signals recorded from a wearable device to analyse the user’s experienced emotions evoked by the presented affective images [45]. Repeated episodes of stress can disrupt a person’s physical and mental stability. Monitoring stress levels has therefore become an important and frequently addressed issue in recent years [40]. The stress level was assessed in a study using the Empatica E4 wristband and EDA signal analysis [46]. Efforts were made several times to predict the stress symptoms, the occurrence of anxiety or depression symptoms by using different machine-learning algorithms based on the results obtained with a complex DASS 42 questionnaire [47]. The effectiveness of the Matching Pursuit algorithm in recognizing emotions based on electrocardiogram and galvanic skin response signals while listening to music was also investigated [48] or during sleep to assess mental health [49]. In the study by Gou et al., the HRV signal determined from ECG, combined with SVM, enables recognizing states such as sadness, anger, fear, happiness, and relaxation [50]. The emotions were also evoked by visual and auditory stimuli (4 different videos) [51] and in addition, the ambient temperature was adjusted while watching the videos to enhance the experience. 
Based on features extracted from the recorded physiological signals (EEG, PPG, EDA), similar emotions as before—happy, relaxed, angsty, and sad—were recognised. Other studies have also proposed a system to recognize emotions (happiness, sadness, anger, fear) evoked by video recordings based on EDA signals combined with different classifiers—gradient-boosting decision tree, logistic regression, and random forest [52]. The differentiation of six other states (joy, sadness, fear, disgust, neutrality, and amusement) based on physiological signals was also the subject of an analysis based, among other things, on skin temperature (SKT), skin conductance (SKC), blood volume pulse (BVP) and heart rate (HR) [53]. Subsequent studies attempted to distinguish between another set of emotions—sadness, anger, stress and surprise, based on short fragments of the electrocardiogram signal, skin temperature variation, electrodermal activity and classification by machine learning techniques [44]. A tool developed by a team led by Carpenter [54] is an example of another screening tool constructed using machine learning to evaluate the risk of anxiety disorders occurrence. Physiological signals were often used to analyse emotional states because they represented the most authentic response of the organism, which is beyond human control. Therefore, in combination with machine learning or deep learning, high accuracies were achieved when distinguishing between individual emotions [55,56]. The paper by Wei et al. proposes a different classification method than previously presented, the Weight Fusion strategy, for the recognition of emotional states based on signals such as electroencephalography (EEG), electrocardiogram (ECG), respiration amplitude (RA) and electrodermal activity (EDA) [57]. The paper by Pinto et al. demonstrated a qualitative approach to the analysis of emotions based on physiological signals (ECG, EDA, EMG) [58]. 
They investigated whether and which signals carry more information in emotional state recognition systems. The general broad interest in methods of using artificial intelligence is reflected not only in assessments of emotional states but also in direct support of medical science for example to improve the performance of conventional contactless methods for heart rate measurement [59] or to detect and classify cardiac arrhythmia [60].

There were also a lot of different approaches to emotion assessment based on data other than physiological signals from wearable devices. Several methods used neuroimaging combined with machine learning algorithms to objectivize psychological diagnostics of patients [61,62] and search for the biomarkers that identify the particular emotional state [63]. Another approach was for systems to recognise emotions based on facial expression [64,65,66,67] or facial micromovements [67]. However, in each of the systems mentioned above, no attempt was made to objectify the emotions experienced, as in each case, the label of the emotional state was merely the subjective evaluation of the subject induced intentionally by a particular activity. The problem remained in recent research and developments. Emotion recognition using Convolutional Neural Networks (CNN) or other deep learning methods (like ResNet, LSTM) was based on the signals recorded when these emotions were evoked [68]. The two largest learning databases, DEAP [69] and MAHNOB-HCI [70], were collected during an experiment where the patients’ physiological signals were recorded when they were watching images or videos intended to evoke specific emotions. The problem of objectifying judgments independent of the subject is a problem that has been studied in many fields, but in the case of artificial intelligence, these classifiers were based on learning sets containing emotions that were intentionally elicited in the subject [71,72].

To the best of the author’s knowledge, there is a gap in identifying the affective states that occur during physiotherapeutic procedures and the description of their significance for an individual’s actions results. Based on the patient’s visual observation during rehabilitation, it is hard to unambiguously determine if the implemented therapy maximizes the effects at the patient’s moderate own effort. This study focuses on the analysis of the individual’s affective state. The results reflect the excitation expressed by the subjects’ statements acquired with psychological questionnaires. They are also expressed by the values of the characteristics determined based on physiological signals. The changes in the motivation to take action are another effect of the excitation. They are significant for the levels of energy, enthusiasm, and concentration on the fulfilled tasks [73]. A reduced effort in the exercise, doing the exercise slower, or ignoring the therapist’s recommendations can manifest the consequences of a lack of involvement resulting from low intensity of emotions or prevalence of negative emotions.

2. Materials and Methods

2.1. Research Group

The research group consisted of 49 students (22 women and 25 men) of the Jerzy Kukuczka Academy of Physical Education in Katowice aged 19–26. Individuals with a current medical condition, current medication intake, or lifetime history of any neurological or psychiatric disorders were excluded from the experiment. All participants provided written informed consent before the beginning of testing. The Bioethics Committee of the Jerzy Kukuczka Academy of Physical Education in Katowice approved the study protocol (No. 3/2019), and the study was conducted according to the Declaration of Helsinki.

2.2. Study Design

The research was carried out in a laboratory of the Institute of Physiotherapy and Health Science of the Jerzy Kukuczka Academy of Physical Education in Katowice in two interconnecting rooms. The rooms were adapted to the research requirements, creating a functional and comfortable space. One room was dedicated to welcoming the volunteers and filling out the consent for research participation. The second room had exercise areas and was arranged so that subjective and sensor-based measurements could be taken in private.

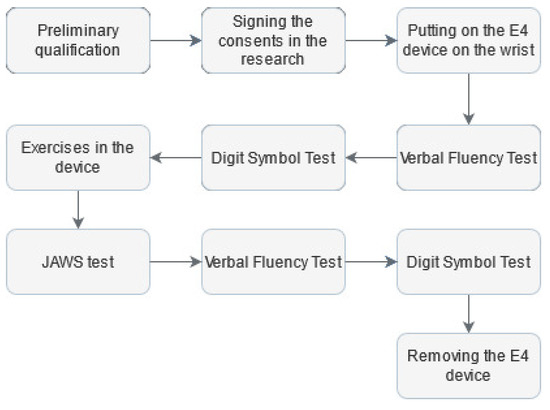

The measurement protocol (Figure 1) was based on a previously proposed concept [9]. This approach used both quantitative and qualitative assessments.

Figure 1. Research protocol.

To record physiological signals and continuously monitor the participants during the research, an FDA-approved Empatica E4 wristband was placed on the wrist of the non-dominant hand [74]. Then, to assess executive abilities, the participant took the Verbal Fluency Test (VFT) in the phonetic version [75]. The VFT is often used in neuropsychology, both in clinical and experimental assessment, because it is simple to perform. Its correct performance depends on the efficiency of executive functions, working memory and linguistic resources in long-term memory [76]. Next, the Digit Symbol Test (DST) was used to assess cognitive functions, including learning, maintaining attention, and solving tasks [77]. After completing the above tests, each person was asked to go to a room where a prototype of the Disc4Spine (D4S) diagnostic and therapeutic system for supporting the rehabilitation of postural defects was located. The standing exercise module is a therapeutic device for corrective exercises involving single-step short muscles responsible for motor control [78]. Immediately after leaving the D4S, the subject was requested to fill out scales assessing their affective state during exercise activity using the study-adapted measure. The original Job-Related Affective Well-Being Scale (JAWS) measures the varied affective responses to perceived job conditions and outcomes; its instructions were modified to place them in the research context [18]. The new version was as follows: “Please use the following scale to answer the question: how often do you feel the following during the exercises?” The study used a 12-item version. It comprises 12 various emotions, both positive and negative. The response format was a 5-point Likert scale (1 = never, 2 = rarely, 3 = sometimes, 4 = often, 5 = very often).

The final elements of the research protocol were retaking the VFT with a different letter than at the start of the study and retaking the DST. After that, the Empatica E4 wristband was removed.

2.3. Data Acquisition

The first device used was the Empatica E4. It consists of a thermometer and a photoplethysmographic sensor measuring cardiac signals such as blood volume pulse (BVP), from which heart rate (HR) and inter-beat interval (IBI) are then computed. Each HR sample was averaged over the previous ten seconds. An electrodermal activity (EDA) sensor measured changes in the skin electrical conductivity, and a triaxial accelerometer (ACC X/Y/Z) monitored the patient’s activity during the tests. The BVP signal was recorded at 64 Hz, the HR signal at 1 Hz, the EDA and temperature signals at 4 Hz, and the acceleration at 32 Hz.

In the Verbal Fluency Test, the subject’s goal was to generate within 60 s as many words as possible in the native language that start with a given letter, chosen randomly each time (excluding X, Y, and all Polish diacritic characters). A proprietary mobile application was used to count the number of uttered words, while the leading letter and the remaining time were continuously presented.

To perform the DST, the participant received a sheet of paper with two lines in the upper part: the first line contained consecutive numbers from 1 to 9, and the second, lower line contained the symbols corresponding to those numbers. The task was to write down, within 60 s, as many symbols as possible corresponding to numbers from the range 1–9 given in random order.

In the D4S module, once the research participant was correctly positioned and secured, they were asked to perform three consecutive muscle-activating exercises of varying difficulty under the physiotherapist’s supervision. The first exercise consisted of alternating forward and backward pelvic tilt for 60 s at a frequency of one sequence per second. Then the subject was asked to perform the external rotation of the feet for 10 s with maximal force, which was measured by a resistance bar that was a component of the system. The third exercise consisted of the following sequence: external rotation of the feet, external rotation of the knees, anterior tilting of the pelvis, shoulder retraction, and, finally, elongation of the spine and holding this position for 10 s. In the next step, the paper version of the JAWS questionnaire was filled out.

3. Data Analysis

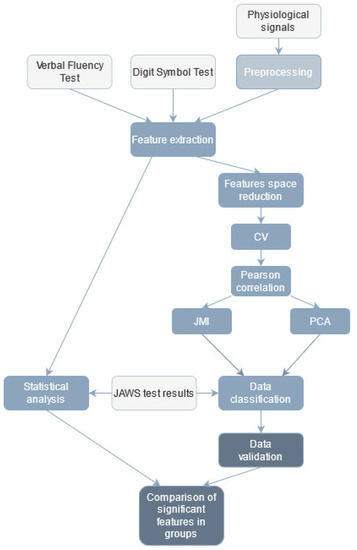

The workflow of data analysis is presented in Figure 2.

Figure 2. Workflow of data analysis (light grey: input data, medium grey: data classification, dark grey: data validation).

The research data were combined and constituted one extensive database of coefficients subjected to further analysis.

3.1. Executive and Cognitive Abilities

The analysis of the Verbal Fluency Test results (before and after exercise, independently) focused on the number of spoken words, the mean time between utterances, the popularity of a given letter in the Polish language according to the National Corpus of Polish [79], and the author’s fluency coefficient, defined as the quotient of the number of uttered words and the letter popularity. Additionally, the differences between the values obtained before and after therapy were calculated for the number of spoken words, the mean time, the letter popularity, and the fluency coefficient. In total, 12 features were obtained. To facilitate the subsequent analysis, a dedicated application generated a .csv file with the results after each test was performed. These results were an essential piece of information, as they indicated verbal-letter fluency.
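The twelve VFT features described above can be sketched as follows. This is only an illustration: the function names, the representation of utterances as timestamps in seconds, the numeric scale of the letter popularity, and the sign convention of the differences (after minus before) are assumptions, not details given in the study.

```python
from statistics import mean


def vft_stage(timestamps, letter_popularity):
    """Four features of one VFT run (utterance times in seconds, assumed input)."""
    n_words = len(timestamps)
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    return {
        "n_words": n_words,
        "mean_gap": mean(gaps) if gaps else 0.0,
        "popularity": letter_popularity,
        # fluency coefficient: number of words divided by letter popularity
        "fluency": n_words / letter_popularity,
    }


def vft_features(before, after):
    """Combine both runs and their differences (after - before, assumed sign)."""
    feats = {}
    for name in before:
        feats[f"{name}_before"] = before[name]
        feats[f"{name}_after"] = after[name]
        feats[f"{name}_diff"] = after[name] - before[name]
    return feats


# Hypothetical example values
before = vft_stage([2.0, 5.0, 9.0, 14.0], letter_popularity=0.05)
after = vft_stage([1.0, 3.0, 6.0], letter_popularity=0.10)
features = vft_features(before, after)
```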

Based on the analysis of the Digit Symbol Test results, a total of nine features were determined. For the tests before and after exercising, independently, these were the number of matches, the number of correct matches, and the author’s match rate (calculated as the quotient of the number of correct matches to all matches). Furthermore, the differences between the values obtained before and after the exercises were calculated for the number of all matches, the number of correct matches, and the match rate. In this DST version, the participant’s most crucial task was to correctly match as many number-symbol pairs as possible. They also had to do it one by one, according to the given sequence of digits. The test was evaluated manually.
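As a small worked illustration of the match rate, the sketch below scores one DST sheet. The symbols, the answer key, and the function name are hypothetical; the study evaluated the sheets manually.

```python
def dst_stage(answers, key):
    """Score one DST sheet: answers and key are symbol sequences in the same order."""
    n_all = len(answers)
    n_correct = sum(1 for given, expected in zip(answers, key) if given == expected)
    # match rate: correct matches divided by all matches
    rate = n_correct / n_all if n_all else 0.0
    return {"n_all": n_all, "n_correct": n_correct, "match_rate": rate}


# Hypothetical sheet: five symbols written, four of them correct
sheet = dst_stage(answers=["+", "o", "x", "=", "o"], key=["+", "o", "x", "=", "x"])
```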

3.2. Psychological Measurement

The intensity of current feelings as a reaction to the research situation was assessed by computing the sum of the answers to the 12 items. The calculations were conducted in PRO IMAGO 7.0. The internal reliability of the scale measured with Cronbach’s alpha was 0.85. The cut-off for dividing the research group was the median score (value 44), assuring a distribution-independent division. There were 15 people in the below-median group and 34 in the above-median group.
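For readers less familiar with these two steps, a minimal sketch of Cronbach’s alpha and a median split is given below. It is not the PRO IMAGO procedure. Assigning scores equal to the median to the upper group is an assumption, suggested only by the reported group sizes (15 and 34); the data in the example are hypothetical.

```python
from statistics import median, variance


def cronbach_alpha(responses):
    """responses: one row per participant, one column per questionnaire item."""
    k = len(responses[0])
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def median_split(scores, cutoff=None):
    """Return 1 for scores at or above the cut-off (assumed), 0 otherwise."""
    cutoff = median(scores) if cutoff is None else cutoff
    return [1 if s >= cutoff else 0 for s in scores]


# Hypothetical data: three perfectly consistent items, five total scores
alpha = cronbach_alpha([[1, 1, 1], [2, 2, 2], [3, 3, 3]])
groups = median_split([40, 44, 47, 39, 50])
```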

3.3. Signal Preprocessing

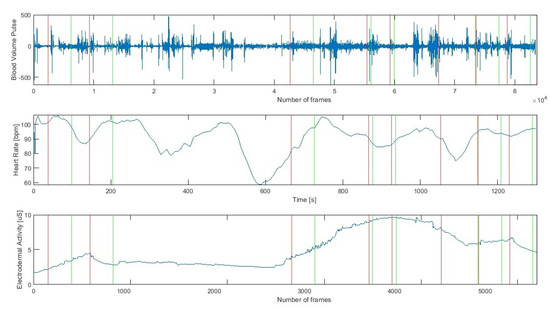

Figure 3. Cardiac (Heart Rate, Blood Volume Pulse) and Electrodermal Activity raw signals during successive stages of the research protocol (a red line indicates the beginning and a green line the end of a stage).

The Empatica E4 wristband allows recording the time markers indicating the beginning of the occurrence of a given protocol element (Figure 3). On this basis, each of the analysed signals was divided into time segments such as:

- VFT before exercise (vft1),

- DST before exercise (dst1),

- exercise no 1 (ex1),

- exercise no 2 (ex2),

- exercise no 3 (ex3),

- psychological test (ptest),

- VFT after exercise (vft2),

- DST after exercise (dst2).

For each signal, features were determined from the individual time intervals. In total, 1133 features were extracted from all modalities.
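The division of a signal into protocol stages can be sketched as below. The marker format (start and end time in seconds per stage) and the function name are assumptions made for illustration; only the stage labels and the 4 Hz EDA sampling rate come from the text.

```python
def split_into_stages(signal, fs, markers):
    """Cut a uniformly sampled signal into named stages.

    signal  -- list of samples
    fs      -- sampling frequency in Hz
    markers -- {stage_name: (start_s, end_s)}, times in seconds (assumed format)
    """
    segments = {}
    for stage, (start_s, end_s) in markers.items():
        start = int(round(start_s * fs))
        end = int(round(end_s * fs))
        segments[stage] = signal[start:end]
    return segments


# Hypothetical 10 s signal sampled at 4 Hz with two marked stages
eda = list(range(40))
stages = split_into_stages(eda, fs=4, markers={"vft1": (0, 5), "dst1": (5, 10)})
```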

3.3.1. Heart Signals

Empatica E4 captured the Blood Volume Pulse signal. The HR signal was determined from the BVP by the algorithm proposed by the Empatica wristband designers. The analysis method was the same for both BVP and HR and involved short-term signal fragments and the determination of statistical and entropy-based features [80,81]. Due to the characteristics of the HR signal, it was not filtered before further calculations. The following statistical parameters were evaluated for each segment in every signal: mean, median, mode, 25th, and 75th percentile, quartile deviation, minimum and maximum value, variance, the fourth and fifth-order moments, skewness, kurtosis, root mean square, range, and the total sum of values. In addition, the following entropy-based features were determined for each of the fragments: total energy, mean and median energy of the signal, and entropy. In total, 20 parameters were calculated for each signal segment.
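The 20 parameters per segment could be computed along the following lines. Several definitions are not specified in the text and are assumptions here: moments are central and population-based, the quartile deviation is half the interquartile range, energy is the squared sample value, and entropy is the Shannon entropy of a 10-bin histogram.

```python
import math
from collections import Counter
from statistics import mean, median, quantiles


def central_moment(x, k):
    mu = mean(x)
    return sum((v - mu) ** k for v in x) / len(x)


def shannon_entropy(x, bins=10):
    """Shannon entropy (bits) of an equal-width histogram (assumed definition)."""
    lo, hi = min(x), max(x)
    if hi == lo:
        return 0.0
    width = (hi - lo) / bins
    counts = Counter(min(int((v - lo) / width), bins - 1) for v in x)
    n = len(x)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def segment_features(x):
    q1, _, q3 = quantiles(x, n=4)
    m2 = central_moment(x, 2)
    energy = [v * v for v in x]
    return {
        "mean": mean(x), "median": median(x),
        "mode": Counter(x).most_common(1)[0][0],
        "p25": q1, "p75": q3, "quartile_dev": (q3 - q1) / 2,
        "min": min(x), "max": max(x), "variance": m2,
        "moment4": central_moment(x, 4), "moment5": central_moment(x, 5),
        "skewness": central_moment(x, 3) / m2 ** 1.5 if m2 else 0.0,
        "kurtosis": central_moment(x, 4) / m2 ** 2 if m2 else 0.0,
        "rms": math.sqrt(mean(energy)), "range": max(x) - min(x),
        "sum": sum(x),
        "energy_total": sum(energy), "energy_mean": mean(energy),
        "energy_median": median(energy),
        "entropy": shannon_entropy(x),
    }


# Hypothetical short segment
feats = segment_features([1, 2, 2, 3, 4, 5, 6, 7])
```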

3.3.2. EDA

The next signal to be analyzed, recorded using Empatica E4, was the electrodermal activity. A wavelet transformation from the Symlet wavelet family was used to filter the signal [82]. The maximum value of the decomposition level was set according to Equation (1).

Based on the available literature [9,10,34,35,81], for each part of the EDA, the same statistical parameters as for the heart signals were computed. Furthermore, the following coefficients were also computed from the EDA signal: total energy, mean and median energy of the signal, entropy, the slope coefficient of the regression line, which determines the trend (tonicity) of the signal, the regression line shift coefficients, the distance of consecutive EDA samples from the regression line, the number of signal intersections with the regression line, and the quadratic metric of the discrepancy between predicted and observed data (obj). Characteristic parameters were also determined from the EDA signal. The first of them was the Galvanic Skin Response (GSR), which presents sudden changes in skin electrical resistance caused by momentary emotional stimulation, increasing the sympathetic nervous system activity [34]. On this basis, the number of GSR responses per minute (rpm), their energy, and the number and energy of significant GSRs, i.e., those with a value above 1.5 µS, were computed. In total, 264 features (33 per stage) were determined for the whole EDA signal considering the individual steps.
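The regression-line features of the EDA signal can be illustrated with an ordinary least-squares fit against time. This is a sketch under assumptions: summarising the sample distances as a mean absolute distance and taking the quadratic metric as the sum of squared residuals are interpretations, not definitions from the study.

```python
def trend_features(eda, fs=4.0):
    """Least-squares line through an EDA segment sampled at fs Hz."""
    n = len(eda)
    t = [i / fs for i in range(n)]
    t_mean = sum(t) / n
    y_mean = sum(eda) / n
    sxx = sum((ti - t_mean) ** 2 for ti in t)
    sxy = sum((ti - t_mean) * (yi - y_mean) for ti, yi in zip(t, eda))
    slope = sxy / sxx
    intercept = y_mean - slope * t_mean
    residuals = [yi - (slope * ti + intercept) for ti, yi in zip(t, eda)]
    # a sign change between neighbouring residuals means the signal crossed the line
    crossings = sum(1 for a, b in zip(residuals, residuals[1:]) if a * b < 0)
    return {
        "slope": slope,
        "intercept": intercept,
        "mean_abs_distance": sum(abs(r) for r in residuals) / n,
        "crossings": crossings,
        "sse": sum(r * r for r in residuals),
    }


# Hypothetical segments: a pure ramp and a zigzag
ramp = trend_features([0.0, 1.0, 2.0, 3.0], fs=1.0)
zigzag = trend_features([0.0, 1.0, 0.0, 1.0, 0.0, 1.0], fs=1.0)
```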

3.3.3. ACC

The acceleration was recorded in three axes—X, Y, and Z using the E4 wristband. The ACC signal was analyzed independently for each axis, according to the same scheme. The first step was to divide the signal into time segments. Then, for each segment, statistical parameters were calculated: mean, median, variance, 25th and 75th percentile, quartile deviation, minimum and maximum value, signal range, the fourth and fifth-order moments, skewness, kurtosis, RMS, total sum, mean and median of the ACC signal energy. For the ACC signal, 20 features were determined for each stage, for a total of 160 features for each axis.

3.4. Statistical Analysis

The results of the JAWS (jw) tests were converted to dichotomous factor variables according to the following criteria:

Next, all the computed variables, all of which were quantitative, were compared according to the JAWS division using Student’s t-test for independent samples, Welch’s t-test, or the Mann-Whitney U test, depending on which assumptions were fulfilled. The assumptions were verified using the Shapiro-Wilk test (normality), the F-test (homogeneity of variances), and the chi-squared test (equal sample sizes). The significance level alpha was set to 0.05 for each test.
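One plausible reading of this test-selection logic is sketched below. The function takes the p-values of the assumption checks as inputs and returns the name of the comparison test. How the chi-squared check of sample sizes entered the decision is not described, so it is left out; the whole rule should be treated as an assumption.

```python
def choose_test(p_normal_a, p_normal_b, p_equal_var, alpha=0.05):
    """Pick a two-group comparison test from assumption-check p-values.

    p_normal_a, p_normal_b -- Shapiro-Wilk p-values of the two groups
    p_equal_var            -- F-test p-value for homogeneity of variances
    """
    both_normal = p_normal_a > alpha and p_normal_b > alpha
    if not both_normal:
        return "mann-whitney-u"
    if p_equal_var > alpha:
        return "student-t"
    return "welch-t"
```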

Additionally, based on the distribution presented above (Equation (2)), the statistical significance of the difference of the JAWS test results between groups was tested with the Shapiro-Wilk W test (normality of distribution) and Mann-Whitney test (equality of medians), at an alpha significance level of 0.05 for each test.

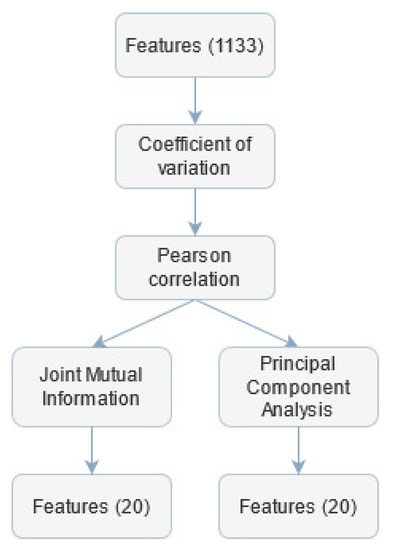

3.5. Features Selection

The parameter extraction stage provided a set of features far too numerous to avoid classification overfitting problems (Figure 4). Therefore, feature selection was performed to decrease the classifier test error.

Figure 4. Subsequent steps of feature selection.

First, the coefficient of variation (CV) for each feature was calculated. If the CV of a given parameter was lower than 0.02, this feature was considered quasi-constant and removed from further analysis. The threshold for the CV was set to such a low value to avoid rejecting informative coefficients.
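The quasi-constant filter can be sketched as follows, taking the coefficient of variation as the standard deviation divided by the absolute mean. Using the population standard deviation and keeping varying features whose mean is exactly zero are assumptions.

```python
from statistics import mean, pstdev


def drop_quasi_constant(features, threshold=0.02):
    """features: {name: list of values}; keep features with CV >= threshold."""
    kept = {}
    for name, values in features.items():
        sd = pstdev(values)
        if sd == 0:
            continue  # strictly constant feature
        mu = mean(values)
        cv = sd / abs(mu) if mu != 0 else float("inf")
        if cv >= threshold:
            kept[name] = values
    return kept


# Hypothetical features
kept = drop_quasi_constant({
    "a": [1.0, 1.0, 1.01, 1.0],   # CV of about 0.004, removed
    "b": [1.0, 2.0, 3.0, 4.0],    # clearly varying, kept
    "c": [5.0, 5.0, 5.0, 5.0],    # constant, removed
})
```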

Next, the Pearson correlation matrix was calculated. Features for which this coefficient was greater than 0.98 were labelled as candidates for removal. Among this set, the feature correlated above the threshold with the largest number of other variables was identified and excluded from the final set. This procedure was repeated until there were no over-correlated coefficients. The threshold value was chosen based on other work concerning similar topics [83]. The computed features had different ranges, so they needed to be rescaled before further analysis. This step was necessary because feature selection and classification algorithms can be sensitive to extreme values. The rescaling was performed using min-max normalization. To discriminate features with small differences, a transformation of the feature values according to an exponential function with a base of 1.1 was proposed. This value was chosen empirically: a transformation was sought that strengthens the differentiation between values within one feature.
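The iterative correlation pruning and the rescaling step can be sketched as below. Comparing the absolute value of the Pearson coefficient with the threshold, and applying the exponential as 1.1 raised to the min-max normalised value, are assumptions about details the text leaves open. The sketch also assumes that constant features were already removed, so no variance is zero.

```python
import math


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def prune_correlated(features, threshold=0.98):
    """Repeatedly drop the feature over-correlated with the most other features."""
    kept = dict(features)
    while kept:
        names = list(kept)
        over = {name: 0 for name in names}
        for i, a in enumerate(names):
            for b in names[i + 1:]:
                if abs(pearson(kept[a], kept[b])) > threshold:
                    over[a] += 1
                    over[b] += 1
        worst = max(names, key=over.get)
        if over[worst] == 0:
            break  # no over-correlated pair is left
        del kept[worst]
    return kept


def rescale(values, base=1.1):
    """Min-max normalise to [0, 1], then apply base ** x (assumed order)."""
    lo, hi = min(values), max(values)
    return [base ** ((v - lo) / (hi - lo)) for v in values]


# Hypothetical features: a, b and c are nearly collinear, d is not
pruned = prune_correlated({
    "a": [1, 2, 3, 4],
    "b": [2, 4, 6, 8.1],
    "c": [1.1, 2, 3.2, 4],
    "d": [4, 1, 3, 2],
})
scaled = rescale([0, 5, 10])
```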

Furthermore, a non-linear Joint Mutual Information (JMI) optimization method was used. It focuses on increasing the complementary information between features using a minimum/maximum criterion to solve the information overestimation problem [84]. The first 20 features with the highest JMI values were selected for further classification independently from class division, according to JAWS.
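To make the idea concrete, the sketch below implements a greedy selection with a max-min criterion on joint mutual information, which is one reading of the minimum/maximum criterion mentioned above and not necessarily the exact variant used in the study. It assumes that the features have already been discretised into a small number of symbols; all names and data are hypothetical.

```python
import math
from collections import Counter


def mutual_information(x, y):
    """I(X;Y) in bits for two equally long sequences of discrete symbols."""
    n = len(x)
    pxy = Counter(zip(x, y))
    px, py = Counter(x), Counter(y)
    return sum(c / n * math.log2(c * n / (px[a] * py[b]))
               for (a, b), c in pxy.items())


def jmim_select(features, labels, n_select):
    """Greedy selection: features is {name: discretised values}."""
    remaining = dict(features)
    # start with the single most informative feature
    first = max(remaining, key=lambda f: mutual_information(remaining[f], labels))
    selected = [first]
    del remaining[first]
    while remaining and len(selected) < n_select:
        def score(f):
            # worst-case joint information of f paired with a selected feature
            return min(
                mutual_information(list(zip(remaining[f], features[s])), labels)
                for s in selected
            )
        best = max(remaining, key=score)
        selected.append(best)
        del remaining[best]
    return selected


# Hypothetical discretised features and binary labels
labels = [0, 0, 1, 1, 0, 0, 1, 1]
features = {
    "noise": [0, 1, 0, 1, 0, 1, 0, 1],
    "copy": [0, 0, 1, 1, 0, 0, 1, 1],
    "partial": [0, 0, 1, 1, 0, 0, 1, 0],
}
chosen = jmim_select(features, labels, n_select=2)
```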

Another approach to select features was employed—the Principal Component Analysis (PCA) method. The PCA is an unsupervised dimensionality reduction technique that analyses correlations between different features based on their linear combination and reduces the number of variables while maximizing their variability [85]. In the presented research, the input data were transformed using the PCA into a new feature space, and the 20 most differentiating variables were selected.

3.6. Classification

For classification purposes, the k-Nearest Neighbours (kNN) classifier was used in supervised learning [86]. The supervised classification task was to estimate the label of a given feature vector based on data distribution. In the presented approach, the value of k was set empirically to 10, and for the similarity evaluations, the cosine metric was employed. Other metrics were also tested, but the achieved results were less differentiating. It was decided to choose machine learning (ML) over deep learning (DL) methods because in DL, the datasets are based on whole signal waveforms, divided into frames, and recorded over a longer time. In the presented work, due to the short time segments (30–60 s), only features for individual stages were extracted from the analysed signals. The research presented here is carried out in laboratory conditions using the prototype of the D4S device, which means that we are dealing with a simulation of short therapy sessions. Emotional changes, both psychological and physiological, occurring in the patient’s body during such exercises are not as fast-changing as in studies/experiments analysing emotions of everyday life. The patient will not experience such intense and sudden emotional stimuli as, for example, when receiving tragic information because our situation is related to regular physical exercise. The therapy of people with scoliosis is a long-term process that does not provide momentary strong pain stimuli. Hence, fatigue has different characteristics than in random situations. In the choice of optimisation (PCA and JMI) and classification methods presented above, less complex approaches were chosen due to the work’s interdisciplinary nature. The way these methods work and the results obtained are understandable for psychologists and physiotherapists who are members of the authors’ team.
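A minimal sketch of a kNN classifier with the cosine metric is shown below, to make the decision rule explicit. It is not the implementation used in the study. It assumes non-zero feature vectors, and with an even k such as 10 a tied vote is resolved in favour of the label seen first among the nearest neighbours, which is an arbitrary choice.

```python
import math
from collections import Counter


def cosine_distance(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def knn_predict(train_x, train_y, query, k=10):
    """Majority vote among the k training vectors closest to query."""
    order = sorted(range(len(train_x)),
                   key=lambda i: cosine_distance(train_x[i], query))
    votes = Counter(train_y[i] for i in order[:k])
    # most_common keeps first-seen order for equal counts (nearest neighbour first)
    return votes.most_common(1)[0][0]


# Hypothetical two-feature training set
train_x = [[1, 0.1], [1, 0.2], [0.9, 0.1], [0.1, 1], [0.2, 1], [0.1, 0.9]]
train_y = [1, 1, 1, 0, 0, 0]
label_a = knn_predict(train_x, train_y, [1, 0.15], k=3)
label_b = knn_predict(train_x, train_y, [0.15, 1], k=3)
```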

3.7. Method Validation

The basic characteristics of classification, indicating the accuracy and quality of the classifier performance were calculated, i.e., accuracy (ACC), sensitivity (TPR, True Positive Rate), specificity (TNR, True Negative Rate), precision (PPV, the number of positive class predictions that belong to the positive class) and F1-score (the harmonic mean between precision and sensitivity) according to the following formulas:

True Positive (TP) is the number of positive observations that the kNN classifier correctly classified as positive. False Negative (FN) is the number of positive observations classified as negative. True Negative (TN) is the number of negative observations correctly classified as negative, and False Positive (FP) is the number of negative observations classified as positive. The above coefficients were based on the division of the study group into positive and negative classes according to the JAWS test results.
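The validation measures named above follow directly from the four confusion-matrix counts. The sketch below uses hypothetical counts chosen only for illustration; they are not the study's confusion matrix, which is not reported here.

```python
def classification_metrics(tp, fn, tn, fp):
    """Compute ACC, TPR, TNR, PPV and F1 from confusion-matrix counts."""
    acc = (tp + tn) / (tp + fn + tn + fp)   # accuracy
    tpr = tp / (tp + fn)                    # sensitivity
    tnr = tn / (tn + fp)                    # specificity
    ppv = tp / (tp + fp)                    # precision
    f1 = 2 * ppv * tpr / (ppv + tpr)        # harmonic mean of PPV and TPR
    return {"ACC": acc, "TPR": tpr, "TNR": tnr, "PPV": ppv, "F1": f1}


# Hypothetical counts (illustration only).
metrics = classification_metrics(tp=8, fn=2, tn=7, fp=3)
```

With these counts the accuracy is 15/20, the sensitivity 8/10, the specificity 7/10, the precision 8/11, and the F1-score 16/21.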

4. Results

4.1. Statistical Analysis of the Signals

For each signal, Table 1 lists the statistically significant features and indicates the stage of the research protocol from which they came.

Table 1.

Statistically significant features according to JAWS division, signal analysed, and protocol stage.

The greatest number of statistically significant features was found for the ACC X signal (34 features). The ACC Y signal was also characterized by many statistically significant coefficients (27 features). The ACC Z signal and the BVP and HR cardiac signals yielded a small number of statistically significant features. The EDA signal had only one significant feature.

4.2. Psychological Measurement

Due to the non-normal distribution of the JAWS values in the two groups (), the analysis of differences between those groups (below the median and above the median) was computed using the Mann-Whitney U-test. Descriptive statistics for each group are shown in Table 2.

Table 2.

Descriptive statistics of psychological data.

It was found that the intensity of current feelings was significantly higher ( ) in the group above the median (M = 47.92) than in the group below the median (M = 40.00). This shows that affective states in the group above the median were characterized by a higher frequency and intensity of emotions. The less intense reaction to the stimuli, characteristic of the group below the median, can be described in behavioural terms as refraining from the spontaneous expression of emotions and, consequently, of gestures and behaviour. A more detailed analysis of the differences in the intensity of individual emotions is presented in Table 3.

Table 3.

The intensity of individual emotions measured by the JAWS test.

Results show that emotions such as gloomy, energetic, inspired, relaxed, and satisfied are more intense in the group above the median. Cohen’s d value indicates a medium to large effect size (Cohen’s ). The intensity of the state described as at ease is lower in the group above the median (Cohen’s ). There are no differences between the groups for emotions such as anger, anxiety, discouragement, disgust, excitement, and fatigue.
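For readers less familiar with the effect-size measure used above, the following sketch computes Cohen's d for two independent groups. It assumes the pooled-standard-deviation variant, which the text does not specify, and the scores are hypothetical.

```python
import math
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d using the pooled sample standard deviation (assumed variant)."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = ((n_a - 1) * variance(group_a)
                  + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)


# Hypothetical scores for the groups above and below the median.
d = cohens_d([2, 4, 6], [1, 3, 5])
```

By Cohen's conventional thresholds, values around 0.2, 0.5, and 0.8 are read as small, medium, and large effects, respectively; the toy data above give d = 0.5.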

4.3. Executive and Cognitive Abilities

Table 4 presents the mean values of the individual features computed for the VFT and DST before and after exercising.

Table 4.

Results of the Verbal Fluency Test and the Digit Symbol Test (statistically significant features in bold).

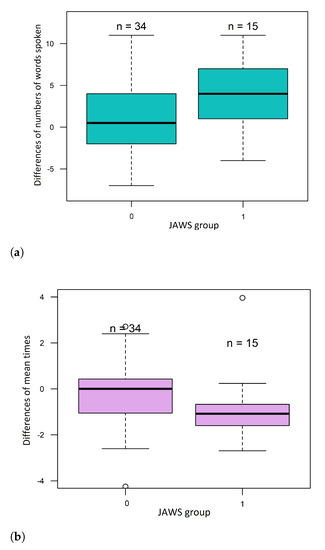

It can be noted that there was an increase in the number of words spoken by the subject. This difference was statistically significant according to the JAWS division. Subjects from JAWS group 1 had a significantly higher median difference in the number of words spoken than those from group 0: four words more after exercising than before, compared with 0.5 words more in JAWS group 0 (Figure 5a).

Figure 5.

Boxplots of (a) the differences in the number of words spoken and (b) the differences in mean times between words.

The mean time between consecutive spoken words decreased. This difference was also statistically significant according to the JAWS division. The mean time in JAWS 1 subjects decreased by 1.1 s (median difference in the mean time), while in the JAWS 0 group the mean time did not change: the median difference was 0.01 s (Figure 5b). The popularity of the chosen letters in the second VFT was lower than in the first attempt. However, this difference was not statistically significant. The mean value of the fluency coefficient also changed. It was higher in the VFT after the exercises than before, but the difference was not statistically significant.

In the second DST attempt, all obtained parameters increased. The number of total matches increased by about six number-symbol pairs, and the accuracy of matching symbols to numbers improved, as did the digit rate. However, none of these features showed statistical significance against the JAWS division.

4.4. Data Classification

4.4.1. Features Selection

Based on the value of and the correlation coefficient, 636 features were selected for further analysis. The JMI algorithm provided a JMI coefficient value for each feature, ranging from 0 to 5.6147. The 20 features with the highest JMI values were selected, which corresponds to a cut-off of 5.485623.

The selected features are presented in Table 5.

Table 5.

Features selected using the JMI method.
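To make the idea behind JMI-based selection concrete, the sketch below implements one common greedy formulation of the criterion: the first feature maximises the mutual information with the label, and each subsequent feature maximises the sum of joint mutual information terms with the features already chosen. It assumes discrete (already binned) feature values and is not necessarily the implementation used in the study; all names and data are hypothetical.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """I(X;Y) in bits for two equal-length sequences of hashable symbols."""
    n = len(xs)
    px, py, pxy = Counter(xs), Counter(ys), Counter(zip(xs, ys))
    return sum((c / n) * math.log2(c * n / (px[x] * py[y]))
               for (x, y), c in pxy.items())


def jmi_select(features, labels, n_select):
    """Greedy JMI selection; `features` maps a name to its list of values."""
    remaining = list(features)
    first = max(remaining,
                key=lambda f: mutual_information(features[f], labels))
    selected = [first]
    remaining.remove(first)
    while remaining and len(selected) < n_select:
        def score(f):
            # Sum of I((X_f, X_s); Y) over the features selected so far.
            return sum(
                mutual_information(list(zip(features[f], features[s])), labels)
                for s in selected)
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


# Hypothetical binned data: "copy" duplicates "strong", while "comp" is
# weak on its own but resolves the one case that "strong" gets wrong.
labels = [0, 0, 0, 0, 1, 1, 1, 1]
features = {
    "strong": [0, 0, 0, 1, 1, 1, 1, 1],
    "copy":   [0, 0, 0, 1, 1, 1, 1, 1],
    "comp":   [0, 0, 0, 1, 0, 0, 0, 0],
}
chosen = jmi_select(features, labels, 2)
```

In this toy case a ranking by individual mutual information would place the redundant "copy" second, whereas the joint criterion prefers the complementary "comp" feature.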

The second approach to reducing the number of features was the PCA transformation. The number of components was limited to at most 20, chosen as those with the highest variance.
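The following dependency-free sketch shows how principal components can be extracted in order of decreasing variance, so that keeping the first few corresponds to keeping those with the highest variance. It uses power iteration with deflation purely for illustration; this is an assumption and not the routine used in the study, and a numerical library would normally be preferred. The data are hypothetical.

```python
import math


def pca_components(rows, n_components, n_iter=200):
    """Return (components, variances) of the leading principal components."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centred = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d).
    cov = [[sum(c[i] * c[j] for c in centred) / (n - 1) for j in range(d)]
           for i in range(d)]
    components, variances = [], []
    for _ in range(n_components):
        # Fixed start vector; power iteration can fail in the special case
        # where this vector is orthogonal to the leading eigenvector.
        v = [1.0 / math.sqrt(d)] * d
        for _ in range(n_iter):
            w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        # Rayleigh quotient: variance explained along v.
        lam = sum(v[i] * sum(cov[i][j] * v[j] for j in range(d))
                  for i in range(d))
        components.append(v)
        variances.append(lam)
        # Deflation: remove the found direction before the next search.
        cov = [[cov[i][j] - lam * v[i] * v[j] for j in range(d)]
               for i in range(d)]
    return components, variances


# Hypothetical data lying exactly on the line y = 2x.
rows = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0], [4.0, 8.0]]
components, variances = pca_components(rows, n_components=1)
```

For these data the first component points along (1, 2)/√5 and carries all of the variance (25/3); projecting the centred observations onto the retained components gives the reduced feature set.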

4.4.2. Machine Learning

Table 6 presents the kNN classifier performance for 20 features selected independently with the JMI and PCA algorithms for the division into two classes relative to the JAWS labels.

Table 6.

Evaluation of the kNN classifier.

The highest classifier accuracy was achieved for kNN in combination with JMI (81.63%). The highest sensitivity (TPR) was achieved for kNN with PCA. However, this combination yielded a low specificity, which is reflected in its lower accuracy compared to kNN with JMI. The F1-score, the harmonic mean of precision and sensitivity, reached its highest value (0.90) for kNN with JMI.

Overall, the kNN classifier performed best in combination with JMI: it achieved the highest accuracy, precision, and F1-score, and its sensitivity was comparable to its specificity. The PCA method in combination with kNN gave slightly lower but still satisfactory results.

5. Discussion

Physiotherapy, understood as the sum of medical influences on the patient whose fundamental objective is to restore the highest possible functional performance [87], can be strengthened by monitoring the affective state that reflects the attitude to the effort. Information for therapists about the emotions experienced by patients can help build and strengthen the relationship [88] and support the coordination of actions [73], similarly to the affective contagion phenomenon [89].

The protocol presented in this paper was based on the acquisition of the electrodermal activity signal, cardiac signals, and accelerometric signals in three axes. Subjective assessments were gathered using questionnaires that measured the intensity and diversification of emotions (JAWS), cognitive skills such as learning, attention focusing and shifting, and processing speed (DST), and verbal fluency (VFT). The physiological and psychological data were subjected to statistical analysis and then to a machine-learning process using different feature selection methods (JMI or PCA). In the JMI method, the twenty most promising features were selected, and all of them were BVP and EDA signal features. This likely confirms the significance of both signals in the psychophysiological analysis of sympathetic nervous system stimulation and of experienced affective states. A comparison of the statistically significant features with those indicated as differentiating by the JMI method shows that only one feature was selected in both cases. Hence, some features indicated as statistically significant are also differentiating features for machine learning methods. The highest accuracy of the kNN classifier employed in this study was achieved in combination with JMI (81.63%). The classification sensitivity and specificity were 85.71% and 71.43%, respectively, which indicates that most physiological observations were classified correctly according to the results of the psychological analyses. Both measures are essential in medical examinations because they provide evidence of a correct diagnosis [90]. In this paper, they describe the classification of the intensity and diversification of the emotions experienced by the subjects.

Psychological measurement tests measure emotions directly after they were induced rather than during their occurrence [91]. Measurement sensors can take over this function through continuous measurement of physiological reactions. This also avoids relying on the subjects’ retrospective reports, which matters because humans tend to overestimate the frequency and intensity of emotions when they evaluate them retrospectively [92]. Based on the obtained results, BVP and EDA signals are objective measures supporting the psychological assessment. They offer the opportunity to support behavioural diagnostics and to analyse the subjects’ physiological reactions in various everyday situations more thoroughly. The approach based on the analysis of the EDA signal and its features is an example of such attempts [10]. To objectivize the patient’s emotional state during therapy, a set of analytical parameters (signal errors) was proposed, based on which the affect classification accuracy reached 86.37%.

There are papers on similar topics that analyse physiological data correlated with psychological data to evaluate mental disorders, such as depression or anxiety states. Ghandeharioun et al. used different machine-learning methods, Random Forest and adaptive boosting (AdaBoost), and obtained a low RMSE (Root Mean Square Error) value. They concluded that depression symptoms could be measured continuously using data from sensors [8]. There is an analogy between the study by Ghandeharioun et al. and the one presented in this paper. Both analyses were based on a subjective assessment with psychological tests, and both aimed to objectivize the behavioural state based on physiological data. In the paper by Richter et al., the STAI questionnaire was used to evaluate anxiety and depression levels [42]. The data obtained from a set of behavioural tests (from a ready-made database) and their analysis with a machine learning method, the Random Forest algorithm, helped to detect unique patterns characterizing depression and anxiety states with a maximum accuracy of 74.18%. The key cognitive mechanisms in anxiety and depression were also indicated, but the whole research protocol was based on subjective data from psychological tests. The research was not objectified using physiological data, which can significantly affect the results.

Carpenter et al. proposed a screening tool for assessing the risk of anxiety disorders in children based on the Preschool Age Psychiatric Assessment (PAPA) test and a machine learning algorithm [54]. They used the alternating decision trees classifier (ADtrees) and data from a public database. The work revealed that machine learning helped to reduce the number of items necessary to identify anxiety disorders in children by one order of magnitude, with 96% accuracy. Still, this is a subjective assessment based only on psychological data, not supported by any physiological data. In the paper by Priya et al., predictions concerning anxiety, depression, and stress were made using different machine learning algorithms [47]. The psychological data originated from the Depression, Anxiety and Stress Scale (DASS 21). The best results for predicting these emotional states were achieved with the Random Forest classifier: 79.80% for depression states. The kNN method was also employed, but it did not render satisfactory results. The score factor was revealed to be an essential parameter for such algorithms, combined with behavioural data, while the specificity parameter revealed that the algorithms were susceptible to negative results. The studies presented in this paper are analogous with regard to the evaluated behavioural states and the applied classification methods; however, the classification accuracy achieved by Priya et al. with the kNN method for anxiety reached 69.8%, which is worse than the 77.5% obtained in this paper. Moreover, Priya et al. used only subjective questionnaire data, with no objectivisation attempt. A different approach to emotional state assessment was presented by Boeke et al. [63]. The authors sought biomarkers that describe the patient’s mental condition based on neuroimaging combined with machine-learning algorithms. Still, no significant biomarkers were found. The cost of a neuroimaging test and its availability, compared to easy monitoring of the patient through continuous recording of physiological signals, are another concern.

In the D4S system, chronically ill people with scoliosis will be subjected to therapy. Their emotions will depend mainly on their character and not on the momentary state induced by the exercise performed with the therapist. We assume that future research will make it possible to determine the behavioural profile of the patient and, later, to develop a system for the physiological and behavioural assessment of the patient during therapy. The laboratory conditions in the presented research protocol were chosen deliberately because the D4S system will be used in therapeutic sessions when the physiotherapist is working with patients and not during everyday activities. Our further work will focus on extending the presented classification with real-time signal analysis, which could improve the therapy by alerting the therapist and allowing changes to be made on the spot.

6. Conclusions

In affective states accompanied by a high degree of excitation, a clear emotional expression is observed. Psychological measurement can provide data on the intensity and diversification of emotional reactions, which can be detected by measuring selected physiological signals. The EDA and BVP signals demonstrate the highest dependence of the behavioural state on the physiological state. The tested protocol can be part of a system oriented towards equipping both the patient and the professional with the tools to manage diseases like scoliosis. The analysis of the patient’s functioning during the therapy offers the possibility of developing interventions aimed at controlling and regulating the experienced emotions to a level that will help the patient to recover. When behavioural medicine is applied to rehabilitation, the therapist should systematically consider the biopsychosocial factors that are relevant to the patient’s activity, both temporarily and, more importantly, in the long term.

Author Contributions

Conceptualization, P.R.-K., A.P. and A.W.M.; Investigation, P.R.-K., A.P., D.K. and M.D.-W.; Methodology, P.R.-K., A.P., M.D.B., M.N.B., D.K. and A.M.; Supervision, A.W.M.; Validation, P.R.-K. and M.D.B.; Visualization, P.R.-K. and M.D.B.; Writing—original draft, P.R.-K., A.P., M.D.B. and M.N.B.; Writing—review & editing, P.R.-K., A.P., M.N.B., D.K., M.D.-W. and A.W.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Polish Ministry of Science and Silesian University of Technology statutory financial support No. 07/010/BK_21/1006 (BK-296/RIB1/2021).

Institutional Review Board Statement

The study was conducted following the Declaration of Helsinki, and the protocol was approved by the Bioethics Committee of the Jerzy Kukuczka Academy of Physical Education in Katowice (No. 3/2019, 17 January 2019).

Informed Consent Statement

All subjects gave their informed consent for inclusion before they participated in the study.

Data Availability Statement

Data not available due to privacy and ethical restrictions.

Acknowledgments

The data was collected as a part of the project “DISC4SPINE dynamic individual stimulation and control for spine and posture interactive rehabilitation” (grant no. POIR.04.01.02-00-0082/17-00).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ACC X/Y/Z | Accelerometer X/Y/Z |
| ACC | Accuracy |
| BVP | Blood Volume Pulse |
| | Coefficient of variation |
| D4S | Disc4Spine Project |
| DL | Deep Learning |
| EDA | Electrodermal Activity |
| FN | False Negative |
| FP | False Positive |
| GSR | Galvanic Skin Response |
| HR | Heart Rate |
| JAWS | Job-Related Affective Well-being Scale |
| JMI | Joint Mutual Information |
| kNN | k-Nearest Neighbours |
| ML | Machine Learning |
| obj | Observed data of EDA |
| PCA | Principal Component Analysis |
| PPV | Precision |
| TN | True Negative |
| TNR | True Negative Rate |
| TP | True Positive |
| TPR | True Positive Rate |
| VFT | Verbal Fluency Test |

References

- Lemmens, K.M.M.; Nieboer, A.P.; Van Schayck, C.P.; Asin, J.D.; Huijsman, R. A model to evaluate quality and effectiveness of disease management. BMJ Qual. Saf. 2008, 17, 447–453.

- Casalino, L.P. Disease management and the organization of physician practice. JAMA 2005, 293, 485–488.

- Greene, J.; Hibbard, J.H. Why does patient activation matter? An examination of the relationships between patient activation and health-related outcomes. J. Gen. Intern. Med. 2012, 27, 520–526.

- Gagnon, M.P.; Ndiaye, M.A.; Larouche, A.; Chabot, G.; Chabot, C.; Buyl, R.; Fortin, J.-P.; Giguère, A.; Leblanc, A.; Légaré, F.; et al. Optimising patient active role with a user-centred eHealth platform (CONCERTO+) in chronic diseases management: A study protocol for a pilot cluster randomised controlled trial. BMJ Open 2019, 9, e028554.

- Friedberg, M.W.; SteelFisher, G.K.; Karp, M.; Schneider, E.C. Physician groups’ use of data from patient experience surveys. J. Gen. Intern. Med. 2011, 26, 498–504.

- Harper, F.W.; Peterson, A.M.; Uphold, H.; Albrecht, T.L.; Taub, J.W.; Orom, H.; Phipps, S.; Penner, L.A. Longitudinal study of parent caregiving self-efficacy and parent stress reactions with pediatric cancer treatment procedures. Psycho Oncol. 2013, 22, 1658–1664.

- Terry, R.; Niven, C.; Brodie, E.; Jones, R.; Prowse, M. An exploration of the relationship between anxiety, expectations and memory for postoperative pain. Acute Pain 2007, 9, 135–143.

- Ghandeharioun, A.; Fedor, S.; Sangermano, L.; Ionescu, D.; Alpert, J.; Dale, C.; Sontag, D.; Picard, R. Objective assessment of depressive symptoms with machine learning and wearable sensors data. In Proceedings of the 2017 Seventh International Conference on Affective Computing and Intelligent Interaction (ACII), San Antonio, TX, USA, 23–26 October 2017; pp. 325–332.

- Romaniszyn, P.; Kania, D.; Bugdol, M.N.; Pollak, A.; Mitas, A.W. Behavioral and Physiological Profile Analysis While Exercising—Case Study. In Information Technology in Biomedicine; Springer: Cham, Switzerland, 2021; pp. 161–173.

- Romaniszyn-Kania, P.; Pollak, A.; Danch-Wierzchowska, M.; Kania, D.; Myśliwiec, A.P.; Piętka, E.; Mitas, A.W. Hybrid System of Emotion Evaluation in Physiotherapeutic Procedures. Sensors 2020, 20, 6343.

- Izard, C.E. Four systems for emotion activation: Cognitive and noncognitive processes. Psychol. Rev. 1993, 100, 68.

- Ackerman, B.P.; Abe, J.A.A.; Izard, C.E. Differential emotions theory and emotional development. In What Develops in Emotional Development? Springer: Boston, MA, USA, 1998; pp. 85–106.

- Lazarus, R.S.; Folkman, S. Stress, Appraisal, and Coping; Springer: New York, NY, USA, 1984.

- Lazarus, R.S.; Smith, C.A. Knowledge and appraisal in the cognition—Emotion relationship. Cogn. Emot. 1988, 2, 281–300.

- Beck, A.T.; Clark, D.A. An information processing model of anxiety: Automatic and strategic processes. Behav. Res. Ther. 1997, 35, 40–58.

- Parkinson, B. Untangling the appraisal-emotion connection. Personal. Soc. Psychol. Rev. 1997, 1, 62–79.

- Russell, J.A.; Carroll, J.M. On the bipolarity of positive and negative affect. Psychol. Bull. 1999, 125, 3.

- Van Katwyk, P.T.; Fox, S.; Spector, P.E.; Kelloway, E.K. Using the Job-Related Affective Well-Being Scale (JAWS) to investigate affective responses to work stressors. J. Occup. Health Psychol. 2000, 5, 219.

- Selye, H. The Stress of Life; McGraw-Hill Book Company: New York, NY, USA, 1956.

- Buchwald, P.; Schwarzer, C. The exam-specific Strategic Approach to Coping Scale and interpersonal resources. Anxiety Stress Coping 2003, 16, 281–291.

- Schachter, R. Enhancing performance on the scholastic aptitude test for test-anxious high school students. Biofeedback 2007, 35, 105–109.

- Shaikh, B.; Kahloon, A.; Kazmi, M.; Khalid, H.; Nawaz, K.; Khan, N.; Khan, S. Students, stress and coping strategies: A case of Pakistani medical school. Educ. Health Chang. Learn. Pract. 2004, 17, 346–353.

- Cameron, L.D. Anxiety, cognition, and responses to health threats. In The Self-Regulation of Health and Illness Behaviour; Cameron, L.D., Leventhal, H., Eds.; Routledge: London, UK, 2003; pp. 157–183.

- Wine, J.D. Evaluation anxiety: A cognitive-attentional construct. In Series in Clinical & Community Psychology: Achievement, Stress & Anxiety; American Psychological Association: Washington, DC, USA, 1982; pp. 207–219.

- Fredrickson, B.L.; Mancuso, R.A.; Branigan, C.; Tugade, M.M. The undoing effect of positive emotions. Motiv. Emot. 2000, 24, 237–258.

- Fredrickson, B.L.; Joiner, T. Positive emotions trigger upward spirals toward emotional well-being. Psychol. Sci. 2002, 13, 172–175.

- Fredrickson, B.L.; Levenson, R.W. Positive emotions speed recovery from the cardiovascular sequelae of negative emotions. Cogn. Emot. 1998, 12, 191–220.

- Tugade, M.M.; Fredrickson, B.L. Regulation of positive emotions: Emotion regulation strategies that promote resilience. J. Happiness Stud. 2007, 8, 311–333.

- Gable, S.L.; Reis, H.T.; Impett, E.A.; Asher, E.R. What Do You Do When Things Go Right? The Intrapersonal and Interpersonal Benefits of Sharing Positive Events. J. Personal. Soc. Psychol. 2004, 87, 228–245.

- Bakker, A.B.; Demerouti, E. The job demands-resources model: State of the art. J. Manag. Psychol. 2007, 22, 309–328.

- Kahn, W.A. Psychological conditions of personal engagement and disengagement at work. Acad. Manag. J. 1990, 33, 692–724.

- LeDoux, J. The Emotional Brain: The Mysterious Underpinnings of Emotional Life; Simon and Schuster: New York, NY, USA, 1998.

- Buijs, R.M.; Van Eden, C.G. The integration of stress by the hypothalamus, amygdala and prefrontal cortex: Balance between the autonomic nervous system and the neuroendocrine system. Prog. Brain Res. 2000, 126, 117–132.

- Boucsein, W. Electrodermal Activity; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012.

- Posada-Quintero, H.F.; Chon, K.H. Innovations in electrodermal activity data collection and signal processing: A systematic review. Sensors 2020, 20, 479.

- Carlson, N.R. Physiology of Behavior; Pearson Higher Ed: Amherst, MA, USA, 2012.

- Benarroch, E.E. The central autonomic network: Functional organization, dysfunction, and perspective. Mayo Clin. Proc. 1993, 68, 988–1001.

- Jerritta, S.; Murugappan, M.; Nagarajan, R.; Wan, K. Physiological signals based human emotion recognition: A review. In Proceedings of the 2011 IEEE 7th International Colloquium on Signal Processing and its Applications, Penang, Malaysia, 4–6 March 2011; pp. 410–415.

- Ekman, P. An argument for basic emotions. Cogn. Emot. 1992, 6, 169–200.

- Nath, R.K.; Thapliyal, H.; Caban-Holt, A. Machine learning based stress monitoring in older adults using wearable sensors and cortisol as stress biomarker. J. Signal Process. Syst. 2021, 1–13.

- Bota, P.; Wang, C.; Fred, A.; Silva, H. Emotion assessment using feature fusion and decision fusion classification based on physiological data: Are we there yet? Sensors 2020, 20, 4723.

- Richter, T.; Fishbain, B.; Markus, A.; Richter-Levin, G.; Okon-Singer, H. Using machine learning-based analysis for behavioral differentiation between anxiety and depression. Sci. Rep. 2020, 10, 1–12.

- Maaoui, C.; Pruski, A. Emotion recognition through physiological signals for human-machine communication. Cut. Edge Robot. 2010, 2010, 11.

- Kim, K.H.; Bang, S.W.; Kim, S.R. Emotion recognition system using short-term monitoring of physiological signals. Med. Biol. Eng. Comput. 2004, 42, 419–427.

- Udovičić, G.; Ðerek, J.; Russo, M.; Sikora, M. Wearable emotion recognition system based on GSR and PPG signals. In Proceedings of the 2nd International Workshop on Multimedia for Personal Health and Health Care, Mountain View, CA, USA, 23 October 2017; pp. 53–59.

- Gouverneur, P.; Jaworek-Korjakowska, J.; Köping, L.; Shirahama, K.; Kleczek, P.; Grzegorzek, M. Classification of Physiological Data for Emotion Recognition. In Proceedings of the International Conference on Artificial Intelligence and Soft Computing, Zakopane, Poland, 11–15 June 2017; Springer: Cham, Switzerland, 2017; pp. 619–627.

- Priya, A.; Garg, S.; Tigga, N.P. Predicting anxiety, depression and stress in modern life using machine learning algorithms. Procedia Comput. Sci. 2020, 167, 1258–1267.

- Goshvarpour, A.; Abbasi, A.; Goshvarpour, A. An accurate emotion recognition system using ECG and GSR signals and matching pursuit method. Biomed. J. 2017, 40, 355–368.

- Park, S.; Li, C.T.; Han, S.; Hsu, C.; Lee, S.W.; Cha, M. Learning sleep quality from daily logs. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 2421–2429.

- Guo, H.W.; Huang, Y.S.; Lin, C.H.; Chien, J.C.; Haraikawa, K.; Shieh, J.S. Heart rate variability signal features for emotion recognition by using principal component analysis and support vectors machine. In Proceedings of the 2016 IEEE 16th International Conference on Bioinformatics and Bioengineering (BIBE), Taichung, Taiwan, 31 October–2 November 2016; pp. 274–277.

- Raheel, A.; Majid, M.; Alnowami, M.; Anwar, S.M. Physiological sensors based emotion recognition while experiencing tactile enhanced multimedia. Sensors 2020, 20, 4037.

- Chen, S.; Jiang, K.; Hu, H.; Kuang, H.; Yang, J.; Luo, J.; Chen, X.; Li, Y. Emotion Recognition Based on Skin Potential Signals with a Portable Wireless Device. Sensors 2021, 21, 1018.

- Gouizi, K.; Bereksi Reguig, F.; Maaoui, C. Emotion recognition from physiological signals. J. Med. Eng. Technol. 2011, 35, 300–307.

- Carpenter, K.L.; Sprechmann, P.; Calderbank, R.; Sapiro, G.; Egger, H.L. Quantifying risk for anxiety disorders in preschool children: A machine learning approach. PLoS ONE 2016, 11, e0165524.

- Zhuang, J.R.; Guan, Y.J.; Nagayoshi, H.; Muramatsu, K.; Watanuki, K.; Tanaka, E. Real-time emotion recognition system with multiple physiological signals. J. Adv. Mech. Des. Syst. Manuf. 2019, 13, JAMDSM0075.

- Domínguez-Jiménez, J.A.; Campo-Landines, K.C.; Martínez-Santos, J.C.; Delahoz, E.J.; Contreras-Ortiz, S.H. A machine learning model for emotion recognition from physiological signals. Biomed. Signal Process. Control 2020, 55, 101646.

- Wei, W.; Jia, Q.; Feng, Y.; Chen, G. Emotion recognition based on weighted fusion strategy of multichannel physiological signals. Comput. Intell. Neurosci. 2018, 2018, 5296523.

- Pinto, G.; Carvalho, J.M.; Barros, F.; Soares, S.C.; Pinho, A.J.; Brás, S. Multimodal emotion evaluation: A physiological model for cost-effective emotion classification. Sensors 2020, 20, 3510.

- Ni, A.; Azarang, A.; Kehtarnavaz, N. A Review of Deep Learning-Based Contactless Heart Rate Measurement Methods. Sensors 2021, 21, 3719. [Google Scholar] [CrossRef] [PubMed]

- Chen, T.M.; Huang, C.H.; Shih, E.S.; Hu, Y.F.; Hwang, M.J. Detection and classification of cardiac arrhythmias by a challenge-best deep learning neural network model. Iscience 2020, 23, 100886. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Apicella, A.; Arpaia, P.; Mastrati, G.; Moccaldi, N.; Prevete, R. Preliminary validation of a measurement system for emotion recognition. In Proceedings of the 2020 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Bari, Italy, 1 June–1 July 2020; pp. 1–6. [Google Scholar]

- Choppin, A. EEG-Based Human Interface for Disabled Individuals: Emotion Expression with Neural Networks. Master’s Thesis, Tokyo Institute of Technology, Yokohama, Japan, 2000, unpublished. [Google Scholar]

- Boeke, E.A.; Holmes, A.J.; Phelps, E.A. Toward robust anxiety biomarkers: A machine learning approach in a large-scale sample. Biol. Psychiatry: Cogn. Neurosci. Neuroimaging 2020, 5, 79. [Google Scholar] [CrossRef] [PubMed]

- Baltrušaitis, T.; Robinson, P.; Morency, L.P. Openface: An open source facial behavior analysis toolkit. In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; pp. 1–10. [Google Scholar]

- Busso, C.; Deng, Z.; Yildirim, S.; Bulut, M.; Lee, C.M.; Kazemzadeh, A.; Lee, S.; Neumann, U.; Narayanan, S. Analysis of emotion recognition using facial expressions, speech and multimodal information. In Proceedings of the 6th International Conference on Multimodal Interfaces, State College, PA, USA, 13–15 October 2004; pp. 205–211. [Google Scholar]

- Den Uyl, M.J.; Van Kuilenburg, H. The FaceReader: Online facial expression recognition. In Proceedings of the Measuring Behavior, Wageningen, The Netherlands, 30 August–2 September 2005; pp. 589–590. [Google Scholar]

- Park, S.; Lee, S.W.; Whang, M. The Analysis of Emotion Authenticity Based on Facial Micromovements. Sensors 2021, 21, 4616. [Google Scholar] [CrossRef]

- Yu, D.; Sun, S. A Systematic Exploration of Deep Neural Networks for EDA-Based Emotion Recognition. Information 2020, 11, 212. [Google Scholar] [CrossRef] [Green Version]

- Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. Deap: A database for emotion analysis; using physiological signals. IEEE Trans. Affect. Comput. 2011, 3, 18–31. [Google Scholar] [CrossRef] [Green Version]

- Soleymani, M.; Lichtenauer, J.; Pun, T.; Pantic, M. A multimodal database for affect recognition and implicit tagging. IEEE Trans. Affect. Comput. 2011, 3, 42–55.

- Al Machot, F.; Elmachot, A.; Ali, M.; Al Machot, E.; Kyamakya, K. A deep-learning model for subject-independent human emotion recognition using electrodermal activity sensors. Sensors 2019, 19, 1659.

- Ganapathy, N.; Veeranki, Y.R.; Swaminathan, R. Convolutional neural network based emotion classification using electrodermal activity signals and time-frequency features. Expert Syst. Appl. 2020, 159, 113571.

- Emery, C.F.; Leatherman, N.E.; Burker, E.J.; MacIntyre, N.R. Psychological outcomes of a pulmonary rehabilitation program. Chest 1991, 100, 613–617.

- E4 Wristband User’s Manual 20150608; Empatica: Milano, Italy, 2015; pp. 5–16.

- Troyer, A.K.; Moscovitch, M.; Winocur, G.; Leach, L.; Freedman, M. Clustering and switching on verbal fluency tests in Alzheimer’s and Parkinson’s disease. J. Int. Neuropsychol. Soc. 1998, 4, 137–143.

- Miller, E. Verbal fluency as a function of a measure of verbal intelligence and in relation to different types of cerebral pathology. Br. J. Clin. Psychol. 1984, 23, 53–57.

- Conn, H.O. Trailmaking and number-connection tests in the assessment of mental state in portal systemic encephalopathy. Dig. Dis. Sci. 1977, 22, 541–550.

- Szurmik, T.; Bibrowicz, K.; Lipowicz, A.; Mitas, A.W. Methods of Therapy of Scoliosis and Technical Functionalities of DISC4SPINE (D4S) Diagnostic and Therapeutic System. In Information Technology in Biomedicine; Springer: Cham, Switzerland, 2021; pp. 201–212.

- Przepiórkowski, A. Narodowy Korpus Języka Polskiego; Naukowe PWN: Warsaw, Poland, 2012.

- Pradhan, B.K.; Pal, K. Statistical and entropy-based features can efficiently detect the short-term effect of caffeinated coffee on the cardiac physiology. Med. Hypotheses 2020, 145, 110323.

- Mańka, A.; Romaniszyn, P.; Bugdol, M.N.; Mitas, A.W. Methods for Assessing the Subject’s Multidimensional Psychophysiological State in Terms of Proper Rehabilitation. In Information Technology in Biomedicine; Springer: Cham, Switzerland, 2021; pp. 213–225.

- Nielsen, O.M. Wavelets in Scientific Computing. Ph.D. Thesis, Technical University of Denmark, Lyngby, Denmark, 1998.

- Shukla, J.; Barreda-Angeles, M.; Oliver, J.; Nandi, G.C.; Puig, D. Feature extraction and selection for emotion recognition from electrodermal activity. IEEE Trans. Affect. Comput. 2019.

- Bennasar, M.; Hicks, Y.; Setchi, R. Feature selection using joint mutual information maximisation. Expert Syst. Appl. 2015, 42, 8520–8532.

- Ku, W.; Storer, R.H.; Georgakis, C. Disturbance detection and isolation by dynamic principal component analysis. Chemom. Intell. Lab. Syst. 1995, 30, 179–196.

- Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

- Ward, T.; Maruna, S. Rehabilitation; Routledge: London, UK, 2007.

- Bartel, C.A.; Saavedra, R. The collective construction of work group moods. Adm. Sci. Q. 2000, 45, 197–231.

- Barsade, S.G. The ripple effect: Emotional contagion and its influence on group behavior. Adm. Sci. Q. 2002, 47, 644–675.

- Lachenbruch, P.A. Sensitivity, specificity, and vaccine efficacy. Control. Clin. Trials 1998, 19, 569–574.

- Wertz, F.J. The question of the reliability of psychological research. J. Phenomenol. Psychol. 1986, 17, 181–205.

- Diener, E.; Smith, H.; Fujita, F. The personality structure of affect. J. Personal. Soc. Psychol. 1995, 69, 130.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).