Inertial Measurement Unit Sensors in Assistive Technologies for Visually Impaired People, a Review

Abstract

1. Introduction

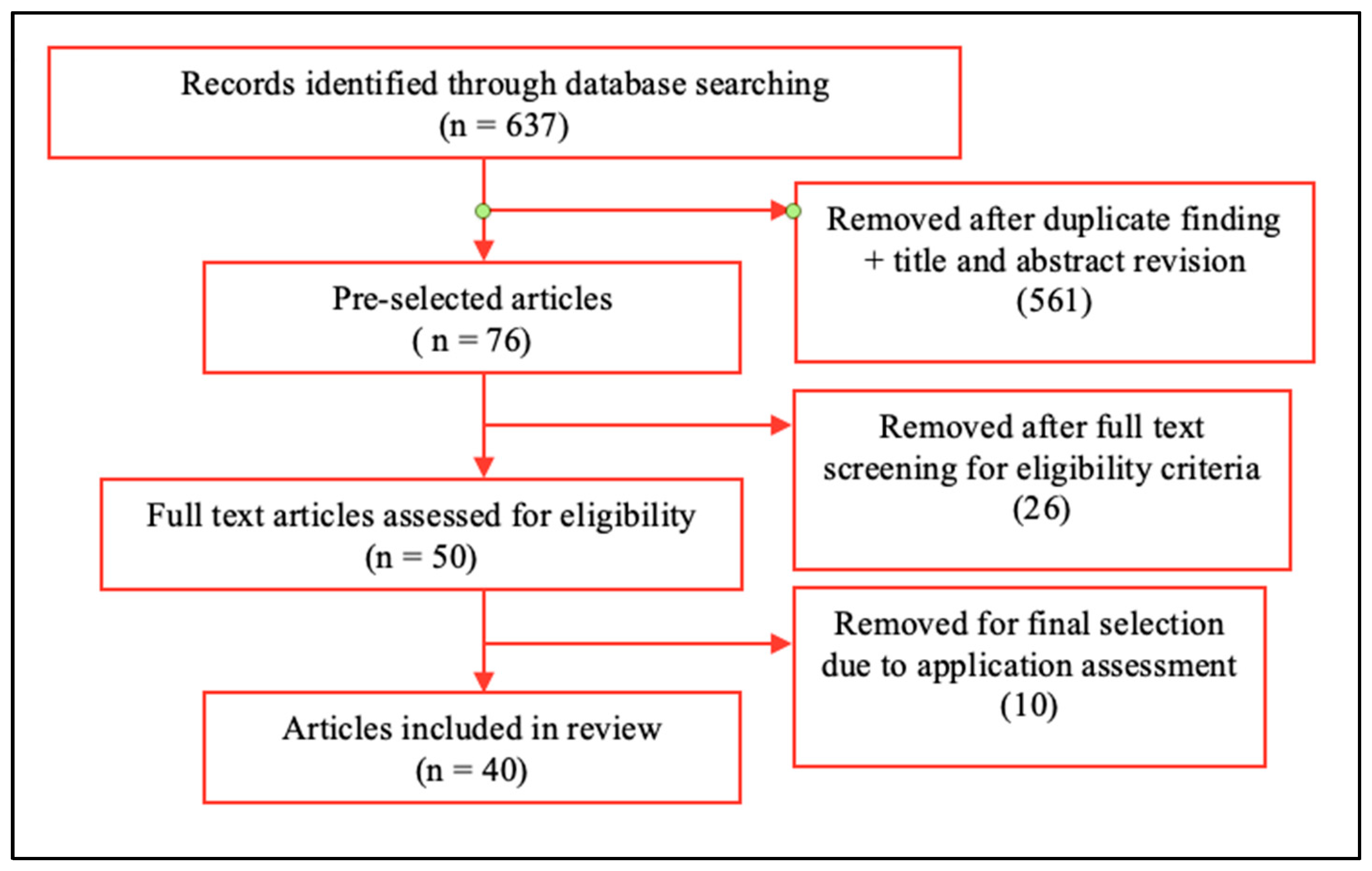

2. Materials and Methods

2.1. Literature Search Method

2.2. Eligibility Criteria

2.3. Inclusion Criteria

3. Results

3.1. Accelerometer

3.2. Gyroscope

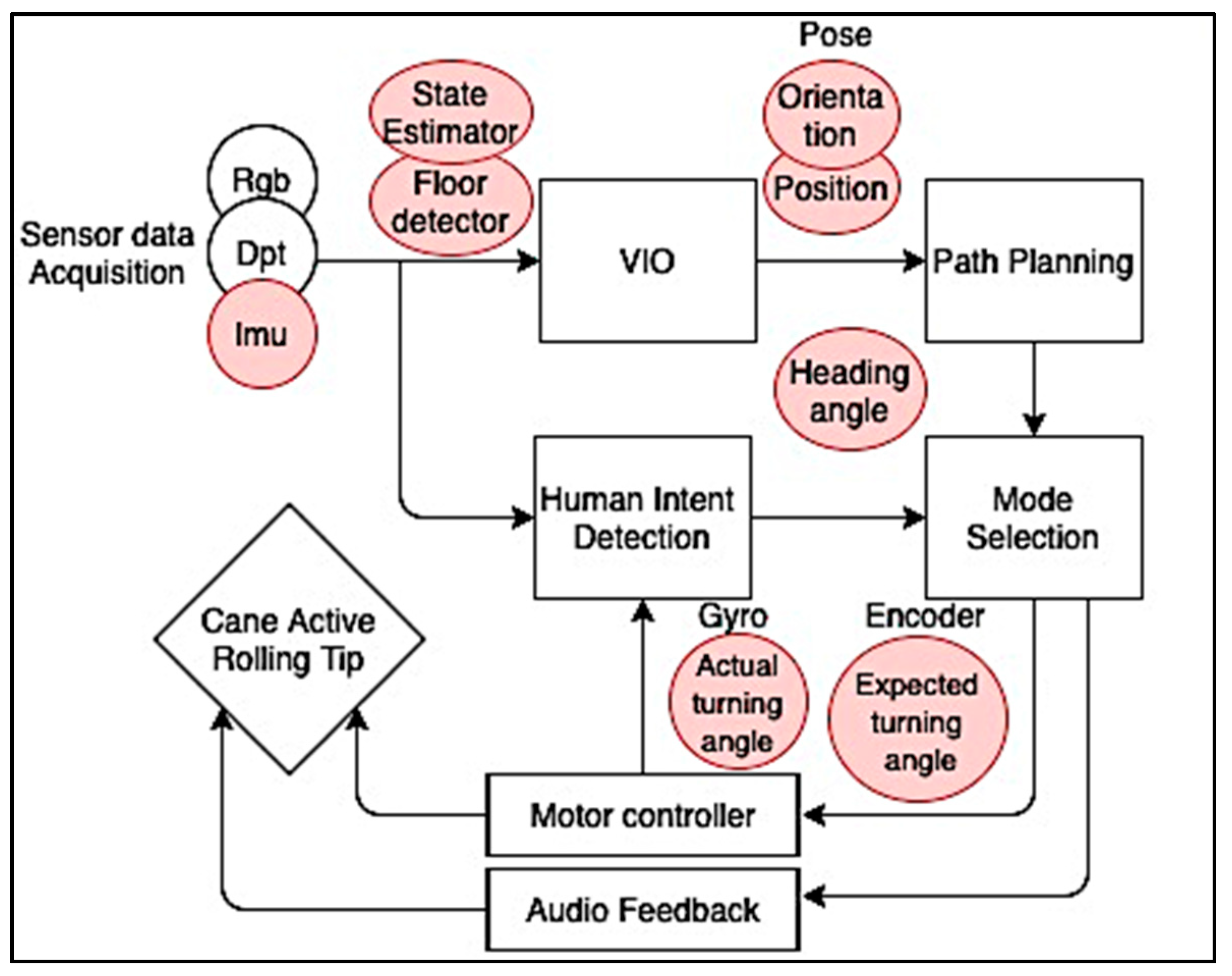

3.3. Accelerometer and Gyroscope Fusion

| Role | IMU | Sensor Fusion | RE | Ref. |
|---|---|---|---|---|
| Pedestrian dead reckoning | MPU-6050 | RGB-D camera, GPS | 0.41 m | [73] |
| Motion detection | MPU-6050 | RGB camera, ultrasonic sensor | - | [75] |
| Position estimation and orientation | MPU-6050 | CMOS camera, line laser | 0.4–1 m | [76] |
| Fall detection and attitude estimation | Not specified | RGB-D camera, GPS, velocity sensor | - | [77] |
| Fall detection | Smartphone IMU | Ultrasonic sensor, GPS | 10–20 m | [78] |
| Orientation | MPU-6050 | GPS, ultrasonic, and wet floor sensors | - | [79] |
| Attitude estimation | Not specified | RGB-D camera | - | [80] |
| Step counting | Not specified | Ultrasonic sensor, GPS | - | [81] |
| Orientation and height estimation | Not specified | RGB-D camera | - | [82] |
| Heading estimation | Not specified | GPS, compass | 2.9–1.7 m | [83] |
| Angular velocity and acceleration | Smartphone IMU | Strain gauges | - | [84] |
| Pose estimation | LSM9DS1 | RGB-D camera | - | [85] |
| Orientation of the head and hand | BMI055 Bosch | UWB FMCW radar sensor | - | [86] |

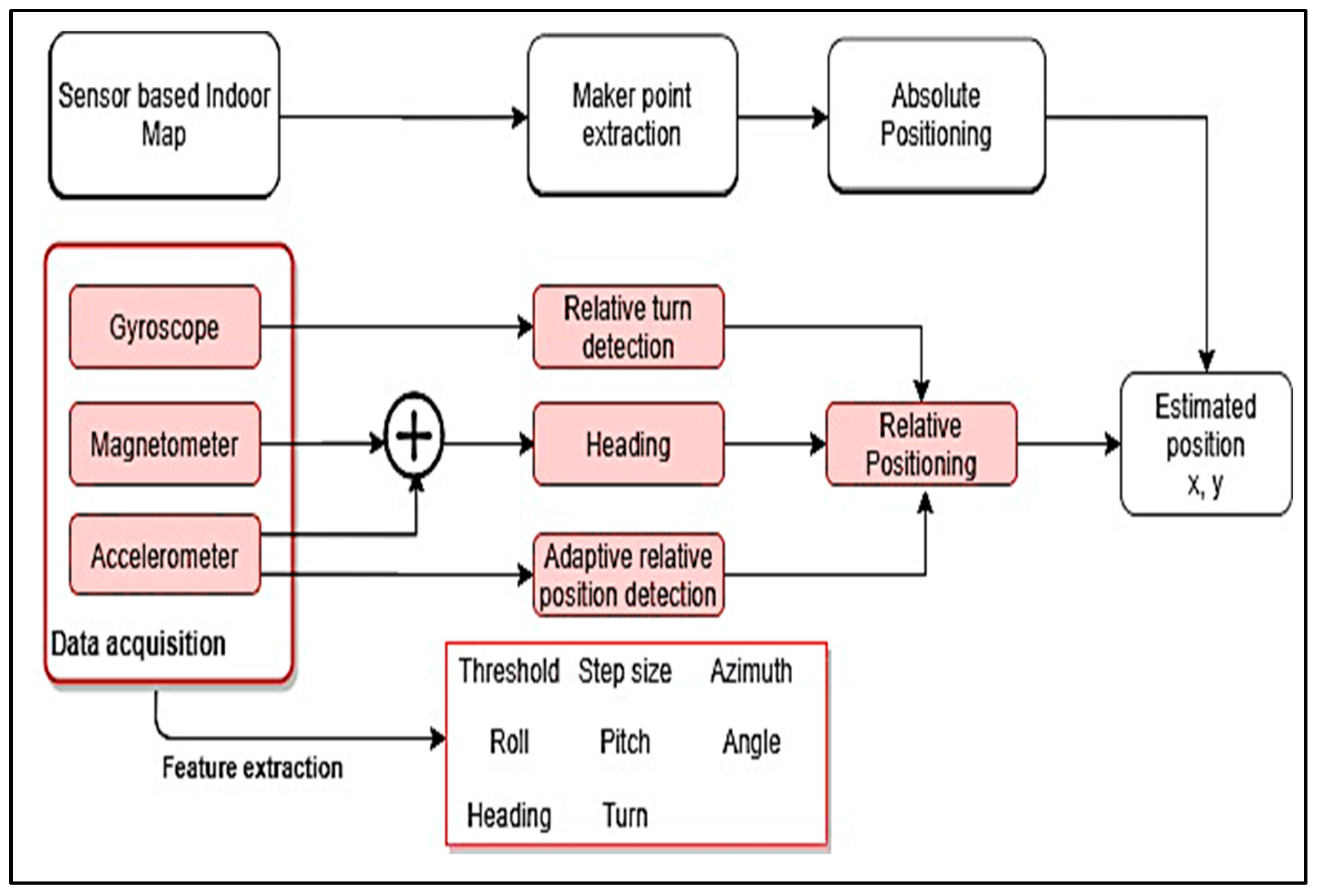

3.4. Accelerometer, Gyroscope, and Magnetometer Fusion

4. Discussion

4.1. Technical Analysis of the IMU’s Roles

4.1.1. Motion Measurement, Angular Velocity, and Fall Detection

4.1.2. Orientation/Attitude Estimation and Heading

4.1.3. Positioning and Tracking

4.2. Usability

4.3. Field of Applications

4.4. New Avenues of Research and Missing Elements

4.4.1. Artificial Intelligence Integration

4.4.2. Biomechanical Analysis

4.5. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

- World Health Organization. World Report on Vision; World Health Organization: Geneva, Switzerland, 2019; Volume 214, ISBN 9789241516570.

- Brady, E.; Morris, M.R.; Zhong, Y.; White, S.; Bigham, J.P. Visual challenges in the everyday lives of blind people. Conf. Hum. Factors Comput. Syst. Proc. 2013, 2117–2126.

- Real, S.; Araujo, A. Navigation systems for the blind and visually impaired: Past work, challenges, and open problems. Sensors 2019, 19, 3404.

- Aciem, T.M.; da Silveira Mazzotta, M.J. Personal and social autonomy of visually impaired people who were assisted by rehabilitation services. Rev. Bras. Oftalmol. 2013, 72, 261–267.

- Kacorri, H.; Kitani, K.M.; Bigham, J.P.; Asakawa, C. People with visual impairment training personal object recognizers: Feasibility and challenges. Conf. Hum. Factors Comput. Syst. Proc. 2017, 5839–5849.

- Pigeon, C.; Li, T.; Moreau, F.; Pradel, G.; Marin-Lamellet, C. Cognitive load of walking in people who are blind: Subjective and objective measures for assessment. Gait Posture 2019.

- Nweke, H.F.; Teh, Y.W.; Al-garadi, M.A.; Alo, U.R. Deep learning algorithms for human activity recognition using mobile and wearable sensor networks: State of the art and research challenges. Expert Syst. Appl. 2018, 105, 233–261.

- Duman, S.; Elewi, A.; Yetgin, Z. Design and Implementation of an Embedded Real-Time System for Guiding Visually Impaired Individuals. In Proceedings of the 2019 International Conference on Artificial Intelligence and Data Processing Symposium, IDAP 2019, Malatya, Turkey, 21–22 September 2019.

- Borelli, E.; Paolini, G.; Antoniazzi, F.; Barbiroli, M.; Benassi, F.; Chesani, F.; Chiari, L.; Fantini, M.; Fuschini, F.; Galassi, A.; et al. HABITAT: An IoT solution for independent elderly. Sensors 2019, 19, 1258.

- Kale, H.; Mandke, P.; Mahajan, H.; Deshpande, V. Human posture recognition using artificial neural networks. In Proceedings of the 2018 IEEE 8th International Advance Computing Conference (IACC), Greater Noida, India, 14–15 December 2018; pp. 272–278.

- Syed, S.; Morseth, B.; Hopstock, L.; Horsch, A. A novel algorithm to detect non-wear time from raw accelerometer data using convolutional neural networks. Sci. Rep. 2020.

- Murad, A.; Pyun, J.Y. Deep recurrent neural networks for human activity recognition. Sensors 2017, 17, 2556.

- Zheng, X.; Wang, M.; Ordieres-Meré, J. Comparison of data preprocessing approaches for applying deep learning to human activity recognition in the context of industry 4.0. Sensors 2018, 18, 2146.

- Niemann, F.; Reining, C.; Rueda, F.M.; Nair, N.R.; Steffens, J.A.; Fink, G.A.; ten Hompel, M. LARa: Creating a dataset for human activity recognition in logistics using semantic attributes. Sensors 2020, 20, 4083.

- Zheng, Y. Miniature inertial measurement unit. In Space Microsystems and Micro/Nano Satellites; Butterworth-Heinemann, Elsevier: Oxford, UK, 2018; pp. 233–293. ISBN 9780128126721.

- Zhou, H.; Hu, H. Inertial sensors for motion detection of human upper limbs. Sens. Rev. 2007.

- Langfelder, G.; Tocchio, A. Microelectromechanical Systems Integrating Motion and Displacement Sensors; Elsevier Ltd.: Amsterdam, The Netherlands, 2018; ISBN 9780081020562.

- Bernieri, G.; Faramondi, L.; Pascucci, F. Augmenting white cane reliability using smart glove for visually impaired people. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 8046–8049.

- Chaccour, K.; Eid, J.; Darazi, R.; El Hassani, A.H.; Andres, E. Multisensor guided walker for visually impaired elderly people. In Proceedings of the 2015 International Conference on Advances in Biomedical Engineering (ICABME), Beirut, Lebanon, 16–18 September 2015; pp. 158–161.

- Basso, S.; Frigo, G.; Giorgi, G. A smartphone-based indoor localization system for visually impaired people. In Proceedings of the 2015 IEEE International Symposium on Medical Measurements and Applications (MeMeA) Proceedings, Turin, Italy, 7–9 May 2015; pp. 543–548.

- Li, B.; Pablo Muñoz, J.; Rong, X.; Xiao, J.; Tian, Y.; Arditi, A. ISANA: Wearable context-aware indoor assistive navigation with obstacle avoidance for the blind. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2016; Volume 9914.

- Yang, G.; Saniie, J. Indoor navigation for visually impaired using AR markers. In Proceedings of the IEEE International Conference on Electro Information Technology, Lincoln, NE, USA, 14–17 May 2017.

- Al-Khalifa, S.; Al-Razgan, M. Ebsar: Indoor guidance for the visually impaired. Comput. Electr. Eng. 2016, 54.

- Ahmetovic, D.; Mascetti, S.; Oh, U.; Asakawa, C. Turn right: Analysis of rotation errors in turn-by-turn navigation for individuals with visual impairments. In Proceedings of the 20th International ACM SIGACCESS Conference on Computers and Accessibility, Galway, Ireland, 22–24 October 2018; pp. 333–339.

- Ahmetovic, D.; Mascetti, S.; Bernareggi, C.; Guerreiro, J.; Oh, U.; Asakawa, C. Deep learning compensation of rotation errors during navigation assistance for people with visual impairments or blindness. ACM Trans. Access. Comput. 2019, 12.

- Sato, D.; Oh, U.; Guerreiro, J.; Ahmetovic, D.; Naito, K.; Takagi, H.; Kitani, K.M.; Asakawa, C. NavCog3 in the wild: Large-scale Blind Indoor Navigation Assistant with Semantic Features. ACM Trans. Access. Comput. 2019, 12.

- Ahmetovic, D.; Gleason, C.; Ruan, C.; Kitani, K.; Takagi, H.; Asakawa, C. NavCog: A navigational cognitive assistant for the blind. In Proceedings of the 18th International Conference on Human-Computer Interaction with Mobile Devices and Services, Florence, Italy, 6–9 September 2016; pp. 90–99.

- Kayukawa, S.; Ishihara, T.; Takagi, H.; Morishima, S.; Asakawa, C. Guiding Blind Pedestrians in Public Spaces by Understanding Walking Behavior of Nearby Pedestrians. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2020, 4, 1–22.

- Kayukawa, S.; Higuchi, K.; Guerreiro, J.; Morishima, S.; Sato, Y.; Kitani, K.; Asakawa, C. BBeep: A sonic collision avoidance system for blind travellers and nearby pedestrians. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, Scotland, UK, 4–9 May 2019.

- Mahida, P.T.; Shahrestani, S.; Cheung, H. Indoor positioning framework for visually impaired people using Internet of Things. In Proceedings of the 2019 13th International Conference on Sensing Technology (ICST), Sydney, NSW, Australia, 2–4 December 2019; pp. 1–6.

- Amirgholy, M.; Golshani, N.; Schneider, C.; Gonzales, E.J.; Gao, H.O. An advanced traveler navigation system adapted to route choice preferences of the individual users. Int. J. Transp. Sci. Technol. 2017, 6, 240–254.

- Asakawa, S.; Guerreiro, J.; Sato, D.; Takagi, H.; Ahmetovic, D.; Gonzalez, D.; Kitani, K.M.; Asakawa, C. An independent and interactive museum experience for blind people. In Proceedings of the 16th International Web for All Conference, San Francisco, CA, USA, 13–15 May 2019.

- Guerreiro, J.; Ahmetovic, D.; Kitani, K.M.; Asakawa, C. Virtual navigation for blind people: Building sequential representations of the real-world. In Proceedings of the 19th International ACM SIGACCESS Conference on Computers and Accessibility, Baltimore, MD, USA, 20 October–1 November 2017; pp. 280–289.

- Cobo, A.; Guerrón, N.E.; Martín, C.; del Pozo, F.; Serrano, J.J. Differences between blind people’s cognitive maps after proximity and distant exploration of virtual environments. Comput. Hum. Behav. 2017, 77, 294–308.

- Real, S.; Araujo, A. VES: A mixed-reality system to assist multisensory spatial perception and cognition for blind and visually impaired people. Appl. Sci. 2020, 10, 523.

- Elmannai, W.M.; Elleithy, K.M. A Highly Accurate and Reliable Data Fusion Framework for Guiding the Visually Impaired. IEEE Access 2018, 6.

- Cheraghi, S.A.; Namboodiri, V.; Walker, L. GuideBeacon: Beacon-based indoor wayfinding for the blind, visually impaired, and disoriented. In Proceedings of the 2017 IEEE International Conference on Pervasive Computing and Communications (PerCom), Kona, HI, USA, 13–17 March 2017; pp. 121–130.

- Mekhalfi, M.L.; Melgani, F.; Zeggada, A.; De Natale, F.G.B.; Salem, M.A.M.; Khamis, A. Recovering the sight to blind people in indoor environments with smart technologies. Expert Syst. Appl. 2016.

- Martinez, M.; Roitberg, A.; Koester, D.; Stiefelhagen, R.; Schauerte, B. Using Technology Developed for Autonomous Cars to Help Navigate Blind People. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshops, ICCVW, Venice, Italy, 22–29 October 2017.

- Guerreiro, J.; Sato, D.; Asakawa, S.; Dong, H.; Kitani, K.M.; Asakawa, C. CaBot: Designing and evaluating an autonomous navigation robot for blind people. In Proceedings of the 21st International ACM SIGACCESS Conference on Computers and Accessibility, Pittsburgh, PA, USA, 28–30 October 2019; pp. 68–82.

- Adebiyi, A.; Sorrentino, P.; Bohlool, S.; Zhang, C.; Arditti, M.; Goodrich, G.; Weiland, J.D. Assessment of feedback modalities for wearable visual aids in blind mobility. PLoS ONE 2017, 12, e0170531.

- Li, B.; Munoz, J.P.; Rong, X.; Chen, Q.; Xiao, J.; Tian, Y.; Arditi, A.; Yousuf, M. Vision-Based Mobile Indoor Assistive Navigation Aid for Blind People. IEEE Trans. Mob. Comput. 2019, 18.

- Katzschmann, R.K.; Araki, B.; Rus, D. Safe local navigation for visually impaired users with a time-of-flight and haptic feedback device. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26.

- Yang, Z.; Ganz, A. A Sensing Framework for Indoor Spatial Awareness for Blind and Visually Impaired Users. IEEE Access 2019, 7.

- Foster, M.; Brugarolas, R.; Walker, K.; Mealin, S.; Cleghern, Z.; Yuschak, S.; Clark, J.C.; Adin, D.; Russenberger, J.; Gruen, M.; et al. Preliminary Evaluation of a Wearable Sensor System for Heart Rate Assessment in Guide Dog Puppies. IEEE Sens. J. 2020, 20, 9449–9459.

- Islam, M.M.; Sadi, M.S.; Zamli, K.Z.; Ahmed, M.M. Developing Walking Assistants for Visually Impaired People: A Review. IEEE Sens. J. 2019, 19, 2814–2828.

- Tapu, R.; Mocanu, B.; Zaharia, T. Wearable assistive devices for visually impaired: A state of the art survey. Pattern Recognit. Lett. 2018.

- Filippeschi, A.; Schmitz, N.; Miezal, M.; Bleser, G.; Ruffaldi, E.; Stricker, D. Survey of motion tracking methods based on inertial sensors: A focus on upper limb human motion. Sensors 2017, 17, 1257.

- Qi, J.; Yang, P.; Waraich, A.; Deng, Z.; Zhao, Y.; Yang, Y. Examining sensor-based physical activity recognition and monitoring for healthcare using Internet of Things: A systematic review. J. Biomed. Inform. 2018, 87, 138–153.

- Bet, P.; Castro, P.C.; Ponti, M.A. Fall detection and fall risk assessment in older person using wearable sensors: A systematic review. Int. J. Med. Inform. 2019, 130, 103946.

- Heinrich, S.; Springstübe, P.; Knöppler, T.; Kerzel, M.; Wermter, S. Continuous convolutional object tracking in developmental robot scenarios. Neurocomputing 2019, 342, 137–144.

- Roetenberg, D.; Luinge, H.; Slycke, P. Xsens MVN: Full 6DOF human motion tracking using miniature inertial sensors. Xsens Motion Technol. BV. Tech. Rep. 2013, 3, 1–9.

- Hamzaid, N.A.; Mohd Yusof, N.H.; Jasni, F. Sensory Systems in Micro-Processor Controlled Prosthetic Leg: A Review. IEEE Sens. J. 2020, 20, 4544–4554.

- Shaeffer, D.K. MEMS inertial sensors: A tutorial overview. IEEE Commun. Mag. 2013, 51, 100–109.

- Simdiankin, A.; Byshov, N.; Uspensky, I. A method of vehicle positioning using a non-satellite navigation system. In Proceedings of the Transportation Research Procedia; Elsevier: Amsterdam, The Netherlands, 2018; Volume 36, pp. 732–740.

- Munoz Diaz, E.; Bousdar Ahmed, D.; Kaiser, S. A Review of Indoor Localization Methods Based on Inertial Sensors; Elsevier Inc.: Amsterdam, The Netherlands, 2019; ISBN 9780128131893.

- Yuan, Q.; Asadi, E.; Lu, Q.; Yang, G.; Chen, I.M. Uncertainty-Based IMU Orientation Tracking Algorithm for Dynamic Motions. IEEE/ASME Trans. Mechatron. 2019, 24, 872–882.

- Shelke, S.; Aksanli, B. Static and dynamic activity detection with ambient sensors in smart spaces. Sensors 2019, 19, 804.

- Trivedi, U.; Mcdonnough, J.; Shamsi, M.; Ochoa, A.I.; Braynen, A.; Krukauskas, C.; Alqasemi, R.; Dubey, R. A wearable device for assisting persons with vision impairment. In Proceedings of the ASME 2017 International Mechanical Engineering Congress and Exposition IMECE2017, Tampa, FL, USA, 3–9 November 2017; pp. 1–8.

- Zhu, X.; Haegele, J.A. Reactivity to accelerometer measurement of children with visual impairments and their family members. Adapt. Phys. Act. Q. 2019, 36, 492–500.

- Da Silva, R.B.P.; Marques, A.C.; Reichert, F.F. Objectively measured physical activity in Brazilians with visual impairment: Description and associated factors. Disabil. Rehabil. 2018, 40, 2131–2137.

- Brian, A.; Pennell, A.; Haibach-Beach, P.; Foley, J.; Taunton, S.; Lieberman, L.J. Correlates of physical activity among children with visual impairments. Disabil. Health J. 2019, 12, 328–333.

- Keay, L.; Dillon, L.; Clemson, L.; Tiedemann, A.; Sherrington, C.; McCluskey, P.; Ramulu, P.; Jan, S.; Rogers, K.; Martin, J.; et al. PrevenTing Falls in a high-risk, vision-impaired population through specialist ORientation and Mobility services: Protocol for the PlaTFORM randomised trial. Inj. Prev. 2017, 1–8.

- Hirano, T.; Kanebako, J.; Saraiji, M.H.D.Y.; Peiris, R.L.; Minamizawa, K. Synchronized Running: Running Support System for Guide Runners by Haptic Sharing in Blind Marathon. In Proceedings of the 2019 IEEE World Haptics Conference (WHC), Tokyo, Japan, 9–12 July 2019; pp. 25–30.

- Qi, J.; Xu, J.W.; De Shao, W. Physical activity of children with visual impairments during different segments of the school day. Int. J. Environ. Res. Public Health 2020, 17, 6897.

- Haegele, J.A.; Zhu, X.; Kirk, T.N. Physical Activity among Children with Visual Impairments, Siblings, and Parents: Exploring Familial Factors. Matern. Child Health J. 2020.

- Nkechinyere, N.M.; Washington, M.; Uche, O.R.; Gerald, N.I. Monitoring of the Aged and Visually Impaired for Ambulation and Activities of Daily Living. In Proceedings of the 2017 IEEE 3rd International Conference on Electro-Technology for National Development (NIGERCON), Owerri, Nigeria, 7–10 November 2017; pp. 634–638.

- Borenstein, J. The NavBelt: A computerized multi-sensor travel aid for active guidance of the blind. In Proceedings of the CSUN’s Fifth Annual Conference on Technology and Persons with Disabilities, Los Angeles, CA, USA, 21–24 March 1990; pp. 107–116.

- Razavi, J.; Shinta, T. A novel method of detecting stairs for the blind. In Proceedings of the 2017 IEEE Conference on Wireless Sensors (ICWiSe), Miri, Malaysia, 13–14 November 2017; pp. 18–22.

- Dastider, A.; Basak, B.; Safayatullah, M.; Shahnaz, C.; Fattah, S.A. Cost efficient autonomous navigation system (e-cane) for visually impaired human beings. In Proceedings of the 2017 IEEE Region 10 Humanitarian Technology Conference (R10-HTC), Dhaka, Bangladesh, 21–23 December 2017; pp. 650–653.

- Oommen, J.; Bews, D.; Hassani, M.S.; Ono, Y.; Green, J.R. A wearable electronic swim coach for blind athletes. In Proceedings of the 2018 IEEE Life Sciences Conference (LSC), Montreal, QC, Canada, 28–30 October 2018; pp. 219–222.

- Kim, Y.; Moncada-Torres, A.; Furrer, J.; Riesch, M.; Gassert, R. Quantification of long cane usage characteristics with the constant contact technique. Appl. Ergon. 2016, 55, 216–225.

- Croce, D.; Giarré, L.; Pascucci, F.; Tinnirello, I.; Galioto, G.E.; Garlisi, D.; Lo Valvo, A. An indoor and outdoor navigation system for visually impaired people. IEEE Access 2019, 7, 170406–170418.

- Weinberg, H. Using the ADXL202 in Pedometer and Personal Navigation Applications; Analog Devices: Norwood, MA, USA, 2002; Available online: https://www.analog.com/media/en/technical-documentation/application-notes/513772624AN602.pdf (accessed on 12 July 2021).

- Silva, C.S.; Wimalaratne, P. Towards a grid based sensor fusion for visually impaired navigation using sonar and vision measurements. In Proceedings of the 2017 IEEE Region 10 Humanitarian Technology Conference (R10-HTC), Dhaka, Bangladesh, 21–23 December 2017; pp. 784–787.

- Fan, K.; Lyu, C.; Liu, Y.; Zhou, W.; Jiang, X.; Li, P.; Chen, H. Hardware implementation of a virtual blind cane on FPGA. In Proceedings of the 2017 IEEE International Conference on Real-time Computing and Robotics (RCAR), Okinawa, Japan, 14–18 July 2017; pp. 344–348.

- Chen, R.; Tian, Z.; Liu, H.; Zhao, F.; Zhang, S.; Liu, H. Construction of a voice driven life assistant system for visually impaired people. In Proceedings of the 2018 International Conference on Artificial Intelligence and Big Data (ICAIBD), Chengdu, China, 26–28 May 2018; pp. 87–92.

- Wang, B.; Xiang, W.; Ma, K.; Mu, Y.Q.; Wu, Z. Design and implementation of intelligent walking stick based on OneNET Internet of things development platform. In Proceedings of the 2019 28th Wireless and Optical Communications Conference (WOCC), Beijing, China, 9–10 May 2019; pp. 1–4.

- Meshram, V.V.; Patil, K.; Meshram, V.A.; Shu, F.C. An astute assistive device for mobility and object recognition for visually impaired people. IEEE Trans. Hum. Mach. Syst. 2019, 49, 449–460.

- Bai, J.; Liu, Z.; Lin, Y.; Li, Y.; Lian, S.; Liu, D. Wearable travel aid for environment perception and navigation of visually impaired people. Electronics 2019, 8, 697.

- Bastaki, M.M.; Sobuh, A.A.; Suhaiban, N.F.; Almajali, E.R. Design and implementation of a vision stick with outdoor/indoor guiding systems and smart detection and emergency features. In Proceedings of the 2020 Advances in Science and Engineering Technology International Conferences (ASET), Dubai, United Arab Emirates, 4 February–9 April 2020; pp. 15–18.

- Li, Z.; Song, F.; Clark, B.C.; Grooms, D.R.; Liu, C. A Wearable Device for Indoor Imminent Danger Detection and Avoidance with Region-Based Ground Segmentation. IEEE Access 2020, 8, 184808–184821.

- Zhong, Z.; Lee, J. Virtual Guide Dog: Next-generation pedestrian signal for the visually impaired. Adv. Mech. Eng. 2020, 12, 1–9.

- Gill, S.; Seth, N.; Scheme, E. A multi-sensor cane can detect changes in gait caused by simulated gait abnormalities and walking terrains. Sensors 2020, 20, 631.

- Jin, L.; Zhang, H.; Shen, Y.; Ye, C. Human-Robot Interaction for Assisted Object Grasping by a Wearable Robotic Object Manipulation Aid for the Blind. In Proceedings of the 2020 IEEE International Conference on Human-Machine Systems (ICHMS), Rome, Italy, 7–9 September 2020; pp. 15–20.

- Orth, A.; Kwiatkowski, P.; Pohl, N. A Radar-Based Hand-Held Guidance Aid for the Visually Impaired. In Proceedings of the 2020 German Microwave Conference (GeMiC), Cottbus, Germany, 9–11 March 2020; pp. 180–183.

- Bai, J.; Lian, S.; Liu, Z.; Wang, K.; Liu, D. Virtual-Blind-Road Following-Based Wearable Navigation Device for Blind People. IEEE Trans. Consum. Electron. 2018, 64, 136–143.

- Bai, J.; Lian, S.; Liu, Z.; Wang, K.; Liu, D. Smart guiding glasses for visually impaired people in indoor environment. IEEE Trans. Consum. Electron. 2017, 63, 258–266.

- Zhang, H.; Ye, C. Human-Robot Interaction for Assisted Wayfinding of a Robotic Navigation Aid for the Blind. In Proceedings of the 2019 12th International Conference on Human System Interaction (HSI), Richmond, VA, USA, 25–27 June 2019; pp. 137–142.

- Zegarra Flores, J.V.; Rasseneur, L.; Galani, R.; Rakitic, F.; Farcy, R. Indoor navigation with smart phone IMU for the visually impaired in university buildings. J. Assist. Technol. 2016, 10, 133–139.

- Moder, T.; Reitbauer, C.R.; Wisiol, K.M.D.; Wilfinger, R.; Wieser, M. An Indoor Positioning and Navigation Application for Visually Impaired People Using Public Transport. In Proceedings of the 2018 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Nantes, France, 24–27 September 2018; pp. 1–7.

- Ferrand, S.; Alouges, F.; Aussal, M. An Augmented Reality Audio Device Helping Blind People Navigation; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; Volume 10897, ISBN 9783319942735.

- Simoes, W.C.S.S.; De Lucena, V.F. Blind user wearable audio assistance for indoor navigation based on visual markers and ultrasonic obstacle detection. In Proceedings of the 2016 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 7–11 January 2016; pp. 60–63.

- Dang, Q.K.; Chee, Y.; Pham, D.D.; Suh, Y.S. A virtual blind cane using a line laser-based vision system and an inertial measurement unit. Sensors 2016, 16, 95.

- Botezatu, N.; Caraiman, S.; Rzeszotarski, D.; Strumillo, P. Development of a versatile assistive system for the visually impaired based on sensor fusion. In Proceedings of the 2017 21st International Conference on System Theory, Control and Computing (ICSTCC), Sinaia, Romania, 19–21 October 2017; pp. 540–547.

- Grewe, L.; Overell, W. Road following for blindBike: An assistive bike navigation system for low vision persons. Signal Process. Sens. Inf. Fusion Target Recognit. XXVI 2017, 10200, 1020011.

- Biswas, M.; Dhoom, T.; Pathan, R.K.; Sen Chaiti, M. Shortest Path Based Trained Indoor Smart Jacket Navigation System for Visually Impaired Person. In Proceedings of the 2020 IEEE International Conference on Smart Internet of Things (SmartIoT), Beijing, China, 14–16 August 2020; pp. 228–235.

- Ferrand, S.; Alouges, F.; Aussal, M. An electronic travel aid device to help blind people playing sport. IEEE Instrum. Meas. Mag. 2020, 23, 14–21.

- Mahida, P.; Shahrestani, S.; Cheung, H. Deep learning-based positioning of visually impaired people in indoor environments. Sensors 2020, 20, 6238.

- Zhang, H.; Ye, C. A visual positioning system for indoor blind navigation. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 9079–9085.

- Ciobanu, A.; Morar, A.; Moldoveanu, F.; Petrescu, L.; Ferche, O.; Moldoveanu, A. Real-time indoor staircase detection on mobile devices. In Proceedings of the 2017 21st International Conference on Control Systems and Computer Science (CSCS), Bucharest, Romania, 29–31 May 2017; pp. 287–293.

- Ong, J.C.; Arnedt, J.T.; Gehrman, P.R. Insomnia diagnosis, assessment, and evaluation. In Principles and Practice of Sleep Medicine; Elsevier: Amsterdam, The Netherlands, 2017; pp. 785–793.

- Manber, R.; Bootzin, R.R.; Loewy, D. Sleep Disorders. In Comprehensive Clinical Psychology; Elsevier: Amsterdam, The Netherlands, 1998; pp. 505–527.

- Ong, S.R.; Crowston, J.G.; Loprinzi, P.D.; Ramulu, P.Y. Physical activity, visual impairment, and eye disease. Eye 2018, 32, 1296–1303.

- Khemthong, S.; Packer, T.L.; Dhaliwal, S.S. Using the Actigraph to measure physical activity of people with disabilities: An investigation into measurement issues. Int. J. Rehabil. Res. 2006, 29, 315–318.

- Manos, A.; Klein, I.; Hazan, T. Gravity-based methods for heading computation in pedestrian dead reckoning. Sensors 2019, 19, 1170.

- Ricci, L.; Taffoni, F.; Formica, D. On the orientation error of IMU: Investigating static and dynamic accuracy targeting human motion. PLoS ONE 2016, 11, e0161940.

- Kok, M.; Hol, J.D.; Schön, T.B. Using Inertial Sensors for Position and Orientation Estimation. Found. Trends Signal Process. 2017, 11, 1–153.

- Fernandes, H.; Costa, P.; Filipe, V.; Paredes, H.; Barroso, J. A review of assistive spatial orientation and navigation technologies for the visually impaired. Univers. Access Inf. Soc. 2019, 18, 155–168.

- Yoon, P.K.; Zihajehzadeh, S.; Kang, B.S.; Park, E.J. Robust Biomechanical Model-Based 3-D Indoor Localization and Tracking Method Using UWB and IMU. IEEE Sens. J. 2017, 17, 1084–1096.

- Huang, X.; Wang, F.; Zhang, J.; Hu, Z.; Jin, J. A posture recognition method based on indoor positioning technology. Sensors 2019, 19, 1464.

- Gong, X.; Chen, L. A conditional cubature Kalman filter and its application to transfer alignment of distributed position and orientation system. Aerosp. Sci. Technol. 2019, 95, 105405.

- Ramazi, R.; Perndorfer, C.; Soriano, E.; Laurenceau, J.P.; Beheshti, R. Multi-modal predictive models of diabetes progression. In Proceedings of the 10th ACM International Conference on Bioinformatics, Computational Biology and Health Informatics, Niagara Falls, NY, USA, 7–10 September 2019; pp. 253–258.

- Movahedi, A.; Mojtahedi, H.; Farazyani, F. Differences in socialization between visually impaired student-athletes and non-athletes. Res. Dev. Disabil. 2011, 32, 58–62.

- International Blind Sports Federation IBSA. Available online: https://www.ibsasport.org/ (accessed on 12 July 2021).

- Stelmack, J. Quality of life of low-vision patients and outcomes of low-vision rehabilitation. Optom. Vis. Sci. 2001, 78, 335–342.

- Lopera, G.; Aguirre, Á.; Parada, P.; Baquet, J. Manual Tecnico De Servicios De Rehabilitacion Integral Para Personas Ciegas O Con Baja Vision En America Latina; Unión Latinoamericana De Ciegos-Ulac: Montevideo, Uruguay, 2010.

- Organización Nacional de Ciegos Españoles. Discapacidad Visual y Autonomía Personal. Enfoque Práctico de la Rehabilitación; Organización Nacional de Ciegos Españoles: Madrid, Spain, 2011; ISBN 978-84-484-0277-8.

- Health Vet VistA, Blind rehabilitation user manual, Version 5.0.29, Department of Veterans Affairs, USA. August 2011. Available online: https://www.va.gov/vdl/documents/Clinical/Blind_Rehabilitation/br_user_manual.pdf (accessed on 12 July 2021).

- Muzny, M.; Henriksen, A.; Giordanengo, A.; Muzik, J.; Grøttland, A.; Blixgård, H.; Hartvigsen, G.; Årsand, E. Wearable sensors with possibilities for data exchange: Analyzing status and needs of different actors in mobile health monitoring systems. Int. J. Med. Inform. 2020, 133, 104017.

- Tamura, T. Wearable Inertial Sensors and Their Applications; Elsevier Inc.: Amsterdam, The Netherlands, 2014; ISBN 9780124186668.

- Lu, Y.S.; Wang, H.W.; Liu, S.H. An integrated accelerometer for dynamic motion systems. Meas. J. Int. Meas. Confed. 2018.

- Chen, Y.; Abel, K.T.; Janecek, J.T.; Chen, Y.; Zheng, K.; Cramer, S.C. Home-based technologies for stroke rehabilitation: A systematic review. Int. J. Med. Inform. 2019, 123, 11–22.

- Porciuncula, F.; Roto, A.V.; Kumar, D.; Davis, I.; Roy, S.; Walsh, C.J.; Awad, L.N. Wearable movement sensors for rehabilitation: A focused review of technological and clinical advances. PM R 2018, 10, S220–S232.

- Vienne-Jumeau, A.; Quijoux, F.; Vidal, P.P.; Ricard, D. Wearable inertial sensors provide reliable biomarkers of disease severity in multiple sclerosis: A systematic review and meta-analysis. Ann. Phys. Rehabil. Med. 2019.

- European Blind Union, Rehabilitation for blind and partially sighted people in Europe. 3 EBU Position Pap. Rehabil. Jt. 2015. Available online: http://www.euroblind.org/sites/default/files/media/position-papers/EBU-joint-position-paper-on-Rehabilitation.pdf (accessed on 12 July 2021).

- da Silva, M.R.; de Souza Nobre, M.I.R.; de Carvalho, K.M.; de Cássisa Letto Montilha, R. Visual impairment, rehabilitation and International Classification of Functioning, Disability and Health. Rev. Bras. Oftalmol. 2014, 73.

- Xu, C.; Chai, D.; He, J.; Zhang, X.; Duan, S. InnoHAR: A deep neural network for complex human activity recognition. IEEE Access 2019, 7, 9893–9902.

- Yang, C.; Chen, Z.; Yang, C. Classification Using Convolutional Neural Network by Encoding Multivariate Time Series as Two-Dimensional Colored Images. Sensors 2020, 20, 168.

- Flores, G.H.; Manduchi, R. WeAllWalk: An Annotated Data Set of Inertial Sensor Time Series from Blind Walkers. ACM Trans. Access. Comput. 2018, 11, 1–28.

- Ordóñez, F.J.; Roggen, D. Deep convolutional and LSTM recurrent neural networks for multimodal wearable activity recognition. Sensors 2016, 16, 115.

- Li, Y.; Chen, R.; Niu, X.; Zhuang, Y.; Gao, Z.; Hu, X.; El-Sheimy, N. Inertial Sensing Meets Artificial Intelligence: Opportunity or Challenge? arXiv 2020, arXiv:2007.06727, 1–14.

- Gazzellini, S.; Lispi, M.L.; Castelli, E.; Trombetti, A.; Carniel, S.; Vasco, G.; Napolitano, A.; Petrarca, M. The impact of vision on the dynamic characteristics of the gait: Strategies in children with blindness. Exp. Brain Res. 2016, 234, 2619–2627.

- Morriën, F.; Taylor, M.J.D.; Hettinga, F.J. Biomechanics in paralympics: Implications for performance. Int. J. Sports Physiol. Perform. 2017, 12, 578–589.

- Mihailovic, A.; Swenor, B.K.; Friedman, D.S.; West, S.K.; Gitlin, L.N.; Ramulu, P.Y. Gait implications of visual field damage from glaucoma. Transl. Vis. Sci. Technol. 2017, 6, 23.

- da Silva, E.S.; Fischer, G.; da Rosa, R.G.; Schons, P.; Teixeira, L.B.T.; Hoogkamer, W.; Peyré-Tartaruga, L.A. Gait and functionality of individuals with visual impairment who participate in sports. Gait Posture 2018, 62, 355–358.

- Yang, S.; Li, Q. Inertial sensor-based methods in walking speed estimation: A systematic review. Sensors 2012, 12, 6102–6116.

- Zrenner, M.; Gradl, S.; Jensen, U.; Ullrich, M.; Eskofier, B.M. Comparison of different algorithms for calculating velocity and stride length in running using inertial measurement units. Sensors 2018, 18, 4194.

- Gill, S.; Seth, N.; Scheme, E. A multi-sensor matched filter approach to robust segmentation of assisted gait. Sensors 2018, 18, 2970.

- Gill, S.; Hearn, J.; Powell, G.; Scheme, E. Design of a multi-sensor IoT-enabled assistive device for discrete and deployable gait monitoring. In Proceedings of the 2017 IEEE Healthcare Innovations and Point of Care Technologies (HI-POCT), Bethesda, MD, USA, 6–8 November 2017; pp. 216–220.

- Mannini, A.; Sabatini, A.M. Walking speed estimation using foot-mounted inertial sensors: Comparing machine learning and strap-down integration methods. Med. Eng. Phys. 2014, 36, 1312–1321.

- Emerson, R.W.; Kim, D.S.; Naghshineh, K.; Myers, K.R. Biomechanics of Long Cane Use. J. Vis. Impair. Blind. 2019, 113, 235–247.

| Role | IMU | Sensor Fusion | Ref. |
|---|---|---|---|
| Identify human movement | ADXL345 | RGB Camera, Ultrasonic Sensor | [59] |
| Measures physical activity | ActiGraph GT3x | N/A | [60] |
| Measures physical activity | ActiGraph wGT3X-BT | N/A | [61] |
| Measures physical activity | ActiGraph wGT3X-BT | N/A | [62] |
| Measures physical activity | ActiGraph wGT3x+ | N/A | [63] |
| Sense the foot movements | KXR94-2050 | N/A | [64] |
| Measures physical activity | ActiGraph GT3x | N/A | [65] |
| Measures physical activity | ActiGraph GT3x | N/A | [66] |
| Monitoring of human activities | Not specified | N/A | [67] |

| Role | IMU | Sensor Fusion | RE | Ref. |
|---|---|---|---|---|
| Measure the tilt angle | MPU-6050 | Ultrasonic sensor, GPS | 0.2–0.5 FA/m | [69] |
| Detect rotation and movements | MPU-6050 | Ultrasonic sensor, GPS | 1–7 cm | [70] |
| Track the direction of gravity, orientation | Smartphone IMU | Camera | - | [71] |
| Sweeping velocity | Re Sense | Camera | - | [72] |

| Role | IMU | Sensor Fusion | RE | Ref. |
|---|---|---|---|---|
| Device and pose estimation | VN-100 IMU/AHRS | RGB-D camera | 0.2 m | [89] |
| Step counting, body orientation | Smartphone IMU | Barometer | - | [90] |
| Attitude estimation and orientation | Smartphone IMU | Beacons, GPS | 5–6 m | [91] |
| Pedestrian dead reckoning | Smartphone IMU | Beacons | 1.5–2 m | [30] |
| Body orientation | BNO-055 | Optical flow sensors, UWB | 0.5 m | [92] |
| Path calculation | Not specified | RGB camera, ultrasonic sensor | - | [93] |
| Acceleration and angular rate | Xsens IMU | RGB camera, line laser | 6.17 cm | [94] |
| Tracking the head and body movement | LPMS-CURS2 | RGB camera, structure sensor PS1080 | 25–104 cm | [95] |
| Detect the right edge of the road | Smartphone IMU | RGB-D camera, GPS | - | [96] |
| Step counting and heading estimation | MPU-9250 | Ultrasonic sensor, Pi Camera | - | [97] |
| Head tracking | MPU-9250 | GPS/GLONASS and UWB | 10–20 cm | [98] |
| Positioning and step size estimation | Smartphone IMU | N/A | - | [99] |
| Pose estimation | VN-100 IMU/AHRS | RGB-D camera | 1.5 m | [100] |
| Absolute orientation | Smartphone IMU | RGB-D camera | - | [101] |

| Visually Impaired Subjects | Usability Test | Usability Questionnaire | Commentary | Ref. |
|---|---|---|---|---|
| 7 | Yes | No | Subjects were participants in the blind marathon sponsored by the Japan Blind Marathon Association. | [64] |
| 1 | No | No | - | [67] |
| 10 | N/A | No | Subjects were recruited through the foundation Access for All (a Swiss nonprofit organization). | [72] |
| Not described | Yes | No | - | [73] |
| 60 | Yes | No | Thirty subjects were totally blind and the others had low vision. In addition, the authors involved physiotherapists, rehabilitation workers, and social workers in the development of the usability test. | [79] |
| 20 | Yes | Yes | Ten subjects were totally blind and the others were partially sighted. The authors followed the protocol approved by the Beijing Fangshan District Disabled Persons’ Federation for recruitment and experiments. | [80] |
| 3 | Yes | No | The subjects were student volunteers from the university. An orientation and mobility instructor evaluated their long cane traveling techniques for using the application. | [90] |
| 11 | Yes | No | The system was implemented and tested at the railway station in Graz, Austria. | [91] |
| 2 | Yes | No | - | [92] |
| 10 | Yes | Yes | The navigation profile of the users was considered (height, walking speed, and step distance). | [93] |
| Not described | Yes | Yes | - | [95] |
| 2 | Yes | No | - | [98] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Reyes Leiva, K.M.; Jaén-Vargas, M.; Codina, B.; Serrano Olmedo, J.J. Inertial Measurement Unit Sensors in Assistive Technologies for Visually Impaired People, a Review. Sensors 2021, 21, 4767. https://doi.org/10.3390/s21144767
