Three-Dimensional Reconstruction Method of Rapeseed Plants in the Whole Growth Period Using RGB-D Camera

Abstract

1. Introduction

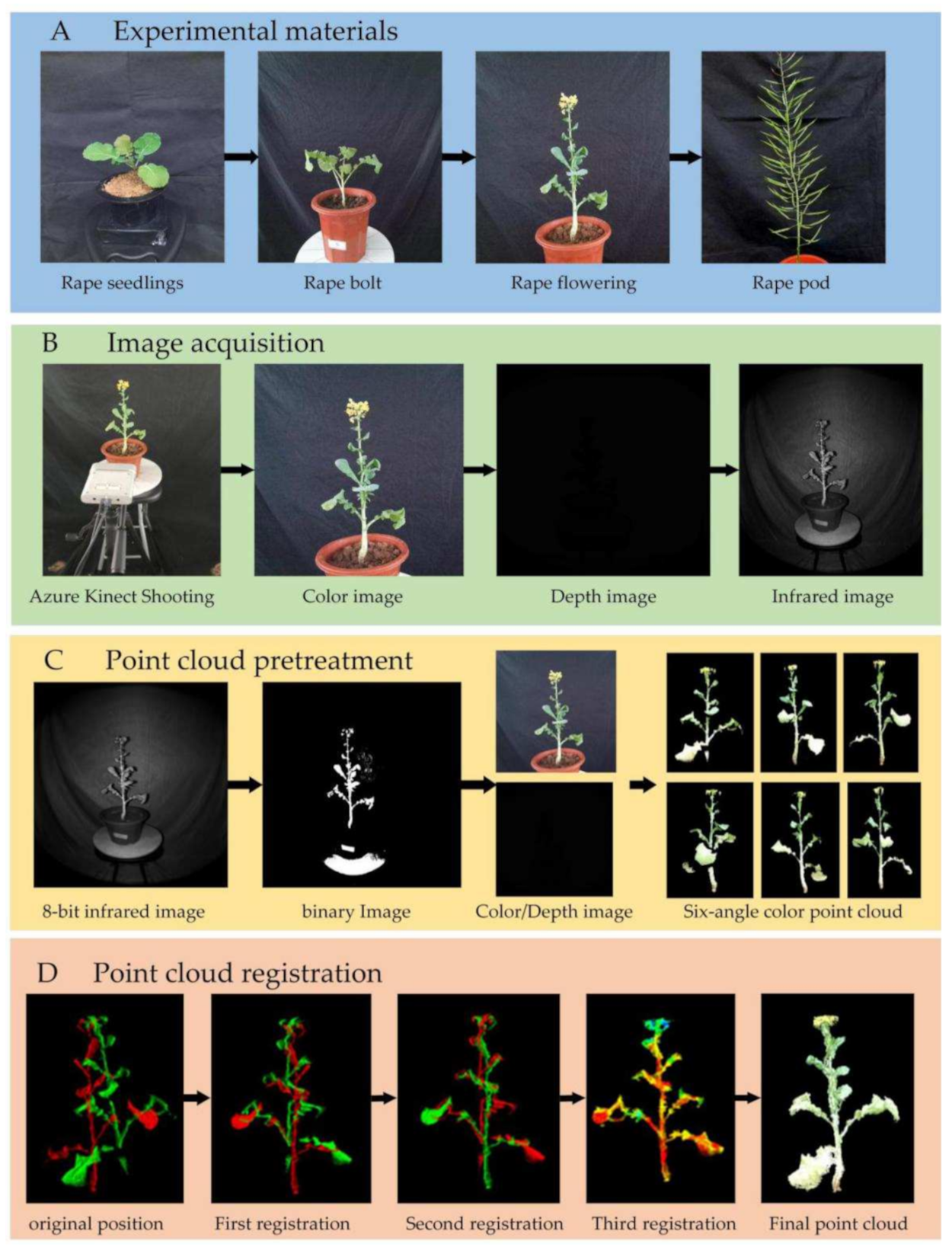

2. Materials and Methods

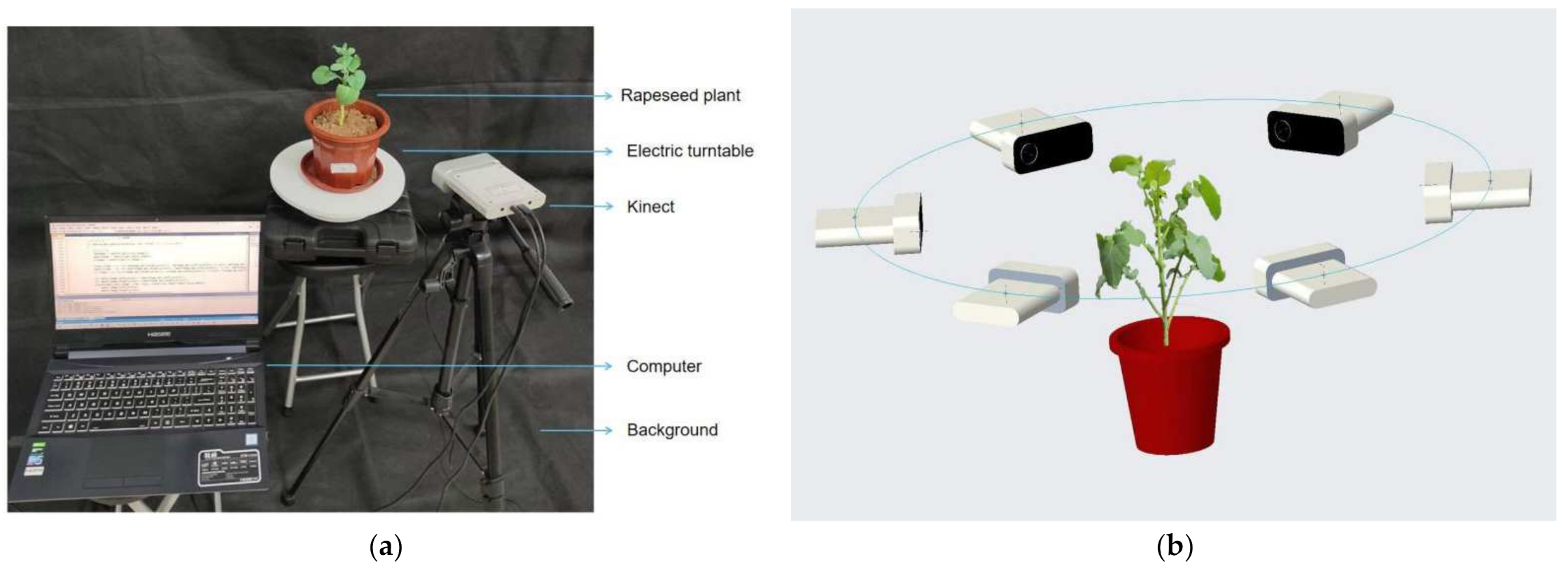

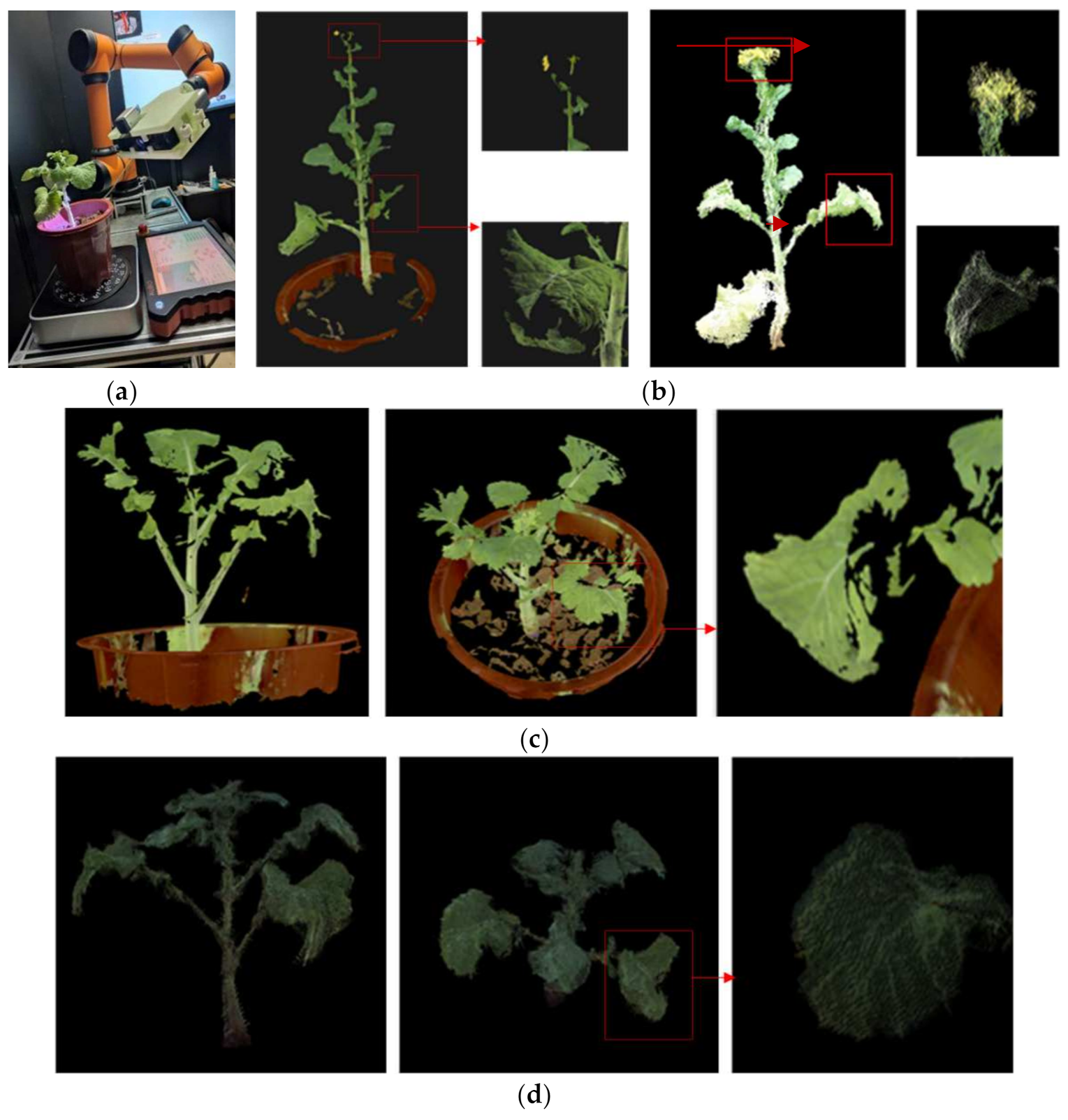

2.1. Experimental Setup and Data Acquisition

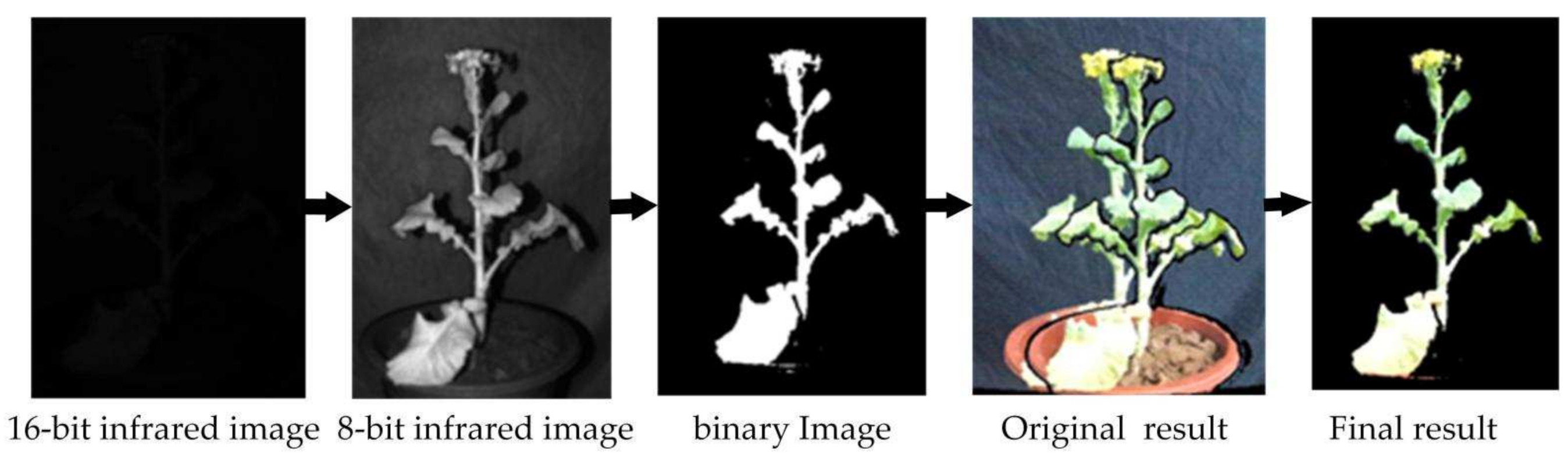

2.2. How to Obtain a Color Point Cloud in a Single View

2.2.1. RGB-D Image Alignment in a Single View

2.2.2. Color Point Cloud Acquisition in a Single View

Floating Point Denoising

Color Point Cloud Conversion

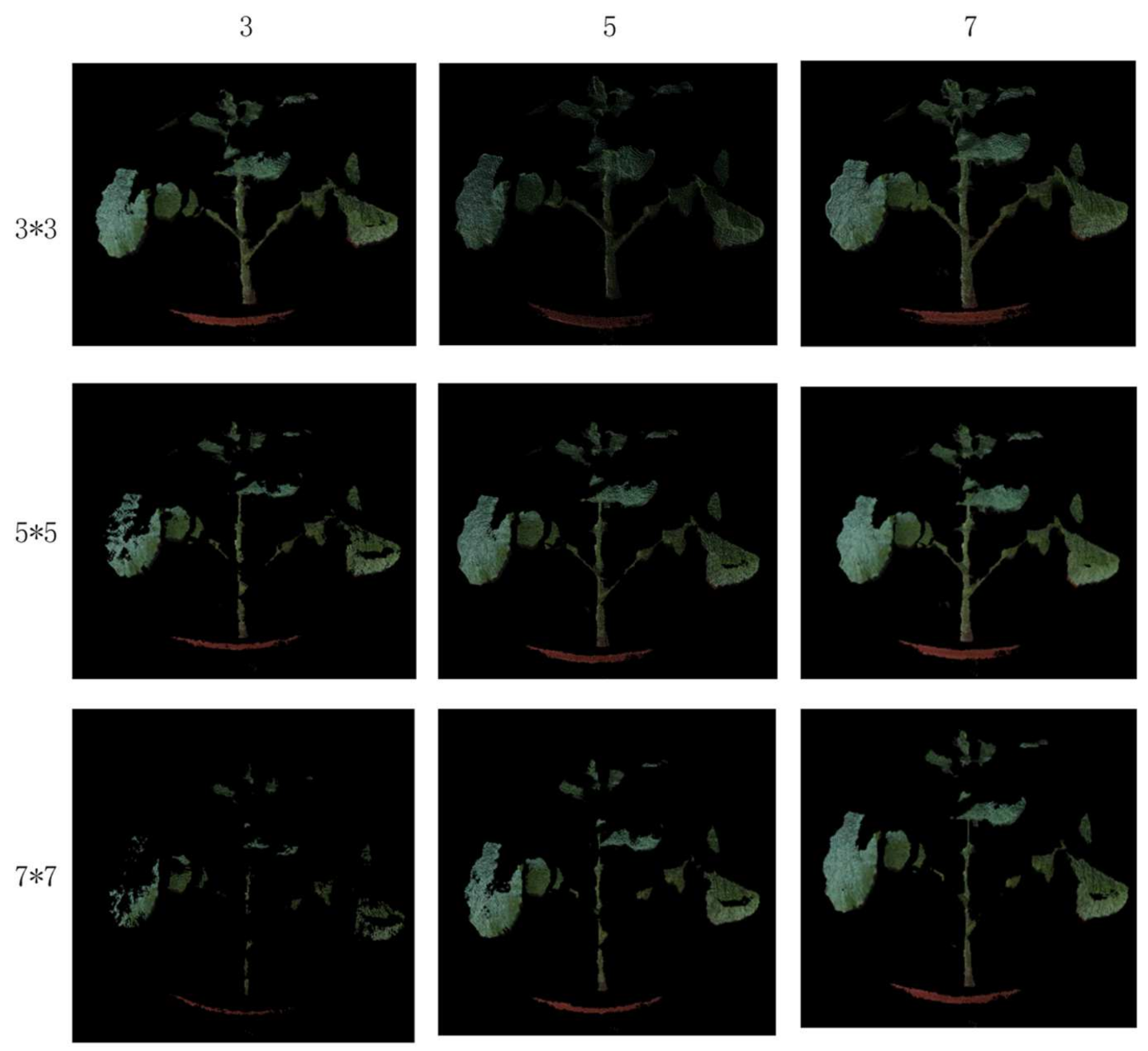

Point Cloud Filtering

2.3. Registration for Multi-View Point Clouds

Registration Optimization Based on ICP Method

3. Results

3.1. RGB-D Image Alignment Test

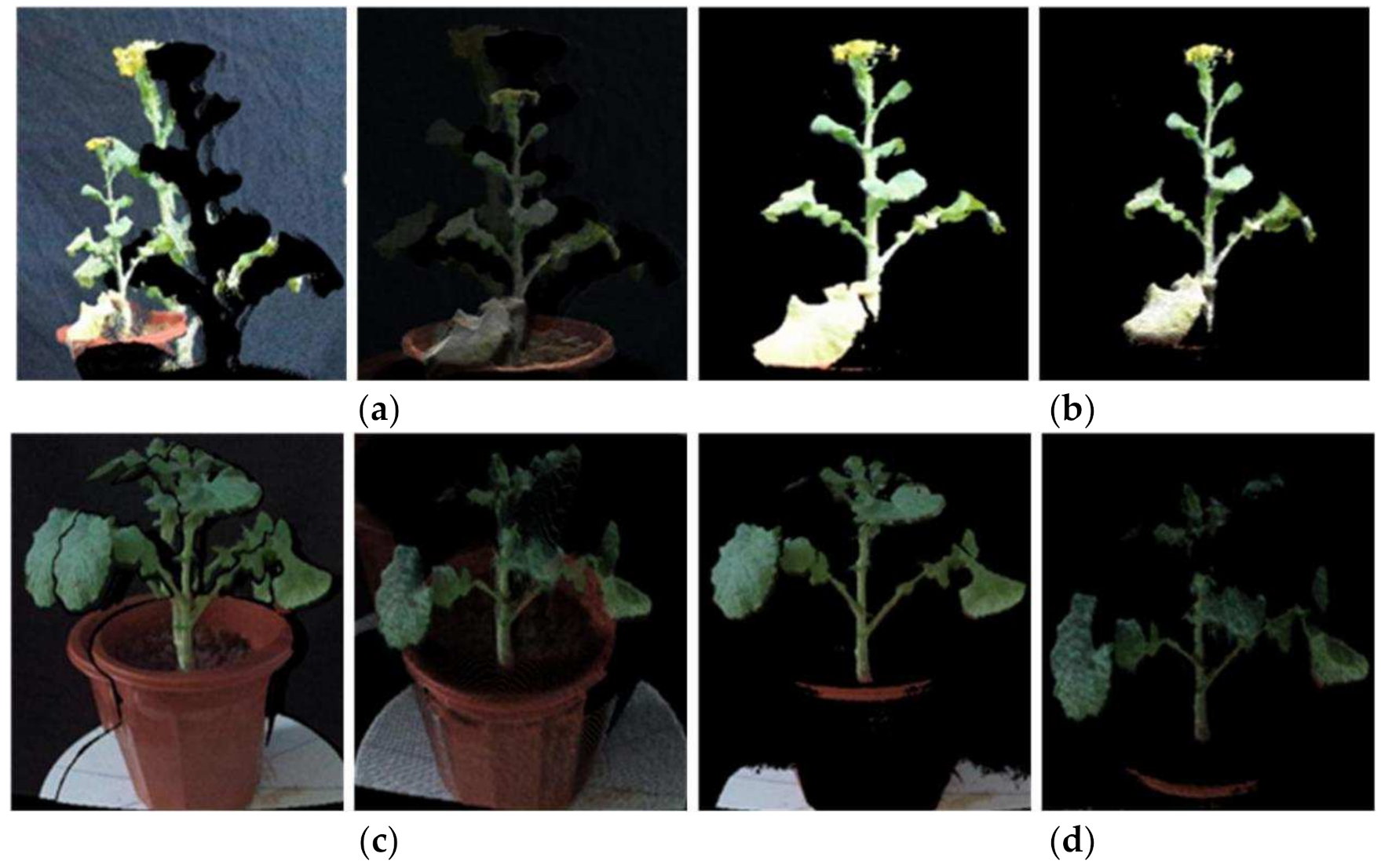

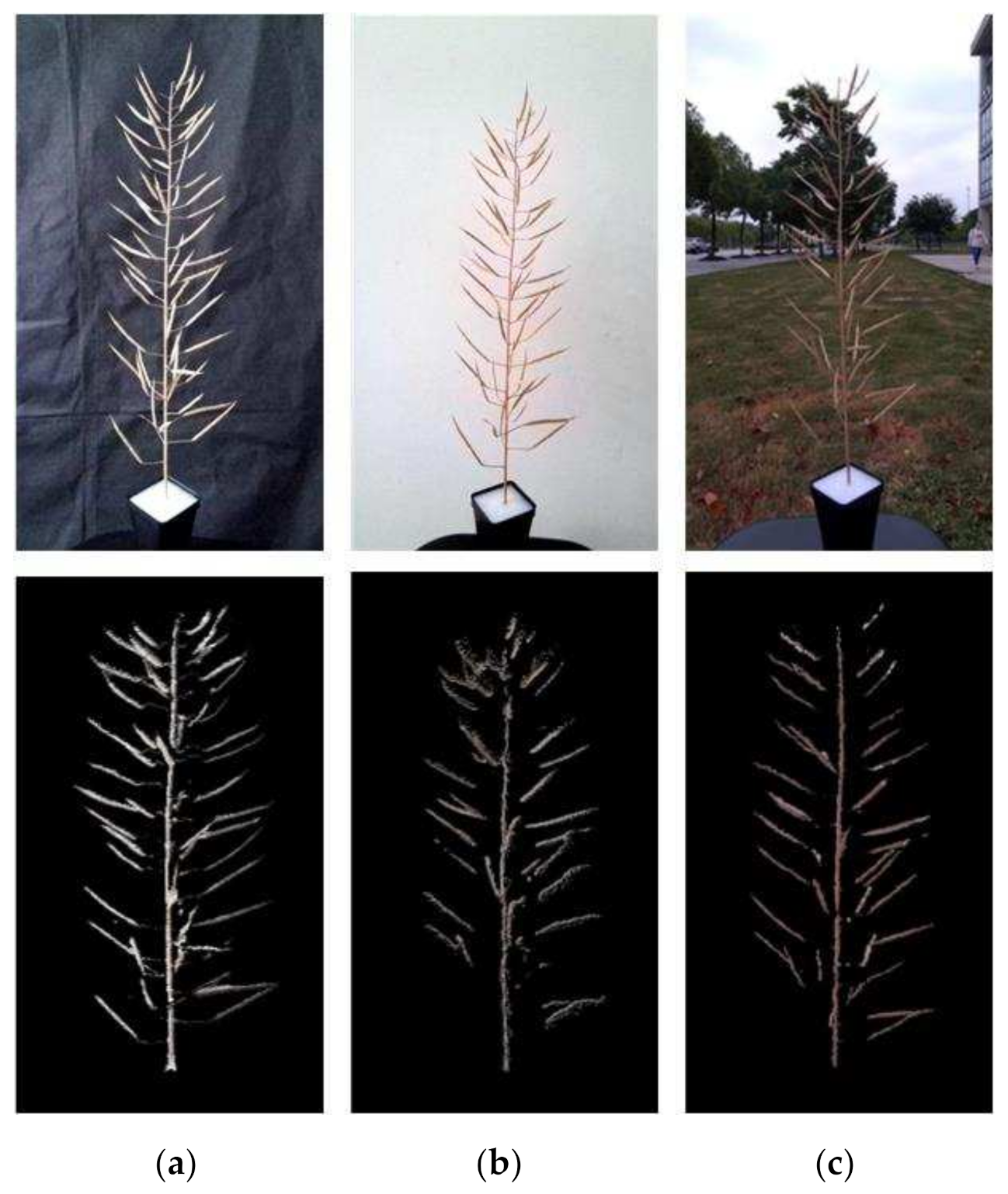

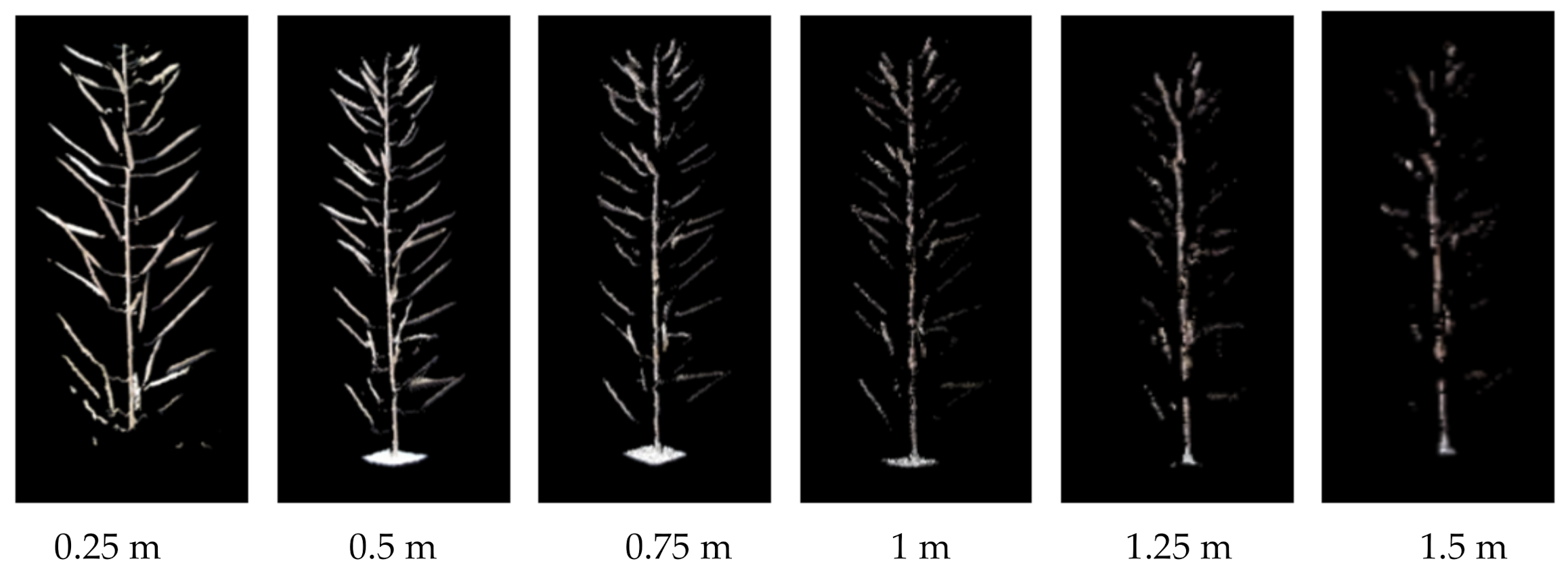

3.2. The Influence of Shooting Background and Distance on 3D Reconstruction

3.3. Point Cloud Noise Removal Test

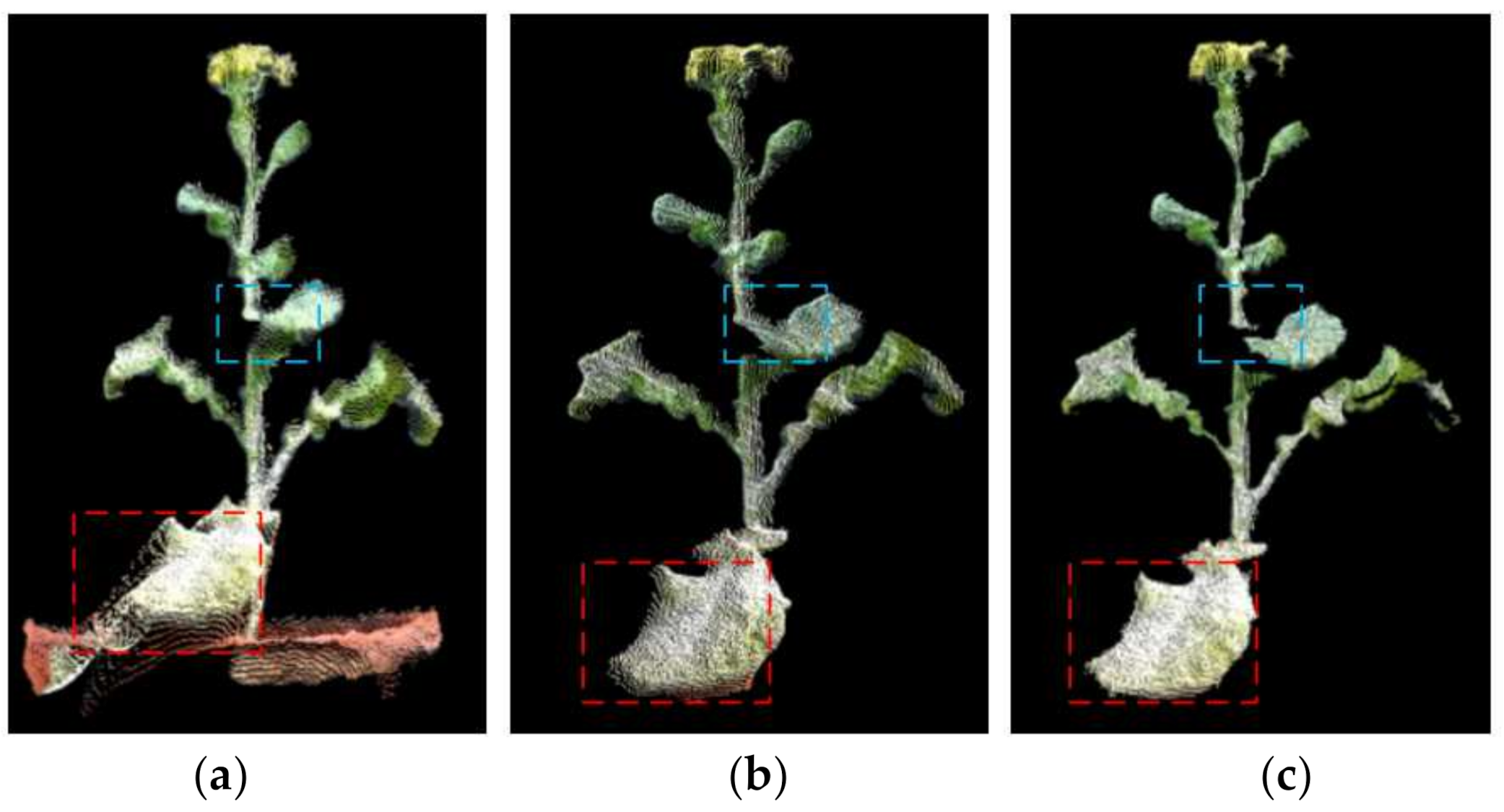

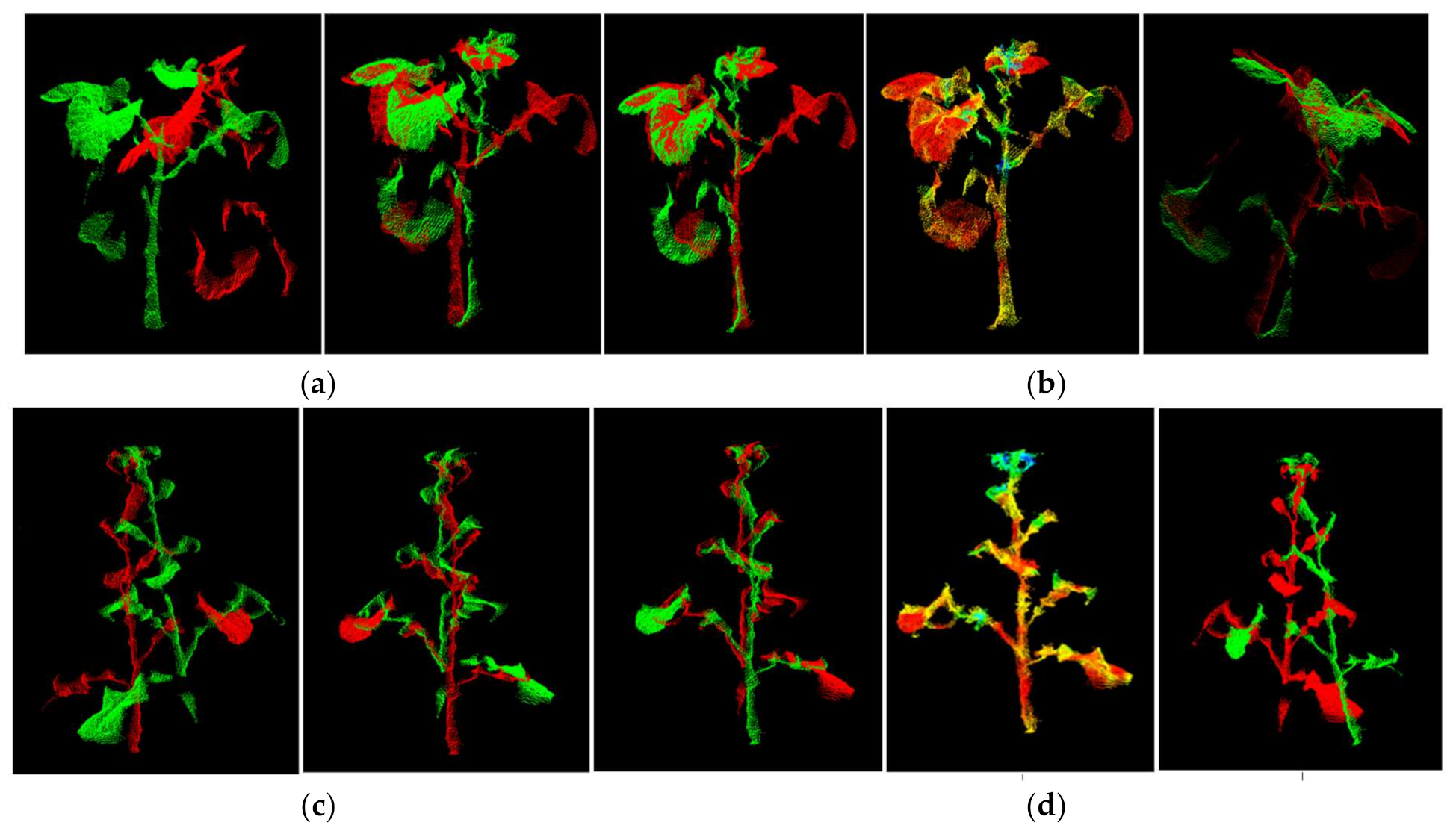

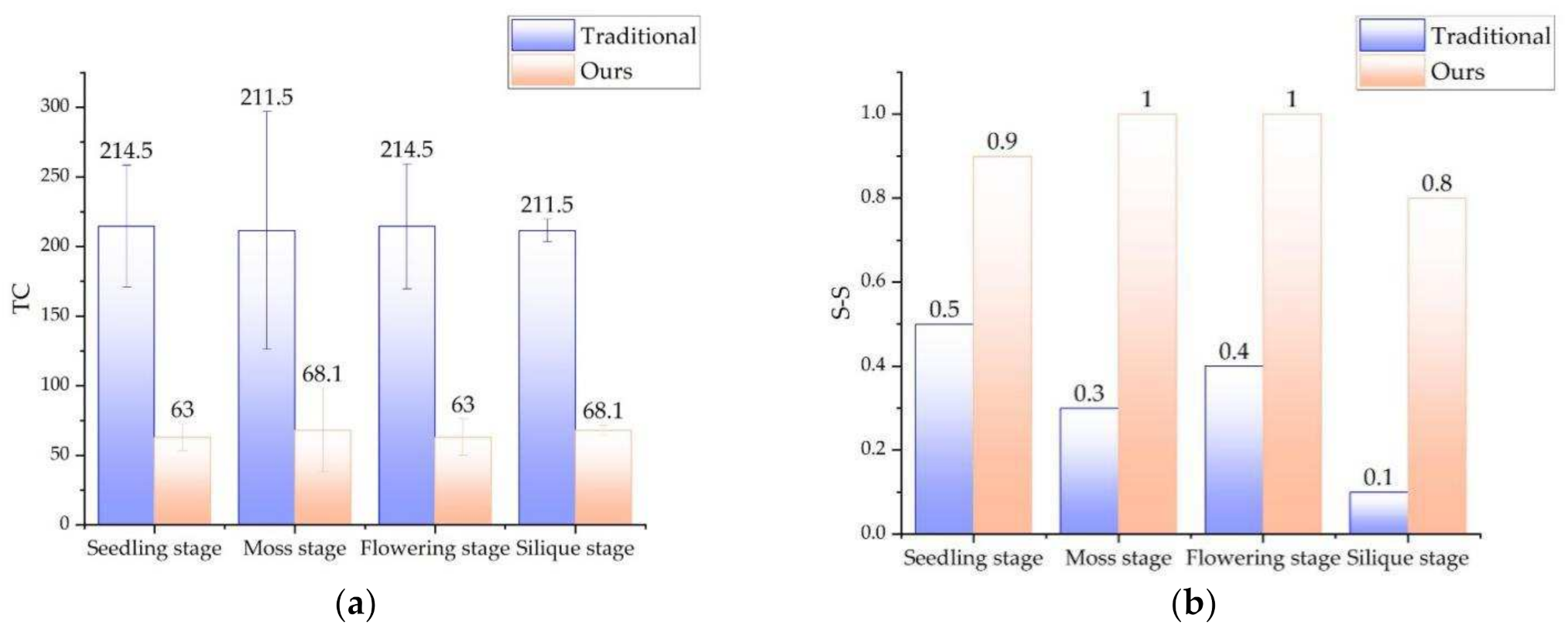

3.4. Point Cloud Registration Experiment

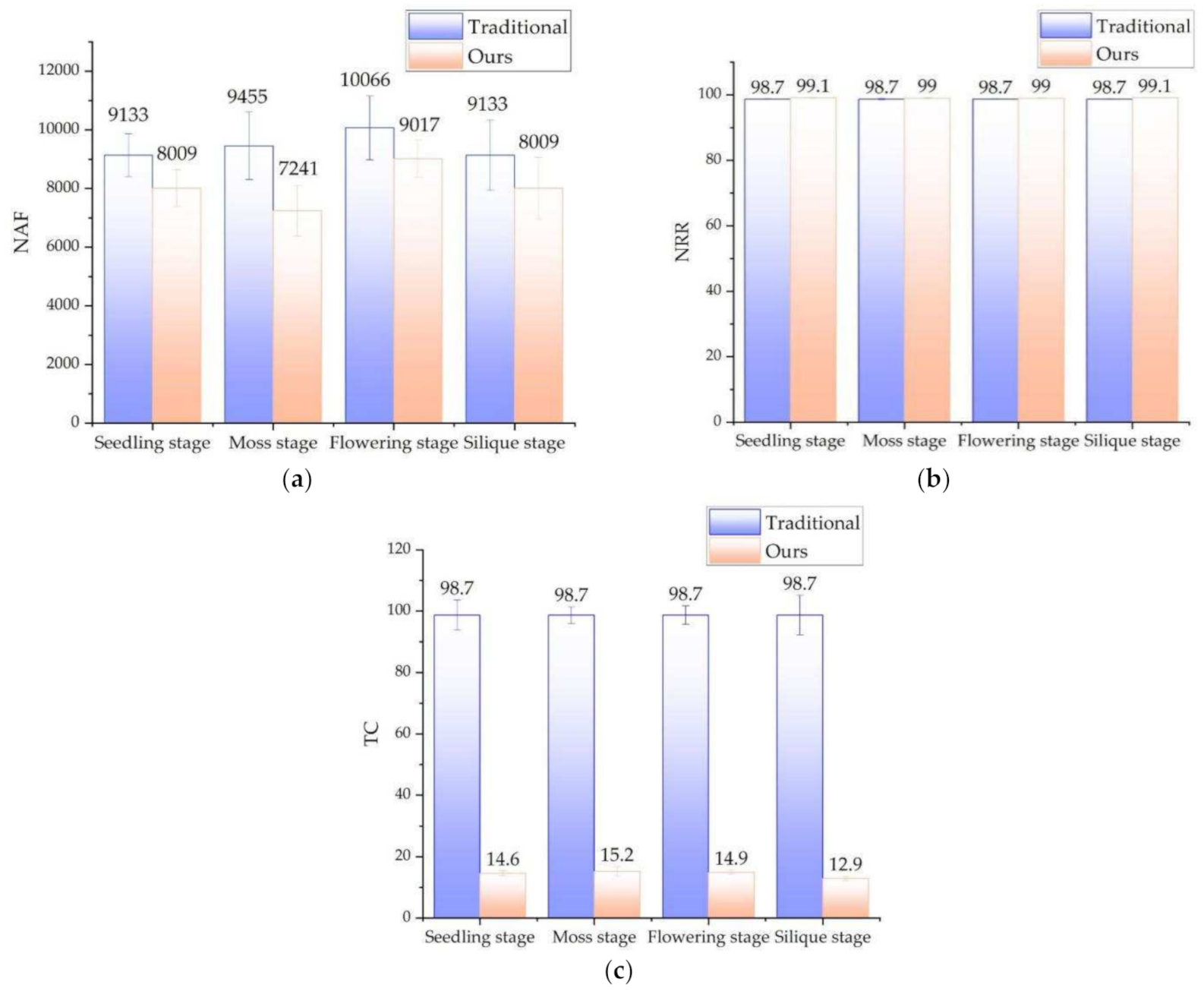

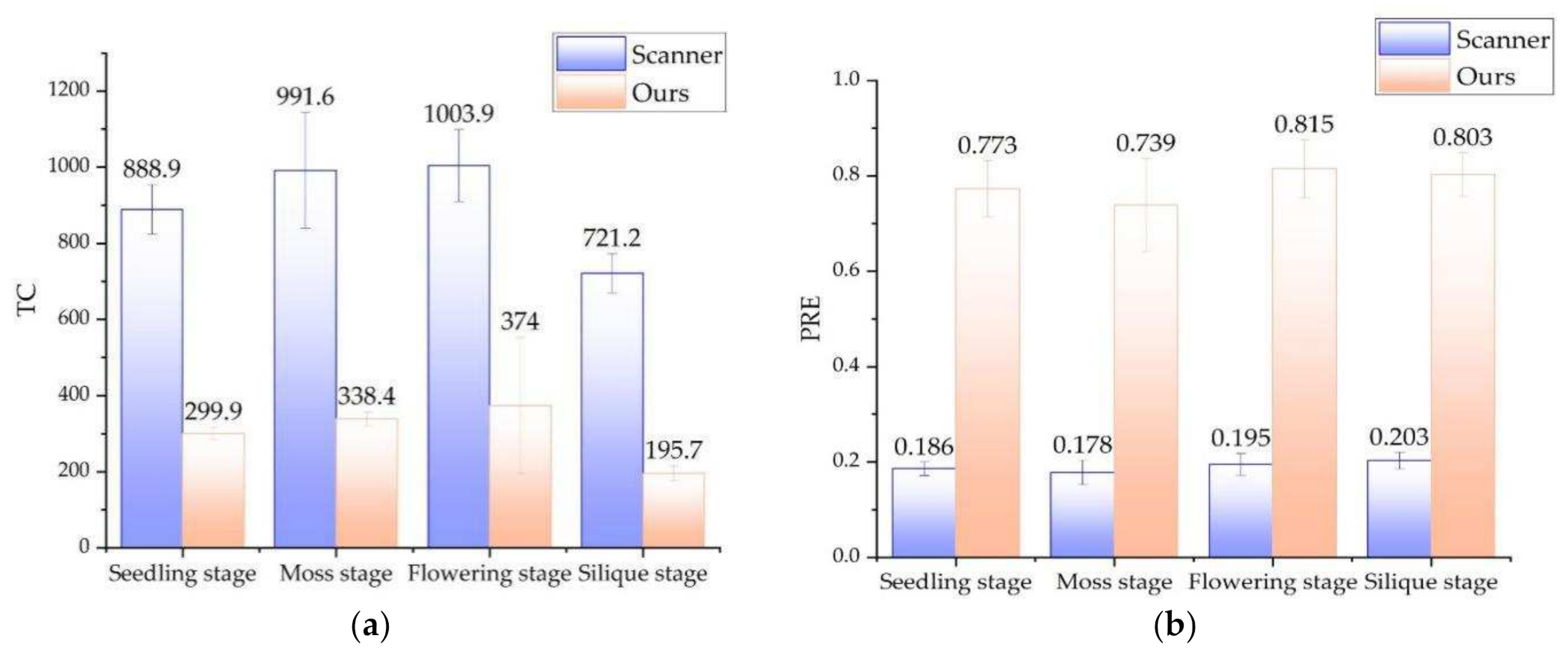

3.5. Integral 3D Reconstruction Experiment

3.6. Analysis and Discussion of 3D Reconstruction of Rapeseed Plants

- (1) Seedling stage. Rapeseed plants at this stage are very short, so the camera can image them from a relatively close distance, which yields a more accurate point cloud. However, because the plants are so small, their point clouds show little curvature variation; consequently, too few matching point pairs are found and registration is prone to failure.

- (2) Seedling stage and moss stage. Rapeseed plants show no obvious morphological differences across this period, but they are considerably larger than at the seedling stage, so the shooting distance must be increased to capture the whole plant. As a result, the point cloud quality is not significantly different from that at the seedling stage. However, because the plants have a richer morphological structure at this stage, the registration success rate is greatly improved.

- (3) Silique stage. Rapeseed plants at this stage are tall (often more than 2 m), so they must be cut into branches before imaging. Each branch is similar in size to a moss-stage plant. However, the stalks and stems on these branches are very thin (often less than 1 mm), which is close to the minimum spatial resolution of the proposed method. As a result, parts of the point cloud are often missing and the number of matching point pairs drops sharply; the traditional ICP method becomes almost unusable, and the registration success rate of the proposed method also decreases.

All in all, the proposed method achieves a higher 3D reconstruction success rate and better point cloud quality across the whole growth period of rapeseed plants.
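Item (1) attributes registration failures at the seedling stage to weak curvature features. One widely used way to quantify local curvature in a point cloud is the "surface variation": the smallest eigenvalue of a point's neighbourhood covariance divided by the sum of all three eigenvalues. The sketch below is an illustration under stated assumptions, not the authors' method: it uses brute-force k-nearest-neighbour search, and the function name `surface_variation` and the default `k = 10` are hypothetical choices.

```python
import numpy as np


def surface_variation(points: np.ndarray, k: int = 10) -> np.ndarray:
    """Per-point curvature proxy lambda_min / (lambda_0 + lambda_1 + lambda_2).

    Hypothetical helper for illustration. Neighbourhoods are the k nearest
    points (the query point included), found by brute force, so memory grows
    as O(N^2); this is only suitable for small example clouds.
    """
    pts = np.asarray(points, dtype=float)
    # Pairwise squared Euclidean distances between all points.
    d2 = ((pts[:, None, :] - pts[None, :, :]) ** 2).sum(axis=-1)
    # Indices of the k nearest neighbours of every point.
    nbr = np.argsort(d2, axis=1)[:, :k]
    out = np.empty(len(pts))
    for i, idx in enumerate(nbr):
        local = pts[idx] - pts[idx].mean(axis=0)
        cov = local.T @ local / len(idx)
        eig = np.linalg.eigvalsh(cov)  # eigenvalues in ascending order
        total = eig.sum()
        out[i] = eig[0] / total if total > 0 else 0.0
    return out
```

On a flat patch the value is essentially zero, while curved surfaces give positive values; a cloud whose values are uniformly close to zero offers few distinctive points for correspondence search, which is consistent with the difficulty described in item (1).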
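Items (2) and (3) link point cloud quality to shooting distance and to the smallest structure the system can resolve. Under an ideal pinhole camera model, one pixel covers roughly Z / f millimetres laterally at depth Z (in millimetres) for a focal length f in pixels. The sketch below assumes that model and a hypothetical focal length of 600 pixels, which is not a value reported in the paper; real depth sensors also add noise and filtering that can make the effective resolution coarser.

```python
def pixel_footprint_mm(distance_m: float, focal_px: float) -> float:
    """Lateral size in mm covered by one pixel at the given distance.

    Assumes an ideal pinhole camera: footprint = Z / f, with Z converted to mm.
    """
    if distance_m <= 0 or focal_px <= 0:
        raise ValueError("distance and focal length must be positive")
    return distance_m * 1000.0 / focal_px


def pixels_across(feature_mm: float, distance_m: float, focal_px: float) -> float:
    """Approximate number of pixels spanned by a feature of the given width."""
    return feature_mm / pixel_footprint_mm(distance_m, focal_px)


FOCAL_PX = 600.0  # hypothetical focal length in pixels, not taken from the paper

for z in (0.5, 1.0, 1.5):
    print(f"Z = {z} m: {pixel_footprint_mm(z, FOCAL_PX):.2f} mm/pixel, "
          f"a 1 mm stem spans {pixels_across(1.0, z, FOCAL_PX):.2f} px")
```

Under these assumptions, tripling the shooting distance triples the pixel footprint, so a stem about 1 mm thick shrinks from roughly one pixel to a fraction of a pixel, which illustrates why such structures tend to drop out of the point cloud.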
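Item (3) notes that the traditional ICP method becomes almost unusable when matching point pairs are scarce. For reference, the classic point-to-point ICP loop (nearest-neighbour correspondences followed by a closed-form SVD rigid fit, often called the Kabsch solution) can be sketched as below. This is the textbook algorithm, not the authors' optimized registration; the brute-force neighbour search, the `max_dist` rejection threshold, and the three-pair minimum are illustrative assumptions.

```python
import numpy as np


def best_rigid_transform(src: np.ndarray, dst: np.ndarray):
    """Least-squares R, t with R @ src_i + t ~= dst_i (Kabsch / SVD)."""
    cs, cd = src.mean(axis=0), dst.mean(axis=0)
    H = (src - cs).T @ (dst - cd)
    U, _, Vt = np.linalg.svd(H)
    # Correct the sign so that R is a proper rotation, not a reflection.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    return R, cd - R @ cs


def icp(source, target, max_iter=50, max_dist=np.inf, tol=1e-10):
    """Classic point-to-point ICP; returns (R, t, n_pairs) mapping source onto target."""
    src = np.asarray(source, dtype=float)
    tgt = np.asarray(target, dtype=float)
    R_total, t_total = np.eye(3), np.zeros(3)
    prev_err, n_pairs = np.inf, 0
    for _ in range(max_iter):
        moved = src @ R_total.T + t_total
        # Brute-force nearest neighbour in the target for every moved source point.
        d2 = ((moved[:, None, :] - tgt[None, :, :]) ** 2).sum(axis=-1)
        nn = d2.argmin(axis=1)
        dist = np.sqrt(d2[np.arange(len(moved)), nn])
        keep = dist < max_dist  # reject pairs that are too far apart
        n_pairs = int(keep.sum())
        if n_pairs < 3:
            raise RuntimeError("too few matching point pairs for registration")
        R, t = best_rigid_transform(moved[keep], tgt[nn[keep]])
        # Compose the incremental update with the accumulated transform.
        R_total, t_total = R @ R_total, R @ t_total + t
        err = dist[keep].mean()
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return R_total, t_total, n_pairs
```

Because every iteration depends on the current set of valid pairs, a sparse or partially missing cloud leaves fewer pairs after rejection, and in this sketch the function raises an error once fewer than three remain.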

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Teng, X.; Zhou, G.; Wu, Y.; Huang, C.; Dong, W.; Xu, S. Three-Dimensional Reconstruction Method of Rapeseed Plants in the Whole Growth Period Using RGB-D Camera. Sensors 2021, 21, 4628. https://doi.org/10.3390/s21144628
