Abstract

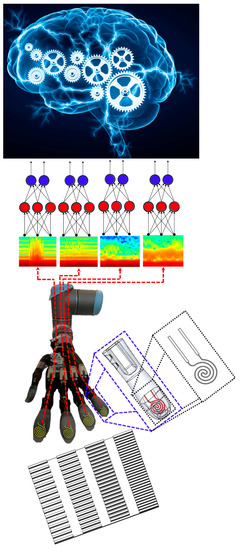

Multifunctional flexible tactile sensors could improve the control of prosthetic hands. To that end, highly stretchable liquid metal tactile sensors (LMSs) were designed, manufactured via photolithography, and incorporated into the fingertips of a prosthetic hand. Three novel contributions were made with the LMSs. First, individual fingertips were used to distinguish between different speeds of sliding contact with different surfaces. Second, differences in surface textures were reliably detected during sliding contact. Third, the capacity for hierarchical tactile sensor integration was demonstrated by using four LMS signals simultaneously to distinguish between ten complex multi-textured surfaces. Four machine learning algorithms were compared for their classification accuracy: K-nearest neighbor (KNN), support vector machine (SVM), random forest (RF), and neural network (NN). Time-frequency features of the LMS signals were extracted to train and test the machine learning algorithms. The NN generally performed best at speed and texture detection with a single finger and had a 99.2 ± 0.8% accuracy in distinguishing between ten different multi-textured surfaces using four LMSs from four fingers simultaneously. The capability for hierarchical multi-finger tactile sensation integration could provide a higher level of intelligence for artificial hands.

1. Introduction

The sensation of touch for prosthetic hands is necessary to improve the upper limb amputee experience in everyday activities [1]. Commercially available prosthetic hands like the i-limb Ultra (Figure 1), which has six degrees of freedom (DOF), and the BeBionic prosthetic hand, which has five powered DOFs, demonstrate the trend of increasing prosthesis dexterity [2], yet these state-of-the-art prosthetic limbs lack tactile sensation when interacting with the environment and manipulating objects. The absence of sensory feedback can lead to the frustrating problem of grasped objects being crushed or dropped, since the amputee is not directly aware of the prosthetic fingertip forces after the afferent neural pathway is severed [3,4]. Human hand control strategies depend heavily on touch sensations for object manipulation [5]; however, people with upper limb amputation lack these tactile sensations. Significant research has been done on tactile sensors for artificial hands [6], but there is still a need for advances in lightweight, low-cost, robust multimodal tactile sensors [7].

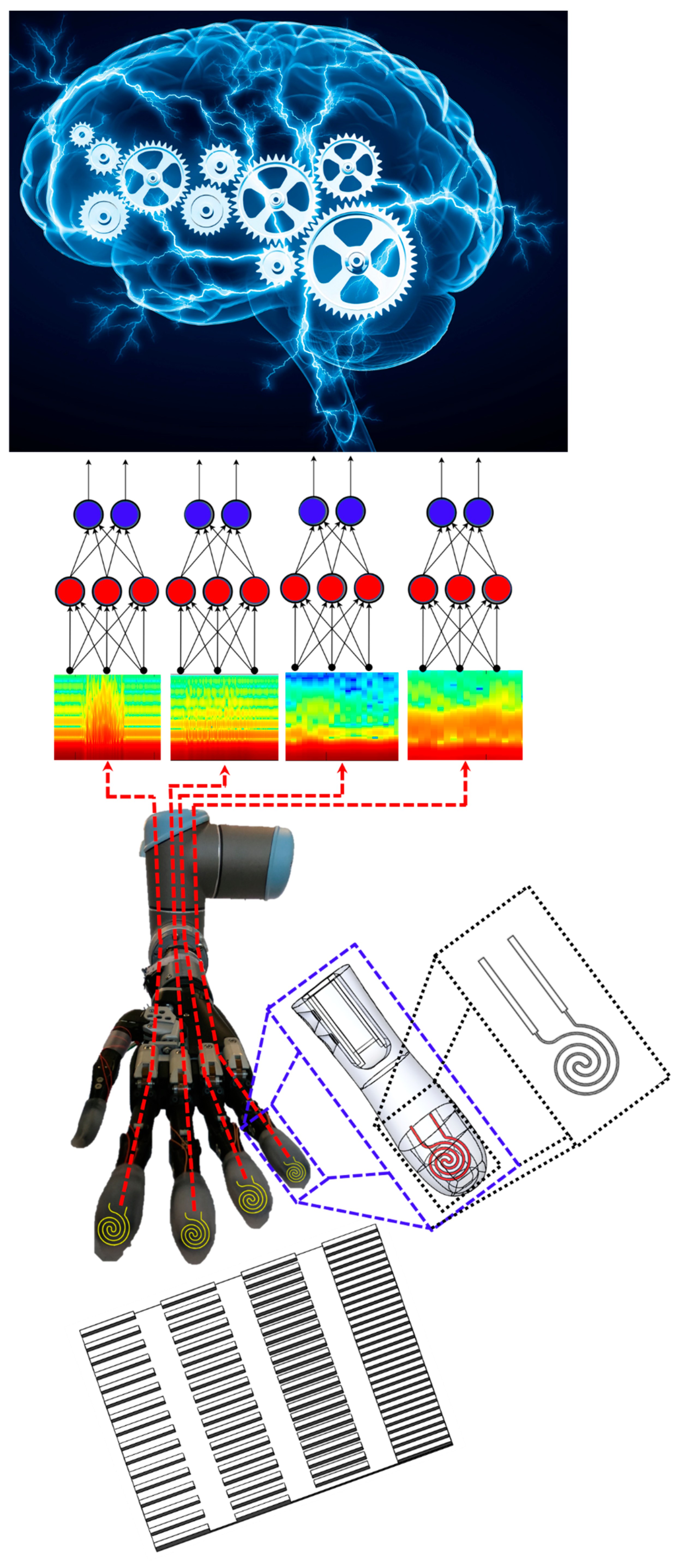

Figure 1.

Liquid metal tactile sensors were integrated into the fingertips of the prosthetic hand. Individual LMSs were used to distinguish between different textures and to discern the speed of sliding contact. Furthermore, LMS signals from four fingertips were used simultaneously to distinguish between complex surfaces comprised of multiple kinds of textures, demonstrating a new hierarchical form of intelligence.

Tactile sensors have evolved from rigid components to completely flexible elastomers, which have many applications in robotics [8,9,10,11,12]. Flexible pressure sensors are compatible with conventional microfabrication techniques, which makes them promising candidates for tactile sensing in human-robot interfaces. Recently, some research groups have focused on stretchable tactile sensors for a soft prosthetic hand [13], while others have extended tactile sensors to multimodal sensation [14]. Liquid metal (LM), specifically eutectic gallium–indium, can be encapsulated in silicone-based elastomers to provide several key advantages over traditional sensors, including high conductivity, compliance, flexibility, and stretchability [15]. These properties have previously been exploited for three-axis tactile force sensors [16] and shear force detection [17]. While sophisticated tactile sensors such as the BioTac have previously been used to distinguish between many types of textures [18] and to detect sliding motion [19], to the best of the authors' knowledge this paper is the first to demonstrate such capabilities with an LMS for a prosthetic hand (Figure 1).

A manipulator that can recognize the surface features of an object could achieve a higher level of autonomy or intelligence [20,21,22,23,24]. Furthermore, this information could be used to provide haptic feedback to amputees who use prosthetic hands, reconnecting a previously severed sense of touch [25]. For surface texture recognition, it is very important to choose a suitable tactile sensor [26,27,28,29,30,31] and to pair it with machine learning algorithms that classify surface features [32,33,34,35,36].

There are three sources of novelty in this paper. First, we will use the LMS to discern the speed of sliding contact against different surfaces. Second, we will show that LMSs can be used to distinguish between different textures. Third, we will integrate tactile information from LMSs on four prosthetic hand fingertips simultaneously to distinguish between complex multi-textured surfaces, demonstrating a new form of hierarchical intelligence (Figure 1). Furthermore, we will compare the classification accuracy of four machine learning algorithms on these three tasks.

2. Materials and Methods

2.1. Liquid Metal Tactile Sensor Operational Principle

The electrical resistance of the LMS changes in response to externally applied forces. The conductive LM injected into the microfluidic channels (Figure 1) changes resistance when an external force alters the dimensions of the microfluidic channels (Figure S1): the channels increase in length and decrease in cross-sectional area when an external force is applied. The relation between resistance and channel dimensions is described by:

$$R = \frac{\rho L}{A}$$

where R is the resistance across the terminals of the LM conductor and ρ is the resistivity of the LM. The geometric dimensions L (length of the LM conductor) and A (cross-sectional area of the microfluidic channel) change as external forces deform the sensor, producing a measurable change in the electrical resistance of the LM conductor (Figure S2).
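The relation R = ρL/A can be made concrete with a short calculation. The sketch below is a minimal illustration, not the authors' model. It assumes an approximate literature resistivity for eutectic gallium–indium of about 2.94 × 10⁻⁷ Ω·m and a hypothetical total channel length of 100 mm (the actual channel length is not stated here), together with the 400 µm × 100 µm cross-section from Section 2.2. It also assumes the idealized case of an incompressible conductor under uniaxial stretch: since the volume LA is conserved, R grows with the square of the stretch ratio.

```python
# Sketch: resistance of a liquid-metal channel from R = rho * L / A.
# Assumptions (not from the paper): EGaIn resistivity ~2.94e-7 ohm*m
# (approximate literature value) and a hypothetical 100 mm channel length.

EGAIN_RESISTIVITY = 2.94e-7  # ohm*m, approximate


def channel_resistance(length_m: float, width_m: float, height_m: float,
                       rho: float = EGAIN_RESISTIVITY) -> float:
    """Return R = rho * L / A for a rectangular channel cross-section."""
    area = width_m * height_m
    return rho * length_m / area


def stretched_resistance(r0: float, stretch: float) -> float:
    """Idealized uniaxial stretch of an incompressible conductor.

    Length scales by `stretch` and, because the volume L*A is conserved,
    area scales by 1/stretch, so resistance scales by stretch**2.
    """
    return r0 * stretch ** 2


# 400 um x 100 um cross-section (Section 2.2); the length is hypothetical.
r0 = channel_resistance(0.100, 400e-6, 100e-6)
print(f"unstretched: {r0:.3f} ohm")
print(f"10% stretch: {stretched_resistance(r0, 1.10):.3f} ohm")
```

With these assumed values the estimate is of the same order as the approximately 1 Ω sensor resistance reported in Section 2.6; the real sensor deforms under normal and shear loads rather than pure uniaxial stretch, so the squared-stretch rule is only a first-order guide.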

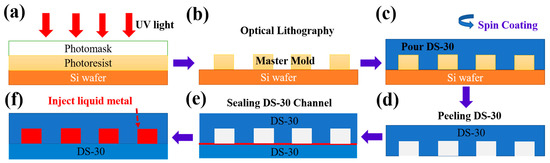

2.2. Microfabrication of Liquid Metal Sensor Mold

The LMS mold was microfabricated via photolithography. SolidWorks (SolidWorks, Waltham, MA, USA) was used to design the LMS microchannel with a 400 µm × 100 µm cross-section. The design was printed onto a photomask that defined the microchannel pattern during ultraviolet exposure. A 100 µm thick layer of SU-8 50 was first deposited on a silicon wafer by spin coating (Ni-Lo 4 Vacuum Holder Digital Spin Coater, Ni-Lo Scientific, Ottawa, ON, Canada) at 1000 RPM for 30 s with an acceleration of 300 rad/s². The wafer was soft baked at 65 °C for 10 min and then at 95 °C for 30 min. An OAI 800 Mask Aligner (OAI, Milpitas, CA, USA) was used for photolithography: the photomask was placed on top of the SU-8 50 coated silicon wafer, and an ultraviolet dose of 375 mJ/cm² transferred the microchannel patterns from the photomask to the coated wafer (Figure 2a). After exposure, the wafer was post-baked on a hotplate at 65 °C for 5 min and then at 95 °C for 10 min. The sensor pattern was developed using SU-8 developer (Figure 2b).

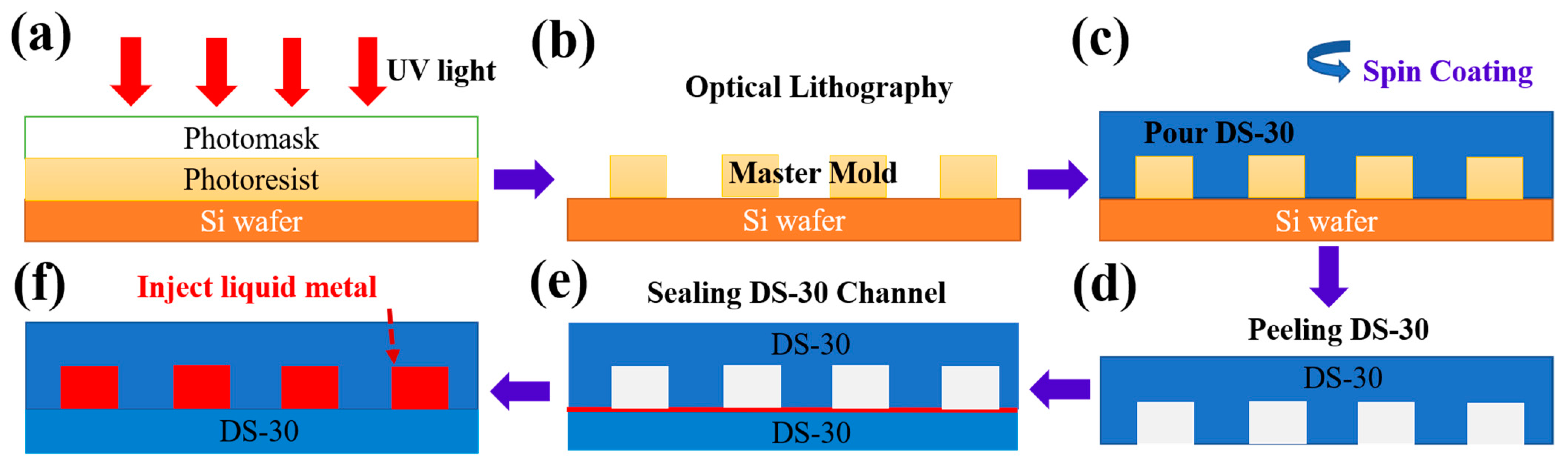

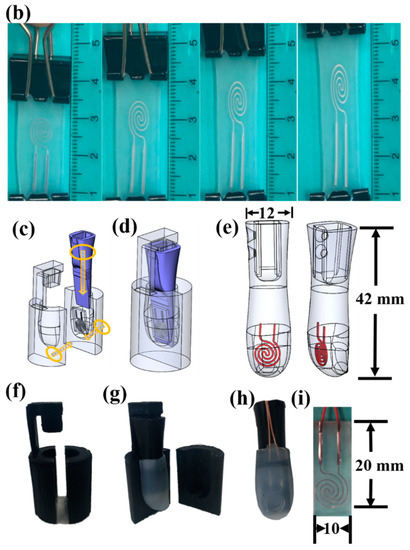

Figure 2.

Liquid metal sensor manufacturing process. (a) Photolithography was used to manufacture the (b) Master mold. (c) Spin coating was used to manufacture the top and bottom layers. (d) The top part of the microfluidic channels was peeled off the mold. (e) A thin layer of DS-30 (red line) was used to bond and seal the top and bottom layers together. (f) After curing, liquid metal was injected into the sealed microchannels with a syringe. Adapted with permission from ref [4]. Copyright 2020 IEEE.

2.3. Liquid Metal Sensor Manufacturing Process

The LMS has two layers: a top layer and a bottom layer. Both were made from Dragon-Skin 30 (DS-30, Smooth-On, Macungie, PA, USA) because of its flexibility. Spin coating was used to manufacture and bond the top and bottom layers. DS-30 was spread on the surface of a centered blank wafer, and the spin coater was run at 500 RPM with an acceleration of 100 rad/s² for 60 s to create an even 250 µm thick layer of DS-30 (Figure 2c). The layer was then cured in an oven at 60 °C for 10 min. This process was performed twice, stacking two 250 µm layers to obtain a 500 µm thickness for both the top (Figure 2d) and the bottom layers.

To bond the top and bottom layers together, a 7 µm layer of DS-30 was obtained by operating the spin coater at 4000 RPM with an acceleration of 500 rad/s² for 60 s. This layer was left to air dry at room temperature for 7 min so that it was firm but not yet cured. The patterned layer was laid atop this partially cured layer to form the microfluidic channel cavity (Figure 2e), and the assembly was then cured in the oven for 5 min.
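Spin-coater acceleration is often specified in RPM/s rather than rad/s². The helper below converts the recipe values stated in Sections 2.2 and 2.3 and estimates how long each spin takes to reach its target speed. It is a minimal sketch that assumes a constant angular acceleration; real spin-coater controllers may ramp differently.

```python
import math


def rpm_to_rad_s(rpm: float) -> float:
    """Convert revolutions per minute to radians per second."""
    return rpm * 2 * math.pi / 60


def ramp_time_s(target_rpm: float, accel_rad_s2: float) -> float:
    """Time to reach target speed at constant angular acceleration."""
    return rpm_to_rad_s(target_rpm) / accel_rad_s2


def accel_rpm_per_s(accel_rad_s2: float) -> float:
    """Express an angular acceleration in RPM per second."""
    return accel_rad_s2 * 60 / (2 * math.pi)


# (step, target RPM, acceleration in rad/s^2, hold time in s), from the text
recipes = [
    ("SU-8 50 mold layer", 1000, 300, 30),
    ("DS-30 structural layer", 500, 100, 60),
    ("DS-30 bonding layer", 4000, 500, 60),
]

for name, rpm, accel, hold in recipes:
    print(f"{name}: ramp {ramp_time_s(rpm, accel):.2f} s "
          f"({accel_rpm_per_s(accel):.0f} RPM/s), hold {hold} s")
```

All three ramps finish in under a second, so nearly the entire stated spin time is spent at the target speed that sets the layer thickness.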

2.4. Liquid Metal Injection Process

Next, LM was injected into the sealed microfluidic channel (Figure 2f) using two 31-gauge syringes: the first syringe injected the LM into the microchannel while the second simultaneously extracted the air trapped within the microchannel cavity (Figure 3a). Solid-core electrical wires (30 gauge) were stripped of 0.75 cm of insulation and inserted to establish electrical contact with the liquid metal, completing the fabrication of the LMS (Figure 3i).

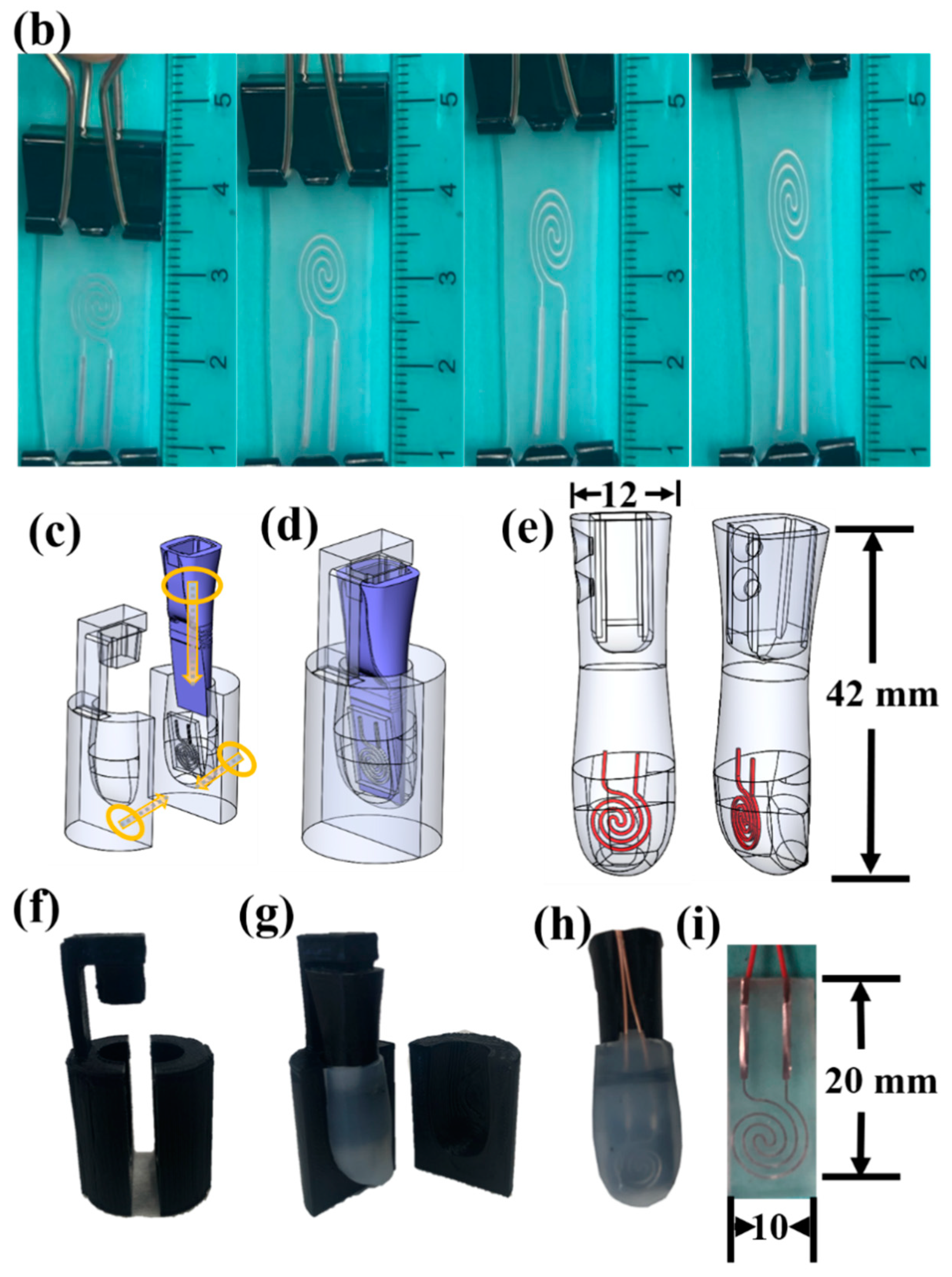

Figure 3.

(a) The liquid metal was injected with one syringe while air within the microchannel cavity was simultaneously extracted with another syringe. (b) The LMS is highly stretchable (units of cm). (c) Fabrication of the first mold to create the inner part of the fingertip assembly: exploded view. (d) Assembled view for finger casting procedure. (e) Finger after removing from the cast. (f) 3D-printed finger-shaped cast. (g) 3D-printed inner finger part upon which the LMS was placed. (h) The completed fingertip with (i) integrated liquid metal sensor.

2.5. Design and Assembly of Fingertip Tactile Sensor

Distal phalanx support structures were designed to integrate the LMS into the fingertips of the i-limb Ultra prosthetic hand. The support structures were designed in SolidWorks and 3D printed in ABS on an Ultimaker S3 (Ultimaker, Waltham, MA, USA) (Figure 3c–e). Each LMS was tied against the flat face of its distal phalanx support structure using nylon string. Vertical stabilizers were then printed to hold the support structures motionless while DS-30 was poured into the two-part outer cast (Figure 3f,g). The two halves were aligned, and the mold was taped shut and left to cure for 24 h. After curing, the mold was opened, the fingertip with its embedded LMS was removed from the mold, and the flash was trimmed (Figure 3h).

2.6. Robotic System Configuration

The original sensorless fingertips of the i-limb were removed, and the new fingertips with LMSs (Figure 3h) were mounted onto the i-limb using the same connection points (Figure 4). The prosthetic hand with the LMSs was then mounted onto a six-DOF robotic arm (UR-10, Universal Robots, Odense, Denmark) to slide the hand along different surfaces and textures at different speeds (Figure 1). The hand was attached to the arm via a 3D-printed mechanical adaptor with a coaxial electrical connector and a prosthetic hand lamination collar (Ossur, Reykjavik, Iceland) for stability and electrical connectivity. The robotic arm was programmed using the teach pendant to repeatedly perform sliding contact against the different surfaces and textures.

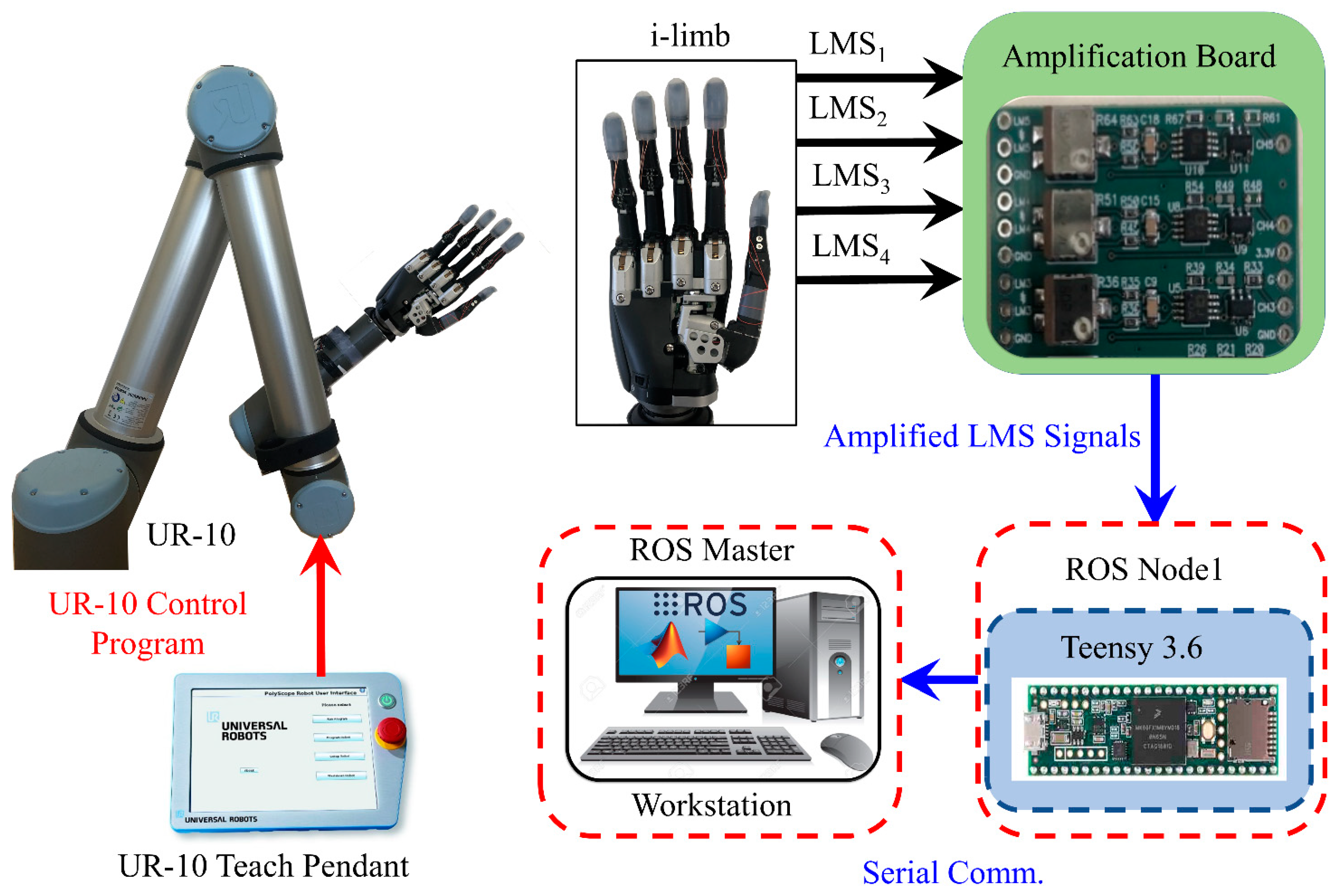

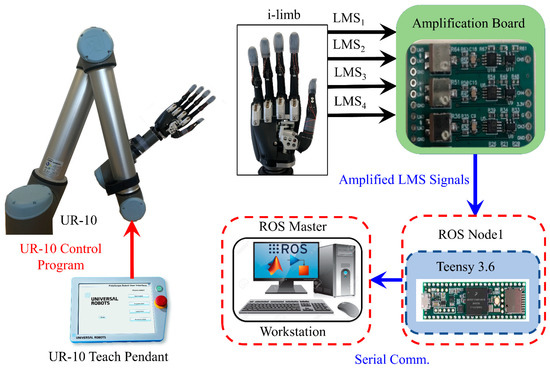

Figure 4.

Robotic system configuration. The i-limb hand was attached to the robotic arm and the LMS tactile sensors were embedded in the fingertips. The LMS tactile sensor signals were amplified using the amplification board and recorded in MATLAB/Simulink via the ROS environment.

Because LM is highly conductive, the resistance of each LMS was only approximately 1 Ω. A five-channel printed circuit board was therefore designed to amplify the LMS signals using Wheatstone bridges (Figure S2). The amplification board was powered at 3.3 V by a Teensy 3.6 microcontroller (PJRC, Portland, OR, USA). The LMSs were connected to the amplifier board, and their signals were sampled by the Teensy running as a ROS node. The ROS master was initiated in Simulink (The MathWorks, Natick, MA, USA), and the Teensy 3.6 acted as a slave node that sampled the LMS signals and published them to the master in Simulink. Data were recorded in Simulink at a 1 kHz sample rate (Figure 4).
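Because an approximately 1 Ω sensor changes by only a small fraction of an ohm, a bridge converts that change into a small differential voltage that can then be amplified. The sketch below computes a generic quarter-bridge output. The completion resistor values, the use of the 3.3 V supply as bridge excitation, and the amplifier gain are hypothetical placeholders chosen for illustration; the actual circuit is shown in Figure S2.

```python
def quarter_bridge_output(r_sensor: float, r1: float = 1.0, r2: float = 1.0,
                          r3: float = 1.0, v_excitation: float = 3.3) -> float:
    """Differential output of a quarter Wheatstone bridge.

    The reference divider is r1 over r2; the sensing divider is r3 in series
    with the sensor. The output is the sensor-node voltage minus the
    reference-node voltage, so a balanced bridge outputs 0 V.
    All resistor values here are hypothetical, not the board's values.
    """
    v_sensor_node = v_excitation * r_sensor / (r3 + r_sensor)
    v_ref_node = v_excitation * r2 / (r1 + r2)
    return v_sensor_node - v_ref_node


# Hypothetical: a 1% resistance increase on a nominal 1 ohm LMS.
v_out = quarter_bridge_output(1.01)
v_approx = 3.3 * 0.01 / 4   # small-signal approximation V * dR / (4 R)
gain = 100                  # hypothetical amplifier gain
print(f"bridge: {v_out * 1e3:.2f} mV (approx. {v_approx * 1e3:.2f} mV), "
      f"after gain: {v_out * gain:.3f} V")
```

The millivolt-scale bridge output illustrates why amplification precedes sampling by the microcontroller's analog input.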

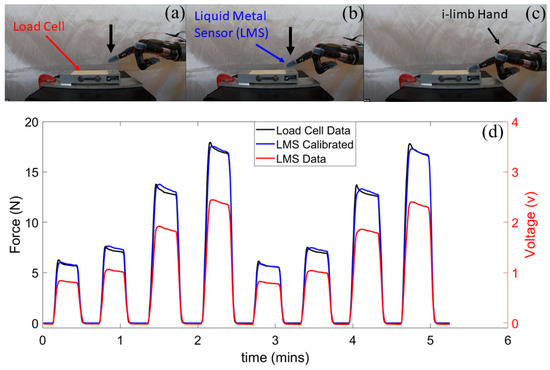

2.7. Liquid Metal Sensor Calibration

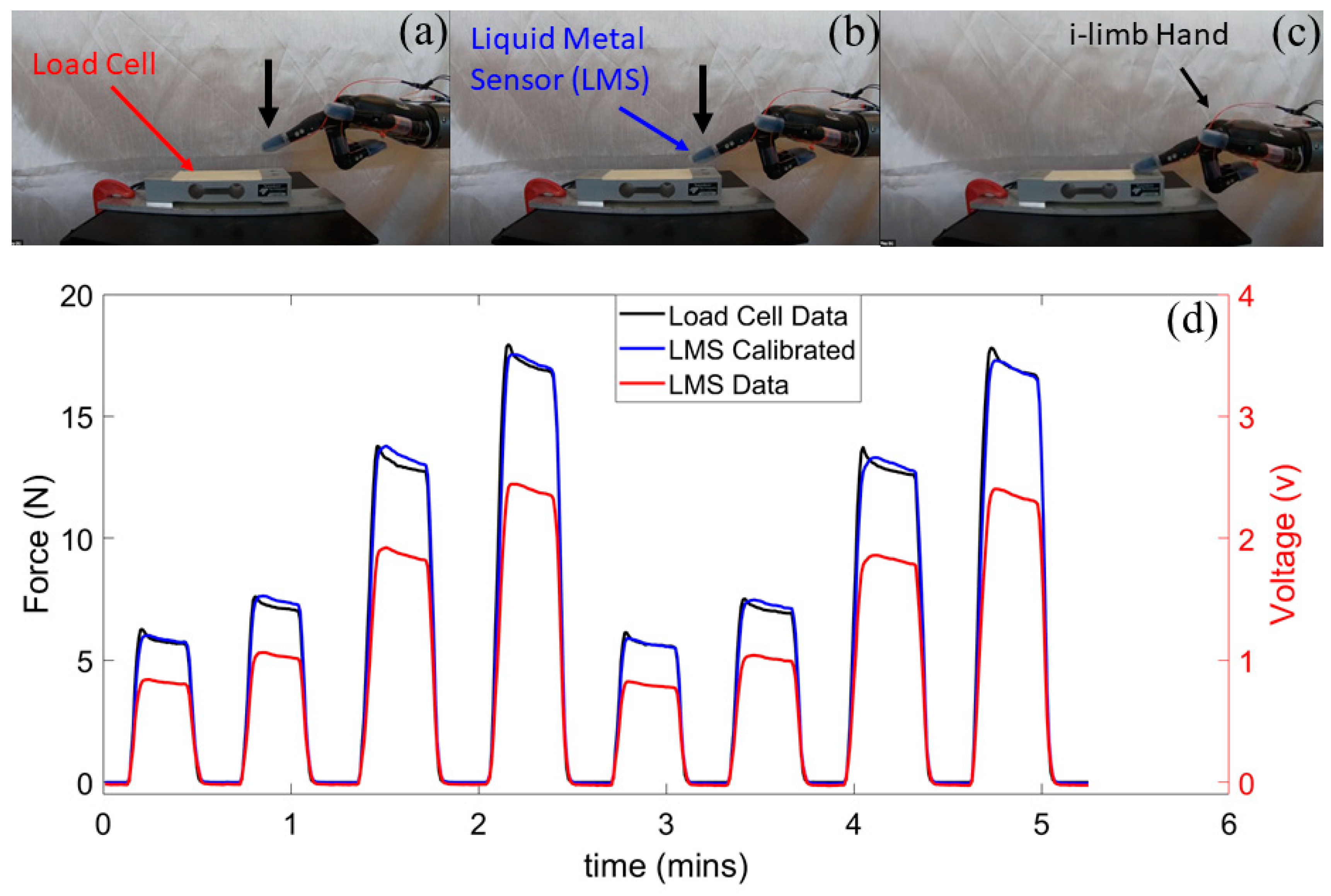

The LMS was then calibrated with an ESP-35 load cell (Transducer Technique, Temecula, CA, USA) (Figure 5a–c; Video S1). In the calibration process, the UR-10 arm pressed the i-limb prosthetic hand fingertips against the load cell to apply calibration forces consistently for each fingertip. The load cell and the LMS were connected to the same ROS network and streamed their data into Simulink. The MATLAB Curve Fitting app was used to create calibration equations relating the load cell data to the LMS data for each individual fingertip (Figure 5d and Figure S3).
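The per-fingertip calibration step can be sketched as a least-squares fit from the LMS signal to the load-cell force. The paper's calibration equations are not reproduced here (see Figure S3), so the quadratic model form, the coefficient values, and the noise-free synthetic data below are all assumptions made for illustration; this uses NumPy's `polyfit` rather than MATLAB's Curve Fitting app.

```python
import numpy as np


def fit_calibration(lms_signal, load_cell_force, degree=2):
    """Least-squares polynomial mapping LMS signal -> force (N).

    The polynomial degree is an assumption; one fit is made per fingertip.
    """
    return np.polyfit(lms_signal, load_cell_force, degree)


def lms_to_force(coeffs, lms_signal):
    """Apply a fitted calibration polynomial to new LMS readings."""
    return np.polyval(coeffs, lms_signal)


# Synthetic, noise-free stand-in for one fingertip's paired recordings.
signal = np.linspace(0.0, 2.0, 50)          # amplified LMS output (V)
true_coeffs = np.array([0.8, 1.5, 0.05])    # hypothetical F = 0.8s^2 + 1.5s + 0.05
force = np.polyval(true_coeffs, signal)     # "load cell" reference force (N)

coeffs = fit_calibration(signal, force)
print(np.round(coeffs, 3))
print(lms_to_force(coeffs, 1.0))
```

With real, noisy data the choice of model order would be guided by the fit residuals; fitting each fingertip separately accommodates differences between individually fabricated sensors.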

Figure 5.

LMS calibration process. (a–c) The UR-10 robotic arm was used to press the LMS on the fingertip of the i-limb against a load cell as an external reference to (d) calibrate the LMS. See also Figure S3.

2.8. Experiment Design

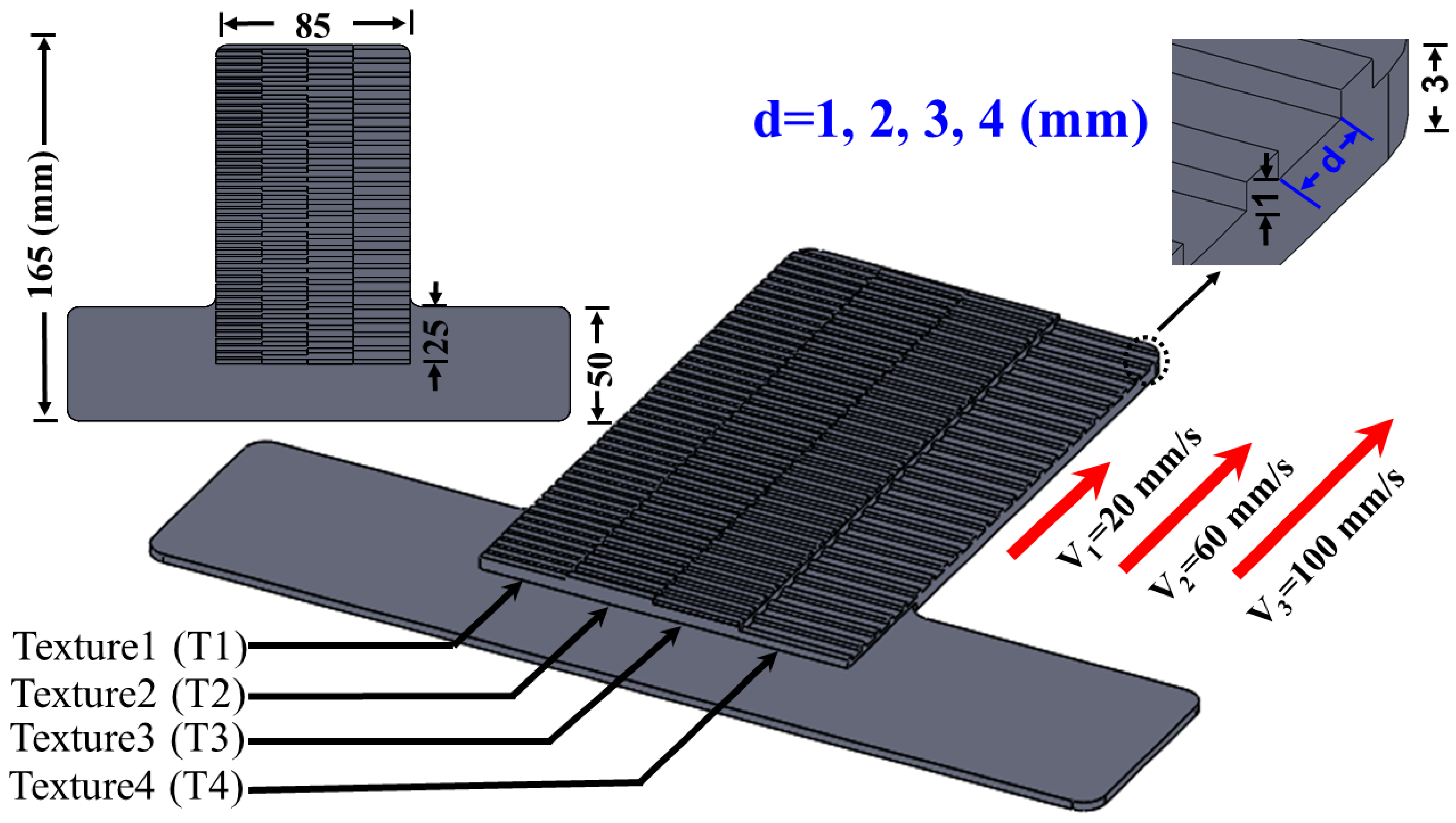

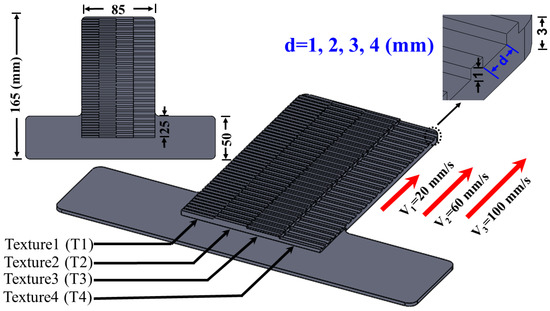

To create a well-controlled experiment, we designed four textures that differed in only one parameter: the distance between the ridges (d). Texture 1 (T1) has d = 1 mm, texture 2 (T2) has d = 2 mm, texture 3 (T3) has d = 3 mm, and texture 4 (T4) has d = 4 mm (Figure 6). These four textures were arranged in different combinations to form the surfaces across which the four LMSs on the prosthetic fingertips slid. Each surface was designed in SolidWorks and 3D printed in PLA on an Ultimaker 5. Because of the mechanical form factor of the prosthetic hand, each texture region was printed at a different height, depending on which finger was intended to contact it, to establish stable contact with every LMS.

Figure 6.

CAD model showing the four different texture dimensions and the three different sliding speeds. Units of mm.

2.8.1. Individual Fingertip Sensors to Detect Texture and Speed of Sliding Contact

The first goal of this paper was to use individual LMS signals to distinguish between different sliding speeds (20 mm/s, 60 mm/s, and 100 mm/s). The second goal was to use individual LMS signals to recognize four different textures (T1, T2, T3, T4; Figure 6). To these ends, four surfaces were 3D printed: ST1, ST2, ST3, and ST4. Surface ST1 had four copies of texture T1, one for each fingertip to contact simultaneously. Likewise, surfaces ST2, ST3, and ST4 each had four copies of textures T2, T3, and T4, respectively, for the fingertips to contact and slide across (Figure S4). We hypothesized that the sliding speed and the inter-ridge spacing (d, Figure 6) of the textures would affect the power spectral distribution of the LMS signals [3,4,17]. Textures and speeds were therefore detected by training machine learning algorithms on the spectral components of the LMS signals to distinguish between the time-frequency signatures specific to each texture and speed [4].
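A simple way to see why sliding speed and ridge spacing should shape the spectrum: if each ridge produces one disturbance as it passes under the fingertip, the fundamental excitation frequency is f = v/d. This first-order model is an illustrative assumption, not the authors' analysis; real signals also contain harmonics and contact-dynamics effects. The sketch tabulates f for the three speeds and four textures and checks it against the 1 kHz sampling rate from Section 2.6.

```python
SPEEDS_MM_S = (20, 60, 100)
RIDGE_SPACING_MM = {"T1": 1, "T2": 2, "T3": 3, "T4": 4}
SAMPLE_RATE_HZ = 1000  # Section 2.6


def ridge_frequency_hz(speed_mm_s: float, spacing_mm: float) -> float:
    """Rate at which ridges pass under the fingertip: f = v / d."""
    return speed_mm_s / spacing_mm


for texture, d in RIDGE_SPACING_MM.items():
    row = [ridge_frequency_hz(v, d) for v in SPEEDS_MM_S]
    print(texture, " ".join(f"{f:6.1f} Hz" for f in row))

highest = max(ridge_frequency_hz(v, d)
              for v in SPEEDS_MM_S for d in RIDGE_SPACING_MM.values())
assert highest < SAMPLE_RATE_HZ / 2  # fundamental stays below Nyquist
```

Under this model, faster sliding and narrower spacing both raise the fundamental, in line with the hypothesis above. Some speed-texture pairs share a fundamental (for example, T1 at 20 mm/s and T3 at 60 mm/s both give 20 Hz), so the fundamental alone would not separate them; the classifiers instead work from the full time-frequency power distribution.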

To achieve the first and second goals, 20 sliding trials were collected on each of the four surfaces (ST1–ST4) at each of the three sliding speeds (20 mm/s, 60 mm/s, and 100 mm/s). These 240 trials, each recorded by the four fingertip LMSs, produced 960 datasets for classification. The robotic arm was programmed to press the LMSs on the four prosthetic fingertips onto each surface. A momentary delay separated the initial contact from the subsequent sliding motion so that the machine learning algorithms were trained using only the portions of time containing the slip events (see also Video S1). The data from each finger were used to train and test four machine learning algorithms (KNN, SVM, RF, and NN) for two purposes: speed detection and texture detection. For each texture, the algorithms were trained to detect the three speeds; for each speed, the algorithms were trained to detect the four textures.
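The bookkeeping of this design can be sketched as an enumeration of records, one per sliding trial and fingertip, from which the two per-finger classification problems are selected. The finger labels F1 to F4 are hypothetical placeholders for the four instrumented fingertips, and the record layout is an assumption for illustration.

```python
from itertools import product

FINGERS = ("F1", "F2", "F3", "F4")      # hypothetical fingertip labels
SURFACES = ("ST1", "ST2", "ST3", "ST4")  # single-texture surfaces (T1-T4)
SPEEDS_MM_S = (20, 60, 100)
TRIALS = range(20)

# One record per (surface, speed, trial) sliding trial and per fingertip LMS.
records = [
    {"finger": f, "surface": s, "speed": v, "trial": t}
    for s, v, t in product(SURFACES, SPEEDS_MM_S, TRIALS)
    for f in FINGERS
]


def speed_task(finger, surface):
    """3-class speed problem for one finger on one single-texture surface."""
    return [r for r in records
            if r["finger"] == finger and r["surface"] == surface]


def texture_task(finger, speed):
    """4-class texture problem for one finger at one sliding speed."""
    return [r for r in records
            if r["finger"] == finger and r["speed"] == speed]


print(len(records))                    # 960
print(len(speed_task("F2", "ST3")))    # 60 samples, 3 speed classes
print(len(texture_task("F4", 20)))     # 80 samples, 4 texture classes
```

Each speed problem therefore has 20 samples per class and each texture problem likewise has 20 samples per class, before the training-testing split.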

2.8.2. Hierarchical Touch Sensation Integration to Detect Complex Multi-Textured Surfaces

The third goal of this paper was to use the LMS signals from the four fingertips of the prosthetic hand simultaneously to recognize complex surfaces comprised of multiple textures. The hierarchical approach relied first upon successful texture detection localized to individual fingertips. The textural information from the four fingertips was then integrated to produce a higher, hand-level state of knowledge about the spatial layout of the multi-textured surfaces. To achieve this third goal, ten multi-textured surfaces (S1–S10) were 3D printed using permutations of the four textures randomly generated with the MATLAB randperm function (Figure S5). An example of a complex surface comprised of four different textures is shown in Figure 7a–d. For each of the ten surfaces, 20 trials were collected to test the ability of the machine learning algorithms to distinguish between the ten surfaces. A MATLAB program used the texture detected at each of the four fingertips to predict the spatial layout of the contacted surface, and this prediction was compared against the known database of the ten multi-textured surfaces. The percentage of correct predictions served as the success rate metric quantifying the capability to distinguish between the multi-textured surfaces. The sliding speed for these experiments was 20 mm/s.
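The hand-level decision described above can be sketched in Python as a lookup from the four fingertip-level texture predictions to a database of surface layouts. This is a minimal illustration, not the authors' MATLAB program: the layouts are generated from an arbitrary seed (a stand-in for `randperm`), so they are not the actual surfaces S1–S10 of Figure S5, and treating a prediction that matches no stored surface as incorrect is an assumption.

```python
import itertools
import random

TEXTURES = ("T1", "T2", "T3", "T4")


def make_surface_database(n_surfaces=10, seed=0):
    """Pick distinct texture permutations, one texture per fingertip.

    Stand-in for the randperm-based design; the seed is arbitrary, so these
    layouts are hypothetical and NOT the actual S1-S10 of Figure S5.
    """
    all_layouts = list(itertools.permutations(TEXTURES))  # 4! = 24 options
    chosen = random.Random(seed).sample(all_layouts, n_surfaces)
    return {f"S{i + 1}": layout for i, layout in enumerate(chosen)}


def identify_surface(per_finger_textures, database):
    """Hand-level decision from four fingertip-level texture predictions.

    Returns the surface whose layout exactly matches the predicted textures,
    or None if nothing in the database matches (counted as incorrect).
    """
    lookup = {layout: name for name, layout in database.items()}
    return lookup.get(tuple(per_finger_textures))


def success_rate(predicted_layouts, true_names, database):
    """Percentage of trials whose identified surface is the true surface."""
    hits = sum(identify_surface(p, database) == n
               for p, n in zip(predicted_layouts, true_names))
    return 100.0 * hits / len(true_names)


db = make_surface_database()
name, layout = next(iter(db.items()))
print(name, layout, identify_surface(list(layout), db))
```

Because every surface uses each texture once, a single fingertip misclassification always produces a layout that differs from the true one, which is why the hand-level accuracy depends on reliable texture detection at every fingertip.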

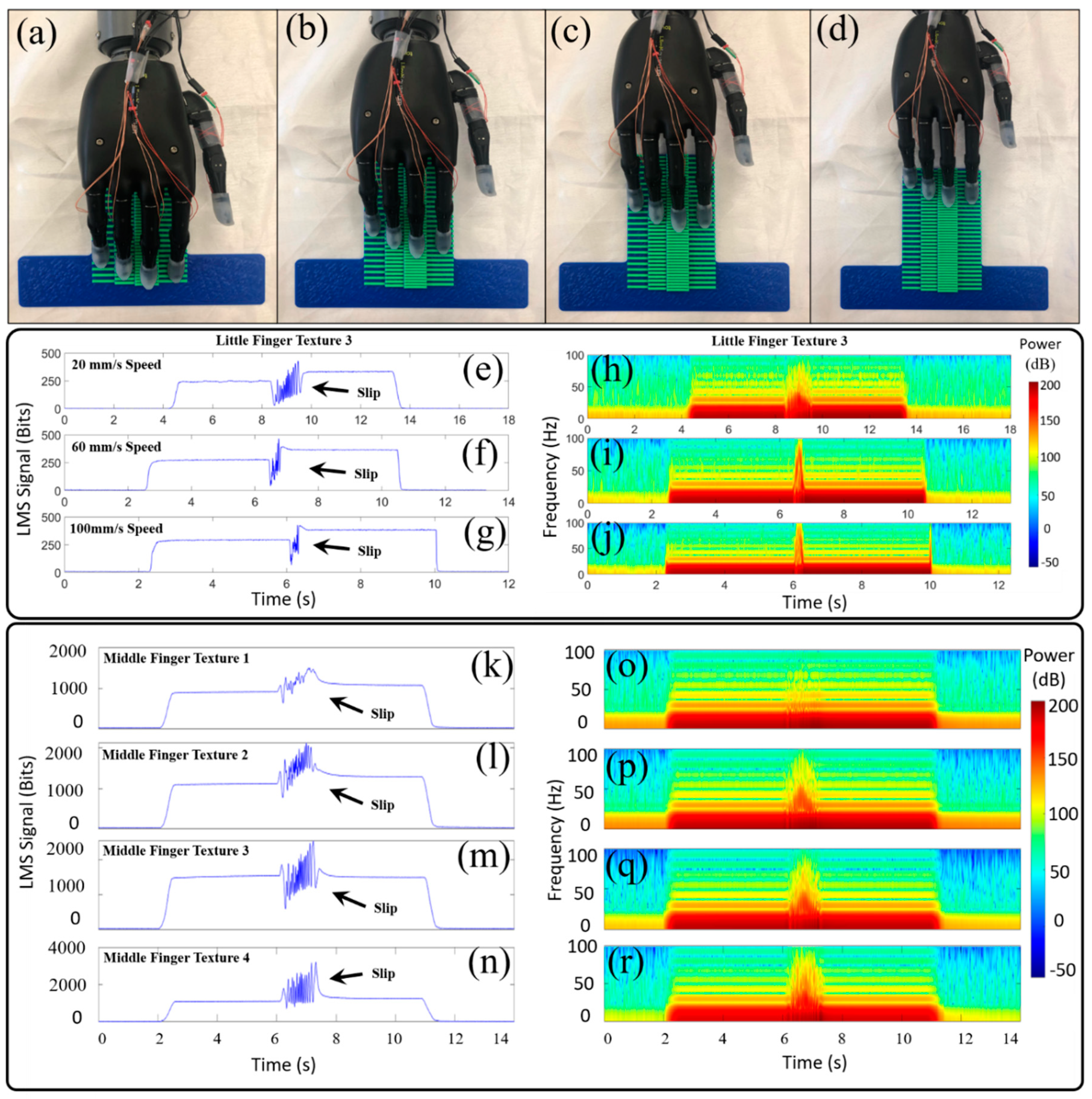

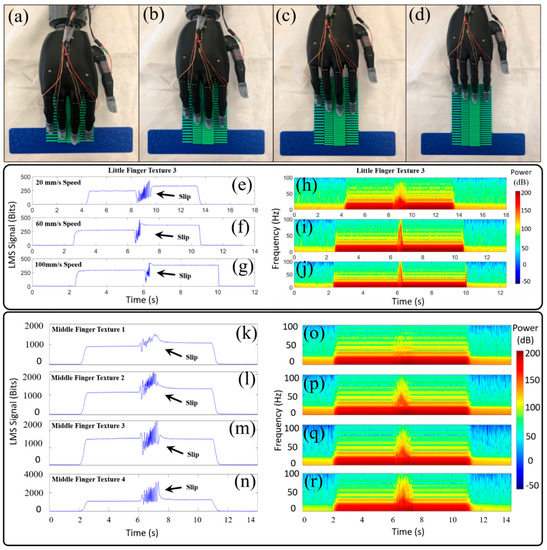

Figure 7.

(a–d) The prosthetic hand with four LMSs slid while in contact with the multi-textured surface. (e) Illustrative data from the little finger LMS showed different responses when sliding on texture 3 at 20 mm/s, (f) 60 mm/s, and (g) 100 mm/s. (h) Corresponding spectrograms showed increasing power concentrations in higher frequency bands as the sliding speed increased from 20 mm/s to (i) 60 mm/s and (j) 100 mm/s. (k) Representative time domain LMS signals from the middle finger showed different activation patterns as it slid at 20 mm/s on texture 1, (l) texture 2, (m) texture 3, and (n) texture 4. (o) Corresponding spectrogram features revealed different frequency-domain signatures specific to texture 1, (p) texture 2, (q) texture 3, and (r) texture 4.

2.9. Machine Learning Classification Approach

LMS time-domain data (Figure 7e–g,k–n) were trimmed and labeled. Time-frequency features (spectrograms) were extracted from the time-domain signals to train the machine learning classifiers in MATLAB (Figure 7h–j,o–r). The spectrograms were calculated using a 512-point FFT with a 0.08 s frame length (80 samples at the 1 kHz sampling rate) and a Hanning window with 90% overlap. The resulting time-frequency power distribution matrix was used to train the four machine learning algorithms.
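The feature extraction above can be sketched with NumPy as a short-time FFT using the stated parameters: 80-sample (0.08 s) Hann-windowed frames, 90% overlap (an 8-sample hop), and zero-padding to 512 FFT points. This is a re-implementation for illustration, not MATLAB's `spectrogram`; MATLAB's PSD scaling differs, and the per-frame mean removal is an assumption, so absolute power values will differ even though the spectral shape is comparable. The synthetic 50 Hz tone stands in for a trimmed LMS trial.

```python
import numpy as np


def lms_spectrogram(x, fs=1000.0, frame_s=0.08, overlap=0.9, nfft=512):
    """Power spectrogram via a short-time FFT.

    Frames of frame_s seconds (80 samples at 1 kHz) are mean-removed
    (an assumption), Hann-windowed, zero-padded to nfft points, and advanced
    by (1 - overlap) of a frame. Returns (freqs_hz, times_s, power) with
    power shaped (nfft // 2 + 1, n_frames).
    """
    x = np.asarray(x, dtype=float)
    nperseg = int(round(frame_s * fs))                  # 80 samples
    hop = max(1, int(round(nperseg * (1 - overlap))))   # 8 samples
    window = np.hanning(nperseg)
    n_frames = 1 + (len(x) - nperseg) // hop
    frames = np.stack([x[i * hop: i * hop + nperseg] for i in range(n_frames)])
    frames = (frames - frames.mean(axis=1, keepdims=True)) * window
    power = np.abs(np.fft.rfft(frames, n=nfft, axis=1)) ** 2
    freqs = np.fft.rfftfreq(nfft, d=1.0 / fs)
    times = (np.arange(n_frames) * hop + nperseg / 2) / fs  # frame centers
    return freqs, times, power.T


# Synthetic 1 s test tone standing in for a trimmed LMS trial.
fs = 1000.0
t = np.arange(int(fs)) / fs
x = np.sin(2 * np.pi * 50 * t)
freqs, times, power = lms_spectrogram(x, fs)
features = power.ravel()   # flattened time-frequency feature vector
print(power.shape, freqs[np.argmax(power.mean(axis=1))])
```

Zero-padding to 512 points gives a bin spacing of about 1.95 Hz, while the 80-sample frame sets the true frequency resolution (roughly 12.5 Hz); the 90% overlap yields a dense time axis for the classifier features.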

The SVM algorithm constructed a hyperplane separating the extracted features into different classes, in this case the surfaces that the LMS contacted [37]. The KNN algorithm calculated the distance between a query and every point in the extracted training features, then selected the k points closest to the query to vote for the most frequent class label [38]. The RF classifier was built with 500 trees [39]: an ensemble of decision trees, each trained separately, whose individual predictions are combined by voting. In general, adding trees reduces the variance of the ensemble prediction and makes it more robust, although the accuracy gains diminish as the forest grows. The Neural Net Pattern Recognition tool in MATLAB was used to create and train the NN and to evaluate its performance using cross-entropy and confusion matrices [40]. A two-layer feed-forward network with 100 sigmoid hidden neurons and softmax output neurons was used to classify the collected data. The network was trained with scaled conjugate gradient backpropagation.
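The KNN procedure described above is simple enough to sketch directly in NumPy. The value of k used in the paper is not stated, so k = 5 is an assumption, as are Euclidean distance and the tie-breaking rule; the two synthetic clusters stand in for flattened spectrogram features.

```python
import numpy as np


def knn_predict(train_x, train_y, query_x, k=5):
    """Classify each query by majority vote of its k nearest training points.

    Uses Euclidean distance; ties in the vote go to the smallest label.
    """
    train_x = np.asarray(train_x, dtype=float)
    train_y = np.asarray(train_y)
    query_x = np.atleast_2d(np.asarray(query_x, dtype=float))
    # Pairwise squared distances, shape (n_query, n_train).
    d2 = ((query_x[:, None, :] - train_x[None, :, :]) ** 2).sum(axis=2)
    nearest = np.argsort(d2, axis=1)[:, :k]
    preds = []
    for idx in nearest:
        labels, counts = np.unique(train_y[idx], return_counts=True)
        preds.append(labels[np.argmax(counts)])
    return np.array(preds)


# Two well-separated synthetic clusters standing in for feature vectors.
rng = np.random.default_rng(0)
x0 = rng.normal(0.0, 0.1, size=(20, 3))
x1 = rng.normal(1.0, 0.1, size=(20, 3))
train_x = np.vstack([x0, x1])
train_y = np.array([0] * 20 + [1] * 20)
print(knn_predict(train_x, train_y, [[0, 0, 0], [1, 1, 1]], k=5))
```

For readers working in Python rather than MATLAB, scikit-learn offers comparable models (`KNeighborsClassifier`, `SVC`, `RandomForestClassifier(n_estimators=500)`, and `MLPClassifier(hidden_layer_sizes=(100,), activation="logistic")`), although `MLPClassifier` has no scaled conjugate gradient solver, so its training would not match the MATLAB setup exactly.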

For the NN, the collected data were subdivided into three subsets: 70% for training, 15% for validation, and 15% for testing. The training set was used to train the network, with the network weights adjusted according to its error. The validation set was used to measure network generalization: training stopped automatically when generalization stopped improving, as indicated by an increase in the cross-entropy error on the validation samples. The testing set provided an independent measure of network performance after training.
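The data partitioning and validation-based stopping rule can be sketched as two small helpers. The random seed and the `patience` value (the number of consecutive epochs without validation improvement that triggers stopping) are hypothetical parameters; the sketch illustrates the rule described above rather than reproducing MATLAB's training loop.

```python
import numpy as np


def split_70_15_15(n_samples, seed=0):
    """Random disjoint train/validation/test index sets (70/15/15)."""
    idx = np.random.default_rng(seed).permutation(n_samples)
    n_train = int(round(0.70 * n_samples))
    n_val = int(round(0.15 * n_samples))
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


def early_stop_epoch(val_losses, patience=6):
    """Return (best_epoch, stop_epoch) for validation-based early stopping.

    Training stops once the validation cross-entropy has failed to improve
    on its best value for `patience` consecutive epochs (hypothetical
    default); the weights from best_epoch are the ones kept.
    """
    best_epoch, best_loss = 0, float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    return best_epoch, len(val_losses) - 1


train_idx, val_idx, test_idx = split_70_15_15(100)
print(len(train_idx), len(val_idx), len(test_idx))
print(early_stop_epoch([1.0, 0.8, 0.7, 0.75, 0.76, 0.8, 0.9], patience=3))
```

Keeping the test indices out of both training and the stopping decision is what makes the reported test accuracy an independent estimate.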

For the KNN, SVM, and RF algorithms, the extracted time-frequency power distribution features for slip were divided randomly into two parts: a training set comprising 80% of the data and a testing set comprising the remaining 20%. Because an overfit model performs poorly on data it has not seen, the feature data were shuffled randomly before each training-testing split so that accuracy was always measured on held-out samples that were not used for training. Each classifier model was run 10 times with a randomized selection of training and testing features, and the average classification accuracy was reported.
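The evaluation protocol (10 runs, each with a fresh random 80/20 split, averaged) can be sketched independently of the classifier. To keep the example self-contained, a nearest-centroid classifier is swapped in as a stand-in for KNN, SVM, or RF; it is not one of the paper's models, and the synthetic three-class data are illustrative only.

```python
import numpy as np


def nearest_centroid_fit_predict(train_x, train_y, test_x):
    """Stand-in classifier: assign each test sample to the closest class mean."""
    classes = np.unique(train_y)
    centroids = np.stack([train_x[train_y == c].mean(axis=0) for c in classes])
    d2 = ((test_x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[np.argmin(d2, axis=1)]


def repeated_holdout_accuracy(x, y, fit_predict, n_runs=10, test_frac=0.2, seed=0):
    """Mean and standard deviation of accuracy over random train/test splits."""
    rng = np.random.default_rng(seed)
    n_test = int(round(test_frac * len(y)))
    accs = []
    for _ in range(n_runs):
        idx = rng.permutation(len(y))          # fresh shuffle every run
        test, train = idx[:n_test], idx[n_test:]
        pred = fit_predict(x[train], y[train], x[test])
        accs.append(np.mean(pred == y[test]))
    return float(np.mean(accs)), float(np.std(accs))


# Synthetic three-class features standing in for spectrogram vectors.
rng = np.random.default_rng(1)
x = np.vstack([rng.normal(c, 0.05, size=(30, 4)) for c in (0.0, 1.0, 2.0)])
y = np.repeat([0, 1, 2], 30)
mean_acc, std_acc = repeated_holdout_accuracy(x, y, nearest_centroid_fit_predict)
print(f"accuracy: {100 * mean_acc:.1f} ± {100 * std_acc:.1f}%")
```

Passing the classifier in as a function keeps the split-and-average logic identical across the algorithms being compared.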

3. Results

3.1. Liquid Metal Sensors Sliding Across Different Textures

Sample data from the LMS on the little finger sliding across texture T3 at three different speeds showed different characteristics in the time domain (Figure 7e–g). The spectrograms of these experiments showed a higher concentration of power at higher frequencies as the speed of sliding contact increased (Figure 7h–j). Illustrative data from the LMS on the middle finger sliding across the four textures (T1–T4) at 20 mm/s showed noticeable differences in the sensor responses (Figure 7k–n). The spectrograms showed that decreasing the ridge spacing (d, Figure 6) resulted in increasing power in the high-frequency components of the LMS signal (Figure 7o–r). The machine learning classification algorithms exploited these characteristic signatures of sliding speed and texture.
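One way to quantify a shift of power toward higher frequencies is the spectral centroid, the power-weighted mean frequency of each spectrogram frame. This metric is an illustration, not one reported in the paper. The sketch below applies it to single 80-sample Hann-windowed synthetic tones with a 512-point FFT (the frame settings of Section 2.9); the 10 Hz and 50 Hz tones are hypothetical stand-ins for slower and faster ridge-passing rates.

```python
import numpy as np


def spectral_centroid_hz(power, freqs):
    """Power-weighted mean frequency of each spectrogram column (frame).

    power: array (n_freqs, n_frames); freqs: array (n_freqs,).
    """
    power = np.asarray(power, dtype=float)
    freqs = np.asarray(freqs, dtype=float)
    return (freqs[:, None] * power).sum(axis=0) / power.sum(axis=0)


# Illustrative single frames: 80-sample Hann-windowed tones, 512-point FFT.
fs = 1000.0
t = np.arange(80) / fs
freqs = np.fft.rfftfreq(512, d=1.0 / fs)


def tone_frame_power(f0_hz):
    """Power spectrum of one windowed synthetic tone, as a single column."""
    frame = np.hanning(80) * np.sin(2 * np.pi * f0_hz * t)
    return (np.abs(np.fft.rfft(frame, n=512)) ** 2)[:, None]


low = spectral_centroid_hz(tone_frame_power(10.0), freqs)[0]
high = spectral_centroid_hz(tone_frame_power(50.0), freqs)[0]
print(f"centroid for 10 Hz tone: {low:.1f} Hz, for 50 Hz tone: {high:.1f} Hz")
```

Applied frame by frame to real LMS spectrograms, a rising centroid would summarize the speed and texture trends in a single number, although the classifiers in this paper used the full power distribution rather than such a summary.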

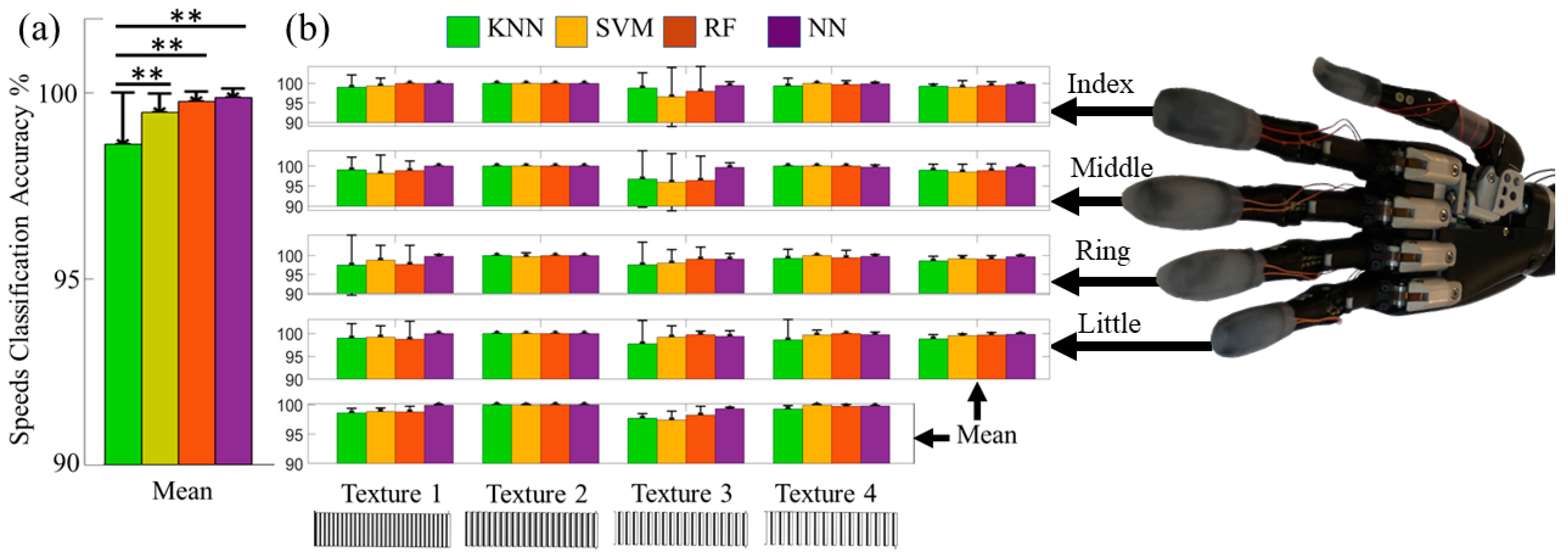

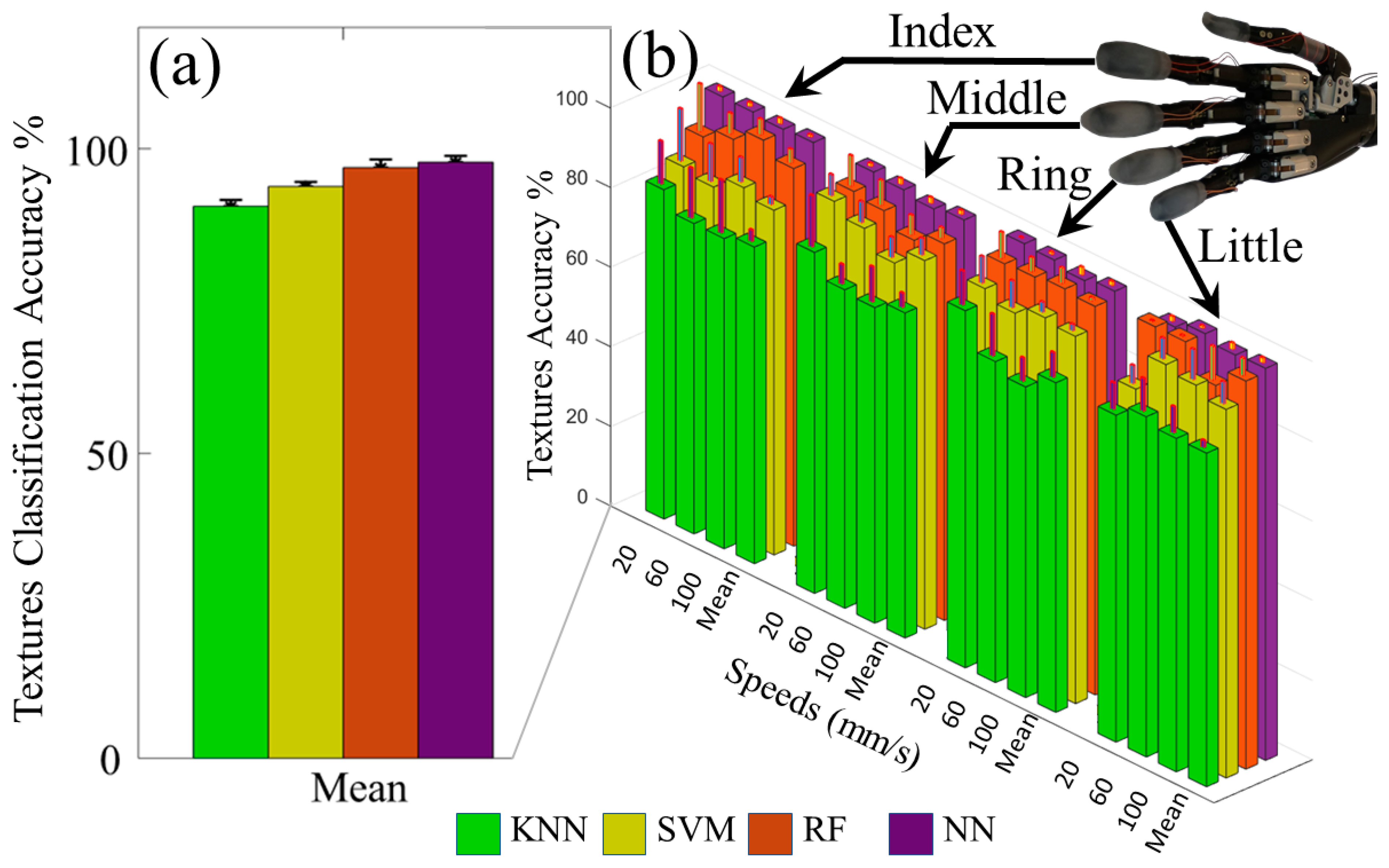

3.2. Detected Speed of Sliding Contact with Individual Fingertip Sensors

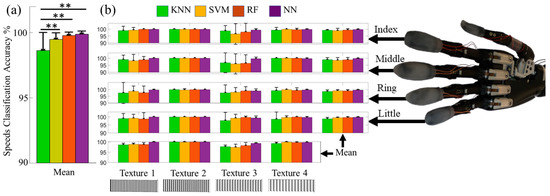

The machine learning algorithms were able to distinguish between all the speeds with each finger with high accuracy (Figure 8b). The overall means and standard deviations of the speed classification accuracies across all four fingers are shown in Table 1 and Figure 8a. The SVM, RF, and NN all had near-perfect accuracy (>99%) in detecting the different speeds of sliding contact on all four textures. A two-factor ANOVA indicated that the classification accuracy of the KNN was significantly different from that of the other algorithms (p < 0.01), whereas there were no significant differences among the RF, SVM, and NN (p > 0.05). The texture also significantly affected the accuracy of speed detection (p < 0.01), likely because the KNN, SVM, and RF generally had lower accuracies with textures T1 and T3 than with textures T2 and T4 (Figure 8b). However, there was no significant interaction between the classification algorithms and the textures (p > 0.05).
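The two-factor ANOVAs in this section split the variance in accuracy into a classifier effect, a texture (or speed, or surface) effect, and their interaction. A minimal sketch of a balanced two-way ANOVA with interaction follows. The replicate structure behind each cell (for example, fingers or repeated runs) is not stated in the text, so the `(a, b, n)` array layout is an assumption; at least two replicates per cell are needed, otherwise the error degrees of freedom are zero and the interaction cannot be tested.

```python
import numpy as np


def two_way_anova(data):
    """Balanced two-factor ANOVA with interaction.

    data: array shaped (a, b, n) = (levels of factor A, levels of factor B,
    replicates per cell), with n >= 2. Returns F statistics and their
    (numerator, denominator) degrees of freedom.
    """
    data = np.asarray(data, dtype=float)
    a, b, n = data.shape
    grand = data.mean()
    cell = data.mean(axis=2)               # (a, b) cell means
    mean_a = data.mean(axis=(1, 2))        # (a,) factor A level means
    mean_b = data.mean(axis=(0, 2))        # (b,) factor B level means

    ss_a = b * n * ((mean_a - grand) ** 2).sum()
    ss_b = a * n * ((mean_b - grand) ** 2).sum()
    inter = cell - mean_a[:, None] - mean_b[None, :] + grand
    ss_ab = n * (inter ** 2).sum()
    ss_err = ((data - cell[:, :, None]) ** 2).sum()

    df_a, df_b, df_ab, df_err = a - 1, b - 1, (a - 1) * (b - 1), a * b * (n - 1)
    ms_err = ss_err / df_err
    return {
        "F_A": (ss_a / df_a) / ms_err, "df_A": (df_a, df_err),
        "F_B": (ss_b / df_b) / ms_err, "df_B": (df_b, df_err),
        "F_AB": (ss_ab / df_ab) / ms_err, "df_AB": (df_ab, df_err),
    }


# Tiny worked example: 2 levels of A, 2 levels of B, 2 replicates per cell.
example = [[[1, 3], [3, 5]], [[5, 7], [7, 9]]]
print(two_way_anova(example))
```

Each p-value then follows from the upper tail of the F distribution with the listed degrees of freedom, for example with `scipy.stats.f.sf(F, df1, df2)`. In the worked example the factor effects are purely additive, so the interaction F statistic is zero.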

Figure 8.

(a) The mean classification accuracy results from all four fingers to distinguish between different sliding speeds were > 99% for the SVM, RF, and NN algorithms. (b) Individual finger classification accuracies to detect the speed of sliding contact on specific textures were > 95% in all cases. ** p-value < 0.01.

Table 1.

Mean classification accuracy for detected speed of sliding contact.

3.3. Distinguishing between Different Textures with Individual Fingertip Sensors

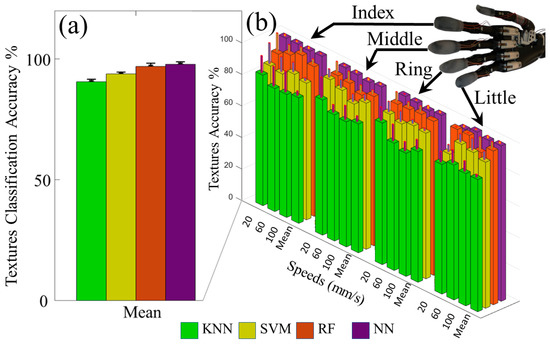

The LMSs were tested to distinguish between the four different textures. The mean texture classification accuracy of each classifier is shown in Table 2; the classifier with the highest overall accuracy was the NN, with 97.8 ± 1.0% (Figure 9a). The NN had the highest overall accuracy at the 20 mm/s and 60 mm/s speeds; however, the RF algorithm slightly outperformed the NN at the 100 mm/s slip speed, with a classification accuracy of 98.3 ± 1.7%. A two-factor ANOVA showed that the classification accuracies of all algorithms were significantly different from one another (p < 0.01). This is likely because accuracy increased consistently from the KNN to the SVM, RF, and NN (Table 2), a trend that held for each finger (Figure 9b, Figure S6). However, the speed of sliding contact had no statistically significant effect on texture detection accuracy (p > 0.05), and there was no significant interaction between the classification algorithms and the sliding speeds (p > 0.05).

Table 2.

Mean classification accuracy for detecting different textures with three different speeds using each finger individually.

Figure 9.

(a) Overall classification results to distinguish between different textures with three different speeds of sliding contact. (b) Classification accuracy for each finger to detect the correct texture with three different speeds of slip. (See also Figure S6).

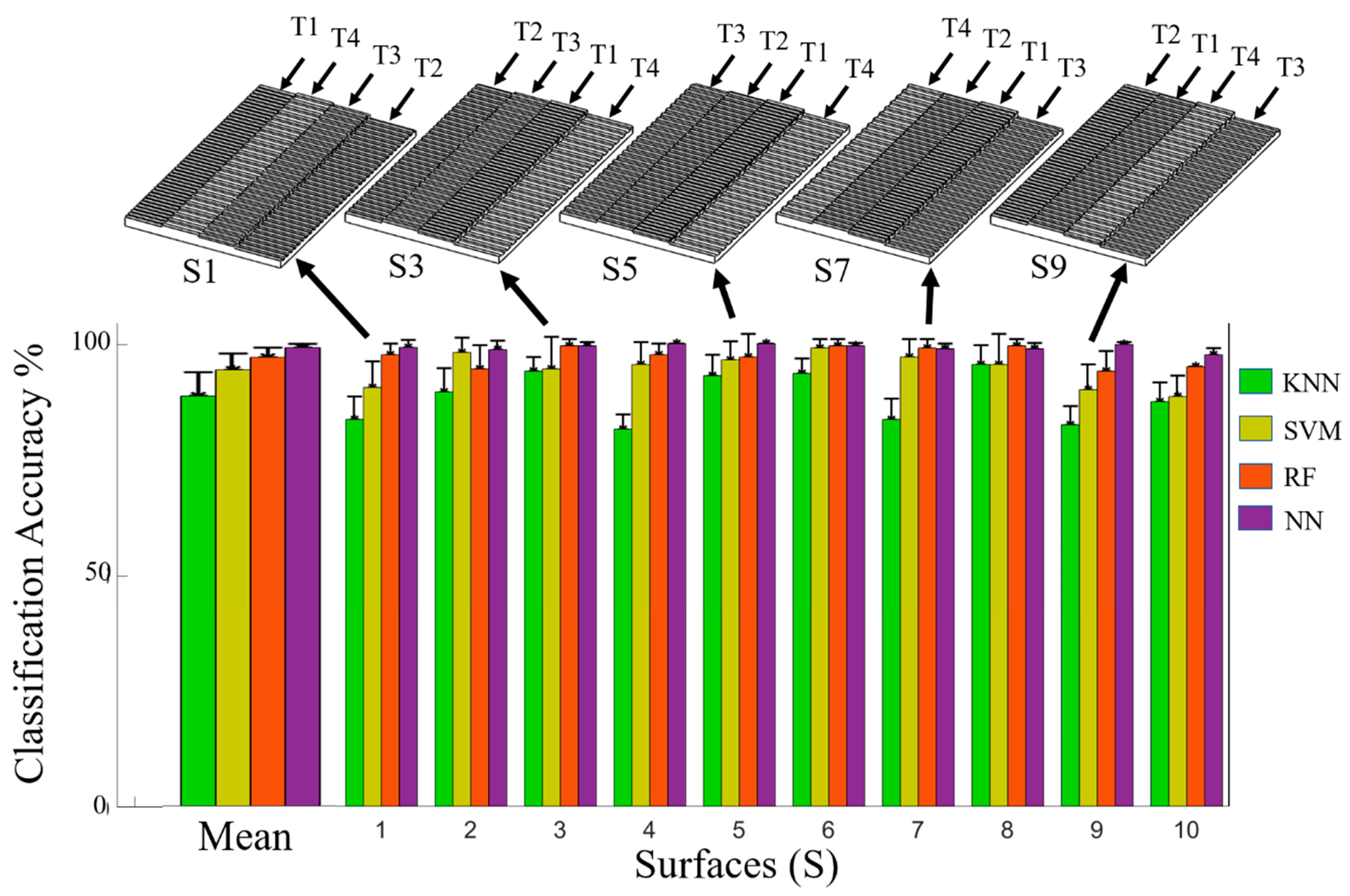

3.4. Hierarchical Tactile Sensation Integration to Distinguish between Complex Multi-Textured Surfaces

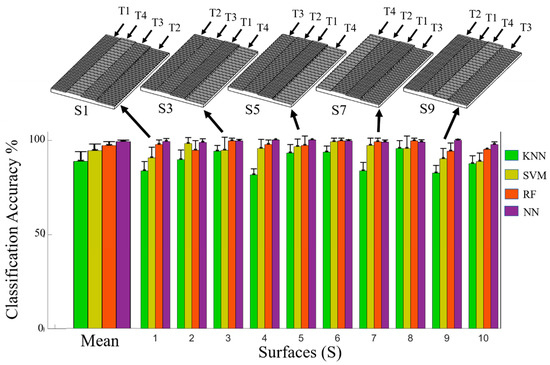

Ten surfaces comprised of multiple textures (Figure S5) were accurately classified using the LMSs from four prosthetic fingertips simultaneously. Five of the multi-textured surfaces are shown at the top of Figure 10, and another is shown in Figure 7a–d. The algorithm with the highest overall classification accuracy was again the NN, with 99.2 ± 0.7% (Figure 10, Table 3). The NN had the highest classification accuracy on all surfaces except S7 and S8, where the RF algorithm slightly outperformed it. The two-factor ANOVA indicated that the accuracies of all classification algorithms were significantly different (p < 0.01) and that the surfaces also significantly affected the classification accuracies (p < 0.01). Furthermore, there was a significant interaction between the classification algorithms and the multi-textured surfaces (p < 0.01). This interaction was likely caused by the RF outperforming the NN on surfaces S7 and S8 even though the RF had lower overall classification accuracy across all ten surfaces. Another contributor to the significant interaction is that the SVM had better classification accuracy than the RF on surface S2 but lower classification accuracy than the RF averaged across all surfaces.

Figure 10.

Classification results to detect 10 different complex multi-textured surfaces using four fingertip sensors simultaneously. Examples of five different multi-textured surfaces are shown on the top. On average, the NN had the highest classification accuracy for this new form of hierarchical tactile sensation integration.

Table 3.

Mean classification accuracies to detect different multi-textured surfaces via hierarchical tactile sensation integration from four fingertip sensors simultaneously.

4. Discussion

People exhibit hierarchical control, in which tactile sensations from multiple fingers are integrated by the central nervous system to synergistically manage dexterous object manipulation tasks [41]. This capability has recently been demonstrated for dexterous robotic hands to autonomously stabilize grasped objects [42]. People can also detect the speed of sliding contact with their fingertips and use this information to reliably control the slip of grasped objects [43]. Furthermore, people can discriminate between two different surface textures simultaneously using two different fingers [44]. Toward a more natural-feeling prosthetic hand interface, recent work has shown that people can distinguish between several surface textures using transcutaneous electrical nerve stimulation that conveys haptic feedback from a neuromorphic sensor array [25].

Building upon these prior accomplishments, our paper has shown that the LMSs can be reliably used to distinguish between four different textures and three different speeds of sliding contact. Furthermore, we have demonstrated the capacity for hierarchical tactile sensation integration from four fingertips simultaneously to detect differences between complex multi-textured surfaces. This demonstrated a hierarchy of single-finger (low-level) and whole-hand (high-level) perception, specifically in the form of individual textures (low-level) and complex surfaces comprised of multiple textures (high-level). In other words, tactile information from the individual fingertips provided the foundation for a higher, hand-level perception that distinguished between ten complex multi-textured surfaces, which would not have been possible using purely local information from a single fingertip. We believe that these tactile details could be useful in the future to afford a more realistic experience for prosthetic hand users through an advanced haptic display, which could help prevent prosthetic hand abandonment by enriching the amputee-prosthesis interface [45,46,47]. In a haptic display, where sensations of touch are conveyed from a prosthetic hand to an amputee via actuators or electrotactile stimulation [25], there are many possible approaches to mapping the tactile sensations from the robotic sensors to human sensations on a different part of the amputee’s residual limb. While the user could learn to interpret the artificial sensations of touch directly, this is not necessarily the optimal approach. Fundamental differences between robotic sensors/actuators and human mechanoreceptors [7] suggest that an intermediary classification step using artificial intelligence, such as the work in this paper, could improve human perception of the haptic display. This could be accomplished by mapping the artificial tactile sensations in a manner more conducive to human perception [5,25,48].

Beyond this, the highly successful texture, speed, and surface classification results were obtained without any explicit force feedback loop. The differences illustrated between the LMS signal amplitudes of the little finger (Figure 7e–g) and the middle finger (Figure 7k–n) had no significant impact on the overall classification success rates of each finger (Figures 8 and 9 and Figure S6). Therefore, this approach to speed, texture, and multi-textured surface recognition does not require a force feedback loop, which could be quite beneficial in the highly unstructured tasks of daily life, where amputees may seek to explore surface textures without the cognitive burden of accurately controlling the force of each fingertip of a prosthetic hand.

The comparison of the four machine learning algorithms showed that the NN generally had the highest overall classification accuracy. However, when a single finger was used to detect the speed of sliding contact, there were no significant differences between the SVM, RF, and NN algorithms. Furthermore, significant interactions were observed between the accuracies of the algorithms and the complex multi-textured surfaces when demonstrating hand-level intelligence via hierarchical tactile sensation integration. In some cases, the RF slightly outperformed the NN, and no single algorithm performed uniformly across all cases. These nuanced results illustrate the importance of considering many operational factors before choosing an algorithm for real-time control, including computational expense, the potential for increased classification accuracy, and the intended application, whether prosthetic hands or, more broadly, fully autonomous manipulators.

5. Conclusions

Stretchable tactile sensors using liquid metal were designed and manufactured for the fingertips of a prosthetic hand, and three novel contributions were made with them. First, individual fingers reliably distinguished between different speeds of sliding contact. Second, individual fingers reliably distinguished between different textures. Third, we demonstrated the capability for hierarchical tactile sensation integration from four fingertips simultaneously to distinguish between ten complex multi-textured surfaces. The NN produced the highest classification accuracy for the multi-textured surfaces, 99.2 ± 0.8%. Given the compliant, lightweight nature of the LMSs and their high classification accuracy, this paper has demonstrated the feasibility of applying them to robotic hands. Hierarchical tactile sensation integration from multiple fingers is a trait exhibited by people and could be a useful technique for a haptic display to improve prosthetic hand functionality or to augment the intelligence of autonomous manipulators.

Supplementary Materials

The following are available online at https://www.mdpi.com/article/10.3390/s21134324/s1, Figure S1: Sensing principle of the LMS, Figure S2: Electronics for the LMS, Figure S3: Calibration of the LMS, Figure S4: Single-texture surfaces used to detect speed of sliding contact and textures, Figure S5: Multi-textured surfaces used to demonstrate hierarchical tactile sensation integration, Figure S6: Texture classification accuracy for each finger with three different speeds of sliding contact, Video S1: Fabrication and Evaluation of the LMS.

Author Contributions

Conceptualization, M.A.A. and E.D.E.; methodology, M.A.A., R.P., M.L., and E.D.E.; software, M.A.A.; validation, M.A.A. and E.D.E.; formal analysis, M.A.A. and E.D.E.; investigation, M.A.A. and E.D.E.; resources, O.B., L.L. and E.D.E.; data curation, M.A.A., R.P., and M.L.; writing—original draft preparation, M.A.A. and E.D.E.; writing—review and editing, M.A.A., R.P., M.L., A.A., O.B., L.L., and E.D.E.; visualization, M.A.A., R.P., M.L., A.A., O.B., L.L., and E.D.E.; supervision, E.D.E.; project administration, A.A., O.B., L.L., and E.D.E.; funding acquisition, A.A., O.B., L.L., and E.D.E. All authors have read and agreed to the published version of the manuscript.

Funding

Research reported in this publication was supported by the National Institute of Biomedical Imaging and Bioengineering of the National Institutes of Health under Award Number R01EB025819. This research was also supported by the National Institute on Aging under 3R01EB025819-04S1, National Science Foundation awards #1317952, #1536136, and #1659484, Department of Energy contract TOA#0000403076, and pilot grants from Florida Atlantic University’s Brain Institute and I-SENSE. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health, the National Science Foundation, or the Department of Energy.

Data Availability Statement

The data presented in this paper will be made available upon reasonable request.

Acknowledgments

The authors thank K. Mondal, M. Al-Saidi, and G. Liddle for their assistance with the sensor fabrication in the early stages of this work [4].

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Ziegler-Graham, K.; MacKenzie, E.J.; Ephraim, P.L.; Travison, T.G.; Brookmeyer, R. Estimating the prevalence of limb loss in the United States: 2005 to 2050. Arch. Phys. Med. Rehabil. 2008, 89, 422–429.

2. Belter, J.T.; Segil, J.L.; Dollar, A.M.; Weir, R.F. Mechanical design and performance specifications of anthropomorphic prosthetic hands: A review. J. Rehabil. Res. Dev. 2013, 50, 599.

3. Engeberg, E.D.; Meek, S.G. Adaptive sliding mode control for prosthetic hands to simultaneously prevent slip and minimize deformation of grasped objects. IEEE/ASME Trans. Mechatron. 2011, 18, 376–385.

4. Abd, M.A.; Al-Saidi, M.; Lin, M.; Liddle, G.; Mondal, K.; Engeberg, E.D. Surface Feature Recognition and Grasped Object Slip Prevention With a Liquid Metal Tactile Sensor for a Prosthetic Hand. In Proceedings of the 2020 8th IEEE RAS/EMBS International Conference for Biomedical Robotics and Biomechatronics (BioRob), New York, NY, USA, 29 November–1 December 2020; pp. 1174–1179.

5. Johansson, R.S.; Flanagan, J.R. Coding and use of tactile signals from the fingertips in object manipulation tasks. Nat. Rev. Neurosci. 2009, 10, 345–359.

6. Liao, X.; Wang, W.; Lin, M.; Li, M.; Wu, H.; Zheng, Y. Hierarchically distributed microstructure design of haptic sensors for personalized fingertip mechanosensational manipulation. Mater. Horiz. 2018, 5, 920–931.

7. Dahiya, R.S.; Metta, G.; Valle, M.; Sandini, G. Tactile sensing—From humans to humanoids. IEEE Trans. Rob. 2009, 26, 1–20.

8. Wisitsoraat, A.; Patthanasetakul, V.; Lomas, T.; Tuantranont, A. Low cost thin film based piezoresistive MEMS tactile sensor. Sens. Actuators A Phys. 2007, 139, 17–22.

9. Xu, Y.; Hu, X.; Kundu, S.; Nag, A.; Afsarimanesh, N.; Sapra, S.; Mukhopadhyay, S.C.; Han, T. Silicon-Based Sensors for Biomedical Applications: A Review. Sensors 2019, 19, 2908.

10. Krassow, H.; Campabadal, F.; Lora-Tamayo, E. Photolithographic packaging of silicon pressure sensors. Sens. Actuators A Phys. 1998, 66, 279–283.

11. Pritchard, E.; Mahfouz, M.; Evans, B.; Eliza, S.; Haider, M.R. Flexible capacitive sensors for high resolution pressure measurement. In Proceedings of the 2008 IEEE Sensors, Institute of Electrical and Electronics Engineers (IEEE), Lecce, Italy, 26–29 October 2008; pp. 1484–1487.

12. Oh, J.H.; Woo, J.Y.; Jo, S.; Han, C.-S. Pressure-conductive rubber sensor based on liquid-metal-PDMS composite. Sens. Actuators A Phys. 2019, 299, 111610.

13. Liang, Z.; Cheng, J.; Zhao, Q.; Zhao, X.; Han, Z.; Chen, Y.; Ma, Y.; Feng, X. High-Performance Flexible Tactile Sensor Enabling Intelligent Haptic Perception for a Soft Prosthetic Hand. Adv. Mater. Technol. 2019, 4, 1900317.

14. Zou, L.; Ge, C.; Wang, Z.J.; Cretu, E.; Li, X. Novel tactile sensor technology and smart tactile sensing systems: A review. Sensors 2017, 17, 2653.

15. Barbee, M.H.; Mondal, K.; Deng, J.Z.; Bharambe, V.; Neumann, T.V.; Adams, J.J.; Boechler, N.; Dickey, M.D.; Craig, S.L. Mechanochromic Stretchable Electronics. ACS Appl. Mater. Interfaces 2018, 10, 29918–29924.

16. Park, Y.-L.; Chen, B.-R.; Wood, R.J. Design and fabrication of soft artificial skin using embedded microchannels and liquid conductors. IEEE Sens. J. 2012, 12, 2711–2718.

17. Yin, J.; Aspinall, P.; Santos, V.J.; Posner, J.D. Measuring dynamic shear force and vibration with a bioinspired tactile sensor skin. IEEE Sens. J. 2018, 18, 3544–3553.

18. Fishel, J.A.; Loeb, G.E. Bayesian exploration for intelligent identification of textures. Front. Neurorob. 2012, 6, 4.

19. Abd, M.A.; Gonzalez, I.J.; Colestock, T.C.; Kent, B.A.; Engeberg, E.D. Direction of slip detection for adaptive grasp force control with a dexterous robotic hand. In Proceedings of the 2018 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM), Auckland, New Zealand, 9–12 July 2018; pp. 21–27.

20. Kaboli, M.; Cheng, G. Robust tactile descriptors for discriminating objects from textural properties via artificial robotic skin. IEEE Trans. Rob. 2018, 34, 985–1003.

21. Oddo, C.M.; Controzzi, M.; Beccai, L.; Cipriani, C.; Carrozza, M.C. Roughness encoding for discrimination of surfaces in artificial active-touch. IEEE Trans. Rob. 2011, 27, 522–533.

22. Zhang, B.; Wang, B.; Li, Y.; Huang, W.; Li, Y. Magnetostrictive Tactile Sensor Array for Object Recognition. IEEE Trans. Magn. 2019, 55, 1–7.

23. Drimus, A.; Petersen, M.B.; Bilberg, A. Object texture recognition by dynamic tactile sensing using active exploration. In Proceedings of the 2012 IEEE RO-MAN: The 21st IEEE International Symposium on Robot and Human Interactive Communication, Paris, France, 9–13 September 2012; pp. 277–283.

24. Kappassov, Z.; Corrales, J.-A.; Perdereau, V. Tactile sensing in dexterous robot hands. Rob. Auton. Syst. 2015, 74, 195–220.

25. Sankar, S.; Balamurugan, D.; Brown, A.; Ding, K.; Xu, X.; Low, J.H.; Yeow, C.H.; Thakor, N. Texture Discrimination with a Soft Biomimetic Finger Using a Flexible Neuromorphic Tactile Sensor Array That Provides Sensory Feedback. Soft Rob. 2020.

26. Speeter, T.H. Three-dimensional finite element analysis of elastic continua for tactile sensing. Int. J. Robot. Res. 1992, 11, 1–19.

27. Liang, G.; Mei, D.; Wang, Y.; Chen, Z. Modeling and analysis of a flexible capacitive tactile sensor array for normal force measurement. IEEE Sens. J. 2014, 14, 4095–4103.

28. Liang, G.; Wang, Y.; Mei, D.; Xi, K.; Chen, Z. An analytical model for studying the structural effects and optimization of a capacitive tactile sensor array. J. Micromech. Microeng. 2016, 26, 045007.

29. Zhang, Y.; Duan, X.-G.; Zhong, G.; Deng, H. Initial slip detection and its application in biomimetic robotic hands. IEEE Sens. J. 2016, 16, 7073–7080.

30. Shao, F.; Childs, T.H.; Barnes, C.J.; Henson, B. Finite element simulations of static and sliding contact between a human fingertip and textured surfaces. Tribol. Int. 2010, 43, 2308–2316.

31. Wang, Y.; Wu, X.; Mei, D.; Zhu, L.; Chen, J. Flexible tactile sensor array for distributed tactile sensing and slip detection in robotic hand grasping. Sens. Actuators A Phys. 2019, 297, 111512.

32. Kerr, E.; McGinnity, T.M.; Coleman, S. Material classification based on thermal and surface texture properties evaluated against human performance. In Proceedings of the 2014 13th International Conference on Control Automation Robotics & Vision (ICARCV), Singapore, 10–12 December 2014; pp. 444–449.

33. Araki, T.; Makikawa, M.; Hirai, S. Experimental investigation of surface identification ability of a low-profile fabric tactile sensor. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura, Portugal, 7–12 October 2012; pp. 4497–4504.

34. Qin, L.; Yi, Z.; Zhang, Y. Unsupervised surface roughness discrimination based on bio-inspired artificial fingertip. Sens. Actuators A Phys. 2018, 269, 483–490.

35. Strese, M.; Schuwerk, C.; Iepure, A.; Steinbach, E. Multimodal feature-based surface material classification. IEEE Trans. Haptics 2016, 10, 226–239.

36. Chun, S.; Hwang, I.; Son, W.; Chang, J.-H.; Park, W. Recognition, classification, and prediction of the tactile sense. Nanoscale 2018, 10, 10545–10553.

37. Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

38. Fix, E. Discriminatory Analysis: Nonparametric Discrimination, Consistency Properties; USAF School of Aviation Medicine: Dayton, OH, USA, 1985; Volume 1.

39. Ho, T.K. Random decision forests. In Proceedings of the 3rd International Conference on Document Analysis and Recognition, Montreal, QC, Canada, 14–16 August 1995; pp. 278–282.

40. Hopfield, J.J. Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. USA 1982, 79, 2554–2558.

41. Koh, K.; Kwon, H.J.; Yoon, B.C.; Cho, Y.; Shin, J.-H.; Hahn, J.-O.; Miller, R.H.; Kim, Y.H.; Shim, J.K. The role of tactile sensation in online and offline hierarchical control of multi-finger force synergy. Exp. Brain Res. 2015, 233, 2539–2548.

42. Veiga, F.; Edin, B.; Peters, J. Grip stabilization through independent finger tactile feedback control. Sensors 2020, 20, 1748.

43. Damian, D.D.; Arita, A.H.; Martinez, H.; Pfeifer, R. Slip speed feedback for grip force control. IEEE Trans. Biomed. Eng. 2012, 59, 2200–2210.

44. Rekik, Y.; Vezzoli, E.; Grisoni, L. Understanding users’ perception of simultaneous tactile textures. In Proceedings of the 19th International Conference on Human-Computer Interaction with Mobile Devices and Services, Vienna, Austria, 4–7 September 2017; pp. 1–6.

45. Østlie, K.; Lesjø, I.M.; Franklin, R.J.; Garfelt, B.; Skjeldal, O.H.; Magnus, P. Prosthesis rejection in acquired major upper-limb amputees: A population-based survey. Disabil. Rehabil. Assist. Technol. 2012, 7, 294–303.

46. Resnik, L.; Ekerholm, S.; Borgia, M.; Clark, M.A. A national study of Veterans with major upper limb amputation: Survey methods, participants, and summary findings. PLoS ONE 2019, 14, e0213578.

47. Carey, S.L.; Lura, D.J.; Highsmith, M.J. Differences in myoelectric and body-powered upper-limb prostheses: Systematic literature review. J. Rehabil. Res. Dev. 2015, 52.

48. Osborn, L.; Kaliki, R.R.; Soares, A.B.; Thakor, N.V. Neuromimetic event-based detection for closed-loop tactile feedback control of upper limb prostheses. IEEE Trans. Haptics 2016, 9, 196–206.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).