Multimodal Classification of Parkinson’s Disease in Home Environments with Resiliency to Missing Modalities

Abstract

1. Introduction

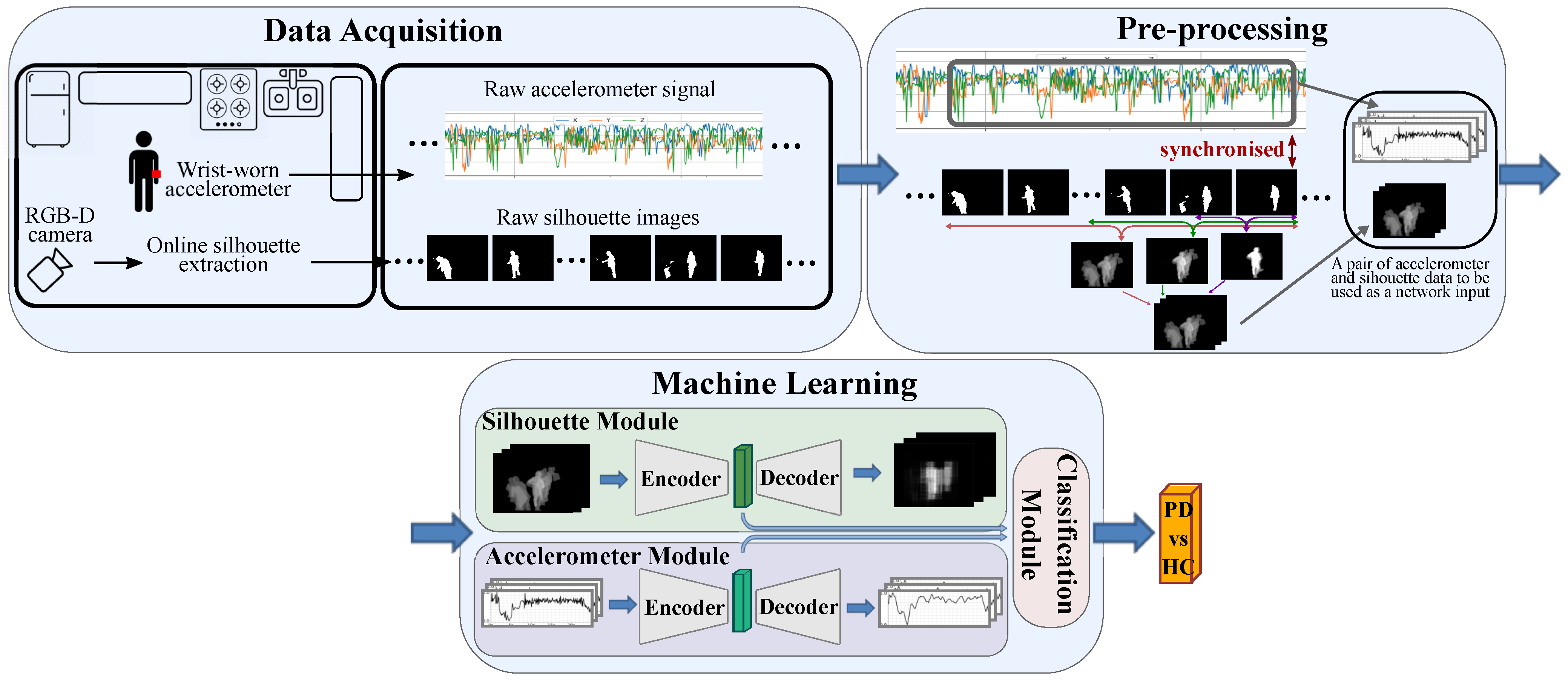

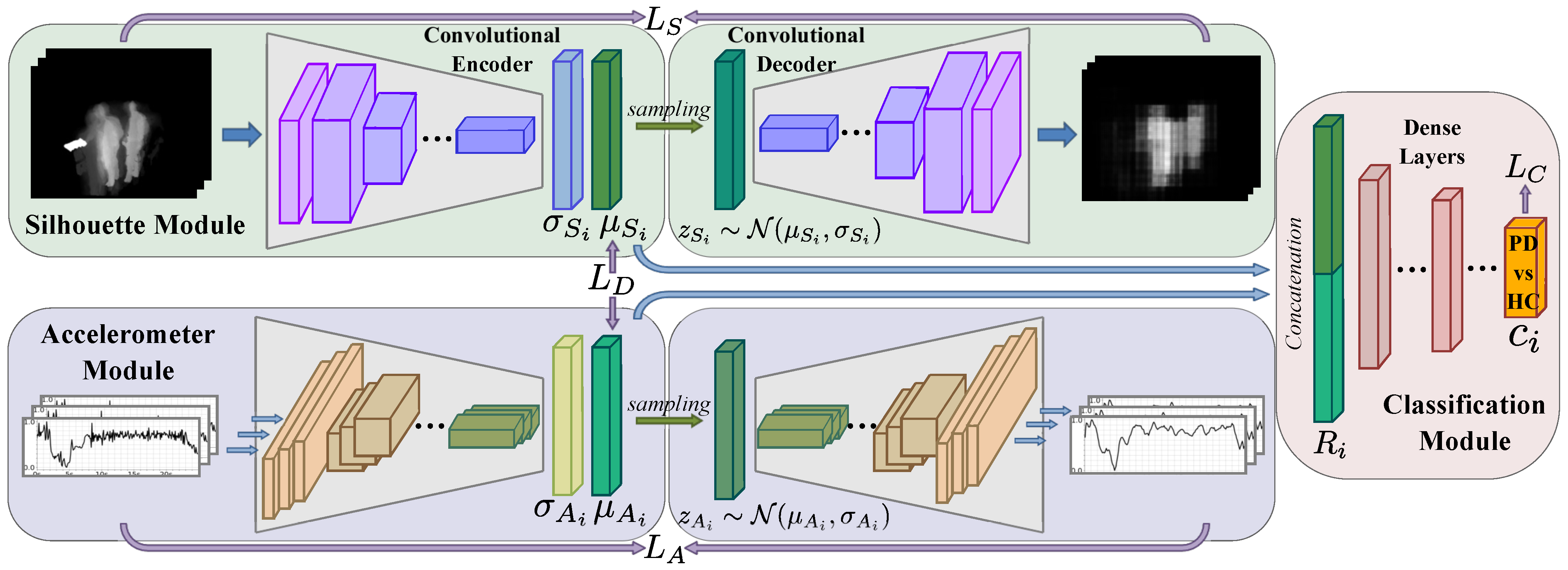

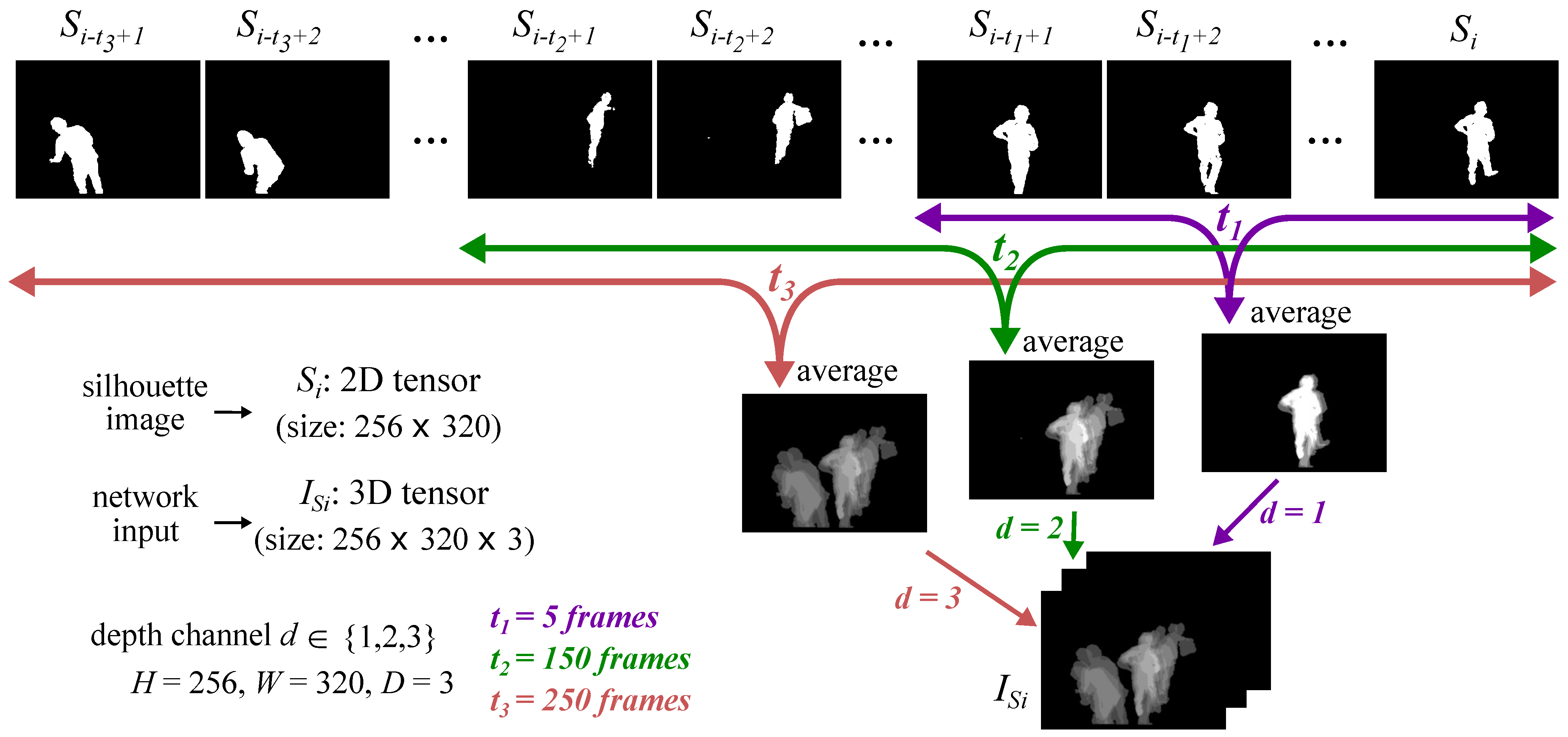

- We propose MCPD-Net, a multimodal deep learning model that jointly learns representations from silhouette and accelerometer data.

- We introduce a loss function that allows our model to handle missing modalities.

- We quantitatively and qualitatively demonstrate the effectiveness of our model when modalities are missing, which is a common occurrence in real deployments, for example due to cost or privacy constraints.

- We evaluate our proposed model on a data set that includes subjects with and without PD, empirically demonstrating its ability to predict whether a subject has Parkinson’s disease from a common activity of daily living.
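The loss used to handle missing modalities is not spelled out in this part of the text, so the following is only a minimal sketch of one common idea, not the authors' formulation: encode each modality into a shared latent space, penalise the distance between the two latents during training, and at inference fuse whichever latents are available. The linear encoders, feature sizes, and the names `encode`, `fuse`, and `alignment_loss` are all hypothetical.

```python
import numpy as np

def encode(x, W):
    """Hypothetical linear encoder: maps feature vectors to latent vectors."""
    return x @ W

def fuse(latents):
    """Average the latents of the modalities that are present (None = missing)."""
    present = [z for z in latents if z is not None]
    if not present:
        raise ValueError("at least one modality is required")
    return np.mean(present, axis=0)

def alignment_loss(z_sil, z_acl):
    """Mean squared distance between the two modality latents (assumed form)."""
    return float(np.mean((z_sil - z_acl) ** 2))

rng = np.random.default_rng(0)
x_sil = rng.normal(size=(4, 16))  # made-up silhouette features, 4 samples
x_acl = rng.normal(size=(4, 6))   # made-up accelerometer features, 4 samples
W_sil = rng.normal(size=(16, 8))  # hypothetical encoder weights
W_acl = rng.normal(size=(6, 8))

z_sil = encode(x_sil, W_sil)
z_acl = encode(x_acl, W_acl)

z_both = fuse([z_sil, z_acl])      # both modalities available
z_acl_only = fuse([None, z_acl])   # silhouette missing
loss = alignment_loss(z_sil, z_acl)
```

The intuition behind this kind of term is that, if the two latents are pulled close together during training, a classifier placed on the fused latent sees a similar input whether one or both modalities are present.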

2. Related Works

3. Materials and Methods

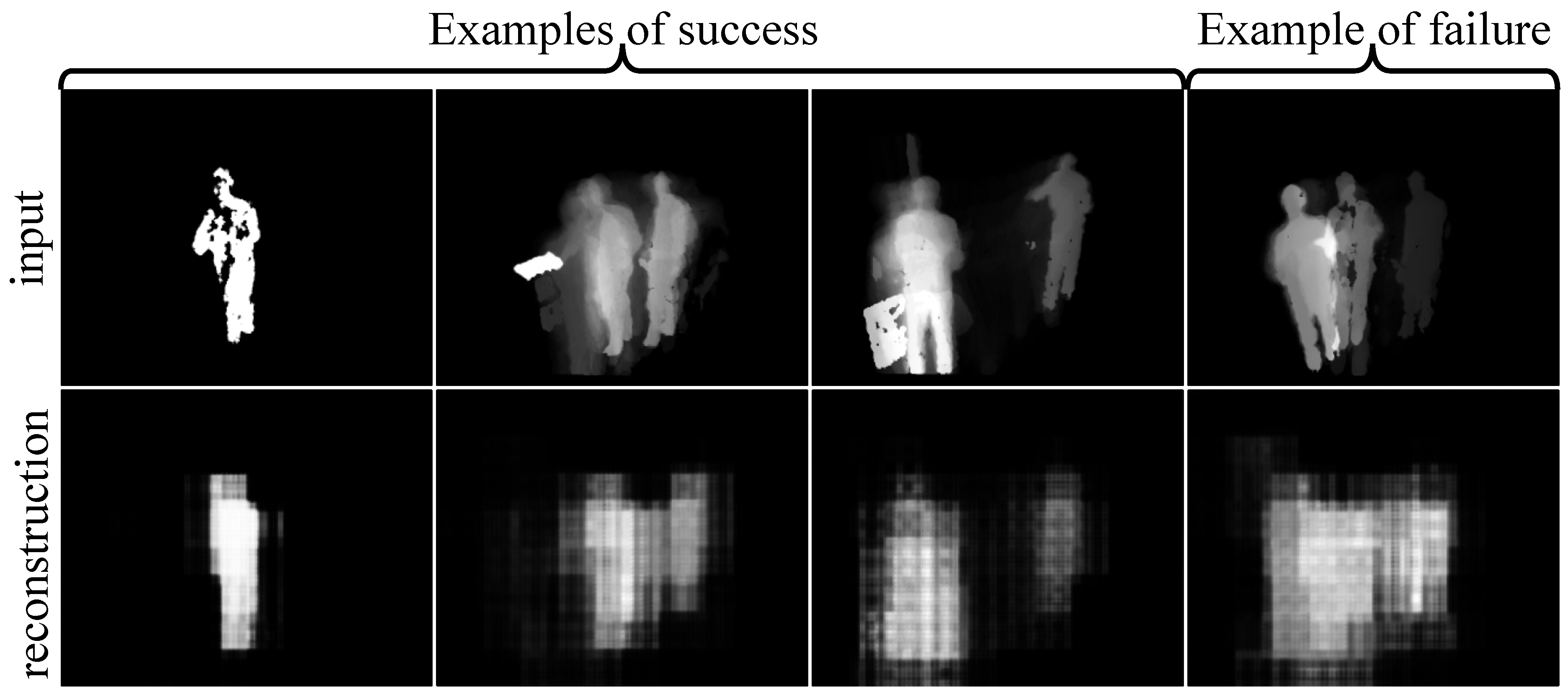

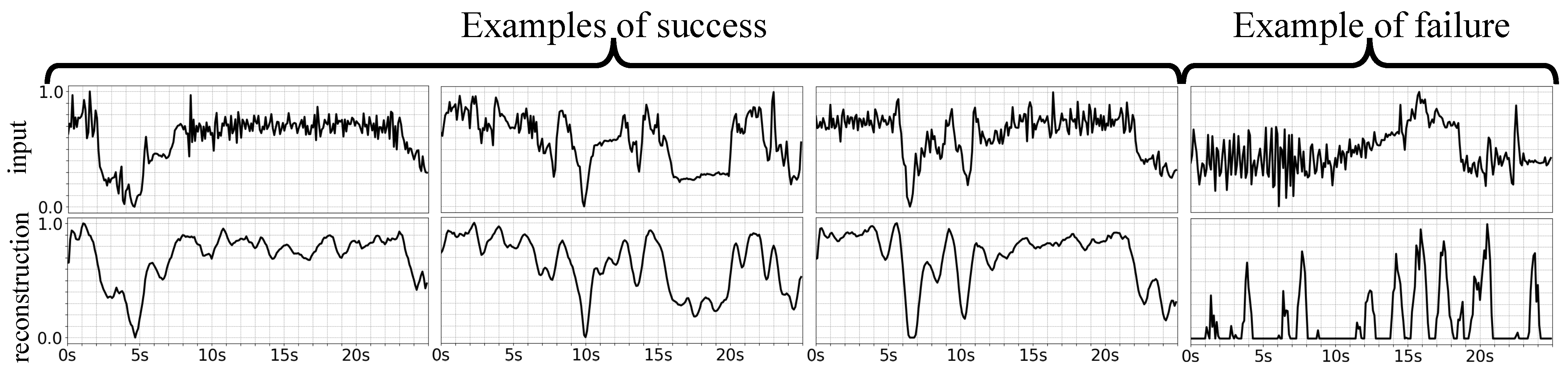

4. Results

4.1. Data Set

4.2. Implementation Details

4.3. Experimental Results

4.4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Jankovic, J. Parkinson’s disease: Clinical features and diagnosis. J. Neurol. Neurosurg. Psychiatry 2008, 79, 368–376.

- Rovini, E.; Maremmani, C.; Cavallo, F. How wearable sensors can support Parkinson’s disease diagnosis and treatment: A systematic review. Front. Neurosci. 2017, 11, 555.

- Pereira, C.R.; Pereira, D.R.; Weber, S.A.; Hook, C.; de Albuquerque, V.H.C.; Papa, J.P. A survey on computer-assisted Parkinson’s disease diagnosis. Artif. Intell. Med. 2019, 95, 48–63.

- Morgan, C.; Rolinski, M.; McNaney, R.; Jones, B.; Rochester, L.; Maetzler, W.; Craddock, I.; Whone, A.L. Systematic review looking at the use of technology to measure free-living symptom and activity outcomes in Parkinson’s disease in the home or a home-like environment. J. Parkinson’s Dis. 2020, 10, 429–454.

- Zhu, N.; Diethe, T.; Camplani, M.; Tao, L.; Burrows, A.; Twomey, N.; Kaleshi, D.; Mirmehdi, M.; Flach, P.; Craddock, I. Bridging e-Health and the Internet of Things: The SPHERE Project. IEEE Intell. Syst. 2015, 30, 39–46.

- Woznowski, P.; Burrows, A.; Diethe, T.; Fafoutis, X.; Hall, J.; Hannuna, S.; Camplani, M.; Twomey, N.; Kozlowski, M.; Tan, B.; et al. SPHERE: A sensor platform for healthcare in a residential environment. In Designing, Developing, and Facilitating Smart Cities; Springer: Berlin, Germany, 2017; pp. 315–333.

- Birchley, G.; Huxtable, R.; Murtagh, M.; Ter Meulen, R.; Flach, P.; Gooberman-Hill, R. Smart homes, private homes? An empirical study of technology researchers’ perceptions of ethical issues in developing smart-home health technologies. BMC Med. Ethics 2017, 18, 1–13.

- Ziefle, M.; Rocker, C.; Holzinger, A. Medical technology in smart homes: Exploring the user’s perspective on privacy, intimacy and trust. In Proceedings of the IEEE Computer Software and Applications Conference, Munich, Germany, 18–22 July 2011; pp. 410–415.

- Noyce, A.J.; Schrag, A.; Masters, J.M.; Bestwick, J.P.; Giovannoni, G.; Lees, A.J. Subtle motor disturbances in PREDICT-PD participants. J. Neurol. Neurosurg. Psychiatry 2017, 88, 212–217.

- Greenland, J.C.; Williams-Gray, C.H.; Barker, R.A. The clinical heterogeneity of Parkinson’s disease and its therapeutic implications. Eur. J. Neurosci. 2019, 49, 328–338.

- Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. In Proceedings of the International Conference on Learning Representations (ICLR), Banff, AB, Canada, 14–16 April 2014.

- Fraiwan, L.; Khnouf, R.; Mashagbeh, A.R. Parkinson’s disease hand tremor detection system for mobile application. J. Med. Eng. Technol. 2016, 40, 127–134.

- Um, T.T.; Pfister, F.M.; Pichler, D.; Endo, S.; Lang, M.; Hirche, S.; Fietzek, U.; Kulić, D. Data augmentation of wearable sensor data for Parkinson’s disease monitoring using convolutional neural networks. In Proceedings of the ACM International Conference on Multimodal Interaction, Glasgow, UK, 13–17 November 2017; pp. 216–220.

- Li, M.H.; Mestre, T.A.; Fox, S.H.; Taati, B. Vision-based assessment of parkinsonism and levodopa-induced dyskinesia with pose estimation. J. Neuroeng. Rehabil. 2018, 15, 1–13.

- Cavallo, F.; Moschetti, A.; Esposito, D.; Maremmani, C.; Rovini, E. Upper limb motor pre-clinical assessment in Parkinson’s disease using machine learning. Parkinsonism Relat. Disord. 2019, 63, 111–116.

- Pfister, F.M.; Um, T.T.; Pichler, D.C.; Goschenhofer, J.; Abedinpour, K.; Lang, M.; Endo, S.; Ceballos-Baumann, A.O.; Hirche, S.; Bischl, B.; et al. High-Resolution Motor State Detection in Parkinson’s Disease Using Convolutional Neural Networks. Sci. Rep. 2020, 10, 1–11.

- Pintea, S.L.; Zheng, J.; Li, X.; Bank, P.J.; van Hilten, J.J.; van Gemert, J.C. Hand-tremor frequency estimation in videos. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- Dadashzadeh, A.; Whone, A.; Rolinski, M.; Mirmehdi, M. Exploring Motion Boundaries in an End-to-End Network for Vision-based Parkinson’s Severity Assessment. In Proceedings of the International Conference on Pattern Recognition Applications and Methods (ICPRAM), Virtual Event, 4–6 February 2021; pp. 89–97.

- Arora, S.; Venkataraman, V.; Zhan, A.; Donohue, S.; Biglan, K.M.; Dorsey, E.R.; Little, M.A. Detecting and monitoring the symptoms of Parkinson’s disease using smartphones: A pilot study. Parkinsonism Relat. Disord. 2015, 21, 650–653.

- Hammerla, N.; Fisher, J.; Andras, P.; Rochester, L.; Walker, R.; Plötz, T. PD disease state assessment in naturalistic environments using deep learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015.

- Fisher, J.M.; Hammerla, N.Y.; Ploetz, T.; Andras, P.; Rochester, L.; Walker, R.W. Unsupervised home monitoring of Parkinson’s disease motor symptoms using body-worn accelerometers. Parkinsonism Relat. Disord. 2016, 33, 44–50.

- Rodríguez-Molinero, A.; Pérez-López, C.; Samà, A.; de Mingo, E.; Rodríguez-Martín, D.; Hernández-Vara, J.; Bayés, À.; Moral, A.; Álvarez, R.; Pérez-Martínez, D.A.; et al. A kinematic sensor and algorithm to detect motor fluctuations in Parkinson disease: Validation study under real conditions of use. JMIR Rehabil. Assist. Technol. 2018, 5, e8335.

- Parziale, A.; Senatore, R.; Della Cioppa, A.; Marcelli, A. Cartesian genetic programming for diagnosis of Parkinson disease through handwriting analysis: Performance vs. interpretability issues. Artif. Intell. Med. 2021, 111, 101984.

- Taleb, C.; Likforman-Sulem, L.; Mokbel, C.; Khachab, M. Detection of Parkinson’s disease from handwriting using deep learning: A comparative study. Evol. Intell. 2020, 1–12.

- Gazda, M.; Hireš, M.; Drotár, P. Multiple-Fine-Tuned Convolutional Neural Networks for Parkinson’s Disease Diagnosis From Offline Handwriting. IEEE Trans. Syst. Man Cybern. Syst. 2021, 1–12.

- Lamba, R.; Gulati, T.; Alharbi, H.F.; Jain, A. A hybrid system for Parkinson’s disease diagnosis using machine learning techniques. Int. J. Speech Technol. 2021, 1–11.

- Miao, Y.; Lou, X.; Wu, H. The Diagnosis of Parkinson’s Disease Based on Gait, Speech Analysis and Machine Learning Techniques. In Proceedings of the 2021 International Conference on Bioinformatics and Intelligent Computing, Harbin, China, 22–24 January 2021; pp. 358–371.

- Masullo, A.; Burghardt, T.; Damen, D.; Hannuna, S.; Ponce-López, V.; Mirmehdi, M. CaloriNet: From silhouettes to calorie estimation in private environments. In Proceedings of the British Machine Vision Conference (BMVC), Newcastle upon Tyne, UK, 3–6 September 2018.

- Masullo, A.; Burghardt, T.; Damen, D.; Perrett, T.; Mirmehdi, M. Person Re-ID by Fusion of Video Silhouettes and Wearable Signals for Home Monitoring Applications. Sensors 2020, 20, 2576.

- Zhang, C.; Yang, Z.; He, X.; Deng, L. Multimodal intelligence: Representation learning, information fusion, and applications. IEEE J. Sel. Top. Signal Process. 2020, 14, 478–493.

- Baltrušaitis, T.; Ahuja, C.; Morency, L.P. Multimodal machine learning: A survey and taxonomy. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 423–443.

- Guo, W.; Wang, J.; Wang, S. Deep multimodal representation learning: A survey. IEEE Access 2019, 7, 63373–63394.

- Lu, J.; Batra, D.; Parikh, D.; Lee, S. ViLBERT: Pretraining Task-Agnostic Visiolinguistic Representations for Vision-and-Language Tasks. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Vancouver, BC, Canada, 8–14 December 2019.

- Li, L.H.; Yatskar, M.; Yin, D.; Hsieh, C.J.; Chang, K.W. VisualBERT: A simple and performant baseline for vision and language. arXiv 2019, arXiv:1908.03557.

- Nguyen, D.K.; Okatani, T. Multi-task learning of hierarchical vision-language representation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–21 June 2019; pp. 10492–10501.

- Wang, Y.; Huang, W.; Sun, F.; Xu, T.; Rong, Y.; Huang, J. Deep multimodal fusion by channel exchanging. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Virtual Event, 6–12 December 2020; pp. 4835–4845.

- Hou, M.; Tang, J.; Zhang, J.; Kong, W.; Zhao, Q. Deep multimodal multilinear fusion with high-order polynomial pooling. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Vancouver, BC, Canada, 8–14 December 2019.

- Pérez-Rúa, J.M.; Vielzeuf, V.; Pateux, S.; Baccouche, M.; Jurie, F. MFAS: Multimodal fusion architecture search. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–21 June 2019.

- Afouras, T.; Chung, J.S.; Senior, A.; Vinyals, O.; Zisserman, A. Deep audio-visual speech recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 1–11.

- Gan, C.; Huang, D.; Zhao, H.; Tenenbaum, J.B.; Torralba, A. Music gesture for visual sound separation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10475–10484.

- Tao, L.; Burghardt, T.; Mirmehdi, M.; Damen, D.; Cooper, A.; Camplani, M.; Hannuna, S.; Paiement, A.; Craddock, I. Energy expenditure estimation using visual and inertial sensors. IET Comput. Vis. 2017, 12, 36–47.

- Henschel, R.; von Marcard, T.; Rosenhahn, B. Simultaneous identification and tracking of multiple people using video and IMUs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Long Beach, CA, USA, 16–21 June 2019.

- Ngiam, J.; Khosla, A.; Kim, M.; Nam, J.; Lee, H.; Ng, A.Y. Multimodal deep learning. In Proceedings of the International Conference on Machine Learning (ICML), Bellevue, WA, USA, 28 June–2 July 2011.

- Suzuki, M.; Nakayama, K.; Matsuo, Y. Joint multimodal learning with deep generative models. arXiv 2016, arXiv:1611.01891.

- Wu, M.; Goodman, N. Multimodal generative models for scalable weakly-supervised learning. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Montreal, QC, Canada, 3–8 December 2018.

- Vedantam, R.; Fischer, I.; Huang, J.; Murphy, K. Generative models of visually grounded imagination. In Proceedings of the International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018.

- Tsai, Y.H.H.; Liang, P.P.; Zadeh, A.; Morency, L.P.; Salakhutdinov, R. Learning factorized multimodal representations. In Proceedings of the International Conference on Learning Representations (ICLR), New Orleans, LA, USA, 6–9 May 2019.

- Shi, Y.; Siddharth, N.; Paige, B.; Torr, P.H. Variational mixture-of-experts autoencoders for multi-modal deep generative models. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Vancouver, BC, Canada, 8–14 December 2019; pp. 15692–15703.

- Hall, J.; Hannuna, S.; Camplani, M.; Mirmehdi, M.; Damen, D.; Burghardt, T.; Tao, L.; Paiement, A.; Craddock, I. Designing a Video Monitoring System for AAL applications: The SPHERE Case Study. In Proceedings of the 2nd IET International Conference on Technologies for Active and Assisted Living (TechAAL 2016), London, UK, 24–25 October 2016.

- OpenNI. Available online: https://structure.io/openni (accessed on 25 May 2021).

- Axivity-AX3. Available online: https://axivity.com/product/ax3 (accessed on 25 May 2021).

- Twomey, N.; Diethe, T.; Fafoutis, X.; Elsts, A.; McConville, R.; Flach, P.; Craddock, I. A comprehensive study of activity recognition using accelerometers. Informatics 2018, 5, 27.

- Elsts, A.; Twomey, N.; McConville, R.; Craddock, I. Energy-efficient activity recognition framework using wearable accelerometers. J. Netw. Comput. Appl. 2020, 168, 102770.

- Robles-García, V.; Corral-Bergantiños, Y.; Espinosa, N.; Jácome, M.A.; García-Sancho, C.; Cudeiro, J.; Arias, P. Spatiotemporal gait patterns during overt and covert evaluation in patients with Parkinson’s disease and healthy subjects: Is there a Hawthorne effect? J. Appl. Biomech. 2015, 31, 189–194.

- Morgan, C.; Craddock, I.; Tonkin, E.L.; Kinnunen, K.M.; McNaney, R.; Whitehouse, S.; Mirmehdi, M.; Heidarivincheh, F.; McConville, R.; Carey, J.; et al. Protocol for PD SENSORS: Parkinson’s Disease Symptom Evaluation in a Naturalistic Setting producing Outcome measuRes using SPHERE technology. An observational feasibility study of multi-modal multi-sensor technology to measure symptoms and activities of daily living in Parkinson’s disease. BMJ Open 2020, 10, e041303.

| Modality | Method | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Silhouette (Sil) | CNN | 0.17 | 0.40 | 0.24 |
| Silhouette (Sil) | VAE | 0.49 | 0.49 | 0.47 |
| Silhouette (Sil) | RF | 0.46 | 0.39 | 0.41 |
| Silhouette (Sil) | LSTM | 0.45 | 0.40 | 0.41 |
| Accelerometer (Acl) | CNN | 0.53 | 0.45 | 0.44 |
| Accelerometer (Acl) | VAE | 0.63 | 0.55 | 0.44 |
| Accelerometer (Acl) | RF | 0.59 | 0.45 | 0.43 |
| Accelerometer (Acl) | LSTM | 0.58 | 0.47 | 0.42 |
| Sil + Acl | MCPD-Net | 0.71 | 0.77 | 0.66 |

| Method | Precision | Recall | F1-Score |
|---|---|---|---|
| CaloriNet [28] | 0.65 | 0.48 | 0.50 |
| AE without $L_D$ | 0.69 | 0.56 | 0.58 |
| AE with $L_D$ | 0.69 | 0.58 | 0.61 |
| VAE without $L_D$ | 0.61 | 0.67 | 0.58 |
| MCPD-Net (VAE with $L_D$) | 0.71 | 0.77 | 0.66 |

| Method | Precision | Recall | F1-Score |
|---|---|---|---|
| **(a) Missing Sil (Only Using Acl)** | | | |
| Acl VAE (unimodal) | 0.69 | 0.66 | 0.58 |
| AE with $L_D$ (multimodal) | 0.61 | 0.40 | 0.46 |
| VAE without $L_D$ (multimodal) | 0.63 | 0.62 | 0.57 |
| MCPD-Net | 0.70 | 0.77 | 0.64 |
| **(b) Missing Acl (Only Using Sil)** | | | |
| Sil VAE (unimodal) | 0.57 | 0.63 | 0.59 |
| AE with $L_D$ (multimodal) | 0.67 | 0.42 | 0.48 |
| VAE without $L_D$ (multimodal) | 0.58 | 0.61 | 0.55 |
| MCPD-Net | 0.63 | 0.63 | 0.63 |

| Method | Precision | Recall | F1-Score |
|---|---|---|---|
| **(a) Missing Sil (Only Using Acl)** | | | |
| Acl VAE (unimodal) | 0.63 | 0.55 | 0.44 |
| AE with $L_D$ (multimodal) | 0.20 | 0.22 | 0.20 |
| VAE without $L_D$ (multimodal) | 0.63 | 0.56 | 0.55 |
| MCPD-Net | 0.70 | 0.77 | 0.62 |
| **(b) Missing Acl (Only Using Sil)** | | | |
| Sil VAE (unimodal) | 0.49 | 0.49 | 0.47 |
| AE with $L_D$ (multimodal) | 0.30 | 0.25 | 0.23 |
| VAE without $L_D$ (multimodal) | 0.56 | 0.54 | 0.46 |
| MCPD-Net | 0.60 | 0.49 | 0.51 |
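Several rows in the tables above report an F1-score below the harmonic mean of the listed precision and recall (for example 0.63, 0.55, and 0.44 for the accelerometer VAE). That pattern is what one would expect if each metric is averaged separately over classes or folds, although the averaging scheme is not stated here, so macro averaging over classes is an assumption in the sketch below. The labels are made up and the helper names are hypothetical.

```python
def precision_recall_f1(y_true, y_pred, positive):
    """Precision, recall and F1 for one class treated as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

def macro_average(y_true, y_pred):
    """Unweighted mean of each metric over all classes (assumed scheme)."""
    classes = sorted(set(y_true) | set(y_pred))
    per_class = [precision_recall_f1(y_true, y_pred, c) for c in classes]
    n = len(per_class)
    return tuple(sum(m[i] for m in per_class) / n for i in range(3))

# Made-up labels: 1 = PD, 0 = control.
y_true = [1, 1, 1, 1, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0]

macro_p, macro_r, macro_f1 = macro_average(y_true, y_pred)
# Harmonic mean of the averaged precision and recall, for comparison.
harmonic = 2 * macro_p * macro_r / (macro_p + macro_r)
print(f"P={macro_p:.3f} R={macro_r:.3f} F1={macro_f1:.3f} harmonic={harmonic:.3f}")
```

In this toy case the macro F1 is noticeably lower than the harmonic mean of the macro precision and recall, because the F1 of each class is computed before averaging.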

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Heidarivincheh, F.; McConville, R.; Morgan, C.; McNaney, R.; Masullo, A.; Mirmehdi, M.; Whone, A.L.; Craddock, I. Multimodal Classification of Parkinson’s Disease in Home Environments with Resiliency to Missing Modalities. Sensors 2021, 21, 4133. https://doi.org/10.3390/s21124133
